A Framework for Designing an AI Chatbot to Support Scientific Argumentation

Abstract

1. Introduction

2. Literature Review

2.1. Scientific Argumentation in the Context of Middle School Science

2.2. Artificial Intelligence to Support Scientific Practices

2.3. Generative AI to Support Classroom Learning

2.4. Conceptual Frameworks and Learning Theories

2.5. Design Framework

3. Research Questions

How does the quality of chatbot responses differ based on the following?

- Versions of the system prompt;

- The quality of user inputs;

- The chatbot use cases.

4. Methods

4.1. Target Student Population and Domain

4.2. Assessment Task Description

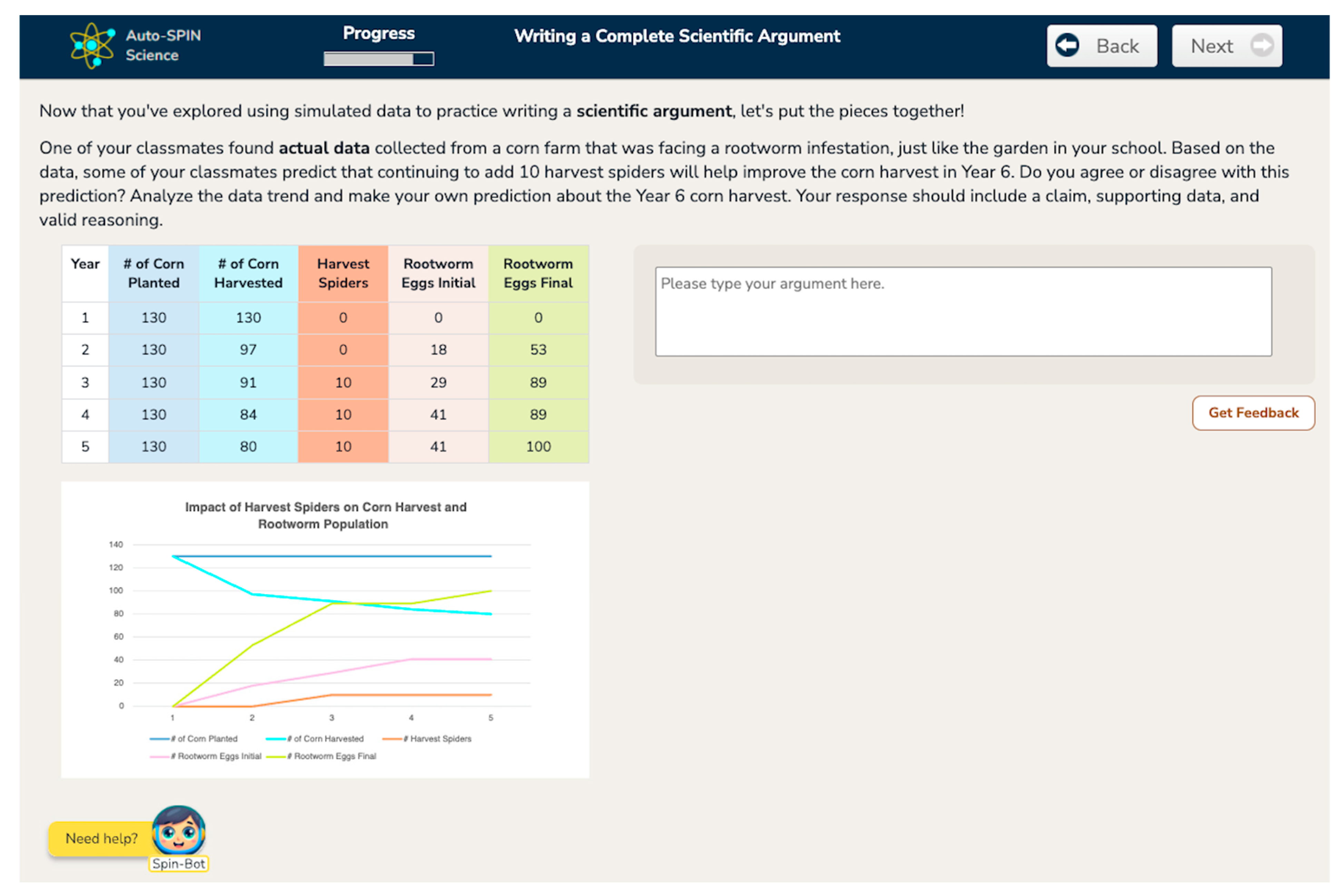

“One of your classmates found actual data collected from a corn farm that was facing a rootworm infestation, just like the garden in your school. Based on this data, some of your classmates predict that if they continue adding 10 harvest spiders, it will help improve the corn harvest in Year 6. Do you agree or disagree with this prediction? Analyze the data trend and make your own prediction about the Year 6 corn harvest. Your response should include a claim, supporting data, and valid reasoning.”

4.3. Chatbot Implementation

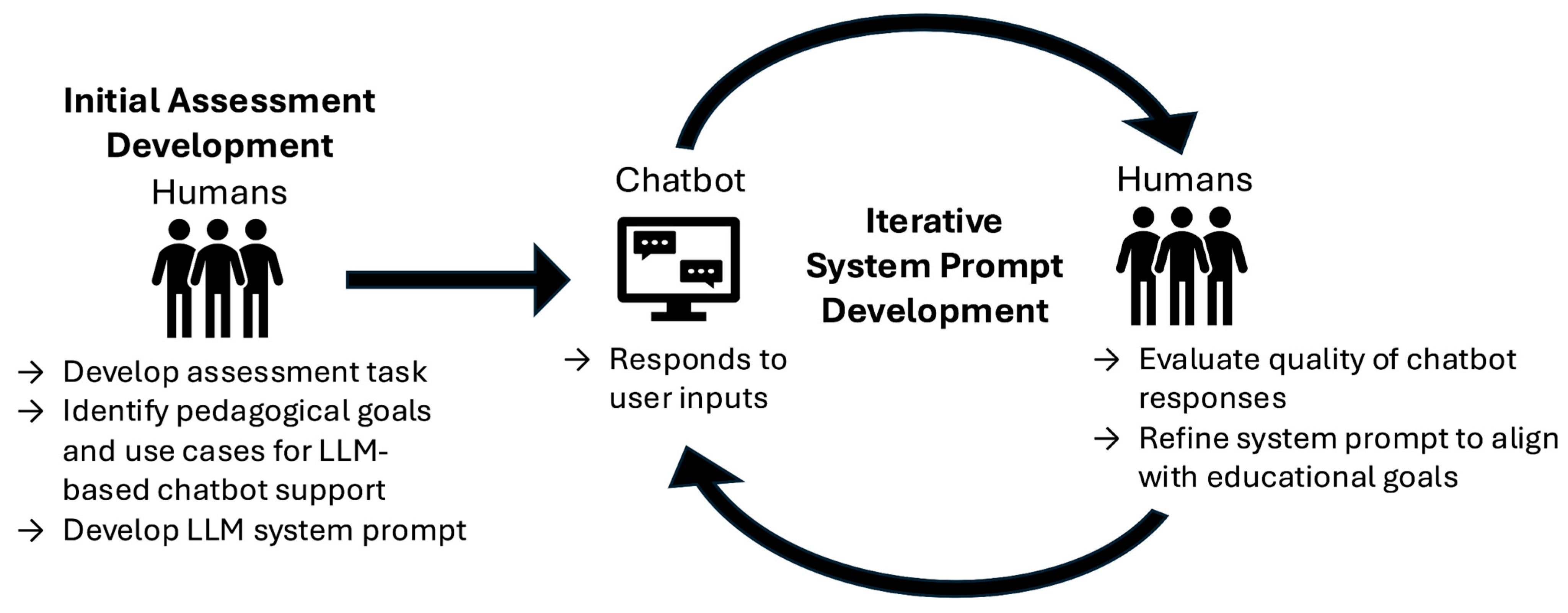

4.4. Iterative Chatbot Development Process

4.5. Synthetic Student Inputs

4.6. Evaluation of the Chatbot Responses

4.7. Statistical Analysis

5. Results

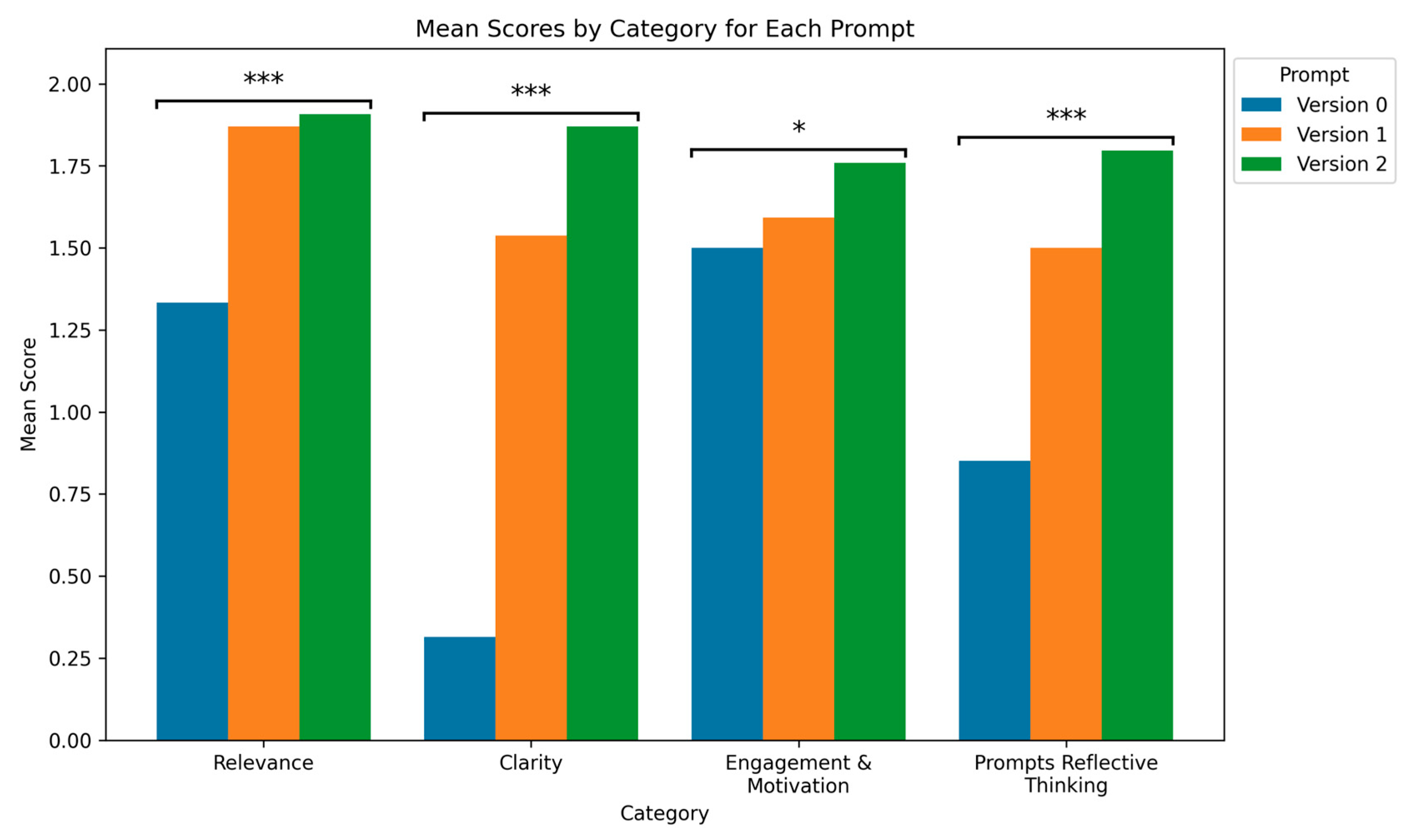

5.1. How Does the Quality of Chatbot Responses Differ Among the Versions of the System Prompt?

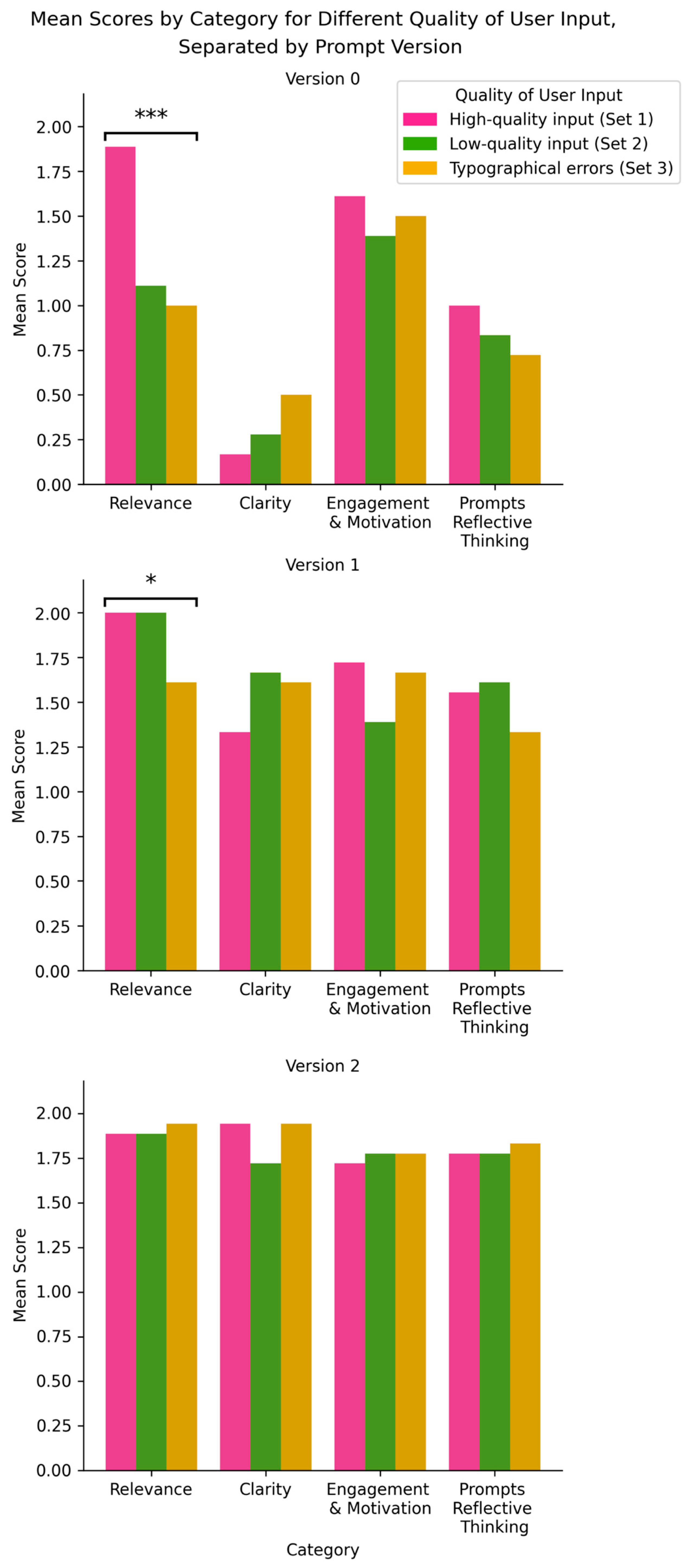

5.2. How Does the Quality of Chatbot Responses Differ Based on the Quality of User Inputs?

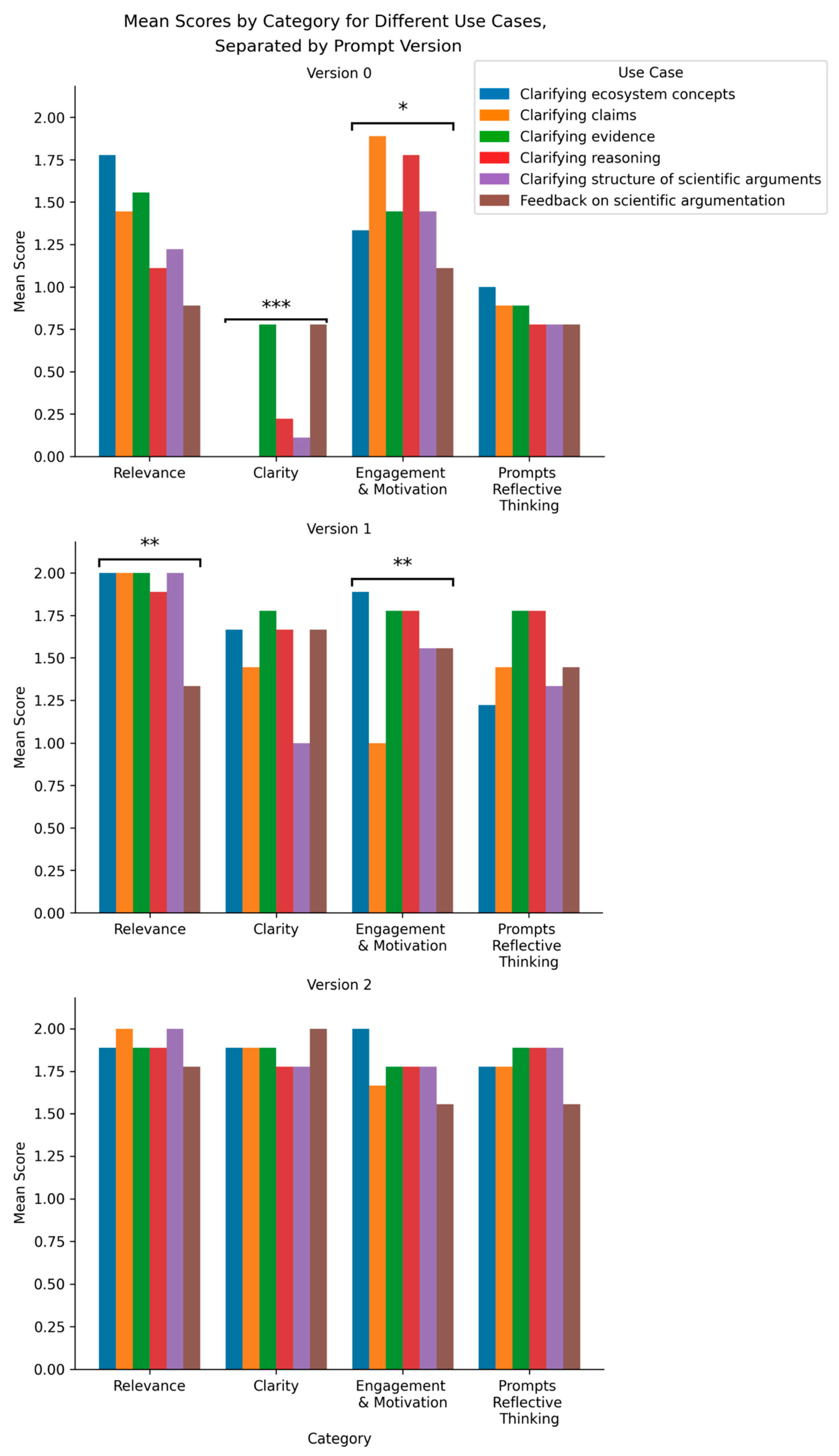

5.3. How Does the Quality of Chatbot Responses Differ for Different Chatbot Use Cases?

6. Discussion

7. Implications

7.1. A Framework for Designing AI Chatbots

7.2. Implications for Future Research

8. Limitations

9. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| AI | Artificial Intelligence |
| GenAI | Generative Artificial Intelligence |

References

- Alam, A. (2023, February 14–15). Intelligence unleashed: An argument for AI-enabled learning ecologies with real world examples of today and a peek into the future. International Conference on Innovations in Computer Science, Electronics & Electrical Engineering (p. 030001), Ashta, India.

- Ali, F., Choy, D., Divaharan, S., Tay, H. Y., & Chen, W. (2023). Supporting self-directed learning and self-assessment using TeacherGAIA, a generative AI chatbot application: Learning approaches and prompt engineering. Learning: Research and Practice, 9(2), 135–147.

- Berland, L. K., & Hammer, D. (2012). Students’ framings and their participation in scientific argumentation. In Perspectives on scientific argumentation: Theory, practice and research (pp. 73–93). Springer.

- Berland, L. K., & McNeill, K. L. (2010). A learning progression for scientific argumentation: Understanding student work and designing supportive instructional contexts. Science Education, 94(5), 765–793.

- Berland, L. K., & Reiser, B. J. (2011). Classroom communities’ adaptations of the practice of scientific argumentation. Science Education, 95(2), 191–216.

- Chen, E., Wang, D., Xu, L., Cao, C., Fang, X., & Lin, J. (2024). A systematic review on prompt engineering in large language models for K-12 STEM education. Available online: https://arxiv.org/pdf/2410.11123 (accessed on 25 July 2025).

- Chen, Y., Benus, M., & Hernandez, J. (2019). Managing uncertainty in scientific argumentation. Science Education, 103(5), 1235–1276.

- Cohn, C., Snyder, C., Montenegro, J., & Biswas, G. (2024). Towards a human-in-the-loop LLM approach to collaborative discourse analysis. In Communications in computer and information science (Vol. 2151, pp. 11–19). CCIS. Springer.

- Cooper, M. M., & Klymkowsky, M. W. (2024). Let us not squander the affordances of LLMs for the sake of expedience: Using retrieval augmented generative AI chatbots to support and evaluate student reasoning. Journal of Chemical Education, 101(11), 4847–4856.

- Dood, A. J., Watts, F. M., Connor, M. C., & Shultz, G. V. (2024). Automated text analysis of organic chemistry students’ written hypotheses. Journal of Chemical Education, 101(3), 807–818.

- Duschl, R. (2003). The assessment of argumentation and explanation. In The role of moral reasoning on socioscientific issues and discourse in science education (pp. 139–161). Springer.

- Faize, F., Husain, W., & Nisar, F. (2017). A critical review of scientific argumentation in science education. EURASIA Journal of Mathematics, Science and Technology Education, 14(1), 475–483.

- Farah, J. C., Ingram, S., Spaenlehauer, B., Lasne, F. K. L., & Gillet, D. (2023). Prompting large language models to power educational chatbots. In Lecture notes in computer science (Including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) (Vol. 14409, pp. 169–188). LNCS. Springer.

- Grooms, J., Sampson, V., & Enderle, P. (2018). How concept familiarity and experience with scientific argumentation are related to the way groups participate in an episode of argumentation. Journal of Research in Science Teaching, 55(9), 1264–1286.

- Haudek, K. C., Prevost, L. B., Moscarella, R. A., Merrill, J., & Urban-Lurain, M. (2012). What are they thinking? Automated analysis of student writing about acid-base chemistry in introductory biology. CBE Life Sciences Education, 11(3), 283–293.

- Haudek, K. C., & Zhai, X. (2024). Examining the effect of assessment construct characteristics on machine learning scoring of scientific argumentation. International Journal of Artificial Intelligence in Education, 34(4), 1482–1509.

- Kaldaras, L., Haudek, K., & Krajcik, J. (2024). Employing automatic analysis tools aligned to learning progressions to assess knowledge application and support learning in STEM. International Journal of STEM Education, 11(1), 57.

- Klar, M. (2024). How should we teach chatbot interaction to students? A pilot study on perceived affordances and chatbot interaction patterns in an authentic K-12 setting. In Proceedings of DELFI workshops 2024. Gesellschaft für Informatik e.V.

- Kooli, C. (2023). Chatbots in education and research: A critical examination of ethical implications and solutions. Sustainability, 15(7), 5614.

- Kumar, H., Musabirov, I., Reza, M., Shi, J., Wang, X., Williams, J. J., Kuzminykh, A., & Liut, M. (2024). Guiding students in using LLMs in supported learning environments: Effects on interaction dynamics, learner performance, confidence, and trust. Proceedings of the ACM on Human-Computer Interaction, 8(CSCW2), 499.

- Latifi, S., Noroozi, O., & Talaee, E. (2021). Peer feedback or peer feedforward? Enhancing students’ argumentative peer learning processes and outcomes. British Journal of Educational Technology, 52(2), 768–784.

- Lee, A. V. Y., Tan, S. C., & Teo, C. L. (2023). Designs and practices using generative AI for sustainable student discourse and knowledge creation. Smart Learning Environments, 10(1), 59.

- Lee, H. S., Gweon, G. H., Lord, T., Paessel, N., Pallant, A., & Pryputniewicz, S. (2021). Machine learning-enabled automated feedback: Supporting students’ revision of scientific arguments based on data drawn from simulation. Journal of Science Education and Technology, 30(2), 168–192.

- Lee, J. H., Shin, D., & Hwang, Y. (2024). Investigating the capabilities of large language model-based task-oriented dialogue chatbots from a learner’s perspective. System, 127, 103538.

- Linn, M. C., Gerard, L., Ryoo, K., McElhaney, K., Liu, O. L., & Rafferty, A. N. (2014). Computer-guided inquiry to improve science learning. Science, 344(6180), 155–156.

- Liu, L., Cisterna, D., Kinsey, D., Qi, Y., & Steimel, K. (2024). AI-based diagnosis of student reasoning patterns in NGSS assessments. Uses of Artificial Intelligence in STEM Education, 162–176.

- Liu, O. L., Rios, J. A., Heilman, M., Gerard, L., & Linn, M. C. (2016). Validation of automated scoring of science assessments. Journal of Research in Science Teaching, 53(2), 215–233.

- Makransky, G., Shiwalia, B. M., Herlau, T., & Blurton, S. (2025). Beyond the “wow” factor: Using generative AI for increasing generative sense-making. Educational Psychology Review, 37(3), 60.

- McNeill, K. L., & Knight, A. M. (2013). Teachers’ pedagogical content knowledge of scientific argumentation: The impact of professional development on K–12 teachers. Science Education, 97(6), 936–972.

- McNeill, K. L., & Krajcik, J. (2008). Scientific explanations: Characterizing and evaluating the effects of teachers’ instructional practices on student learning. Journal of Research in Science Teaching, 45(1), 53–78.

- Meyer, J., Jansen, T., Schiller, R., Liebenow, L. W., Steinbach, M., Horbach, A., & Fleckenstein, J. (2024). Using LLMs to bring evidence-based feedback into the classroom: AI-generated feedback increases secondary students’ text revision, motivation, and positive emotions. Computers and Education: Artificial Intelligence, 6, 100199.

- Neumann, A. T., Yin, Y., Sowe, S., Decker, S., & Jarke, M. (2025). An LLM-driven chatbot in higher education for databases and information systems. IEEE Transactions on Education, 68(1), 103–116.

- Nicol, D., & MacFarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

- NRC. (2012). A framework for K-12 science education: Practices, crosscutting concepts, and core ideas (pp. 1–385). The National Academies Press.

- OpenAI. (2024). GPT-4o system card. Available online: https://arxiv.org/pdf/2410.21276 (accessed on 25 July 2025).

- Osborne, J., Erduran, S., & Simon, S. (2004). Enhancing the quality of argumentation in school science. Journal of Research in Science Teaching, 41(10), 994–1020.

- Panadero, E., & Lipnevich, A. A. (2022). A review of feedback models and typologies: Towards an integrative model of feedback elements. Educational Research Review, 35, 100416.

- Ping, I., Halim, L., & Osman, K. (2020). Explicit teaching of scientific argumentation as an approach in developing argumentation skills, science process skills and biology understanding. Journal of Baltic Science Education, 19(2), 276–288.

- Ranade, N., Saravia, M., & Johri, A. (2025). Using rhetorical strategies to design prompts: A human-in-the-loop approach to make AI useful. AI and Society, 40(2), 711–732.

- Rapanta, C. (2021). Can teachers implement a student-centered dialogical argumentation method across the curriculum? Teaching and Teacher Education, 105, 103404.

- Sampson, V., & Blanchard, M. R. (2012). Science teachers and scientific argumentation: Trends in views and practice. Journal of Research in Science Teaching, 49(9), 1122–1148.

- Sampson, V., Grooms, J., & Walker, J. P. (2011). Argument-driven inquiry as a way to help students learn how to participate in scientific argumentation and craft written arguments: An exploratory study. Science Education, 95(2), 217–257.

- Schmucker, R., Xia, M., Azaria, A., & Mitchell, T. (2024). Ruffle & Riley: Insights from designing and evaluating a large language model-based conversational tutoring system. Available online: https://arxiv.org/pdf/2404.17460 (accessed on 25 July 2025).

- Scholz, N., Nguyen, M. H., Singla, A., & Nagashima, T. (2025). Partnering with AI: A pedagogical feedback system for LLM integration into programming education. Available online: https://arxiv.org/pdf/2507.00406v1 (accessed on 25 July 2025).

- Seabold, S., & Perktold, J. (2010). Statsmodels: Econometric and statistical modeling with Python. Scipy, 7, 92–96.

- Stamper, J., Xiao, R., & Hou, X. (2024). Enhancing LLM-based feedback: Insights from intelligent tutoring systems and the learning sciences. In Communications in computer and information science (Vol. 2150, pp. 32–43). CCIS. Springer.

- Tian, L., Ding, Y., Tian, X., Chen, Y., & Wang, J. (2025). Design and implementation of an intelligent assessment technology for elementary school students’ scientific argumentation ability. Assessment in Education: Principles, Policy & Practice, 32(2), 231–251.

- Watts, F. M., Dood, A. J., & Shultz, G. V. (2022). Developing machine learning models for automated analysis of organic chemistry students’ written descriptions of organic reaction mechanisms. Student Reasoning in Organic Chemistry, 285–303.

- Watts, F. M., Dood, A. J., & Shultz, G. V. (2023). Automated, content-focused feedback for a writing-to-learn assignment in an undergraduate organic chemistry course. ACM International Conference Proceeding Series, 531–537.

- White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert, H., Elnashar, A., Spencer-Smith, J., & Schmidt, D. C. (2023). A prompt pattern catalog to enhance prompt engineering with ChatGPT. Available online: https://arxiv.org/pdf/2302.11382 (accessed on 25 July 2025).

- Wilensky, U. (1999). NetLogo home page. Available online: https://ccl.northwestern.edu/netlogo/ (accessed on 25 July 2025).

- Wilson, C. D., Haudek, K. C., Osborne, J. F., Buck Bracey, Z. E., Cheuk, T., Donovan, B. M., Stuhlsatz, M. A. M., Santiago, M. M., & Zhai, X. (2024). Using automated analysis to assess middle school students’ competence with scientific argumentation. Journal of Research in Science Teaching, 61(1), 38–69.

- Zhai, X., Haudek, K. C., Shi, L., Nehm, R. H., & Urban-Lurain, M. (2020). From substitution to redefinition: A framework of machine learning-based science assessment. Journal of Research in Science Teaching, 57(9), 1430–1459.

- Zhai, X., & Nehm, R. H. (2023). AI and formative assessment: The train has left the station. Journal of Research in Science Teaching, 60(6), 1390–1398.

- Zhu, M., Lee, H. S., Wang, T., Liu, O. L., Belur, V., & Pallant, A. (2017). Investigating the impact of automated feedback on students’ scientific argumentation. International Journal of Science Education, 39(12), 1648–1668.

| Version 0 (Minimal Prompt Engineering) | Version 1 (Interim Version with Prompt Engineering) | Version 2 (Final Version with Prompt Engineering) |
|---|---|---|

| Use Case | Set 1—High Quality | Set 2—Low Quality | Set 3—Typographical Errors |

|---|---|---|---|

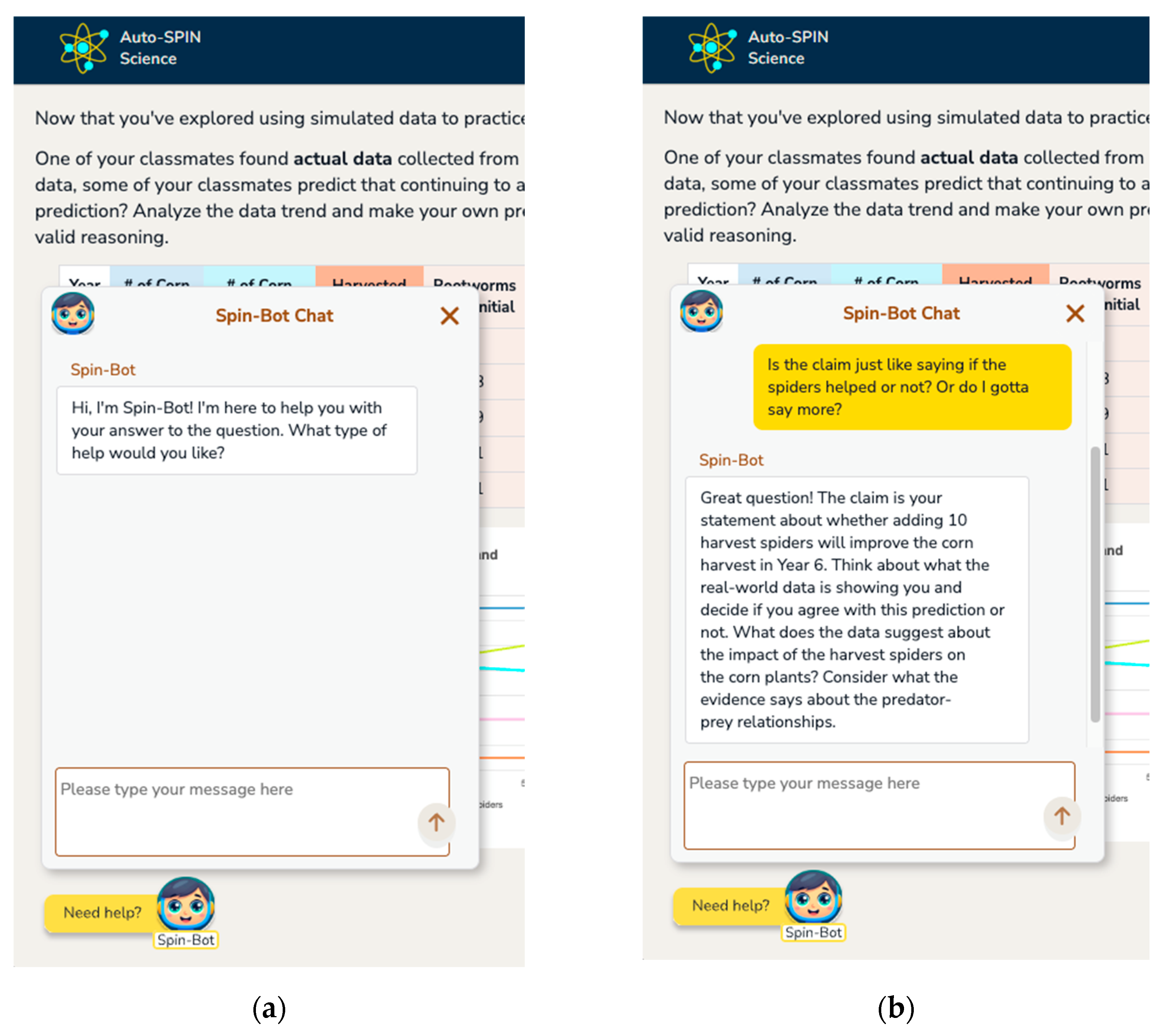

| Clarifying claim | “Is the claim just like saying if the spiders helped or not? Or do I gotta say more?” | “So do I just say like the corn didn’t grow good?” | “do i just say it didnt work or do i need like more stuff” |

| Clarifying evidence | “Do I just write the numbers from the chart or do I have to explain them too?” | “Can I just say the bugs were still there in the chart?” | “i put the chart said 3 corn but idk if thats enuf” |

| Clarifying reasoning | “I said the corn went down and the rootworms went up, but I don’t know what to say for the reasoning part.” | “Is it okay if I say the spiders weren’t fast enough?” | “i said the bugs ate it but not sure if that counts” |

| Clarifying structure of scientific arguments | “I don’t get how to do the whole argument thing. Like what goes first and what goes last?” | “I said the corn didn’t work and the bugs were still there, is that enough?” | “do i need claim and reason or just like say what happend” |

| Clarifying ecosystem concepts | “Why didn’t the harvestmen stop the rootworms if they eat them? Shouldn’t that work?” | “Why didn’t the harvestmen eat all the bugs if they’re supposed to?” | “why the spiders didnt eat all the worm things??” |

| Feedback on scientific argumentation | “Can you check if my answer makes sense? I said the harvestmen didn’t help cuz the corn still went down.” | “I said they should’ve added more spiders, does that count as reasoning?” | “i wrote they werent fast enuf is that ok?” |
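The grid of synthetic inputs above can be held in a small data structure and crossed with the system prompt versions to enumerate trials. The following is a minimal sketch, not the authors' pipeline: the names `SYNTHETIC_INPUTS`, `PROMPT_VERSIONS`, and `build_trials` are hypothetical, only two of the six use cases are copied in for brevity, and the full crossing of versions with inputs is an assumption about the design.

```python
from itertools import product

# Two of the six use cases from the table above; the other rows have the same shape.
SYNTHETIC_INPUTS = {
    "Clarifying claim": {
        "high_quality": "Is the claim just like saying if the spiders helped or not? Or do I gotta say more?",
        "low_quality": "So do I just say like the corn didn't grow good?",
        "typos": "do i just say it didnt work or do i need like more stuff",
    },
    "Clarifying evidence": {
        "high_quality": "Do I just write the numbers from the chart or do I have to explain them too?",
        "low_quality": "Can I just say the bugs were still there in the chart?",
        "typos": "i put the chart said 3 corn but idk if thats enuf",
    },
}

# Hypothetical labels for the three system prompt versions.
PROMPT_VERSIONS = ("version_0", "version_1", "version_2")


def build_trials(inputs, versions):
    """Return one trial per (prompt version, use case, input set) combination."""
    trials = []
    for version, (use_case, sets) in product(versions, inputs.items()):
        for set_name, text in sets.items():
            trials.append(
                {
                    "version": version,
                    "use_case": use_case,
                    "input_set": set_name,
                    "text": text,
                }
            )
    return trials


trials = build_trials(SYNTHETIC_INPUTS, PROMPT_VERSIONS)
print(len(trials))  # 3 versions x 2 use cases x 3 input sets = 18
```

Keeping the use case and input set as explicit fields on each trial makes it straightforward to group chatbot responses later by prompt version, input quality, or use case.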

| Category | Definition | Indicators | Scale |
|---|---|---|---|
| Relevance | Feedback accurately reflects the argumentation framework and encourages evidence-based reasoning. | | 0 = Not relevant/inaccurate; 1 = Partially relevant; 2 = Fully relevant |
| Clarity | Feedback is clear, concise, and developmentally appropriate for middle school students. | | 0 = Inappropriate length (too lengthy or too brief); 1 = Partially clear; 2 = Clear and concise |
| Engagement and Motivation | Feedback is likely to keep students engaged and focused on the task. | | 0 = Fails to engage/motivate; 1 = Partially engages/motivates; 2 = Highly engaging/motivating |
| Prompts Reflective Thinking | Feedback encourages students to reflect on their reasoning or understanding of the task. | | 0 = Provides no prompting; 1 = Minimal prompting; 2 = Effective prompting |
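The rubric above assigns each chatbot response a score of 0, 1, or 2 in four categories. One way to record such scores is sketched below. The class name `RubricScore`, the field names, and the `total` method are hypothetical, and summing the categories is an illustrative assumption rather than an aggregation described in the rubric.

```python
from dataclasses import dataclass

# Field names are hypothetical shorthand for the four rubric categories.
RUBRIC_CATEGORIES = (
    "relevance",
    "clarity",
    "engagement_motivation",
    "reflective_thinking",
)
VALID_SCORES = (0, 1, 2)


@dataclass(frozen=True)
class RubricScore:
    """Scores for one chatbot response, one integer per rubric category."""

    relevance: int
    clarity: int
    engagement_motivation: int
    reflective_thinking: int

    def __post_init__(self):
        # Reject anything outside the rubric's 0-2 scale.
        for name in RUBRIC_CATEGORIES:
            value = getattr(self, name)
            if value not in VALID_SCORES:
                raise ValueError(f"{name} must be 0, 1, or 2, got {value!r}")

    def total(self) -> int:
        # Assumption: a simple sum across categories, for illustration only.
        return sum(getattr(self, name) for name in RUBRIC_CATEGORIES)


score = RubricScore(
    relevance=2, clarity=1, engagement_motivation=2, reflective_thinking=0
)
print(score.total())  # 5
```

Validating the scale at construction time catches data-entry errors before any analysis runs.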

| Input: “I don’t get how to do the whole argument thing. Like what goes first and what goes last?” |
|---|
| Output, Version 0 (minimal prompt engineering) |
| Great question! Crafting a scientific argument involves organizing your thoughts in a clear way to explain your ideas. Here’s a simple structure that can help you form your argument: 1. Claim: This is the main point or statement you are making. It answers the question you are investigating. Example: “Plants are essential for maintaining a healthy ecosystem.” 2. Evidence: These are the scientific facts, data, and information that support your claim. Make sure your evidence is relevant and credible, such as data from experiments, observations, or reliable sources. Example: “Plants produce oxygen through photosynthesis, which is necessary for most living organisms to survive. They also provide food and habitats for many animals.” 3. Reasoning: This explains how your evidence supports your claim. It connects the dots and shows why your claim makes sense based on the evidence you provided. Example: “Since oxygen is essential for respiration in most living organisms, and plants produce oxygen, the presence of plants ensures that these organisms can survive. Additionally, by being a food source and providing shelter, plants support diverse animal populations, which promotes a balanced and healthy ecosystem.” 4. Counterargument and Rebuttal (optional): Sometimes, it’s helpful to acknowledge an opposing view and explain why your argument still holds. This can make your argument stronger. Example: “Some might argue that animals also produce carbon dioxide, which plants need for photosynthesis. However, without plants, the carbon dioxide levels would rise, and oxygen levels would decrease, harming many organisms.” Putting it all together in a paragraph might look like this: Plants are essential for maintaining a healthy ecosystem. (Claim) Plants produce oxygen through photosynthesis, which is necessary for most living organisms to survive. They also provide food and habitats for many animals. (Evidence) Since oxygen is essential for respiration in most living organisms, and plants produce oxygen, the presence of plants ensures that these organisms can survive. Additionally, by being a food source and providing shelter, plants support diverse animal populations, which promotes a balanced and healthy ecosystem. (Reasoning) Some might argue that animals also produce carbon dioxide, which plants need for photosynthesis. However, without plants, the carbon dioxide levels would rise, and oxygen levels would decrease, harming many organisms. (Counterargument and Rebuttal) Does this structure help clarify how to present your argument? |
| Output, Version 1 (interim version with prompt engineering) |
| I understand that this might seem a bit confusing at first, but don’t worry, we can break it down step by step! When you’re making a scientific argument, you need to include three main parts: claim, evidence, and reasoning. Here’s the order in which you should present them: 1. Claim: This is the statement or conclusion you’re making based on your observations or experiments. It’s your answer to the question. For example, “Adding harvest spiders decreases the population of rootworms.” 2. Evidence: This is the data that supports your claim. You should use the information you gathered from your simulation to back up your statement. For example, if your simulation showed that when you added harvest spiders, the number of rootworms decreased, that would be your evidence. 3. Reasoning: This explains why your evidence supports your claim. It involves using your understanding of how the system works. For example, you might explain that harvest spiders are predators of rootworms, and having more predators reduces the prey population, which is why the rootworm numbers went down. So, first you state your claim, then you provide your evidence, and finally, you explain the reasoning connecting your evidence to your claim. Does that help clarify things? |
| Output, Version 2 (final version with prompt engineering) |
| Great question! Let’s break it down step-by-step: 1. Claim: This is your statement about what you think will happen. For example, whether adding 10 harvest spiders will improve the corn harvest. 2. Evidence: This supports your claim with data. Look at the numbers and facts from the simulation or real-world data to use as proof. 3. Reasoning: This explains why your evidence supports your claim. Connect your evidence to what you know about predator-prey relationships and ecosystems. Let’s try to make a claim first. Do you think adding 10 harvest spiders will help the corn harvest? |

| Design Principle | Key Idea | Example System Prompt Elements |
|---|---|---|
| Scaffold argumentation structure to guide student reasoning | Design prompts to scaffold the structure and logic of scientific arguments. Chatbot responses should help students identify relevant evidence, connect evidence to claim, and clarify their reasoning. | “Your role is to motivate, engage, encourage, and support students in demonstrating scientific argumentation and reasoning based on McNeill and Krajcik’s (2008) argumentation framework: 1. Help students make a claim. 2. Encourage them to support it with evidence. 3. Guide them to connect the claim and evidence using reasoning.” |
| Prompt reflection and elaboration | Design prompts that encourage students to reflect on their ideas and elaborate their thinking. Chatbot responses should prompt students to elaborate when their ideas are unclear and encourage reflection. | “* Ask questions that prompt students to use evidence and explain their thinking. * Ask the student to elaborate if a message is unclear, rather than correct the student’s grammar or language.” |
| Prioritize student agency | Design prompts that encourage students to take ownership of their reasoning process. Instead of supplying answers, responses should support decision-making, foster curiosity, and empower students to construct and revise their arguments. | “* Clarify task-related questions from the students. * Be supportive and focused on scientific understanding. * Gently redirect off-topic responses back to the task. * IMPORTANT: Responses must not provide direct answers to the task. * IMPORTANT: Responses must not provide examples.” |
| Limit the length and improve the clarity of chatbot responses | Design prompts that ensure the chatbot responses are concise, focused, and developmentally appropriate for the target age group. | “* IMPORTANT: Responses must be no longer than 4 sentences.” |
| Provide brief and specific instructions in the system prompt | Design prompts that are concise and incorporate only necessary information to improve consistency of chatbot responses. | Reduce the details provided in the system prompt, using bullet points and signals like “IMPORTANT” to emphasize key instructions. |
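The example prompt elements in the table above can be assembled into a single system prompt, and the four-sentence limit can be spot-checked on responses. The sketch below is illustrative only: the function names and the ordering of elements are assumptions, the complete system prompt used in the study is not reproduced here, and the sentence counter is a naive heuristic that would overcount numbered list markers such as "1.".

```python
import re

# Role statement quoted from the first row of the table above.
ROLE = (
    "Your role is to motivate, engage, encourage, and support students in "
    "demonstrating scientific argumentation and reasoning based on McNeill and "
    "Krajcik's (2008) argumentation framework: 1. Help students make a claim. "
    "2. Encourage them to support it with evidence. 3. Guide them to connect "
    "the claim and evidence using reasoning."
)

# Bullet instructions quoted from the remaining rows; their order is an assumption.
RULES = [
    "Ask questions that prompt students to use evidence and explain their thinking.",
    "Ask the student to elaborate if a message is unclear, rather than correct the student's grammar or language.",
    "Clarify task-related questions from the students.",
    "Be supportive and focused on scientific understanding.",
    "Gently redirect off-topic responses back to the task.",
    "IMPORTANT: Responses must not provide direct answers to the task.",
    "IMPORTANT: Responses must not provide examples.",
    "IMPORTANT: Responses must be no longer than 4 sentences.",
]


def build_system_prompt(role, rules):
    """Join the role statement and bulleted rules into one prompt string."""
    bullets = "\n".join(f"* {rule}" for rule in rules)
    return f"{role}\n{bullets}"


def count_sentences(text):
    """Naively count sentences as runs of . ! or ? followed by whitespace or end of text."""
    return len(re.findall(r"[.!?]+(?=\s|$)", text.strip()))


def within_length_limit(response, max_sentences=4):
    """Check a chatbot response against the sentence limit stated in the prompt."""
    return count_sentences(response) <= max_sentences


system_prompt = build_system_prompt(ROLE, RULES)
reply = "Great question! Let's start with a claim. What do you think?"
print(within_length_limit(reply))  # True (3 sentences)
```

A check like this only flags length. Whether a response avoids giving direct answers or examples still needs human judgment or a separate rubric.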

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Watts, F.M.; Liu, L.; Ober, T.M.; Song, Y.; Jusino-Del Valle, E.; Zhai, X.; Wang, Y.; Liu, N. A Framework for Designing an AI Chatbot to Support Scientific Argumentation. Educ. Sci. 2025, 15, 1507. https://doi.org/10.3390/educsci15111507
