Using a Multi-Agent System and Evidence-Centered Design to Integrate Educator Expertise Within Generated Feedback

Abstract

1. Introduction

2. Literature Review

2.1. The Complicated Work of Mathematics Teaching

2.2. The Complicated Work of Supporting Mathematics Teacher Learning

2.3. Using Foundation Models to Support the Complicated Work of Mathematics Teacher Learning

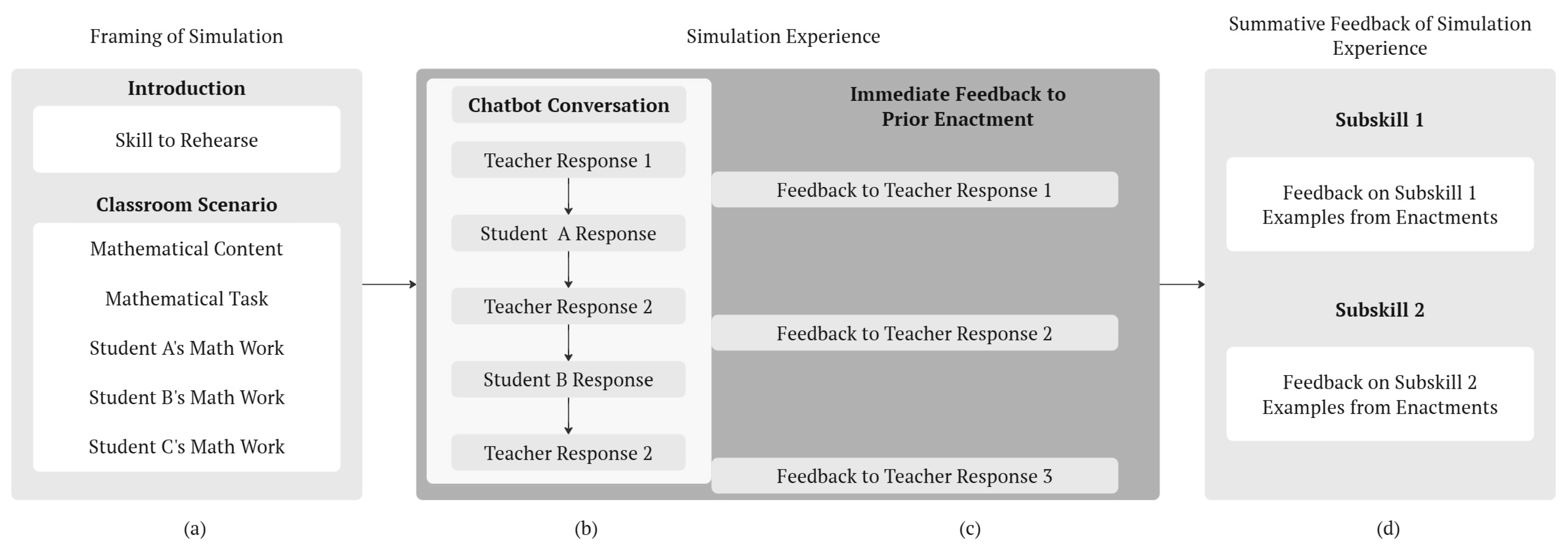

3. Evidence-Centered Design Modeling Logic to Design Templates

3.1. Overview of Evidence-Centered Design

3.2. Relevance of ECD’s Modeling Logic to Simulation Design

3.3. Constructing Design Templates from ECD Modeling Logic

4. An Illustrative Example of a Design Template

Crosswalking ECD Modeling Logic to Design Templates

5. Translating Design Templates into Multiple Prompts

5.1. How and Why Design Templates Shape Prompting

5.2. Prompt Engineering for Generated Students and Feedback

6. An Illustrative Example of a Design Template to Prompt Structure

- Speak when the user asks the first question.

- Speak when the user asks a specific follow-up to your question.

- Speak if the user asks for a summary of three solutions. When you respond at this moment, adjust your thinking to reflect the thinking shared by your classmates.

- Identify how the user’s statement shows evidence of any of the subskills.

- If the user’s statement has evidence of at least one of the subskills, provide 1–2 sentences of feedback about how what they did showed evidence of that subskill. Then encourage the user to keep going.

- If the user’s statement does not have evidence of at least one of the subskills, provide 1–2 sentences of feedback to the user on how they could respond again in a way that would better align with one of the subskills that makes the most sense at that moment in time.
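The two feedback rules above amount to a branch on whether any subskill was detected in the user's statement. The Python sketch below makes that branch explicit. It assumes that an upstream step (for example, an LLM call) has already returned the set of subskills with evidence; the subskill labels, `FeedbackPlan`, and `plan_feedback` are hypothetical names for illustration, not the authors' implementation.

```python
from dataclasses import dataclass

# Hypothetical short labels for the three subskills of posing purposeful questions.
SUBSKILLS = (
    "advancing_understanding",
    "probing_thinking",
    "making_math_visible",
)


@dataclass
class FeedbackPlan:
    """What the feedback agent should do next, decided before any text is generated."""
    has_evidence: bool
    focus_subskills: list[str]
    instruction: str


def plan_feedback(detected: set[str], best_next: str) -> FeedbackPlan:
    """Apply the two branching rules of the feedback prompt.

    detected:  subskills for which an upstream step (assumed, e.g. an LLM call)
               found evidence in the user's statement.
    best_next: the subskill that makes the most sense at this moment, used only
               when no evidence was found.
    """
    unknown = (detected | {best_next}) - set(SUBSKILLS)
    if unknown:
        raise ValueError(f"unknown subskills: {sorted(unknown)}")
    if detected:
        # Rule 1: explain the evidence, then encourage the user to keep going.
        focus = [s for s in SUBSKILLS if s in detected]
        return FeedbackPlan(
            True,
            focus,
            "Give 1-2 sentences on how the statement shows evidence of the "
            "subskill(s), then encourage the user to keep going.",
        )
    # Rule 2: suggest how to respond again in line with the most relevant subskill.
    return FeedbackPlan(
        False,
        [best_next],
        "Give 1-2 sentences on how the user could respond again to better "
        "align with this subskill.",
    )


if __name__ == "__main__":
    plan = plan_feedback({"probing_thinking"}, best_next="advancing_understanding")
    print(plan.has_evidence, plan.focus_subskills)
```

Separating the decision from the wording keeps the educator-authored rules inspectable, while the language model remains responsible only for phrasing the 1–2 sentences.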

7. Connecting Multiple Prompts to a Multi-Agent System Design

7.1. Adjusting the Back-End of Simulations to Suit the Design Needs

7.2. Multi-Agent Systems to Handle the Complexity of Simulations

Based on the information that the user provides, select the student to respond that forces the user to connect the procedural and conceptual background of the math idea. You have three specialized sub-agents:

1. Student_A: Delegate to this student when the user asks for a broad solution.
2. Student_B: Delegate to this student when the user asks for someone to correct Student_A, or to add an additional solution.
3. Student_C: Delegate to this student when the user asks for the minimum requirements to solve this task.
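The orchestrator prompt delegates each teacher move to one of three student sub-agents. In the described system that choice is made by a language model reading the prompt; the Python sketch below substitutes a deliberately crude keyword router so the delegation structure is visible and testable. The trigger phrases, `DELEGATION_RULES`, and `route` are hypothetical, and keyword matching is only a stand-in for the model's judgment.

```python
from typing import Optional

# Hypothetical trigger phrases standing in for the orchestrator's LLM judgment.
# More specific requests are listed first so they win over the broad request.
DELEGATION_RULES: list[tuple[str, tuple[str, ...]]] = [
    # Student_B: the user asks someone to correct Student_A or to add another solution.
    ("Student_B", ("correct", "another solution", "add a", "add to")),
    # Student_C: the user asks for the minimum requirements to solve the task.
    ("Student_C", ("minimum", "fewest", "only need", "requirement")),
    # Student_A: the user asks for a broad solution.
    ("Student_A", ("solution", "how did you", "solve")),
]


def route(teacher_utterance: str) -> Optional[str]:
    """Return the sub-agent that should respond, or None if no rule fires."""
    text = teacher_utterance.lower()
    for agent, triggers in DELEGATION_RULES:
        if any(trigger in text for trigger in triggers):
            return agent
    return None  # in this sketch, no student responds


if __name__ == "__main__":
    for utterance in (
        "Who can share a solution?",
        "Can someone correct Student A's solution?",
        "What is the minimum you need to solve this task?",
    ):
        print(utterance, "->", route(utterance))
```

Checking the specific requests before the broad one matters here: a request to correct Student_A usually also mentions a solution, and would otherwise be routed to Student_A.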

7.3. The Relationship Between Theory and Technology

8. Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MTP | High-Leverage Mathematics Teaching Practice |
| ECD | Evidence-Centered Design |

References

- Alier, M., Peñalvo, F. J. G., & Camba, J. D. (2024). Generative artificial intelligence in education: From deceptive to disruptive. International Journal of Interactive Multimedia and Artificial Intelligence, 8(5), 5–14.

- Aperstein, Y., Cohen, Y., & Apartsin, A. (2025). Generative AI-based platform for deliberate teaching practice: A review and a suggested framework. Education Sciences, 15(4), 405.

- Ball, D. L., & Bass, H. (2002, May 24–28). Toward a practice-based theory of mathematical knowledge for teaching. 2002 Annual Meeting of the Canadian Mathematics Education Study Group (pp. 3–14), Kingston, ON, Canada.

- Ball, D. L., & Forzani, F. M. (2009). The work of teaching and the challenge for teacher education. Journal of Teacher Education, 60(5), 497–511.

- Ball, D. L., Thames, M. H., & Phelps, G. (2008). Content knowledge for teaching. Journal of Teacher Education, 59, 389–407.

- Barno, E., Albaladejo-González, M., & Reich, J. (2024, July 18–20). Scaling generated feedback for novice teachers by sustaining teacher educators’ expertise: A design to train LLMs with teacher educator endorsement of generated feedback. Eleventh ACM Conference on Learning @ Scale (L@S ’24) (4 pages), Atlanta, GA, USA.

- Barno, E., Benoit, G., & Dietiker, L. (2025). Designing digital clinical simulations to support equitable mathematics teaching. Educational Designer, 5(18), 76.

- Benoit, G., Barno, E., & Reich, J. (2025). Simulating equitable discussions using practice-based teacher education in math professional learning. In C. W. Lee, L. Bondurant, B. Sapkota, & H. Howell (Eds.), Promoting equity in approximations of practice for mathematics teachers. IGI Global.

- Bhowmik, S., West, L., Barrett, A., Zhang, N., Dai, C. P., Sokolikj, Z., Southerland, S., Yuan, X., & Ke, F. (2024). Evaluation of an LLM-powered student agent for teacher training. In European conference on technology enhanced learning (pp. 68–74). Springer Nature.

- Bywater, J. P., Chiu, J. L., Hong, J., & Sankaranarayanan, V. (2019). The teacher responding tool: Scaffolding the teacher practice of responding to student ideas in mathematics classrooms. Computers & Education, 139, 16–30.

- Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Computers and Education: Artificial Intelligence, 4, 100118.

- Cohen, J., Wong, V., Krishnamachari, A., & Berlin, R. (2020). Teacher coaching in a simulated environment. Educational Evaluation and Policy Analysis, 42(2), 208–231.

- Copur-Gencturk, Y., & Orrill, C. (2023). A promising approach to scaling up professional development: Intelligent, interactive, virtual professional development with just-in-time feedback. Journal of Mathematics Teacher Education.

- Franke, M. L., Kazemi, E., & Battey, D. (2007). Mathematics education and student diversity: The role of classroom practices, professional development, and school policy. In F. K. Lester (Ed.), Second handbook of research on mathematics teaching and learning (pp. 227–300). Information Age Publishing.

- Ghousseini, H. (2015). Core practices and problems of practice in learning to lead classroom discussions. The Elementary School Journal, 115(3), 334–357.

- Google. (2024a). ADK documentation. Available online: https://google.github.io/adk-docs (accessed on 1 May 2025).

- Google. (2024b). Generative AI on Vertex AI: Prompt design. Available online: https://cloud.google.com/vertex-ai/generative-ai/docs/learn/prompts/prompt-design-strategies (accessed on 1 May 2025).

- Grossman, P., Compton, C., Igra, D., Ronfeldt, M., Shahan, E., & Williamson, P. (2009a). Teaching practice: A cross-professional perspective. Teachers College Record, 111(9), 2055–2100.

- Grossman, P., Hammerness, K., & McDonald, M. (2009b). Redefining teaching, re-imagining teacher education. Teachers and Teaching, 15(2), 273–289.

- Herbel-Eisenmann, B., & Wagner, D. (2010). Appraising lexical bundles in mathematics classroom discourse: Obligation and choice. Educational Studies in Mathematics, 75(1), 43–63.

- Herbel-Eisenmann, B. A., & Breyfogle, M. L. (2005). Questioning our patterns of questioning. Mathematics Teaching in the Middle School, 10(9), 484–489.

- Herbel-Eisenmann, B. A., Steele, M., & Cirillo, M. (2013). (Developing) teacher discourse moves: A framework for professional development. Mathematics Teacher Educator, 1(2), 181–196.

- Herbst, P., Chieu, V., & Rougee, A. (2014). Approximating the practice of mathematics teaching: What learning can web-based, multimedia storyboarding software enable? Contemporary Issues in Technology and Teacher Education, 14(4), 356–383.

- Hillaire, G., Waldron, R., Littenberg-Tobias, J., Thompson, M., O’Brien, S., Marvez, G. R., & Reich, J. (2022, April 25–30). Digital clinical simulation suite: Specifications and architecture for simulation-based pedagogy at scale. 2022 ACM Conference on Learning@Scale, Honolulu, HI, USA.

- Jacobs, V. R., Lamb, L. L. C., & Philipp, R. A. (2010). Professional noticing of children’s mathematical thinking. Journal for Research in Mathematics Education, 41(2), 169–202.

- Kavanagh, S. S., Conrad, J., & Dagogo-Jack, S. (2020a). From rote to reasoned: Examining the role of pedagogical reasoning in practice-based teacher education. Teaching and Teacher Education, 89, 102991.

- Kavanagh, S. S., Metz, M., Hauser, M., Fogo, B., Taylor, M. W., & Carlson, J. (2020b). Practicing responsiveness: Using approximations of teaching to develop teachers’ responsiveness to students’ ideas. Journal of Teacher Education, 71(1), 94–107.

- Lampert, M., Franke, M. L., Kazemi, E., Ghousseini, H., Turrou, A. C., Beasley, H., Cunard, A., & Crowe, K. (2013). Keeping it complex: Using rehearsals to support novice teacher learning of ambitious teaching. Journal of Teacher Education, 64(3), 226–243.

- Lee, D., & Yeo, S. (2022). Developing an AI-based chatbot for practicing responsive teaching in mathematics. Computers & Education, 191, 104646.

- Lee, U., Lee, S., Koh, J., Jeong, Y., Jung, H., Byun, G., Lee, Y., Moon, J., Lim, J., & Kim, H. (2023, December 15). Generative agent for teacher training: Designing educational problem-solving simulations with large language model-based agents for preservice teachers. NeurIPS’23 workshop on generative AI for education (GAIED), New Orleans, LA, USA.

- Liu, N. F., Lin, K., Hewitt, J., Paranjape, A., Bevilacqua, M., Petroni, F., & Liang, P. (2024). Lost in the middle: How language models use long contexts. Transactions of the Association for Computational Linguistics, 12, 157–173.

- Mikeska, J. N., Howell, H., & Kinsey, D. (2023). Inside the black box: How elementary teacher educators support preservice teachers in preparing for and learning from online simulated teaching experiences. Teaching and Teacher Education, 122, 103979.

- Mikeska, J. N., Howell, H., & Kinsey, D. (2024a). Teacher educators’ use of formative feedback during preservice teachers’ simulated teaching experiences in Mathematics and Science. International Journal of Science and Mathematics Education, 23(6).

- Mikeska, J. N., Klebanov, B. B., Bhatia, A., Halder, S., & Suhan, M. (2025). Evaluating the use of generative artificial intelligence to support learning opportunities for teachers to practice engaging in key instructional skills. In A. I. Cristea, E. Walker, Y. Lu, O. C. Santos, & S. Isotani (Eds.), Artificial intelligence in education. AIED 2025 (Vol. 15878). Lecture Notes in Computer Science. Springer.

- Mikeska, J. N., Klebanov, B. B., Marigo, A., Tierney, J., Maxwell, T., & Nazaretsky, T. (2024b, July 8–12). Exploring the potential of automated and personalized feedback to support science teacher learning. International Conference on Artificial Intelligence in Education (pp. 251–258), Recife, Brazil.

- Mislevy, R. J., Almond, R. G., & Lukas, J. F. (2004). A brief introduction to evidence-centered design (CSE Technical Report 632). National Center for Research on Evaluation, Standards, and Student Testing (CRESST), Center for the Study of Evaluation, UCLA.

- Mislevy, R. J., & Haertel, G. (2006). Implications for evidence-centered design for educational assessment. Educational Measurement: Issues and Practice, 25, 6–20.

- National Council of Teachers of Mathematics. (2014). Principles to actions. National Council of Teachers of Mathematics.

- National Council of Teachers of Mathematics. (2018). Catalyzing change in high school mathematics: Initiating critical conversations. The National Council of Teachers of Mathematics, Inc.

- Park, J. S., O’Brien, J., Cai, C. J., Morris, M. R., Liang, P., & Bernstein, M. S. (2023, October 29–November 1). Generative agents: Interactive simulacra of human behavior. 36th Annual ACM Symposium on User Interface Software and Technology (pp. 1–22), San Francisco, CA, USA.

- Reich, J. (2022). Teaching drills: Advancing practice-based teacher education through short, low-stakes, high-frequency practice. Journal of Technology and Teacher Education, 30(2), 217–228.

- Shaughnessy, M., & Boerst, T. A. (2018). Uncovering the skills that preservice teachers bring to teacher education: The practice of eliciting a student’s thinking. Journal of Teacher Education, 69(1), 40–55.

- Shaughnessy, M., Ghousseini, H., Kazemi, E., Franke, M., Kelley-Petersen, M., & Hartmann, E. S. (2019). An investigation of supporting teacher learning in the context of a common decomposition for leading mathematics discussions. Teaching and Teacher Education, 80, 167–179.

- Smith, M. S., & Stein, M. K. (2011). 5 practices for orchestrating productive mathematical discussions. National Council of Teachers of Mathematics.

- Son, T., Yeo, S., & Lee, D. (2024). Exploring elementary preservice teachers’ responsive teaching in mathematics through an artificial intelligence-based chatbot. Teaching and Teacher Education, 146, 104640.

- Stein, M. K., Russell, J. L., Bill, V., Correnti, R., & Speranzo, L. (2022). Coach learning to help teachers learn to enact conceptually rich, student-focused mathematics lessons. Journal of Mathematics Teacher Education, 25, 321–346.

- Thompson, M., Owho-Ovuakporie, K., Robinson, K., Kim, Y. J., Slama, R., & Reich, J. (2019). Teacher Moments: A digital simulation for preservice teachers to approximate parent–teacher conversations. Journal of Digital Learning in Teacher Education, 35(3), 144–164.

| Mathematics Topic | Focal Task | Key Learning Objective of Focal Task |
|---|---|---|
| Systems of Linear Equations | Consider the equation y = 2/5 x + 1. Write a second linear equation to create a system of equations that has exactly one solution. | Any line whose slope is not 2/5 creates a system with exactly one solution with the line y = 2/5 x + 1. |

| Anticipated Student A Understanding | Anticipated Student B Understanding | Anticipated Student C Understanding |
|---|---|---|
| The student’s understanding of the ideas involved in the problem/process: The student conceptually understands that opposite-reciprocal slopes mean that two linear equations will intersect exactly once, as they are perpendicular. The student also understands that having the same y-intercept means that two lines will intersect exactly once. | The student’s understanding of the ideas involved in the problem/process: The student conceptually understands that two lines with the same y-intercept must cross at the location of the y-intercept. The student also understands that for those lines to intersect only once, at the point of the y-intercept, their slopes must be different. | The student’s understanding of the ideas involved in the problem/process: The student conceptually understands that any two linear equations with different slopes will intersect exactly once. The student also understands that having the same y-intercept means that any two lines will also cross only once; however, they note that this condition is not necessary if the slopes are different. |
| Other information about the student’s thinking, language, and orientation in this scenario: The student may not be confident that having either the opposite-reciprocal slope or the same y-intercept ensures the system has one solution, as opposed to needing both. However, this might be because the task only asks for a line that creates a system with exactly one solution, which the student’s solution satisfies, albeit with additional conditions. | Other information about the student’s thinking, language, and orientation in this scenario: The student may not be confident about the difference between two lines with the same y-intercept but different slopes, which have one solution, and two lines with the same y-intercept and the same or equivalent slopes, which have infinitely many solutions. However, because the task requires a line that forms a system with exactly one solution, the student’s proposed conditions (the same y-intercept and different slopes) meet the task’s condition. | Other information about the student’s thinking, language, and orientation in this scenario: The student’s response does not specify that “different” slopes must also be non-equivalent (e.g., two linear equations with slopes of 2/5 and 4/10 will not intersect exactly once, because those “different” slopes are equivalent fractions). |
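The key learning objective and the anticipated understandings of Students A–C can be checked with a short computation. The Python sketch below is an illustration rather than part of the authors' system; `classify_system` and `intersection` are hypothetical helpers. It classifies a system of two lines in slope-intercept form using exact fractions, so that slopes such as 2/5 and 4/10 are recognized as equal, and it shows that a shared y-intercept alone does not guarantee one solution.

```python
from fractions import Fraction


def classify_system(m1: Fraction, b1: Fraction, m2: Fraction, b2: Fraction) -> str:
    """Classify the system y = m1*x + b1, y = m2*x + b2 by its number of solutions."""
    if m1 != m2:
        return "exactly one solution"  # non-equivalent slopes: the lines cross once
    if b1 != b2:
        return "no solution"  # equivalent slopes, different intercepts: parallel lines
    return "infinitely many solutions"  # equivalent slopes and intercepts: same line


def intersection(m1: Fraction, b1: Fraction, m2: Fraction, b2: Fraction) -> tuple[Fraction, Fraction]:
    """Return the single crossing point; only defined when the slopes differ."""
    if m1 == m2:
        raise ValueError("equivalent slopes: no unique intersection")
    # m1*x + b1 = m2*x + b2  =>  x = (b2 - b1) / (m1 - m2)
    x = (b2 - b1) / (m1 - m2)
    return x, m1 * x + b1


if __name__ == "__main__":
    m0, b0 = Fraction(2, 5), Fraction(1)  # the given line y = 2/5 x + 1
    candidates = {
        "Student A: y = -5/2 x + 1": (Fraction(-5, 2), Fraction(1)),
        "different slope and intercept: y = 3x": (Fraction(3), Fraction(0)),
        "slope 4/10, same intercept": (Fraction(4, 10), Fraction(1)),
        "slope 4/10, intercept -3": (Fraction(4, 10), Fraction(-3)),
    }
    for label, (m, b) in candidates.items():
        print(f"{label}: {classify_system(m0, b0, m, b)}")
```

The third candidate illustrates Student C's gap: 4/10 looks different from 2/5 but is the same slope, so with a shared y-intercept it describes the same line rather than a second line crossing once.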

| Sample Student A Responses |
|---|
| “This works, y = −5/2 x +1. You have to have a line with a flipped and opposite slope for it to cross only once, with the same b.” “Well, with those slopes, they are going perpendicular to each other, so they have to cross. And they definitely cross at (0,1) because they have the same b.” |

| Student’s Process and Understanding | Student’s Way of Being |
|---|---|
| The student’s process: The student is using the slope and y-intercept of the original line to create a system with one solution. They achieve this by finding the opposite-reciprocal slope and leaving the y-intercept unchanged. The student’s understanding of the ideas involved in the problem/process: The student conceptually understands that opposite-reciprocal slopes mean that two linear equations will intersect exactly once, as they are perpendicular. The student also understands that having the same y-intercept means that two lines will intersect exactly once. Other information about the student’s thinking, language, and orientation in this scenario: The student may not be confident that having either the opposite-reciprocal slope or the same y-intercept ensures the system has one solution, as opposed to needing both. However, this might be because the task only asks for a line that creates a system with exactly one solution, which the student’s solution satisfies, albeit with additional conditions. | The student is confident that their solution satisfies the task’s condition. When asked to explain their process, the student explains that their solution is a method that will always work. When it is brought to their attention, the student realizes that they did not need the specific conditions of the opposite-reciprocal slope and the same y-intercept, and recognizes that the opposite-reciprocal slope is one case of the broader condition that the slope be anything other than 2/5. |
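Student A's sample response can be checked directly. The worked equations below, added for illustration, solve the system formed by the given line and Student A's line y = −5/2 x + 1 and confirm the perpendicularity the student appeals to.

```latex
\begin{aligned}
\tfrac{2}{5}x + 1 &= -\tfrac{5}{2}x + 1 \\
\left(\tfrac{2}{5} + \tfrac{5}{2}\right)x &= 0 \\
\tfrac{29}{10}\,x &= 0 \;\Longrightarrow\; x = 0, \quad y = \tfrac{2}{5}(0) + 1 = 1 \\
\tfrac{2}{5}\cdot\left(-\tfrac{5}{2}\right) &= -1 \quad \text{(the lines are perpendicular)}
\end{aligned}
```

The single solution (0, 1) follows from the slopes being different; perpendicularity and the shared intercept only fix the angle and location of the crossing, which is the realization described under the student's way of being.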

| Posing Purposeful Questions | | |
|---|---|---|
| Subskill of MTP | Evidence | Non-Evidence |
| Advancing student understanding by asking questions that build on, but do not take over or funnel, student thinking | Asking questions that build on students’ thinking about… | Asking questions that take over or funnel students’ thinking about… |
| Making certain to ask questions that go beyond gathering information to probing thinking and requiring explanation and justification | Asking questions that probe thinking and require an explanation and justification about… | Asking questions that gather information about… |
| Asking intentional questions that make the mathematics more visible and accessible for student examination and discussion | Asking intentional questions that make mathematics more visible and accessible for student examination and discussion about… | Asking questions that focus on procedure or non-visual representations about… |
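The evidence and non-evidence columns above are what the feedback prompt asks the model to look for. One way to keep that educator expertise in a single editable place is to store the table as data and render it into prompt text, as in the Python sketch below. The `Subskill` record and `render_look_fors` function are hypothetical; the row text is copied from the table, including its elided "about…" endings, which are left as-is.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class Subskill:
    """One row of the evidence/non-evidence table."""
    name: str
    evidence: str
    non_evidence: str


# Rows copied from the table; the trailing "about…" stays elided as in the source.
POSING_PURPOSEFUL_QUESTIONS = (
    Subskill(
        "Advancing student understanding by asking questions that build on, "
        "but do not take over or funnel, student thinking",
        "Asking questions that build on students’ thinking about…",
        "Asking questions that take over or funnel students’ thinking about…",
    ),
    Subskill(
        "Making certain to ask questions that go beyond gathering information "
        "to probing thinking and requiring explanation and justification",
        "Asking questions that probe thinking and require an explanation and "
        "justification about…",
        "Asking questions that gather information about…",
    ),
    Subskill(
        "Asking intentional questions that make the mathematics more visible "
        "and accessible for student examination and discussion",
        "Asking intentional questions that make mathematics more visible and "
        "accessible for student examination and discussion about…",
        "Asking questions that focus on procedure or non-visual representations about…",
    ),
)


def render_look_fors(subskills: Iterable[Subskill]) -> str:
    """Render rubric rows as a numbered list for a feedback prompt's CONTEXT section."""
    lines = []
    for i, s in enumerate(subskills, start=1):
        lines.append(f"{i}. {s.name}")
        lines.append(f"   Evidence: {s.evidence}")
        lines.append(f"   Non-evidence: {s.non_evidence}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_look_fors(POSING_PURPOSEFUL_QUESTIONS))
```

With this arrangement, a teacher educator who revises a look-for edits one row of data, and every prompt built from it picks up the change.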

| Student Response Template Component | Example of Prompt Component |
|---|---|
| Student A: Mathematical Process | <OBJECTIVE_AND_PERSONA> You are an 8th-grade student about to share your mathematical ideas about a problem. The user is your teacher, who is going to ask you and your classmates questions about your different solutions to the problem. Your task is to answer the user’s questions when they ask about a general solution to the problem and only answer the questions in relation to your solution. Your solution is using the slope and y-intercept of the original line y = 2/5 x + 1 to create a system with one solution by finding the opposite-reciprocal slope and leaving the y-intercept the same. |
| Student A: Mathematical Ideas & Student A: Language and Orientation | <CONTEXT> To perform the task, you need to consider the mathematical problem that the user is talking about with you and your classmates, and how it relates to your solution. This is the problem: <Given the linear equation y = 2/5 x + 1, write a linear equation that, with the first equation, makes a system of linear equations with one solution.> To perform the task, you need to answer only using the ideas listed below. |
| Student A: Mathematical Way of Being | <CONSTRAINTS> Dos and don’ts for the following aspects. |
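Each row of the table maps one design-template component onto one tagged section of the student prompt. The Python sketch below shows one way that assembly step might be automated. The `build_prompt` helper is hypothetical, the section order is read off the table, and wrapping each section in a matching closing tag is an assumption, since the table shows only opening tags.

```python
# Section order follows the template table: persona, context, constraints.
SECTION_ORDER = ("OBJECTIVE_AND_PERSONA", "CONTEXT", "CONSTRAINTS")


def build_prompt(sections: dict[str, str]) -> str:
    """Join template components into one tagged system prompt.

    Closing tags are an assumption of this sketch; the table shows only
    the opening tags.
    """
    missing = [name for name in SECTION_ORDER if name not in sections]
    if missing:
        raise KeyError(f"missing template components: {missing}")
    blocks = [
        f"<{name}>\n{sections[name].strip()}\n</{name}>" for name in SECTION_ORDER
    ]
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Placeholder bodies; the full component text lives in the design template.
    prompt = build_prompt({
        "OBJECTIVE_AND_PERSONA": "You are an 8th-grade student about to share "
                                 "your mathematical ideas about a problem.",
        "CONTEXT": "To perform the task, you need to answer only using the "
                   "ideas listed below. [idea list from the design template]",
        "CONSTRAINTS": "Dos and don'ts for the following aspects. "
                       "[constraints from the design template]",
    })
    print(prompt)
```

Because the feedback prompt in the following table uses the same three sections, the same helper could assemble it as well; only the component text would change.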

| Task and Response Template Component | Example of Prompt Component |
|---|---|
| Immediate Feedback: MTP and Subskills of MTP | <OBJECTIVE_AND_PERSONA> You are a friendly coach to the user who is practicing how to ask questions to students during a whole-group mathematics discussion. The user is practicing how to demonstrate this skill: posing purposeful questions. Your task is to identify whether the user has demonstrated the following subskills when speaking, and provide feedback on what they did well, what they could improve, and what they should consider in order to demonstrate the following subskills if they were to repeat the conversation again. |
| Immediate Feedback: Look Fors for Subskills of MTPs | <CONTEXT> To perform the task, you need to consider the mathematical problem that the user is talking about with their students, and how it relates to demonstrating a subskill: <Given the linear equation y = 2/5 x + 1, write a linear equation that, with the first equation, makes a system of linear equations with one solution.> To perform the task, you need to identify if the user completed any of the following subskills: |
| Immediate Feedback: Subskills of MTPs | <CONSTRAINTS> Dos and don’ts for the following aspects. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barno, E.; Phelps, G. Using a Multi-Agent System and Evidence-Centered Design to Integrate Educator Expertise Within Generated Feedback. Educ. Sci. 2025, 15, 1273. https://doi.org/10.3390/educsci15101273