Lessons Learned from 10 Experiments That Tested the Efficacy and Assumptions of Hypothetical Learning Trajectories

Abstract

1. Introduction

- A goal is the target developmental level. Goals are based on the structure of mathematics, societal needs, and research on children’s thinking about and learning of mathematics and require input from experts in mathematics, mathematics education, educational policy, and developmental psychology [5,6,7].

- A developmental progression is a sequence of theory- and research-based, increasingly sophisticated patterns of thinking that most children pass through on the way to achieving the goal or target. Theoretically, each level serves as a foundation for successful learning of subsequent levels.

- Instructional activities include theory- and research-based curricular tasks and pedagogical strategies designed explicitly to promote the development of each level.

Learning progressions have captured the imaginations and rhetoric of school reformers and education researchers as one possible elixir for getting K-12 education “on track” … Learning progressions and research on them have the potential to improve teaching and learning; however, we need to be cautious … The enthusiasm gathering around learning progressions might lead to giving heavy weight to one possible solution when experience shows single solutions to education reform come and go.

2. Rationale of the HLT Project

2.1. Goals

- Assumption 1. Instruction in which LT levels are taught consecutively (e.g., for children at level n, using instructional activities to foster level n + 1 and then n + 2 before instruction on a goal or target-level knowledge at level n + 3) results in greater learning than instruction that immediately and solely targets level n + 3 (or higher levels), namely the “Skip-Level” or “Teach-to-Target” approach.

- Assumption 2. Instruction aligned with an LT sequence results in greater learning than instruction that either uses a traditional curriculum’s activities and sequence (business as usual) or uses the same activities as those of the LT but chosen and ordered to fit a theme-based project.

2.1.1. Assumption 1

“When we wish to fix a new thing in a pupil’s [mind], our … effort should not be so much to impress and retain it as to connect it with something already there … If we attend clearly to the connection, the connected thing will … likely … remain within recall” (pp. 101–102).

2.1.2. Assumption 2

2.2. Existing Evidence of Efficacy and Its Limitations

3. Methods

- Ensured causal interpretation of the findings via Randomized Control Trials.

- Ensured a control group received an intervention that was as similar as possible to the HLT intervention, except for a single defining attribute of the HLT construct.

- Identified each participant’s location on an LT at pretest and ensured an equivalent baseline for posttest comparison of interventions on the dependent measure(s).

3.1. Research Design to Test Assumption 1

3.2. Research Design to Test Assumption 2

4. Results

4.1. Results for Assumption 1

4.2. Results for Assumption 2

5. Discussion of Theoretical Issues

5.1. Nature of the Relation between Successive Levels

| Experiment: Domain | Published | Assumption | Method | Statistically and Practically Significant ᵇ | Reason for Non-Significance | Relation |
|---|---|---|---|---|---|---|
| Experiment 1 (n = 76 preschoolers): Counting/subitizing/cardinality | - | 1 | LT vs. TtT/Skip | No | Methodology | - |
| Experiment 2 (n = 180 pre-K): Counting/subitizing/cardinality ᵃ | - | 1 | LT vs. TtT/Skip | No | Methodology | - |
| Experiment 3 (n = 152 preschoolers): Shape composition | [66] | 1 | LT vs. TtT/Skip | Yes; ES = 0.55, p = 0.016 | - | Not necessary but strongly facilitative |
| Experiment 4 (n = 26 kindergartners): Addition and subtraction | [67] | 1 | LT vs. TtT/Skip | Yes; multiple qualitative indicators of a gain of 24% or greater | - | Not necessary but strongly facilitative |
| Experiment 5 (n = 16 preschoolers): Patterning pilot | [71] | 2 | LT vs. Unord | No; ES = 0.238 for main variable, p = 0.48 | Type of relation, faulty LT | Somewhat facilitative |
| Experiment 6 (n = 48 preschoolers): Patterning | [72,73] | 2 | LT vs. Unord | No; Unord scored higher on some measures, ns | Type of relation, faulty LT | Somewhat facilitative |
| Experiment 7 (n = 291 kindergartners): Early arithmetic | [68] | 1 | LT vs. TtT/Skip | Overall: Yes; small for those with highest entry level. Target: Yes | - | Not necessary but facilitative; near necessary for those with lowest entry level |
| Experiment 8 (n = 189 kindergartners): Length measurement | [70] | 2 | LT vs. REV vs. BAU | Yes/No; ES = 0.32 (LT vs. REV) | - | Not necessary but highly facilitative |
| Experiment 9 (n = 20 preschoolers): Cardinality | - | 1 | LT vs. TtT/Skip | No | Methodology | - |
| Experiment 10 (n = 15 preschoolers): Cardinality | [69] | 1 | LT vs. TtT/Skip | Yes; ES = 1.3, p = 0.032 (procedural fluency); ES = 1.68, p = 0.016 (conceptual understanding) | - | Necessary (or necessary and sufficient?) |

- Five of the seven participants who received the HLT-based intervention, which included prior training on the conceptual prerequisite, had (some) success on the target-concept measure; six of seven, on the target procedural-fluency measure.

- The one HLT participant who was unsuccessful on both the conceptual and the procedural-fluency task had negligible success learning the conceptual prerequisite.

- Seven of the eight participants who were trained on the target concept and skill but not the prerequisite concept had (almost) no success learning the target knowledge.

- Finally, post hoc analysis indicated that the exceptional Teach-to-Target participant who mastered both the target concept and skill not only exhibited the best pretest performance of the sample but also appeared to have learned the prerequisite concept during the pretesting.

5.2. Qualitative Differences between Successive Levels

5.3. Number of Paths to Target Knowledge

5.4. Validity of the LT

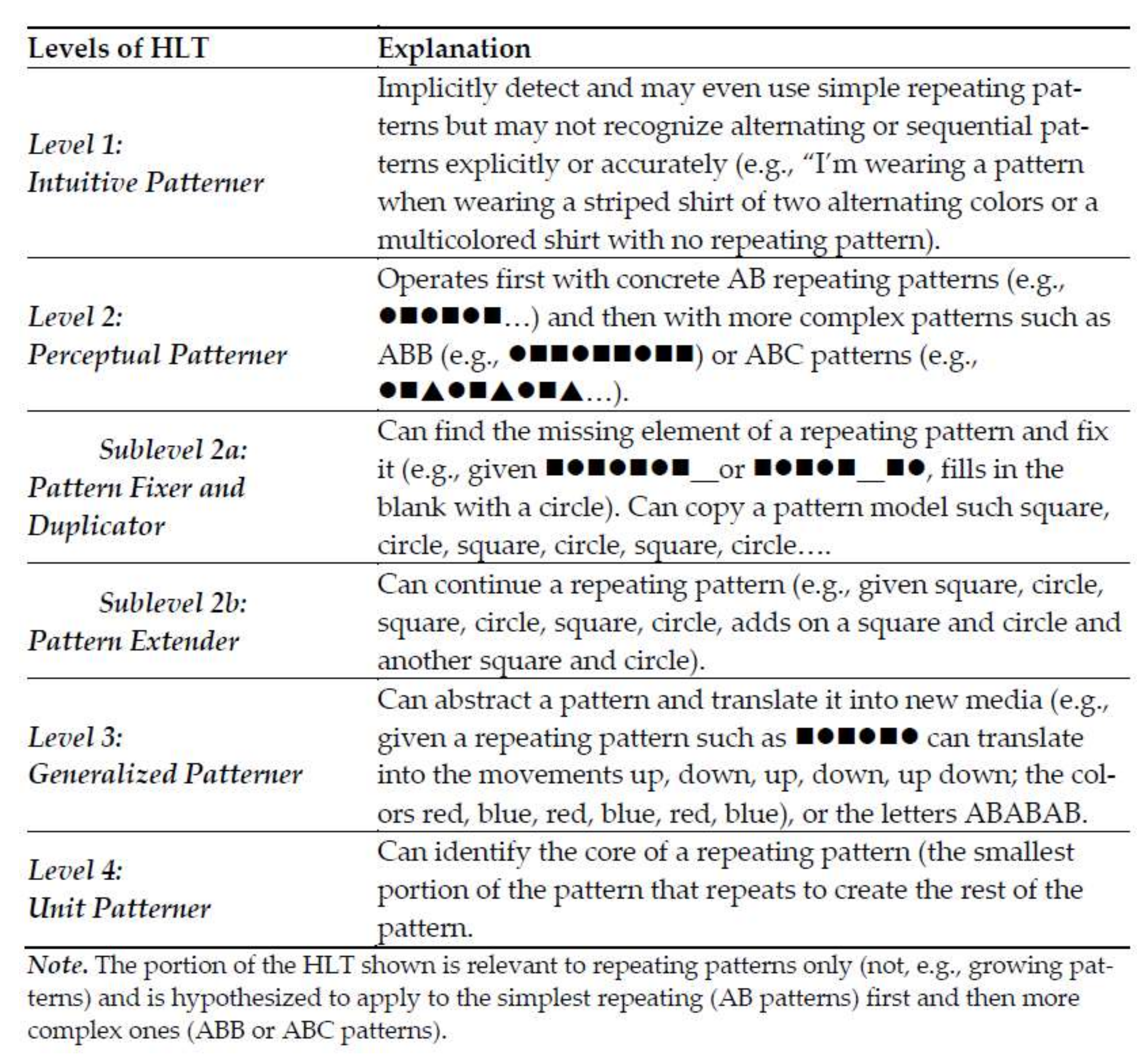

- The early use of letters to label the elements of a pattern may have fostered Level-2 competencies (e.g., extending a repeating pattern) among counterfactual participants.

- Early use of letters to label the core of a pattern may have helped some such participants achieve Level-4 competence (identifying the core of a repeating pattern). (Parenthetically, translating a pattern into different objects (listed as a Level-3 competence in Figure 1) may be more challenging and may be facilitated by an explicit understanding of the concept of a core unit (listed as a Level-4 competence in Figure 1). This conjecture is consistent not only with Baroody et al.’s [71] observations but also with Fyfe et al.’s [82] finding that using letters to identify unit cores was efficacious in promoting the ability to translate a pattern into different objects. Although an implicit consideration of the unit may naturally help some children translate a repeating pattern into different materials, systematic, explicit instruction that first uses letters to label the elements of a pattern (Level 2) and then the core of the pattern may provide a better basis for most children to tackle this challenging task.)

6. Discussion of Methodological Issues

6.1. General Methodological Challenges

6.1.1. Issues with the Starting Level

6.1.2. Sacrifice of Ecological Validity

6.1.3. Small Sample Size

6.1.4. Entangling Lower with Higher Levels of Instruction

6.1.5. Imprecise Dependent Measures

6.2. A Case in Point: Cardinality Development

6.2.1. Experiment 9: Lessons Learned, Part 1

6.2.2. Experiment 10: Lessons Learned, Part 2

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lobato, J.; Walters, C.D. A taxonomy of approaches to learning trajectories and progressions. In Compendium for Research in Mathematics Education; Cai, J., Ed.; National Council of Teachers of Mathematics: Reston, VA, USA, 2017; pp. 74–101.
2. Sarama, J.; Clements, D.H. Early Childhood Mathematics Education Research: Learning Trajectories for Young Children; Routledge: New York, NY, USA, 2009.
3. Maloney, A.P.; Confrey, J.; Nguyen, K.H. (Eds.) Learning Over Time: Learning Trajectories in Mathematics Education; Information Age Publishing: Charlotte, NC, USA, 2014.
4. Simon, M.A. Reconstructing mathematics pedagogy from a constructivist perspective. J. Res. Math. Educ. 1995, 26, 114–145.
5. Clements, D.H.; Sarama, J. Learning trajectories in mathematics education. Math. Think. Learn. 2004, 6, 81–89.
6. Fuson, K.C. Pre-K to grade 2 goals and standards: Achieving 21st century mastery for all. In Engaging Young Children in Mathematics: Standards for Early Childhood Mathematics Education; Clements, D.H., Sarama, J., DiBiase, A.-M., Eds.; Erlbaum: Mahwah, NJ, USA, 2004; pp. 105–148.
7. Wu, H.-H. Understanding Numbers in Elementary School Mathematics; American Mathematical Society: Providence, RI, USA, 2011.
8. Frye, D.; Baroody, A.J.; Burchinal, M.R.; Carver, S.; Jordan, N.C.; McDowell, J. Teaching Math to Young Children: A Practice Guide; U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance (NCEE): Washington, DC, USA, 2013.
9. Corcoran, T.; Mosher, F.A.; Rogat, A. Learning Progressions in Science: An Evidence-Based Approach to Reform; Center on Continuous Instructional Improvement, Teachers College—Columbia University: New York, NY, USA, 2009.
10. Shavelson, R.J.; Karplus, A. Reflections on learning progressions. In Learning Progressions in Science: Current Challenges and Future Directions; Alonzo, A.C., Gotwals, A.W., Eds.; Sense: Rotterdam, The Netherlands, 2012; pp. 13–26.
11. Clarke, B.; Clarke, D.; Cheeseman, J. The mathematical knowledge and understanding young children bring to school. Math. Educ. Res. J. 2006, 18, 78–102.
12. Engel, M.; Claessens, A.; Finch, M.A. Teaching students what they already know? The (mis)alignment between mathematics instructional content and student knowledge in kindergarten. Educ. Eval. Policy Anal. 2013, 35, 157–178.
13. Ginsburg, H.P.; Lee, J.S.; Boyd, J.S. Mathematics education for young children: What it is and how to promote it. Soc. Policy Rep. 2008, 22, 3–23.
14. Kilday, C.R.; Kinzie, M.B.; Mashburn, A.J.; Whittaker, J.V. Accuracy of teachers’ judgments of preschoolers’ math skills. J. Psychoeduc. Assess. 2012, 30, 48–158.
15. Lee, J.S.; Ginsburg, H.P. What is appropriate mathematics education for four-year-olds? Pre-kindergarten teachers’ beliefs. J. Early Child. Res. 2007, 5, 2–31.
16. Balfanz, R. Why do we teach children so little mathematics? Some historical considerations. In Mathematics in the Early Years; Copley, J.V., Ed.; National Council of Teachers of Mathematics: Reston, VA, USA, 1999; pp. 3–10.
17. Hachey, A. The early childhood mathematics education revolution. Early Educ. Dev. 2013, 24, 419–430.
18. Lee, J.S. Preschool teachers’ shared beliefs about appropriate pedagogy for 4-year-olds. Early Child. Educ. J. 2006, 33, 433–441.
19. Lee, J.S.; Ginsburg, H.P. Early childhood teachers’ misconceptions about mathematics education for young children in the United States. Australas. J. Early Child. 2009, 34, 37–45.
20. Li, X.; Chi, L.; DeBey, M.; Baroody, A.J. A study of early childhood mathematics teaching in the U.S. and China. Early Educ. Dev. 2015, 26, 37–41.
21. Ferguson, R.F. Can schools narrow the black-white test score gap? In The Black-White Test Score Gap; Jencks, C., Phillips, M., Eds.; Brookings Institution Press: Washington, DC, USA, 1998; pp. 318–374. ISBN 9780815746102.
22. Layzer, J.L.; Goodson, B.D.; Moss, M. Life in preschool: Observational study of early childhood programs for disadvantaged four-year-olds. Final Rep. 1993, 1, ED366468.
23. Lee, J.S. Multiple facets of inequality in racial and ethnic achievement gaps. Peabody J. Educ. 2004, 79, 51–73.
24. Lee, J.S.; Ginsburg, H.P. Preschool teachers’ beliefs about appropriate early literacy and mathematics education for low- and middle-SES children. Early Educ. Dev. 2007, 18, 111–143.
25. Lubienski, S.T.; Shelley, M.C., II. A closer look at U.S. mathematics instruction and achievement: Examinations of race and SES in a decade of NAEP data. Paper presented at the Annual Meeting of the American Educational Research Association, Chicago, IL, USA, 21–25 April 2003.
26. Bereiter, C. Does direct instruction cause delinquency? Response to Schweinhart and Weikart. Educ. Leadersh. 1986, 44, 20–21.
27. Clark, R.E.; Kirschner, P.A.; Sweller, J. Putting students on the path to learning: The case for fully guided instruction. Am. Educ. 2012, 36, 6–11.
28. Kirschner, P.A.; Sweller, J.; Clark, R.E. Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ. Psychol. 2006, 41, 75–86.
29. Rosenshine, B. The empirical support for direct instruction. In Constructivist Theory Applied to Instruction: Success or Failure? Tobias, S., Duffy, T.M., Eds.; Taylor & Francis: London, UK, 2009; pp. 201–220.
30. Rosenshine, B. Principles of instruction: Research-based strategies that all teachers should know. Am. Educ. 2012, 36, 12.
31. Clark, R.C.; Nguyen, F.; Sweller, J. Efficiency in Learning: Evidence-Based Guidelines to Manage Cognitive Load; Pfeiffer: San Francisco, CA, USA, 2006.
32. Renkl, A. The worked-out examples principle in multimedia learning. In The Cambridge Handbook of Multimedia Learning; Mayer, R.E., Ed.; Cambridge University Press: Cambridge, UK, 2005.
33. Schwonke, R.; Renkl, A.; Krieg, C.; Wittwer, J.; Alven, V.; Salden, R. The worked-example effect: Not an artefact of lousy control conditions. Comput. Hum. Behav. 2009, 25, 258–266.
34. Borman, G.D.; Hewes, G.M.; Overman, L.T.; Brown, S. Comprehensive school reform and achievement: A meta-analysis. Rev. Educ. Res. 2003, 73, 125–230.
35. Carnine, D.W.; Jitendra, A.K.; Silbert, J. A descriptive analysis of mathematics curricular materials from a pedagogical perspective: A case study of fractions. Remedial Spec. Educ. 1997, 18, 66–81.
36. Gersten, R. Direct instruction with special education students: A review of evaluation research. J. Spec. Educ. 1985, 19, 41–58.
37. Heasty, M.; McLaughlin, T.F.; Williams, R.L.; Keenan, B. The effects of using direct instruction mathematics formats to teach basic math skills to a third grade student with a learning disability. Acad. Res. Int. 2012, 2, 382–387.
38. James, W. Talks to Teachers on Psychology: And to Students on Some of Life’s Ideals; Talk Originally Given in 1892; W.W. Norton & Company: New York, NY, USA, 1958.
39. Piaget, J. Development and learning. In Piaget Rediscovered; Ripple, R.E., Rockcastle, V.N., Eds.; Cornell University Press: Ithaca, NY, USA, 1964; pp. 7–20.
40. Resnick, L.B.; Ford, W.W. The Psychology of Mathematics for Instruction; Erlbaum: Mahwah, NJ, USA, 1981.
41. Hasselbring, T.S.; Goin, L. Research Foundation and Evidence of Effectiveness for FASTT Math. 2005. Available online: http://www.tomsnyder.com/reports/ (accessed on 16 September 2005).
42. Thorndike, E.L. The Psychology of Arithmetic; Macmillan: New York, NY, USA, 1922.
43. Bezuk, N.S.; Cegelka, P.T. Effective mathematics instruction for all students. In Effective Instruction for Students with Learning Difficulties; Cegelka, P.T., Berdine, W.H., Eds.; Allyn and Bacon: Boston, MA, USA, 1995; pp. 345–384.
44. Rathmell, E.C. Using thinking strategies to teach basic facts. In Developing Computational Skills; Suydam, M.N., Reys, R.E., Eds.; 1978 Yearbook; National Council of Teachers of Mathematics: Reston, VA, USA, 1978; pp. 13–50.
45. Thornton, C.A. Emphasizing thinking strategies in basic fact instruction. J. Res. Math. Educ. 1978, 9, 214–227.
46. Thornton, C.A. Solution strategies: Subtraction number facts. Educ. Stud. Math. 1990, 21, 241–263.
47. Baroody, A.J. Using number and arithmetic instruction as a basis for fostering mathematical reasoning. In Reasoning and Sense Making in the Mathematics Classroom: Pre-K—Grade 2; Battista, M.T., Ed.; National Council of Teachers of Mathematics: Reston, VA, USA, 2016; pp. 27–69.
48. National Research Council. Adding It Up: Helping Children Learn Mathematics; Kilpatrick, J., Swafford, J., Findell, B., Eds.; National Academy Press: Washington, DC, USA, 2001.
49. National Mathematics Advisory Panel (NMAP). Foundations for Success: The Final Report of the National Mathematics Advisory Panel; U.S. Department of Education: Washington, DC, USA, 2008.
50. Vygotsky, L.S. Thought and Language; Hanfmann, E., Vakar, G., Eds.; MIT Press: Cambridge, MA, USA, 1962.
51. Vygotsky, L.S. Mind in Society; Harvard University Press: Cambridge, MA, USA, 1978.
52. Baroody, A.J. Curricular approaches to introducing subtraction and fostering fluency with basic differences in grade 1. In The Development of Number Sense: From Theory to Practice; Bracho, R., Ed.; University of Granada: Granada, Spain, 2016; pp. 161–191.
53. Butterfield, B.; Forrester, P.; Mccallum, F.; Chinnappan, M. Use of Learning Trajectories to Examine Pre-Service Teachers’ Mathematics Knowledge for Teaching Area and Perimeter. 2013. Available online: https://files.eric.ed.gov/fulltext/ED572797.pdf (accessed on 21 December 2021).
54. Broderick, J.T.; Hong, S.B. From Children’s Interests to Children’s Thinking: Using a Cycle of Inquiry to Plan Curriculum; National Association for the Education of Young Children: Washington, DC, USA, 2020.
55. Edwards, C.; Gandini, L.; Forman, G.E. The Hundred Languages of Children: The Reggio Emilia Approach to Early Childhood Education; Ablex: New York, NY, USA, 1993.
56. Helm, J.H.; Katz, L.G. Young Investigators: The Project Approach in the Early Years, 3rd ed.; Teachers College Press: New York, NY, USA, 2016.
57. Hendrick, J. (Ed.) First Steps toward Teaching the Reggio Way; Prentice-Hall: Upper Saddle River, NJ, USA, 1997.
58. Katz, L.G.; Chard, S.C. Engaging Children’s Minds: The Project Approach, 2nd ed.; Greenwood Publishing Group: Westport, CT, USA, 2000.
59. Tullis, P. The death of preschool. Sci. Am. Mind 2011, 22, 36–41.
60. Dewey, J. Experience and Education; Collier: New York, NY, USA, 1963.
61. Barrett, J.E.; Battista, M.T. Two approaches to describing the development of students’ reasoning about length: A case study for coordinating related trajectories. In Learning Over Time: Learning Trajectories in Mathematics Education; Maloney, A.P., Confrey, J., Nguyen, K.H., Eds.; Information Age Publishing: Charlotte, NC, USA, 2014; pp. 97–124.
62. Murata, A. Paths to learning ten-structured understanding of teen sums: Addition solution methods of Japanese Grade 1 students. Cogn. Instr. 2004, 22, 185–218.
63. Steffe, L.; Cobb, P. Construction of Arithmetical Meanings and Strategies; Springer: Berlin/Heidelberg, Germany, 1988.
64. Steffe, L.; Olive, J. Children’s Fractional Knowledge; Springer: Berlin/Heidelberg, Germany, 2010.
65. Clements, D.H.; Sarama, J. Experimental evaluation of the effects of a research-based preschool mathematics curriculum. Am. Educ. Res. J. 2008, 45, 443–494.
66. Clements, D.H.; Sarama, J.; Baroody, A.J.; Joswick, C.; Wolfe, C. Evaluating the efficacy of a learning trajectory for early shape composition. Am. Educ. Res. J. 2019, 56, 2509–2530.
67. Clements, D.H.; Sarama, J.; Baroody, A.J.; Joswick, C. Efficacy of a learning trajectory approach compared to a teach-to-target approach for addition and subtraction. ZDM Math. Educ. 2020, 52, 637–649.
68. Clements, D.H.; Sarama, J.; Baroody, A.J.; Kutaka, T.S.; Chernyavskiy, P.; Joswick, C.; Cong, M.; Joseph, E. Comparing the efficacy of early arithmetic instruction based on a learning trajectory and teaching-to-a-target. J. Educ. Psychol. 2021, 113, 1323–1337.
69. Baroody, A.J.; Clements, D.H.; Sarama, J. Does Use of a Learning Progression Facilitate Learning an Early Counting Concept and Skill? University of Illinois Urbana: Champaign, IL, USA, 2022; submitted.
70. Sarama, J.; Clements, D.H.; Barrett, J.E.; Cullen, C.J.; Hudyma, A. Length measurement in the early years: Teaching and learning with learning trajectories. Math. Think. Learn. 2021, 1–24.
71. Baroody, A.J.; Yilmaz, N.; Clements, D.H.; Sarama, J. Evaluating a basic assumption of learning trajectories: The case of early patterning learning. J. Math. Educ. 2021, 13, 8–32.
72. Yilmaz, N.; Baroody, A.J.; Clements, D.H.; Sarama, J.; Sahin, V. Does a Learning Trajectory Facilitate Learning to Recognize the Core Unit of a Repeating Pattern. In Proceedings of the Exploring Cognitive Processes in Mathematics; American Educational Research Association Annual Meeting, San Francisco, CA, USA, 18 April 2020.
73. Yilmaz, N.; Baroody, A.J.; Sahin, V. What do eye-tracking data say about the cognitive mechanisms underlying the pattern extension skills of young children? Poster presented at the Stanford Educational Data Science Conference, Stanford, CA, USA, 18 September 2020.
74. Fuson, K.C. Children’s Counting and Concepts of Number; Springer: Berlin/Heidelberg, Germany, 1988.
75. Slavin, R.; Smith, D. The relationship between sample sizes and effect sizes in systematic reviews in education. Educ. Eval. Policy Anal. 2009, 31, 500–506.
76. Cronbach, L.J.; Ambron, S.R.; Dornbusch, S.M.; Hess, R.O.; Hornik, R.C.; Phillips, D.C. Toward Reform of Program Evaluation: Aims, Methods, and Institutional Arrangements; Jossey-Bass: San Francisco, CA, USA, 1980.
77. Sarama, J.; Clements, D.H.; Barrett, J.E.; van Dine, D.W.; McDonel, J.S. Evaluation of a learning trajectory for length in the early years. ZDM 2011, 43, 667–680.
78. Duncan, R.G.; Gotwals, A.W. A tale of two progressions: On the benefits of careful comparisons. Sci. Educ. 2015, 99, 410–416.
79. Lesh, R.; Yoon, C. Evolving communities of mind—Where development involves several interacting and simultaneously developing strands. Math. Think. Learn. 2004, 6, 205–226.
80. Clements, D.H.; Sarama, J. Building Blocks, Volumes 1 and 2; McGraw-Hill: Columbus, OH, USA, 2013.
81. Baratta-Lorton, M. Workjobs: Activity-Centered Learning for Early Childhood; Addison-Wesley: Menlo Park, CA, USA, 1972.
82. Fyfe, E.R.; McNeil, N.M.; Rittle-Johnson, B. Easy as ABCABC: Abstract language facilitates performance on a concrete patterning. Child Dev. 2015, 86, 927–935.
83. Ginsburg, H.P.; Baroody, A.J. Test of Early Mathematics Ability, 3rd ed.; Pro-Ed: Austin, TX, USA, 2006.
84. Clements, D.H.; Sarama, J.; Wolfe, C.B.; Day-Hess, C.A. REMA—Research-Based Early Mathematics Assessment; Kennedy Institute, University of Denver: Denver, CO, USA, 2008.
85. Baroody, A.J.; Purpura, D.J. Early number and operations: Whole numbers. In Compendium for Research in Mathematics Education; Cai, J., Ed.; National Council of Teachers of Mathematics: Reston, VA, USA, 2017; pp. 308–354.
86. Le Corre, M.; van de Walle, G.A.; Brannon, E.; Carey, S. Revisiting the performance/competence debate in the acquisition of counting as a representation of the positive integers. Cogn. Psychol. 2006, 52, 130–169.
87. Sarnecka, B.W.; Carey, S. How counting represents number: What children must learn and when they learn it. Cognition 2008, 108, 662–674.
88. Palmer, A.; Baroody, A.J. Blake’s development of the number words “one,” “two,” and “three”. Cogn. Instr. 2011, 29, 265–296.
89. Wynn, K. Children’s acquisition of the counting words in the number system. Cogn. Psychol. 1992, 24, 220–251.
90. Dixon, J.A.; Moore, C.F. The logic of interpreting evidence of developmental ordering: Strong inference and categorical measures. Dev. Psychol. 2000, 36, 826–834.
91. Rittle-Johnson, B.; Fyfe, E.R.; Loehr, A.L.; Miller, M.R. Beyond numeracy in preschool: Adding patterns to the equation. Early Child. Res. Q. 2015, 31, 101.

| Knowledge of the prerequisite (count-to-cardinal) concept before training on the target (cardinal-to-count) concept | Understanding of target concept at posttest: No | Understanding of target concept at posttest: Yes |
|---|---|---|
| Yes | A: 1 | B: 7 |
| No | C: 8 | D: 0 |

| Knowledge of the prerequisite (count-to-cardinal) concept before training on the target (counting-out) skill | Fluency of target skill at posttest: No or little | Fluency of target skill at posttest: Modest or good |
|---|---|---|
| Yes | A: 0 | B: 8 |
| No | C: 8 | D: 0 |
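One way to read the two contingency tables above is to ask which cells are empty. The following minimal Python sketch assumes a simplified decision rule that is not taken from the article: an empty cell D (no child reached the target without the prerequisite) is treated as consistent with the prerequisite being necessary, and an empty cell A (no child with the prerequisite missed the target) as consistent with it being sufficient. The names `ContingencyTable` and `classify_relation` are hypothetical, the counts are copied from the tables as printed, and the output is a descriptive label only, not a significance test or the authors' analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContingencyTable:
    """2 x 2 counts; cell labels A-D follow the tables above."""
    a: int  # prerequisite known, target NOT achieved
    b: int  # prerequisite known, target achieved
    c: int  # prerequisite not known, target NOT achieved
    d: int  # prerequisite not known, target achieved


def classify_relation(t: ContingencyTable) -> str:
    """Label the pattern of counts (assumed rule; descriptive only)."""
    # Necessary pattern: nobody reached the target without the prerequisite.
    necessary = t.d == 0 and t.b > 0
    # Sufficient pattern: nobody who had the prerequisite missed the target.
    sufficient = t.a == 0 and t.b > 0
    if necessary and sufficient:
        return "consistent with necessary and sufficient"
    if necessary:
        return "consistent with necessary"
    if sufficient:
        return "consistent with sufficient"
    return "neither pattern"


# Counts copied from the two tables (target concept, then target skill).
concept = ContingencyTable(a=1, b=7, c=8, d=0)
fluency = ContingencyTable(a=0, b=8, c=8, d=0)

print("Target concept:", classify_relation(concept))
print("Target skill:  ", classify_relation(fluency))
```

Under this assumed rule, the two tables fall into different categories only because of the single child in cell A of the concept table, which may be one reason the relation for Experiment 10 is hedged as "Necessary (or necessary and sufficient?)". With samples this small, a one-child difference should not be over-interpreted.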

| Aspect of Cardinal Number | Conceptual Basis | Mapping | Direct Measure |
|---|---|---|---|
| Pre-meaningful counting (verbal subitizing-based) cardinality development | | | |
| Level 1A: number recognition (n-knower levels) | Cardinal representation of a small number underlies immediate subitizing of 1, 2, or 3 | Quantity-to-word (via subitizing) | How-many task |
| Level 1B: putting out a requested n (also commonly called n-knower levels) | Cardinal representation of small numbers used to subitize when 1, 2, or 3 have been put out | Word-to-quantity (via subitizing) | Give-n task |
| Counting-based cardinality development | | | |
| Level 2: cardinality-principle knower (CP-knower) level ᵃ | Count→cardinal concept or cardinality principle (last number word = total) | Quantity-to-word (via counting) | How-many task |
| Level 3: applications of CP: number-constancy concepts | 3A. Counting-based conservation of cardinal identity: addition or subtraction, but not irrelevant physical transformations, changes the total; 3B. Counting-based cardinal equivalence: sets with the same number are equal despite looking different | Quantity-to-word over a quantity transformation; comparing two quantity-to-word mappings | 1. Conservation of cardinal identity; 2. Cardinal equivalence |
| Level 4: counting out a requested n | Cardinal→count concept (a cardinal number = the last number word used if a set is counted) | Word-to-quantity (via counting) | Predict last n word and give-n tasks |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Baroody, A.J.; Clements, D.H.; Sarama, J. Lessons Learned from 10 Experiments That Tested the Efficacy and Assumptions of Hypothetical Learning Trajectories. Educ. Sci. 2022, 12, 195. https://doi.org/10.3390/educsci12030195
