Identifying Factors of Students’ Failure in Blended Courses by Analyzing Students’ Engagement Data

Abstract

1. Introduction

1.1. Literature Review

- the use of technology

- the interaction process

- the characteristics of the students

- the characteristics of the class

1.2. Our Research Objective

2. Methods

2.1. Research Framework

- Data Collection

- Risk Model Development

- Risk Model Verification

2.2. Data Collection

- Student logins to the system;

- Time students spent on the system;

- Time students spent on specific activities;

- Activities completed by students;

- Activities started by students;

- Students’ forum usage;

- Messages sent by students through the LMS;

- Students’ clicks on a resource;

- Number of times a resource has been accessed by students;

- Students’ grades/scores on graded activities.
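Indicators of this kind are usually derived from raw LMS event logs. The following is a minimal sketch of such an aggregation, assuming a hypothetical log of `(student_id, event_type, resource_id, seconds)` records. The record layout, the event names, and the `Engagement` class are illustrative assumptions and do not correspond to any particular LMS export.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Engagement:
    """Per-student engagement counters (illustrative subset of the indicators)."""
    logins: int = 0
    seconds_on_system: int = 0
    activities_started: int = 0
    activities_completed: int = 0
    forum_posts: int = 0
    messages_sent: int = 0
    resource_views: dict = field(default_factory=lambda: defaultdict(int))


# Hypothetical mapping from event type to the counter it increments.
COUNTERS = {
    "login": "logins",
    "activity_started": "activities_started",
    "activity_completed": "activities_completed",
    "forum_post": "forum_posts",
    "message_sent": "messages_sent",
}


def aggregate(events):
    """Fold (student_id, event_type, resource_id, seconds) records into counters."""
    students = defaultdict(Engagement)
    for student_id, event_type, resource_id, seconds in events:
        record = students[student_id]
        record.seconds_on_system += seconds
        if event_type == "resource_view":
            record.resource_views[resource_id] += 1
        elif event_type in COUNTERS:
            name = COUNTERS[event_type]
            setattr(record, name, getattr(record, name) + 1)
    return dict(students)


# Made-up sample log, for illustration only.
sample_log = [
    ("s1", "login", None, 0),
    ("s1", "resource_view", "slides-week1", 300),
    ("s1", "resource_view", "slides-week1", 120),
    ("s1", "activity_started", "quiz-1", 0),
    ("s1", "activity_completed", "quiz-1", 600),
    ("s2", "login", None, 0),
    ("s2", "forum_post", "forum-general", 90),
]
features = aggregate(sample_log)
```

Keeping one counter per indicator makes it straightforward to export a row per student for later statistical analysis.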

2.3. Risk Model Development

2.4. Risk Model Verification

2.5. Applying Our Methodology

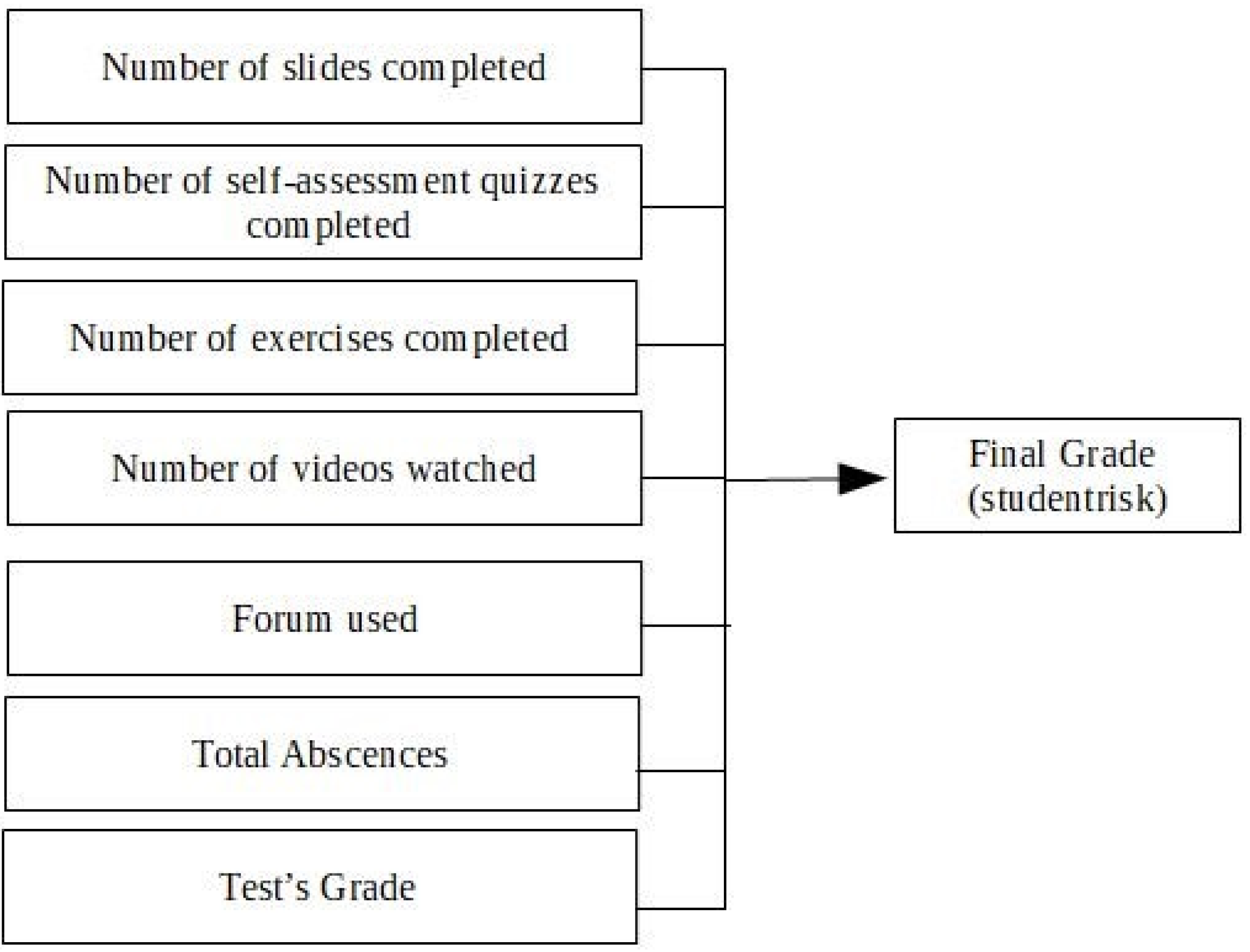

- Number of slides completed (“0”: not completed, “1”: completed);

- Number of self-assessment quizzes completed (“0”: not completed, “1”: completed);

- Number of exercises done/completed;

- Number of videos watched/completed;

- Forum used (“0”: not used, “1”: used);

- Total absences (skipping class);

- Test grade (before the final exams).
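Variables of this kind can serve as predictors in a binary logistic regression whose outcome is an at-risk flag. The following is a minimal, dependency-free sketch of such a fit by gradient ascent, using only two of the listed predictors (test grade and total absences). The toy records are made up for illustration and are not the study's data, and the fitting routine is a generic one, not the software used to produce the reported results.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, learning_rate=0.05, iterations=5000):
    """Fit [intercept, w1, ..., wk] by gradient ascent on the mean log-likelihood."""
    n, k = len(rows), len(rows[0])
    weights = [0.0] * (k + 1)
    for _ in range(iterations):
        gradient = [0.0] * (k + 1)
        for x, y in zip(rows, labels):
            p = sigmoid(weights[0] + sum(w * v for w, v in zip(weights[1:], x)))
            error = y - p  # derivative of the log-likelihood w.r.t. the logit
            gradient[0] += error
            for j, v in enumerate(x):
                gradient[j + 1] += error * v
        weights = [w + learning_rate * g / n for w, g in zip(weights, gradient)]
    return weights


def predict_risk(weights, x):
    """Estimated probability that a student with predictors x is at risk."""
    return sigmoid(weights[0] + sum(w * v for w, v in zip(weights[1:], x)))


# Toy data (hypothetical): (test grade, total absences) and at-risk flag.
toy_rows = [(9, 0), (8, 1), (7, 2), (6, 1), (5, 1), (7, 4), (4, 3),
            (7, 2), (4, 3), (5, 4), (3, 5), (6, 5)]
toy_labels = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

weights = fit_logistic(toy_rows, toy_labels)
low_risk = predict_risk(weights, (9, 0))   # high grade, no absences
high_risk = predict_risk(weights, (3, 5))  # low grade, many absences
```

In practice a statistics package would be used instead, since it also reports standard errors, significance values, and goodness-of-fit statistics.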

3. Results

3.1. Binary Logistic Regression Analysis Outcome (Course 1)

3.2. Binary Logistic Regression Analysis Outcome (Course 2)

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Statistic | Value |
|---|---|
| Cox & Snell R Square | 0.566 |
| Nagelkerke R Square | 0.799 |
| Hosmer & Lemeshow Test (Sig. value) | 0.398 |
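The two pseudo R-square values are related: Nagelkerke's index is the Cox & Snell index divided by its maximum attainable value, which depends only on the intercept-only (null) log-likelihood and the sample size. The sketch below checks that relation under one assumption that is not stated in the text: that the class sizes are the row totals of the first classification table (100 not at risk, 44 at risk). The function names are illustrative.

```python
import math


def null_log_likelihood(n_negative, n_positive):
    """Log-likelihood of the intercept-only model for a binary outcome."""
    n = n_negative + n_positive
    return (n_negative * math.log(n_negative / n)
            + n_positive * math.log(n_positive / n))


def nagelkerke_from_cox_snell(r2_cox_snell, n_negative, n_positive):
    """Rescale Cox & Snell R^2 by its maximum, 1 - exp(2 * LL0 / n)."""
    n = n_negative + n_positive
    r2_max = 1.0 - math.exp(2.0 * null_log_likelihood(n_negative, n_positive) / n)
    return r2_cox_snell / r2_max


# Assumption: 100 not-at-risk and 44 at-risk students (classification table row totals).
r2_nagelkerke = nagelkerke_from_cox_snell(0.566, 100, 44)
print(round(r2_nagelkerke, 3))
```

Under that assumption the rescaled value comes out at about 0.799, in line with the reported Nagelkerke R Square.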

Classification Table (Step 1; observed variable: srisk)

| Observed | Predicted Srisk: Not at Risk | Predicted Srisk: at Risk | Percentage Correct |
|---|---|---|---|
| Not at risk | 95 | 5 | 95.0 |
| At risk | 6 | 38 | 86.4 |
| Overall percentage | | | 92.4 |
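The percentages in the classification table follow directly from the four cell counts. As a short worked check (the function name is illustrative), the not-at-risk and at-risk rows correspond to what is commonly called specificity and sensitivity, and the overall percentage to accuracy:

```python
def percentages_correct(tn, fp, fn, tp):
    """Return (not-at-risk %, at-risk %, overall %) from confusion-matrix counts."""
    not_at_risk = 100.0 * tn / (tn + fp)   # specificity
    at_risk = 100.0 * tp / (fn + tp)       # sensitivity
    overall = 100.0 * (tn + tp) / (tn + fp + fn + tp)
    return round(not_at_risk, 1), round(at_risk, 1), round(overall, 1)


# Counts from the table: 95 and 5 in the not-at-risk row, 6 and 38 in the at-risk row.
result = percentages_correct(tn=95, fp=5, fn=6, tp=38)
print(result)  # (95.0, 86.4, 92.4)
```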

| Variable | B (Coefficient Value) | Sig. |
|---|---|---|
| Test Grade | −0.654 | 0.000 |
| Total Absences | 0.463 | 0.002 |
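Logistic regression coefficients are easier to read as odds ratios, obtained by exponentiating B. The sketch below does this for the two coefficients above, assuming (as is conventional, but not stated in the table) that the modelled outcome is the at-risk category.

```python
import math

# B values copied from the coefficients table.
coefficients = {"Test Grade": -0.654, "Total Absences": 0.463}

# exp(B) is the multiplicative change in the odds per one-unit increase.
odds_ratios = {name: math.exp(b) for name, b in coefficients.items()}

for name, ratio in odds_ratios.items():
    print(f"{name}: odds ratio = {ratio:.3f}")
```

Under that assumption, each additional unit of test grade multiplies the odds of being at risk by roughly 0.52, while each additional absence multiplies them by roughly 1.59.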

| Statistic | Value |
|---|---|
| Cox & Snell R Square | 0.542 |
| Nagelkerke R Square | 0.743 |
| Hosmer & Lemeshow Test (Sig. value) | 0.199 |

Classification Table (Step 1; observed variable: studentrisk)

| Observed | Predicted Studrisk: Not at Risk | Predicted Studrisk: at Risk | Percentage Correct |
|---|---|---|---|
| Not at risk | 137 | 13 | 91.3 |
| At risk | 12 | 72 | 85.7 |
| Overall percentage | | | 89.3 |

| Variable | B (Coefficient Value) | Sig. |
|---|---|---|
| Number of Slides Completed | −4.666 | 0.000 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Georgakopoulos, I.; Chalikias, M.; Zakopoulos, V.; Kossieri, E. Identifying Factors of Students’ Failure in Blended Courses by Analyzing Students’ Engagement Data. Educ. Sci. 2020, 10, 242. https://doi.org/10.3390/educsci10090242
