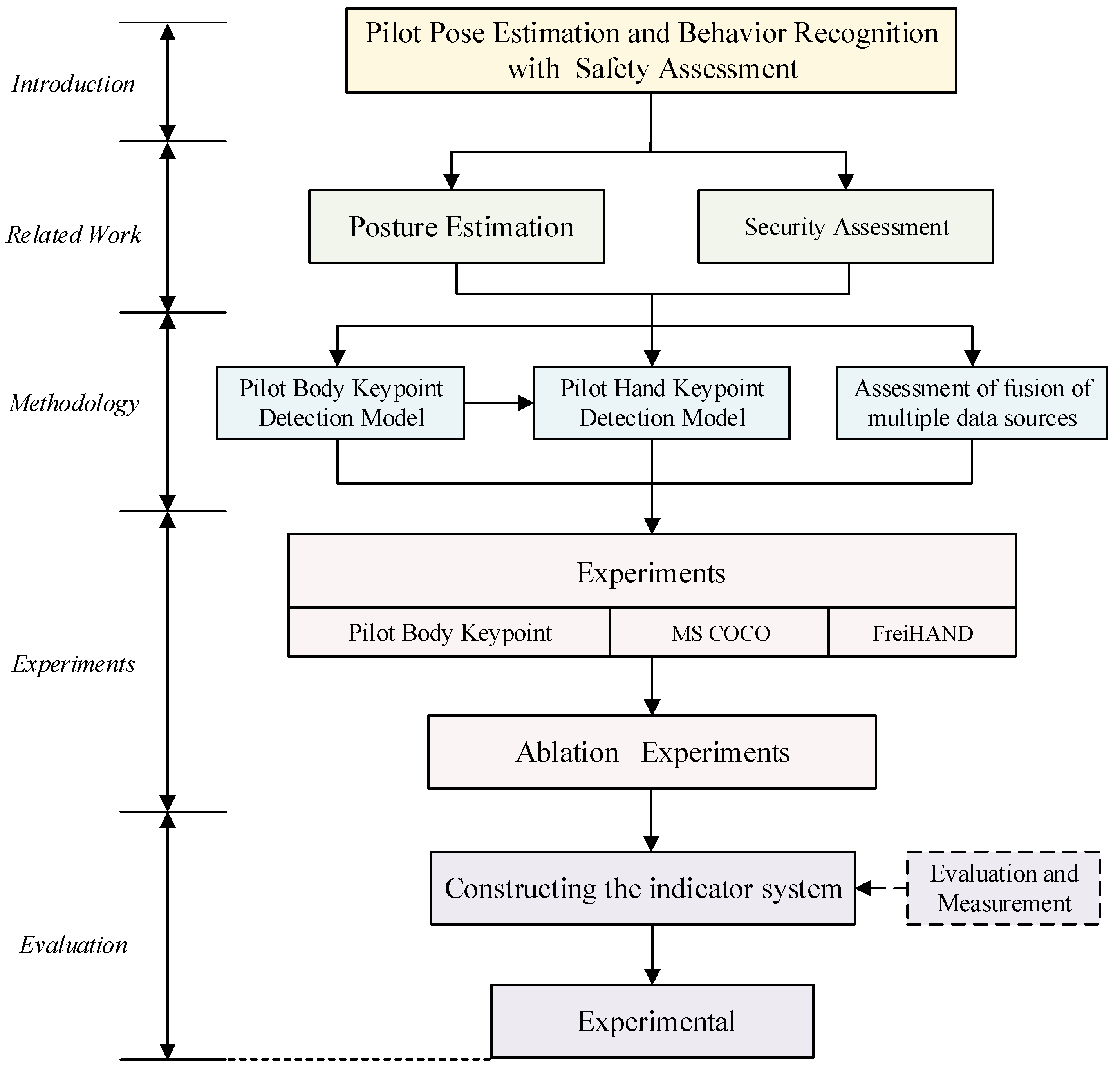

A Lightweight Framework for Pilot Pose Estimation and Behavior Recognition with Integrated Safety Assessment

Abstract

1. Introduction

In pilot action recognition, safety assessment is critical to reliable system operation. Accurately assessing the behavioral state of pilots helps improve flight safety and provides an objective basis for flight training and operational standards. However, traditional data-driven assessments often rely on single-modal data [15], such as visual data, which cannot fully reflect the actual state of pilots, particularly under complex environments, occlusions, or dynamic conditions. Multimodal data fusion (e.g., combining visual data, inertial measurement units, and physiological signals) offers a way to evaluate system performance more completely [16]. Integrating multiple modalities captures pilots' behavioral characteristics more fully and makes assessments more robust, especially in complex environments [17]. Recent studies have applied Fault Tree Analysis, Bayesian Networks, and machine learning-based fusion models [18] to multimodal information, demonstrating the potential of combining multiple data sources to assess pilot state and workload [19]. In addition, explainable AI techniques [20] can reveal which features or sensor inputs contribute most to model predictions, improving interpretability and supporting objective safety assessment. Nevertheless, existing assessment methods still fall short in the design of evaluation metrics, so they cannot fully exploit multimodal data and fail to meet practical requirements in complex environments.

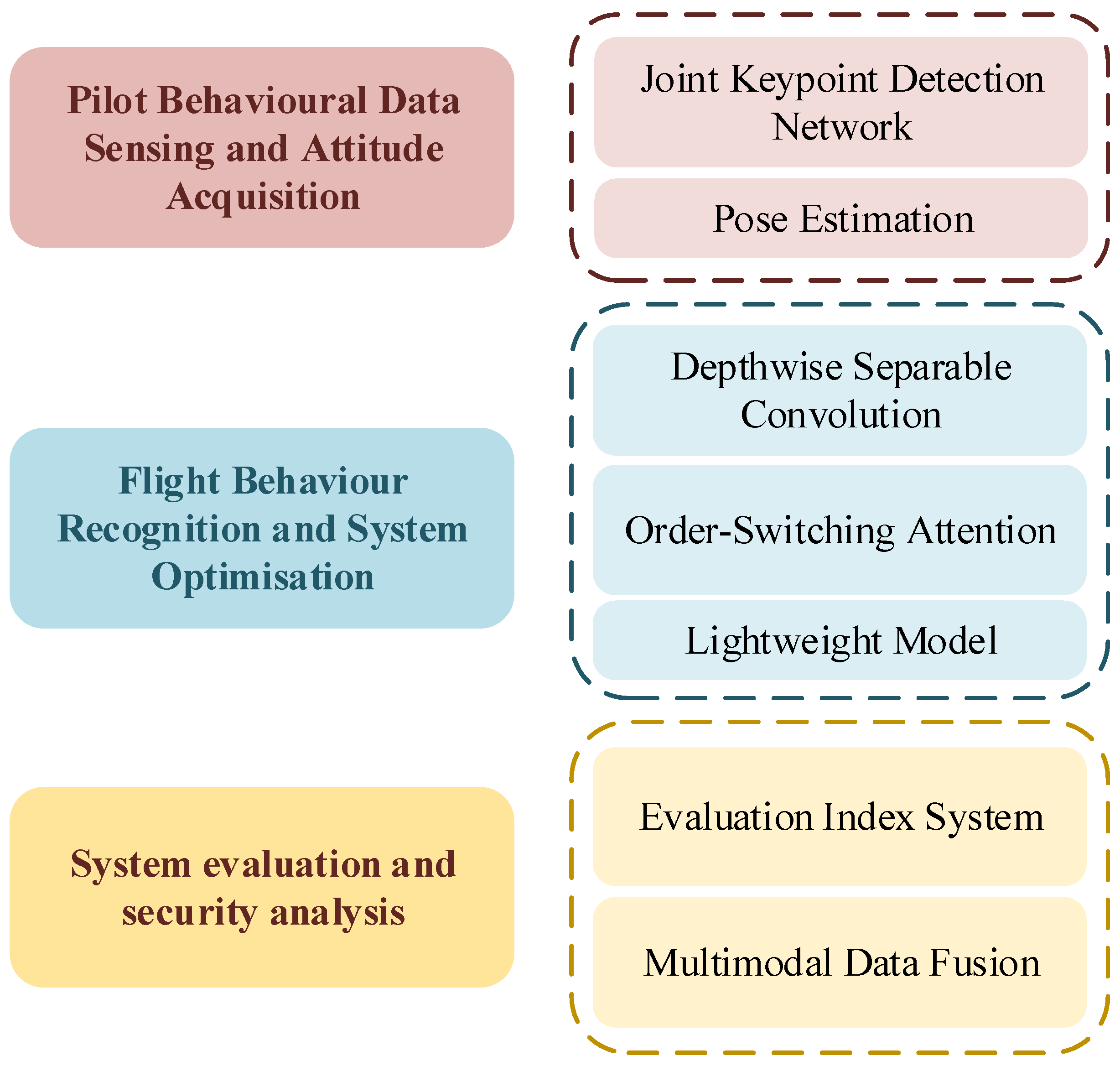

In summary, although existing methods have made progress in pilot pose estimation and behavior recognition, two key challenges remain: (1) Transformer-based methods improve global modeling but have high computational complexity, which makes real-time inference difficult in resource-constrained scenarios; (2) existing evaluation methods lack a well-founded metric system, so they cannot fully exploit multimodal data or meet practical requirements in complex environments. To address these challenges, this paper proposes a lightweight framework for pilot pose estimation and behavior recognition and introduces multimodal data fusion to evaluate system performance. The main contributions of this paper are as follows:

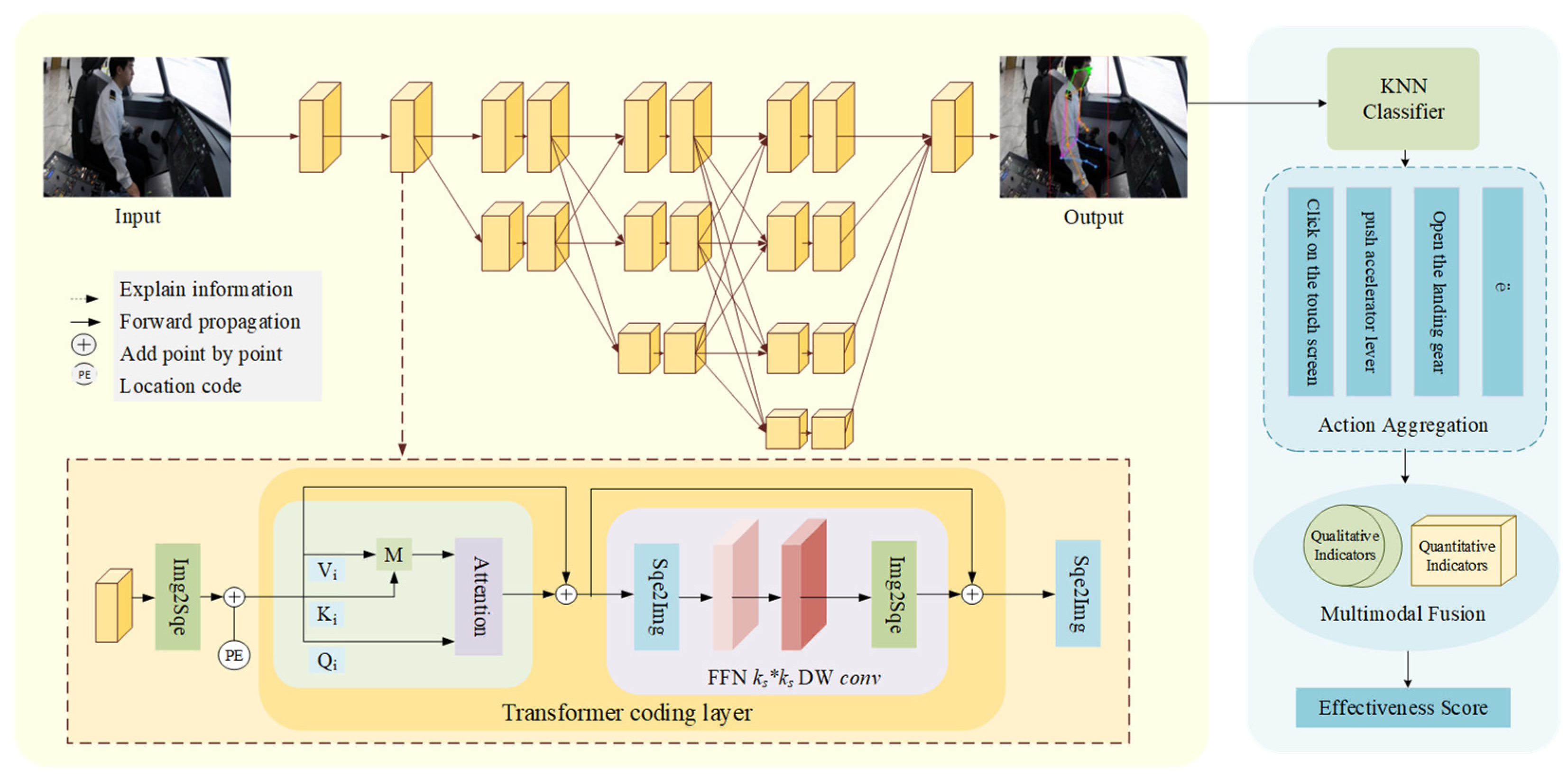

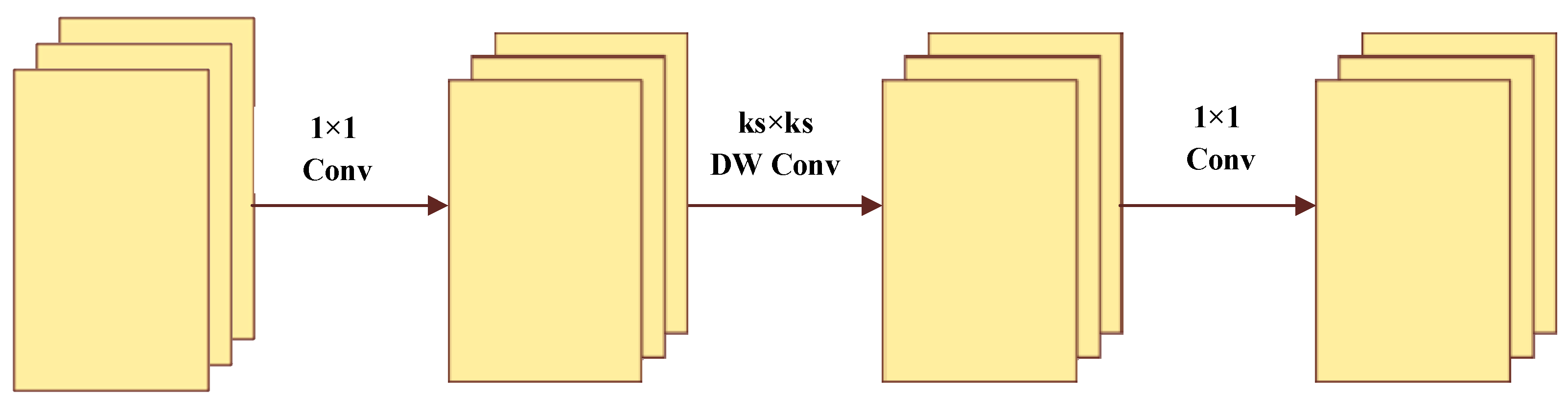

- This paper proposes a vision Transformer framework for pilot pose estimation that combines order-switched attention with standard self-attention in a collaborative optimization strategy. Depth-wise separable convolutions are introduced to strengthen local feature extraction, and hand and limb keypoint detection are optimized jointly, improving both the accuracy and the efficiency of pilot keypoint localization.

- The proposed model is validated through extensive experiments, which show improved accuracy and efficiency of pilot keypoint localization in complex environments.

- Based on extensive experiments and practice, a metric system is established to provide a reliable basis for system safety assessment. In addition, multimodal data fusion is introduced, and the system’s reliability and safety are evaluated with this metric system to verify its robustness and stability in complex environments.
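The contributions above mention depth-wise separable convolutions as a lightweight way to extract local features. The following is a minimal sketch of why they are lightweight, comparing weight counts with a standard convolution. The layer sizes (64 input channels, 64 output channels, 3 × 3 kernel) and the function names are hypothetical, chosen only for illustration; they are not taken from the paper's architecture.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k standard convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, chosen only for illustration.
c_in, c_out, k = 64, 64, 3
std = standard_conv_params(c_in, c_out, k)
dws = depthwise_separable_params(c_in, c_out, k)
ratio = dws / std

print(f"standard: {std}, depthwise separable: {dws}, ratio: {ratio:.3f}")
```

For this hypothetical layer the separable form needs about one eighth of the weights, which matches the general ratio 1/c_out + 1/k².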

2. Related Research Progress

2.1. Posture Estimation and Behavior Recognition

2.2. Safety Assessment

3. Methodology

3.1. Pilot Body Keypoint Detection Model

3.1.1. HRNet-Former

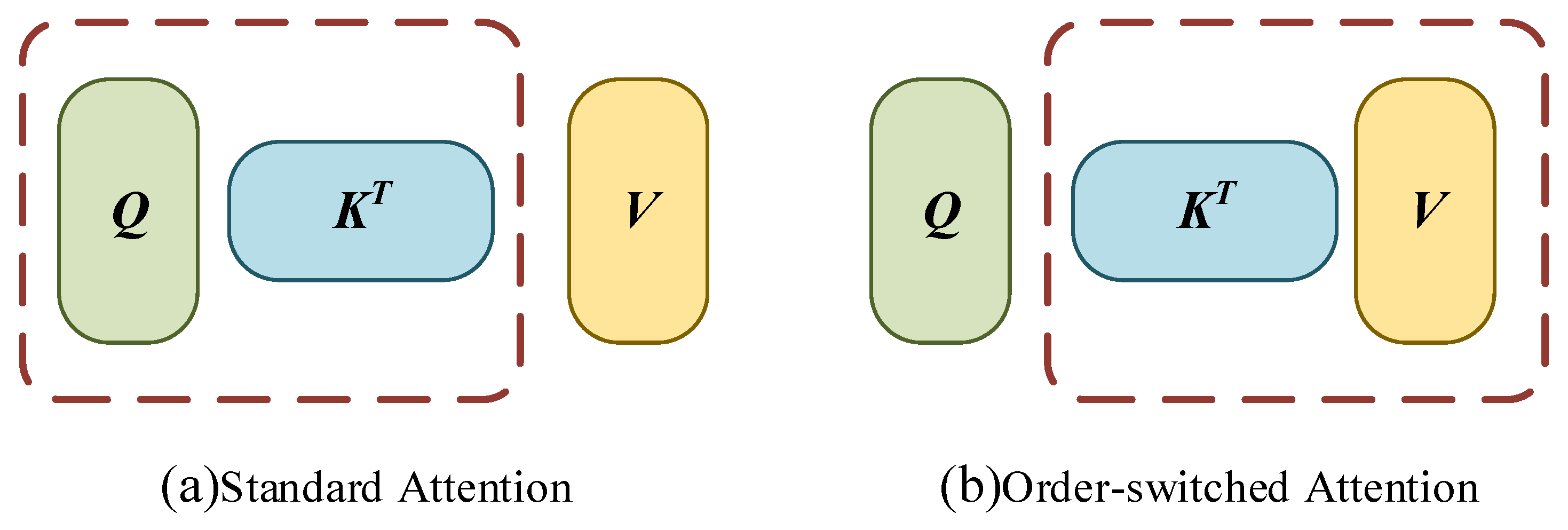

3.1.2. Standard Attention Module and Order-Switched Attention Module

3.2. Pilot Hand Keypoint Detection Model

3.3. Assessment of Multi-Source Data Fusion

4. Discussion

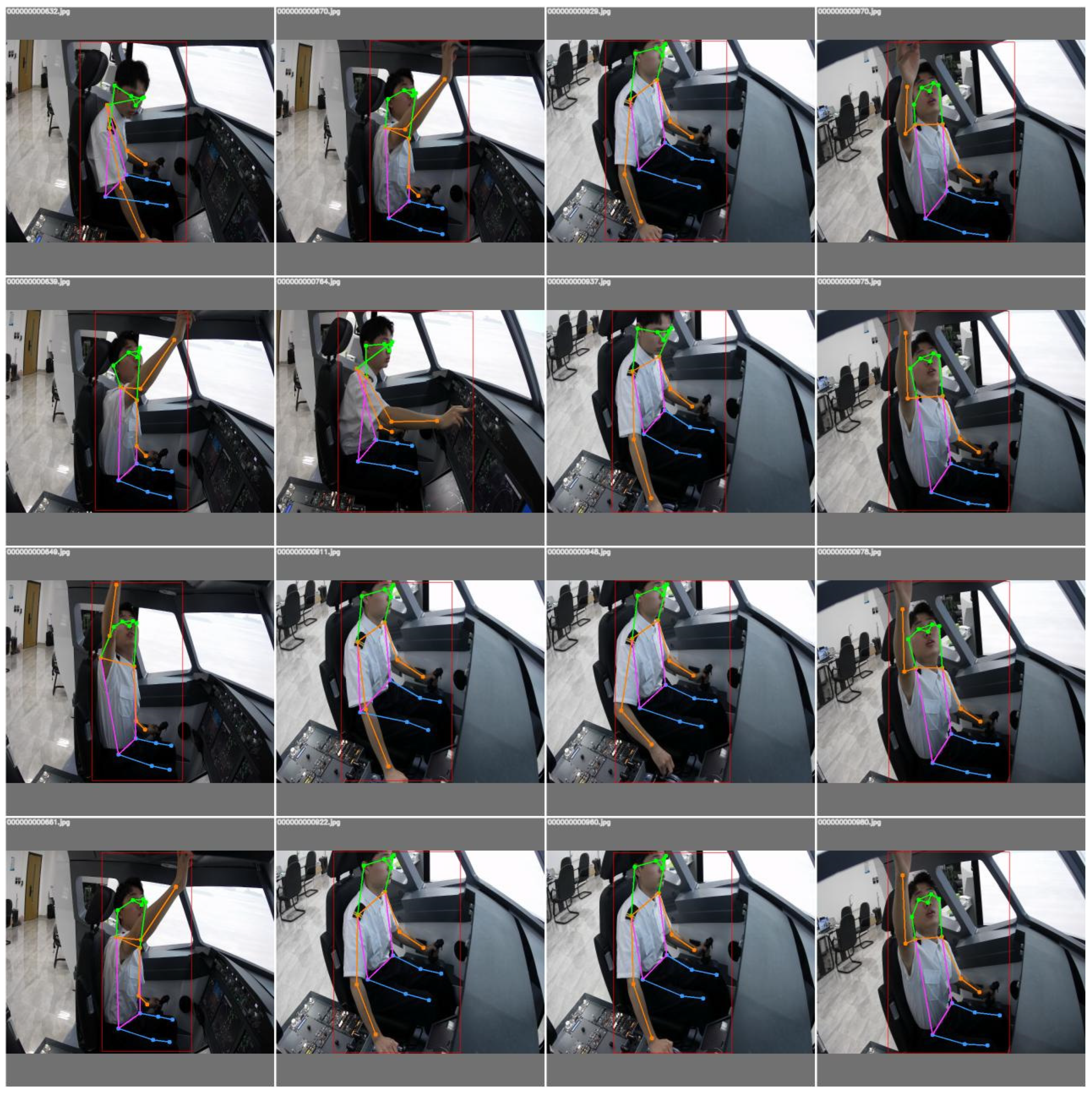

4.1. Pilot Body Keypoint Detection Dataset

4.1.1. Dataset Source

4.1.2. Evaluation Criteria

4.1.3. Experimental Setup

4.1.4. Result Analysis

4.2. MS COCO Dataset

4.2.1. Introduction to the MS COCO Dataset

4.2.2. Experimental Settings

4.2.3. Analysis of Experimental Results

4.3. FreiHAND Hand Keypoint Dataset

4.3.1. Introduction to the FreiHAND Hand Keypoint Dataset

4.3.2. Analysis of Experimental Results

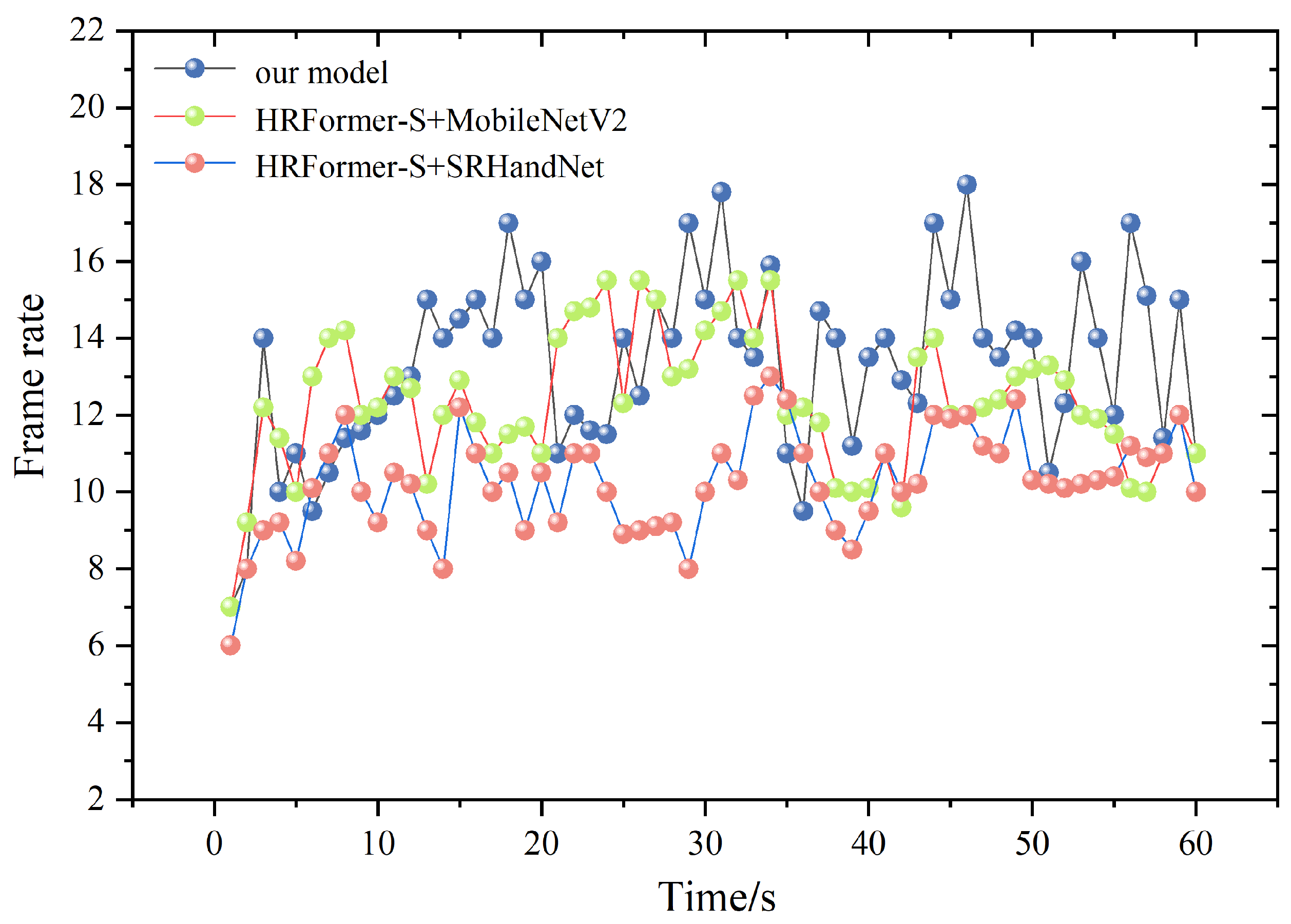

4.4. Joint Deployment of Limb and Hand Keypoints

4.5. Ablation Experiment

5. Evaluation

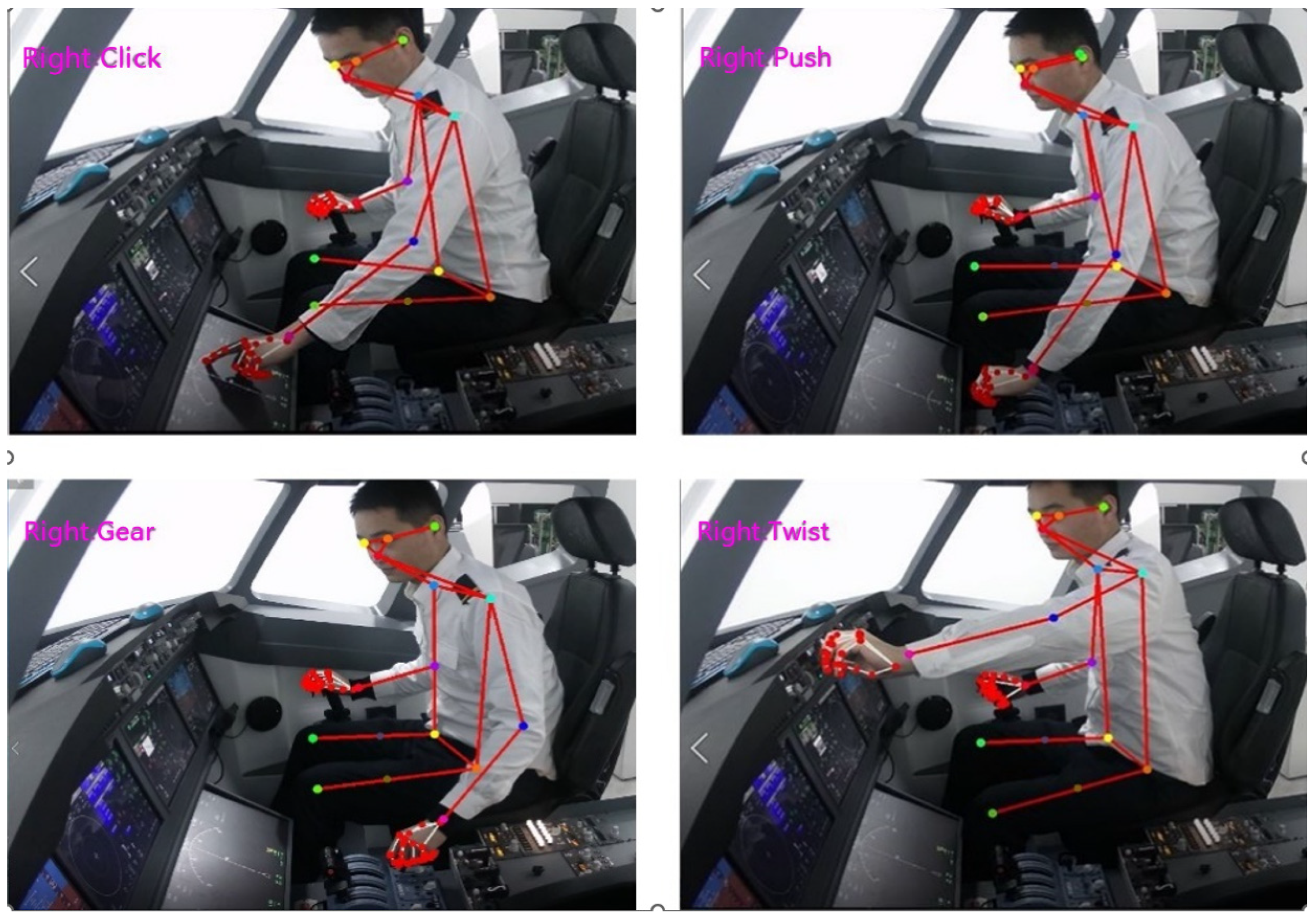

5.1. The Construction of a Pilot Behavior Recognition Indicator System

5.2. Experimental Content

5.3. Analysis of Experimental Results

6. Summary

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSP | Crew Standard Operating Procedures |
| OKS | Object Keypoint Similarity |
| AP | Average Precision |
| AR | Average Recall |
| PCK | Percentage of Correct Keypoints |
| MS COCO | Microsoft Common Objects in Context |
| FreiHAND | Freiburg Hand Dataset |
| FFN | Feed-Forward Network |
| GFLOPs | Giga Floating-Point Operations |
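Two of the metrics abbreviated above, OKS and PCK, can be sketched in a few lines. This is a minimal illustration of the commonly used definitions, not the paper's evaluation code: the function names, the per-keypoint constants `k`, the object area, the reference length, and the threshold `alpha` are all assumptions picked for the example.

```python
import math


def oks(pred, gt, visible, area, k):
    """Object Keypoint Similarity for one instance.

    pred, gt: lists of (x, y); visible: list of bools;
    area: object scale s^2 in pixels^2; k: per-keypoint constants.
    """
    total, count = 0.0, 0
    for (px, py), (gx, gy), v, ki in zip(pred, gt, visible, k):
        if not v:
            continue  # unlabeled keypoints do not contribute
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        total += math.exp(-d2 / (2.0 * area * ki ** 2))
        count += 1
    return total / count if count else 0.0


def pck(pred, gt, ref_length, alpha=0.2):
    """Fraction of keypoints within alpha * ref_length of the ground truth."""
    hits = sum(
        math.hypot(px - gx, py - gy) <= alpha * ref_length
        for (px, py), (gx, gy) in zip(pred, gt)
    )
    return hits / len(gt)


# Hypothetical keypoints, used only to exercise the two functions.
gt = [(10.0, 10.0), (20.0, 20.0), (30.0, 30.0)]
pred = [(10.0, 10.0), (23.0, 24.0), (30.0, 50.0)]
visible = [True, True, True]
k = [0.5, 0.5, 0.5]

oks_value = oks(pred, gt, visible, area=100.0, k=k)
pck_value = pck(pred, gt, ref_length=50.0, alpha=0.2)
print(f"OKS = {oks_value:.3f}, PCK@0.2 = {pck_value:.3f}")
```

AP and AR are then obtained by thresholding OKS at several levels and averaging precision and recall over those thresholds, which this sketch does not attempt.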

References

- Liang, B.; Chen, Y.; Wu, H. A Conception of Flight Test Mode for Future Intelligent Cockpit. In Proceedings of the 2020 Chinese Automation Congress (CAC), Shanghai, China, 6–8 November 2020; pp. 3260–3264. [Google Scholar]

- Li, Q.; Ng, K.K.H.; Yiu, C.Y.; Yuan, X.; So, C.K.; Ho, C.C. Securing Air Transportation Safety through Identifying Pilot’s Risky VFR Flying Behaviours: An EEG-Based Neurophysiological Modelling Using Machine Learning Algorithms. Reliab. Eng. Syst. Saf. 2023, 238, 109449. [Google Scholar] [CrossRef]

- Wang, A.; Chen, H.; Zheng, C.; Zhao, L.; Liu, J.; Wang, L. Evaluation of Random Forest for Complex Human Activity Recognition Using Wearable Sensors. In Proceedings of the 2020 International Conference on Networking and Network Applications (NaNA), Haikou, China, 10–13 December 2020; pp. 310–315. [Google Scholar]

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186. [Google Scholar] [CrossRef]

- Li, Y.; Wang, C.; Cao, Y.; Liu, B.; Luo, Y.; Zhang, H. A-HRNet: Attention Based High Resolution Network for Human Pose Estimation. In Proceedings of the 2020 Second International Conference on Transdisciplinary AI (transai), Irvine, CA, USA, 21–23 September 2020; pp. 75–79. [Google Scholar]

- Wang, Z.; Liu, Y.; Zhang, E. Pose Estimation for Cross-Domain Non-Cooperative Spacecraft Based on Spatial-Aware Keypoints Regression. Aerospace 2024, 11, 948. [Google Scholar] [CrossRef]

- Su, X.; Xu, H.; Zhao, J.; Zhang, F.; Chen, X. GM-HRNet: Human Pose Estimation Based on Global Modeling. In Proceedings of the 2023 IEEE 7th Information Technology and Mechatronics Engineering Conference (Itoec), Chongqing, China, 15–17 September 2023; Volume 7, pp. 1144–1147. [Google Scholar]

- A Survey on Convolutional Neural Networks and Their Performance Limitations in Image Recognition Tasks. Available online: https://onlinelibrary.wiley.com/doi/epdf/10.1155/2024/2797320 (accessed on 5 March 2025).

- Yang, S.; Quan, Z.; Nie, M.; Yang, W. TransPose: Keypoint Localization via Transformer. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11782–11792. [Google Scholar]

- Yuan, Y.; Fu, R.; Huang, L.; Lin, W.; Zhang, C.; Chen, X.; Wang, J. HRFormer: High-Resolution Transformer for Dense Prediction. arXiv 2021, arXiv:2110.09408. [Google Scholar] [CrossRef]

- Zhao, Z.; Song, A.; Zheng, S.; Xiong, Q.; Guo, J. DSC-HRNet: A Lightweight Teaching Pose Estimation Model with Depthwise Separable Convolution and Deep High-Resolution Representation Learning in Computer-Aided Education. Int. J. Inf. Technol. 2023, 15, 2373–2385. [Google Scholar] [CrossRef]

- Caishi, H.; Sijia, W.; Yan, L.; Zihao, D.; Feng, Y. Real-Time Human Pose Estimation on Embedded Devices Based on Deep Learning. In Proceedings of the 2023 20th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 15–17 December 2023; pp. 1–7. [Google Scholar]

- He, Y.; Kang, G.; Dong, X.; Fu, Y.; Yang, Y. Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks. arXiv 2018, arXiv:1808.06866. [Google Scholar]

- Barabanov, M.Y.; Bedolla, M.A.; Brooks, W.K.; Cates, G.D.; Chen, C.; Chen, Y.; Cisbani, E.; Ding, M.; Eichmann, G.; Ent, R.; et al. Diquark Correlations in Hadron Physics: Origin, Impact and Evidence. Prog. Part. Nucl. Phys. 2021, 116, 103835. [Google Scholar] [CrossRef]

- Reconstruction of Pilot Behaviour from Cockpit Image Recorder. Available online: https://arc.aiaa.org/doi/10.2514/6.2020-1873 (accessed on 12 March 2025).

- Li, Y.; Li, K.; Wang, S.; Chen, X.; Wen, D. Pilot Behavior Recognition Based on Multi-Modality Fusion Technology Using Physiological Characteristics. Biosensors 2022, 12, 404. [Google Scholar] [CrossRef]

- Wang, Z.Z.; Xia, X.; Chen, Q. Multi-Level Data Fusion Enables Collaborative Dynamics Analysis in Team Sports Using Wearable Sensor Networks. Sci. Rep. 2025, 15, 28210. [Google Scholar] [CrossRef] [PubMed]

- Xie, D.; Zhang, X.; Gao, X.; Zhao, H.; Du, D. MAF-Net: A Multimodal Data Fusion Approach for Human Action Recognition. PLoS ONE 2025, 20, e0319656. [Google Scholar] [CrossRef] [PubMed]

- Wang, P.; Wang, H.; Zhang, H. Cognitive Workload Assessment in Aerospace Scenarios: A Cross-Modal Transformer Framework for Multimodal Physiological Signal Fusion. Multimodal Technol. Interact. 2025, 9, 89. [Google Scholar] [CrossRef]

- Rodis, N.; Sardianos, C.; Radoglou-Grammatikis, P.; Sarigiannidis, P.; Varlamis, I.; Papadopoulos, G.T. Multimodal Explainable Artificial Intelligence: A Comprehensive Review of Methodological Advances and Future Research Directions. IEEE Access 2024, 12, 159794–159820. [Google Scholar] [CrossRef]

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157. [Google Scholar]

- Deng, L.; Suo, H.; Jia, Y.; Huang, C. Pose Estimation Method for Non-Cooperative Target Based on Deep Learning. Aerospace 2022, 9, 770. [Google Scholar] [CrossRef]

- Phisannupawong, T.; Kamsing, P.; Torteeka, P.; Channumsin, S.; Sawangwit, U.; Hematulin, W.; Jarawan, T.; Somjit, T.; Yooyen, S.; Delahaye, D.; et al. Vision-Based Spacecraft Pose Estimation via a Deep Convolutional Neural Network for Noncooperative Docking Operations. Aerospace 2020, 7, 126. [Google Scholar] [CrossRef]

- Sharma, P.; Shah, B.B.; Prakash, C. A Pilot Study on Human Pose Estimation for Sports Analysis. In Pattern Recognition and Data Analysis with Applications; Gupta, D., Goswami, R.S., Banerjee, S., Tanveer, M., Pachori, R.B., Eds.; Lecture Notes in Electrical Engineering; Springer Nature: Singapore, 2022; Volume 888, pp. 533–544. ISBN 978-981-19-1519-2. [Google Scholar]

- Li, X.; Du, H.; Wu, X. Algorithm of Pedestrian Pose Recognition Based on Keypoint Detection. In Proceedings of the 2023 8th IEEE International Conference on Network Intelligence and Digital Content (IC-NIDC), Beijing, China, 3–5 November 2023; pp. 122–126. [Google Scholar]

- Jeong, J.; Park, B.; Yoon, K. 3D Human Skeleton Keypoint Detection Using RGB and Depth Image. Trans. Korean Inst. Electr. Eng. 2021, 70, 1354–1361. [Google Scholar] [CrossRef]

- Zhu, Z.; Dong, W.; Gao, X.; Peng, A.; Luo, Y. Towards Human Keypoint Detection in Infrared Images. In Proceedings of the 29th International Conference on Neural Information Processing, ICONIP 2022, New Delhi, India, 22–26 November 2022; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2023; Volume 1792, pp. 528–539. [Google Scholar]

- Yuan, M.; Bi, X.; Huang, X.; Zhang, W.; Hu, L.; Yuan, G.Y.; Zhao, X.; Sun, Y. Towards Time-Series Key Points Detection through Self-Supervised Learning and Probability Compensation. In Proceedings of the Database Systems for Advanced Applications, Tianjin, China, 17–20 April 2023; Wang, X., Sapino, M.L., Han, W.-S., El Abbadi, A., Dobbie, G., Feng, Z., Shao, Y., Yin, H., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 237–252. [Google Scholar]

- Yu, C.; Yang, X.; Bao, W.; Wang, S.; Yao, Z. A Self-Supervised Pressure Map Human Keypoint Detection Approch: Optimizing Generalization and Computational Efficiency across Datasets. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024. [Google Scholar] [CrossRef]

- Aghaomidi, P.; Aram, S.; Bahmani, Z. Leveraging Self-Supervised Learning for Accurate Facial Keypoint Detection in Thermal Images. In Proceedings of the 2023 30th National and 8th International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 30 November–1 December 2023; pp. 452–457. [Google Scholar]

- Vdoviak, G.; Sledevič, T. Enhancing Keypoint Detection in Thermal Images: Optimizing Loss Function and Real-Time Processing with YOLOv8n-Pose. In Proceedings of the 2024 IEEE 11th Workshop on Advances in Information, Electronic and Electrical Engineering (AIEEE), Valmiera, Latvia, 31 May–1 June 2024; pp. 1–5. [Google Scholar]

- Shuster, M.D. The TRIAD Algorithm as Maximum Likelihood Estimation. J. Astronaut. Sci. 2006, 54, 113–123. [Google Scholar] [CrossRef]

- Zaal, P.M.T.; Mulder, M.; Van Paassen, M.M.; Mulder, J.A. Maximum Likelihood Estimation of Multi-Modal Pilot Control Behavior in a Target-Following Task. In Proceedings of the 2008 IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 October 2008; pp. 1085–1090. [Google Scholar]

- Roggio, F.; Trovato, B.; Sortino, M.; Musumeci, G. A Comprehensive Analysis of the Machine Learning Pose Estimation Models Used in Human Movement and Posture Analyses: A Narrative Review. Heliyon 2024, 10, e39977. [Google Scholar] [CrossRef]

- Brutch, S.; Moncayo, H. Machine Learning Approach to Estimation of Human-Pilot Model Parameters. In Proceedings of the AIAA Scitech 2024 Forum, Orlando, FL, USA, 8–12 January 2024; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2024. [Google Scholar]

- Wei, C.; Long, W.; Jiang, S.; Chen, C.; Wu, D.; Jiang, L. A Trend of 2D Human Pose Estimation Base on Deep Learning. In Proceedings of the 2022 4th International Symposium on Smart and Healthy Cities (ISHC), Shanghai, China, 16–17 December 2022; pp. 214–219. [Google Scholar]

- Liu, Y.; Qiu, C.; Zhang, Z. Deep Learning for 3D Human Pose Estimation and Mesh Recovery: A Survey. Neurocomputing 2024, 596, 128049. [Google Scholar] [CrossRef]

- Wu, Y.; Kong, D.; Gao, J.; Li, J.; Yin, B. Joint Multi-Scale Transformers and Pose Equivalence Constraints for 3D Human Pose Estimation. J. Vis. Commun. Image Represent. 2024, 103, 104247. [Google Scholar] [CrossRef]

- Li, W.; Liu, M.; Liu, H.; Guo, T.; Wang, T.; Tang, H.; Sebe, N. GraphMLP: A Graph MLP-like Architecture for 3D Human Pose Estimation. Pattern Recognit. 2025, 158, 110925. [Google Scholar] [CrossRef]

- Xiang, X.; Li, X.; Bao, W.; Qiao, Y.; El Saddik, A. DBMHT: A Double-Branch Multi-Hypothesis Transformer for 3D Human Pose Estimation in Video. Comput. Vis. Image Underst. 2024, 249, 104147. [Google Scholar] [CrossRef]

- Sun, Q.; Pan, X.; Ling, X.; Wang, B.; Sheng, Q.; Li, J.; Yan, Z.; Yu, K.; Wang, J. A Vision-Based Pose Estimation of a Non-Cooperative Target Based on a Self-Supervised Transformer Network. Aerospace 2023, 10, 997. [Google Scholar] [CrossRef]

- Zhu, M.; Ho, E.S.L.; Chen, S.; Yang, L.; Shum, H.P.H. Geometric Features Enhanced Human–Object Interaction Detection. IEEE Trans. Instrum. Meas. 2024, 73, 5026014. [Google Scholar] [CrossRef]

- Cheng, C.; Xu, H. Human Pose Estimation in Complex Background Videos via Transformer-Based Multi-Scale Feature Integration. Displays 2024, 84, 102805. [Google Scholar] [CrossRef]

- Zhang, T.; Li, Q.; Wen, J.; Philip Chen, C.L. Enhancement and Optimisation of Human Pose Estimation with Multi-Scale Spatial Attention and Adversarial Data Augmentation. Inf. Fusion 2024, 111, 102522. [Google Scholar] [CrossRef]

- Luo, Y.; Gao, X. A Lightweight Network for Human Keypoint Detection Based on Hybrid Attention. In Proceedings of the 4th International Conference on Neural Networks, Information and Communication Engineering, NNICE 2024, Guangzhou, China, 19–21 January 2024; Institute of Electrical and Electronics Engineers Inc.: Guangzhou, China, 2024; pp. 10–15. [Google Scholar]

- Xiao, Q.; Zhao, R.; Shi, G.; Deng, D. PLPose: A Bottom-up Lightweight Pose Estimation Detection Model. In Proceedings of the 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL), Zhuhai, China, 19–21 April 2024; pp. 1439–1443. [Google Scholar]

- Xu, W.; Xu, Y.; Chang, T.; Tu, Z. Co-Scale Conv-Attentional Image Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021. [Google Scholar]

- Suck, S.; Fortmann, F. Aircraft Pilot Intention Recognition for Advanced Cockpit Assistance Systems. In Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience, Proceedings of the 10th International Conference, AC 2016, Held as Part of HCI International 2016, Toronto, ON, Canada, 17–22 July 2016; Schmorrow, D.D., Fidopiastis, C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 231–240. [Google Scholar]

- Pasquini, A.; Pozzi, S.; McAuley, G. Eliciting Information for Safety Assessment. Saf. Sci. 2008, 46, 1469–1482. [Google Scholar] [CrossRef]

- McMurtrie, K.J.; Molesworth, B.R.C. The Variability in Risk Assessment between Flight Crew. Int. J. Aerosp. Psychol. 2017, 27, 65–78. [Google Scholar] [CrossRef]

- Guo, Y.; Sun, Y.; He, Y.; Du, F.; Su, S.; Peng, C. A Data-Driven Integrated Safety Risk Warning Model Based on Deep Learning for Civil Aircraft. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 1707–1719. [Google Scholar] [CrossRef]

- Shi, L.-L.; Chen, J. Assessment Model of Command Information System Security Situation Based on Twin Support Vector Machines. In Proceedings of the 2017 International Conference on Network and Information Systems for Computers (ICNISC), Shanghai, China, 14–16 April 2017; pp. 135–139. [Google Scholar]

- Jiang, S.; Su, R.; Ren, Z.; Chen, W.; Kang, Y. Assessment of Pilots’ Cognitive Competency Using Situation Awareness Recognition Model Based on Visual Characteristics. Int. J. Intell. Syst. 2024, 2024, 5582660. [Google Scholar] [CrossRef]

- Sun, H.; Yang, F.; Zhang, P.; Jiao, Y.; Zhao, Y. An Innovative Deep Architecture for Flight Safety Risk Assessment Based on Time Series Data. CMES Comput. Model. Eng. Sci. 2023, 138, 2549–2569. [Google Scholar] [CrossRef]

- Zheng, X.; Liu, Q.; Li, Y.; Wang, B.; Qin, W. Safety Risk Assessment for Connected and Automated Vehicles: Integrating FTA and CM-Improved AHP. Reliab. Eng. Syst. Saf. 2025, 257, 110822. [Google Scholar] [CrossRef]

- Wang, J.; Fan, K.; Mo, W.; Xu, D. A Method for Information Security Risk Assessment Based on the Dynamic Bayesian Network. In Proceedings of the 2016 International Conference on Networking and Network Applications (NaNA), Hakodate, Japan, 23–25 July 2016; pp. 279–283. [Google Scholar]

- Xu, C.; Hu, C.; Nie, W. Application of Fuzzy Theory and Digraph Method in Security Assessment System. In Proceedings of the 2010 International Conference on Intelligent Computation Technology and Automation, Changsha, China, 11–12 May 2010; Volume 1, pp. 754–757. [Google Scholar]

- Wei, Z.; Zou, Y.; Wang, L. Applying Multi-Source Data to Evaluate Pilots’ Flight Safety Style Based on Safety-II Theory. In Engineering Psychology and Cognitive Ergonomics; Harris, D., Li, W.-C., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; Volume 14017, pp. 320–330. ISBN 978-3-031-35391-8. [Google Scholar]

- Pei, H.; Li, G.; Ma, Y.; Gong, H.; Xu, M.; Bai, Z. A Mental Fatigue Assessment Method for Pilots Incorporating Multiple Ocular Features. Displays 2025, 87, 102956. [Google Scholar] [CrossRef]

- Ma, Y.; Liu, Q.; Yang, L. Machine Learning-Based Multimodal Fusion Recognition of Passenger Ship Seafarers’ Workload: A Case Study of a Real Navigation Experiment. Ocean Eng. 2024, 300, 117346. [Google Scholar] [CrossRef]

- Liu, X.; Xiao, G.; Wang, M.; Li, H. Research on Airworthiness Certification of Civil Aircraft Based on Digital Virtual Flight Test Technology. In Proceedings of the 2019 IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019; pp. 1–6. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 5686–5696. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788. [Google Scholar]

- Liu, Q.; Yao, J.; Yao, L.; Chen, X.; Zhou, J.; Lu, L.; Zhang, L.; Liu, Z.; Huo, Y. M2Fusion: Bayesian-Based Multimodal Multi-Level Fusion on Colorectal Cancer Microsatellite Instability Prediction. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023 Workshops; Woo, J., Hering, A., Silva, W., Li, X., Fu, H., Liu, X., Xing, F., Purushotham, S., Mathai, T.S., Mukherjee, P., et al., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; Volume 14394, pp. 125–134. ISBN 978-3-031-47424-8. [Google Scholar]

- Qiu, H.; Yu, J.; Chyad, M.H.; Singh, N.S.S.; Hussein, Z.A.; Jasim, D.J.; Khosravi, M. Comparative Analysis of Kalman Filters, Gaussian Sum Filters, and Artificial Neural Networks for State Estimation in Energy Management. Energy Rep. 2025, 13, 4417–4440. [Google Scholar] [CrossRef]

- Strelet, E.; Wang, Z.; Peng, Y.; Castillo, I.; Rendall, R.; Reis, M.S. Regularized Bayesian Fusion for Multimodal Data Integration in Industrial Processes. Ind. Eng. Chem. Res. 2024, 63, 20989–21000. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312. [Google Scholar]

- Xiao, B.; Wu, H.; Wei, Y. Simple Baselines for Human Pose Estimation and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 472–487. [Google Scholar] [CrossRef]

- Mao, W.; Ge, Y.; Shen, C.; Tian, Z.; Wang, X.; Wang, Z.; van den Hengel, A. Poseur: Direct Human Pose Regression with Transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 72–88. [Google Scholar] [CrossRef]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, Z.; Peng, Y.; Zhang, Z.; Yu, G.; Sun, J. Cascaded Pyramid Network for Multi-Person Pose Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7103–7112. [Google Scholar] [CrossRef]

- Wang, Y.; Li, M.; Cai, H.; Chen, W.; Han, S. Lite Pose: Efficient Architecture Design for 2D Human Pose Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; Volume 2022, pp. 13116–13126. [Google Scholar]

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet V2: Practical Guidelines for Efficient Cnn Architecture Design. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 122–138. [Google Scholar] [CrossRef]

- Yu, C.; Xiao, B.; Gao, C.; Yuan, L.; Zhang, L.; Sang, N.; Wang, J. Lite-HRNet: A Lightweight High-Resolution Network. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10435–10445. [Google Scholar]

- Zimmermann, C.; Ceylan, D.; Yang, J.; Russell, B.; Argus, M.; Brox, T. FreiHAND: A Dataset for Markerless Capture of Hand Pose and Shape from Single RGB Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Bulat, A.; Kossaifi, J.; Tzimiropoulos, G.; Pantic, M. Toward Fast and Accurate Human Pose Estimation via Soft-Gated Skip Connections. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition, FG 2020, Buenos Aires, Argentina, 16–20 November 2020; pp. 8–15. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, B.; Peng, C. SRHandNet: Real-Time 2D Hand Pose Estimation with Simultaneous Region Localization. IEEE Trans. Image Process. 2020, 29, 2977–2986. [Google Scholar] [CrossRef]

| Size | Stage 1 | Stage 2 | Stage 3 | Stage 4 |
|---|---|---|---|---|

| Item | Specification/Description |
|---|---|
| Camera model | Generic RGB Camera |
| Sensor type | CMOS |
| Resolution | 1920 × 1080 pixels |
| Frame rate | 30 fps |
| Mounting | Driver-side upper right, Driver-side upper left |
| Quantity | 2 |

| Module | Core Network | Input Size | Params | GFLOPs | AP | AR |
|---|---|---|---|---|---|---|
| SimpleBaseline [71] | ResNet-50 | 256 × 192 | 34.0 M | 8.9 | 77.9 | 78.5 |
| HRNetV1 [64] | HRNet-W32 | 256 × 192 | 28.5 M | 7.1 | 80.8 | 81.6 |
| TransPose [9] | HRNet-W32 | 256 × 192 | 8.0 M | 10.2 | 81.3 | 83.2 |
| PoseUR [72] | HRFormer-B | 256 × 192 | 28.8 M | 12.6 | 82.1 | 83.8 |
| HRFormer-S [10] | HRFormer-S | 256 × 192 | 2.5 M | 1.3 | 80.6 | 82.2 |
| Our Model | HRNet-Former | 256 × 192 | 4.5 M | 3.8 | 81.9 | 83.3 |

| Module | Params (M) | GFLOPs | Frame Rate/Minute |
|---|---|---|---|
| MobileNetV2 [73] | 9.6 | 1.97 | 53.4 |
| HRNetV1 [64] | 7.6 | 1.70 | 28.6 |
| HRFormer-S [10] | 2.5 | 1.30 | 33.1 |
| Our Model | 4.5 | 3.80 | 44.3 |

| Module | Input Size | Params | GFLOPs | AP | AR |
|---|---|---|---|---|---|
| **Complex network model** | | | | | |
| CPN [74] | 256 × 192 | 27.0 M | 6.2 | 68.6 | - |
| SimpleBaseline [71] | 256 × 192 | 34.0 M | 8.9 | 70.4 | 76.3 |
| HRNetV1 [64] | 256 × 192 | 28.5 M | 7.1 | 73.4 | 78.9 |
| DAEK [75] | 128 × 96 | 63.6 M | 3.6 | 71.9 | 77.9 |
| TransPose-H-S [9] | 256 × 192 | 8.0 M | 10.2 | 74.2 | 78.0 |
| PoseUR-HRNet-32 | 256 × 192 | 28.8 M | 4.48 | 74.7 | - |
| **Light-weight network model** | | | | | |
| MobileNetV2 [73] | 256 × 192 | 9.6 M | 1.48 | 64.6 | 70.7 |
| ShuffleNetV2 [76] | 256 × 192 | 7.6 M | 1.28 | 59.9 | 66.4 |
| Lite-HRNet-18 [77] | 256 × 192 | 1.1 M | 0.2 | 64.8 | 71.2 |
| HRFormer-S [10] | 256 × 192 | 2.5 M | 1.3 | 70.9 | 76.6 |
| Our model | 256 × 192 | 4.5 M | 3.8 | 72.8 | 78.4 |

| Module | Params | PCK Score | FPS |
|---|---|---|---|
| MobileNetV2 [73] | 9.6 M | 81.48 | 49.5 |
| MobileNetV3 [79] | 8.7 M | 83.61 | 41.1 |
| ShuffleNetV2 [76] | 7.6 M | 82.86 | 45.3 |
| SRHandNet [80] | 16.3 M | 95.43 | 28.4 |
| Our model | 4.8 M | 98.84 | 38.6 |

| Joint Model (Limb + Hand) | Params | FPS |
|---|---|---|
| HRNetV1 + MobileNetV2 | 38.1 M | 7.6 |
| HRFormer-S + MobileNetV2 | 12.1 M | 11.2 |
| HRNetV1 + ShuffleNetV2 | 36.1 M | 7.4 |
| HRFormer-S + ShuffleNetV2 | 10.1 M | 11.1 |
| HRNetV1 + SRHandNet [80] | 44.8 M | 5.4 |
| HRFormer-S + SRHandNet [80] | 18.8 M | 8.8 |
| Our model | 9.7 M | 14.3 |

| Module | Params | GFLOPs | AP | FPS |
|---|---|---|---|---|
| Standard Attention | 5.0 M | 8.56 | 82.2 | 21.3 |
| Order-switched Attention (without FFNDW) | 4.1 M | 3.34 | 74.4 | 39.7 |
| Proposed model | 4.5 M | 3.80 | 82.3 | 35.4 |
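The trade-off in the ablation table above can be made concrete with a short calculation. The sketch below uses only the Standard Attention and Proposed model rows as printed in the table; the dictionary keys and the helper name `relative_reduction` are illustrative choices, not part of the paper.

```python
# Values copied from the Standard Attention and Proposed model rows above.
standard = {"params_m": 5.0, "gflops": 8.56, "ap": 82.2, "fps": 21.3}
proposed = {"params_m": 4.5, "gflops": 3.80, "ap": 82.3, "fps": 35.4}


def relative_reduction(before: float, after: float) -> float:
    """Fraction by which `after` is smaller than `before`."""
    return (before - after) / before


gflops_cut = relative_reduction(standard["gflops"], proposed["gflops"])
params_cut = relative_reduction(standard["params_m"], proposed["params_m"])
speedup = proposed["fps"] / standard["fps"]
ap_delta = proposed["ap"] - standard["ap"]

print(f"GFLOPs reduced by {gflops_cut:.1%}")
print(f"Parameters reduced by {params_cut:.1%}")
print(f"Frame rate speed-up: {speedup:.2f}x")
print(f"AP change: {ap_delta:+.1f}")
```

Read this way, the proposed model needs roughly 56% fewer GFLOPs and runs at about 1.66 times the frame rate of the standard-attention variant, with AP essentially unchanged.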

| System | Subsystem | Indicator |
|---|---|---|
| Pilot Behavioral Recognition System | Pilot Attitude Estimation System | A1 Reliability of the pose estimation dataset |
| | | A2 Recognition accuracy of pose estimation algorithms |
| | | A3 Operational procedure masking |
| | | A4 Hardware system power |
| | | A5 Pose estimation algorithm real-time performance |
| | | A6 Number of parameters of the pose estimation model |
| | | A7 Computational complexity of the pose estimation model |
| | Motion Capture System | A8 Reliability of motion capture datasets |
| | | A9 Operating procedure recognition accuracy |
| | | A10 False touch rate for operating procedure recognition |
| | | A11 Motion capture model computational complexity |
| | | A12 Number of motion capture model parameters |
| | | A13 Operating procedure complexity |
| | | A14 Urgency of operating procedures |
| | Lighting Environment System | A15 Stability of filtering algorithms |
| | | A16 Communication transmission stability |
| | | A17 Cockpit light intensity level |
| | | A18 External ambient light intensity level |
| | | A19 Screen light intensity level |
| | Individual Differences | A20 Height |
| | | A21 Arm length |
| | | A22 Knowledge and experience |
| | | A23 Duration of training received |

| Action Type | Proposed Method Efficacy | Manual Method Efficacy |
|---|---|---|
| Click on the touch screen | 0.782 | 0.98 |
| Push the throttle lever | 0.753 | 0.99 |
| Open the landing gear | 0.474 | 0.97 |
| Switch on the autopilot | 0.638 | 0.98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, H.; Lu, X.; Sun, Y.; Liu, H. A Lightweight Framework for Pilot Pose Estimation and Behavior Recognition with Integrated Safety Assessment. Aerospace 2025, 12, 986. https://doi.org/10.3390/aerospace12110986
