Ensuring Safety for Artificial-Intelligence-Based Automatic Speech Recognition in Air Traffic Control Environment

Abstract

1. Introduction

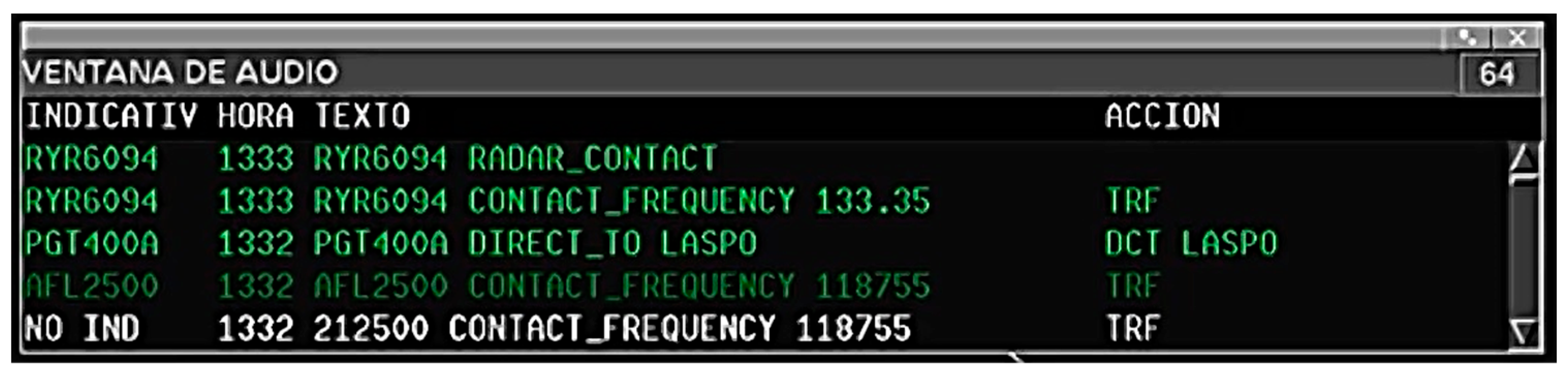

- Recognition of relevant aircraft callsigns from ATCO and pilot utterances as well as highlighting the callsigns on the controller working position (CWP) HMI display.

- Recognition of ATCO commands and input of the command contents into the aircraft radar data labels displayed at the ATCO CWP HMI.

2. Background

3. Materials and Methods

- A success approach, which assesses the effectiveness of the new concepts and technologies when they work as intended, i.e., how much the pre-existing risks inherent in aviation are reduced by the ATM changes under assessment. This defines the positive contribution to aviation safety that these changes may deliver in the absence of failure.

- A failure approach, which evaluates the risks generated by the ATM system as a result of the changes under assessment. This defines the negative contribution to the risk of an accident that these changes may induce in the event of failure(s), however caused.

3.1. Selected Use Cases

3.1.1. Use Case “Highlight of Callsigns (Aircraft Identifier) on the CWP Based on the Recognition of Pilot Voice Communications”

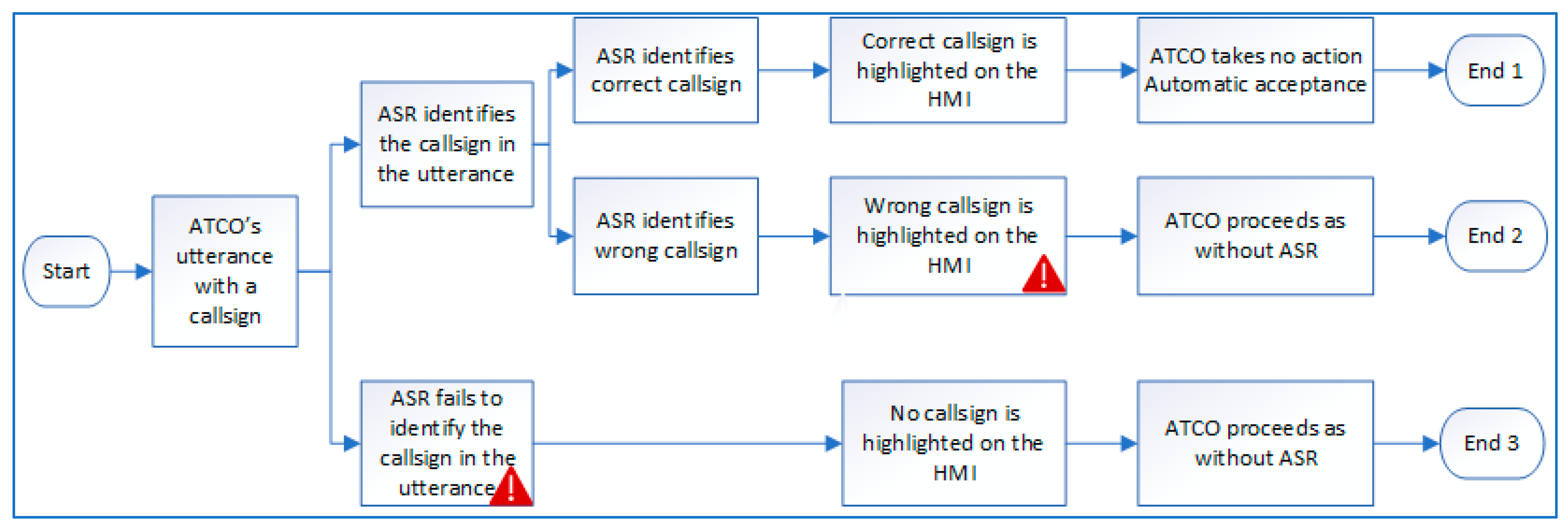

3.1.2. Use Case “Highlight of Callsigns on the CWP Based on the Recognition of ATCO Voice Communications”

3.1.3. Use Case “Annotation of ATCO Commands”

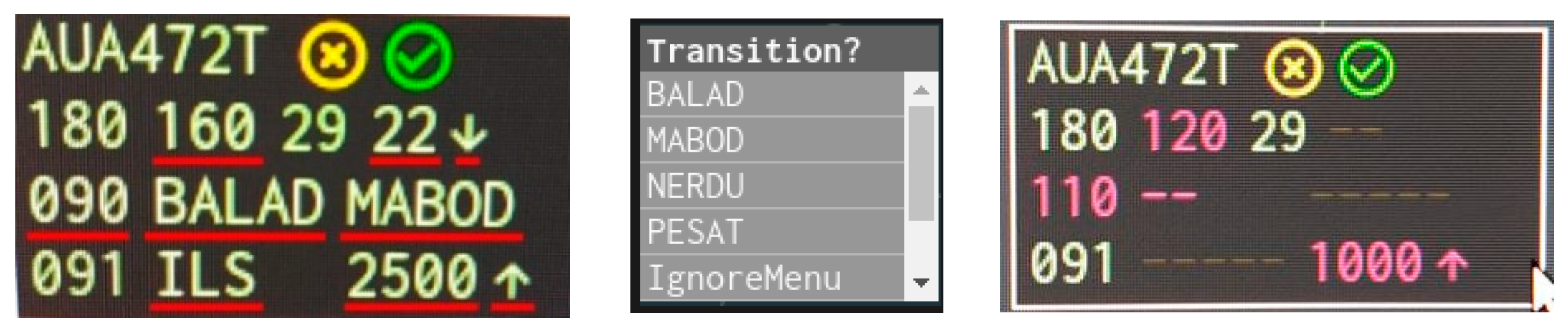

3.1.4. Use Case “Pre-Filling of Commands in the CWP”

- The exercise performed by CRIDA, Indra and ENAIRE places emphasis on a very low callsign recognition error rate (approx. 0%) and, as a consequence, accepts a lower callsign recognition rate (between 50% and 85%). This exercise will be referred to as the Callsign Highlighting exercise in the rest of the text.

- The second exercise, performed by DLR and Austro Control, seeks a compromise between a low callsign recognition error rate (<1%) and an acceptable callsign recognition rate (>97%). This exercise will be referred to as the Radar Label Maintenance exercise in the rest of the text.
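The two exercises weigh the same pair of metrics differently. The following Python sketch shows one way to compute both rates from labeled utterances and to check them against the targets quoted for the Radar Label Maintenance exercise. It assumes, as a simplification not stated in the text, that every utterance is labeled as correct, wrong or rejected (no aircraft highlighted) and that both rates are taken over all utterances; the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

# Assumed outcome labels for each utterance containing a callsign:
#   "correct"  - the right aircraft is highlighted
#   "wrong"    - a different aircraft is highlighted
#   "rejected" - no aircraft is highlighted
def callsign_rates(outcomes: Iterable[str]) -> Tuple[float, float]:
    """Return (callsign recognition rate, callsign recognition error rate)."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no utterances to evaluate")
    return counts["correct"] / total, counts["wrong"] / total


def meets_radar_label_maintenance_targets(recognition_rate: float,
                                          error_rate: float) -> bool:
    # Targets quoted for the DLR/Austro Control exercise:
    # error rate below 1 % and recognition rate above 97 %.
    return error_rate < 0.01 and recognition_rate > 0.97
```

Under these assumptions, a stricter confidence threshold moves utterances from "wrong" (and unavoidably also from "correct") into "rejected", which is the trade-off the Callsign Highlighting exercise accepts in exchange for an error rate of approximately 0%.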

3.2. Safety Assessment Methodology

- Identification of hazards’ effects on operations, including the effect on aircraft operations.

- Assessment of the severity of each hazard effect.

- Specification of target performance (safety objectives), i.e., determination of the maximum tolerable frequency of the hazard’s occurrence.

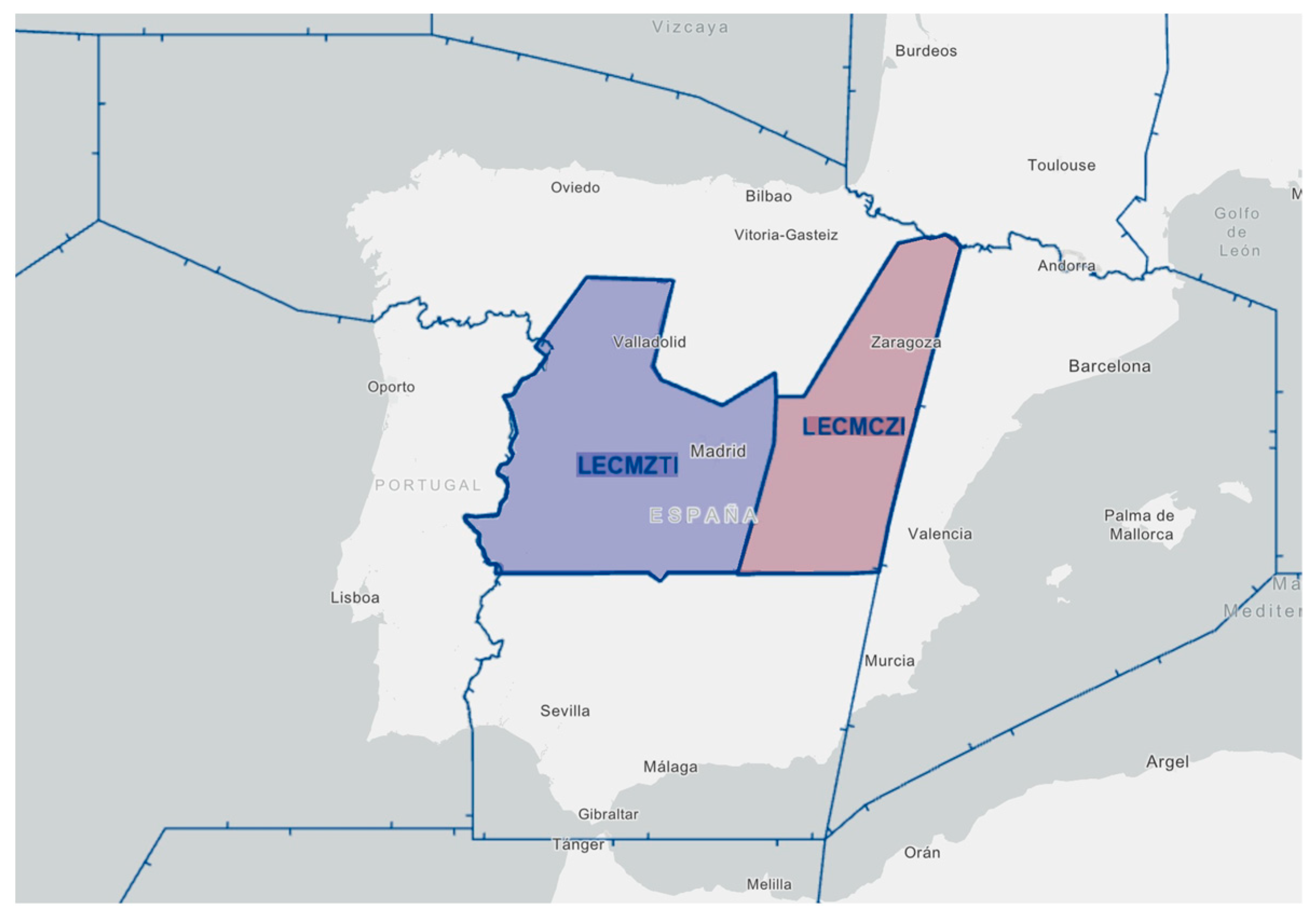

3.3. Callsign Highlighting Exercise with Focus on Low Callsign Recognition Error Rates

- Use Case 1. Highlight of callsigns … based on the recognition of pilot voice,

- Use Case 2. Highlight of callsigns … based on the recognition of ATCO voice,

- Use Case 3. Annotation of ATCO commands.

- Collection of subjective operational feedback from ATCOs gathered by means of questionnaires, debriefings and observations. This was achieved through a real-time human-in-the-loop simulation.

- Collection of statistically significant objective data on ASR performance. This was achieved by analyzing operational recordings of real-life communications between ATCOs and flight crews. Audio recordings from different Spanish en-route sectors were processed by the ASR system to measure the accuracy of callsign identification and command annotation.

- Individual questionnaires: standard and specific questionnaires were developed to assess the validation objectives. The questionnaires were agreed with the subject matter experts participating in dedicated Safety and Human performance workshops.

- Debriefing sessions: after each simulation run, the findings observed during the exercise (opportunities, difficulties and general observations) were discussed among all participants (operational and simulation staff).

- Over-the-shoulder observations: direct and non-intrusive over-the-shoulder observations were carried out by a human factors expert during the runs. Their purpose was to provide detailed, complete and reliable information on how the activity is carried out, especially when further commented on and discussed with the observed users during the debriefing.

3.4. Radar Label Maintenance Exercise with Focus on High Callsign Recognition Rates

- Use Case 2. Highlight of callsigns … based on the recognition of ATCO voice,

- Use Case 3. Annotation of ATCO commands,

- Use Case 4. Pre-filling of commands in the CWP.

4. Results

4.1. Hazard, Severity and Corresponding Design Requirements

- FHz#01: Significant delay in ASR callsign/command recognition and/or display (relevant for use cases 3 and 4)

- FHz#02: ASR fails to identify an aircraft callsign from pilot’s utterance, i.e., no aircraft is highlighted (relevant for use case 1)

- FHz#03: ASR fails to identify an aircraft callsign from controller’s utterance, i.e., no aircraft is highlighted (relevant for use case 2)

- FHz#04: ASR erroneously identifies an aircraft callsign from pilot’s utterance, i.e., the wrong aircraft is highlighted (relevant for use case 1)

- FHz#05: ASR erroneously identifies an aircraft callsign from controller’s utterance, i.e., the wrong aircraft is highlighted (relevant for use case 2)

- FHz#06: ASR fails to identify a command from controller’s utterance, i.e., no given command is shown to the ATCO (relevant for use cases 3, 4)

- FHz#07: ASR erroneously identifies a command from controller’s utterance, i.e., a wrong command or a command never given is shown to the ATCO (relevant for use cases 3, 4)

- FHz#08: ASR recognizes an incorrect aircraft callsign, and the (correct or wrong) command is displayed for the incorrect flight in the CWP HMI (relevant for all use cases)
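For traceability, the hazard list above can be held as a small register that maps each functional hazard to the use cases it affects. This Python sketch only restates the list; the data structure and function names are illustrative assumptions, not part of the described ASR system.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class FunctionalHazard:
    hazard_id: str
    summary: str
    use_cases: FrozenSet[int]  # use cases 1-4 as defined in Section 3.1


ALL_USE_CASES = frozenset({1, 2, 3, 4})

# Restatement of the FHz list above (summaries shortened).
HAZARD_REGISTER = [
    FunctionalHazard("FHz#01", "Significant delay in ASR recognition and/or display", frozenset({3, 4})),
    FunctionalHazard("FHz#02", "No callsign identified from pilot utterance", frozenset({1})),
    FunctionalHazard("FHz#03", "No callsign identified from controller utterance", frozenset({2})),
    FunctionalHazard("FHz#04", "Wrong callsign identified from pilot utterance", frozenset({1})),
    FunctionalHazard("FHz#05", "Wrong callsign identified from controller utterance", frozenset({2})),
    FunctionalHazard("FHz#06", "Command from controller utterance not identified", frozenset({3, 4})),
    FunctionalHazard("FHz#07", "Wrong or never-given command shown to the ATCO", frozenset({3, 4})),
    FunctionalHazard("FHz#08", "Command displayed for the incorrect flight", ALL_USE_CASES),
]


def hazards_for_use_case(use_case: int) -> List[str]:
    """IDs of the functional hazards relevant to the given use case."""
    return [h.hazard_id for h in HAZARD_REGISTER if use_case in h.use_cases]
```

For example, `hazards_for_use_case(1)` returns the three hazards that callsign highlighting based on pilot voice must address: FHz#02, FHz#04 and FHz#08.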

- Severity Class 1: Accidents (max safety target with a probability of less than 10⁻⁹, i.e., one catastrophic accident per one billion flight hours attributable to ATM).

- Severity Class 2: Serious Incidents (max safety target with a probability of less than 10⁻⁶).

- Severity Class 3: Major Incidents (max safety target with a probability of less than 10⁻⁵).

- Severity Class 4: Significant Incidents (max safety target with a probability of less than 10⁻³).

- Severity Class 5: No Immediate Effect on Safety (no target).
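Deriving a safety objective amounts to looking up the maximum tolerable probability for the severity class assigned to a hazard effect. The sketch below encodes the classes above. It assumes, beyond what the list states, that every target is expressed per flight hour as for Class 1; the frequency estimate passed in is a hypothetical input.

```python
from typing import Dict, Optional

# Maximum tolerable probability per severity class, from the list above.
# Assumption: all targets are per flight hour, as stated for Class 1.
SEVERITY_TARGETS: Dict[int, Optional[float]] = {
    1: 1e-9,  # Accidents
    2: 1e-6,  # Serious Incidents
    3: 1e-5,  # Major Incidents
    4: 1e-3,  # Significant Incidents
    5: None,  # No Immediate Effect on Safety: no target
}


def meets_safety_objective(severity_class: int, estimated_frequency: float) -> bool:
    """True if the (hypothetical) frequency estimate is below the class target."""
    if severity_class not in SEVERITY_TARGETS:
        raise ValueError(f"unknown severity class: {severity_class}")
    target = SEVERITY_TARGETS[severity_class]
    if target is None:
        return True  # Class 5 carries no quantitative objective
    return estimated_frequency < target
```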

4.1.1. Safety and Performance Requirements Concerning Callsign

- For 99.9% of the ATCO utterances, the system shall be able to give the output (apart from the callsign itself) in less than 2 s after the ATCO ends the radio transmission.

- If the confidence level of the callsign recognition is not sufficiently high, the callsign shall not be highlighted. The confidence level corresponds to a plausibility value derived by the ASR. If the plausibility value is below a given threshold, the callsign is set to ‘not recognized’.

- The HMI shall highlight the track label or part of it (or the track symbol) after recognizing the corresponding callsign.
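The first two requirements above can be checked directly. The sketch below assumes that per-utterance latencies (seconds from the end of the ATCO transmission to the ASR output) have been logged and that the ASR exposes a single plausibility value per recognized callsign; the threshold value and all names are hypothetical, since the text does not fix them. Exact fractions avoid rounding errors at the 99.9% boundary.

```python
from fractions import Fraction
from typing import Optional, Sequence


def meets_latency_requirement(latencies_s: Sequence[float],
                              limit_s: float = 2.0,
                              share: Fraction = Fraction(999, 1000)) -> bool:
    """True if at least `share` (99.9 %) of outputs arrive in less than limit_s seconds."""
    if not latencies_s:
        raise ValueError("no latency samples")
    on_time = sum(1 for t in latencies_s if t < limit_s)
    # Exact rational comparison avoids floating-point error at the boundary
    return Fraction(on_time, len(latencies_s)) >= share


def callsign_to_highlight(candidate: Optional[str],
                          plausibility: float,
                          threshold: float) -> Optional[str]:
    """Return the callsign to highlight, or None ('not recognized')."""
    # Below the threshold the callsign is treated as not recognized,
    # so nothing is highlighted on the CWP HMI.
    if candidate is None or plausibility < threshold:
        return None
    return candidate
```

With 1000 logged utterances, a single output slower than 2 s still satisfies the requirement; two do not.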

4.1.2. Safety and Performance Requirements Concerning Commands

- The ASR shall recognize commands of different command categories (such as descend, reduce, heading).

- The Command Recognition Rate of ASR for ATCOs should be higher than 85%.

- The Command Recognition Error Rate of ASR should be less than 2.5% for ATCOs.

- The Command Recognition Error Rate of ASR should be less than 5% for pilots.

- The HMI should present the recognized (and validated) command types together with the command values in the radar label.

- The HMI shall enable manual correction/update of automatically proposed command value/type.

- The ASR system shall have no significant differences in the recognition rates of different command types, if the command types are often used (e.g., more than 1% of the time).
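The command requirements can be evaluated against a manually annotated reference. The sketch below assumes that each command is labeled as correct, wrong or rejected (not recognized), with both rates computed over all commands; this labeling scheme is an assumption here. Because the text does not define what counts as a "significant" difference between command types, the last function only reports per-type recognition rates for frequently used types and leaves the statistical test open.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Each result pairs a command type (e.g. "descend") with an outcome label:
# "correct", "wrong" or "rejected" (assumed labeling scheme).
Result = Tuple[str, str]


def command_rates(results: List[Result]) -> Tuple[float, float]:
    """Return (command recognition rate, command recognition error rate)."""
    if not results:
        raise ValueError("no commands to evaluate")
    counts = Counter(outcome for _, outcome in results)
    total = len(results)
    return counts["correct"] / total, counts["wrong"] / total


def meets_atco_command_targets(recognition_rate: float, error_rate: float) -> bool:
    # ATCO targets above: recognition rate > 85 %, error rate < 2.5 %
    return recognition_rate > 0.85 and error_rate < 0.025


def meets_pilot_command_error_target(error_rate: float) -> bool:
    # Pilot target above: error rate < 5 %
    return error_rate < 0.05


def per_type_recognition(results: List[Result], min_share: float = 0.01) -> Dict[str, float]:
    """Recognition rate per command type, for types used more than min_share of the time."""
    by_type: Dict[str, Counter] = defaultdict(Counter)
    for command_type, outcome in results:
        by_type[command_type][outcome] += 1
    total = len(results)
    rates = {}
    for command_type, counts in by_type.items():
        n = sum(counts.values())
        if n / total > min_share:
            rates[command_type] = counts["correct"] / n
    return rates
```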

4.2. Results of the Validation Activities

4.2.1. Validation Activity “Callsign Highlighting”

Evidence Based on the Objective Metrics

Evidence Based on Subjective Feedback

4.2.2. Validation Activity “Radar Label Maintenance”

Evidence Based on the Objective Metrics

Evidence Based on the Subjective Metrics

4.3. Limitations

5. Discussion of Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Top-Down Analysis

| Cause ID (in Fault Tree) | Cause | Detailed Description | Mitigation/Safety Requirement |

|---|---|---|---|

| FHz#01 Significant delay in ASR callsign/command recognition and/or display | -ASR provides delayed output | One or more of the ASR components is not performing as expected, causing a delay in the display of the ASR output. | The ASR system should provide the functionality to be switched off and switched on when necessary. ASR should send a recognized callsign to the cooperating ATC system within a maximum of 1.0 s after the controller ends the radio transmission. For 99.9% of the ATCO utterances, the system shall be able to give the output (apart from the callsign itself) in less than two seconds after the ATCO has released the push-to-talk button. The ASR system shall have no significant differences in the recognition rates of different command types, unless the command types are very seldom used (e.g., less than 1% of the time). |

| FHz#02 ASR fails to identify an aircraft from pilot’s utterance—no aircraft is highlighted | -Pilot utters a non-understandable callsign -Pilot utters a legal and understandable callsign, but ASR fails to recognize it | Pilot performs the radio call and the flight is not highlighted in the CWP HMI. | The HMI shall highlight the Track Label or part of it (or the track symbol) after recognizing the corresponding callsign. If the confidence level of the callsign recognition is not sufficiently high, it shall not be highlighted. |

| FHz#03 ASR fails to identify an aircraft from controller’s utterance—no aircraft is highlighted | -ATCO utters an illegal/non-understandable callsign -ATCO utters a legal and understandable callsign, but ASR fails to recognize it | ATCO performs the radio call and the flight is not highlighted in the CWP HMI. | The HMI shall highlight the Track Label or part of it (or the track symbol) after recognizing the corresponding callsign. If the confidence level of the callsign recognition is not sufficiently high, it shall not be highlighted. |

| FHz#04 ASR erroneously identifies an aircraft from pilot’s utterance—wrong aircraft is highlighted | -Pilot utters a non-understandable callsign -Pilot utters a legal and understandable callsign, but ASR recognizes an existing wrong callsign -Pilot utters a legal and understandable callsign, but ASR recognizes a wrong callsign not matching any callsign considered by the system | ATCO focuses on the highlighted aircraft and issues the clearance intended for the calling aircraft to the wrong flight. If the ATCO issues the clearance to the wrongly highlighted aircraft, it may result in an unintended trajectory change. | The HMI shall highlight the Track Label or part of it (or the track symbol) after recognizing the corresponding callsign. The Command Recognition Error Rate of ASR should be less than 5% for pilots. The Command Recognition Rate of ASR for pilots should be higher than 75%. If the confidence level of the callsign recognition is not sufficiently high, it shall not be highlighted. |

| FHz#05 ASR erroneously identifies an aircraft from controller’s utterance—wrong aircraft is highlighted | -ATCO utters an illegal/non-understandable callsign -ATCO utters a legal and understandable callsign, but ASR recognizes an existing wrong callsign -ATCO utters a legal and understandable callsign, but ASR recognizes a wrong callsign not matching any callsign considered by the system | ATCO may get confused and issue a wrong clearance. If the ATCO issues the clearance to the wrongly highlighted aircraft, it may result in an unintended trajectory change. | ATCOs will use standard phraseology as per ICAO Doc 4444 [32]. The ASR shall recognize commands of different command categories (such as descend, reduce, heading). The Command Recognition Error Rate of ASR should be less than 2.5% for ATCOs. The Command Recognition Rate of ASR for ATCOs should be higher than 85%. If the confidence level of the callsign recognition is not sufficiently high, it shall not be highlighted. |

| FHz#06 ASR fails to identify a command from controller’s utterance | -ATCO utters an illegal/non-understandable command -ATCO utters a legal and understandable command, but ASR fails to recognize it | ATCO makes the input manually, resulting in workflow disruption, increased workload and reduced situational awareness. | The HMI shall enable manual correction/update of automatically proposed command value/type. The HMI should present the recognized (and validated) command types together with the command values in the radar label. The ASR system should provide the functionality to be switched off and switched on when necessary. The Command Recognition Rate of ASR for ATCOs should be higher than 85%. |

| FHz#07 ASR erroneously identifies a command from controller’s utterance | -ATCO utters an illegal/non-understandable command -ATCO utters a legal and understandable command, but ASR recognizes an incorrect callsign, which is being considered by the system, and the command is displayed for the incorrect flight in the CWP HMI -ATCO utters a legal and understandable command, but ASR recognizes the incorrect command, and wrong command is displayed in the CWP HMI | ATCO will have to change information already input into the system. Depending on the ATM system, some parts of correcting the clearance, route change, etc., may require manipulation of the FPL route data to input the correction. | The HMI shall enable manual correction/update of automatically proposed command value/type. The HMI associated with ASR shall enable the ATCO to reject recognized command values for pre-filling radar label values by clicking on a rejection button. The ASR system should provide the functionality to be switched off and switched on when necessary. The Command Recognition Error Rate of ASR should be less than 2.5% for ATCOs. |

| FHz#08 ASR recognizes an incorrect callsign, and the command is displayed for the incorrect flight in the CWP HMI | -ATCO utters an illegal/non-understandable callsign followed by a command -ATCO utters a legal and understandable callsign and a command, but ASR recognizes an incorrect callsign | ATCO utters a legal and understandable command, but ASR recognizes an incorrect callsign, which is being considered by the system, and the command is displayed for the incorrect flight in the CWP HMI. | The Command Recognition Error Rate of ASR should be less than 2.5% for ATCOs. The Command Recognition Rate of ASR for ATCOs should be higher than 85%. ATCO is supported by the clearance monitoring aids as in today’s operations. |

Appendix B. Bottom-Up Analysis

| Technical System Element | Failure Mode | Effects | Mitigation/Safety Requirement |

|---|---|---|---|

| Command Prediction | Fails to forecast possible future controller commands, or fails to receive the external data required for this forecast (external data can be radar data, flight plan data, weather data, airspace data, and also historic data of those types). | The speech recognizer relies on the predicted commands as input. Commands that are not predicted generally cannot be recognized. Hence, if the command prediction accuracy is worse than the recognition accuracy itself, the command prediction functionality may no longer benefit the recognition engine. | If ASR is used without a plausibility checker, the Command Prediction Error Rate should not exceed 10%, nor 50% of the complementary command recognition rate (i.e., 100% minus the command recognition rate). |

| Recognize Voice Words | Fails to analyze the voice flow and transform it into a text string, or does not receive the voice flow. | No callsign or command is recognized by ASR and displayed on the CWP HMI. | If ASR does not provide an input, ATCO proceeds as in current operations (manual input and no highlighting of the callsign). |

| Apply Ontology and Logical check | Fails to analyze the text string, to transform it into a set of predefined commands and to discard incoherent commands. | The ASR output is erroneous and incoherent. | The ASR shall recognize commands of different command categories. |
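The Command Prediction requirement combines two bounds. With a command recognition rate of 85%, for instance, half of the complementary rate is 7.5%, which is tighter than the fixed 10% cap; at a recognition rate of 70% the 10% cap applies instead. A minimal sketch of that arithmetic, with rates expressed as fractions and hypothetical function names:

```python
def max_prediction_error_rate(command_recognition_rate: float) -> float:
    """Upper bound on the Command Prediction Error Rate (no plausibility checker).

    Both rates are fractions in [0, 1]. The bound is the smaller of a fixed
    10 % cap and half of (1 - command recognition rate).
    """
    if not 0.0 <= command_recognition_rate <= 1.0:
        raise ValueError("command recognition rate must be in [0, 1]")
    return min(0.10, 0.5 * (1.0 - command_recognition_rate))


def prediction_error_acceptable(prediction_error_rate: float,
                                command_recognition_rate: float) -> bool:
    # "Should not be higher than" is read as a non-strict bound (<=).
    return prediction_error_rate <= max_prediction_error_rate(command_recognition_rate)
```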

References

- Helmke, H.; Ohneiser, O.; Mühlhausen, T.; Wies, M. Reducing Controller Workload with Automatic Speech Recognition. In Proceedings of the 35th Digital Avionics Systems Conference (DASC), Sacramento, CA, USA, 25–29 September 2016.

- European Commission. Commission Implementing Regulation (EU) 2017/373 of 1 March 2017 Laying down Common Requirements for Providers of Air Traffic Management/Air Navigation Services and Other Air Traffic Management Network Functions and Their Oversight Repealing Regulation (EC) No 482/2008, Implementing Regulations (EU) No 1034/2011, (EU) No 1035/2011 and (EU) 2016/1377 and Amending Regulation (EU) No 677/2011. 2017. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32017R0373 (accessed on 23 October 2023).

- SESAR. SESAR Safety Reference Materials Ed 4.1. 2019. Available online: https://www.sesarju.eu/sites/default/files/documents/transversal/SESAR2020%20Safety%20Reference%20Material%20Ed%2000_04_01_1%20(1_0).pdf (accessed on 23 October 2023).

- García, R.; Albarrán, J.; Fabio, A.; Celorrio, F.; Pinto de Oliveira, C.; Bárcena, C. Automatic Flight Callsign Identification on a Controller Working Position: Real-Time Simulation and Analysis of Operational Recordings. Aerospace 2023, 10, 433.

- Helmke, H.; Kleinert, M.; Ahrenhold, N.; Ehr, H.; Mühlhausen, T.; Ohneiser, O.; Klamert, L.; Motlicek, P.; Prasad, A.; Zuluaga-Gómez, J.; et al. Automatic Speech Recognition and Understanding for Radar Label Maintenance Support Increases Safety and Reduces Air Traffic Controllers’ Workload. In Proceedings of the 15th USA/Europe Air Traffic Management Research and Development Seminar, ATM 2023, Savannah, GA, USA, 5–9 June 2023.

- Kleinert, M.; Helmke, H.; Moos, S.; Hlousek, P.; Windisch, C.; Ohneiser, O.; Ehr, H.; Labreuil, A. Reducing Controller Workload by Automatic Speech Recognition Assisted Radar Label Maintenance. In Proceedings of the 9th SESAR Innovation Days, Athens, Greece, 2–5 December 2019.

- European Space Agency. Technology Readiness Levels Handbook for Space Applications. September 2008. TEC-SHS/5551/MG/ap. Available online: https://connectivity.esa.int/sites/default/files/TRL_Handbook.pdf (accessed on 23 October 2023).

- Santorini, R.; SESAR Digital Academy—Innovation in Airspace Utilization, 29 April 2021. SESAR Joint Undertaking|Automated Speech Recognition for Air Traffic Control. Available online: https://www.sesarju.eu/node/3823 (accessed on 6 October 2023).

- Zuluaga-Gomez, J.; Nigmatulina, I.; Prasad, A.; Motlicek, P.; Khalil, D.; Madikeri, S.; Tart, A.; Szoke, I.; Lenders, V.; Rigault, M.; et al. Lessons Learned in Transcribing 5000 h of Air Traffic Control Communications for Robust Automatic Speech Understanding. Aerospace 2023, 10, 898.

- Khalil, D.; Prasad, A.; Motlicek, P.; Zuluaga-Gomez, J.; Nigmatulina, I.; Madikeri, S.; Schuepbach, C. An Automatic Speaker Clustering Pipeline for the Air Traffic Communication Domain. Aerospace 2023, 10, 876.

- Zuluaga-Gomez, J.; Prasad, A.; Nigmatulina, I.; Motlicek, P.; Kleinert, M. A Virtual Simulation-Pilot Agent for Training of Air Traffic Controllers. Aerospace 2023, 10, 490.

- Kleinert, M.; Helmke, H.; Shetty, S.; Ohneiser, O.; Ehr, H.; Prasad, A.; Motlicek, P.; Harfmann, J. Automated Interpretation of Air Traffic Control Communication: The Journey from Spoken Words to a Deeper Understanding of the Meaning. In Proceedings of the 40th Digital Avionics Systems Conference (DASC), Hybrid Conference, San Antonio, TX, USA, 3–7 October 2021.

- Chen, S.; Kopald, H.D.; Chong, R.; Wei, Y.; Levonian, Z. Read back error detection using automatic speech recognition. In Proceedings of the 12th USA/Europe Air Traffic Management Research and Development Seminar (ATM2017), Seattle, WA, USA, 26–30 June 2017.

- Lin, Y.; Deng, L.; Chen, Z.; Wu, X.; Zhang, J.; Yang, B. A Real-Time ATC Safety Monitoring Framework Using a Deep Learning Approach. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4572–4581.

- Helmke, H.; Ohneiser, O.; Buxbaum, J.; Kern, C. Increasing ATM Efficiency with Assistant Based Speech Recognition. In Proceedings of the 12th USA/Europe Air Traffic Management Research and Development Seminar (ATM2017), Seattle, WA, USA, 26–30 June 2017.

- Kleinert, M.; Ohneiser, O.; Helmke, H.; Shetty, S.; Ehr, H.; Maier, M.; Schacht, S.; Wiese, H. Safety Aspects of Supporting Apron Controllers with Automatic Speech Recognition and Understanding Integrated into an Advanced Surface Movement Guidance and Control System. Aerospace 2023, 10, 596.

- Karlsson, J. Automatic Speech Recognition in Air Traffic Control: A Human Factors Perspective. In NASA, Langley Research Center, Joint University Program for Air Transportation Research, 1989–1990; NASA: Washington, DC, USA, 1990; pp. 9–13.

- Lin, Y.; Ruan, M.; Cai, K.; Li, D.; Zeng, Z.; Li, F.; Yang, B. Identifying and managing risks of AI-driven operations: A case study of automatic speech recognition for improving air traffic safety. Chin. J. Aeronaut. 2023, 36, 366–386.

- Zhou, S.; Guo, D.; Hu, Y.; Lin, Y.; Yang, B. Data-driven traffic dynamic understanding and safety monitoring applications. In Proceedings of the 2022 IEEE 4th International Conference on Civil Aviation Safety and Information Technology, Dali, China, 12–14 October 2022.

- European Union Aviation Safety Agency. EASA Artificial Intelligence Roadmap 2.0; Human-Centric Approach to AI in Aviation; European Union Aviation Safety Agency: Cologne, Germany, 2023; Available online: https://www.easa.europa.eu/ai (accessed on 23 October 2023).

- European Union Aviation Safety Agency. EASA Concept Paper: Guidance for Level 1 & 2 Machine Learning Applications—Proposed Issue 02, Cologne, Germany. 2023. Available online: https://www.easa.europa.eu/en/downloads/137631/en (accessed on 23 October 2023).

- SESAR. Guidance to Apply SESAR Safety Reference Material, Ed. 3.1. 2018. Available online: https://www.sesarju.eu/sites/default/files/documents/transversal/SESAR%202020%20-%20Guidance%20to%20Apply%20the%20SESAR2020%20Safety%20Reference%20Material.pdf (accessed on 23 October 2023).

- EUROCONTROL. Safety Assessment Methodology Ed2.2; EUROCONTROL: Brussels, Belgium, 2006.

- Insignia. Available online: https://insignia.enaire.es (accessed on 28 February 2023).

- SESAR. D4.1.100—PJ.10-W2-96 ASR-TRL6 Final TVALR—Part I. V 01.00.00; SESAR Joint Undertaking, Brussels, Belgium, May 2023. Available online: https://cordis.europa.eu/project/id/874464/results (accessed on 23 October 2023).

- European Organization for Civil Aviation Equipment. EUROCAE ED-125, Process for Specifying Risk Classification Scheme and Deriving Safety Objectives in ATM; EUROCAE: Malakoff, France, 2010.

- SESAR. D4.1.020—PJ.10-W2-96 ASR-TRL6 Final TS/IRS—Part I. V 01.00.00; SESAR Joint Undertaking, Brussels, Belgium, May 2023. Available online: https://cordis.europa.eu/project/id/874464/results (accessed on 23 October 2023).

- Dehn, D.M. Assessing the Impact of Automation on the Air Traffic Controller: The SHAPE Questionnaires. Air Traffic Control Q. 2008, 16, 127–146.

- Hart, S. NASA-task load index (NASA-TLX); 20 years later. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Los Angeles, CA, USA, 16–20 October 2006; pp. 904–908.

- Stolcke, A.; Droppo, J. Comparing Human and Machine Errors in Conversational Speech Transcription. In Proceedings of Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 137–141. Available online: https://www.isca-speech.org/archive/interspeech_2017/stolcke17_interspeech.html (accessed on 23 October 2023).

- Jordan, C.S.; Brennen, S.D. Instantaneous Self-Assessment of Workload Technique (ISA); Defence Research Agency: Portsmouth, UK, 1992.

- ICAO. Procedures for Air Navigation Services (PANS)—Air Traffic Management Doc 4444, 16th ed.; ICAO: Montreal, QC, Canada, 2016.

| Question ID | Content |

|---|---|

| 1 | How insecure, discouraged, irritated, stressed, and annoyed were you? (Stress annoyed) |

| 2 | What was your peak workload? (Peak workload) |

| 3 | In the previous run I … started to focus on a single problem or a specific aircraft. (Single aircraft) |

| 4 | In the previous run there … was a risk of forgetting something important (such as inputting the spoken command values into the labels). (Risk to Forget) |

| 5 | In the previous run, how much effort did it take to evaluate conflict resolution options against the traffic situation and conditions? (Conflict resolution) |

| 6 | In the previous run, how much effort did it take to evaluate the consequences of a plan? (Consequences) |

| 7 | In the previous working period, I felt that … the system was reliable. (Reliable) |

| 8 | In the previous working period, I felt that … I was confident when working with the system. (Confidence) |

| 9 | I … found the system unnecessarily complex. (Complexity) |

| 10 | Please read the descriptors and score your overall level of user acceptance experienced during the run. Please check the appropriate number. (User Acceptance) |

| Functionality Hazard & Severity | Potential Causes & Operational Effect | Mitigations Protecting against Propagation of Effects |

|---|---|---|

| FHz#01 Significant delay in ASR callsign/command recognition and/or display No Immediate Effect on Safety | -Design issue -ASR provides delayed output. If the use of ASR introduces delays in the usage of speech information (display of inputs, identification of aircraft, etc.), this may cause the ATCOs to focus on a specific flight or part of the Area of Responsibility until they can verify that the action induced by ASR has been correctly processed and displayed. This may have a negative impact on ATCO situational awareness. | Contingency measure to switch off ASR. |

| FHz#02 ASR fails to identify an aircraft from pilot’s utterance—no aircraft is highlighted No Immediate Effect on Safety | -Pilot utters a non-understandable callsign, or the environment is noisy -Pilot utters a legal and understandable callsign, but ASR fails to recognize it. If the pilot performs the radio call and the flight is not highlighted, the ATCO may have to scan the area of responsibility (AoR) to locate the aircraft. However, if the ASR functionality to highlight the callsign is defined in the new operating method, the ATCO will by default expect it to be functional and to assist in locating aircraft, resulting in a minor increase in workload and reduction in situational awareness. | ATCO may have to scan the Area of Responsibility to locate the aircraft. No difference to current operating method. |

| FHz#03 ASR fails to identify an aircraft from controller’s utterance—no aircraft is highlighted No Immediate Effect on Safety | -ATCO utters an illegal/non-understandable callsign -ATCO utters a legal and understandable callsign, but ASR fails to recognize it. If the ATCO performs the radio call, the impact is assumed to be minor to negligible, because the ATCO’s attention is already focused on the aircraft being called. | No difference to current operating method. |

| FHz#04 ASR erroneously identifies an aircraft from pilot’s utterance—wrong aircraft is highlighted No Immediate Effect on Safety | -If the pilot performs the radio call and an erroneous flight is highlighted, the ATCO may focus on the highlighted aircraft and issue the clearance intended for the calling aircraft to the wrong flight. The difference to the current operating method is that, while occasional confusion between similar callsigns can already occur, the ASR system now reinforces the ATCO’s perception that the clearance is being issued to the correct flight. If the confusion is not clarified through the read-back/hear-back procedure or with the assistance of the planning controller, a clearance issued to the wrongly highlighted aircraft may result in an unintended trajectory change. From a safety perspective, this is not significantly different from the current operating method, when the ATCO enters a clearance into the radar label of the wrong callsign. | If the confidence level of the callsign recognition is not sufficiently high, it is not highlighted. For lower confidence levels, a different highlight color is used to emphasize the uncertainty of correct recognition. If the erroneous recognition persists, the ATCO switches off the ASR and continues working as in today’s operations. |

| FHz#05 ASR erroneously identifies an aircraft from controller’s utterance—wrong aircraft is highlighted. No Immediate Effect on Safety | If the ATCO performs the radio call and an erroneous flight is highlighted, the impact is assumed to be minor, as the ATCO’s attention is on the aircraft being called and the impact of the erroneous highlight is negligible. | If the confidence level of the callsign recognition is not sufficiently high, it is not highlighted. For lower confidence levels, a different highlight color is used to emphasize the uncertainty of correct recognition. |

| FHz#06 ASR fails to identify a command from controller’s utterance. No Immediate Effect on Safety | -ATCO utters an illegal/non-understandable command -ATCO utters a legal and understandable command, but ASR fails to recognize it. The failure of ASR to identify a complete command forces the ATCO to make the input manually. In such cases, a negative impact on ATCO workload and situational awareness is expected, as in the new operating method the ATCO by default expects ASR to be functional and to assist in inputting commands in the labels. | ATCO inputs command manually. If the failure of ASR to recognize commands persists, ATCO switches off the ASR and continues working as in today’s operations. |

| FHz#07 ASR erroneously identifies a command from controller’s utterance No Immediate Effect on Safety | -ATCO utters an illegal/non-understandable command -ATCO utters a legal and understandable command, but ASR recognizes the incorrect command, and wrong command is displayed in the CWP HMI. In cases where inputs are provided but are erroneous, the ATCO will have to recognize the error and change information already put into the system. Depending on the ATM system, some parts of correcting the clearance, route change, etc., may require manipulation of the flight plan route data to input the correction. In such cases the impact on ATCO workload and potential disruption to the ATCO workflow may be higher than in cases where only missing data need to be input to complete the clearance. | ATCO corrects ASR input manually for the intended callsign. If the failure of ASR to recognize commands correctly persists, ATCO switches off the ASR and continues working as in today’s operations. |

| FHz#08 ASR recognizes an incorrect callsign, and the command is displayed for the incorrect flight in the CWP HMI No Immediate Effect on Safety | ATCO utters a legal and understandable command, but ASR recognizes an incorrect callsign, which is being considered by the system, and the command is displayed for the incorrect flight in the CWP HMI. In cases where inputs are provided but are erroneous (i.e., command input for the wrong aircraft), the ATCO will have to recognize the error and change information already put into the system. If the error is not recognized by the controller, the contacted pilot will nevertheless follow the clearance issued by the controller on the frequency. The erroneous input in the label of another aircraft will soon be detected by clearance monitoring aids. | If the ATCO recognizes the error, he/she rejects the input and either repeats the clearance or enters it manually into the radar label of the correct aircraft. If the ATCO does not recognize the error, monitoring aids will detect the discrepancy between the flown trajectory and the command inserted in the label of the erroneous aircraft. |
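The FHz#08 mitigation relies on monitoring aids detecting a mismatch between the flown trajectory and the command in the label. As a purely hypothetical illustration, not a description of any operational monitoring aid, the sketch below flags a track whose label shows a cleared flight level (CFL) that the aircraft is neither at nor moving toward. The field names and tolerances are assumptions, and a real aid would also allow for the aircraft's reaction time before raising an alert.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    callsign: str
    flight_level: float          # current flight level (hypothetical input)
    vertical_rate_fpm: float     # feet per minute, positive when climbing
    label_cfl: Optional[float]   # cleared flight level shown in the radar label


def cfl_nonconformant(track: Track, level_tol: float = 2.0,
                      rate_tol_fpm: float = 300.0) -> bool:
    """Flag a track whose label CFL is neither reached nor being approached."""
    if track.label_cfl is None:
        return False                     # nothing in the label to monitor
    delta = track.label_cfl - track.flight_level
    if abs(delta) <= level_tol:
        return False                     # at (or very close to) the label CFL
    if delta > 0:
        return track.vertical_rate_fpm < rate_tol_fpm   # should be climbing
    return track.vertical_rate_fpm > -rate_tol_fpm      # should be descending
```

In the FHz#08 scenario, the wrongly labeled aircraft keeps its level while its label shows a new CFL, so a check of this kind would flag it; the contacted aircraft, which follows the spoken clearance, would not be flagged once its own label is corrected.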

| Hazard | Objective Metrics | Subjective Feedback on the Statement |
|---|---|---|
| FHz#01: Significant delay in ASR command recognition and/or display (all use cases). | Timeliness (processing time) | Applicable to all hazards: The accuracy of the information provided by the ASR system is adequate for the accomplishment of operations. The command recognition error rate stays within acceptable limits. The number and/or severity of errors resulting from the introduction of the ASR system is within tolerable limits, considering error type and operational impact. The level of ATCO’s situational awareness is not reduced by the introduction of the ASR system (the ATCO is able to perceive and interpret task-relevant information and anticipate future events/actions). The level of ATCO’s workload is maintained or decreased with the introduction of the ASR system. |
| FHz#02: ASR fails to identify an aircraft from the pilot’s utterance; no aircraft is highlighted (use case 1). | Pilot’s callsign recognition rate (no callsign highlighted) | |
| FHz#04: ASR erroneously identifies an aircraft from the pilot’s utterance; the wrong aircraft is highlighted (use case 1). | Pilot’s callsign recognition error rate | |
| FHz#03: ASR fails to identify an aircraft from the controller’s utterance; no aircraft is highlighted (use case 2). | Controller’s callsign recognition rate (no callsign highlighted); controller’s callsign recognition error rate (wrong callsign highlighted) | |
| FHz#05: ASR erroneously identifies an aircraft from the controller’s utterance; the wrong aircraft is highlighted (use case 2). | Controller’s callsign recognition rate (no callsign highlighted); controller’s callsign recognition error rate (wrong callsign highlighted) | |
| FHz#06: ASR fails to identify a command from the controller’s utterance (use cases 3 and 4). | Controller’s command recognition rate | |
| FHz#07: ASR erroneously identifies a command from the controller’s utterance (use cases 3 and 4). | Controller’s command recognition error rate | |
| FHz#08: ASR recognizes an incorrect callsign, and the command is displayed for the incorrect flight in the CWP HMI (use cases 3 and 4). | Controller’s callsign recognition error rate | |
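The objective metrics above pair each hazard with a rate. The following minimal sketch assumes that each evaluated utterance is classified against a manually annotated transcript as correct, erroneous, or rejected (no output at all), so that the three rates sum to 100%. Under that assumption, the "fails to identify" hazards (FHz#02, FHz#03, FHz#06) appear as a lower recognition rate, and the "erroneously identifies" hazards (FHz#04, FHz#05, FHz#07, FHz#08) appear as a higher error rate. The `Outcome` enum and the `recognition_metrics` function are hypothetical names, not part of the evaluated systems.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    """Classification of one ASR output against a manually annotated gold standard."""
    CORRECT = "correct"    # output present and matching the annotation
    ERROR = "error"        # output present but wrong (e.g. wrong aircraft highlighted)
    REJECTED = "rejected"  # no output at all (e.g. no aircraft highlighted)


def recognition_metrics(outcomes: list[Outcome]) -> dict[str, float]:
    """Return recognition, error and rejection rates as fractions of all samples."""
    if not outcomes:
        raise ValueError("at least one evaluated sample is required")
    counts = Counter(outcomes)
    n = len(outcomes)
    return {
        "recognition_rate": counts[Outcome.CORRECT] / n,
        "error_rate": counts[Outcome.ERROR] / n,
        "rejection_rate": counts[Outcome.REJECTED] / n,
    }


# Hypothetical sample: 8 correct highlights, 1 wrong aircraft, 1 missing highlight.
sample = [Outcome.CORRECT] * 8 + [Outcome.ERROR] + [Outcome.REJECTED]
print(recognition_metrics(sample))
```

Keeping rejection separate from error matters for the safety argument: a missing highlight (rejection) is typically easier for the ATCO to notice than a wrong one, which is why the two callsign exercises trade recognition rate against error rate differently.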

| Analysis Type | ATCO: N° of Callsigns | ATCO: N° Callsigns Detected | ATCO: Percentage | Flight Crew: N° of Callsigns | Flight Crew: N° Callsigns Detected | Flight Crew: Percentage |
|---|---|---|---|---|---|---|
| RTS recordings | 859 | 721 | 84% | 687 | 457 | 67% |
| Operational recordings | 143 | 127 | 87% | 158 | 77 | 49% |
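The percentages in the callsign table are detection counts divided by the number of callsigns. A small sketch for recomputing them with integer arithmetic (rounded half up) is shown below; the function name `percent` and the row layout are illustrative assumptions. The flight-crew RTS row is read as 457 detected out of 687, the only order consistent with the printed 67%. Recomputed this way, the rows give 84%, 67% and 49% as printed, while the operational ATCO row gives 89% rather than the printed 87%, so one of the three figures in that row appears to be inconsistent.

```python
def percent(detected: int, total: int) -> int:
    """Detection rate in whole percent, rounded half up (integer arithmetic)."""
    if total <= 0 or not 0 <= detected <= total:
        raise ValueError("need 0 <= detected <= total and total > 0")
    return (200 * detected + total) // (2 * total)


# (analysis type, speaker, total callsigns, detected callsigns) from the table above;
# the flight-crew RTS row is read as 457 detected out of 687.
ROWS = [
    ("RTS recordings", "ATCO", 859, 721),
    ("RTS recordings", "Flight crew", 687, 457),
    ("Operational recordings", "ATCO", 143, 127),
    ("Operational recordings", "Flight crew", 158, 77),
]

for row, speaker, total, detected in ROWS:
    print(f"{row:<24} {speaker:<12} {percent(detected, total):>3}%")
```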

| Analysis Type | Only Command Recognition: Commands | Only Command Recognition: Detected Commands | Only Command Recognition: % | Callsign + Command Recognition: Commands | Callsign + Command Recognition: Detected Callsign + Commands | Callsign + Command Recognition: % |
|---|---|---|---|---|---|---|
| RTS recordings | 695 | 619 | 89% | 695 | 523 | 75% |
| Operational recordings | 182 | 167 | 92% | 182 | 146 | 80% |

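| Analysis Type | Complete Command Recognition: Commands | Complete Command Recognition: Detected Commands | Complete Command Recognition: % | Callsign + Complete Command Recognition: Commands | Callsign + Complete Command Recognition: Detected Callsign + Commands | Callsign + Complete Command Recognition: % |
|---|---|---|---|---|---|---|
| RTS recordings | 695 | 498 | 72% | 695 | 416 | 60% |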
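The two command tables can be read together. Assuming that every "Callsign + Command" detection is also counted among the command-only detections (an assumption about how the evaluation was counted, not stated explicitly), the ratio of the two detected counts gives the share of correctly recognized commands that were also attached to the correct callsign. The sketch below computes that ratio; `PAIRS` and `share_with_correct_callsign` are hypothetical names.

```python
# (label, commands detected without callsign check, commands detected
#  together with the correct callsign), taken from the two command tables above.
PAIRS = [
    ("RTS, command", 619, 523),
    ("Operational, command", 167, 146),
    ("RTS, complete command", 498, 416),
]


def share_with_correct_callsign(command_ok: int, both_ok: int) -> float:
    """Percentage (one decimal) of recognized commands whose callsign was also recognized.

    Assumes the callsign + command detections are a subset of the command-only
    detections, so both_ok can never exceed command_ok.
    """
    if command_ok <= 0 or not 0 <= both_ok <= command_ok:
        raise ValueError("expected 0 <= both_ok <= command_ok and command_ok > 0")
    return round(100 * both_ok / command_ok, 1)


for label, command_ok, both_ok in PAIRS:
    print(f"{label:<24} {share_with_correct_callsign(command_ok, both_ok):5.1f}%")
```

Under this reading, most of the drop from "command" to "callsign + command" recognition comes from callsign errors on otherwise correctly recognized commands, which ties these results to hazard FHz#08.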

| Level of Evaluation | WER | Cmd-Recog-Rate | Cmd-Error-Rate | Csgn-Recog-Rate | Csgn-Error-Rate |
|---|---|---|---|---|---|
| Full Command | 3.1% | 92.1% | 2.8% | 97.8% | 0.6% |
| Only Label | | 92.5% | 2.4% | | |
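The WER column uses the standard word error rate: the minimum number of word substitutions (S), deletions (D) and insertions (I) needed to turn the recognized word sequence into the reference transcript, divided by the number of reference words N, i.e. WER = (S + D + I) / N. The sketch below computes it with a Levenshtein alignment over whitespace-separated words. The text normalization used in the exercises (casing, number words, etc.) is not given here, so plain whitespace tokenization is an assumption, and the example utterance is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = minimum edits turning the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)  # i deletions to reach an empty hypothesis
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(prev[j] + 1,             # deletion of reference word r
                         cur[j - 1] + 1,          # insertion of hypothesis word h
                         prev[j - 1] + (r != h))  # substitution (or match if equal)
        prev = cur
    return prev[-1] / len(ref)


# Hypothetical ATCO utterance with one substituted word ("four" -> "five").
ref = "lufthansa one two three descend flight level two four zero"
hyp = "lufthansa one two three descend flight level two five zero"
print(f"WER = {word_error_rate(ref, hyp):.1%}")
```

Note that a low WER does not guarantee a correct command: in this example a single substituted word out of ten changes the cleared flight level, which is why the table also reports command-level recognition and error rates.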

| Question | Diff | p-Value |
|---|---|---|
| Stress annoyed | −0.16 | 34% |
| Peak workload | −0.32 | 9.9% |
| Single aircraft | 0.04 | −41% |
| Risk to forget | −0.64 | 0.7% |
| Conflict resolution | −0.26 | 24% |
| Consequences | 0.30 | −21% |
| Reliable | −0.24 | 30% |
| Confidence | −1.59 | 1.1% |
| Complexity | −1.98 | 2.0 × 10⁻⁴ |
| User Acceptance | −1.01 | 6.3% |
| Total | −0.56 | 0.4% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pinska-Chauvin, E.; Helmke, H.; Dokic, J.; Hartikainen, P.; Ohneiser, O.; Lasheras, R.G. Ensuring Safety for Artificial-Intelligence-Based Automatic Speech Recognition in Air Traffic Control Environment. Aerospace 2023, 10, 941. https://doi.org/10.3390/aerospace10110941
