What Went Wrong for Bad Solvers during Thematic Map Analysis? Lessons Learned from an Eye-Tracking Study

Abstract

1. Introduction

- (1) What distinguishes less successful and more successful users when solving map analysis tasks?

- (2) Do strategies applied by less successful map users feature some similarities?

- (3) Are outliers, in terms of task-solving strategies, found only among the less successful users?

1.1. Searching for Group Differences among Map Users

- experts solve tasks faster than novices because they recall the necessary information from long-term memory more easily and quickly and, therefore, also solve them more effectively;

- experts link information based on its similarity to the task being solved, while novices tend to link information based on its visual similarity; novices therefore find it more difficult to distinguish essential from non-essential information;

- experts are able to process a greater part of the stimulus at a time than novices, as they are able to extract information from widely distanced and parafoveal regions;

- experts consider various possibilities when solving a task, verify the solution obtained and, based on that, adjust their strategy for solving other tasks.

1.2. Methods of Gaining Insight into Map-Reading and Analysis

2. Materials and Methods

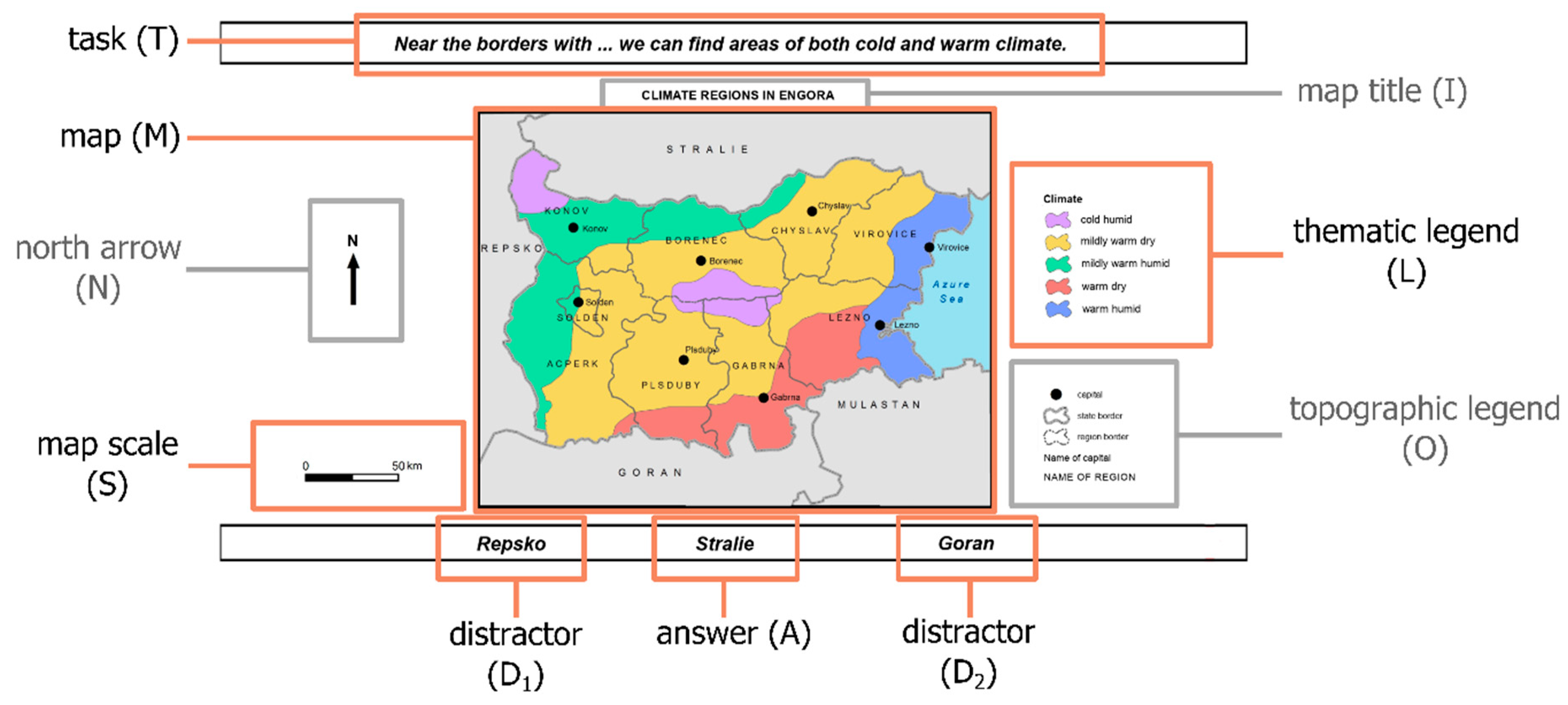

2.1. Methods and Materials

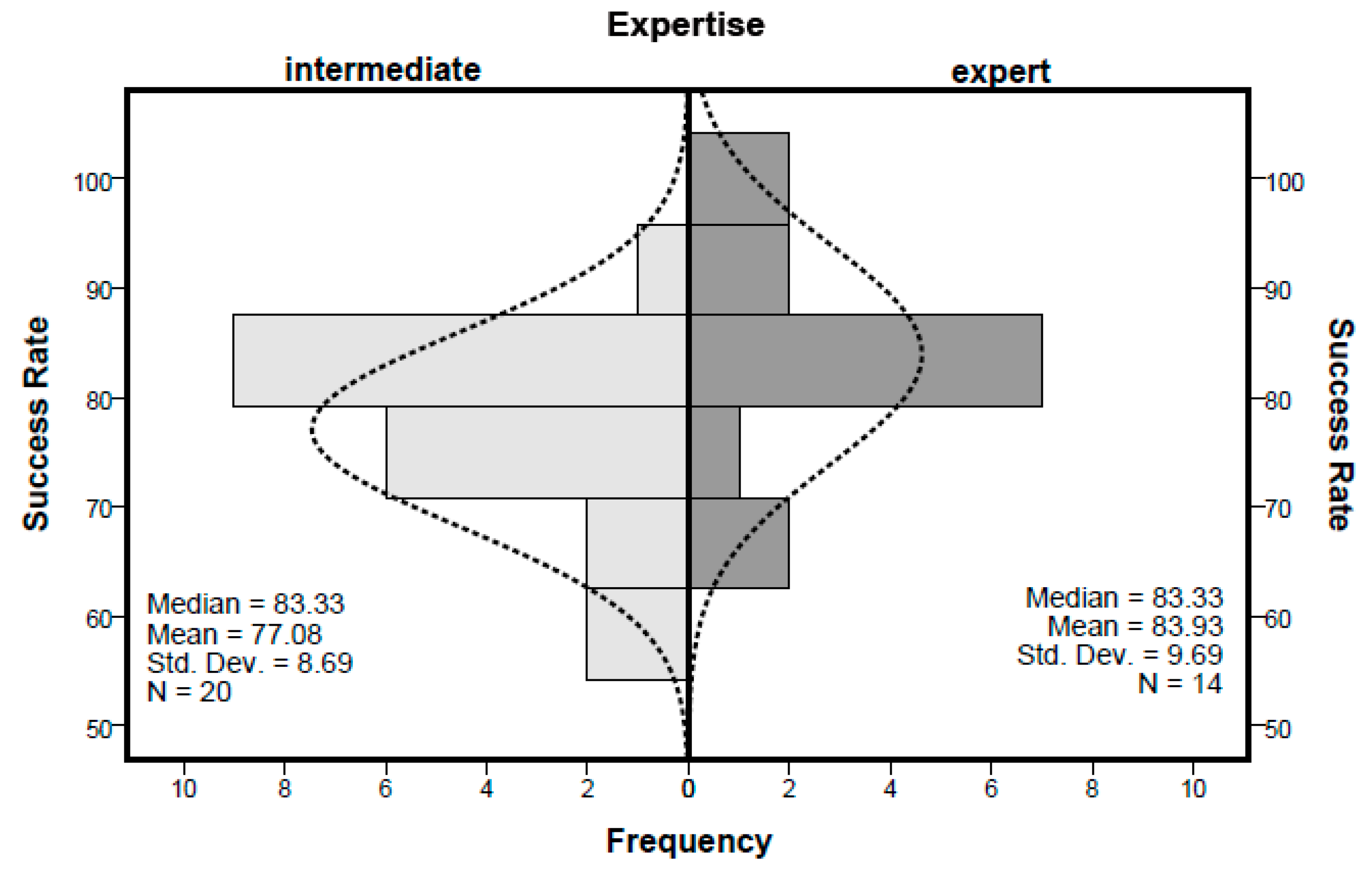

2.2. Participants

2.3. Apparatus

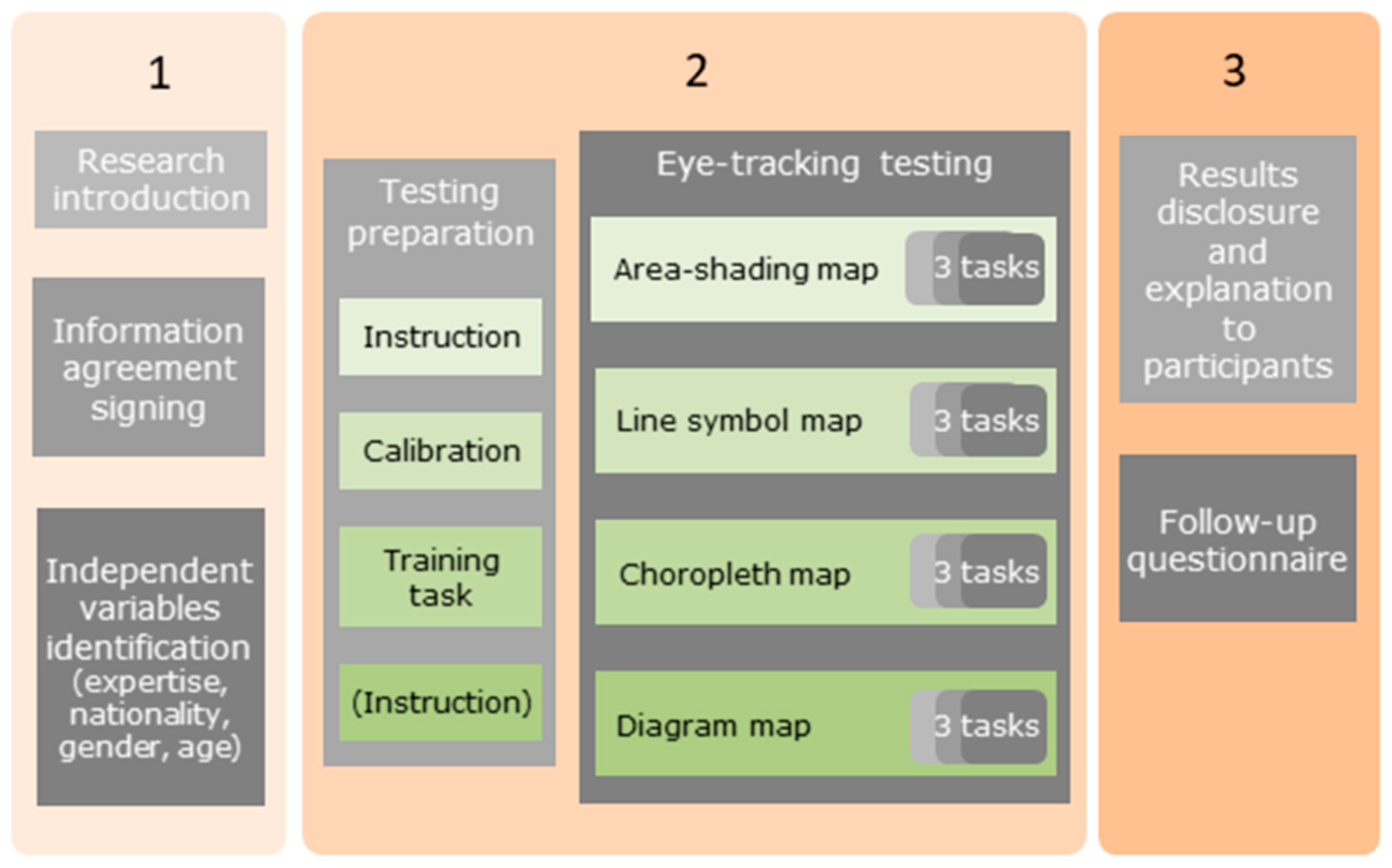

2.4. Procedure

2.5. Data Analysis

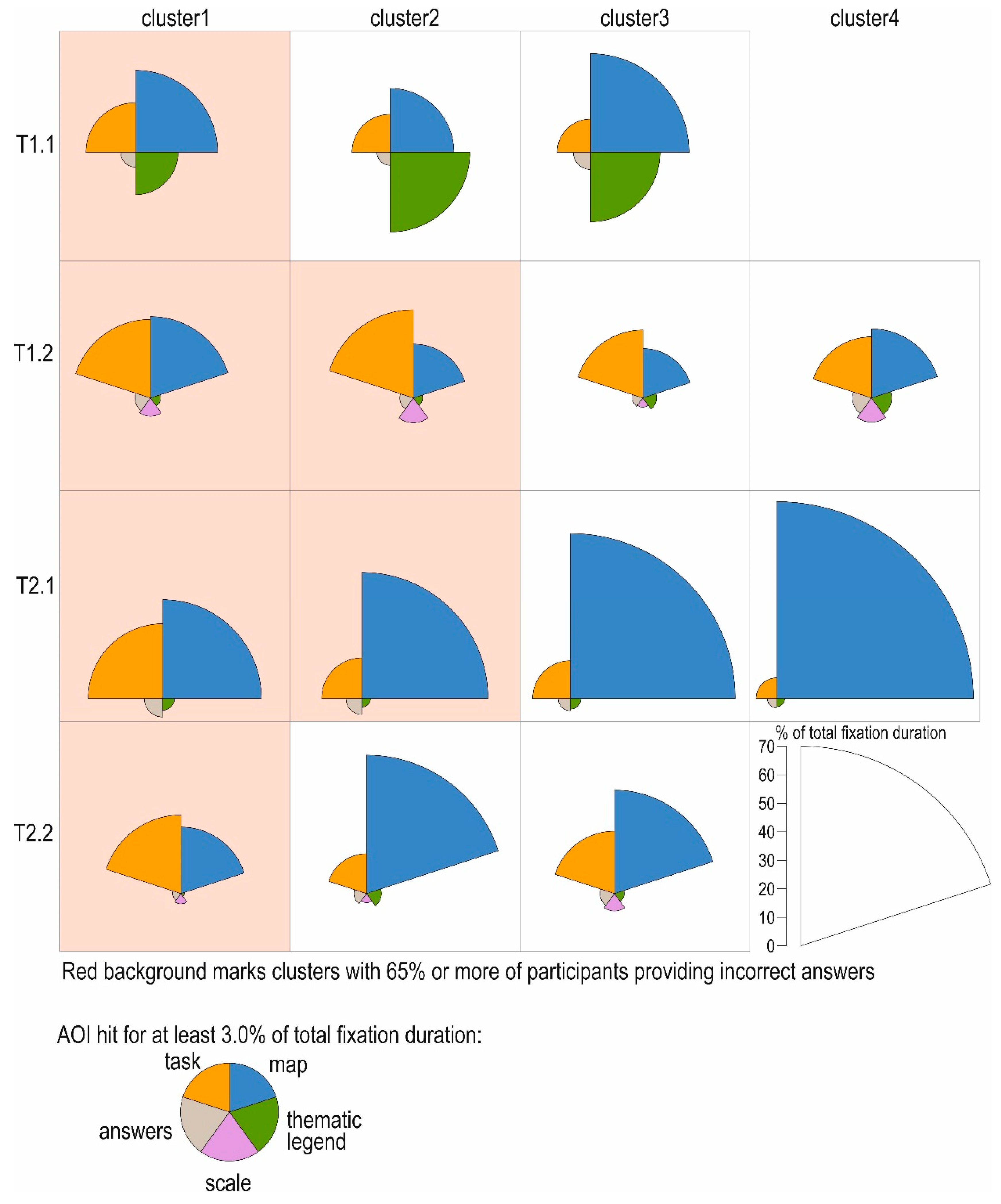

2.5.1. Attention Distribution on Map AOI

2.5.2. Cluster Analysis of Relative Fixation Duration Distribution

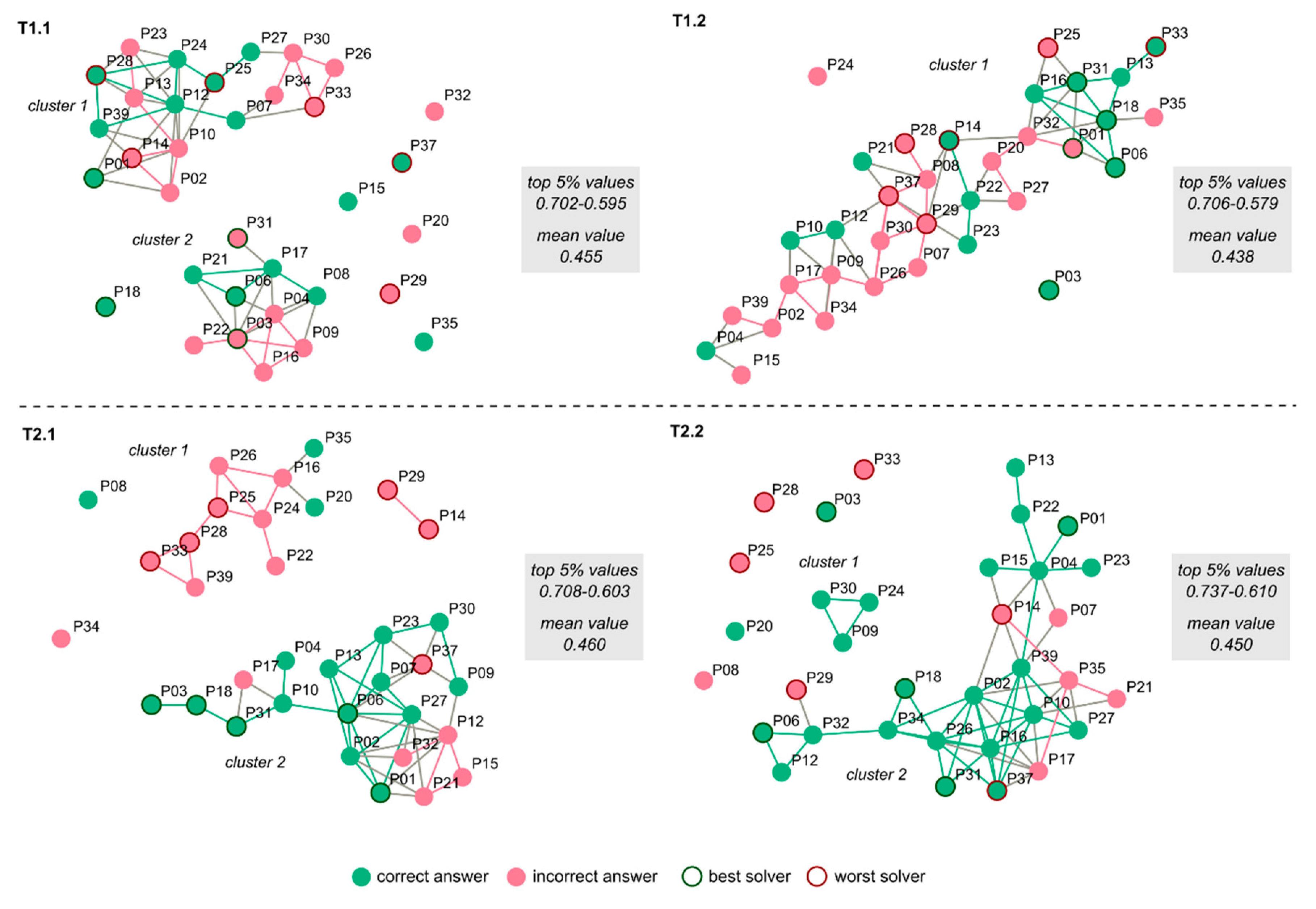

2.5.3. Data-Driven Analysis of Task Solving Similarity

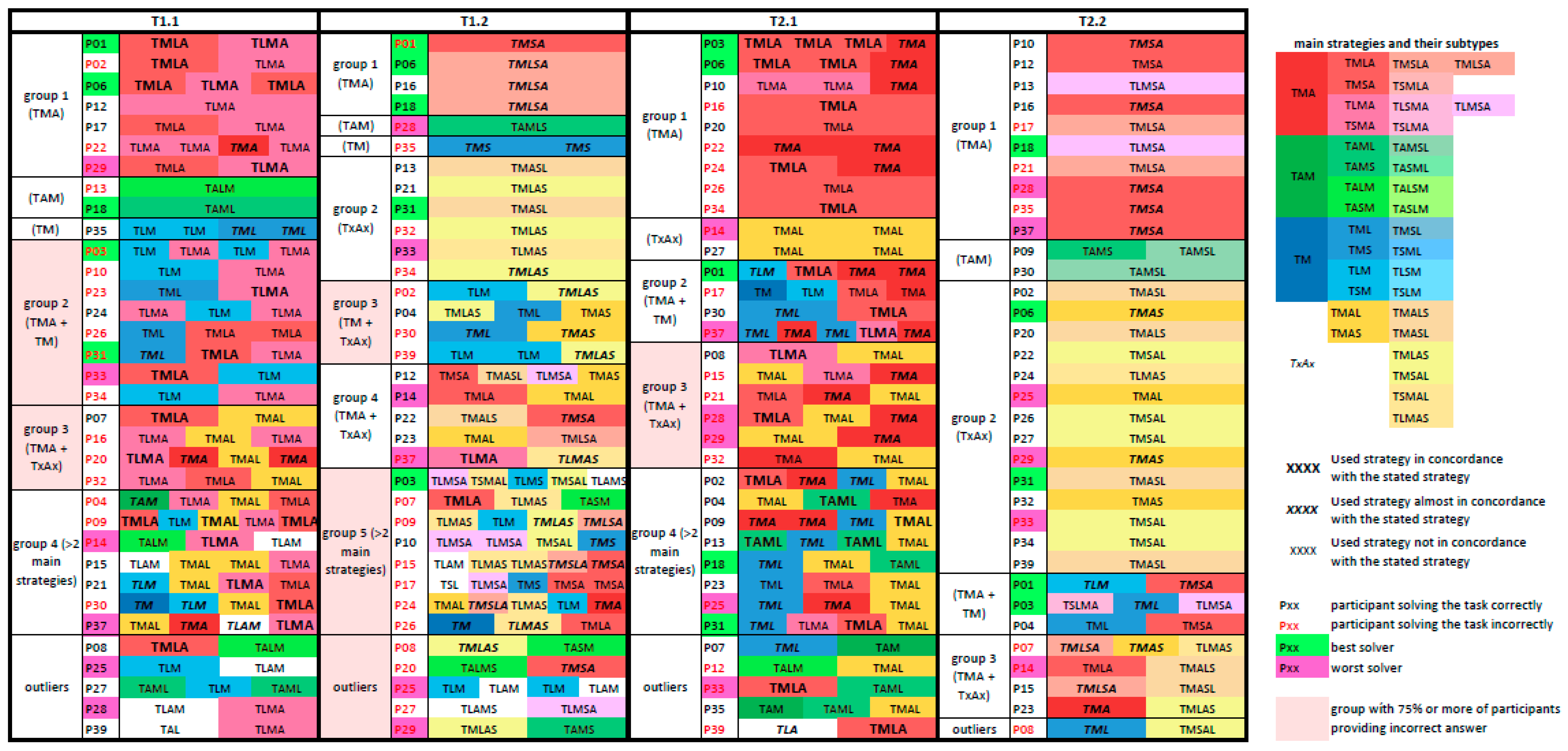

2.5.4. Theory-Driven Analysis of Similarity in Task Solving

- getting familiar with the problem » solving the problem » comparing the solution found with given possible solutions (Task » Map » Answer, i.e., TMA; the approach expressed using the abbreviations for the key AOIs representing individual task-solving phases);

- getting familiar with the problem » checking given possible solutions to the problem » solving the problem (finding which of the possible solutions is the correct one) (TAM);

- getting familiar with the problem » starting to solve the problem » checking given possible solutions to the problem » continuing to solve the problem (TMAM);

- getting familiar with the problem » solving the problem (TM).

- map;

- map » map layout element(s) (i.e., map title, thematic and topographic legend, map scale, north arrow);

- map layout element(s) » map;

- map layout element(s) » map » (an)other map layout element(s).
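The two lists above can be read as small vocabularies of patterns over sequences of visited areas of interest (AOIs). The following is a minimal sketch of how such theory-driven labelling might be automated; it is not the authors' implementation. It assumes that each fixation has already been coded with a single letter (T = task, M = map, A = answer options and, for the second list, L = any map layout element), and that a strategy is recognised by collapsing consecutive repeats and requiring an exact match. Coding every layout element as the same letter is a simplification: it cannot tell whether the second layout element visited differs from the first. The names `collapse` and `classify` are illustrative only.

```python
from itertools import groupby

# Strategy vocabularies taken from the two lists above.
TASK_STRATEGIES = {"TMA", "TAM", "TMAM", "TM"}
# Assumption: "L" stands for any map layout element
# (map title, legends, map scale, north arrow).
MAP_STRATEGIES = {"M", "ML", "LM", "LML"}


def collapse(aoi_sequence: str) -> str:
    """Merge runs of identical AOI letters, e.g. 'TTMMMA' -> 'TMA'."""
    return "".join(letter for letter, _ in groupby(aoi_sequence))


def classify(aoi_sequence: str, strategies: set[str]) -> str:
    """Return the matching strategy label, or 'other' if none matches exactly."""
    pattern = collapse(aoi_sequence)
    return pattern if pattern in strategies else "other"


if __name__ == "__main__":
    # Hypothetical fixation-level AOI strings, one letter per fixation.
    print(classify("TTTMMMMMAA", TASK_STRATEGIES))  # task, then map, then answers
    print(classify("TMMAAMMM", TASK_STRATEGIES))    # map phase interrupted by answers
    print(classify("LLMMMMLL", MAP_STRATEGIES))     # layout, map, layout again
    print(classify("TMAMAMA", TASK_STRATEGIES))     # fits none of the listed patterns
```

Exact matching after collapsing is deliberately strict. A sequence with even one stray fixation on another AOI falls into `other`, so real gaze data would probably need noise filtering (for example, a minimum dwell time per AOI) before this kind of lookup becomes useful.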

3. Results

3.1. Comparing Intermediates and Experts

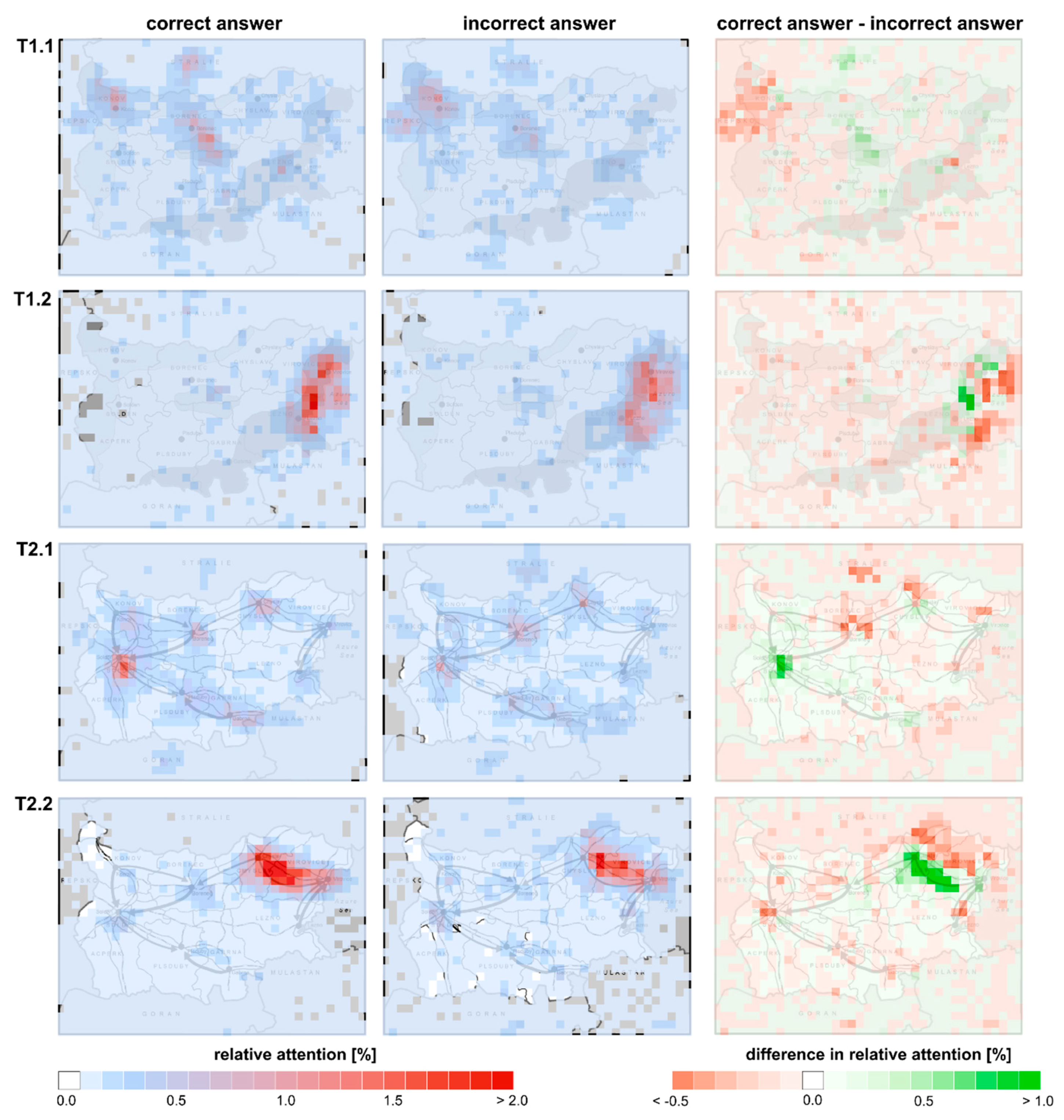

3.2. Visual Attention Distribution

3.2.1. Attention Spread on the Map

3.2.2. Attention Distribution among Layout Elements

3.3. Spatio-Temporal Pattern Discovery

3.3.1. Sequence Similarity Analysis

3.3.2. Theory-Driven Identification of Task-Solving Strategies

4. Discussion

4.1. What Less Successful and More Successful Map Users Do Differently, and Do Strategies Applied by Less Successful Users Feature Some Similarities?

4.2. What Enables/Hinders Identifying Features of Strategies that Characterise Unsuccessful Participants?

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Participant ID | Expertise | T1.1 | T1.2 | T1.3 | T2.1 | T2.2 | T2.3 | T3.1 | T3.2 | T3.3 | T4.1 | T4.2 | T4.3 | Success Rate (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P01 | e | | | | | | | | | | | | | 91.7 |
| P02 | i | | | | | | | | | | | | | 83.3 |
| P03 | i | | | | | | | | | | | | | 91.7 |
| P04 | e | | | | | | | | | | | | | 83.3 |
| P06 | e | | | | | | | | | | | | | 100.0 |
| P07 | i | | | | | | | | | | | | | 83.3 |
| P08 | i | | | | | | | | | | | | | 75.0 |
| P09 | i | | | | | | | | | | | | | 83.3 |
| P10 | i | | | | | | | | | | | | | 83.3 |
| P12 | i | | | | | | | | | | | | | 83.3 |
| P13 | e | | | | | | | | | | | | | 83.3 |
| P14 | i | | | | | | | | | | | | | 66.7 |
| P15 | i | | | | | | | | | | | | | 83.3 |
| P16 | e | | | | | | | | | | | | | 83.3 |
| P17 | i | | | | | | | | | | | | | 75.0 |
| P18 | e | | | | | | | | | | | | | 100.0 |
| P20 | e | | | | | | | | | | | | | 83.3 |
| P21 | e | | | | | | | | | | | | | 83.3 |
| P22 | i | | | | | | | | | | | | | 75.0 |
| P23 | i | | | | | | | | | | | | | 83.3 |
| P24 | i | | | | | | | | | | | | | 83.3 |
| P25 | i | | | | | | | | | | | | | 66.7 |
| P26 | i | | | | | | | | | | | | | 75.0 |
| P27 | i | | | | | | | | | | | | | 75.0 |
| P28 | i | | | | | | | | | | | | | 58.3 |
| P29 | i | | | | | | | | | | | | | 58.3 |
| P30 | i | | | | | | | | | | | | | 83.3 |
| P31 | e | | | | | | | | | | | | | 91.7 |
| P32 | e | | | | | | | | | | | | | 75.0 |
| P33 | e | | | | | | | | | | | | | 66.7 |
| P34 | i | | | | | | | | | | | | | 75.0 |
| P35 | e | | | | | | | | | | | | | 83.3 |
| P37 | e | | | | | | | | | | | | | 66.7 |
| P39 | e | | | | | | | | | | | | | 83.3 |
| Share of correct answers (%) | | 47 | 41 | 94 | 50 | 71 | 94 | 100 | 85 | 91 | 100 | 91 | 94 | |
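The row and column summaries in the table above can be cross-checked against each other. The sketch below converts each participant's success rate and each task's share of correct answers back into counts of correct answers and compares the two totals. It assumes that every one of the 12 tasks was scored as simply correct or incorrect with equal weight, which the surviving table suggests but does not state. The variable names are illustrative.

```python
N_TASKS = 12  # T1.1 ... T4.3

# Success rates (%) copied from the table, in row order (P01 ... P39).
success_rates = [
    91.7, 83.3, 91.7, 83.3, 100.0, 83.3, 75.0, 83.3, 83.3, 83.3,
    83.3, 66.7, 83.3, 83.3, 75.0, 100.0, 83.3, 83.3, 75.0, 83.3,
    83.3, 66.7, 75.0, 75.0, 58.3, 58.3, 83.3, 91.7, 75.0, 66.7,
    75.0, 83.3, 66.7, 83.3,
]

# Share of correct answers (%) per task, copied from the last table row.
task_shares = [47, 41, 94, 50, 71, 94, 100, 85, 91, 100, 91, 94]

n_participants = len(success_rates)

# Assumption: equal-weight, right/wrong scoring, so percentages map to whole counts.
correct_per_participant = [round(r * N_TASKS / 100) for r in success_rates]
correct_per_task = [round(s * n_participants / 100) for s in task_shares]

total_from_rows = sum(correct_per_participant)
total_from_columns = sum(correct_per_task)
overall_rate = 100 * total_from_rows / (n_participants * N_TASKS)

if __name__ == "__main__":
    print(n_participants, total_from_rows, total_from_columns)
    print(f"overall success rate: {overall_rate:.1f}%")
```

Under these assumptions both totals come to 326 correct answers out of 408 (34 participants × 12 tasks). That is an overall success rate of about 79.9% and supports reading the rounded percentages as whole-answer counts.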

| Task Formulation | Task Code | Answer Options |
|---|---|---|
| Near the borders with … we can find areas of both cold and warm climates. | T1.1 | Repsko / Stralie / Goran |
| An approximately …-kilometer-wide area of a warm humid climate edges the coast of the Azure Sea. | T1.2 | 25 / 40 / 55 |
| All the regional capitals of the regions neighboring … are connected by a highway. | T2.1 | Goran / Mulastan / Stralie |
| Residents commuting by train from the capital of Chyslav to the capital of Virovice travel approximately … | T2.2 | 40 / 80 / 120 |

| Specific Tips for (Thematic) Map Analysis | General Tips for Task Solving |
|---|---|
| Get familiar with the map as a whole upon first seeing it, particularly if more complex map skills are required (i.e., map analysis or map interpretation). Specifically, become acquainted with the meaning of all the cartographic signs used by referring to both thematic and topographic legends. | Use all task elements that may be helpful in solving the task efficiently and effectively. Therefore, get familiar with the possible solutions, if they are provided, in the first phase of solving the task, as this can help to narrow down the number of task elements that need to be used. |
| Efficiently take in individual map elements. Specifically, take in the information depicted on the map by comparing the cartographic signs with their meanings stated in the (thematic) legend. | If not working under a time constraint, do not prioritize the time it takes to answer. Double-check whether the solution found corresponds to the task and, where provided, to the solutions given. Moreover, when only one solution can be correct, verify that it is the only solution that fits the task as it was comprehended. |
| Having understood the given task, try to distinguish relevant map layout elements from irrelevant ones in order to decrease the number of map elements you have to thoroughly analyze and repeatedly refer to. The same is true of the map content presented: try to reduce the analyzed map area and/or thematic layers to those that are relevant to the given task. Having done this, try to focus only on this content when executing the given task. | Try to decode the given task prior to actually solving it in order to find its structure and to use an appropriate strategy for the task type identified, based on the set sequence of sub-goals that will lead to its solution. Moreover, try to apply the same strategy to tasks of an identical type (e.g., independent of map type) if it proves to be effective. If not, get familiar with the correct answer and find the reason behind the incorrect solution to be able to aptly modify the strategy. |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Havelková, L.; Gołębiowska, I.M. What Went Wrong for Bad Solvers during Thematic Map Analysis? Lessons Learned from an Eye-Tracking Study. ISPRS Int. J. Geo-Inf. 2020, 9, 9. https://doi.org/10.3390/ijgi9010009
