Utilizing A Game Engine for Interactive 3D Topographic Data Visualization

Abstract

1. Introduction

2. Related Works

2.1. 3D Geospatial Data Visualization

2.2. Unity Game Engine

3. Methods

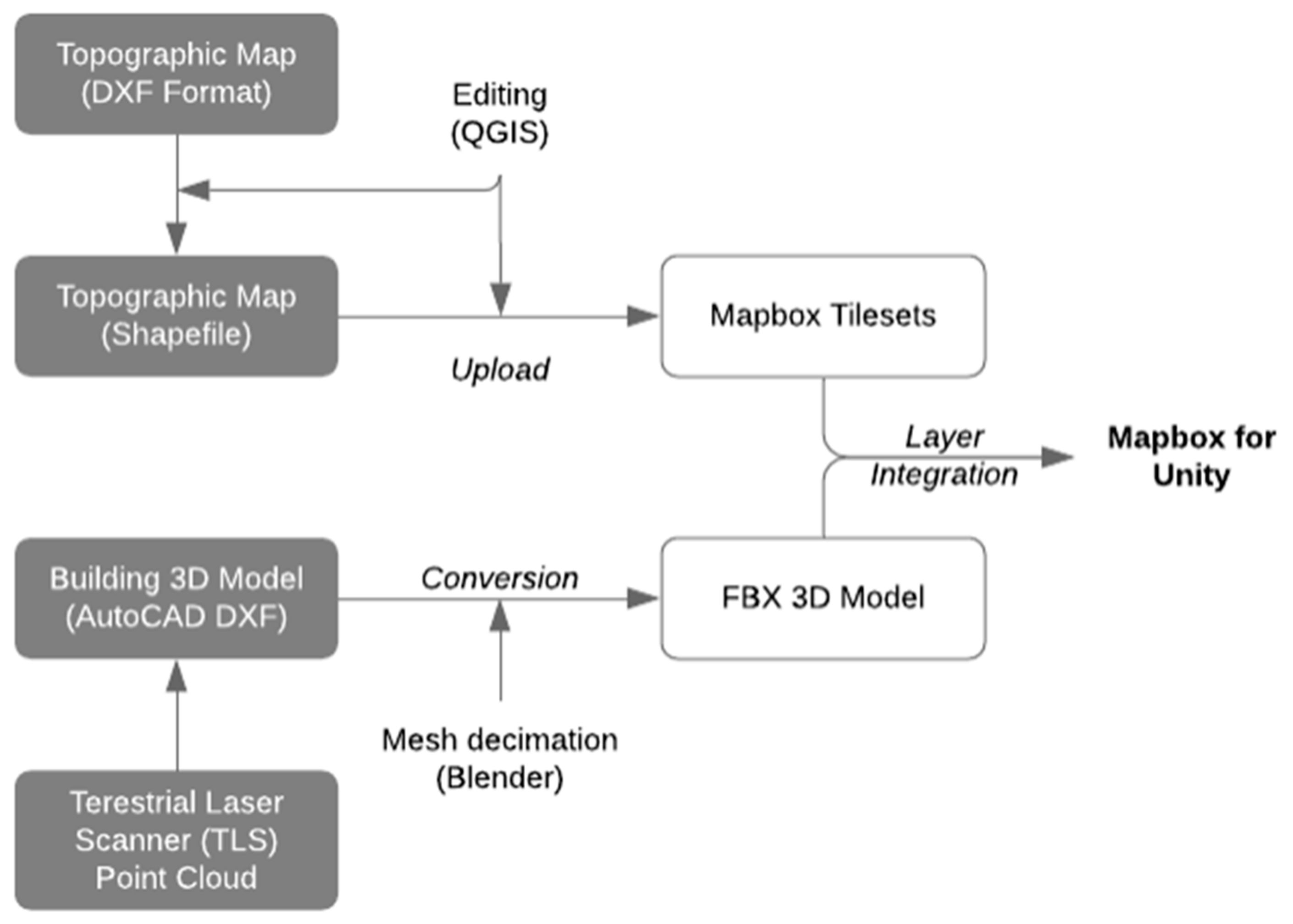

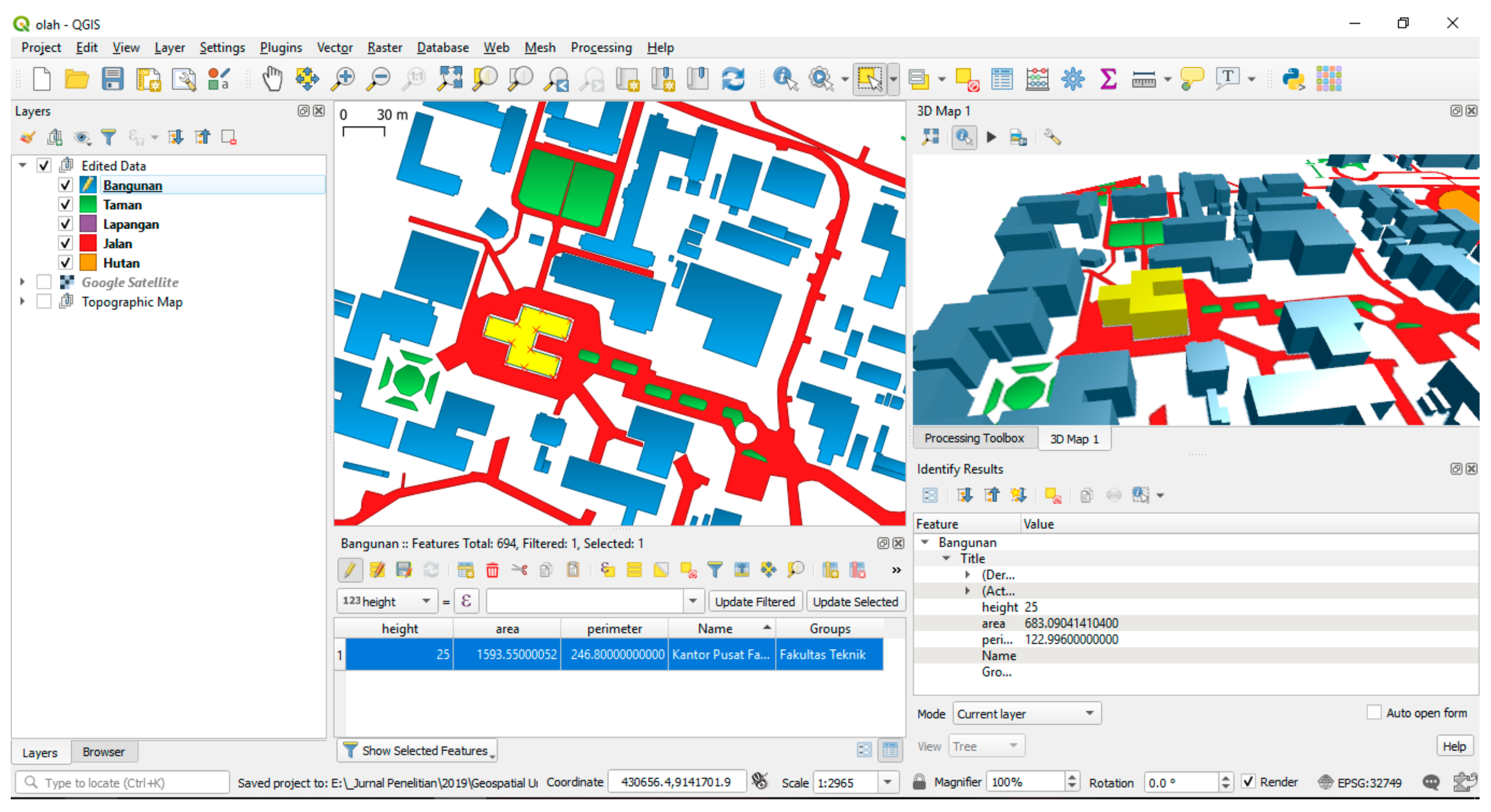

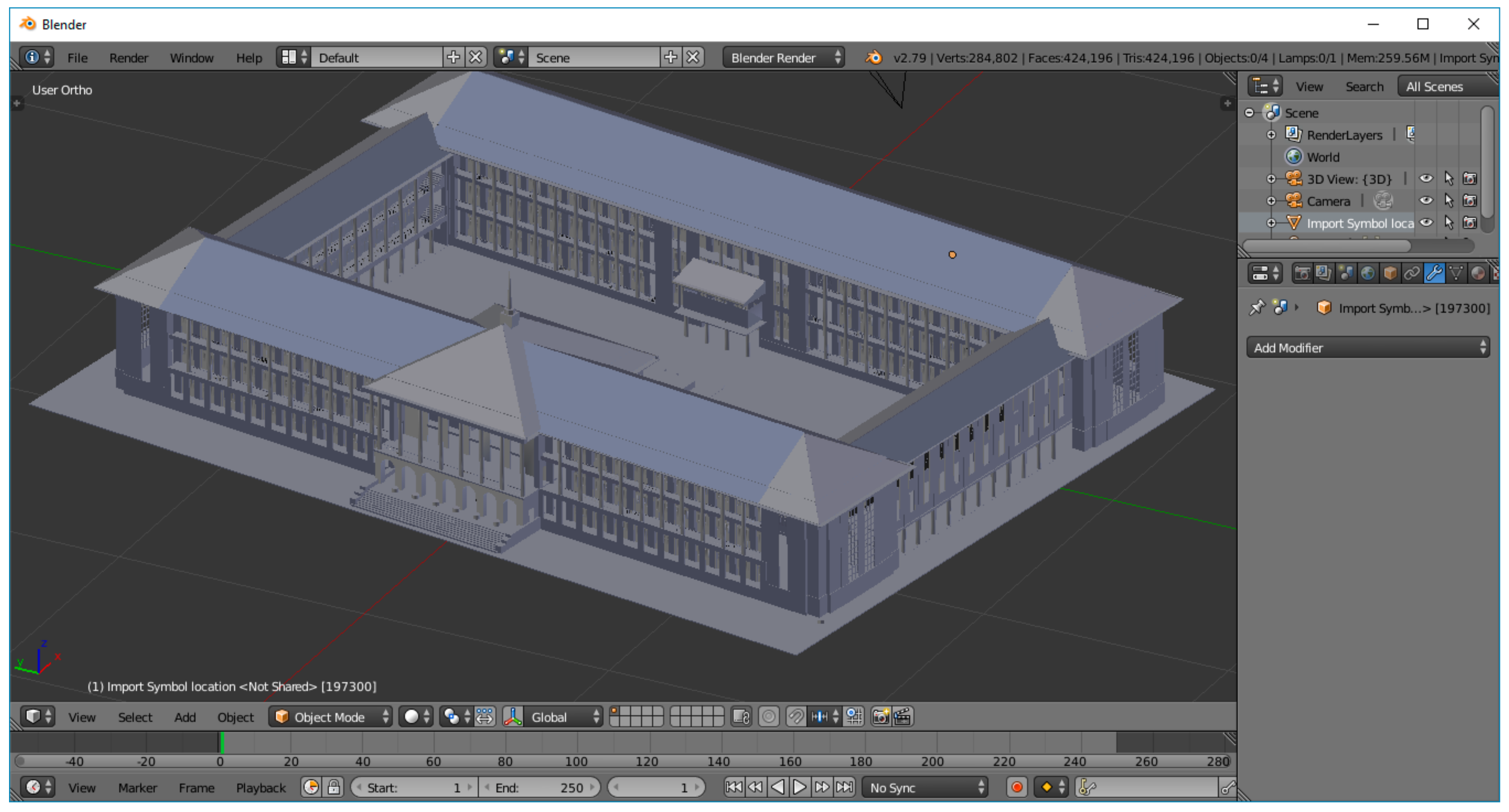

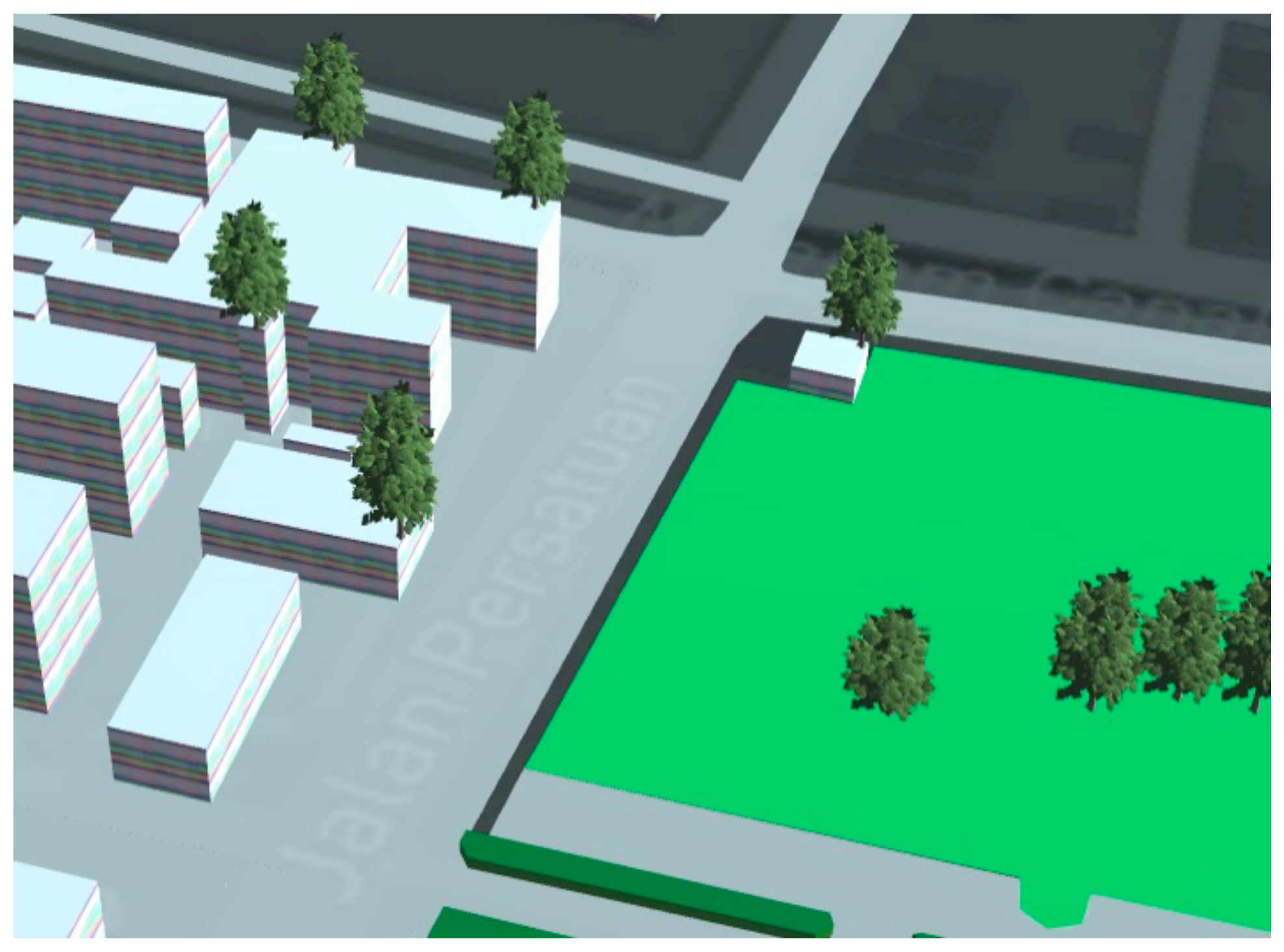

3.1. Data Integration

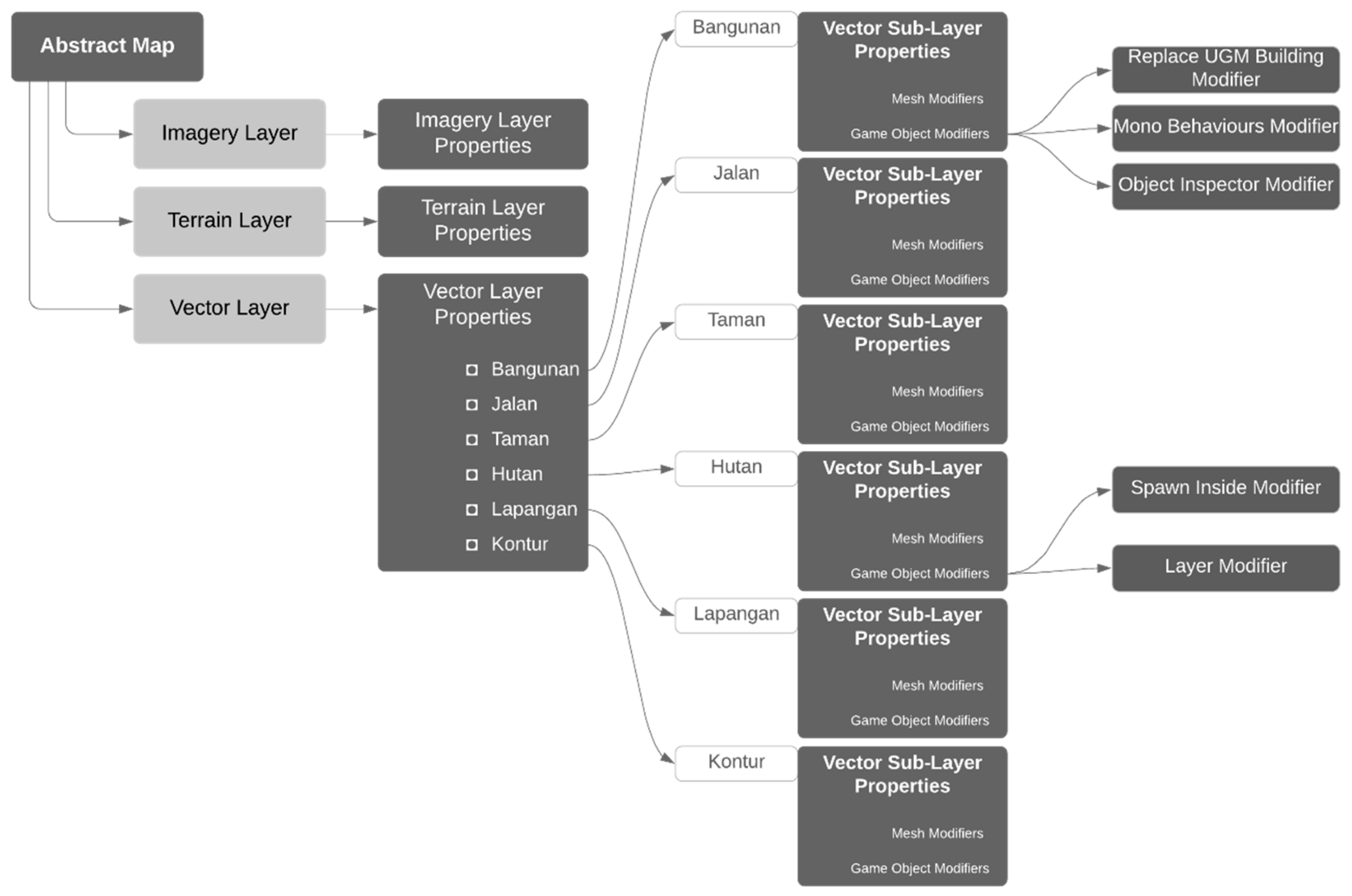

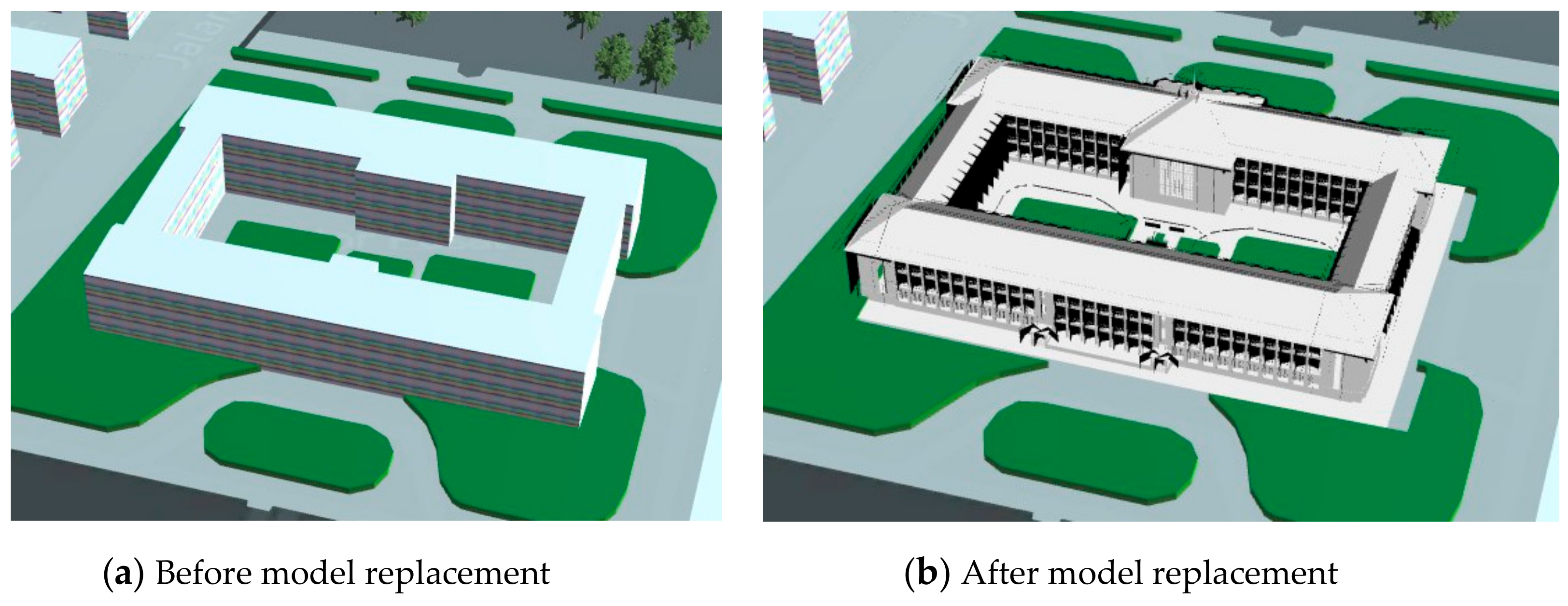

3.2. Visual Appearances and Attributes Information

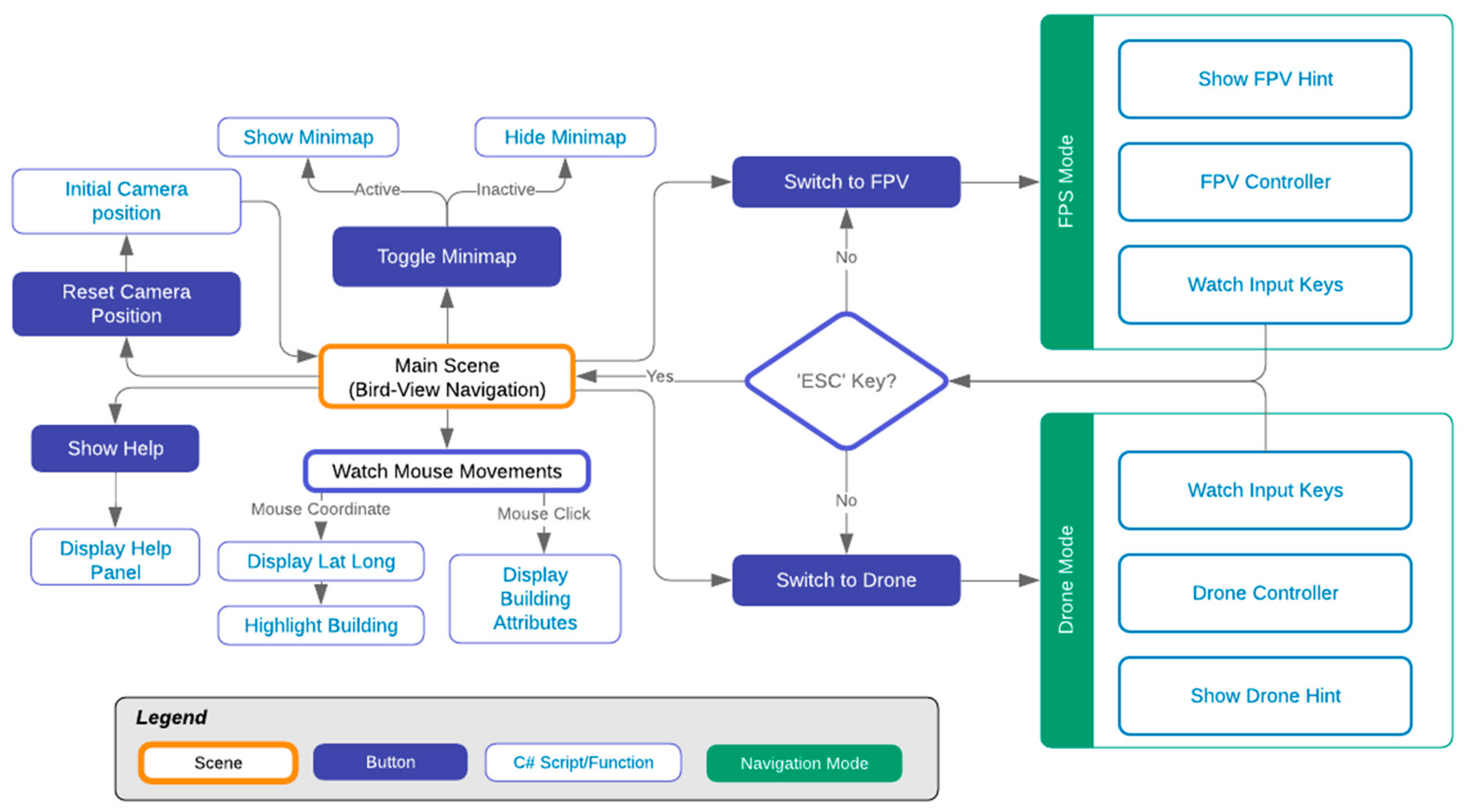

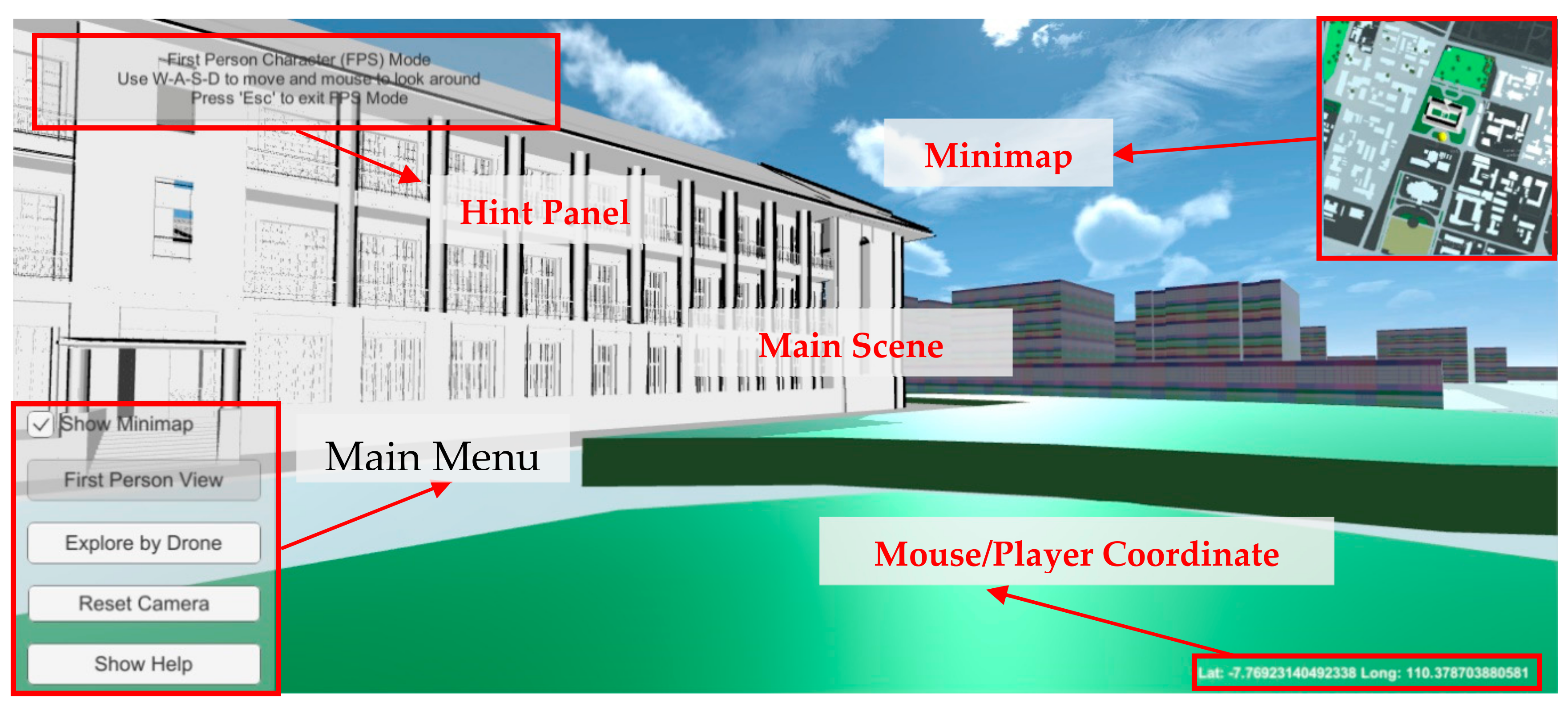

3.3. Map Interactions

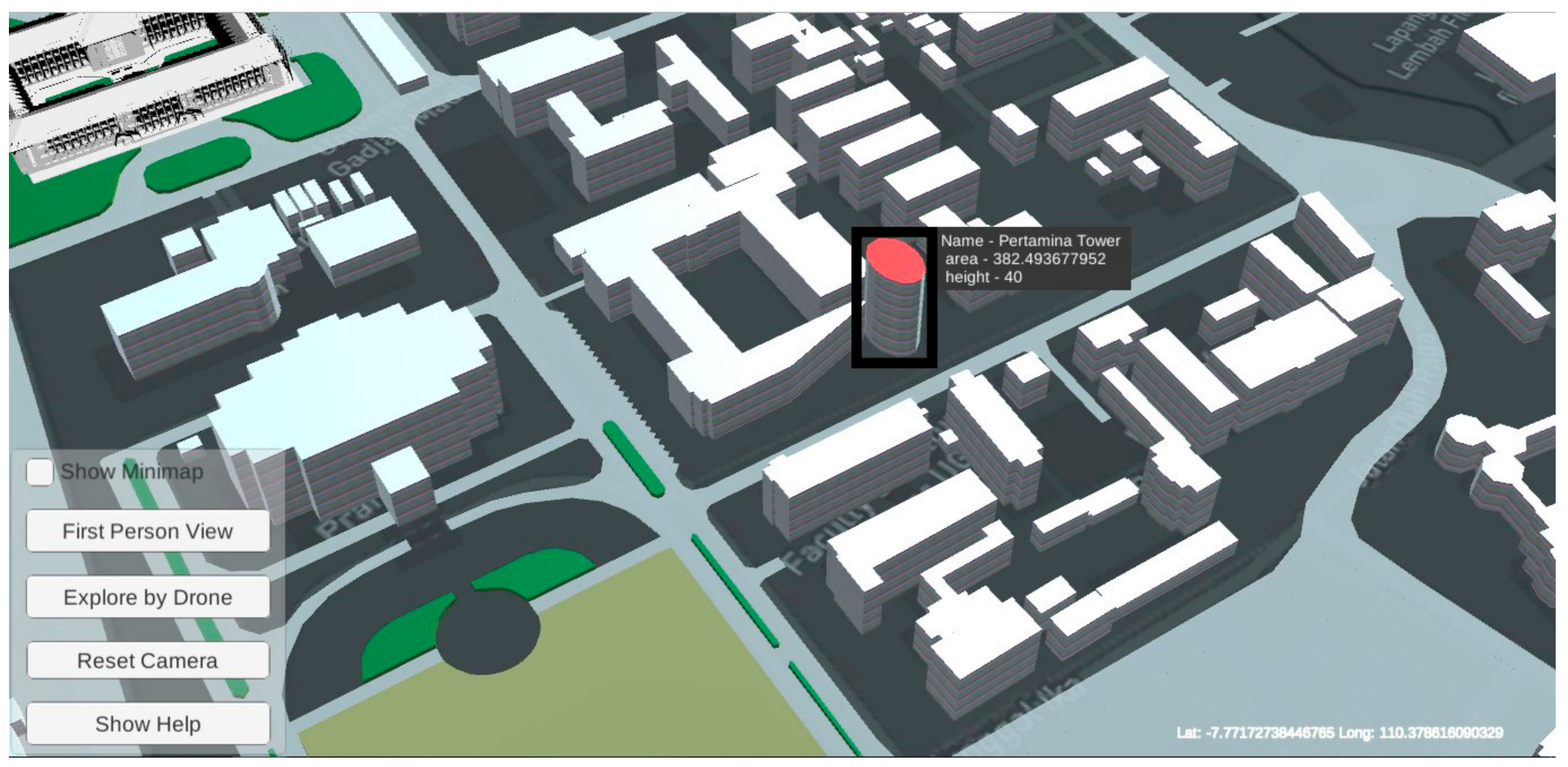

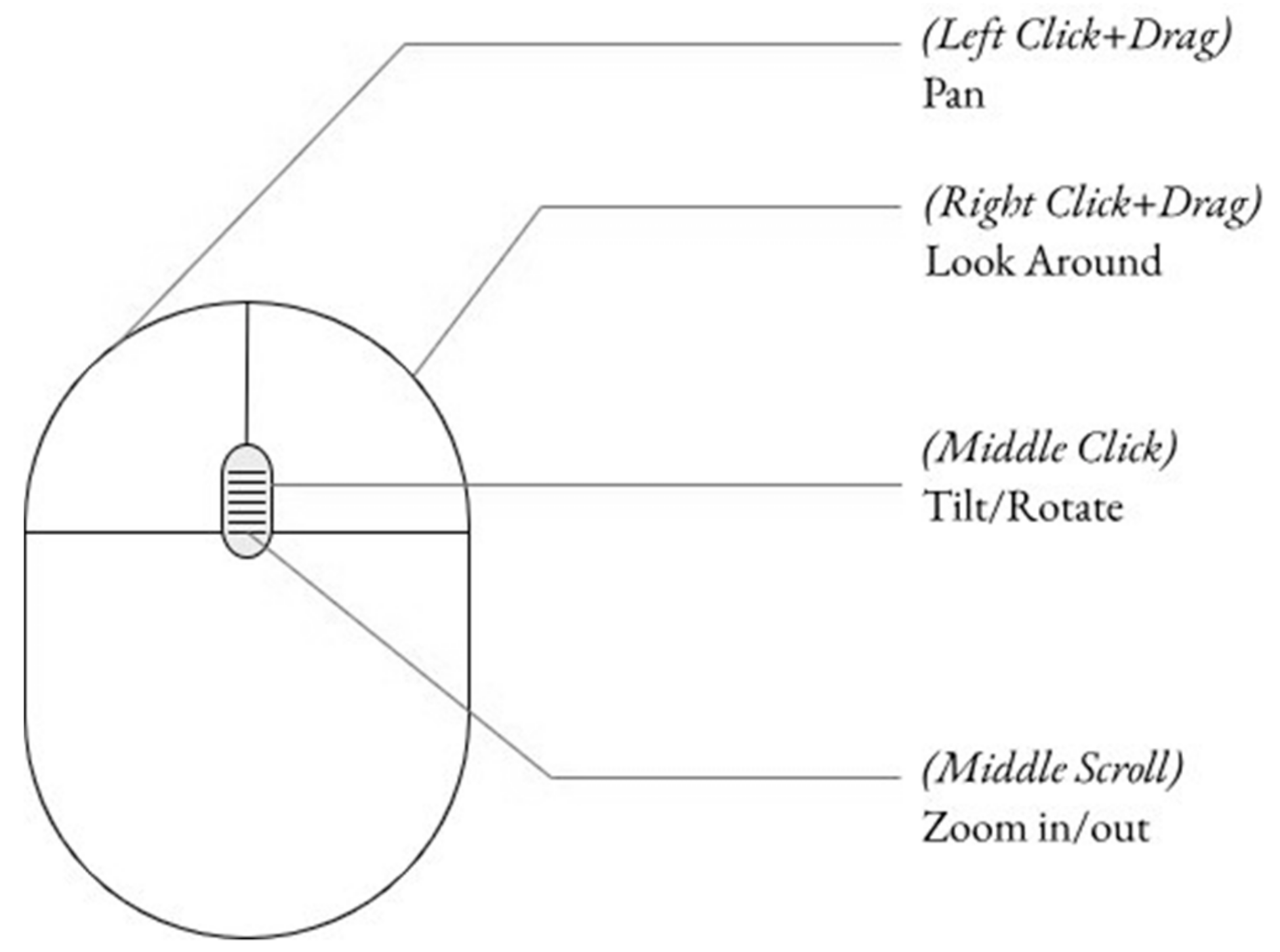

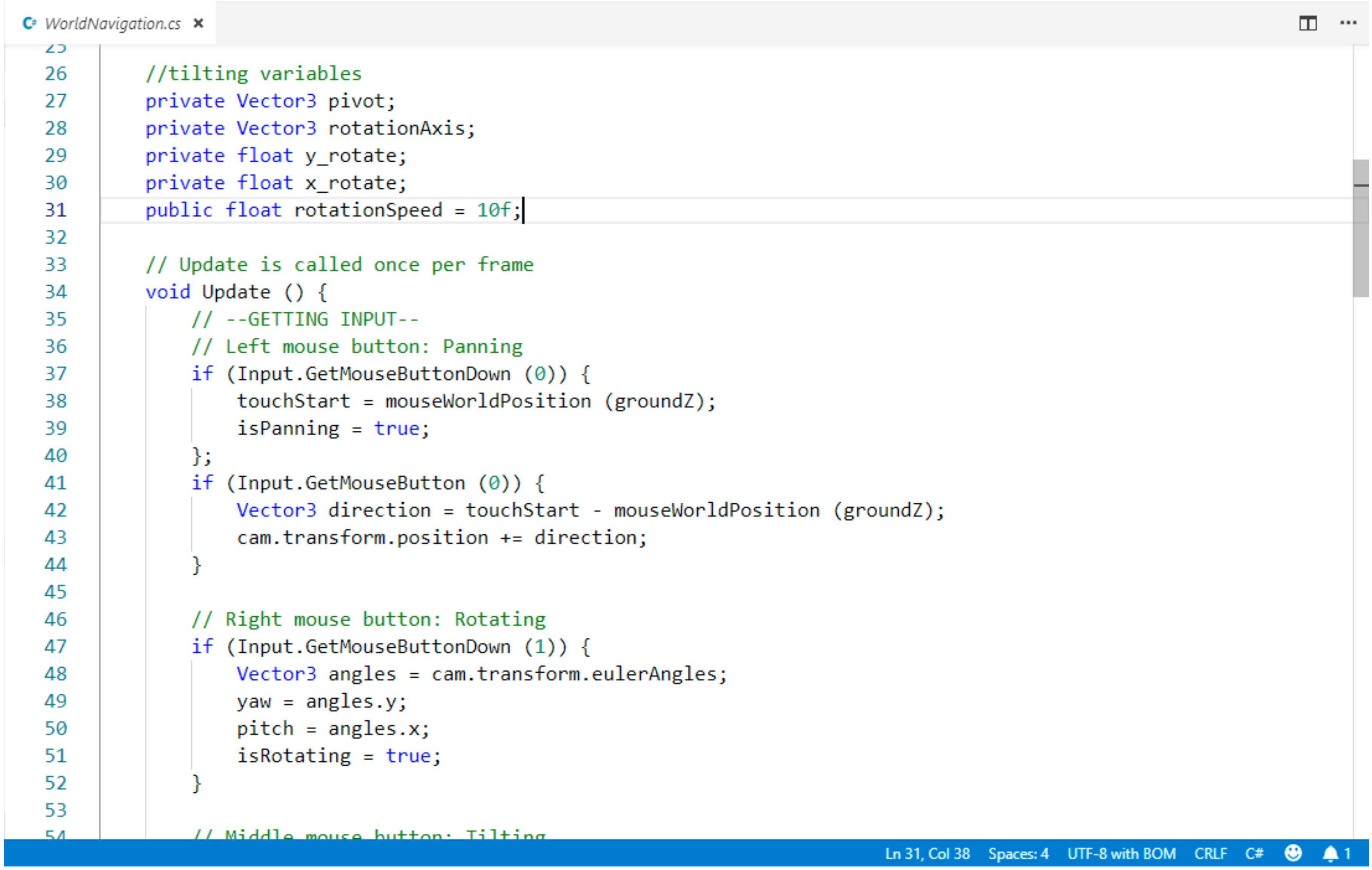

- Bird's-Eye View. Users control the camera's movement over the map: panning, rotating/tilting, looking around, and zooming in/out.

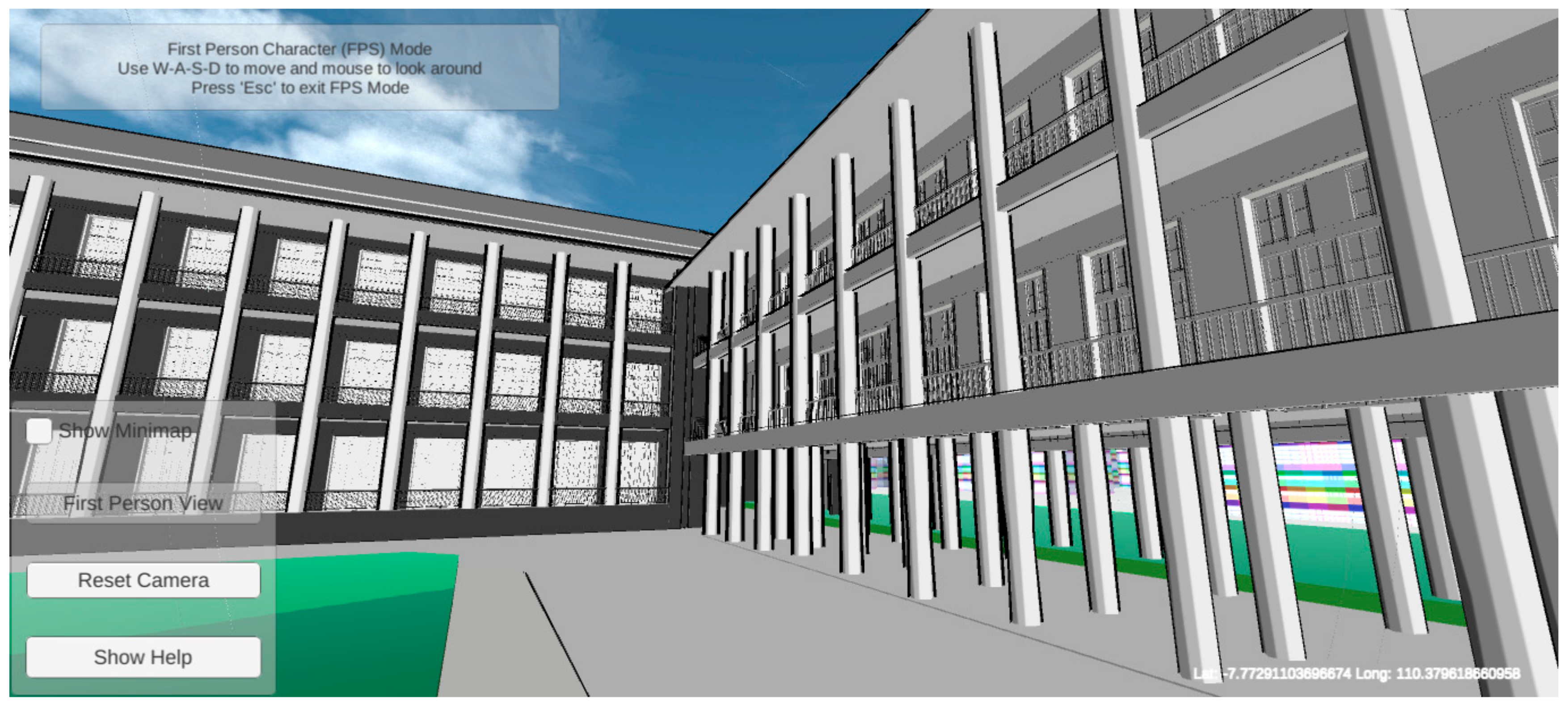

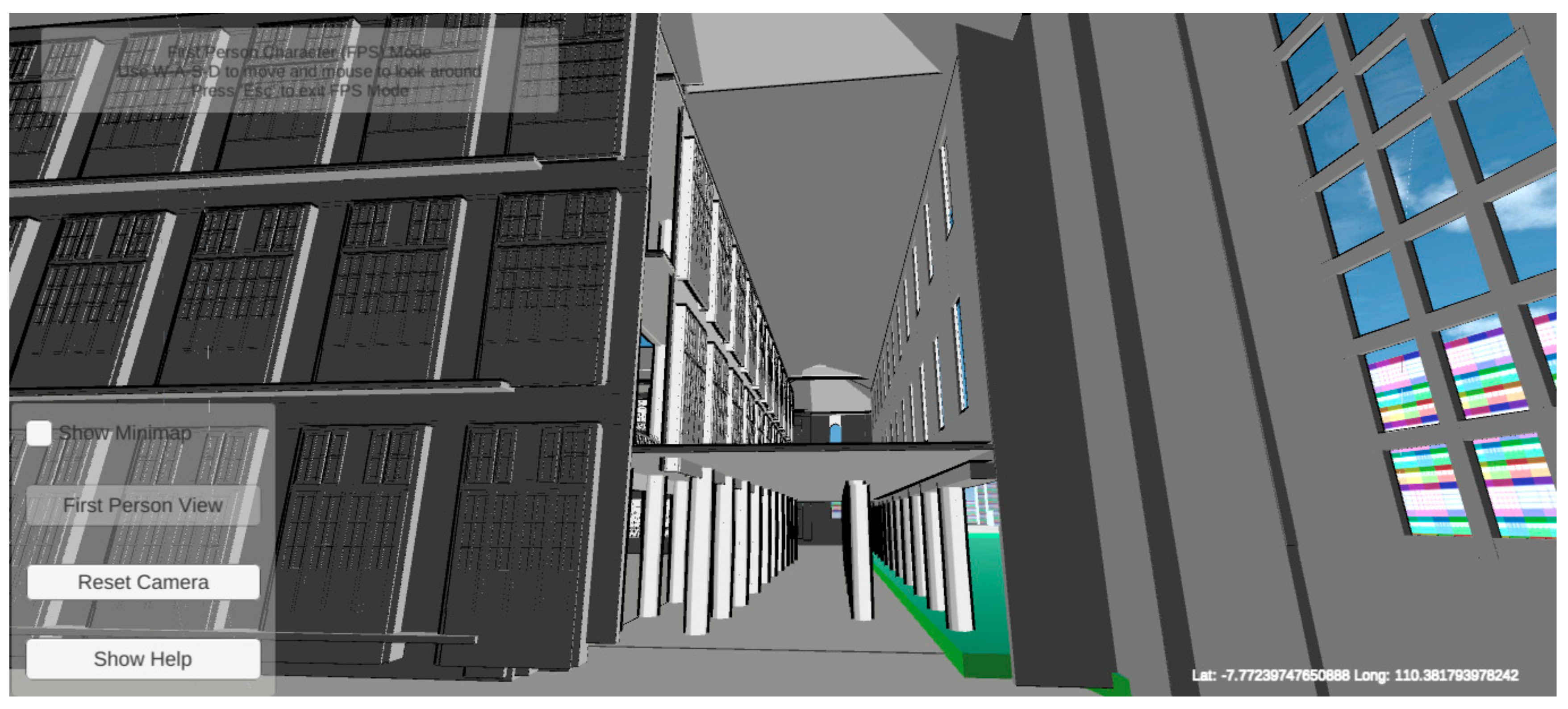

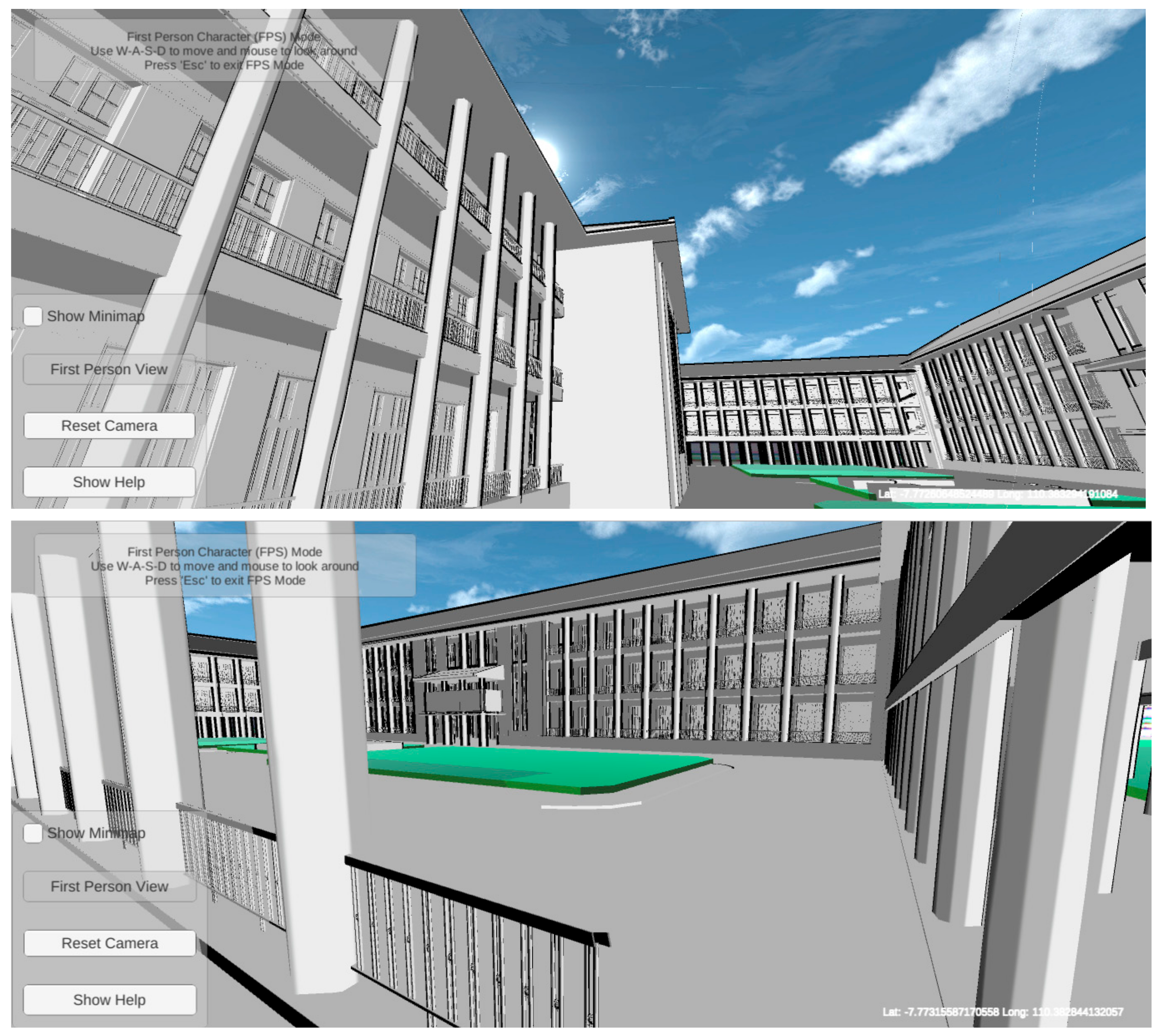
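
Bird's-eye controls like the ones listed above (panning, rotating/tilting, and zooming) are commonly implemented as an orbit camera that circles a target point on the map. The Python sketch below is a hypothetical illustration of that geometry, not the authors' Unity code. Several details are assumptions: the class name `OrbitCamera`, the Unity-style axis convention (x right, y up, z forward), the pitch limits of 5° to 89°, and the zoom limits.

```python
import math
from dataclasses import dataclass


@dataclass
class OrbitCamera:
    """Hypothetical bird's-eye camera orbiting a target point (y is up)."""
    target_x: float = 0.0
    target_z: float = 0.0
    yaw_deg: float = 0.0          # rotation around the vertical axis
    pitch_deg: float = 45.0       # tilt above the horizon
    distance: float = 100.0       # distance from the target (zoom level)
    min_distance: float = 10.0    # assumed zoom limits
    max_distance: float = 1000.0

    def pan(self, right: float, forward: float) -> None:
        """Move the target along the ground, relative to the current heading."""
        yaw = math.radians(self.yaw_deg)
        # Ground-plane forward is (sin yaw, cos yaw); right is (cos yaw, -sin yaw).
        self.target_x += forward * math.sin(yaw) + right * math.cos(yaw)
        self.target_z += forward * math.cos(yaw) - right * math.sin(yaw)

    def rotate(self, d_yaw: float, d_pitch: float) -> None:
        """Rotate around the target and tilt, keeping the camera above the ground."""
        self.yaw_deg = (self.yaw_deg + d_yaw) % 360.0
        self.pitch_deg = min(89.0, max(5.0, self.pitch_deg + d_pitch))

    def zoom(self, factor: float) -> None:
        """Scale the distance to the target (factor < 1 zooms in), within limits."""
        self.distance = min(self.max_distance,
                            max(self.min_distance, self.distance * factor))

    def position(self) -> tuple[float, float, float]:
        """Camera position in world coordinates; the camera looks at the target."""
        yaw = math.radians(self.yaw_deg)
        pitch = math.radians(self.pitch_deg)
        horizontal = self.distance * math.cos(pitch)
        x = self.target_x - horizontal * math.sin(yaw)
        y = self.distance * math.sin(pitch)
        z = self.target_z - horizontal * math.cos(yaw)
        return (x, y, z)
```

Consider `cam.rotate(90, 0)` followed by `cam.pan(0, 10)`. This moves the target 10 units along +x, because the rotation turns "forward" to point along +x. The pitch is clamped below 90° to keep the camera from sitting directly overhead. In that position, a look-at rotation computed with a vertical up vector becomes undefined.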

- FPV. The camera is attached to the player character, and users control its movement freely.

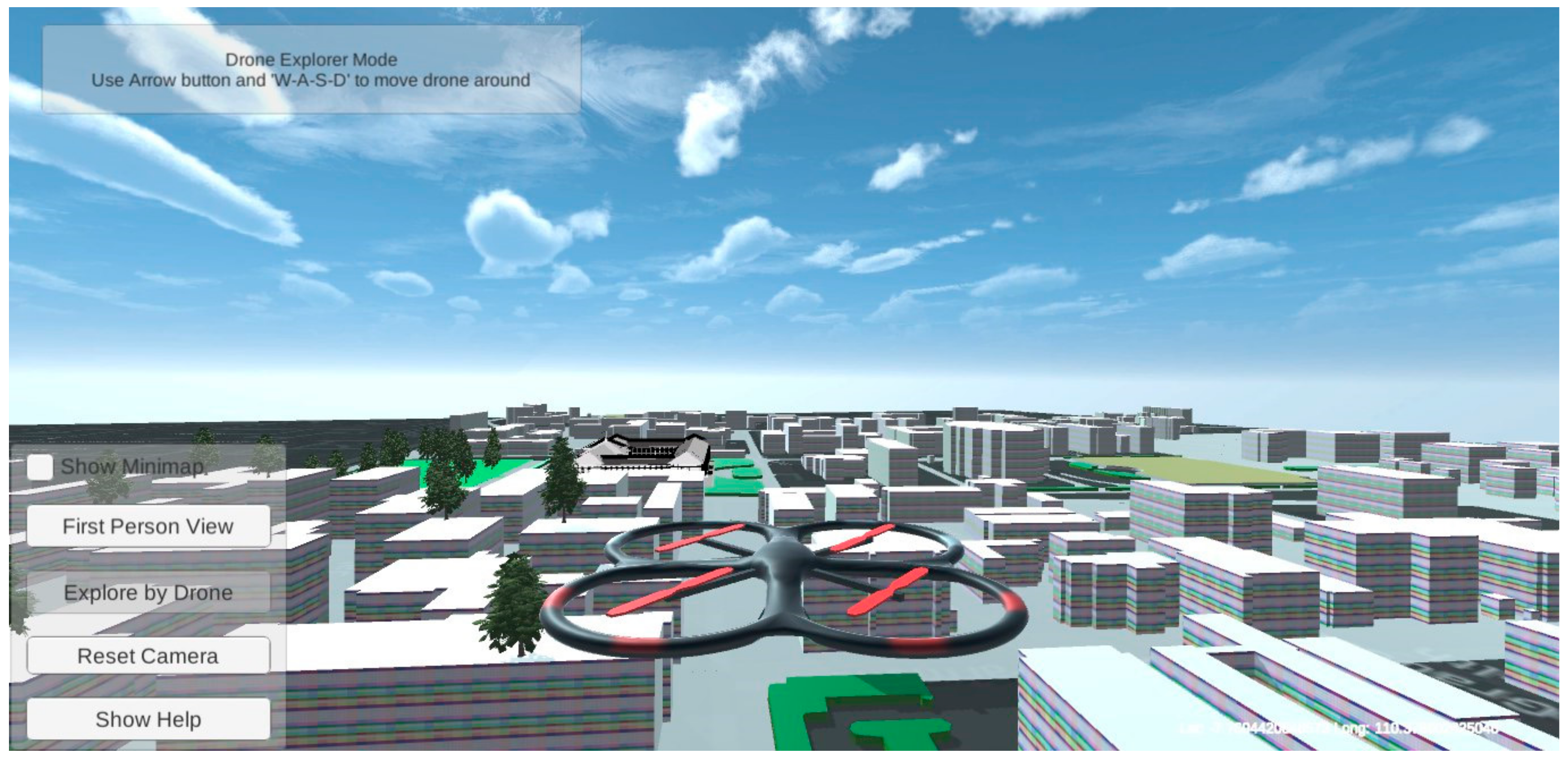
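
In a first-person mode the camera moves with the player. Each frame, keyboard input is converted into a displacement relative to the player's heading, and the camera is kept at eye level above the terrain. The function below is a minimal sketch under stated assumptions. The name `fpv_step`, the 1.7 m default eye height, and the `ground_height` callback (standing in for a terrain height query) are all hypothetical. Collisions with buildings are ignored.

```python
import math
from typing import Callable


def fpv_step(x: float, z: float, yaw_deg: float,
             move_forward: float, move_right: float,
             speed: float, dt: float,
             ground_height: Callable[[float, float], float],
             eye_height: float = 1.7) -> tuple[float, float, float]:
    """Advance a hypothetical first-person player by one frame.

    move_forward and move_right are input axes in [-1, 1] (e.g. W/S and D/A).
    Returns the new (x, y, z) camera position, with y set to eye height above
    the terrain so that the player walks on the surface.
    """
    # Normalize diagonal input so that moving diagonally is not faster.
    length = math.hypot(move_forward, move_right)
    if length > 1.0:
        move_forward /= length
        move_right /= length
    yaw = math.radians(yaw_deg)
    step = speed * dt
    x += step * (move_forward * math.sin(yaw) + move_right * math.cos(yaw))
    z += step * (move_forward * math.cos(yaw) - move_right * math.sin(yaw))
    y = ground_height(x, z) + eye_height
    return (x, y, z)
```

With a flat `ground_height` of `lambda x, z: 0.0`, one half-second step forward at 5 units/s moves the player 2.5 units along +z. The camera stays at height 1.7.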

- Drone View. The user controls a quadcopter drone to explore the scene.

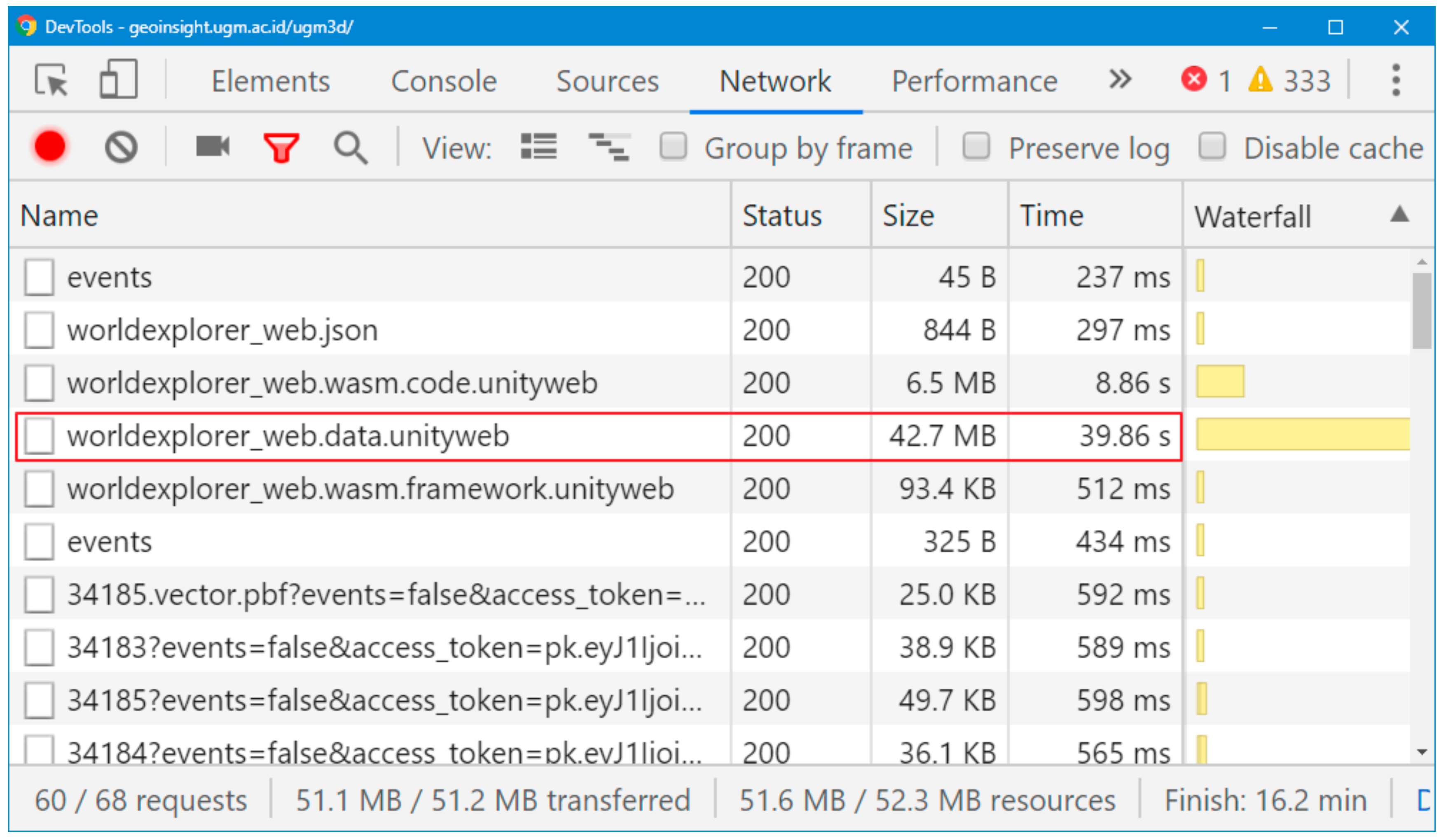
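
A drone view can be approximated with arcade-style kinematics rather than full quadcopter physics. The sketch below is hypothetical, and the following details are illustrative assumptions:

- the names `DroneState` and `drone_step`
- the speed and climb limits
- the minimum clearance above the terrain
- the stick mapping (throttle, yaw, pitch, roll), which follows a common remote-controller layout rather than anything specified in the text

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class DroneState:
    x: float = 0.0
    y: float = 50.0       # altitude
    z: float = 0.0
    yaw_deg: float = 0.0  # heading


def drone_step(s: DroneState, throttle: float, yaw_input: float,
               pitch_input: float, roll_input: float, dt: float,
               ground_height: Callable[[float, float], float],
               max_speed: float = 15.0, max_climb: float = 5.0,
               max_yaw_rate: float = 90.0, clearance: float = 2.0) -> DroneState:
    """Arcade-style quadcopter update (hypothetical; no aerodynamics).

    All inputs are stick axes in [-1, 1]: throttle climbs or descends, yaw turns,
    pitch flies forward/backward and roll strafes left/right.
    """
    s.yaw_deg = (s.yaw_deg + yaw_input * max_yaw_rate * dt) % 360.0
    yaw = math.radians(s.yaw_deg)
    s.x += max_speed * dt * (pitch_input * math.sin(yaw) + roll_input * math.cos(yaw))
    s.z += max_speed * dt * (pitch_input * math.cos(yaw) - roll_input * math.sin(yaw))
    s.y += throttle * max_climb * dt
    # Keep a minimum clearance above the terrain so the drone cannot fly underground.
    s.y = max(s.y, ground_height(s.x, s.z) + clearance)
    return s
```

Unlike the walking player, the drone keeps its altitude independently of the terrain. The terrain only acts as a lower bound.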

4. Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Text | Description |
|---|---|---|
| API | Application Programming Interface | A set of routines that specifies how specific functions of a software component are used |
| DXF | AutoCAD Drawing Exchange Format | A binary or ASCII representation of a drawing file, often used to share drawing data between CAD programs |
| FBX | Autodesk FilmBoX | A 3D asset exchange format that facilitates higher-fidelity 3D data exchange |
| FPV | First-Person View | An interactive visualization in which the player experiences the virtual world through the eyes of a character |
| LiDAR | Light Detection and Ranging | A remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) to the Earth |
| LOD | Level of Detail | A number that specifies the level of detail of a 3D model representation [40] |
| SDK | Software Development Kit | A set of software creation tools for building application packages on a given framework |
| TLS | Terrestrial Laser Scanner | A terrestrial survey method that uses laser scanning to scan objects and record 3D point clouds; also known as topographic LiDAR |
| UGM | Universitas Gadjah Mada | University campus in Yogyakarta, Indonesia |

Appendix A

Appendix B

References

1. Farman, J. Mapping the digital empire: Google Earth and the process of postmodern cartography. New Media Soc. 2010, 12, 869–888.

2. Yu, L.; Gong, P. Google Earth as a virtual globe tool for Earth science applications at the global scale: Progress and perspectives. Int. J. Remote Sens. 2012, 33, 3966–3986.

3. Boschetti, L.; Roy, D.P.; Justice, C.O. Using NASA’s World Wind virtual globe for interactive internet visualization of the global MODIS burned area product. Int. J. Remote Sens. 2008, 29, 3067–3072.

4. Chaturvedi, K.; Kolbe, T.H. Dynamizers: Modeling and implementing dynamic properties for semantic 3D city models. Proc. Eurographics Work. Urban Data Model. Vis. 2015, 43–48.

5. Brovelli, M.A.; Minghini, M.; Moreno-Sánchez, R. Free and open source software for geospatial applications (FOSS4G) to support Future Earth. Int. J. Digit. Earth 2017, 10, 386–404.

6. Tully, D.; El Rhalibi, A.; Carter, C.; Sudirman, S.; Rhalibi, A. Hybrid 3D Rendering of Large Map Data for Crisis Management. ISPRS Int. J. Geo Inf. 2015, 4, 1033–1054.

7. Virtanen, J.; Hyyppä, H.; Kämäräinen, A.; Hollström, T.; Vastaranta, M.; Hyyppä, J. Intelligent Open Data 3D Maps in a Collaborative Virtual World. ISPRS Int. J. Geo Inf. 2015, 4, 837–857.

8. Lin, H.; Chen, M.; Lu, G. Virtual Geographic Environment: A Workspace for Computer-Aided Geographic Experiments. Ann. Assoc. Am. Geogr. 2013, 103, 465–482.

9. Trenholme, D.; Smith, S.P. Computer game engines for developing first-person virtual environments. Virtual Real. 2008, 12, 181–187.

10. Natephra, W.; Motamedi, A.; Fukuda, T.; Yabuki, N. Integrating building information modeling and virtual reality development engines for building indoor lighting design. Vis. Eng. 2017, 5, 21.

11. Rua, H.; Alvito, P. Living the past: 3D models, virtual reality and game engines as tools for supporting archaeology and the reconstruction of cultural heritage – the case-study of the Roman villa of Casal de Freiria. J. Archaeol. Sci. 2011, 38, 3296–3308.

12. Indraprastha, A.; Shinozaki, M. The Investigation on Using Unity3D Game Engine in Urban Design Study. ITB J. Inf. Commun. Technol. 2009, 3, 1–18.

13. Rafiee, A.; Van Der Male, P.; Dias, E.; Scholten, H. Interactive 3D geodesign tool for multidisciplinary wind turbine planning. J. Environ. Manag. 2018, 205, 107–124.

14. Trubka, R.; Glackin, S.; Lade, O.; Pettit, C. A web-based 3D visualisation and assessment system for urban precinct scenario modelling. ISPRS J. Photogramm. Remote Sens. 2016, 117, 175–186.

15. Susi, T.; Johannesson, M.; Backlund, P. Serious Games: An Overview; Institutionen för kommunikation och information: Skövde, Sweden, 2007.

16. Wahyudin, D.; Hasegawa, S. The Role of Serious Games in Disaster and Safety Education: An Integrative Review. In Proceedings of the 25th International Conference on Computers in Education; Asia-Pacific Society for Computers in Education: Christchurch, New Zealand, 2017; pp. 180–190.

17. Aditya, T.; Laksono, D. Geogame on the peat: Designing effective gameplay in geogames app for haze mitigation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 5–10.

18. Mat, R.C.; Shariff, A.R.M.; Zulkifli, A.N.; Rahim, M.S.M.; Mahayudin, M.H. Using game engine for 3D terrain visualisation of GIS data: A review. IOP Conf. Ser. Earth Environ. Sci. 2014, 20, 012037.

19. Reyes, M.E.P.; Chen, S.C. A 3D Virtual Environment for Storm Surge Flooding Animation. In Proceedings of the 2017 IEEE Third International Conference on Multimedia Big Data (BigMM), Laguna Hills, CA, USA, 19–21 April 2017; pp. 244–245.

20. Evangelidis, K.; Papadopoulos, L.; Papatheodorou, K.; Mastorokostas, P.; Hilas, C. Geospatial Visualizations: Animation and Motion Effects on Spatial Objects. Comput. Geosci. 2018, 111, 200–212.

21. Thöny, M.; Schnürer, R.; Sieber, R.; Hurni, L.; Pajarola, R. Storytelling in Interactive 3D Geographic Visualization Systems. ISPRS Int. J. Geo Inf. 2018, 7, 123.

22. Tsai, Y.T.; Jhu, W.Y.; Chen, C.C.; Kao, C.H.; Chen, C.Y. Unity game engine: Interactive software design using digital glove for virtual reality baseball pitch training. Microsyst. Technol. 2019, 9, 1–17.

23. Alatalo, T.; Pouke, M.; Koskela, T.; Hurskainen, T.; Florea, C.; Ojala, T. Two real-world case studies on 3D web applications for participatory urban planning. In Proceedings of the 22nd International Conference on 3D Web Technology, Brisbane, Australia, 5–7 June 2017.

24. Lee, W.L.; Tsai, M.H.; Yang, C.H.; Juang, J.R.; Su, J.Y. V3DM+: BIM interactive collaboration system for facility management. Vis. Eng. 2016, 4, 5.

25. Dutton, C. Correctly and accurately combining normal maps in 3D engines. Comput. Games J. 2013, 2, 41–54.

26. Julin, A.; Jaalama, K.; Virtanen, J.P.; Pouke, M.; Ylipulli, J.; Vaaja, M.; Hyyppa, J.; Hyyppä, H. Characterizing 3D City Modeling Projects: Towards a Harmonized Interoperable System. ISPRS Int. J. Geo Inf. 2018, 7, 55.

27. Petridis, P.; Dunwell, I.; Panzoli, D.; Arnab, S.; Protopsaltis, A.; Hendrix, M.; Freitas, S.; De Freitas, S. Game Engines Selection Framework for High-Fidelity Serious Applications. Int. J. Interact. Worlds 2012, 2012, 1–19.

28. Bayburt, S.; Baskaraca, A.P.; Karim, H.; Rahman, A.A.; Buyuksalih, I. 3D Modelling And Visualization Based On The Unity Game Engine—Advantages And Challenges. ISPRS Ann. Photogramm. Remote. Sens. Spat. Inf. Sci. 2017, W4, 161–166.

29. Unity3D. Skybox Series. 2019. Available online: https://assetstore.unity.com/packages/2d/textures-materials/sky/skybox-series-free-103633 (accessed on 28 March 2019).

30. Unity3D. Unity Scripting API: Physics Raycast. Available online: https://docs.unity3d.com/ScriptReference/Physics.Raycast.html (accessed on 12 May 2019).

31. Unity3D. Unity3D Standard Assets. 2019. Available online: https://assetstore.unity.com/packages/essentials/asset-packs/standard-assets-32351 (accessed on 19 March 2019).

32. Aditya, T.; Laksono, D.; Sutanta, H.; Izzahudin, N.; Susanta, F. A usability evaluation of a 3D map display for pedestrian navigation. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 3–10.

33. Liao, H.; Dong, W. An Exploratory Study Investigating Gender Effects on Using 3D Maps for Spatial Orientation in Wayfinding. ISPRS Int. J. Geo Inf. 2017, 6, 60.

34. Liao, H.; Dong, W.; Peng, C.; Liu, H. Exploring differences of visual attention in pedestrian navigation when using 2D maps and 3D. Cartogr. Geogr. Inf. Sci. 2017, 44, 474–490.

35. Halik, Ł. Challenges in Converting the Polish Topographic Database of Built-Up Areas into 3D Virtual Reality Geovisualization. Cartogr. J. 2018, 55, 391–399.

36. Bakri, H.; Allison, C. Measuring QoS in web-based virtual worlds. In Proceedings of the 8th International Workshop on Massively Multiuser Virtual Environments, Klagenfurt, Austria, 10–13 May 2016; pp. 1–6.

37. Belussi, A.; Migliorini, S. A Framework for Integrating Multi-accuracy Spatial Data in Geographical Applications. Geoinformatica 2012, 16, 523–561.

38. Caquard, S.; Cartwright, W. Narrative Cartography: From Mapping Stories to the Narrative of Maps and Mapping. Cartogr. J. 2014, 51, 101–106.

39. Cartwright, W.; Field, K. Exploring Cartographic Storytelling. Reflections on Mapping Real-life and Fictional Stories. In Proceedings of the International Cartographic Conference, Rio de Janeiro, Brazil, 23–28 August 2015.

40. Biljecki, F.; Ledoux, H.; Stoter, J. An improved LOD specification for 3D building models. Comput. Environ. Urban Syst. 2016, 59, 25–37.

| Layer | Texture | Custom Modifiers |
|---|---|---|
| Buildings | Mapbox top and side material | Highlight building on mouse-over; display building’s attributes; replace building with 3D model; set colliders |
| Roads | Mapbox light color with transparency | Remove colliders |
| Parks | Custom color (light green) | Remove colliders |
| City Forests | Custom color (green) | Spawn 3D tree model; set collider for trees |
| Fields | Custom color (brown) | Remove colliders |
| Contours | Custom color (light brown) | Remove colliders |
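
The per-layer styling in the layer table can be expressed as plain configuration data. The sketch below is hypothetical. The table names Mapbox materials, but this code does not use any Mapbox API. The identifiers `LayerStyle`, `LAYER_STYLES`, `is_collidable`, and `is_pickable`, and the snake_case modifier names, are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerStyle:
    texture: str
    modifiers: tuple[str, ...] = ()


# Hypothetical encoding of the layer table; modifier names are illustrative strings.
LAYER_STYLES: dict[str, LayerStyle] = {
    "Buildings": LayerStyle("Mapbox top and side material",
                            ("highlight_on_mouse_over", "display_attributes",
                             "replace_with_3d_model", "set_colliders")),
    "Roads": LayerStyle("Mapbox light color with transparency", ("remove_colliders",)),
    "Parks": LayerStyle("Custom color (light green)", ("remove_colliders",)),
    "City Forests": LayerStyle("Custom color (green)",
                               ("spawn_3d_tree_model", "set_tree_colliders")),
    "Fields": LayerStyle("Custom color (brown)", ("remove_colliders",)),
    "Contours": LayerStyle("Custom color (light brown)", ("remove_colliders",)),
}


def is_collidable(layer: str) -> bool:
    """True if the layer produces objects that block a walking player or a drone.

    Buildings keep their colliders; for city forests only the spawned trees get them.
    """
    mods = LAYER_STYLES[layer].modifiers
    return "set_colliders" in mods or "set_tree_colliders" in mods


def is_pickable(layer: str) -> bool:
    """True if hovering over a feature should highlight it and show its attributes."""
    return "highlight_on_mouse_over" in LAYER_STYLES[layer].modifiers
```

Under this reading of the table, only buildings and trees keep colliders. In a physics engine such as Unity's, raycasts and character collisions register only against objects that have colliders. Flat layers such as roads, parks, fields, and contours therefore stay purely visual. This is an interpretation of the table, not a description of the authors' implementation.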

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Laksono, D.; Aditya, T. Utilizing A Game Engine for Interactive 3D Topographic Data Visualization. ISPRS Int. J. Geo-Inf. 2019, 8, 361. https://doi.org/10.3390/ijgi8080361
