A High-Definition Road-Network Model for Self-Driving Vehicles

Abstract

1. Introduction

2. Literature Review

- The road-network structure and the relationships between its layers should be complete, so that the model can be adapted to different applications and computations.

- The geometry, topology and attribute data of each road-network layer should be complete.

- The attribute data of road-network components should support dynamic storage and updating to meet the real-time requirements of self-driving.

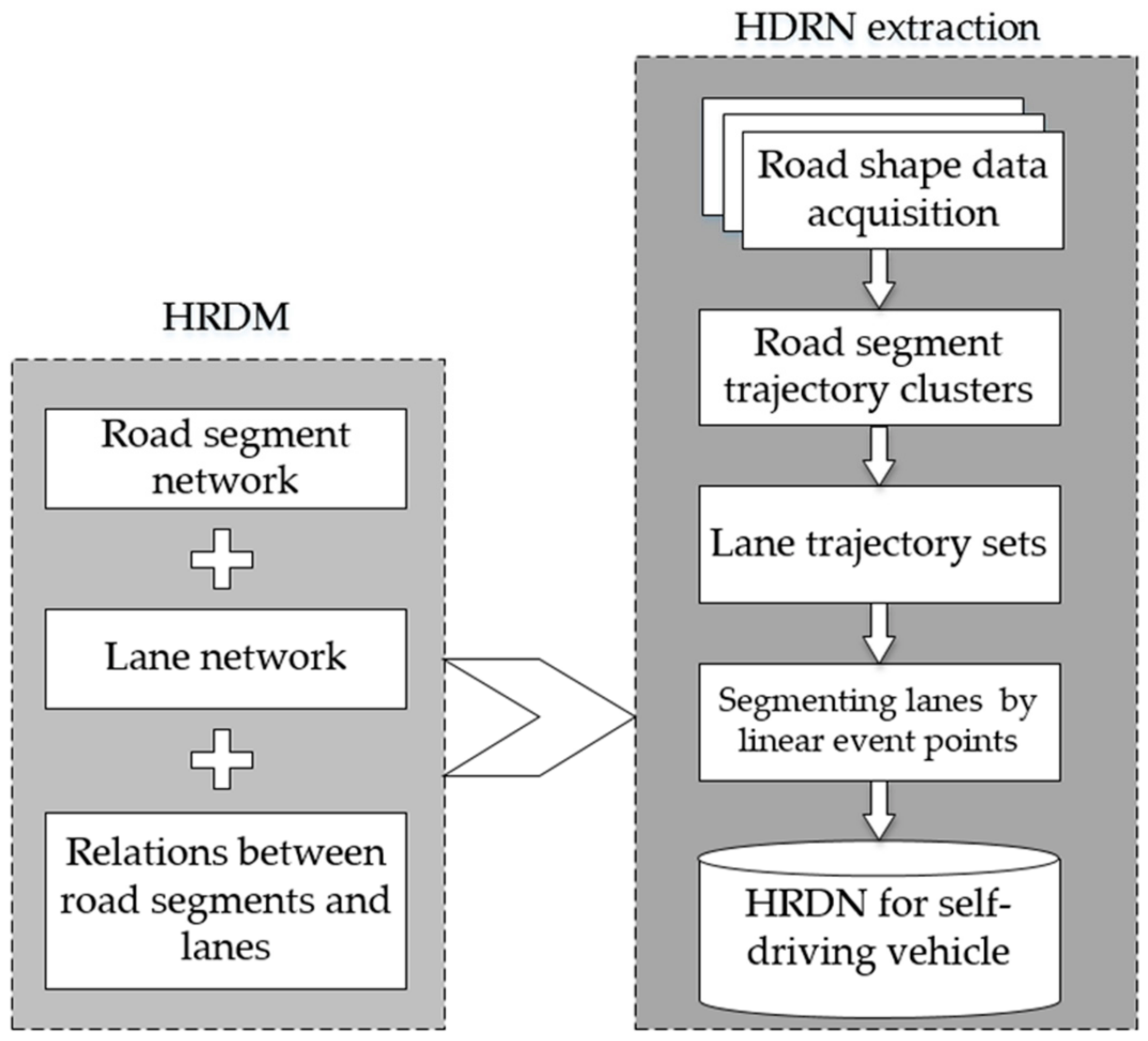

3. HDRNM for a Self-Driving Vehicle

- Complete road-network structure and layer-to-layer relationships

- Complete data for all road-network layers

- Attributes of road-network components that support dynamic storage and updating
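
The three properties above can be made concrete as a layered data structure. The following is a minimal Python sketch under our own assumptions, not the HDRNM schema itself: the class names `RoadNetwork`, `RoadSegment` and `Lane`, the `update_attribute` method and the `speed_limit_kmh` attribute are hypothetical. Geometry is stored as polylines, topology as successor IDs, and attributes in a mutable dictionary stamped with an update time so that they can change at run time.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # planar (x, y) coordinates in metres (assumed)


@dataclass
class Lane:
    lane_id: str
    geometry: List[Point]                                         # geometry layer: centerline polyline
    successors: List[str] = field(default_factory=list)           # topology layer: downstream lane IDs
    attributes: Dict[str, object] = field(default_factory=dict)   # attribute layer
    updated_at: float = 0.0                                       # time of the last attribute update

    def update_attribute(self, key: str, value: object) -> None:
        """Store or overwrite an attribute and record when it changed."""
        self.attributes[key] = value
        self.updated_at = time.time()


@dataclass
class RoadSegment:
    segment_id: str
    geometry: List[Point]                                  # road reference line
    lanes: Dict[str, Lane] = field(default_factory=dict)   # segment-to-lane layer relationship
    successors: List[str] = field(default_factory=list)    # segment-level topology

    def add_lane(self, lane: Lane) -> None:
        self.lanes[lane.lane_id] = lane


@dataclass
class RoadNetwork:
    segments: Dict[str, RoadSegment] = field(default_factory=dict)

    def add_segment(self, segment: RoadSegment) -> None:
        self.segments[segment.segment_id] = segment

    def lane(self, segment_id: str, lane_id: str) -> Lane:
        return self.segments[segment_id].lanes[lane_id]


# Usage: one straight 100 m segment with a single lane whose speed limit is updated.
net = RoadNetwork()
seg = RoadSegment("A", [(0.0, 0.0), (100.0, 0.0)])
seg.add_lane(Lane("A-1", [(0.0, 1.75), (100.0, 1.75)]))
net.add_segment(seg)
net.lane("A", "A-1").update_attribute("speed_limit_kmh", 60)
```

Keeping attributes in a per-lane dictionary separates the slowly changing geometry and topology from values that may be refreshed continuously while driving.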

4. HDRNM Automatic Construction Method

4.1. Extracting Segment Direction and Sets of Points with the PCA Algorithm

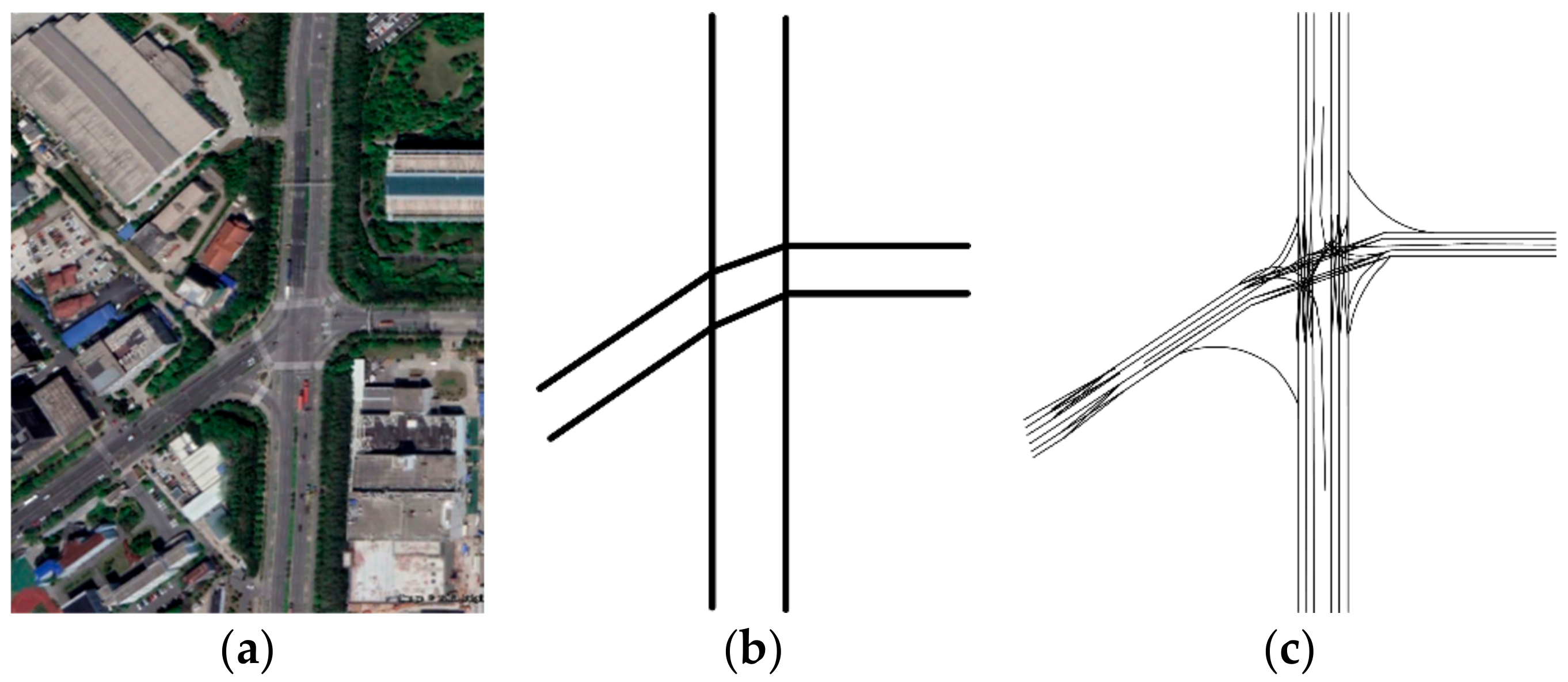
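
The body of this subsection is not reproduced here, but its title names the technique: principal component analysis (PCA) of each segment's points yields the segment's primary direction, along which the points can be ordered. The following is a minimal sketch of that idea for planar points, using the closed-form 2-D eigenvector instead of a general solver. The function names are hypothetical, and orienting the axis from the first to the last recorded point is our assumption, not a detail taken from the paper.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _mean(points: List[Point]) -> Point:
    n = len(points)
    return sum(p[0] for p in points) / n, sum(p[1] for p in points) / n


def principal_direction(points: List[Point]) -> Tuple[float, float]:
    """Unit vector of the first principal component of a 2-D point set.

    For the covariance matrix [[sxx, sxy], [sxy, syy]], the eigenvector with
    the larger eigenvalue makes the angle 0.5 * atan2(2*sxy, sxx - syy) with
    the x-axis, so no general eigen-solver is needed in 2-D.
    """
    mx, my = _mean(points)
    n = len(points)
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    ux, uy = math.cos(theta), math.sin(theta)
    # An eigenvector's sign is arbitrary; orient it from the first toward the
    # last recorded point (assumes the points were logged in driving order).
    dx, dy = points[-1][0] - points[0][0], points[-1][1] - points[0][1]
    if ux * dx + uy * dy < 0:
        ux, uy = -ux, -uy
    return ux, uy


def sort_along_segment(points: List[Point]) -> Tuple[List[Point], float]:
    """Order points by their projection on the principal axis.

    Returns the ordered points and the primary direction as a heading in
    degrees, measured counter-clockwise from the x-axis.
    """
    ux, uy = principal_direction(points)
    mx, my = _mean(points)
    ordered = sorted(points, key=lambda p: (p[0] - mx) * ux + (p[1] - my) * uy)
    return ordered, math.degrees(math.atan2(uy, ux))
```

For example, `sort_along_segment([(0, 0), (2, 2.1), (1, 0.9), (3, 3)])` reorders the points as (0, 0), (1, 0.9), (2, 2.1), (3, 3) and returns a heading close to 45°.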

4.2. Lane-Network Construction under Multi-Directional Constraints

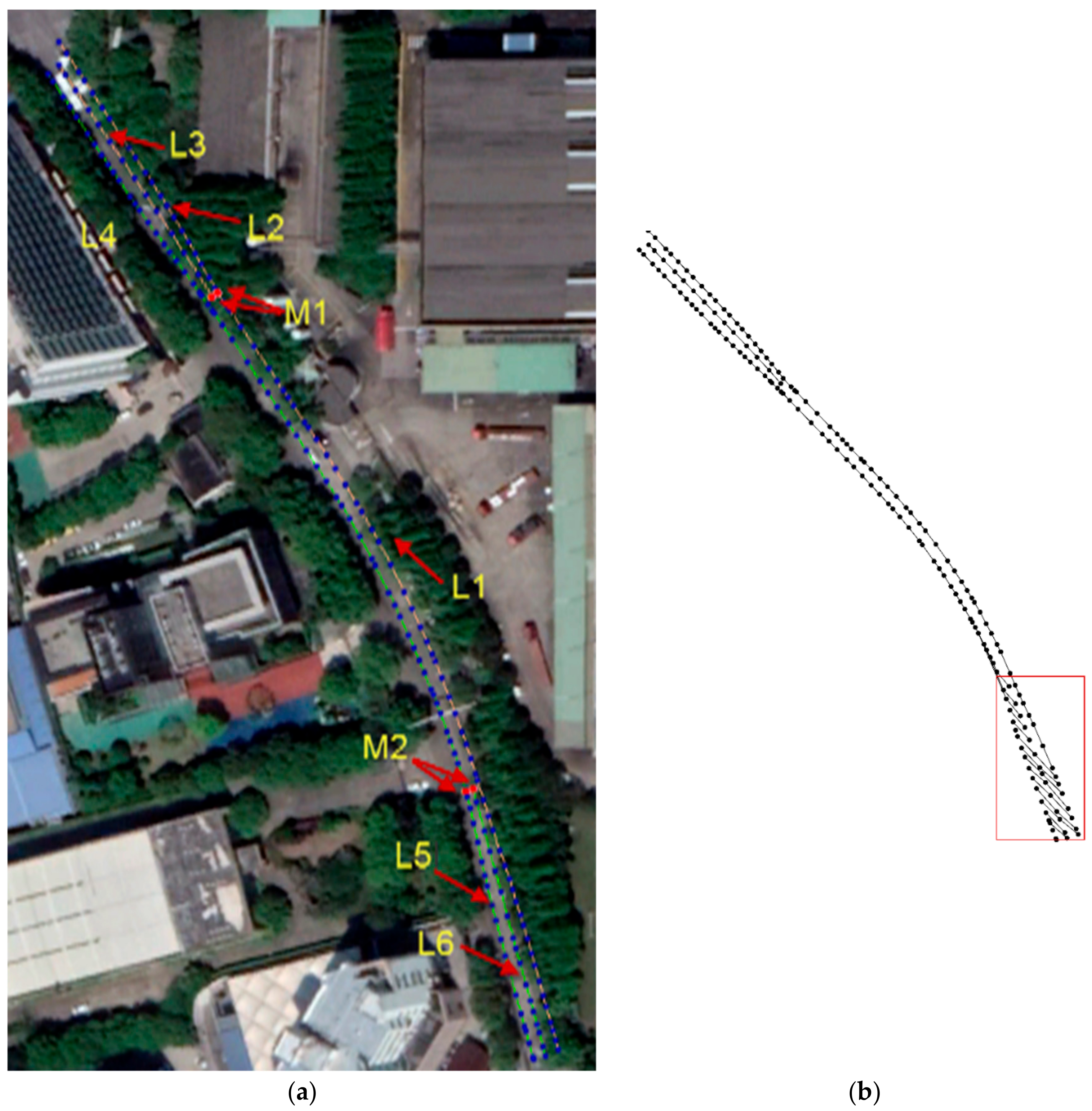

- From the results of the previous step, we obtained, for each road segment, points ordered along the segment direction and their angles relative to the primary direction.

- First, we began tracking each road segment from its first point and traversed the remaining points, giving priority to the main direction. We then calculated the angle between the current point and each traversed point; when the angle was within the threshold σ, we considered the two points to lie on the same lane.

- We repeated this tracking loop until all points had been traversed.

- We calculated the length of each lane along the road direction and determined the linear measure of each linear event point on the road segment.
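
One possible reading of the tracking loop described above is sketched below in Python. We interpret "the angle between the current point and the traversed point" as the angle between the line joining the two points and the main direction. The function name `track_lanes` and the greedy assignment, in which each point joins the first lane that accepts it, are assumptions rather than the authors' exact procedure.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def track_lanes(ordered: List[Point], heading_deg: float, sigma_deg: float) -> List[List[Point]]:
    """Greedily group points, pre-sorted along the main direction, into lanes.

    A traversed point joins the lane being tracked when the line from the
    lane's current end point to it deviates from the main direction by at
    most sigma_deg; the loop repeats until every point has been assigned.
    """
    hx = math.cos(math.radians(heading_deg))
    hy = math.sin(math.radians(heading_deg))
    remaining = list(ordered)
    lanes: List[List[Point]] = []
    while remaining:
        current = remaining.pop(0)        # first untraversed point seeds a new lane
        lane = [current]
        leftover: List[Point] = []
        for p in remaining:
            vx, vy = p[0] - current[0], p[1] - current[1]
            # Unsigned angle between (vx, vy) and the main direction, in [0, 180];
            # a coincident point gives atan2(0, 0) = 0 and therefore joins the lane.
            angle = math.degrees(math.atan2(abs(hx * vy - hy * vx), hx * vx + hy * vy))
            if angle <= sigma_deg:
                lane.append(p)
                current = p               # continue tracking from the newly added point
            else:
                leftover.append(p)
        lanes.append(lane)
        remaining = leftover
    return lanes


# Two parallel rows of points 3.5 m apart, sorted along a main direction of 0 degrees.
points = [(0, 0), (5, 3.5), (10, 0), (15, 3.5), (20, 0), (25, 3.5), (30, 0)]
lanes = track_lanes(points, heading_deg=0.0, sigma_deg=10.0)
```

With σ = 10° the sketch separates the two rows into two lanes. Raising σ above the angle between points of neighbouring rows (about 35° in this example) would merge them into one lane, which illustrates why the choice of threshold matters.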

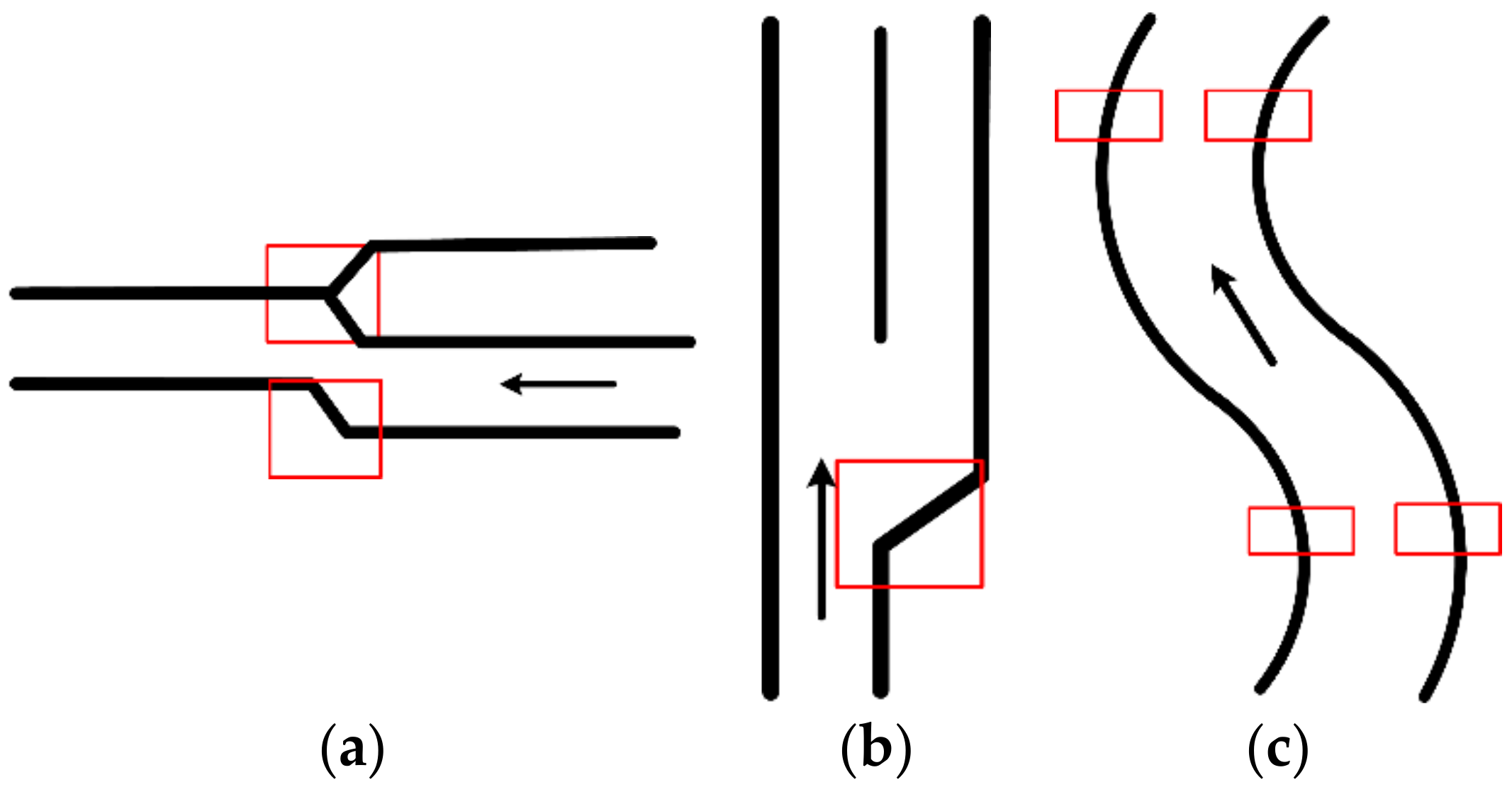
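
The lane-length and linear-measure step can be sketched as follows. This assumes that each lane and the road reference line are planar polylines, and that the linear measure of an event point is the distance along the reference line to the point's orthogonal projection, as in standard linear referencing. `polyline_length` and `linear_measure` are hypothetical helper names; `math.dist` requires Python 3.8 or later.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polyline_length(line: List[Point]) -> float:
    """Total length of a polyline, e.g. a lane centerline."""
    return sum(math.dist(a, b) for a, b in zip(line, line[1:]))


def linear_measure(line: List[Point], p: Point) -> float:
    """Distance along `line` to the closest orthogonal projection of `p`."""
    best_d, best_m = math.inf, 0.0
    start = 0.0                                   # cumulative length up to the current vertex
    for a, b in zip(line, line[1:]):
        seg = math.dist(a, b)
        if seg > 0:
            # Parameter of the projection of p onto segment a-b, clamped to [0, 1].
            t = ((p[0] - a[0]) * (b[0] - a[0]) + (p[1] - a[1]) * (b[1] - a[1])) / (seg * seg)
            t = max(0.0, min(1.0, t))
            qx, qy = a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])
            d = math.hypot(p[0] - qx, p[1] - qy)
            if d < best_d:
                best_d, best_m = d, start + t * seg
        start += seg
    return best_m


reference = [(0, 0), (30, 0), (30, 40)]
total = polyline_length(reference)               # 70.0
m = linear_measure(reference, (31, 20))          # projects onto the second leg
```

Here the point (31, 20) projects onto the second leg at (30, 20), so its measure is 30 + 20 = 50 m along the 70 m reference line.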

4.3. Generation of Road Network Based on Relationships between Linear Event Points

5. Experiments and Results

5.1. Data Collection

5.2. HDRNM Generation and Evaluation

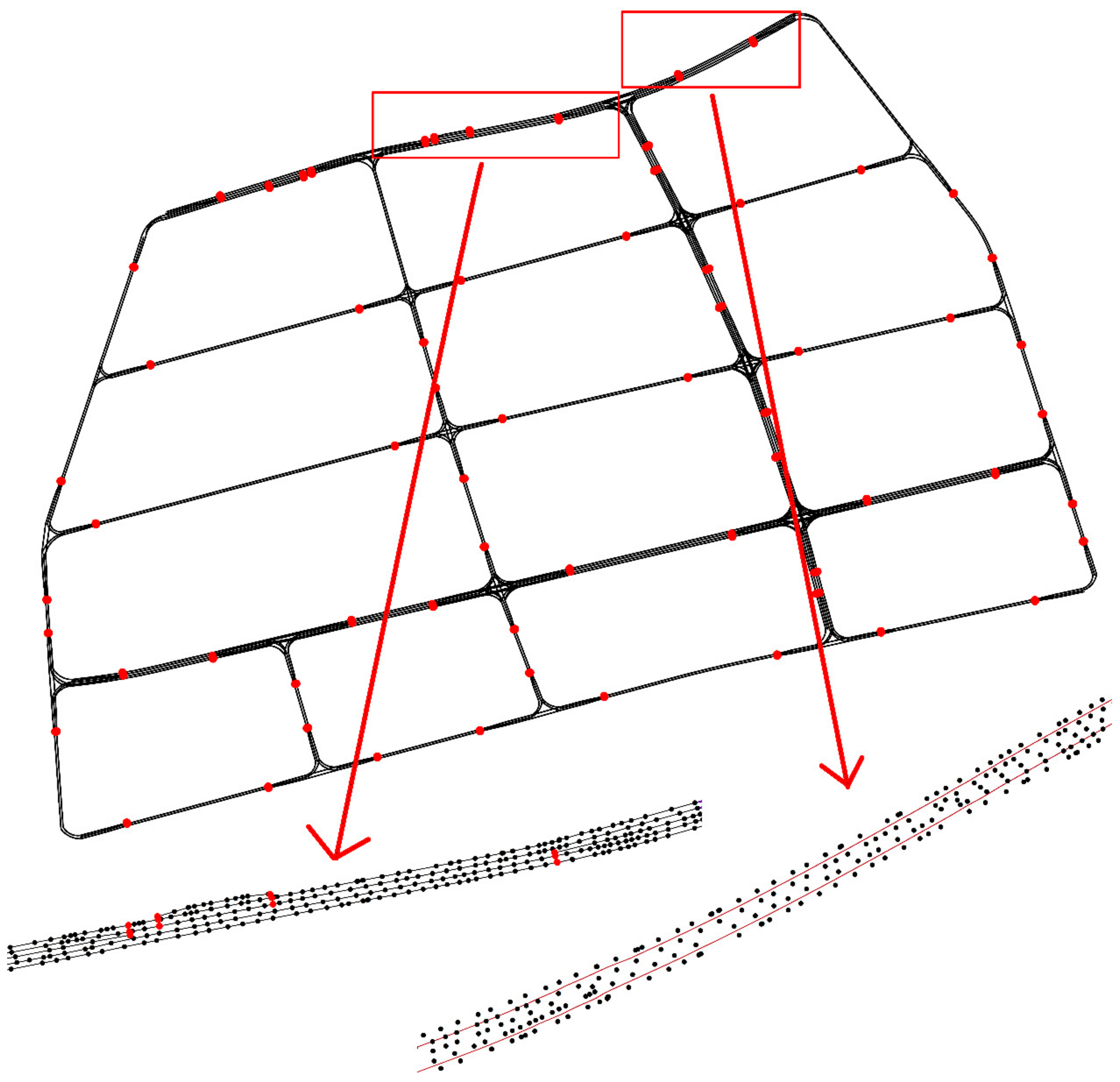

5.2.1. HDRNM Generation

5.2.2. Generation of Linear Event Points under Different Angle Thresholds

5.2.3. Evaluation of HDRNM Accuracy

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Road Segment | From Measure (m) | To Measure (m) | Number of Lanes | Lane Set |
|---|---|---|---|---|
| A | 0 | 30 | 2 | |
| A | 30 | 70 | 3 | |
| A | 70 | 100 | 2 | |
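
The table describes road segment A as three measure intervals, split at linear event points at 30 m and 70 m where the number of lanes changes. Assuming that each linear event point records its measure and the number of lanes that begins there, the interval rows can be derived as in the minimal sketch below (`split_by_events` is a hypothetical name, not part of the paper).

```python
from typing import List, Tuple

Row = Tuple[str, float, float, int]  # (segment, from measure, to measure, number of lanes)


def split_by_events(segment_id: str, length: float,
                    events: List[Tuple[float, int]]) -> List[Row]:
    """Turn linear event points into measure intervals along one road segment.

    `events` holds (measure_m, lanes_from_here) pairs; the first event must sit
    at measure 0 so that the whole segment is covered.
    """
    events = sorted(events)
    if not events or events[0][0] != 0:
        raise ValueError("the first linear event point must be at measure 0")
    rows: List[Row] = []
    for i, (start, lanes) in enumerate(events):
        # Each interval ends at the next event point, or at the segment end.
        end = events[i + 1][0] if i + 1 < len(events) else length
        rows.append((segment_id, start, end, lanes))
    return rows


print(split_by_events("A", 100, [(0, 2), (30, 3), (70, 2)]))
# → [('A', 0, 30, 2), ('A', 30, 70, 3), ('A', 70, 100, 2)]
```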

| Roll | Pitch | Heading | Frequency | Accuracy |
|---|---|---|---|---|
| 0.015° RMS | 0.015° RMS | 0.040° RMS | 200 Hz | 1 cm + 1 ppm |

| Resolution | Optionally Programmable | Electrical Interfaces | Diameter |
|---|---|---|---|
| Up to 16 bits | Yes | 5 and 24 V | 60 mm |

| Segment | Lane | Lane Length |
|---|---|---|
| R1 | | 6.48 m |
| R2 | | 6.22 m |

| Item | Actual Number | True Positives | False Positives | Precision |
|---|---|---|---|---|
| Road segment | 68 | 68 | 0 | 100% |
| Physically-connected, but unsegmented lane | 155 | 151 | 4 | 97.4% |
| Segmented lane | 248 | 240 | 12 | 95.2% |
| Total input coordinate points | 7559 | 7520 | 30 | 99.6% |
| Linear event point | 156 | 68 | 4 | 94% |
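
The precision column matches the usual definition, precision = TP / (TP + FP), which is also what scikit-learn's `precision_score` computes. This definition is inferred from the numbers rather than stated in this section. The short check below recomputes each row from the true-positive and false-positive counts; the actual number of items does not enter the formula, and the table rounds the linear-event-point value of 94.4% to 94%.

```python
def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP): the share of extracted items that are correct."""
    return tp / (tp + fp)


# (true positives, false positives) taken from the evaluation table
counts = {
    "Road segment": (68, 0),
    "Physically-connected, but unsegmented lane": (151, 4),
    "Segmented lane": (240, 12),
    "Total input coordinate points": (7520, 30),
    "Linear event point": (68, 4),
}

for item, (tp, fp) in counts.items():
    print(f"{item}: {precision(tp, fp):.1%}")
# Prints 100.0%, 97.4%, 95.2%, 99.6% and 94.4% for the five rows.
```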

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zheng, L.; Li, B.; Zhang, H.; Shan, Y.; Zhou, J. A High-Definition Road-Network Model for Self-Driving Vehicles. ISPRS Int. J. Geo-Inf. 2018, 7, 417. https://doi.org/10.3390/ijgi7110417