Spatial Data Sequence Selection Based on a User-Defined Condition Using GPGPU

Abstract

1. Introduction

2. State of the Art and Background

2.1. State of the Art

2.2. Background

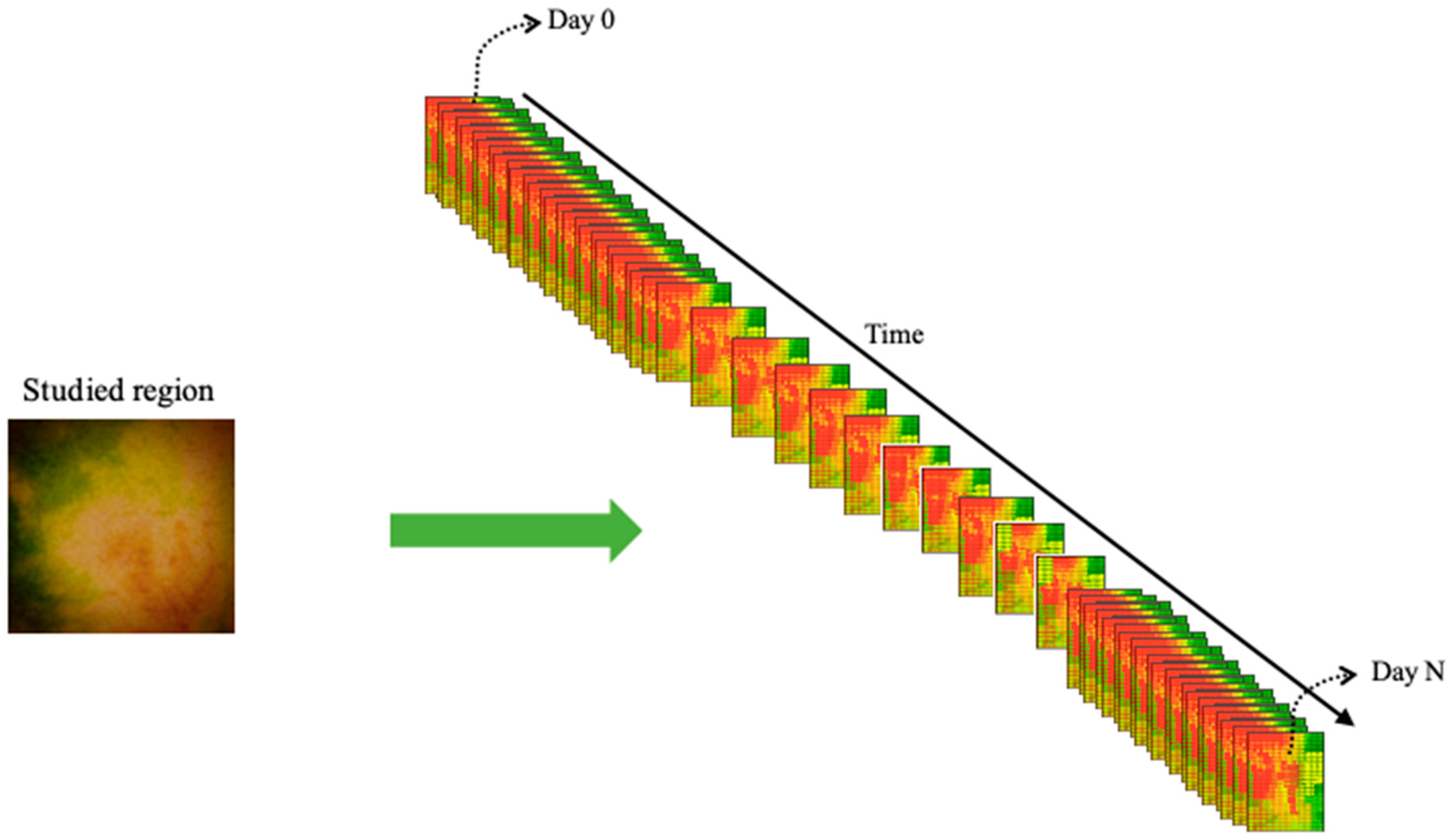

2.2.1. Spatio–Temporal Raster Data

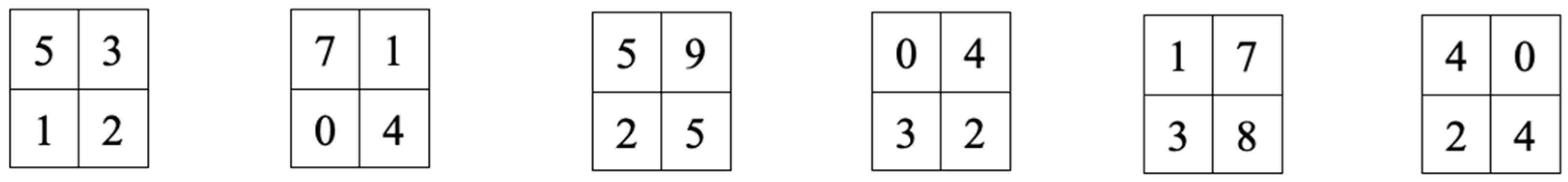

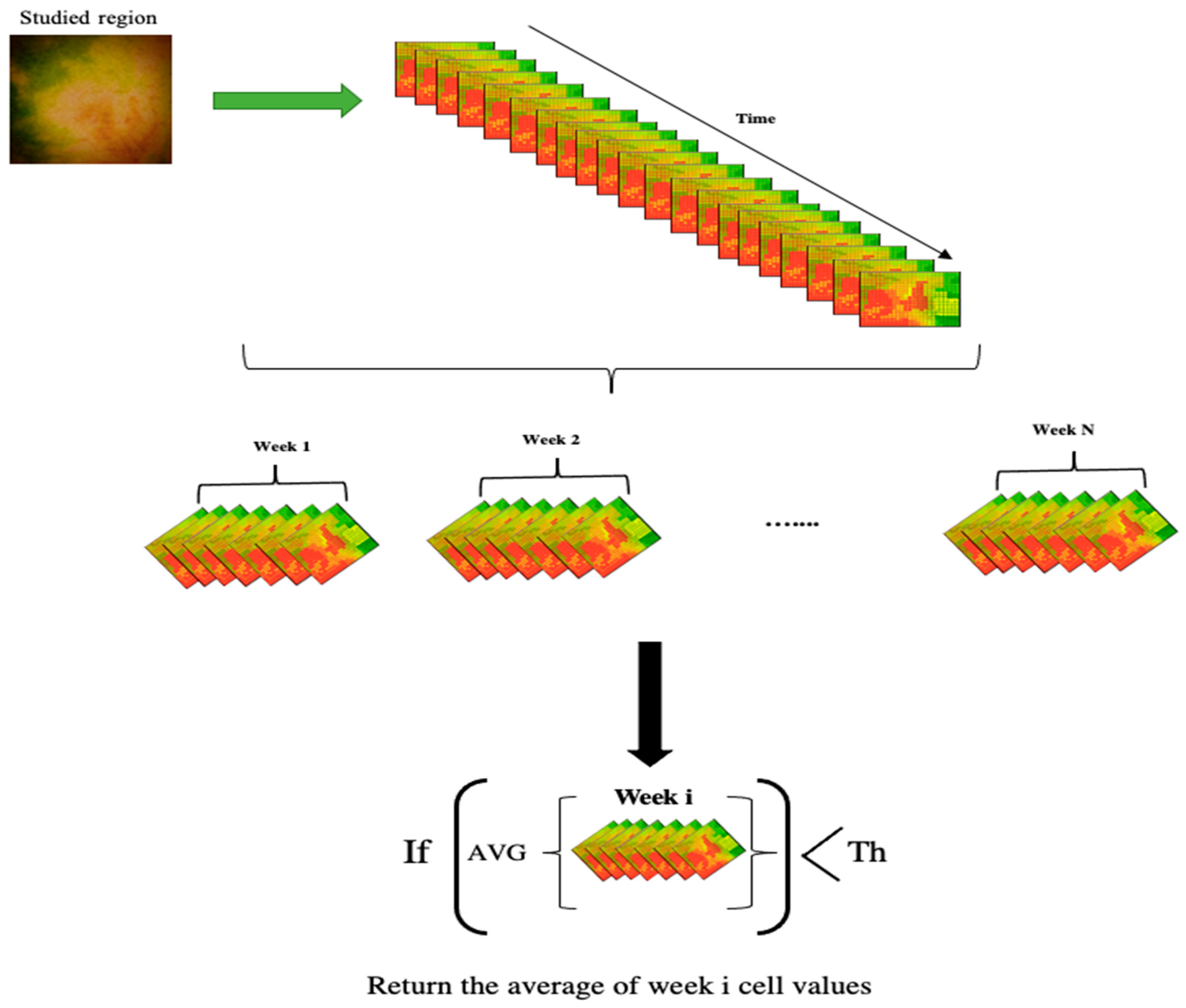

2.2.2. Query Spatio–Temporal Raster Data

2.2.3. Heterogeneous Computing

2.2.4. GPU Architecture

- Registers,

- Memory caches,

- Warp schedulers,

- Execution pipelines.

- Initializing and transferring the data from the Host (CPU) to the Device (GPU),

- Calling the kernel (parallel function executed on the device by many threads),

- At the end of the data processing, transferring the results from the Device to the Host.

2.2.5. GPU-Accelerated Libraries for Computing

Thrust

CUB

- Device-wide primitives,

- Block-wide “collective” primitives,

- Warp-wide “collective” primitives.

3. Query Definition and the Proposed Methods

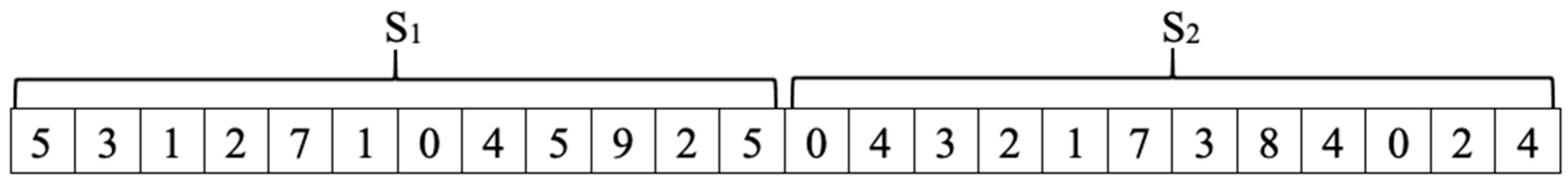

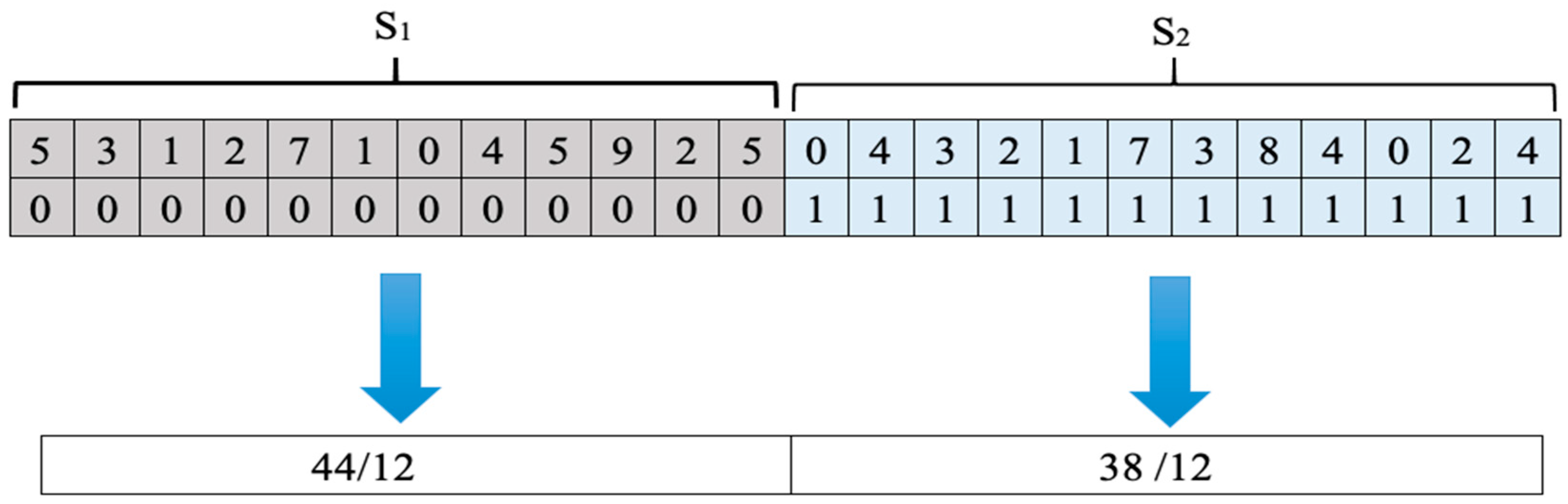

3.1. Query Formulation

3.2. Sequential Method for Query Processing

3.3. Parallel Methods for Query Processing

3.3.1. Straightforward Parallel Approach

3.3.2. Improved Parallel Approach

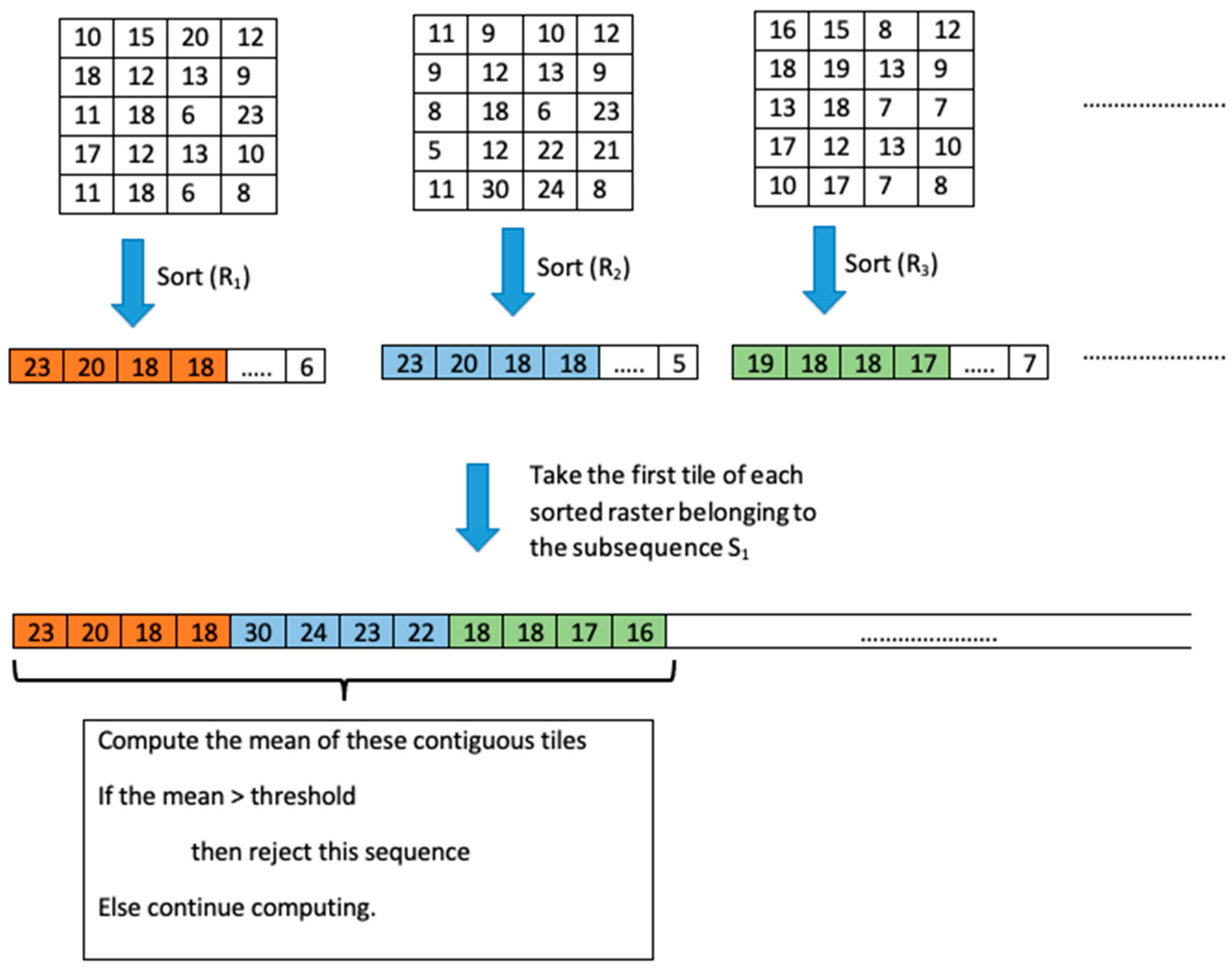

Based on a Sort

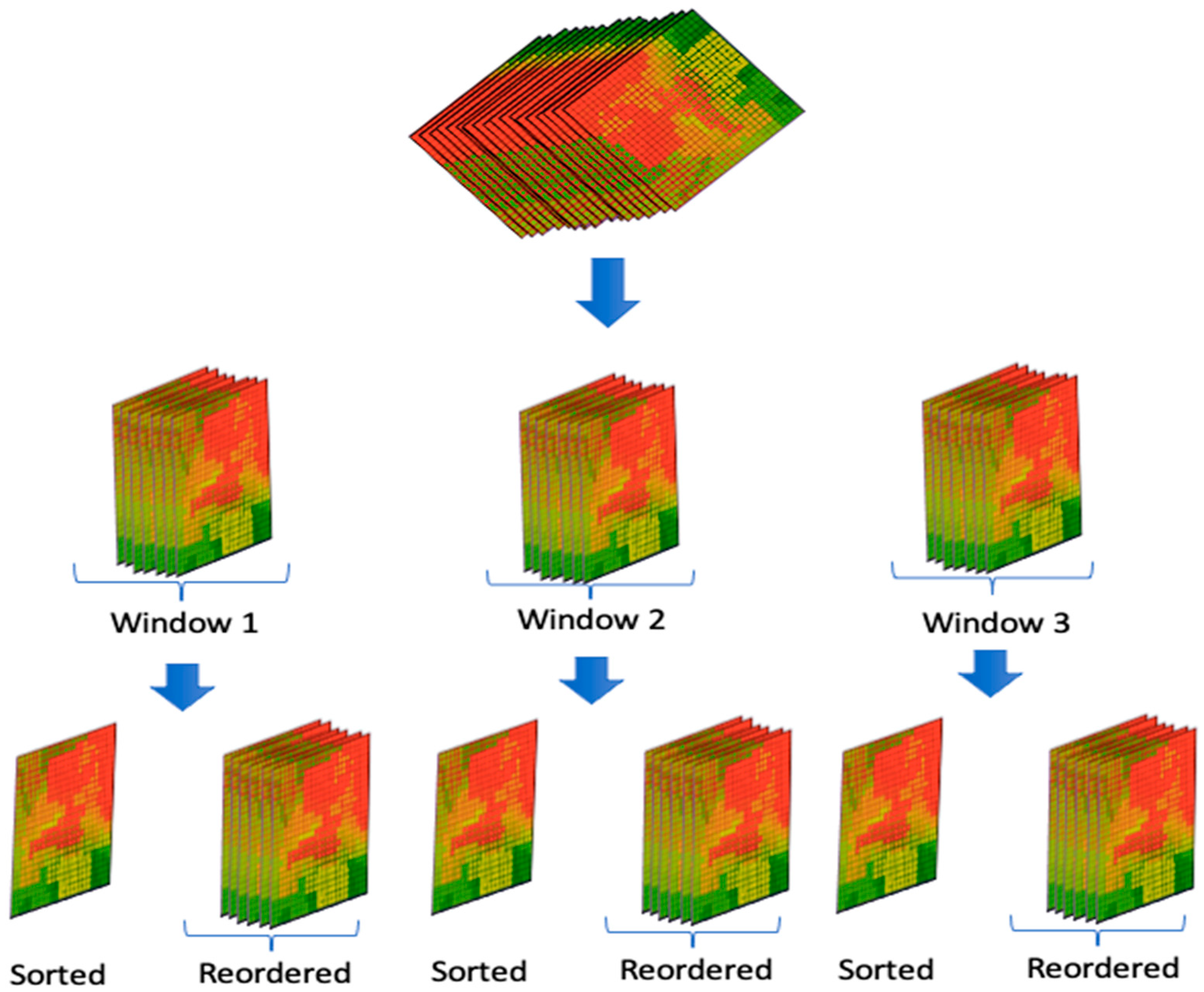

Based on the Sophisticated Sort

- Split the raster dataset into time windows, each containing a fixed number of rasters, and sort only one raster per time window. The sorting result can be viewed as an array M that maps a cell position (x, y) to an order position I.

- Reorder the cells of all rasters falling in the same time window according to the sorted raster of that window. To do this, the array M is used directly during the average computation, with no impact on time complexity. The time window can have the same size as the subsequences.
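The two steps above can be illustrated with a minimal CPU-only sketch in Python. It is not the authors' CUDA implementation. It assumes that rasters are flattened row by row, that windows do not overlap, that the first raster of each window is the one sorted, that the sort is ascending, and that the user-defined condition is a per-cell test on the window average that allows an early exit. The names `order_from_reference`, `window_satisfies`, and `select_windows` are hypothetical. For convenience the sketch stores the inverse of M, that is, a list giving the cell index to visit at each order position.

```python
from typing import Callable, List

Raster = List[float]  # one raster, flattened row by row (assumption)


def order_from_reference(reference: Raster) -> List[int]:
    """Return cell indices sorted by ascending value in the reference raster.

    The result is the inverse of the mapping M described in the text:
    position i holds the index of the cell visited at order position i.
    """
    return sorted(range(len(reference)), key=lambda cell: reference[cell])


def window_satisfies(window: List[Raster], order: List[int],
                     condition: Callable[[float], bool]) -> bool:
    """Test the per-cell average of a window, visiting cells in `order`.

    Stops at the first cell whose average fails the (assumed) condition.
    """
    for cell in order:
        average = sum(raster[cell] for raster in window) / len(window)
        if not condition(average):
            return False
    return True


def select_windows(rasters: List[Raster], window_size: int,
                   condition: Callable[[float], bool]) -> List[int]:
    """Return the start index of every window whose averages all pass."""
    selected = []
    for start in range(0, len(rasters) - window_size + 1, window_size):
        window = rasters[start:start + window_size]
        # Sort a single raster per window and reuse its order for the others.
        order = order_from_reference(window[0])
        if window_satisfies(window, order, condition):
            selected.append(start)
    return selected


# Four 2 x 2 rasters, flattened; hypothetical values for illustration only.
rasters = [
    [5.0, 1.0, 3.0, 4.0],
    [6.0, 2.0, 3.0, 5.0],
    [0.0, 9.0, 9.0, 9.0],
    [1.0, 9.0, 9.0, 9.0],
]
print(select_windows(rasters, 2, lambda avg: avg >= 1.5))
```

With an ascending order and a "greater than or equal" condition, the cells most likely to fail are tested first, so a window that does not qualify tends to be rejected after few average computations. The order is computed once per window, which is why its cost is shared by all rasters of that window.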

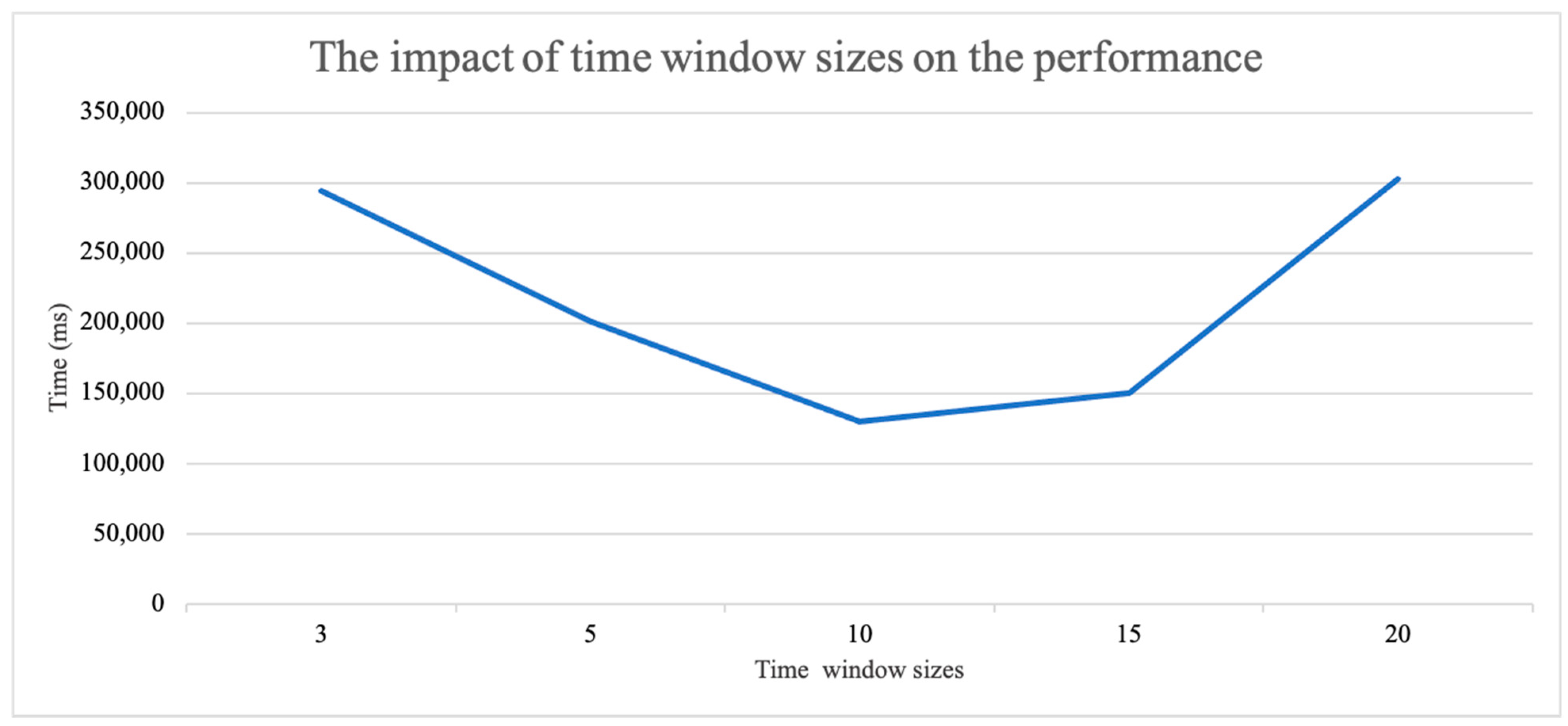

- The size of the time window, which must be neither too large nor too small. If it is too large, the difference between the estimated cell order and the real cell order may grow inside the time window, so performance may drop (the sort becomes useless). If it is too small, the number of rasters to sort increases over the whole processing, which increases the execution time.

- The type of data (temperature, pressure, etc.). The more slowly the data evolves over time, the more accurate the sorting. The proposed methods perform better when the spatial locations of the maximal and minimal values evolve slowly over time.

4. Tests and Analysis

4.1. Configuration of the Experimental Environment

4.2. Dataset

4.2.1. INRAE Montoldre Site

- The network table contains information about 10 sensor nodes,

- The sensors table contains columns such as myNodeID, battery, temperature, humidity, light, etc., and 14,970,995 rows, which correspond to the measurements taken by the different sensors over many months at varying fine-grained acquisition frequencies.

4.2.2. Statistical Description of the Montoldre Hourly Dataset

- Min: the minimum value of the measure over the whole dataset,

- Max: the maximum value of the measure over the whole dataset,

- Mean: the mean value of the measure over the whole dataset,

- Standard deviation: the standard deviation of the measure over the whole dataset,

- Mean of rasters means: the mean of each raster used in our experiments is computed first, and the global mean is then taken over these raster means,

- Mean of rasters standard deviation: the standard deviation of each raster used in our experiments is computed first, and the mean is then taken over all these standard deviations.
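The last two statistics can be sketched as follows. This is a minimal Python illustration and not the authors' code. It assumes that each raster is a flat list of cell values and that the population standard deviation is intended (the text does not say whether the population or the sample formula was used). The function name `raster_summary` is hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def raster_summary(rasters: List[List[float]]) -> Tuple[float, float]:
    """Return (mean of raster means, mean of raster standard deviations)."""
    raster_means = [mean(raster) for raster in rasters]
    # Population standard deviation per raster (assumption, see lead-in).
    raster_stds = [pstdev(raster) for raster in rasters]
    return mean(raster_means), mean(raster_stds)


# Two tiny rasters with hypothetical values, for illustration only.
example = [[1.0, 3.0], [2.0, 6.0]]
mean_of_means, mean_of_stds = raster_summary(example)
print(mean_of_means, mean_of_stds)
```

The mean of raster standard deviations describes how much the values vary in space inside a raster, whereas the global standard deviation also includes the variation over time. This is why the two columns can differ widely for the same measure.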

4.3. Experiment Results and Analysis

4.3.1. Experiment Results

4.3.2. The Impact of the Time Window Size on the Performance

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Platform | Hardware Configuration | Software Configuration |
|---|---|---|
| CPU | Intel(R) Core(TM) i7-2600K CPU @ 3.40 GHz; Device global memory: 16 GB; Cache size: 20,480 KB | Linux Ubuntu 19.04; C/C++; CUDA 8.0; Thrust v10.1.105; CUB v1.8.0 |
| GPU | Tesla K20C; CUDA Cores: 2496; Device global memory: 5 GB; Memory Bandwidth: 208 GB/s | |

| Measure | Meaning | Units |
|---|---|---|
| Battery | Battery state of the node | mV |
| Temperature | Temperature measurement | °C |
| Humidity | Air humidity measurement | % |
| Light | Light measurement | N/A |
| Watermark n | Measurement value of the n-th Watermark device. Watermark is a soil humidity sensor. The Watermark sensors are placed in the soil at different depths. | Watermark’s unit (range: 0 to 200) |

| Measure | Min | Max | Mean | Standard Deviation | Mean of Rasters Means | Mean of Rasters Standard Deviation |
|---|---|---|---|---|---|---|
| Temperature (°C) | 0 | 45.7 | 11.89 | 8.73 | 12 | 2.73 |
| Humidity (%) | 9.9 | 100 | 78 | 21.97 | 78.15 | 15.20 |
| Watermark 2 (watermark’s unit) | 1 | 200 | 63.80 | 88.96 | 61.71 | 43.79 |

| Dataset | Size of Rasters | CPU (ms) | Sophisticated CPU-Sorting (ms) | GPU (ms) | Sophisticated GPU-Sorting (ms) |
|---|---|---|---|---|---|
| Dataset 1 | 100 × 100 | 25,464 | 34,180 | 21,023 | 29,117 |
| Dataset 2 | 200 × 200 | 240,255 | 380,521 | 190,762 | 316,284 |
| Dataset 3 | 1000 × 100 | 349,654 | 471,290 | 260,273 | 397,641 |
| Dataset 4 | 500 × 500 | 587,323 | 697,138 | 418,012 | 631,540 |

| Dataset | Size of Rasters | CPU (ms) | GPU (ms) | Sophisticated GPU-Sorting (ms) |
|---|---|---|---|---|
| Dataset 1 | 100 × 100 | 28,231 | 17,328 | 7,133 |
| Dataset 2 | 200 × 200 | 250,165 | 131,571 | 61,604 |
| Dataset 3 | 1000 × 100 | 369,719 | 171,803 | 83,251 |
| Dataset 4 | 500 × 500 | 608,413 | 372,631 | 127,679 |

| Dataset | Size of Rasters | CPU (ms) | GPU (ms) | Sophisticated GPU-Sorting (ms) |
|---|---|---|---|---|
| Dataset 1 | 100 × 100 | 27,647 | 12,981 | 7,276 |
| Dataset 2 | 200 × 200 | 266,499 | 116,730 | 63,452 |
| Dataset 3 | 1000 × 100 | 382,122 | 162,014 | 84,916 |
| Dataset 4 | 500 × 500 | 625,116 | 302,833 | 130,233 |
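As a reading aid for the table above, the short Python sketch below computes the speedup of the Sophisticated GPU-Sorting column over the CPU column, taking speedup as the ratio of the CPU time to the GPU time. The timings are copied from the table. The dictionary layout and the function name `speedup` are hypothetical, and the ratios are only an illustration of the arithmetic, not a result reported by the authors.

```python
# Timings in milliseconds, copied from the table above.
timings_ms = {
    "Dataset 1": {"cpu": 27647, "gpu": 12981, "gpu_sort": 7276},
    "Dataset 2": {"cpu": 266499, "gpu": 116730, "gpu_sort": 63452},
    "Dataset 3": {"cpu": 382122, "gpu": 162014, "gpu_sort": 84916},
    "Dataset 4": {"cpu": 625116, "gpu": 302833, "gpu_sort": 130233},
}


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """Return how many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms


speedups = {
    name: round(speedup(t["cpu"], t["gpu_sort"]), 2)
    for name, t in timings_ms.items()
}
print(speedups)
# → {'Dataset 1': 3.8, 'Dataset 2': 4.2, 'Dataset 3': 4.5, 'Dataset 4': 4.8}
```

In this table the ratio grows with the raster size, from about 3.8 for the 100 × 100 rasters to about 4.8 for the 500 × 500 rasters.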


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

En-Nejjary, D.; Pinet, F.; Kang, M.-A. Spatial Data Sequence Selection Based on a User-Defined Condition Using GPGPU. ISPRS Int. J. Geo-Inf. 2021, 10, 816. https://doi.org/10.3390/ijgi10120816