SR-SYBA: A Scale and Rotation Invariant Synthetic Basis Feature Descriptor with Low Memory Usage

Abstract

1. Introduction

2. Related Work

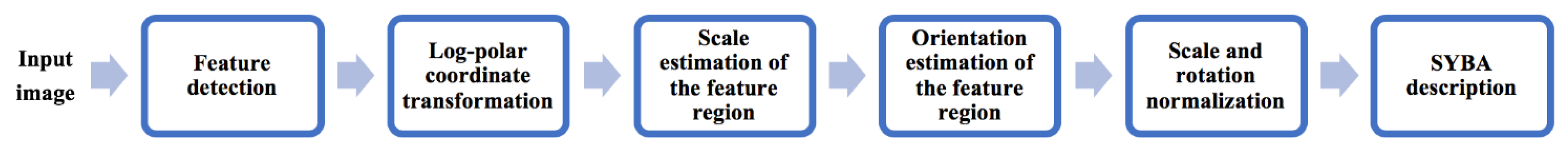

3. SR-SYBA Algorithm

3.1. Log-Polar Coordinate Transformation

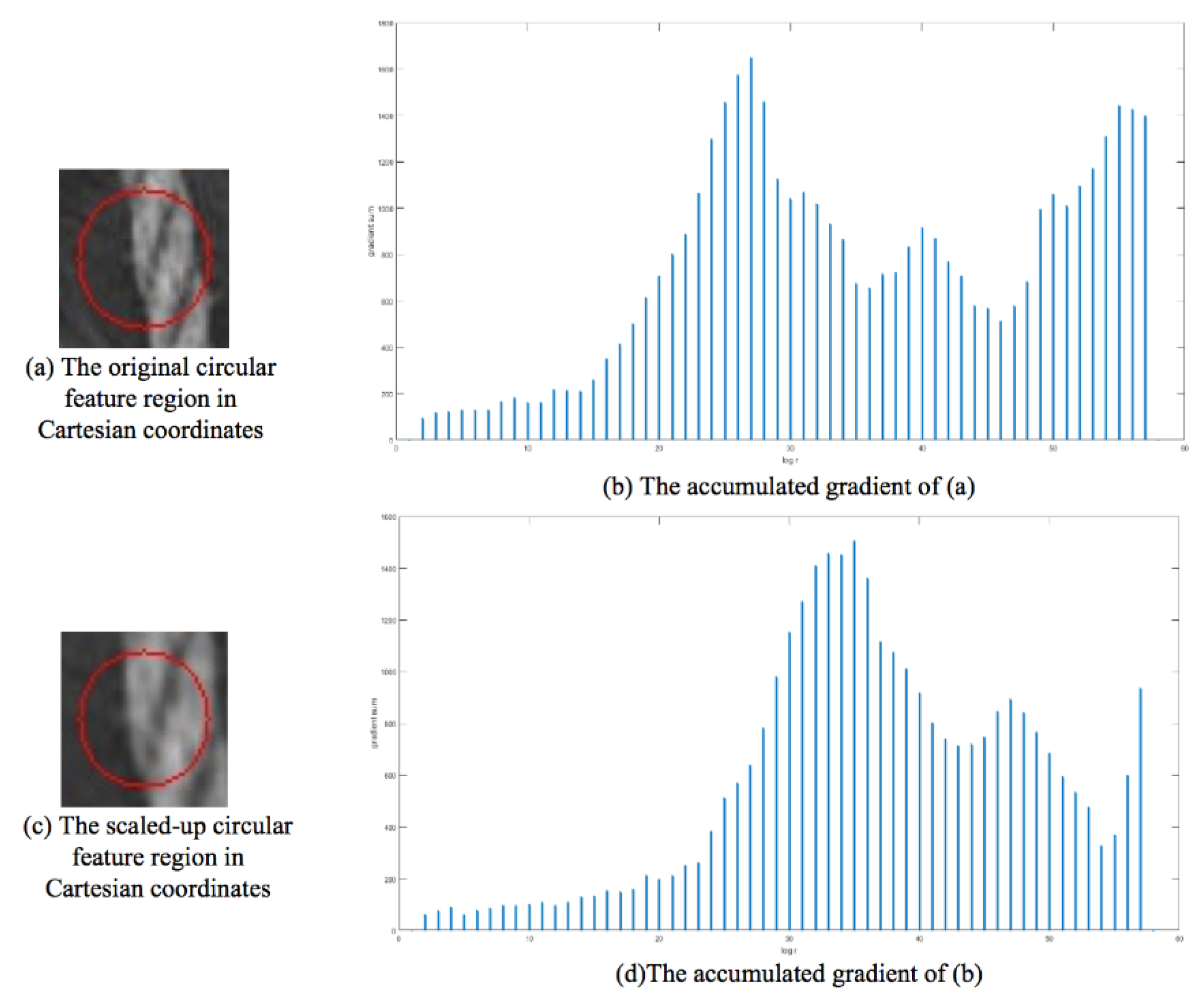
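
The property that makes the log-polar domain useful for scale and rotation invariance can be checked numerically. The minimal sketch below assumes the keypoint is the pole and uses the natural logarithm for the radial axis; SR-SYBA's actual sampling grid and log base are not given in this text. Under those assumptions, scaling a neighbourhood by a factor s about the keypoint shifts the log-radius by ln s, and rotating it by φ shifts the angle by φ, so both become plain translations. The helper names `to_log_polar` and `scale_rotate` are hypothetical.

```python
import math


def to_log_polar(x, y, cx, cy):
    """Map a Cartesian point to (log-radius, angle) about the pole (cx, cy).

    Hypothetical helper: natural log for the radius, angle in [0, 2*pi).
    """
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)
    if r == 0:
        raise ValueError("the pole itself has no log-polar coordinates")
    theta = math.atan2(dy, dx) % (2 * math.pi)
    return math.log(r), theta


def scale_rotate(x, y, cx, cy, s, phi):
    """Scale by s and rotate by phi (radians) about the point (cx, cy)."""
    dx, dy = x - cx, y - cy
    c, sn = math.cos(phi), math.sin(phi)
    return cx + s * (c * dx - sn * dy), cy + s * (sn * dx + c * dy)


if __name__ == "__main__":
    cx, cy = 50.0, 40.0
    p = (62.0, 47.0)
    s, phi = 1.2, math.radians(10)
    rho0, th0 = to_log_polar(*p, cx, cy)
    rho1, th1 = to_log_polar(*scale_rotate(*p, cx, cy, s, phi), cx, cy)
    # Scaling appears as a shift of ln(s) along the log-radius axis ...
    print(rho1 - rho0, math.log(s))
    # ... and rotation as a shift of phi along the angle axis.
    print((th1 - th0) % (2 * math.pi), phi)
```

Because both transformations reduce to shifts, an estimate of each shift is enough to undo them, which is the idea the following estimation steps build on.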

3.2. Scale Representation Estimation

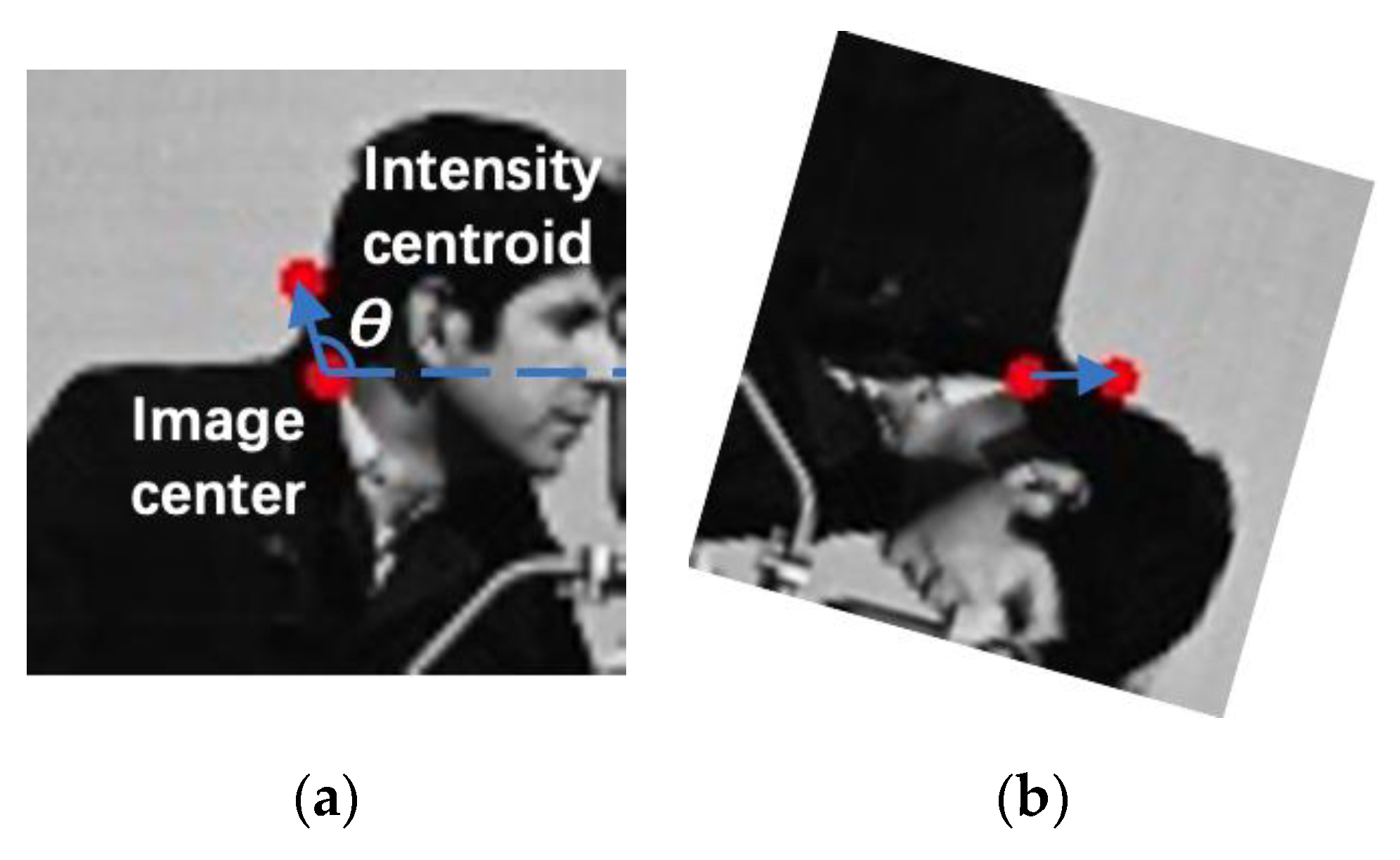

3.3. Orientation Representation Estimation
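
This text does not spell out how SR-SYBA computes its orientation representation. As an illustration only, one widely used way to assign a dominant orientation to a keypoint is the intensity centroid method used by ORB: the angle of the vector from the patch centre to the centroid of the intensities. The sketch below is that technique, not necessarily SR-SYBA's estimator; the function name and the odd-sized square patch layout are assumptions.

```python
import math


def intensity_centroid_orientation(patch):
    """Orientation (radians) of a square grey-level patch via the intensity centroid.

    patch: list of rows of numbers with odd side length; the keypoint is the
    centre pixel. Only pixels inside the inscribed circle are used. Image
    rows grow downward, so positive angles point toward larger row indices.
    """
    n = len(patch)
    if n % 2 == 0 or any(len(row) != n for row in patch):
        raise ValueError("expected an odd-sized square patch")
    c = n // 2
    m10 = m01 = 0.0  # first-order moments about the centre
    for y in range(n):
        for x in range(n):
            dx, dy = x - c, y - c
            if dx * dx + dy * dy <= c * c:
                m10 += dx * patch[y][x]
                m01 += dy * patch[y][x]
    return math.atan2(m01, m10)
```

A patch whose bright pixels lie to the right of the centre yields 0, and one that is bright below the centre yields π/2.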

3.4. Scale and Orientation Normalization

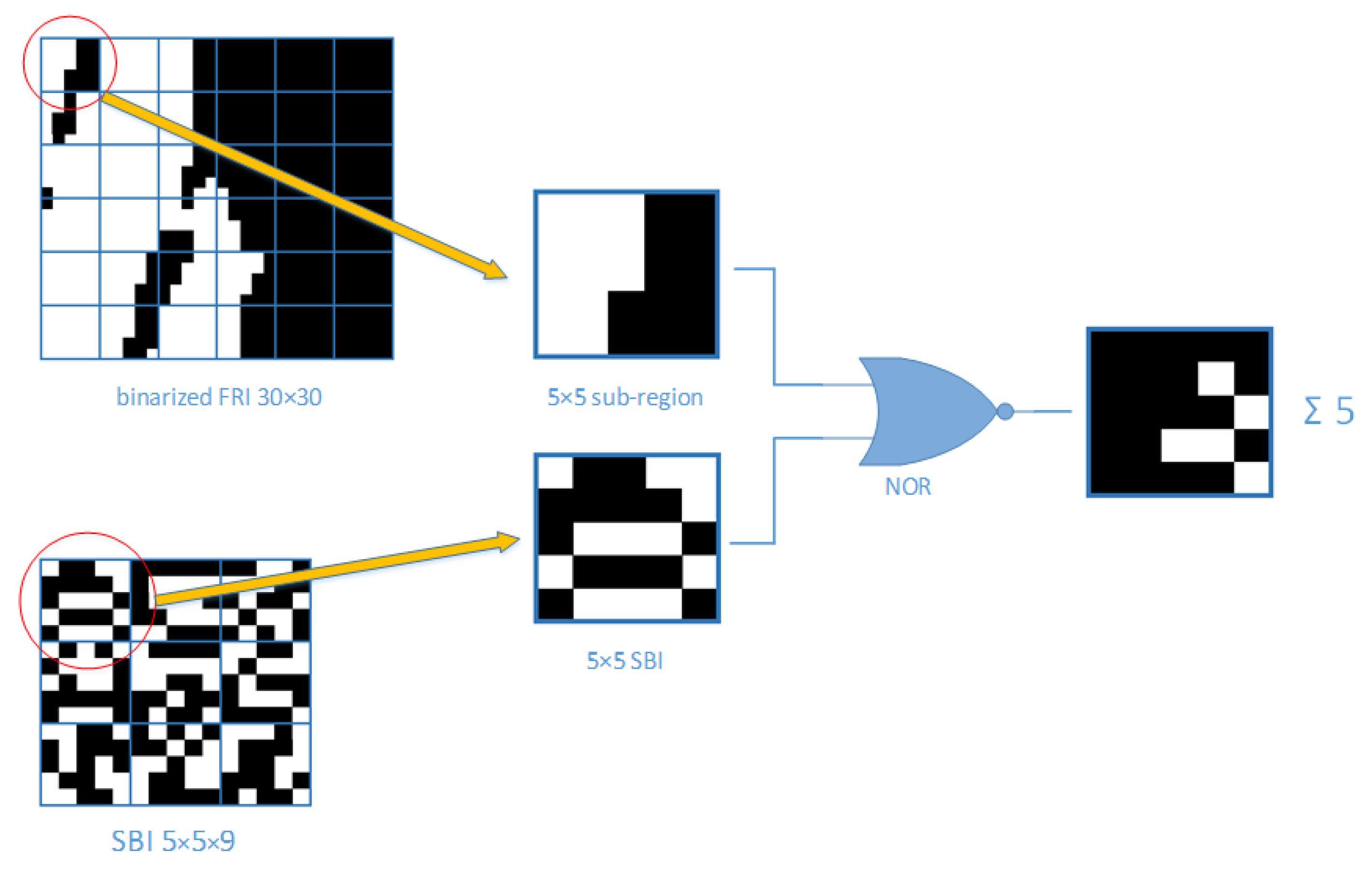
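
Once a scale and an orientation have been estimated for a keypoint, normalization can be sketched as resampling a fixed-size patch through the estimated similarity transform, so that every feature is described at a canonical scale and orientation. This is a minimal sketch under stated assumptions: nearest-neighbour sampling, orientation in radians, scale given as a factor relative to the canonical scale, and a hypothetical default patch size of 9. The interpolation and patch size SR-SYBA actually uses are not given here, and `normalize_patch` is a hypothetical name.

```python
import math


def normalize_patch(image, cx, cy, scale, theta, size=9, fill=0):
    """Resample a size x size patch around (cx, cy) with scale and rotation removed.

    image: list of rows; scale: estimated scale factor of the feature relative
    to the canonical scale; theta: estimated orientation in radians.
    Nearest-neighbour sampling; samples outside the image get `fill`.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the keypoint is the centre pixel")
    h, w = len(image), len(image[0])
    half = size // 2
    c, s = math.cos(theta), math.sin(theta)
    out = []
    for v in range(-half, half + 1):
        row = []
        for u in range(-half, half + 1):
            # The canonical offset (u, v) came from the source image after
            # rotation by theta and scaling by `scale`, so apply both to find it.
            x = cx + scale * (c * u - s * v)
            y = cy + scale * (s * u + c * v)
            xi, yi = int(round(x)), int(round(y))
            row.append(image[yi][xi] if 0 <= xi < w and 0 <= yi < h else fill)
        out.append(row)
    return out
```

With `scale=1.0` and `theta=0.0` the function returns a plain crop centred on the keypoint, which is a convenient sanity check before plugging in estimated values.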

3.5. SR-SYBA Algorithm

4. Experiments and Discussion

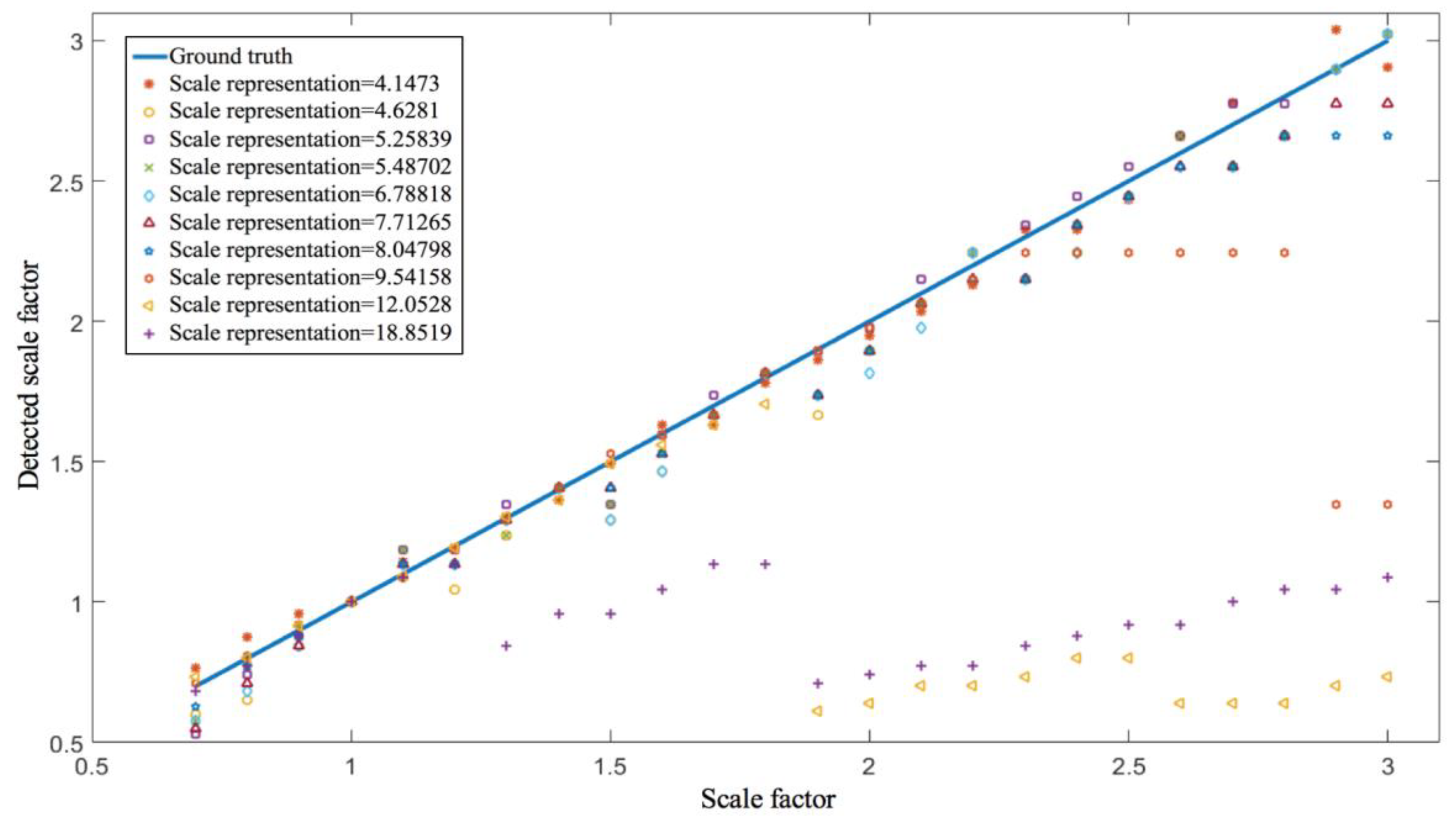

4.1. Verification of Scale Representation Estimation

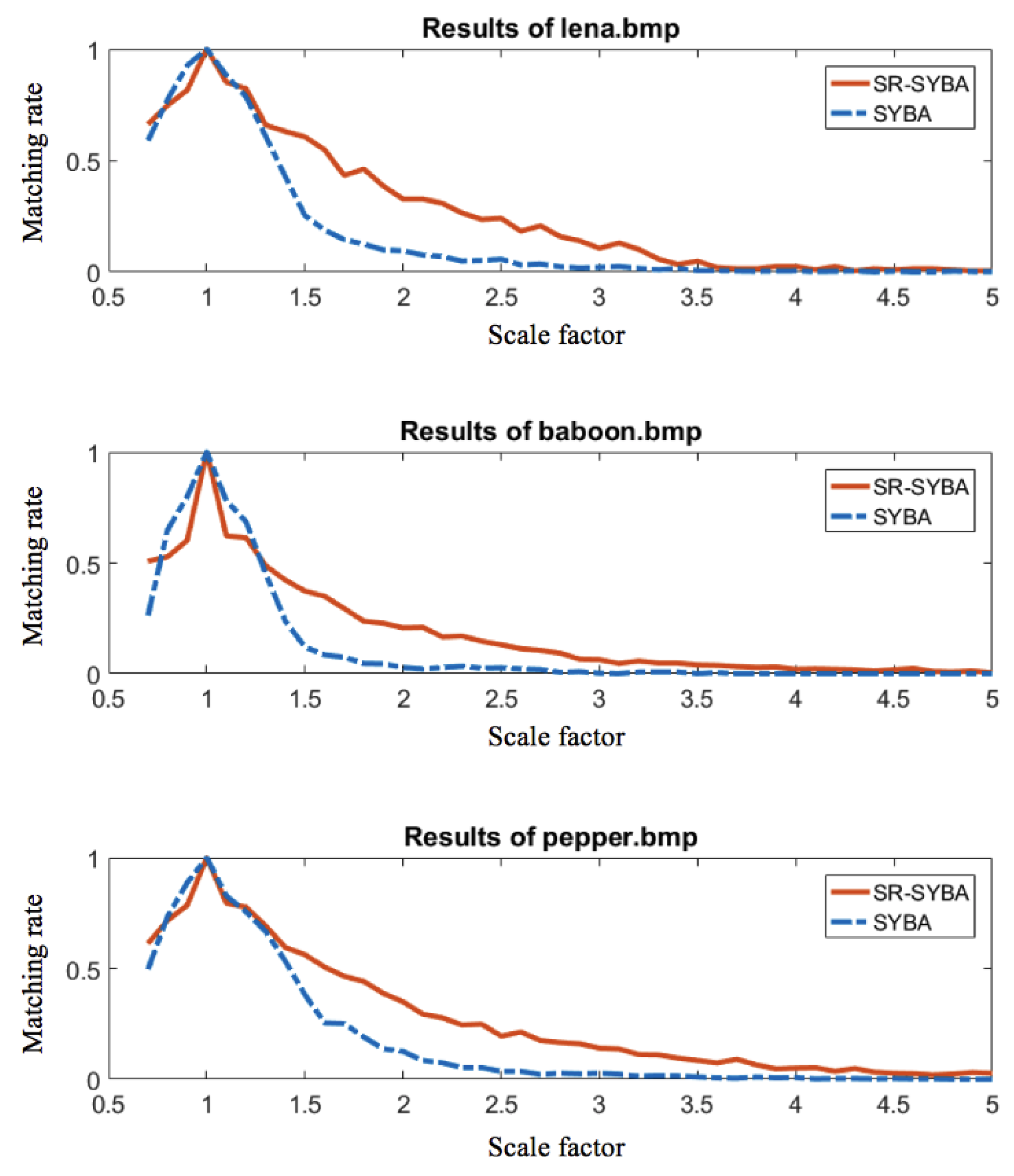

4.2. Performance Comparison with the Original SYBA

4.2.1. Scaling Variation

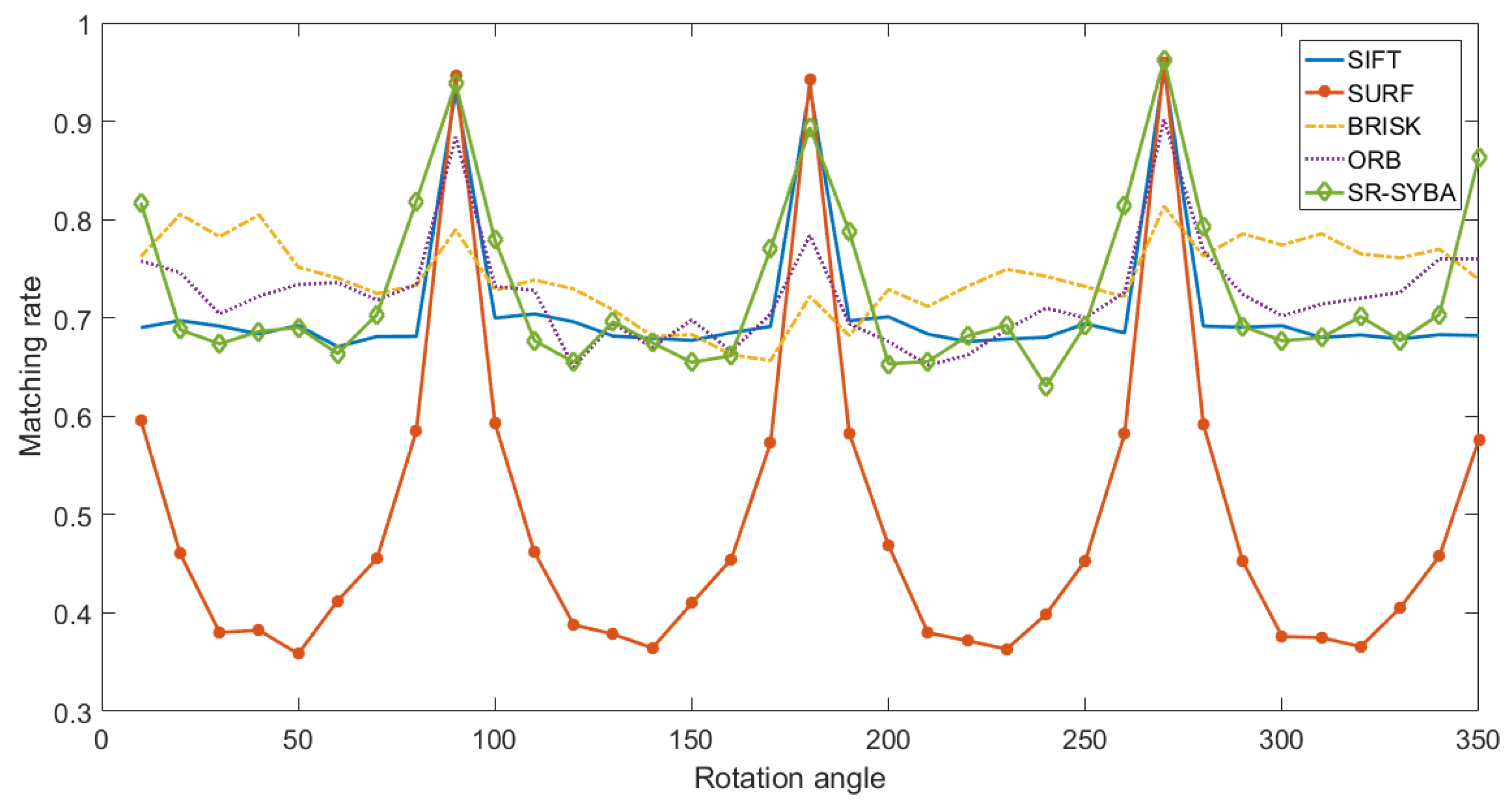

4.2.2. Rotation Variation

4.3. Performance Comparison with rSYBA

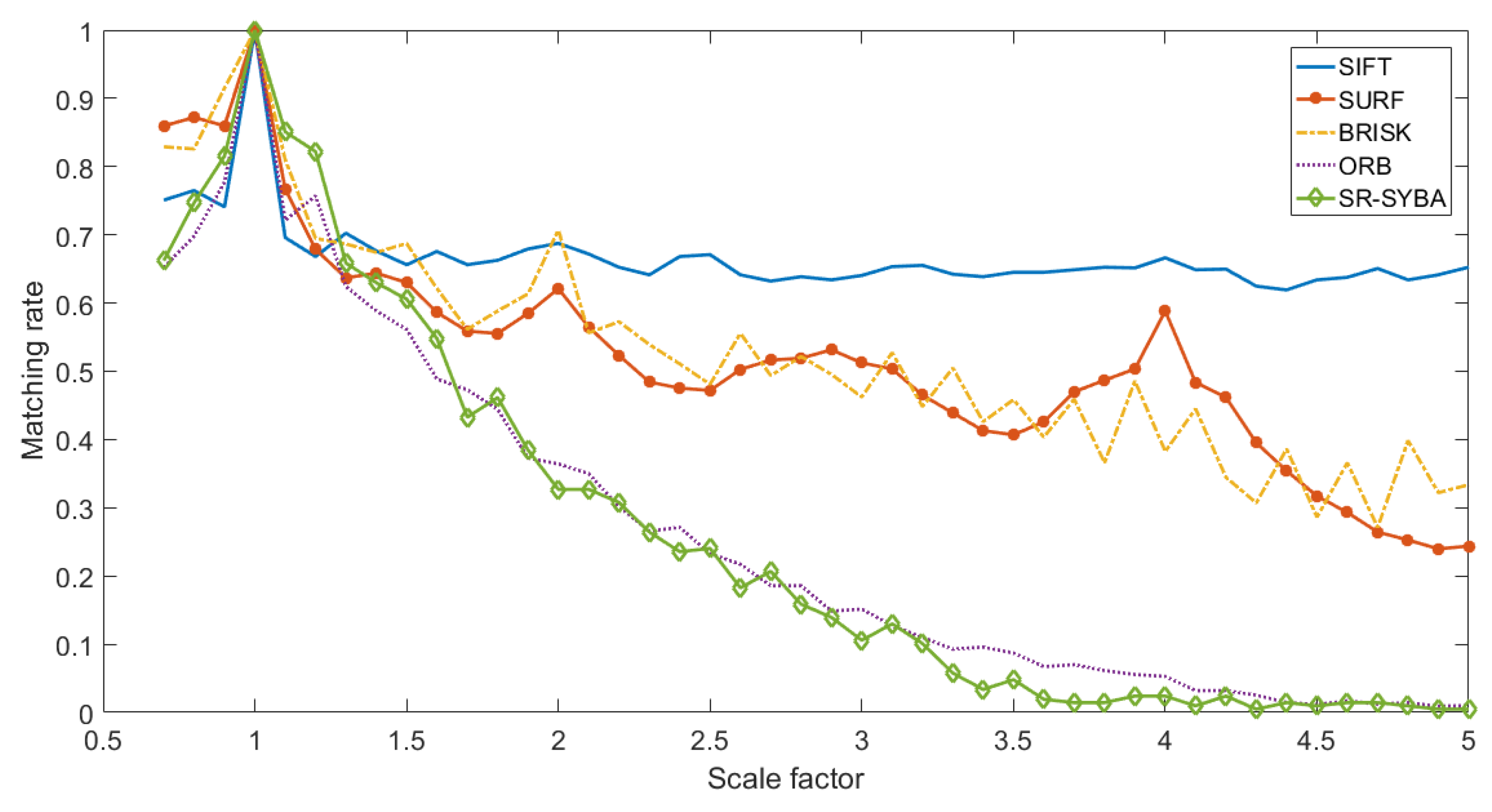

4.4. Performance Comparison with Other Feature Description Algorithms

4.5. Memory Usage Comparison with Other Feature Description Algorithms

4.6. Performance for Real Scenes

4.6.1. The Oxford Affine Dataset

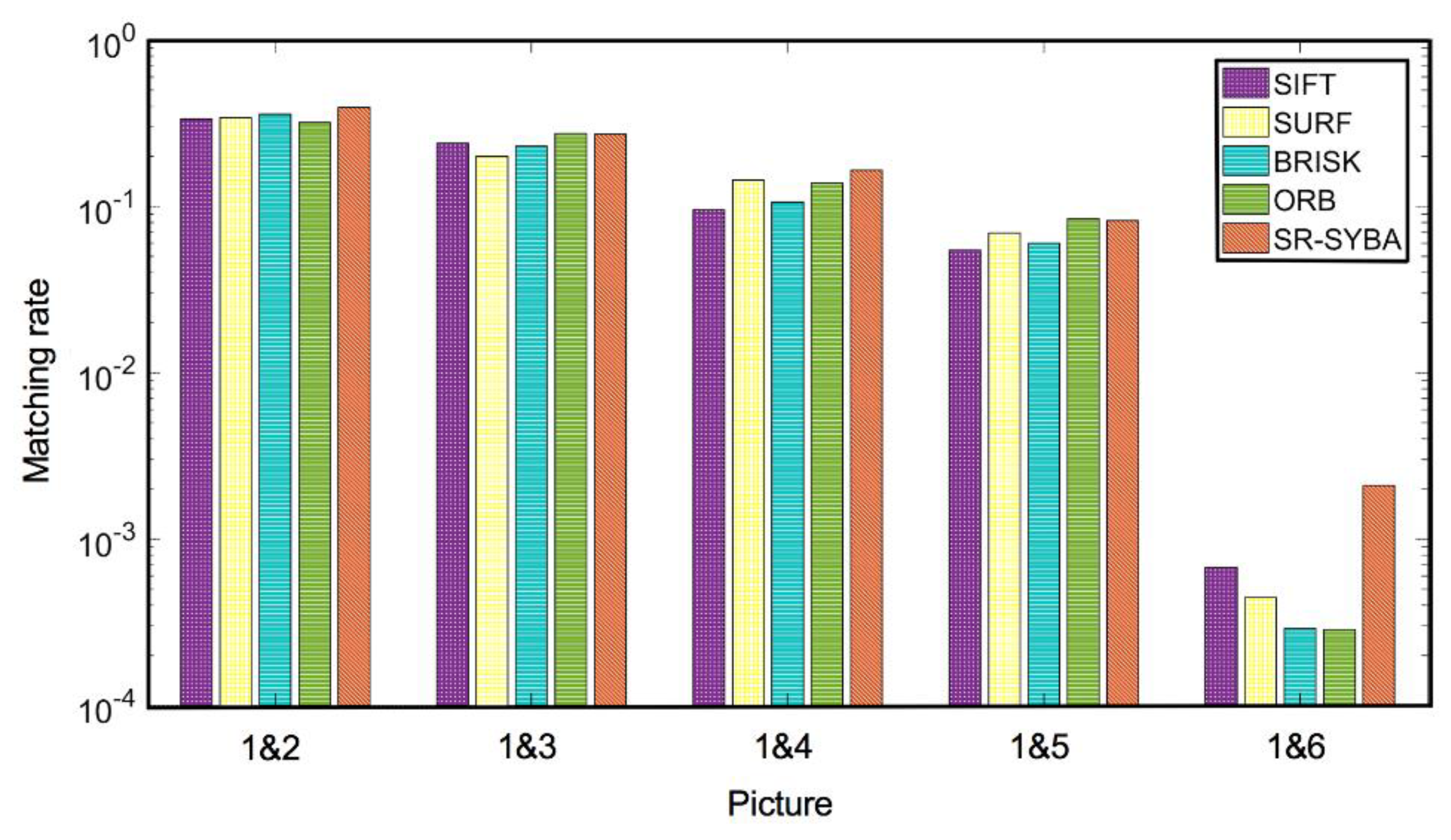

4.6.2. Statistical t-Test Using the BYU Feature Matching Dataset

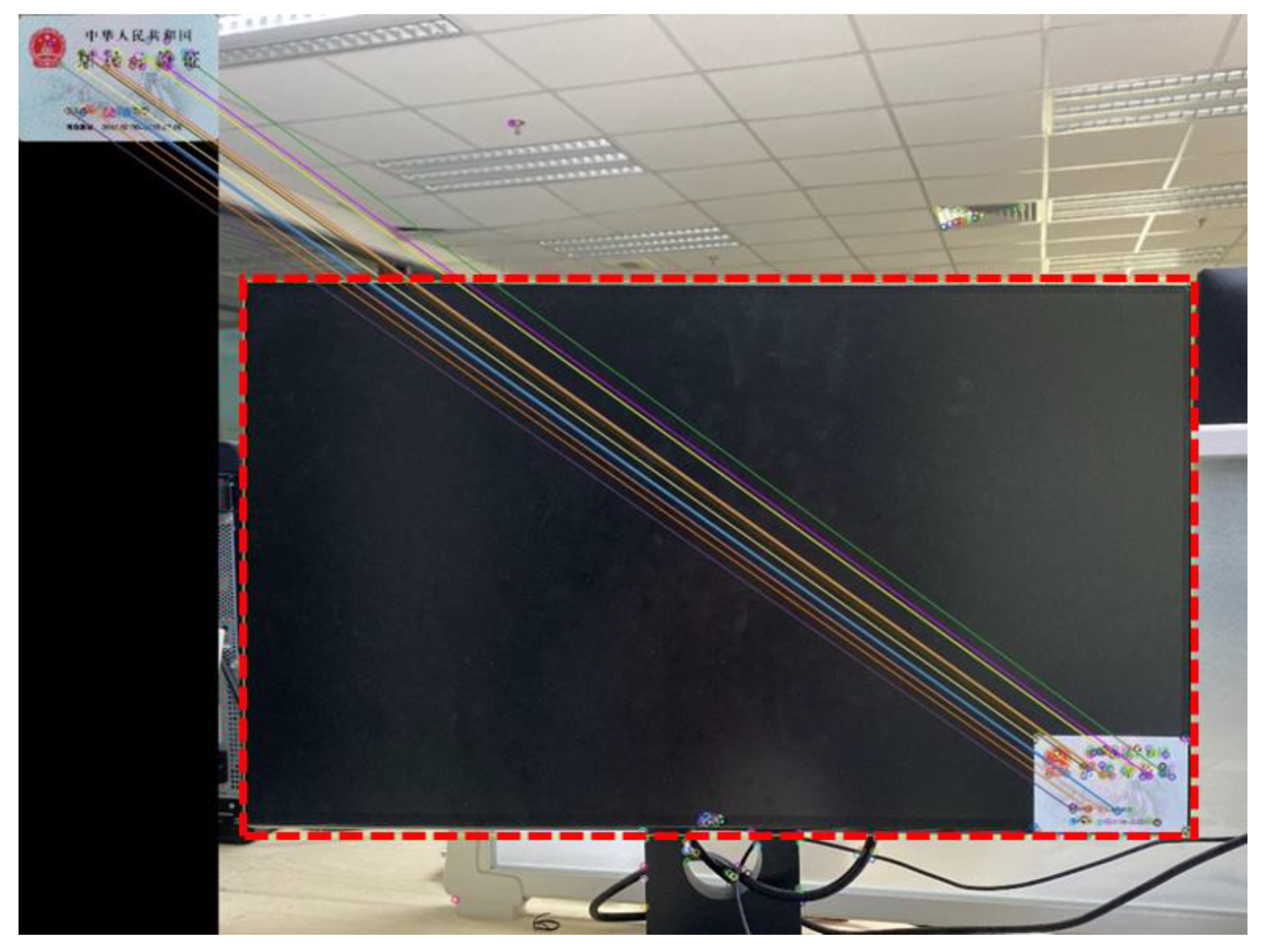

5. Application for Vision-Based Measurement

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Image Transformation | Feature Count | Matching Rate (%), rSYBA | Matching Rate (%), SR-SYBA |
|---|---|---|---|
| Scale factor = 0.8 | 300 | 55.33 | 57.64 |
| Scale factor = 0.9 | 300 | 70.33 | 69.67 |
| Scale factor = 1.05 | 300 | 79.00 | 75.67 |
| Scale factor = 1.1 | 300 | 73.67 | 74.67 |
| Scale factor = 1.2 | 300 | 67.00 | 72.33 |
| Rotation angle = 5° | 300 | 63.33 | 78.33 |
| Rotation angle = 7° | 300 | 46.33 | 75.67 |
| Rotation angle = 10° | 300 | 54.00 | 70.00 |
| Rotation angle = 15° | 300 | 45.67 | 56.67 |

| Method | Scaling Invariance | Rotation Invariance | SYBA & Matching | Total |
|---|---|---|---|---|
| SR-SYBA | 300 × 6.57 = 1971 | 300 × 0.012 = 3.6 | 9.33 for 300 × 300 | 1983.93 |
| rSYBA | 300 × 0.78 = 234 | 1500 × 6.24 = 9360 | 46.65 for 1500 × 300 | 9640.65 |
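
The Total column is the sum of the three per-stage entries, and most of rSYBA's total comes from its rotation-invariance stage, which is charged for 1500 descriptors rather than 300. A short check of that arithmetic follows; the helper name is hypothetical, and since the table does not state its unit, none is assumed.

```python
def total_cost(scale_count, scale_unit, rot_count, rot_unit, match_cost):
    """Sum the three stage costs from the table (in the table's own unit)."""
    return scale_count * scale_unit + rot_count * rot_unit + match_cost


sr_syba = total_cost(300, 6.57, 300, 0.012, 9.33)
rsyba = total_cost(300, 0.78, 1500, 6.24, 46.65)
print(round(sr_syba, 2), round(rsyba, 2), round(rsyba / sr_syba, 2))
```

On these figures, rSYBA's total is roughly 4.9 times that of SR-SYBA.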

| Image Size (pixels) | SIFT | SURF | BRISK | ORB | SR-SYBA | SYBA |
|---|---|---|---|---|---|---|
| 397 × 298 | 34.0 | 28.2 | 52.5 | 27.4 | 7.6 | 7.5 |
| 794 × 595 | 114.0 | 52.1 | 56.4 | 28.8 | 7.9 | 7.8 |
| 1587 × 1190 | 443.1 | 130.4 | 61.2 | 36.0 | 9.4 | 9.3 |
| 2381 × 1786 | 965.6 | 262.8 | 72.0 | 48.2 | 11.6 | 11.5 |
| 3174 × 2381 | 1.7 × 10³ | 446.4 | 87.1 | 63.5 | 14.7 | 14.6 |
| 3968 × 2976 | 2.6 × 10³ | 682.5 | 106.8 | 83.2 | 18.7 | 18.6 |

| Image Pair | Data | SIFT | SURF | BRISK | ORB | SR-SYBA |
|---|---|---|---|---|---|---|
| 1\|2 | Average Matching Rate (%) | 31.01 | 42.17 | 45.80 | 30.14 | 52.58 |
| | p-value | 6.75 × 10⁻⁸ | 7.91 × 10⁻⁵ | 0.0083 | 1.25 × 10⁻⁸ | / |
| 1\|3 | Average Matching Rate (%) | 25.96 | 38.13 | 38.82 | 25.66 | 39.27 |
| | p-value | 4.77 × 10⁻⁴ | 0.3822 | 0.4602 | 0.0021 | / |
| 1\|4 | Average Matching Rate (%) | 19.17 | 28.97 | 28.64 | 18.17 | 33.83 |
| | p-value | 1.25 × 10⁻⁴ | 0.0368 | 0.0203 | 2.27 × 10⁻⁵ | / |
| 1\|5 | Average Matching Rate (%) | 18.96 | 29.84 | 28.18 | 17.96 | 36.17 |
| | p-value | 3.14 × 10⁻⁵ | 0.0012 | 7.16 × 10⁻⁴ | 2.28 × 10⁻⁶ | / |
| 1\|6 | Average Matching Rate (%) | 16.13 | 25.88 | 26.28 | 15.02 | 31.18 |
| | p-value | 1.35 × 10⁻⁴ | 0.0130 | 0.0266 | 3.29 × 10⁻⁵ | / |
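
The table does not state which form of t-test produced these p-values. As an illustration only, assuming a paired test over per-image-set matching rates of SR-SYBA and one competing descriptor, the t statistic can be computed with the Python standard library. The function name and its inputs are hypothetical.

```python
import math
import statistics


def paired_t_statistic(rates_a, rates_b):
    """Paired t statistic and degrees of freedom for two matched samples.

    rates_a, rates_b: equally long lists of matching rates measured on the
    same image sets. A positive statistic means rates_a is higher on average.
    """
    if len(rates_a) != len(rates_b) or len(rates_a) < 2:
        raise ValueError("need two equally long samples with at least 2 entries")
    diffs = [a - b for a, b in zip(rates_a, rates_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

Turning the statistic into a p-value requires the CDF of Student's t distribution; with SciPy installed, `scipy.stats.ttest_rel(rates_a, rates_b)` returns the statistic and the two-sided p-value together.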

| Object | Dimension | Actual Size (mm) | Measurement Result (mm) | Error (%) |
|---|---|---|---|---|
| Student card | Length | 85 | 84.223 | 0.914 |
| | Width | 54.5 | 53.972 | 0.969 |
| Packing box | Length | 105 | 103.621 | 1.313 |
| | Width | 105 | 106.466 | 1.396 |
| Bookmarker | Length | 144 | 145.030 | 0.715 |
| | Width | 40 | 39.958 | 0.105 |
| Book | Length | 210 | 206.426 | 1.702 |
| | Width | 235 | 229.328 | 2.414 |
| A4 paper | Length | 210 | 215.541 | 2.639 |
| | Width | 297 | 298.314 | 0.442 |
| Computer monitor | Length | 537.6 | 552.450 | 2.762 |
| | Width | 314.3 | 311.946 | 0.749 |
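
The Error (%) column is consistent with the relative error |measured − actual| / actual × 100; for the student card length, |84.223 − 85| / 85 × 100 ≈ 0.914. A small helper (hypothetical name) reproduces the column from the two size columns:

```python
def relative_error_percent(actual, measured):
    """Relative measurement error in percent: |measured - actual| / actual * 100."""
    if actual <= 0:
        raise ValueError("actual size must be positive")
    return abs(measured - actual) / actual * 100.0


rows = [
    ("Student card, length", 85, 84.223),
    ("Book, width", 235, 229.328),
    ("Computer monitor, length", 537.6, 552.450),
]
for name, actual, measured in rows:
    print(f"{name}: {relative_error_percent(actual, measured):.3f} %")
```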

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yu, M.; Zhang, D.; Lee, D.-J.; Desai, A. SR-SYBA: A Scale and Rotation Invariant Synthetic Basis Feature Descriptor with Low Memory Usage. Electronics 2020, 9, 810. https://doi.org/10.3390/electronics9050810