Goal-Oriented Obstacle Avoidance with Deep Reinforcement Learning in Continuous Action Space

Abstract

1. Introduction

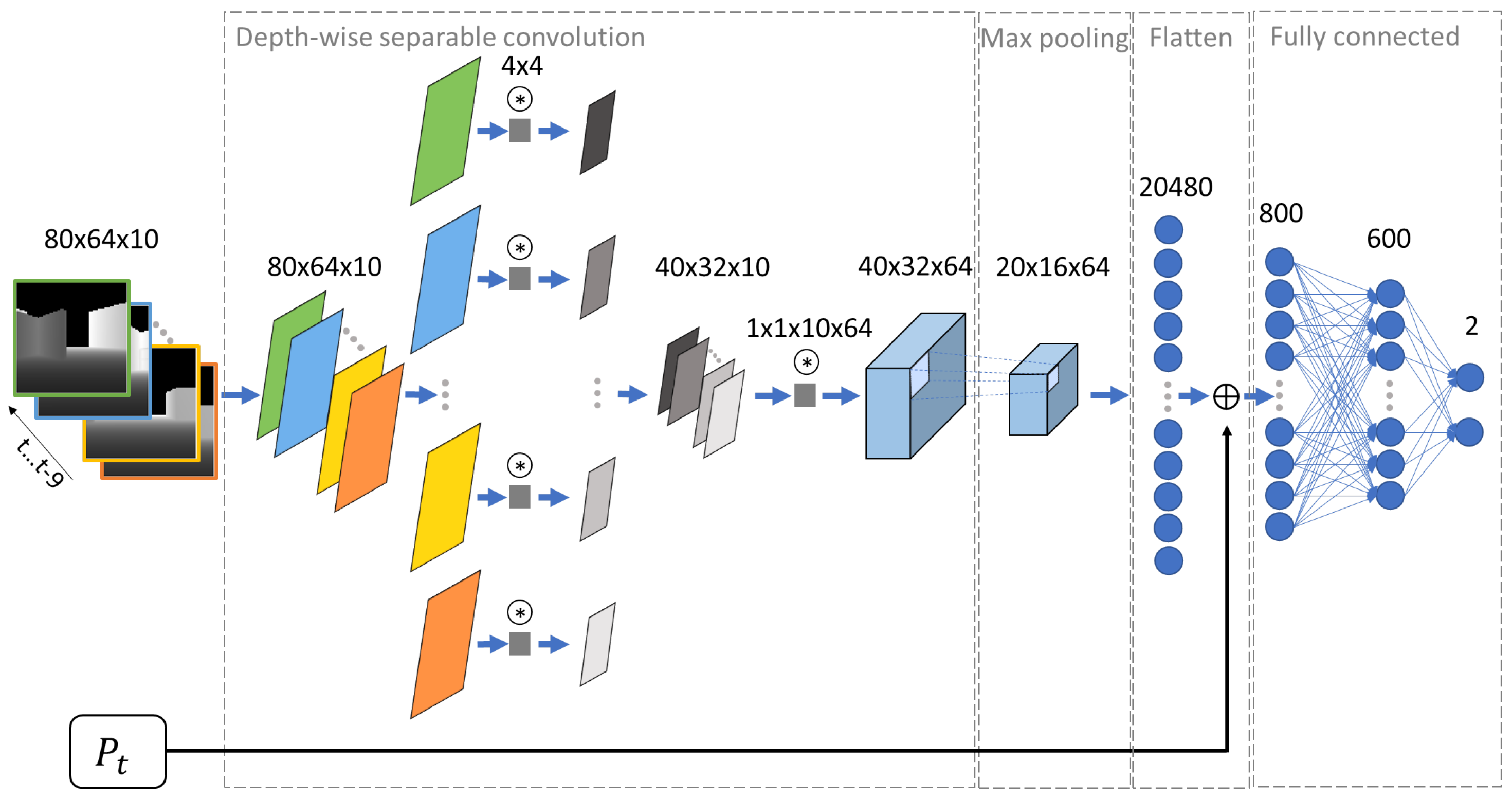

- Creation of a convolutional deep deterministic policy gradient network for tackling a large amount of input data.

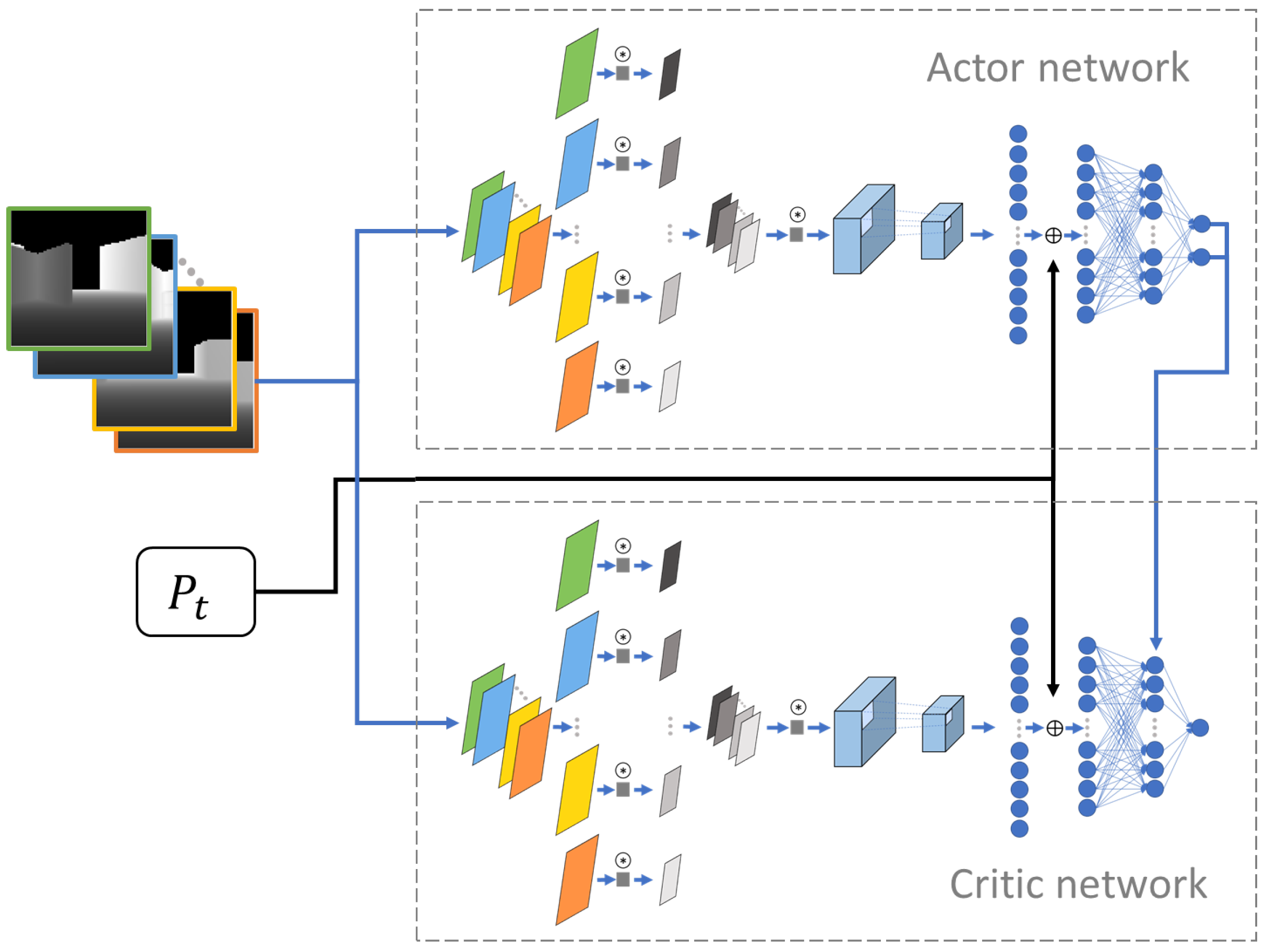

- Development of a deep deterministic policy gradient network with mixed inputs for goal-oriented collision avoidance.

- Transfer of a network, learned in a simulation, to the real environment for map-less vector navigation with depth image inputs.

2. Related Works

3. Deep Learning Network for Collision Avoidance in a Continuous Action Space

3.1. Convolutional Deep Deterministic Policy Gradient

3.2. Reward

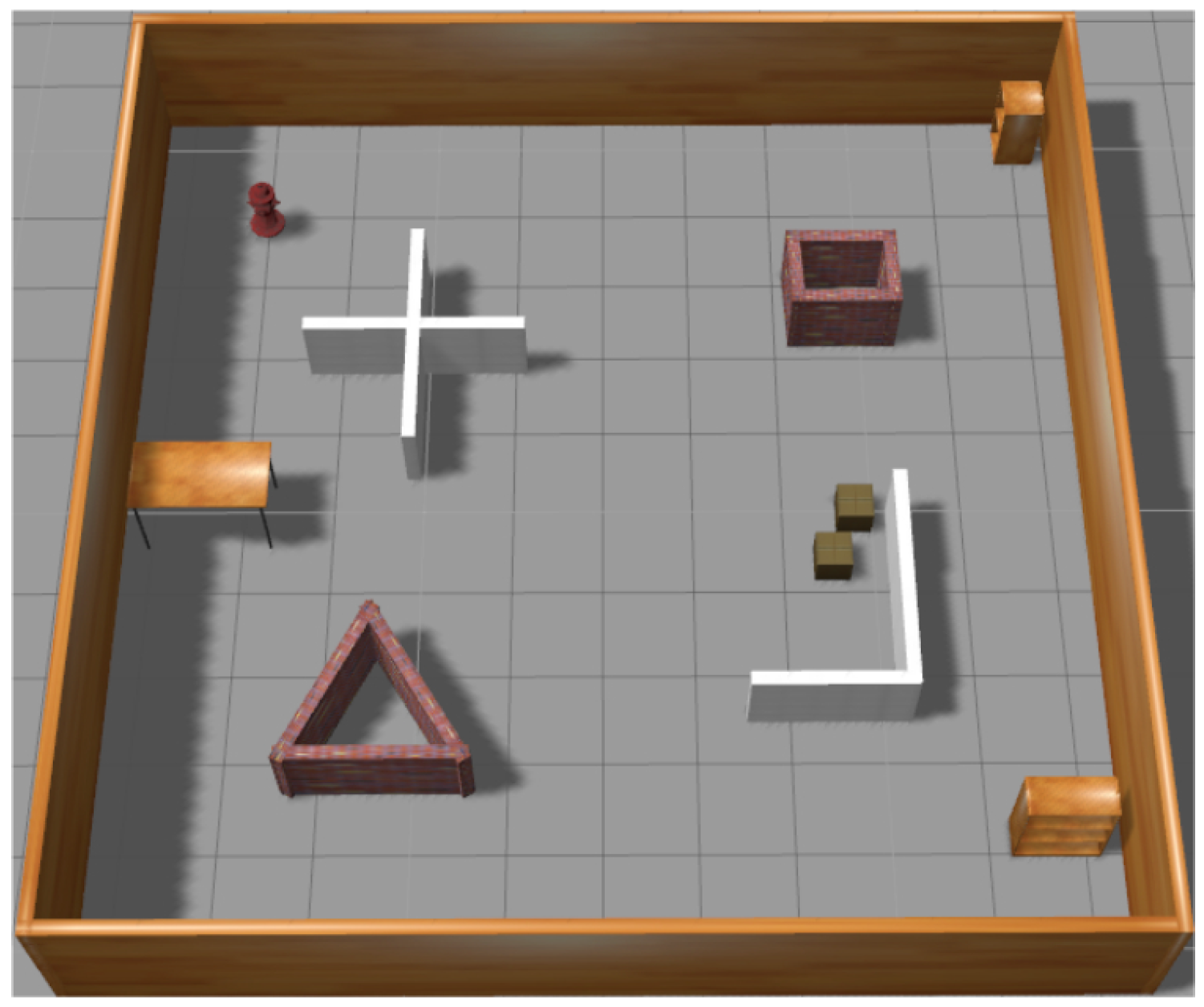

4. Training

5. Experiments

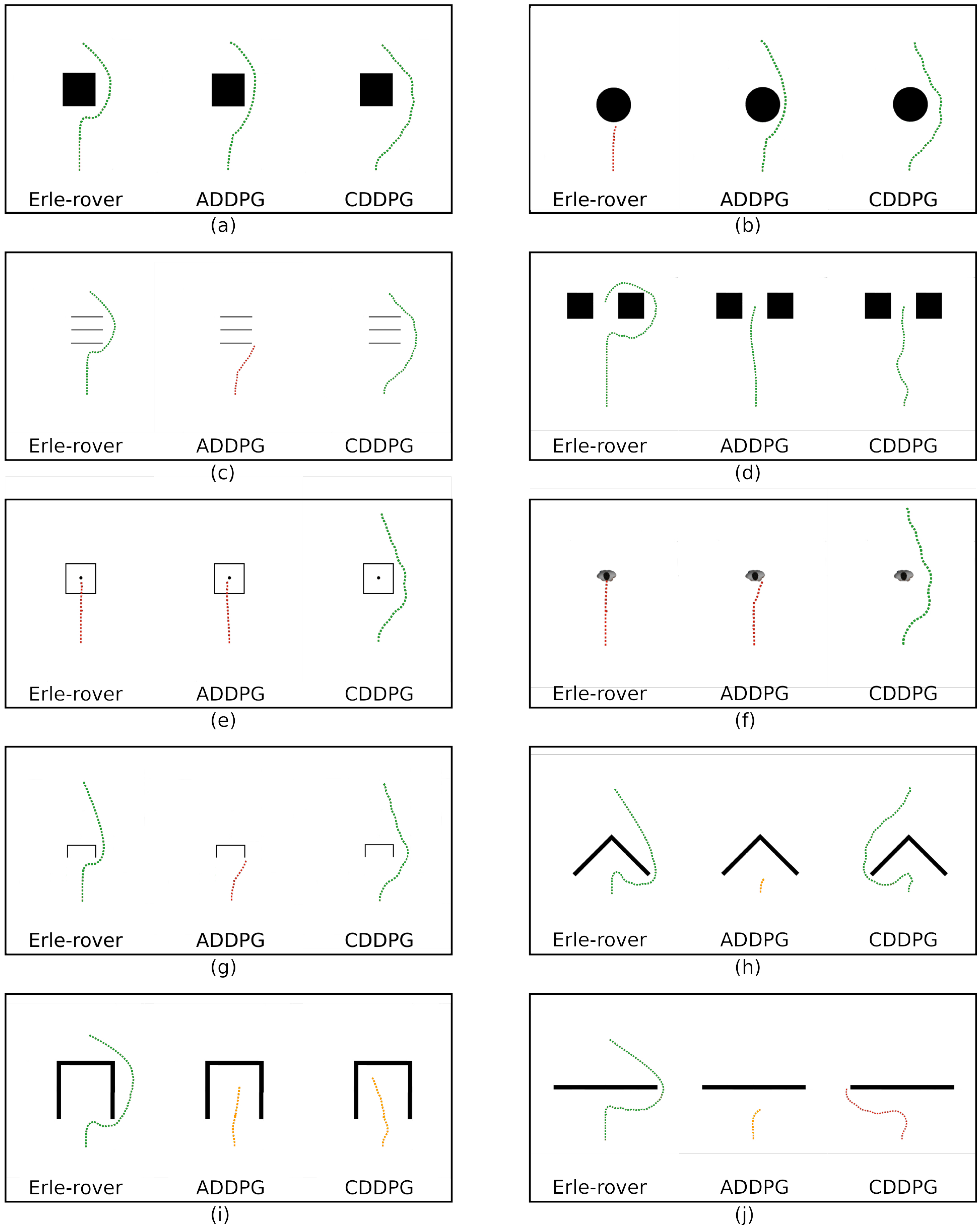

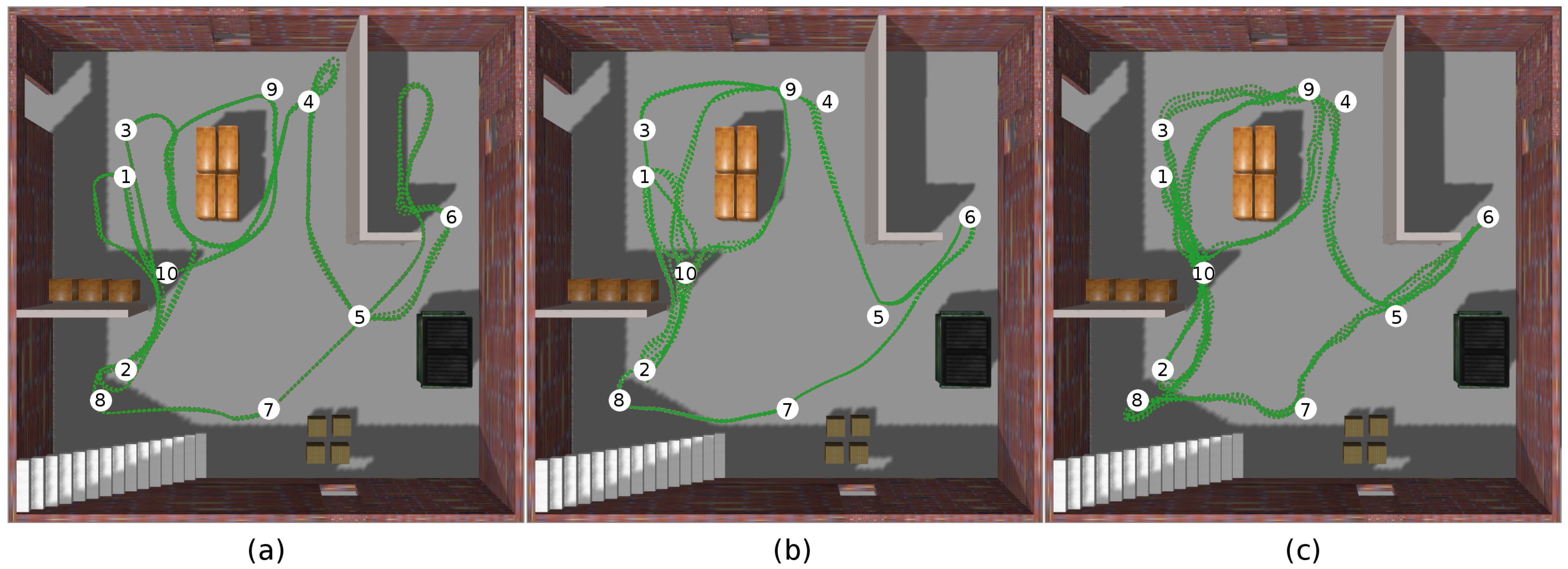

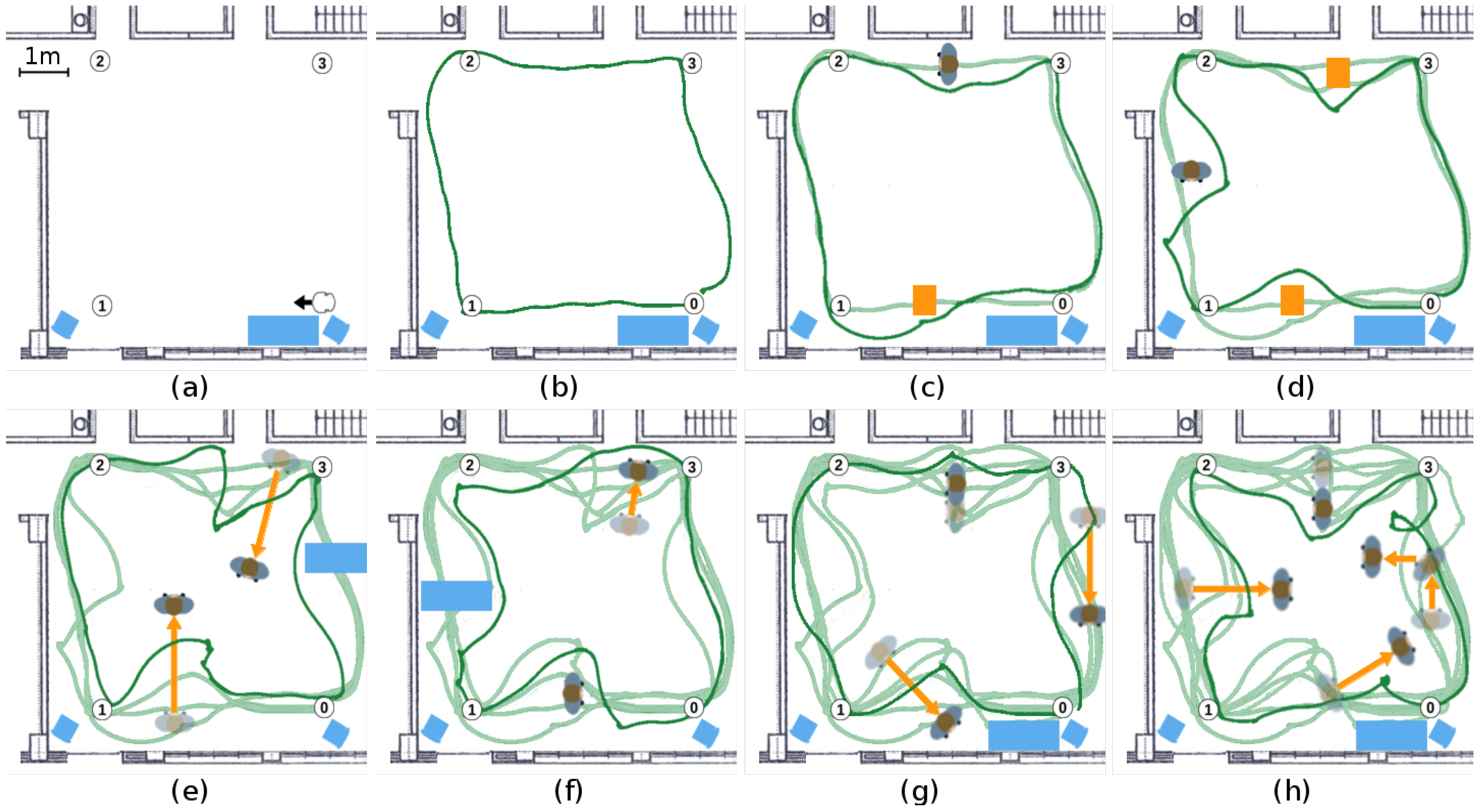

5.1. Experiments in the Simulated Environment

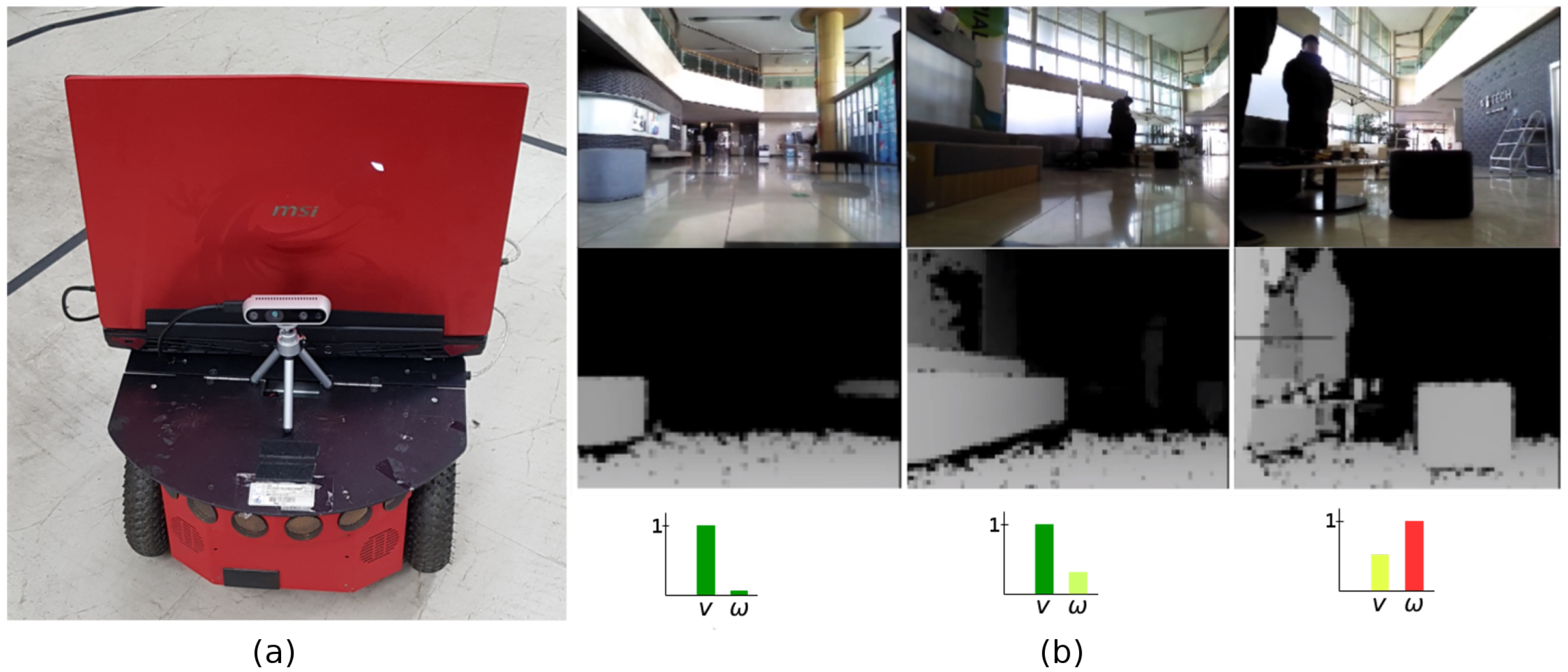

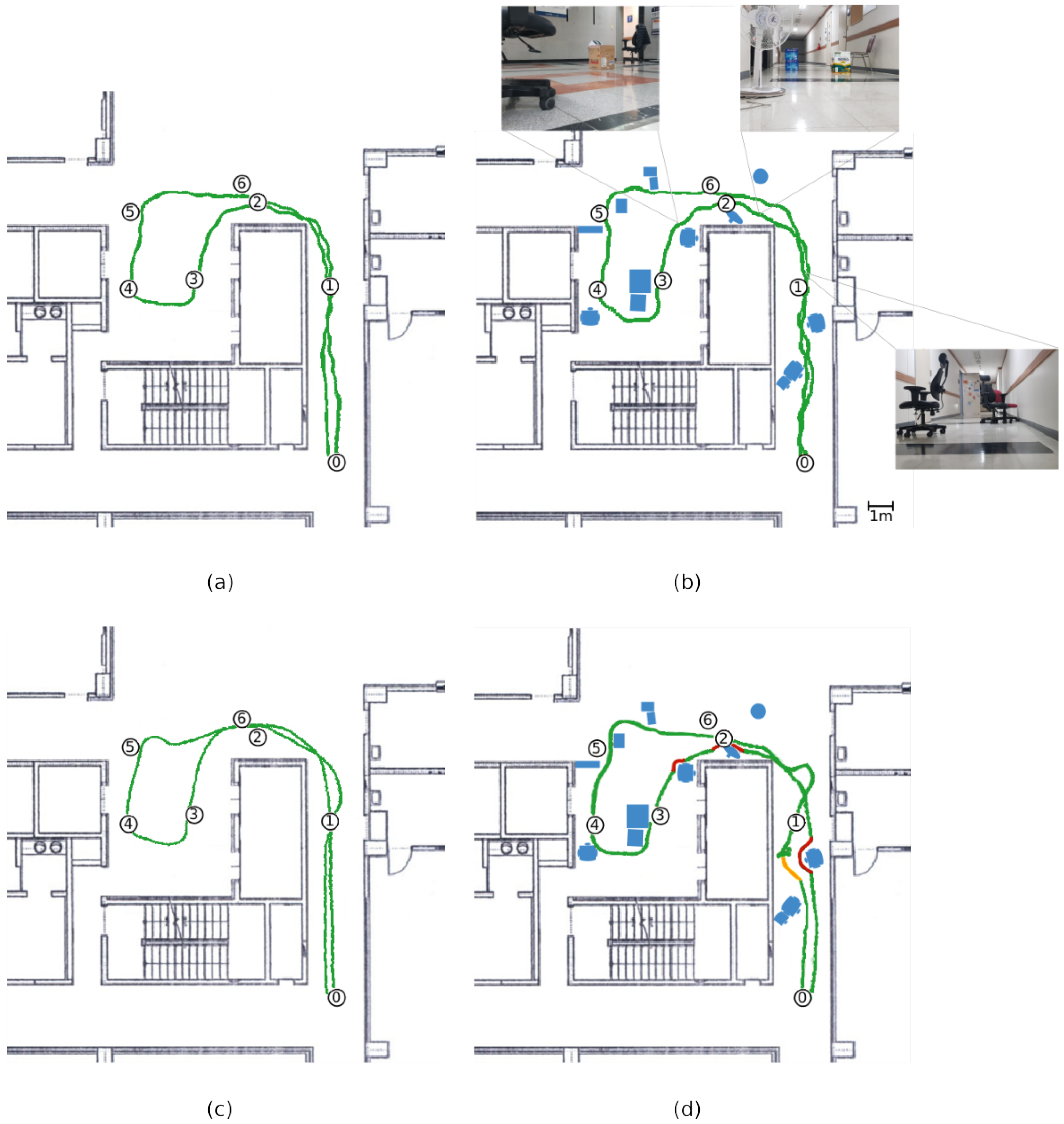

5.2. Experiments in a Real Environment

6. Summary and Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RRT* | Rapidly-exploring Random Tree Star |
| SLAM | Simultaneous Localization and Mapping |
| D3QN | Deep Double Q Network |
| DDPG | Deep Deterministic Policy Gradient |
| ADDPG | Asynchronous Deep Deterministic Policy Gradient |
| CDDPG | Convolutional Deep Deterministic Policy Gradient |
| ReLU | Rectified Linear Unit |
| ROS | Robot Operating System |


| Parameter | Value |
|---|---|
| Actor Network Learning Rate | 0.0001 |
| Critic Network Learning Rate | 0.001 |
| Critic Network Discount Factor | 0.99 |
| Soft Target Update Parameter | 0.001 |
| Buffer Size | 80,000 |
| Mini-Batch Size | 10 |
| Random Seed Value | 1234 |
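To show where two of the tabulated values enter a DDPG-style update, the following minimal sketch applies the soft target update parameter and the critic discount factor in their standard textbook form. It is not the authors' training code: the dictionary keys and the helper names `soft_update` and `td_target` are hypothetical, and plain Python lists stand in for network weights.

```python
# Hyperparameters copied from the table above; the key names are illustrative.
HYPERPARAMS = {
    "actor_lr": 1e-4,       # Actor Network Learning Rate
    "critic_lr": 1e-3,      # Critic Network Learning Rate
    "gamma": 0.99,          # Critic Network Discount Factor
    "tau": 0.001,           # Soft Target Update Parameter
    "buffer_size": 80_000,  # Buffer Size
    "batch_size": 10,       # Mini-Batch Size
    "seed": 1234,           # Random Seed Value
}


def soft_update(target_weights, online_weights, tau):
    """Standard soft target update: theta_target <- tau * theta + (1 - tau) * theta_target.

    Assumption: weights are flat lists of floats (a stand-in for real tensors).
    """
    return [tau * w + (1.0 - tau) * tw
            for tw, w in zip(target_weights, online_weights)]


def td_target(reward, next_q, done, gamma):
    """Standard critic regression target: r + gamma * Q_target(s', a') if not terminal."""
    return reward + (0.0 if done else gamma * next_q)


if __name__ == "__main__":
    new_target = soft_update([0.0, 1.0], [1.0, 1.0], HYPERPARAMS["tau"])
    print(new_target)
    print(td_target(1.0, 2.0, False, HYPERPARAMS["gamma"]))
```

With a soft update parameter of 0.001, each update moves the target weights only 0.1% of the way toward the online weights, which is why target networks in this scheme change slowly.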

| Lap | Distance (m), Erle-rover | Distance (m), ADDPG | Distance (m), CDDPG | Time (s), Erle-rover | Time (s), ADDPG | Time (s), CDDPG |
|---|---|---|---|---|---|---|
| Lap 1 | 62.01 | 46.24 | 49.78 | 168 | 128 | 123 |
| Lap 2 | 63.25 | 46.74 | 49.97 | 173 | 126 | 129 |
| Lap 3 | 63.41 | 46.47 | 49.64 | 171 | 127 | 125 |
| Lap 4 | 63.69 | 46.66 | 49.87 | 172 | 125 | 123 |
| Lap 5 | 63.84 | 46.61 | 50.05 | 172 | 122 | 126 |
| Average | 63.24 | 46.54 | 49.86 | 171.2 | 125.6 | 125.2 |
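The averages in the table can be turned into relative figures with a few lines of arithmetic. The sketch below is only an illustration: treating the Erle-rover column as the baseline is an assumption made here, and the names `AVERAGES`, `percent_reduction`, `mean_speed`, and `summarize` are hypothetical.

```python
# Lap averages copied from the "Average" row of the table above.
AVERAGES = {
    "Erle-rover": {"distance_m": 63.24, "time_s": 171.2},
    "ADDPG": {"distance_m": 46.54, "time_s": 125.6},
    "CDDPG": {"distance_m": 49.86, "time_s": 125.2},
}


def percent_reduction(baseline, value):
    """Relative reduction of `value` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


def mean_speed(distance_m, time_s):
    """Average speed over a lap in metres per second."""
    return distance_m / time_s


def summarize(averages, baseline_name="Erle-rover"):
    """Compare every method against the assumed baseline column."""
    base = averages[baseline_name]
    summary = {}
    for name, row in averages.items():
        summary[name] = {
            "distance_reduction_pct": percent_reduction(base["distance_m"], row["distance_m"]),
            "time_reduction_pct": percent_reduction(base["time_s"], row["time_s"]),
            "mean_speed_m_per_s": mean_speed(row["distance_m"], row["time_s"]),
        }
    return summary


if __name__ == "__main__":
    for name, stats in summarize(AVERAGES).items():
        print(name, {key: round(val, 2) for key, val in stats.items()})
```

Under that baseline assumption, the averaged distance is roughly 26.4% shorter for ADDPG and 21.2% shorter for CDDPG, while the averaged lap time is roughly 26.6% and 26.9% shorter, respectively.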

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cimurs, R.; Lee, J.H.; Suh, I.H. Goal-Oriented Obstacle Avoidance with Deep Reinforcement Learning in Continuous Action Space. Electronics 2020, 9, 411. https://doi.org/10.3390/electronics9030411
