Abstract

Age progression is associated with poor performance of verification systems, so further research is needed to overcome this problem. Three-dimensional (3D) facial aging modeling is well suited to verification systems because variations in depth and pose provide additional information for representing faces accurately. In this article, the impact of aging on the performance of 3D facial verification is studied. For this purpose, we employed 3D faces obtained from a 3D morphable face aging model (3D F-FAM). The proposed 3D F-FAM was able to simulate the future facial appearance of a young adult. Performance was evaluated using three metrics: structural texture quality, mesh geometric distortion and morphometric landmark distances. The evaluation used our own database, FaceTim V.2.0, which comprises 500 textured meshes from 145 subjects. The experimental results demonstrated that the proposed model produced satisfactory results and could be applicable in 3D facial verification systems. Furthermore, the verification rates indicated that the 3D faces obtained from the proposed model enhanced the performance of the 3D verification process.

1. Introduction

The human face, as a complex structure composed of diverse soft and hard tissue layers, is a primary source of information for revealing a person’s age, gender and ethnicity. Human faces have been thoroughly studied from different perspectives across several disciplines, including the neuroscience, psychology, anthropometry, medical and computer science fields.

Human longevity has received considerable attention in the search for a better quality of life. Facial aging has attracted widespread interest in recent years due to numerous applications in biometrics, forensics, security, healthcare and the search for missing children. Aging is an inevitable and complex process that affects both the shape and the texture of the face; however, it is neither uniform nor linear. According to morphological studies, faces do not age homogeneously. The aging process involves many dynamic components, which are associated with variations in all structural layers of the face, including both hard craniofacial tissues and soft tissues, namely the skeleton, muscle, fat, and skin [1]. The facial skeleton does not change homogeneously with age; i.e., not all bones follow the same growth pattern. Most facial muscle alterations arise from the loss of skeletal muscle mass and strength that occurs during aging. In addition, the pattern of fat deposition on the face undergoes specific alterations due to the aging process. Skin is the most superficial and complex structural layer of the face, and its appearance is the primary indicator of age. It changes in function and structure and becomes thinner with age [2]. In brief, changes in the balance of facial layers cause the phenomenon of aging and inform facial aging models.

The main challenge in aging simulation is that changes in facial features due to aging vary for different individuals. Gender plays an important role in the aging process. By understanding the aging process pattern and making predictions about an individual’s facial components according to its specific growth curves, it may be possible to identify solutions which slow down the impact of aging.

In order to trace the aging pattern, an extension to three-dimensional (3D) space is required, as it provides additional information to compensate for variations in depth and pose. Hence, 3D modeling is well suited to capturing aging patterns and is arguably the most appropriate type of modeling for obtaining realistic results. However, extracting reliable facial features in a 3D environment is a challenging task.

In the present study, 3D faces obtained from a fully automatic, robust and morphable face age progression model were enrolled in a 3D facial verification process. The proposed model aimed to mathematically predict and simulate an individual’s facial appearance in the forthcoming years (up to 80 years of age) using a 3D facial textured mesh input at the current age. The results were employed in a 3D facial verification system in order to analyze the impact of aging on the system’s performance.

Early models for facial age progression date back to 1995 [3,4], where visual signs of age were studied by creating facial prototypes from several faces in several age groups. Other research was based on characterizing a pattern of variation for aging parameters [5]. Specifically, the curves of facial aging parameters were obtained by measuring variation in predefined image parameters at two different ages. The facial images were then manipulated using the obtained aging curves [6,7]. A locally estimated probability distributions method was introduced [8,9] to separate high- and low-resolution information by transforming images into a wavelet domain. Later, a statistical appearance model [10] was generated to explain variations due to age and to estimate the relationship between the age of a person and the model’s parameters. A semi-automatic procedure in which principal component analysis was applied to a set of faces was presented in Ref. [11] in order to construct shape and texture models. A linear method was examined to predict the effects of aging; however, its results can be considered reliable only for small aging gaps in adults, whose face shape does not change remarkably.

Other works include craniofacial growth of human faces during formative years [12,13], modeling of wrinkles and skin aging [14], and caricaturing 3D face models [15,16]. A multi-resolution dynamic model was defined by Suo et al. [17] to simulate face aging by using both geometrical and textural information. In this model, they integrated global appearance changes in face shape, hair style, wrinkle appearance, and deformation and aging effects of facial components in various facial zones. A prototype-based approach, presented as illumination-aware age progression, was proposed by Kemelmacher et al. [18]. Their method was able to compensate for illumination variation in the tested prototypes. Knowing that the aging process should contain personalized facial characteristics, a dictionary of aging patterns for several age groups was learned by a model in Ref. [19] to automatically render aging faces in a personalized way based on a set of age-group-specific dictionaries.

Later, a two-layer approach using guidance vectors was proposed for the purpose of facial aging [20]. More recent approaches decompose the facial input into age and common components, such as the Robust Face Age Progression [21] and Hidden Factor Analysis [22] methods. Consequently, an image can be progressed to another age group by using a linear combination of the age and common components. Generative adversarial network (GAN) based methods for automatic face aging, which build on encoders and generators [23,24], introduced identity-preserving optimization of the GAN’s latent vectors and conditional adversarial autoencoders (CAAE) that are able to learn face manifolds. However, these GAN-based methods independently model the distribution of a single age group without capturing the trajectory patterns of the natural face aging process.

The aforementioned methods come with certain limitations since they are mostly based on two-dimensional representations [25]. In contrast, 3D facial representations capture both shape and texture information and are able to achieve pose invariance. In Ref. [26], a three-dimensional model was used to build an age progression system for the faces of children from a small exemplar-image set. In that study, the basic assumption was that if two children look similar, they will continue to look similar when they grow older. In Ref. [27], 3D aging was modeled as a weighted average of all the texture and shape aging patterns in the training set. Similarly, texture and shape were modeled separately in Ref. [28].

Notably, not all of the aforementioned methods were able to produce photorealistic results. Moreover, a large number of them were studied in two-dimensional space. Table 1 lists notable contributions to the facial aging modeling topic in chronological order.

Table 1.

A list of notable contributions to the facial aging modeling topic.

In this study, we propose a method for facial aging deformation in three-dimensional space that accounts for depth information and pose variation and is efficient in terms of time complexity. The most significant advantage of our model is its robustness in simulating realistic faces while preserving facial identities by visualizing the biological aging trajectory through the morphable 3D model. Here, we present our method for predicting facial aging, wherein the output (predicted faces) was processed by a verification system to enhance its performance. This was performed with consideration of anthropometrical measurements and facial aging growth patterns using 3D facial modeling [29,30].

The remainder of this paper is structured as follows. In Section 2, the method is defined and the sub-sections describe each step of the approach. Section 3 describes the different performance evaluation protocols used to assess the model perceptually, as well as morphometrically. Section 4 defines the 3D facial verification process in conventional and modified scenarios. The experimental results are discussed thoroughly in Section 5 by presenting the renderings of 3D faces, analysis of performance evaluation metrics, and comparison results from the facial verification process. The discussion is presented in Section 6.

2. Materials and Methods

We developed a methodology to model the aging of a human face, enabling simulation of the trajectory of the face aging process. This methodology consisted of (i) collecting a database for the purpose of face template construction, (ii) consideration of the variation in the faces of both females and males, where the aging process was perceptible, by measuring variations in shape and texture for a period of time in a group of people, and (iii) the construction of the 3D facial aging model through measurements based on personal features.

In the proposed approach, each 3D face was defined by a polygon mesh, represented by a mesh-vector, and a UV texture map, represented by a texture-vector, with the two mapped point by point.

Each step involved in the construction of the model will be explained comprehensively in the following sections.

2.1. Face Template Construction

Age-related effects and the aging (growth) trajectory are different from one person to another since the aging process is under the influence of different factors like genetics, health and lifestyle. However, there are common age-related aspects for faces in a specific age range which cannot be overlooked.

For the proposed approach, we started from the premise that there are a finite number of typical faces in each age group, and that certain characteristics change in almost all faces of a given type in each age category. As a result, face templates could be constructed. The template associated with each age group was calculated by averaging male and female faces separately. Consequently, the 3D template faces were constructed using a database of $m$ faces, each represented by its mesh-vector ($S_i$) and texture-vector ($T_i$), where $i = 1, \dots, m$. Accordingly, a face template ($\bar{F}$) could be interpreted as shown in Equation (1):

$$\bar{F} = \left(\bar{S}, \bar{T}\right) = \left(\frac{1}{m}\sum_{i=1}^{m} S_i,\; \frac{1}{m}\sum_{i=1}^{m} T_i\right) \qquad (1)$$
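The averaging step can be illustrated with a minimal sketch. It assumes that every face is stored as a flat mesh-vector and a flat texture-vector of equal length across faces; the dictionary keys and the toy values are hypothetical and are not taken from the database described in this paper.

```python
from collections import defaultdict


def mean_vector(vectors):
    """Element-wise mean of equally long numeric vectors."""
    vectors = list(vectors)
    length = len(vectors[0])
    if any(len(v) != length for v in vectors):
        raise ValueError("all vectors must share the same length")
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def build_templates(faces):
    """Average mesh- and texture-vectors separately per (age group, gender)."""
    groups = defaultdict(list)
    for face in faces:
        groups[(face["age_group"], face["gender"])].append(face)
    return {
        key: {
            "mesh": mean_vector(f["mesh"] for f in members),
            "texture": mean_vector(f["texture"] for f in members),
        }
        for key, members in groups.items()
    }


# Toy data (hypothetical): two female faces in one age group.
faces = [
    {"age_group": "26-35", "gender": "F",
     "mesh": [0.0, 2.0, 4.0], "texture": [10.0, 20.0, 30.0]},
    {"age_group": "26-35", "gender": "F",
     "mesh": [2.0, 4.0, 6.0], "texture": [30.0, 40.0, 50.0]},
]
templates = build_templates(faces)
```

Grouping by both age group and gender mirrors the separate averaging of male and female faces described above.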

The first phase of the proposed method involved selection of the appropriate template 3D face model from the database representing the common facial characteristics in each age group. The aging trajectory curve of the selected age was then applied to simulate the appearance of the person at a specific older age. Age-related facial alterations could be classified as geometrical or textural. Contrary to the growth process during childhood, the geometric trajectory of adulthood aging encountered insignificant changes, with most of the transformations classified as textural.

To apply geometrical face changes, which are subtle after the age of 18, craniofacial measurements in different age groups were required. Geometrically changing the facial age requires transforming the size of, and distance between, facial components, as well as the shape and size of the face. We started this process by studying craniofacial morphology and collecting information about craniofacial development. One part of this collection of information involved understanding how organs develop and change during the aging process. The result turned out to be useful for a better understanding of facial maturation, so the focus was shifted to changes in the magnitude of facial landmarks. After all the craniofacial measurements had been collected, they were filtered. First, measurements deemed useful for facial modeling were kept, while information such as head length and width was eliminated. Second, those which could represent a component or its distribution were determined and retained. For example, from the inner canthal distance, outer canthal distance and interpupillary distance of the two eyes, only the first was retained, as it represents the distribution of the eyes in the face, while the other two are redundant. To apply facial age transformation as a non-linear function, the transformation curves of these measurements were employed.

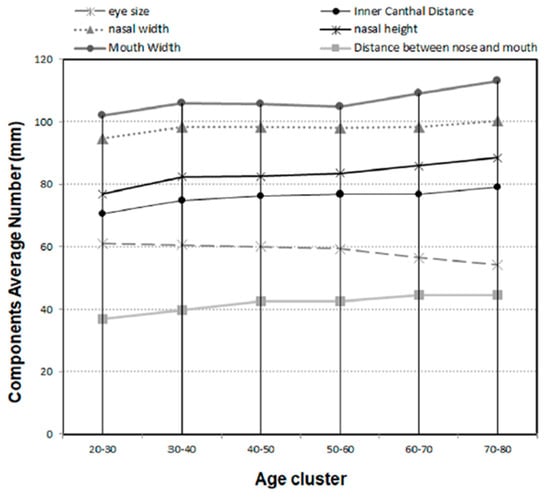

All the aforementioned measures were obtained by calculating the average size of, and distance between, facial components from all the face images in the different age groups of the constructed database. The calculated component size and distance measures along the aging trajectory for both genders, extracted as an average over all the database faces, are illustrated in Figure 1. This figure shows how the components’ size and distribution change from age 20 to 80 over the different age groups.

Figure 1.

Facial components’ size and distance distribution.
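As an illustration of how such a transformation curve could be used, the following minimal sketch interpolates one measurement between age-group means and derives a scale factor between two ages. Piecewise-linear interpolation is an assumption made here for simplicity, and the ages and millimetre values are placeholders, not measurements from this study.

```python
from bisect import bisect_right


def interpolate_curve(ages, values, age):
    """Piecewise-linear interpolation of a measurement curve, clamped at both ends."""
    if age <= ages[0]:
        return values[0]
    if age >= ages[-1]:
        return values[-1]
    j = bisect_right(ages, age)          # index of the first knot above `age`
    a0, a1 = ages[j - 1], ages[j]
    v0, v1 = values[j - 1], values[j]
    return v0 + (v1 - v0) * (age - a0) / (a1 - a0)


def scale_factor(ages, values, input_age, target_age):
    """Ratio by which a component would be scaled between two ages."""
    return (interpolate_curve(ages, values, target_age)
            / interpolate_curve(ages, values, input_age))


# Placeholder curve (hypothetical values in mm, one knot per age-group midpoint).
group_ages = [30, 40, 50, 60, 70]
inner_canthal_mm = [30.0, 30.5, 31.0, 31.5, 32.0]

value_at_35 = interpolate_curve(group_ages, inner_canthal_mm, 35)
factor_30_to_70 = scale_factor(group_ages, inner_canthal_mm, 30, 70)
```

A smoother interpolant could be substituted without changing the interface; the clamping at both ends simply avoids extrapolating beyond the measured age range.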

For the per-vertex morphable model, texture information was used to assess textural alterations in each age group, which was an appropriate method of representing the surface color of the skin. The advantage is that the resolution was independent of the geometry. For this reason, we defined a single texture UV map for the facial regions of the head. A texture average was considered to calculate a single mean texture template for each age group as a UV texture map.

While human face aging during childhood is mainly reflected in craniofacial changes resulting in shape changes, it is mostly represented by relatively large texture changes with skin variations—including wrinkle appearance and minor shape changes—after entering adulthood. Based on this theory, approximation of coefficients is not the same for geometric meshes and perception aspects due to the fact that most of the changes in the polygon mesh of the face occur during the formative years until the beginning of the 20s [12], while textural changes take place when wrinkles appear and facial muscles begin to lose their elasticity.

2.2. 3D Face Forward Aging Model (3D F-FAM)

The 3D face aging morphable model can be defined as in Equation (2):

$$F_{t_2} = w_1\,\bar{F}_{t_2} + w_2\, f\!\left(F_{t_1}, \Delta\right) \qquad (2)$$

where $F_{t_2}$ is the target face of age $t_2$, and $\bar{F}_{t_2}$ is the face template at the same age, consisting of a mesh-vector and a texture-vector. The non-linear transformation function $f$ is applied to the face $F_{t_1}$ at age $t_1$ to adapt the geometry of the face to the target age $t_2$ while preserving the identity of the input face. To apply facial age transformation, craniofacial measurements at different ages are needed. Such measurements have previously been completed by Hall et al. [34], who also calculated means for all of the sizes and distances of facial components in each age group, creating a graph similar to that presented in Figure 1. The function $f$ in Equation (2) combines translation, scaling and rotation of the original face according to the extracted measurements, and contains all of the geometrical modifications. Here, $t_1$ is the input age, $t_2$ is the target age and $\Delta = t_2 - t_1$ is the difference in age between the current input age and the target output age. The coefficients $w_1$ and $w_2$, associated with the face template and the geometrical transformation function, respectively, are specified such that the similarity in personal characteristics between the current age and the target age is retained as much as possible. The weight given to each input is crucial, as it decides which face contributes more to the result: the geometrically changed input or the face template. In this work, a value of $0.6 < w_2 < 0.7$ for the weight of the geometrically changed input gave an acceptable result.
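The weighting in Equation (2) can be sketched as an element-wise blend of two vectors. This sketch assumes that the two weights sum to one (which the text does not state explicitly) and that the template and the geometrically transformed face are flat vectors in point-to-point correspondence; the function name and the toy values are hypothetical.

```python
def morph_face(template, transformed, w2=0.65):
    """Blend an age-specific template with the geometrically transformed input.

    w2 weights the transformed input; w1 = 1 - w2 is an assumption of this sketch.
    """
    if len(template) != len(transformed):
        raise ValueError("vectors must be in point-to-point correspondence")
    if not 0.0 <= w2 <= 1.0:
        raise ValueError("w2 must lie in [0, 1]")
    w1 = 1.0 - w2
    return [w1 * t + w2 * g for t, g in zip(template, transformed)]


# Toy vectors (hypothetical): with w2 = 0.7 the result stays closer to the input.
aged = morph_face([0.0, 10.0], [10.0, 0.0], w2=0.7)
```

With a weight in the reported range of 0.6 to 0.7, the output remains closer to the transformed input than to the template, which is consistent with the aim of retaining personal characteristics.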

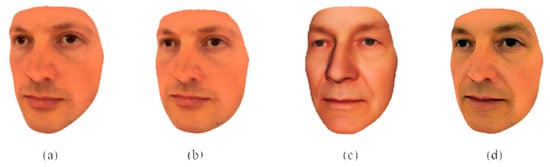

Thus, aged faces for different genders were generated using the original face shape as an input, while personal characteristics throughout all ages were retained to obtain realistic 3D facial appearance results. Figure 2 illustrates a 3D face at the current age, which is the input to the model, and the textured mesh after applying the transformation function, along with the textured mesh of an age-specific face template. As can be seen, personal features of the input face, such as the structural texture and color information, are maintained, while the output face is generated as a morph model between the template face and the geometrically transformed face.

Figure 2.

(a) Original input three-dimensional (3D) face: $F_{t_1}$; (b) 3D face inferred from the transformation function: $f(F_{t_1}, \Delta)$; (c) 3D face template at the target age: $\bar{F}_{t_2}$; and (d) the aged 3D face: $F_{t_2}$.

3. Performance Evaluation Protocol

To evaluate the performance of our proposed model, a generic perception-based mode (GPM) was used to evaluate the visual quality of the 3D textured face meshes as a linear combination of their structural texture quality, geometric distortion quality and morphometric landmarks. These comparisons were carried out using the structural similarity texture metric (SSIM) [35], a 3D mesh distortion metric (MSDM) [36] and a face landmarks metric (FLM) [37], respectively. All metric scores were normalized to a value between 0 and 1, such that 0 represented two identical faces and 1 indicated totally different faces. Our combined metric is thus defined as follows in Equation (3):

$$\mathrm{GPM} = \lambda_1\,\mathrm{SSIM} + \lambda_2\,\mathrm{MSDM} + \lambda_3\,\mathrm{FLM} \qquad (3)$$

where $\lambda_1$, $\lambda_2$ and $\lambda_3$ are the weights of the three metrics.
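A linear combination of three normalized scores can be sketched as follows. The equal weights are an assumption of this sketch, since the weights are not given in the text, and the function name is hypothetical.

```python
def gpm_score(ssim_score, msdm_score, flm_score, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Linear combination of three scores, each normalized so that 0 means identical.

    Equal weights are an assumption of this sketch, not values from the paper.
    """
    scores = (ssim_score, msdm_score, flm_score)
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("scores must be normalized to [0, 1]")
    if len(weights) != 3:
        raise ValueError("exactly three weights are required")
    return sum(w * s for w, s in zip(weights, scores))


identical = gpm_score(0.0, 0.0, 0.0)
dissimilar = gpm_score(1.0, 1.0, 1.0)
```

With weights that sum to one, the combined score stays in the same 0 to 1 range as its inputs, so it can be read on the same scale.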

SSIM was used to compare structural texture similarity between the reference faces (considered the ground truth faces) and the simulated faces (obtained from the proposed model) by measuring perceptual image degradation changes in terms of luminance, contrast and structural information.

In order to compare local pixel intensities between two images $x$ and $y$, $\mathrm{SSIM}(x, y)$ was defined as in Equation (4).

$$\mathrm{SSIM}(x, y) = l(x, y)\cdot c(x, y)\cdot s(x, y) \qquad (4)$$

where $l(x, y)$ is a luminance comparison function, $c(x, y)$ is a contrast comparison function and $s(x, y)$ is a structure comparison function. SSIM was computed over local windows of size 11 × 11, which moved pixel by pixel over the entire image.
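The window-based computation can be sketched in plain Python. This is a simplified illustration, assuming unweighted (non-Gaussian) windows, 8-bit intensities and the commonly used stabilizing constants K1 = 0.01 and K2 = 0.03; it returns the raw similarity (1 for identical images), so a further normalization would be needed to obtain a score where 0 means identical.

```python
import math


def ssim_window(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """SSIM of two equally sized windows given as flat lists of intensities."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    c3 = c2 / 2.0
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast = (2 * sd_x * sd_y + c2) / (var_x + var_y + c2)
    structure = (cov + c3) / (sd_x * sd_y + c3)
    return luminance * contrast * structure


def mean_ssim(img_x, img_y, window=11):
    """Slide a square window pixel by pixel and average the local SSIM values."""
    rows, cols = len(img_x), len(img_x[0])
    if rows < window or cols < window:
        raise ValueError("image is smaller than the window")
    scores = []
    for r in range(rows - window + 1):
        for c in range(cols - window + 1):
            wx = [img_x[i][j] for i in range(r, r + window) for j in range(c, c + window)]
            wy = [img_y[i][j] for i in range(r, r + window) for j in range(c, c + window)]
            scores.append(ssim_window(wx, wy))
    return sum(scores) / len(scores)


# Toy 5 x 5 gradient image and its inverse, compared with a 3 x 3 window.
image = [[i * 7 + j * 13 for j in range(5)] for i in range(5)]
inverse = [[255 - v for v in row] for row in image]
same = mean_ssim(image, image, window=3)
different = mean_ssim(image, inverse, window=3)
```

For real images, an optimized library implementation would normally be preferred over these nested loops; the sketch only makes the three comparison terms explicit.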

MSDM was used to measure geometric distortion by comparing the reference mesh and the simulated face mesh through differences in curvature statistics over local windows of the two meshes, as given in Equation (5).

$$\mathrm{MSDM}(x, y) = \left(\alpha\,L(x, y)^{a} + \beta\,C(x, y)^{a} + \gamma\,S(x, y)^{a}\right)^{1/a} \qquad (5)$$

where MSDM is the local distance between two local mesh windows $x$ and $y$, which can be defined by the luminance (curvature), contrast and structure comparison functions ($L$, $C$ and $S$, respectively), combined with weights $\alpha$, $\beta$ and $\gamma$ and exponent $a$. In this work, a local window was defined as a connected set of vertices belonging to a mesh within a given radius; this radius was a parameter of the method, and was used in the performance evaluation.
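A local curvature-based distance of this kind can be sketched as below. The sketch assumes that each window is given as a list of non-negative curvature magnitudes at corresponding vertices, that the three comparison terms are relative differences of means, standard deviations and covariance, and that the weights and exponent are placeholder defaults; none of these choices is confirmed by the text.

```python
import math


def local_msdm(curv_x, curv_y, alpha=0.4, beta=0.4, gamma=0.2, a=3.0):
    """Local distance between two windows of curvature magnitudes (assumed form)."""
    n = len(curv_x)
    eps = 1e-12                                   # guards divisions by zero
    mu_x, mu_y = sum(curv_x) / n, sum(curv_y) / n
    sd_x = math.sqrt(sum((v - mu_x) ** 2 for v in curv_x) / n)
    sd_y = math.sqrt(sum((v - mu_y) ** 2 for v in curv_y) / n)
    cov = sum((p - mu_x) * (q - mu_y) for p, q in zip(curv_x, curv_y)) / n
    curvature = abs(mu_x - mu_y) / max(mu_x, mu_y, eps)
    contrast = abs(sd_x - sd_y) / max(sd_x, sd_y, eps)
    structure = abs(sd_x * sd_y - cov) / max(sd_x * sd_y, eps)
    combined = alpha * curvature ** a + beta * contrast ** a + gamma * structure ** a
    return combined ** (1.0 / a)


def global_msdm(window_pairs, a=3.0):
    """Minkowski-style pooling of local distances over all window pairs."""
    local = [local_msdm(x, y, a=a) for x, y in window_pairs]
    return (sum(d ** a for d in local) / len(local)) ** (1.0 / a)


window = [0.1, 0.2, 0.3, 0.4]
shuffled = [0.4, 0.1, 0.3, 0.2]
zero_distance = global_msdm([(window, window)])
nonzero_distance = local_msdm(window, shuffled)
```

The second example shows that two windows with identical mean and spread can still differ through the structure term, which depends on how curvature is distributed over corresponding vertices.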

Lastly, FLM was used to quantify the disparity between the extracted landmarks of corresponding mesh pairs, namely the reference and simulated faces at the same age. To do so, nine landmarks in the eyes, nose, lip contour, chin and procerus center of the face meshes were located by finding the maximum and minimum local curvatures. Such landmarks are well suited to 3D facial comparison. The metric, FLM, was thus defined as in Equation (6), and measured the Euclidean distance between the landmark point $p_r$ on the reference face and the landmark point $p_s$ on the simulated face.

$$\mathrm{FLM}(p_r, p_s) = \lVert p_r - p_s \rVert_2 \qquad (6)$$
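The landmark comparison can be sketched as follows, assuming that landmarks are given as 3D coordinate tuples in corresponding order. Aggregating the per-landmark distances by their mean is an assumption of this sketch, and the coordinates are toy values.

```python
import math


def landmark_distances(reference, simulated):
    """Euclidean distance for each corresponding pair of 3D landmarks."""
    if len(reference) != len(simulated):
        raise ValueError("landmark lists must have the same length")
    return [math.dist(p, q) for p, q in zip(reference, simulated)]


def mean_landmark_distance(reference, simulated):
    """Mean of the per-landmark distances (aggregation is an assumption)."""
    distances = landmark_distances(reference, simulated)
    return sum(distances) / len(distances)


# Toy landmarks (hypothetical coordinates): one displaced by 5 units, one unchanged.
reference_points = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
simulated_points = [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]
per_landmark = landmark_distances(reference_points, simulated_points)
average = mean_landmark_distance(reference_points, simulated_points)
```

Because raw distances depend on the mesh scale, a normalization step would still be required before combining this value with the other two metrics.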

4. 3D Face Verification Process

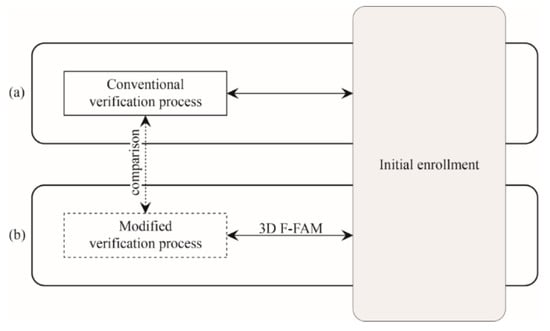

In order to analyze the impact of aging on 3D facial verification, the performance of the conventional verification process and that of the modified verification process were compared. This evaluation investigated the process of verification by comparing the claimed identities with the enrolled faces. As can be seen in Figure 3a, a verification between young adults (i.e., “inputs”) who were enrolled in the initial enrollment and their older faces (i.e., “references”) was first performed in the context of the conventional verification process. Afterward (see Figure 3b), the faces which were enrolled initially (i.e., the “input” faces) were aged by the proposed 3D F-FAM model, providing the set of “simulated” faces. A modified verification process of the claimed identity was then executed, which considered the “simulated” older faces obtained from our model instead of the real old faces. The intention was to assess the performance of the verification process in these two scenarios.

Figure 3.

Diagram of the verification process for (a) conventional verification and (b) modified verification.

For performance evaluation, we used the false acceptance rate (FAR), calculated as the fraction of impostor scores exceeding the threshold, and the false rejection rate (FRR), calculated as the fraction of genuine scores falling below the threshold. The FAR and FRR together determine the equal error rate (EER), the operating point at which the two rates are equal, which summarizes the performance of the system. The lower the EER is, the better the system performs.
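These definitions can be made concrete with a minimal sketch. It assumes similarity scores (higher means a better match) and approximates the EER by scanning candidate thresholds at the observed scores and at the midpoints between them; the score lists are toy values.

```python
def far_frr(genuine, impostor, threshold):
    """FAR: share of impostor scores above the threshold.
    FRR: share of genuine scores below the threshold."""
    far = sum(s > threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate the EER as the mean of FAR and FRR where they are closest."""
    scores = sorted(set(genuine) | set(impostor))
    midpoints = [(a + b) / 2 for a, b in zip(scores, scores[1:])]
    best = None
    for threshold in sorted(scores + midpoints):
        far, frr = far_frr(genuine, impostor, threshold)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, threshold)
    return best[1], best[2]


# Toy similarity scores (hypothetical).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
rates_at_half = far_frr(genuine_scores, impostor_scores, 0.5)
eer, eer_threshold = equal_error_rate(genuine_scores, impostor_scores)
```

In this toy example one impostor score lies above one genuine score, so no threshold separates the two sets perfectly and the EER is 25%.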

5. Results

5.1. Database Collection

We collected two databases for this work. The first one was built from 900 facial images [38] and was used to create the face template explained in Section 2.1. All images were constrained to the frontal view with minor facial expression to minimize facial pose artefacts. They were split into five different age groups for both genders, each spanning 10 years. Each defined age group contained 180 faces of Caucasian ethnicity, among which the number of female and male faces were kept equal. It is worth noting that there are distinct differences between faces and the shape of their components across ethnicities; however, this template can be used as a prototype in different age studies.

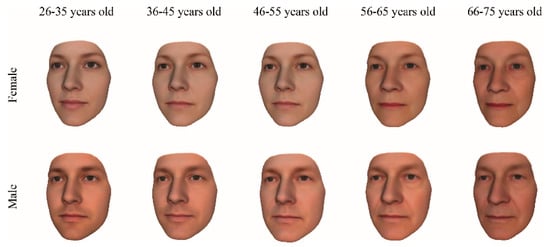

Some of the faces collected were directly captured in 3D and some were reconstructed from frontal and profile views. All images were normalized geometrically and texturally, classified into five specific age groups (26–35, 36–45, 46–55, 56–65, and 66–75 years old), and separated by gender. Figure 4 illustrates the face templates of each age group for both genders. The age groups were defined in such a way that the aging differences between two consecutive groups were distinctive. Table 2 summarizes the demographics of each group.

Figure 4.

Rendering results of 3D face templates for five age groups from adulthood to a geriatric age.

Table 2.

The demography of the presented face database.

The second database was constructed to evaluate the proposed model. The Face Time-Machine database (FaceTim V.2.0) consisted of 500 3D textured meshes from 145 subjects (75 females and 75 males) at different ages in the frontal view with minimal facial expression. For each subject, we collected at least three faces at three different ages. This database was generated mostly by capturing 3D images and partly by using facial images of celebrities from the web, as well as images of volunteers who agreed to contribute. Because 3D facial image acquisition of an adult individual as a child was impossible, we had to collect 2D photos from their past and then reconstruct them in 3D. Collecting suitable data for testing the model was very challenging, considering the intricacy of acquisition under specific conditions such as illumination, expression, pose, and resolution.

5.2. Face Renderings Using the Proposed 3D Model

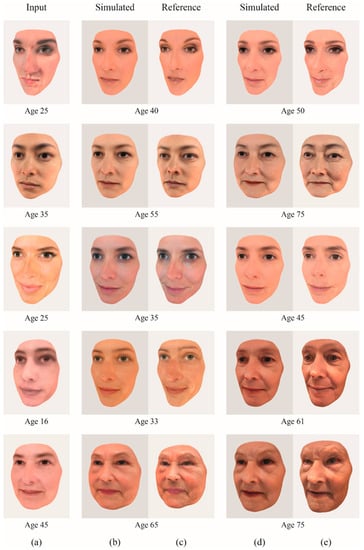

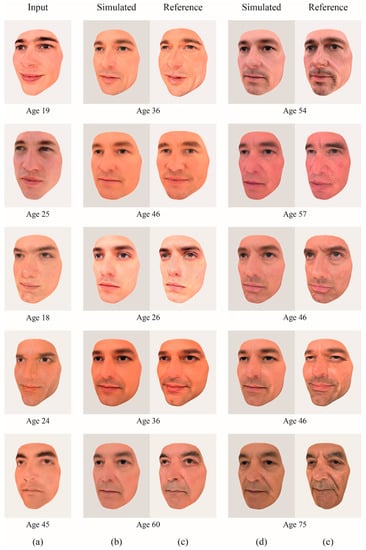

Figure 5 and Figure 6 illustrate the renderings of 5 females and 5 males after applying the 3D facial aging morphable model. In the figures, column (a) is the input and represents the textured mesh face of a young adult, while columns (b) and (d) are the simulated faces representing the output at an older target age. The reference textured meshes, presented in columns (c) and (e), are considered the ground truth faces, and were used for comparison and performance evaluation. Visually, the simulated textured meshes appear similar to the reference textured mesh faces, and the renderings are quite realistic. As demonstrated in columns (b) and (d), the face renderings are visually closer to the input when the age difference is small. In some cases where there is facial expression (e.g., smiling), as in female 5 at age 65, the simulated face looks slightly distorted. For the male renderings, shown in Figure 6, we obtained realistic aged faces while maintaining the identity of the input face. However, in some other cases (e.g., men with beards), we encountered less authentic renderings, for instance male 1 at age 54.

Figure 5.

Female rendering results of applying the proposed 3D morphable face aging model (3D F-FAM). (a) Input textured mesh at actual age; (b,d) output at the older target age; and (c,e) reference textured mesh at the same age as the output, used in the evaluation mode.

Figure 6.

Male rendering results of applying the proposed 3D morphable face aging model (3D F-FAM). (a) Input textured mesh at actual (adult) age; (b,d) output at the older target age; and (c,e) reference textured mesh at the same age as the output, used in the evaluation mode.

5.3. Performance Evaluation Results

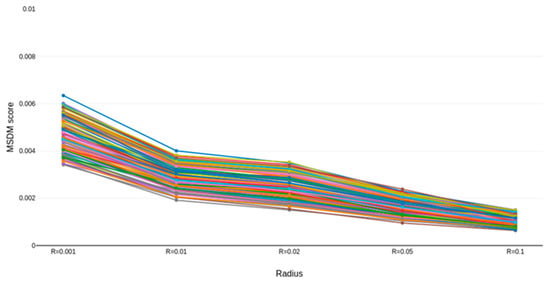

Table 3 reports the mean MSDM scores resulting from the comparison between the simulated aged textured mesh face and the reference textured mesh face for both genders. We considered five radius values (R = 0.001, R = 0.01, R = 0.02, R = 0.05 and R = 0.1), where a smaller radius value meant a more accurate comparison (as the bounding box got smaller throughout the comparison process). The metric value tended toward 1 when the measured objects were very different, and was equal to 0 for identical objects. As can be seen in Table 3, the calculated mean MSDM score for the set of evaluated 3D faces was 0.006 when R = 0.001 and 0.001 when R = 0.1. Thus, the scores were close to 0, suggesting the compared meshes were nearly identical. According to the trend illustrated in Figure 7, increasing the radius value lowers the accuracy of the comparison, resulting in a smaller MSDM score for both females and males. As can be seen, the model behaves the same for both genders, resulting in a high similarity rate and low distortion between the 3D output and the corresponding reference faces. We can therefore conclude that our model does not generate significant geometric distortion and is robust for both genders.

Table 3.

Mean 3D mesh distortion metric (MSDM) scores of females and males in the testing database for five different radius values.

Figure 7.

Mean MSDM scores of the testing database for five different radius values.

In Table 4, the generic perception-based mode (GPM) is discussed comprehensively. As can be seen, the age difference between the input and output in the testing phase is defined as Δ. We divided Δ into four different age intervals of < 5 years, between 5 and 10 years, between 10 and 20 years and > 20 years. We can see that, for both genders, the mean scores for all metrics increase with increasing Δ. For instance, in the case of females, SSIM = 0.1737 in the interval < 5 years and reaches 0.2414 in the interval > 20 years. The trend is the same for the two other metrics, as well as for males. For instance, in FLM (for which nine craniofacial features were extracted from the face meshes in order to compare the disparity between corresponding mesh pairs, as explained in Section 3), we can see that the mean scores increase as Δ increases, suggesting that the results are less similar with an increase in Δ. This indicates that simulating face aging effects is more challenging when the time period between input and output is larger. According to the mean score values for Δ > 20 years, we can conclude that the method is still robust, even when there is a large age difference between the input and output faces.
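The grouping by age difference can be sketched as a simple binning step. How the interval boundaries at exactly 5, 10 and 20 years are assigned is an assumption of this sketch, and the records are toy values rather than results from Table 4.

```python
def age_gap_interval(delta):
    """Map an age difference in years to one of four intervals.

    Boundary handling (5, 10 and 20 years) is an assumption of this sketch.
    """
    if delta < 5:
        return "<5"
    if delta <= 10:
        return "5-10"
    if delta <= 20:
        return "10-20"
    return ">20"


def mean_scores_by_interval(records):
    """records: iterable of (input_age, target_age, score) tuples."""
    buckets = {}
    for input_age, target_age, score in records:
        label = age_gap_interval(target_age - input_age)
        buckets.setdefault(label, []).append(score)
    return {label: sum(values) / len(values) for label, values in buckets.items()}


# Toy records (hypothetical scores).
toy_records = [(25, 28, 0.1), (25, 29, 0.3), (30, 55, 0.5)]
interval_means = mean_scores_by_interval(toy_records)
```

Applying the same grouping to each metric and to each gender separately would give a per-interval summary of the kind discussed above.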

Table 4.

Mean scores of generic perception-based mode (GPM) evaluation mode: structural similarity (SSIM), face landmarks metric (FLM) and 3D mesh distortion metric (MSDM) for both genders.

Although the model behaves almost the same for both genders, Table 4 shows that the mean scores are slightly higher for males than for females in all classes. This difference may be accounted for by the textural impact of the presence of a beard. In addition, the male mode of variation generates an increase in the size of the face, especially in the nose, eyebrows, and chin. All in all, analysis of the Table 4 metrics shows that our model is robust regarding textural, geometrical and landmark comparisons, and does not create considerable distortion in either gender.

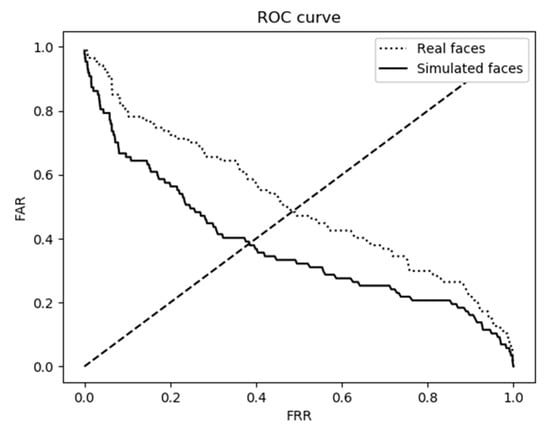

5.4. Objective Comparison of 3D Facial Verification

For the purpose of objectively comparing the verification systems, reference faces of 90 adult subjects from the Face Time-Machine database were used in the conventional verification process, while 3D aged results obtained from our model were employed in the modified verification process. To do this, we used our model to simulate the input faces at an advanced age, which could be compared against the real (ground truth) faces. Then, we integrated the new module containing 90 simulated aged faces to conduct a comparison study and to analyze the performance of the verification system. Figure 8 illustrates the receiver operating characteristic (ROC) curves of our system’s verification performance. The curves compare the system’s performance in the conventional and modified processes. As can be seen, the EER is 50% when real faces were used in the conventional verification process, while it is reduced to 35% when the simulated faces obtained from our proposed 3D aging morphable model were applied. This indicates that the system’s verification performance improved when the simulated faces were considered instead of the real ones (i.e., the references used as the ground truth faces). It is worth noting that an EER of 35% does not indicate strong performance; the purpose was to highlight the reduction in error rate between the conventional and modified verification processes. These results suggest that the performance of the verification process can be improved using the 3D faces output by our model, rather than the real enrolled faces. We expect that accounting for the factors that may arise during the aging process, such as weight gain, a beard for men or makeup for women, would further decrease the EER. Moreover, extending the number of faces in the testing database would yield a more accurate estimate of the system’s EER.

Figure 8.

Receiver operating characteristic (ROC) curves of the 3D facial verification system.

In addition to the database issues mentioned, the quality of the reference facial textured meshes influences the evaluation results. This is because most of the references (the ground truth faces) do not have the same pose, lighting conditions or, in some cases, facial expression as the final result.

Table 5 compares notable performance evaluation metrics used in state-of-the-art facial aging models against our proposed model. For each metric, it lists the name of the method, the face model dimension, the sensitivity to texture or shape, the score on the 3D F-FAM and the score reported in the literature. As can be seen, GPM examines the model in both textural and geometrical aspects. The proposed evaluation method, which combines geometrical, textural and landmark distortion measurements, has a higher similarity rate than most other methods. The exceptions are the objective performance similarity rate of Ref. [39] in 2D and the texture-based method in Refs. [35,39], which show higher rates than our model. However, the evaluation phase of the latter studies [35,39] considered only 5 samples, while our test was performed on 145 textured meshes.

Table 5.

Comparison of notable performance evaluation metrics used in facial aging models.

6. Discussion and Conclusions

In this article, we have described the impact of aging on the 3D facial verification process. We proposed a 3D facial aging morphable model (3D F-FAM) for creating detailed deformable models from a set of 3D facial surface meshes. We have demonstrated how separate aging trajectories for male and female subgroups can be modeled to simulate aging along a forward path, in which an input 3D facial textured mesh is transformed to an older target age (up to the 80s). This morphable 3D model deals simultaneously with both 3D shape and texture deformations throughout the aging process. The 3D rendering results show that our approach is subject specific; it retains the identity information of the young input face and produces satisfactory results compared to the state of the art and the ground truths. Further, we evaluated the proposed model using a generic perception-based model to analyze its performance in textural, geometrical and morphometrical aspects. Based on the results, the textural measurement is approximately 0.2 for females and 0.27 for males, which indicates plausible accuracy. In the geometrical measurement, the distance differences between the two compared meshes and the landmark comparisons yield scores tending toward 0, showing that the model does not generate significant disparity.

We verified that aging impacts the performance of a 3D facial verification process, and that our 3D model improves the performance by reducing the EER. Although the verification performance obtained with our model was not high, the reduction in the EER value (compared to using the real enrolled faces) was noticeable. As a result, we can consider the applicability of this aging model for 3D face verification and facial appearance prediction across aging. Taking external factors in the aging process into account may yield a better value.

The extension of face modeling to the 3D domain allows for additional capabilities, particularly in representing faces more accurately. The proposed model ran in roughly real time and was computationally efficient. Further, it has widespread applications in educational, medical and psychological fields, and can be considered as a potential solution in biometrics, forensics and general computer vision applications. In future work, we would like to extend our 3D database and adapt our model to account for different ethnicities and external factors.

Author Contributions

Conceptualization, A.N.; Methodology, F.M.Z.H.; Software, F.M.Z.H.; Validation, F.M.Z.H.; Formal Analysis, F.M.Z.H.; Writing-Original Draft Preparation, F.M.Z.H.; Visualization, F.M.Z.H.; Resources, E.F.; Co-supervision, R.F.; Supervision, A.N.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fitzgerald, R.; Graivier, M.H.; Kane, M.; Lorenc, Z.P.; Vleggaar, D.; Werschler, W.P.; Kenkel, J.M. Update on Facial Aging. Aesthetic Surg. J. 2010, 30, 11S–24S.

- Castelo-Branco, C.; Duran, M.; Gonzalez-Merlo, J. Skin collagen changes related to age and hormone replacement therapy. Maturitas 1992, 15, 113–119.

- Burt, D.M.; Perrett, D.I. Perception of Age in Adult Caucasian Male Faces: Computer Graphic Manipulation of Shape and Colour Information. Proc. R. Soc. B Biol. Sci. 1995, 259, 137–143.

- Rowland, D.A.; Perrett, D.I. Manipulating Facial Appearance through Shape and Color. IEEE Comput. Graph. Appl. 1995, 15, 70–76.

- Pitanguy, I.; Leta, F.; Pamplona, D.; Weber, H.I. Defining and measuring aging parameters. Appl. Math. Comput. 1996, 78, 217–227.

- Leta, F.R.; Conci, A.; Pamplona, D.; Pitanguy, I. Manipulating Facial Appearance Through Age Parameters. Proc. Ninth Brazilian Symp. Comput. Graph. Image Process. 1996, 167–172.

- Pitanguy, I.; Pamplona, D.; Weber, H.I.; Leta, F.; Salgado, F.; Radwanski, H.N. Numerical modeling of facial aging. Plast. Reconstr. Surg. 1998, 102, 200–204.

- Tiddeman, B.P.; Burt, D.M.; Perrett, D.I. Prototyping and transforming facial texture for perception research. IEEE Comput. Graph. Appl. 2001, 21, 42–50.

- Tiddeman, B.P.; Stirrat, M.R.; Perrett, D.I. Towards realism in facial image transformation: Results of a wavelet MRF method. Comput. Graph. Forum 2005, 24, 449–456.

- Lanitis, A.; Taylor, C.J.; Cootes, T.F. Toward automatic simulation of aging effects on face images. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 442–455.

- Scandrett, C.M.; Solomon, C.J.; Gibson, S.J. A person-specific, rigorous aging model of the human face. Pattern Recognit. Lett. 2006, 27, 1776–1787.

- Ramanathan, N.; Chellappa, R. Modeling age progression in young faces. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 387–394.

- Ramanathan, N.; Chellappa, R.; Biswas, S. Age progression in Human Faces: A Survey. J. Vis. Lang. Comput. 2009, 15, 3349–3361.

- Wu, Y.; Thalmann, N.M.; Thalmann, D. A dynamic wrinkle model in facial animation and skin ageing. J. Vis. Comput. Animat. 1995, 6, 195–205.

- O’Toole, A.J.; Price, T.; Vetter, T.; Bartlett, J.C.; Blanz, V. 3D shape and 2D surface textures of human faces: The role of ‘averages’ in attractiveness and age. Image Vis. Comput. 1999, 18, 9–19.

- O’Toole, A.J.; Vetter, T.; Volz, H.; Salter, E.M. Three-dimensional caricatures of human heads: Distinctiveness and the perception of facial age. Perception 1997, 26, 719–732.

- Suo, J.; Min, F.; Zhu, S. A Multi-Resolution Dynamic Model for Face Aging Simulation. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

- Kemelmacher-Shlizerman, I.; Suwajanakorn, S.; Seitz, S.M. Illumination-aware age progression. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3334–3341.

- Shu, X.; Tang, J.; Lai, H.; Liu, L.; Yan, S. Personalized Age Progression with Aging Dictionary. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3970–3978.

- Tsai, M.; Liao, Y.; Lin, I. Human face aging with guided prediction and detail synthesis. Multimed. Tools Appl. 2014, 72, 801–824.

- Sagonas, C.; Panagakis, Y.; Arunkumar, S.; Ratha, N.; Zafeiriou, S. Back to the future: A fully automatic method for robust age progression. In Proceedings of the 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 4226–4231.

- Yang, H.; Huang, D.; Wang, Y.; Wang, H.; Tang, Y. Face Aging Effect Simulation Using Hidden Factor Analysis Joint Sparse Representation. IEEE Trans. Image Process. 2016, 25, 2493–2507.

- Antipov, G.; Dugelay, J. Face aging with conditional generative adversarial networks. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2089–2093.

- Zhang, Z.; Song, Y.; Qi, H. Age Progression/Regression by Conditional Adversarial Autoencoder. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Li, Y. (Li Yuancheng); Li, Y. (Yan Li). Face aging effect simulation model based on multilayer representation and shearlet transform. J. Electron. Imaging 2017, 26, 053011.

- Shen, C.T.; Huang, F.; Lu, W.H.; Shih, S.W.; Liao, H.Y.M. 3D age progression prediction in children’s faces with a small exemplar-image set. J. Inf. Sci. Eng. 2014, 30, 1131–1148.

- Park, U.; Tong, Y.; Jain, A.K. Age-Invariant Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 947–954.

- Maronidis, A.; Lanitis, A. Facial age simulation using age-specific 3D models and recursive PCA. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP 2013), Barcelona, Spain, 21–24 February 2013; Volume 1.

- Blanz, V.; Vetter, T. A Morphable Model For The Synthesis Of 3D Faces. Siggraph 1999, 99, 187–194.

- Park, U.; Tong, Y.; Jain, A.K. Face recognition with temporal invariance: A 3D aging model. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face & Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–7.

- Scherbaum, K.; Sunkel, M.; Seidel, H.P.; Blanz, V. Prediction of individual non-linear aging trajectories of faces. Comput. Graph. Forum 2007, 26, 285–294.

- Suo, J.; Zhu, S.; Shan, S.; Chen, X. A Compositional and Dynamic Model for Face Aging. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 385–401.

- Sagonas, C.; Panagakis, Y.; Leidinger, A.; Zafeiriou, S. Robust Joint and Individual Variance Explained. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5739–5748.

- Hall, J.; Allanson, J.; Gripp, K.; Slavotinek, A. Handbook of Physical Measurements; Oxford University Press: Oxford, UK, 2006.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Lavoue, G.; Corsini, M. A comparison of perceptually-based metrics for objective evaluation of geometry processing. IEEE Trans. Multimed. 2010, 12, 636–649.

- Urbanová, P. Performance of distance-based matching algorithms in 3D facial identification. Egypt. J. Forensic Sci. 2016, 6, 135–151.

- Farazdaghi, E. Facial Ageing and Rejuvenation Modeling Including Lifestyle Behaviours, Using Biometrics-Based Approaches; Université Paris-Est: Paris, France, 2017.

- Farazdaghi, E.; Nait-Ali, A. Backward face ageing model (B-FAM) for digital face image rejuvenation. IET Biom. 2017, 6, 478–486.

- Guo, J.; Vidal, V.; Cheng, I.; Basu, A.; Baskurt, A.; Lavoue, G. Subjective and Objective Visual Quality Assessment of Textured 3D Meshes. ACM Trans. Appl. Percept. 2016, 14, 1–20.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).