Abstract

As one of the most reliable biometric identification techniques, iris recognition has focused on the differences in iris textures without considering the similarities. In this work, we investigate the correlation between the left and right irises of an individual using a VGG16 convolutional neural network. Experimental results with two independent iris datasets show that a classification accuracy above 94% can be achieved when identifying whether two irises (left and right) are from the same or different individuals. This finding suggests that the similarities between genetically identical irises, which are indistinguishable using the traditional Daugman approach, can be detected by deep learning. We expect this work to shed light on further studies of the correlation between irises and/or other biometric identifiers of genetically identical or related individuals, which may find applications in criminal investigations.

1. Introduction

There has been increasing interest in reliable, rapid, and unintrusive approaches for automatically recognizing the identity of persons. Because each eye’s iris has a highly detailed, unique and stable texture, ophthalmologists originally suggested the iris as an effective biometric identifier [1]. Compared with other biometric features, the iris combines many desirable attributes. For example, it is well protected from the environment and remains stable over time, and its distinctive annular shape makes it convenient to localize and isolate from a face image taken at distances of up to 1 m [2].

It was estimated that the probability that two irises could produce exactly the same pattern is approximately 1 in (as a reference, the population of the earth is around ). Therefore, it has been widely accepted that no two irises are alike, even for the irises of twins, or for the left and right irises of the same individual [3]. Taking advantage of the clear distinctiveness of the iris patterns, automated iris biometrics systems based on Daugman’s algorithms for encoding and recognizing [1] can distinguish individuals, even twin siblings [4]. This is the reason why the iris has been used to recognize personal identity [5].

Over the years, the research community has focused on the differences in iris textures for biometric identification applications. However, little research has addressed the question of whether genetically identical or related irises are sufficiently similar in some sense to correctly determine their relationship [3,4]. These similarities are not only interesting for fundamental research, but also useful in practical applications such as criminal investigation. Although the formation of the complex iris pattern is irregular and is greatly influenced by chaotic morphogenetic processes during embryonic development [2], it has been shown that overall statistical measurements of iris texture are correlated with genetics [6]. Therefore, considerable effort has been devoted to the genetic correlation of the iris [7,8,9,10,11].

Recently, many algorithms, such as machine learning techniques [12], have been developed for iris recognition in addition to the early algorithms developed by Daugman. Owing to the tremendous success of deep learning in computer vision, there has been considerable interest in applying features learned by convolutional neural networks (CNNs) on general image recognition to other tasks, including iris recognition [13].

In this work, we investigate the correlation between the left and right irises of an individual using a deep neural network, more specifically, VGG16 proposed by Simonyan and Zisserman [14]. We adopted two independent iris datasets, and classified whether two irises (left and right) are from the same or different individuals. The remainder of this paper is organized as follows. Section 2 summarizes the related research on iris recognition and on genetically related classification using CNN techniques. Section 3 describes the modified VGG16 architecture used in this work. Section 4 details the data acquisition, image preprocessing, and left–right iris classification experiments. Classification results using other networks and the traditional Daugman approach are also discussed. Our major findings and conclusions are summarized in Section 5.

2. Related Work

Qiu et al. [7] showed that the iris texture is race related, and its genetic information is illustrated in coarse scale texture features, rather than preserved in the minute local features of state-of-the-art iris recognition algorithms. They thus proposed a novel ethnic classification method based on the global texture information of iris images and classified iris images into two race categories, Asian and non-Asian, with an encouraging correct classification rate of 85.95%.

Thomas et al. [15] employed machine learning techniques to develop models that predict gender with accuracies close to 80% based on the iris texture features. Tapia et al. [16] further showed that, from the same binary iris code used for recognition, gender can be predicted with 89% accuracy.

Lagree and Bowyer [17] showed that both ethnicity and gender can be classified using iris textures: with person-disjoint 10-fold cross-validation, the accuracy of predicting (Asian, Caucasian) ethnicity exceeds 90%, and the accuracy of predicting gender is close to 62%.

Sun et al. [11] proposed a general framework for iris image classification based on texture analysis. Using their racial iris image classification method, called a hierarchical visual codebook, they can discriminate iris images of Asian and non-Asian subjects with an extremely high accuracy (>97%).

The above-mentioned studies on race classification demonstrate the genotypic nature of iris textures. More specifically, the success of race classification based on iris images indicates that an iris image is not only a phenotypic biological signature but also a genotypic biometric pattern [11].

Hollingsworth et al. [3,4] showed that, with the standard iris biometric algorithm, i.e., Daugman’s algorithm, the left and right irises of the same person are as different as irises of unrelated individuals, and the irises of twins are as different as irises of unrelated individuals. However, in their experiments in which humans viewed pairs of iris images and then evaluated how likely these images were to come from the same person, twins or unrelated individuals, the accuracy was found to be greater than 75%. This suggests that the similarities in the iris images of genetically identical or related individuals can be detected by image comparison.

Quite recently, a number of deep CNN based methods have been proposed for iris recognition or classification. Liu et al. [18] proposed DeepIris, a deep learning based framework for heterogeneous iris verification, which learns relational features to measure the similarity between pairs of iris images based on CNN. Experimental results showed that the proposed method achieves promising performance for both cross-resolution and cross-sensor iris verification.

Gangwar et al. [19] proposed a deep learning based method named DeepIrisNet for iris representation. Experimental analysis revealed that the proposed DeepIrisNet can model the micro-structures of the iris very effectively and provide robust and discriminative iris representation with state-of-the-art accuracy.

Nguyen et al. [20] further showed that CNN features originally trained for classifying generic objects are also extremely good for iris recognition.

Bobeldyk and Ross [21] demonstrated that it is possible to use simple texture descriptors, such as binarized statistical image features and local binary patterns, to extract gender and race attributes from a near-infrared ocular image that is used in a typical iris recognition system. The proposed method can predict gender and race from a single iris image with an accuracy of 86% and 90%, respectively.

3. Deep Learning Architecture

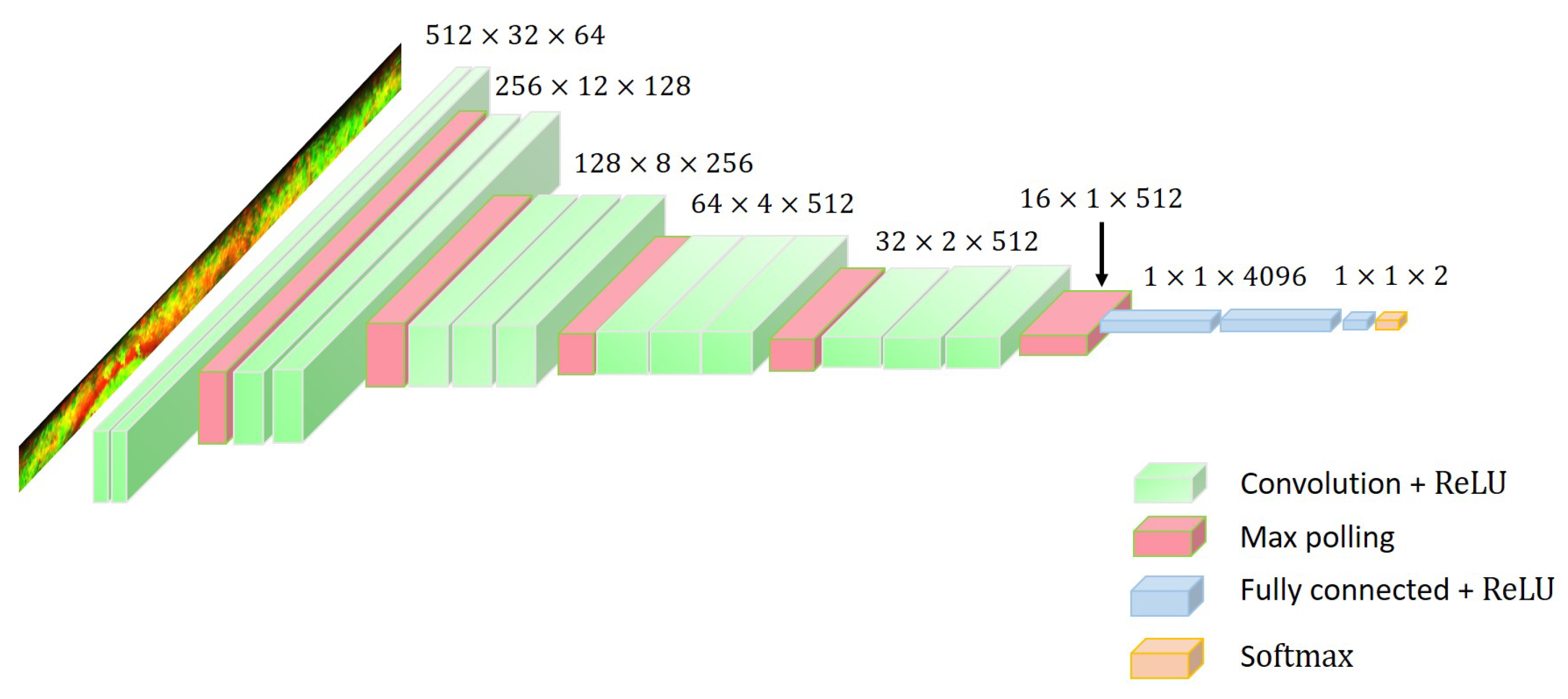

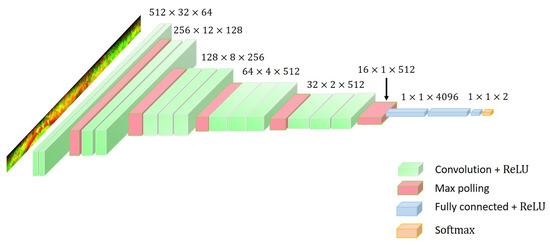

Figure 1 illustrates the architecture of VGG16 adopted in this work, which has been proven to be a powerful and accurate CNN for classification of image datasets [22,23]. During training, the input is a Red-Green-Blue (RGB) image of fixed-size pixels. Compared with the conventional VGG16 architecture, where images of pixels are used as the input, here we adopted pixels so that we do not need to resize the normalized iris images.

Figure 1.

Architecture of VGG16 used in our left–right irises’ classification experiments.

The network contains a stack of 13 convolutional layers and three fully-connected layers. The first two fully-connected layers have 4096 channels each; the third performs two-way classification. There are five pooling layers, each of which carries out spatial pooling and follows two or three convolutional layers. The final layer is the softmax layer. More details about the VGG16 architecture can be found in [14,24,25].
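To make the layer arithmetic concrete, the following minimal sketch walks an input through the five pooling stages and counts the weight layers. The block layout (2, 2, 3, 3, 3 convolutional layers with 64 to 512 channels) is the standard VGG16 configuration described in [14]; the 64 × 512 input size is a hypothetical value chosen for illustration only and is not claimed to be the size used in this work.

```python
# Standard VGG16 layout: number of 3x3 convolutional layers in each of the
# five blocks; every block ends with a 2x2 max-pooling layer (stride 2).
CONV_LAYERS_PER_BLOCK = [2, 2, 3, 3, 3]
CHANNELS_PER_BLOCK = [64, 128, 256, 512, 512]


def vgg16_feature_shape(height, width):
    """Return (h, w, channels) of the feature map after the last pooling layer."""
    channels = 3  # RGB input
    for block_channels in CHANNELS_PER_BLOCK:
        # 3x3 convolutions with 'same' padding keep height and width unchanged
        # and only change the number of channels.
        channels = block_channels
        # 2x2 max pooling with stride 2 halves each spatial dimension.
        height, width = height // 2, width // 2
    return height, width, channels


def count_weight_layers(num_fully_connected=3):
    """13 convolutional + 3 fully-connected layers give the '16' in VGG16."""
    return sum(CONV_LAYERS_PER_BLOCK) + num_fully_connected


# Hypothetical input size, for illustration only.
print(vgg16_feature_shape(64, 512))
print(count_weight_layers())
```

Because the convolutional part is size-agnostic, changing the input size only changes the number of inputs to the first fully-connected layer, which is why a non-square normalized iris image can be used without resizing.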

The network is initialized using zero biases and random weights with zero mean. The model learns the features from the data and updates the weights by backpropagation after each batch. In order to reduce overfitting, we employed the dropout method, which is applied to the fully-connected layers and sets the output of each hidden unit to zero with a probability of 50% [26].
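The dropout step described above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the implementation used in this work: the function name `dropout` is hypothetical, and the rescaling of the surviving units ("inverted dropout") is an assumption about how the expected activation is preserved.

```python
import numpy as np


def dropout(activations, drop_prob=0.5, rng=None, training=True):
    """Zero each hidden unit with probability drop_prob during training."""
    if not training or drop_prob == 0.0:
        # At inference time all units are kept unchanged.
        return activations
    rng = np.random.default_rng() if rng is None else rng
    # True where a unit is kept, False where it is dropped.
    keep_mask = rng.random(activations.shape) >= drop_prob
    # Assumption: rescale kept units so the expected activation is unchanged.
    return activations * keep_mask / (1.0 - drop_prob)


# Example: a batch of 4 outputs from a 4096-unit fully-connected layer.
hidden = np.ones((4, 4096))
dropped = dropout(hidden, drop_prob=0.5, rng=np.random.default_rng(0))
print(float((dropped == 0).mean()))  # fraction of units set to zero
```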

4. Experimental Results and Analysis

4.1. Dataset

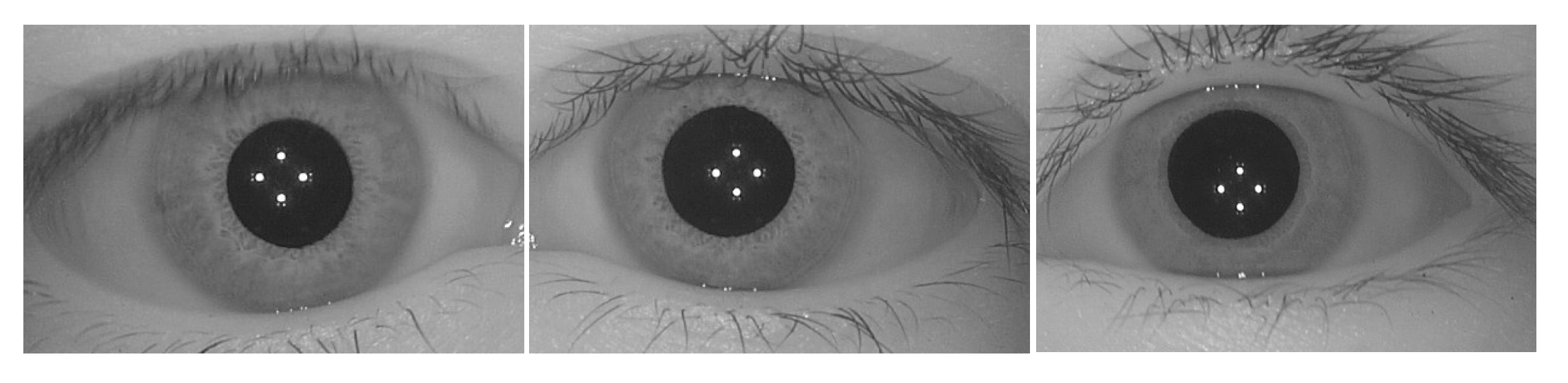

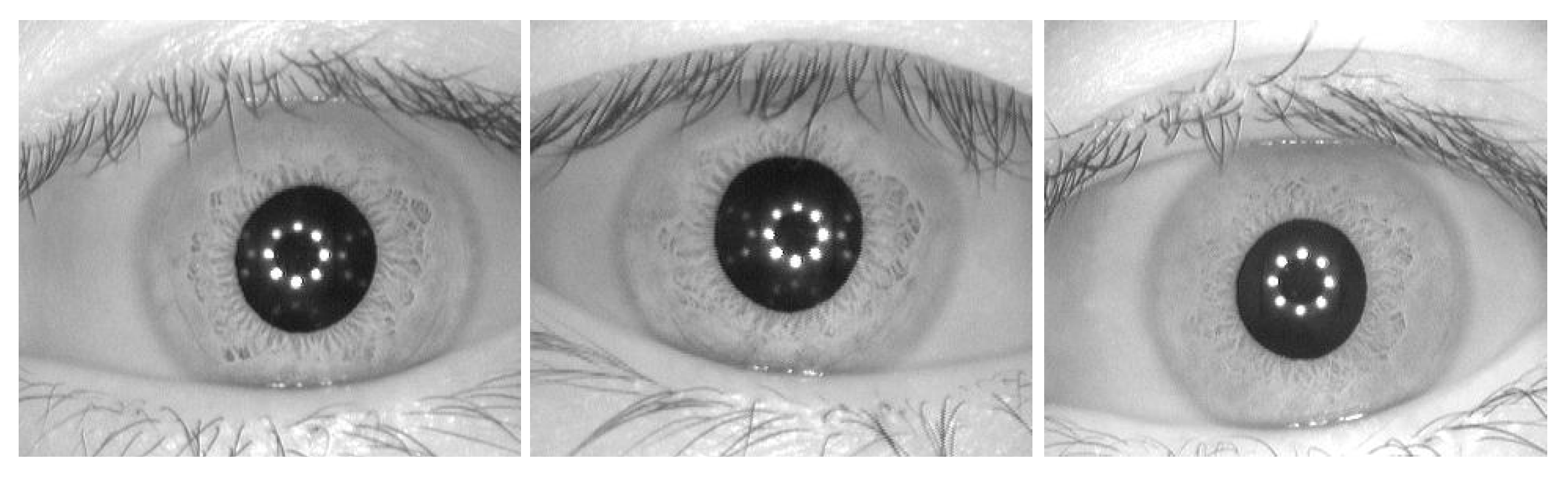

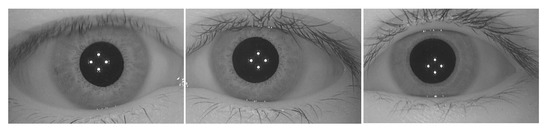

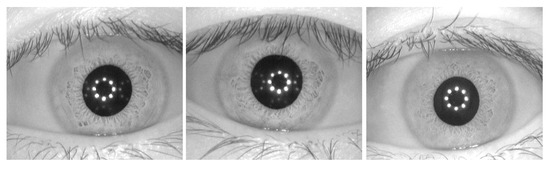

In order to carry out experiments on the classification of genetically identical left–right irises using the above VGG16 architecture, two independent datasets were used. The iris images of the first dataset were collected by the authors using an in-house hand-held iris capture device under near-infrared illumination. This dataset, referred to as the CAS-SIAT-Iris dataset (here “CAS-SIAT” stands for Chinese Academy of Sciences’ Shenzhen Institutes of Advanced Technology), contains 3365 pairs of left and right iris images taken from 3365 subjects. These images went through quality assessment and careful screening before being saved in 8-bit greyscale BMP format at pixels resolution, as shown in Figure 2. The other is the CASIA-Iris-Interval dataset (here “CASIA” stands for Chinese Academy of Sciences’ Institute of Automation) [27], which contains 2655 iris images in 8-bit greyscale JPEG format of pixels taken from 249 subjects, as illustrated by Figure 3. We note that the CASIA-Iris-Interval dataset contains more than one image of the same iris.

Figure 2.

Sample images from the (Chinese Academy of Sciences’ Shenzhen Institutes of Advanced Technology) CAS-SIAT-Iris dataset.

Figure 3.

Sample images from the (Chinese Academy of Sciences’ Institute of Automation) CASIA-Iris-Interval dataset.

4.2. Data Preprocessing

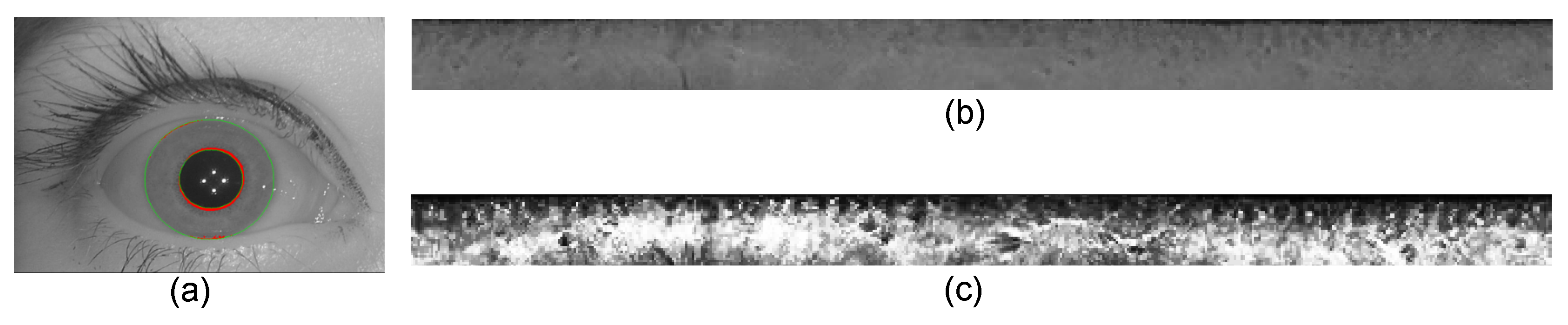

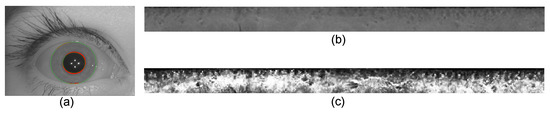

In order to extract the iris texture feature, an iris image such as the one in Figure 4a needs to be preprocessed. The iris texture region outlined by the red inner and green outer circles is first segmented from the iris image. This process is known as iris localization. The annular region of the iris is then unwrapped into an isolated rectangular image of fixed pixels, as shown by Figure 4b. This process, known as iris normalization, is performed based on Daugman’s rubber-sheet model [4]. Finally, the contrast of the normalized iris image is enhanced by histogram equalization so as to improve the clarity of the iris texture, as shown by Figure 4c.

Figure 4.

Preprocessing of iris image: (a) localization; (b) normalization; and (c) contrast enhancement.
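The normalization and contrast-enhancement steps can be illustrated with the NumPy sketch below. It is a simplified sketch under stated assumptions, not the preprocessing code used in this work: the pupil and iris circles are assumed to be supplied by a separate localization step, nearest-neighbour sampling is used for brevity, the function names are hypothetical, and the 64 × 512 output size is a placeholder.

```python
import numpy as np


def rubber_sheet_unwrap(image, pupil, iris, out_height=64, out_width=512):
    """Unwrap the annulus between two circles into a rectangular strip.

    pupil and iris are (x_center, y_center, radius) tuples (assumed given).
    """
    thetas = np.linspace(0.0, 2.0 * np.pi, out_width, endpoint=False)
    radii = np.linspace(0.0, 1.0, out_height)  # 0 = pupil edge, 1 = iris edge
    # Points on the inner and outer boundaries for every angle.
    x_in = pupil[0] + pupil[2] * np.cos(thetas)
    y_in = pupil[1] + pupil[2] * np.sin(thetas)
    x_out = iris[0] + iris[2] * np.cos(thetas)
    y_out = iris[1] + iris[2] * np.sin(thetas)
    # Linear interpolation between the two boundaries (rubber-sheet mapping).
    r = radii[:, None]
    x = (1.0 - r) * x_in[None, :] + r * x_out[None, :]
    y = (1.0 - r) * y_in[None, :] + r * y_out[None, :]
    cols = np.clip(np.rint(x).astype(int), 0, image.shape[1] - 1)
    rows = np.clip(np.rint(y).astype(int), 0, image.shape[0] - 1)
    return image[rows, cols]


def equalize_histogram(gray):
    """Histogram equalization of an 8-bit greyscale image (uint8 array)."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    denom = max(gray.size - cdf_min, 1)
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255.0), 0, 255)
    return lut.astype(np.uint8)[gray]


# Example on a synthetic image with hypothetical circle parameters.
eye = (np.arange(100 * 120) % 251).astype(np.uint8).reshape(100, 120)
strip = rubber_sheet_unwrap(eye, pupil=(60, 50, 10), iris=(60, 50, 40))
enhanced = equalize_histogram(strip)
print(strip.shape, enhanced.dtype)
```

Each row of the strip corresponds to a fixed relative radius and each column to a fixed angle, so the output has the same size regardless of pupil dilation.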

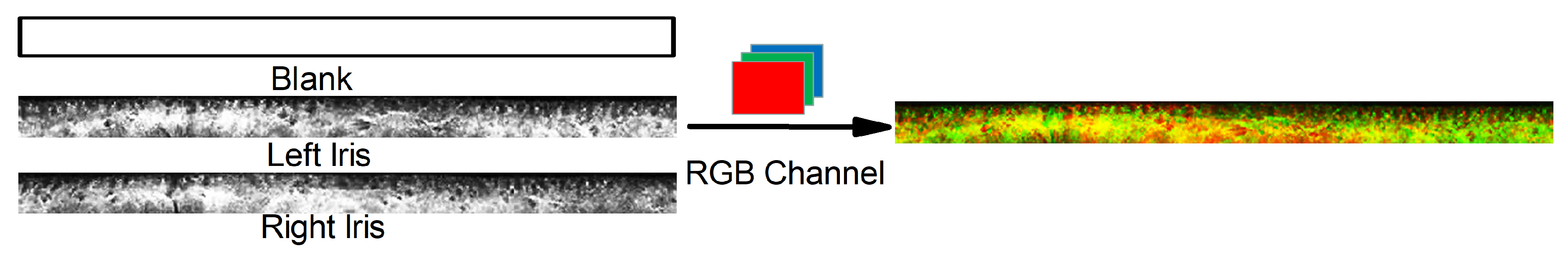

To classify whether two irises (left and right) are from the same or different individuals using the modified VGG16 model, we fused a left and a right greyscale iris image into a single RGB image that serves as the model input. Specifically, we combined the left iris image, the right iris image and a blank image (all pixels have a value of zero), all of which are 8-bit greyscale, into an RGB24 image, as illustrated in Figure 5.

Figure 5.

Illustration for the generation of a CNN (convolutional neural network) input image from two iris images (left and right).
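The fusion of two greyscale iris images and a blank plane into one three-channel input can be sketched as follows. The channel order (left in the first channel, right in the second, blank in the third) is an assumption made for illustration, as is the function name `fuse_left_right`.

```python
import numpy as np


def fuse_left_right(left, right):
    """Stack left iris, right iris and an all-zero plane into an H x W x 3 image."""
    if left.shape != right.shape:
        raise ValueError("left and right images must have the same shape")
    blank = np.zeros_like(left)  # all pixels have a value of zero
    # Assumed channel order: (left, right, blank).
    return np.stack([left, right, blank], axis=-1)


# Example with two synthetic 8-bit greyscale images of a hypothetical size.
rng = np.random.default_rng(0)
left = rng.integers(0, 256, size=(64, 512), dtype=np.uint8)
right = rng.integers(0, 256, size=(64, 512), dtype=np.uint8)
fused = fuse_left_right(left, right)
print(fused.shape, fused.dtype)
```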

In order to examine the robustness and versatility of the model, we performed classification experiments using both the CAS-SIAT-Iris and the CASIA-Iris-Interval datasets as well as across datasets, in both the person-disjoint and non-person-disjoint manners, and with and without image augmentation. Here, the person-disjoint manner means that there is no overlap of subjects among the training, validation and test sets. In other words, if an iris image of a subject is used in the training set, no iris image of that subject is used in the validation set or the test set. In the non-person-disjoint manner, however, although no iris image is repeated across the training, validation and test sets, the subjects overlap. The image augmentation is performed by image translations, horizontal reflections and zooms, so as to enlarge the database and achieve better performance.
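A person-disjoint partition can be obtained by splitting the subject identifiers rather than the individual images. The sketch below is one possible way to do this; the function name, the fixed random seed and the default 7:2:1 ratio are illustrative assumptions.

```python
import random


def person_disjoint_split(subject_ids, ratios=(0.7, 0.2, 0.1), seed=0):
    """Split subjects into train/validation/test sets with no shared subject."""
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    n_train = int(round(ratios[0] * len(subjects)))
    n_val = int(round(ratios[1] * len(subjects)))
    train = set(subjects[:n_train])
    val = set(subjects[n_train:n_train + n_val])
    test = set(subjects[n_train + n_val:])  # remaining subjects
    return train, val, test


# Example: every image of a subject follows that subject into one set only.
train, val, test = person_disjoint_split(range(1000))
print(len(train), len(val), len(test))
```

Samples are then assigned to a set according to the subject they come from, which guarantees that no subject contributes images to more than one set.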

We first performed experiments by training the network using the CAS-SIAT-Iris dataset. We constructed 3365 positive samples, each corresponding to one subject, and 2500 randomly selected negative samples. Here, a positive or negative sample indicates that the two input irises (left and right) are from the same or different individuals, respectively. Both the positive and negative samples were partitioned in a person-disjoint manner into the training, validation and test sets with an approximate ratio of 7:2:1. Moreover, we further tested the trained network using the CASIA-Iris-Interval dataset, from which 215 positive samples and 200 negative samples were constructed. The numbers of partitioned samples are shown in Table 1.

Table 1.

Data distribution in a person-disjoint manner for the network trained with CAS-SIAT-Iris dataset and tested with both datasets.
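The construction of positive and negative samples can be sketched as below. The text only states that negative samples are randomly selected, so the sampling scheme here (drawing two distinct subjects and pairing the left iris of one with the right iris of the other, without repeating a pair) is an assumption, and `build_pairs` is a hypothetical helper.

```python
import random


def build_pairs(left_by_subject, right_by_subject, num_negative, seed=0):
    """Return (positives, negatives) as lists of (left, right, label) triples.

    label 1: both irises come from the same subject; label 0: different subjects.
    """
    subjects = sorted(set(left_by_subject) & set(right_by_subject))
    max_negative = len(subjects) * (len(subjects) - 1)
    if num_negative > max_negative:
        raise ValueError("not enough distinct subject pairs for the negatives")
    rng = random.Random(seed)
    positives = [(left_by_subject[s], right_by_subject[s], 1) for s in subjects]
    negatives, seen = [], set()
    while len(negatives) < num_negative:
        a, b = rng.sample(subjects, 2)  # two different subjects
        if (a, b) in seen:
            continue  # avoid duplicate negative pairs
        seen.add((a, b))
        negatives.append((left_by_subject[a], right_by_subject[b], 0))
    return positives, negatives


# Example with file names standing in for images.
left = {i: f"L{i}.bmp" for i in range(10)}
right = {i: f"R{i}.bmp" for i in range(10)}
pos, neg = build_pairs(left, right, num_negative=20)
print(len(pos), len(neg))
```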

We also performed similar experiments by first training the network with the CASIA-Iris-Interval dataset, and then testing with both datasets. Since the CASIA-Iris-Interval dataset, which contains only 2655 iris images from 249 subjects, is relatively small, we artificially enlarged the database using label-preserving transformations to improve the model accuracy and to reduce overfitting. In this way, we constructed 4075 positive samples and 4115 negative samples, which were partitioned in a non-person-disjoint manner into the training, validation and test sets with approximate ratios of 85.6%, 7.1% and 7.3%, respectively. Moreover, the trained network was further tested using the CAS-SIAT-Iris dataset, from which 600 positive and 400 negative samples were constructed. The numbers of samples are shown in Table 2.

Table 2.

Data distribution in a non-person-disjoint manner for the network trained with CASIA-Iris-Interval dataset and tested with both datasets.

4.3. Results and Discussion

The modified VGG16 model was implemented using the Keras library. A learning rate of 0.0001, a batch size of 128, the SGD optimizer, the categorical_crossentropy loss, and a strategy of 50 epochs per round until convergence were adopted for training. Classification experiments were carried out on a high-performance computer with two Intel® Xeon® E5-2699 V4 processors (2.20 GHz), 256 GB RAM and an NVIDIA® Quadro GP100 GPU.
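To illustrate what one optimization step with these settings computes, the sketch below applies plain SGD with the categorical cross-entropy loss to a two-way softmax output layer in NumPy. It is a didactic stand-in, not the Keras training code used in this work: the function names are hypothetical, only the last layer is updated, and the 16-dimensional random features replace real network activations.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def categorical_crossentropy(probs, one_hot):
    """Mean cross-entropy between predicted probabilities and one-hot labels."""
    return float(-np.mean(np.sum(one_hot * np.log(probs + 1e-12), axis=1)))


def sgd_step(weights, bias, features, one_hot, learning_rate=1e-4):
    """One SGD update of a softmax layer; returns the loss before the update."""
    probs = softmax(features @ weights + bias)
    # Gradient of the mean cross-entropy with respect to the logits.
    grad_logits = (probs - one_hot) / features.shape[0]
    weights = weights - learning_rate * (features.T @ grad_logits)
    bias = bias - learning_rate * grad_logits.sum(axis=0)
    return weights, bias, categorical_crossentropy(probs, one_hot)


# Example: one mini-batch of 128 samples with two classes (same/different).
rng = np.random.default_rng(0)
features = rng.normal(size=(128, 16))          # placeholder activations
labels = (features[:, 0] > 0).astype(int)      # synthetic labels
one_hot = np.eye(2)[labels]
weights = rng.normal(scale=0.01, size=(16, 2))  # zero-mean random weights
bias = np.zeros(2)                              # zero biases
weights, bias, loss = sgd_step(weights, bias, features, one_hot)
print(loss)
```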

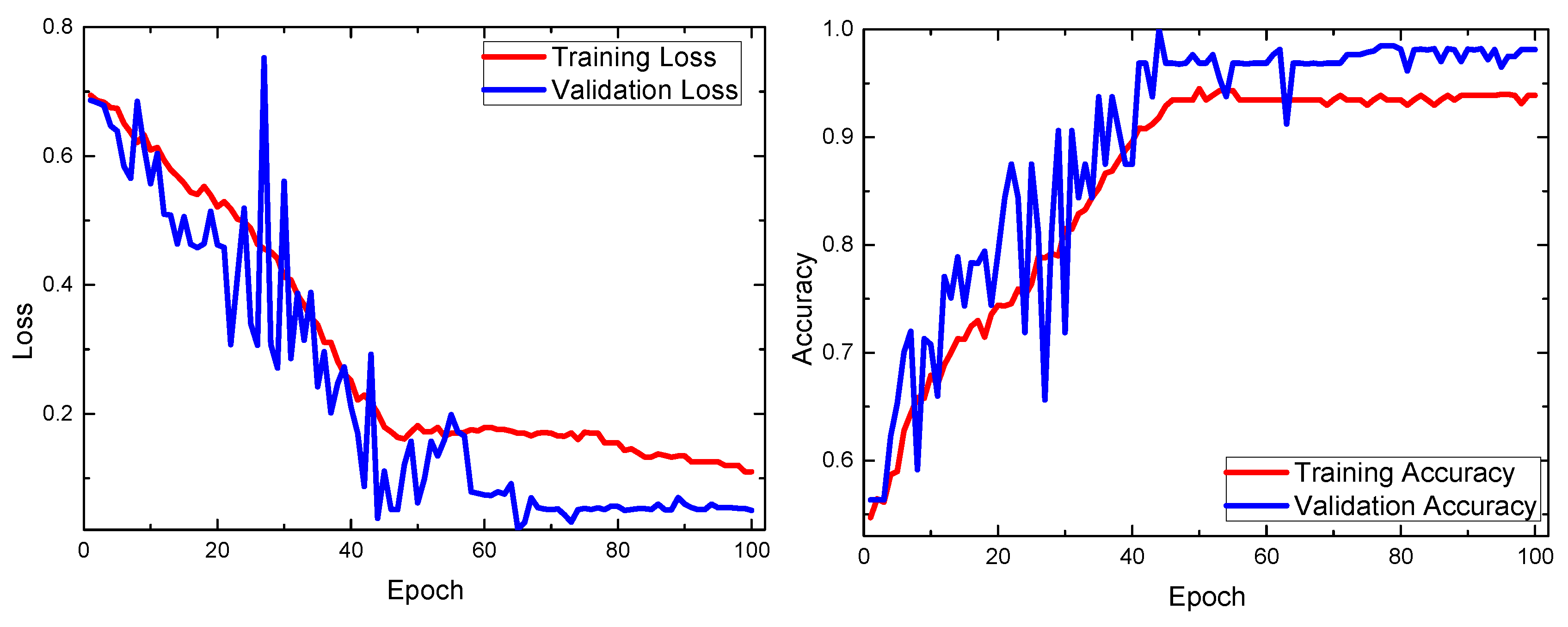

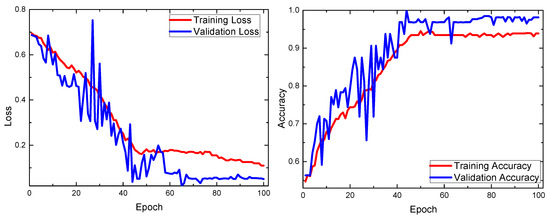

Figure 6 shows that the training and validation loss curves keep decreasing while the accuracy curves approach 100%. These model learning curves indicate that high accuracy and relatively fast convergence can be achieved within 100 epochs.

Figure 6.

Model learning curves of fine-tuning for the network initialized with random weights of zero mean using the CAS-SIAT-Iris dataset: (left) training and validation loss curves, and (right) training and validation accuracy curves.

The confusion matrices for the networks trained by the CAS-SIAT-Iris dataset and by the CASIA-Iris-Interval dataset are summarized in Table 3 and Table 4, respectively. By using these confusion matrices, metrics of the classification, such as precision, recall and accuracy, can be calculated, as summarized in Table 5 and Table 6. Table 5 shows that, by using the network trained with the CAS-SIAT-Iris dataset, the classification precision reaches 97.67%, recall is 94.25%, and accuracy reaches 94.25% for the CAS-SIAT-Iris test set, and these metrics are 94.71%, 91.63% and 93.01%, respectively, for the CASIA-Iris-Interval test set. By using the network trained with the CASIA-Iris-Interval dataset, Table 6 shows that the classification precision reaches 94.28%, recall is 87.83%, and accuracy reaches 89.50% for positive samples, 95.59% for the CAS-SIAT-Iris test set, and these metrics are 95.67%, 94.10% and 94.83%, respectively, for the CASIA-Iris-Interval test set.

Table 3.

Confusion matrix for the network trained with the CAS-SIAT-Iris dataset and tested with both datasets.

Table 4.

Confusion matrix for the network trained with the CASIA-Iris-Interval dataset and tested with both datasets.

Table 5.

Classification report for the network trained with the CAS-SIAT-Iris dataset and tested with both datasets.

Table 6.

Classification report for the network trained with the CASIA-Iris-Interval dataset and tested with both datasets.
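The relation between a confusion matrix and the reported metrics can be made explicit with a short sketch. The counts in the example are not copied from Table 3; they are back-calculated here, as an assumption, from the 215 positive and 200 negative CASIA-Iris-Interval test samples and the percentages reported for the network trained with the CAS-SIAT-Iris dataset, with which they are consistent.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall and accuracy from binary confusion-matrix counts."""
    precision = tp / (tp + fp)  # fraction of predicted positives that are correct
    recall = tp / (tp + fn)     # fraction of actual positives that are found
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, accuracy


# Inferred counts (assumption): 197 + 18 = 215 positives, 11 + 189 = 200 negatives.
precision, recall, accuracy = classification_metrics(tp=197, fp=11, fn=18, tn=189)
print(f"precision={precision:.2%} recall={recall:.2%} accuracy={accuracy:.2%}")
```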

For these two datasets, we further examined other CNN architectures, namely the VGG19 and DeepIris models, which were also initialized with zero biases and random weights with zero mean, and in which dropout is applied to the fully-connected layers to reduce overfitting. The results summarized in Table 7 show that the accuracy is up to 94% for the VGG16 model but is only about 63–69% for both the VGG19 and DeepIris models. In other words, the VGG16 model exhibits the best performance in terms of classification accuracy.

Table 7.

Comparison of classification accuracy for different CNN architectures. The training and test of these networks were performed with the same dataset (cross datasets were not considered).

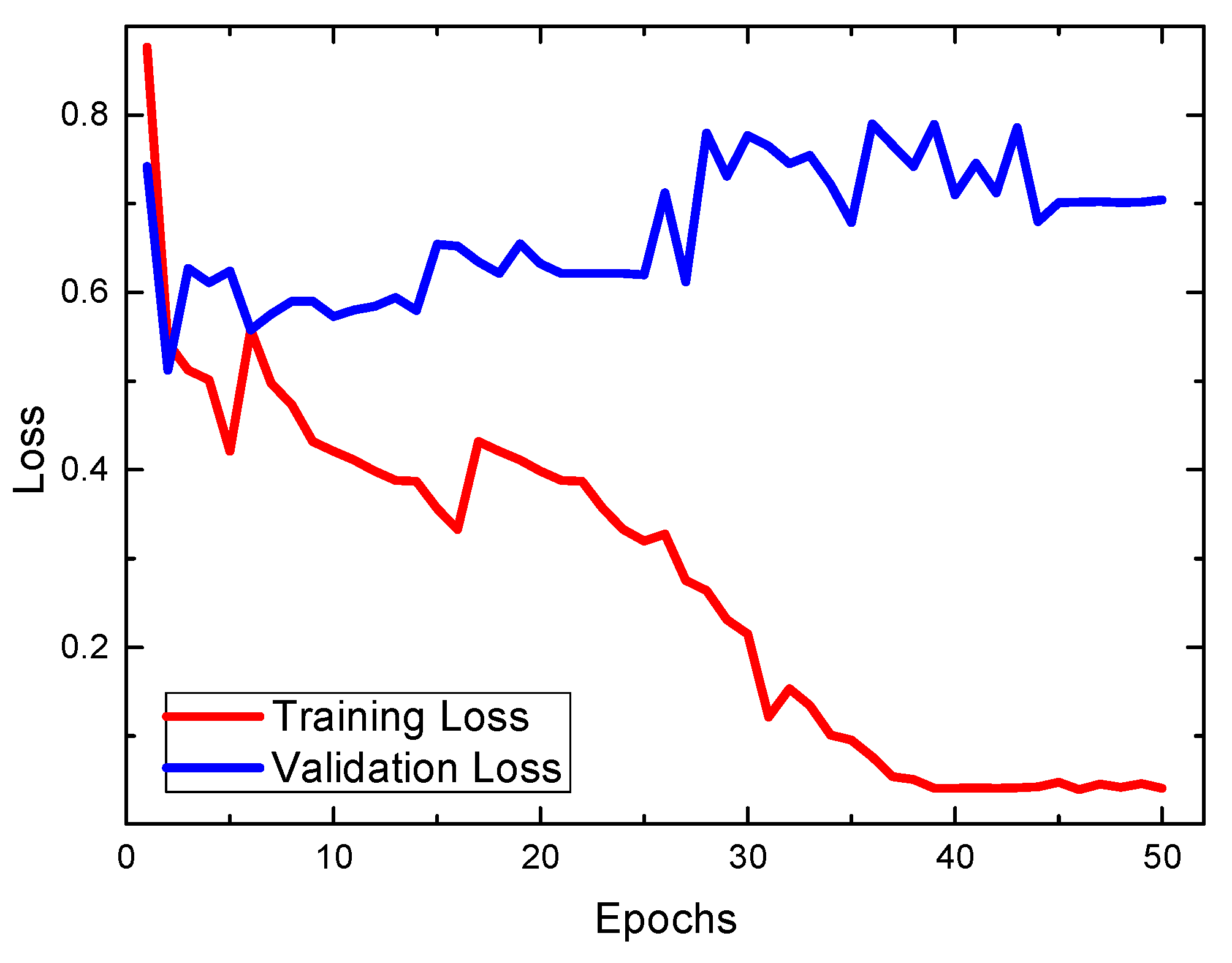

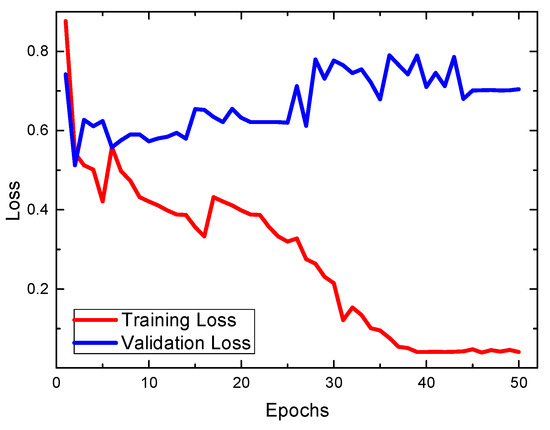

We also tried the conventional VGG16 architecture, initialized using weights pre-trained on ImageNet [28]. In that model, the input image is of pixels, thus we further performed image cutting, splicing, and resizing so that the preprocessed iris images are now of pixels. Note that the dropout method was also used in that model. The experimental results in Figure 7 show that the conventional VGG16 architecture with pre-trained weights, which is widely used for general image classification, is not suitable for the classification of the left–right iris images.

Figure 7.

Model training and validation loss curves for the network initialized with pre-trained weights using the CAS-SIAT-Iris dataset.

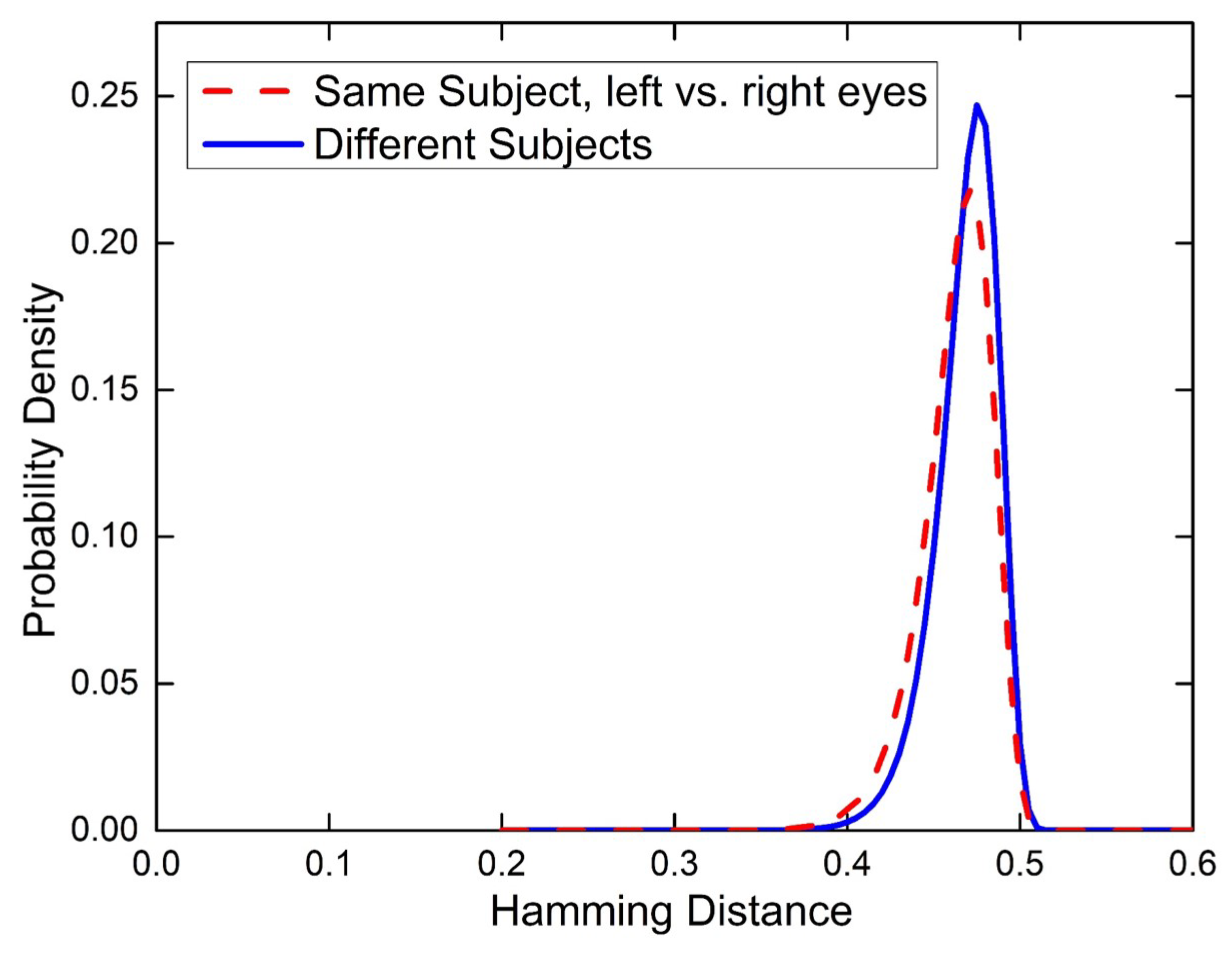

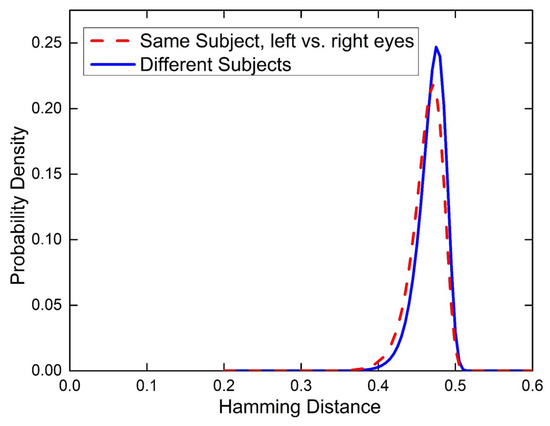

We note that, by using the traditional Daugman approach, it is impossible to distinguish whether two irises (left and right) are from the same or different individuals. Figure 8 shows that comparisons between left and right irises from the same person (blue solid curve) yield Hamming distances similar to those of comparisons between left and right irises from two individuals (red dashed curve). In other words, from an iris recognition perspective, each iris is so distinct that even the left and right irises of the same individual are distinguishable. These results obtained from the CAS-SIAT-Iris dataset are similar to those from the CASIA-Iris-Interval dataset (not shown for clarity), and are consistent with the literature [3].

Figure 8.

The distribution of iris biometric scores between left and right eyes of the same subject is statistically indistinguishable from the distribution of scores from left and right eyes of different subjects. The results were obtained using the CAS-SIAT-Iris dataset, and are consistent with [3].
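The biometric score compared in Figure 8 is a Hamming distance between binary iris codes. A minimal sketch of a fractional Hamming distance is given below, assuming the codes and optional occlusion masks are already available as boolean arrays; the encoding itself and the search over rotations used in practical systems are omitted, and the function name is hypothetical.

```python
import numpy as np


def fractional_hamming_distance(code_a, code_b, mask_a=None, mask_b=None):
    """Fraction of disagreeing bits among the bits that are valid in both codes."""
    code_a = np.asarray(code_a, dtype=bool)
    code_b = np.asarray(code_b, dtype=bool)
    valid = np.ones(code_a.shape, dtype=bool)
    if mask_a is not None:
        valid &= np.asarray(mask_a, dtype=bool)
    if mask_b is not None:
        valid &= np.asarray(mask_b, dtype=bool)
    n_valid = int(valid.sum())
    if n_valid == 0:
        raise ValueError("no valid bits to compare")
    disagreeing = np.count_nonzero((code_a ^ code_b) & valid)
    return disagreeing / n_valid


# Example: two statistically independent random codes of 2048 bits each.
rng = np.random.default_rng(0)
code_left = rng.random(2048) < 0.5
code_right = rng.random(2048) < 0.5
print(fractional_hamming_distance(code_left, code_right))
```

For statistically independent codes the distance concentrates around 0.5, which is why overlapping score distributions for same-subject and different-subject left–right pairs indicate that the codes carry no usable information about their relationship.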

5. Conclusions

In conclusion, we have studied the correlation between the left and right irises. Experimental results have shown that, by using the VGG16 convolutional neural network, pairs of left and right irises from the same or different individuals can be classified with an accuracy above 94% for both the CAS-SIAT-Iris dataset and the CASIA-Iris-Interval dataset. This high accuracy suggests that, by making use of deep learning, it is possible to determine whether a pair of left and right iris images belongs to the same individual or not, a distinction that the traditional approach cannot make. We believe that this classification accuracy can be further improved by collecting more samples, i.e., more iris images from more individuals. We expect this work to inspire further studies on the correlation among multiple biometric features such as face, iris, fingerprint, palmprint, hand vein, retina, speech, and hand geometry for genetically related individuals. The discovered similarities between different biometric identifiers could support more effective criminal investigations.

Author Contributions

Conceptualization, Y.L.; methodology, B.F., Y.L., L.Y., and G.L.; software, B.F.; iris image acquisition, Y.L., Z.Z., and G.J.; modeling and data processing, B.F.; data analysis, all authors; writing—original draft preparation, B.F. and G.L.; writing—review and editing, all authors; supervision, Y.L. and G.L.

Funding

This work was supported by the National Key Research and Development Program of China (No. 2017YFC0803506), the Shenzhen Research Foundation (Grant Nos. JCYJ20170413152328742 and JCYJ20160608153308846), and the Youth Innovation Promotion Association of the Chinese Academy of Sciences (No. 2016320).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Daugman, J.G. High confidence visual recognition of persons by a test of statistical independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

- Daugman, J.G. How iris recognition works. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 21–30.

- Hollingsworth, K.; Bowyer, K.W.; Lagree, S.; Fenker, S.P.; Flynn, P.J. Genetically identical irises have texture similarity that is not detected by iris biometrics. Comput. Vis. Image Underst. 2011, 115, 1493–1502.

- Hollingsworth, K.; Bowyer, K.W.; Flynn, P.J. Similarity of iris texture between identical twins. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 22–29.

- Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. Image understanding for iris biometrics: A survey. Comput. Vis. Image Underst. 2008, 110, 281–307.

- Sturm, R.; Larsson, M. Genetics of human iris colour and patterns. Pig. Cell Melan. Res. 2009, 22, 544–562.

- Qiu, X.; Sun, Z.; Tan, T. Global texture analysis of iris images for ethnic classification. In Advances in Biometrics; Zhang, D., Jain, A.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3832.

- Qiu, X.; Sun, Z.; Tan, T. Learning appearance primitives of iris images for ethnic classification. Proc. IEEE Int. Conf. Image Process. 2007, 2, 405–408.

- Zarei, A.; Mou, D. Artificial neural network for prediction of ethnicity based on iris texture. Proc. IEEE Int. Conf. Mach. Learn. Appl. 2012, 1, 514–519.

- Zhang, H.; Sun, Z.; Tan, T.; Wang, J. Ethnic classification based on iris images. In Chinese Conference on Biometric Recognition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 82–90.

- Sun, Z.; Zhang, H.; Tan, T.; Wang, J. Iris image classification based on hierarchical visual codebook. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1120–1133.

- Marsico, M.; Petrosino, A.; Ricciardi, S. Iris recognition through machine learning techniques: A survey. Pattern Recognit. Lett. 2016, 82, 106–115.

- Minaee, S.; Abdolrashidiy, A.; Wang, Y. An experimental study of deep convolutional features for iris recognition. In Proceedings of the 2016 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 3 December 2016.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Thomas, V.; Chawla, N.V.; Bowyer, K.W.; Flynn, P.J. Learning to predict gender from iris images. In Proceedings of the 2007 First IEEE International Conference on Biometrics: Theory, Applications, and Systems, Crystal City, VA, USA, 27–29 September 2007.

- Tapia, J.E.; Perez, C.A.; Bowyer, K.W. Gender classification from the same iris code used for recognition. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1760–1770.

- Lagree, S.; Bowyer, K.W. Predicting ethnicity and gender from iris texture. In Proceedings of the 2011 IEEE International Conference on Technologies for Homeland Security (HST), Waltham, MA, USA, 15–17 November 2011.

- Liu, N.; Zhang, M.; Li, H.; Sun, Z.; Tan, T. DeepIris: Learning pairwise filter bank for heterogeneous iris verification. Pattern Recognit. Lett. 2016, 82, 154–161.

- Gangwar, A.; Joshi, A. Deep iris representation with applications in iris recognition and cross-sensor iris recognition. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2301–2305.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access 2018, 6, 18848–18855.

- Bobeldyk, D.; Ross, A. Analyzing covariate influence on gender and race prediction from near-infrared ocular images. IEEE Access 2019, 7, 7905–7919.

- Al-Waisy, A.S.; Qahwaji, R.; Ipson, S.; Al-Fahdawi, S.; Nagem, T.A.M. A multi-biometric iris recognition system based on a deep learning approach. Pattern Anal. Appl. 2018, 21, 783–802.

- Liu, S.; Deng, W. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 730–734.

- Liu, L.; Li, H.; Dai, Y. Stochastic attraction-repulsion embedding for large scale image localization. arXiv 2018, arXiv:1808.08779.

- Qassim, H.; Verma, A.; Feinzimer, D. Compressed residual-VGG16 CNN model for big data places image recognition. In Proceedings of the 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; pp. 169–175.

- Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-based r-CNNs for fine-grained category detection. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 834–849.

- CASIA Iris Image Database. Available online: http://biometrics.idealtest.org/dbDetailForUser.do?id=4 (accessed on 20 January 2019).

- Krizhevsky, A.; Sutskever, I.; Hinton, G. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Lake Tahoe, NV, USA, 2012; pp. 1097–1105.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).