1. Introduction

Over the past decades, numerous attempts have been made to allow patients with paralysis caused by neurological disorders, such as amyotrophic lateral sclerosis or spinal cord injury, to communicate with the external world using an electroencephalography (EEG)-based brain–computer interface (BCI) [1]. Recently, BCI applications have been extended from clinical areas to non-medical fields for healthy users, including entertainment, cognitive training, and other areas [2]. In particular, it has become plausible for BCI users to control home systems by thought through the Internet of things (IoT) [3].

One type of EEG-based BCI leverages event-related potentials (ERPs), mostly their P300 component (P300), to enable selective control of communication interfaces through attentive brain responses to target stimuli [4]. For instance, a speller built on a P300-based BCI (P300 BCI) allows a user to type a letter simply by selectively attending to the target letter [5]. However, the performance of P300 BCIs relying on visual stimuli is vulnerable to visual distraction caused by interference from adjacent stimuli or other environmental distractors [1,6,7,8]. This issue has been practically resolved by designing stimulus presentation paradigms that minimize interference between target and non-target stimuli [8]. On the other hand, recent studies have reported that complex visual and auditory distractions did not affect the P300 amplitude or BCI performance, because the increased task difficulty enhanced brain responses [9,10]. These findings suggest that P300 BCIs can be used in daily living environments where visual and auditory distractions are common.

To design a BCI with visual stimuli, a few studies have also proposed using the N200 component (N200) of ERPs [11]. N200 is evoked by exogenous attentional stimuli and has been shown to be a useful feature for BCIs, since its amplitude remains relatively stable even under visual-motion distraction [11,12]. Guan et al. showed that an N200 BCI conveyed as much information about users' intentions as a P300 BCI did [13]. Moreover, an N200-based BCI speller using motion-onset visual responses demonstrated performance similar to that of the P300 speller [11,12]. Accordingly, integrating P300 and N200 can be advantageous for maintaining robust BCI performance in home appliance control [12].

In environmental control, ERPs, especially P300, have been widely used for BCIs. To elicit P300, visual stimuli have most commonly been arranged in the form of a matrix. The matrix-based stimulus presentation paradigm, originally designed for a P300 speller [14], has also been used to control environmental devices by replacing the spelling characters with icons associated with device control functions [15,16,17,18,19,20,21,22]. Among the studies that chose the matrix-based paradigm for their P300 BCIs, some specifically considered real-life situations of environmental control. Schettini et al. developed a P300 BCI system with which amyotrophic lateral sclerosis (ALS) patients could control devices and showed that its usability was comparable to that of other user interfaces such as touch screens and buttons [20]. Corralejo et al. proposed a P300 BCI with which disabled people could control multiple devices, taking real-life scenarios into account [17]; the proposed BCI received favorable reviews of its design and usefulness from users with motor or cognitive disabilities. Zhang et al. also developed an environmental control system that enables patients with spinal cord injuries to control multiple home appliances with a P300 BCI. Their BCI went further toward real-life use by including an asynchronous mode that allowed users to switch the environmental control system and select devices [22]. Another proposed arrangement of icons is the region-based paradigm (RBP) [23]. Aydin et al. [24,25] designed a Web-based P300 BCI for controlling home appliances in which a two-level RBP enabled users to control various appliances in a single interface without complex visual presentation. Despite recent advances in virtual reality (VR) and augmented reality (AR), only a few studies have confirmed the feasibility of using VR or AR as a new visual interface for P300 BCIs, presenting matrix-based visual stimuli in a user's real or virtual environment [26,27].

However, previously developed BCIs for environmental control required a separate display to provide visual stimuli [15,16,17,19,21,22,28,29,30], and this display presents only control icons as visual stimuli. In such a system, users cannot see the real devices they are controlling and find it difficult to recognize instantly whether the devices operate as intended. Considering the real-life situation of controlling home appliances, it is desirable for a BCI user interface (UI) to show both the visual stimuli and the resulting operation of the devices on a single screen. Some home appliances whose main purpose is displaying video, such as TVs and video intercoms, can additionally show stimuli on their existing screens while operating [31,32]. In contrast, most home appliances (e.g., lamps, fridges, and washing machines) have only a limited screen or none, and thus require a separate screen for a UI that integrates visual stimuli and control results. Therefore, we propose a UI that displays control icons together with a real-time image of the corresponding appliance, and we verify that the proposed UI works effectively in a P300-based BCI even with the potential distractions added by the live image of the appliance.

In this study, we developed a set of real-time BCIs for controlling home appliances, including a TV set (BCITV), a digital door-lock (DL) (BCIDL), and an electric light (EL) (BCIEL). The developed BCIs harnessed both P300 and N200 to overcome visual distractions. For BCITV, we developed a UI based on the Multiview TV function showing four different preview channels simultaneously along with a main channel to which the BCI user attended. For both BCIDL and BCIEL, we developed a see-through UI on the tablet screen that captured a live image of the appliances while displaying appliance control icons on top of the live image. The control commands for BCIDL included lock and unlock, whereas those for BCIEL included the degrees of brightness. We evaluated the applicability of our online BCIs for controlling diverse home appliances in an unshielded environment.

2. Materials and Methods

2.1. Participants

Sixty healthy subjects participated in the study (14 females; mean age, 21.7 ± 2.3 years). Subjects had no history of neurological disease or injury and reported good sleep of over seven hours (7.4 ± 1.6 hours) the night before the experiment. Thirty subjects participated in the BCITV experiment, fifteen in the BCIDL experiment, and fifteen in the BCIEL experiment. In previous studies, the number of subjects ranged from 5 to 18 [5,6,8,12,15,17,19,20,22,24,25,26], so we set the number of subjects at a level comparable to this range. All subjects gave informed consent for this study, which was approved by the Institutional Review Board of the Ulsan National Institute of Science and Technology (IRB: UNISTIRB-18-08-A).

2.2. EEG Recordings

The scalp EEG of subjects was recorded from 31 active wet electrodes (FP1, FPz, FP2, F7, F3, Fz, F4, F8, FC5, FC1, FCz, FC2, FC6, T7, C3, Cz, C4, CP5, T8, CP1, CPz, CP2, CP6, P7, P3, Pz, P4, P8, O1, Oz, and O2) using a standard EEG cap following the 10–20 system of the American Clinical Neurophysiology Society Guideline 2 (actiCHamp, Brain Products GmbH, Germany). The reference and ground electrodes were placed on the left and right mastoids, respectively. The impedances of all electrodes were kept below 5 kΩ. EEG signals were digitized at 500 Hz and band-pass filtered between 0.01 and 50 Hz.

2.3. Experiment Setup

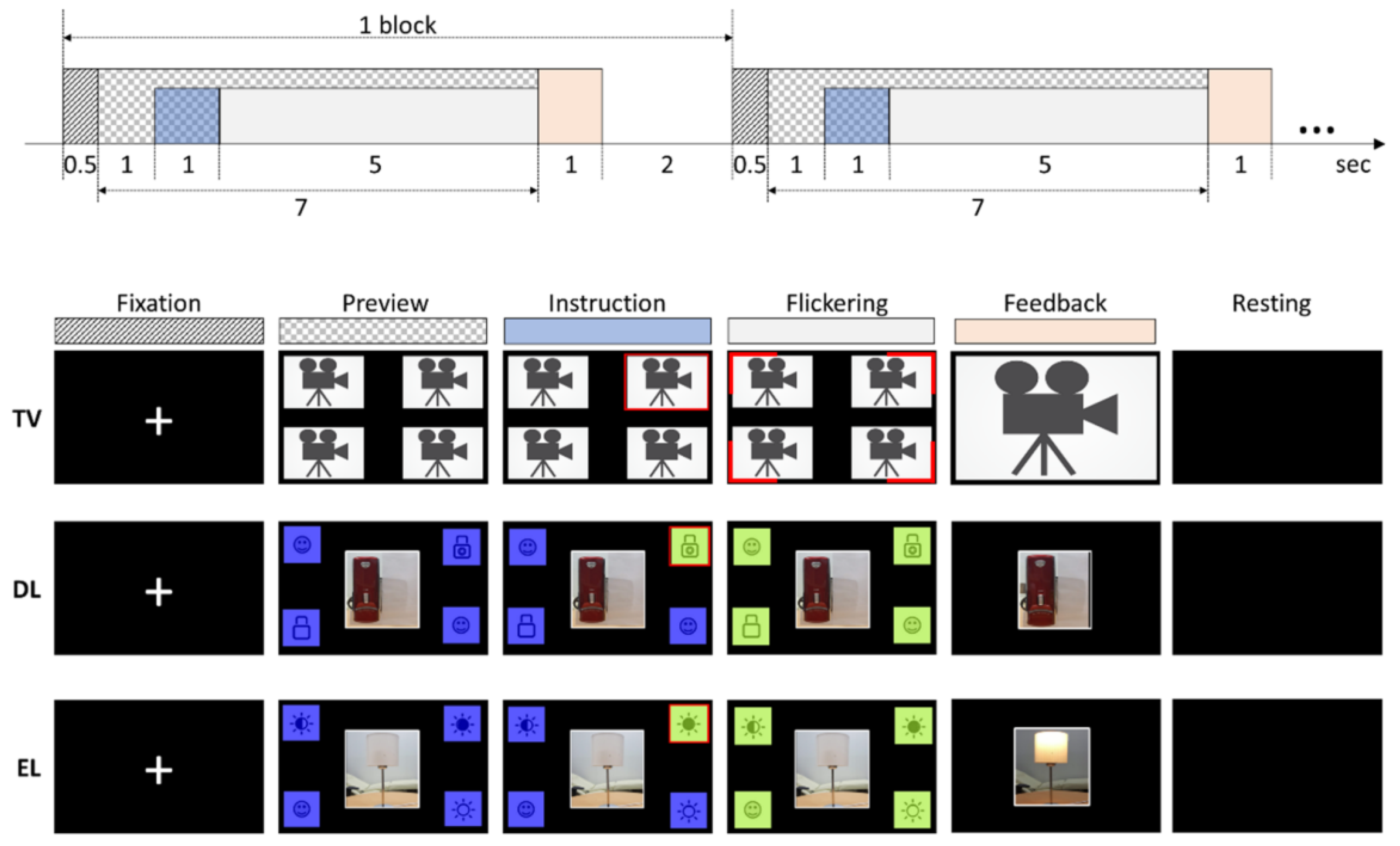

To build a BCI for controlling TV channels, we developed an emulated Multiview TV platform that displayed four preview channels simultaneously in the four quadrants around the screen center (see Figure 1). The video clip in each channel served as a preview of that channel but, at the same time, acted as a visual distractor. The video clips were presented on a 50-inch commercial ultra-high-definition television (UHD TV; LG Electronics Co., Ltd., Republic of Korea) with a refresh rate of 60 Hz and a resolution of 1920 × 1080. The corners of each video clip window were surrounded by additional red stimuli. Each of these four stimuli flickered at 8 Hz, with each 125 ms flicker period divided into a 62.5 ms flash phase and a 62.5 ms dark phase [33].

A trial block began with gaze fixation at the center of the screen. Then, the target channel was indicated by turning its boundary red. Following this instruction, the four video clips were displayed simultaneously together with the four surrounding stimuli, which flickered one at a time in random order, 10 times each (Figure 1). Subjects were instructed to gaze at the stimulus surrounding the target channel while seated comfortably in an armchair placed 2.5 m from the TV screen. Then, the video clip of the channel selected by either the system software (during training) or the BCI (during testing) was presented full screen for 1000 ms as feedback. The locations of the video clips were shuffled across trial blocks.
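The stimulation timing of one trial block can be made concrete with a short schedule generator. This is only an illustrative sketch under assumptions: it assumes that each of the 10 repetitions is a random permutation of the four stimuli and that consecutive flashes start one 125 ms flicker period apart, whereas the text does not say how the random order was constrained. The names `make_block_schedule` and `frames` are hypothetical. Under these assumptions, the stimulation of one block lasts 40 × 125 ms = 5 s. The sketch also shows that a 62.5 ms phase spans 3.75 frames at 60 Hz, so a frame-locked renderer would need to round phase durations to whole frames.

```python
import random
from dataclasses import dataclass

# Timing values from the text: 8 Hz flicker, each 125 ms period split into
# a 62.5 ms flash phase and a 62.5 ms dark phase.
FLICKER_HZ = 8.0
PERIOD_MS = 1000.0 / FLICKER_HZ   # 125 ms
FLASH_MS = PERIOD_MS / 2.0        # 62.5 ms
N_STIMULI = 4                     # four channels / icons (including dummies)
N_REPETITIONS = 10                # each stimulus flashes 10 times per block


@dataclass
class Flash:
    stimulus: int     # index of the flashing stimulus (0..3)
    onset_ms: float   # flash onset relative to the start of stimulation
    offset_ms: float  # end of the flash phase


def make_block_schedule(seed=None):
    """Hypothetical schedule: every repetition is a random permutation of the
    four stimuli, and flashes follow each other every PERIOD_MS."""
    rng = random.Random(seed)
    schedule = []
    t = 0.0
    for _ in range(N_REPETITIONS):
        order = list(range(N_STIMULI))
        rng.shuffle(order)
        for s in order:
            schedule.append(Flash(s, t, t + FLASH_MS))
            t += PERIOD_MS
    return schedule


def frames(duration_ms, refresh_hz=60.0):
    """Number of display frames covered by a duration (can be fractional)."""
    return duration_ms * refresh_hz / 1000.0


if __name__ == "__main__":
    block = make_block_schedule(seed=0)
    print(len(block), block[-1].offset_ms)  # 40 flashes, last one ends at 4937.5 ms
    print(frames(FLASH_MS))                 # 3.75 frames at 60 Hz
```

Drawing each repetition as a permutation guarantees that every stimulus flashes exactly 10 times; a real paradigm might add further constraints (for example, avoiding the same stimulus twice in a row across repetitions).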

The BCI experiments for controlling the DL and the EL employed the same paradigm as the TV experiment, except that the stimuli were presented on a tablet PC screen. Furthermore, instead of additional flickering stimuli surrounding the video clips, as in BCITV, the control icons themselves, located at the four corners of the screen, flickered. Subjects were instructed to select one of two control icons for BCIDL (lock/unlock) or one of three for BCIEL (on/off/±brightness). To keep the ratio of target to non-target stimuli at 1:3 for all BCIs developed here, we added two dummy stimuli (which also flickered) to BCIDL and one to BCIEL.

2.4. EEG Preprocessing and Online BCI

EEG signal preprocessing in our analysis included bad channel removal, re-referencing, and artifact removal [11,12,13,33,34]. Bad channels were detected by the cross-correlation of low-pass filtered (<2 Hz) oscillations between neighboring channels [34]. In our case, a channel whose average correlation with its neighboring channels was less than 0.4 was deemed bad and removed from the analysis. Noise components originating from the reference were eliminated by common average referencing (CAR) [5]. This reference-free EEG was band-pass filtered at 0.5–12 Hz with a fourth-order Butterworth infinite impulse response (IIR) filter. Artifacts were removed by the artifact subspace reconstruction (ASR) method [35]. ERPs were extracted by segmenting and averaging the EEG signals in epochs time-locked to the stimuli, where each segment spanned from 200 ms before to 600 ms after stimulus onset. Finally, we applied a matched filter to enhance the ERP waveforms [5].

In each experiment, the training session consisted of 50 trial blocks and the testing session of 30 trial blocks. During the training session, the user gazed at a randomly displayed target, and the feedback showed the actual target rather than a decoded one. From the acquired training data, we selected amplitude features that differed significantly between the target and non-target ERPs (two-sample t-test, p < 0.01). Then, we reduced the dimensionality of the feature space using principal component analysis (PCA), retaining the number of principal components needed to explain more than 90% of the feature variance. Next, we built a classifier that identified the target from the ERP features using a support vector machine (SVM) with a linear kernel. During the testing session, subjects controlled the given home appliance by following the target instruction with the trained BCI. The classifier trained in the training session predicted the intended target command from the ERPs, and the prediction was displayed as feedback.
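The training pipeline described above (t-test feature selection, PCA, and a linear SVM) might look as follows with SciPy and scikit-learn. This is a sketch under assumptions: the features are taken to be flattened channel × time amplitudes of labeled target and non-target ERPs, and the test-time rule (choose the stimulus whose ERP receives the largest SVM decision value) is a hypothetical choice, since the text does not specify how the four candidate stimuli were compared. The class name `ERPClassifier` is illustrative.

```python
import numpy as np
from scipy.stats import ttest_ind
from sklearn.decomposition import PCA
from sklearn.svm import SVC


class ERPClassifier:
    """Hypothetical sketch: t-test feature selection -> PCA -> linear SVM."""

    def __init__(self, alpha=0.01, var_explained=0.90):
        self.alpha = alpha                  # t-test threshold from the text
        self.var_explained = var_explained  # PCA variance criterion from the text

    def fit(self, X, y):
        """X: (n_erps, n_features) amplitudes; y: 1 = target, 0 = non-target."""
        _, p = ttest_ind(X[y == 1], X[y == 0], axis=0)
        self.mask_ = p < self.alpha  # keep features that differ between classes
        if not self.mask_.any():
            raise ValueError("no feature passed the t-test")
        # A float n_components in (0, 1) keeps just enough components to
        # explain more than that fraction of the variance.
        self.pca_ = PCA(n_components=self.var_explained, svd_solver="full")
        Z = self.pca_.fit_transform(X[:, self.mask_])
        self.svm_ = SVC(kernel="linear").fit(Z, y)
        return self

    def decision(self, X):
        """Signed SVM scores; positive values favor the target class."""
        return self.svm_.decision_function(self.pca_.transform(X[:, self.mask_]))

    def select_target(self, candidate_erps):
        """Assumed rule: pick the stimulus whose ERP looks most target-like."""
        return int(np.argmax(self.decision(candidate_erps)))
```

In use, `fit` would be called on the training blocks and `select_target` on the four ERPs (one per flashing stimulus) of each test block; for the DL and EL interfaces, the dummy stimuli would simply be candidates that are never correct.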

2.5. Evaluation

To evaluate the discriminability of ERPs between the target and non-target stimuli, we calculated the proportion of the EEG signal variance explained by the stimuli ($R^2$), which represents the degree of difference between the target and non-target ERPs [36]. Hence, the magnitude of $R^2$ is likely to be associated with BCI performance.

$R^2$ at time $t$ was defined as the ratio of the explained sum of squares (ESS) to the total sum of squares (TSS):

$$R^2(t) = \frac{\mathrm{ESS}(t)}{\mathrm{TSS}(t)},$$

where $\bar{x}(t)$ is the magnitude of the grand average of all ERPs for both target and non-target stimuli, providing a baseline ERP magnitude; $x_k(t)$ is the magnitude of the $k$-th single ERP, for either a target or a non-target stimulus; and $\hat{x}_k(t)$ is the modeled ERP, i.e., the magnitude of the average target ERP or the average non-target ERP, depending on the class of the $k$-th ERP. The modeled ERP is a representative target or non-target ERP estimated by averaging the single-trial target or non-target ERPs, respectively. More blocks with clear ERP components lead to a higher $R^2$, close to 1. If some blocks do not show a clear ERP component, the magnitude of the average target ERP becomes smaller than that of the single ERPs, resulting in a smaller $R^2$. ESS and TSS are given by

$$\mathrm{ESS}(t) = \sum_{k=1}^{K+K'} \left(\hat{x}_k(t) - \bar{x}(t)\right)^2, \qquad \mathrm{TSS}(t) = \sum_{k=1}^{K+K'} \left(x_k(t) - \bar{x}(t)\right)^2,$$

where $K$ and $K'$ indicate the number of presentations of target and non-target stimuli, respectively.
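Assuming $x_k(t)$ is the signed amplitude of the $k$-th single ERP at one channel, $R^2(t)$ can be computed for all channels and time points at once. The sketch below (the function name `r_squared` is hypothetical) forms the modeled ERP by replacing each single ERP with the average of its class, following the definition of the modeled ERP given in this section.

```python
import numpy as np


def r_squared(epochs, labels):
    """Proportion of variance explained by the stimulus class, R^2 = ESS/TSS.

    epochs: array (n_epochs, n_channels, n_times) of single ERPs x_k(t)
    labels: array (n_epochs,), 1 for target and 0 for non-target
    Returns an array (n_channels, n_times).
    """
    epochs = np.asarray(epochs, dtype=float)
    labels = np.asarray(labels)
    grand = epochs.mean(axis=0)        # grand average, x_bar(t)
    modeled = np.empty_like(epochs)    # modeled ERP, x_hat_k(t)
    for c in np.unique(labels):
        modeled[labels == c] = epochs[labels == c].mean(axis=0)
    ess = ((modeled - grand) ** 2).sum(axis=0)  # explained sum of squares
    tss = ((epochs - grand) ** 2).sum(axis=0)   # total sum of squares
    return ess / tss
```

Because the class means are least-squares fits, ESS never exceeds TSS, so the result lies between 0 and 1; a channel-time mask such as `r_squared(epochs, labels) > 0.8` would then pick out highly discriminable ERPs.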

For the ERPs showing a high proportion of explained variance ($R^2 > 0.8$), we measured the peak latencies of the P300 and N200 components. The peak latency of each component could reflect characteristics of the cognitive processes involved in controlling different home appliances.

To assess BCI performance, we first measured accuracy and the information transfer rate (ITR). Accuracy was calculated as

$$\mathrm{Accuracy} = \frac{n}{N} \times 100\%,$$

where $n$ denotes the number of trial blocks in which the BCI selected the correct target and $N$ denotes the total number of trial blocks (i.e., 30 in our testing scenario). ITR was calculated as

$$\mathrm{ITR} = \frac{60}{T}\left[\log_2 C + P\log_2 P + (1-P)\log_2\frac{1-P}{C-1}\right],$$

where $P$ denotes the measured accuracy, $C$ denotes the number of classes, and $T$ denotes the time in seconds required for one selection, so that ITR is expressed in bits/min. We also assessed the efficiency of BCI control by measuring changes in accuracy and ITR according to the number of repetitions of stimulus presentation, which varied from 1 to 10 in this analysis. To verify the reliability of performance, we determined the chance level of each device control from a distribution obtained with surrogate data, which were generated by randomly shuffling the class labels. Specifically, we repeated 1000 times a procedure in which a classifier was built and tested using the surrogate data, and we regarded the upper bound of the 95% interval of the resulting accuracies as the chance level.
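The accuracy and ITR formulas, together with the label-shuffling procedure for the chance level, can be sketched as follows. The selection time `t_sel_s` must be supplied by the caller, since it depends on the stimulation and feedback timing, and `fit_and_score` is a hypothetical callback that trains and tests a classifier on shuffled labels. Whether the "upper bound of the 95% interval" means the 95th or the 97.5th percentile is not stated, so the percentile is left as a parameter.

```python
import math

import numpy as np


def accuracy(n_correct, n_blocks):
    """Fraction of trial blocks in which the correct target was selected."""
    return n_correct / n_blocks


def bits_per_selection(p, c):
    """Bits per selection (Wolpaw formula) for accuracy p (0..1) and c classes."""
    if c < 2:
        raise ValueError("ITR needs at least two classes")
    bits = math.log2(c)
    if p > 0:   # the p*log2(p) term vanishes as p -> 0
        bits += p * math.log2(p)
    if p < 1:   # the error term vanishes when p == 1
        bits += (1 - p) * math.log2((1 - p) / (c - 1))
    return bits


def itr_bits_per_min(p, c, t_sel_s):
    """ITR in bits/min; t_sel_s is the time per selection in seconds."""
    return bits_per_selection(p, c) * 60.0 / t_sel_s


def permutation_chance_level(fit_and_score, X, y, n_perm=1000, q=95.0, seed=0):
    """Train/test a classifier on label-shuffled data n_perm times and return
    the q-th percentile of the resulting accuracies as the chance level."""
    rng = np.random.default_rng(seed)
    scores = [fit_and_score(X, rng.permutation(y)) for _ in range(n_perm)]
    return float(np.percentile(scores, q))
```

As sanity checks, `bits_per_selection(1.0, 4)` gives 2 bits (perfect four-class selection) and `bits_per_selection(0.25, 4)` gives 0 bits (guessing among four classes).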

Finally, we analyzed potential differences in ERPs between a good performance group (Good PG) and a poor performance group (Poor PG). Each subject was assigned to the Good PG if their performance (i.e., accuracy) was higher than the mean of all subjects, and to the Poor PG otherwise. Then, for each subject, we quantified the distinctness of ERP features between classes (i.e., target vs. non-target) using Fisher's ratio (FR), which represents the degree of separation between classes:

$$\mathrm{FR} = \frac{\sigma_b^2}{\sigma_w^2},$$

where $\sigma_b^2$ is the between-class variance and $\sigma_w^2$ is the within-class variance.
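Fisher's ratio can be computed per feature from the between-class and within-class sums of squares around the overall and class means. The sketch below averages the per-feature ratio over features to obtain one value per subject; that aggregation, and the function name `fisher_ratio`, are assumptions, because the text does not state how FR was summarized over the multidimensional feature space.

```python
import numpy as np


def fisher_ratio(features, labels):
    """Mean over features of between-class / within-class variance.

    features: array (n_samples, n_features), e.g. PCA scores of ERPs
    labels: array (n_samples,), class of each sample (target / non-target)
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    overall = features.mean(axis=0)
    between = np.zeros(features.shape[1])
    within = np.zeros(features.shape[1])
    for c in np.unique(labels):
        fc = features[labels == c]
        mc = fc.mean(axis=0)
        between += len(fc) * (mc - overall) ** 2   # spread of the class means
        within += ((fc - mc) ** 2).sum(axis=0)     # spread inside each class
    # Both variances share the same 1/n normalization, which cancels here.
    return float(np.mean(between / within))
```

For example, `fisher_ratio([[0], [2], [10], [12]], [0, 0, 1, 1])` returns 25.0: the class means (1 and 11) lie 5 units from the overall mean of 6, while each sample lies only 1 unit from its class mean.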

2.6. Statistical Tests

Statistical tests were conducted on the following hypotheses about the results of our online BCI experiments for controlling home appliances. The first hypothesis concerned ERP peak latency and was used to compare the ERP patterns of BCITV, BCIDL, and BCIEL. In this case, the null hypothesis was formed as follows [37]:

H0: There is no difference in the average peak latency between appliances.

The next hypotheses compared the performance metrics between appliances, with the null hypotheses given by:

H0: There is no difference in the average accuracy between appliances.

H0: There is no difference in the average ITR between appliances.

For these hypotheses, the independent variable was the appliance (TV, DL, or EL) controlled by the BCIs, and one-way analysis of variance (ANOVA) was used to test them. The sample size equaled the number of subjects: 30 for BCITV and 15 each for BCIDL and BCIEL. The significance level (α) was set to 0.05.

Another statistical test was performed to compare Fisher's ratio between the performance-based groups (i.e., Good PG and Poor PG). The null hypothesis was set as:

H0: There is no difference in Fisher's ratio between the Good PG and the Poor PG.

This hypothesis was tested separately for each appliance using the Mann–Whitney U test. The sample size was equal to the number of subjects in each group, and α = 0.05.
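Both tests are available in SciPy. The sketch below wraps `scipy.stats.f_oneway` and a two-sided `scipy.stats.mannwhitneyu` at α = 0.05; the wrapper names are hypothetical, and the values in the example call are placeholders, not data from this study.

```python
from scipy.stats import f_oneway, mannwhitneyu

ALPHA = 0.05  # significance level used in the text


def anova_across_appliances(tv, dl, el, alpha=ALPHA):
    """One-way ANOVA with the appliance (TV, DL, EL) as the single factor.
    Each argument holds one value per subject (e.g., accuracy or latency)."""
    res = f_oneway(tv, dl, el)
    return float(res.statistic), float(res.pvalue), bool(res.pvalue < alpha)


def mann_whitney_good_vs_poor(good, poor, alpha=ALPHA):
    """Two-sided Mann-Whitney U test of Fisher's ratio, Good PG vs. Poor PG."""
    res = mannwhitneyu(good, poor, alternative="two-sided")
    return float(res.statistic), float(res.pvalue), bool(res.pvalue < alpha)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(anova_across_appliances([0.9, 0.8, 0.85], [0.7, 0.75, 0.8], [0.8, 0.82, 0.78]))
    print(mann_whitney_good_vs_poor([2.1, 1.8, 2.5, 1.9], [0.9, 1.1, 0.7]))
```

The Mann–Whitney U test is rank-based, so it does not assume normally distributed Fisher's ratios, which suits the small, possibly unbalanced performance groups.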

3. Results

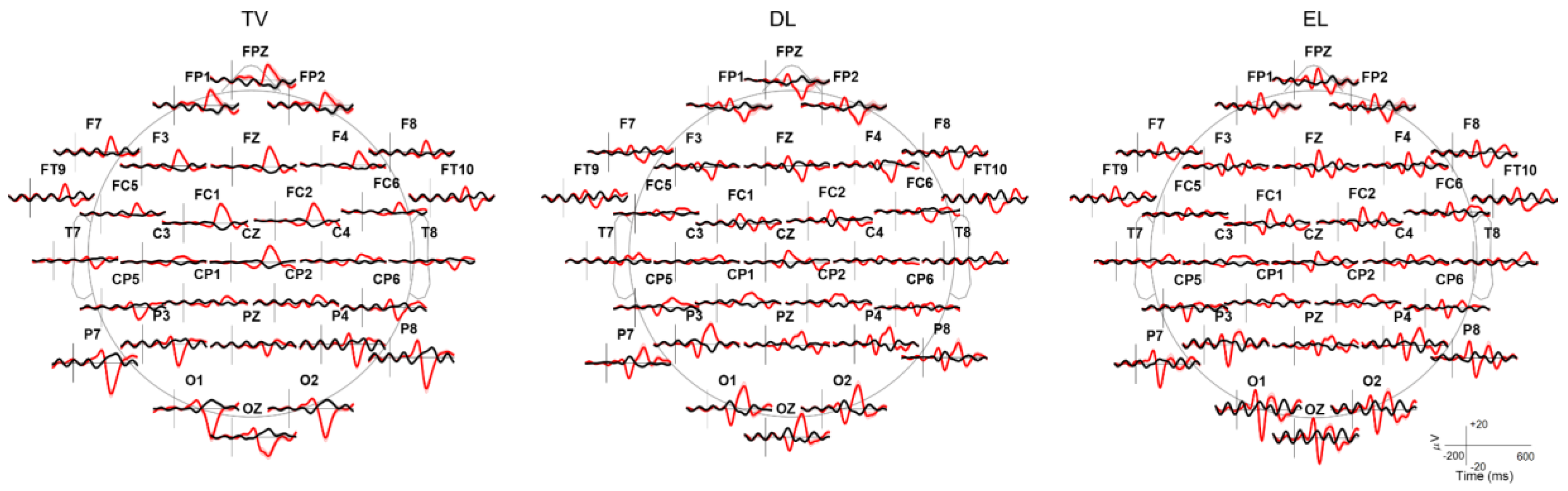

We examined the ERP patterns generated during BCI control of each home appliance. Figure 2 depicts the grand average ERPs in response to the target and non-target stimuli at all EEG channels. The target stimuli clearly elicited large deflections with locally distributed ERP components, whereas the non-target stimuli did not. The target stimuli appeared to elicit more prominent P300 and N200 components in BCITV than in BCIDL and BCIEL. The spatiotemporal ERP patterns also appeared to differ between the BCIs for the different appliances. In BCITV, a positive component was dominantly observed over the frontocentral area 300 ms after stimulus onset (i.e., P300), along with a negative component mostly observed over the occipitoparietal area 338 ms after onset (i.e., N2pc, where pc denotes a posterior contralateral scalp distribution). In BCIDL, by contrast, P300 was predominantly observed over the occipitoparietal area, whereas N2pc was found over the frontal area. Unlike the frontocentral P300 in BCITV, the P300 in BCIDL appeared right after a smaller negative component. In BCIEL, the ERP components were shorter than those in the other BCIs, and neither P300 nor N2pc was clearly observed. The most dominant component over the frontal area was a positive component appearing earlier than the P300 in the other BCIs, preceded and followed by smaller negative components, and the dominant component over the occipitoparietal area was a negative component, preceded and followed by smaller positive components.

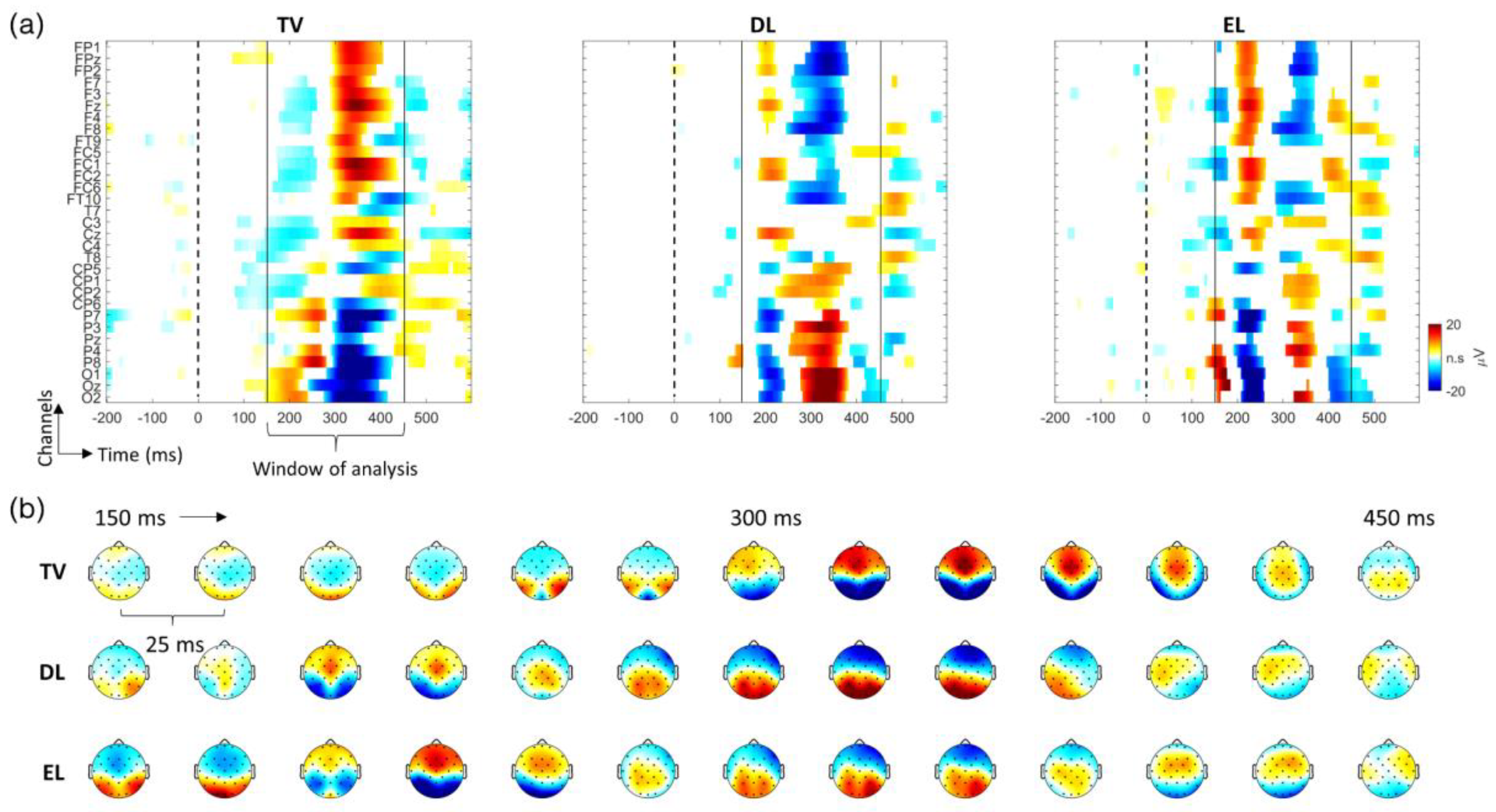

Statistical evaluation of the ERP patterns revealed the appliance-specific spatiotemporal ERP patterns more clearly. Figure 3a depicts time–channel maps of the ERP amplitudes that differed significantly from baseline in response to the target stimuli (one-way analysis of variance on a sample-by-sample basis, p < 0.01). The maps for each BCI showed that significant ERP components appeared predominantly in a time window between 150 and 450 ms. Hence, we set the window of ERP analysis to this period and generated a series of topographic maps every 25 ms within the window.

The spatiotemporal ERPs in this time window showed dissimilar patterns across home appliances, as illustrated in Figure 3b. In BCITV, a small positive deflection began to appear at 200 ms in the occipital area and spread forward over the parietal and frontal areas. This positive deflection (i.e., positivity) became pronounced in anterior areas after 300 ms; at the same time, a negative deflection (i.e., negativity) that had arisen in the occipital area before 300 ms became pronounced in posterior areas, in clear contrast with the anterior positivity. In BCIDL, a spatial pattern of anterior positivity together with posterior negativity, similar to the pattern shown after 350 ms in BCITV, appeared earlier, at 200 ms. Then, the posterior negativity faded out and the anterior positivity moved backward over posterior areas. Around 300 ms, negative deflections replaced the previous positivity, with wider coverage of lateral areas. At the same time, the posterior positivity that had migrated from anterior areas became larger and wider over lateral areas. This pattern of anterior negativity together with posterior positivity gradually disappeared by 400 ms. In BCIEL, the spatiotemporal ERP patterns changed more rapidly than in the other BCIs. A spatial pattern of weaker anterior negativity and stronger posterior positivity began to appear after 150 ms and reversed polarity after 200 ms. Then, a pronounced pattern of anterior positivity along with posterior negativity, similar to the spatial pattern shown at 300 ms in BCITV, appeared briefly. Finally, an opposite pattern of anterior negativity and posterior positivity, similar to that in BCIDL, emerged after 300 ms.

We also examined the latencies of the ERP components observed within this time window. Table 1 shows the latencies of the most predominant positive and negative ERP amplitudes, i.e., those with the greatest t-values (paired t-test). On average, BCITV yielded the longest latencies for both positive and negative deflections (positive peak latency: 312.26 ± 66.02 ms; negative peak latency: 271.81 ± 97.46 ms), whereas BCIEL yielded the shortest (positive peak latency: 235.03 ± 104.52 ms; negative peak latency: 226.26 ± 102.75 ms). A one-way analysis of variance (ANOVA) of latency with home appliance as the factor showed a significant difference in latency among the three appliances for both the positive deflection (F = 9.91, p < 0.01) and the negative deflection (F = 21.18, p < 0.01).

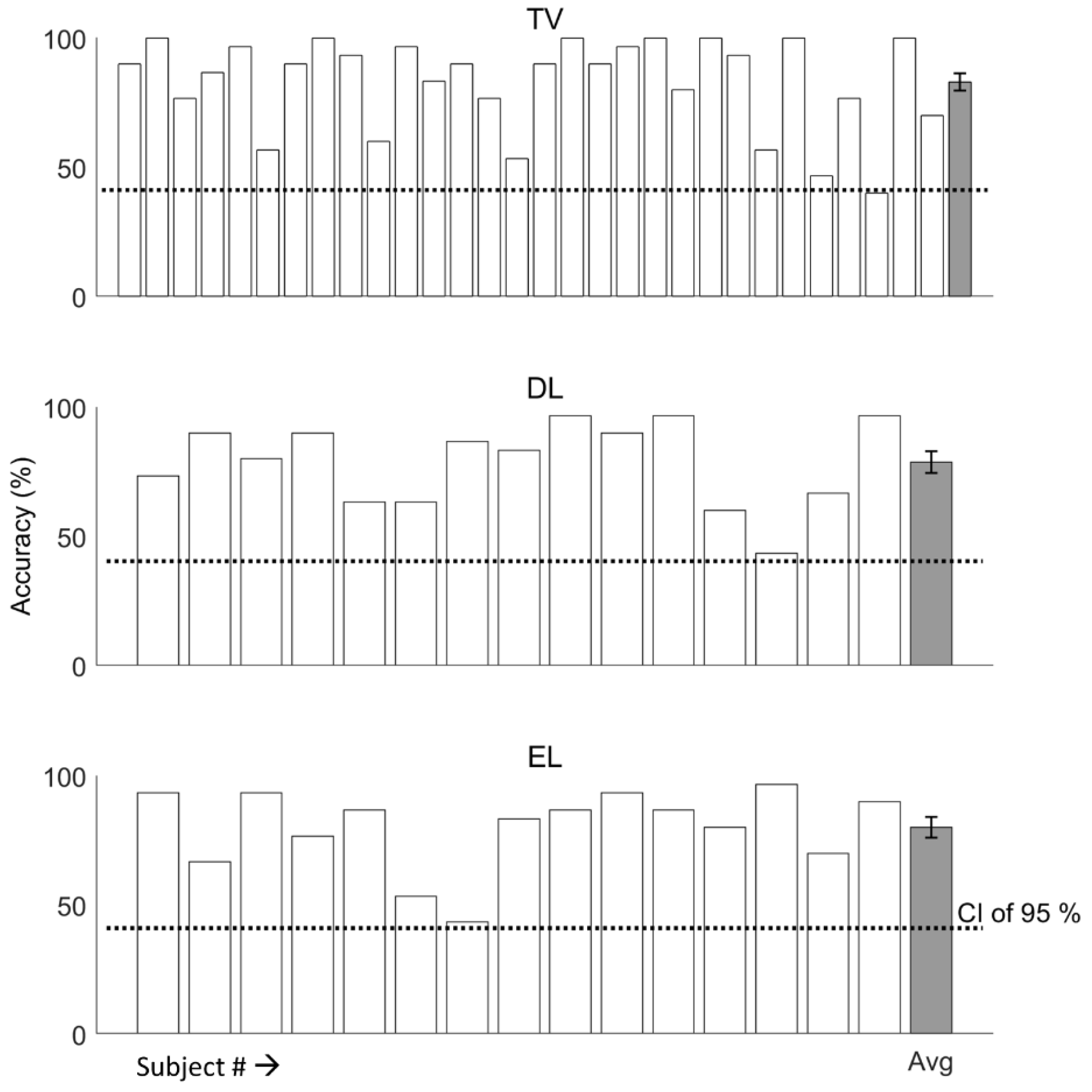

Next, we tested the online BCI control performance for each appliance. Figure 4 shows the online accuracy of each appliance control. BCITV, BCIDL, and BCIEL achieved average accuracies across subjects of 83.0% ± 17.9% (range, 40.0%–100%), 78.7% ± 16.2% (43.3%–96.7%), and 80.0% ± 15.6% (43.3%–96.7%), respectively. One-way ANOVA showed no significant difference in accuracy between the BCIs (p = 0.69). The chance level of classification, obtained from the surrogate data, was 41.17% for BCITV, 40.35% for BCIDL, and 41.02% for BCIEL (see Section 2.5). The average ITR was 12.06 ± 6.19, 3.44 ± 2.78, and 7.27 ± 3.71 bits/min for BCITV, BCIDL, and BCIEL, respectively. One-way ANOVA showed a significant difference in ITR (F = 16.67, p < 0.001).

In a post hoc analysis, we examined the effect of the number of stimulus repetitions on BCI performance. Figure 5 depicts BCI performance as a function of the number of stimulus repetitions, calculated offline after the online BCI experiments. Both performance measures, accuracy and ITR [38], increased with the number of repetitions but saturated beyond a certain point, after which no further significant increase was achieved (paired t-test, p < 0.05). BCITV required the most repetitions (nine), whereas BCIEL and BCIDL each required six.

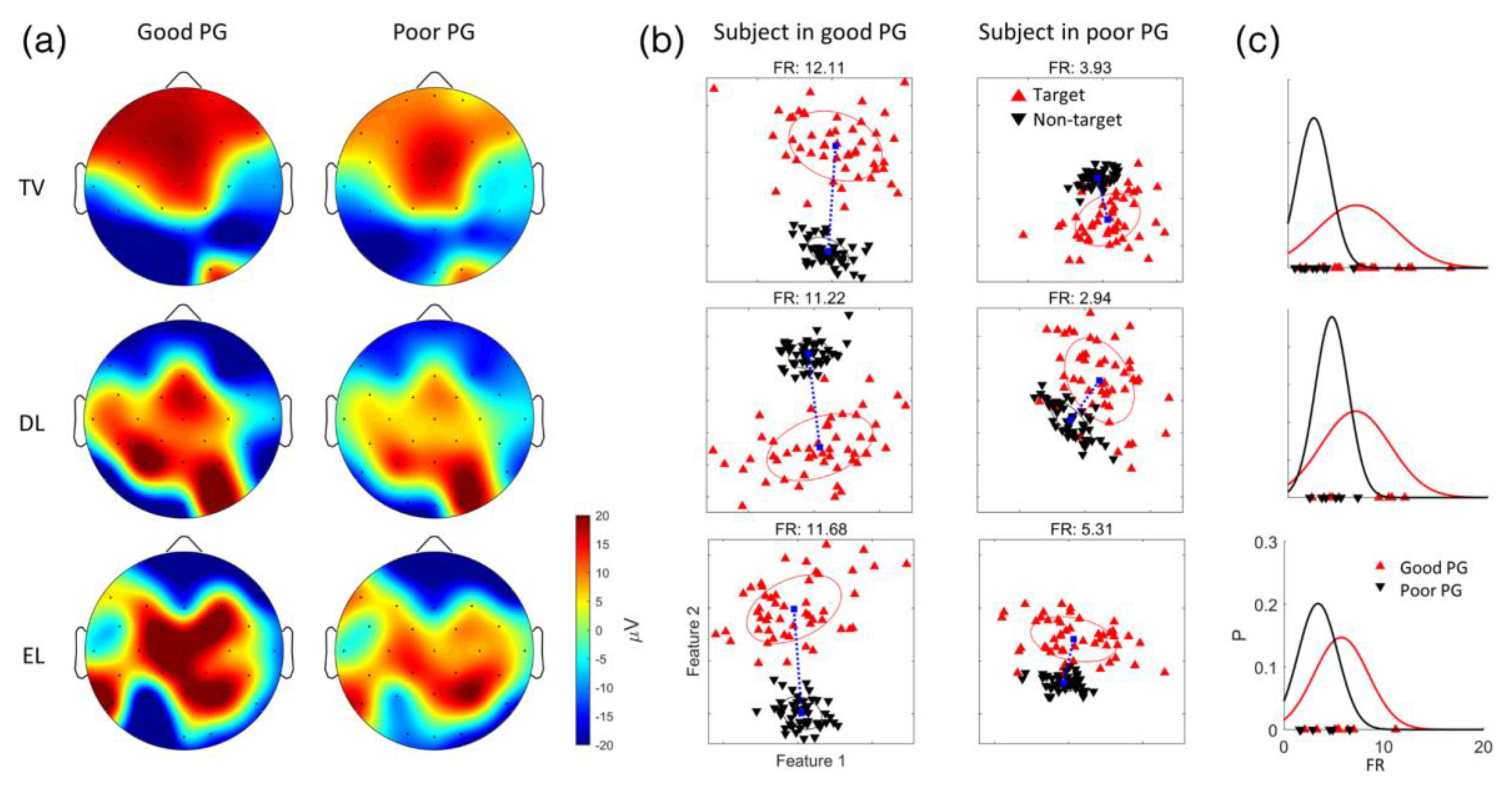

Finally, we investigated differences in neural activity between the Good PG and the Poor PG (see Section 2.5 for the definition of the performance groups (PGs)). Figure 6a depicts topographic maps of the ERP amplitudes at the latencies listed in Table 1, showing that the Good PG elicited more evident ERP components than the Poor PG did. We further analyzed, for each group, the feature space in which the classifiers discriminated the target command. Figure 6b shows examples of the feature space of the Good PG and the Poor PG. Features 1 and 2 denote the first and second principal components of the ERPs, although more features were actually used in classification. The feature vectors of the two classes (i.e., target vs. non-target) were visibly better separated in the Good PG than in the Poor PG. The overall Fisher's ratio (FR) in each group showed that the ERP features were more distinguishable between the classes in the Good PG than in the Poor PG (Figure 6c). The Mann–Whitney U test showed a significant difference in FR between the Good PG and the Poor PG for BCITV (U = 32.0, p < 0.01) and a marginal difference for BCIEL (U = 12.0, p = 0.08), but not for BCIDL (U = 17.0, p = 0.27).

4. Discussion

This study examined the feasibility of using EEG-based BCIs to control home appliances, including a TV, a door lock, and an electric light, in real time. The developed BCIs generated appliance control commands in real time by detecting the EEG features elicited when the user recognized the appearance of a stimulus associated with an intended command. Healthy participants could control the appliances via the BCIs with average accuracies ranging from 78.7% to 83.0%, and no significant difference in online accuracy was found among the appliances. This controllability drew upon differential spatiotemporal ERP patterns between the target and non-target stimuli. Interestingly, the ERP patterns in response to the targets for individual appliances appeared to be distinct from each other, even though the same oddball paradigm was employed. This implies that the ERP components traditionally exploited in oddball-based BCIs, such as P300 and N200 [5,6,11,13], could be further individualized to a particular appliance when an ERP-based BCI is applied in practical home environments.

Distinct ERP waveforms involving various components were observed during the control of each appliance. It is likely that different background distractions influenced the recognition of a visual stimulus in different ways. In BCITV, in addition to the typical frontocentral P300, visual stimulation elicited frontocentral N200 as well as occipitoparietal P200 and N300. The appearance of these ERP components might be associated with various cognitive processes in the presence of visual distraction. For instance, the video clips could make a viewer emotionally responsive to the video while concentrating on the adjacent target stimulus to select a channel. This might be reflected in N200, as a previous study revealed that N200 is induced by affective processing in decision-making [39]. Moreover, the Multiview TV could lead to prospective memory (PM) retrieval as the user anticipated viewing a target channel, which could elicit the occipital N300 associated with PM retrieval [40]. In the design of BCITV, clamp-like stimuli were attached to the corners of each rectangular TV channel window. Accordingly, highlighting a stimulus could generate a mismatch among the four corners of the window, which might lead to an incongruence effect. This incongruence effect could increase the occipitoparietal P200 [41].

In contrast, visual stimulation in both BCIDL and BCIEL elicited frontocentral P200 and N300 as well as occipitoparietal N200 and P300. In particular, the occipitoparietal N200 here can be regarded as N2pc preceding P300. N2pc is known to be associated with the focusing of attention [42]. Since the stimuli in BCIDL and BCIEL involved less visual distraction than those in BCITV and were clearer, the user might focus more readily on a target stimulus, as reflected in N2pc. However, it remains unclear which cognitive processes might be associated with the frontocentral P200 and N300 in BCIDL and BCIEL. Based on our results, we speculate that the different visual distractions associated with each appliance might lead to differences in spatiotemporal ERP patterns, but further investigation is needed.

If BCITV had been designed with a usage context similar to that of BCIDL and BCIEL, in which a user selects an icon representing a specific function, the resulting ERP components would likely have been similar to those in BCIDL and BCIEL. Here, however, we designed BCITV in the form of a Multiview TV to make effective use of an existing display and to investigate whether this type of BCI yields results different from those of BCIs that use a separate screen presenting icons and the controlled appliances. There was no significant difference in accuracy between the types of BCI, but the ERP components differed. This result could be useful for designing BCIs to control home appliances that are mainly used through a screen.

According to our results, BCITV required more stimulus repetitions than the other BCIs. Visual distraction and working memory demands are generally known to increase cognitive load [43]. That is, a TV with complicated visual distractions could lead to longer latencies through more demanding cognitive processes [44]. Therefore, BCITV might require more repetitions to capture pronounced ERP components.

Meanwhile, individual differences among subjects in online BCI control performance could be caused by several factors, such as age, physical and psychological conditions, and information processing capacity [33,45,46]. In particular, the stimulus types designed in this study, which were accompanied by various ambient visual distractions, could lead to individual differences in information processing load. Differences in motivation might also play a role. For example, BCITV users could be more motivated to choose a preferred channel than BCIDL or BCIEL users, whose control objectives were simpler.

The UIs of BCIEL and BCIDL displayed live images of the devices together with the visual stimuli, providing users with real-time feedback on the operation of the devices. We envision that this approach can be further developed into BCIs integrated with AR using a see-through display, which would allow users to see target appliances and visual stimuli at the same time more effectively [47]. In sum, the present study demonstrated that, together with advances in the IoT and AR, a BCI based on spatiotemporal ERP patterns can help users control home appliances; an in-depth understanding of the brain activity patterns involved in controlling different appliances will be essential to this end.