Charuco Board-Based Omnidirectional Camera Calibration Method

Abstract

1. Introduction

2. Related Works

2.1. Single Camera Calibration

2.2. Omnidirectional Camera Calibration

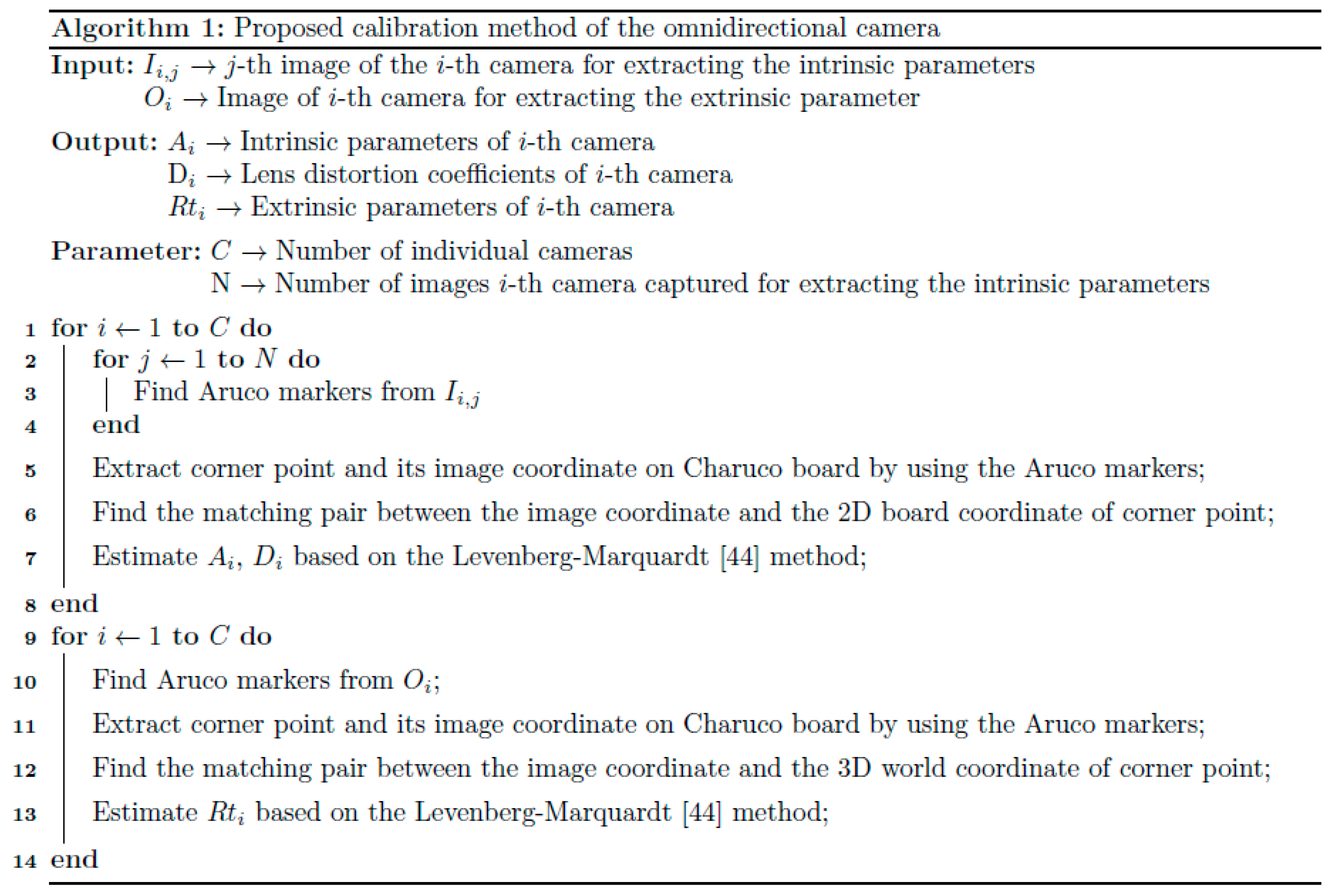

3. Proposed Method

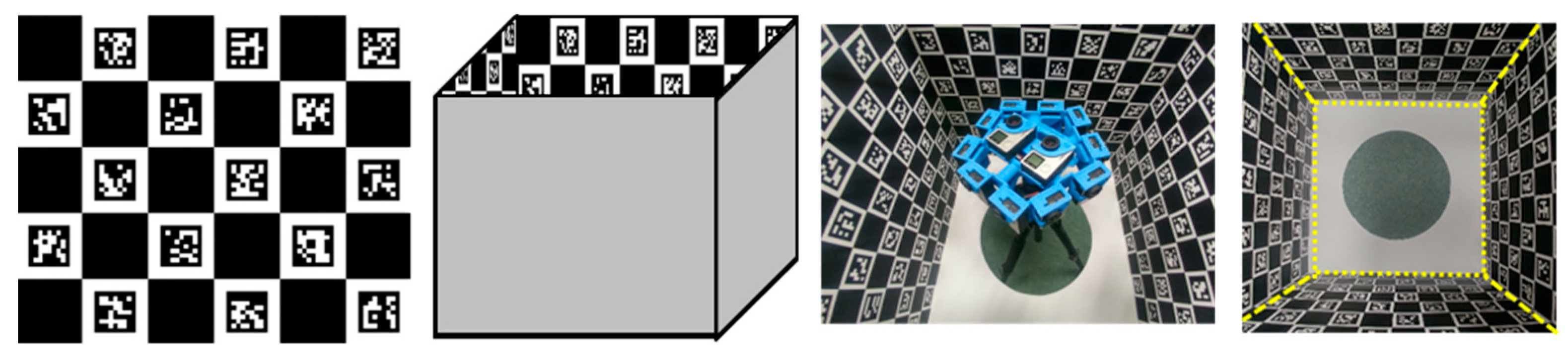

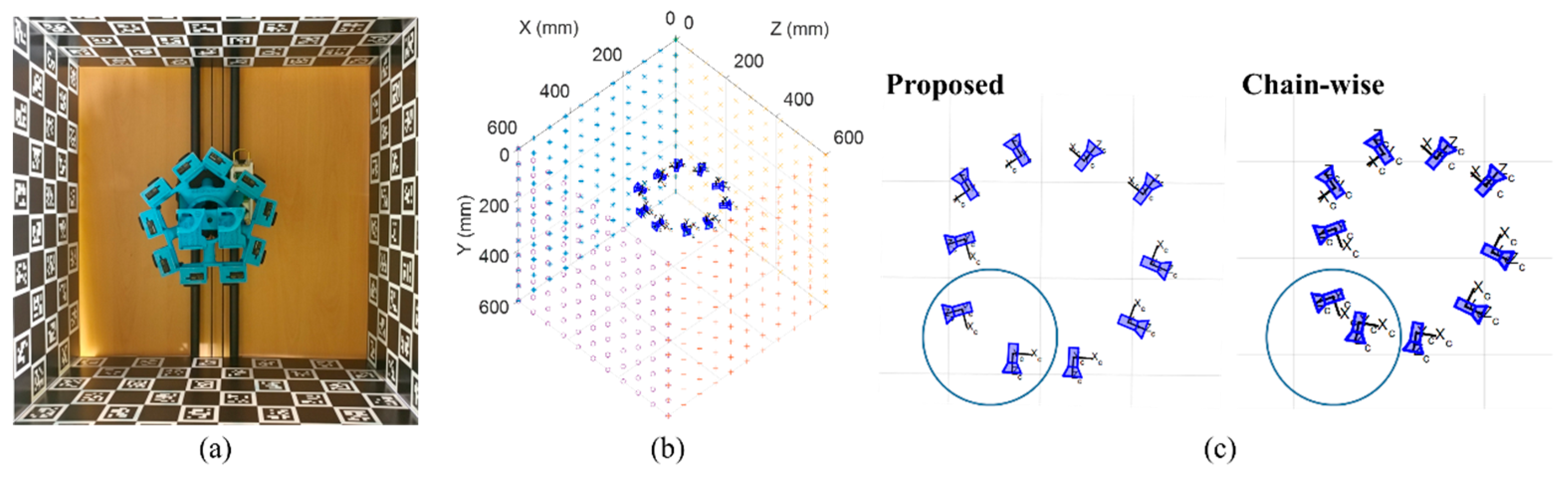

3.1. Proposed Calibration Structure

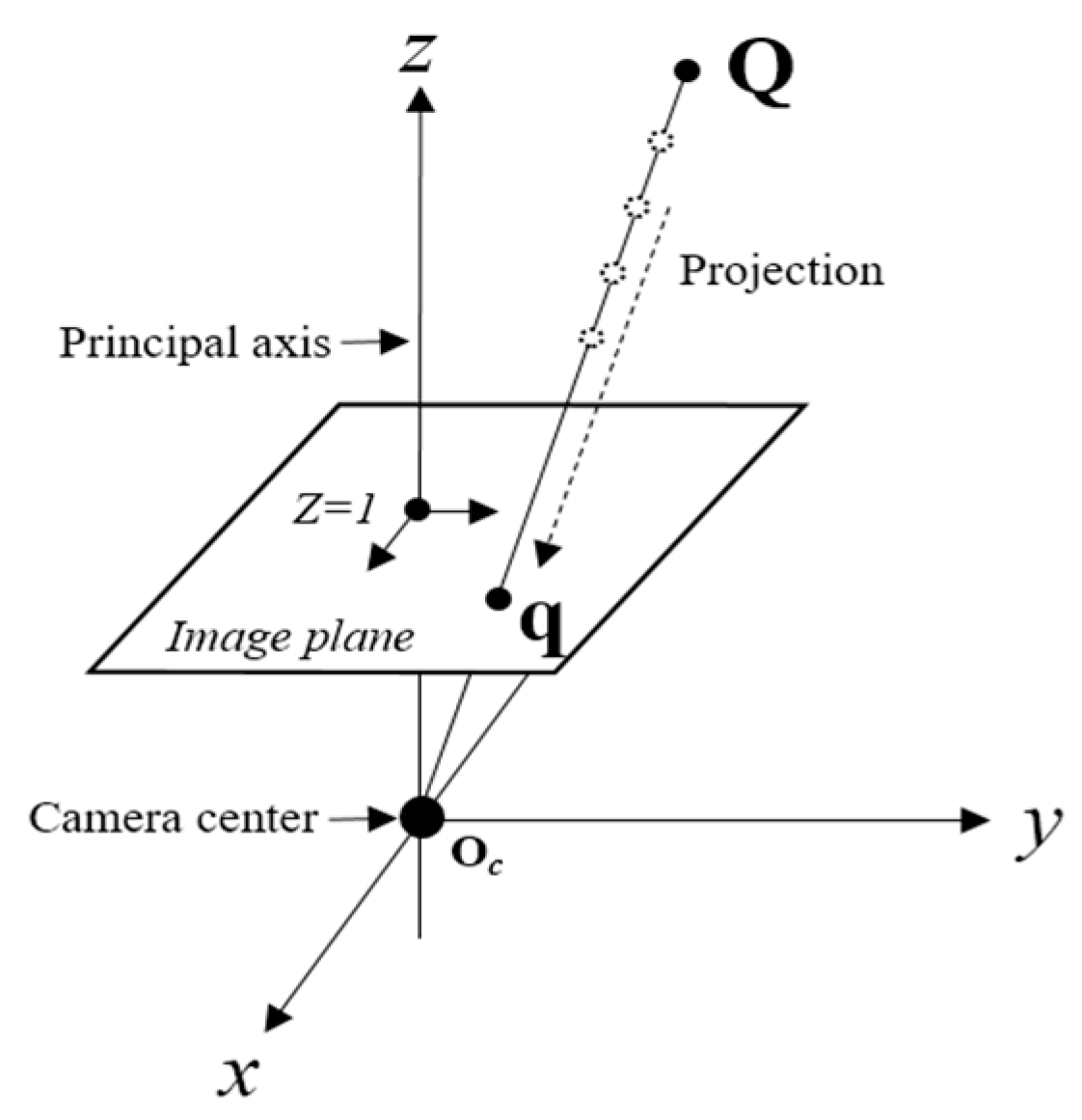

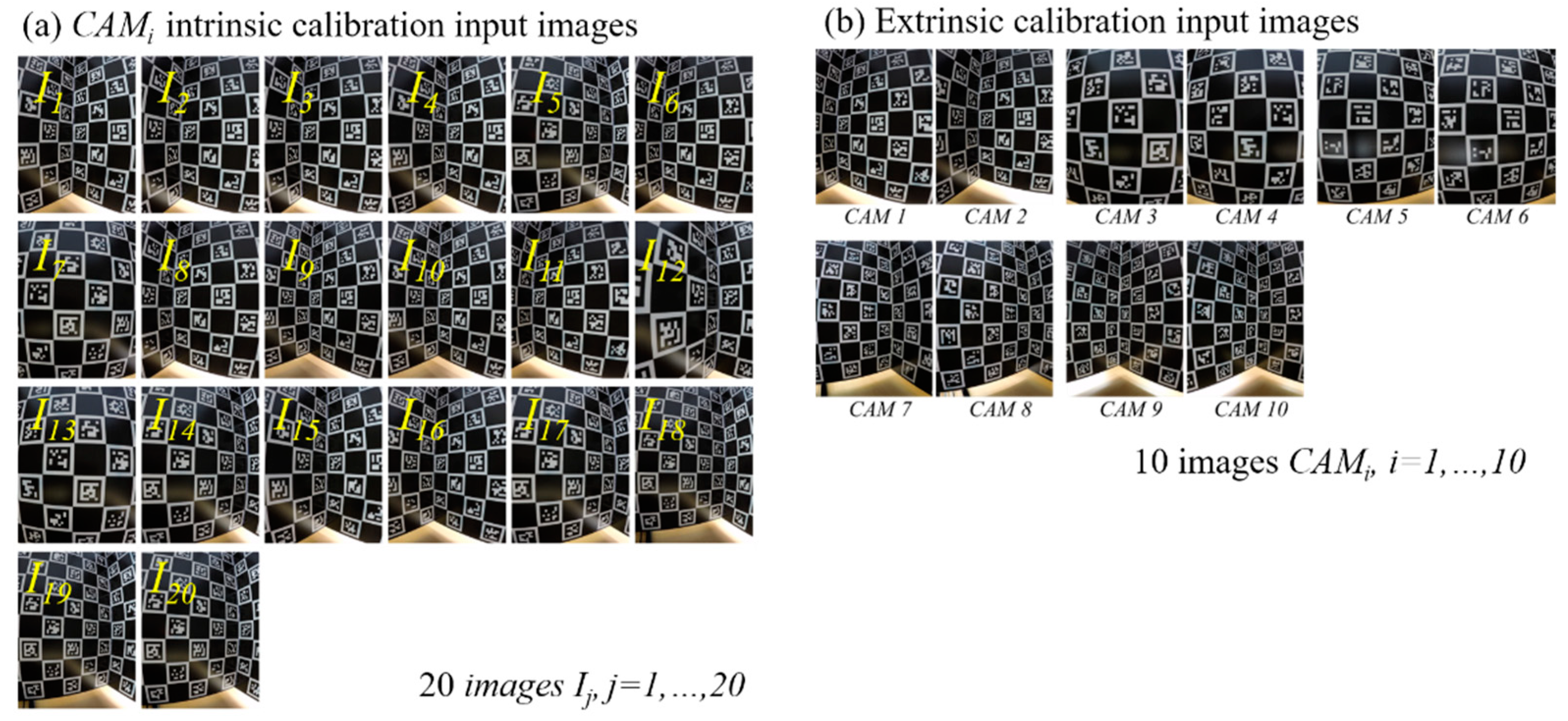

3.2. Intrinsic Parameters Estimation of the Omnidirectional Camera

3.3. Estimation of Extrinsic Parameters Using Estimated Intrinsic Parameters

4. Experimental Setting and Result

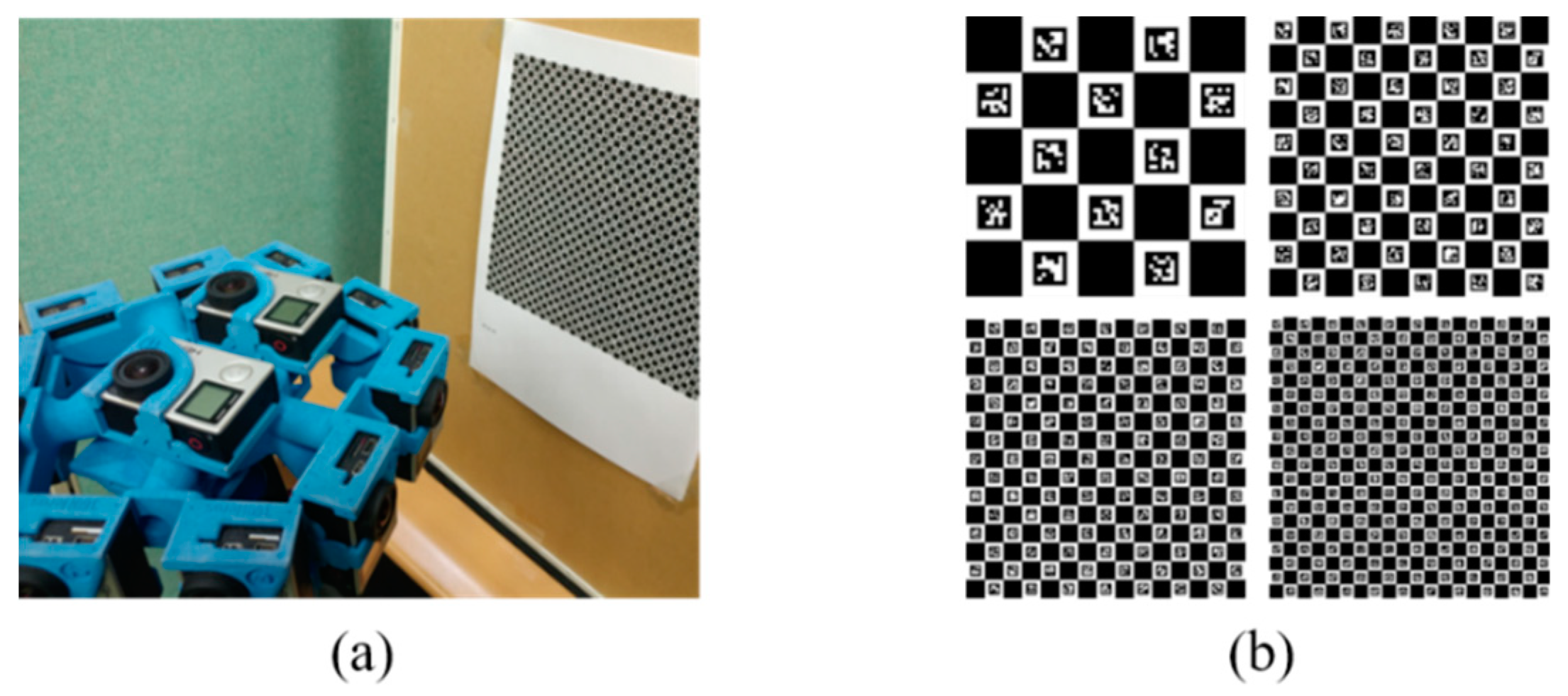

4.1. Experiment on Proper Size of Calibration Pattern Unit

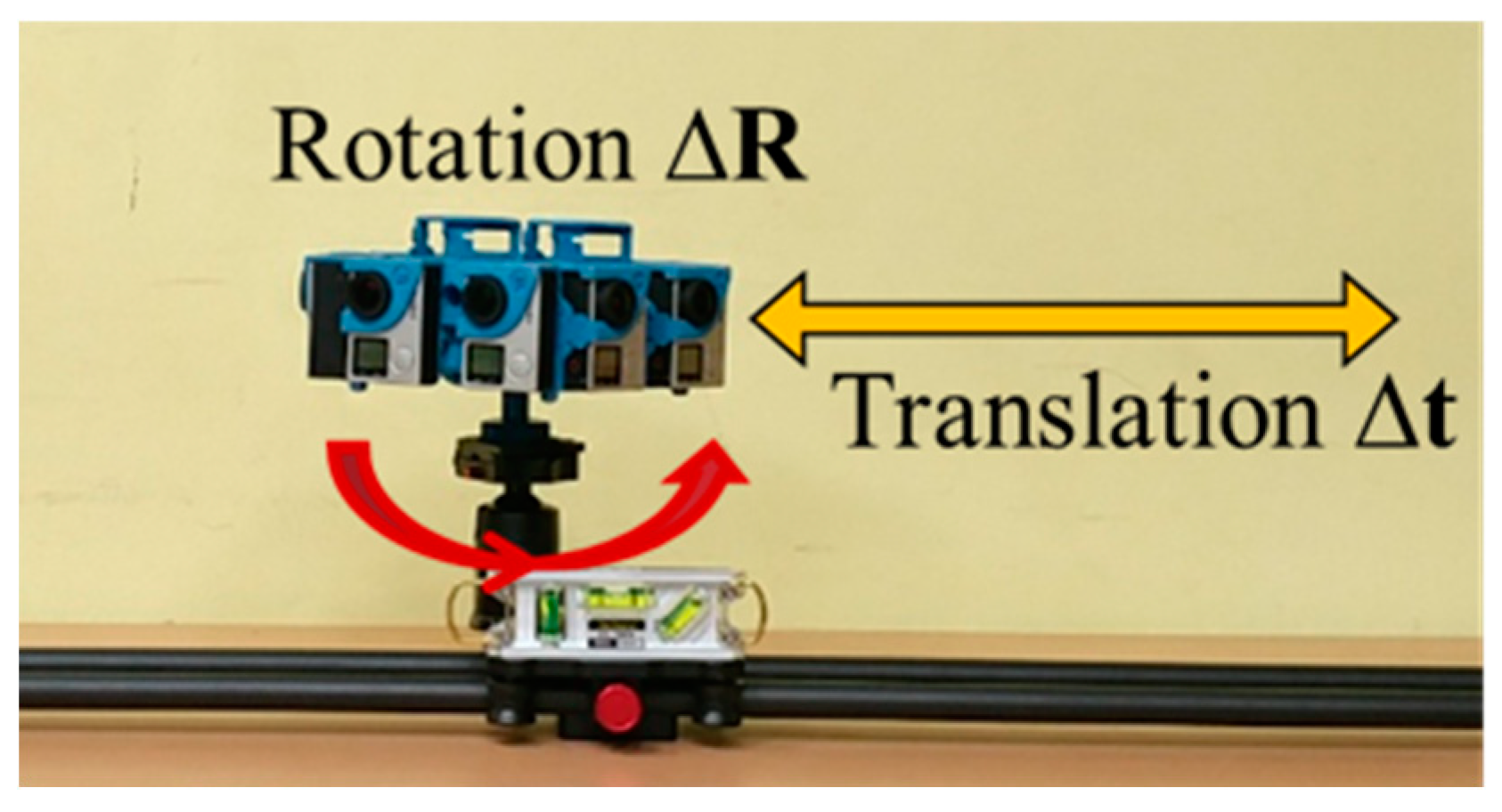

4.2. Experimental Setting and Process

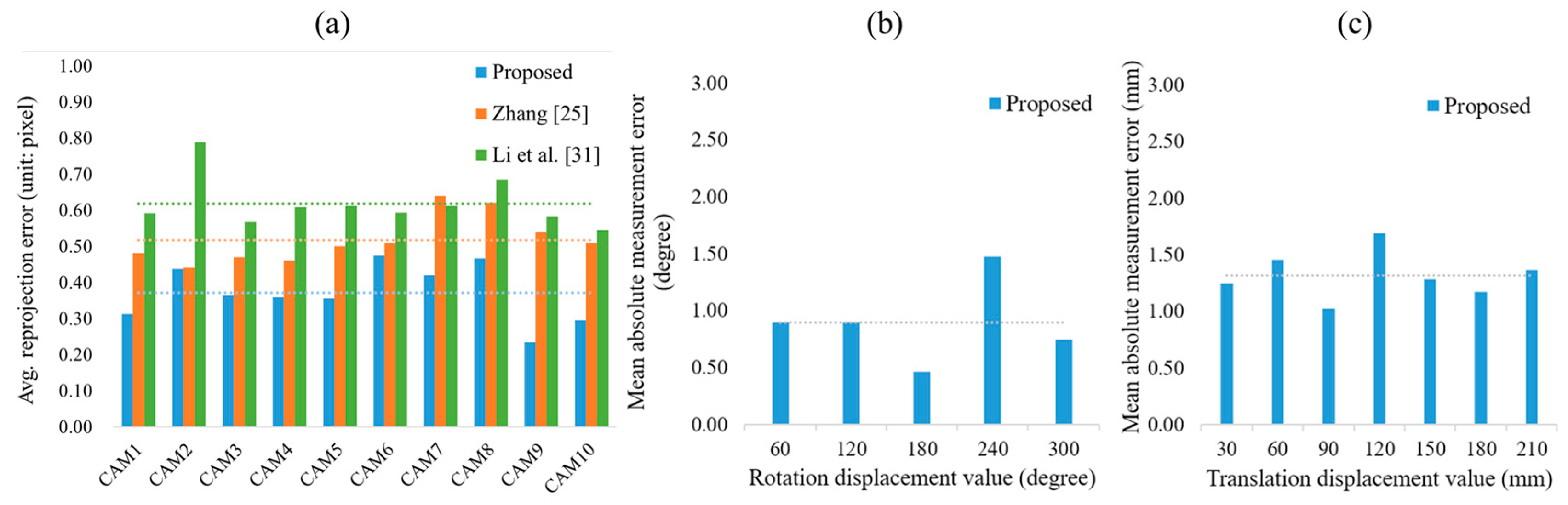

4.3. Experimental Results

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

1. Agrawal, A.; Taguchi, Y.; Ramalingam, S. Analytical forward projection for axial non-central dioptric and catadioptric cameras. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 129–143.

2. Scaramuzza, D. Omnidirectional camera. In Computer Vision; Springer: Berlin, Germany, 2014; pp. 552–560.

3. Xiang, Z.; Sun, B.; Dai, X. The camera itself as a calibration pattern: A novel self-calibration method for non-central catadioptric cameras. Sensors 2012, 12, 7299–7317.

4. Valiente, D.; Payá, L.; Jiménez, L.; Sebastián, J.; Reinoso, Ó. Visual information fusion through bayesian inference for adaptive probability-oriented feature matching. Sensors 2018, 18, 2041.

5. Junior, J.M.; Tommaselli, A.M.G.; Moraes, M.V.A. Calibration of a catadioptric omnidirectional vision system with conic mirror. ISPRS J. Photogramm. Remote Sens. 2016, 113, 97–105.

6. Neumann, J.; Fermuller, C.; Aloimonos, Y. Polydioptric camera design and 3D motion estimation. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; Volume 2.

7. Yin, L.; Wang, X.; Ni, Y.; Zhou, K.; Zhang, J. Extrinsic Parameters Calibration Method of Cameras with Non-Overlapping Fields of View in Airborne Remote Sensing. Remote Sens. 2018, 10, 1298.

8. Nuger, E.; Benhabib, B. A Methodology for Multi-Camera Surface-Shape Estimation of Deformable Unknown Objects. Robotics 2018, 7, 69.

9. Chen, J.; Benzeroual, K.; Allison, R.S. Calibration for high-definition camera rigs with marker chessboard. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Providence, RI, USA, 16–21 June 2012; pp. 29–36.

10. Facebook Surround 360. Available online: https://developers.facebook.com/videos/f8-2017/surround-360-beyond-stereo-360-cameras/ (accessed on 7 November 2018).

11. Jump-Google VR. Available online: https://vr.google.com/jump/ (accessed on 7 November 2018).

12. Ricoh Theta. Available online: https://theta360.com/ (accessed on 7 November 2018).

13. Samsung Gear 360. Available online: http://www.samsung.com/global/galaxy/gear-360/ (accessed on 7 November 2018).

14. Sonka, M.; Hlavac, V.; Boyle, R. Image Processing, Analysis, and Machine Vision; Cengage Learning: Boston, MA, USA, 2014.

15. Duane, C.B. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

16. Kenefick, J.F.; Gyer, M.S.; Harp, B.F. Analytical self-calibration. Photogramm. Eng. 1972, 38, 1117–1126.

17. Clarke, T.A.; Fryer, J.G. The development of camera calibration methods and models. Photogramm. Rec. 1998, 16, 51–66.

18. Habib, A.; Detchev, I.; Kwak, E. Stability analysis for a multi-camera photogrammetric system. Sensors 2014, 14, 15084–15112.

19. Detchev, I.; Habib, A.; Mazaheri, M.; Lichti, D. Practical in situ Implementation of a Multicamera Multisystem Calibration. J. Sens. 2018, 2018.

20. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

21. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marin-Jiménez, M.J. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

22. Muñoz-Salinas, R.; Marin-Jimenez, M.J.; Yeguas-Bolivar, E.; Medina-Carnicer, R. Mapping and localization from planar markers. Pattern Recognit. 2018, 73, 158–171.

23. Germanese, D.; Leone, G.; Moroni, D.; Pascali, M.; Tampucci, M. Long-Term Monitoring of Crack Patterns in Historic Structures Using UAVs and Planar Markers: A Preliminary Study. J. Imaging 2018, 4, 99.

24. Tsai, R.Y. An efficient and accurate camera calibration technique for 3D machine vision. Proc. Comp. Vis. Patt. Recog. 1986, 364–374.

25. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

26. Tang, D.; Hu, T.; Shen, L.; Ma, Z.; Pan, C. AprilTag array-aided extrinsic calibration of camera–laser multi-sensor system. Robot. Biomim. 2016, 3, 13.

27. Dong, S.; Shao, X.; Kang, X.; Yang, F.; He, X. Extrinsic calibration of a non-overlapping camera network based on close-range photogrammetry. Appl. Opt. 2016, 55, 6363–6370.

28. Carrera, G.; Angeli, A.; Davison, A.J. SLAM-based automatic extrinsic calibration of a multi-camera rig. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 2652–2659.

29. Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3400–3407.

30. Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 1052–1067.

31. Li, B.; Heng, L.; Koser, K.; Pollefeys, M. A multiple-camera system calibration toolbox using a feature descriptor-based calibration pattern. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 1301–1307.

32. Strauß, T.; Ziegler, J.; Beck, J. Calibrating multiple cameras with non-overlapping views using coded checkerboard targets. In Proceedings of the 2014 IEEE 17th International Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 2623–2628.

33. Yu, L.; Han, Y.; Nie, H.; Ou, Q.; Xiong, B. A calibration method based on virtual large planar target for cameras with large FOV. Opt. Lasers Eng. 2018, 101, 67–77.

34. Fraser, C.S. Automated processes in digital photogrammetric calibration, orientation, and triangulation. Digit. Signal Process. 1998, 8, 277–283.

35. Fraser, C.S. Automatic camera calibration in close range photogrammetry. Photogramm. Eng. Remote Sens. 2013, 79, 381–388.

36. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

37. Kumar, R.K.; Ilie, A.; Frahm, J.-M.; Pollefeys, M. Simple calibration of non-overlapping cameras with a mirror. In Proceedings of the IEEE Conference on CVPR 2008 Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–7.

38. Miyata, S.; Saito, H.; Takahashi, K.; Mikami, D.; Isogawa, M.; Kojima, A. Extrinsic camera calibration without visible corresponding points using omnidirectional cameras. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 2210–2219.

39. Zhu, C.; Zhou, Z.; Xing, Z.; Dong, Y.; Ma, Y.; Yu, J. Robust plane-based calibration of multiple non-overlapping cameras. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 658–666.

40. Tommaselli, A.M.G.; Galo, M.; De Moraes, M.V.A.; Marcato, J.; Caldeira, C.R.T.; Lopes, R.F. Generating virtual images from oblique frames. Remote Sens. 2013, 5, 1875–1893.

41. Tommaselli, A.M.G.; Marcato, J., Jr.; Moraes, M.V.A.; Silva, S.L.A.; Artero, A.O. Calibration of panoramic cameras with coded targets and a 3D calibration field. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 137–142.

42. Campos, M.B.; Tommaselli, A.M.G.; Marcato Junior, J.; Honkavaara, E. Geometric model and assessment of a dual-fisheye imaging system. Photogramm. Rec. 2018, 33, 243–263.

43. Khoramshahi, E.; Honkavaara, E. Modelling and automated calibration of a general multi-projective camera. Photogramm. Rec. 2018, 33, 86–112.

44. Marquardt, D.W. An algorithm for least-squares estimation of nonlinear parameters. J. Soc. Ind. Appl. Math. 1963, 11, 431–441.

45. GoPro Hero4 Black. Available online: https://gopro.com/ (accessed on 7 November 2018).

46. Camera Calibration with OpenCV. Available online: https://docs.opencv.org/3.4.0/d4/d94/tutorial_camera_calibration.html (accessed on 7 November 2018).

47. Calibration with ArUco and ChArUco. Available online: https://docs.opencv.org/3.4/da/d13/tutorial_aruco_calibration.html (accessed on 7 November 2018).

48. Camera Calibration Toolbox for Matlab. Available online: http://www.vision.caltech.edu/bouguetj/calib_doc/ (accessed on 7 November 2018).

49. Kolor Autopano. Available online: http://www.kolor.com/ (accessed on 29 November 2018).

| The total number of patterns in the given area | 1 | 2 | | | |
| --- | --- | --- | --- | --- | --- |
| Pattern unit length (unit: pixel) | 370 | 190 | 130 | 100 | 80 |
| The number of actual corner points in the captured image | 16 | 81 | 196 | 361 | 576 |
| The number of correctly detected corner points in the captured image | 16 | 78.91 | 194.64 | 353.82 | 524.27 |
| Accuracy (%) | 100.0 | 97.42 | 99.30 | 98.01 | 91.02 |
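The accuracy row in the table above can be checked with a few lines of arithmetic. The sketch below assumes that accuracy is the ratio of correctly detected corner points to actual corner points, expressed as a percentage; that definition is an assumption inferred from the row labels, and the function name `detection_accuracy` is hypothetical. The detected counts are fractional in the table, so they are presumably averages over several captures, which would explain why the recomputed values match the reported ones only to within about 0.01 percentage points.

```python
# Values transcribed from the table above, one entry per column.
actual_corners = [16, 81, 196, 361, 576]
detected_corners = [16, 78.91, 194.64, 353.82, 524.27]
reported_accuracy = [100.0, 97.42, 99.30, 98.01, 91.02]


def detection_accuracy(detected, actual):
    """Percentage of actual corner points that were correctly detected.

    Assumed definition: 100 * detected / actual.
    """
    return 100.0 * detected / actual


recomputed = [
    detection_accuracy(d, a) for d, a in zip(detected_corners, actual_corners)
]

# Print recomputed and reported values side by side for comparison.
for unit_px, got, want in zip([370, 190, 130, 100, 80], recomputed, reported_accuracy):
    print(f"unit {unit_px:3d} px: recomputed {got:6.2f} %, reported {want:6.2f} %")
```

Under this reading, the drop at the smallest pattern unit (80 pixels) is simply the largest gap between detected and actual corner counts.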

| (Unit: pixel) | CAM1 | CAM2 | CAM3 | CAM4 | CAM5 | CAM6 | CAM7 | CAM8 | CAM9 | CAM10 | Mean | Standard deviation |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Proposed | 0.31 | 0.44 | 0.36 | 0.36 | 0.36 | 0.48 | 0.42 | 0.47 | 0.23 | 0.29 | 0.37 | 0.08 |
| Zhang [25] | 0.48 | 0.44 | 0.47 | 0.46 | 0.50 | 0.51 | 0.64 | 0.62 | 0.54 | 0.51 | 0.52 | 0.07 |
| Li et al. [31] | 0.59 | 0.79 | 0.57 | 0.61 | 0.61 | 0.59 | 0.61 | 0.68 | 0.58 | 0.54 | 0.62 | 0.07 |

| | Rotation displacement value (degree) | 60 | 120 | 180 | 240 | 300 |
| --- | --- | --- | --- | --- | --- | --- |
| Measurement results | CAM1 | 59.81 | 119.48 | 180.47 | 240.79 | 299.30 |
| | CAM2 | 61.56 | 119.57 | 181.06 | 242.66 | 299.18 |
| | CAM3 | 60.12 | 121.10 | 180.43 | 241.21 | 300.73 |
| | CAM4 | 60.23 | 121.11 | 180.44 | 241.22 | 300.82 |
| | CAM5 | 59.78 | 120.94 | 180.51 | 240.74 | 300.52 |
| | CAM6 | 62.25 | 120.25 | 180.43 | 243.28 | 299.67 |
| | CAM7 | 59.34 | 120.87 | 180.38 | 240.08 | 300.45 |
| | CAM8 | 59.35 | 120.91 | 180.82 | 240.21 | 300.51 |
| | CAM9 | 61.65 | 121.51 | 180.09 | 242.28 | 301.39 |
| | CAM10 | 61.44 | 121.39 | 180.00 | 242.27 | 301.20 |
| Mean absolute error | | 0.90 | 0.90 | 0.46 | 1.48 | 0.75 |
| Standard deviation | | 0.76 | 0.41 | 0.31 | 1.09 | 0.33 |
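As a worked example of the summary rows in the rotation table above, the sketch below recomputes the 60-degree column. It assumes that the mean absolute error is the average of |measured − target| over the ten cameras and that the standard deviation is the sample standard deviation (n − 1 denominator) of those absolute errors. Both are assumptions, chosen because they reproduce the reported 0.90 and 0.76; the variable names are hypothetical.

```python
from statistics import mean, stdev

# 60-degree column of the rotation table above, CAM1 through CAM10.
target_deg = 60.0
measured_deg = [59.81, 61.56, 60.12, 60.23, 59.78,
                62.25, 59.34, 59.35, 61.65, 61.44]

# Absolute deviation of each camera's measurement from the target rotation.
abs_errors = [abs(m - target_deg) for m in measured_deg]

# Mean absolute error across the ten cameras.
mae = mean(abs_errors)

# Sample standard deviation (n - 1 denominator) of the absolute errors.
# Assumption: this convention is inferred from the reported value.
spread = stdev(abs_errors)

print(f"mean absolute error: {mae:.2f} degrees")
print(f"standard deviation:  {spread:.2f} degrees")
```

The same calculation applies column by column to the other displacement values, with `target_deg` and `measured_deg` replaced accordingly.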

| | Translation displacement value (mm) | 30 | 60 | 90 | 120 | 150 | 180 | 210 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Measurement results | CAM1 | 33.16 | 60.98 | 90.90 | 121.61 | 151.32 | 181.14 | 211.59 |
| | CAM2 | 30.62 | 61.56 | 90.86 | 121.78 | 151.07 | 181.27 | 212.17 |
| | CAM3 | 31.24 | 62.64 | 90.71 | 121.46 | 151.97 | 181.75 | 211.63 |
| | CAM4 | 31.11 | 61.19 | 91.23 | 121.11 | 151.41 | 181.27 | 211.86 |
| | CAM5 | 29.03 | 63.61 | 90.92 | 121.64 | 151.47 | 181.32 | 212.24 |
| | CAM6 | 30.90 | 60.54 | 91.17 | 117.24 | 151.38 | 179.90 | 210.37 |
| | CAM7 | 31.10 | 61.12 | 90.73 | 121.42 | 151.44 | 181.02 | 211.89 |
| | CAM8 | 30.83 | 60.79 | 91.32 | 121.57 | 151.24 | 181.65 | 210.17 |
| | CAM9 | 30.83 | 60.79 | 91.32 | 121.57 | 151.24 | 181.65 | 210.17 |
| | CAM10 | 31.74 | 61.30 | 91.07 | 122.02 | 150.31 | 181.61 | 211.62 |
| Mean absolute error | | 1.25 | 1.45 | 1.02 | 1.69 | 1.28 | 1.18 | 1.37 |
| Standard deviation | | 0.74 | 0.95 | 0.23 | 0.44 | 0.41 | 0.47 | 0.81 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

An, G.H.; Lee, S.; Seo, M.-W.; Yun, K.; Cheong, W.-S.; Kang, S.-J. Charuco Board-Based Omnidirectional Camera Calibration Method. Electronics 2018, 7, 421. https://doi.org/10.3390/electronics7120421