Abstract

Virtual reality (VR) has existed in the realm of education for over half a century; however, it has never achieved widespread adoption. This was traditionally attributed to the costs and usability problems associated with these technologies, but a new generation of consumer VR headsets has helped mitigate these issues to a large degree. Arguably, the greater barrier is now the overhead involved in creating educational VR content, the process of which has remained largely unchanged. In this paper, we investigate the use of 360° video as an alternative way of producing educational VR content with a much lower barrier to entry. We report on the differences in user experience between 360° and standard desktop video. We also compare the short- and long-term learning retention of tertiary students who viewed the same video recordings in either 360° or standard video formats. Our results indicate that students retain an equal amount of information from either video format but perceive 360° video to be more enjoyable and engaging, and would prefer to use it as an additional learning resource in their coursework.

1. Introduction

In the past two decades, numerous studies have demonstrated the benefits of virtual reality (VR) across multiple levels of education [1,2] and in many application domains [3,4]. The term VR encompasses the array of software and hardware that creates a digital representation of a 3D object or environment, with which a user can interact and feel a sense of immersion.

Immersion is one of the primary motivations for educators employing VR, because a sense of immersion in students can foster engagement with the learning content [5,6,7].

The definition of immersion is widely debated. In this paper, we use an integrative definition from Mütterlein, describing immersion as “the subjective experience of feeling totally involved in and absorbed by the activities conducted in a place or environment, even when one is physically situated in another” [8]. This activity-centric definition allows immersion to be differentiated from the related concept of presence, which describes “how realistically participants respond to the environment, as well as their subjective sense of being in the place depicted by the Virtual Environment” [9]. A heightened sense of presence in a virtual environment is associated with increased immersion in the learning activities performed there [4].

VR has encompassed experiences on many different devices with different levels of immersion and interactivity, from low-immersion 2D computer screens to fully immersive VR head-mounted displays (HMDs). However, there has been a recent surge in consumer interest in VR and the concurrent release of a new generation of affordable HMDs and peripherals, greatly reducing the barrier to entry to immersive VR [10,11,12,13]. This has transformed the landscape of VR research and has led the educational literature to focus on immersive VR as a newly accessible option.

Immersive VR provides a complete simulation of a new reality by tracking the user’s position and creating multiple sensory outputs through a VR HMD [14]. It has been successfully applied to education in many fields, including engineering [15], architecture [16], medicine [17], history [18], and music [19]. Educators using immersive VR often rely on constructivist pedagogy, because the medium facilitates the experiential and environment-driven learning at the centre of this methodology [20,21]. Studies have consistently found positive learner attitudes towards immersive VR [22], and it has been shown to improve time on task [23], motivation [6], and knowledge acquisition [24].

Despite these findings, immersive VR has failed to achieve widespread adoption in education. Traditionally, this failure was attributed to the high cost and questionable usability of the technology [2,3,20,25]. With modern VR systems available, this hesitance now arguably comes from educators weighing two other factors: whether there is evidence that VR has enough of an impact on students’ learning to justify costs; and whether they can guarantee the availability of VR content in the long term [22].

Currently, if educators wish to create their own VR content, they either have to learn how to program 3D graphical applications themselves or hire content developers to create it for them [26,27]. Novel technologies such as neural radiance fields [28] and photogrammetry [29] have made it easier to create customised 3D content, but content creation is still a major barrier to the wider use of VR in education.

To address these barriers to VR adoption, we investigated 360° video as an alternative form of educational VR content. When viewed inside an HMD, 360° video has been considered by some authors as a subset of immersive VR [30,31], but it is more commonly defined as distinct from VR [32]. We consider 360° video a simplified form of VR, since it shares some properties of VR but lacks essential functionality. 360° video provides a movable, user-centric viewpoint inside an immersive, digitally projected environment. However, standard 360° video only allows passive viewing: users cannot interact with the 3D environment, the view position is fixed, and movement is restricted to the prerecorded camera motion. This differentiates it from VR based on 3D environments, where users can interact with the environment and actively change it. More complex interactions are possible, e.g., gaze-based point-and-click mechanics or options to change the storyline [33], but these usually require post-processing of the recorded videos and specialised applications.

360° video is much easier to create than interactive VR. Spherical cameras that capture 360° video have followed a similar trajectory to VR HMDs, with a recent generation of consumer-focused models greatly reducing the cost barrier [34,35]. In response, the popular video sharing platforms YouTube and Facebook added support for 360° video in 2015, followed by Vimeo in 2017 [36]. Once captured, 360° video can be edited using traditional video editing software, in the same file formats as standard video [37,38]. This means that 360° video is cheaper to make than immersive VR, more easily shareable, and requires less upskilling of current content creators. Recent research has sought to make 360° video more useful by proposing more powerful editing algorithms [39] and by mixing it with other media [40,41].

360° videos can also be combined with VR environments, e.g., improving collaboration by sharing remote environments via 360° videos [42,43,44], improving the realism of 3D environments by fusing them with (potentially real-time) 360° video data [45], creating immersive virtual field trips [40], or creating gamified learning experiences by combining 360° technologies with VR [46].

Finally, 360° videos can be used to create immersive VR environments by reconstructing 3D scenes using photogrammetry techniques [47,48,49] or by training Neural Radiance Field (NeRF) models [50,51]. The advantages of using 360° video for 3D reconstruction are comprehensive (360°) coverage of the environment, efficiency, smooth data acquisition, and the fact that the video itself can be viewed immersively (e.g., as a fast preview of the reconstructed scene). The disadvantages are the large data size, which requires high processing power, limited resolution, and potential calibration and stitching issues.

Research into educational 360° video is relatively new, but it has already been applied to many fields, including medicine [52,53], language [30], sports [54], business [55], collaborative design [56], education and training [46,57,58,59], sustainability [60], and marine biology [61]. The literature suggests many of the same benefits as immersive VR, such as enjoyment, motivation, and improved learning outcomes [33], though more research is needed in this area [36,62,63].

Many of these studies were applied to domains that the researchers believed would particularly benefit from 360° video [30,54]. The evaluated 360° videos were often hard to compare to standard videos because they were embellished with features that did not translate between the two formats [52,61]. Additionally, the studies directly comparing 360° video to standard video only evaluated learning through immediate knowledge tests [52,54,55].

In this paper, we describe a randomised, crossover study in which we sought to compare the effects of 360° video and standard video. Twelve videos were recorded and then produced in both 360° and standard video formats.

To minimise the effect of domain and prior knowledge, the content presented was believable but fictitious. To ensure a comparable learning experience from the same video presented in either format, we placed certain restrictions on the production of the videos, which incidentally led to parallel production processes for the two video types. Finally, to measure learning outcomes that had not yet been investigated, we included long-term retention and special cases for active and passive visual recollection in our study design.

This paper reports both the results of a user evaluation surveying participants’ experience and the comparative short- and long-term learning outcomes of participants who viewed the same videos in 360° and standard formats. To the best of our knowledge, this is the first time long-term learning retention has been investigated for 360° video.

Research Questions

- RQ1:

- What differences in user experience exist when presenting educational content in 360° video on a virtual reality head-mounted display, compared to standard video on a desktop PC?

- RQ2:

- What differences in short- and long-term learning retention exist when presenting educational content in 360° video on a virtual reality head-mounted display, compared to standard video on a desktop PC?

2. Materials and Methods

This is a randomised, crossover study intended to compare the learning experience of 360° video, viewed on a VR HMD, with standard video, viewed on a desktop PC. We chose an HMD because we wanted to test whether the additional immersion it provides, e.g., no distraction from the real environment and more intuitive navigation (moving the head instead of a mouse), affected learning outcomes. The study was spread over a six-week period and collected data on participants’ user experience and short- and long-term learning retention.

2.1. Participants

In total, 20 tertiary students participated in this study, 16 of whom were undergraduates and 4 postgraduates. Of these students, 18 were male and 2 were female. The mean age of participants was 25; however, their ages varied greatly (standard deviation = 9.02, minimum = 18, maximum = 55). No participants dropped out of this study, and all successfully completed all activities.

2.2. Educational Content

There were two primary considerations when designing the educational content used in this study. Firstly, we wanted to limit the effect that the application domain may have on students’ learning outcomes. Participants may have prior knowledge in different domains, which could affect the study, and we wanted to confirm that the benefits of 360° video were not constrained to especially well-suited subjects. Secondly, we needed to create videos covering exactly the same learning content in 360° and standard formats.

As a result of these considerations, we created a series of 12 short lectures in both video formats, filmed at 12 visually distinctive locations (e.g., a forest, a lecture theatre, a playground, inside a car). In 10 of these locations, a teacher presented a series of facts about that particular location, sometimes referring to visible features around them. The other two locations are discussed in Section 2.3.

To address the effect of domain and prior knowledge, the location names and information presented in the videos were designed to be believable but were actually fictitious. For example, in the “Whiterock Bush” video recording, participants were informed that the area had been the site of “the frequent illegal dumping of rubbish”. “Whiterock Bush” is not a real location, nor is the presented fact true. Participants were not informed that the information was fictitious until after the study was complete, as that knowledge could have an effect on learning outcomes.

Ensuring that the two video formats provided the same information placed two limitations on video development. Firstly, as extra information is visible when viewing a 360° video, we needed to ensure that learning did not rely on content outside the frame captured in the standard video. Secondly, the quality of accessible 360° cameras varies considerably, and while their pixel resolution is high compared to standard video, it is stretched over the full sphere when viewed. This is compounded by re-projection through VR HMDs of varying resolutions, so 360° video experiences are often of low quality, with poor text legibility. For this reason, we chose verbally delivered lectures in visually distinct locations as the educational content.

We also decided to reduce the resolution of the standard videos to match that of the 360° videos. Although current technology results in a difference in perceived resolution between typical 360° and standard desktop videos, we anticipate that this gap will close in the future and that the experience of resolution in each technology will become more similar. Matching the resolutions makes our results more robust to changes in technology and less influenced by current technological limits.

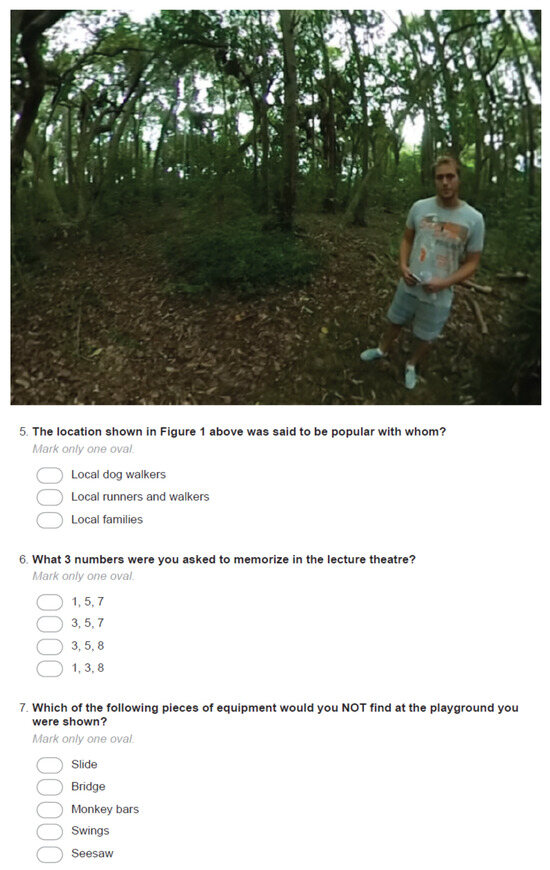

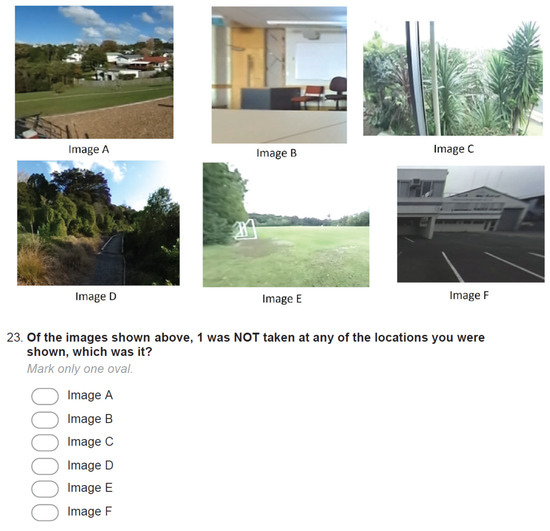

Figure 1 and Figure 2 show screenshots of the videos participants watched and various questions about these videos. The questions covered specific locations (e.g., which location is shown in this image?), objects in these locations (e.g., which painting did you see in the building?), positions of objects (e.g., where was the swing located within the playground?), and information about these locations (e.g., which bus numbers stopped at this bus stop?).

Figure 1.

A screenshot of one of the videos participants watched and three questions from the short-term and long-term questionnaire about these videos.

Figure 2.

A question from the short-term and long-term questionnaire, which contains five screenshots of locations of videos participants watched and one location participants did not see.

2.3. Special Case Videos

There were 3 additional factors of interest we wanted to measure, for which we designed 2 special case videos and modified one of the location-based lectures.

Firstly, as arguably the most common setting for tertiary educational videos, we included a lecture theatre as one of the locations. Rather than presenting facts about the location in this recording, we instead performed a traditional lecture where information was presented both verbally and via an overhead projector. This was performed to evaluate whether increasing the feeling of presence in a lecture theatre would affect learning outcomes.

We were also interested in whether viewing a video in 360° would affect the active and passive recollection of visual information. To investigate active visual recollection, we included a recording located inside a car. Participants were not presented with any facts but were instead asked to memorise as many details as they could about the interior of the vehicle. They were later tested on this information.

To investigate passive visual recollection, a bright red ribbon was tied around a tree in one of the existing locations. The ribbon was clearly visible in the recordings; however, the ribbon was never explicitly mentioned by the speaker at this location. The participants were later asked to recall where they had seen this ribbon among 3 visually similar locations.

2.4. Technologies

The videos were captured using a Ricoh Theta S spherical camera, which has two fish-eye lenses, each covering roughly one hemisphere of the scene. We imported the videos into the Ricoh Theta software, which stitches the two recordings into a single 360° video and exports it as a standard MP4 file, which we then edited in Adobe Premiere Pro.

We displayed the 360° videos on a Samsung Gear VR HMD (used in conjunction with a Samsung Galaxy Note 5). While more modern HMDs, such as the Meta Quest 2, offer greater comfort and display quality, very few students own such HMDs; hence, we decided to use a mobile phone-based HMD, which we considered more accessible for educational applications.

The standard videos were created by using the free and open-source OBS Studio to screen-record the 360° videos as they played in the free Ricoh Theta desktop video player. They were recorded at 60 fps and adjusted to a resolution of 1920 × 1080 to match the 360° video’s resolution. Standard videos were played on a 23″ PC monitor with a resolution of 1920 × 1080.

In most of the standard videos, all necessary information was contained within the initial field of view. In some locations, however, small camera rotations were needed to include other details. These rotations were built into the standard video recordings; they were not controlled by or performed in front of the participants.

In total, 24 video clips were created, including both the 360° and standard versions of each location and lecture. For a detailed description of the content creation process, see [64].

2.5. Challenges in Creating 360° Videos

We encountered several challenges when creating 360° videos for practical applications. Finding a suitable position for the camera was non-trivial. Placing the camera on a table using a normal tripod resulted in an unnatural experience when viewing the video with an HMD. Ideally, the camera should be located at a participant’s eye height. Holding the camera by hand or using a large tripod resulted in the hand or tripod being visible when looking down. In the end, we used a large monopod (a one-legged camera stand). This stand was almost invisible, hidden under the camera, and provided the most natural viewing experience.

Another problem we encountered was the low video quality caused by the use of a cheaper, older camera model. While the device can record at 1920 × 1080 pixels, this resolution is spread across the entire 360° sphere, resulting in an effective display resolution closer to a 240p video than a 1080p video when viewed on a desktop screen. This limited the educational content we could display; e.g., we avoided situations that required recording text.
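A back-of-the-envelope calculation illustrates the 240p comparison. It rests on two illustrative assumptions, not measured values from our setup: that the 1920-pixel width of the stitched frame spans the full 360° horizontally, and that the desktop viewer shows a horizontal field of view of about 80° in a 16:9 window.

$$
\frac{1920\ \text{px}}{360^\circ} \approx 5.33\ \text{px}/^\circ,
\qquad
5.33\ \text{px}/^\circ \times 80^\circ \approx 427\ \text{px},
\qquad
427\ \text{px} \times \tfrac{9}{16} \approx 240\ \text{px}.
$$

Under these assumptions, the visible region contains roughly as many source pixels as a 427 × 240 frame, which is then upscaled to fill the 1920 × 1080 monitor; a narrower field of view would show even fewer source pixels.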

2.6. Study Design

Participants were randomly divided into Groups 1 and 2. Both groups were shown all 12 recordings; however, Group 1 was shown locations 1–6 in 360° video on a VR HMD and locations 7–12 in standard video on a desktop PC, while Group 2 was shown the same locations in the opposite formats (see Table 1). This randomised crossover design allowed user experience to be compared across video formats, since both groups experienced both treatments and then completed the user evaluation.

Table 1.

Distribution of the 24 video recordings among the 2 groups.

This study is additionally a randomised design for evaluating learning outcomes between the video formats, achieved by comparing the test scores of participants who experienced the same recordings in different formats. These scores were obtained from a Short-Term Retention Test and a Long-Term Retention Test.

The groups compared in this second design are not Groups 1 and 2 but the pseudo-groups HMD and PC. This is because the scores associated with 360° video include question scores from both Groups 1 and 2, depending on which recording each individual question assesses. In fact, every participant contributes to both pseudo-groups, depending on the question, since all participants experienced both 360° and standard videos.
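The mapping from groups to pseudo-groups can be made concrete with a short sketch. It assumes the Table 1 allocation described above (Group 1: locations 1–6 on the HMD, 7–12 on the PC; Group 2: the reverse); the record layout `(participant_id, group, location, score)` and the helper names `viewing_format` and `pseudo_groups` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def viewing_format(group: int, location: int) -> str:
    """Return the format in which a group saw a location (Table 1 allocation)."""
    if not 1 <= location <= 12:
        raise ValueError("location must be between 1 and 12")
    first_half = location <= 6
    if group == 1:
        return "HMD" if first_half else "PC"  # Group 1: 1-6 on HMD, 7-12 on PC
    if group == 2:
        return "PC" if first_half else "HMD"  # Group 2: the reverse
    raise ValueError("group must be 1 or 2")


def pseudo_groups(responses):
    """Pool per-question scores into the HMD and PC pseudo-groups.

    `responses` holds hypothetical (participant_id, group, location, score)
    records, one per question; the pseudo-group depends on the recording the
    question assesses, not only on the participant's group.
    """
    pools = defaultdict(list)
    for participant_id, group, location, score in responses:
        pools[viewing_format(group, location)].append((participant_id, score))
    return dict(pools)


if __name__ == "__main__":
    demo = [
        ("p01", 1, 3, 1), ("p01", 1, 9, 0),
        ("p02", 2, 3, 1), ("p02", 2, 9, 1),
    ]
    print(pseudo_groups(demo))
```

For the demo records, both participants land in both pools: the script prints `{'HMD': [('p01', 1), ('p02', 1)], 'PC': [('p01', 0), ('p02', 1)]}`.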

2.7. Assessment Instruments

Three assessment instruments were used in this study: a user evaluation, a Short-Term Retention Test, and a Long-Term Retention Test.

The user evaluation was 12 questions long, comprising 4 Likert-scale questions, 1 short-answer question, and 7 open-ended questions. In all questions, participants were asked to compare the 360° and standard video experiences, except for the final question, which directly asked participants for their opinion on 360° video as an educational tool.

The user evaluation included questions about participants’ sense of enjoyment, immersion, and engagement, and their level of distraction while watching the different video formats. We also asked about their preferred format for lecture recordings and any issues caused by video and screen quality, motion sickness, or other factors. These are some of the demonstrated benefits of immersive VR, and we wanted to validate whether they were evident in 360° video as well.

The Short-Term Retention Test was 20 questions long, comprising 14 multiple-choice questions and 6 short-answer questions about the content of the video recordings. This test also included questions about the active and passive visual recollection from the special case videos.

The Long-Term Retention Test was 21 questions long, comprising 15 multiple-choice questions and 6 short-answer questions. It is identical to the Short-Term Retention Test except that 3 of the original questions were replaced by 4 slightly simplified ones. Two of these asked the participants to recall information from an image instead of a location name, and one short-answer question asking for two pieces of information was split into a short-answer question and a multiple-choice question.

The Short- and Long-Term Retention Tests were divided into 2 sections, “Content Retention” and “Location Recognition”. Questions about content retention focused on the recall of the information participants were taught during the lectures, while the location recognition questions targeted visual information about the recording locations. The questions were designed to be unambiguous and binary in nature, allowing for a rigid marking rubric.

2.8. Study Procedure

Participants were first asked to fill out a Demographic Questionnaire. This questionnaire collected basic information, including the participants’ age, area of study, experience with VR HMDs, and whether they had any issues affecting their vision (so the HMD could be adjusted accordingly).

Next, participants were asked to put on the HMD and complete a pre-installed Oculus tutorial, and then to watch a 2-minute-long introductory 360° lecture on the HMD. This was to familiarise the participants with 360° video.

After the familiarisation protocols, participants were asked to watch the first set of videos on either the HMD or the PC, depending on the group they had been assigned to (see Table 1). These video sets each comprised 6 location recordings stitched together, forming one continuous recording approximately 5 min long.

Participants were then asked to perform an unrelated reading task for 3 min, designed to act as a distractor between the 2 video sessions. After the distractor task, they were shown the second set of videos in the other video format.

When they had finished viewing the video content, participants were asked to complete both the user evaluation and Short-Term Retention Test. Six weeks later, participants were asked to complete the online Long-Term Retention Test.

3. Results

This section is split into three primary subsections. Section 3.1 discusses the results of the score-based questions in the user evaluation, before Section 3.2 reports on our thematic analysis of the open-ended responses. Section 3.3 then outlines our statistical analysis of the results of the Short- and Long-Term Retention Tests.

3.1. Quantitative User Evaluation

The user evaluation was a combination of Likert-scale, short-answer, and open-ended questions. It was completed by all participants after they had finished viewing both video formats.

This section reports the quantitative results from the four Likert-scale and one short-answer question, discussed in two groups: Subjective Experiences and Study Habits.

3.1.1. Subjective Experiences

In the first three Likert-scale questions, participants were asked to rate the degree to which they felt enjoyment, immersion, and engagement while watching the two different video formats. These questions used a five-point Likert scale, from −2 (“Strongly Disagree”) to 2 (“Strongly Agree”), with 0 corresponding to “Neutral”.

Immersion was described to participants as the degree to which they forgot about their surroundings and “lost themselves” in the experience. Engagement was described as the degree to which a learning experience holds their attention and makes them actively focus on their learning.

Table 2 presents the means and medians of the responses to these three questions, which range from −2 to 2. It also presents the results of Wilcoxon signed rank tests comparing the scores for 360° video and standard video, along with Cohen’s d effect sizes where the tests were statistically significant. Cohen’s d values of 0.20, 0.50, and 0.80 correspond to small, medium, and large effect sizes, respectively. Wilcoxon tests were used because Likert responses are ordinal, the sample size is small (n = 20), and each set of scores clearly failed a Shapiro–Wilk test for normality.

Table 2.

Comparative enjoyment, immersion, and engagement between 360° and standard video formats. The mean and median are in the range of −2 to 2, and the mean is given with a 95% confidence interval.

Based on these analyses, we found statistically significant differences for all three questions, with participants expressing a strong preference for 360° video over desktop video on every factor.
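The analysis described above can be sketched with the Python standard library alone. This is an illustration, not the script used in the study. It assumes the normal approximation to the Wilcoxon signed rank statistic (with a tie correction and no continuity correction), so its p-values may differ slightly from exact or continuity-corrected results in statistical packages. The variant of Cohen’s d for paired scores is not specified here; as an assumption, the sketch uses d_z, the mean paired difference divided by the standard deviation of the differences. The function names are hypothetical.

```python
import math
from statistics import mean, stdev


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed rank test for paired samples.

    Zero differences are dropped and tied |differences| receive average
    ranks. Returns (W, z, p), where W = min(W+, W-) and p comes from the
    tie-corrected normal approximation without continuity correction.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank the absolute differences, averaging ranks within tied runs.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48)
    z = (w_plus - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return min(w_plus, w_minus), z, p


def cohens_d_paired(x, y):
    """Cohen's d_z: mean paired difference over its standard deviation."""
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / stdev(diffs)
```

Here `x` and `y` would hold each participant’s 360° and standard ratings on the −2 to 2 scale, in the same participant order. With n = 20 and many tied Likert values, an exact or permutation-based test is a reasonable alternative to the normal approximation.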

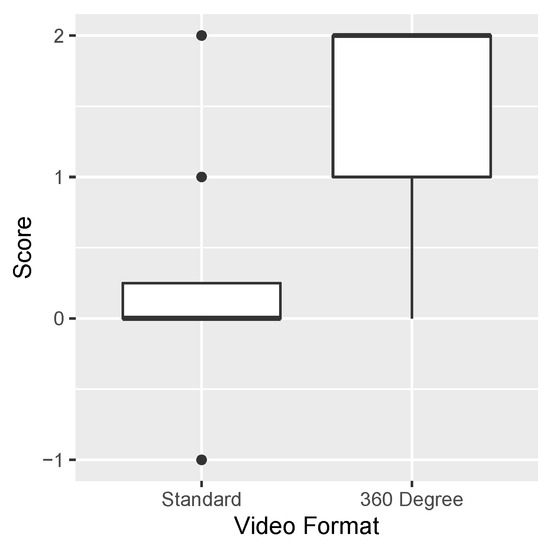

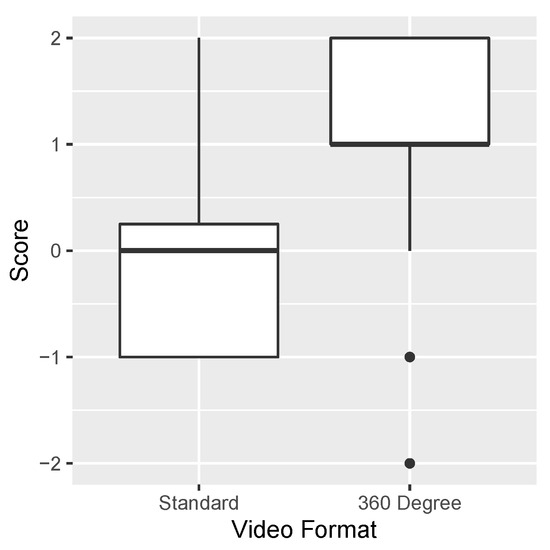

As Figure 3 shows, participants rated their sense of enjoyment much higher for 360° video than for standard video. The majority of respondents, 12 of 20, responded neutrally to standard video, with only 5 responding positively. Conversely, 18 participants responded positively to 360° video, with 11 of them saying they “Strongly Agree” that they enjoyed the experience. This is strong evidence that the students enjoyed the 360° versions of the videos more than the standard versions, with a large effect size in our sample.

Figure 3.

Participants’ rating of enjoyment of standard and 360° video formats. These box-plots show the median and inter-quartile ranges of the scores, which range from −2 to 2.

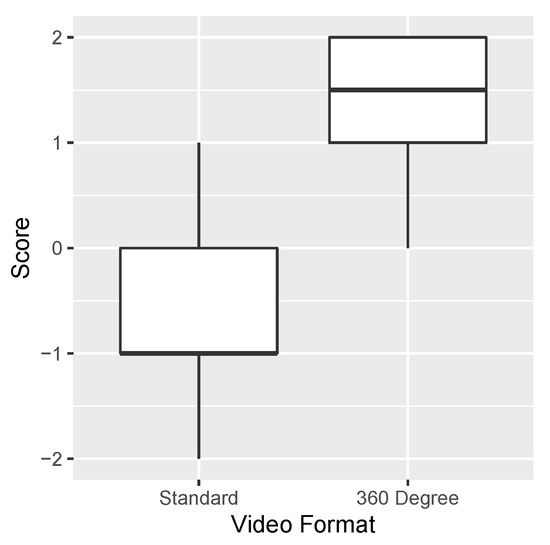

Figure 4 shows the participants’ ratings of their sense of immersion during the 360° and standard videos.

Figure 4.

Participants’ rating of immersion in standard and 360° video formats. These box-plots show the median and inter-quartile ranges of the scores, which range from −2 to 2.

Of the three subjective Likert questions, this one had the smallest p-value and the largest effect size, suggesting that the participants felt much more strongly immersed in the 360° video than in the standard one. A total of 19 of 20 participants responded positively to this question regarding 360° video, while 12 responded negatively regarding desktop video.

Regarding engagement, shown in Figure 5, the responses were again much more in favour of the 360° video approach, with 16 of 20 participants rating their engagement level positively, compared to just 5 participants for the standard video.

Figure 5.

Participants’ rating of engagement with standard and 360° video formats. These box-plots show the median and inter-quartile ranges of the scores, which range from −2 to 2.

Unlike the previous two questions, however, two participants responded negatively regarding their feeling of engagement with the content. While the p-value was significant and the effect size large, this question showed the smallest difference between formats of the three subjective Likert questions. Potential reasons for this are expanded on in the discussion, after evidence about attention and distraction is presented in Section 3.2.1 of the thematic analysis.

3.1.2. Study Habits

The next two questions concerned the potential study habits of participants using the two video formats. Participants were asked how long they believed they could comfortably study by watching 360° video on a VR HMD or by watching standard video on a desktop PC. They were then asked how likely they would be to use educational materials similar to those they had been shown as additional (but optional) material for their own courses. These were a short-answer and a five-point Likert-scale question, respectively.

The differences between the responses to the two video formats were analysed using Wilcoxon signed rank tests, and the effect sizes were estimated by Cohen’s d when the result was significant. The results are given in Table 3 and are illustrated in Figure 6.

Table 3.

Study habits of participants using standard and 360° video, comparing the length of time they believed they could study and their likelihood of using either video type as additional learning material. The mean and median of supplementary use are in the range of −2 to 2, and the mean is given with a 95% confidence interval.

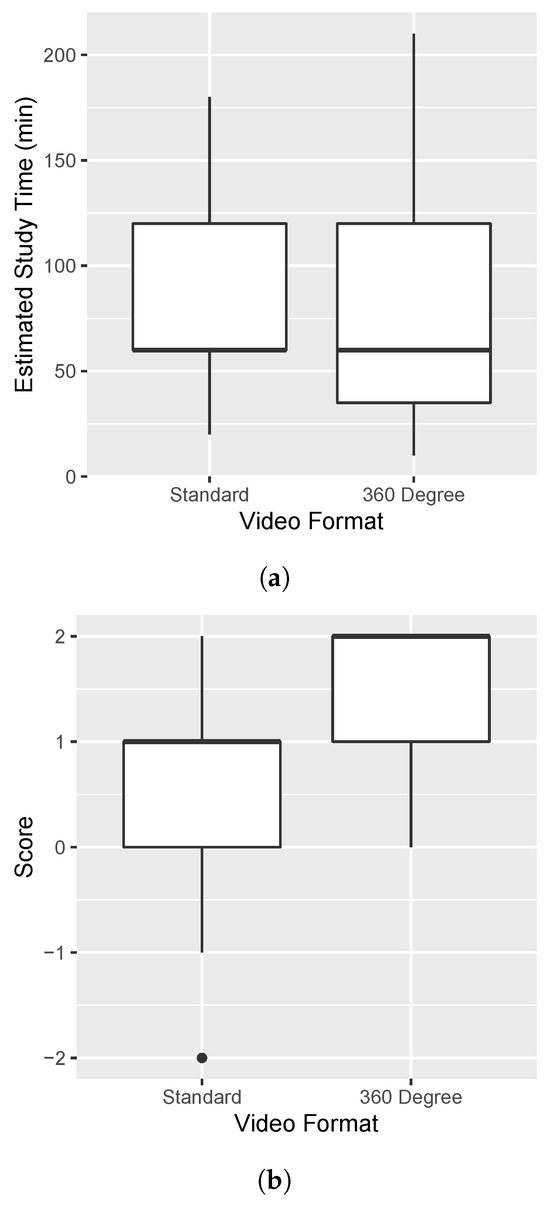

Figure 6.

Potential study habits of students using standard and 360° video. (a) Maximum reported potential study times using standard and 360° video. (b) Likelihood of using additional learning materials in standard and 360° video formats.

These responses indicate that participants believed they could study with either video format for very similar amounts of time: the mean reported time was 87.1 min for standard video and 79.4 min for 360° video. A Wilcoxon signed rank test on the reported times showed no significant difference between them.

Assuming that they had equal and easy access to a desktop PC and a VR HMD, participants showed a statistically significant preference for using 360° video as supplementary content in their own courses. Most participants answered that they were “Likely” or “Very Likely” to use both the 360° and standard formats, but this number was larger for 360°, with responses more heavily weighted towards “Very Likely”.

3.2. Thematic Analysis

This section outlines the results of our thematic analysis of the open-ended responses to the user evaluation. The evaluation included six questions in which participants were asked to comment on both the standard and 360° video experiences, followed by a final question in which they were asked their opinion on 360° video as an overall tool for education.

As explained in Section 2.7, participants were asked about their senses of immersion and engagement, their level of distraction, their preference of format for lecture recordings, and any issues that were caused by video and screen quality, motion sickness, or other factors.

Qualitative responses were examined for key themes, patterns, and outliers based on the “Coding in Detail” approach outlined by Bazeley and Jackson [65]. NVivo was used for the coding process, through which we created 29 codes organised into four primary themes: “engagement”, “immersion”, “learning”, and “technology limitations”. These codes were applied a total of 268 times to the data.

To test the validity of this coding scheme, two reviewers independently coded a subset of the data. The resulting Cohen’s Kappa coefficient indicated a high degree of inter-rater agreement.
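For readers unfamiliar with the statistic, Cohen’s Kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder’s label frequencies, p_e, as κ = (p_o − p_e) / (1 − p_e). The sketch below is a simplified illustration that assumes each coded segment receives exactly one code from each reviewer. NVivo’s own coding comparison works on overlapping text selections per code, so its figures would not necessarily match this calculation. The function name `cohens_kappa` is hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning one nominal code per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(coder_a)
    # Observed agreement: share of segments given the same code by both coders.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal code frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)
```

For example, if two reviewers label four segments as (engagement, engagement, immersion, learning) and (engagement, immersion, immersion, learning), then p_o = 0.75 and p_e = 5/16, giving κ = 7/11 ≈ 0.64.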

The four primary themes relate to the questions asked, but the codes for each theme were spread among responses for every question. The following sections will discuss the findings in each theme, with notable sub-themes presented in subsections where appropriate.

3.2.1. Engagement

Of the four primary themes we identified during our analysis, “engagement” was the most prevalent. Participant responses were coded 117 times as relating to engagement, accounting for 43% of the total coded data.

Responses in this category can be grouped into two major sub-themes: “enthusiasm and enjoyment”, which align with the expected trends observed in the quantitative results above; and “attention”, which revealed substantially more mixed opinions and interesting insights about the video formats. These sub-themes are discussed in further detail in the following two subsections.

Enthusiasm and Enjoyment

Mirroring the quantitative results from Section 3.1.1, participants expressed much more enthusiasm and enjoyment regarding learning in 360° video. A total of 12 of 20 participants described it as “enjoyable” or “fun”, while 4 participants explicitly described the standard video as “boring”.

The major factor to which participants attributed their enjoyment of 360° video was the interactive, immersive environment. One participant said that “the experience of being able to look around and follow visual cues was engaging”, while another described 360° video as “dynamic” compared to the “stagnant” standard video. Another participant also raised the passivity of the standard video, stating that “it was quite boring to just stare dully at the screen, and try to just take in all the information”.

Another factor, mentioned by three participants, was the novelty of experiencing 360° video. Only 7 of the 20 participants reported in the Demographic Questionnaire that they had used a VR HMD for more than an hour, and many others reported an increased sense of curiosity and interest in the immersive environment. Novelty is discussed in Section 5, as it is likely to have influenced enjoyment and also contributed to the leniency reported in the “Leniency” section below.

Overall, these responses clearly show that participants enjoyed the 360° video format more; whether this translated into engagement with the learning content itself is discussed once further evidence has been presented.

Attention

While participants rated the overall engagement of 360° video higher than that of standard video, asking specifically about distractions allowed us to draw greater nuance from their responses.

Surprisingly, 11 of the 20 participants claimed to be more distracted in 360° video. Only five felt more distracted watching standard video, while four reported no difference or did not compare the formats directly. More interesting, however, are the very different reasons students gave for feeling distracted.

Participants who felt more distracted in standard video often cited boredom, repetitive content, and a lack of focus or attention. They also mentioned distractions from the real world, noting that 360° video has an advantage because “it hides distractions in the surroundings”.

For the larger group who felt more distracted in 360° video, the major reason was the extra information available in the surrounding environment. One participant reported they “can’t focus on the speaker because [they’re] curious with the surrounding things”, while another said that 360° video “was very immersive...but I don’t know how much the distraction of looking around was combating the usefulness of it”. Another participant claimed they “enjoyed being able to look at random things while listening”, but conceded that they “got distracted more with this”.

Five participants also reported accidental contact with the real environment as a distraction in 360° video. For example, two of these participants specifically mentioned bumping their feet against the wall while wearing the HMD.

Taken together, the responses regarding distraction highlight the distinctions between the two video formats. Watching a standard video is a less engaging activity, but the limited information can help students identify what to focus on for their learning. 360° video is much more fun and engaging, but the immersive environment can pull attention away from the learning content if it is not directly relevant. This key finding is expanded on in the part “Content” of Section 3.2.3 and in Section 4.

3.2.2. Immersion

A sense of immersion was commonly mentioned by the participants, with responses coded to this theme 57 times (21% of the coded data). A total of 16 of the 20 participants described experiencing immersion in the 360° video, whereas only 1 participant described finding the desktop video “somewhat immersive”.

The responses regarding immersion were frequently strongly emotive, with participants using words such as “surreal”, “incredible”, and “powerful” to describe their experiences. As noted in the part “Enthusiasm and Enjoyment” of Section 3.2.1, immersion in the environment was often given as the reason a participant enjoyed 360° video more than standard video.

Immersion is a primary motivation for using 360° video, so these results are encouraging but not surprising. However, it is useful to understand the sources of this immersion so that they can be taken advantage of.

Two distinct groupings emerged from this theme: immersion through a heightened sense of presence and realism in the environment; and immersion from a sense of intimacy and closeness to the presenter.

Presence

A feeling of presence in the 360° video was explicitly mentioned by seven participants, whereas none reported feeling present in the standard video.

One participant wrote “I can feel the surround[ings] with me, just like I am there”, while another said they “Forgot [they were] actually still in a room for a moment...”. Some participants noted very powerful feelings of presence, with one stating “I felt as if I was a new person and I’m in a different world”.

Two participants described the lack of presence in standard video, with one saying it “felt more like looking through someone’s perspective”. Another participant, when comparing the video formats, said of standard video “I imagine I was there, imagining what was there”, in contrast to feeling “like I am there” in 360° video.

A sense of presence can be reinforced by a sense of realism in a virtual environment, and the realism of the 360° video was mentioned by three participants. One said “it allows me to step into the content and explore...so it feels like the experience is more real”, and another described 360° video as “a more ‘real’ experience” than standard video.

The sense of presence potentially afforded by 360° video is a major motivation for its use over standard video, and our study results support this motivation.

Intimacy

Participants also experienced immersion in 360° video through an increased sense of intimacy with the speaker. Three participants mentioned this directly.

One participant listed the following as a benefit of 360° video: “I found the headset gave me a greater sense of interaction with the presenter, I could understand him a bit more”. Another wrote as a criticism of standard video, “The experience was almost less personal than in VR, so I felt like I could pay less attention to the facts”. This feeling of obligation to the presenter was echoed by a third participant, who wrote “I feel like I have to pay more attention because the person speaking feels more like a real person”.

3.2.3. Learning

This theme encapsulated any discussion of how the video formats may affect learning outcomes, or of the learning content of the videos themselves. It was the least common theme, coded only 28 times during the analysis (approximately 10% of the coded data).

Three participants said they felt 360° video helped with their learning outcomes, with one stating “it seemed like [my] brain learnt by itself as I do not need to concentrate too much”. The other two directly mentioned it helping with memory retention, with one postulating that “perhaps it also engages spatial processing in the brain more which creates more robust memories”. The other attributed the better retention to novelty and immersion, saying they “felt HMD is the more memorable experience hence [they] would be able to remember and recall more things”.

However, most responses under this theme concerned which types of learning content are appropriate for standard and 360° video.

Content

Generally, responses about content suggested that the effectiveness of 360° video would depend on the content being taught and how it was presented.

When asked which video format they would prefer for watching lecture recordings, nine participants said they would prefer 360° video, eight would prefer standard video, and two said it would depend on the content of the lecture.

Participants who preferred 360° video for lecture recordings cited increased immersion and novelty as the reason. One participant even said they would use 360° video until the novelty wore off and would likely switch to standard video after that.

Participants who would prefer standard video for lecture recordings gave several reasons: they could more easily take notes, they would be less likely to encounter distractions, and a lecture recording is not appropriate content for 360° video. Combining the last two reasons, one participant wrote “If it’s a lecture recording; there’s no need to be aware of my surroundings—it’d only be an unnecessary distraction”. Another wrote quite directly “The content of the lectures doesn’t get improved by HMD. A lecture is a lecture!”

The topic of content appropriateness was raised again by several participants when giving their opinion of 360° video as an educational tool. In one participant’s words: “For material which benefits from being in the environment, it’s a much more enjoyable way to learn it. For material which has no benefit from being in the environment (e.g., a traditional lecture), the desktop is more comfortable to use”.

A couple of participants linked this idea back to distractions, with one saying “the content should be related to the spherical video, otherwise it’s too easy to get distracted”. A similar point was made by another participant, who responded “immersing students in a subject is how you best learn. If you could immerse a student and make sure the distractions are informational, you have a very powerful tool”.

The presentation of learning content is closely tied to the themes of attention and distraction reported above. The implications of these findings will be explored in the discussion.

3.2.4. Technological Limitations

The final theme in our thematic analysis encompasses any issues relating to the technologies themselves. Responses were coded as relating to this theme 85 times (approximately 31% of the coded data) and came largely from questions on the user evaluation about screen and video quality, motion sickness, and other issues encountered by participants.

The issues discussed varied considerably; however, they can be classified as relating either to the comfort of the participants or to the viewing experience and video quality. The other notable sub-theme that emerged was leniency: multiple participants were forgiving of technical issues with 360° video. These three sub-themes are discussed below in the final sections of this thematic analysis.

Comfort

Problems with the physical comfort of the HMD were among the most common issues with 360° video, mentioned by eight of the participants. Of these, two described a sense of disorientation when removing the HMD, while another reported difficulty swivelling the computer chair while watching 360° video. The remaining five participants all mentioned that the HMD was heavy, with several stating that it caused neck discomfort.

Surprisingly, and despite being explicitly asked about it, only one participant reported any motion sickness while wearing the HMD.

The final comfort issue with viewing 360° video was not physical: students cannot take notes while wearing a VR HMD. This was explicitly mentioned by three of the participants, two of whom gave it as their reason for believing that 360° video may not be an effective tool for education overall.

Viewing Experience

An equal number of participants reported issues with the quality of the 360° video and of the standard video: six participants in each format said their learning was affected by video or screen quality.

This is surprising: although the videos were of the same resolution, the 360° video is spread over the viewer’s full visual range, which generally decreases perceived quality. We anticipated more issues with 360° video than with standard video, yet two participants even stated they believed the 360° video had a higher resolution than the standard video. This suggests a degree of leniency towards 360° video among participants, which is discussed in the next section.
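A rough way to see why equal resolution does not imply equal perceived quality is to compare angular pixel density, that is, how many source pixels fall within each degree of the viewer’s visual field. The sketch below rests on hypothetical values, none taken from our study setup: a source width of 3840 pixels for both formats, an equirectangular 360° frame that wraps its full width around the viewer, and a 0.6 m wide monitor viewed from 0.6 m. It ignores the display’s own pixel grid, which matters for effects such as the Screen Door Effect.

```python
import math


def subtended_angle_deg(width_m: float, distance_m: float) -> float:
    """Horizontal angle (degrees) that a flat screen of the given width subtends."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


def pixels_per_degree(width_px: int, span_deg: float) -> float:
    """Average number of source pixels per degree of the visual field."""
    return width_px / span_deg


WIDTH_PX = 3840  # hypothetical source width, identical for both formats

# Equirectangular 360-degree frame: its full width wraps around the viewer.
ppd_360 = pixels_per_degree(WIDTH_PX, 360.0)

# Desktop video: the same width is shown within the angle the monitor
# subtends (hypothetical 0.6 m wide screen viewed from 0.6 m away).
ppd_desktop = pixels_per_degree(WIDTH_PX, subtended_angle_deg(0.6, 0.6))

print(f"360 video: {ppd_360:.1f} px/deg, desktop: {ppd_desktop:.1f} px/deg")
```

Under these assumptions, the desktop video packs several times more source pixels into each degree of view, which is why the 360° video would be expected to look softer at the same resolution.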

Three participants encountered a specific issue with viewing quality in 360° video: the Screen Door Effect. These participants described being able to identify individual pixels, with one writing “Because the pixels were visible, my immersion and attention were both negatively affected”. This was the only viewing quality issue ascribed to the screen itself, rather than to the resolution of the video.

Leniency

In reviewing responses about the limitations of 360° video, an interesting trend emerged of participants expressing leniency towards these limitations. This forgiveness was expressed by six of the participants and usually came in the form of a criticism followed immediately by a justification as to why it was unimportant.

For example, when asked if video quality affected their experience, one participant wrote: “Yes! But overall the experience felt quite real so the low quality was a compromise”. Another similarly responded “Yes, resolution could have been better, but I think that being able to interact with the environment lessened the impact of this”.

This leniency could have a number of causes, including novelty and social desirability bias, which are discussed further in Section 5.

3.3. Learning Retention

The second major section of results involves learning retention. Learning retention was measured through the Short-Term Retention Test, taken by participants directly after watching the videos, and the Long-Term Retention Test, taken 6 weeks later. The tests comprised multiple-choice and short-answer questions separated into two sections: “Content Retention” and “Location Recognition”.

Test scores for short- and long-term retention were analysed by comparing the scores of participants who experienced the same recording in 360° and standard video. This involved comparing two pseudo-groups, HMD and PC, each of which contained every participant, but only for questions about the recording that participant had watched in that format. For example, for questions pertaining to the “James Cook Domain” recording, scores from Group 2 were considered HMD because they experienced it in 360° video, while scores from Group 1 were considered PC. For questions about the “Rawene Nature Reserve” recording, scores from Group 1 were considered HMD, and scores from Group 2 were considered PC.
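The pseudo-group assignment above can be expressed as a lookup: a participant’s mark on a question counts as HMD if the question concerns the recording their group watched on the HMD, and as PC otherwise. The group-to-recording mapping below follows the text; the function names and the `(group, recording, mark)` tuple format are hypothetical, and the sketch covers only the two location recordings, not the special case videos.

```python
# Recording each group watched on the HMD, as described in Section 3.3;
# each group watched the other location recording as standard video on the PC.
HMD_RECORDING = {
    "Group 1": "Rawene Nature Reserve",
    "Group 2": "James Cook Domain",
}
KNOWN_RECORDINGS = set(HMD_RECORDING.values())


def pseudo_group(participant_group: str, question_recording: str) -> str:
    """Return 'HMD' or 'PC' for one participant's answer to one question."""
    if participant_group not in HMD_RECORDING:
        raise ValueError(f"unknown group: {participant_group}")
    if question_recording not in KNOWN_RECORDINGS:
        raise ValueError(f"unknown recording: {question_recording}")
    return "HMD" if HMD_RECORDING[participant_group] == question_recording else "PC"


def split_scores(answers):
    """Pool marks into the HMD and PC pseudo-groups.

    `answers` is a hypothetical iterable of (group, recording, mark) tuples.
    """
    pools = {"HMD": [], "PC": []}
    for group, recording, mark in answers:
        pools[pseudo_group(group, recording)].append(mark)
    return pools
```

Every participant thus contributes to both pseudo-groups, but never for the same recording.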

This setup effectively splits the sample into two groups, each with n = 10. The reduced sample size means that statistical comparisons are less powerful than would be desired, but they can still indicate whether an effect may be present and could warrant further investigation. According to Shapiro–Wilk tests, none of the score distributions for HMD and PC in either test differed significantly from normality, so they were compared using two-tailed two-sample t-tests assuming unequal variances (Welch’s t-tests). These results are reported in Section 3.3.1 and Section 3.3.2.
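A two-sample t-test that does not assume equal variances is Welch’s t-test: it divides the difference in means by the combined standard error and uses the Welch–Satterthwaite approximation for the degrees of freedom. The standard-library sketch below computes the statistic and degrees of freedom only; the two-tailed p-value needs the t distribution, for which SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=False)` can be used, and `scipy.stats.shapiro` performs the Shapiro–Wilk normality check. The function name is illustrative and this is not necessarily the exact tool used in our analysis.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Uses sample variances (n - 1 denominator), so each group needs at least
    two scores, and the groups must not both have zero variance.
    """
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df
```

With n = 10 per pseudo-group, the Welch degrees of freedom can be at most 18 (the value Student’s pooled test would use) and fall lower when the two groups’ variances differ.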

The Short- and Long-Term Retention Tests also included questions about the special case videos, which sought to test active and passive visual recollection and recollection from a lecture theatre environment. These questions were part of the “Content Retention” section of the tests and are analysed individually in Section 3.3.4 below.

3.3.1. Short-Term Retention

The Short-Term Retention Test was scored out of 32 total marks. A total of 27 marks were available for “Content Retention”, and 5 for “Location Recognition”.

From Table 4, we can see that the total mean marks of both the HMD and PC groups are very similar, at 21.65 and 21.45, respectively.

Table 4.

Results of the Short-Term Retention Test, comparing the learning outcomes of the HMD and PC groups. Mean scores for each group and test section are given with a 95% confidence interval.

These means correspond to 67.66% and 67.03% of the available marks.

The overall t-test found , suggesting that there is no statistically significant difference in the learning outcomes of the two groups.

While the HMD group did appear to perform better in the “Location Recognition” questions, a second t-test run on just these results found , indicating that the difference was not statistically significant.

3.3.2. Long-Term Retention

The Long-Term Retention Test was scored out of 33 marks, with 27 available for “Content Retention” and 6 for “Location Recognition”.

From Table 5, we can see that again, while the HMD group performed better on average than the PC group, the difference in learning outcomes was not statistically significant.

Table 5.

Results of the Long-Term Retention Test, comparing the learning outcomes of the HMD and PC groups. Mean scores for each group and test section are given with a 95% confidence interval.

The HMD group achieved an average of 15.25 (46.21%), while the PC group averaged 14.7 (44.55%), and the t-test found .
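The percentages reported for both retention tests are each group’s mean mark divided by the marks available on that test. The sketch below recomputes them from the means and totals stated in Sections 3.3.1 and 3.3.2; the dictionary and function names are illustrative.

```python
# Mean marks and available marks, as reported for each retention test.
RESULTS = {
    "short-term": {"available": 32, "HMD": 21.65, "PC": 21.45},
    "long-term": {"available": 33, "HMD": 15.25, "PC": 14.7},
}


def percent_of_available(mean_mark: float, available: int) -> float:
    """Express a mean mark as a percentage of the marks available."""
    return 100 * mean_mark / available


for test, r in RESULTS.items():
    for group in ("HMD", "PC"):
        pct = percent_of_available(r[group], r["available"])
        print(f"{test:10s} {group:3s} {pct:.2f}%")
```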

As the HMD group appeared to perform better on the “Location Recognition” questions, a second t-test was conducted on these results for the two groups. However, the result was not significant at the threshold, with .

3.3.3. Question Performance

Interestingly, Table 6 shows that the HMD group performed better on more than twice as many questions as the PC group in both the Short- and Long-Term Retention Tests. We investigated the probability of this occurring by chance using a binomial test, assuming equal probability that either group would outperform the other on a question. The cumulative probability that the HMD group performed better on 24 questions across the two tests is , under the null hypothesis that video format had no effect.

Table 6.

Comparative performance on questions between the HMD and PC groups across the Short- and Long-Term Retention Tests.

While this value is not significant at the threshold, it suggests that a study with more power may be able to detect an effect on learning, or that the impact of video format may vary considerably between types of question.
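The binomial test treats each question on which one group outperformed the other as a fair coin flip under the null hypothesis (p = 0.5) and asks how likely it is that one group wins at least k of n such questions. A minimal standard-library sketch of this one-sided upper-tail probability follows. The total n comes from Table 6, which is not reproduced here, and excluding tied questions from n is an assumption of this sketch; SciPy’s `scipy.stats.binomtest(k, n, p, alternative="greater")` computes the same tail probability.

```python
from math import comb


def binomial_upper_tail(k: int, n: int, p: float = 0.5) -> float:
    """One-sided binomial test: P(X >= k) for X ~ Binomial(n, p)."""
    if not 0 <= k <= n:
        raise ValueError("k must satisfy 0 <= k <= n")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical usage: one group outperforms on 8 of 10 non-tied questions.
print(round(binomial_upper_tail(8, 10), 4))
```

The choice of a one-sided tail matches a hypothesis that the HMD group specifically outperforms; a two-sided test would roughly double the probability for a symmetric null.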

3.3.4. Special Case Questions

As outlined in Section 2.3, participants were tested on three special interest cases: recollection from a lecture theatre, and active and passive visual recollection. Content from the lecture theatre video accounted for four marks in both the Short- and Long-Term Retention Tests, and one mark was assigned to each of the visual recollection cases. The results of these questions are shown in Table 7, along with the t-test results comparing the performance of the HMD and PC groups.

Table 7.

Results of the “special case” questions, comparing between the HMD and PC groups across the Short- and Long-Term Retention Tests. Mean scores for each group and case are given with a 95% confidence interval.

From Table 7, we can see that the HMD group performed better on all of the cases across both tests, with the exception of long-term retention of passive visual information, for which the two groups performed equally. Though this may suggest a trend, the t-tests again failed to identify any significant differences for the individual factors.

4. Discussion

- RQ1. What differences in user experience exist when presenting educational content in 360° video on a virtual reality head-mounted display, compared to standard video on a desktop PC?

The results of our user evaluation analysis highlighted many of the key differences between using standard and 360° video.

In terms of general preference, participants enjoyed 360° video more, felt more engaged by it, and would prefer to use it as additional material for their learning, provided they could access it as easily as standard video. Participants largely attributed this to the increased sense of immersion in, and interaction with, the environment in 360° video, amplified by feelings of presence and realism. These results are consistent with existing research on immersive VR and support our motivations for using 360° video as a learning tool.

Looking further into the thematic analysis, we see a more complex relationship between immersion and engagement in the two video formats. While 360° video was more engaging, participants commonly reported being more distracted while using it, primarily by the interesting, immersive environment itself. The extra visual information pulled attention away from the speaker in the video, who was delivering the learning content verbally.

This illuminated a distinction in attention and focus between the video formats. Participants watching standard video were more likely to get bored and be distracted by elements of the real world; however, they paid more attention to the core learning elements of the video. Viewers of 360° video were more engaged overall, but this did not translate directly into engagement with the learning content itself.

While a couple of participants took this as evidence that standard video was the better teaching tool, more noted the potential of harnessing distractions in 360° video. They suggested that if the visual environment reinforces the learning content rather than distracting from it, 360° video has the potential to create greater engagement with learning content than standard video.

This relates directly to another major emergent theme: content appropriateness. Participants stated that 360° video would be better suited to topics that rely on visual and environmental information than to those taught verbally or through text.

Our study design should be taken into account when discussing distractions and content appropriateness. We included videos that were heavy in visual information (e.g., memorising details inside a car) and heavy in verbal information (e.g., a traditional lecture recording), incidentally allowing participants to compare the two kinds of content. We also tried to make the experience as comparable as possible between the two video formats by restricting the learning content in the videos to a frame that could also be captured as standard video. By design, this meant most of the environmental information in the 360° videos was not part of the learning content, which likely increased the effect of distractions.

Interestingly, the sub-theme of intimacy suggested that 360° video may have an advantage with some verbal content. Some participants felt an increased sense of connection to the speaker due to the presence and realism of the 360° format, which translated into a sense of obligation to listen. This kind of authentic connection can be particularly useful in settings such as language learning [30].

Enhanced intimacy could even be harnessed to create better learning resources for students from cultural backgrounds that emphasise the relationship between student and teacher as a part of learning. For instance, Reynolds suggests that for students of Pacific Island background, “teu le va [the nurturing and valuing of a relationship] between a teacher and student is crucial because a student’s identification with a subject can come through a positive connection with a teacher” [66]. With the increasing prevalence of digital learning resources, consciously maintaining an element of human intimacy as part of their design will benefit some students.

The final major difference between the video formats to mention here is usability. Watching 360° video was reportedly less comfortable, with a few participants noting the weight of the HMD or a sense of disorientation after using it. These drawbacks of watching videos with HMDs have also been reported in previous research using different types of HMDs [67]. Participants also noted that they could not take written or typed notes while in immersive VR. Overall, however, these limitations did not appear to outweigh the benefits of 360° video, as participants also claimed they could study from both formats for similar amounts of time and would prefer to use 360° video to do so.

- RQ2. What differences in short- and long-term learning retention exist when presenting educational content in 360° video on a virtual reality head-mounted display, compared to standard video on a desktop PC?

Our analysis of the results of the Short- and Long-Term Retention Tests found no statistically significant differences in learning retention between the HMD and PC pseudo-groups. This is true of the overall scores, the separate scores for the “Content Retention” and “Location Recognition” sections of the tests, and the scores associated with the three “special case” videos.

This suggests that 360° video was as effective as standard video in conveying learning content, even with the limitations we placed on production; in the context of this study, there were no major differences in short- or long-term retention.

It should be noted that the small effective sample size (n = 10 per group) limited the statistical power of this study. Looking at the results in aggregate, the HMD group outperformed the PC group on every measure except two (short-term content retention and long-term passive visual recollection) and scored better on over twice as many individual questions. As mentioned, none of these results were statistically significant at the threshold, but they suggest that a study with greater statistical power may be able to detect an effect.

Our results are interesting in the context of previous research, which has focused on evaluating the use of 360° video for specific applications and/or different VR technologies in education.

Schroeder et al. [63] conducted a review investigating how 360° video influences cognitive learning outcomes. The authors identified 26 studies and reported that, overall, there was no evidence of benefits or detriments to learning. They also examined specific properties of 360° video, such as interactivity and contextual information, but neither property had a significant effect on learning. These findings are in line with our results for short-term and long-term learning retention.

Baysan et al. [67] reviewed the use of 360° video for nursing education. The authors included 12 studies in their review and found that 360° video can improve motivation, confidence, and task performance. A total of 4 of the 12 reviewed studies used a smartphone-based HMD for better accessibility, as we did in our study. Only one study compared display options, finding that viewing 360° video with an HMD was preferred to using a touch screen, but its authors did not investigate the effect of these options on learning [68].

Rosendahl and Wagner [59] reviewed application areas of 360° video in education. The authors found 44 papers and reported that 360° videos are mainly used for three teaching–learning purposes: presentation and observation of teaching–learning content, immersive and interactive theory–practice mediation, and external and self-reflection. Our research focused on learning retention, which is relevant to the first two of these purposes. The authors cite multiple studies reporting that viewer engagement increases with the immersion level (e.g., [69,70]), but only Rupp et al. investigated the effect on knowledge retention, reporting that 360° videos viewed on the most immersive display enabled users to remember more verbally presented information [69]. However, Rupp et al. did not investigate long-term retention.

Atal et al. reviewed the use of 360° video in teacher education [71]. The authors analysed 17 papers and found that 360° video is a preferred method for overcoming the limitations of standard video, as it offers viewers multiple perspectives and levels of decision-making and enables them to notice more details faster due to the ability to look around freely. Furthermore, the authors cite a few studies investigating different display technologies for 360° video. Two studies suggested a potential usefulness of HMDs for perceptual capacity, reflection, and teacher noticing [72,73], and one study showed no added usefulness of HMDs [74].

Muzata et al. [75] reviewed 66 publications and reported that 360° video constitutes one of the most beneficial learning environments due to (1) the low cost of the required equipment; (2) the ability of viewers to employ their expected sensory–motor contingencies, such as head movements; and (3) the encouragement for viewers to use more immersive technologies. VR is preferred when users need to interact with objects and explore them in detail, e.g., in engineering and medicine.

In summary, our study supports and builds upon previous research. Learners enjoy using 360° video for education, and there are few usability and accessibility issues. Given a choice, most students prefer using an HMD over a desktop display for viewing. In our study, the display technology had no significant effect on learning, although we did observe higher (non-significant) values when using an HMD. This is consistent with the results of Rupp et al., who found in a larger study that participants using an HMD remembered more verbally presented information [69]. Unlike Rupp et al., we also investigated long-term retention, where we again found (non-significant) higher values. This indicates that, while we found no evidence that using an HMD is better, a larger study may reveal such an effect. Similar to previous research, we found that HMDs result in increased enjoyment and engagement, which might have a positive long-term effect on learning [76].

5. Limitations

Novelty is a commonly observed confounding effect in studies involving new learning technologies [77,78], including immersive VR [22]. It can lead to increased motivation or perceived usability of a technology, which may translate into increased attention and engagement in learning activities [7]. The responses to the user evaluation showed evidence of the novelty effect among participants, which should be taken into account when interpreting these results. The novelty effect also manifested itself as leniency towards issues with 360° video.

Connected to this novelty effect is the potential for anchoring bias in our study results. Participants will likely have watched many standard videos in the past, giving them high-quality reference points, or “anchors”, against which to evaluate the study videos. However, they are much less likely to have encountered 360° videos before and, therefore, may have no expectations of quality for that format. The anchoring effect can lead to harsher criticism of experiences that can be compared with an anchor, as well as more lenient evaluations of novel experiences [79].

Another factor that could generate leniency is social desirability bias, in which survey responses are shaped by a participant’s desire to project a favourable image to others [80,81]. In this study, participants may have been less critical of 360° video, aware that we (the researchers) were studying the format, and mistakenly believing we might view them unfavourably for answering negatively.

As noted previously, the other major limitation of this study is the small sample size, which limited the power of our statistical analysis to detect differences in learning outcomes between the HMD and PC groups. In addition, most of our user study participants were students or staff from Computer Science, and all of them were at least 18 years old.

Future studies comparing 360° and standard video will need larger sample sizes and more diverse user study populations, including children, to draw firm conclusions about any effects on learning retention in different learning applications. Furthermore, more elaborate longitudinal studies are needed to assess the long-term impact on motivation and academic performance.

6. Conclusions

In this paper, we presented and analysed the results of a user study evaluating differences in user experience and short- and long-term learning retention among tertiary students viewing educational videos in both 360° and standard desktop formats. We found that participants retained a similar amount of learning from both types of video, but engaged with and enjoyed 360° video more. We also found that participants believed they could study using either format for a similar amount of time and would generally prefer to use 360° video as additional learning material for their coursework, provided it was accessible.

To make the experience of the 360° and standard videos used in this study more comparable, we placed restrictions on the production of the videos. This means our results were obtained using 360° videos produced in an accessible way, in parallel with standard video production. This indicates that 360° video is a viable way for institutions to continue generating value from an investment in immersive VR technology, without having to upskill their current learning content creators or pay for expensive bespoke VR development. We believe that 360° video also offers an attractive alternative to other new learning technologies such as game-based learning, which, while highly engaging, suffers from high content creation costs, vendor lock-in, limited diversity in teaching, and often an excessive focus on entertainment [82].

User responses also suggested that 360° video has much greater potential for learning engagement if additional effort is put into integrating the learning content into the surrounding environment. Our results show that, even at its most accessible level, 360° video is as effective as, and more engaging than, standard video. However, it is a different medium with a different user experience, creating engagement and enjoyment through a sense of immersion in the wider environment. To unlock the potential of 360° video for education, this environment should be harnessed to reinforce learning objectives.

We see significant potential in combining 360° videos with other VR technologies, as demonstrated in previous research [40,42,43,44,45,46]. In an educational context, 360° videos can be used in VR to provide realistic backgrounds (skyboxes) and instructor information, or to display large-scale scenes that are difficult to model or reconstruct manually (e.g., archaeological sites), whereas modelled 3D VR content should be used for objects that users need to interact with.
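Using a 360° video as a skybox amounts to finding, for each viewing direction, the matching pixel of the current video frame. The minimal sketch below shows that lookup under several stated assumptions. It assumes the frame uses the common equirectangular projection. It also assumes one particular axis convention (+y up, camera looking down −z, v = 0 at the top row); such conventions differ between engines and players. The function names are hypothetical. In a real renderer this lookup would usually run on the GPU or be baked into the UVs of a sphere mesh, and Python is used here only for readability.

```python
import math
from typing import Tuple


def direction_to_equirect_uv(x: float, y: float, z: float) -> Tuple[float, float]:
    """Map a 3D view direction to (u, v) in [0, 1] on an equirectangular frame.

    Assumed (hypothetical) convention: +y is up, the camera looks down -z,
    u increases to the viewer's right and v = 0 is the top row of the frame.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    # Longitude: angle around the vertical axis, 0 when looking straight ahead (-z).
    longitude = math.atan2(x, -z)  # range (-pi, pi]
    # Latitude: elevation above the horizon; clamping guards against rounding past +/-1.
    latitude = math.asin(max(-1.0, min(1.0, y)))  # range [-pi/2, pi/2]
    u = 0.5 + longitude / (2.0 * math.pi)
    v = 0.5 - latitude / math.pi
    return u, v


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Convert (u, v) to integer (column, row) indices, clamped to the frame."""
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Looking straight ahead lands in the centre of a 3840 x 1920 frame.
u, v = direction_to_equirect_uv(0.0, 0.0, -1.0)
print(uv_to_pixel(u, v, 3840, 1920))
```

Because every direction maps to a fixed location in the frame, the same mapping can display each new video frame without remodelling the scene. This is what makes 360° video a cheap source of realistic surroundings compared with modelled 3D content.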

Furthermore, 360° video could be useful in learning applications where cognitive load must be measured. Cognitive load measurements can be used to adjust the difficulty of learning content [83]. However, measuring cognitive load in interactive VR applications is difficult because body movements can interfere with physiological measures of cognitive load [84,85,86]. 360° video could be better suited to such applications, since users typically move relatively little while watching. Conversely, students’ limited interaction with 360° video is also a limitation, as it makes it difficult to measure user behaviour, which is an important parameter in intelligent tutoring systems [87,88].

Supplementary Materials

A document containing the demographic questionnaire and the questionnaires for the short-term and long-term retention test can be downloaded at https://www.mdpi.com/article/10.3390/electronics14091830/s1.

Author Contributions

Conceptualisation, S.K., A.L.-R., B.C.W., B.P. and S.D.; methodology, S.K., A.L.-R., B.C.W., B.P. and S.D.; software, S.K.; validation, S.K., A.L.-R., B.C.W., B.P. and S.D.; formal analysis, S.K.; investigation, S.K.; resources, S.K.; data curation, S.K.; writing—original draft preparation, S.K.; writing—review and editing, S.K., A.L.-R., B.C.W., B.P. and S.D.; supervision, A.L.-R., B.C.W. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The user study was approved by the University of Auckland Human Participants Ethics Committee, reference number UAHPEC016696.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are unavailable due to privacy or ethical restrictions.

Acknowledgments

The authors would like to acknowledge and thank our user study participants for their interest, effort, and contribution.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Merchant, Z.; Goetz, E.T.; Cifuentes, L.; Keeney-Kennicutt, W.; Davis, T.J. Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Comput. Educ. 2014, 70, 29–40.

- Kavanagh, S.; Luxton-Reilly, A.; Wünsche, B.; Plimmer, B. A Systematic Review of Virtual Reality in Education. Themes Sci. Technol. Educ. 2017, 10, 85–119.

- Mikropoulos, T.A.; Natsis, A. Educational virtual environments: A ten-year review of empirical research (1999–2009). Comput. Educ. 2011, 56, 769–780.

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Freina, L.; Ott, M. A Literature Review on Immersive Virtual Reality in Education: State of the Art and Perspectives. In Proceedings of the Conference on eLearning and Software for Education (eLSE 2015), Bucharest, Romania, 23–24 April 2015; pp. 133–141.

- Loup, G.; Serna, A.; Iksal, S.; George, S. Immersion and Persistence: Improving Learners’ Engagement in Authentic Learning Situations. In Proceedings of the European Conference on Technology Enhanced Learning, Lyon, France, 13–16 September 2016; Volume 9891.

- Huang, W.; Roscoe, R.D.; Johnson-Glenberg, M.C.; Craig, S.D. Motivation, engagement, and performance across multiple virtual reality sessions and levels of immersion. J. Comput. Assist. Learn. 2021, 37, 745–758.

- Mütterlein, J. The Three Pillars of Virtual Reality? Investigating the Roles of Immersion, Presence, and Interactivity. In Proceedings of the 51st Annual Hawaii International Conference on System Sciences (HICSS ’18), Waikoloa Village, HI, USA, 3–6 January 2018.

- North, M.M.; North, S.M. A Comparative Study of Sense of Presence of Traditional Virtual Reality and Immersive Environments. Australas. J. Inf. Syst. 2016, 20, 1–15.

- Purchese, R. Oculus Answers the Big Rift Questions. 2014. Available online: http://www.eurogamer.net/articles/2014-09-01-oculus-answers-the-big-rift-questions (accessed on 24 April 2025).

- Sony. PlayStation®VR Launches October 2016 Available Globally at 44,980 Yen, $399 USD, €399 and £349. 2016. Available online: https://web.archive.org/web/20160522011956/http://www.sony.com/en_us/SCA/company-news/press-releases/sony-computer-entertainment-america-inc/2016/playstationvr-launches-october-2016-available-glob.html (accessed on 24 April 2025).

- HTC Corporation. Vive Now Shipping Immediately from HTC. 2016. Available online: https://www.htc.com/us/newsroom/2016-06-07/ (accessed on 24 April 2025).

- Samsung. Samsung Gear VR. 2025. Available online: https://www.samsung.com/nz/support/model/SM-R322NZWAXNZ/?srsltid=AfmBOorKIv4cFB4ud564v9hoZ67RUj8b6UXaToOPyMwN1-jS1W-HfKec (accessed on 24 April 2025).

- Cipresso, P.; Giglioli, I.A.C.; Raya, M.A.; Riva, G. The Past, Present, and Future of Virtual and Augmented Reality Research: A Network and Cluster Analysis of the Literature. Front. Psychol. 2018, 9, 2086.

- Wang, P.; Wu, P.; Wang, J.; Chi, H.L.; Wang, X. A Critical Review of the Use of Virtual Reality in Construction Engineering Education and Training. Int. J. Environ. Res. Public Health 2018, 15, 1204.

- Bashabsheh, A.K.; Alzoubi, H.H.; Ali, M.Z. The application of virtual reality technology in architectural pedagogy for building constructions. Alex. Eng. J. 2019, 58, 713–723.

- Lerner, D.; Mohr, S.; Schild, J.; Göring, M.; Luiz, T. An Immersive Multi-User Virtual Reality for Emergency Simulation Training: Usability Study. JMIR Serious Games 2020, 8, e18822.

- Egea-Vivancos, A.; Arias-Ferrer, L. Principles for the design of a history and heritage game based on the evaluation of immersive virtual reality video games. E-Learn. Digit. Media 2021, 18, 383–402.

- Innocenti, E.D.; Geronazzo, M.; Vescovi, D.; Nordahl, R.; Serafin, S.; Ludovico, L.A.; Avanzini, F. Mobile virtual reality for musical genre learning in primary education. Comput. Educ. 2019, 139, 102–117.

- Huang, H.M.; Rauch, U.; Liaw, S.S. Investigating learners’ attitudes toward virtual reality learning environments: Based on a constructivist approach. Comput. Educ. 2010, 55, 1171–1182.

- Sharma, S.; Agada, R.; Ruffin, J. Virtual Reality Classroom as an Constructivist Approach. In Proceedings of the IEEE Southeastcon, Jacksonville, FL, USA, 4–7 April 2013; pp. 1–5.

- Jensen, L.; Konradsen, F. A review of the use of virtual reality head-mounted displays in education and training. Educ. Inf. Technol. 2018, 23, 1515–1529.

- Alhalabi, W. Virtual reality systems enhance students’ achievements in engineering education. Behav. Inf. Technol. 2016, 35, 919–925.

- Allcoat, D.; von Mühlenen, A. Learning in virtual reality: Effects on performance, emotion and engagement. Res. Learn. Technol. 2018, 26, 1–13.

- Budziszewski, P. A Low Cost Virtual Reality System for Rehabilitation of Upper Limb. In Proceedings of the 5th International Conference of Virtual, Augmented and Mixed Reality. Systems and Applications (VAMR 2013), Las Vegas, NV, USA, 21–26 July 2013; Shumaker, R., Ed.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2013.

- Allison, D.; Hodges, L.F. Virtual reality for education? In Proceedings of the ACM Symposium on Virtual Reality Software and Technology (VRST ’00), Seoul, Republic of Korea, 22–25 October 2000; pp. 160–165.

- Wiecha, J.; Heyden, R.; Sternthal, E.; Merialdi, M. Learning in a virtual world: Experience with using second life for medical education. J. Med. Internet Res. 2010, 12, e1.

- Mirocha, L. Novel Approaches in Game Engine-Based Content Creation: Unveiling the Potential of Neural Radiance Fields and 3D Gaussian Splatting. In Proceedings of the SIGGRAPH Asia 2024 Courses, Tokyo, Japan, 3–6 December 2024.

- Cheng, D.; Ch’ng, E. Harnessing Collective Differences in Crowdsourcing Behaviour for Mass Photogrammetry of 3D Cultural Heritage. ACM J. Comput. Cult. Herit. 2023, 16, 19.

- Chen, M.R.A.; Hwang, G.J. Effects of experiencing authentic contexts on English speaking performances, anxiety and motivation of EFL students with different cognitive styles. Interact. Learn. Environ. 2020, 30, 1619–1639.

- Concannon, B.J.; Esmail, S.; Roduta Roberts, M. Head-Mounted Display Virtual Reality in Post-secondary Education and Skill Training. Front. Educ. 2019, 4, 1–23.

- Roche, L.; Cunningham, I.; Rolland, C. What is and what is not 360° video: Conceptual definitions for the research field. Front. Educ. 2025, 10, 1448483.

- Pirker, J.; Dengel, A. The Potential of 360° Virtual Reality Videos and Real VR for Education – A Literature Review. IEEE Comput. Graphics Appl. 2021, 41, 76–89.

- Shadiev, R.; Wang, X.; Wu, T.T.; Huang, Y.M. Review of Research on Technology-Supported Cross-Cultural Learning. Sustainability 2021, 13, 1402.

- Fraustino, J.D.; Lee, J.Y.; Lee, S.Y.; Ahn, H. Effects of 360° video on attitudes toward disaster communication: Mediating and moderating roles of spatial presence and prior disaster media involvement. Public Relations Rev. 2018, 44, 331–341.

- Snelson, C.; Hsu, Y.C. Educational 360-Degree Videos in Virtual Reality: A Scoping Review of the Emerging Research. TechTrends 2020, 64, 404–412.

- Adobe. VR Editing in Premiere Pro. 2025. Available online: https://helpx.adobe.com/nz/premiere-pro/using/VRSupport.html (accessed on 24 April 2025).

- Apple Inc. Final Cut Pro User Guide. 2025. Available online: https://help.apple.com/pdf/final-cut-pro/en_US/final-cut-pro-user-guide.pdf (accessed on 24 April 2025).

- De Castro Araújo, G.; Domingues Garcia, H.; Farias, M.C.; Prakash, R.; Carvalho, M.M. A 360-degree Video Player for Dynamic Video Editing Applications. In ACM Transactions on Multimedia Computing, Communications and Applications; ACM: New York, NY, USA, 2025; pp. 1–22.

- Li, L.; Carnell, S.; Harris, K.; Walters, L.; Reiners, D.; Cruz-Neira, C. LIFT—A System to Create Mixed 360° Video and 3D Content for Live Immersive Virtual Field Trip. In Proceedings of the 2023 ACM International Conference on Interactive Media Experiences (IMX ’23), Nantes, France, 12–15 June 2023; pp. 83–93.

- Huang, X.; Yin, M.; Xia, Z.; Xiao, R. VirtualNexus: Enhancing 360-Degree Video AR/VR Collaboration with Environment Cutouts and Virtual Replicas. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology (UIST ’24), Pittsburgh, PA, USA, 13–16 October 2024.

- Teo, T.; Hayati, A.F.; Lee, G.A.; Billinghurst, M.; Adcock, M. A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology (VRST ’19), Parramatta, Australia, 12–15 November 2019.

- Teo, T.; Lawrence, L.; Lee, G.A.; Billinghurst, M.; Adcock, M. Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–14.