Systematic Mapping Study of Test Generation for Microservices: Approaches, Challenges, and Impact on System Quality

Abstract

1. Introduction

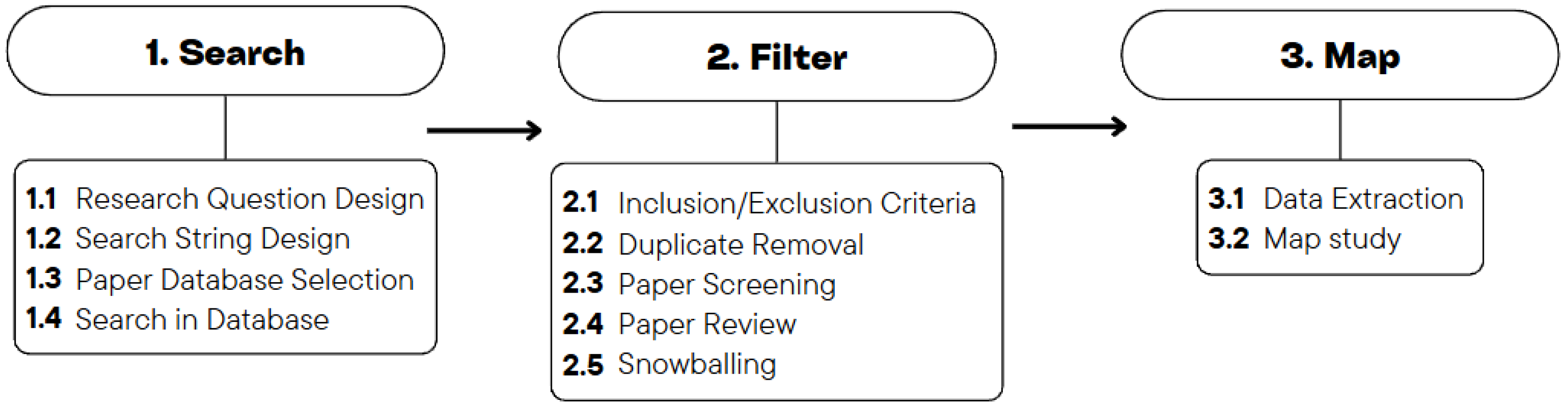

2. Research Method

2.1. Search

2.1.1. Research Questions

RQ1: What are the key approaches and challenges in test generation for microservices?

RQ2: What is the impact of test generation on the quality of microservices-based systems?

2.1.2. Paper Database Selection

2.1.3. Search in the Database

2.2. Filter

2.2.1. Selection Criteria

2.2.2. Selection Process

- Removing Duplicates: We identified duplicate papers resulting from indexing overlaps across databases. A total of 31 duplicates were removed to ensure uniqueness in our dataset.

- Title and Abstract Screening: Next, we manually reviewed the titles and abstracts of the 2313 remaining papers. Each paper was evaluated against predefined inclusion and exclusion criteria. After this screening, 49 papers remained.

- Introduction Review: Some abstracts lacked adequate detail to determine relevance. In these instances, we reviewed the introduction sections to verify alignment with our research questions on test generation for microservices. Papers not explicitly addressing these topics were excluded, reducing the total to 47 papers.

- In-Depth Manual Review: We conducted an extensive review of the remaining papers, focusing on their methodology and results sections. Special attention was given to papers retrieved from SpringerLink because of its limited filtering capabilities. Following this detailed assessment, 46 papers were confirmed as relevant.

- Snowballing [12]: Finally, to identify additional relevant papers potentially missed in previous steps, we performed a backward snowballing search by reviewing the references of each selected paper. This process led to the inclusion of two more papers, bringing the final total of selected papers for our study to 48.
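The selection funnel above can be tallied mechanically. The Python sketch below is illustrative only.

- The study does not state how duplicates were detected. Keying records on their DOI (or, when no DOI is available, on a normalized title) is an assumption.
- The names `normalize_title`, `deduplicate`, and `FUNNEL` are hypothetical.
- The stage counts are those reported above. The initial count of 2344 is derived by adding the 31 removed duplicates to the 2313 papers that remained.

```python
import re

def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and whitespace (assumed matching rule)."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record for each DOI, or for each normalized title when no DOI is present."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        doi = rec.get("doi")
        key = doi.lower() if doi else "title:" + normalize_title(rec["title"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique

# Stage counts reported in Section 2.2.2; the first value is derived (2313 + 31).
FUNNEL = [
    ("Retrieved (derived)", 2344),
    ("After removing duplicates", 2313),
    ("After title/abstract screening", 49),
    ("After introduction review", 47),
    ("After in-depth review", 46),
    ("After backward snowballing", 48),
]

# Print each stage with its change relative to the previous stage.
for (_, prev), (name, count) in zip(FUNNEL, FUNNEL[1:]):
    print(f"{name}: {count} ({count - prev:+d})")
```

Running it prints each stage with its change from the previous one. The only increase is the +2 contributed by backward snowballing.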

2.3. Map

2.3.1. Data Extraction

- Publication details: These provide background information and basic metadata about each paper, which help determine its relevance and credibility. They also show how research is distributed over time and across venues, indirectly revealing major contributors and trends in the field.

- Research focus: This provides clarity on the primary objectives and themes addressed in the paper, allowing us to ascertain its relevance and suitability for addressing our research questions.

- Methodology: This describes how each study was designed and carried out, helping us assess the robustness of the paper and its approach to testing. Understanding the methodology also supports the evaluation of techniques and challenges encountered during the testing phase (RQ1.1, RQ1.2, and RQ1.4). Additionally, it provides insight into the evaluation of test generation methods (RQ2.3).

- Tools and frameworks: These are essential for RQ1.3, which investigates the available tools and frameworks for test generation in microservices. This information helps in understanding the technological landscape and the practical implementations of test generation strategies.

- Findings: These are critical for all research questions, as they summarize the key results and contributions of each paper, helping to identify strengths and weaknesses (RQ1.4), the impact on quality (RQ2), and overall advancements in the field.

- Metrics: These are specifically relevant to RQ2.2 and RQ2.4. They focus on the evaluation criteria used to measure the effectiveness of test generation techniques. These criteria clarify how quality is quantified and how test coverage relates to test generation efficiency.
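The six extraction items can be held in one record per selected paper. The sketch below assumes a flat record.

- The class, its field names, and `RQ_COVERAGE` are hypothetical.
- The mapping only restates which sub-questions each item is said to inform in the list above.
- Findings feed every research question, so they are left out of the mapping.

```python
from dataclasses import dataclass, field

# Sub-questions each extraction item informs, as stated in Section 2.3.1.
RQ_COVERAGE = {
    "methodology": {"RQ1.1", "RQ1.2", "RQ1.4", "RQ2.3"},
    "tools_and_frameworks": {"RQ1.3"},
    "metrics": {"RQ2.2", "RQ2.4"},
}

@dataclass
class ExtractionRecord:
    """One row of a hypothetical extraction form (one per selected paper)."""
    paper_id: str                  # e.g. "P48"
    title: str                     # publication details
    year: int                      # publication details
    venue: str                     # publication details
    research_focus: str
    methodology: str
    tools_and_frameworks: list[str] = field(default_factory=list)
    findings: str = ""
    metrics: list[str] = field(default_factory=list)

    def rqs_supported(self) -> set[str]:
        """Sub-questions this paper can inform, based on its methodology, tools, and metrics items."""
        rqs = set(RQ_COVERAGE["methodology"]) if self.methodology else set()
        if self.tools_and_frameworks:
            rqs |= RQ_COVERAGE["tools_and_frameworks"]
        if self.metrics:
            rqs |= RQ_COVERAGE["metrics"]
        return rqs
```

Keeping the form as a typed record makes missing items visible: a paper with an empty `metrics` list simply contributes nothing to RQ2.2 and RQ2.4.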

2.3.2. Map Study

- Categorization: We organized the extracted data into themes corresponding to RQ1 and RQ2. These themes represent recurring patterns in testing approaches, challenges, and evaluation methods. This process resulted in four primary categories: Testing Techniques, Tools, and Frameworks (C1); Systematic Study Contributions (C2); Impact on Quality (C3); and Metrics (C4). Table 9 presents these research categories, describes their scope, and aligns them with relevant research questions.

- Trend Analysis: We analyzed trends by examining how frequently each area was studied and where the work was published. This analysis identified popular topics, emerging areas of interest, and underexplored aspects of microservices testing.

- Gap Identification: We identified research gaps by analyzing areas with limited coverage. This step is essential for guiding future research on microservices test generation.

- Visualization: To make the findings easier to grasp, we created visualizations such as tables and graphs that concisely summarize the distribution and focus of the selected papers.
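Categorization, trend analysis, and gap identification reduce largely to counting tagged papers. A minimal sketch, assuming each paper carries exactly one category label (C1 to C4) and a publication year. The `gap_threshold` cut-off and the `sample` records are hypothetical and are not data from this study.

```python
from collections import Counter

CATEGORIES = {
    "C1": "Testing Techniques, Tools, and Frameworks",
    "C2": "Systematic Study Contributions",
    "C3": "Impact on Quality",
    "C4": "Metrics",
}

def category_counts(papers):
    """Number of papers per category; categories with no papers get 0."""
    counts = Counter(p["category"] for p in papers)
    return {c: counts.get(c, 0) for c in CATEGORIES}

def yearly_trend(papers, category):
    """Papers per publication year for one category, sorted by year."""
    years = Counter(p["year"] for p in papers if p["category"] == category)
    return dict(sorted(years.items()))

def gaps(papers, gap_threshold=2):
    """Categories whose paper count falls below the (hypothetical) threshold."""
    return [c for c, n in category_counts(papers).items() if n < gap_threshold]

# Hypothetical sample, for illustration only.
sample = [
    {"id": "A", "category": "C1", "year": 2022},
    {"id": "B", "category": "C1", "year": 2023},
    {"id": "C", "category": "C1", "year": 2023},
    {"id": "D", "category": "C3", "year": 2020},
    {"id": "E", "category": "C3", "year": 2023},
    {"id": "F", "category": "C4", "year": 2023},
]
print(category_counts(sample))
print(yearly_trend(sample, "C1"))
print(gaps(sample))
```

A fixed threshold is the simplest possible gap criterion. In practice the cut-off is a judgment call, and the counts mainly serve to feed the tables and graphs.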

3. Results

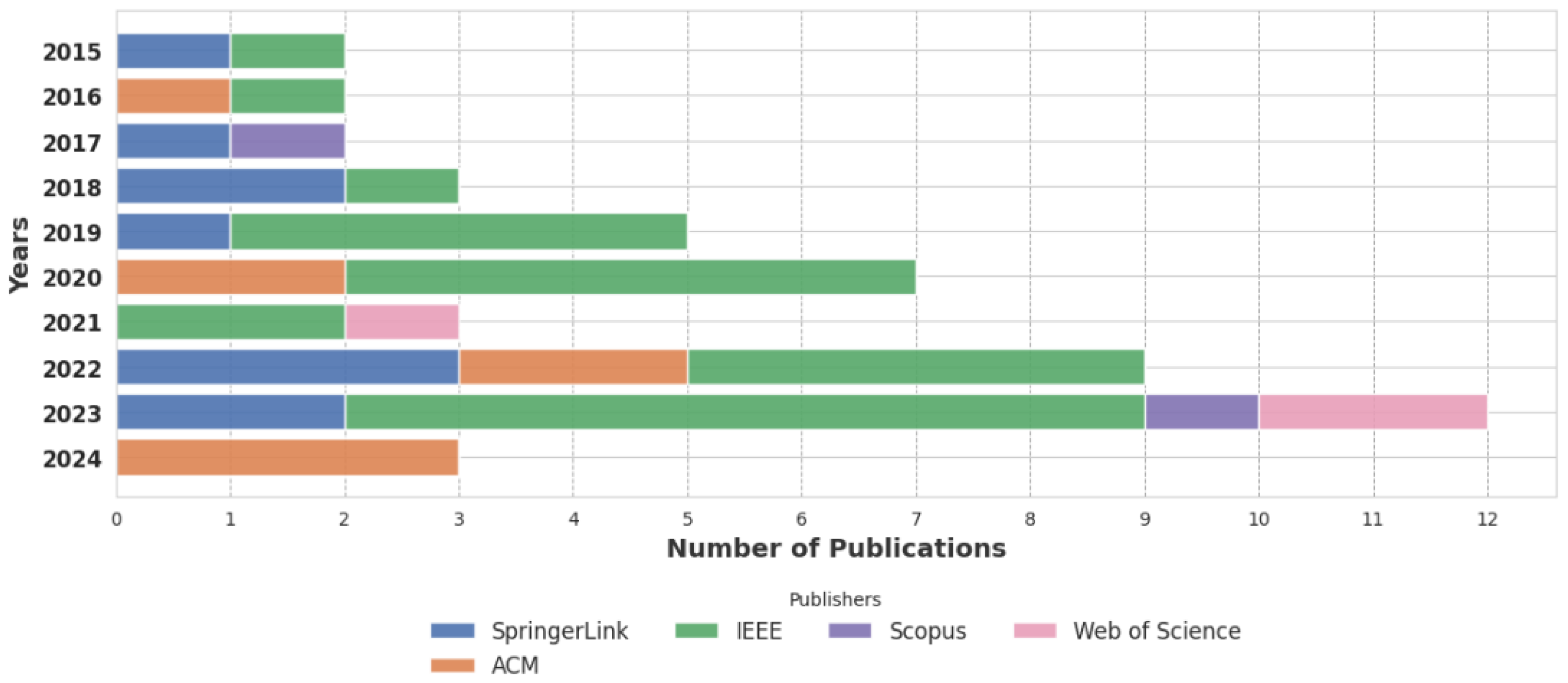

3.1. Trends in Microservice Testing Publications

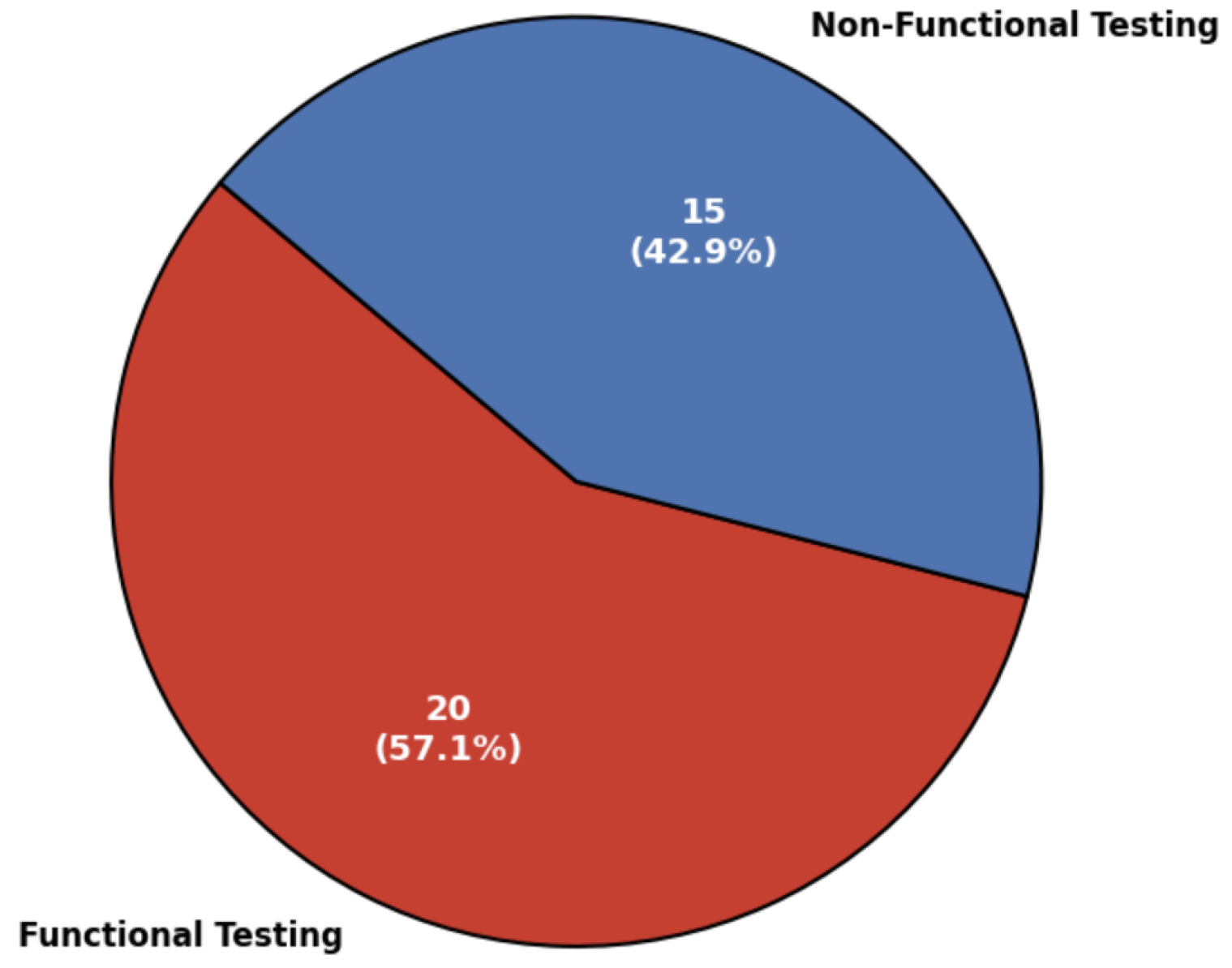

3.2. General Quantitative Trends of Microservice Testing

- Testing Techniques, Tools, and Frameworks (C1): A total of twenty-nine papers (60.4% of the final set) concentrate on designing or enhancing specialized testing methods and automation frameworks tailored to microservices. This prevalence underscores the community’s focus on practical, hands-on solutions that address testing challenges in distributed services.

- Impact on Quality (C3): Fourteen papers (29.2%) explore how various test generation strategies influence system reliability, fault tolerance, and performance. Their empirical findings guide practitioners in strengthening microservice architectures.

- Metrics (C4): Only one paper (2.1%), P48 [59], explicitly targets metrics for assessing test effectiveness or performance in microservices. This scarcity suggests a need for standardized measurement approaches, which would help practitioners compare testing methods rigorously.
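The percentages above are shares of the 48 selected papers, rounded to one decimal place. A quick check in Python (the helper `share` is illustrative):

```python
TOTAL_PAPERS = 48  # final set after backward snowballing (Section 2.2.2)

def share(count: int, total: int = TOTAL_PAPERS) -> float:
    """Percentage of the final set, rounded to one decimal place."""
    return round(100 * count / total, 1)

# Category: (number of papers, percentage reported in the text).
reported = {"C1": (29, 60.4), "C3": (14, 29.2), "C4": (1, 2.1)}
for category, (count, pct) in reported.items():
    assert share(count) == pct, category
    print(f"{category}: {count}/{TOTAL_PAPERS} = {share(count)}%")
```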

4. Approaches and Challenges (RQ1)

4.1. Prevalent Testing Techniques (RQ1.1)

4.2. Commonly Adopted Testing Approaches (RQ1.1)

- Unit Testing for Event-Driven Microservices (TE1): Event-driven architectures introduce asynchronous complexities that call for specialized unit testing approaches. Paper [34] proposes UTEMS, a scheme explicitly designed to validate the correctness of event-driven microservices under various event-trigger scenarios, thereby addressing difficulties unique to asynchronous service communication.

- Regression Testing (TE2): Because frequent updates are inherent to microservice systems, automated regression testing is essential for quickly identifying regressions introduced by continuous deployment. Papers [18,19,20] present automated frameworks designed explicitly for regression testing. Paper [18] focuses on ex-vivo regression testing in isolated microservice environments, while papers [19,20] emphasize automating regression testing to detect feature regressions efficiently, especially under rapid deployments.

- Black-Box and Grey-Box Testing (TE3): Black-box testing assesses external interfaces without knowledge of the internals, whereas grey-box testing incorporates partial insight into the internal structure. Paper [48] demonstrates a black-box approach (uTest) that systematically covers functional scenarios, and paper [14] details MACROHIVE, a grey-box approach that uses internal service-mesh insights to achieve deeper coverage during testing.

- Acceptance Testing (TE4): Behavior-Driven Development (BDD) supports clear, user-centric specifications and acceptance criteria, and its natural-language scenarios bridge communication between developers and non-developers. Paper [13] exemplifies this by introducing a reusable automated acceptance testing architecture that leverages BDD for greater clarity and maintainability in microservices.

- Performance and Load Testing (TE5): Performance and load testing techniques address the scalability and stability of microservices under high operational stress. Papers [16,21,46] detail various methods, from quantitative assessments of deployment alternatives ([46]) to automated architectures tailored to microservice performance testing ([16]). Paper [21] further emphasizes end-to-end benchmarking to capture realistic, whole-system performance characteristics rather than isolated service behavior alone.

- Contract Testing (TE6): Contract testing is crucial because microservices are deployed independently and their interfaces change frequently. Papers [33,43,50] illustrate how contract testing approaches ensure reliable interactions by explicitly validating API agreements. Paper [33] specifically integrates consumer-driven contracts with state models, improving the accuracy of consistency verification across microservices.

- Fault Injection and Resilience Testing (TE7): Fault injection and resilience evaluation techniques verify the system’s ability to operate effectively despite faults and disruptions. Papers [30,41,51] exemplify controlled fault-simulation scenarios aimed at testing fault tolerance. For instance, paper [51] introduces fitness-guided resilience testing, systematically identifying weaknesses to guide resilience improvements.

- Fault Diagnosis (TE8): Fault diagnosis identifies the root causes of failures within interconnected microservices. Paper [28] addresses fault diagnosis for test alarms, using multi-source data to pinpoint the precise cause of microservice disruptions and thereby enabling targeted debugging and quicker resolution.

- Test Case/Data Generation (TE9): Automated test case and data generation aims to improve coverage and efficiency while revealing complex edge cases in microservices testing. Papers [15,17,48] demonstrate different approaches, from automatic test data generation for microservice applications ([17]) to RAML-based mock service generators ([15]), underscoring automation’s role in handling diverse inputs.

- Static and Dynamic Analysis: These analyses uncover code- and runtime-level issues. Papers [17,24,42] demonstrate static code checks to catch early defects and dynamic monitoring to observe real-time performance. Paper [24] employs both methods to enhance test effectiveness in microservice applications.

- API Testing and Tracing: Detailed request tracing can reveal hidden integration flaws. Paper [32] proposes Microusity, a specialized tool for RESTful API testing and visualization of error-prone areas. Paper [37] extends EvoMaster with white-box fuzzing for RPC-based APIs, uncovering security weaknesses.

- Cloud-Based Testing Techniques: As microservices often run in cloud environments, specialized solutions have emerged. Paper [45] presents methods to leverage automated provisioning and large-scale simulations, ensuring microservice deployments can scale and remain reliable. Paper [26] applies such ideas to Digital Twins, underscoring the practical breadth of cloud-based testing.

- Instance Identification and Resilience Evaluation: Identifying distinct microservice instances helps testers monitor localized failures. Paper [35] offers a method to isolate specific components, clarifying root causes and streamlining resiliency checks.
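To make the event-driven case (TE1) concrete, the following sketch unit-tests an event handler in isolation. It is not UTEMS [34]. It is a generic example in which an in-memory bus stands in for the message broker, and the `OrderPlaced` and `PaymentRequested` events and the `OrderHandler` logic are hypothetical. Each test triggers one event and asserts on the events the handler publishes, which is the kind of event-trigger scenario described under TE1. The tests can be run with `python -m unittest` or pytest.

```python
import unittest
from dataclasses import dataclass

@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    amount: float

@dataclass(frozen=True)
class PaymentRequested:
    order_id: str
    amount: float

class InMemoryBus:
    """Test double for a message broker: records published events instead of sending them."""
    def __init__(self):
        self.published = []

    def publish(self, event):
        self.published.append(event)

class OrderHandler:
    """Hypothetical service logic: request payment for orders with a positive amount."""
    def __init__(self, bus):
        self.bus = bus

    def on_order_placed(self, event: OrderPlaced):
        if event.amount > 0:
            self.bus.publish(PaymentRequested(event.order_id, event.amount))

class OrderHandlerTest(unittest.TestCase):
    def setUp(self):
        # A fresh bus per test keeps the event log isolated between scenarios.
        self.bus = InMemoryBus()
        self.handler = OrderHandler(self.bus)

    def test_publishes_payment_request(self):
        self.handler.on_order_placed(OrderPlaced("o-1", 25.0))
        self.assertEqual(self.bus.published, [PaymentRequested("o-1", 25.0)])

    def test_ignores_zero_amount_order(self):
        self.handler.on_order_placed(OrderPlaced("o-2", 0.0))
        self.assertEqual(self.bus.published, [])
```

Because the broker is replaced by a recording double, each test checks the handler's reaction to an event synchronously, without timing-dependent waits.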
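Contract testing (TE6) can be illustrated without any particular framework. In the consumer-driven style:

- The consumer records the request it sends and the response fields and types it relies on.
- The provider's build replays that request against the provider and fails if the response no longer satisfies the contract.

The sketch below is a simplified, framework-agnostic version; dedicated tools such as Pact add contract brokers, provider states, and richer matching. The contract format, the `/orders/42` request, and the provider functions are hypothetical.

```python
from typing import Any, Callable

# Contract written by the consumer: the request it sends and the fields/types it reads.
ORDER_CONTRACT = {
    "request": {"method": "GET", "path": "/orders/42"},
    "response": {
        "status": 200,
        "body_types": {"id": int, "status": str, "total": float},
    },
}

def verify_contract(contract: dict, provider: Callable[[str, str], tuple[int, dict]]) -> list[str]:
    """Replay the contract request against the provider and list every violation."""
    req, expected = contract["request"], contract["response"]
    status, body = provider(req["method"], req["path"])
    problems = []
    if status != expected["status"]:
        problems.append(f"status {status} != {expected['status']}")
    for name, expected_type in expected["body_types"].items():
        if name not in body:
            problems.append(f"missing field '{name}'")
        elif not isinstance(body[name], expected_type):
            actual = type(body[name]).__name__
            problems.append(f"field '{name}' is {actual}, expected {expected_type.__name__}")
    # Extra fields in the body are allowed: the consumer does not read them.
    return problems

def provider_v1(method: str, path: str) -> tuple[int, dict[str, Any]]:
    """Hypothetical provider that honours the contract (and returns an extra field)."""
    return 200, {"id": 42, "status": "SHIPPED", "total": 19.9, "currency": "EUR"}

def provider_v2(method: str, path: str) -> tuple[int, dict[str, Any]]:
    """Hypothetical provider release that renamed 'total' to 'amount'."""
    return 200, {"id": 42, "status": "SHIPPED", "amount": 19.9}

print(verify_contract(ORDER_CONTRACT, provider_v1))  # []
print(verify_contract(ORDER_CONTRACT, provider_v2))  # ["missing field 'total'"]
```

Because the provider runs this check in its own pipeline, the breaking rename in `provider_v2` is caught before deployment rather than in an end-to-end environment.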
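For fault injection and resilience testing (TE7), the core idea is to wrap a dependency so that it fails in a controlled way, then assert that the caller degrades gracefully. The Python sketch below is generic and is not the method of [30], [41], or [51]. The `FlakyDependency` wrapper, the retry policy, and the fallback value are all hypothetical. A seeded random generator keeps the injected fault sequence reproducible across test runs.

```python
import random

class InjectedFault(Exception):
    """Raised by the fault injector in place of a real network error."""

class FlakyDependency:
    """Wraps a downstream call and fails it with probability `failure_rate`."""
    def __init__(self, call, failure_rate: float, seed: int = 0):
        self.call = call
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded so the fault sequence is reproducible
        self.attempts = 0

    def __call__(self, *args):
        self.attempts += 1
        if self.rng.random() < self.failure_rate:
            raise InjectedFault("injected failure")
        return self.call(*args)

def get_price(dependency, item: str, retries: int = 3, fallback: float = -1.0) -> float:
    """Caller under test: retry on failure, then return a fallback instead of crashing."""
    for _ in range(retries):
        try:
            return dependency(item)
        except InjectedFault:
            continue
    return fallback

def real_price_service(item: str) -> float:
    return {"book": 12.5}.get(item, 0.0)

# Every call fails: the caller must fall back after exactly `retries` attempts.
always_down = FlakyDependency(real_price_service, failure_rate=1.0)
assert get_price(always_down, "book") == -1.0 and always_down.attempts == 3

# No faults: the real value passes through on the first attempt.
healthy = FlakyDependency(real_price_service, failure_rate=0.0)
assert get_price(healthy, "book") == 12.5 and healthy.attempts == 1
```

Intermediate failure rates exercise the retry path. Tools in this area typically inject faults at the network or service-mesh level rather than in-process, but the assertion pattern is the same.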

4.3. Techniques Comparison with Monolithic Applications (RQ1.2)

4.4. Test Generation Techniques (RQ1.3)

4.5. Strengths and Weaknesses (RQ1.4)

5. Impact on the Quality of Testing (RQ2)

5.1. Factors Influencing the Testing (RQ2.1)

- Dynamic Service Discovery: Microservice architectures rely heavily upon dynamic service discovery mechanisms, where services find each other during runtime. Depending on the current state of the system and network conditions, various interaction patterns may arise from this process.

- Load Balancing: Load balancers distribute incoming requests over multiple service instances to use resources optimally while ensuring availability. However, because successive requests may be routed to different instances, the service interaction chain may be inconsistent from one request to the next.

- Service Versioning: Microservices are updated frequently, and each update deploys a new version. When more than one version runs concurrently, interactions may differ depending on feature-set compatibility across versions, which leads to inconsistencies.

- Failure and Recovery: Failure and recovery events can occur unpredictably within service instances. During such events, communication paths break down temporarily, and the sequence of interactions changes as the system reconfigures itself to handle the failure.
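Load balancing and service versioning combine to explain why the same test input can produce different interaction chains on repeated runs. The sketch below assumes a round-robin balancer in front of two instances of a hypothetical `pricing` service running different versions, where only v2 returns a `discount` field. All names and payloads are illustrative.

```python
from itertools import cycle

def pricing_v1(order_total: float) -> dict:
    return {"version": "v1", "total": order_total}

def pricing_v2(order_total: float) -> dict:
    # Newer version applies a discount and adds a field that v1 does not return.
    return {"version": "v2", "total": round(order_total * 0.9, 2), "discount": 0.1}

class RoundRobinBalancer:
    """Sends each request to the next instance in turn."""
    def __init__(self, instances):
        self._next = cycle(instances)

    def route(self, *args):
        return next(self._next)(*args)

balancer = RoundRobinBalancer([pricing_v1, pricing_v2])

# The same test input, sent twice, reaches different versions.
first = balancer.route(100.0)
second = balancer.route(100.0)
print(first)   # {'version': 'v1', 'total': 100.0}
print(second)  # {'version': 'v2', 'total': 90.0, 'discount': 0.1}

# A test asserting on 'discount' would pass or fail depending on routing order.
print("discount" in first, "discount" in second)  # False True
```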

5.2. Metrics for Evaluating the Quality of Tests (RQ2.2)

5.3. Enhancing Quality (RQ2.3)

5.4. Relationship: Test Coverage and the Efficiency of Test Generation (RQ2.4)

6. Discussion

7. Threats to Validity

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Dragoni, N.; Lanese, I.; Larsen, S.; Mazzara, M.; Mustafin, R.; Safina, L. Microservices: Yesterday, today, and tomorrow. In Present and Ulterior Software Engineering; Springer: Berlin/Heidelberg, Germany, 2017; pp. 195–216.

2. Fowler, M.; Lewis, J. Microservices: A Definition of This New Architectural Term. Available online: https://martinfowler.com/articles/microservices.html (accessed on 12 March 2025).

3. Newman, S. Building Microservices: Designing Fine-Grained Systems; O’Reilly Media, Inc.: Newton, MA, USA, 2015.

4. Christian, J.; Steven.; Kurniawan, A.; Anggreainy, M.S. Analyzing Microservices and Monolithic Systems: Key Factors in Architecture, Development, and Operations. In Proceedings of the 2023 6th International Conference of Computer and Informatics Engineering (IC2IE), Lombok, Indonesia, 14–15 September 2023; pp. 64–69.

5. Eismann, S.; Bezemer, C.P.; Shang, W.; Okanović, D.; van Hoorn, A. Microservices: A Performance Tester’s Dream or Nightmare? In Proceedings of the ACM/SPEC International Conference on Performance Engineering, New York, NY, USA, 25–30 April 2020; pp. 138–149.

6. Panahandeh, M.; Miller, J. A Systematic Review on Microservice Testing. Preprint, Version 1. Research Square. 2023. Available online: https://www.researchsquare.com/article/rs-3158138/v1 (accessed on 17 July 2023).

7. Petersen, K.; Vakkalanka, S.; Kuzniarz, L. Guidelines for conducting systematic mapping studies in software engineering: An update. Inf. Softw. Technol. 2015, 64, 1–18.

8. Ahmed, B.S.; Bures, M.; Frajtak, K.; Cerny, T. Aspects of Quality in Internet of Things (IoT) Solutions: A Systematic Mapping Study. IEEE Access 2019, 7, 13758–13780.

9. Dyba, T.; Dingsoyr, T.; Hanssen, G.K. Applying Systematic Reviews to Diverse Study Types: An Experience Report. In Proceedings of the First International Symposium on Empirical Software Engineering and Measurement (ESEM 2007), Madrid, Spain, 20–21 September 2007; pp. 225–234.

10. Chadegani, A.A.; Salehi, H.; Yunus, M.M.; Farhadi, H.; Fooladi, M.; Farhadi, M.; Ebrahim, N.A. A Comparison between Two Main Academic Literature Collections: Web of Science and Scopus Databases. arXiv 2013, arXiv:1305.0377.

11. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

12. Wohlin, C. Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, New York, NY, USA, 13–14 May 2014.

13. Rahman, M.; Gao, J. A Reusable Automated Acceptance Testing Architecture for Microservices in Behavior-Driven Development. In Proceedings of the 2015 IEEE Symposium on Service-Oriented System Engineering, San Francisco, CA, USA, 30 March–3 April 2015; pp. 321–325.

14. Giamattei, L.; Guerriero, A.; Pietrantuono, R.; Russo, S. Automated Grey-Box Testing of Microservice Architectures. In Proceedings of the 2022 IEEE 22nd International Conference on Software Quality, Reliability and Security (QRS), Guangzhou, China, 5–9 December 2022; pp. 640–650.

15. Ashikhmin, N.; Radchenko, G.; Tchernykh, A. RAML-Based Mock Service Generator for Microservice Applications Testing. In Supercomputing: Third Russian Supercomputing Days, RuSCDays 2017, Moscow, Russia, 25–26 September 2017; Voevodin, V., Sobolev, S., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 456–467.

16. de Camargo, A.; Salvadori, I.; Mello, R.d.S.; Siqueira, F. An architecture to automate performance tests on microservices. In iiWAS ’16: Proceedings of the 18th International Conference on Information Integration and Web-Based Applications and Services; ACM Digital Library: New York, NY, USA, 2016; pp. 422–429.

17. Ding, H.; Cheng, L.; Li, Q. An Automatic Test Data Generation Method for Microservice Application. In Proceedings of the 2020 International Conference on Computer Engineering and Application (ICCEA), Guangzhou, China, 18–20 March 2020; pp. 188–191.

18. Gazzola, L.; Goldstein, M.; Mariani, L.; Segall, I.; Ussi, L. Automatic Ex-Vivo Regression Testing of Microservices. In Proceedings of the IEEE/ACM 1st International Conference on Automation of Software Test (AST 2020), Seoul, Republic of Korea, 25–26 May 2020; pp. 11–20.

19. Janes, A.; Russo, B. Automatic Performance Monitoring and Regression Testing During the Transition from Monolith to Microservices. In Proceedings of the 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Berlin, Germany, 28–31 October 2019; pp. 163–168.

20. Kargar, M.J.; Hanifizade, A. Automation of Regression Test in Microservice Architecture. In Proceedings of the 2018 4th International Conference on Web Research (ICWR), Tehran, Iran, 25–26 April 2018; pp. 133–137.

21. Smith, S.; Robinson, E.; Frederiksen, T.; Stevens, T.; Cerny, T.; Bures, M.; Taibi, D. Benchmarks for End-to-End Microservices Testing. In Proceedings of the 2023 IEEE International Conference on Service-Oriented System Engineering (SOSE), Athens, Greece, 17–20 July 2023; pp. 60–66.

22. Elsner, D.; Bertagnolli, D.; Pretschner, A.; Klaus, R. Challenges in Regression Test Selection for End-to-End Testing of Microservice-Based Software Systems. In Proceedings of the 3rd ACM/IEEE International Conference on Automation of Software Test (AST 2022), Pittsburgh, PA, USA, 17–18 May 2022; pp. 1–5.

23. Chen, L.; Wu, J.; Yang, H.; Zhang, K. A Microservice Regression Testing Selection Approach Based on Belief Propagation. J. Cloud Comput. 2023, 12, 21.

24. Duan, T.; Li, D.; Xuan, J.; Du, F.; Li, J.; Du, J.; Wu, S. Design and Implementation of Intelligent Automated Testing of Microservice Application. In Proceedings of the 2021 IEEE 5th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Xi’an, China, 15–17 October 2021; Volume 5, pp. 1306–1309.

25. Wang, Y.; Cheng, L.; Sun, X. Design and Research of Microservice Application Automation Testing Framework. In Proceedings of the 2019 International Conference on Information Technology and Computer Application (ITCA), Guangzhou, China, 20–22 December 2019; pp. 257–260.

26. Lombardo, A.; Morabito, G.; Quattropani, S.; Ricci, C. Design, Implementation, and Testing of a Microservices-Based Digital Twins Framework for Network Management and Control. In Proceedings of the 2022 IEEE 23rd International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Belfast, UK, 14–17 June 2022; pp. 590–595.

27. Jiang, P.; Shen, Y.; Dai, Y. Efficient Software Test Management System Based on Microservice Architecture. In Proceedings of the 2022 IEEE 10th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 17–19 June 2022; Volume 10, pp. 2339–2343.

28. Zhang, S.; Zhu, J.; Hao, B.; Sun, Y.; Nie, X.; Zhu, J.; Liu, X.; Li, X.; Ma, Y.; Pei, D. Fault Diagnosis for Test Alarms in Microservices through Multi-source Data. In Companion Proceedings of the 32nd ACM International Conference on the Foundations of Software Engineering (FSE 2024), Porto de Galinhas, Brazil, 15–19 July 2024; pp. 115–125.

29. Giallorenzo, S.; Montesi, F.; Peressotti, M.; Rademacher, F.; Unwerawattana, N. JoT: A Jolie Framework for Testing Microservices. In Proceedings of the 25th IFIP WG 6.1 International Conference on Coordination Models and Languages (COORDINATION 2023), Lisbon, Portugal, 19–23 June 2023; Jongmans, S.S., Lopes, A., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 172–191.

30. Meinke, K.; Nycander, P. Learning-Based Testing of Distributed Microservice Architectures: Correctness and Fault Injection. In Proceedings of the 13th International Conference on Software Engineering and Formal Methods (SEFM 2015), York, UK, 7–11 September 2015; Bianculli, D., Calinescu, R., Rumpe, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 3–10.

31. Camilli, M.; Guerriero, A.; Janes, A.; Russo, B.; Russo, S. Microservices Integrated Performance and Reliability Testing. In Proceedings of the 3rd ACM/IEEE International Conference on Automation of Software Test (AST 2022), Pittsburgh, PA, USA, 16–17 May 2022; pp. 29–39.

32. Rattanukul, P.; Makaranond, C.; Watanakulcharus, P.; Ragkhitwetsagul, C.; Nearunchorn, T.; Visoottiviseth, V.; Choetkiertikul, M.; Sunetnanta, T. Microusity: A Testing Tool for Backends for Frontends (BFF) Microservice Systems. In Proceedings of the 31st IEEE/ACM International Conference on Program Comprehension (ICPC 2023), Melbourne, Australia, 15–16 May 2023; pp. 74–78.

33. Wu, C.F.; Ma, S.P.; Shau, A.C.; Yeh, H.W. Testing for Event-Driven Microservices Based on Consumer-Driven Contracts and State Models. In Proceedings of the 29th Asia-Pacific Software Engineering Conference (APSEC 2022), Virtual Event, Japan, 6–9 December 2022; pp. 467–471.

34. Ma, S.P.; Yang, Y.Y.; Lee, S.J.; Yeh, H.W. UTEMS: A Unit Testing Scheme for Event-driven Microservices. In Proceedings of the 10th International Conference on Dependable Systems and Their Applications (DSA 2023), Tokyo, Japan, 10–11 August 2023; pp. 591–592.

35. Vassiliou-Gioles, T. Solving the Instance Identification Problem in Micro-service Testing. In Proceedings of the IFIP International Conference on Testing Software and Systems (ICTSS 2022), Almería, Spain, 27–29 September 2022; Clark, D., Menendez, H., Cavalli, A.R., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 189–195.

36. Christensen, H.B. Crunch: Automated Assessment of Microservice Architecture Assignments with Formative Feedback. In Proceedings of the 12th European Conference on Software Architecture (ECSA 2018), Madrid, Spain, 24–28 September 2018; Cuesta, C.E., Garlan, D., Pérez, J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11048, pp. 175–190.

37. Zhang, M.; Arcuri, A.; Li, Y.; Liu, Y.; Xue, K. White-Box Fuzzing RPC-Based APIs with EvoMaster: An Industrial Case Study. ACM Trans. Softw. Eng. Methodol. 2023, 32, 122:1–122:38.

38. Arcuri, A. RESTful API Automated Test Case Generation. In Proceedings of the 2017 IEEE International Conference on Software Quality, Reliability and Security (QRS), Prague, Czech Republic, 25–29 July 2017; pp. 9–20.

39. Wang, S.; Mao, X.; Cao, Z.; Gao, Y.; Shen, Q.; Peng, C. NxtUnit: Automated Unit Test Generation for Go. In Proceedings of the 27th International Conference on Evaluation and Assessment in Software Engineering (EASE 2023), Oulu, Finland, 14–16 June 2023; pp. 176–179.

40. Sahin, Ö.; Akay, B. A Discrete Dynamic Artificial Bee Colony with Hyper-Scout for RESTful Web Service API Test Suite Generation. Appl. Soft Comput. 2021, 104, 107246.

41. Heorhiadi, V.; Rajagopalan, S.; Jamjoom, H.; Reiter, M.K.; Sekar, V. Gremlin: Systematic Resilience Testing of Microservices. In Proceedings of the 2016 IEEE 36th International Conference on Distributed Computing Systems (ICDCS), Nara, Japan, 27–30 June 2016; pp. 57–66.

42. Fischer, S.; Urbanke, P.; Ramler, R.; Steidl, M.; Felderer, M. An Overview of Microservice-Based Systems Used for Evaluation in Testing and Monitoring: A Systematic Mapping Study. In Proceedings of the 5th ACM/IEEE International Conference on Automation of Software Test (AST 2024), Lisbon, Portugal, 15–16 April 2024; pp. 182–192.

43. Simosa, M.; Siqueira, F. Contract Testing in Microservices-Based Systems: A Survey. In Proceedings of the 2023 IEEE 14th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 17–18 October 2023; pp. 312–317.

44. Waseem, M.; Liang, P.; Márquez, G.; Salle, A.D. Testing Microservices Architecture-Based Applications: A Systematic Mapping Study. In Proceedings of the 27th Asia-Pacific Software Engineering Conference (APSEC 2020), Singapore, 1–4 December 2020; pp. 119–128.

45. Zhang, J.; Jiang, S.; Wang, K.; Wang, R.; Liu, Q.; Yuan, X. Overview of Information System Testing Technology Under the “CLOUD + Microservices” Mode. In Proceedings of the 5th International Conference on Computer and Communication Engineering (CCCE 2022), Beijing, China, 19–21 August 2022; Neri, F., Du, K.L., Varadarajan, V.K., Angel-Antonio, S.B., Jiang, Z., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 62–74.

46. Avritzer, A.; Ferme, V.; Janes, A.; Russo, B.; Schulz, H.; van Hoorn, A. A Quantitative Approach for the Assessment of Microservice Architecture Deployment Alternatives by Automated Performance Testing. In Proceedings of the 12th European Conference on Software Architecture (ECSA 2018), Madrid, Spain, 24–28 September 2018; Cuesta, C.E., Garlan, D., Pérez, J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 159–174.

47. Gong, J.; Cai, L. Analysis for Microservice Architecture Application Quality Model and Testing Method. In Proceedings of the 2023 26th ACIS International Winter Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD-Winter), Taichung, Taiwan, 6–8 December 2023; pp. 141–145.

48. Giamattei, L.; Guerriero, A.; Pietrantuono, R.; Russo, S. Assessing Black-box Test Case Generation Techniques for Microservices. In Proceedings of the Quality of Information and Communications Technology, Talavera de la Reina, Spain, 12–14 September 2022; Vallecillo, A., Visser, J., Pérez-Castillo, R., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 46–60.

49. Sotomayor, J.P.; Allala, S.C.; Alt, P.; Phillips, J.; King, T.M.; Clarke, P.J. Comparison of Runtime Testing Tools for Microservices. In Proceedings of the 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), Milwaukee, WI, USA, 15–19 July 2019; Volume 2, pp. 356–361.

50. Lehvä, J.; Mäkitalo, N.; Mikkonen, T. Consumer-Driven Contract Tests for Microservices: A Case Study. In Proceedings of the Product-Focused Software Process Improvement, Barcelona, Spain, 27–29 November 2019; Franch, X., Männistö, T., Martínez-Fernández, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 497–512.

51. Long, Z.; Wu, G.; Chen, X.; Cui, C.; Chen, W.; Wei, J. Fitness-guided Resilience Testing of Microservice-based Applications. In Proceedings of the 2020 IEEE International Conference on Web Services (ICWS), Beijing, China, 19–23 October 2020; pp. 151–158.

52. De Angelis, E.; De Angelis, G.; Pellegrini, A.; Proietti, M. Inferring Relations Among Test Programs in Microservices Applications. In Proceedings of the 2021 IEEE International Conference on Service-Oriented System Engineering (SOSE), Oxford Brookes University, 23–26 August 2021; pp. 114–123.

53. Schulz, H.; Angerstein, T.; Okanović, D.; van Hoorn, A. Microservice-Tailored Generation of Session-Based Workload Models for Representative Load Testing. In Proceedings of the 2019 IEEE 27th International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS), Rennes, France, 22–24 October 2019; pp. 323–335.

54. Lin, D.; Liping, F.; Jiajia, H.; Qingzhao, T.; Changhua, S.; Xiaohui, Z. Research on Microservice Application Testing Based on Mock Technology. In Proceedings of the 2020 International Conference on Virtual Reality and Intelligent Systems (ICVRIS), Zhangjiajie, China, 18–19 July 2020; pp. 815–819.

55. Li, H.; Wang, J.; Dai, H.; Lv, B. Research on Microservice Application Testing System. In Proceedings of the 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 27–29 September 2020; pp. 363–368.

56. Lu, Z. Research on Performance Optimization Method of Test Management System Based on Microservices. In Proceedings of the 2023 4th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 7–9 April 2023; pp. 445–451.

57. Pei, Q.; Liang, Z.; Wang, Z.; Cui, L.; Long, Z.; Wu, G. Search-Based Performance Testing and Analysis for Microservice-Based Digital Power Applications. In Proceedings of the 2023 6th International Conference on Energy, Electrical and Power Engineering (CEEPE), Guangzhou, China, 12–14 May 2023; pp. 1522–1527.

58. Wang, W.; Benea, A.; Ivancic, F. Zero-Config Fuzzing for Microservices. In Proceedings of the 38th IEEE/ACM International Conference on Automated Software Engineering (ASE), Echternach, Luxembourg, 11–15 September 2023; pp. 1840–1845.

59. Abdelfattah, A.S.; Cerny, T.; Salazar, J.Y.; Lehman, A.; Hunter, J.; Bickham, A.; Taibi, D. End-to-End Test Coverage Metrics in Microservice Systems: An Automated Approach. In Proceedings of the Service-Oriented and Cloud Computing, Larnaca, Cyprus, 24–25 October 2023; Papadopoulos, G.A., Rademacher, F., Soldani, J., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 35–51.

60. Erl, T. Service-Oriented Architecture: Concepts, Technology, and Design; Prentice Hall PTR: Hoboken, NJ, USA, 2005; pp. 1–792.

61. Newman, S. Monolith to Microservices: Evolutionary Patterns to Transform Your Monolith; O’Reilly Media: Sebastopol, CA, USA, 2020; pp. 1–270.

62. Auer, F.; Lenarduzzi, V.; Felderer, M.; Taibi, D. From monolithic systems to Microservices: An assessment framework. Inf. Softw. Technol. 2021, 137, 106600.

63. Basiri, A.; Hochstein, L.; Jones, N.; Tucker, H. Automating chaos experiments in production. arXiv 2019, arXiv:1905.04648.

64. JUnit Team. JUnit: A Programmer-Centric Testing Framework for Java. Available online: https://junit.org/junit5/ (accessed on 12 March 2024).

65. Rojas, J.M.; Fraser, G.; Arcuri, A. Seeding strategies in search-based unit test generation. Softw. Test. Verif. Reliab. 2016, 26, 366–401.

| ID | Specific Research Question | Main Motivation |
|---|---|---|
| RQ1.1 | What are the most prevalent testing techniques for microservices? | Identifying and categorizing the most commonly used testing techniques helps practitioners select suitable methods. Because microservices are distributed, diverse testing strategies are essential. |
| RQ1.2 | How do these testing techniques compare with those used for testing traditional monolithic applications? | Understanding how microservices testing differs from monolithic testing highlights unique challenges and advantages, guiding practitioners in adopting appropriate strategies. |
| RQ1.3 | What tools and frameworks are available specifically for test generation in microservices? | This question aims to identify dedicated tools and frameworks that facilitate automated test generation for microservices, assessing their effectiveness and limitations. |
| RQ1.4 | What are the strengths and weaknesses of various testing methods in the context of microservices? | Evaluating the advantages and drawbacks of different testing techniques helps determine which methods are more effective, scalable, or challenging in microservices environments. |

| ID | Specific Research Question | Main Motivation |
|---|---|---|
| RQ2.1 | What factors influence the testing of microservices? | Understanding the key factors that impact microservices testing, such as service interactions and deployment environments, helps improve test strategies and reliability. |
| RQ2.2 | Which metrics are crucial for evaluating the quality of microservice tests or test cases? | Identifying effective test quality metrics ensures that testing strategies are properly assessed and optimized for robustness and reliability. |
| RQ2.3 | How does effective test generation enhance the quality of microservices? | Investigating the role of test generation in improving software quality provides insights into best practices for increasing fault tolerance and stability. |
| RQ2.4 | What is the relationship between test coverage and test generation efficiency in microservices? | Analyzing how test coverage impacts test generation efficiency helps balance thorough testing with practical resource constraints. |

| Set # | Search Strings | # Results | # Missing Papers |
|---|---|---|---|
| Set #1 | (“test generation”) AND (“microservices”) | 119 | 9 |
| Set #2 | (“test generation” OR “test automation”) AND (“microservices” OR “microservice architecture”) | 191 | 8 |
| Set #3 | (“test” OR “test generation” OR “test automation”) AND (“microservices” OR “microservice architecture”) | 2992 | 1 |
| Set #4 | (“test” OR “test generation” OR “test automation”) AND (“microservices” OR “microservice architecture”) AND (“approaches” OR “techniques” OR “challenges” OR “problems”) | 2035 | 3 |
| Set #5 | (“test” OR “test generation” OR “test automation”) AND (“microservice” OR “microservice architecture”) AND (“approaches” OR “techniques” OR “methods” OR “challenges” OR “problems” OR “impact” OR “effect” OR “quality”) | 2096 | 0 |
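
Each search string above is a conjunction (AND) of disjunctive groups (OR). The sketch below shows that structure applied to a single title or abstract, using Set #2 as the query. It assumes plain, case-insensitive substring matching; the digital libraries searched in this study apply their own tokenization, stemming, and field rules, so this is only an illustration of the Boolean logic, not of any database's behavior.

```python
from typing import List

# Set #2 expressed as AND-of-OR groups (phrases are lowercase for matching).
SET_2: List[List[str]] = [
    ["test generation", "test automation"],
    ["microservices", "microservice architecture"],
]

def matches(query: List[List[str]], text: str) -> bool:
    """True if every OR-group has at least one phrase occurring in the text."""
    lowered = text.lower()
    return all(any(phrase in lowered for phrase in group) for group in query)

record = "Automated test generation for microservice architecture deployments"
print(matches(SET_2, record))  # True
```

Under plain substring matching, a singular term such as “microservice” also matches “microservices”, which is one reason query wording can change result counts noticeably.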

| Database | Number of Papers Retrieved |
|---|---|
| SpringerLink | 224 |
| ACM Digital Library | 1753 |
| IEEE Xplore | 343 |
| Scopus | 14 |
| Web of Science | 5 |
| Total | 2339 |

| ID | Criteria |
|---|---|
| I1 | Papers discussing approaches and challenges in test generation for microservices. |
| I2 | Papers comparing testing techniques for microservices with traditional monolithic applications. |
| I3 | Papers presenting tools and frameworks specifically for test generation in microservices. |
| I4 | Empirical studies evaluating the quality of microservices-based systems through test generation. |
| I5 | Papers published in peer-reviewed journals or conferences. |

| ID | Criteria |
|---|---|
| E1 | Papers not directly related to microservice testing. |
| E2 | Papers not available in full text. |
| E3 | Papers not written in English. |
| E4 | Books and non-peer-reviewed sources. |

| Database | Number of Papers Selected |
|---|---|
| SpringerLink | 10 |
| IEEE Xplore | 25 |
| ACM Digital Library | 8 |
| Scopus | 2 |
| Web of Science | 3 |
| Total | 48 |

| ID | Data Points | Description | Relevant Specific RQs |
|---|---|---|---|
| DP1 | Publication Details | Authors, title, publication year, and venue. | - |
| DP2 | Research Focus | The specific aspects of test generation for microservices addressed in the paper. | - |
| DP3 | Methodology | The research methods and techniques used in the paper. | RQ1.1, RQ1.2, RQ1.4, RQ2.3 |
| DP4 | Tools and Frameworks | Specific tools or frameworks used for test generation mentioned in the paper. | RQ1.3, RQ1.4 |
| DP5 | Findings | Key results identified in the paper. | RQ1.4, RQ2.1, RQ2.2, RQ2.3, RQ2.4 |
| DP6 | Metrics | Performance metrics used to evaluate the quality of microservices tests. | RQ2.2, RQ2.4 |

| ID | Category | Description | Relevant RQs |
|---|---|---|---|
| C1 | Testing Techniques, Tools, and Frameworks | We placed papers here if they described or evaluated specific methods, tools, or frameworks for microservices test generation. Examples include model-based testing, combinatorial testing, and automation tools. | RQ1.1, RQ1.3, RQ1.4 |
| C2 | Systematic Study Contributions | We included papers that provide systematic reviews, surveys, or mapping studies on microservices test generation. These synthesize multiple sources to present trends and insights. | RQ1 |
| C3 | Impact on Quality | We used this category for papers examining how test generation affects the quality of microservices-based systems. Topics here include strengths and weaknesses, empirical data on effectiveness, and how different testing methods detect defects. | RQ2 |
| C4 | Metrics | We assigned papers to this category if they proposed or assessed functional and non-functional metrics for microservices test generation. Such metrics include coverage, fault detection rate, and execution time. | RQ2.2, RQ2.4 |

| ID | Title | Publisher | Year |
|---|---|---|---|
| **Category: Testing Techniques, Tools, and Frameworks (C1)** | | | |
| P1 | A Reusable Automated Acceptance Testing Architecture for Microservices in Behavior-Driven Development [13] | IEEE | 2015 |
| P2 | Automated Grey-Box Testing of Microservice Architectures [14] | IEEE | 2022 |
| P3 | RAML-Based Mock Service Generator for Microservice Applications Testing [15] | SpringerLink | 2017 |
| P4 | An architecture to automate performance tests on microservices [16] | ACM | 2016 |
| P5 | An Automatic Test Data Generation Method for Microservice Application [17] | IEEE | 2020 |
| P6 | Automatic Ex-Vivo Regression Testing of Microservices [18] | ACM | 2020 |
| P7 | Automatic performance monitoring and regression testing during the transition from monolith to microservices [19] | IEEE | 2019 |
| P8 | Automation of Regression test in Microservice Architecture [20] | IEEE | 2018 |
| P9 | Benchmarks for End-to-End Microservices Testing [21] | IEEE | 2023 |
| P10 | Challenges in Regression Test Selection for End-to-End Testing of Microservice-based Software Systems [22] | IEEE | 2022 |
| P11 | A Microservice Regression Testing Selection Approach Based on Belief Propagation [23] | ACM | 2023 |
| P12 | Design and Implementation of Intelligent Automated Testing of Microservice Application [24] | IEEE | 2021 |
| P13 | Design and Research of Microservice Application Automation Testing Framework [25] | IEEE | 2019 |
| P14 | Design, implementation, and testing of a microservices-based Digital Twins framework for network management and control [26] | IEEE | 2022 |
| P15 | Efficient software test management system based on microservice architecture [27] | IEEE | 2022 |
| P16 | Fault Diagnosis for Test Alarms in Microservices through Multi-source Data [28] | ACM | 2024 |
| P17 | JoT: A Jolie Framework for Testing Microservices [29] | ACM | 2023 |
| P18 | Learning-Based Testing of Distributed Microservice Architectures: Correctness and Fault Injection [30] | ACM | 2015 |
| P19 | Microservices Integrated Performance and Reliability Testing [31] | IEEE | 2022 |
| P20 | Microusity: A testing tool for Backends for Frontends (BFF) Microservice Systems [32] | IEEE | 2023 |
| P21 | Testing for Event-Driven Microservices Based on Consumer-Driven Contracts and State Models [33] | IEEE | 2022 |
| P22 | UTEMS: A Unit Testing Scheme for Event-driven Microservices [34] | IEEE | 2023 |
| P23 | Solving the Instance Identification Problem in Microservice Testing [35] | SpringerLink | 2021 |
| P24 | Crunch: Automated Assessment of Microservice Architecture Assignments with Formative Feedback [36] | SpringerLink | 2018 |
| P25 | White-Box Fuzzing RPC-Based APIs with EvoMaster: An Industrial Case Study [37] | Scopus | 2023 |
| P26 | RESTful API automated test case generation [38] | Scopus | 2017 |
| P27 | NxtUnit: Automated Unit Test Generation for Go [39] | Web of Science | 2023 |
| P28 | A Discrete Dynamic Artificial Bee Colony with Hyper-Scout for RESTful web service API test suite generation [40] | Web of Science | 2021 |
| P29 | Gremlin: Systematic Resilience Testing of Microservices [41] | IEEE | 2016 |
| **Category: Systematic Study Contributions (C2)** | | | |
| P30 | An Overview of Microservice-Based Systems Used for Evaluation in Testing and Monitoring: A Systematic Mapping Study [42] | IEEE | 2024 |
| P31 | Contract Testing in Microservices-Based Systems: A Survey [43] | IEEE | 2023 |
| P32 | Testing Microservices Architecture-Based Applications: A Systematic Mapping Study [44] | IEEE | 2020 |
| P33 | Overview of Information System Testing Technology Under the “CLOUD + Microservices” Mode [45] | SpringerLink | 2022 |
| **Category: Impact on Quality (C3)** | | | |
| P34 | A Quantitative Approach for the Assessment of Microservice Architecture Deployment Alternatives by Automated Performance Testing [46] | SpringerLink | 2018 |
| P35 | Analysis for Microservice Architecture Application Quality Model and Testing Method [47] | IEEE | 2023 |
| P36 | Assessing Black-box Test Case Generation Techniques for Microservices [48] | SpringerLink | 2022 |
| P37 | Comparison of Runtime Testing Tools for Microservices [49] | IEEE | 2019 |
| P38 | Consumer-Driven Contract Tests for Microservices: A Case Study [50] | SpringerLink | 2019 |
| P39 | Fitness-guided Resilience Testing of Microservice-based Applications [51] | IEEE | 2020 |
| P40 | Inferring Relations Among Test Programs in Microservices Applications [52] | IEEE | 2021 |
| P41 | Microservice-tailored Generation of Session-based Workload Models for Representative Load Testing [53] | IEEE | 2019 |
| P42 | Microservices: A Performance Tester’s Dream or Nightmare? [5] | ACM | 2020 |
| P43 | Research on Microservice Application Testing Based on Mock Technology [54] | IEEE | 2020 |
| P44 | Research on Microservice Application Testing System [55] | IEEE | 2020 |
| P45 | Research on Performance Optimization Method of Test Management System Based on Microservices [56] | IEEE | 2023 |
| P46 | Search-Based Performance Testing and Analysis for Microservice-Based Digital Power Applications [57] | IEEE | 2023 |
| P47 | Zero-Config Fuzzing for Microservices [58] | Web of Science | 2023 |
| **Category: Metrics (C4)** | | | |
| P48 | End-to-End Test Coverage Metrics in Microservice Systems: An Automated Approach [59] | SpringerLink | 2023 |

| ID | Technique | Focus or Objective | Key References |
|---|---|---|---|
| TE1 | Unit Testing | Validates individual microservices at a fine-grained level. Ensures correct functionality in isolation. | [34,39] |
| TE2 | Regression Testing | Checks that recent updates do not break existing features. | [18,19,20,22,23] |
| TE3 | Grey-Box Testing | Combines black- and white-box insights. Uses partial knowledge of internal structures and service interactions. | [14] |
| TE4 | Acceptance Testing | Validates overall behavior against user requirements. Uses natural-language or BDD approaches. | [13] |
| TE5 | Performance/Load Testing | Evaluates scalability and robustness under stress conditions. Identifies bottlenecks in distributed services. | [16,21,46] |
| TE6 | Contract Testing | Verifies service interfaces and consumer-driven contracts. Ensures compatibility across independent services. | [33,43,50] |
| TE7 | Fault Injection/Resilience | Simulates service failures. Tests error-handling, fallback mechanisms, and overall fault tolerance. | [30,41,51] |
| TE8 | Fault Diagnosis | Identifies root causes of failures in interconnected microservices. Facilitates targeted debugging. | [28] |
| TE9 | Test Case/Data Generation | Automatically produces test inputs or scenarios. Aims to increase coverage and reveal edge cases. | [15,17,37,48] |
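
To make regression test selection (TE2) concrete, the sketch below implements the simplest selection rule: rerun only those end-to-end tests whose recorded execution touched a changed service. The test names, service names, and the test-to-service mapping are hypothetical; in practice such a mapping might be recovered from distributed traces. This is a basic change-based filter, not the belief-propagation approach of [23].

```python
from typing import Dict, List, Set

def select_regression_tests(coverage: Dict[str, Set[str]], changed: Set[str]) -> List[str]:
    """Return (sorted) tests whose exercised services intersect the changed services."""
    return sorted(test for test, services in coverage.items() if services & changed)

# Hypothetical mapping from end-to-end tests to the services they exercise.
coverage = {
    "test_checkout": {"cart", "payment", "order"},
    "test_login": {"auth"},
    "test_browse": {"catalog"},
}

print(select_regression_tests(coverage, {"payment"}))  # ['test_checkout']
```

The rule is safe only if the mapping is complete; a test that reaches a changed service through an unrecorded path would be skipped, which is one reason end-to-end selection is described as challenging [22].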

| Dimension | Monolithic Focus | Microservices Focus |
|---|---|---|
| Granularity and Isolation | All functionalities reside in a single deployment. Tests often target the entire system at once [61]. | Independent services run in their own contexts. Each service undergoes isolated unit and integration testing, which helps contain cascading failures [13,18]. |
| Deployment Environment | Stable, homogeneous configurations. Fewer variations in test scenarios. | Dynamic, containerized settings [16,46]. Testing tools must handle multiple orchestrated services with varied resource allocations. |
| Continuous Integration | A single artifact updates the whole application. Regression tests can be slower and less frequent [1,61]. | Rapid releases demand automated checks after each update. Regression testing quickly checks that an update introduces no new defects [19,20]. |
| Inter-Service Communication | Internal calls happen within one codebase, so communication stays straightforward. | Services rely on lightweight APIs and evolving contracts. Consumer-driven contract tests reduce integration issues [33,43,50]. |
| Fault Tolerance and Resilience | Errors may propagate [62] but remain within a single codebase. Traditional chaos testing sees limited use [63]. | Distributed faults require resilience tests and fault injection [30,41,51]. Network delays and partial failures often occur. |
| Performance and Scalability | Focus on overall throughput or response time [1]. The single large codebase is typically scaled vertically as one unit [62]. | Each service scales independently, but network overhead affects performance. Tests target latency and load across components [21,46]. |
| Testing Tools and Frameworks | Classic frameworks (e.g., JUnit [64]) check end-to-end behaviors in one environment. | Specialized tools handle contract tests, mock services, and asynchronous flows [15,29]. Environments evolve faster. |
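
The consumer-driven contract tests mentioned above can be illustrated with a minimal sketch: the consumer records the fields and types it relies on, and the provider's response is checked against that record. The contract format, field names, and response below are hypothetical; dedicated contract-testing tools use richer specifications and broker workflows, so this only shows the core idea.

```python
from typing import Any, Dict, List

# Hypothetical consumer contract: the fields the consumer reads, with expected types.
ORDER_CONTRACT: Dict[str, type] = {"id": int, "status": str, "total": float}

def verify_contract(contract: Dict[str, type], response: Dict[str, Any]) -> List[str]:
    """Return contract violations; an empty list means the response is compatible."""
    violations = []
    for field, expected in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            violations.append(f"wrong type for {field}: {type(response[field]).__name__}")
    return violations

# Provider response with an extra field the consumer does not use.
provider_response = {"id": 42, "status": "SHIPPED", "total": 19.99, "carrier": "X"}
print(verify_contract(ORDER_CONTRACT, provider_response))  # []
```

Extra provider fields are deliberately tolerated: the contract only constrains what the consumer actually reads, which lets the provider evolve independently without breaking existing consumers.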

| ID | Tool/Framework | Focus or Method | References |
|---|---|---|---|
| TF1 | JoT | Jolie-based framework targeting service-oriented testing for microservices. | [29] |
| TF2 | RAML-based Mock Gen. | Generates mock services from RAML specs for integration and end-to-end testing. | [15] |
| TF3 | EvoMaster (Extended) | Uses white-box fuzzing and evolutionary algorithms to improve coverage and detect flaws. | [37] |
| TF4 | RESTful API Test Tool | Applies evolutionary computation for integration test generation and optimizes fault detection. | [38] |
| TF5 | NxtUnit | Automates test generation for Go microservices using static and dynamic analysis. | [39] |
| TF6 | Artificial Bee Colony Algorithm | Selects and optimizes test cases based on coverage and efficiency metrics. | [40] |
| TF7 | Ex-Vivo Regression Testing | Detects regressions using isolated systems, replicating real environments. | [18] |
| TF8 | Search-Based Perf. Testing | Generates performance test cases under varying parameters to identify bottlenecks. | [57] |
| TF9 | uTest | Uses a pairwise combinatorial approach for black-box test generation via OpenAPI. | [48] |
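
Pairwise (combinatorial) generation, as used by uTest (TF9), aims to cover every pair of parameter values in at least one test instead of every full combination. The sketch below is a simple greedy variant written for illustration: it enumerates the full Cartesian product and repeatedly picks the row that covers the most still-uncovered pairs. It is not uTest's actual algorithm, and the parameter names and values are hypothetical stand-ins for an OpenAPI operation's inputs. Enumerating the full product is only practical for small parameter spaces.

```python
from itertools import combinations, product
from typing import Dict, List, Set, Tuple

Pair = Tuple[Tuple[str, str], Tuple[str, str]]

def pairs_of(row: Dict[str, str], names: List[str]) -> Set[Pair]:
    """All (parameter, value) pairs covered by a single test row."""
    return {((a, row[a]), (b, row[b])) for a, b in combinations(names, 2)}

def pairwise(params: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Greedy pairwise suite: pick the candidate covering the most uncovered pairs."""
    names = list(params)
    uncovered: Set[Pair] = {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in params[a]
        for vb in params[b]
    }
    # Exhaustive candidate pool (full Cartesian product of parameter values).
    candidates = [dict(zip(names, values)) for values in product(*(params[n] for n in names))]
    suite: List[Dict[str, str]] = []
    while uncovered:
        best = max(candidates, key=lambda row: len(pairs_of(row, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite

# Hypothetical request parameters for one API operation.
params = {"format": ["json", "xml"], "auth": ["token", "none"], "page": ["1", "50"]}
suite = pairwise(params)
print(len(suite))  # 4 (the full Cartesian product has 8 rows)
```

The savings grow with the number of parameters: the full product grows exponentially, while the number of pairs grows only quadratically in the number of parameters.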

| Aspect | Key Observations |
|---|---|
| RQ2.1: Factors | Interaction chain inconsistency [5]; service independence vs. interdependencies [33,43,50]; dynamic (cloud-native) environments [45]; complex end-to-end (E2E) regression test selection [22] |
| RQ2.2: Metrics | Endpoint coverage metrics [59]; functional metrics (e.g., parameter coverage) [14,48]; non-functional metrics (response time, throughput) [16,46] |
| RQ2.3: Enhancing Quality | Mock services for consistent integration tests [15]; early defect detection with grey-box testing [14]; synergy between performance and resilience testing [30,41,51]; CI/CD-driven automation [20,25] |
| RQ2.4: Coverage vs. Efficiency | Comprehensive coverage often conflicts with test generation speed; mock services can improve coverage while controlling complexity [15]; balancing coverage and resource usage is critical [5,18,20] |
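
The role of mock services in producing consistent integration tests can be sketched at the code level with Python's standard `unittest.mock`: the remote dependency is replaced by a stub with a fixed response, so the service under test sees the same inputs on every run. The `OrderService` class and its inventory client are hypothetical, and this in-process stub is much lighter than the RAML-generated mock services of [15], which run as standalone HTTP services.

```python
from unittest.mock import Mock

class OrderService:
    """Hypothetical service that depends on a remote inventory microservice."""

    def __init__(self, inventory_client):
        self.inventory = inventory_client

    def place_order(self, sku: str, qty: int) -> str:
        available = self.inventory.get_stock(sku)
        return "ACCEPTED" if available >= qty else "REJECTED"

# Replace the remote inventory service with a deterministic mock.
inventory = Mock()
inventory.get_stock.return_value = 5

service = OrderService(inventory)
print(service.place_order("sku-1", 3))  # ACCEPTED
inventory.get_stock.assert_called_once_with("sku-1")  # interaction is also verified
```

Because the mock both fixes the response and records the call, the same test checks the service's output and the shape of its interaction with the dependency.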

| Category | Subcategory | Metrics |
|---|---|---|
| Functional | Coverage | Path Coverage [14,48]; Operation Coverage [48]; Parameter Coverage [48]; Parameter Value Coverage [48]; Request Content-Type Coverage [48]; Status Code Class Coverage [14,48]; Status Code Coverage [14,48]; Response Content-Type Coverage [48]; Microservice Endpoint Coverage [59]; Test Case Endpoint Coverage [59]; Complete Test Suite Endpoint Coverage [59] |
| Functional | Test Execution | Avg. Number of Executed Tests [48]; Avg. Failure Rate [14,48]; Error Coverage Rate [17]; Count of Generated Test Data [17] |
| Functional | Structural | Coupling (MPC) [47]; Cohesion (LCOS) [47]; Traceability [47] |
| Non-Functional | Performance | Response Time [5,16,18,20,21,30,36,46,53,56,57]; Throughput [16,53]; Scalability [46,47]; Availability [47]; Fault Tolerance [36,47]; Resource Usage Rate [5,20]; System Failure Rate [20]; Performance Thresholds [19,20,53]; Usage Intensities [18]; CPU Utilization [53]; Memory Consumption [53] |
| Non-Functional | Failure | Failed Requests (FR) [31]; Connection Errors Ratio (CE) [31]; Server Errors Ratio (SE) [31]; Internal Microservice Coverage [14]; Dependency Coverage [14]; Propagated & Masked Failures [14] |
| Non-Functional | Timing | Start-Up Time [22]; Runtime Overhead [22]; Execution Time [49,57]; Trace Coverage [57]; Performance Degradation (PD) [31] |
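
The endpoint coverage metrics listed above can be approximated as simple ratios. The sketch below assumes that microservice endpoint coverage is the fraction of a service's declared endpoints exercised by the test suite, and that suite-level coverage is the same ratio taken over all services; the precise definitions in [59] may differ. The services, endpoints, and tests are hypothetical.

```python
from typing import Dict, Set, Tuple

Endpoint = Tuple[str, str]  # (HTTP method, path template)

def endpoint_coverage(declared: Set[Endpoint], exercised: Set[Endpoint]) -> float:
    """Fraction of declared endpoints hit by at least one test request."""
    if not declared:
        return 0.0
    return len(declared & exercised) / len(declared)

# Hypothetical endpoints declared by each microservice.
declared: Dict[str, Set[Endpoint]] = {
    "orders": {("GET", "/orders/{id}"), ("POST", "/orders")},
    "users": {("GET", "/users/{id}"), ("PUT", "/users/{id}"), ("DELETE", "/users/{id}")},
}
# Endpoints exercised by each end-to-end test (e.g., recovered from traces).
tests: Dict[str, Set[Endpoint]] = {
    "test_checkout": {("POST", "/orders"), ("GET", "/users/{id}")},
    "test_profile": {("GET", "/users/{id}"), ("PUT", "/users/{id}")},
}

suite_hits = set().union(*tests.values())
for service, endpoints in declared.items():
    print(service, endpoint_coverage(endpoints, suite_hits))  # per-microservice ratio
all_endpoints = set().union(*declared.values())
print("suite", endpoint_coverage(all_endpoints, suite_hits))  # 3 of 5 endpoints
```

Keying endpoints by method and path template, rather than by concrete URLs, avoids counting `/users/1` and `/users/2` as different endpoints.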

| ID | Strategy | Focus or Approach | Key References |
|---|---|---|---|
| S1 | Comprehensive Coverage | Tests should span the entire system. Metrics such as Microservice Endpoint Coverage, Test Case Endpoint Coverage, and Complete Test Suite Endpoint Coverage help gauge how thoroughly the system is validated. | [59] |
| S2 | Mock Services | Mocks stabilize testing by simulating external services in controlled environments. This preserves interaction chains and allows consistent inputs and outputs. Papers report improved reliability through isolation during integration and regression tests. | [15,18,55] |
| S3 | Early Defect Detection | Diverse test-case generation uncovers faults before production. Frameworks that combine static and dynamic analysis accelerate detection of subtle issues. This helps prevent cascading failures triggered by minor code changes. | [14] |
| S4 | Validating Inter-Service Interactions | Microservices depend on well-defined contracts. Consumer-driven contract tests help ensure that updates in one service do not disrupt upstream or downstream partners. This preserves communication integrity across versions. | [43,50] |
| S5 | Performance and Scalability Assessment | Performance-focused tools generate tests that mimic various load scenarios. These tests reveal bottlenecks and verify capacity for high-traffic demands. Metrics from these assessments guide optimization. | [21,46] |
| S6 | Resilience Testing | Fault injection introduces controlled errors, such as network delays or crashes. Effective strategies systematically orchestrate realistic failure scenarios and validate inter-service communication to build confidence in fault tolerance in production. | [30,41,51] |
| S7 | Automation and CI | Automated frameworks plug into CI pipelines. Developers receive fast feedback on whether new code breaks existing functionality. Rapid iteration maintains consistent quality in frequent releases. | [20,25] |
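
Resilience testing through fault injection (S6) can be sketched as a wrapper that makes a dependency fail with a configurable probability, combined with a check that the caller degrades gracefully. The `FaultInjector` class, the recommendation service, and its fallback value are hypothetical; tools such as Gremlin [41] inject faults at the network level rather than in application code, so this only illustrates the testing logic. A seeded random generator keeps runs reproducible.

```python
import random
from typing import Callable

class FaultInjector:
    """Wraps a dependency call and raises an injected fault with a fixed probability."""

    def __init__(self, call: Callable[[], str], failure_rate: float, seed: int = 0):
        self.call = call
        self.failure_rate = failure_rate
        self.rng = random.Random(seed)  # seeded for reproducible experiments

    def __call__(self) -> str:
        if self.rng.random() < self.failure_rate:
            raise ConnectionError("injected fault")
        return self.call()

def get_recommendations(client: Callable[[], str]) -> str:
    """Caller under test: falls back to a default when the dependency fails."""
    try:
        return client()
    except ConnectionError:
        return "default-recommendations"

always_failing = FaultInjector(lambda: "personalized", failure_rate=1.0)
print(get_recommendations(always_failing))  # default-recommendations
```

Setting `failure_rate` between 0 and 1 turns the same harness into a stochastic experiment, where the proportion of fallback responses can be compared against the injected rate.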

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Miao, T.; Shaafi, A.I.; Song, E. Systematic Mapping Study of Test Generation for Microservices: Approaches, Challenges, and Impact on System Quality. Electronics 2025, 14, 1397. https://doi.org/10.3390/electronics14071397
