1. Introduction

User mobility support is one of the main and most attractive features of mobile wireless technologies. Furthermore, user devices typically support more than one wireless technology. Smartphones, for example, support access to mobile networks like 4G or 5G, but at the same time support WiFi access as well. Modern wireless technologies also represent the main driving force for emerging applications in areas such as V2X (Vehicle-to-everything), IoT (Internet of Things), eHealth, etc. [1]. The goal of modern communications is to utilize these multiple available network accesses to provide users with better QoE (Quality of Experience), higher communication reliability and availability, and seamless communication sessions [2,3]. Moreover, 5G technology has recognized this trend and has defined the ATSSS (Access Traffic Steering, Switching and Splitting) paradigm, which is in line with the aforementioned goal [4].

Satellite technologies have always offered wide coverage and access to communication in difficult-to-cover zones. However, the usual downsides are the price, limited upstream bandwidth, sensitivity to weather conditions and high latency. With this in mind, modern LEO (Low Earth Orbit) satellite communication systems have been emerging to provide much lower latencies and good bandwidth in both directions (downstream/upstream) at affordable prices that will probably be even lower in the near future. Starlink and OneWeb are typical and successful examples of such systems [5,6]. Also, the evolution and expansion of drone technology will provide UAV (Unmanned Aerial Vehicle)-based extensions of mobile communication networks to support congested cell traffic offload and coverage expansion [7,8].

Given the advancements in LEO satellite communication systems, these systems have been recognized as a good complement to 5G terrestrial systems, providing increased coverage, better reliability and availability, and higher bandwidth to mobile users [9,10]. For these reasons, hybrid satellite–terrestrial network access is gaining attention as a promising near-future strategy that would bring many benefits to users [11,12]. Vertical handover can be used to move an ongoing user session between different network technologies, for example between terrestrial and satellite network access [13]. However, the ATSSS paradigm expands this approach by enabling users to utilize both accesses at the same time. Steering and switching functions utilize only one access at a given time, whereas splitting by definition assumes the usage of multiple network accesses at the same time. ATSSS implementation has two approaches: low level (the data link layer) and high level (the transport layer). Low level requires that the networks involved share the same 5G network core, while high level may even be implemented in a way that access networks are unaware of it. Also, it is worth noting that machine learning and artificial intelligence are becoming increasingly popular approaches to optimizing multiple network access utilization according to user preferences such as price, latency, packet loss rate, etc. [14,15].

To estimate the quality of multiple network access approaches and/or the techniques used (optimization techniques, machine learning, etc.), it would be very beneficial to have simulation frameworks and platforms that can generate diverse and plentiful data [16]. Simulations can efficiently and economically provide insight into the performance of the tested approaches [17]. Based on these insights, further tuning and elimination of detected issues can be performed. Software simulations are the more economical approach, but hardware-based simulations can use hardware accelerators not only to reduce the simulation time but also to enable the inspection of features that are not feasible in software (for example, LDPC (Low-Density Parity Check) coding/decoding [18]).

In this paper, we propose a flexible hardware platform intended for the evaluation of hybrid terrestrial–satellite access performance. We also introduce an intelligent selection of network access using predictions made by long short-term memory (LSTM) neural networks based on the measured SNR (Signal-to-Noise Ratio). Appropriate channel models are used in the simulation process, generating SNR data for both satellite and terrestrial network channels. The goal of our proposal is to create a simulation framework that can be adjusted to a broad scope of use cases and scenarios. For this reason, our framework includes components such as an intelligent controller that can aid in network access selection, hardware boards that can set link delays or be used for hardware acceleration, support for different channel models, etc. By modifying the appropriate components, the framework can be adjusted to the desired scenario. In this paper, a demonstration is performed for a UDP streaming-based session in which an intelligent selector, based on SNR measurements, selects the access that provides the higher bandwidth. UDP streaming is selected because, in this scenario, the obtained results focus on intelligent selection performance, avoiding the impact of the flow and congestion control mechanisms of TCP-based flows. The results show that the proposed platform enables the fast simulation and performance estimation of the proposed intelligent controller. We also show that the intelligent controller enables the optimal usage of resources.

There are two main contributions of this paper. The first is the design of an intelligent session controller that utilizes LSTMs to estimate the condition of both network accesses based on SNR measurements. The second contribution is the design of a flexible hardware-based simulation platform intended for the inspection of high-level multi-access approaches and protocols. It is important to note that the framework is designed to be flexible, so all modules and components can be modified and adjusted to different scenarios. Also, most existing simulation setups focus on terrestrial wireless networks, while in this paper, we focus on a hybrid satellite–terrestrial combination of wireless networks. Additional contributions include performance testing for UDP-based streaming sessions and performance measurements using the proposed hardware-based simulation platform.

2. Related Work

Utilizing multiple network interfaces has always been an attractive feature because it provides many benefits, like increased reliability, availability and bandwidth, to communication sessions [19,20]. This has been recognized in wired communications: for example, the SCTP (Stream Control Transmission Protocol), which is very popular for the transport of signaling messages in telecom operator networks, supports multi-homing, increasing availability and reliability [21]. In the case of wireless communications, this feature provides additional benefits, like greater coverage and cell traffic offloading, providing users with a better QoS (Quality of Service) [22]. Considering that satellite communications are advancing very quickly, with commercial solutions like Starlink and OneWeb already available, a hybrid combination of satellite and terrestrial communications is becoming more and more popular [11,23,24,25].

Multi-access support has been recognized by the 3GPP, which defined the ATSSS paradigm covering the basic functions that comprise multi-access: steering, switching and splitting [26]. Steering defines how to select access at the start of the session; it can be based on a number of factors like user preferences, available bandwidth, latency, application requirements, etc. During the session, the Quality of Service of the initially selected network access may deteriorate, or the connection may even be completely lost. This usually happens when the user is moving out of the coverage zone. In such cases, the session needs to seamlessly move to another available network access, and this function is called switching. Splitting enables the usage of multiple network accesses simultaneously. The typical use of the splitting function is to increase the bandwidth available to the session, which can be important for some applications.

There are two ways to support ATSSS: at the data link layer (low level) and at the transport layer (high level). In this paper, we consider high-level support. The 3GPP has recognized the potential of the MP-TCP (MultiPath TCP) and considers it a good candidate for a high-level ATSSS solution [27]. In addition, both the MP-TCP and MP-QUIC (MultiPath QUIC) are recognized as potential ATSSS high-level solutions by the 3GPP [28]. Both the MP-TCP and MP-QUIC have their pros and cons. For example, the MP-TCP does not support UDP sessions, which we consider in this paper. Thus, there is room for improving existing multipath protocols or developing new ones that better cover some specific use cases. Hybrid terrestrial–satellite access can be considered a specific use case because both accesses are wireless, meaning that, compared to wired access, there are specific conditions like fading, shadowing, interference, weather influence, etc. These conditions make wireless links less reliable, which should be taken into account to avoid the erroneous detection of link congestion [29].

Numerous papers cover the topic of wireless multiple network access and ATSSS support and optimization. Since smartphones usually have a 4G/5G interface as well as WiFi, some papers investigate the performance of multipath transport protocols in such environments [30,31]. A survey on multipath transport protocols for 5G ATSSS is given in [4]. A survey on multi-connectivity in 5G is given in [10]. A performance evaluation of combining 5G-NR, WiFi and LiFi for Industry 4.0 is addressed in [32]. Given the advancements in satellite communications, many papers cover the topic of multipath in satellite–terrestrial environments. The use of network coding in a satellite–terrestrial multipath environment is proposed in [33]. A study regarding the integration of a non-3GPP satellite interface in a 5G multiple-access configuration is covered in [34]. Some papers analyze specific use cases, as in [35], where a multipath analysis is given for the case of a higher-bandwidth satellite link and a lower-bandwidth terrestrial link.

Machine learning and artificial intelligence are gaining popularity in wireless networks as a means to optimize the utilization of resources and user QoE. In the case of multiple network accesses, they are used to optimize network access selection and switching or handover processes. The SARA (Smart Aggregated RAT Access) method, which uses multi-agent reinforcement learning, is proposed in [36] to optimize network access capacity in a multi-RAT (Radio Access Technology) environment. Reinforcement learning to optimize the selection of non-terrestrial links with multipath routing in non-stationary multipath 5G non-terrestrial networks is proposed in [37]. Deep reinforcement learning for multipath routing in LEO mega constellation networks is proposed in [38]. An AI (artificial intelligence)-based framework for multiple network access that, besides terrestrial network access, considers LEO satellites is presented in [39].

To evaluate the performance of new methods or algorithms, measurements can be conducted on real networks or testbeds designed using real devices [40]. The existence of open-source 5G network core solutions like Free5GC or OpenAirInterface enables the development of testbeds in labs. For example, an MP-TCP ATSSS testbed using Free5GC as a network core and UERANSIM for user equipment is presented in [41]. A 5G-MANTRA testbed for 4G/5G and WiFi multipath evaluation is presented in [16]. However, building such testbeds is not always possible or feasible. Simulations can provide a means to inspect and analyze performance in a more economical way. A very detailed survey on network simulators, emulators and testbeds is given in [17]. In [42], the authors conducted their own simulation experiments to analyze the performance of their proposed multiservice-based traffic scheduling for 5G ATSSS. In [43], the ns-3 simulator is used to analyze the performance of the proposed intelligent access traffic management for hybrid 5G-WiFi networks. A comprehensive list and discussion of 5G simulation techniques is given in [44], where link-level and system-level simulators are also covered.

Based on the related work analysis, there is a lack of simulators that focus on hybrid wireless network access, especially for cases where one of the accesses is satellite access. Also, most of the existing simulation solutions are focused on a narrow set of use cases, like 4G or 5G in combination with WiFi. Hardware components are typically used in real testbeds, where they represent actual devices without the possibility of meaningful modifications such as hardware acceleration. For these reasons, we designed a distributed AI-based simulation framework that should overcome the aforementioned shortcomings and provide users with a flexible environment to test existing and novel solutions such as AI models, channel models, and multipath protocols under different scenario settings.

3. Simulation Framework

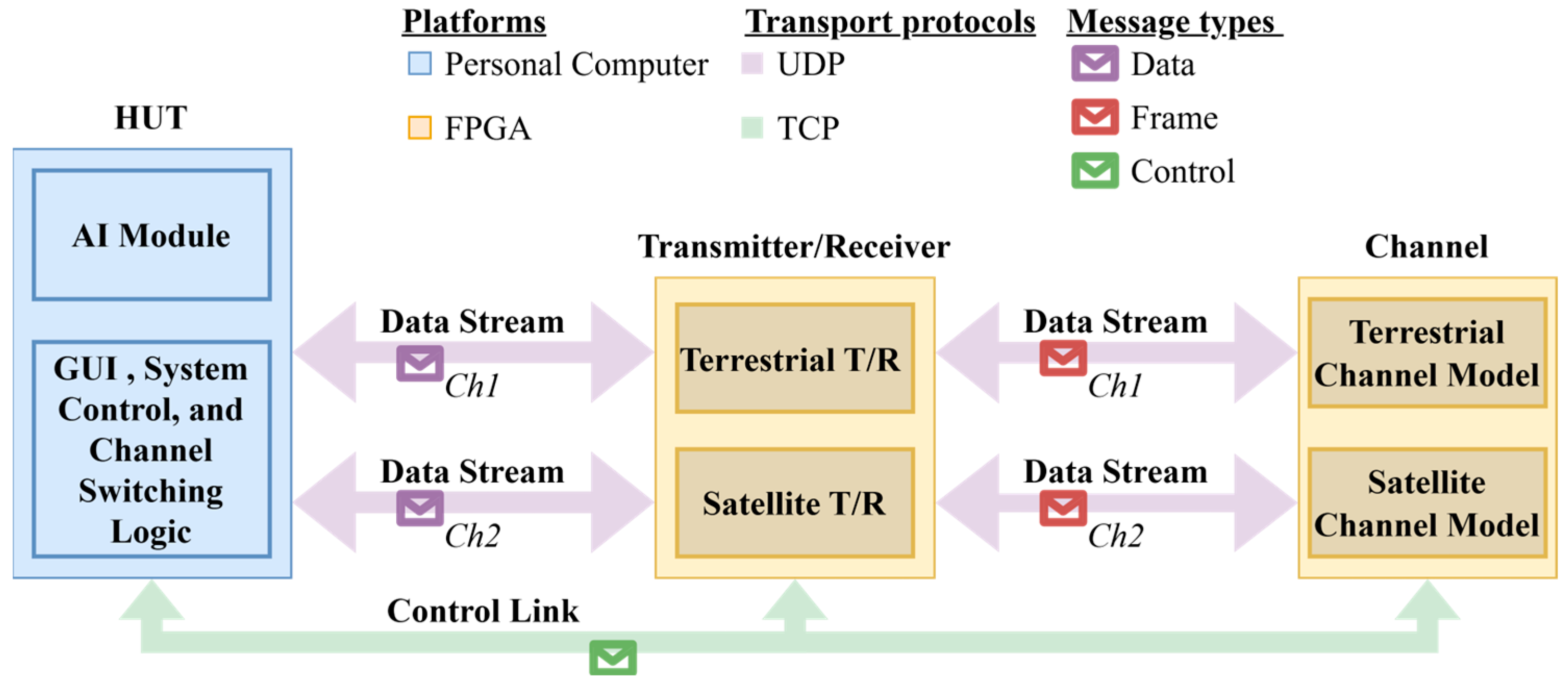

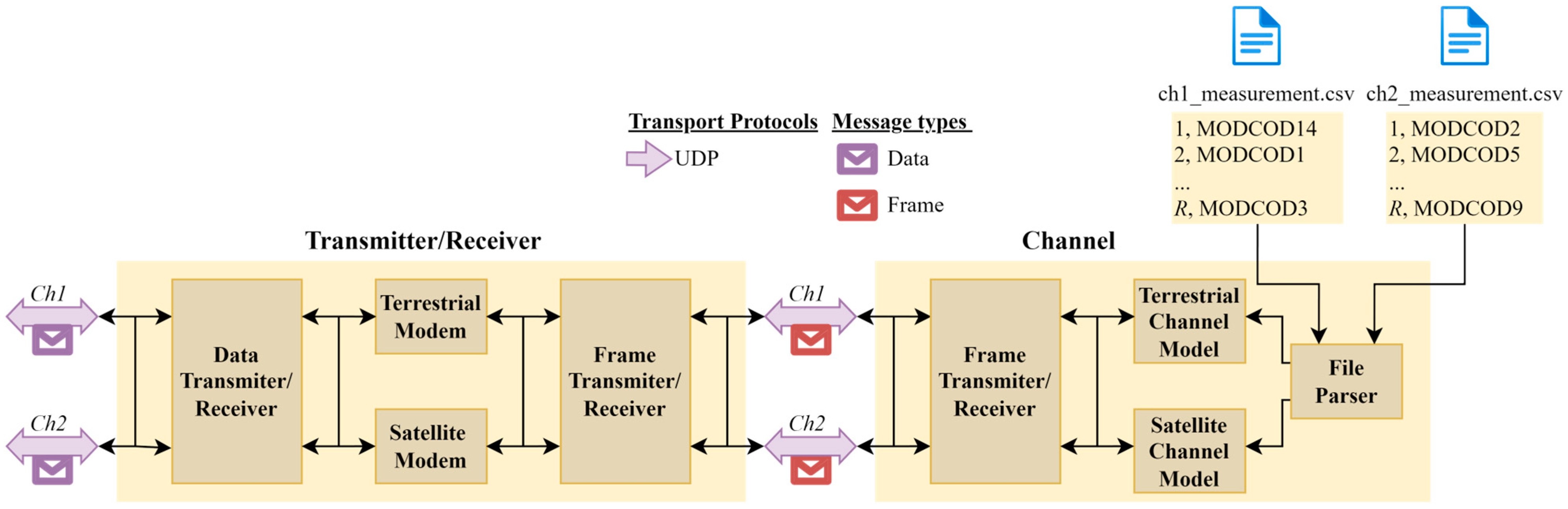

The overall architecture of the simulation framework, intended to enable the simulation of multi-RAT access, is illustrated in Figure 1. It contains basic elements and modules that mimic a multi-RAT access scenario:

HUT (Hybrid User Terminal)—user equipment (UE) capable of utilizing two different RATs—in this paper, these are terrestrial and satellite RATs.

Transmitter/receiver (T/R)—to simulate a Tx/Rx module of the HUT.

Channel—to simulate selected radio channel models.

This approach enables flexible adaptations to various use case scenarios. In the next section, the simulation results for a UDP streaming session are given. The initial development stage of the proposed framework has been presented in [45].

The core functionalities of the simulation framework utilized in this research study are detailed in [45]. For this study, the framework has been modified to support terrestrial and satellite channel models.

The two main building components of the developed simulation framework are as follows:

The simulation framework is implemented as a distributed solution that includes three software modules, deployed on separate physical platforms, that are in charge of generating, receiving, transmitting and processing user data. Two types of links are established between the modules of the simulation framework:

User data that are transmitted within the simulation framework are encapsulated within Messages that have a specific format detailed in the next section.

3.1. Messages

The configuration and data flow between modules are implemented using Messages. Although the presented framework architecture is flexible and enables the definition of different message types, for the evaluation purpose, three message types are defined: Data, Frame and Control.

Data Messages are generated by the HUT and are used to transfer information to and from the T/R module. These messages are transmitted over the UDP protocol in a binary format, as illustrated in Figure 2. The message format consists of two main parts: a header and a payload. The header includes five 4-byte fields. The first field, Req/Res, indicates whether the message is a request sent from the HUT to the Channel module (uplink) or a response sent by the Channel module to the HUT module (downlink). Each Data Message has a unique Data ID, which enables packet loss detection, since UDP does not provide such a mechanism. Additionally, the Slot ID field identifies the timeslot to which the Data Message belongs. The HUT module makes predictions, and the timeslot represents the time period for which one prediction is considered valid. The Metadata field is a bit-accessible field that contains other miscellaneous parameters and control signals needed for data processing across the modules. The MODCOD (modulation and coding) field contains an index representing the modulation and code rate used for the current transmission. The payload section consists of n bits of user data.
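The header layout described above can be illustrated with a minimal sketch. The byte order (network order), the use of unsigned integers, the order of the fields after Req/Res, and the numeric values of `REQUEST` and `RESPONSE` are assumptions made for illustration; the text only states that the header has five 4-byte fields and that Req/Res comes first.

```python
import struct

# Assumed layout: five unsigned 32-bit fields in network byte order.
# Field order after Req/Res and the byte order are illustrative assumptions.
HEADER_FORMAT = "!5I"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 5 fields * 4 bytes

REQUEST, RESPONSE = 0, 1  # hypothetical encodings of the Req/Res field


def pack_data_message(req_res, data_id, slot_id, metadata, modcod, payload):
    """Build a Data Message: fixed-size header followed by the user payload."""
    header = struct.pack(HEADER_FORMAT, req_res, data_id, slot_id, metadata, modcod)
    return header + payload


def unpack_data_message(message):
    """Split a Data Message into a header dictionary and the payload bytes."""
    if len(message) < HEADER_SIZE:
        raise ValueError("message is shorter than the header")
    fields = struct.unpack(HEADER_FORMAT, message[:HEADER_SIZE])
    names = ("req_res", "data_id", "slot_id", "metadata", "modcod")
    return dict(zip(names, fields)), message[HEADER_SIZE:]
```

A receiver could call `unpack_data_message` on every UDP datagram and use the `data_id` and `slot_id` values to match responses to requests.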

Frame Messages are generated within the T/R module, where they encapsulate the content of Data Messages in the appropriate terrestrial or satellite physical layer format. Once created, these messages are transmitted from the T/R module to the Channel module via a UDP link, where they undergo further processing within the corresponding channel model.

In addition to the two messages that are in raw binary format, Control Messages are implemented using ASCII string format. These messages are generated on the HUT side and transmitted to the device via the TCP protocol. For every Control Message received and processed by the device, a corresponding response is generated to indicate the execution status. For example, the device supports the “hello” control message, which serves to verify the device’s presence at a specific IP address. The command for this check is “device hello”, and if the device software is running on the platform, the expected response is “OK”.
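As a rough illustration of this request/response exchange, the sketch below shows a device-side handler and a HUT-side sender. Only the "device hello" command and its "OK" response come from the text; the newline termination, the "ERROR" reply for unsupported commands, and the function names are assumptions.

```python
import socket


def handle_control_message(line: str) -> str:
    """Device side: map one ASCII command to a status string."""
    command = line.strip()
    if command == "device hello":
        return "OK"
    return "ERROR"  # assumed status for commands this sketch does not know


def send_control_message(host: str, port: int, command: str, timeout: float = 2.0) -> str:
    """HUT side: send one command over TCP and return the device's reply.

    Newline-terminated framing is an assumption of this sketch.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall((command + "\n").encode("ascii"))
        with conn.makefile("r", encoding="ascii") as reader:
            return reader.readline().strip()
```

With a device listening at a known address, a presence check would then amount to testing whether `send_control_message(host, port, "device hello")` returns `"OK"`.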

3.2. Modules

The current simulation framework includes the following software modules: Hybrid User Terminal (HUT), Transmitter/Receiver (T/R) and Channel. These modules are instances of simulator components, the HUT Software V1.0 Component and Device Software V1.0 Component, which are defined in [45].

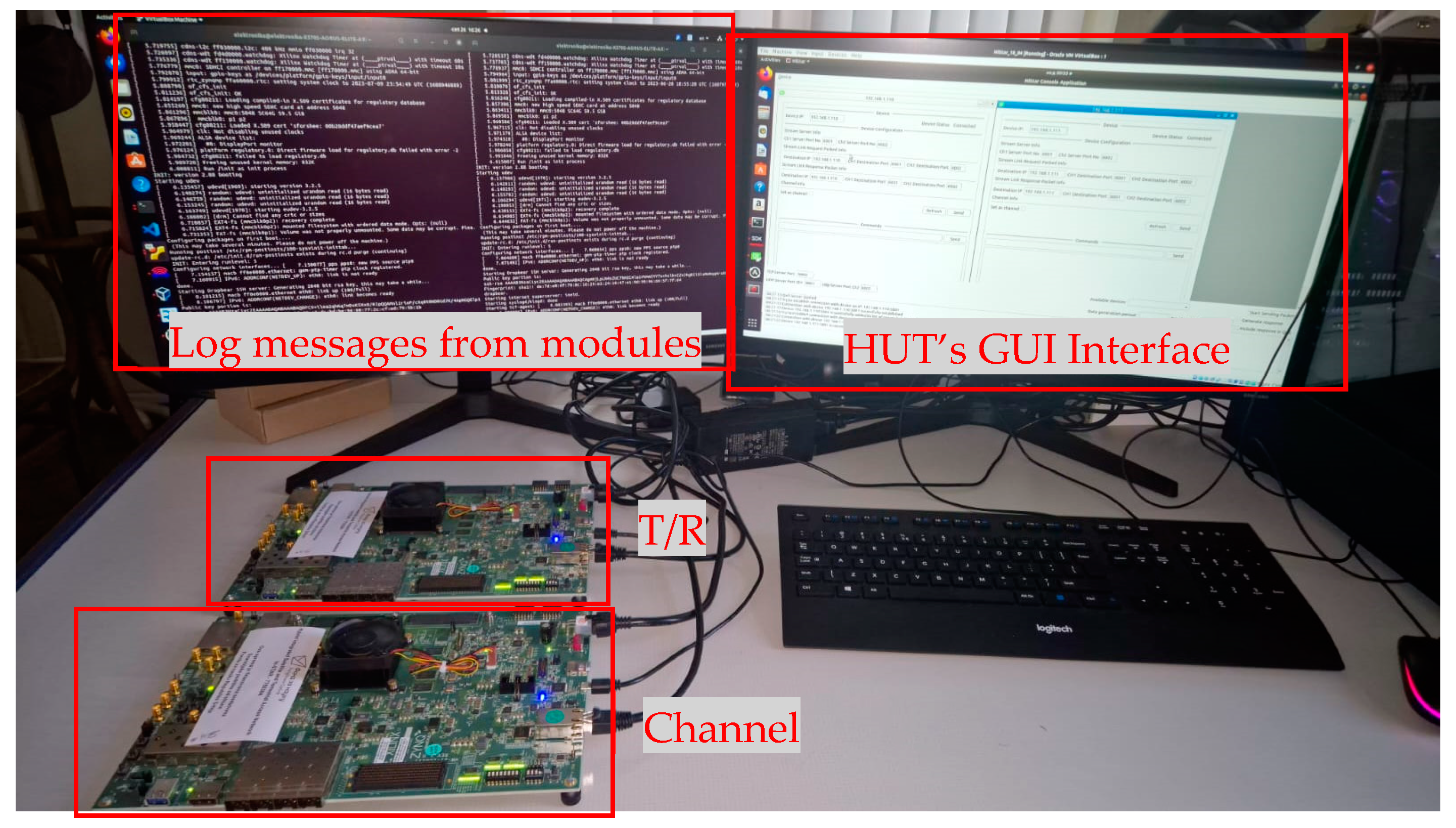

The HUT module is an instance of the HUT Software Component and is deployed on a PC. It is a GUI application responsible for generating user data and selecting the active communication channel. Additionally, it configures other simulation framework components such as the T/R and Channel modules.

The T/R and Channel modules are instances of the Device Software Component and are deployed on a dedicated embedded platform that can enable processing acceleration. The T/R module adds a specific frame header to Data Messages, which contain user data, and forms Frame Messages that contain coded data. This module is also responsible for the decoding process, i.e., extracting Data Messages from received Frame Messages.

The Channel module is responsible for modeling the behavior of terrestrial and satellite channels.

3.2.1. Hybrid User Terminal (HUT)

The HUT module is implemented as a Graphical User Interface (GUI) application written in C++ language, developed using the Qt framework [

46], which ensures portability across different operating systems. Overall, the HUT architecture is presented in

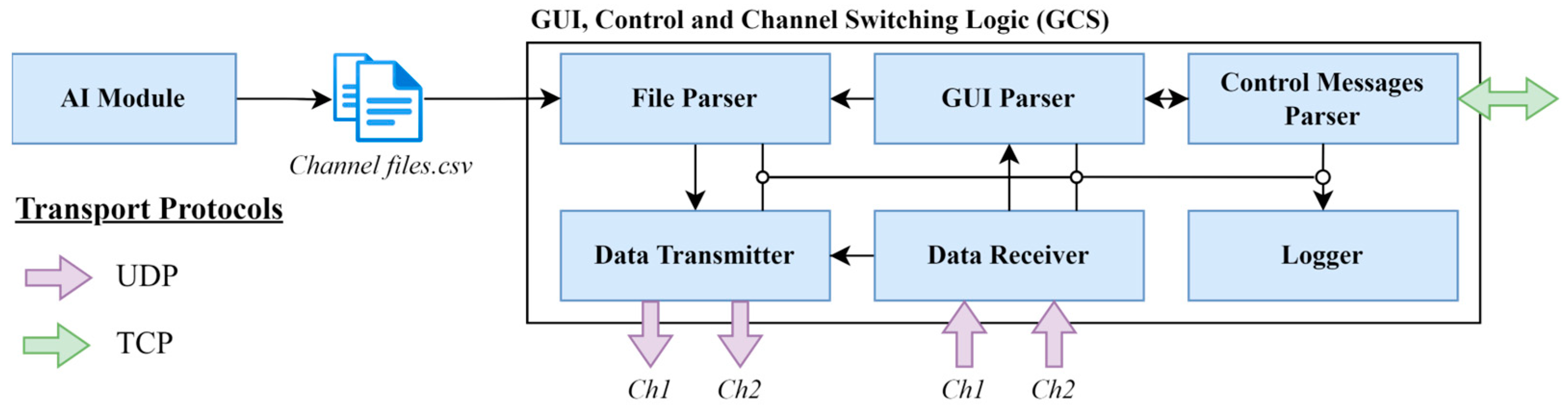

Figure 3.

The HUT consists of two main components: the AI module and the GCS.

The functionalities integrated within the AI module are described in detail alongside the channel simulation description in Section 4. These functionalities primarily involve the decision-making process, which takes several samples of the measured SNR sequence for a given channel and tries to predict the optimal MODCOD to be used in the following timeslot. This algorithm is designed to keep the transmission error rate under a certain margin while providing the highest possible spectral efficiency, hence enabling the fastest error-free data transmission rate under the given channel conditions. Based on the predicted MODCOD, the maximal number of frames that can be transmitted over each channel in the following timeslot is calculated.
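The mapping from a predicted SNR to a MODCOD can be sketched as a threshold lookup with a safety margin. This is only an illustration of the general idea: the threshold values below are placeholders rather than values from any standard or from this paper, and the function name and the fixed-margin rule are assumptions.

```python
from bisect import bisect_right

# Placeholder SNR thresholds in dB, one per hypothetical MODCOD index,
# sorted in ascending order. These are NOT values from the paper.
SNR_THRESHOLDS_DB = [-2.0, 1.0, 4.0, 7.0, 10.0]


def select_modcod(predicted_snr_db, margin_db, thresholds=SNR_THRESHOLDS_DB):
    """Return the highest MODCOD index whose threshold does not exceed the
    predicted SNR reduced by the margin, or None if no MODCOD is usable."""
    usable_snr = predicted_snr_db - margin_db
    usable_count = bisect_right(thresholds, usable_snr)
    return usable_count - 1 if usable_count > 0 else None
```

A larger margin makes the choice more conservative: it reduces the chance of picking a MODCOD the channel cannot support, at the cost of lower spectral efficiency.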

This value is used by the GCS on the HUT side to select the best channel for frame transmission. In the simulation environment, this channel is called the Active channel. Since MODCOD, used for channel switching, is predicted based on earlier SNR values, it should be compared to the actual MODCOD calculated using the channel model in the Channel module block.

The GCS is a component of the HUT Module responsible for configuring all modules, supervising communication, and managing data frame routing. This component consists of the following software submodules: GUI Parser, File Parser, Data Transmitter, Data Receiver, and Control Message Parser.

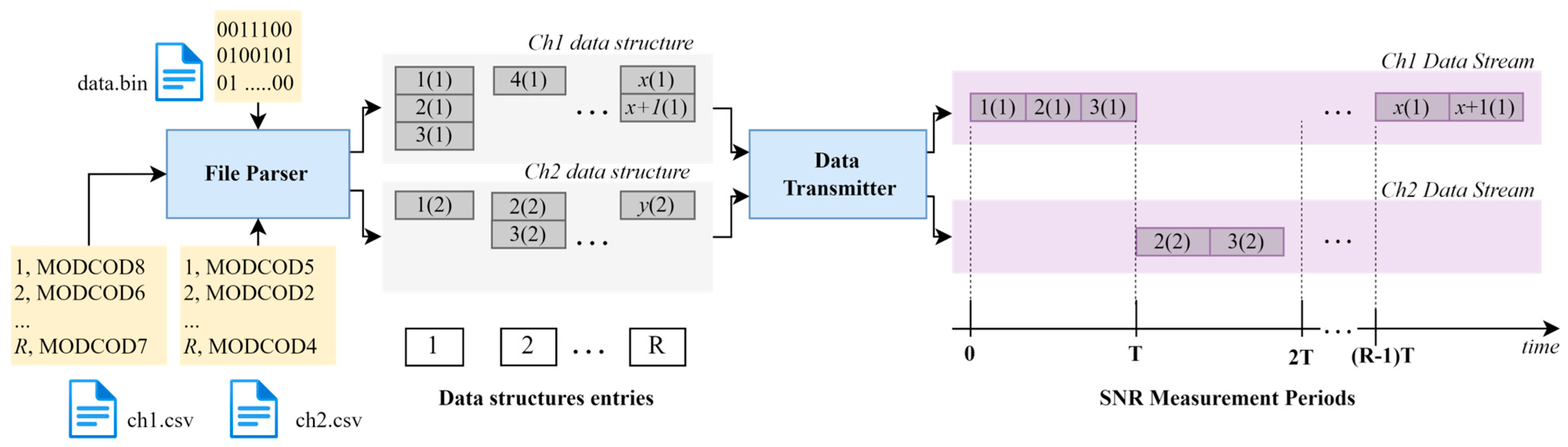

The GUI Parser is responsible for generating GUI control elements and processing user-defined configuration parameters through the corresponding control inputs. Once a configuration is received, it is forwarded to the Control Message Parser submodule, which generates control message commands from the configuration parameters. Besides configuring the simulation framework, the GUI Parser also loads files containing user data. Once loaded, a file is sent to the File Parser submodule. Here, user data are divided into code words whose number depends on the used MODCOD, as shown in Figure 4. These data represent the payload that is transferred inside the Data Message, as shown in Figure 2.

The MODCOD used for the current data transmission is provided by the AI module. Since different channels have different characteristics and instantaneous conditions, a different set of MODCOD parameters is provided for each channel. The MODCOD file for each channel contains R rows, representing R timeslots. The File Parser submodule generates a data structure for each channel that contains information for each of the R timeslots. Each element of this structure contains N substructures, representing the Data Messages that should be sent during a single timeslot if the corresponding channel is selected. The value of N, as well as the Data Message transmission period within a single timeslot, is determined based on the MODCOD and the preconfigured bandwidth for each channel.
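One possible way to derive N and the transmission period is sketched below. The text does not give the exact formula, so this sketch assumes that the bitrate equals bandwidth times the spectral efficiency of the MODCOD and that every Data Message carries a fixed number of payload bits; all names and parameters are hypothetical.

```python
import math


def messages_per_timeslot(bandwidth_hz, spectral_efficiency, timeslot_s, payload_bits):
    """Return (N, period_s): how many Data Messages fit in one timeslot and
    how far apart they are sent. Assumes bitrate = bandwidth * efficiency."""
    bits_per_slot = bandwidth_hz * spectral_efficiency * timeslot_s
    n = math.floor(bits_per_slot / payload_bits)
    period_s = timeslot_s / n if n > 0 else None  # nothing is sent when N is 0
    return n, period_s


def build_channel_schedule(modcod_rows, efficiencies, bandwidth_hz, timeslot_s, payload_bits):
    """Build one entry per timeslot (R rows) for a single channel.

    modcod_rows: MODCOD index for each timeslot, as read from a MODCOD file.
    efficiencies: hypothetical map from MODCOD index to bits/s/Hz.
    """
    schedule = []
    for slot_id, modcod in enumerate(modcod_rows):
        n, period_s = messages_per_timeslot(
            bandwidth_hz, efficiencies[modcod], timeslot_s, payload_bits
        )
        schedule.append(
            {"slot_id": slot_id, "modcod": modcod, "n_messages": n, "period_s": period_s}
        )
    return schedule
```

Under these assumptions, a MODCOD whose efficiency is too low to fit even one message in a timeslot yields N = 0, meaning that no data are sent in that timeslot.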

Once these structures are generated, they are forwarded to the Data Transmitter submodule. A key function of this submodule is to determine which channel should be active in the given timeslot. The selection algorithm is triggered periodically at the end of each timeslot, and the active channel is chosen with a goal of maximizing the number of frames that can be transmitted in the given timeslot. In addition to selecting the active channel, the Data Transmitter submodule formats data from the channel data structure and transmits them over the selected active channel using the UDP transport protocol. These data are sent to the T/R module.
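The selection rule described above can be sketched as picking, for each timeslot, the channel with the larger number of transmittable frames. The channel names and the tie-breaking behaviour (the first listed channel wins) are assumptions of this sketch, not details given in the text.

```python
def select_active_channel(frames_per_channel):
    """Return the name of the channel with the most transmittable frames.

    frames_per_channel: dict mapping a channel name to its frame count for
    the timeslot. On a tie, the first channel in the dict is returned.
    """
    return max(frames_per_channel, key=frames_per_channel.get)


def plan_session(satellite_frames, terrestrial_frames):
    """Choose the active channel for every timeslot of a session."""
    return [
        select_active_channel({"satellite": s, "terrestrial": t})
        for s, t in zip(satellite_frames, terrestrial_frames)
    ]
```

For example, `plan_session([3, 0, 5], [2, 4, 5])` selects the satellite channel in the first timeslot, the terrestrial channel in the second, and, because of the assumed tie-breaking, the satellite channel in the third.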

Figure 4 illustrates the file parsing, data splitting into packets and data transmission process on the HUT side. In the demonstrated example, the duration of the timeslot is set to T, and the entire simulation contains R timeslots.

The Data Receiver submodule receives Data Messages from the T/R module, extracts all relevant information and updates the associated channel data structure. An update of the channel data structure triggers the Logger submodule that records all Data Receiver activities into a log file. This log file is later analyzed to extract the relevant information.
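Since every Data Message carries a unique Data ID, lost messages can be found during log analysis by comparing sent and received IDs. The following is a minimal sketch of that idea; the function names and the assumption that the IDs are available as plain sequences are illustrative and not taken from the framework.

```python
def find_lost_ids(sent_ids, received_ids):
    """Return the sorted Data IDs that were sent but never received."""
    return sorted(set(sent_ids) - set(received_ids))


def rejection_rate_percent(sent_ids, received_ids):
    """Return the share of sent Data Messages that did not come back, in %."""
    sent = set(sent_ids)
    if not sent:
        return 0.0
    lost = sent - set(received_ids)
    return 100.0 * len(lost) / len(sent)
```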

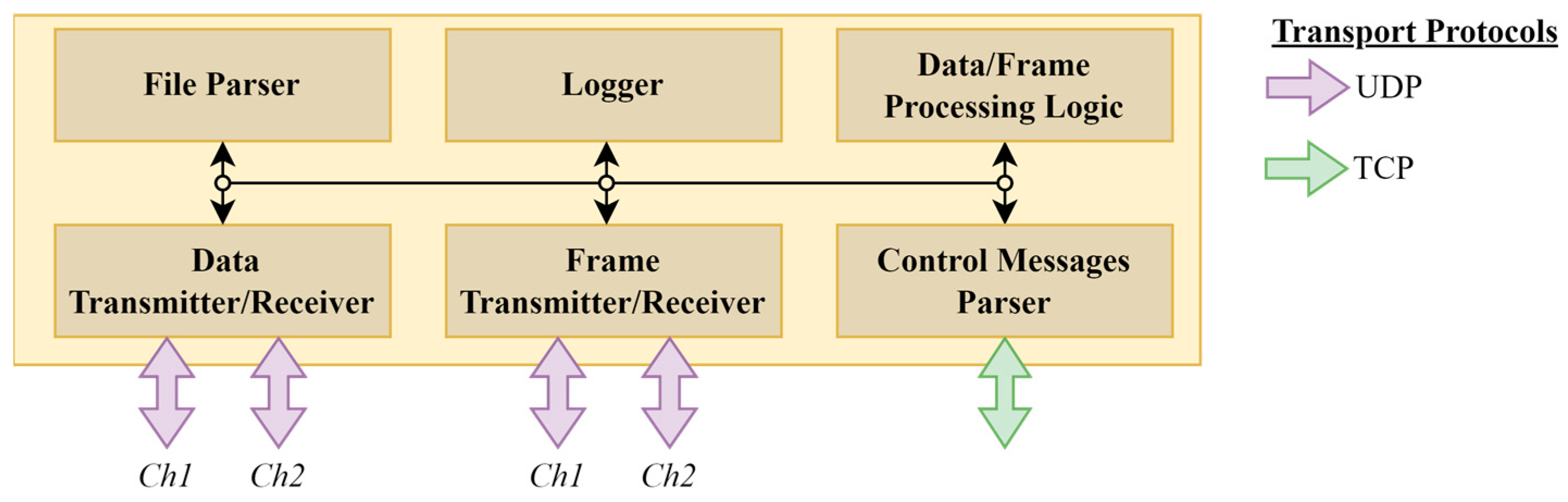

3.2.2. Transmitter/Receiver (T/R) and Channel

The Transmitter/Receiver (T/R) and Channel modules are responsible for transmitting, receiving, processing, and routing data and frame messages. Both modules are instances of the Device Software Component (Device), which is illustrated in Figure 5 and was introduced in [45]. The Device Software Component is written in C and is designed as a multi-threaded application for the Linux operating system.

The Device Software Component is composed of several software submodules: File Parser, Logger, Data/Frame Processing Logic, Data Transmitter/Receiver, Frame Transmitter/Receiver and Control Messages Parser. It can be configured to operate as the Transmitter/Receiver module or the Channel module. The configuration process is performed from the HUT module via the control interface during the simulation initialization phase.

Figure 6 illustrates the configuration of the T/R and Channel module, as well as the connection between them.

When the Device Software Component is configured to operate in Transmitter/Receiver (T/R) mode, all submodules shown in Figure 5 are utilized, except for the File Parser submodule. The primary function of the T/R module is to process Data Messages received from the HUT module on the uplink as well as Frame Messages received from the Channel module on the downlink over the corresponding channel. On the uplink, it encodes Data Messages using the appropriate channel modems and encapsulates the payload and header of these messages into a terrestrial or satellite physical layer format, thus producing Frame Messages that are sent to the Channel module. On the downlink, it decodes Frame Messages received from the Channel module and extracts Data Messages that are sent back to the HUT module. When the simulation is started, the Logger submodule sends corresponding messages over the UART interface, which can be used for debugging.

When the Device Software Component is configured to operate in Channel mode, all submodules shown in Figure 5 are utilized, except for the Data T/R submodule. The primary function of the Channel module is to process Frame Messages received over the uplink from the T/R module by applying the appropriate channel model, thus simulating real-life scenarios. One of the key functions of channel processing is to determine whether a Frame Message should pass or be dropped. If the MODCOD used in the current Frame Message is not robust enough for the channel SNR, the frame is dropped, since it cannot be decoded successfully at the receiver’s side. Otherwise, the Frame Message passes to the downlink and will almost certainly be decoded successfully in the T/R module. The dropping mechanism is implemented by comparing the MODCOD used for encoding the current Frame Message with the optimal MODCOD for the current channel conditions. The former is a prediction provided by the HUT module, while the latter is a value produced by the channel model. If the MODCOD used for encoding has a larger SNR threshold value than the optimal MODCOD, the Frame Message is dropped; otherwise, it passes to the downlink.
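The pass/drop rule can be written as a short comparison of SNR thresholds. The threshold values below are placeholders indexed by a hypothetical MODCOD index, and the function name is illustrative; only the comparison rule itself follows the description above.

```python
# Placeholder SNR thresholds in dB per hypothetical MODCOD index.
# These are NOT values from the paper.
SNR_THRESHOLDS_DB = [-2.0, 1.0, 4.0, 7.0, 10.0]


def frame_passes(used_modcod, optimal_modcod, thresholds=SNR_THRESHOLDS_DB):
    """Return True if the frame passes to the downlink, False if it is dropped.

    used_modcod: MODCOD predicted by the HUT and used for encoding.
    optimal_modcod: MODCOD produced by the channel model for this timeslot.
    A frame is dropped when its MODCOD needs a higher SNR than the optimal one.
    """
    return thresholds[used_modcod] <= thresholds[optimal_modcod]
```

An overly optimistic prediction (a used MODCOD above the optimal one) therefore costs a dropped frame, whereas an overly cautious one only costs throughput.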

5. Results and Discussion

The results of the simulation are presented in Table 2, including the test cases, the used algorithm, expected SNRs, the hybrid average bitrate (obtained when switching from one channel to another within the same test case), the percentage of the channel 1 usage (satellite) and the average rejection rate of the sent packets.

The presented results have several important implications. Firstly, the average bitrate for hybrid channel communication increases with the average SNR, as expected. Secondly, the average rejection rate is 0 for both the LSTM and outdated information approaches in the cases where the expected SNR is lower than or equal to 6 dB. This is an interesting phenomenon, as there is a nonzero rejection percentage for higher SNRs, even though higher SNR values are considered a more favorable communication condition. This is the result of two different factors. Firstly, for the lower SNR values, the lower MODCODs are more frequent, and according to Table 1, for the considered simulation parameters, no packets are sent for the lowest four considered MODCODs. If the decision is not to send data, then there is no data rejection. The second factor is the margin used to keep the transmission error rates below 1% for both the outdated information and LSTM approaches. These margins lower the selected MODCODs and therefore often make them fall in the range of the low MODCOD values for which no data are sent. This lowers the rejection rate but also lowers the communication speed.

In contrast, for the higher expected SNRs, the average speed increases, and the rejection rate rises above 0 but still stays under 1%. This is to be expected, as higher MODCODs are being used; the 1% transmission error rate limit used for margin determination is reflected in the results by the rejection rate staying under 1%.

It is also important to note that the performance of LSTM is consistently better than the outdated information approach, which can justify the usage of LSTM, but the improvements are not equal for all test cases. For the test case with the expected SNR of 15 dB, there is no clear improvement in the second decimal of the average speed. There is, however, a decrease in the rejection rate from 0.6 to 0.47 percent. It can also be seen that for this case, the satellite channel is used very little (under 1% of the time), and hence, the terrestrial channel has the conditions that suit a very high MODCOD without the need for complex decision making. The conclusion is that the main contribution of LSTM implementation is around 9 or 12 dB, as there is the most usage of the satellite communication channel, and the widest range of MODCODs is considered for communication.

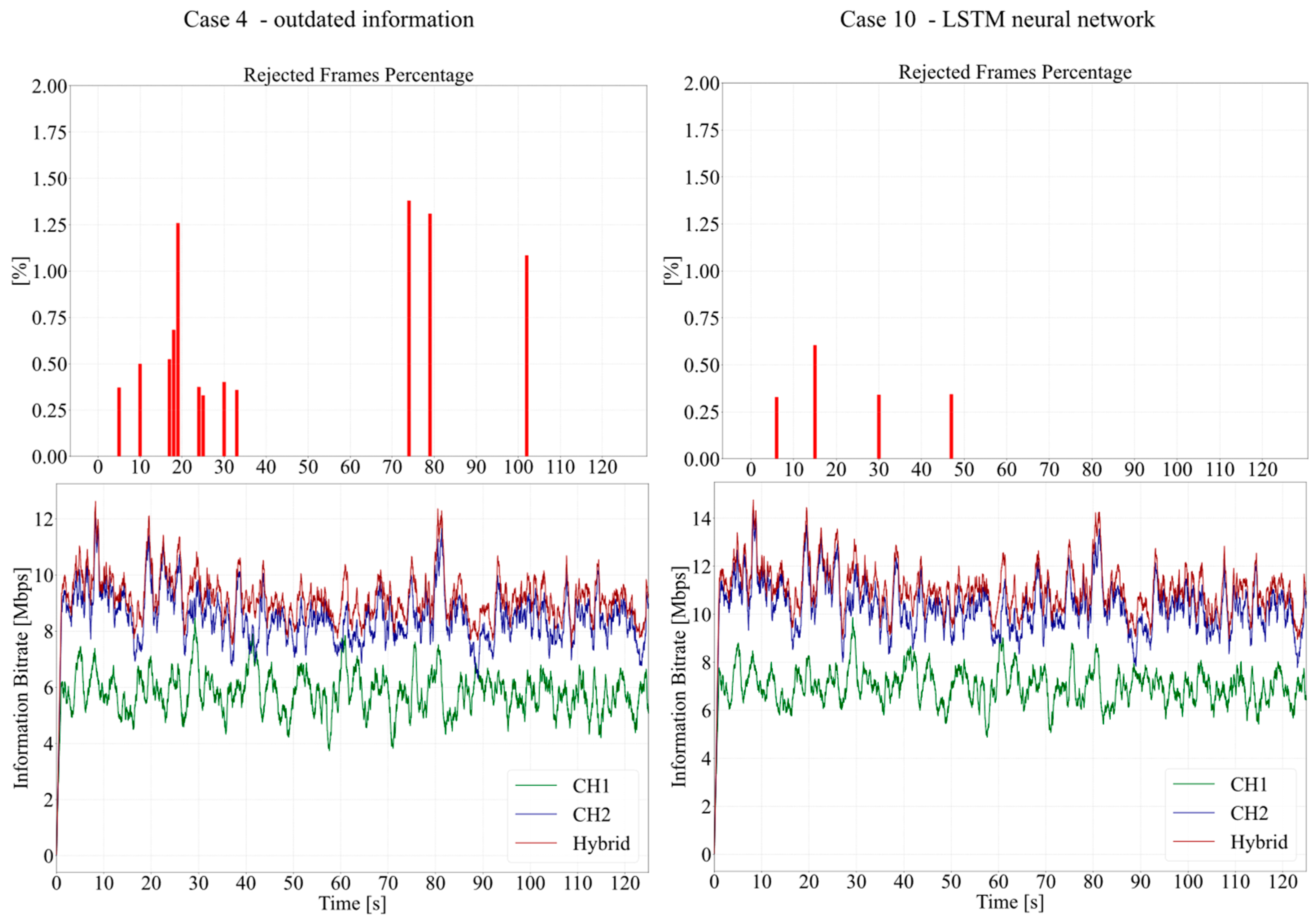

To see the details of the simulation process, test cases 4 and 10 are examined in more depth. Figure 8 depicts the rejected frame percentage and the information bitrate over time.

For both types of graphs displayed in Figure 8, the averages are computed over time intervals of 1 s to make the data more visually interpretable. It can be seen that the information bitrate over time has the same shape for test cases 4 and 10. The terrestrial channel is superior to the satellite channel, and hybrid channel communication achieves a higher bitrate than the terrestrial channel alone. These shapes show that the trends of MODCOD selection are not deformed or changed by a more complex decision-making algorithm such as LSTM but rather simply shift to higher values. For this reason, the axis ranges of the information bitrate plots are intentionally left different, so that it can be seen that the results of the two algorithms are similar in shape but that the LSTM provides an increase in the mean value of about 2 Mbps. The decrease in the rejected frame percentage is also notable: although it is low in both cases, it decreases significantly for the LSTM implementation.
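The 1 s averaging used for the plots can be sketched as a simple binning of timestamped samples. The input format (pairs of a timestamp in seconds and a value) and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def average_per_interval(samples, interval_s=1.0):
    """Average (timestamp_s, value) samples over consecutive intervals.

    Returns a dict mapping the start time of each non-empty interval to the
    mean of the values that fall into it.
    """
    sums = defaultdict(lambda: [0.0, 0])  # interval index -> [sum, count]
    for timestamp_s, value in samples:
        index = int(timestamp_s // interval_s)
        sums[index][0] += value
        sums[index][1] += 1
    return {
        index * interval_s: total / count
        for index, (total, count) in sorted(sums.items())
    }
```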

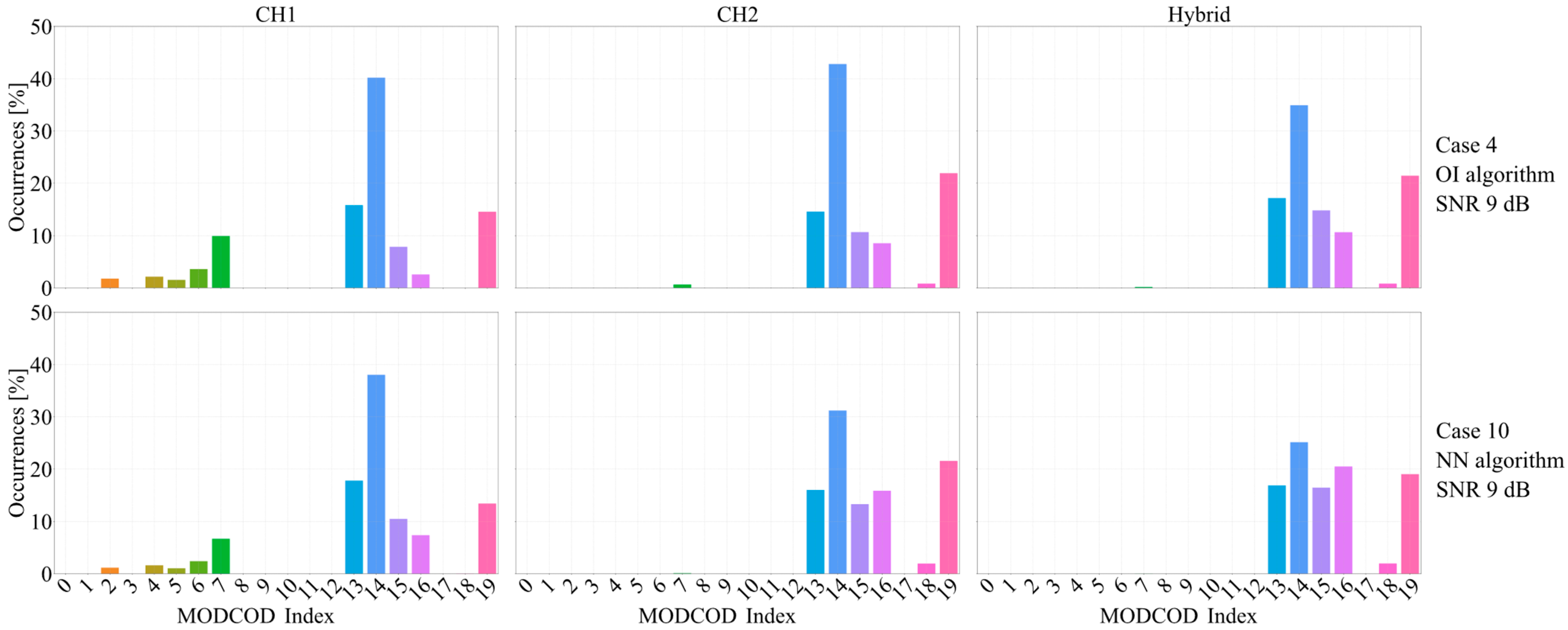

One more interesting characteristic that can be observed for test cases 4 and 10 is the distribution of the used MODCODs. The occurrences of different MODCODs for channel 1 (CH1), channel 2 (CH2) and the hybrid communication are given for test case 4 of the outdated information (OI) and test case 10 for the LSTM neural network (NN) in Figure 9.

A trend similar to that in Figure 8 can be observed: the overall set of used MODCODs is changed by LSTM, but not drastically in comparison to the outdated information approach. In general, the same MODCODs can be observed in both cases, most likely influenced by the shape of the simulated SNR values for both channels. The distribution, however, is shifted, with LSTM ensuring that higher MODCODs are used more frequently. For both individual channels, the main increase between the algorithms can be seen in the frequency of the MODCODs indexed at 15 and 16 (16APSK 26/45, 16APSK 3/5), while the reduction in occurrences of lower MODCODs is most prominent for the MODCOD with index 14 (16APSK 8/15). For the hybrid communication, no lower MODCODs are prominent in the distribution, and the main shift is a reduction in 16APSK 8/15 occurrences in favor of 16APSK 26/45 and 16APSK 3/5. This shows that the improvements LSTM can make are not distributed evenly across all MODCODs but are concentrated in a given range, which is in line with the previous conclusion that, for extremely high SNR values and hence high MODCODs, the LSTM neural network does not offer much in comparison to outdated information.

6. Conclusions

In this paper, we proposed a simulation framework for the performance evaluation of hybrid satellite–terrestrial network access. The framework contains an AI part that enables the intelligent selection of the better network access. The framework was successfully demonstrated on one use case scenario: a UDP streaming session, selected to clearly demonstrate the benefits of the AI aid. This demonstration shows the potential of our proposed framework. By simply changing the experimental setup, one can quickly gain insight into the relation between network accesses and into which access should be used more in a hybrid session. In our demonstration scenario, we changed the expected average SNR value. By simulating other conditions, users can explore a multitude of scenarios and gain a very good understanding of multipath, i.e., hybrid, access performance under various conditions.

The MP-TCP and similar multipath solutions will be tested and presented in our future work. In these scenarios, multiple factors impact the overall performance because of additional mechanisms such as flow and congestion control algorithms. For example, link delays need to be included, and by simulating different relations between the link delays of the two accesses, one will be able to analyze the performance for various scenarios and also determine which flow/congestion control algorithms are the most suitable for hybrid satellite–terrestrial access. Note that this flexibility in testing various scenarios underlines the economic benefit of our proposed simulator, and of any other simulator as well: by using the same environment with different module versions or parameter setups, a vast set of scenarios can be tested. Our proposed framework includes hardware components that increase the cost of the simulation environment compared to software-only simulators, but they add to its versatility by allowing hardware accelerators to be added for computationally intensive functions and by allowing precise link delays to be set.

Another important factor in practice is power consumption. Currently, the proposed simulation framework does not estimate power consumption. However, it should be noted that the proposed AI model is not complex, and thus, it can be used in energy-constrained devices like smartphones. Considering that the input to the neural network is a signal containing 10 values, the neural network architectures used in the authors’ previous (and current) work are not of high complexity. In the authors’ previous work [48], the best performing model was an LSTM with 17.8 thousand parameters, with the second being an LSTM with 4.8 thousand parameters, both with a single LSTM layer and a single hidden fully connected layer. These architectures do not require substantial amounts of resources to run, even on mobile devices, and can be easily implemented with a framework designed for mobile platform use, such as LiteRT (formerly TensorFlow Lite).
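To give a feel for how small such models are, the sketch below counts the trainable parameters of a network with one LSTM layer, one hidden fully connected layer and an output layer. The layer sizes are hypothetical and are not the authors' configuration. It also assumes one feature per time step, a scalar output, and the common formulation with one bias vector per LSTM gate (some libraries keep two bias vectors per gate, which gives a slightly larger count).

```python
def lstm_params(input_dim, hidden_units):
    """Parameters of one LSTM layer with a single bias vector per gate."""
    # Four gates, each with input weights, recurrent weights and a bias.
    return 4 * (hidden_units * (input_dim + hidden_units) + hidden_units)


def dense_params(in_units, out_units):
    """Parameters of a fully connected layer: weight matrix plus bias."""
    return in_units * out_units + out_units


def model_params(input_dim, lstm_units, dense_units, output_units):
    """Total for LSTM -> hidden dense -> output dense."""
    return (
        lstm_params(input_dim, lstm_units)
        + dense_params(lstm_units, dense_units)
        + dense_params(dense_units, output_units)
    )


# Hypothetical layer sizes, chosen only to show the order of magnitude.
for lstm_units in (32, 64):
    total = model_params(input_dim=1, lstm_units=lstm_units,
                         dense_units=16, output_units=1)
    print(lstm_units, total)
```

The sequence length (10 values here) does not affect the count, since LSTM weights are shared across time steps. At 4 bytes per 32-bit weight, a model of roughly 18 thousand parameters occupies on the order of 70 KB.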

One very important property of the proposed simulation framework is its distributed nature, which provides great flexibility and the ability to adapt to different scenarios. The distributed approach makes it easy to replace existing modules with other versions, which we will exploit in our future work. One direction of our future work will focus on extending the AI components to use parameters other than SNR measurements, such as latency or user preferences, in network access selection. For example, a user preference parameter could select a single network access as long as it satisfies the desired QoS. Link delays are also important in testing the performance of the MP-TCP and other similar multipath transport protocols. In addition, new control mechanisms in such protocols can be easily tested, evaluated and compared to existing ones. In this way, it is possible to take into account the specifics of hybrid satellite–terrestrial (wireless) network access and to propose improvements to the MP-TCP and similar protocols. The proposed framework is intended for hybrid network access testing, and it therefore avoids complex network topologies and the associated scalability issues. However, we believe that it can be extended by interconnecting it with network simulators for wired networks, thus extending the multipath approach beyond wireless access networks. This will also be one of our future work directions.