Abstract

The security of ultra-high-voltage (UHV) overhead transmission lines is frequently threatened by diverse external-force damages. Because real-world transmission line scenarios are complex and external-force-damage objects vary in scale, deep learning-based object detection methods must capture multi-scale information. However, the downsampling and upsampling operations used to learn multi-scale features work locally, which causes a loss of details and boundaries and makes it difficult to accurately locate external-force-damage objects. To address this issue, this paper proposes a content-aware method based on the generalized efficient layer aggregation network (GELAN) framework. A newly designed content-aware downsampling module (CADM) and content-aware upsampling module (CAUM) optimize these operations with global receptive information. CADM and CAUM are embedded into the GELAN detection framework, providing a new content-aware method with an improved cost-accuracy trade-off. To validate the method, a large-scale dataset of external-force damages on transmission lines with complex backgrounds and diverse lighting was constructed. The experimental results demonstrate the proposed method’s superior performance, achieving 96.50% mean average precision (mAP) on the transmission line dataset and 91.20% mAP on the pattern analysis, statistical modeling and computational learning visual object classes (PASCAL VOC) dataset.

1. Introduction

Ultra-high-voltage (UHV) overhead transmission lines are a critical component of the national power grid and global energy interconnection [1]. They often face external-force damages such as construction activities and wildfires [2,3], especially in remote areas. These external-force damages pose significant risks to personnel safety and power grid operation. With the rapid development of computer vision technology [4,5,6], deep learning-based object detection techniques provide a new methodology for detecting external-force-damage objects in UHV overhead transmission line scenes. However, real transmission line scenarios involve intricate backgrounds, varying lighting conditions, and diverse target poses, which pose great challenges to external-force-damage object detection [7,8].

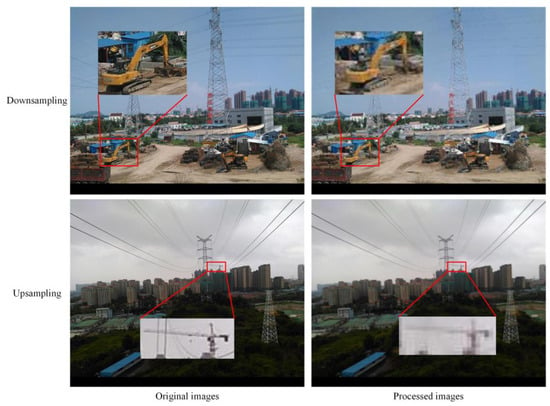

The deep learning-based object detection methods [9] for detecting external-force damages can be divided into two categories: two-stage detectors and one-stage detectors. Recent studies [10,11,12] have used two-stage detectors, while others [13,14] have chosen one-stage detectors for this task. Many of these methods utilize feature pyramid networks (FPN) [15] and path aggregation networks (PANet) [16] to capture and integrate multi-scale feature information, thereby enhancing the accuracy of detecting external-force-damage objects, particularly in scenes with objects of different sizes. To enhance the propagation of inter-level feature information, FPN and PANet commonly utilize pooling (or strided convolution [17]) and interpolation algorithms, respectively, for downsampling and upsampling the feature map to construct the feature pyramid. However, these downsampling methods operate within the local window of the predefined kernel, leading to the loss of detailed information in the feature map and limiting the network’s visual understanding ability [18]. Meanwhile, interpolation algorithms utilize spatial distances between pixels to guide the upsampling process for enlarging the feature map. However, this may result in the blurring of boundaries around objects, which poses challenges in determining the locations of external-force-damage objects in the scenes, as shown in Figure 1.

Figure 1.

Visualizing the limitations of downsampling and upsampling in image processing.
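
The effect described above can be reproduced on a toy example. The following is a minimal sketch, assuming a single-channel 4 × 4 feature map, 2 × 2 max pooling, and nearest-neighbor upsampling; the helper names `max_pool_2x2` and `nearest_upsample_2x` are hypothetical and are not taken from FPN, PANet, or GELAN code.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on a 2-D array with even sides."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def nearest_upsample_2x(x):
    """Nearest-neighbor upsampling by a factor of 2 in both directions."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

# Toy feature map: a one-pixel-wide vertical edge located in column 1.
feature = np.array([
    [0, 9, 0, 0],
    [0, 9, 0, 0],
    [0, 9, 0, 0],
    [0, 9, 0, 0],
])

pooled = max_pool_2x2(feature)          # each 2x2 window keeps only its maximum
restored = nearest_upsample_2x(pooled)  # each value is copied into a 2x2 block

# The edge is now two pixels wide: its exact column can no longer be recovered.
changed_pixels = int((restored != feature).sum())
print(pooled)
print(restored)
print("pixels that differ from the original:", changed_pixels)
```

Both operations only look at a fixed local window, so the position of the edge inside each window is discarded during pooling and cannot be restored by interpolation.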

To address these issues, researchers have proposed various improvement methods. For downsampling, mixed pooling [19] combines max pooling and average pooling and performs better than either method alone. However, mixed pooling still uses non-learnable sampling kernels to downsample features within local regions. In recent years, some advanced downsampling methods have introduced learnable weights to achieve adaptive downsampling. For instance, detail preserving pooling (DPP) [20], based on the idea of inverse bilateral filters, focuses on important structural details. Local importance-based pooling (LIP) [21] uses a weighted average pooling method to aggregate features for downsampling. Nevertheless, these methods still retain image details only within small neighborhoods, which may enhance local noise.

As for upsampling, SegNet [22] employs max pooling indices in semantic segmentation to preserve edge information, but this may introduce noise and disrupt the semantic consistency of smooth areas. Pixel shuffle [23] rearranges pixel values from the channel (depth) dimension into the spatial width and height. Unlike these methods, some upsamplers introduce learnable parameters during the upsampling process to enlarge the feature map. For example, deconvolution [24] maps a single input to multiple outputs to upsample features. CARAFE [25,26] reassembles features within predefined regions through weighted combinations. Overall, these learnable upsampling methods help restore the boundaries of objects, but at the cost of learning many parameters.

The above methods primarily rely on local neighborhood context information or require expensive computations to perform adaptive sampling operations. These approaches restrict the coverage range of the sampler, thereby limiting its expressive power and performance. In transmission line scenarios, the complex and variable environment requires global context to accurately detect potential external-force-damage objects. However, learning global context features involves handling more data and performing complex computations, which increases the system’s cost and complexity. Therefore, it is necessary to find more effective methods to process and utilize global contextual information while maintaining performance.

Humans tend to focus their attention on regions of greater interest when dealing with complex tasks, in order to make full use of limited processing resources [27,28]. Imagine a person standing in a garden. Initially, they absorb the overall layout and the distribution of flowers. Then, their attention gradually shifts to the colors, shapes, and textures of the flowers they wish to observe. This is an analytical process that moves from the global to the detailed, reflecting innate human visual perception. However, many deep-learning architectures cannot fully mimic this human ability.

Therefore, we are inspired to propose a content-aware method for detecting external-force-damage objects on transmission lines based on the generalized efficient layer aggregation network (GELAN) [29] object detection model. This method captures global contextual information through the interaction between channel and spatial features, utilizing this information as a content-aware weight to compensate for the limitations of local feature information. By leveraging both global and contextual information, the proposed approach enhances focus on object details while improving the performance of the GELAN model with minimal computational resources.

The main contributions of this paper are summarized as follows:

(1) We designed a novel content-aware downsampling module (CADM) for model downsampling. By learning the global information of the feature map, content-aware weights were generated to supplement the fine-grained features of downsampling and reduce the loss of details. This optimizes the feature extraction capability of the model on the downsampled feature map.

(2) We designed a new content-aware upsampling module (CAUM) for model upsampling, which also utilizes important information from deep feature maps to generate content-aware weights, dynamically enhancing the upsampled feature map obtained through an interpolation algorithm. This helps highlight the hierarchical relationships of sampling points, including object boundaries.

(3) We constructed a large-scale dataset of external-force damages to UHV overhead transmission lines using the data augmentation method. By integrating CADM and CAUM modules into the GELAN detection framework, a mean average precision (mAP) of 96.50% was achieved for this dataset. This provides a new solution for the safe operation of UHV overhead transmission lines.

(4) In addition, the proposed method was further validated on the public dataset PASCAL VOC. Compared to existing popular object detectors, the highest accuracy of 91.20% mAP was achieved, which proves the generality and effectiveness of our proposed method.

2. Related Work

2.1. Object Detection

Object detection techniques are mainly divided into two types: two-stage methods that use region proposal networks (RPNs) and one-stage methods that do not.

Two-stage object detection methods are renowned for their high detection accuracy. The introduction of the R-CNN algorithm [30] marks the first implementation of deep learning in object detection. Fast R-CNN [31] introduces the region of interest (RoI) pooling layer, significantly enhancing detection speed, while Faster R-CNN [32] further optimizes the generation process of candidate boxes through RPNs, achieving faster detection speed. R-FCN [33] accelerates the process by adopting a fully convolutional network, and Cascade R-CNN [34] increases object confidence and accuracy by utilizing a series of detectors with incrementally higher intersection-over-union (IoU) thresholds.
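
Since IoU thresholds decide which proposals count as positives, a small worked example may help. The sketch below assumes boxes in `(x1, y1, x2, y2)` format; the function name `iou`, the two boxes, and the threshold values (0.5, 0.6, 0.7) are illustrative choices, not settings reported in this paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

ground_truth = (0, 0, 10, 10)
proposal = (2, 0, 12, 10)          # same size, shifted 2 px to the right

overlap = iou(ground_truth, proposal)   # 80 / 120

# Illustrative cascade of increasingly strict thresholds (assumed values).
thresholds = (0.5, 0.6, 0.7)
passed = [t for t in thresholds if overlap >= t]
print(f"IoU = {overlap:.3f}, counted as positive at thresholds {passed}")
```

A proposal that is accepted by an early, lenient stage can therefore be rejected by a later, stricter one, which is why later stages are trained on better-localized boxes.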

One-stage object detection methods are popular for their high detection speed as they perform dense predictions directly across the entire image, eliminating the need for candidate box generation. YOLOv1 [35] dramatically increases speed by completing detection and classification in a single forward pass. SSD [36] improves the detection capabilities for smaller objects by conducting multi-scale detection. YOLOv2 [37], YOLOv3 [38], YOLOv4 [39], and YOLOv5 [40] introduce anchor box mechanisms, deeper feature extraction networks, CSPDarknet53 [41], and the PANet [16] structure, further enhancing performance. YOLOv7 [42] further employs efficient layer aggregation networks (ELANs) [43] as its main computational unit, achieving higher detection precision and speed. Recently, a one-stage object detection model, GELAN [29], which combines CSPNet [41] and ELAN [43], has been proposed and has outperformed most object detection models. In the development process of real-time, end-to-end object detectors such as RT-DETR [44], YOLOv10 [45], and YOLO11 [46], researchers are committed to eliminating non-maximum suppression (NMS) in post-processing while thoroughly examining and improving the network architecture. These advancements have significantly reduced computational overhead and improved model performance.

As research deepens, one-stage object detection algorithms have made significant progress in terms of real-time capabilities and accuracy, especially in engineering applications [47]. While GELAN [29] is currently considered one of the most efficient one-stage object detectors, there is still ample space for enhancing both feature downsampling and upsampling processes.

2.2. Detection of External-Force Damages on Transmission Lines

High-voltage transmission lines are critical for long-distance power transmission. As the economy grows, remote transmission corridors are now targeted for infrastructure, industry, and real estate. External factors like construction and fire damages now pose significant threats to grid stability. Deep learning-based object detection provides an efficient solution for automatically identifying the external-force damages affecting transmission lines.

Some researchers have employed two-stage detectors to identify external-force-damage objects on transmission lines. Leng et al. [10] developed an anti-damage system for overhead lines, proposing an algorithm based on an improved R-FCN [33]. Qu et al. [11] enhanced Faster R-CNN [32] with E-OHEM [48] to reduce the false detection rates of external-damage risks. Liu et al. [12] created a deep learning-based method for detecting damages, demonstrating high accuracy and robustness under varied lighting and weather conditions. As one-stage detectors gained popularity, Liu et al. [13] introduced a YOLOv3-based model [38] for detecting engineering vehicles near transmission lines, achieving a nearly 7% improvement in the mean average precision (mAP). Siddiqui et al. [49] discussed the use of drones and deep learning for real-time transmission line monitoring, improving defect detection and reducing inspection time. Li et al. [14] addressed detection speed and power consumption issues in embedded devices, introducing a real-time hazard detection method using YOLOv5s [40], which efficiently detects cranes, machinery, smoke, flames, and foreign objects. Zou et al. [50] improved the YOLOX-s [51] model for better hazard detection in complex, multi-object scenarios, and later [52] optimized YOLOv8s [53] to enhance the safety monitoring of transmission lines. Recently, Li et al. [54] improved YOLOv7-tiny [42] to enhance small object detection while reducing computational and storage requirements, making it ideal for low-power devices.

Both two-stage and one-stage detectors have demonstrated effectiveness in detecting external-force-damage objects on transmission lines. However, challenges remain in accurately detecting multi-scale objects in complex environments. This paper aims to address these issues by improving upsampling and downsampling techniques, enhancing detection accuracy and robustness in multi-object scenarios.

2.3. Feature Downsampling

Feature downsampling is a fundamental operation in modern convolutional neural networks (CNNs), especially in feature pyramid networks [15,16], which often use spatial downsampling layers such as pooling to increase the receptive field and conserve memory. However, conventional methods can lose important details that are crucial for accurate object localization and detection, especially for small objects.

Some methods like Mixed Pooling [19], SoftPool [55], and Spatial Transformer Networks [56] attempt to preserve more details by combining pooling techniques or learning spatial transformations. Other approaches, such as Fractional Pooling [57], S3Pool [58], and Stochastic Pooling [59], use random sampling within the pooling region to prevent overfitting and enhance generalization. Despite these advancements, most existing downsamplers are based on manually designed non-linear mappings and fixed, non-learnable kernels, limiting their potential to preserve details. Recently, the introduction of learnable downsampling techniques has begun to overcome this limitation. DPP [20] uses learnable weights to emphasize spatial variations, while LIP [21] and spatial attention pooling [60] refine local feature representations by learning important feature weights. Content-adaptive downsampling [61] provides an additional mask to adaptively specify which patches participate in downsampling while preserving the input resolution. Although this method utilizes the concept of resource-adaptive allocation, the given downsampling mask in the local region limits its application in constructing the feature pyramids.

The above-mentioned downsamplers demonstrate the potential to minimize the loss of detailed information during the local downsampling process, albeit without considering global contextual information. This paper aims to investigate how advanced downsampling methods, incorporating global receptive information, can improve model performance by achieving a better balance between cost and accuracy.

2.4. Feature Upsampling

Feature upsampling is essential in encoder–decoder architectures for tasks such as semantic segmentation and object detection. It restores spatial resolution to low-resolution decoder feature maps and merges features from different network levels, where lower-level maps provide details and higher-level maps offer semantic information.

Traditional upsampling techniques are simple but often pay too little attention to semantic information, leading to information loss. Methods like max-unpooling in SegNet [22] can reduce this loss but may introduce noise and disrupt semantic consistency. To improve upsampling, learnable parameter techniques have been introduced, including deconvolution [24] and Pixel Shuffle [23], which provide more flexibility and effectiveness. Approaches like Guided Upsampling [62] and Pixel Transposed Convolutional Networks [63] also address issues such as the checkerboard effect, but they can reduce computational efficiency.

The latest dynamic upsampling algorithms, such as CARAFE [25,26], FADE [64], SAPA [65], and DySample [66], achieve more refined upsampling by generating dynamic kernels or combining multi-resolution features. While these methods enhance adaptivity, they also increase model complexity and computational cost, especially for high-resolution inputs.

This paper aims to develop a simple and efficient upsampler that enhances performance at a low cost and in a versatile manner, while retaining the benefits of dynamic upsampling.

3. Proposed Method

To achieve a good trade-off between cost and accuracy in detecting external-force-damage objects on transmission lines, this paper proposes the novel CADM and CAUM as downsampling and upsampling operations, respectively, and integrates them into the GELAN framework. The following subsections describe these improvements in detail.

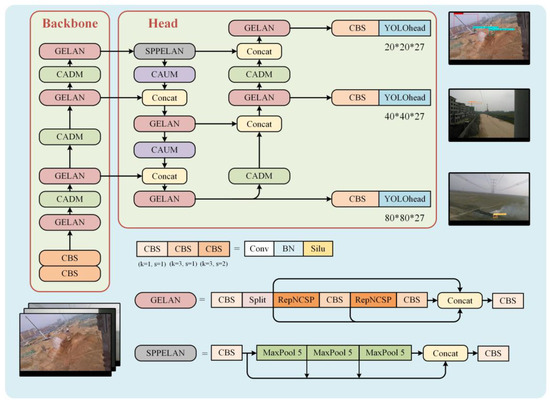

3.1. Overall Network Structure

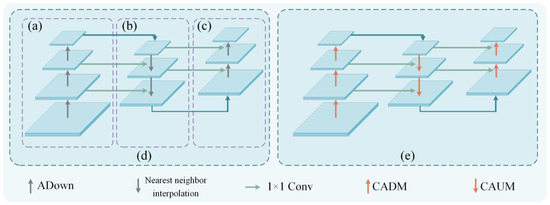

The GELAN framework is an end-to-end object detection system, divided into two main parts: backbone and head, as shown in Figure 2d.

Figure 2.

Overall structure of GELAN before and after improvement. (a) Backbone structure; (b) top–down path augmentation; (a,b) FPN; (c) bottom–up path augmentation of PANet; (b,c) head structure; (d) original GELAN structure; (e) the structure of our methods.

The backbone, as the feature extraction network, is responsible for extracting features from input images. Within the backbone, three feature layers are obtained for constructing the head, as shown in Figure 2a.

The head is responsible for merging low-level spatial information and high-level semantic information to detect the location and category of objects. The three feature layers obtained from the backbone are integrated into the head to consolidate features across different scales. In the head, the previously obtained feature layers are used for further feature extraction. GELAN still employs a combination of FPN and PANet to achieve feature fusion by upsampling and downsampling twice. Three enhanced feature layers are obtained through backbone and feature fusion, as shown in Figure 2c. These layers are then input into decoupled heads for object classification and regression.

In the overall architecture, as shown in Figure 2d, the original downsampling employs the ADown block, which combines mixed pooling and strided convolution. The upsampling uses the nearest-neighbor interpolation algorithm. GELAN has a sampling rate of 2 for both upsampling and downsampling. However, because these techniques operate according to local rules, they are prone to losing details of the feature map when constructing a feature pyramid, which limits the network’s ability to capture global contextual information. Inspired by human perception [27], we designed a content-aware downsampling module (CADM) and a content-aware upsampling module (CAUM) to address this issue. Within these modules, a newly designed perceptual attention block (PAB) captures global contextual information and dynamically generates content-aware weights. These weights enable the PAB to adaptively focus on areas of interest, enhancing the interaction between global spatial features and channel features. By replacing the downsamplers and upsamplers in the original GELAN model with CADM and CAUM, respectively, the improved GELAN greatly enhances its ability to capture global visual information, as shown in Figure 2e and Figure 3.

Figure 3.

The overall framework of the proposed method for detecting external-force-damage objects.

3.2. Content-Aware Downsampling Module (CADM)

A content-aware downsampling module (CADM) was designed as a new learnable downsampler that aggregates information over the global receptive field and dynamically adapts to specific content while remaining computationally efficient. Specifically, CADM operates by weighting and rearranging the features at each position of the input feature map. The spatially adaptive weights are predicted dynamically by a lightweight multi-layer perceptron (MLP) with a sigmoid activation function.

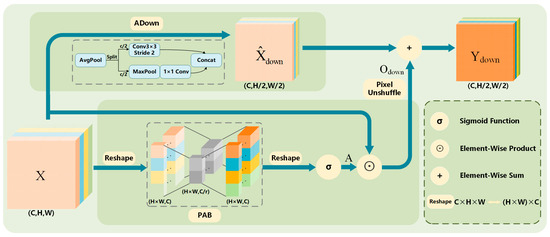

To enhance content relevance and expressive power during the feature extraction process, CADM consists of three key components, including the ADown block [29], the perceptual attention block (PAB), and the Pixel UnShuffle layer [23], as shown in Figure 4.

Figure 4.

The newly designed CADM.

The ADown block. As the downsampling operation inherited from GELAN, the ADown block combines average pooling, max pooling, and strided convolution to reduce the spatial dimension of the feature map and extract semantic information.

The PAB. Unlike existing attention mechanisms [67,68], the PAB uses a three-dimensional arrangement to preserve information and enhance feature interaction across global dimensions: an MLP is employed to strengthen the dependency between the channel and spatial dimensions. The MLP is an encoder–decoder structure consisting of two linear layers with a reduction ratio r, so that the first layer reduces the C channels to C/r and the second restores them to C. A ReLU activation function is inserted between the two layers to add nonlinearity to the activations.

Here, C represents the number of input channels, while r controls the number of parameters and neurons in the PAB. The output of the PAB is activated by a sigmoid function, generating content-aware weights for each position, ranging from 0 to 1.
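
The description above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes the MLP is applied to the channel vector at every spatial position after moving channels last, that both linear layers have biases, and that the weights are randomly initialized; the names `pab_weights` and `pab_param_count` are hypothetical.

```python
import numpy as np

def pab_weights(x, w1, b1, w2, b2):
    """Content-aware weights from a two-layer MLP applied at every position.

    x  : feature map of shape (C, H, W)
    w1 : (C, C // r) weights of the reducing linear layer
    w2 : (C // r, C) weights of the restoring linear layer
    """
    x_hwc = np.transpose(x, (1, 2, 0))          # (H, W, C): channels last
    hidden = np.maximum(x_hwc @ w1 + b1, 0.0)   # linear layer + ReLU
    logits = hidden @ w2 + b2                   # back to C channels
    weights = 1.0 / (1.0 + np.exp(-logits))     # sigmoid, values in (0, 1)
    return np.transpose(weights, (2, 0, 1))     # back to (C, H, W)

def pab_param_count(c, r):
    """Weights plus biases of the two linear layers (bias use is assumed)."""
    return c * (c // r) + (c // r) + (c // r) * c + c

rng = np.random.default_rng(0)
c, r, h, w = 16, 8, 4, 4                        # illustrative sizes
x = rng.normal(size=(c, h, w))
w1, b1 = rng.normal(size=(c, c // r)) * 0.1, np.zeros(c // r)
w2, b2 = rng.normal(size=(c // r, c)) * 0.1, np.zeros(c)

weights = pab_weights(x, w1, b1, w2, b2)
print(weights.shape, float(weights.min()), float(weights.max()))
for ratio in (2, 4, 8, 16):
    print("r =", ratio, "parameters:", pab_param_count(256, ratio))
```

Under these assumptions the parameter count scales roughly as 2C²/r, which is why a larger reduction ratio yields a lighter block.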

The Pixel UnShuffle layer. This operation rearranges each s × s spatial block into the channel dimension, which reduces the spatial resolution and increases the depth of the feature map without losing information. The scaling factor s corresponds to the downsampling factor in the network.
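
To make the lossless rearrangement concrete, the following NumPy sketch moves every 2 × 2 spatial block into the channel dimension. The function name `pixel_unshuffle` is a hypothetical stand-in written for this example; the channel ordering is one common convention and is assumed here.

```python
import numpy as np

def pixel_unshuffle(x, s=2):
    """Rearrange (C, H, W) into (C*s*s, H/s, W/s) without discarding values."""
    c, h, w = x.shape
    x = x.reshape(c, h // s, s, w // s, s)   # split rows and columns into blocks
    x = x.transpose(0, 2, 4, 1, 3)           # (C, s, s, H/s, W/s)
    return x.reshape(c * s * s, h // s, w // s)

x = np.arange(2 * 4 * 4).reshape(2, 4, 4)    # toy input with C=2, H=W=4
y = pixel_unshuffle(x, s=2)

print(y.shape)   # four times the channels, half the height and width
print(y[0])      # the even-row, even-column samples of input channel 0
```

Every input value appears exactly once in the output, so the spatial size is halved while nothing is averaged away or dropped, in contrast to pooling.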

Given an input feature map X with dimensions (C, H, W) and a downsampling factor s = 2, CADM produces a new feature map with dimensions (C, H/2, W/2). The output is the sum of two branches. The coarse downsampled feature map is generated by the original downsampling operation, the ADown block. The fine-grained downsampled feature map is produced by the PAB and the Pixel UnShuffle layer. Adding the two feature maps fuses the coarse and fine-grained features.

In the fine-grained branch, the input feature map X is multiplied element-wise (⊙) by the content-aware weights, which represent the model’s attention to the corresponding position in X. After the Pixel UnShuffle operation, the number of channels is transformed through a 1 × 1 convolution to ensure that the output has the same shape as the coarse feature map. Weighting the features with the PAB before downsampling alleviates the impact of information loss, so the model retains rich information when processing downsampled features. The Pixel UnShuffle operation itself downsamples without information loss. The content-aware weight matrix is calculated using Equation (5).

In Equation (5), the sigmoid function is applied to the output of the perceptual attention block, which takes as input the reshaped form of the input feature map X with its channels rearranged.
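
The CADM process described above can be written compactly as follows. This is a sketch under assumed notation: the symbols Y, Y_c, Y_f, W, and X′ are chosen here for illustration and may differ from those of the original equations; only the label (5) for the weight equation is taken from the text.

```latex
\begin{align*}
Y   &= Y_c + Y_f, \\
Y_c &= \operatorname{ADown}(X), \\
Y_f &= \operatorname{Conv}_{1\times 1}\bigl(\operatorname{PixelUnShuffle}(W \odot X)\bigr), \\
W   &= \sigma\bigl(\operatorname{PAB}(X')\bigr). \tag{5}
\end{align*}
```

Here X has shape (C, H, W), W has the same shape as X, the Pixel UnShuffle output has shape (4C, H/2, W/2) for s = 2, and the 1 × 1 convolution maps it back to (C, H/2, W/2) so that it can be added to Y_c.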

In this paper, the newly designed CADM not only effectively reduces the loss of detailed information but also enhances feature information in critical content areas, thereby improving the model’s sensitivity to globally salient regions.

3.3. Content-Aware Upsampling Module (CAUM)

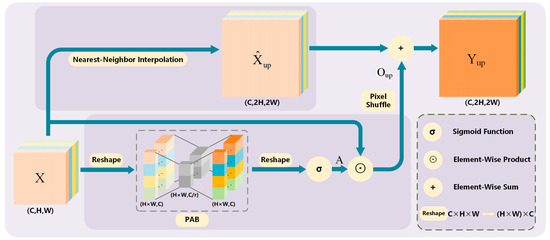

As shown in Figure 5, our proposed CAUM consists of the nearest-neighbor interpolation algorithm, the PAB, and the Pixel Shuffle layer. The PAB is the same as the one introduced for CADM. The reduction ratio r controls the number of parameters in CAUM and also affects how the perceptual weights filter important information.

Figure 5.

The newly designed CAUM.

The nearest-neighbor interpolation. We retained the nearest-neighbor interpolation upsampling operation used by the baseline model because this process not only magnifies the feature map while preserving the original feature map information, but also does not require additional parameters and computational costs.

The Pixel Shuffle layer. Originating in super-resolution, this layer increases resolution by rearranging values from the channel dimension into the spatial dimensions rather than by interpolating between pixels. This approach better preserves spatial information, avoiding artifacts and distortions that may be introduced during interpolation. The scaling factor of the Pixel Shuffle layer is set to 2 to match the network’s upsampling factor of 2.

The content-aware weights are computed using Equation (5). The input feature map is then weighted element-wise by these weights and upsampled by the Pixel Shuffle layer.

After the Pixel Shuffle operation, a 1 × 1 convolution transforms the channels so that the result matches the shape of the feature map produced by the interpolation algorithm. Feature weighting helps mitigate the boundary blurring and the ambiguity in the affiliation of sampling points caused by the interpolation algorithm, so the details of the feature map are learned effectively. The result is therefore defined as the fine-grained upsampled feature map. Finally, the output is an upsampled feature map with dimensions (C, 2H, 2W), obtained by adding the fine-grained upsampled feature map to the coarse upsampled feature map, which is produced by applying the nearest-neighbor interpolation algorithm to the input.
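
The CAUM process described above can be summarized as follows. As with CADM, this is a sketch under assumed notation: Y, Y_c, Y_f, W, and the operator name NN are illustrative symbols, not necessarily those of the original equations.

```latex
\begin{align*}
Y_f &= \operatorname{Conv}_{1\times 1}\bigl(\operatorname{PixelShuffle}(W \odot X)\bigr), \\
Y_c &= \operatorname{NN}(X), \\
Y   &= Y_c + Y_f .
\end{align*}
```

For an input X of shape (C, H, W) and a scaling factor of 2, the Pixel Shuffle output has shape (C/4, 2H, 2W), and the 1 × 1 convolution restores it to (C, 2H, 2W) so that it matches Y_c.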

Adding the coarse feature map obtained through nearest-neighbor interpolation to the fine-grained feature map fuses coarse and fine-grained features during the upsampling operation. This fusion helps capture different levels of information in the image, enhancing the diversity and accuracy of the feature map.
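
The two upsampling branches and their fusion can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: the content-aware weights are replaced by random values in (0, 1) standing in for the PAB output, the 1 × 1 convolution kernel is random, and all function names are hypothetical.

```python
import numpy as np

def nearest_upsample(x, s=2):
    """Nearest-neighbor interpolation of a (C, H, W) map by a factor s."""
    return x.repeat(s, axis=1).repeat(s, axis=2)

def pixel_shuffle(x, s=2):
    """Rearrange (C, H, W) into (C/(s*s), H*s, W*s)."""
    c, h, w = x.shape
    x = x.reshape(c // (s * s), s, s, h, w)
    x = x.transpose(0, 3, 1, 4, 2)               # (C/s^2, H, s, W, s)
    return x.reshape(c // (s * s), h * s, w * s)

def conv1x1(x, kernel):
    """1x1 convolution; kernel has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", kernel, x)

def caum_forward(x, weights, kernel, s=2):
    coarse = nearest_upsample(x, s)                         # (C, sH, sW)
    fine = conv1x1(pixel_shuffle(weights * x, s), kernel)   # (C, sH, sW)
    return coarse + fine                                    # fuse both branches

rng = np.random.default_rng(0)
c, h, w = 8, 3, 3                                  # illustrative sizes
x = rng.normal(size=(c, h, w))
weights = rng.uniform(0.0, 1.0, size=x.shape)      # stand-in for PAB output
kernel = rng.normal(size=(c, c // 4)) * 0.1        # restores C/4 -> C channels

y = caum_forward(x, weights, kernel)
print(y.shape)
```

Because the coarse branch is kept unchanged, the fine-grained branch acts as a learned correction on top of plain interpolation; with a zero kernel the module reduces exactly to nearest-neighbor upsampling.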

4. Experiments

This section presents multiple experiments validating the effectiveness of the proposed method. We conducted experiments using our proposed method on the dataset of external-force-damage objects, including both comparative experiments and a series of ablation studies. To verify the generalization of the method, experiments were performed on the PASCAL VOC dataset which contains 20 categories.

4.1. Dataset Collection and Expansion

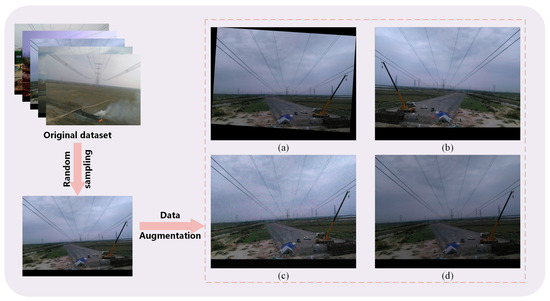

The original image dataset used in this study was collected through monitoring equipment deployed by a power grid company. The initial dataset was unorganized; after it was sorted, images with external-force damages were classified and integrated. The final dataset of external-force damages includes four common types: crane, tower crane, construction machinery, and wildfire.

However, the dataset suffers from imbalanced class distribution and a shortage of samples for certain damage types. Therefore, we employed a data augmentation method [69] to address these issues. This method utilized existing samples to generate new ones. The data augmentation method included affine transformations, random Gaussian noise, color jittering, and brightness adjustment.
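
A few of the listed augmentations can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the pipeline of [69]: images are 8-bit arrays of shape (H, W, 3), boxes use `(x1, y1, x2, y2)` pixel coordinates, the horizontal flip stands in for the affine transformations, and the function names and parameter values are hypothetical.

```python
import numpy as np

def horizontal_flip(image, boxes):
    """Flip an (H, W, 3) image and its (x1, y1, x2, y2) boxes left-right."""
    width = image.shape[1]
    flipped = image[:, ::-1].copy()
    new_boxes = [(width - x2, y1, width - x1, y2) for x1, y1, x2, y2 in boxes]
    return flipped, new_boxes

def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clip back to the 8-bit range."""
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)

def adjust_brightness(image, factor):
    """Scale pixel intensities by a factor and clip to the 8-bit range."""
    return np.clip(image.astype(np.float64) * factor, 0, 255).astype(np.uint8)

rng = np.random.default_rng(0)
image = np.full((4, 6, 3), 200, dtype=np.uint8)   # toy 4x6 image
boxes = [(1, 0, 3, 2)]                            # one annotated object

flipped, flipped_boxes = horizontal_flip(image, boxes)
noisy = add_gaussian_noise(image, sigma=10.0, rng=rng)
brighter = adjust_brightness(image, factor=1.5)
print(flipped_boxes, noisy.dtype, int(brighter.max()))
```

Geometric transformations must update the bounding boxes together with the pixels, whereas photometric changes such as noise and brightness leave the annotations untouched.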

Figure 6 shows the process of generating augmented samples and the effect of data augmentation. These operations simulate different imaging conditions under transmission line scenarios, including variations in position, background, blurriness, and lighting changes, thereby increasing the sample quantity and enriching the diversity of backgrounds and lighting conditions. The statistical data of the dataset of external-force damages on transmission lines are shown in Table 1.

Figure 6.

Data augmentation process and results: (a) rotation, (b) flip, (c) random Gaussian noise, and (d) color jittering.

Table 1.

Sample statistics of the dataset.

4.2. Implementation Details and Evaluation Metrics

Following the common practice in transfer learning, we initially pre-trained all backbone networks on the ImageNet1k image classification dataset and then fine-tuned them on the training set of the existing dataset. All models were trained, evaluated, and tested using the PyTorch 1.12 deep learning framework and Nvidia RTX 3090 GPU. The hyperparameter settings are shown in Table 2.

Table 2.

Hyperparameter settings.

For the external-force-damage dataset of transmission lines, we divided the dataset into training, validation, and test sets in a 6:2:2 ratio. In experiments on the public dataset, we utilized PASCAL VOC 2007 trainval [70] and 2012 trainval [71] for training, and the 2007 test set for validation, as shown in Table 3.
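
A 6:2:2 partition of this kind can be sketched as follows. The shuffling, the fixed seed, and the function name `split_dataset` are assumptions made for illustration; the text does not state how the samples were assigned to each subset.

```python
import random

def split_dataset(items, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle items and split them into train, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)           # reproducible shuffle
    n_train = round(len(items) * ratios[0])
    n_val = round(len(items) * ratios[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]               # remainder goes to the test set
    return train, val, test

# Hypothetical image identifiers standing in for the real file names.
train, val, test = split_dataset(range(10))
print(len(train), len(val), len(test))
```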

Table 3.

Sample partitioning of each dataset.

The performance evaluation metrics include the average precision (AP) and mean average precision (mAP). AP summarizes the precision-recall curve of a single category as the area under that curve. mAP averages the AP over all categories and is a common metric for measuring the overall performance of object detection models. Frames per second (FPS) is used to assess the model’s inference speed with a batch size of 1.
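
The relationship between AP and mAP can be illustrated with a short sketch. It assumes that each detection has already been matched to the ground truth (true or false positive) and uses all-point interpolation of the precision-recall curve; the exact AP protocol used in the experiments is not stated here, and the scores and per-class values below are made up for illustration.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve (all-point interpolation)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                      # sweep detections by confidence
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p      # rectangle under the curve
        prev_recall = r
    return ap

def mean_average_precision(ap_per_class):
    """Average the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)

# Four detections of one class, four ground-truth objects (toy values).
ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 4)
m_ap = mean_average_precision({"crane": 0.9, "wildfire": 0.7})
print(ap, m_ap)
```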

4.3. Experiments on the Dataset of External-Force Damages on Transmission Lines

4.3.1. Comparison with Popular Object Detectors

We compared the performance of different popular object detectors on the external-force-damages dataset B, and the results are shown in Table 4. Our method was compared with Faster R-CNN, SSD, and the YOLO series.

Table 4.

Comparison results with popular object detector algorithms.

From Table 4, we can see that when using VGG16 and ResNet-50 as the backbone networks, Faster R-CNN achieves 83.77% mAP and 82.70% mAP, respectively. SSD300 achieves 78.33% mAP and the highest detection speed of 80.87 FPS. The YOLO series of object detectors has higher detection accuracy and also certain advantages in detection speed. YOLOv3 achieved 90.60% mAP and 60.61 FPS. YOLOv4, YOLOv5-L, and YOLOv7 further improved the detection accuracy. The end-to-end object detectors also performed well, with RT-DETR-L achieving 94.43% mAP, YOLOv10-L achieving 94.66% mAP, and the latest YOLO11-L achieving 95.06% mAP with 39.26 FPS. The new object detector GELAN-C achieved 94.59% mAP and 39.37 FPS. We used GELAN-C as the baseline, and the improved method achieved the best detection accuracy of 96.50% mAP, which is 1.91 percentage points higher than GELAN-C. Although our model showed a slight decrease in computational efficiency and inference speed compared to the others, it still met the real-time requirements.

4.3.2. Ablation Experiments

Different reduction ratios r. To investigate the influence of different reduction ratios on the performance of our proposed modules, we tested the reduction ratios of 2, 4, 8, and 16 in CADM and CAUM, analyzing the performance variations.

As shown in Table 5, for CADM, each tested value of r improves the model’s performance to a different degree. As r increases, the number of neurons in the module decreases, which reduces the module’s parameter count and, up to a point, improves performance. When r was 8, the model’s performance reached its optimum, with 95.63% mAP. However, when r was set to 16, the model’s performance declined. Therefore, we chose a reduction ratio of 8 for CADM. Table 6 shows that our CAUM also improved model performance with fewer parameters. As with CADM, the model achieved its highest mAP of 95.69% when r was set to 8. Both experiments reflect the importance of the reduction ratio r for the proposed CADM and CAUM.

Table 5.

Performance of the CADM at different reduction ratios.

Table 6.

Performance of the CAUM at different reduction ratios.

Different learnable downsamplers. As shown in Table 7, we employed various learnable downsamplers in GELAN-C and evaluated them by parameter count, detection accuracy, and inference speed. Because the model’s downsamplers and upsamplers are important for capturing multi-scale features, we also report metrics for multi-scale performance: APS, APM, and APL denote the average precision for small, medium, and large objects, respectively. Generally, small objects are defined as having an area of less than 32 × 32 pixels, medium objects an area between 32 × 32 pixels and 96 × 96 pixels, and large objects an area greater than 96 × 96 pixels. We compared ADown, Strided Convolution (S-Conv), Pixel Unshuffle (P-Unshuffle), DPP, LIP, CARAFE++, and CADM, with ADown serving as the baseline.
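The size thresholds above can be expressed as a small helper. This is a minimal sketch: the function name is hypothetical, and assigning objects whose area equals a threshold exactly to the medium bucket is an assumption, since the text does not specify the boundary cases.

```python
SMALL_MAX_AREA = 32 * 32   # upper bound (exclusive) for small objects
MEDIUM_MAX_AREA = 96 * 96  # upper bound (inclusive, by assumption) for medium


def size_bucket(width: float, height: float) -> str:
    """Classify a bounding box as small, medium, or large by pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area <= MEDIUM_MAX_AREA:
        return "medium"
    return "large"


print(size_bucket(20, 20), size_bucket(50, 60), size_bucket(200, 150))
```

Note that the buckets depend on area rather than on either side length, so a long, thin box can fall into the same bucket as a compact one.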

Table 7.

Performance of using different learnable downsamplers in GELAN-C.

Experimental results demonstrate that CADM achieved higher AP and mAP values across all object scales. With a minimal increase in parameter count, CADM achieved 1.04% higher mAP than the baseline while maintaining a high inference speed. CADM significantly improved multi-scale detection accuracy, particularly for small objects, where AP increased by 7.72% compared to the original model. This is a strong advantage, which is crucial for detecting potential damages at long distances. The introduction of CARAFE++ significantly increased model complexity but, unexpectedly, resulted in lower performance than the baseline. We speculate that the poor portability of the CARAFE++ downsampler makes it unsuitable as a direct replacement for ADown in the model. Despite adding a significant number of parameters, DPP and LIP did not yield performance improvements over the baseline. Strided Convolution introduced an additional 5.96 M parameters and improved mAP by 0.57% over the baseline, which is still lower than CADM; such a large increase in computational load without a commensurate performance improvement is often suboptimal. Pixel Unshuffle and CADM both strike a balance between model complexity and performance improvement, but Pixel Unshuffle lags behind CADM in inference speed. Furthermore, we observed a severe gradient vanishing issue with SoftPool [55] in our experiments, resulting in a detection accuracy of only 1.17% mAP. We infer that SoftPool is not suitable for YOLO series models.

Overall, CADM enhanced object details by introducing global contextual information through an additional branch, enabling the network to effectively learn about potential damage objects. This advantage is particularly pronounced for small object detection. In contrast, other downsampling methods that focus on local areas lack this capability.
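To make the difference between downsampling strategies concrete, the sketch below contrasts a space-to-depth rearrangement, the operation underlying Pixel Unshuffle, with plain strided subsampling on a single-channel map. It is a pure-Python illustration with hypothetical function names; the plain subsampling shown here is a simplification and is not the learnable S-Conv evaluated in Table 7, nor the authors' CADM.

```python
def pixel_unshuffle(image, r=2):
    """Rearrange an H x W map into r*r maps of size (H/r) x (W/r)."""
    h, w = len(image), len(image[0])
    if h % r or w % r:
        raise ValueError("height and width must be divisible by r")
    channels = []
    for dy in range(r):      # row offset inside each r x r block
        for dx in range(r):  # column offset inside each r x r block
            channels.append(
                [[image[y][x] for x in range(dx, w, r)] for y in range(dy, h, r)]
            )
    return channels


def strided_subsample(image, r=2):
    """Keep every r-th pixel in both directions and drop the rest."""
    return [row[::r] for row in image[::r]]


image = [[4 * y + x for x in range(4)] for y in range(4)]  # values 0..15
unshuffled = pixel_unshuffle(image)
subsampled = strided_subsample(image)
print(len(unshuffled), unshuffled[0], subsampled)
```

The rearrangement halves the spatial resolution while keeping all sixteen values in four channels, whereas plain subsampling retains only four of them. Both remain local operations, which is the limitation the global branch of CADM is intended to address.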

Different learnable upsamplers. To demonstrate the superiority of CAUM, we compared several popular learnable upsamplers, including deconvolution (Deconv), Pixel Shuffle (P-Shuffle), SAPA, DySample, and CARAFE++. The nearest-neighbor interpolation algorithm, originally used in GELAN-C, served as the baseline, and the experimental results are shown in Table 8.

Table 8.

Performance of using different learnable upsamplers in GELAN-C.

The results demonstrate that CAUM achieved the highest overall detection accuracy of 95.69% mAP, an improvement of 1.10% mAP over the baseline model. This gain came at a small cost: the inference speed decreased by only 0.63 FPS, and the parameters increased by only 0.26 M. For such a small parameter budget, CAUM brings significant benefits, indicating more effective parameter utilization. CAUM also demonstrates significant advantages in multi-scale detection accuracy: compared with the baseline model, AP was 9.18% higher for small targets, 3.73% higher for medium targets, and 1.64% higher for large targets. All these improvements surpassed those of the other upsampling operators. Deconvolution exhibited similar performance and inference speed to CAUM but required more parameters (33.83 M compared to 25.70 M). Pixel Shuffle achieved 95.43% mAP with a similar parameter count to CAUM, but reduced the inference speed by 2.51 FPS. CARAFE++ requires more parameters than CAUM yet performs significantly worse. SAPA-B also requires more parameters than CAUM, but its performance gain is small, with only a 0.31% mAP improvement. The new upsampling method DySample achieves performance similar to CAUM, but it has 0.17 M more parameters and is 2.30 FPS slower. CAUM outperforms each of these methods while maintaining computational efficiency.

Overall, CAUM captures the interaction relationships between sampling points by introducing global information, effectively restoring image details, including object boundaries. This aids the network model in accurately locating potential damage objects. In contrast, other upsampling operators either only incorporate local contextual information or require a substantial number of parameters as a learning cost.
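The local nature of the nearest-neighbor baseline can be seen in a minimal pure-Python sketch. The function name is hypothetical and the example operates on a single-channel map; it illustrates the baseline interpolation only and is not an implementation of CAUM.

```python
def nearest_upsample(feature, scale=2):
    """Upsample a 2D map by copying each value into a scale x scale block."""
    out = []
    for row in feature:
        wide = [value for value in row for _ in range(scale)]
        out.extend(list(wide) for _ in range(scale))
    return out


feature = [[1, 2],
           [3, 4]]
print(nearest_upsample(feature))
```

Each output value is a copy of exactly one input value, so the operation has no learnable parameters and uses no information from other positions, which is why sharp boundaries cannot be recovered by it alone.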

Application to various models. To verify the applicability and portability of the proposed method, we conducted ablation experiments on YOLOv7 and YOLOv10, with the results shown in Table 9 and Table 10. We observed that adding the CADM structure to the original model in YOLOv7 improved the mAP by 1.31%, while adding the CAUM structure resulted in a 1.38% improvement. These additions enhanced the network’s ability to capture global context information. Notably, the simultaneous addition of both modules yielded a 2.50% gain in mAP.

Table 9.

The applicability results of the proposed method for detecting damage objects in YOLOv7.

Table 10.

The applicability results of the proposed method for detecting damage objects in YOLOv10.

In YOLOv10, adding the CADM and CAUM structures separately to the Enhanced CSPNet-PANet improved the baseline mAP by 0.96% and 0.91%, respectively. When both components were integrated into the baseline method, the mAP improved by 1.62%, showing that the proposed modules effectively enhanced the performance of the original model.

The two models mentioned above are representative and can be regarded as variants of FPN and PANet. Our proposed CADM and CAUM can be easily integrated as plug-and-play components into these structures, leading to performance improvements.

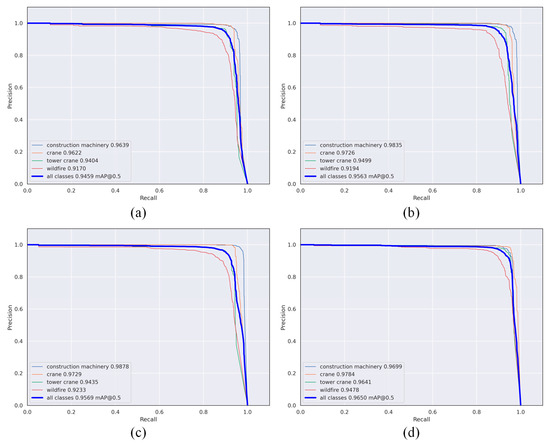

Ablation study based on GELAN. To validate the effectiveness of all proposed components, we used GELAN-C as the baseline for ablation studies and gradually added CADM and CAUM. Table 11 shows the ablation study data for all components.

Table 11.

Ablation studies on all the proposed components in GELAN-C.

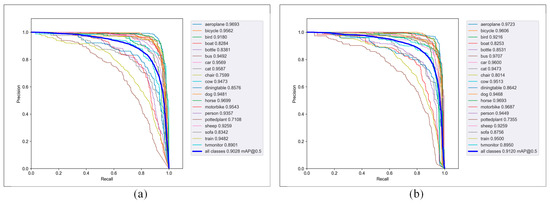

Overall, the improvements brought by each component were complementary. Specifically, adding CADM raised the mAP by 1.04% over the baseline, validating the effectiveness of CADM. Building upon this, incorporating CAUM increased the mAP from 95.63% to 96.50%, confirming that CADM and CAUM can alleviate the information loss in the downsampling and upsampling processes. The evaluation metrics show that the overall performance improves after both components are embedded into the model. Figure 7 illustrates the precision–recall curves for the ablation studies of the proposed components. Our method enhanced the detection precision and recall for the damage objects, achieving a mAP of 96.50%, which is higher than the 94.59% mAP of GELAN-C. It should be noted that the integrated two-module architecture introduced additional computational overhead, resulting in a decline in real-time performance (FPS reduced by 4.0%, parameters increased by 16.4%). However, our model is intended for transmission line safety, where a missed detection of an external-force-damage object is likely to lead to a major safety accident. Therefore, our study adopted a precision-oriented design paradigm.
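The ablation gains can be checked with simple arithmetic on the mAP values quoted in the text. The sketch below uses only those reported numbers; the helper name is hypothetical, and gains are computed as absolute differences in percentage points.

```python
def point_gain(new_map: float, old_map: float) -> float:
    """Absolute mAP gain in percentage points, rounded to two decimals."""
    return round(new_map - old_map, 2)


# mAP values (%) quoted in the text for the GELAN-C ablation.
BASELINE, WITH_CADM, WITH_CAUM, WITH_BOTH = 94.59, 95.63, 95.69, 96.50

cadm_gain = point_gain(WITH_CADM, BASELINE)   # CADM alone vs. baseline
caum_gain = point_gain(WITH_CAUM, BASELINE)   # CAUM alone vs. baseline
joint_gain = point_gain(WITH_BOTH, BASELINE)  # both modules vs. baseline
step_gain = point_gain(WITH_BOTH, WITH_CADM)  # adding CAUM on top of CADM

print(cadm_gain, caum_gain, joint_gain, step_gain)
```

The individual gains sum to 2.14 points, while the joint gain is 1.91 points, so the two modules reinforce each other without being strictly additive.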

Figure 7.

The precision–recall curves: (a) baseline, (b) baseline + CADM, (c) baseline + CAUM, and (d) ours.

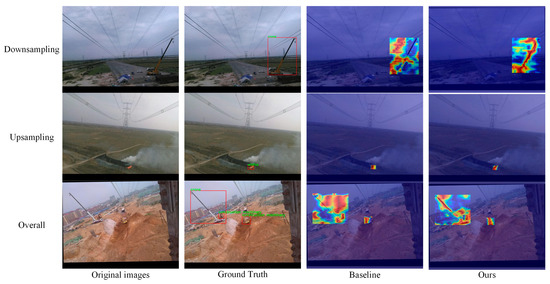

4.3.3. Visualization Result Presentation

As shown in Figure 8, we computed heatmaps for large-scale objects using the feature maps after downsampling and for small-scale objects using the feature maps after upsampling. Finally, we used the entire model to output the heatmaps of multi-scale objects. The results indicate that our content-aware modules focused more on the damage objects themselves and provided clearer outlines of the objects compared to the original network.

Figure 8.

Visualizations of heatmaps computed using feature maps from different layers.

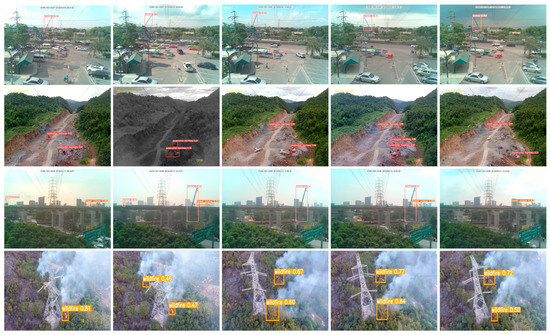

Figure 9 compares the detection results of the baseline model and our method in different scenes, including a complex background, varying lighting, and diverse poses. Our method demonstrates better detection capability and higher confidence. Figure 10 presents additional detection results on the dataset of external-force damages on transmission lines, with the upper part displaying the results of the GELAN-C algorithm and the lower part those of our algorithm. Compared to the baseline model, our method localizes the bounding boxes of objects more accurately and reduces missed detections. Our method exhibits stronger robustness and generalization capability in scenarios with multi-scale variations, different lighting conditions, occlusions, rotations, and noise interference. In contrast, the baseline model shows a performance decline in these scenarios.

Figure 9.

Comparison of the detection results between the baseline model and our method in different scenarios.

Figure 10.

More visualization of the detection results for both the baseline model and our approach.
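Localization quality of the kind discussed above is conventionally quantified with the intersection over union (IoU) between a predicted box and a ground-truth box. The sketch below assumes corner-format boxes (x1, y1, x2, y2); the function name and the example boxes are illustrative and are not taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero if disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A prediction is usually counted as correct only when its IoU with a ground-truth box exceeds a chosen threshold, so tighter boxes translate directly into higher precision and recall.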

4.4. Experiments on the Public Dataset

To further validate the effectiveness and generalization capability of our method, we conducted experiments on the natural scene PASCAL VOC dataset. We compared our method with state-of-the-art object detection algorithms.

Table 12 shows the experimental results of the aforementioned advanced methods and our method on the VOC 2007 test set. Our method achieved the best detection accuracy (91.20% mAP), which is 0.92% higher than the baseline network, demonstrating its effectiveness and reliability in object detection tasks. Although a slight decrease in inference speed was observed, our method still met the requirement for real-time performance, indicating that it remains suitable for practical applications.

Table 12.

Comparison with other methods on the PASCAL VOC dataset.

In summary, these advanced object detection methods utilized local operations such as pooling (or strided convolution) and interpolation algorithms for downsampling and upsampling feature maps. In contrast, our approach introduced global information during the downsampling and upsampling processes to capture the interactions between sampling points, thereby enhancing the expressive power of the feature maps. Our method not only preserved important contextual information, but also effectively restored image details, particularly at object boundaries and key features. The experimental results validated the effectiveness and application potential of our method in the field of object detection.

Figure 11 illustrates the precision–recall curves of our method and the baseline model on the PASCAL VOC dataset. Our method improved both detection precision and recall on this dataset. The curves also show the detection performance for each class in the dataset as well as the overall performance.

Figure 11.

The precision–recall curves on the PASCAL VOC dataset: (a) baseline, (b) ours.
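Average precision summarizes a precision–recall curve as the area under it, and mAP is the mean of the per-class values. The sketch below uses all-point interpolation over the precision envelope; this is an assumption, since the text does not state which interpolation scheme was used, and the function names and example points are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the precision envelope (all-point interpolation).

    `recalls` must be sorted in ascending order, with `precisions` aligned.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas over every interval where recall advances.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """Mean of the per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)


ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(ap, mean_average_precision([ap, 1.0]))
```

Because the envelope is taken before integrating, local dips in precision do not reduce the score, which makes curves from different detectors easier to compare.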

4.5. Experiment in Real-World Scenarios

This section presents experiments conducted in real-world scenarios to evaluate the performance of our proposed method. We selected a variety of environments where incidents of external-force damage could occur, including urban, suburban, and rural areas. These scenarios exhibited significant variations in lighting, weather, and background complexity. Data were collected using cameras installed on high-voltage overhead transmission lines, which enabled us to capture multiple angles and perspectives, ensuring that the dataset accurately reflected real-world conditions. Additionally, some of the data for these scenes were sourced from the HUAWEI Information and Communications Technology (ICT) competition.

Figure 12 demonstrates the model’s performance in detecting external-force-damage objects across different scenes and time periods. It is clear that both the position of the damage objects and the surrounding environment changed over time, including variations in lighting and background. Our method effectively detected and tracked these objects, maintaining good performance even under nighttime conditions. This showcases the robustness of our approach in real-world environments.

Figure 12.

The detection performance of our method for external-force-damage objects in real-world scenarios as time changes.

Figure 13 shows the detection results of our method for four types of external-force-damage objects in real-world scenarios: crane, tower crane, construction machinery, and wildfire. The figure clearly shows that these damage objects are effectively detected, demonstrating the effectiveness and feasibility of our approach in practical environments.

Figure 13.

The detection performance of our method for four types of external-force-damage objects in real-world scenarios.

5. Conclusions

This paper presents a content-aware method for detecting external-force-damage objects on transmission lines. To address the lack of global contextual information in regular downsampling and upsampling operations, the newly designed CADM and CAUM were incorporated into the original GELAN framework as its downsampling and upsampling operations. The improved GELAN model achieves higher accuracy with a moderate increase in computational cost. Because the two modules were designed around information preservation and the use of global information, CADM and CAUM significantly enhanced the richness of features and improved the model’s performance. CADM alleviates the loss of fine-grained features during consecutive downsampling, and CAUM optimizes the interpolated upsampled feature map to preserve the affiliation between sampling points. The experimental results show that the proposed content-aware method achieved higher accuracy on the dataset of external-force damages to transmission lines, with only a slight sacrifice in computational efficiency. Furthermore, the highest detection accuracy was achieved on the public PASCAL VOC dataset, and the computational efficiency met real-time requirements.

In summary, our approach holds significant practical value and application prospects for the safe operation of UHV overhead transmission lines, providing new insights and methodologies for related research. In addition, our method can also be extended to object detection tasks in other fields. Future work will proceed in four directions. First, due to experimental constraints, this study did not validate the object detection performance under adverse environmental conditions (e.g., foggy, rainy, and snowy scenarios); future research will extend the evaluation to these challenging environments to enhance the robustness of the detection models. Second, we will expand the categories in the existing dataset of external-force-damage objects to provide more comprehensive protection for transmission lines. Third, we will further optimize other modules to build a superior object detector, focusing on model inference speed, model parameters, and detection accuracy. Fourth, we will collaborate with power grid corporations to conduct field trials and validate the proposed method’s effectiveness and robustness in realistic engineering applications.

Author Contributions

M.L.: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Writing—original draft, Writing—review and editing; M.C.: Conceptualization, Data curation, Investigation, Methodology, Visualization, Writing—original draft, Writing—review and editing; B.W.: Conceptualization, Investigation, Resources, Software, Validation, Writing—review and editing; M.W.: Data curation, Funding acquisition, Project administration, Resources, Supervision; J.W. (Juan Wang): Funding acquisition, Project administration, Resources, Software; J.W. (Jianda Wang): Investigation, Validation, Visualization; H.H.: Formal analysis, Investigation, Visualization; Y.Y.: Software, Validation, Visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hubei Province, grant number 2022CFA007, and the Hubei Provincial Central Guidance Local Science and Technology Development Project, grant number 2023EGA027.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request. The PASCAL VOC dataset can be accessed at http://host.robots.ox.ac.uk/pascal/VOC/ (accessed on 18 April 2024).

Acknowledgments

The authors wish to thank the editor and reviewers for their valuable suggestions. They also acknowledge the financial support from the Natural Science Foundation of Hubei Province and the Hubei Provincial Central Guidance Local Science and Technology Development Project.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Quimbre, F.; Rueda, I.A.; van Soest, H.; Schmid, J.; Shatz, H.J.; Heath, T.R.; Meidan, M.; Wullner, A.; Deane, P.; Glynn, J.; et al. China’s Global Energy Interconnection: Exploring the Security Implications of a Power Grid Developed and Governed by China; RAND Corporation: Santa Monica, CA, USA, 2023. [Google Scholar]

- Chen, B. Fault Statistics and Analysis of 220-kV and Above Transmission Lines in a Southern Coastal Provincial Power Grid of China. IEEE Open Access J. Power Energy 2020, 7, 122–129. [Google Scholar] [CrossRef]

- Panossian, N.; Elgindy, T. Power System Wildfire Risks and Potential Solutions: A Literature Review & Proposed Metric; National Renewable Energy Laboratory (NREL): Golden, CO, USA, 2023. [Google Scholar] [CrossRef]

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E.; Andina, D. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 7068349. [Google Scholar] [CrossRef] [PubMed]

- Xu, S.; Wang, J.; Shou, W.; Ngo, T.; Sadick, A.-M.; Wang, X. Computer Vision Techniques in Construction: A Critical Review. Arch. Comput. Methods Eng. 2021, 28, 3383–3397. [Google Scholar] [CrossRef]

- Ai, D.; Jiang, G.; Lam, S.-K.; He, P.; Li, C. Computer Vision Framework for Crack Detection of Civil Infrastructure—A Review. Eng. Appl. Artif. Intell. 2023, 117, 105478. [Google Scholar] [CrossRef]

- Jia, H.; Han, Z.; Xu, X.; Wu, P.; Qin, R.; Jin, Y.; Wang, X.; Huang, W. A Background Reasoning Framework for External Force Damage Detection in Distribution Network. In The Proceedings of the 17th Annual Conference of China Electrotechnical Society; Xie, K., Hu, J., Yang, Q., Li, J., Eds.; Springer: Singapore, 2023; pp. 771–778. [Google Scholar]

- Liu, Z.; Wu, G.; He, W.; Fan, F.; Ye, X. Key Target and Defect Detection of High-Voltage Power Transmission Lines with Deep Learning. Int. J. Electr. Power Energy Syst. 2022, 142, 108277. [Google Scholar] [CrossRef]

- Kaur, R.; Singh, S. A Comprehensive Review of Object Detection with Deep Learning. Digit. Signal Process. 2023, 132, 103812. [Google Scholar] [CrossRef]

- Leng, X.; Dai, J.; Gao, Y.; Xu, A.; Jin, G. Overhead Transmission Line Anti-External Force Damage System. IOP Conf. Ser. Earth Environ. Sci. 2022, 1044, 012006. [Google Scholar] [CrossRef]

- Qu, L.; Liu, K.; He, Q.; Tang, J.; Liang, D. External Damage Risk Detection of Transmission Lines Using E-OHEM Enhanced Faster R-CNN. In Proceedings of the Pattern Recognition and Computer Vision; Lai, J.-H., Liu, C.-L., Chen, X., Zhou, J., Tan, T., Zheng, N., Zha, H., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 260–271. [Google Scholar]

- Liu, J.; Huang, H.; Zhang, Y.; Lou, J.; He, J. Deep Learning Based External-Force-Damage Detection for Power Transmission Line. J. Phys. Conf. Ser. 2019, 1169, 012032. [Google Scholar] [CrossRef]

- Liu, P.; Song, C.; Li, J.; Yang, S.X.; Chen, X.; Liu, C.; Fu, Q. Detection of Transmission Line against External Force Damage Based on Improved Yolov3. Int. J. Robot. Autom. 2020, 35, 460–468. [Google Scholar] [CrossRef]

- Li, H.; Jiang, F.; Guo, F.; Meng, W. A Real-Time Detection Method of Safety Hazards in Transmission Lines Based on YOLOv5s. In Proceedings of the International Conference on Artificial Intelligence and Intelligent Information Processing (AIIIP 2022), Qingdao, China, 17–29 June 2022; Loskot, P., Ed.; SPIE: Bellingham, WA, USA, 2022; Volume 12456, p. 124561C. [Google Scholar]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Yu, F.; Koltun, V.; Funkhouser, T. Dilated Residual Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 636–644. [Google Scholar]

- Yu, D.; Wang, H.; Chen, P.; Wei, Z. Mixed Pooling for Convolutional Neural Networks. In Proceedings of the Rough Sets and Knowledge Technology: 9th International Conference, RSKT 2014, Shanghai, China, 24–26 October 2014; Proceedings. Springer: Berlin/Heidelberg, Germany, 2023; pp. 364–375. [Google Scholar]

- Saeedan, F.; Weber, N.; Goesele, M.; Roth, S. Detail-Preserving Pooling in Deep Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9108–9116. [Google Scholar]

- Gao, Z.; Wang, L.; Wu, G. LIP: Local Importance-Based Pooling. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3354–3363. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883. [Google Scholar]

- Noh, H.; Hong, S.; Han, B. Learning Deconvolution Network for Semantic Segmentation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1520–1528. [Google Scholar]

- Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-Aware ReAssembly of FEatures. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3007–3016. [Google Scholar]

- Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE++: Unified Content-Aware ReAssembly of FEatures. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4674–4687. [Google Scholar] [CrossRef] [PubMed]

- Bruckmaier, M.; Tachtsidis, I.; Phan, P.; Lavie, N. Attention and Capacity Limits in Perception: A Cellular Metabolism Account. J. Neurosci. 2020, 40, 6801–6811. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Newry, UK, 2017; Volume 30. [Google Scholar]

- Wang, C.-Y.; Yeh, I.-H.; Mark Liao, H.-Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Proceedings of the Computer Vision—ECCV 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 1–21. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-Based Fully Convolutional Networks. In Proceedings of the Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Newry, UK, 2016; Volume 29. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Yifu, Z.; Wong, C.; Montes, D.; et al. Ultralytics/Yolov5: V7.0-Yolov5 Sota Realtime Instance Segmentation. Zenodo 2022. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Mark Liao, H.-Y.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Wang, C.-Y.; Liao, H.-Y.M.; Yeh, I.-H. Designing Network Design Strategies through Gradient Path Analysis. arXiv 2022, arXiv:2211.04800. [Google Scholar] [CrossRef]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs Beat Yolos on Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 11–15 June 2024. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. Yolov10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. [Google Scholar] [CrossRef]

- Jocher, G.; Qiu, J.; Chaurasia, A. YOLO11 Release, version 8.3.0; GitHub: San Francisco, CA, USA, 2024.

- Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677. [Google Scholar] [CrossRef]

- Shrivastava, A.; Gupta, A.; Girshick, R. Training Region-Based Object Detectors with Online Hard Example Mining. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 761–769. [Google Scholar]

- Siddiqui, Z.A.; Park, U. A Drone Based Transmission Line Components Inspection System with Deep Learning Technique. Energies 2020, 13, 3348. [Google Scholar] [CrossRef]

- Zou, H.; Ye, Z.; Sun, J.; Chen, J.; Yang, Q.; Chai, Y. Research on Detection of Transmission Line Corridor External Force Object Containing Random Feature Targets. Front. Energy Res. 2024, 12, 1295830. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- Zou, H.; Yang, J.; Sun, J.; Yang, C.; Luo, Y.; Chen, J. Detection Method of External Damage Hazards in Transmission Line Corridors Based on YOLO-LSDW. Energies 2024, 17, 4483. [Google Scholar] [CrossRef]

- Jocher, G.; Qiu, J.; Chaurasia, A. YOLOv8 Release, version 8.1.0; GitHub: San Francisco, CA, USA, 2023.

- Li, J.; Zheng, H.; Cui, Z.; Huang, Z.; Liang, Y.; Li, P.; Liu, P. Intelligent Detection Method with 3D Ranging for External Force Damage Monitoring of Power Transmission Lines. Appl. Energy 2024, 374, 123983. [Google Scholar] [CrossRef]

- Stergiou, A.; Poppe, R.; Kalliatakis, G. Refining Activation Downsampling with SoftPool. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10337–10346. [Google Scholar]

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial Transformer Networks. In Proceedings of the Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Newry, UK, 2015; Volume 28. [Google Scholar]

- Graham, B. Fractional Max-Pooling. arXiv 2014, arXiv:1412.6071.

- Zhai, S.; Wu, H.; Kumar, A.; Cheng, Y.; Lu, Y.; Zhang, Z.; Feris, R. S3Pool: Pooling with Stochastic Spatial Sampling. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4003–4011.

- Zeiler, M.D.; Fergus, R. Stochastic Pooling for Regularization of Deep Convolutional Neural Networks. arXiv 2013, arXiv:1301.3557.

- Ma, J.; Gu, X. Scene Image Retrieval with Siamese Spatial Attention Pooling. Neurocomputing 2020, 412, 252–261.

- Hesse, R.; Schaub-Meyer, S.; Roth, S. Content-Adaptive Downsampling in Convolutional Neural Networks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 4544–4553.

- Mazzini, D. Guided Upsampling Network for Real-Time Semantic Segmentation. arXiv 2018, arXiv:1807.07466.

- Gao, H.; Yuan, H.; Wang, Z.; Ji, S. Pixel Transposed Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1218–1227.

- Lu, H.; Liu, W.; Fu, H.; Cao, Z. FADE: Fusing the Assets of Decoder and Encoder for Task-Agnostic Upsampling. In Proceedings of the Computer Vision—ECCV 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 231–247.

- Lu, H.; Liu, W.; Ye, Z.; Fu, H.; Liu, Y.; Cao, Z. SAPA: Similarity-Aware Point Affiliation for Feature Upsampling. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Newry, UK, 2022; Volume 35, pp. 20889–20901.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 6004–6014.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–19.

- Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results; University of Oxford: Oxford, UK, 2007.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results; University of Oxford: Oxford, UK, 2012.

- Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional Single Shot Detector. arXiv 2017, arXiv:1701.06659.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).