Abstract

In the field of 3D technology, depth-image-based rendering (DIBR) has been widely adopted due to its inherent advantages including low data volume and strong compatibility. However, during network transmission of DIBR 3D images, both center and virtual views are susceptible to unauthorized copying and distribution. To protect the copyright of these images, this paper proposes a channel interaction mamba-guided generative adversarial network (CIMGAN) for DIBR 3D image watermarking. To capture cross-modal feature dependencies, a channel interaction mamba (CIM) is designed. This module enables lightweight cross-modal channel interaction through a channel exchange mechanism and leverages mamba for global modeling of RGB and depth information. In addition, a feature fusion module (FFM) is devised to extract complementary information from cross-modal features and eliminate redundant information, ultimately generating high-quality 3D image features. These features are used to generate an attention map, enhancing watermark invisibility and identifying robust embedding regions. Compared to the current state-of-the-art (SOTA) 3D image watermarking methods, the proposed watermark model shows superior performance in terms of robustness and invisibility while maintaining computational efficiency.

1. Introduction

With the rapid advancement of computing power and the widespread adoption of internet technologies, generative artificial intelligence (AI) is developing at an unprecedented pace, driving extensive creation and utilization of digital images across various fields [,,,]. However, the portability and ease of transmission of digital media may also lead to copyright infringement issues. In this context, digital watermarking technology serves as an effective means of copyright protection by embedding imperceptible watermarks into images, providing crucial technical support for safeguarding digital content security [].

Since the introduction of digital watermarking, extensive research has explored watermarking techniques for two-dimensional images, videos, and text, whereas research on watermarking for 3D images remains relatively limited. Compared with conventional 2D imagery, 3D images offer a more immersive visual experience that satisfies the human desire for stereoscopic depth perception [,]. Nonetheless, copyright protection for 3D images faces unique challenges. Depth-image-based rendering (DIBR) is an important representation of 3D images, and in scenarios involving illegal distribution, the copyrights of both the center and virtual views must be protected simultaneously []. In addition, the desynchronization inherent in DIBR shifts the watermark embedding positions, which can lead to incorrect watermark extraction. Researchers have therefore introduced a series of watermarking models based on conventional methods [,,]. While these models improve performance to some extent, they also introduce new issues. Nam et al. [] leveraged the similarity of scale-invariant feature transform (SIFT) parameters between the virtual and center views to perform geometric alignment, which enhances robustness against the synchronization attacks caused by DIBR operations. However, its watermarking capacity is limited. Kim et al. [] embedded watermarks into the downsampled sub-bands of the dual-tree complex wavelet transform (DT-CWT) domain by exploiting its translation invariance. Nevertheless, this method noticeably degrades image quality. In contrast, non-subsampled contourlet transform (NSCT)-based methods [] are also translation invariant but avoid downsampling during image decomposition, so watermark embedding has less impact on image quality. However, their robustness against geometric attacks remains relatively weak.
Given that deep neural networks are capable of learning robust features, He et al. [] proposed a DIBR 3D image watermarking network named BAGAN. In this method, image attention is computed by exploiting the correlation between the center view and the depth map and is used to guide the watermark embedding strength. However, the global feature relationships between the center view and the depth map are not fully exploited, which limits watermarking performance. Moreover, BAGAN employs two separate decoders to extract watermarks from the center and virtual views. In practical scenarios, it is often difficult to determine whether a given image is the virtual view or the center view, which significantly limits its applicability.

To effectively address the various challenges faced by existing 3D image watermarking methods, this paper proposes a channel interaction mamba-guided generative adversarial network (CIMGAN) for DIBR 3D image watermarking. This model employs a channel interaction mamba (CIM) to enable lightweight cross-modal channel interaction for global modeling, thereby optimizing feature representation across modalities. Additionally, the feature fusion module (FFM) leverages the inherent correlations between modalities to filter out redundant image features and fuse RGB and depth features. This process generates high-quality 3D image features that guide watermark embedding. Moreover, it employs a unified decoder to extract watermarks from both center and virtual views, enhancing its practicality in real-world applications. Compared to state-of-the-art (SOTA) models, the proposed model maintains watermarking invisibility while demonstrating stronger robustness against various image attacks. The main contributions of this paper are listed as follows.

- (1) We propose the CIMGAN for depth-image-based rendering (DIBR) 3D image watermarking. The experimental results show that the proposed method maintains high visual quality and strong robustness at a low computational cost.

- (2) Considering that RGB images and depth maps contain distinct image information, we design the CIM, which uses the global modeling capability of mamba to facilitate cross-modal feature interaction at the channel level.

- (3) We devise the FFM, which exploits the correlations between modalities to fuse RGB and depth features effectively and generate the 3D image features that guide watermark embedding.

2. Related Work

2.1. Deep Learning-Based 2D Image Watermarking

In recent years, deep learning technology has experienced rapid and significant advancement. In this context, Goodfellow et al. [] proposed the generative adversarial network (GAN), which has demonstrated remarkable performance in computer vision tasks such as image deblurring and deraining [,]. Hayes et al. [] introduced the GAN into steganography and demonstrated that adversarial training can produce robust steganographic techniques, marking the first successful application of the GAN in the domain of information hiding. This also provided insights into the integration of watermarking and deep learning.

On this basis, Zhu et al. [] presented an end-to-end watermarking framework based on deep neural networks (DNNs), named HiDDeN. This model incorporates the GAN into the watermark embedding process, thereby improving image quality, and introduces a noise layer that simulates common image processing operations to enhance resistance to noise attacks. Hao et al. [] proposed a GAN-based watermarking model that employs high-pass filters to select watermark embedding regions, improving watermark invisibility. To cope with non-differentiable JPEG noise, Jia et al. [] proposed a training strategy that alternates between “real JPEG” and “simulated JPEG” noise during training, which optimizes the network in different directions while keeping the update direction accurate. To address the limited receptive field of convolutional operations and the resulting insufficient capture of image features, Luo et al. [] leveraged the global modeling capability of the Transformer to capture long-range dependencies between the image and the watermark, thereby optimizing latent image features and facilitating effective fusion of the watermark and the cover image. Because the encoder and decoder are insufficiently coupled in the encoder-noise layer-decoder (END) framework, Fang et al. [] proposed a decoder-driven watermarking network named De-END. Unlike traditional END architectures, this method first feeds the cover image into the decoder for feature analysis and then passes the extracted latent image features to the encoder, so that the encoding and decoding processes share functionality.

Although deep learning-based 2D image watermarking methods have made significant progress in terms of watermarking performance, directly applying these models to depth-image-based rendering (DIBR) 3D images makes it difficult to effectively extract watermarks in a synthesized view, thereby leading to the failure of copyright protection.

2.2. DIBR 3D Image Watermarking

Unlike 2D image watermarking, DIBR 3D image watermarking requires copyright protection for both center and synthesized views. Halici et al. [] embedded watermarks in the spatial domain of center view texture maps and optimized watermarking by estimating the projection matrix of the synthesized view. Lee et al. [] leveraged the visual characteristics of depth information to identify occluded pixel regions in the center view after DIBR operations and embedded watermarks accordingly to ensure the image quality. However, these methods rely on the cover image during the watermark extraction process, which imposes certain limitations in practical applications. To enhance robustness against DIBR attacks, Kim et al. [] employed dual-tree complex wavelet transform (DT-CWT) to group coefficients and embedded watermarks into corresponding quantized coefficients. Nam et al. [] proposed a DIBR 3D watermarking method based on scale-invariant feature transform (SIFT), which selects embedding regions by exploiting the similarity in position and scale of SIFT key points between the virtual and center views, thereby improving the robustness to DIBR synchronization attacks. Nam et al. [] utilized the translation invariance of the non-subsampled contourlet transform (NSCT) to identify sub-bands resilient to DIBR attacks. In addition, this method dynamically adjusts the watermark embedding strength through perceptual masking value calculations, effectively ensuring watermarking invisibility.

Currently, most 3D digital watermarking methods operate in the transform domain to embed watermarks by modifying specific transform coefficients. However, these methods often exhibit limited robustness against various attacks and rely on manually designed features, restricting their generalization capability and making it challenging to ensure copyright protection for 3D images. Leveraging deep learning, He et al. [] proposed a bilateral attention-based generative adversarial network, which generates a visual attention mask by exploring the correlation between the center view and the depth map to dynamically adjust the watermarking distribution across different image regions. Moreover, by employing inter-channel and intra-channel weight learning, this method reduces redundancy in the depth map, facilitating a more effective fusion of the center view and depth information. While this model outperforms traditional watermarking methods, the design of two separate decoders limits its applicability across different scenarios.

3. Proposed Method

3.1. Preliminaries

The mamba architecture is built on the structured state space sequence model (S4) [], which uses the HiPPO matrix [] to produce latent states that preserve the history of the input. This mechanism is instrumental in capturing long-range dependencies within the data. The model maps a one-dimensional function or sequence $x(t) \in \mathbb{R}$ to an output $y(t) \in \mathbb{R}$ through a latent state $h(t) \in \mathbb{R}^{N}$. Within this system, $A \in \mathbb{R}^{N \times N}$ is the evolution parameter, and $B \in \mathbb{R}^{N \times 1}$ and $C \in \mathbb{R}^{1 \times N}$ are the projection parameters. Mathematically, the system can be written as:

$$h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t) \tag{1}$$

The S4 and mamba models are discrete versions of this continuous system. A timescale parameter $\Delta$ is introduced to transform the continuous parameters $A$ and $B$ into their discrete counterparts $\bar{A}$ and $\bar{B}$, typically using the zero-order hold (ZOH) method:

$$\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\left(\exp(\Delta A) - I\right) \cdot \Delta B \tag{2}$$

The discretized linear system can then be expressed as:

$$h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t \tag{3}$$

Finally, the output can be computed equivalently as a global convolution:

$$\bar{K} = \left(C\bar{B},\ C\bar{A}\bar{B},\ \ldots,\ C\bar{A}^{M-1}\bar{B}\right), \qquad y = x \ast \bar{K} \tag{4}$$

where $M$ is the length of the input sequence $x$ and $\bar{K} \in \mathbb{R}^{M}$ is a structured convolution kernel.
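To make the discretization and the two equivalent output forms concrete, the following minimal Python sketch applies ZOH to a diagonal state matrix and checks that the step-by-step recurrence and the global convolution give the same output. It assumes a diagonal A with non-zero (negative) entries, a single scalar input channel, and a zero initial state; the function names are illustrative and not taken from any mamba library.

```python
import math

def discretize_zoh(a, b, delta):
    """ZOH discretization for a diagonal A = diag(a) (non-zero entries) and vector B = b."""
    a_bar = [math.exp(delta * ai) for ai in a]
    # (delta*A)^-1 (exp(delta*A) - I) delta*B reduces, per diagonal entry, to (exp(delta*a) - 1) / a * b
    b_bar = [(math.exp(delta * ai) - 1.0) / ai * bi for ai, bi in zip(a, b)]
    return a_bar, b_bar

def ssm_recurrent(x, a_bar, b_bar, c):
    """Discrete recurrence h_t = A_bar h_{t-1} + B_bar x_t, y_t = C h_t, with h_{-1} = 0."""
    h = [0.0] * len(a_bar)
    ys = []
    for xt in x:
        h = [ab * hi + bb * xt for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys

def ssm_kernel(a_bar, b_bar, c, length):
    """Structured kernel K_k = C A_bar^k B_bar for k = 0 .. length-1."""
    return [sum(ci * ab ** k * bb for ci, ab, bb in zip(c, a_bar, b_bar)) for k in range(length)]

def ssm_convolutional(x, kernel):
    """Causal convolution y_t = sum_k K_k x_{t-k}."""
    return [sum(kernel[k] * x[t - k] for k in range(t + 1)) for t in range(len(x))]
```

Both forms agree. The convolutional form is what lets S4-style models with fixed parameters train in parallel; mamba makes its parameters input-dependent, which rules out a single fixed kernel and calls for a scan instead.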

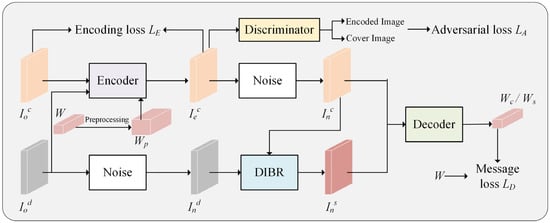

3.2. Overview of CIMGAN

As illustrated in Figure 1, the CIMGAN consists of four core components: the encoder Eθ, the noise layer N, the decoder Dφ, and the discriminator Aε, where θ, φ, and ε denote the trainable parameters of the encoder, decoder, and discriminator, respectively. These parameters are optimized iteratively during training, while the noise layer N, which has no trainable parameters, simulates noisy interference. The encoder takes the cover center view ($H \times W \times 3$) and the preprocessed L-bit binary watermark Wp and produces the encoded center view Ie ($H \times W \times 3$). The noise layer then applies a preset attack to produce the noised center view. The depth image ($H \times W \times 1$) is also subjected to noise, and a DIBR operation using the noised center view and the noised depth image generates a noisy virtual view. Finally, the decoder receives either the noised center view or the noisy virtual view and extracts the watermark Wc or Ws, respectively. The encoder and the discriminator are trained adversarially: the discriminator learns to distinguish watermarked images from cover images, while the encoder learns to make Ie visually indistinguishable from the cover image, thereby deceiving the discriminator. This adversarial training improves the watermark's imperceptibility and helps balance image quality against watermark robustness.

Figure 1.

The framework of the CIMGAN.

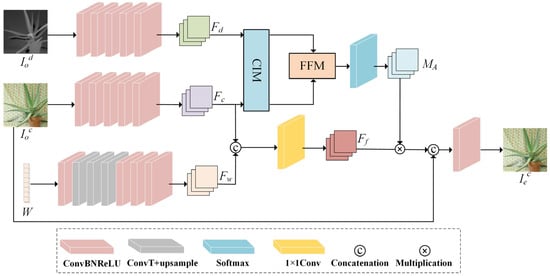

Encoder. The objective of the encoder is to embed a watermark into the robust features of the cover image while keeping visual distortion low, so comprehensive learning of image features is crucial. To achieve this, the CIMGAN exploits the correlation between RGB and depth maps through the CIM, which performs lightweight cross-modal interaction by swapping part of the channels between the RGB and depth data. The FFM then further exploits the inherent cross-modal relationship between RGB and depth features to enhance their respective representations and fuse them efficiently. This process yields depth-aware 3D image features, which serve as a weight matrix that guides the spatial distribution of the watermark, enhancing watermarking robustness while preserving visual quality.

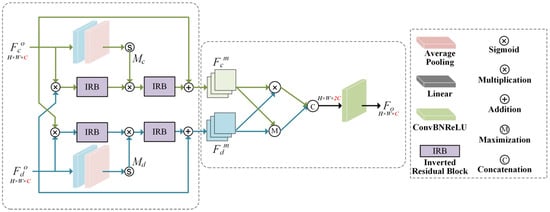

Specifically, as illustrated in Figure 2, the watermark W is passed through multiple convolution and upsampling layers to produce the watermark feature map $F_w \in \mathbb{R}^{H \times W \times C}$. Simultaneously, the center view and the depth image are processed by separate convolutions to extract the center view feature $F_c \in \mathbb{R}^{H \times W \times C}$ and the depth feature $F_d \in \mathbb{R}^{H \times W \times C}$. Fc and Fd are then fed into the CIM for an initial interaction between the cross-modal features, with the inherent long-range modeling capability of the mamba block capturing global feature dependencies. The FFM then filters out redundant features and produces the multi-modal fusion features. A Softmax function converts these features into the attention weight matrix MA, which adjusts the watermark distribution within the image feature Ff. Finally, the encoded image is produced through a combination of residual connections and convolution operations.

Figure 2.

Encoder structure.
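The encoder description states only that a Softmax turns the fused features into the attention matrix MA, which then shapes where the watermark is placed. The Python sketch below shows one plausible reading under explicit assumptions: MA is a single-channel map normalized by a softmax over all H × W positions, and the watermark features are added to the image features scaled by β · MA, with β the intensity factor listed in Section 4. The additive rule and the function names are hypothetical, not the CIMGAN's confirmed embedding step.

```python
import math

def spatial_softmax(scores):
    """Softmax over all H*W positions of a single-channel score map (nested lists)."""
    flat = [v for row in scores for v in row]
    peak = max(flat)  # subtract the maximum for numerical stability
    exps = [[math.exp(v - peak) for v in row] for row in scores]
    total = sum(sum(row) for row in exps)
    return [[e / total for e in row] for row in exps]

def embed(image_feat, wm_feat, scores, beta=1.0):
    """Hypothetical rule: add watermark features weighted by beta * attention."""
    attn = spatial_softmax(scores)
    return [[f + beta * a * w for f, a, w in zip(f_row, a_row, w_row)]
            for f_row, a_row, w_row in zip(image_feat, attn, wm_feat)]
```

Because a spatial softmax sums to one over the image, positions with higher fused-feature scores receive proportionally more of the watermark energy.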

The training objective of the encoder Eθ is to minimize the difference between the cover center view and the encoded image Ie by optimizing the parameters θ. The image loss function LC is defined as follows:

where MSE (·) denotes the mean square error and E(·) denotes the coding process.

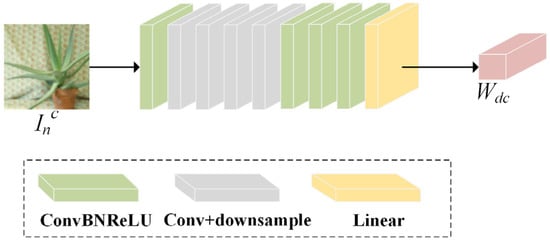

Decoder. The decoder extracts the watermark from the noised encoded image; as illustrated in Figure 3, this process is essentially the inverse of watermark embedding. Specifically, the decoder first extracts low-level features from the noised encoded image with a 3 × 3 convolutional layer that expands the channel dimension. Several convolutional downsampling operations then halve the spatial dimensions of the decoded features step by step while retaining key information. Finally, a convolution converts the decoded features into a single-channel output, which is flattened and mapped by a linear layer to the extracted watermark Wdc.

Figure 3.

Decoder structure.

The decoder Dφ aims to extract the complete watermark from both the noised center view and the synthesized view. Therefore, the decoder parameters φ are optimized to minimize the decoding loss LD:

where D(·) represents the decoding process.

Noise Layer. The noise layer improves the decoder's ability to extract the watermark from noised encoded images. It applies diverse attacks to the encoded image, implemented as differentiable network layers, so that real-world attack scenarios are simulated during iterative training and watermarking robustness improves. To further improve robustness and generalization, one attack is selected at random with equal probability during training. The noise layer N comprises four attacks: JPEG, Median Filter, Dropout, and Cropout. Although the model is trained on only these four attacks, it also generalizes well to attacks not seen during training.
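The equal-probability attack selection can be sketched in plain Python on a grayscale image stored as nested lists. This is a non-differentiable illustration of the selection logic only: Dropout and Cropout follow the common convention of mixing encoded and cover pixels (an assumption here), the median filter uses a 3 × 3 window with edge replication, and `coarse_quantize` is a crude stand-in for JPEG, not real JPEG compression. All parameter values (keep probability 0.5, crop ratio 0.5, quantization step 16) are hypothetical.

```python
import random

def dropout(encoded, cover, keep_prob, rng):
    """Keep each encoded pixel with probability keep_prob, otherwise use the cover pixel."""
    return [[e if rng.random() < keep_prob else c for e, c in zip(er, cr)]
            for er, cr in zip(encoded, cover)]

def cropout(encoded, cover, ratio, rng):
    """Keep a random rectangle of the encoded image; fill the rest from the cover image."""
    h, w = len(encoded), len(encoded[0])
    ch, cw = max(1, round(h * ratio)), max(1, round(w * ratio))
    top, left = rng.randint(0, h - ch), rng.randint(0, w - cw)
    return [[encoded[i][j] if top <= i < top + ch and left <= j < left + cw else cover[i][j]
             for j in range(w)] for i in range(h)]

def median_filter(img, k=3):
    """k x k median filter with edge replication."""
    h, w, r = len(img), len(img[0]), k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = sorted(img[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                            for di in range(-r, r + 1) for dj in range(-r, r + 1))
            row.append(window[len(window) // 2])
        out.append(row)
    return out

def coarse_quantize(img, step=16):
    """Crude stand-in for JPEG: uniform quantization of pixel values (NOT real JPEG)."""
    return [[step * round(v / step) for v in row] for row in img]

ATTACKS = ("jpeg_standin", "median", "dropout", "cropout")

def random_attack(encoded, cover, rng):
    """Pick one attack uniformly at random and apply it to the encoded image."""
    name = rng.choice(ATTACKS)
    if name == "jpeg_standin":
        return name, coarse_quantize(encoded)
    if name == "median":
        return name, median_filter(encoded)
    if name == "dropout":
        return name, dropout(encoded, cover, keep_prob=0.5, rng=rng)
    return name, cropout(encoded, cover, ratio=0.5, rng=rng)
```

In an actual noise layer these operations must be differentiable, or approximated by differentiable layers, so that gradients reach the encoder; the list-based functions above only illustrate the behavior of each attack and the uniform selection.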

Furthermore, the noised center view and the noised depth image generate the virtual view through DIBR, as follows:

where Render(·) represents the virtual view rendering operation. Because both inputs to Render(·) are perturbed by noise, the watermark carried into the synthesized view is further degraded, which makes extracting the watermark from the synthesized view more difficult.

Discriminator. In digital watermarking, the discriminator improves imperceptibility through its adversarial relationship with the encoder. The encoder embeds the watermark into the cover image and generates an encoded image that is visually indistinguishable from it, whereas the discriminator judges whether an input image contains a watermark by analyzing its feature distribution. This adversarial mechanism drives the encoder to produce encoded images that closely resemble the cover image in visual appearance. The discriminator consists of three 3 × 3 convolutional layers followed by global average pooling and a linear layer. As the encoder's adversary, the discriminator Aε iteratively updates its parameters ε to determine whether its input is a watermarked image. The discriminator loss LA is formulated as follows:

where A (·) represents the discrimination process.

Conversely, the encoder parameters θ are updated so that the encoded image resembles the cover image closely enough to mislead the discriminator:

The objective function of the CIMGAN comprises three components, the encoding loss, the adversarial loss, and the decoding loss, which are used to train the encoder and decoder jointly. The overall objective loss function is defined as follows:

where λ1 represents the encoding weight, λ2 represents the decoding weight, and λ3 represents the discriminator weight.
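As a hedged illustration of how the three terms combine, the following Python sketch weights them with the values reported in Section 4 (λ1 = 12, λ2 = 5, λ3 = 0.0001). It assumes MSE for the image and decoding losses, supervision of both the center-view and virtual-view watermarks, and a binary cross-entropy form for the adversarial terms in which A(·) outputs the probability that its input is watermarked. These forms and all function names are assumptions for illustration, not the paper's exact formulas.

```python
import math

LAMBDA_ENC, LAMBDA_DEC, LAMBDA_ADV = 12.0, 5.0, 0.0001  # weights reported in Section 4

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def discriminator_loss(p_cover, p_encoded, eps=1e-12):
    # Assumed BCE form: A(.) should output 0 for the cover and 1 for the encoded image.
    return -(math.log(1.0 - p_cover + eps) + math.log(p_encoded + eps))

def encoder_adversarial_loss(p_encoded, eps=1e-12):
    # Assumed BCE form: the encoder wants its output classified as "not watermarked".
    return -math.log(1.0 - p_encoded + eps)

def total_loss(cover, encoded, wm, wm_center, wm_virtual, p_encoded):
    """Weighted sum of encoding, decoding, and adversarial terms (hypothetical forms)."""
    l_c = mse(cover, encoded)
    l_d = mse(wm, wm_center) + mse(wm, wm_virtual)  # assumed: both views supervised
    l_g = encoder_adversarial_loss(p_encoded)
    return LAMBDA_ENC * l_c + LAMBDA_DEC * l_d + LAMBDA_ADV * l_g
```

The very small adversarial weight means the discriminator mainly nudges image statistics, while image fidelity and watermark recovery dominate the gradient.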

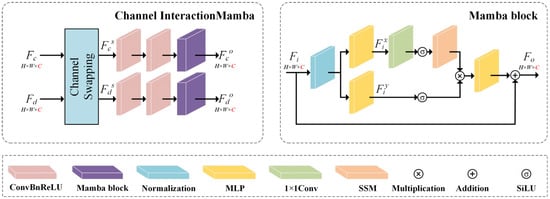

3.3. Channel Interaction Mamba (CIM)

Current deep learning-based DIBR 3D watermarking methods face two critical limitations: (1) During feature extraction, RGB and depth features are typically enhanced independently, without considering the collaborative dependencies between cross-modal features. (2) The lack of global feature modeling limits the overall watermarking performance.

The global modeling capability enables the model to comprehensively understand the intrinsic structure and contextual information, thereby significantly enhancing feature representation. However, global feature modeling typically entails substantial computational overhead, which somewhat limits its practical application in real-world scenarios. Similarly, cross-modal interactions can also be computationally expensive.

In a dual-modal object tracking method [], a channel swapping mechanism enables lightweight feature interaction and provides a richer and more diverse set of shallow features for subsequent deep feature extraction. Meanwhile, mamba achieves global modeling with linear complexity and demonstrates outstanding performance across various tasks [,]. Inspired by these advances, this paper proposes a channel interaction mamba (CIM), as illustrated in Figure 4 and Figure 5, to enhance watermarking performance while maintaining network efficiency. The proposed module strengthens the interaction between cross-modal features through channel exchange and leverages the global modeling capability of mamba to optimize the representation of RGB and depth features, improving overall watermarking performance.

Figure 4.

CIM structure.

Figure 5.

FFM structure.

Specifically, the inputs are the RGB feature $F_c \in \mathbb{R}^{H \times W \times C}$ and the depth feature $F_d \in \mathbb{R}^{H \times W \times C}$. Each feature is first split into two equal halves along the channel dimension. The first half of Fc is then concatenated with the second half of Fd, and the first half of Fd is concatenated with the second half of Fc. This channel-wise cross-concatenation places information from both modalities in each feature, providing the initial cross-modal interaction.
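A minimal Python sketch of the channel exchange, treating each feature as a list of C channel maps with C assumed even. The operation only reindexes channels, so it adds no parameters and no multiply-adds, which is why it is a lightweight way to mix modalities. Function and variable names are illustrative.

```python
def channel_exchange(f_c, f_d):
    """Cross-concatenate channel halves of the RGB feature f_c and the depth feature f_d."""
    if len(f_c) != len(f_d) or len(f_c) % 2:
        raise ValueError("both features need the same, even number of channels")
    half = len(f_c) // 2
    rgb_mixed = f_c[:half] + f_d[half:]    # first half of Fc + second half of Fd
    depth_mixed = f_d[:half] + f_c[half:]  # first half of Fd + second half of Fc
    return rgb_mixed, depth_mixed
```

For example, with channels labeled `c0..c3` and `d0..d3`, the outputs are `[c0, c1, d2, d3]` and `[d0, d1, c2, c3]`.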

Following the channel exchange operation, further refinement of the modified features is required. To achieve this, after performing local feature extraction through convolution layers, we introduce mamba blocks to process the interacted features. Unlike traditional attention mechanisms, mamba is based on the linear SSM, enabling global modeling of long sequences with linear complexity. It does not rely on explicit attention weight matrices, thereby significantly improving computational efficiency while maintaining effective modeling capability.

The feature refinement after channel exchange can be expressed as follows:

where Conv(·) represents the convolution layer and Mamba(·) denotes the mamba operation. The specific Mamba steps are as follows.

First, layer normalization is applied to the input image features $F_i \in \mathbb{R}^{H \times W \times C}$ for preliminary standardization. A multi-layer perceptron (MLP) then projects the normalized sequence into two branches: a main branch and a gating branch. The main branch is processed by a one-dimensional convolution followed by the SiLU activation function and is then projected to obtain the parameters $B$, $C$, and $\Delta$; the timescale parameter $\Delta$ is used to transform $A$ and $B$ into their discrete forms $\bar{A}$ and $\bar{B}$, following Equation (2). A state space model then computes the output, which is gated by the gating branch after SiLU activation. Finally, the result is added to the input Fi through a residual connection to produce the output sequence Fo.
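The selective state space step can be sketched for a single channel in plain Python. Under the stated simplifications, Δ, B, and C at each step are computed from the current input (here through hypothetical scalar weights `w_delta`, `w_b`, `w_c`), A is diagonal with negative entries, A and B are discretized with ZOH as in Equation (2), and the scan output is gated by SiLU of the gating branch and added to the residual. Layer normalization, the MLP projections, and the one-dimensional convolution are omitted, so the caller supplies the two branches directly; this illustrates the mechanism and is not the mamba reference implementation.

```python
import math

def silu(v):
    return v / (1.0 + math.exp(-v))

def softplus(v):
    # Numerically stable softplus keeps the time scale Delta positive.
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))

def selective_scan(x, a, w_b, w_c, w_delta, b_delta=0.0):
    """Single-channel selective SSM with a diagonal A (all entries negative)."""
    h = [0.0] * len(a)
    ys = []
    for xt in x:
        delta = softplus(w_delta * xt + b_delta)     # input-dependent Delta_t
        y = 0.0
        for n, (an, wb, wc) in enumerate(zip(a, w_b, w_c)):
            a_bar = math.exp(delta * an)             # ZOH for A, Eq. (2)
            b_bar = (a_bar - 1.0) / an * (wb * xt)   # ZOH with input-dependent B_t = wb * x_t
            h[n] = a_bar * h[n] + b_bar * xt         # discrete state recurrence
            y += (wc * xt) * h[n]                    # input-dependent C_t = wc * x_t
        ys.append(y)
    return ys

def gated_block(x_branch, z_branch, residual, a, w_b, w_c, w_delta):
    """SiLU on the main branch, selective scan, SiLU gate, then residual add."""
    y = selective_scan([silu(v) for v in x_branch], a, w_b, w_c, w_delta)
    return [yi * silu(zi) + ri for yi, zi, ri in zip(y, z_branch, residual)]
```

Because Δ changes with every input, the state can retain or forget information selectively, which is what distinguishes mamba from the fixed-parameter S4 recurrence.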

3.4. Feature Fusion Module (FFM)

Existing DIBR 3D watermarking methods typically fuse RGB and depth information into 3D image features by simple concatenation. However, because of the inherent discrepancies between modalities, such direct fusion often limits the effectiveness of the feature representation. In RGB-D saliency detection tasks [,], cross-modal interaction is commonly used to reduce modality gaps and obtain effective image features. Inspired by this, obtaining 3D image features that benefit watermark embedding requires an efficient cross-modal fusion strategy that filters out redundant features while fusing the modalities effectively.

The CIM has already accomplished cross-modal interaction at the channel level. Building upon this, we further exploit the intrinsic correlations between modalities to enhance feature representation, which is then combined with an effective fusion strategy to derive the final 3D image features. These 3D image features will subsequently be transformed into an attention matrix to adjust the watermark distribution in the encoded image, thereby improving both robustness and imperceptibility. The complete architecture of the FFM is illustrated in Figure 5.

Specifically, the center view feature is first passed through global average pooling and a linear layer, and the sigmoid function then generates the RGB weight map Mc. The process is defined as follows:

where GAP (·) represents global average pooling, Linear(·) denotes the linear layer, and Sigmoid(·) represents the sigmoid activation function.
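The weight-map computation can be illustrated with a small Python sketch on a C × H × W feature stored as nested lists. The linear layer's weights and bias are hypothetical inputs, and multiplying each channel by its weight (`reweight`) is only one plausible way Mc could guide the features; the text does not specify that step.

```python
import math

def global_average_pool(feat):
    """C x H x W nested lists -> list of C channel means."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]

def channel_weight_map(feat, weight, bias):
    """Sigmoid(Linear(GAP(feat))): one weight in (0, 1) per output channel."""
    pooled = global_average_pool(feat)
    logits = [sum(w * p for w, p in zip(w_row, pooled)) + b for w_row, b in zip(weight, bias)]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

def reweight(feat, m):
    """Hypothetical use of the map: scale every channel by its weight."""
    return [[[m_c * v for v in row] for row in ch] for ch, m_c in zip(feat, m)]
```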

In this study, cross-modal communication between the RGB and depth features is achieved through matrix multiplication, which captures the complementary information in the two modalities. The resulting features are then further processed by an inverted residual block (IRB). Guided by Mc, this process retains pivotal information while filtering out components irrelevant to the watermark. Finally, the enhanced RGB feature is generated through a combination of the IRB and residual connections. The detailed procedure is defined as follows:

where IRB(·) denotes the inverted residual block, which combines a 1 × 1 convolution with a depthwise separable convolution. This configuration reduces computational complexity while preserving strong feature extraction capability.
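The efficiency argument can be checked with a quick parameter count. The Python sketch compares a dense k × k convolution with a depthwise separable one (a depthwise k × k convolution followed by a pointwise 1 × 1 convolution), ignoring biases; the channel count of 64 used in the example is hypothetical, not the CIMGAN's setting.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (bias ignored)."""
    return c_in * c_out * k * k

def depthwise_separable_params(c_in, c_out, k):
    """k x k depthwise conv (one filter per input channel) + 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out
```

For 64 input and output channels and k = 3, the dense convolution needs 36,864 weights, while the depthwise separable version needs 4,672, roughly 7.9 times fewer.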

Similarly to the RGB branch, the depth feature generates the depth weight map Md and, through interaction with the RGB feature, outputs the enhanced depth feature.

After the enhanced cross-modal features are obtained, they are fused using an element-wise maximum and element-wise multiplication. The element-wise product captures the correlations between the features, mitigating the discrepancies between the RGB and depth images, while the maximum operation extracts the most salient feature information. The results of both operations are concatenated along the channel dimension and adaptively weighted by a trainable convolutional layer, yielding the feature representation Ff, which carries comprehensive 3D information. The fusion process can be described as follows:

where CBR(·) comprises a 3 × 3 convolution, batch normalization, and the ReLU activation function, and Max(·) denotes the element-wise maximum operation.

4. Experimental Results

The proposed CIMGAN was implemented in PyTorch [] and run on an NVIDIA RTX 4090. For training, 5000 pairs of DIBR 3D images were randomly selected from the SUN RGB-D dataset []. To assess the generalization capability of the CIMGAN, cross-dataset evaluation was conducted on SUN RGB-D [], the Microsoft Research 3D video dataset (MSR) [], the Heinrich Hertz Institute (HHI) dataset [], and the Middlebury Stereo dataset (MSD) [], each containing 200 pairs of DIBR 3D images. During both training and testing, all images were standardized to 256 × 256 × 3. The watermark length L was set to 32, the batch size to 4, and the intensity factor β to 1. The network was optimized using the Adam optimizer [] with a learning rate of $10^{-3}$. Based on experimental validation, the loss weights were set to λ1 = 12, λ2 = 5, and λ3 = 0.0001.

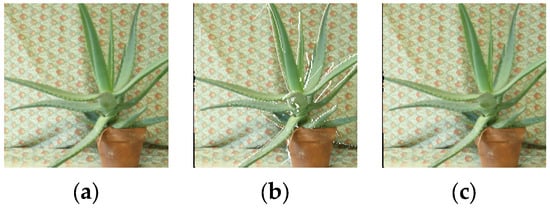

In DIBR, the baseline distance tx was set to 5% of the center view width during training to accommodate most users’ viewing habits [], with additional testing conducted at baseline distances ranging from 3% to 7%. The depth map was normalized within the range of Zfar = tx/2 to Znear = 1, with the focal length f fixed at 1. Furthermore, Gaussian filtering was applied for depth map preprocessing, where the horizontal standard deviation σh and vertical standard deviation σv were set to 10 and 30, respectively. As illustrated in Figure 6, the white holes represent 3D objects that are occluded in the center view but visible in the virtual view. These holes are filled using linear interpolation to generate the final virtual view image. Based on these parameters, the rendered left view is evaluated.

Figure 6.

Image synthesis based on DIBR. (a) Center view of cover, (b) distorted center view of 3D image, and (c) virtual view image after hole filling.
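A simplified DIBR sketch in Python illustrates the warping and hole filling described above for one grayscale image. It assumes depth values in [0, 255] with larger values meaning nearer, a linear mapping from depth value to horizontal shift whose maximum is f · tx / 2 pixels with tx expressed as a fraction of the image width, a z-buffer so that nearer pixels win collisions, and linear interpolation along each row to fill holes. The Gaussian pre-filtering of the depth map and the exact Znear/Zfar mapping are omitted, and the function names and shift direction are hypothetical, so this is not the rendering code used in the experiments.

```python
def fill_holes(row):
    """Fill None entries by linear interpolation between the nearest valid neighbors."""
    valid = [i for i, v in enumerate(row) if v is not None]
    if not valid:
        raise ValueError("row has no valid pixels")
    filled = list(row)
    for i, v in enumerate(row):
        if v is not None:
            continue
        left = max((k for k in valid if k < i), default=None)
        right = min((k for k in valid if k > i), default=None)
        if left is None:
            filled[i] = row[right]   # hole at the left border: copy nearest valid pixel
        elif right is None:
            filled[i] = row[left]    # hole at the right border
        else:
            t = (i - left) / (right - left)
            filled[i] = (1 - t) * row[left] + t * row[right]
    return filled

def render_row(colors, depths, max_shift, direction=1):
    """Warp one row horizontally; nearer pixels (larger depth value) overwrite farther ones."""
    w = len(colors)
    out, zbuf = [None] * w, [-1] * w
    for x, (c, v) in enumerate(zip(colors, depths)):
        xt = x + direction * round(max_shift * v / 255)
        if 0 <= xt < w and v > zbuf[xt]:
            out[xt], zbuf[xt] = c, v
    return fill_holes(out)

def render_view(image, depth, baseline_ratio=0.05, focal=1.0, direction=1):
    """Hypothetical mapping: maximum shift of focal * (baseline_ratio * width) / 2 pixels."""
    max_shift = focal * baseline_ratio * len(image[0]) / 2
    return [render_row(r, d, max_shift, direction) for r, d in zip(image, depth)]
```

With a 256-pixel-wide image and tx at 5% of the width, the nearest pixels in this simplified model move by about 6 pixels, and the exposed gaps are the holes that interpolation fills.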

This study evaluated watermarking imperceptibility using the Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM), and watermarking robustness using the Bit Error Rate (BER). To demonstrate the effectiveness of the proposed CIMGAN, comparative experiments were conducted with four benchmark methods: BAGAN [], DTCWT-based [], SIFT-based [], and NSCT-based [] methods. Specifically, BAGAN [] is a deep learning-based method that removes redundancy from the depth map and uses the resulting features to compute a 3D image attention map, thereby identifying imperceptible regions for watermark embedding. The SIFT-based [] method enhances robustness against DIBR synchronization attacks by exploiting the similarity of SIFT parameters between the virtual and center views. The DTCWT-based [] and NSCT-based [] methods embed watermarks into the DT-CWT and NSCT domains, respectively, to exploit their translation invariance and thereby improve watermarking robustness. Notably, the SIFT-based method [] embeds 12-bit watermark information, while all other methods use a consistent 32-bit watermarking capacity to ensure a fair comparison. For objective benchmarking, the results of the compared methods are cited directly from their published studies.
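For reference, PSNR and BER can be computed as in the following Python sketch on flattened pixel values and bit lists. SSIM, which relies on local windows, is omitted for brevity, and the 0.5 threshold in `binarize` is an assumed convention for turning decoder outputs into bits.

```python
import math

def psnr(reference, test, max_val=255.0):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)

def binarize(values, threshold=0.5):
    """Map decoder outputs to bits (threshold is an assumed convention)."""
    return [1 if v >= threshold else 0 for v in values]

def ber(bits_true, bits_pred):
    """Fraction of watermark bits that were decoded incorrectly."""
    if len(bits_true) != len(bits_pred):
        raise ValueError("watermarks must have the same length")
    return sum(t != p for t, p in zip(bits_true, bits_pred)) / len(bits_true)
```

With a 32-bit watermark, each wrong bit adds 1/32 = 0.03125 to the BER, so a BER of 0 means every bit was recovered.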

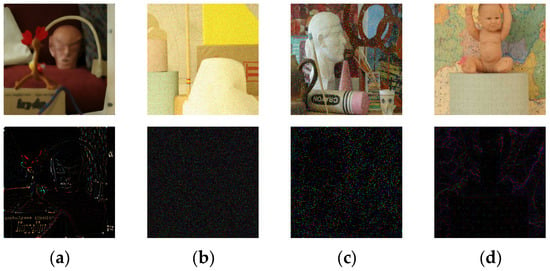

4.1. Watermarking Invisibility Evaluation

To evaluate the subjective visual quality of the encoded images, this section tests the center view; the results are shown in Figure 7. From top to bottom, the rows show the cover view, the encoded view, the noised view, and the residual map (the absolute difference image, amplified three times). The encoded images are nearly indistinguishable from the cover images, indicating strong watermark imperceptibility. From left to right, the noised views were produced by Median Filter (7), Gaussian noise (0.01), Salt & Pepper (0.04), and JPEG (50). The residual maps show that each distortion changes the image substantially, whereas the watermark itself remains visually imperceptible in these maps.

Figure 7.

Visualization of the encoded view. (a) Median Filter (7), (b) Gaussian noise (0.01), (c) Salt & Pepper (0.04), and (d) JPEG (50).

To evaluate watermark imperceptibility objectively, the quality of the watermarked center views was compared, as shown in Table 1. In terms of PSNR, the CIMGAN achieved 45.32 dB, second only to the SIFT-based method [], which embeds fewer bits (12 versus 32), and higher than all other benchmark methods. In terms of SSIM, the CIMGAN attained 0.9948, exceeding the DTCWT-based method [] and indicating better visual quality than the other methods. Taken together, the PSNR and SSIM results indicate that the CIMGAN maintains high visual quality at an adequate watermark capacity, achieving the best trade-off between imperceptibility and capacity among the compared methods.

Table 1.

PSNR and SSIM of center view.

4.2. Robustness on DIBR

In DIBR-based 3D image watermarking methods, the watermark is typically embedded in the center view and then propagated to the virtual view through DIBR, thereby protecting the copyright of both the center and the virtual views. However, the DIBR process itself acts as an attack: it alters the spatial distribution of the watermark and consequently degrades the extraction performance of the decoder. To evaluate robustness against DIBR attacks, watermark extraction from the center view was first tested on different datasets. Table 2 shows that the CIMGAN achieved the best extraction performance, recovering all embedded watermark bits, whereas the conventional methods performed considerably worse. These results demonstrate the advantage of the CIMGAN for DIBR 3D image watermarking.

Table 2.

BER of center view of different datasets.
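The DIBR attack described above can be illustrated with a simple forward-warping renderer. This is a minimal sketch, not the rendering pipeline used in the experiments. It assumes an 8-bit depth map in which larger values are nearer to the camera, and a hypothetical linear mapping in which the nearest depth level is shifted by half the baseline $t_x$ (given as a fraction of the width W). A z-buffer keeps the nearest pixel when several pixels land on the same location, and disoccluded holes are filled from their left neighbor.

```python
import numpy as np


def render_virtual_view(center, depth, tx_ratio=0.05, direction=1):
    """Forward-warp `center` (H, W[, C]) horizontally using an 8-bit depth map.

    Hypothetical mapping: shift = direction * (tx_ratio * W / 2) * depth / 255.
    Returns the warped view and a boolean mask of disoccluded (hole) pixels.
    """
    h, w = depth.shape
    shift = np.rint(direction * (tx_ratio * w / 2.0) * depth / 255.0).astype(int)
    virtual = np.zeros_like(center)
    zbuf = np.full((h, w), -1, dtype=np.int32)  # nearest depth written so far
    hole = np.ones((h, w), dtype=bool)
    for y in range(h):
        for x in range(w):
            xv = x + shift[y, x]
            # Keep the nearer pixel when two source pixels map to the same target.
            if 0 <= xv < w and depth[y, x] > zbuf[y, xv]:
                zbuf[y, xv] = depth[y, x]
                virtual[y, xv] = center[y, x]
                hole[y, xv] = False
    return virtual, hole


def fill_holes(view, hole):
    """Fill each hole with its left neighbor (simple horizontal filling)."""
    out = view.copy()
    for y, x in zip(*np.nonzero(hole)):  # row-major order: left to right in each row
        if x > 0:
            out[y, x] = out[y, x - 1]
    return out
```

Because every pixel moves by an amount that depends on its depth, a watermark embedded at fixed positions in the center view ends up at shifted positions in the virtual view. This is the desynchronization that the decoder has to tolerate.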

Next, the watermark extraction performance of the five methods on the center and virtual views was compared, as listed in Table 3. The proposed method achieved the lowest BER in all tests. This result further verifies that the CIMGAN not only extracts the watermark reliably from the center view but also effectively protects the copyright of the virtual view, confirming its effectiveness for the copyright protection of 3D images.

Table 3.

BER of center views and virtual views.

In addition, different baseline distances $t_x$ were tested. As one of the key parameters that determine disparity, $t_x$ governs the horizontal pixel displacement and therefore affects watermark extraction in the virtual view. In this experiment, $t_x$ was varied from 3% to 7% of the center view width $W$. As shown in Table 4, the CIMGAN achieved the lowest average BER among all methods and kept its BER relatively stable across baseline distances, indicating strong robustness to baseline distance variations.

Table 4.

BER of different baseline distance ratios.
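How $t_x$ controls the horizontal displacement can be made concrete with the shift-sensor relation used in DIBR (Fehn), in which a pixel at depth $Z$ moves by $(f\,t_x/2)(1/Z - 1/Z_c)$, where $Z_c$ is the zero-parallax depth. The sketch below is illustrative only. It assumes the common inverse-depth quantization $1/Z = (v/255)(1/Z_{near} - 1/Z_{far}) + 1/Z_{far}$ for an 8-bit depth value $v$, a hypothetical width of 1024 pixels, $f = 1$, and hypothetical clipping planes. Sign conventions also differ between left and right virtual views. The point is that the displacement grows linearly with $t_x$, so a wider baseline moves pixels further from their embedded positions.

```python
import numpy as np


def inverse_depth(v, z_near, z_far):
    """Map 8-bit depth values (255 = nearest) to 1/Z (assumed inverse-depth quantization)."""
    v = np.asarray(v, dtype=np.float64)
    return (v / 255.0) * (1.0 / z_near - 1.0 / z_far) + 1.0 / z_far


def pixel_shift(v, tx, focal, z_near, z_far, z_c):
    """Horizontal displacement (f * tx / 2) * (1/Z - 1/Z_c) of the shift-sensor model."""
    return (focal * tx / 2.0) * (inverse_depth(v, z_near, z_far) - 1.0 / z_c)


W = 1024  # hypothetical image width in pixels
for ratio in (0.03, 0.04, 0.05, 0.06, 0.07):
    tx = ratio * W  # baseline expressed in pixels (assumption)
    s = pixel_shift(255, tx, focal=1.0, z_near=1.0, z_far=10.0, z_c=10.0)
    print(f"tx = {ratio:.0%} of W -> nearest-pixel shift {float(s):.2f} px")
```

Setting $Z_c = Z_{far}$ places the zero-parallax plane at the background, so background pixels stay put and only foreground pixels move.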

4.3. Robustness in Common Image Processing

4.3.1. Robustness of Center View

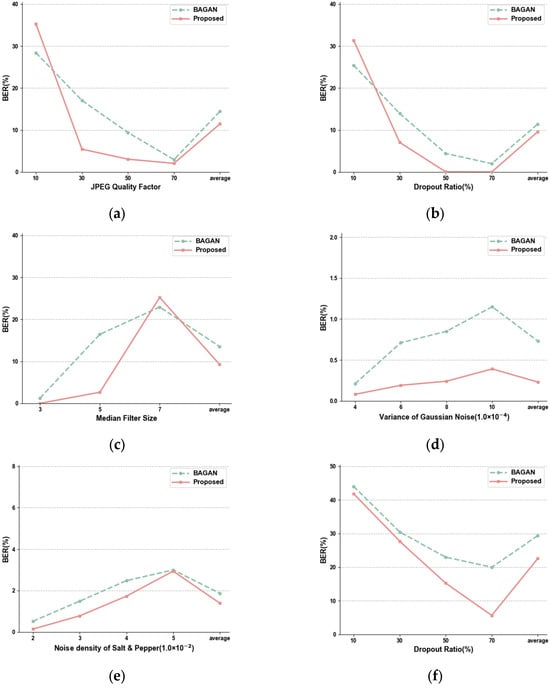

To validate the robustness of the proposed CIMGAN, watermark extraction from the center view was evaluated and compared with the deep learning-based BAGAN [] under various noise strengths, as illustrated in Figure 8.

Figure 8.

Watermark extraction from the center view compared with BAGAN. (a) JPEG. (b) Dropout. (c) Median Filter. (d) Gaussian Noise. (e) Salt & Pepper. (f) Median Filter (5) + Dropout.

First, the noise types used during training were analyzed. JPEG compression is a common image processing attack, and the CIMGAN showed a lower average BER under it. Its robustness dropped noticeably only at a quality factor of 10, confirming that the CIMGAN is strongly robust to JPEG compression and can extract the watermark accurately. Dropout removes the watermark signal from randomly selected pixels, which impedes watermark extraction. BAGAN [] performed better at lower Dropout coefficients, whereas the CIMGAN was more robust at common attack intensities. For the Median Filter, the CIMGAN maintained lower average BER values overall, with performance degrading only at a 7 × 7 window size. Overall, these results indicate that the CIMGAN is robust against the noise types seen in training, meeting practical application requirements.
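The Dropout and Median Filter attacks can be emulated in a few lines. This is a hedged NumPy sketch that assumes images in [0, 1] and a HiDDeN-style Dropout, in which a `keep_ratio` fraction of encoded pixels is kept and the rest are replaced by cover pixels. Two points are assumptions here: whether the paper's Dropout coefficient denotes the kept or the dropped fraction, and the order of the combined Median Filter (5) + Dropout attack. The helper names are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(img, k=7):
    """k x k median filter over the spatial axes of an (H, W[, C]) image."""
    p = k // 2
    pad = ((p, p), (p, p)) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img, pad, mode="reflect")
    windows = sliding_window_view(padded, (k, k), axis=(0, 1))  # (H, W[, C], k, k)
    return np.median(windows, axis=(-2, -1))


def dropout(encoded, cover, keep_ratio=0.5, rng=None):
    """Keep a random `keep_ratio` fraction of encoded pixels; take the rest from the cover."""
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(encoded.shape[:2]) < keep_ratio
    if encoded.ndim == 3:
        keep = keep[..., None]  # broadcast the pixel mask over color channels
    return np.where(keep, encoded, cover)


def median_then_dropout(encoded, cover, k=5, keep_ratio=0.5, rng=None):
    """Combined attack; applying the filter before Dropout is an assumed order."""
    return dropout(median_filter(encoded, k), cover, keep_ratio, rng)
```

Replacing dropped pixels with cover pixels, rather than zeros, removes the watermark signal at those locations without introducing visible holes. This matches the description of Dropout as eliminating watermark information in certain pixels.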

Next, performance against noise types not used in training was examined. Under Gaussian noise, both the CIMGAN and BAGAN [] performed well, with BER values consistently below 1%, and the CIMGAN was less sensitive to variations in noise intensity. Under Salt & Pepper noise and the combined attack (Median Filter (5) + Dropout), the robustness of the CIMGAN is limited, but it is still higher than that of BAGAN []. These results indicate that the CIMGAN generalizes better than BAGAN [] to noise attacks not seen during training.

4.3.2. Robustness of Synthesized View

Image processing attacks tend to degrade watermark extraction accuracy in the virtual view. Therefore, the robustness of the virtual view against common image processing attacks was evaluated, as shown in Table 5. Compared with BAGAN [], the CIMGAN was slightly less robust only under Gaussian noise (0.0002) and performed better under all other attack conditions; its lower average BER indicates stronger overall robustness. Although the SIFT-based method [] performed best under JPEG (90), its embedding capacity is limited to 12 bits. Across the various attack types and intensities, the CIMGAN also outperformed the NSCT-based [] and DTCWT-based [] methods. Overall, the CIMGAN achieved the lowest average BER for virtual view watermark extraction, demonstrating strong robustness against common image processing attacks.

Table 5.

Watermark extraction from the synthesized view.

4.4. Comparison Under the NYU Dataset

To further evaluate the generalization performance of the CIMGAN in different scenarios, the NYU dataset [] was used as the training set. This dataset contains a variety of indoor scene video sequences captured by a Microsoft Kinect RGB-D camera and consists of 1700 RGB-D image pairs. In addition to the deep learning model BAGAN [], a modified version of WFormer [] was included for comparison, in which a loss term for decoding from synthesized views was added.

As shown in Table 6, the CIMGAN outperformed BAGAN [] and WFormer [] in terms of PSNR and achieved SSIM comparable to that of BAGAN []. The PSNR of WFormer [] was approximately 0.5 dB lower than that of the CIMGAN: although WFormer [] employs numerous Transformer blocks for watermark fusion, it does not consider depth features, which degrades image quality. BAGAN [] lacks global modeling of cross-modal features, making it difficult to focus on imperceptible regions. In contrast, the CIMGAN achieves high image quality by using the CIM for cross-modal feature enhancement and interaction and the FFM to obtain the 3D image attention used for watermark embedding.

Table 6.

The PSNR and SSIM of the center view under the NYU dataset.

In terms of watermark robustness, as shown in Table 7, WFormer [] performed worst, especially under Salt & Pepper and Resize noise. In contrast, BAGAN [] effectively exploited depth information to improve robustness, with average BER values of 0.0185 and 0.0315 for the center and virtual views, respectively. However, owing to insufficient interaction between depth and RGB information and the lack of global modeling, its BERs were higher than those of the CIMGAN. Although BAGAN [] achieved slightly lower BERs than the CIMGAN under Resize, the CIMGAN achieved the best performance across all noise types considered.

Table 7.

The robustness of different watermarking models.

4.5. Statistical Significance Analysis

To further validate the effectiveness of the CIMGAN, statistical significance tests were conducted on the PSNR, SSIM, and BER metrics against the comparison methods. Specifically, paired t-tests at the 95% confidence level were performed on 800 test samples to determine whether the mean PSNR, SSIM, and BER differ significantly between the CIMGAN and each compared method. In Table 8 and Table 9, “1” indicates that the CIMGAN performs statistically better than the compared method, “0” indicates no statistically significant difference, and “−1” indicates that the CIMGAN performs statistically worse. As shown in Table 8, the CIMGAN shows statistically significant advantages in visual quality and is inferior only to BAGAN [] in terms of SSIM; however, the mean SSIM difference between the two methods is minimal and visually indistinguishable. Regarding robustness, Table 9 shows that the CIMGAN generally outperforms BAGAN [] and WFormer [].

Table 8.

Paired t-test results across different methods. (a) PSNR; (b) SSIM.

Table 9.

Paired t-test results of different methods under different noise attacks.
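The ternary codes in Tables 8 and 9 can be derived from per-image scores with a paired t-test. This minimal sketch computes the paired t statistic directly. As a large-sample approximation, it compares the statistic with the standard normal critical value (about 1.96 for a two-sided test at α = 0.05), which is very close to the exact t critical value for 799 degrees of freedom; `scipy.stats.ttest_rel` gives the exact p-value. The `higher_is_better` flag is needed because a lower BER is better, whereas a higher PSNR or SSIM is better. The function names are illustrative.

```python
import math
from statistics import NormalDist

import numpy as np


def paired_t_statistic(ours, theirs) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) for the paired differences d = ours - theirs."""
    d = np.asarray(ours, dtype=np.float64) - np.asarray(theirs, dtype=np.float64)
    mean, sd = float(d.mean()), float(d.std(ddof=1))
    if sd == 0.0:
        return 0.0 if mean == 0.0 else math.copysign(math.inf, mean)
    return mean / (sd / math.sqrt(d.size))


def significance_code(ours, theirs, higher_is_better=True, alpha=0.05) -> int:
    """1: ours significantly better, 0: no significant difference, -1: significantly worse."""
    t = paired_t_statistic(ours, theirs)
    critical = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # normal approximation
    if abs(t) < critical:
        return 0
    ours_better = t > 0 if higher_is_better else t < 0
    return 1 if ours_better else -1
```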

4.6. Ablation Study

(1) Effectiveness of CIM and FFM: In the encoder of the CIMGAN, the CIM and FFM determine the watermark embedding position and strength. The CIM facilitates interaction between the center view and the depth map and enables global modeling of image features. The FFM further filters out features irrelevant to the watermark and refines the modal features for fusion, which guides the spatial distribution of the watermark in the image and enhances robustness. To verify the effectiveness of these two modules, four comparative models were designed. Model#1 removes both the CIM and the FFM and generates the attention map $M_A$ directly from the center view feature $F_c$. Model#2 removes the channel interaction operation of the CIM. Model#3 removes the mamba block of the CIM to evaluate the importance of global modeling. Model#4 removes the cross-modal interaction process in the FFM and directly fuses its two input features.
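The channel interaction removed in Model#2 can be illustrated with a parameter-free channel exchange, in the spirit of the channel swapping idea used for RGB-T fusion (Luan et al.). The sketch below is hypothetical. It assumes (C, H, W) feature maps and swaps every `period`-th channel between the RGB and depth branches, whereas the selection rule actually used in the CIM may differ. Because it only copies tensors, it adds no learnable parameters, which is what makes this kind of interaction lightweight.

```python
import numpy as np


def channel_exchange(f_rgb, f_depth, period=2):
    """Swap every `period`-th channel between two (C, H, W) feature maps."""
    if f_rgb.shape != f_depth.shape:
        raise ValueError("feature maps must have the same shape")
    swap = np.arange(f_rgb.shape[0]) % period == 0  # channels 0, period, 2*period, ...
    out_rgb, out_depth = f_rgb.copy(), f_depth.copy()
    out_rgb[swap] = f_depth[swap]
    out_depth[swap] = f_rgb[swap]
    return out_rgb, out_depth


rgb = np.zeros((4, 2, 2))  # stand-in RGB-branch features
dep = np.ones((4, 2, 2))   # stand-in depth-branch features
mixed_rgb, mixed_dep = channel_exchange(rgb, dep)
print(mixed_rgb[:, 0, 0])  # [1. 0. 1. 0.]
```

After the exchange, each branch carries a mix of RGB and depth channels. A subsequent global model, such as a mamba block, can then relate the two modalities within a single feature tensor.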

According to Table 10 and Table 11, Model#1 performed worst on all metrics. This is mainly because it does not use depth information to optimize the image feature representation and relies only on RGB features as the source of image attention; moreover, without global feature modeling, its image feature extraction is suboptimal. In contrast, Model#2 achieved image quality comparable to that of the CIMGAN but showed a noticeable decrease in robustness. This gap underscores the importance of the channel interaction operation, which provides an initial integration of the modalities and thus allows the model to better identify robust watermarking regions by leveraging spatial information from the depth map.

Table 10.

Invisibility comparisons of four watermarking models.

Table 11.

Robustness comparisons of four watermarking models.

Model#3, obtained by removing the mamba block from the CIMGAN, showed degraded image quality and robustness. This degradation occurs because the channel-exchanged features lack global modeling, and the limited receptive field fails to locate regions that balance invisibility and robustness. Compared with Model#4, the CIMGAN achieved better imperceptibility and robustness. This advantage stems from its cross-modal interaction mechanism, which enhances feature representation by exploiting intrinsic correlations between modalities, enabling more accurate selection of watermark regions and improving overall performance.

5. Discussion of Computational Cost

To evaluate the computational cost of the DIBR 3D watermarking model, the CIMGAN was compared with BAGAN [] and the 2D watermarking method WFormer [] in terms of model size, time complexity, and runtime, as shown in Table 12. Model size was measured by the number of parameters, time complexity by the number of floating point operations (FLOPs), and runtime by the running time per image. Together, these metrics provide a multidimensional evaluation of the models' computational performance.

Table 12.

Computational cost comparisons.
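Parameter and FLOP counts such as those in Table 12 are typically obtained per layer and then summed. As an illustration only, the sketch below counts them for a single 2D convolution and times a callable per image. Many profiling tools report multiply-accumulate operations (MACs) under the name FLOPs, while others count each MAC as two FLOPs, so counts from different tools are comparable only under the same convention. The helper names and the example layer are hypothetical.

```python
import time

import numpy as np


def conv2d_cost(c_in, c_out, k, h_out, w_out, bias=True):
    """Parameters and multiply-accumulates (MACs) of one k x k convolution."""
    params = c_out * c_in * k * k + (c_out if bias else 0)
    macs = c_out * c_in * k * k * h_out * w_out
    return params, macs


def seconds_per_image(fn, images, warmup=2):
    """Average wall-clock time of fn(image) after a few warm-up calls."""
    for img in images[:warmup]:
        fn(img)
    start = time.perf_counter()
    for img in images:
        fn(img)
    return (time.perf_counter() - start) / len(images)


params, macs = conv2d_cost(c_in=3, c_out=64, k=3, h_out=128, w_out=128)
print(params, macs, 2 * macs)  # 1792 28311552 56623104
```

For GPU inference, the device must be synchronized before the timer is read. Otherwise, asynchronous kernel launches make the measured time per image misleadingly small.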

In terms of model size, both BAGAN [] and the CIMGAN have relatively few parameters, whereas the Transformer-based WFormer [] has comparatively more. In terms of FLOPs, WFormer [] is the lowest because it performs no cross-modal interaction, and the CIMGAN requires significantly fewer FLOPs than BAGAN []. This is primarily because the CIMGAN uses the mamba block, whose computational complexity is linear in sequence length, for cross-modal feature extraction, avoiding the high overhead typically associated with cross-modal interaction. In addition, the lightweight network architecture used for cross-modal feature fusion further reduces the computational burden. In terms of runtime, the CIMGAN is faster than both BAGAN [] and WFormer [], with an average processing time of 0.0187 s per image. This advantage stems from mamba's hardware-aware algorithm design, which maintains high inference speed without sacrificing model performance.

To further explore the computational advantages of the mamba block, it was compared with Transformer-based alternatives, namely Restormer [] and Swin Transformer []. As shown in Table 13, mamba achieves the best results in parameter count, FLOPs, and running time; in particular, it reduces FLOPs by more than 20G, substantially improving computational efficiency. This is mainly because Transformers rely on self-attention, which computes pairwise dependencies between all elements of a sequence; standard global self-attention therefore has time complexity $O(n^2)$, so its cost grows quadratically with the sequence length $n$. In contrast, mamba uses a state space model (SSM) that captures sequence information through recurrent state space transformations, giving a time complexity that is linear in the sequence length, $O(n)$, and substantially reducing computational overhead for long sequences.

Table 13.

Computational cost comparisons of mamba and Transformer.
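The complexity argument can be made concrete with a toy comparison. The sketch below implements a time-invariant diagonal state space recurrence, $h_t = a \odot h_{t-1} + b\,x_t$, $y_t = c^\top h_t$, whose cost is proportional to $n$ times the state size $N$, and compares it with the $n \times n$ score matrix of global self-attention. It is a simplification: mamba's selective scan makes the SSM parameters input-dependent and uses a hardware-aware parallel implementation, and this loop reproduces neither. The feature map size is hypothetical.

```python
import numpy as np


def ssm_scan(x, a, b, c):
    """Diagonal linear SSM over a 1-D sequence: O(n * N) time for n steps, state size N."""
    h = np.zeros_like(a)
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = a * h + b * x_t  # state update
        y[t] = c @ h         # readout
    return y


def attention_scores(q, k):
    """Global self-attention scores: an (n, n) matrix, hence O(n^2) in sequence length."""
    return q @ k.T / np.sqrt(q.shape[-1])


n, state_size = 128 * 128, 16  # e.g. a flattened 128 x 128 feature map (hypothetical)
print(n * n, n * state_size)   # 268435456 262144
```

For a flattened 128 × 128 feature map, global attention needs about 2.7 × 10^8 pairwise scores per head. The scan needs about 2.6 × 10^5 state updates, which shows why the gap widens as the sequence grows.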

6. Conclusions

This paper proposes a DIBR 3D image watermarking model based on the channel interaction mamba. Firstly, the channel interaction mamba (CIM) is designed to enable lightweight cross-modal channel interaction through a channel exchange mechanism while leveraging mamba to perform global modeling of RGB and depth information. Building on this, the feature fusion module (FFM) further extracts complementary information between cross-modal features and filters out redundant features, ultimately generating high-quality 3D image features. These features are then used to generate the image’s attention map, enhancing the invisibility of the watermark and identifying robust regions for watermark embedding. The experimental results demonstrate that the proposed CIMGAN achieves strong performance in both image quality and watermark robustness while maintaining computational efficiency. Additionally, ablation experiments further validate the effectiveness of the CIM and FFM.

7. Future Work

Although the proposed framework effectively enhances watermarking performance while maintaining low computational cost, there is still room for further optimization.

- (1) The model architecture can be further refined by leveraging the rendering characteristics of DIBR to improve watermarking robustness and imperceptibility.
- (2) By integrating visual perception technology, the watermark embedding strategy can be optimized to embed more watermark information in complex texture regions and reduce embedding strength in smooth areas, thereby minimizing perceptual distortion.
- (3) Currently, the watermark embedding capacity of DIBR 3D images remains limited. Future research may focus on optimizing watermark preprocessing to improve embedding capacity.

Author Contributions

Conceptualization, Q.C.; methodology, Q.C. and Z.S.; investigation, R.B.; validation, C.J.; supervision, Q.C., Z.S., R.B. and C.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Ningbo under Grant No. 2024J211, the Ningbo Public Welfare Research Project under Grant No. 2024S054, and the Scientific Research Fund of Zhejiang Provincial Education Department under Grant No. Y202454484.

Data Availability Statement

The data presented in this study are openly available at https://github.com/Chengit7/CIMGAN (accessed on 14 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Yu, P.; Sun, L. A survey of AI-generated content (AIGC). ACM Comput. Surv. 2025, 57, 1–38.

- Wang, T.; Zhang, Y.; Qi, S.; Zhao, R.; Xia, Z.; Weng, J. Security and privacy on generative data in AIGC: A survey. ACM Comput. Surv. 2024, 57, 1–34.

- Luo, T.; Zhou, Y.; He, Z.; Jiang, G.; Xu, H.; Qi, S.; Zhang, Y. StegMamba: Distortion-free Immune-Cover for Multi-Image Steganography with State Space Model. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 4576–4591.

- Zhou, Y.; Luo, T.; He, Z.; Jiang, G.; Xu, H.; Chang, C.C. CAISFormer: Channel-wise attention transformer for image steganography. Neurocomputing 2024, 603, 128295.

- Wan, W.; Wang, J.; Zhang, Y.; Li, J.; Yu, H.; Sun, J. A comprehensive survey on robust image watermarking. Neurocomputing 2022, 488, 226–247.

- Qiu, Z.; He, Z.; Zhan, Z.; Pan, Z.; Xian, X.; Jin, Z. SC-NAFSSR: Perceptual-oriented stereo image super-resolution using stereo consistency guided NAFSSR. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1426–1435.

- Zou, W.; Gao, H.; Chen, L.; Zhang, Y.; Jiang, M.; Yu, Z.; Tan, M. Cross-view hierarchy network for stereo image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1396–1405.

- Fehn, C. Depth-image-based rendering (DIBR), compression, and transmission for a new approach on 3D-TV. Stereosc. Disp. Virtual Real. Syst. XI 2004, 5291, 93–104.

- Nam, S.H.; Kim, W.H.; Mun, S.M.; Hou, J.U.; Choi, S.; Lee, H.K. A SIFT features based blind watermarking for DIBR 3D images. Multimed. Tools Appl. 2018, 77, 7811–7850.

- Kim, H.D.; Lee, J.W.; Oh, T.W.; Lee, H.K. Robust DT-CWT watermarking for DIBR 3D images. IEEE Trans. Broadcast. 2012, 58, 533–543.

- Nam, S.H.; Mun, S.M.; Ahn, W.; Kim, D.; Yu, I.J.; Kim, W.H.; Lee, H.K. NSCT-based robust and perceptual watermarking for DIBR 3D images. IEEE Access 2020, 8, 93760–93781.

- He, Z.; He, L.; Xu, H.; Chai, T.Y.; Luo, T. A bilateral attention based generative adversarial network for DIBR 3D image watermarking. J. Vis. Commun. Image Represent. 2023, 92, 103794.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 139–144.

- Zhang, K.; Luo, W.; Zhong, Y.; Ma, L.; Liu, W.; Li, H. Adversarial spatio-temporal learning for video deblurring. IEEE Trans. Image Process. 2018, 28, 291–301.

- Zhang, K.; Li, D.; Luo, W.; Ren, W.; Liu, W. Enhanced spatio-temporal interaction learning for video deraining: Faster and better. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1287–1293.

- Hayes, J.; Danezis, G. Generating steganographic images via adversarial training. Adv. Neural Inf. Process. Syst. 2017, 30, 1951–1960.

- Zhu, J.; Kaplan, R.; Johnson, J.; Fei-Fei, L. HiDDeN: Hiding data with deep networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 657–672.

- Hao, K.; Feng, G.; Zhang, X. Robust image watermarking based on generative adversarial network. China Commun. 2020, 17, 131–140.

- Jia, Z.; Fang, H.; Zhang, W. MBRS: Enhancing robustness of DNN-based watermarking by mini-batch of real and simulated JPEG compression. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 41–49.

- Luo, T.; Wu, J.; He, Z.; Xu, H.; Jiang, G.; Chang, C.C. WFormer: A transformer-based soft fusion model for robust image watermarking. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 4179–4196.

- Fang, H.; Jia, Z.; Qiu, Y.; Zhang, J.; Zhang, W.; Chang, E.C. De-END: Decoder-driven watermarking network. IEEE Trans. Multimed. 2022, 25, 7571–7581.

- Halici, E.; Alatan, A.A. Watermarking for depth-image-based rendering. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 4217–4220.

- Lee, M.J.; Lee, J.W.; Lee, H.K. Perceptual watermarking for 3D stereoscopic video using depth information. In Proceedings of the 2011 Seventh International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Dalian, China, 14–16 October 2011; pp. 81–84.

- Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

- Gu, A.; Dao, T.; Ermon, S.; Rudra, A.; Ré, C. HiPPO: Recurrent memory with optimal polynomial projections. Adv. Neural Inf. Process. Syst. 2020, 33, 1474–1487.

- Luan, T.; Zhang, H.; Li, J.; Zhang, J.; Zhuo, L. Object fusion tracking for RGB-T images via channel swapping and modal mutual attention. IEEE Sens. J. 2023, 23, 22930–22943.

- Wang, C.; Huang, J.; Lv, M.; Du, H.; Wu, Y.; Qin, R. A local enhanced mamba network for hyperspectral image classification. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104092.

- Sun, F.; Ren, P.; Yin, B.; Wang, F.; Li, H. CATNet: A cascaded and aggregated transformer network for RGB-D salient object detection. IEEE Trans. Multimed. 2023, 26, 2249–2262.

- Zhou, T.; Fu, H.; Chen, G.; Zhou, Y.; Fan, D.P.; Shao, L. Specificity-preserving RGB-D saliency detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4681–4691.

- Collobert, R.; Kavukcuoglu, K.; Farabet, C. Torch7: A Matlab-like environment for machine learning. In Proceedings of the BigLearn NIPS Workshop 2011, Sierra Nevada, Spain, 16–17 December 2011.

- Song, S.; Lichtenberg, S.P.; Xiao, J. SUN RGB-D: A RGB-D scene understanding benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 567–576.

- Zitnick, C.L.; Kang, S.B.; Uyttendaele, M.; Winder, S.; Szeliski, R. High-quality video view interpolation using a layered representation. ACM Trans. Graph. (TOG) 2004, 23, 600–608.

- Hirschmuller, H.; Scharstein, D. Evaluation of cost functions for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from RGBD images. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 746–760.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).