Abstract

Recently, prompt learning has emerged as an effective technique for adapting pre-trained vision–language models (VLMs). Prompts allow pre-trained VLMs to be adapted quickly to specific downstream tasks without updating the original pre-trained weights. However, most existing work on prompt learning relies on non-specific prompts and pays little attention to category-specific information. In this paper, we present a novel method, the Category-Specific Prompt (CSP), which injects task-oriented information into the model and thereby improves its ability to handle downstream classification tasks. To make better use of the features passed between encoder layers, and thus of the combination of category-specific and non-specific prompts, we introduce a novel deep prompt-learning method, Deep Contextual Prompt Enhancement (DCPE). Through attention-based interactions, DCPE outputs features rich in text-embedding knowledge that vary with the input, so that the model also captures instance-oriented information. Combining these two methods, our architecture, CSP-DCPE, captures both task-oriented and instance-oriented information and achieves state-of-the-art average scores on 11 benchmark image-classification datasets.

1. Introduction

Vision–language models (VLMs) such as CLIP [1], ALIGN [3] and FILIP [5], together with prompt-learning methods built on them such as CoOp [2] and CoCoOp [4], have proven highly effective in image classification.

These models are trained on large datasets of image–text pairs; CLIP [1], for example, was trained on 400 million image–text pairs. Consequently, they generalize well in few-shot and zero-shot open-vocabulary image classification [1]. At inference time, prompts built from the downstream task labels (e.g., “a photo of a <CLASSNAME>”) [1] are passed into the textual encoder to obtain text features, while images are passed into the visual encoder to obtain image features. The similarity scores between the image features and each text feature are then computed to produce a probability distribution over the classes.

Although these VLMs generalize remarkably well, adapting them to specific downstream tasks remains challenging. Because the models are large and downstream training data are limited, fine-tuning all pre-trained parameters requires considerable storage and carries a high risk of overfitting to the downstream task. To address these two issues, prompt learning [6,7,8,9], a technique borrowed from Natural Language Processing (NLP), has been applied to pre-trained VLMs. This strategy keeps the pre-trained weights frozen and adds a small number of trainable parameters, which reduces both the risk of overfitting and the storage requirement, and thus enables large pre-trained VLMs to be customized effectively for specific downstream tasks.

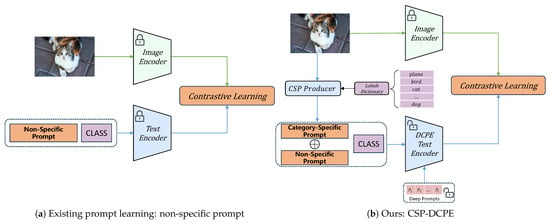

As shown in Figure 1a, previous studies on prompt learning [2,4,10,11,12] have mostly focused on non-specific prompts. Such prompts capture general information and can fine-tune pre-trained models through prompt learning, but they lack category-related information. Image classification, however, requires models with a strong category representation bias, and the performance of these models depends heavily on the choice of input prompts. Using fixed prompts to adjust the representation in the text branch is therefore suboptimal: because the prompts cannot vary with the task, they lack the flexibility to adjust the representation space dynamically according to category information. It is therefore essential to introduce category information into the textual encoder. To this end, we propose a new approach, the Category-Specific Prompt (CSP), which ensures that the prompts fed to the textual encoder also carry a strong category representation bias.

Figure 1.

Comparison of CSP-DCPE with existing prompt-learning methods. (a) Existing prompt-learning approaches use only non-specific prompts to fine-tune CLIP, ignoring category-related information. (b) CSP-DCPE introduces Category-Specific Prompts carrying rich category-related information into the textual encoder and, through DCPE, makes better use of the features from the preceding layer, thereby optimizing the combination of category-specific and non-specific prompts. DCPE adds attention between encoder layers, combining the deep prompt with the previous layer’s output to form the input to the next layer. This improves feature extraction and overall model performance.

As shown in Figure 1b, the CSP Producer lets the image interact fully with the category labels and generates a Category-Specific Prompt that is added to a non-specific prompt, thereby incorporating rich category information into the image-classification process. Because the output of the CSP Producer changes with the task, our model carries task-oriented information, which is particularly beneficial for image classification.

Similar to our work, CoOp [2] proposed a Class-Specific Context mechanism that captures some category-related information. In this work, however, the Category-Specific Prompt (CSP) is generated dynamically to produce category-guided features tailored to the current input image. Its core principle is to model image features and class-label information jointly, generating prompts that are highly correlated with the image content and thereby improving representation learning for downstream tasks. In contrast, CoOp’s Class-Specific Context predefines a learnable context for each category and does not use any features of the input image.

The key distinction is as follows. The Class-Specific Context in CoOp relies on learnable contexts that are independent of the input image and performs category-level learning solely in the vector space, so it cannot adapt to content variations across images. In contrast, our CSP generates prompts dynamically by fusing image features with class labels, which makes the resulting feature representations more specific and discriminative.

Various methods [10,11,12] incorporate deep prompts to fine-tune large pre-trained VLMs more thoroughly. These methods insert deep prompts into the layers of the visual or textual encoder and learn them progressively during training. This deep prompt-learning strategy models contextual relationships autonomously, giving more flexibility to align the vision and language representations so that the two features obtained from the pre-trained VLM match better. However, existing deep prompt-learning methods do not make good use of the features output by the preceding layer. Some previous multi-modal prompt-learning methods, such as MaPLe [11] and MuDPT [12], discard the prompt features extracted by the preceding layer and feed only new deep prompts to the next layer. Others, such as CLIP [1] and CoOp [2], use no deep prompts and pass the features extracted by each layer directly to the next layer, so some redundant information is carried forward. On the one hand, if the features extracted by the previous layer are discarded and only the deep prompts are fed into the next layer, a significant amount of information is lost, and the final features cannot represent the input text comprehensively. On the other hand, without deep prompts, the textual encoder cannot extract features efficiently when the textual input embeddings combine category-specific and non-specific prompts.

As shown in Figure 1b, to make better use of the features from the preceding layer and thereby optimize the combination of category-specific and non-specific prompts, we propose a novel Deep Contextual Prompt Enhancement (DCPE) textual encoder. Specifically, a trainable attention [13] module is inserted between consecutive layers of the textual encoder. During training, the remaining parameters of the textual encoder are frozen, and only the deep prompts and the attention modules are trained. The attention module lets the DCPE textual encoder filter out redundant information from the previous layer and focus on the important information. Combining the attention output with the deep prompt overcomes the failure of previous prompt-learning methods to exploit the features provided by the previous layer, and enables the textual encoder to better extract text features from the combination of non-specific and Category-Specific Prompts. Through these attention-based interactions, DCPE outputs features rich in text-embedding knowledge that vary with the input, which gives our architecture instance-oriented information.

In summary, the CSP-DCPE framework captures both task-oriented and instance-oriented information, which improves its flexibility across downstream tasks and its ability to generalize to different classes. Our key contributions are as follows:

- We examine the role of prompt learning in image classification and propose a new prompt-learning structure, the Category-Specific Prompt (CSP). To the best of our knowledge, this is the first work to combine non-specific and Category-Specific Prompts for image classification.

- To make better use of the features from the preceding layer and of the combination of category-specific and non-specific prompts, we propose a novel deep prompt-learning approach, Deep Contextual Prompt Enhancement (DCPE), which represents the input text more comprehensively and thereby improves feature extraction.

- Our proposed CSP-DCPE architecture combines instance-oriented and task-oriented information. We evaluate it on 11 benchmark image-classification datasets, where it achieves state-of-the-art average performance.

2. Related Work

2.1. Pre-Trained VLMs

Studies [1,3,14,15,16,17] have shown that vision–language models (VLMs) pre-trained on aligned image–text datasets perform outstandingly on numerous downstream vision and language tasks. Pre-trained VLMs with both visual and textual supervision outperform models pre-trained with visual or textual supervision alone, which suggests that incorporating multi-modal data during pre-training substantially strengthens performance in downstream applications: the model captures more comprehensive multi-modal representations and can therefore transfer knowledge effectively. Pre-training strategies fall into four main categories: masked language modeling [18,19], masked-region modeling [20,21], contrastive learning [1,2,3,11,22,23], and image–text matching [2,4,11,12]. In this research, we concentrate on VLMs pre-trained with contrastive learning, in particular CLIP [1], and on prompt-learning methods built on it, such as CoOp [2] and CoCoOp [4]. CLIP employs a dual-encoder framework comprising a visual encoder and a textual encoder. This architecture aligns visual representations with textual features, enabling the model to adapt rapidly to diverse downstream tasks under the guidance of a textual prompt. The dual-encoder structure enables effective knowledge transfer from the pre-trained VLM to these downstream tasks, demonstrating CLIP’s cross-modal transferability. Furthermore, CLIP’s textual encoder plays a pivotal role in generating classifier weights: it transforms class names into embeddings that can be matched against visual features, which makes the model adaptable to different visual recognition tasks.

After pre-training on a web-scale dataset of 400 million image–text pairs, CLIP maps textual and visual information into a unified embedding space. Consequently, CLIP has proven successful in a range of applications, including zero-shot settings (no labeled examples) and few-shot settings (a few labeled examples). Although these pre-trained VLMs generalize robustly, adapting them to a specific downstream task remains a considerable challenge. Prior research has adapted pre-trained VLMs to diverse uses, including few-shot image classification [24,25,26], object detection [27,28,29,30,31,32], and image segmentation [33,34,35]. However, fine-tuning the entire pre-trained VLM for a single downstream task may be suboptimal and can lead to overfitting. To address this, the present study proposes a prompt-learning approach, CSP-DCPE, that effectively adapts pre-trained VLMs to few-shot image classification.

2.2. Few-Shot Learning

Few-Shot Learning [36] aims to develop algorithms that learn from a limited set of annotated base classes and ultimately generalize to novel, unseen classes. Conventional approaches include metric learning [37,38], transfer learning [39,40], and meta-learning [41,42,43], all of which aim to build robust models that generalize accurately from a few annotated examples to new classes. Metric-learning methods learn a feature space in which the distance between samples of the same category is reduced and the distance between samples of different categories is increased. For example, [37] improves Few-Shot Learning by extracting multi-scale features with a feature pyramid structure and learning relationships between samples through a novel intra- and inter-relation loss. Transfer-learning methods first train a backbone network on a set of base classes to acquire generalizable knowledge and then fine-tune it on new classes for the specific downstream task. As demonstrated in [39], integrating high-definition and low-definition features while training the backbone network leads to better results in object detection. Meta-learning trains a meta-learner across a range of tasks so that it adapts more effectively to novel tasks; [43], for instance, employs batch meta-learning to fine-tune normalization, producing a stronger backbone network. However, these techniques require extensive training datasets, which limits their scalability. Pre-trained VLMs offer a viable solution to this issue: they achieve remarkable results on downstream tasks in a zero-shot manner, eliminating the need for datasets of base classes, and prompt-learning strategies can improve their performance further.
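To make the metric-learning idea concrete, here is a minimal sketch of one common instance, nearest-prototype classification in the style of prototypical networks (it is not the method of [37] or [38]): each class is represented by the mean of its support embeddings, and a query is assigned to the class whose prototype is closest in Euclidean distance. The 2-D embeddings are hypothetical hand-picked vectors standing in for the output of a learned encoder.

```python
import math


def prototypes(support):
    """Mean embedding per class; support maps class name -> list of vectors."""
    protos = {}
    for label, vecs in support.items():
        dim = len(vecs[0])
        protos[label] = [sum(v[k] for v in vecs) / len(vecs) for k in range(dim)]
    return protos


def classify(query, protos):
    """Return the class whose prototype is nearest to the query (Euclidean)."""
    return min(protos, key=lambda label: math.dist(query, protos[label]))


# Hypothetical 2-shot support set in a 2-D embedding space.
support = {
    "cat": [[0.9, 0.1], [1.1, -0.1]],
    "dog": [[-1.0, 0.2], [-0.8, -0.2]],
}
protos = prototypes(support)
print(classify([0.7, 0.3], protos))  # cat
```

A learned metric would shape the embedding space so that this simple nearest-mean rule separates the classes well; here the space is fixed by hand.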

2.3. Prompt Tuning in Pre-Trained VLMs

Given the scarcity of downstream data and the size of pre-trained VLMs, fine-tuning the full VLM is often inefficient and carries a significant risk of overfitting to the particular downstream task. Prompt tuning [6,7,8,9] has proven to be an effective way to adapt pre-trained models in NLP. During training, only a small set of parameters is learned while the pre-trained weights remain unchanged, which makes prompt tuning a parameter-efficient adaptation method: only the task-specific prompt parameters, rather than the complete set of model parameters, need to be stored for each task. Prompt learning in VLMs has been studied extensively, including single-modal prompt learning [2,4,10,44,45,46,47,48] and multi-modal prompt learning [11,12,49,50,51]. As the pioneering effort to bring prompt learning to VLMs, CoOp [2] tunes a set of prompt vectors in the textual branch, improving CLIP’s ability to adapt to new tasks with limited data. CoCoOp [4] observed that CoOp’s performance degrades on classes unseen during training and addressed this limitation by conditioning the prompts on individual image samples. The work in [46] optimizes multiple sets of prompts by learning their distribution. Ref. [52] adapts CLIP to video analysis by training it with relevant prompts. Ref. [45] addresses overfitting by preserving the model’s pre-existing general knowledge, selectively updating prompts so that they remain aligned with the general knowledge encapsulated in the initial prompts. To better retain the general knowledge acquired during pre-training, ref. [44] reduces the discrepancy between the trainable prompts and the original prompts through knowledge-guided Context Optimization. To avoid internal representation shift, ref. [49] introduces read-only prompts into both the visual and textual encoders, yielding a more robust and generalizable adaptation. Adapter-based approaches can also be regarded as a subset of prompt-tuning techniques. CLIP-Adapter [24] improves downstream adaptation by freezing the CLIP backbones and fine-tuning lightweight adapter modules with minimal target data. CLIPCEIL [53] improves cross-modal alignment by integrating contrastive embedding enhancement layers and dynamic feature calibration while preserving CLIP’s zero-shot capabilities.

This paper examines a significant question: since image classification requires a robust category representation, would it be more effective to adapt CLIP by integrating category information into the textual encoder, so that the prompts fed into it carry both non-specific and category-specific information? Our work is the first to address this question by considering both non-specific and Category-Specific Prompts while combining task-oriented and instance-oriented information, and our experimental results demonstrate the superiority of this approach.

3. Method

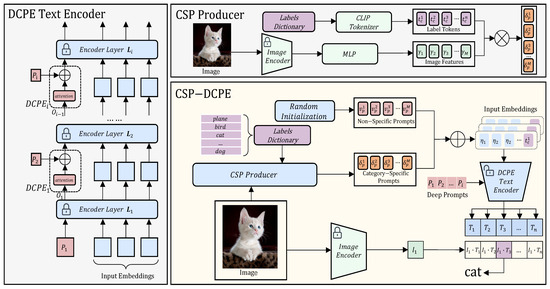

Our approach adapts large multi-modal pre-trained VLMs by combining task-oriented and instance-oriented information through CSP and DCPE, with the aim of enhancing the recognition and classification capabilities of pre-trained VLMs and their generalization on specific downstream tasks. An overview of our approach is presented in Figure 2. Our architecture introduces a Category-Specific Prompt (CSP), which provides category information for image classification and interacts fully with the image features extracted by the visual encoder, so that our model contains task-oriented information. To make more efficient use of the features from the preceding layer and to extract both non-specific and category-specific features more effectively, we introduce Deep Contextual Prompt Enhancement (DCPE). Through attention-based interactions, this structure outputs features rich in text-embedding knowledge that vary with the input, which gives our architecture instance-oriented information. In the following, we first review the CLIP architecture and then describe our proposed method in detail.

Figure 2.

Overall architecture of CSP-DCPE. Our architecture introduces a Category-Specific Prompt (CSP), which provides category information for image classification and interacts fully with image features obtained from the visual encoder. This allows our model to contain task-oriented information. To extract textual features more effectively from the combination of non-specific and Category-Specific Prompts, we have introduced Deep Contextual Prompt Enhancement (DCPE). DCPE functions by inserting attention modules between encoder layers. It merges the deep prompts with the output from the preceding layer, which then becomes the input for the subsequent layer. This mechanism enhances the extraction of feature information and boosts the overall performance of the model.

3.1. Revisiting CLIP

CLIP [1] has a dual-encoder architecture spanning two modalities, with a visual encoder and a textual encoder. During training, CLIP uses a contrastive loss that increases the similarity between images and their corresponding text descriptions while decreasing the similarity of mismatched image–text pairs. Pre-trained on an extensive web dataset of 400 million image–text pairs, CLIP maps images and their textual descriptions into a shared embedding space. Consequently, CLIP can perform few-shot and zero-shot image classification by measuring the similarity between the features of a hand-crafted template, for instance “a photo of a <CLASSNAME>”, and the image’s feature representation. Let $\{y_i\}_{i=1}^{n}$ denote the set of labels for the downstream task, where $y_i$ is the $i$th class label. A hand-crafted prompt containing $y_i$ is input into the textual encoder to produce the textual representations $\{w_i\}_{i=1}^{n}$. Meanwhile, the image $x$ is input into the visual encoder to obtain the visual representation $f$. CLIP computes the prediction probability from the cosine similarity between the image’s feature representation and each of the $n$ text feature representations:

$$p(y = i \mid x) = \frac{\exp\left(\cos(w_i, f)/\tau\right)}{\sum_{j=1}^{n} \exp\left(\cos(w_j, f)/\tau\right)}, \tag{1}$$

in which $\cos(\cdot,\cdot)$ denotes cosine similarity and $\tau$ is the learned temperature parameter.
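Equation (1) amounts to a softmax over temperature-scaled cosine similarities between the image feature and each class text feature. The following plain-Python sketch computes it for a single image; the toy vectors and the default temperature are illustrative assumptions, not values from the paper (CLIP learns the temperature during pre-training).

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def clip_probs(image_feat, text_feats, tau=0.01):
    """Softmax over cos(w_i, f) / tau for every class text feature w_i."""
    logits = [cosine(w, image_feat) / tau for w in text_feats]
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 3-D features for one image f and three class prompts w_1..w_3 (hypothetical values).
f = [0.9, 0.1, 0.0]
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
probs = clip_probs(f, w)
print(max(range(len(probs)), key=probs.__getitem__))  # 0
```

A small temperature sharpens the distribution: with `tau=0.01` almost all mass goes to the first class, whereas `tau=1.0` spreads it much more evenly.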

3.2. Category-Specific Prompt

To extract the category content of an image x, we introduce a CSP Producer, as depicted in Figure 2. Specifically, it extracts image features using the CLIP visual encoder followed by a Multi-Layer Perceptron (MLP), as detailed below:

Here, , , , , with representing the output dimension of the CLIP visual encoder, indicating the output dimension of the hidden layer, and denoting the output dimension of the final layer. In this context, n is the number of class labels, and M is a hyper-parameter that specifies the number of Category-Specific Prompt vectors. denotes the intermediate features, and is an activation function that makes the MLP non-linear.

Subsequently, we transform the image feature , originally in , into the space :

Meanwhile, the labels are fed into the CLIP tokenizer to obtain the category tokens . These n tokenized category labels are then used to inject class-specific information. The Category-Specific Prompts, expressed as , with each for , are derived as follows:

Here, signifies the dimensionality of the textual encoder’s embedding space, and ⊙ denotes the element-wise product of vectors.
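The CSP Producer can be sketched as follows. This is a minimal illustration under explicit assumptions, not the authors' implementation: a two-layer MLP with a ReLU activation maps the image feature (dimension `d_v`) to a hidden vector (dimension `d_h`) and then to a vector of length `n * M * d`, which is reshaped into `n` blocks of `M` vectors of dimension `d`; each class block is multiplied element-wise (the ⊙ above) by that class's category embedding. Representing each tokenized label by a single `d`-dimensional embedding that is broadcast over the `M` vectors, the random weights, and all function names are hypothetical choices.

```python
import random


def init_linear(n_in, n_out, rng):
    """Random weight matrix (list of rows) and zero bias; stands in for learned weights."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out


def linear(x, layer):
    weight, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def csp_producer(image_feat, class_embs, mlp, M):
    """Hypothetical CSP Producer: MLP -> reshape to (n, M, d) -> element-wise product."""
    n, d = len(class_embs), len(class_embs[0])
    hidden = relu(linear(image_feat, mlp[0]))  # intermediate features, length d_h
    flat = linear(hidden, mlp[1])              # length n * M * d
    prompts = []
    for i in range(n):
        block = flat[i * M * d:(i + 1) * M * d]
        # Multiply each of class i's M vectors element-wise by its category embedding.
        prompts.append([[block[m * d + k] * class_embs[i][k] for k in range(d)]
                        for m in range(M)])
    return prompts  # nested list of shape n x M x d


rng = random.Random(0)
d_v, d_h, n, M, d = 8, 6, 3, 2, 4  # toy sizes
mlp = (init_linear(d_v, d_h, rng), init_linear(d_h, n * M * d, rng))
image_feat = [rng.gauss(0.0, 1.0) for _ in range(d_v)]
class_embs = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
csp = csp_producer(image_feat, class_embs, mlp, M)
print(len(csp), len(csp[0]), len(csp[0][0]))  # 3 2 4
```

Because the MLP input is the image feature, the resulting prompts change with the image, while the element-wise product ties each block to one class.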

3.3. Textual Input Embeddings

To make the prompts more adaptable across tasks, we train non-specific prompts to fine-tune the encoders thoroughly. Specifically, we initialize the trainable vectors randomly, with each , for , where M is the number of non-specific prompts. The non-specific prompts are then used to tune the textual encoder and are combined with the Category-Specific Prompts.

Category semantic information can be learned by combining the non-specific prompts with the Category-Specific Prompts generated by the CSP Producer. Formally, the prompt , which explicitly combines category labels and text representations, is derived as follows:

Following the concatenation of the category label tokens, the textual input embeddings are then defined as follows:

where is the tokenized category label.
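A minimal sketch of how the textual input sequence for class i could be assembled, assuming, as stated in the Introduction, that the Category-Specific Prompt is added to the non-specific prompt, here element-wise and position by position (both prompts have length M), and that the category label token embeddings are concatenated afterwards. The function name and toy values are hypothetical.

```python
def text_input_embeddings(nonspecific, csp_i, label_tokens_i):
    """Hypothetical assembly of one class's textual input embeddings.

    nonspecific:    M x d shared learnable prompt vectors
    csp_i:          M x d Category-Specific Prompt for class i
    label_tokens_i: L x d token embeddings of the tokenized label
    """
    if len(nonspecific) != len(csp_i):
        raise ValueError("both prompts must contain the same number M of vectors")
    # Element-wise sum of the two prompts, position by position.
    combined = [[a + b for a, b in zip(p, c)] for p, c in zip(nonspecific, csp_i)]
    # Concatenate the combined prompt with the label tokens along the sequence axis.
    return combined + label_tokens_i


nonspecific = [[0.5, 0.25], [0.75, 1.5]]  # M = 2, d = 2 (toy)
csp_cat = [[1.0, 0.0], [0.0, 1.0]]
cat_tokens = [[5.0, 5.0]]                 # a one-token label
seq = text_input_embeddings(nonspecific, csp_cat, cat_tokens)
print(seq)  # [[1.5, 0.25], [0.75, 2.5], [5.0, 5.0]]
```

The non-specific part is shared by all classes, so only the category-specific term and the label tokens differ between the n input sequences.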

3.4. Deep Contextual Prompt Enhancement

In prior work on deep prompt learning, each prompt served a single purpose: it only guided the activations of its corresponding layer. After the layer had been processed, the output corresponding to the prompt , termed , was typically discarded. These discarded outputs may encapsulate significant knowledge from the pre-trained VLM that could benefit final performance. To retrieve this information, we introduce a novel prompt-learning architecture, the Deep Contextual Prompt Enhancement (DCPE) encoder. In each layer of the DCPE textual encoder, an attention mechanism combines the new prompt embeddings with the outputs of the preceding layer. The prompting scheme of the DCPE encoder is defined as follows:

for the first layer , and

for the subsequent layers, where and is a learnable attention layer.

In contrast to earlier deep prompt architectures, the attention connections in DCPE make the prompts adaptive: the output of the prompt from the previous block, denoted as , is closely interconnected with the input embeddings and therefore varies with the input. Meanwhile, the newly introduced prompt, , is invariant to the input. Consequently, the modified prompt, expressed as , varies across different images. Similar to CoCoOp [4], we observe that this architecture focuses more on instance-oriented details than on a particular subset of classes. Introducing this instance-oriented information mitigates the risk of overfitting and improves the model’s robustness to domain shifts.
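The DCPE update can be illustrated with a short sketch under explicit assumptions, not the authors' formulation: a single-head scaled dot-product attention [13] without learned projections, in which the previous layer's prompt outputs act as queries over the previous layer's full output sequence, and the attended result is added to the next layer's new, input-invariant deep prompt. For contrast, it also shows the discard-and-replace scheme attributed in the Introduction to MaPLe [11] and MuDPT [12]. All function names are hypothetical, and the prompt tokens are assumed to occupy the first M positions of the sequence.

```python
import math


def attention(queries, keys, values):
    """Single-head scaled dot-product attention without learned projections."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) / total
                     for j in range(len(values[0]))])
    return out


def replace_prompts(prev_output, new_prompt):
    """Discard-and-replace: the previous layer's prompt outputs are dropped."""
    M = len(new_prompt)
    return new_prompt + prev_output[M:]


def dcpe_next_input(prev_output, new_prompt):
    """Hypothetical DCPE step: new deep prompt + attention over the previous output."""
    M = len(new_prompt)
    attended = attention(prev_output[:M], prev_output, prev_output)
    enhanced = [[p + a for p, a in zip(p_row, a_row)]
                for p_row, a_row in zip(new_prompt, attended)]
    return enhanced + prev_output[M:]  # non-prompt tokens pass through unchanged


prev_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy layer output: 2 prompt tokens + 1 text token
prev_b = [[0.0, 2.0], [2.0, 0.0], [1.0, 1.0]]  # the same layer for a different input
deep_prompt = [[0.5, 0.5], [0.5, 0.5]]         # input-invariant learnable prompt
print(replace_prompts(prev_a, deep_prompt)[:2] == replace_prompts(prev_b, deep_prompt)[:2])  # True
print(dcpe_next_input(prev_a, deep_prompt)[:2] == dcpe_next_input(prev_b, deep_prompt)[:2])  # False
```

The two printed lines mirror the argument above: under replacement, the next layer's prompts are identical for every input, whereas the attention term makes the DCPE prompts depend on the input.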

3.5. Loss Function

As illustrated in Figure 2, our CSP-DCPE architecture comprises two encoders. The visual encoder extracts visual features from the input image, while the textual encoder extracts features from the textual tokens. The loss function of our architecture depends on the similarity between these two sets of features. Formally, denote by $f$ the visual features extracted from an image by the visual encoder, and by $\{w_i\}_{i=1}^{n}$ the weight vectors generated by the textual encoder, where n is the total number of categories. For a given image $x$ and its label $y$, the probability that the model predicts class i is given by Equation (1).

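After obtaining the probability of each class, the cross-entropy loss is computed as follows:

$$\mathcal{L}_{\mathrm{CE}} = -\log p(y \mid x),$$

where $p(y \mid x)$ is the probability that Equation (1) assigns to the ground-truth class $y$ of image $x$.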
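The cross-entropy loss can be computed directly from the logits $\cos(w_i, f)/\tau$ with the log-sum-exp trick, which avoids forming the softmax explicitly. A minimal sketch; averaging over a mini-batch is an assumption about the training loop, not something stated above.

```python
import math


def cross_entropy(logits, label):
    """-log softmax(logits)[label], computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def batch_loss(batch_logits, labels):
    """Mean cross-entropy over a mini-batch (the averaging is an assumption)."""
    return sum(cross_entropy(z, y) for z, y in zip(batch_logits, labels)) / len(labels)


# With uniform logits over n classes, the loss equals log(n).
print(round(cross_entropy([0.0, 0.0, 0.0, 0.0], 2), 6))  # 1.386294
```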

4. Experiment

Consistent with prior research, we evaluate our method in two experimental settings. (1) Few-Shot Learning (FSL), which assesses the adaptability of CSP-DCPE: we train the pre-trained VLM on a limited number of labeled images and evaluate it on a dataset containing the same categories as the training set. (2) Base-to-Novel Generalization (B2N), in which each dataset is divided into base classes and novel classes using random seeds: the pre-trained VLM is first trained on the base classes and then evaluated on the novel classes, which assesses the generalization capability of CSP-DCPE.
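A minimal sketch of a seeded base/novel class split for the B2N setting. The equal split into halves and the seeded shuffle are assumptions made for illustration; the exact assignment rule used in the experiments is not specified here. The function name is hypothetical.

```python
import random


def base_novel_split(classes, seed):
    """Hypothetical seeded split of a class list into base and novel halves."""
    order = sorted(classes)             # fixed starting order, independent of input order
    random.Random(seed).shuffle(order)  # seed-controlled permutation
    half = (len(order) + 1) // 2        # base gets the extra class when the count is odd
    return order[:half], order[half:]


classes = ["cat", "dog", "car", "plane", "flower", "pizza", "tree"]
base, novel = base_novel_split(classes, seed=1)
print(len(base), len(novel))  # 4 3
```

Training uses only `base`; evaluation on `novel` then measures how well the learned prompts transfer to classes never seen during training.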

- Datasets

In line with previous research, we use 11 image benchmark datasets in both settings: ImageNet [54] and Caltech101 [55] for general object recognition; FGVCAircraft [56], Flowers102 [57], Food101 [58], OxfordPets [59], and StanfordCars [60] for fine-grained object classification; DTD [61] for texture classification; EuroSAT [62] for satellite image classification; UCF101 [63] for action classification; and SUN397 [64] for scene classification.

- Implementation details

All experiments use the pre-trained ViT-B/16 CLIP model [1]. We optimize with Stochastic Gradient Descent (SGD), using a batch size of 4 and a learning rate of 0.1. The reported Few-Shot Learning and Base-to-Novel Generalization results are averaged over three runs with random seeds 1, 2, and 3.

For FSL, following established practice, we train models with 1, 2, 4, 8, and 16 shots and evaluate them on the full test sets. For Base-to-Novel Generalization, we train with 16 shots to ensure a fair comparison.

In the B2N setting, we set the deep prompt depth to 9, the non-specific prompt length to 5, and the deep prompt length to 3, and train for at most 8 epochs. In the Few-Shot Learning setting, the deep prompt length is 9, the deep prompt depth is 8, and the non-specific prompt length is 5; we train for at most 50 epochs with 16 shots and at most 30 epochs with fewer shots. On ImageNet, with its 1000 classes, all shot settings are trained for at most 30 epochs.
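The hyper-parameters stated above can be collected into a small configuration object. This is only a sketch: the class, field, and function names are hypothetical, and only the values come from the text. It also assumes that the ImageNet epoch rule applies to the FSL setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptConfig:
    setting: str                    # "B2N" or "FSL"
    deep_prompt_depth: int
    deep_prompt_length: int
    nonspecific_prompt_length: int
    backbone: str = "ViT-B/16"
    optimizer: str = "SGD"
    batch_size: int = 4
    learning_rate: float = 0.1
    seeds: tuple = (1, 2, 3)


B2N = PromptConfig("B2N", deep_prompt_depth=9, deep_prompt_length=3, nonspecific_prompt_length=5)
FSL = PromptConfig("FSL", deep_prompt_depth=8, deep_prompt_length=9, nonspecific_prompt_length=5)


def max_epochs(cfg, shots, dataset):
    """Epoch budget as described in the text (ImageNet rule assumed to be FSL-only)."""
    if cfg.setting == "B2N":
        return 8
    if dataset == "ImageNet":
        return 30
    return 50 if shots == 16 else 30


print(max_epochs(FSL, 16, "DTD"), max_epochs(FSL, 4, "DTD"), max_epochs(FSL, 16, "ImageNet"))  # 50 30 30
```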

4.1. Few-Shot Learning

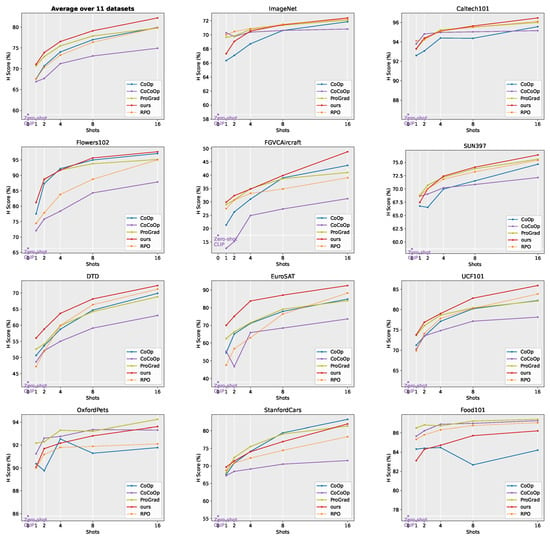

FSL trains models on a small number of labeled images and then evaluates them on a dataset containing the same categories. Figure 3 presents the results of five methods (CoOp [2], CoCoOp [4], ProGrad [45], RPO [49], and ours) on 11 datasets, with the top-left sub-figure showing the average performance. Compared with the other methods, ours achieves notable improvements across all shot settings. As the number of training samples increases, average performance improves substantially, underscoring the efficacy of our approach, particularly with larger training sets. This trend is consistent across both the FSL and B2N settings.

Figure 3.

Comparison of CSP-DCPE with prior methods in FSL. CSP-DCPE significantly improves performance on eight of the eleven datasets, yielding a substantial gain in overall average performance.

Notably, in the 16-shot setting, our method improves substantially on the previous state of the art (ProGrad) on datasets whose domains differ strongly from the pre-training data, such as FGVCAircraft (+7.78%), EuroSAT (+8.56%), UCF101 (+3.81%), DTD (+3.49%), and Flowers102 (+2.51%), which further validates the effectiveness of CSP-DCPE. Because the average performance increases under every shot setting, these results indicate that CSP-DCPE optimizes prompt learning effectively while remaining data-efficient.

4.2. Base-to-Novel Generalization

To evaluate the adaptability of CSP-DCPE, we run experiments in the B2N setting, in which the classes of each dataset are split into base and novel categories. The prompts of the pre-trained VLM are trained on the base categories only, and the model is then evaluated on the novel categories. To examine the approach in more depth, we run these base-to-novel experiments with a range of K-shot settings. Table 1 reports the average performance over all 11 benchmark image-classification datasets for each shot setting, and Table 2 breaks the results down by dataset. Table 3 reports confidence intervals: the mean is the average performance, the standard deviation (Std) measures run-to-run variability, and the 95% confidence interval (95% CI) gives a range that is likely to contain the true performance, which helps assess the reliability of the results. In both experimental settings, we use the pre-trained ViT-B/16 CLIP model [1].
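The statistics described for Table 3 can be computed from the three per-seed results as in the following sketch. It assumes the 95% interval uses a two-sided Student-t critical value with n - 1 degrees of freedom (4.303 for three seeds); the text does not state whether a t or a normal approximation was used, and `summarize` is a hypothetical helper.

```python
import math
import statistics

# Two-sided 95% Student-t critical values, keyed by degrees of freedom (n - 1).
T_CRIT_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776}


def summarize(runs):
    """Return (mean, sample std, (lower, upper) 95% CI) for per-seed accuracies."""
    n = len(runs)
    if n - 1 not in T_CRIT_95:
        raise ValueError("this sketch supports 2 to 5 runs")
    mean = statistics.mean(runs)
    std = statistics.stdev(runs)  # sample standard deviation (divides by n - 1)
    half_width = T_CRIT_95[n - 1] * std / math.sqrt(n)
    return mean, std, (mean - half_width, mean + half_width)


# Usage with made-up accuracies for seeds 1, 2, and 3:
mean, std, ci = summarize([79.2, 79.5, 79.7])
```

With only three runs the t value is large, so the interval is roughly 2.5 standard deviations wide on each side of the mean; this is why small differences between methods should be read together with the Std column.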

Table 1.

Base-to-novel generalization with a ViT-B/16 backbone: average scores over the 11 benchmark datasets for different numbers of shots K. The highest value in each column is highlighted in bold, and the second highest is underlined.

Table 2.

Base-to-novel generalization of CLIP, CoOp, CoCoOp, ProGrad, KgCoOp, RPO, and our method. Each model is trained on 16 shots from a subset of classes (the base classes) and evaluated on test sets covering both the base and the novel classes. H denotes the harmonic mean of base and novel accuracy, which balances performance on the two. The highest value in each column is highlighted in bold, and the second highest is underlined.
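The harmonic mean H used in the B2N tables is the standard summary metric H = 2 · Base · Novel / (Base + Novel). The minimal sketch below computes it; plugging in the 16-shot averages reported for CSP-DCPE (base 82.81%, novel 76.38%) gives about 79.465, consistent with the reported 79.46 once rounding of the two inputs is taken into account.

```python
def harmonic_mean(base_acc: float, novel_acc: float) -> float:
    """H = 2 * base * novel / (base + novel), the base-to-novel summary metric."""
    if base_acc + novel_acc == 0:
        return 0.0
    return 2.0 * base_acc * novel_acc / (base_acc + novel_acc)


# 16-shot averages reported for CSP-DCPE over the 11 datasets.
h = harmonic_mean(82.81, 76.38)  # about 79.465
```

Unlike the arithmetic mean, H is pulled toward the weaker of the two accuracies, so a method that overfits the base classes (high base accuracy, low novel accuracy) is penalized.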

Table 3.

Base and novel accuracy of different methods at , averaged over 11 datasets.

- Overall assessment:

As Table 1 shows, CSP-DCPE achieves clear gains in novel accuracy and harmonic mean over the alternative approaches in every configuration, confirming its ability to generalize from base classes to novel classes. KgCoOp [44] reaches a base accuracy of 79.90% in the 4-shot setting, yet our method improves on it by 2.61% in novel accuracy and 0.12% in harmonic mean. CoOp [2] reaches a peak base accuracy of 80.74% at K = 4, but its novel accuracy declines sharply, falling to 68.40%, the worst novel-class result. We attribute this to CoOp's pronounced tendency to overfit the base classes, which markedly degrades its performance on novel classes. Compared with CoOp, CSP-DCPE gives up a little base accuracy but improves novel accuracy by 7.46% and the harmonic mean by 3.94%. In the 16-shot setting, CSP-DCPE leads all current methods in base accuracy (82.81%), novel accuracy (76.38%), and harmonic mean (79.46%). RPO [49] is particularly strong on novel classes, placing a close second to our method, but CSP-DCPE has a pronounced advantage on base classes, exceeding RPO by 3.14% with 4 shots, 3.18% with 8 shots, and 1.75% with 16 shots.
These results confirm that our method effectively adapts pre-trained vision–language models to specific downstream tasks, improving accuracy on both base and novel classes.

- Detailed assessment:

Table 2 details CSP-DCPE's performance in the B2N setting on each of the 11 benchmark image-classification datasets. Averaged over these datasets, CSP-DCPE achieves the highest base and novel accuracy of all compared methods, exceeding the previous state of the art (RPO) by 1.75% in base accuracy and 1.37% in novel accuracy.

A key finding is that CSP-DCPE improves the harmonic mean most on datasets whose domains differ strongly from the pre-training data: by 2.21% on UCF101, 2.27% on DTD, and especially 12.04% on EuroSAT. These results support the effectiveness of CSP-DCPE, but the varying size of the improvements also reveals important limitations. On FGVCAircraft, where the domain shift is less pronounced (aircraft images appear in both the pre-training and target domains), the gain is only 1.09%, suggesting that Category-Specific Prompts add limited value when the domain characteristics are already well aligned. Our method's advantage therefore emerges mainly in scenarios that require substantial domain adaptation: for datasets with pronounced domain shifts, the task-oriented information injected by Category-Specific Prompts helps the model cope with the shift. In addition, the performance ceiling on fine-grained classification (e.g., aircraft subtypes in FGVCAircraft) indicates that our current prompt design may not capture subtle inter-class distinctions well enough, which points to an important direction for future refinement.

4.3. Ablation Experiments

- Assessment of Module Effectiveness:

The proposed architecture is built on two core modules: Category-Specific Prompt (CSP) and Deep Contextual Prompt Enhancement (DCPE). To determine the contribution of each module, we introduce the modules individually and measure their effect on base and novel classes across all 11 datasets. The baseline combines non-specific and deep prompts and is denoted "non-specific + deep". Table 4 reports the full results. Adding CSP without deep prompts (denoted "CSP") improves base accuracy, novel accuracy, and harmonic mean over the baseline by 0.54%, 0.06%, and 0.27%, respectively. Adding deep prompts on top of CSP (denoted "CSP+deep") raises these gains over the baseline to 0.97%, 0.45%, and 0.69%. These results indicate that Category-Specific Prompts inject category-related information into the model, helping it adapt to downstream tasks and generalize better to unseen classes. Adding Deep Contextual Prompt Enhancement ("DCPE") improves base accuracy, novel accuracy, and harmonic mean by 1.34%, 1.3%, and 1.32% over the baseline. This is because the baseline only feeds deep prompts into the next layer and discards the information transmitted from the previous layer, whereas DCPE extracts valuable implicit knowledge from that information.
Combining CSP and DCPE improves classification accuracy by 3.03% on base classes, 2.04% on novel classes, and 2.5% in harmonic mean over the baseline. These gains show that integrating both modules yields stronger adaptation and generalization.
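To make the additive combination of prompts concrete, here is a toy sketch of how a category-specific prompt might be merged with the shared non-specific prompt before the class-name tokens. The element-wise addition follows the hyper-parameter study's statement that the two prompts have equal length so they can be added; the token ordering and the name `build_class_prompt` are our assumptions, and plain Python lists stand in for learnable embedding tensors.

```python
from typing import Dict, List

Vector = List[float]


def add_vectors(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two embedding vectors of equal width."""
    if len(a) != len(b):
        raise ValueError("embedding widths differ")
    return [x + y for x, y in zip(a, b)]


def build_class_prompt(nonspecific: List[Vector],
                       category_specific: Dict[str, List[Vector]],
                       class_name: str,
                       name_tokens: List[Vector]) -> List[Vector]:
    """Hypothetical CSP input: (shared context + class context), then class-name tokens."""
    class_ctx = category_specific[class_name]
    if len(class_ctx) != len(nonspecific):
        raise ValueError("category-specific and non-specific prompts must have equal length")
    context = [add_vectors(s, c) for s, c in zip(nonspecific, class_ctx)]
    return context + name_tokens


# Toy example: prompt length 2, embedding width 3, two classes.
shared = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0]]
per_class = {
    "cat": [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
    "dog": [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]],
}
cat_prompt = build_class_prompt(shared, per_class, "cat", name_tokens=[[0.5, 0.5, 0.5]])
```

The shared term is the same for every class, while the per-class term gives each class its own learnable context; this is one simple way to carry task-oriented, category-related information into the text input.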

Table 4.

Module ablation results averaged over 11 datasets.

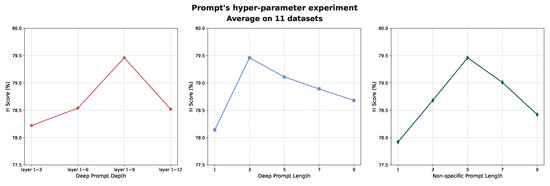

- Experiment on Prompt Hyper-Parameter:

As illustrated in Figure 2, our model uses three types of prompts: deep prompts within the DCPE text encoder, category-specific prompts, and non-specific prompts. Because the category-specific and non-specific prompts are combined by addition, they must have the same length, so the category-specific prompt length is always set equal to the non-specific prompt length. To assess hyper-parameter sensitivity systematically, we ran controlled one-factor-at-a-time (OAT) experiments: while one parameter was varied, the other two were fixed at their optimal values (deep prompt depth = 9, deep prompt length = 3, non-specific prompt length = 5). Figure 4 shows how deep prompt depth, deep prompt length, and non-specific prompt length affect performance on the eleven benchmark image-classification datasets. As the left curve of Figure 4 shows, performance peaks (79.46) when deep prompts are inserted into layers 1–9. Inserting prompts at greater depths as well (layers 1–12) degrades performance, indicating that the deeper layers already extract sufficiently effective information without additional prompts. We therefore set the deep prompt depth to 9. The middle and right curves of Figure 4 show that the harmonic mean is highest with a deep prompt length of 3 and a non-specific prompt length of 5; increasing either length beyond these values follows the same trend as increasing the deep prompt depth. We attribute this to the larger number of learnable parameters in longer prompts, which causes stronger overfitting to the base classes and, consequently, markedly lower performance on novel classes.
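The one-factor-at-a-time protocol described above can be sketched as follows. The candidate grid is hypothetical (the exact values behind Figure 4 are not listed in the text), and `evaluate` stands for an assumed function that trains with a configuration and returns the mean harmonic mean over the datasets.

```python
from typing import Callable, Dict, List

Config = Dict[str, int]

# Values reported as optimal; each sweep holds the other two factors at these values.
DEFAULTS: Config = {"deep_depth": 9, "deep_length": 3, "nonspecific_length": 5}


def oat_configs(grid: Dict[str, List[int]], defaults: Config) -> List[Config]:
    """Enumerate one-factor-at-a-time configurations."""
    configs = []
    for name, values in grid.items():
        for value in values:
            cfg = dict(defaults)  # copy so the defaults are never mutated
            cfg[name] = value     # vary exactly one factor
            configs.append(cfg)
    return configs


def best_per_factor(grid: Dict[str, List[int]], defaults: Config,
                    evaluate: Callable[[Config], float]) -> Config:
    """For each factor, return the value that maximizes `evaluate`."""
    best = {}
    for name, values in grid.items():
        scores = {value: evaluate({**defaults, name: value}) for value in values}
        best[name] = max(scores, key=scores.get)
    return best


# Hypothetical candidate values, for illustration only.
GRID = {
    "deep_depth": [3, 6, 9, 12],
    "deep_length": [1, 3, 5, 7],
    "nonspecific_length": [3, 5, 7, 9],
}
```

OAT needs only 12 training runs for this grid instead of 64 for a full grid search, at the cost of ignoring interactions between the factors.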

Figure 4.

Investigation of prompt hyper-parameters.

- Ablation on Deep Structure:

As illustrated in Figure 2, the DCPE textual encoder places an attention structure between consecutive layers. The prompt output of each layer is fed into that layer's attention structure, and the result is combined with the deep prompt of the subsequent layer to form the prompt that is input into the next layer. To determine the effectiveness of the DCPE module, we run ablation experiments with the following setups: (1) DCPE is not used and only the CSP module is applied; each layer's prompt output is dropped and the deep prompt is fed directly into the subsequent layer (CSP+deep). (2) All layers share the parameters of a single attention structure (single attention). (3) Each layer has its own attention structure with independent parameters (multi attention); this is our proposed CSP-DCPE. (4) Each layer's attention is replaced with a multi-layer perceptron (MLP) with independent parameters (multi MLP). (5) Each layer's attention is replaced with a long short-term memory (LSTM) unit with independent parameters (multi LSTM). Table 5 presents all results. Adding single attention improves base accuracy, novel accuracy, and harmonic mean by 0.29%, 0.32%, and 0.31% over CSP+deep, which has no deep structure. This suggests that the deep structure strengthens the model's feature extraction, filtering out redundant information and passing valid information on for subsequent processing. Multi MLP improves base accuracy by 0.57%, novel accuracy by 0.81%, and harmonic mean by 0.7% over single attention.
This suggests that giving each layer independent rather than shared parameters lets each layer develop distinct capabilities and extract comprehensive information at different scales, which improves accuracy. Multi LSTM improves base accuracy by 0.54%, novel accuracy by 0.18%, and harmonic mean by 0.35% over multi MLP, indicating that, thanks to its memory and its ability to forget information, an LSTM handles sequential data with long-term dependencies more effectively than an MLP. Finally, multi attention (DCPE) improves base accuracy, novel accuracy, and harmonic mean by 0.66%, 0.28%, and 0.45% over multi LSTM. This provides evidence that, for this kind of long, sequential, multi-layer iterative data, the attention mechanism is more flexible and more beneficial than an LSTM.
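The inter-layer refinement described above can be sketched as follows. This is a toy, pure-Python illustration under stated assumptions, not the authors' implementation: we assume single-head scaled dot-product self-attention over the previous layer's prompt-token outputs, identity projections in the example, equal token counts for the prompt output and the next deep prompt, and element-wise addition as the way the two are "combined". `PromptAttention` and `next_layer_prompt` are hypothetical names.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are tokens, columns are embedding dimensions


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


class PromptAttention:
    """Single-head scaled dot-product self-attention over prompt tokens (hypothetical form)."""

    def __init__(self, w_q: Matrix, w_k: Matrix, w_v: Matrix):
        self.w_q, self.w_k, self.w_v = w_q, w_k, w_v

    def __call__(self, tokens: Matrix) -> Matrix:
        q = matmul(tokens, self.w_q)
        k = matmul(tokens, self.w_k)
        v = matmul(tokens, self.w_v)
        scale = 1.0 / math.sqrt(len(k[0]))
        scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
        weights = [softmax(row) for row in scores]
        return matmul(weights, v)


def next_layer_prompt(prev_prompt_out: Matrix, next_deep_prompt: Matrix,
                      attn: PromptAttention) -> Matrix:
    """Refine the previous layer's prompt outputs, then add the next layer's deep prompt."""
    refined = attn(prev_prompt_out)
    return [[r + d for r, d in zip(r_row, d_row)]
            for r_row, d_row in zip(refined, next_deep_prompt)]


def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


# "Multi attention": one independently parameterized module per layer transition.
depth, width = 3, 2
multi = [PromptAttention(identity(width), identity(width), identity(width))
         for _ in range(depth - 1)]
```

Building one `PromptAttention` per layer transition corresponds to the multi attention variant, whereas reusing a single instance for every transition corresponds to single attention. Replacing `PromptAttention` with an MLP or LSTM cell of the same input and output shape would give the multi MLP and multi LSTM variants.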

Table 5.

Ablation results for the deep structure, averaged over 11 datasets.

- Experiment on Computational Efficiency:

As shown in Table 6, we compare the computational efficiency and performance of the following configurations: (1) non-specific+deep, which combines non-specific prompts with deep prompts; (2) CSP, which uses Category-Specific Prompts only; (3) CSP+deep, which adds deep prompts to CSP; (4) DCPE, which applies Deep Contextual Prompt Enhancement on its own; and (5) CSP-DCPE, our final framework integrating CSP and DCPE. The non-specific+deep configuration improves the harmonic mean by 2.32% over the CoOp baseline while requiring far fewer training epochs, at the cost of 14.33 K additional parameters and no change in FLOPs, which demonstrates that deep prompts strengthen the model's representations. CSP also performs well, improving the harmonic mean over CoOp by 2.59% with 33.43 K additional parameters, which confirms its ability to extract category-specific semantic features. Although DCPE improves the harmonic mean by 0.63% over CSP+deep, it adds substantial parameter and FLOPs overhead because of the attention modules inserted between text-encoder layers; this design strengthens feature learning at the expense of computational efficiency. CSP-DCPE achieves the highest harmonic mean and is the best-performing solution, whereas CSP+deep is more parameter- and compute-efficient, making it preferable in resource-constrained scenarios.

Table 6.

Efficiency analysis of multiple methods over 11 datasets.

5. Conclusions

Large-scale vision–language models such as CLIP [1] and FILIP [5] have exhibited remarkable generalization capabilities in image-classification tasks. How best to adapt these large VLMs to downstream tasks, however, remains a significant challenge. Because the models are large and training data are limited, fine-tuning the entire model for a specific task increases the risk of overfitting, and it also requires substantial storage, which is inefficient. Prompt learning is a promising way to address these challenges. However, existing methods do not consider the category-specific information associated with the category labels, which leads to suboptimal performance. To address this limitation, we propose a novel prompt-learning approach, Category-Specific Prompt (CSP). Furthermore, existing deep prompt-learning methods cannot effectively use the deep information transmitted from the previous layer: they either discard valuable information or feed redundant information into the subsequent layer, which wastes resources. To better exploit the features from the preceding layer, and thereby make better use of the combined category-specific and non-specific prompts, we propose a novel deep prompt-learning approach, Deep Contextual Prompt Enhancement (DCPE). Our model incorporates both task-oriented and instance-oriented information, derived from the CSP and DCPE modules, respectively. Experiments show that our approach outperforms existing methods in both the few-shot and base-to-novel generalization settings.

Author Contributions

Conceptualization, Supervision and Validation, C.W.; Investigation, Software and Writing—Original Draft, Y.W.; Methodology, Data Curation, Q.X.; Formal analysis, Writing—Review and Editing, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the grants from the Natural Science Foundation of Shandong Province (ZR2024MF145), the National Natural Science Foundation of China (62072469), and the Qingdao Natural Science Foundation (23-2-1-162-zyyd-jch).

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 8748–8763.
2. Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to prompt for vision–language models. Int. J. Comput. Vis. 2022, 130, 2337–2348.
3. Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision–language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 4904–4916.
4. Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional prompt learning for vision–language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16816–16825.
5. Yao, L.; Huang, R.; Hou, L.; Lu, G.; Niu, M.; Xu, H.; Liang, X.; Li, Z.; Jiang, X.; Xu, C. FILIP: Fine-grained interactive language-image pre-training. arXiv 2021, arXiv:2111.07783.
6. Li, X.L.; Liang, P. Prefix-tuning: Optimizing continuous prompts for generation. arXiv 2021, arXiv:2101.00190.
7. Liu, X.; Zheng, Y.; Du, Z.; Ding, M.; Qian, Y.; Yang, Z.; Tang, J. GPT understands, too. AI Open 2024, 5, 208–215.
8. Lester, B.; Al-Rfou, R.; Constant, N. The power of scale for parameter-efficient prompt tuning. arXiv 2021, arXiv:2104.08691.
9. Gu, Y.; Han, X.; Liu, Z.; Huang, M. PPT: Pre-trained prompt tuning for few-shot learning. arXiv 2021, arXiv:2109.04332.
10. Xu, C.; Shen, H.; Shi, F.; Chen, B.; Liao, Y.; Chen, X.; Wang, L. Progressive Visual Prompt Learning with Contrastive Feature Re-formation. arXiv 2023, arXiv:2304.08386.
11. Khattak, M.U.; Rasheed, H.; Maaz, M.; Khan, S.; Khan, F.S. MaPLe: Multi-modal prompt learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 19113–19122.
12. Miao, Y.; Li, S.; Tang, J.; Wang, T. MuDPT: Multi-modal Deep-symphysis Prompt Tuning for Large Pre-trained Vision-Language Models. arXiv 2023, arXiv:2306.11400.
13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.
14. Desai, K.; Kaul, G.; Aysola, Z.; Johnson, J. RedCaps: Web-curated image–text data created by the people, for the people. arXiv 2021, arXiv:2111.11431.
15. Srinivasan, K.; Raman, K.; Chen, J.; Bendersky, M.; Najork, M. WIT: Wikipedia-based image text dataset for multimodal multilingual machine learning. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, Canada, 11–15 July 2021; pp. 2443–2449.
16. Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image–text models. Adv. Neural Inf. Process. Syst. 2022, 35, 25278–25294.
17. Hu, X.; Gan, Z.; Wang, J.; Yang, Z.; Liu, Z.; Lu, Y.; Wang, L. Scaling up vision–language pre-training for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17980–17989.
18. Kim, W.; Son, B.; Kim, I. ViLT: Vision-and-language transformer without convolution or region supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 5583–5594.
19. Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. Adv. Neural Inf. Process. Syst. 2019, 32, 13–23.
20. Tan, H.; Bansal, M. LXMERT: Learning cross-modality encoder representations from transformers. arXiv 2019, arXiv:1908.07490.
21. Su, W.; Zhu, X.; Cao, Y.; Li, B.; Lu, L.; Wei, F.; Dai, J. VL-BERT: Pre-training of generic visual-linguistic representations. arXiv 2019, arXiv:1908.08530.
22. Li, J.; Selvaraju, R.; Gotmare, A.; Joty, S.; Xiong, C.; Hoi, S.C.H. Align before fuse: Vision and language representation learning with momentum distillation. Adv. Neural Inf. Process. Syst. 2021, 34, 9694–9705.
23. Huo, Y.; Zhang, M.; Liu, G.; Lu, H.; Gao, Y.; Yang, G.; Wen, J.; Zhang, H.; Xu, B.; Zheng, W.; et al. WenLan: Bridging vision and language by large-scale multi-modal pre-training. arXiv 2021, arXiv:2103.06561.
24. Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. CLIP-Adapter: Better vision–language models with feature adapters. Int. J. Comput. Vis. 2024, 132, 581–595.
25. Kim, K.; Laskin, M.; Mordatch, I.; Pathak, D. How to Adapt Your Large-Scale Vision-and-Language Model. 2022. Available online: https://openreview.net/forum?id=EhwEUb2ynIa (accessed on 1 February 2025).
26. Zhang, R.; Fang, R.; Zhang, W.; Gao, P.; Li, K.; Dai, J.; Qiao, Y.; Li, H. Tip-Adapter: Training-free CLIP-Adapter for better vision–language modeling. arXiv 2021, arXiv:2111.03930.
27. Feng, C.; Zhong, Y.; Jie, Z.; Chu, X.; Ren, H.; Wei, X.; Xie, W.; Ma, L. PromptDet: Towards open-vocabulary detection using uncurated images. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 701–717.
28. Gu, X.; Lin, T.Y.; Kuo, W.; Cui, Y. Open-vocabulary object detection via vision and language knowledge distillation. arXiv 2021, arXiv:2104.13921.
29. Maaz, M.; Rasheed, H.; Khan, S.; Khan, F.S.; Anwer, R.M.; Yang, M.H. Class-agnostic object detection with multi-modal transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 512–531.
30. Bangalath, H.; Maaz, M.; Khattak, M.U.; Khan, S.H.; Shahbaz Khan, F. Bridging the gap between object and image-level representations for open-vocabulary detection. Adv. Neural Inf. Process. Syst. 2022, 35, 33781–33794.
31. Zang, Y.; Li, W.; Zhou, K.; Huang, C.; Loy, C.C. Open-vocabulary DETR with conditional matching. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 106–122.
32. Zhou, X.; Girdhar, R.; Joulin, A.; Krähenbühl, P.; Misra, I. Detecting twenty-thousand classes using image-level supervision. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 350–368.
33. Ding, J.; Xue, N.; Xia, G.S.; Dai, D. Decoupling zero-shot semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11583–11592.
34. Lüddecke, T.; Ecker, A. Image segmentation using text and image prompts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7086–7096.
35. Rao, Y.; Zhao, W.; Chen, G.; Tang, Y.; Zhu, Z.; Huang, G.; Zhou, J.; Lu, J. DenseCLIP: Language-guided dense prediction with context-aware prompting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18082–18091.
36. Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. (CSUR) 2020, 53, 1–34.
37. Jiang, W.; Huang, K.; Geng, J.; Deng, X. Multi-scale metric learning for few-shot learning. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1091–1102.
38. Hao, F.; He, F.; Cheng, J.; Wang, L.; Cao, J.; Tao, D. Collect and select: Semantic alignment metric learning for few-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8460–8469.
39. Li, H.; Ge, S.; Gao, C.; Gao, H. Few-shot object detection via high-and-low resolution representation. Comput. Electr. Eng. 2022, 104, 108438.
40. Sun, Q.; Liu, Y.; Chua, T.S.; Schiele, B. Meta-transfer learning for few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 403–412.
41. Hospedales, T.; Antoniou, A.; Micaelli, P.; Storkey, A. Meta-learning in neural networks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5149–5169.
42. Wang, J.X. Meta-learning in natural and artificial intelligence. Curr. Opin. Behav. Sci. 2021, 38, 90–95.
43. Lei, S.; Dong, B.; Shan, A.; Li, Y.; Zhang, W.; Xiao, F. Attention meta-transfer learning approach for few-shot iris recognition. Comput. Electr. Eng. 2022, 99, 107848.
44. Yao, H.; Zhang, R.; Xu, C. Visual-language prompt tuning with knowledge-guided context optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6757–6767.
45. Zhu, B.; Niu, Y.; Han, Y.; Wu, Y.; Zhang, H. Prompt-aligned gradient for prompt tuning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 15659–15669.
46. Lu, Y.; Liu, J.; Zhang, Y.; Liu, Y.; Tian, X. Prompt distribution learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5206–5215.
47. Zhang, Y.; Fei, H.; Li, D.; Yu, T.; Li, P. Prompting through prototype: A prototype-based prompt learning on pretrained vision–language models. arXiv 2022, arXiv:2210.10841.
48. Jia, M.; Tang, L.; Chen, B.C.; Cardie, C.; Belongie, S.; Hariharan, B.; Lim, S.N. Visual prompt tuning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 709–727.
49. Lee, D.; Song, S.; Suh, J.; Choi, J.; Lee, S.; Kim, H.J. Read-only Prompt Optimization for Vision-Language Few-Shot Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1401–1411.
50. Liu, X.; Tang, W.; Lu, J.; Zhao, R.; Guo, Z.; Tan, F. Deeply Coupled Cross-Modal Prompt Learning. arXiv 2023, arXiv:2305.17903.
51. Cho, E.; Kim, J.; Kim, H.J. Distribution-Aware Prompt Tuning for Vision-Language Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 22004–22013.
52. Ju, C.; Han, T.; Zheng, K.; Zhang, Y.; Xie, W. Prompting visual-language models for efficient video understanding. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 105–124.
53. Yu, X.; Yoo, S.; Lin, Y. CLIPCEIL: Domain Generalization through CLIP via Channel rEfinement and Image–text aLignment. In Proceedings of the Thirty-Eighth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024.
54. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.
55. Fei-Fei, L.; Fergus, R.; Perona, P. Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; IEEE: Piscataway, NJ, USA, 2004; p. 178.
56. Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-grained visual classification of aircraft. arXiv 2013, arXiv:1306.5151.
57. Nilsback, M.E.; Zisserman, A. Automated flower classification over a large number of classes. In Proceedings of the 2008 Sixth Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 722–729.
58. Bossard, L.; Guillaumin, M.; Van Gool, L. Food-101 – Mining discriminative components with random forests. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VI 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 446–461.
59. Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C. Cats and dogs. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3498–3505.
60. Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 554–561.
61. Cimpoi, M.; Maji, S.; Kokkinos, I.; Mohamed, S.; Vedaldi, A. Describing textures in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3606–3613.
62. Helber, P.; Bischke, B.; Dengel, A.; Borth, D. EuroSAT: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2217–2226.
63. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.
64. Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. SUN database: Large-scale scene recognition from abbey to zoo. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 3485–3492.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).