1.1. Background

Tools for communication in remote and virtual environments, such as video chat and metaverse platforms in VR spaces, have become increasingly prevalent. These tools are used not only for everyday communication but also in domains that have traditionally relied on face-to-face interaction, such as healthcare, welfare, education, and tourism. By removing the constraints of physical distance, they could help address the shortage of skilled personnel in these industries.

However, current communication tools are limited in the range of information they can transmit. In face-to-face interactions, humans exchange not only verbal information but also nonverbal cues such as facial expressions, body movements, touch, odors, interpersonal distance, and gaze. These nonverbal cues play critical roles in expressing emotions and attitudes, shaping impressions, and interpreting the emotions of others, and they are often used alongside verbal communication [1,2,3]. In contrast, remote and virtual environments lack many of these cues because cameras restrict visual information and olfactory and tactile feedback is absent, which degrades communication quality relative to face-to-face interaction.

To address these challenges, research on devices that transmit haptic information, which is closely related to nonverbal communication, has attracted significant attention. These studies have proposed various methods to replicate social touches such as tapping, hugging, massaging, and stroking [3,4,5,6]. These methods have been shown to improve trust, social presence, and emotional expression in remote and virtual communication, indicating their potential to enhance the overall quality of communication.

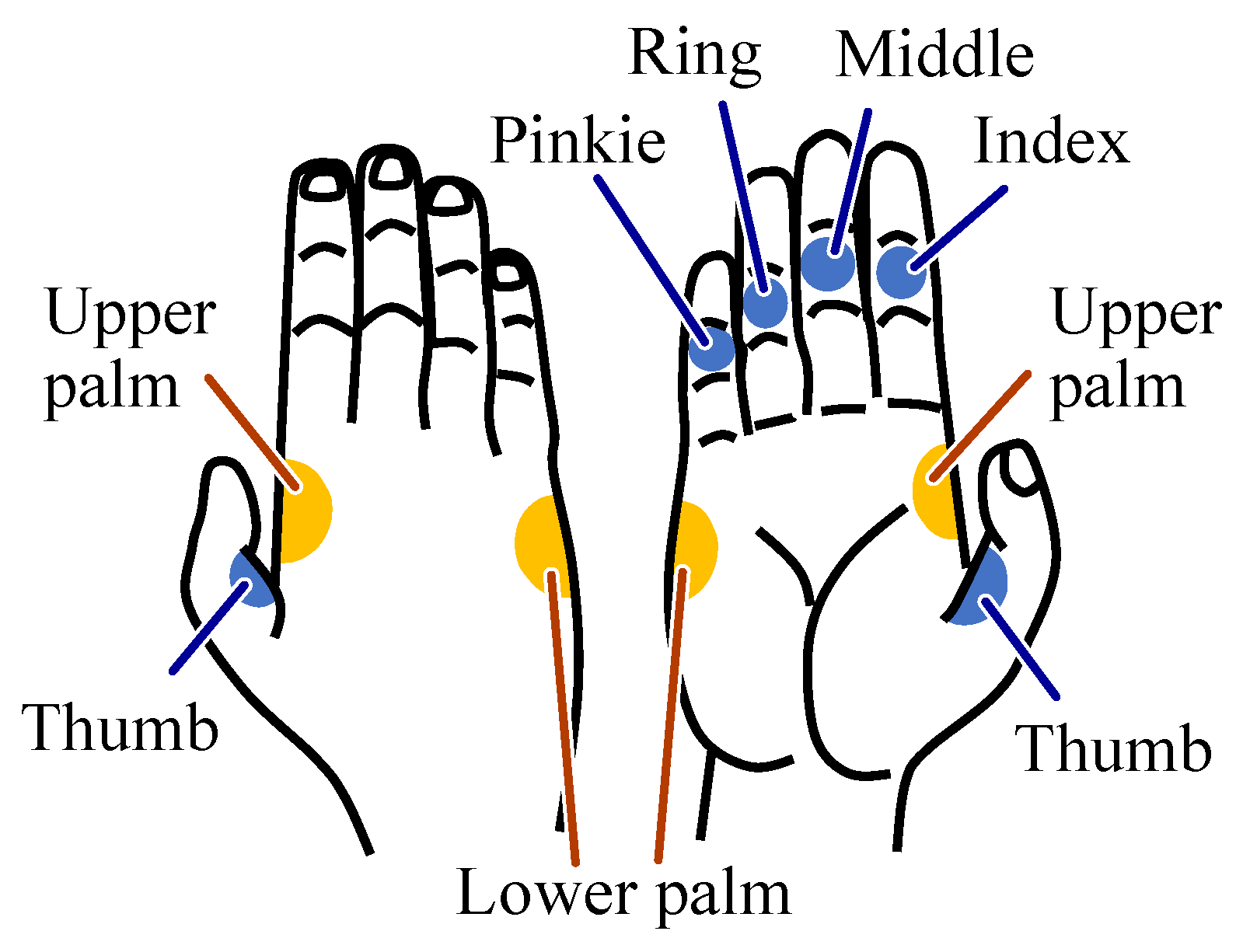

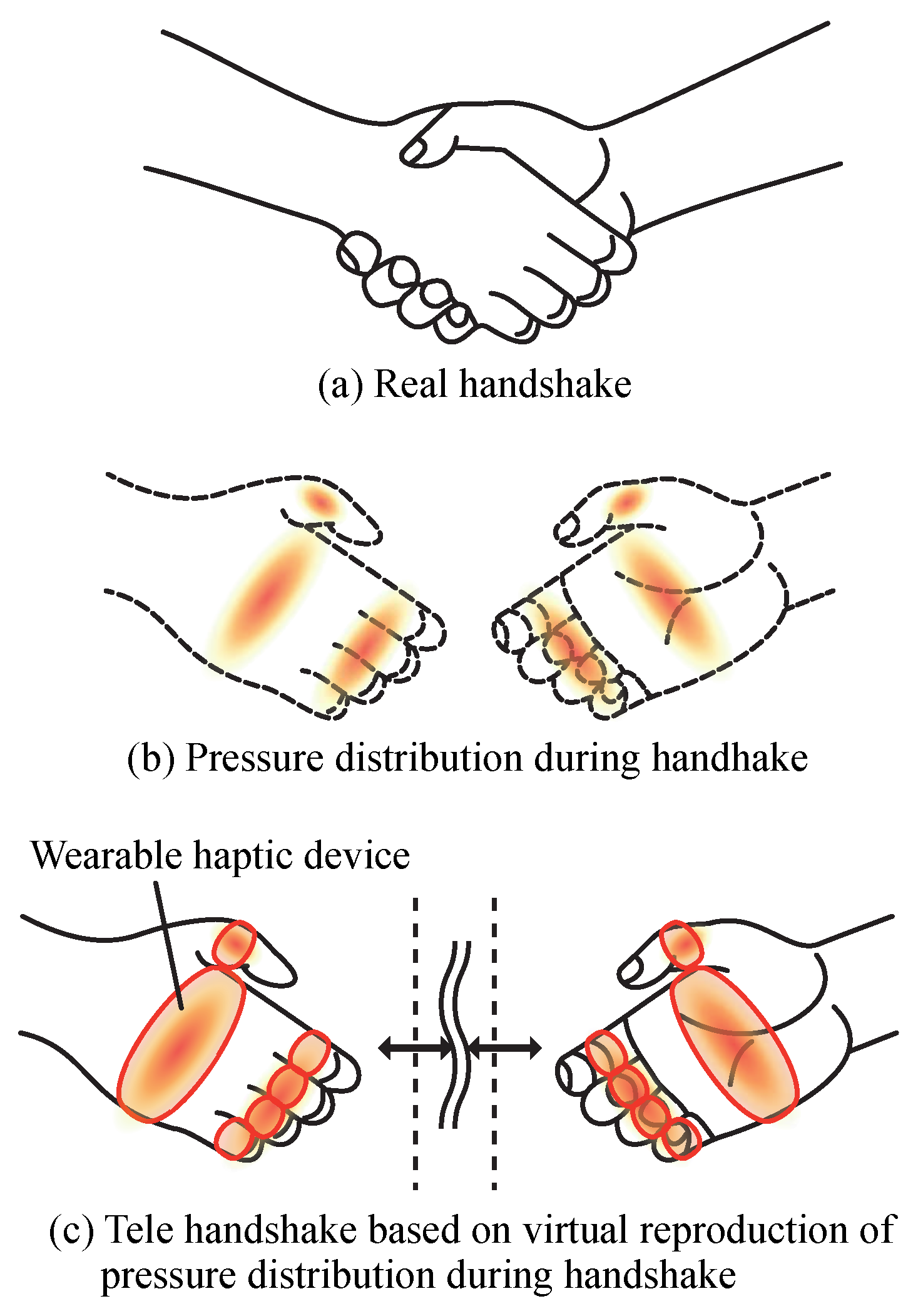

This study focuses on handshakes, a fundamental form of social touch among humans. Unlike unidirectional social touches such as stroking, in which one party acts upon the other, handshakes involve mutual interaction, allowing each party to express themselves and understand the other at the same time. This mutual adjustment of actions can substantially enhance the communicative effect. Furthermore, unlike other interactive social touches such as hugs, which are often limited to close relationships, handshakes are used in diverse contexts, including greetings among friends, business settings, and sports. Enabling handshakes in remote and virtual environments is therefore expected to be applicable across a wide range of scenarios, regardless of the relationship between participants. Additionally, because handshakes are globally recognized as a form of tactile interaction, they are expected to facilitate communication across cultures [7].

A remote handshake system has applications in multiple fields. In professional settings, such as international business negotiations or virtual job interviews, a remote handshake could serve as a symbolic and tactile substitute for the in-person handshake used to establish rapport and trust. In virtual reality and the metaverse, remote handshakes could significantly improve the realism and social presence of interactions, particularly on platforms for virtual collaboration, training, or social networking.

1.2. Related Works

Several approaches have been proposed to achieve handshakes in remote and virtual environments using robotic hands. Vigni et al. applied a controller that, like humans, integrates both active and passive actions to improve the quality of handshakes [8]. Nakanishi et al. developed a robotic hand that replicates human hand temperature, softness, and grip strength [9]. They reported that handshakes mediated by this robotic hand during video chats created a more favorable impression of the remote partner. Ammi et al. implemented handshake functionality in robots to enhance their emotional expressiveness [10]. Their results indicated that tactile feedback during handshakes, combined with facial expressions, made it easier for humans to identify the robot's emotions than facial expressions alone. Faisal et al. developed a device capable of replicating gripping and shaking motions and demonstrated that human personality traits can be conveyed through remote handshakes [11].

While these studies successfully used robotic hands to replicate handshakes, they have notable limitations. Robotic hands tend to be large and stationary, which makes them difficult to install where space is constrained. As a result, these approaches are less feasible in home environments, a primary use case for communication tools. In VR settings, robotic hands can also restrict user mobility because of their limited actuation range, further reducing their practicality.

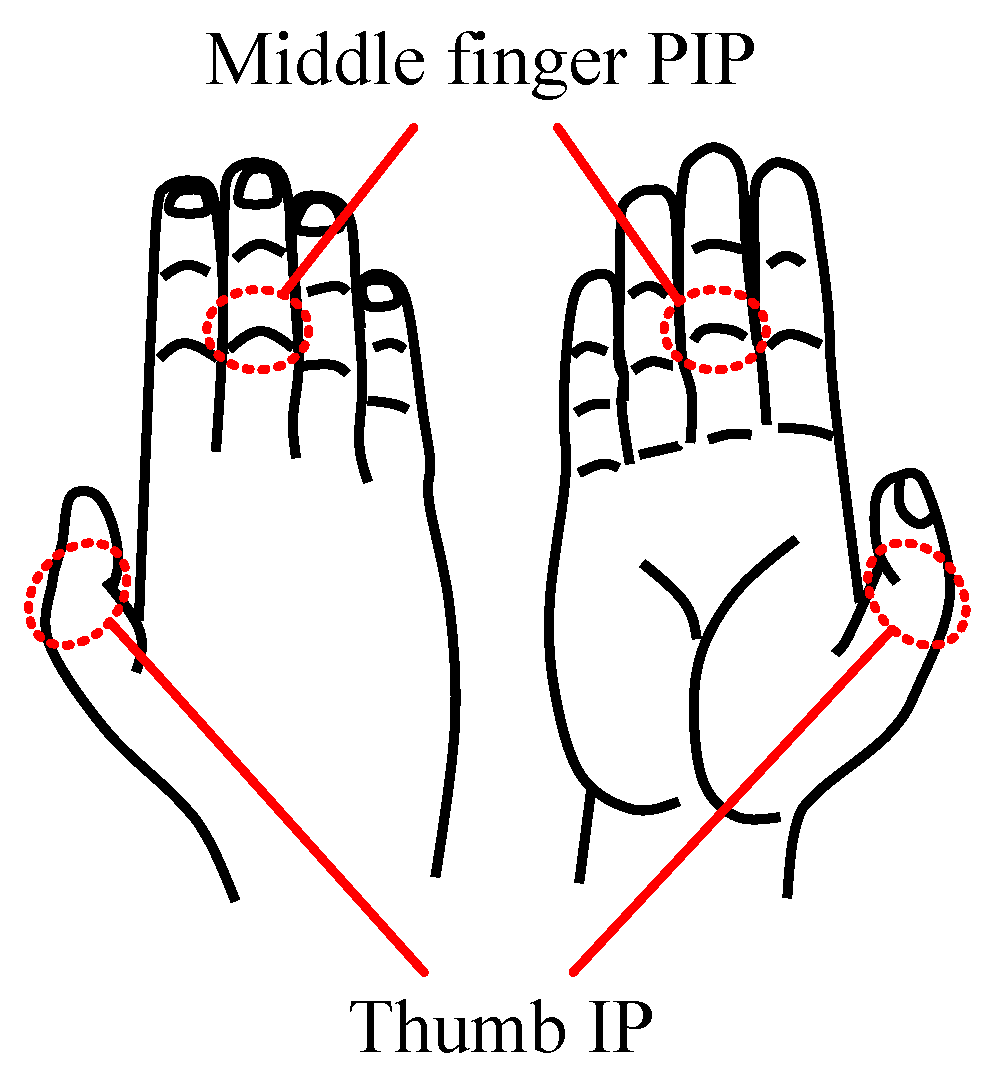

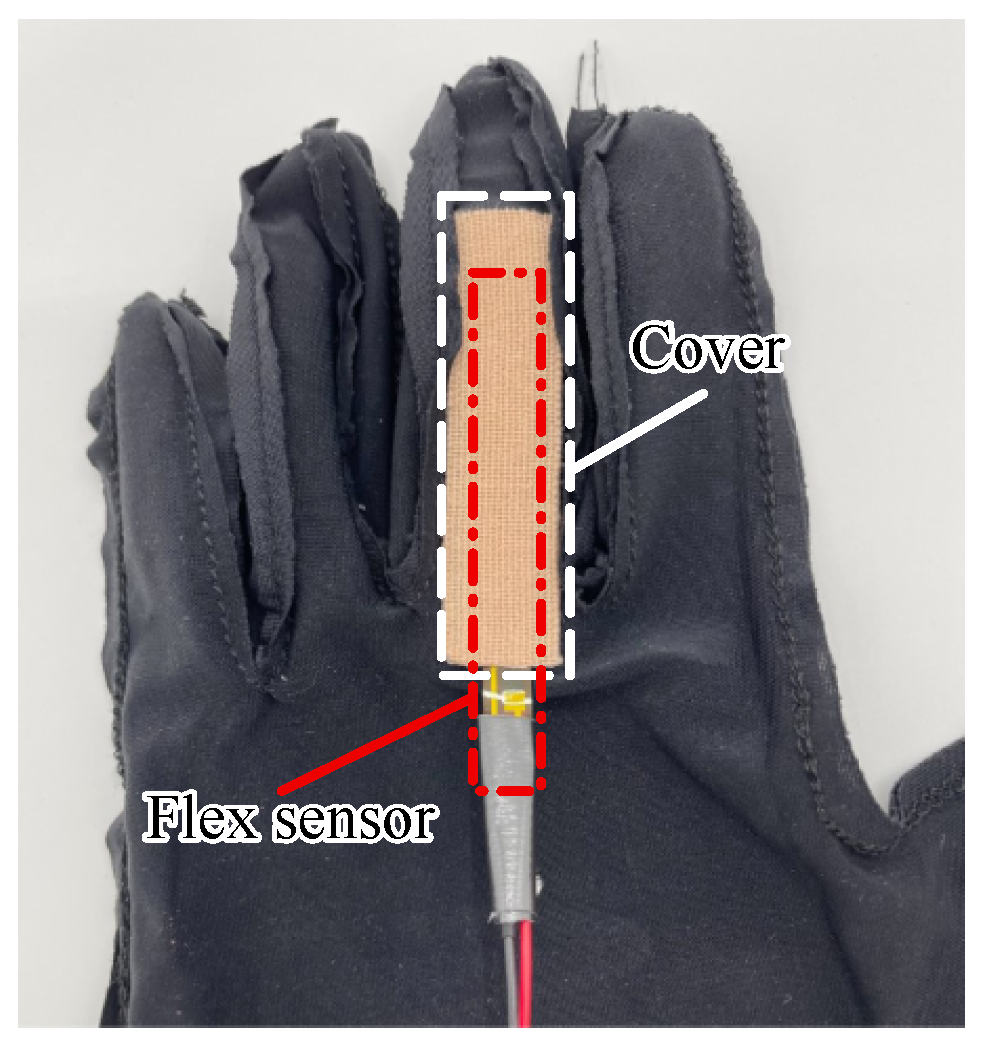

Other methods have explored wearable haptic devices for tactile feedback. For example, devices that provide force feedback during finger flexion have been proposed [12,13,14]. Although some of these devices are commercially available, they are often costly, which limits their practicality. Vibratory stimulation devices, which use mechanical vibrations to provide tactile feedback to the skin, have also been developed [15,16,17,18]. These devices are lightweight, compact, and cost-effective, which makes them well suited to wearable use. However, the tactile information they provide is limited to vibration, which may not fully replicate the forces experienced in real-world interactions.

Alternative approaches have also been proposed. Nagano et al. developed a skin suction device that uses air tubes to apply suction pressure to the fingertips, creating the illusion of pressure [19]. Abad et al. developed a pin-based device that provides tactile feedback to multiple fingers using five actuators [20]. Yem et al. designed an electrical stimulation device that stimulates the fingertips to create an illusion of pressure [21]. These methods offer high spatial resolution and precise control over where tactile feedback is applied, but they face challenges such as a limited maximum tactile intensity.

Finally, pressure-based feedback devices using soft materials have been proposed. Yamaguchi et al. developed a device with a bag-type actuator that expands as a low-boiling-point liquid evaporates, thereby applying pressure [22]. Yarosh et al. created a device that uses shape-memory alloys embedded in a band to squeeze the palm, providing pressure feedback [23]. While these approaches can simulate the pressure of a handshake and replicate thermal sensations, they do not allow users to adjust handshake intensity freely. Because handshake intensity is associated with personality traits [11,24] and emotions [25], it is crucial to develop a device and control model that allow users to freely express these factors through handshake intensity.