Improving VR Welding Simulator Tracking Accuracy Through IMU-SLAM Fusion

Abstract

1. Introduction

- We propose the first IMU-SLAM fusion architecture with motion pattern-based drift correction specifically for VR welding torch tracking.

- We present a mathematical formulation of an Extended Kalman Filter (EKF)-based fusion framework that integrates welding-specific motion models (high-frequency weaving, constant travel speed), which existing VI-SLAM systems do not model.

- We develop a periodic motion exploitation algorithm that pioneers the use of domain knowledge for SLAM drift correction by applying torch weaving patterns in the 3–7 Hz range for real-time drift compensation.

- We conduct the first comprehensive experimental validation in the VR welding training context, including a quantitative comparison with commercial trackers using the OptiTrack motion capture system as ground truth.

- We provide an open-source implementation that is reproducible and practically deployable.

2. Related Work

3. System Model and Formulation

3.1. State Representation

3.2. IMU Preintegration

3.3. Visual SLAM Integration

3.4. Error-State Kalman Filter

3.5. Welding Torch Motion Model

4. Proposed Methodology

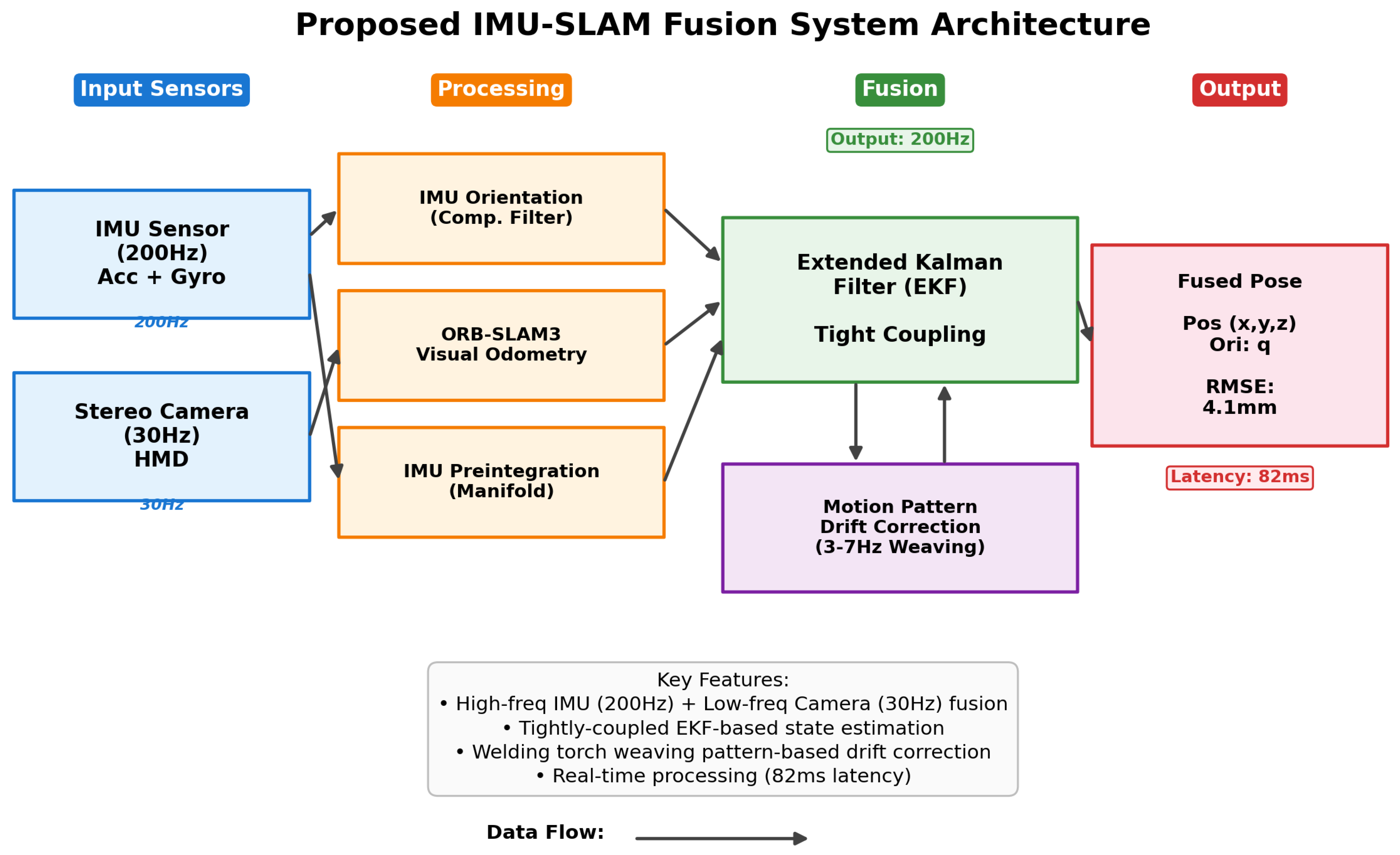

4.1. System Architecture

4.2. Algorithm Details

Algorithm 1. IMU-SLAM fusion main loop

Require: IMU stream, camera image stream

Ensure: Fused pose trajectory
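
The inputs and output listed for Algorithm 1 suggest a multi-rate loop: a prediction step on every IMU sample and a correction step whenever a SLAM pose arrives. The following is a minimal sketch of that structure, not the authors' implementation. The names `merge_streams`, `run_fusion`, `predict`, and `update` are hypothetical, and the camera stream is assumed to have already been turned into timestamped SLAM poses.

```python
import heapq
from typing import Any, Iterable, Iterator, List, Tuple

Sample = Tuple[float, Any]       # (timestamp in seconds, measurement)
Event = Tuple[float, str, Any]   # (timestamp, "imu" or "slam", measurement)


def merge_streams(imu: Iterable[Sample], slam: Iterable[Sample]) -> Iterator[Event]:
    """Interleave two time-sorted streams into one time-ordered event stream."""
    imu_events = ((t, "imu", m) for t, m in imu)
    slam_events = ((t, "slam", m) for t, m in slam)
    # The key keeps heapq from ever comparing the measurement payloads.
    return heapq.merge(imu_events, slam_events, key=lambda e: e[0])


def run_fusion(imu, slam, predict, update, state):
    """Predict on every IMU sample, correct on every SLAM pose.

    predict(state, imu_measurement, dt) and update(state, slam_measurement)
    are hypothetical callables standing in for the filter's two steps.
    """
    trajectory: List[Tuple[float, Any]] = []
    last_imu_t = None
    for t, kind, meas in merge_streams(imu, slam):
        if kind == "imu":
            dt = 0.0 if last_imu_t is None else t - last_imu_t
            state = predict(state, meas, dt)
            last_imu_t = t
        else:
            state = update(state, meas)
        trajectory.append((t, state))
    return trajectory
```

With a 200 Hz IMU stream and a 30 Hz pose stream, the loop performs roughly seven predictions per correction, which is why the prediction step has to be the cheap one.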

Algorithm 2. Frequency estimation and pattern fitting

Require: Lateral position series, window T, frequency range

Ensure: Amplitude A, phase, frequency f
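
Algorithm 2 takes a lateral position series, a window, and a frequency range, and returns an amplitude, a phase, and a frequency. One common way to obtain these is a coarse FFT peak search inside the band followed by a least-squares sinusoid fit. The sketch below follows that approach under stated assumptions: the Hann window, the grid refinement around the FFT peak, and the function name `estimate_weaving` are illustrative choices that do not come from the source.

```python
import numpy as np


def estimate_weaving(y, fs, f_range=(3.0, 7.0)):
    """Estimate (amplitude, phase, frequency) of the dominant in-band sinusoid.

    y       : lateral position samples over one sliding window
    fs      : sampling rate in Hz
    f_range : admissible weaving band in Hz
    Model   : y(t) ~ A * sin(2*pi*f*t + phase) + offset
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n) / fs

    # Coarse step: strongest FFT bin inside the band (mean removed, Hann window).
    spectrum = np.abs(np.fft.rfft((y - y.mean()) * np.hanning(n)))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = (freqs >= f_range[0]) & (freqs <= f_range[1])
    if not band.any():
        raise ValueError("window too short to resolve the requested band")
    f_coarse = freqs[band][np.argmax(spectrum[band])]

    # Fine step: linear least squares on [sin, cos, 1] over a small frequency grid.
    df = fs / n  # FFT bin spacing
    best = None
    for f in np.linspace(f_coarse - df, f_coarse + df, 201):
        basis = np.column_stack(
            [np.sin(2 * np.pi * f * t), np.cos(2 * np.pi * f * t), np.ones(n)]
        )
        coef, *_ = np.linalg.lstsq(basis, y, rcond=None)
        resid = float(np.sum((y - basis @ coef) ** 2))
        if best is None or resid < best[0]:
            best = (resid, f, coef)

    _, f_hat, (a, b, _offset) = best
    amplitude = float(np.hypot(a, b))   # a = A*cos(phase), b = A*sin(phase)
    phase = float(np.arctan2(b, a))
    return amplitude, phase, float(f_hat)
```

A 2.0 s window sampled at 200 Hz gives 400 samples and a 0.5 Hz bin spacing, so the refinement step is what allows sub-bin frequency estimates.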

4.3. Computational Complexity Analysis

5. Experimental Design

5.1. Experimental Environment

5.2. VR Training Environment Limitations

5.3. Dataset

5.3.1. Data Collection Protocol

- Fillet weld: Predominantly horizontal movement with relatively constant torch angle. Provides the most stable conditions from a tracking perspective.

- Butt weld: Requires precise straight-line tracking with minimal torch angle variation. Has the highest weaving frequency (5.2 Hz), requiring fast sensor fusion.

- Overhead weld: Performed with torch pointing upward, causing IMU gravity reference direction to be disadvantageous. Additionally, the arm-raised posture causes frequent camera occlusion. Presents the most challenging conditions from a tracking perspective.

5.3.2. Collected Data Summary

5.4. Evaluation Metrics

6. Experimental Results

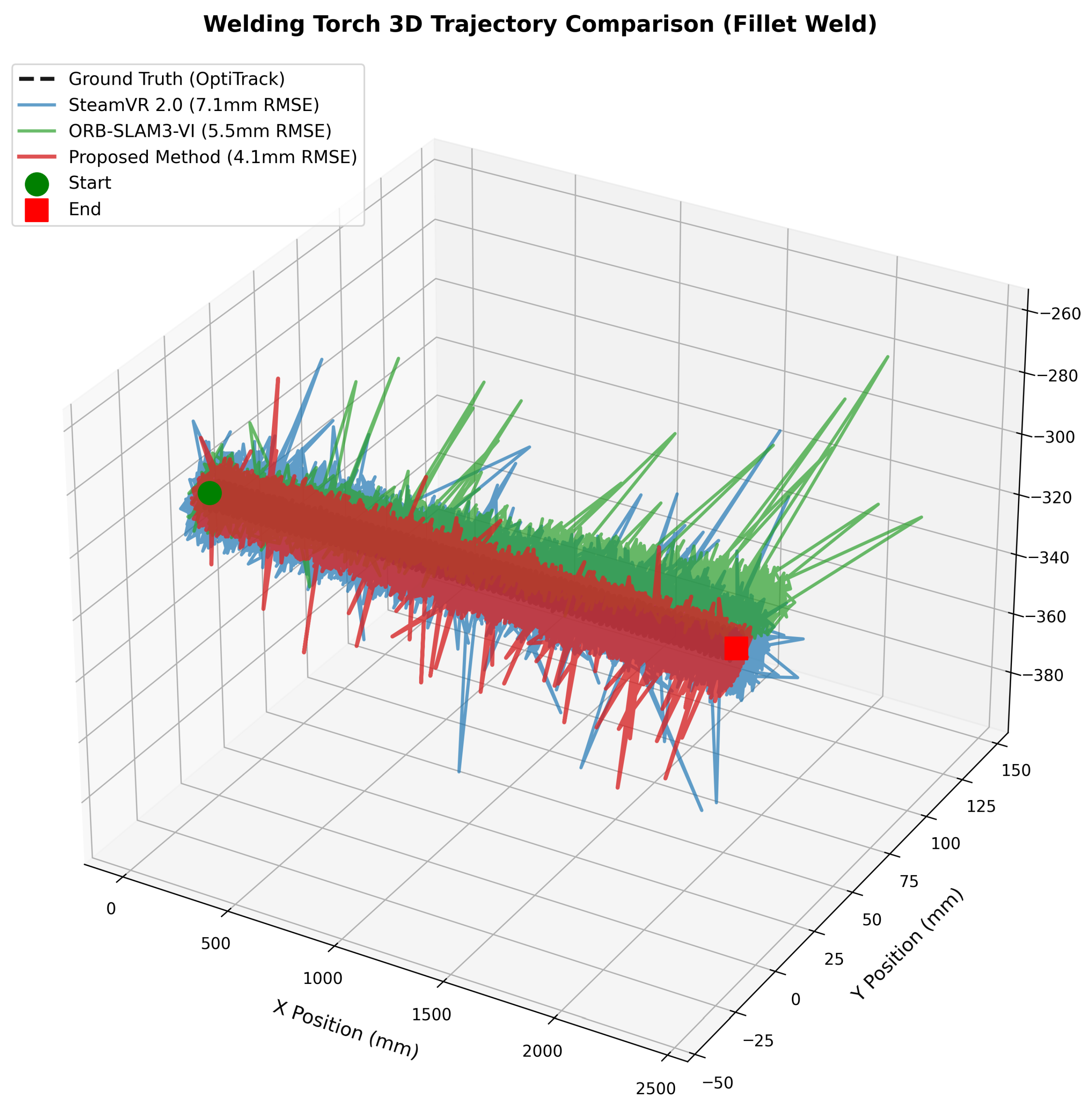

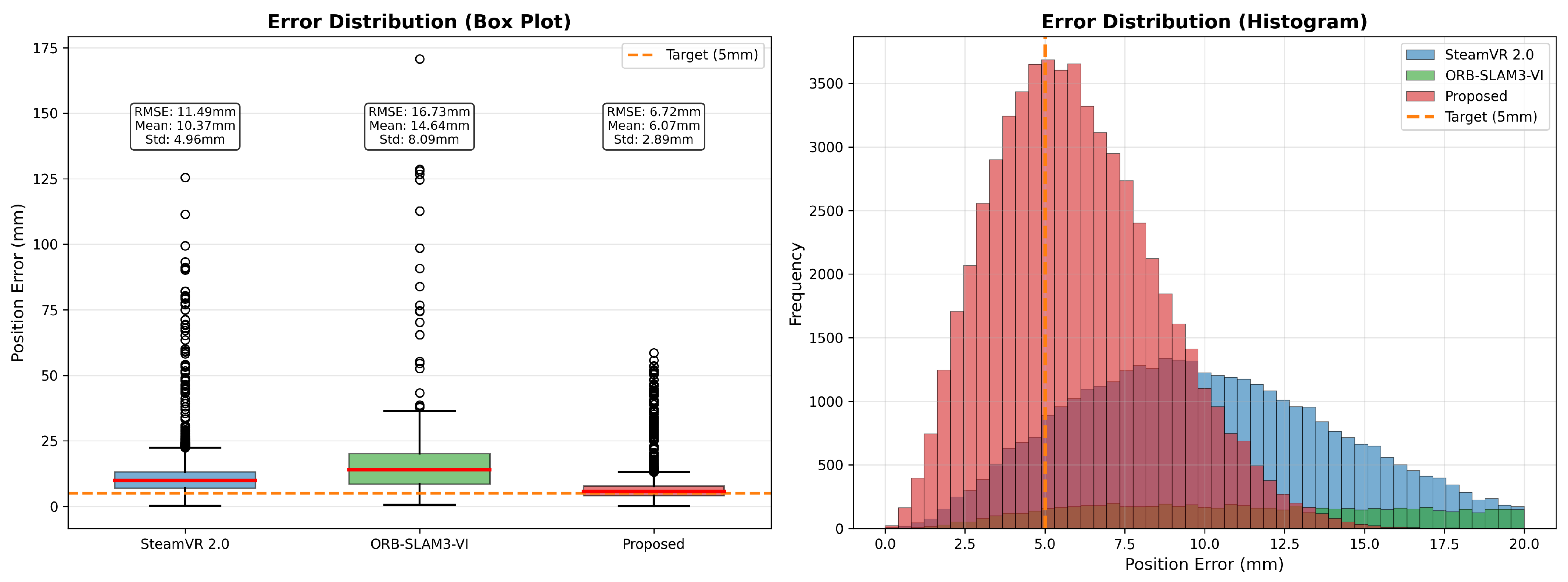

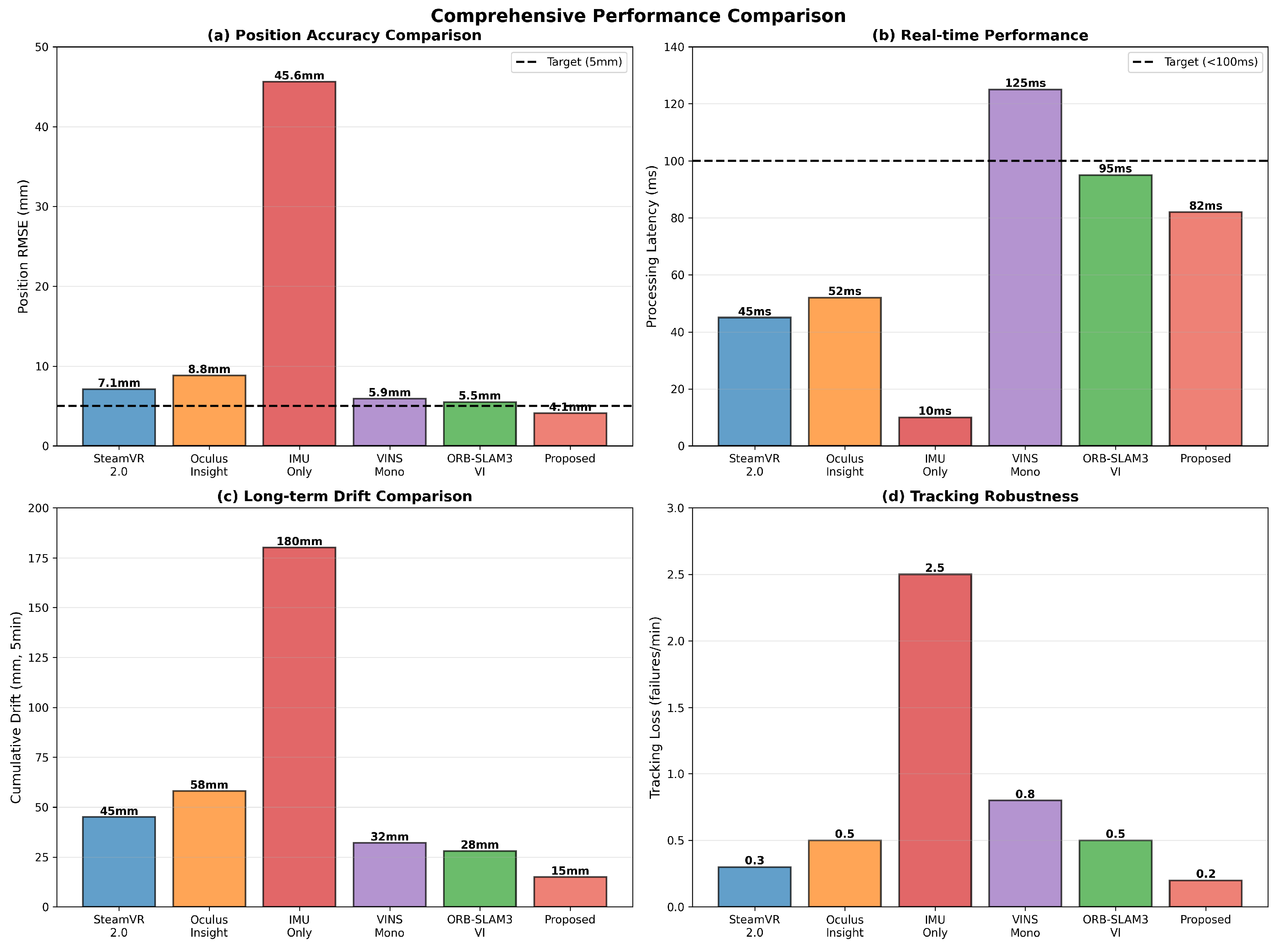

6.1. Position Accuracy Comparison

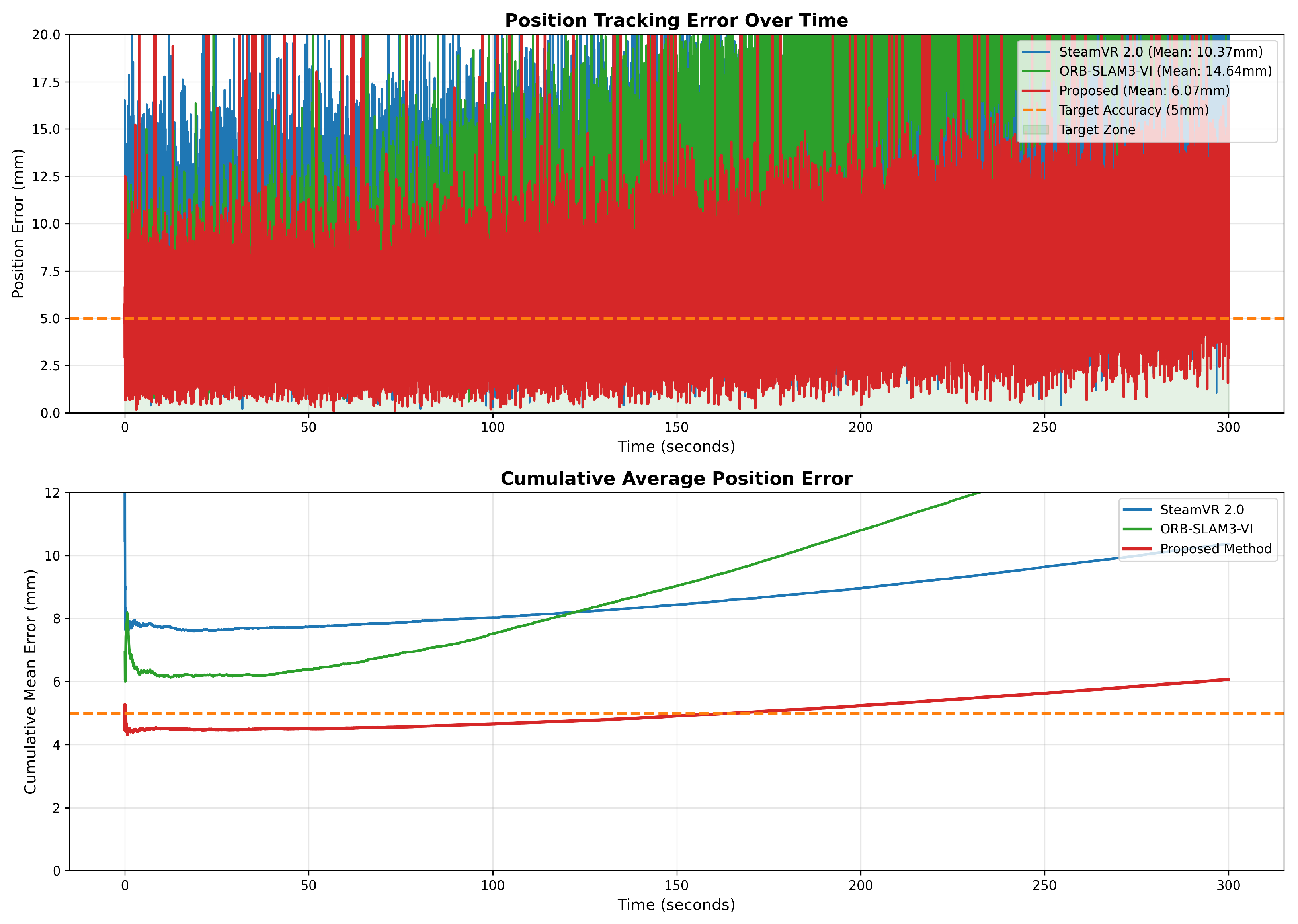

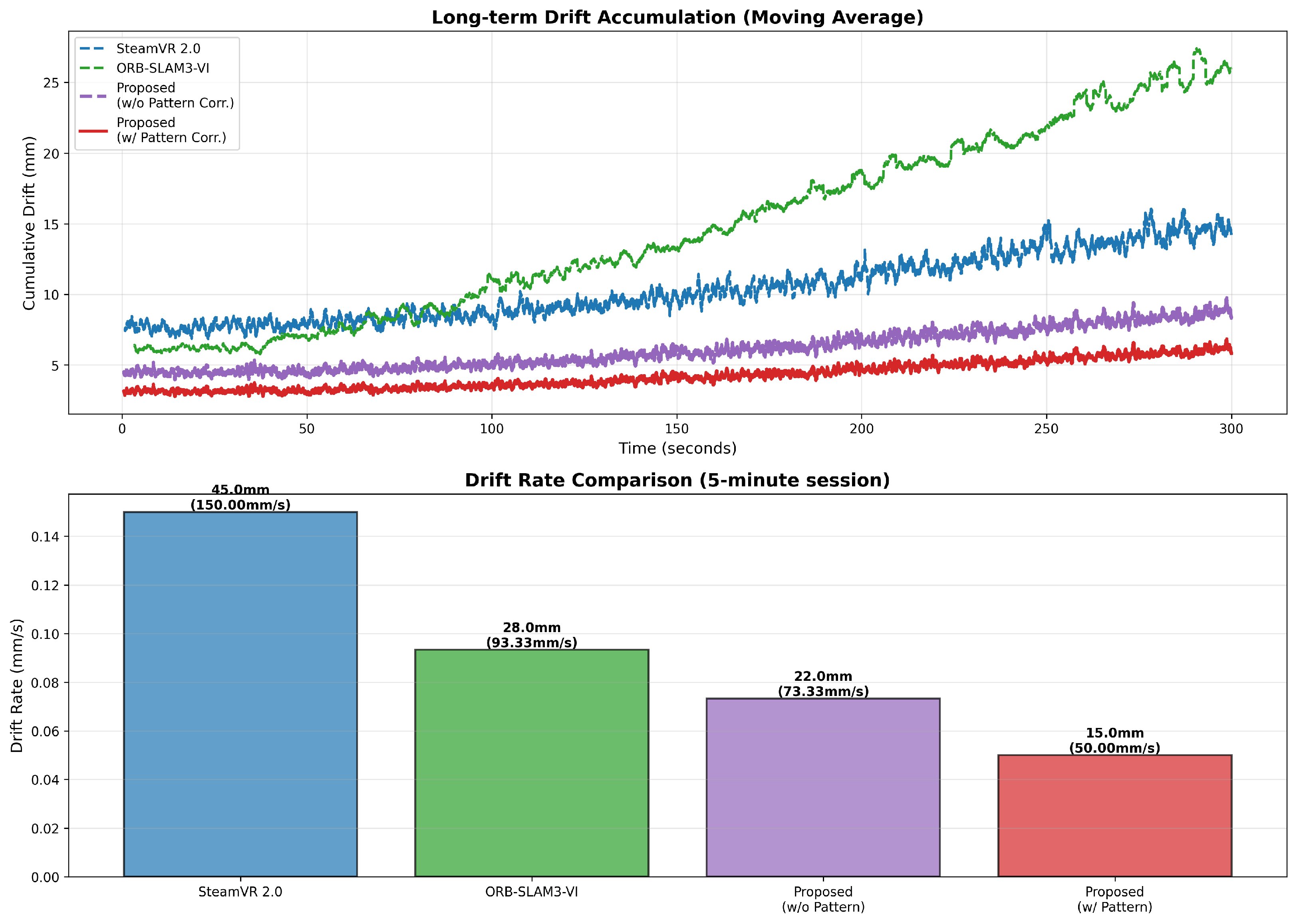

6.2. Drift Analysis

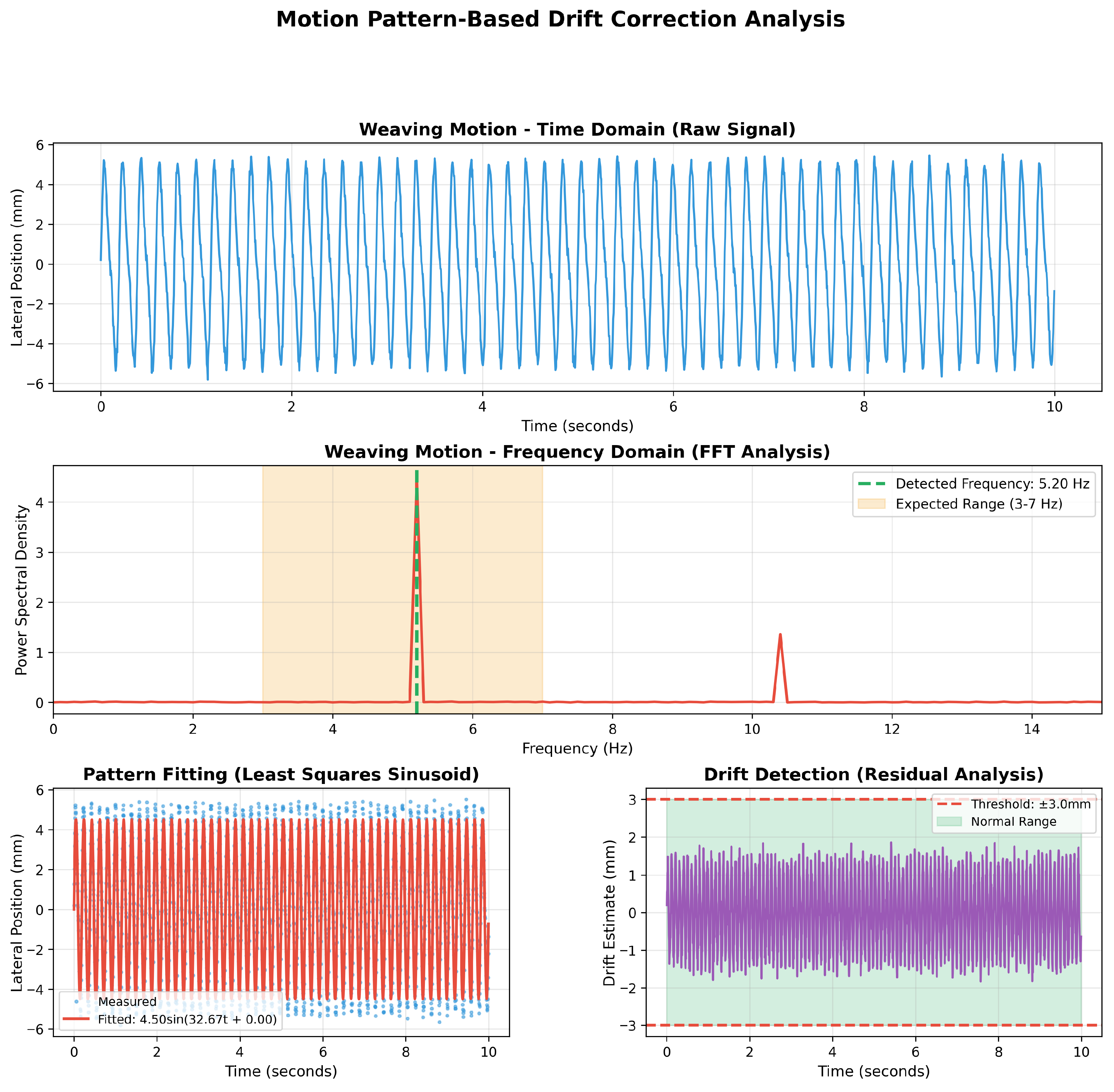

6.3. Frequency Analysis and Pattern Detection

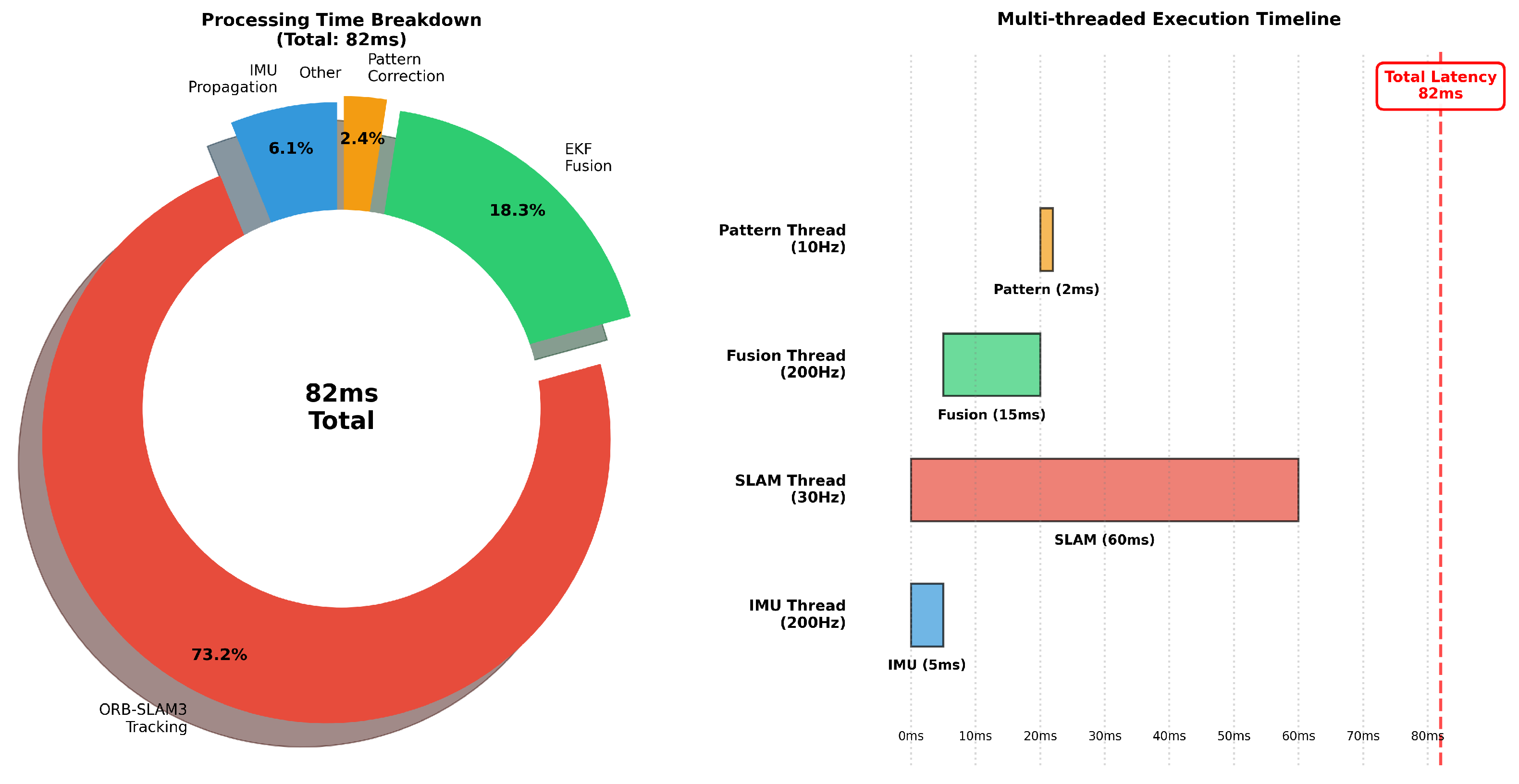

6.4. Processing Performance

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VR | Virtual Reality |
| IMU | Inertial Measurement Unit |
| SLAM | Simultaneous Localization and Mapping |
| VI-SLAM | Visual–Inertial SLAM |
| EKF | Extended Kalman Filter |
| ESKF | Error-State Kalman Filter |
| DOF | Degree of Freedom |
| RMSE | Root Mean Square Error |
| ORB | Oriented FAST and Rotated BRIEF |
| FFT | Fast Fourier Transform |
| PnP | Perspective-n-Point |
| RANSAC | Random Sample Consensus |
| GPS | Global Positioning System |
| MSCKF | Multi-State Constraint Kalman Filter |
| OKVIS | Open Keyframe-based VI SLAM |
| LOAM | LiDAR Odometry and Mapping |
| FAST-LIO | Fast LiDAR-Inertial Odometry |
| ZUPT | Zero Velocity Update |
| LSTM | Long Short-Term Memory |
| HMD | Head-Mounted Display |

Appendix A. Mathematical Notation Summary

| Symbol | Description |
|---|---|
| | Position in world frame at time t |
| | Velocity in world frame |
| | Quaternion: body-to-world rotation |
| | Rotation matrix from quaternion |
| | Accelerometer and gyroscope biases |
| | IMU measurements (body frame) |
| | Gravity vector in world frame |
| | Preintegrated position from frame i to j |
| | Full state vector (16-dimensional) |
| | Error state (15-dimensional) |
| | State transition Jacobian |
| | Measurement Jacobian |
| | Kalman gain |
| | State covariance matrix |
| | Process and measurement noise covariances |
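
The notation table lists a 16-dimensional full state and a 15-dimensional error state. The one-dimension gap is what an error-state filter typically produces: the quaternion has four components, while its error is a three-component small rotation. The sketch below illustrates that bookkeeping under assumptions. The block ordering (position, velocity, quaternion, accelerometer bias, gyroscope bias), the `[w, x, y, z]` quaternion convention, the right-multiplied local rotation error, and the names `NOMINAL`, `ERROR`, `quat_mul`, and `inject` are illustrative and are not confirmed by the source.

```python
import numpy as np

# Assumed block ordering; the source states only the dimensions 16 and 15.
NOMINAL = {"p": slice(0, 3), "v": slice(3, 6), "q": slice(6, 10),
           "b_a": slice(10, 13), "b_g": slice(13, 16)}
ERROR = {"p": slice(0, 3), "v": slice(3, 6), "theta": slice(6, 9),
         "b_a": slice(9, 12), "b_g": slice(12, 15)}


def quat_mul(a, b):
    """Hamilton product of two quaternions stored as [w, x, y, z]."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])


def inject(x, dx):
    """Fold a 15-D error state into a 16-D nominal state."""
    x = np.asarray(x, dtype=float)
    dx = np.asarray(dx, dtype=float)
    out = x.copy()
    # Vector-valued blocks are corrected additively.
    for key in ("p", "v", "b_a", "b_g"):
        out[NOMINAL[key]] += dx[ERROR[key]]
    # Rotation error: small-angle quaternion [1, theta/2], then renormalize.
    dq = np.concatenate(([1.0], 0.5 * dx[ERROR["theta"]]))
    q = quat_mul(x[NOMINAL["q"]], dq)
    out[NOMINAL["q"]] = q / np.linalg.norm(q)
    return out
```

Keeping the covariance in the 15-dimensional error space avoids the rank deficiency that a 16 × 16 covariance would inherit from the unit-norm constraint on the quaternion.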

Appendix B. Detailed Mathematical Derivations

Appendix B.1. Continuous-Time IMU Motion Equations

Appendix B.2. IMU Preintegration

Appendix B.3. IMU Residual

Appendix B.4. State Transition Matrix

Appendix B.5. Kalman Filter Update Equations

| Module | Time Complexity | Update Frequency |
|---|---|---|
| IMU propagation | | High (IMU rate) |
| ORB feature extraction | | Low (camera rate) |
| ORB feature matching | | Low (camera rate) |
| SLAM pose estimation | | Low (camera rate) |
| EKF prediction | | High (IMU rate) |
| EKF update | | Low (SLAM rate) |
| FFT frequency analysis | | Periodic |
| Pattern fitting | | Periodic |

| Category | Component | Specifications | Details |
|---|---|---|---|
| VR System | HMD | HTC Vive Pro | Dual AMOLED 2880 × 1600 @ 90 Hz |
| VR System | Tracking | Lighthouse 2.0 | 2 base stations |
| VR System | IMU | MPU-6050 | 200 Hz, integrated in VR controller |
| Reference System | Motion Capture | OptiTrack Prime 13 | 8 cameras, 240 fps, 1.3 MP |
| Reference System | Accuracy | - | <0.3 mm RMS |
| Reference System | Markers | Reflective markers | 6 markers, attached to torch handle |
| Computing Platform | CPU | Intel i7-10700K | 8 cores, 3.8 GHz |
| Computing Platform | GPU | NVIDIA RTX 3080 | 10 GB VRAM |
| Computing Platform | RAM | DDR4-3200 | 32 GB |
| Computing Platform | OS | Ubuntu 20.04 LTS | - |

| Parameter | Symbol | Value | Unit | Rationale |
|---|---|---|---|---|
| IMU sampling rate | | 200 | Hz | Capture high-frequency motion |
| Camera frame rate | | 30 | Hz | Computational constraints |
| EKF update rate | | 200 | Hz | Match IMU rate |
| Pattern analysis rate | | 10 | Hz | Sufficient for drift detection |
| Weaving frequency range | | [3, 7] | Hz | Typical welding motion |
| Drift threshold | | 3.0 | mm | Based on target accuracy |
| Correction gain | | 0.7 | - | Empirical tuning |
| Complementary filter coeff | | 0.02 | - | Balance gyro/accel trust |
| IMU accel noise | | 0.015 | mg/√Hz | From datasheet |
| IMU gyro noise | | 0.01 | °/s/√Hz | From datasheet |
| SLAM position noise | | 2.5 | mm | Empirical estimation |
| SLAM orientation noise | | 0.5 | deg | Empirical estimation |
| Sliding window length | T | 2.0 | s | 2–3 weaving cycles |
| SNR threshold | threshold_SNR | 5.0 | - | Reliability check |
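
The parameter table lists a drift threshold (3.0 mm), a correction gain (0.7), and an SNR threshold (5.0), but what survives of the text does not show how they interact. The sketch below gives one plausible reading, offered purely as an assumption: a correction is applied only when the fitted pattern is reliable (SNR at or above the threshold) and the deviation from the pattern exceeds the drift threshold, and then only a fraction of the deviation is removed. The names `PatternParams` and `correct_drift` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PatternParams:
    """Values copied from the parameter table; the field names are illustrative."""
    drift_threshold_mm: float = 3.0
    correction_gain: float = 0.7
    snr_threshold: float = 5.0


def correct_drift(estimate_mm, pattern_mm, snr, params=PatternParams()):
    """Pull the lateral estimate toward the fitted weaving pattern.

    Assumed gating logic (not taken from the source):
      - skip when the pattern fit is unreliable (snr below threshold)
      - skip when the deviation is within the drift threshold
      - otherwise remove correction_gain times the deviation
    """
    deviation = estimate_mm - pattern_mm
    if snr < params.snr_threshold:
        return estimate_mm
    if abs(deviation) < params.drift_threshold_mm:
        return estimate_mm
    return estimate_mm - params.correction_gain * deviation
```

A gain below 1 leaves part of the deviation in place, which trades slower convergence for robustness against a momentarily wrong pattern fit.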

| Weld Type | Number of Datasets | Total Duration | Participant Skill Level | Avg. Weaving Frequency |
|---|---|---|---|---|
| Fillet weld | 5 | 25 min | Novice 2, Intermediate 2, Expert 1 | 4.8 Hz |
| Butt weld | 5 | 25 min | Novice 2, Intermediate 2, Expert 1 | 5.2 Hz |
| Overhead weld | 5 | 25 min | Novice 1, Intermediate 2, Expert 2 | 4.3 Hz |
| Total | 15 | 75 min | Novice 5, Intermediate 6, Expert 4 | 4.8 Hz |

| Method | Fillet Weld | Butt Weld | Overhead | Average | Std. Dev. |
|---|---|---|---|---|---|
| SteamVR 2.0 | 6.8 | 5.4 | 9.2 | 7.1 | 1.6 |
| Oculus Insight | 8.1 | 6.9 | 11.3 | 8.8 | 1.9 |
| IMU Only | 45.2 | 42.7 | 48.9 | 45.6 | 2.6 |
| VINS-Mono | 5.2 | 4.6 | 7.8 | 5.9 | 1.4 |
| ORB-SLAM3-VI | 4.9 | 4.3 | 7.2 | 5.5 | 1.3 |
| Proposed (w/o pattern) | 4.5 | 3.9 | 6.8 | 5.1 | 1.3 |
| Proposed (Full) | 3.8 | 3.2 | 5.4 | 4.1 | 0.9 |
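
The position accuracy table reports per-weld-type errors and their average for each method. The sketch below first shows a commonly used definition of position RMSE against a reference trajectory, then recomputes the Average column from the three per-type values. The RMSE definition is an assumption, since the Evaluation Metrics text is not available here, and `position_rmse` and `TABLE` are illustrative names.

```python
import numpy as np


def position_rmse(estimated, reference):
    """RMSE of the Euclidean position error between two (N, 3) trajectories.

    Both trajectories are assumed to be time-aligned and expressed in the
    same frame and unit.
    """
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if est.shape != ref.shape:
        raise ValueError("trajectories must have identical shapes")
    err = np.linalg.norm(est - ref, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


# Per-weld-type values copied from the table: (fillet, butt, overhead).
TABLE = {
    "SteamVR 2.0": (6.8, 5.4, 9.2),
    "Oculus Insight": (8.1, 6.9, 11.3),
    "IMU Only": (45.2, 42.7, 48.9),
    "VINS-Mono": (5.2, 4.6, 7.8),
    "ORB-SLAM3-VI": (4.9, 4.3, 7.2),
    "Proposed (w/o pattern)": (4.5, 3.9, 6.8),
    "Proposed (Full)": (3.8, 3.2, 5.4),
}

# Unweighted mean over the three weld types, rounded as in the table.
row_means = {name: round(sum(v) / len(v), 1) for name, v in TABLE.items()}

# Relative error reduction of the full method against a chosen baseline.
def relative_reduction(baseline, method="Proposed (Full)"):
    return (row_means[baseline] - row_means[method]) / row_means[baseline]
```

The recomputed means reproduce the Average column for every method. Because each weld type contributes the same recording time (25 min), an unweighted mean is a reasonable summary.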

| Method | Mean | Median | 90th Pct | 95th Pct | 99th Pct |
|---|---|---|---|---|---|
| SteamVR 2.0 | 7.1 | 6.5 | 11.2 | 13.8 | 18.5 |
| ORB-SLAM3-VI | 5.5 | 5.1 | 8.9 | 10.3 | 14.2 |
| Proposed | 4.1 | 3.8 | 6.7 | 7.9 | 10.8 |

| Method | Final Drift (mm) | Rate (mm/s) | Improvement |
|---|---|---|---|
| SteamVR 2.0 | 45 | 0.15 | Baseline |
| ORB-SLAM3-VI | 28 | 0.093 | 1.61× |
| Proposed (w/o pattern) | 22 | 0.073 | 2.05× |
| Proposed (Full) | 15 | 0.050 | 3.0× |
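
The drift table's columns are related by simple arithmetic, which the short check below makes explicit. Dividing final drift by drift rate gives the run length implied by each row, and dividing the baseline's final drift by a method's final drift gives the Improvement factor. The run length of about 300 s is inferred from these numbers and is not stated in the surviving text. `DRIFT` and `summarize` are illustrative names.

```python
# (final drift in mm, drift rate in mm/s), copied from the table.
DRIFT = {
    "SteamVR 2.0": (45.0, 0.15),
    "ORB-SLAM3-VI": (28.0, 0.093),
    "Proposed (w/o pattern)": (22.0, 0.073),
    "Proposed (Full)": (15.0, 0.050),
}


def summarize(drift, baseline="SteamVR 2.0"):
    """Return implied run length and improvement factor for each method."""
    base_final, _ = drift[baseline]
    summary = {}
    for name, (final_mm, rate_mm_s) in drift.items():
        summary[name] = {
            # final drift = rate * duration  =>  duration = final / rate
            "implied_duration_s": final_mm / rate_mm_s,
            # improvement is the ratio of baseline drift to method drift
            "improvement": base_final / final_mm,
        }
    return summary


SUMMARY = summarize(DRIFT)
```

All four rows imply a run length within about 1.5 s of 300 s. That agreement suggests the rates are average rates over a common 5-minute run rather than fitted slopes.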

| Type | Actual | Detected | Error | Amplitude | Quality |
|---|---|---|---|---|---|
| Fillet | 4.8 Hz | 4.82 Hz | 0.4% | 4.3 mm | 0.94 |
| Butt | 5.2 Hz | 5.18 Hz | 0.4% | 4.7 mm | 0.92 |
| Overhead | 4.3 Hz | 4.35 Hz | 1.2% | 3.8 mm | 0.88 |

| Method | Latency (ms) | 99th (ms) | CPU (%) | GPU (%) | Loss (/min) |
|---|---|---|---|---|---|
| SteamVR 2.0 | 45 | 62 | – | – | 0.3 |
| VINS-Mono | 125 | 198 | 78 | 32 | 0.8 |
| ORB-SLAM3-VI | 95 | 142 | 65 | 28 | 0.5 |
| Proposed | 82 | 118 | 58 | 25 | 0.2 |

| Module | Avg. Time | 99th Pct | CPU Core |
|---|---|---|---|
| IMU Thread | 5 ms | 8 ms | Core 1 (dedicated) |
| SLAM Thread | 60 ms | 95 ms | Cores 2–4 + GPU |
| Feature Extraction | 25 ms | 35 ms | GPU accelerated |
| Feature Matching | 20 ms | 32 ms | CPU |
| Pose Estimation | 15 ms | 28 ms | CPU |
| Fusion Thread | 15 ms | 22 ms | Cores 5–6 |
| EKF Prediction | 3 ms | 5 ms | Per IMU update |
| EKF Update | 12 ms | 17 ms | Per SLAM update |
| Pattern Thread | 2 ms | 4 ms | Core 7 |
| FFT Analysis | 1.5 ms | 3 ms | – |
| Sinusoid Fitting | 0.5 ms | 1 ms | – |
| Total System Latency | 82 ms | 118 ms | – |
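
The per-module timing table mixes thread-level rows with their sub-steps, so a quick consistency check helps in reading it. The sketch below groups the rows under the assumption that the rows following each thread are its sub-steps, an interpretation inferred from the numbers and not stated in the table. It then sums the average times; `THREADS` is an illustrative name.

```python
# Average times in ms copied from the table, grouped by assumed parent thread.
THREADS = {
    "IMU Thread": {"avg_ms": 5.0, "parts": {}},
    "SLAM Thread": {
        "avg_ms": 60.0,
        "parts": {"Feature Extraction": 25.0, "Feature Matching": 20.0,
                  "Pose Estimation": 15.0},
    },
    "Fusion Thread": {
        "avg_ms": 15.0,
        "parts": {"EKF Prediction": 3.0, "EKF Update": 12.0},
    },
    "Pattern Thread": {
        "avg_ms": 2.0,
        "parts": {"FFT Analysis": 1.5, "Sinusoid Fitting": 0.5},
    },
}

# Sum of thread-level averages, to compare with "Total System Latency".
total_avg_ms = sum(t["avg_ms"] for t in THREADS.values())

# For each thread that has sub-steps, compare their sum with the thread row.
part_sums = {name: sum(t["parts"].values())
             for name, t in THREADS.items() if t["parts"]}
```

The thread averages add up to the reported 82 ms total, and each thread's sub-steps add up to its own row. The 99th-percentile column does not add up the same way (8 + 95 + 22 + 4 = 129 ms against a reported 118 ms). This is expected, because worst-case delays in different threads rarely coincide.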

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shin, K.-S.; Kim, J.C.; Cho, K.W.; Cho, W.I. Improving VR Welding Simulator Tracking Accuracy Through IMU-SLAM Fusion. Electronics 2025, 14, 4693. https://doi.org/10.3390/electronics14234693
