Abstract

Individuals with Autism Spectrum Disorder often experience distress or discomfort when facing changes in daily routines, particularly during major transitions such as beginning attendance at a Day Activity Center. To address this challenge, we designed and developed a virtual reality system based on an interactive digital twin of an actual center. The application allows users to explore the environment and engage in typical daily activities through repeated exposures in an immersive, caregiver-guided virtual setting, following an experiential training approach. The current study presents a method for validating the system, conducted with individuals formally diagnosed with Autism at DSM-5 Level 1 and currently attending the center, within a user-centered co-design framework. The results indicate that the system is both usable and suitable for the target population, and the caregiver’s presence is perceived as important for an enjoyable and supportive experience. The proposed framework can be adapted to other use cases and used for the validation of virtual reality systems for people on the spectrum at different levels.

1. Introduction

Autism Spectrum Disorder (ASD) is a neurodevelopmental condition and a recognized form of neurodiversity. While clinical frameworks define ASD through diagnostic criteria and its functional impact, the neurodiversity perspective regards it as a natural variation in human cognition, associated with both strengths and challenges. It is typically characterized by differences in social communication and interaction, alongside varying levels of restricted and repetitive patterns of behavior, interests, or activities [1]. In that sense, people with ASD can benefit from several kinds of interventions that aim to provide tools for developing skills that make everyday life and social interaction easier to approach. These interventions can take different forms, including training based on the repetition of a task, e.g., the whole process of going to a cafe, ordering food, paying, and leaving. We refer to this approach as experiential training [2]: the possibility of repeating a task several times in a controlled experimental environment until the person feels ready to face the situation in real life.

Virtual reality (VR) has been shown to be a solid and promising intervention tool for individuals with ASD, due to its ability to provide controlled, engaging, and repeatable experiences [3], converging in experiential training opportunities [4,5]. VR environments can be customized to minimize sensory overload [6], and tailored to address individual learning needs [7]. Moreover, the strong visual–spatial learning preferences common in ASD align well with the immersive nature of VR, while the generally high affinity for technology among people on the spectrum [8] supports acceptance.

People on the spectrum present specific support needs and functional profiles. According to the Diagnostic and Statistical Manual of Mental Disorders, published by the American Psychiatric Association, the intensity of support required by people on the spectrum can be defined on a three-level scale [1]. The current edition of the manual is DSM-5 (published in 2013, with text revision DSM-5-TR in 2022), and classifies the levels as shown in Table 1.

Table 1.

Autism spectrum disorder severity levels and support needs, based on the current edition of the scale (DSM-5) [1].

Day Activity Centers (DACs) are non-residential facilities offering structured, supervised daytime programs aimed at enhancing social, vocational, and life skills for individuals with disabilities or special needs. These centers provide essential opportunities, particularly for people with ASD, as activities are tailored to individual needs and adapted according to severity levels. The current use case is related to the DAC “Il Margine” https://www.ilmargine.it/ (accessed on 25 October 2025) in Torino, Italy, targeting individuals with ASD at DSM-5 Level 1, as their cognitive and mobility skills make them suitable potential users of VR. Initial visits and transitions to new daily routines, however, often elicit significant distress and discomfort [9], impeding smooth and effective adaptation. To facilitate transitions for new residents, we developed a VR system that replicates the DAC, allowing users to virtually familiarize themselves with the center. The project, “Knock Knock. It’s open.”, implements an experiential training approach, enabling repeated and safe exploration before in-person visits.

Our approach presents two main key points that, to the best of our knowledge, represent a novelty. (i) The digital twin represents the specific center and not a generic DAC. This way, users already know where the kitchen will be and where to go for a snack, or where they will find the restroom. The system also includes a set of interactive activities that are available in the specific center, such as playing table football or printing graphics on t-shirts using a screen-printing machine. This allows users to virtually explore the center and become familiar with the environment before visiting in person. (ii) The multiplayer sessions allow users to enter the virtual center together with their personal caregiver, the same way it will happen in person. The user is guided through the virtual visit by an avatar embodied by the participant’s actual caregiver, introducing a familiar and trusted presence, which may enhance engagement and reduce stress during the session. Throughout the session, the two can freely navigate the virtual environment, talk to each other, see each other’s avatar and share the virtual space, allowing the caregiver to tailor the experience to the participant’s needs.

A fundamental goal in the development of a virtual reality experiential training approach consists in validating an asset that is appropriate for people with ASD, which is the aim of the current work. We hereby present a user-centered co-designed system validation framework to assess usability and suitability for autistic people (in particular DSM-5 Level 1). The validation was conducted following a co-design approach with current center attendees, allowing us to gather feedback from individuals with needs and cognitive skills akin to those of future newcomers who will benefit from the approach prior to entering the center.

In a previous work, we presented a preliminary validation of the VR system with neuro-typical users [10], leveraging standard assessment methods, showing that the system is suitable and appropriate for a general audience, and the presence of an avatar companion was perceived as enjoyable. However, at that stage, we did not address its usability for individuals with ASD. The present work investigates the system’s suitability for people with autism and introduces a methodology for evaluation tailored to their needs. An additional finding was that the system elicits a generally high Sense of Presence. However, in the current work, we chose not to investigate this aspect with individuals on the spectrum, as the concept is too abstract for our target population.

In this scenario, we formulated the following hypotheses:

Hypothesis 1 (H1).

A virtual reality application that represents a Day Activity Center can be usable for people diagnosed with Autism Spectrum Disorder at DSM-5 Level 1;

Hypothesis 2 (H2).

A virtual reality reproduction of a Day Activity Center is a suitable and appropriate experience for people diagnosed with Autism Spectrum Disorder at DSM-5 Level 1;

Hypothesis 3 (H3).

The presence of a caregiver in the virtual world provides a sense of enjoyment and support.

We conducted a preliminary user study with individuals diagnosed with ASD at DSM-5 Level 1 to validate the acceptability of the asset and gather insights on assessment methods for users with ASD. We then ran the main experiment with participants from the same population. The results indicate that the system is both usable and suitable for the target group. The presence of a caregiver, represented by an avatar, was perceived as a significant source of support. These findings confirm that our approach provides a valid and appropriate framework for validating an experiential training system designed for individuals with autism.

2. Related Works

The literature search focused on 2017–2025 studies in Scopus https://www.scopus.com/ (accessed on 25 October 2025) using keywords on Autism Spectrum Disorder, virtual reality, social skills training, and transfer to real-world contexts. Peer-reviewed English-language studies were prioritized to enable contextual synthesis rather than a systematic review. Several works suggested that experiential training in VR can be beneficial for individuals with ASD in improving daily life social skills (see Mosher et al. [11] and Chiappini et al. [12] for reviews). It is important to note that studies involving participants with ASD often involve small samples due to practical and ethical recruitment constraints [13,14,15]; this occurs in both system validation studies and efficacy-oriented investigations.

Due to their limited generalization abilities, people with ASD often struggle to transfer the skills acquired through conventional therapies to real-world scenarios [16]. Yet, several works suggested that VR-based interventions can facilitate this transfer to everyday contexts [13,17], with several projects specifically targeting social skill development. Ip et al. investigated the potential of VR to enhance affective expression and social reciprocity skills in children with ASD [18]. A total of 176 children participated in the study, which included 30 VR training sessions over 15 weeks, targeting social norms, communication, conflict resolution, emotion recognition, and reciprocal conversation. Results show significant improvements in both affective expression and social reciprocity compared to the control group. Other studies explored how VR can reduce stress and help individuals with ASD become more familiar with real-world scenarios. Dixon et al. used VR to teach individuals with ASD to assess safe street-crossing conditions [13]. Through multiple 360° video sessions with adjustable difficulty and environmental distractions, all three participants learned to identify safe crossing situations in both virtual and real-world scenarios. Yet, the training did not include the act of crossing itself. Soccini et al. proposed a VR experiential training system that allows users to try an airport scenario several times before facing it in real life, to improve their understanding of airport procedures and reduce the associated distress [2]. The study aimed to compare the effectiveness of the VR intervention with a commonly used step-by-step textual guide. Miller et al. conducted a feasibility and preliminary learning-outcomes evaluation of a low-cost mobile VR air-travel training for individuals with ASD [14]. Seven participants completed a non-interactive narrated VR airport tour.
All participants tolerated and accepted the VR setup, and mean comprehension-retell scores improved by about from baseline. However, feasibility and acceptability were evaluated primarily through clinician observation and brief adverse-effects check-ins rather than standardized participant-reported measures. Simões et al. [19] proposed a VR serious game to help individuals with ASD familiarize themselves with the process of taking a bus. The experiment included 10 neuro-atypical participants and consisted of several tasks involving boarding a bus and reaching a designated destination. Results suggested an increase in the knowledge related to taking a bus and a reduction in anxiety during the experience. Kim et al. used VR to enhance self-efficacy and improve social skills in individuals with ASD [20]. A total of 14 participants completed four scenarios of increasing difficulty, simulating typical work-related social interactions such as making coffee, using a cash machine, and holding conversations. Results showed a significant increase in perceived self-efficacy and greater self-awareness of both emotions and social behaviors. Schmidt et al. conducted a usability and learner-experience evaluation of Virtuoso, an immersive intervention for public-transport training in adults with ASD [15]. Five participants completed two brief sessions, a 360° video walkthrough of the shuttle procedure, and a coach-guided rehearsal in an interactive VR environment, followed by standardized usability ratings. Both modules achieved above-average usability on the System Usability Scale, and interviews indicated high engagement in the interactive, coach-guided VR session, despite some controller-mapping and stability issues. The study emphasized design feedback over efficacy or real-world transfer outcomes. The presented works have shown to be effective in training particular social skills and familiarizing users with real-world scenarios. 
However, most interventions involved solo experiences, which may cause stress or discomfort for participants [21]. To address this issue, some recent projects explored the use of conversational agents, powered by large language models (LLMs), to guide participants during VR experiences [22,23,24]. While promising, conversational agents embodied within virtual avatar guides might resemble unfamiliar individuals, potentially causing discomfort or distress among participants due to the absence of a familiar and trusted presence.

3. Preliminary Study: Co-Design of the Experience and Methods Evaluation

The inclusion criteria comprised residents with a formal diagnosis of ASD at DSM-5 Level 1. A preliminary experiment was conducted with four volunteers (3 male, 1 female; mean age = 38 years, range = 23–50), none of whom had previous experience with VR. The gender distribution of participants reflects the demographic composition of DAC residents and aligns with the male percentage typically observed in individuals with ASD [25,26]. The purpose of this preliminary study was to evaluate and refine the investigation methods in VR with the specific target population, namely individuals with ASD at DSM-5 Level 1, who are capable of developing autonomy in everyday tasks but still require structured support through attendance at a DAC.

We defined our methodological research questions:

- RQp1: Does our population accept wearing a Head Mounted Display (HMD)?

- RQp2: Are the tasks in virtual reality adequate?

- RQp3: Are questionnaires suitable for our population and in line with their cognitive level?

- RQp4: Is a 7-point Likert scale a suitable way to provide a response to questions mainly related to user experience?

- RQp5: How do users rate the overall experience?

3.1. Experimental Design

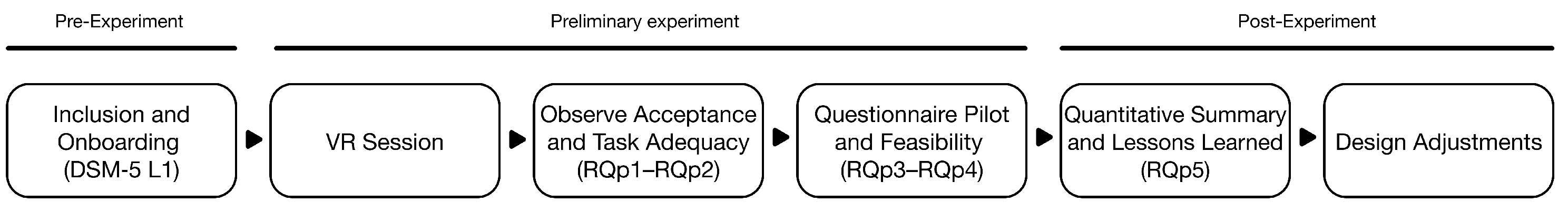

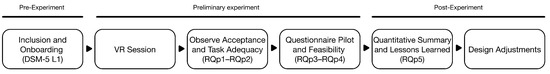

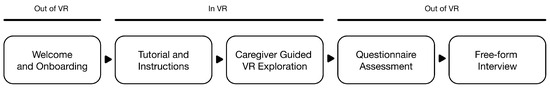

Before starting the experiment, the caregivers told the subjects that they would try a virtual reality experience representing the center, developed by friendly scientists who would be in the room throughout the session. Caregivers, instead, received specific information and instructions on how to play the serious game and guide the participant. Every participant, together with their caregiver, was welcomed by the operator in a room at the center. Both the participant and the caregiver were asked to wear an HMD and freely explore the application. During their experience, we observed their behavior, focusing on the research questions RQp1 and RQp2. At the end of the experience, the caregiver asked every user about the experience in a free-form dialogue, and also proposed a set of six questions, reported in Table 2, whose responses were collected on a Likert scale of either 1 to 7 or 1 to 5, depending on the preferences and capabilities of the user. This provided insight into the methodological questions RQp3 and RQp4. The results of the questionnaire provided users’ ratings of the experience, which falls under RQp5. Figure 1 summarizes the co-design workflow and the preliminary experiment design.

Table 2.

Preliminary study questionnaire used to assess the suitability of the evaluation methods (difficulty of the items and range of the scales used for the responses) for the target population, before proceeding with the main study.

Figure 1.

Schematic representation of the co-design workflow. After inclusion and onboarding, participants completed VR sessions with caregiver support to evaluate HMD acceptance and task adequacy (RQp1–RQp2) and to pilot questionnaire feasibility and scale use (RQp3–RQp4). After the preliminary experiment, results were reviewed (RQp5) and translated into design adjustments.

3.2. Insights on Methods

All the subjects wore the HMD with no issues (RQp1), and understood the basics of navigation and interaction in the virtual environment in a timely manner, similarly to what we would expect from a neuro-typical population (RQp2). While the questions were, overall, considered appropriate, the presence and support of the caregiver in the process was fundamental to provide precise explanations of the concepts asked. Without the caregivers, the administration of the questionnaires would not have been feasible (RQp3). The caregivers underlined that responses on a 7-point Likert scale required a strong sense of abstraction that resulted in difficulty and stress for the participants. Instead, they suggested using a 5-point Likert scale, which was perceived as easier and friendlier and was therefore adopted. Also, to aid in the evaluation, we associated each number with an image depicting sad, neutral, or happy emoticons, allowing users to select their response based on these. The caregivers supported this approach and users appreciated it (RQp4).

3.3. Quantitative Results

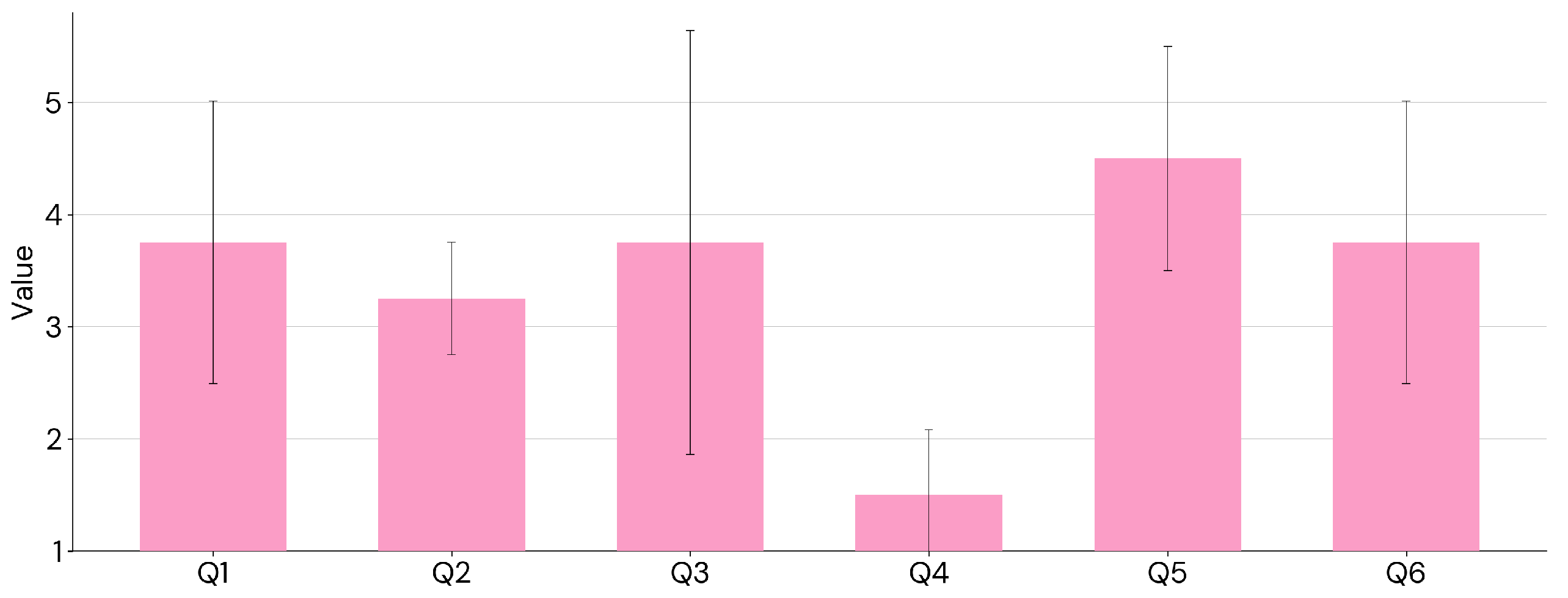

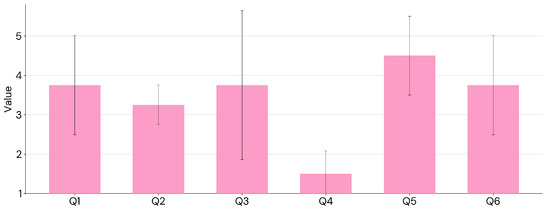

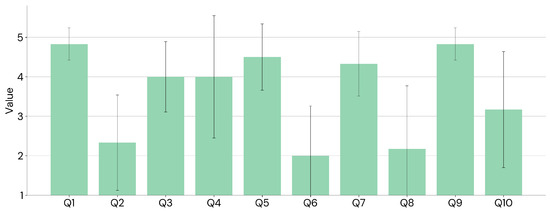

As mentioned, responses were provided on a Likert scale from 1 to 5, where 1 corresponds to “Very Little” and 5 to “Very Much”. The total score, calculated as the sum of individual responses, ranged from 6 to 30, with question 4 representing a negative effect and being treated accordingly. Overall, the questionnaire results align with our observations: mean values indicate that participants generally felt comfortable and safe within the simulation (see Table 3 and Figure 2), responding to RQp5. However, participants reported varying levels of distress and differing appreciation of the virtual experience, particularly for questions 3 and 6.
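The total-score computation described above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function name is hypothetical, and it assumes that "treated accordingly" means reverse-scoring question 4 (so that 1 becomes 5 and 5 becomes 1), which is consistent with the stated 6 to 30 range.

```python
def preliminary_total(responses, negative_items=(4,), scale_max=5):
    """Sum six 1-5 Likert responses, reverse-scoring negatively worded items.

    Assumption: negative items are reverse-scored as (scale_max + 1 - value).
    """
    if len(responses) != 6:
        raise ValueError("expected exactly six responses")
    total = 0
    for item_number, value in enumerate(responses, start=1):
        if not 1 <= value <= scale_max:
            raise ValueError(f"item {item_number}: response {value} is out of range")
        if item_number in negative_items:
            # Reverse scoring: a low rating on a negative item counts as a high score.
            total += scale_max + 1 - value
        else:
            total += value
    return total


# Hypothetical responses: best possible ratings, with the lowest rating on item 4.
print(preliminary_total([5, 5, 5, 1, 5, 5]))  # 30
```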

Table 3.

Results of the preliminary study questionnaire. For each item, the mean and standard deviation of the responses are reported.

Figure 2.

Results from the preliminary experiment questionnaire, shown separately for each item. The mean and standard deviation of the responses are reported.

3.4. Lessons Learned

Thanks to the preliminary study, we learned that

- Users with Autism at DSM-5 Level 1 seem to have a positive attitude towards wearing an HMD and experiencing virtual reality.

- Providing a high abstraction of the tasks in virtual reality is suitable, as users were able to match their virtual activities with real-world tasks (e.g., throwing a ball, playing table football, printing a t-shirt). In that regard, there is no need to force users towards learning combinations of buttons or fine gestures.

- Users were able to provide responses to the questionnaires, but several aspects need to be considered:

  - (i) Caregiver support is crucial for verbalizing feedback and recording responses on a scale;

  - (ii) Questions investigating physical discomfort should be avoided, as they tend to elicit unease;

  - (iii) Response options should be limited to five, as seven options are more difficult to manage;

  - (iv) The number of questionnaires and items should be kept to the minimum necessary, since users otherwise tend to become distracted or irritated.

4. Materials and Methods

4.1. System Description

As mentioned in Section 1, the VR application is a 1:1 site-specific digital replica of an existing DAC, named “Il Margine”, located in Torino, Italy. The architectural structure of the center was reconstructed using on-site photogrammetry data as a 3D reference, in combination with the cadastral (property) map. Several photographs were also taken and applied as wall textures, including posters and artworks. The virtual DAC was modeled with high fidelity to achieve a photorealistic appearance. At the same time, unnecessary objects were removed from the virtual rooms to minimize sensory overload and reduce the risk of overwhelming situations. Figure 3 shows a side-by-side comparison of the physical center and its digital twin.

Figure 3.

Pictures of the physical areas (left) and their corresponding digital twin (right) of the Day Activity Center, used to replicate the real-world environment within the virtual setting.

The experience was designed following state-of-the-art principles of interaction and navigation. Navigation within the virtual center was implemented through teleportation, while object interaction was based on our previously published framework for interaction design in VR [27]. This framework is grounded in spatial computing principles and neuroscientific research on peripersonal space in virtual reality [28,29], designed to simplify interaction and reduce the cognitive load associated with memorizing buttons and gestures, thereby allowing users to focus on the overall experience. To this end, we introduced simplified gestures and a layer of abstraction for object interaction; for example, users can open doors simply by touching the handle or pick up a brush in a single movement.

The digital twin was modeled using Autodesk Maya 2023 https://www.autodesk.it/products/maya/overview (accessed on 25 October 2025), while game mechanics were implemented in Unity3D version 2022.3 https://unity.com/ (accessed on 25 October 2025). The application was designed to run in standalone mode on Meta Quest Pro https://www.meta.com/quest/quest-pro/ (accessed on 25 October 2025) headsets (Meta, Menlo Park, CA, USA). In cases where participants experience discomfort wearing an HMD and refuse to use it, the virtual scenario can be displayed on a screen and explored by the caregiver alone. However, we expect such cases to be rare.

Within the virtual environment, users are represented by a self-avatar (Figure 4) whose body movements and facial expressions mirror those of the user. Facial expressions are captured in real time using the embedded features of the Meta Quest Pro. Furthermore, a multiplayer setup was implemented using Unity Gaming Services https://unity.com/solutions/gaming-services (accessed on 25 October 2025), enabling caregivers to embody a second avatar and actively guide users through the digital twin.

Figure 4.

First-person and third-person views of the virtual environment, with full-body avatars that mirror users’ and caregivers’ movements.

4.2. Evaluation Methods

As demographic information, we collected participants’ age, gender, and prior experience with virtual reality. To assess our hypotheses, we used three questionnaires: the System Usability Scale (SUS) for H1, a subset of the Virtual Reality Neuroscience Questionnaire (VRNQ) for H2, and the Avatar Guide Questionnaire for H3.

The SUS questionnaire, reported in Table 4, consists of 10 items related to perceived usability, each rated on a Likert scale from 1 (“Strongly Disagree”) to 5 (“Strongly Agree”). The usability score for each participant was calculated following the original method provided by Brooke et al. [30], with total scores ranging from 0 to 100 and a benchmark value of 50, generally considered the cutoff for acceptable usability.
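For reference, the standard SUS scoring rule can be sketched as below. The function name is hypothetical, and the sketch assumes the usual SUS item order, in which odd-numbered items are positively worded and even-numbered items are negatively worded.

```python
def sus_score(responses):
    """Compute a 0-100 SUS score from ten 1-5 Likert responses.

    Assumes the standard item order: odd items positive, even items negative.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    contributions = []
    for item_number, value in enumerate(responses, start=1):
        if not 1 <= value <= 5:
            raise ValueError(f"item {item_number}: response {value} is out of range")
        if item_number % 2 == 1:
            contributions.append(value - 1)   # positive item: 1..5 -> 0..4
        else:
            contributions.append(5 - value)   # negative item: 1..5 -> 4..0
    # Ten contributions of 0..4 sum to 0..40; scaling by 2.5 gives 0..100.
    return 2.5 * sum(contributions)


# Hypothetical participant answering 3 ("neutral") on every item.
print(sus_score([3] * 10))  # 50.0
```

A uniformly neutral response pattern lands exactly on the benchmark of 50 used in this study.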

Table 4.

System Usability Scale (SUS) questionnaire. It consists of 10 items related to perceived usability, each rated on a Likert scale from 1 to 5.

The VRNQ comprises 20 items assessing user experience, game mechanics, in-game assistance quality, and Virtual Reality-Induced Symptoms and Effects (VRISE) [31]. Following the lessons learned in the preliminary study (Section 3) to minimize participant discomfort, we excluded the VRISE section and, in collaboration with caregivers, selected two representative questions from each remaining category, yielding a 6-item subset, reported in Table 5. The scale was adapted from the original 7-point format to a 5-point scale, and each participant’s VRNQ score was calculated as the sum of their responses, ranging from 6 to 30. In the original questionnaire, the acceptability threshold is set at 100. Since we employed a reduced version, using six items instead of the original twenty and adapting the response format from a 7-point to a 5-point scale, we rescaled the acceptability threshold accordingly. The rescaling was based on the ratio between the original maximum score of 140 and our adapted maximum score of 30, which yielded a proportional threshold of approximately $21.43$, calculated as $100 \times 30/140$.
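The proportional rescaling described above amounts to a one-line computation. The helper name below is hypothetical; the inputs (original threshold 100, original maximum 140, adapted maximum 30) are the values stated in the text.

```python
def rescale_threshold(original_threshold, original_max, adapted_max):
    """Scale a cutoff by the ratio between adapted and original maximum scores."""
    if original_max <= 0 or adapted_max <= 0:
        raise ValueError("maximum scores must be positive")
    return original_threshold * adapted_max / original_max


# VRNQ: original cutoff 100 out of 140, adapted questionnaire maximum 30.
vrnq_threshold = rescale_threshold(100, 140, 30)
print(round(vrnq_threshold, 2))  # 21.43
```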

Table 5.

Subset of the items that compose the Virtual Reality Neuroscience Questionnaire (VRNQ). From the original 20-item questionnaire, we excluded the items related to VR-Induced Symptoms and Effects (VRISE) and selected two representative questions from each of the remaining categories (User Experience, Game Mechanics, and In-Game Assistance). Each item is rated on a 5-point Likert scale from 1 to 5.

To assess participants’ engagement and validate H3, we used the Avatar Guide questionnaire [10]. It consists of three items related to the participants’ reactions to the virtual avatar, each rated on a Likert scale from 1 (“Not at all”) to 5 (“Very much”). The questionnaire is reported in Table 6, and the score for each participant was calculated as the sum of their responses, ranging from 3 to 15, with a benchmark value of , corresponding to of the maximum score. Additionally, we observed their behavior during the VR experience, focusing on externalization of affects, gestures, facial expressions, and oral communication.

Table 6.

Avatar Guide questionnaire. It consists of 3 items related to the user’s perception of the avatars in the virtual environment, each rated on a Likert scale from 1 to 5.

For all questionnaires, we ran a Wilcoxon signed-rank test to determine whether participants’ scores were significantly greater than their respective benchmark values (50 for SUS, and the thresholds defined above for the VRNQ and the Avatar Guide questionnaire).
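As an illustration of the benchmark comparison, the following minimal sketch computes an exact one-sided Wilcoxon signed-rank test against a fixed benchmark in plain Python. The function names and the example scores are hypothetical, and the exact enumeration is an assumption: the software and the p-value method (exact or approximate) used in the study are not stated here.

```python
from itertools import product


def average_ranks(values):
    """Rank values in ascending order, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # ranks are 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def wilcoxon_greater(scores, benchmark):
    """Return (W+, exact one-sided p) for H1: scores lie above the benchmark."""
    # Zero differences carry no sign information and are dropped.
    diffs = [s - benchmark for s in scores if s != benchmark]
    if not diffs:
        raise ValueError("all scores equal the benchmark")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # Under H0 every sign pattern is equally likely: enumerate all 2**n patterns
    # and count those whose positive-rank sum is at least the observed one.
    at_least = 0
    for signs in product((0, 1), repeat=len(ranks)):
        if sum(r for r, s in zip(ranks, signs) if s) >= w_plus - 1e-9:
            at_least += 1
    return w_plus, at_least / 2 ** len(ranks)


# Hypothetical SUS scores for six participants, tested against the benchmark of 50.
w, p = wilcoxon_greater([70, 75, 80, 85, 90, 95], 50)
print(w, p)  # 21.0 0.015625
```

With six non-zero differences there are 64 sign patterns, so the smallest one-sided exact p-value this test can produce is 1/64, about 0.0156.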

To account for multiple comparisons across the questionnaires, we applied the Benjamini–Hochberg correction to control the false discovery rate at level $\alpha$. For each questionnaire, we ran a post hoc power analysis using G*Power 3.1 https://www.gpower.hhu.de/ (accessed on 25 October 2025) to compute power from the error probability $\alpha$, the sample size $n$, and the effect size $d$, calculated from the mean and standard deviation of the responses to each of the three questionnaires.
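The two computations named above can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the effect size is assumed to be the one-sample Cohen's d (mean minus benchmark, divided by the standard deviation), and the p-values and the level of 0.05 in the example are placeholders rather than values from this study.

```python
def cohens_d(mean, sd, benchmark):
    """One-sample effect size: distance from the benchmark in SD units."""
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    return (mean - benchmark) / sd


def benjamini_hochberg(p_values, alpha):
    """Return one boolean per p-value: True if rejected under the BH step-up rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose p-value is at or below (k / m) * alpha.
    max_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_k = rank
    # Reject every hypothesis ranked at or below max_k.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_k:
            rejected[idx] = True
    return rejected


# Placeholder p-values for three tests and an assumed level of 0.05.
print(benjamini_hochberg([0.02, 0.03, 0.5], alpha=0.05))  # [True, True, False]
```

In the placeholder example the smallest p-value (0.02) exceeds its own critical value of 0.05/3, yet it is still rejected because the second-ranked p-value passes its threshold; this is the step-up behavior that distinguishes the procedure from a per-rank comparison.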

Finally, as a further insight on the measures, we examined the relationships between the three questionnaires by computing pairwise Pearson correlations for each pair of questionnaire scores (SUS vs. VRNQ, SUS vs. Avatar Guide Questionnaire, and VRNQ vs. Avatar Guide Questionnaire).
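The pairwise coefficient can be computed as in the minimal sketch below. The function name and the example totals are hypothetical; only the coefficient is computed, not its p-value.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least two values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Hypothetical questionnaire totals for three participants (perfectly inverse).
print(round(pearson_r([24, 26, 28], [15, 14, 13]), 3))  # -1.0
```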

4.3. Experimental Design

A total of six volunteers participated in the main study (6 males; age range: 18–50 years, mean = 28.33). Inclusion criteria were (i) a formal diagnosis of Autism Spectrum Disorder at DSM-5 Level 1 and (ii) current attendance at the Day Activity Center. Although the sample shows a strong male bias, this aligns with the gender distribution observed among DAC attendees. At the time of the main experiment, the DAC population reflected the male-to-female ratio typically reported for ASD (4:1) [25,26,32], but among those eligible for the virtual reality experience (DSM-5 Level 1), all were male. The women in the center exhibited more severe impairments (Levels 2 and 3) and were therefore not recruited [25,26]. Recruitment and data collection were conducted on two separate days at the DAC; caregivers pre-screened attendees for potential eligibility, after which investigators confirmed inclusion criteria and obtained written consent.

None of the participants had more than minimal prior experience with virtual reality. For each participant, a caregiver also volunteered to take part as a guide in the virtual experience. Both participants and caregivers signed informed consent forms in accordance with the European General Data Protection Regulation (GDPR). During the experiment, both wore VR headsets. The intervention consisted of a virtual visit to the digital twin of the DAC (described in Section 4.1) in a collective and unstructured format. The experimental design followed the structure of the preliminary study presented in Section 3. Once in the virtual environment, participants were briefly instructed on how to navigate the rooms and interact with virtual objects.

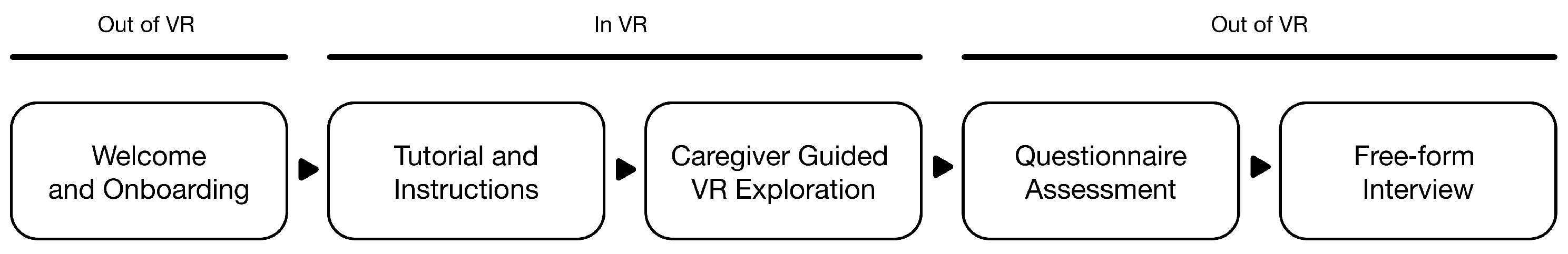

Caregivers guided participants through the environment, encouraging exploration and interaction with virtual objects. The visit was deliberately unstructured, allowing free exploration based on the interests of participants and caregivers. Similarly, interaction with virtual objects was optional, with no predefined sequence or mandatory tasks. While the overall structure of the experiment was consistent with the preliminary study, the post-intervention assessment was adapted to address the main hypotheses. Following the VR session, caregivers assisted with completion of the three questionnaires described in Section 4.2 and then conducted a free-form interview on the experience. A schematic of the experimental design is shown in Figure 5.

Figure 5.

Schematic representation of the main experiment design. Participants are welcomed and onboarded (out of VR), receive a brief tutorial and instructions (in VR), complete a caregiver-guided VR exploration (in VR), and then proceed to questionnaire administration and a free-form interview (both out of VR). In each step, subjects are assisted by the caregiver.

5. Results

Overall, participants provided positive feedback across all evaluated parameters, supporting our hypotheses. The SUS results confirmed the good usability of the VR system (H1), as shown in Table 7 and Figure 6. The statistical analysis further supported this outcome, with and (). A post hoc power analysis for a one-sample t-test comparing the observed SUS mean to the acceptability benchmark of indicated that, with , , and an effect size of , (derived from the sample , ), statistical power was adequate (power = 0.85), supporting the sufficiency of the sample for this validation.

Table 7.

Results of the System Usability Scale (SUS) questionnaire from the main study. Means and standard deviations for each item and for the overall SUS score are reported, calculated using the original method by Brooke et al. [30].

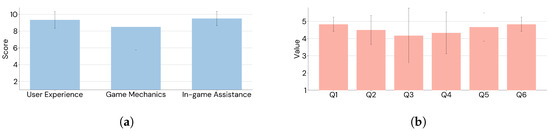

Figure 6.

System Usability Scale (SUS) questionnaire item responses from the main experiment, showing mean and standard deviation.

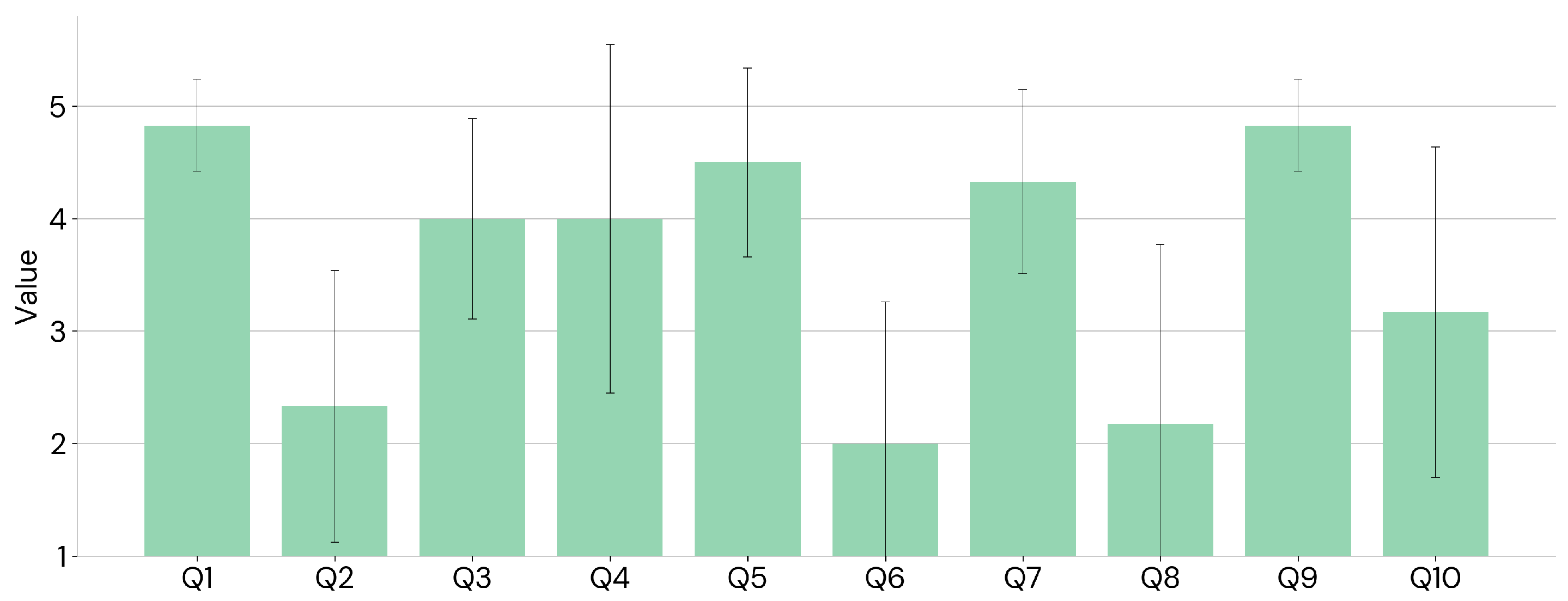

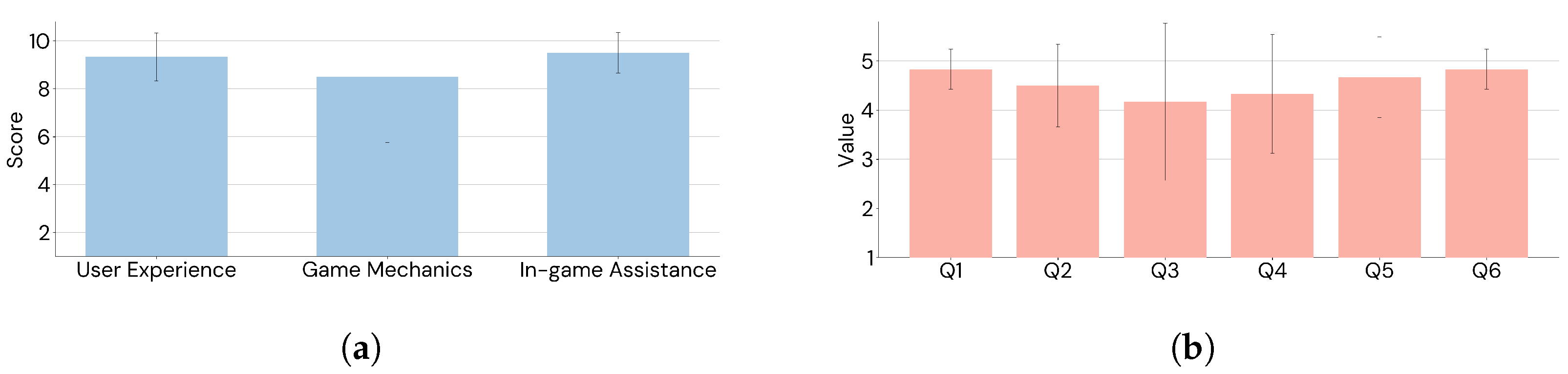

Participants reported high ratings on the VRNQ (H2), as presented in Table 8 and Figure 7. The results were statistically significant, with and (). The power analysis for the one-sample t-test () and the effect size (using the sample mean and standard deviation ) indicated sufficient power (power = 0.99).

Table 8.

Results of the Virtual Reality Neuroscience Questionnaire (VRNQ). For each item, the mean and standard deviation of the responses are reported. Additionally, the overall VRNQ score is provided, with its mean and standard deviation.

Figure 7.

Virtual Reality Neuroscience Questionnaire (VRNQ) outcomes from the main experiment, with mean and standard deviation reported for subscales (a) and individual items (b).

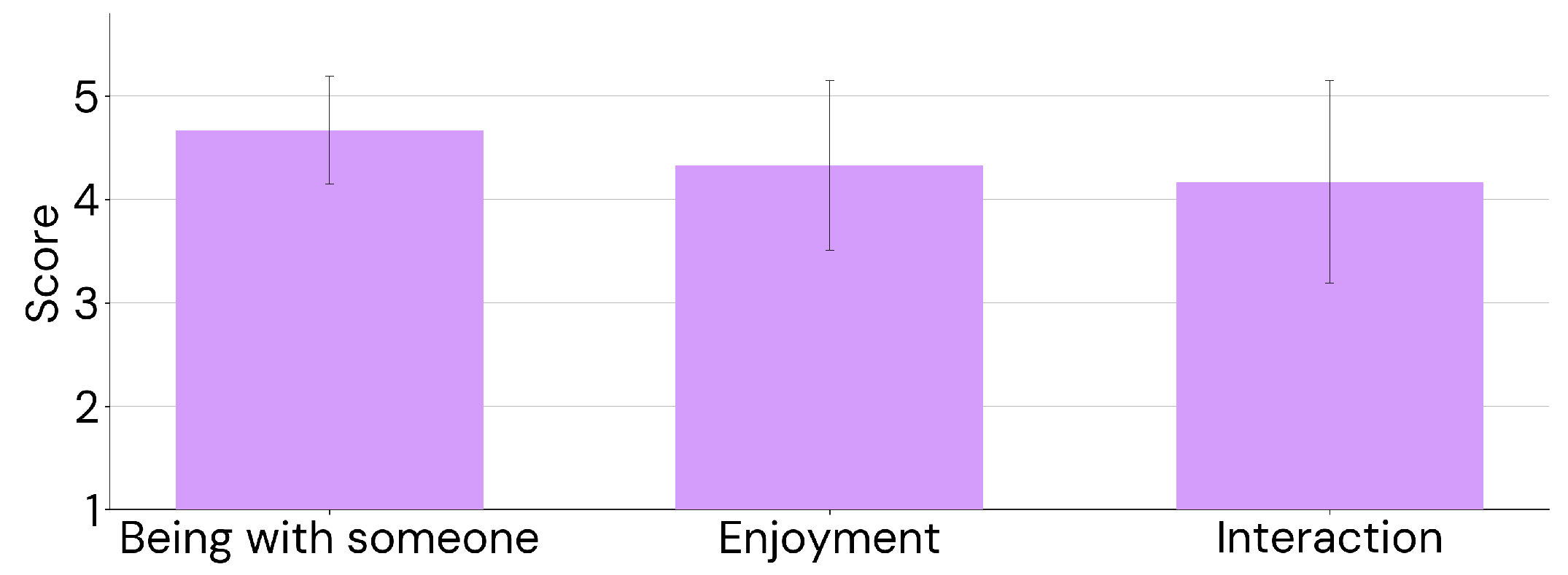

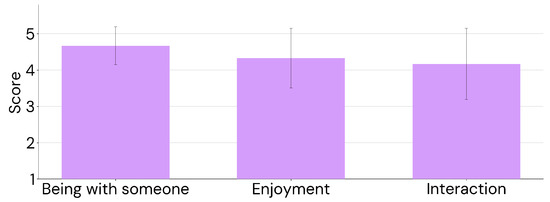

Finally, participants also provided high ratings on the Avatar Guide questionnaire (H3), reported in Table 9 and Figure 8. Scores were significantly above the threshold of 10.5. A one-sample t-test power check, with the effect size derived from the sample mean and standard deviation, indicated high power (power = 0.97).

Table 9.

Results of the Avatar Guide questionnaire in the main study. For each item, the mean and standard deviation of the responses are reported. The mean and standard deviation of the total score are also provided.

Figure 8.

Avatar Guide questionnaire responses per item in the main experiment, reporting mean and standard deviation.

During the VR experience, we observed participants’ behavior, focusing on their emotional responses and bodily cues. Overall, all six participants appeared enthusiastic and engaged, supporting H3. At the beginning of the virtual visit, caregivers prompted participants to perform certain activities, and all followed these suggestions with interest and motivation. After a few minutes, participants began exploring the virtual center independently, without further guidance. Most showed strong curiosity, actively requesting to interact with virtual objects and explore all available rooms.

To apply the Benjamini–Hochberg correction, the three raw p-values were ranked in ascending order as $p_{(1)} \le p_{(2)} \le p_{(3)}$ and compared with the corresponding Benjamini–Hochberg critical values $(i/m)\,\alpha$ for ranks $i = 1, 2, 3$, where $m = 3$ is the number of tests and $\alpha$ is the significance level. Since all raw p-values were below their critical values, the null hypotheses were rejected and the three tests remained significant after Benjamini–Hochberg correction.
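The Benjamini–Hochberg step-up procedure can be sketched as below. This is a minimal illustration rather than the analysis script used in the study: the function name, the default significance level of 0.05, and the example p-values are assumptions made for the example.

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return a list of booleans: True where the null hypothesis is rejected."""
    m = len(p_values)
    # Indices of the p-values sorted in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= (k / m) * alpha.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_rank = rank
    # Reject every hypothesis whose rank is at most max_rank.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Hypothetical p-values for three tests (NOT the study results).
print(benjamini_hochberg([0.03, 0.01, 0.02]))
```

With three tests, the critical values are one third, two thirds, and the whole of the significance level; when every ranked p-value falls below its critical value, all three hypotheses are rejected.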

Finally, we observed a strong and statistically significant negative Pearson correlation between the VRNQ and Avatar Guide Questionnaire total scores. Pearson correlations between SUS and VRNQ and between SUS and the Avatar Guide Questionnaire were instead weak and not statistically significant. The results are reported in Table 10.

Table 10.

Pairwise Pearson correlations between the three questionnaires (SUS, VRNQ, and Avatar Guide Questionnaire). Correlation coefficients (r) and corresponding p-values are reported for all pairwise combinations of the questionnaire scores.
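For reference, the Pearson coefficient between two sets of questionnaire totals can be computed as in the following minimal sketch. The function name and the example values are hypothetical and are not the study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


# Hypothetical totals for illustration only (NOT the study data).
print(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]))
```

A value close to -1 indicates that higher totals on one questionnaire tend to accompany lower totals on the other.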

6. Discussion

This study evaluated a virtual reality experiential training system designed to support individuals with ASD at DSM-5 Level 1 in adapting to a new daily environment, specifically a Day Activity Center (DAC). The positive usability and engagement outcomes observed in the study align with the core hypotheses that such a location-specific digital twin VR system can be both usable and suitable for this population, especially when combined with caregiver guidance. To test these assumptions, the evaluation targeted three hypotheses: usability (H1), suitability (H2), and the importance of the caregivers’ presence in providing a sense of enjoyment and perceived support (H3).

In the main study with six adult participants diagnosed with ASD at DSM-5 Level 1 (all male; 18–50 years), both standardized questionnaires and observational data converged in supporting these hypotheses. The mean SUS total score exceeded the acceptability benchmark, and the difference was statistically significant (one-sample Wilcoxon signed-rank test against a benchmark of 50), indicating good usability of the system for the target population (H1). The mean adapted VRNQ total score was also significantly above the rescaled acceptability threshold (one-sample Wilcoxon signed-rank test), supporting suitability (H2). Beyond total scores, the subscales help identify which aspects of the experience worked well. Participants reported high enjoyment and perceived technology quality (User Experience subscale), solid ease of navigation and manipulation (Game Mechanics subscale), and particularly strong ratings for guidance and onboarding (In-Game Assistance subscale), suggesting that tutorials and caregiver-supported scaffolding effectively lowered the entry barrier to the experience. Participants' feedback on the Avatar Guide questionnaire suggested that the presence of the caregiver, embodied in a virtual avatar, contributed to the overall enjoyment of the VR experience and provided meaningful support; the mean score was significantly greater than the threshold (one-sample Wilcoxon signed-rank test against a threshold of 10.5), supporting H3. All three tests remained significant after Benjamini–Hochberg correction, which controls the expected proportion of false positives, indicating that the results retain statistical significance despite the limited sample. It is worth noting that the primary goal of the present study is system validation rather than inferential hypothesis testing; therefore, given the small sample size, the statistical analysis should be read as supporting direct observations of user interaction rather than broad generalizations.
Observationally, all participants displayed engagement and curiosity, transitioning from caregiver-prompted actions to increasingly autonomous exploration of the digital twin. This behavioral pattern towards adaptation aligns with the intent of the experiential training approach, and further supports H3. While one participant wore the headset only briefly, the group as a whole showed motivated exploration and interest in interacting with the modeled activities, such as those mirroring real objects and routines in the center.
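A one-sample Wilcoxon signed-rank test against a fixed threshold, as used for the questionnaire totals discussed in this section, can be sketched with an exact enumeration, which is feasible for very small samples. This is an illustrative sketch only: the function name and example scores are hypothetical, and the one-sided alternative (scores above the threshold) is an assumption, since the direction of the test is not stated here.

```python
from itertools import product


def wilcoxon_one_sample_exact(scores, threshold):
    """Return (W_plus, exact one-sided p-value) for H1: scores exceed threshold."""
    # Differences from the threshold; zero differences are discarded.
    diffs = [s - threshold for s in scores if s != threshold]
    n = len(diffs)
    if n == 0:
        raise ValueError("all scores equal the threshold")
    # Rank absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    # Test statistic: sum of the ranks of the positive differences.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # Under H0 every sign pattern is equally likely: enumerate all 2^n of them.
    at_least_as_extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if w >= w_plus:
            at_least_as_extreme += 1
    return w_plus, at_least_as_extreme / 2 ** n


# Hypothetical scores for six participants (NOT the study data).
print(wilcoxon_one_sample_exact([60, 65, 70, 75, 80, 85], threshold=50))
```

With six participants there are only 64 possible sign patterns, so the smallest attainable one-sided p-value is 1/64, reached when every score lies above the threshold.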

Interestingly, we found a strong and significant negative correlation between the VRNQ and Avatar Guide Questionnaire total scores. Although highly preliminary and exploratory, this result might suggest that participants who experienced some difficulties with the virtual reality application (lower VRNQ scores) found caregiver support particularly helpful (higher Avatar Guide Questionnaire scores). Conversely, participants who rated the VR experience positively (higher VRNQ scores) may have perceived the caregiver as less essential (lower Avatar Guide Questionnaire scores). This relationship will be investigated further in future work.

The presented outcomes are consistent with the co-design lessons learned in the preliminary study, which led us, together with caregivers, to minimize cognitive load in measurement (5-point Likert with visual supports), remove VRISE items from VRNQ, and keep the assessment concise; they also reaffirmed the practical necessity of caregiver assistance when administering questionnaires in this population. We can consider these findings as guidelines for the design of future system validations for this population. Taken together, these findings suggest that reproducing the specific DAC rather than a generic facility, including its real activities, and allowing the familiar caregiver to accompany the user as an avatar may be critical to usability, perceived appropriateness, and engagement. The design choice to validate directly with current attendees, whose needs and skills resemble those of future newcomers, further grounds the ecological relevance of the approach for transition support.

6.1. Limitations

A limitation of this study is the small sample size, which was due to restrictive inclusion criteria (individuals formally diagnosed with ASD at DSM-5 Level 1 and currently attending the center), as well as the logistical complexity involved in organizing and executing the experiments on site. Nevertheless, the power analysis indicates that, despite the small sample, the study was sufficiently powered to detect statistically significant differences from the reference thresholds, and all three results remain significant after Benjamini–Hochberg correction. The limited sample size is also consistent with the existing literature, in which many studies involving participants with ASD are based on small groups. The findings can therefore be regarded as a meaningful contribution to the field, particularly given the exploratory and preliminary nature of the present work.

A second limitation is that the presented findings may not apply to all autistic individuals, as users at different DSM-5 levels may need different design practices for virtual experiences and alternative reporting methods. The system was intentionally developed to serve individuals with ASD at DSM-5 Level 1 and is not intended for those who are nonverbal or have limited autonomous mobility. Although this targeted approach restricts the applicability of the results to other ASD populations, it represents a conscious methodological decision guiding the scope of this study. By focusing on a specific group, the investigation aims to ensure that the system is appropriately tailored to the abilities and needs of its intended users, acknowledging that broader generalizations should be made with caution. However, the co-design framework presented here may facilitate future identification of the most appropriate methods for individuals at different DSM-5 levels, while participant feedback will offer valuable insights regarding the suitability of both the system and assessment methods.

Third, the intervention and assessment were conducted in a single session, limiting the evaluation of longer-term retention of familiarity with the technology, as well as sustained engagement and enthusiasm toward the experience. While this was not essential for system validation, a multi-session evaluation may offer valuable insights into mid- and long-term acceptance among individuals with ASD in future studies. Accordingly, this work should be interpreted as a pilot validation study conducted within a specific target population, with an emphasis on evaluating the proposed framework.

6.2. Future Works

Although discussed as contextual background, the effectiveness of the system in facilitating users’ familiarization with the virtual center prior to an in-person visit was not evaluated in this study and will be addressed in future research, involving upcoming attendees of the center. We plan to initially measure affective states such as distress, unease, and enthusiasm before and after the VR experiential training and the initial in-person visits, using subjective reports and speech sentiment analysis. Such multimodal evaluations are essential to determine whether observed usability, suitability, and the supportive role of the caregiver avatar translate into sustained benefits during the transition to a DAC. Finally, we will assess the overall efficacy of the methodology by comparing adaptation success rates with those of individuals adapting without the support of the VR system. These investigations will be essential to quantify long-term adaptation and verify real-world transfer.

Author Contributions

Conceptualization, A.M.S. and A.C.; Methodology, A.M.S. and A.C.; Software, A.C.; Validation, A.M.S. and A.C.; Formal analysis, A.M.S. and A.C.; Investigation, A.M.S. and A.C.; Data curation, A.M.S. and A.C.; Writing—original draft, A.M.S. and A.C.; Writing—review & editing, A.M.S. and A.C.; Visualization, A.C.; Supervision, A.M.S.; Project administration, A.M.S.; Funding acquisition, A.M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of the University of Torino (protocol number 0664642, approved on 15 January 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Datasets are available from the corresponding author upon reasonable request for academic research use, subject to ethics approval and a data-use agreement; requests should briefly describe the intended use and institutional affiliation.

Acknowledgments

The present work was carried out in collaboration with the Social Cooperatives “Cooperativa Crescere Insieme” and “Cooperativa Il Margine”, both active in the city of Torino, Italy. The authors wish to thank the founders of the project “Knock Knock. It’s Open”, Mauro Maurino, Antonio Celentano, and Simonetta Matzuzi, as well as the caregivers Federico Baccili and Enrico Manganaro for their valuable support. This work was developed at the Virtual Reality Laboratory of the Human Sciences and Technologies (HST) Center, University of Torino.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ASD | Autism Spectrum Disorder |
| VR | Virtual Reality |
| DAC | Day Activity Center |
| SUS | System Usability Scale |
| VRNQ | Virtual Reality Neuroscience Questionnaire |
| VRISE | Virtual Reality-Induced Symptoms and Effects |

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association Publishing: Arlington, VA, USA, 2013.

- Soccini, A.M.; Cuccurullo, S.A.G.; Cena, F. Virtual Reality Experiential Training for Individuals with Autism: The Airport Scenario. In Virtual Reality and Augmented Reality; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 234–239.

- Bozgeyikli, L.; Raij, A.; Katkoori, S.; Alqasemi, R. A Survey on Virtual Reality for Individuals with Autism Spectrum Disorder: Design Considerations. IEEE Trans. Learn. Technol. 2018, 11, 133–151.

- Glaser, N.; Schmidt, M. Systematic Literature Review of Virtual Reality Intervention Design Patterns for Individuals with Autism Spectrum Disorders. Int. J. Hum.–Comput. Interact. 2021, 38, 753–788.

- Wunsch, V.; Picka, E.F.; Schumm, H.; Kopp, J.; Abdulbaki Alshirbaji, T.; Arabian, H.; Möller, K.; Wagner-Hartl, V. Virtual Reality-Based Approach to Evaluate Emotional Everyday Scenarios for a Digital Health Application. Multimodal Technol. Interact. 2024, 8, 113.

- Grynszpan, O.; Martin, J.C.; Nadel, J. Multimedia interfaces for users with high functioning autism: An empirical investigation. Int. J. Hum.-Comput. Stud. 2008, 66, 628–639.

- Caruso, F.; Peretti, S.; Barletta, V.S.; Pino, M.C.; Mascio, T.D. Recommendations for Developing Immersive Virtual Reality Serious Game for Autism: Insights From a Systematic Literature Review. IEEE Access 2023, 11, 74898–74913.

- Drigas, A.; Vlachou, J.A. Information and Communication Technologies (ICTs) and Autistic Spectrum Disorders (ASD). Int. J. Recent Contrib. Eng. Sci. IT (iJES) 2016, 4, 4.

- Spain, D.; Sin, J.; Linder, K.B.; McMahon, J.; Happé, F. Social anxiety in autism spectrum disorder: A systematic review. Res. Autism Spectr. Disord. 2018, 52, 51–68.

- Fumero, N.; Fiscale, V.; Clocchiatti, A.; Soccini, A.M. Virtual Reality Multiplayer Experiential Training: Guiding People with Autism towards New Habits. In Proceedings of the 2024 IEEE Gaming, Entertainment, and Media Conference (GEM), Turin, Italy, 5–7 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Mosher, M.A.; Carreon, A.C.; Craig, S.L.; Ruhter, L.C. Immersive Technology to Teach Social Skills to Students with Autism Spectrum Disorder: A Literature Review. Rev. J. Autism Dev. Disord. 2021, 9, 334–350.

- Chiappini, M.; Dei, C.; Micheletti, E.; Biffi, E.; Storm, F.A. High-functioning autism and virtual reality applications: A scoping review. Appl. Sci. 2024, 14, 3132.

- Dixon, D.R.; Miyake, C.J.; Nohelty, K.; Novack, M.N.; Granpeesheh, D. Evaluation of an Immersive Virtual Reality Safety Training Used to Teach Pedestrian Skills to Children With Autism Spectrum Disorder. Behav. Anal. Pract. 2019, 13, 631–640.

- Miller, I.T.; Miller, C.S.; Wiederhold, M.D.; Wiederhold, B.K. Virtual Reality Air Travel Training Using Apple iPhone X and Google Cardboard: A Feasibility Report with Autistic Adolescents and Adults. Autism Adulthood 2020, 2, 325–333.

- Schmidt, M.; Glaser, N. Investigating the usability and learner experience of a virtual reality adaptive skills intervention for adults with autism spectrum disorder. Educ. Technol. Res. Dev. 2021, 69, 1665–1699.

- Karkhaneh, M.; Clark, B.; Ospina, M.B.; Seida, J.C.; Smith, V.; Hartling, L. Social Stories™ to improve social skills in children with autism spectrum disorder: A systematic review. Autism 2010, 14, 641–662.

- Bekele, E.; Crittendon, J.; Zheng, Z.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Assessing the utility of a virtual environment for enhancing facial affect recognition in adolescents with autism. J. Autism Dev. Disord. 2014, 44, 1641–1650.

- Ip, H.H.; Wong, S.W.; Chan, D.F.; Li, C.; Kon, L.L.; Ma, P.K.; Lau, K.S.; Byrne, J. Enhance affective expression and social reciprocity for children with autism spectrum disorder: Using virtual reality headsets at schools. Interact. Learn. Environ. 2024, 32, 1012–1035.

- Simões, M.; Bernardes, M.; Barros, F.; Castelo-Branco, M. Virtual Travel Training for Autism Spectrum Disorder: Proof-of-Concept Interventional Study. JMIR Serious Games 2018, 6, e5.

- Kim, S.I.; Jang, S.y.; Kim, T.; Kim, B.; Jeong, D.; Noh, T.; Jeong, M.; Hall, K.; Kim, M.; Yoo, H.J.; et al. Promoting Self-Efficacy of Individuals with Autism in Practicing Social Skills in the Workplace Using Virtual Reality and Physiological Sensors: Mixed Methods Study. JMIR Form. Res. 2024, 8, e52157.

- Hodgson, A.R.; Freeston, M.H.; Honey, E.; Rodgers, J. Facing the unknown: Intolerance of uncertainty in children with autism spectrum disorder. J. Appl. Res. Intellect. Disabil. 2017, 30, 336–344.

- Hartholt, A.; Mozgai, S.; Fast, E.; Liewer, M.; Reilly, A.; Whitcup, W.; Rizzo, A.S. Virtual humans in augmented reality: A first step towards real-world embedded virtual roleplayers. In Proceedings of the 7th International Conference on Human-Agent Interaction, Kyoto, Japan, 6–10 October 2019; pp. 205–207.

- Cao, Y.; He, Y.; Chen, Y.; Chen, M.; You, S.; Qiu, Y.; Liu, M.; Luo, C.; Zheng, C.; Tong, X.; et al. Designing LLM-simulated Immersive Spaces to Enhance Autistic Children’s Social Affordances Understanding in Traffic Settings. In Proceedings of the 30th International Conference on Intelligent User Interfaces, Cagliari, Italy, 24–27 March 2025; pp. 519–537.

- Garzotto, F.; Gianotti, M.; Patti, A.; Pentimalli, F.; Vona, F. Empowering Persons with Autism Through Cross-Reality and Conversational Agents. IEEE Trans. Vis. Comput. Graph. 2024, 30, 2591–2601.

- Ferri, S.L.; Abel, T.; Brodkin, E.S. Sex differences in autism spectrum disorder: A review. Curr. Psychiatry Rep. 2018, 20, 9.

- Zhang, Y.; Li, N.; Li, C.; Zhang, Z.; Teng, H.; Wang, Y.; Zhao, T.; Shi, L.; Zhang, K.; Xia, K.; et al. Genetic evidence of gender difference in autism spectrum disorder supports the female-protective effect. Transl. Psychiatry 2020, 10, 4.

- Clocchiatti, A.; Mirabella, C.; Soccini, A.M. What Button was That? Interaction Design in Virtual Reality for Usability, Enjoyment, and Inclusion. In Proceedings of the Companion Publication of the 2025 ACM Designing Interactive Systems Conference, Madeira, Portugal, 5–9 July 2025; pp. 369–373.

- Soccini, A.M.; Ferroni, F.; Ardizzi, M. From Virtual Reality to Neuroscience and Back: A Use Case on Peripersonal Hand Space Plasticity. In Proceedings of the IEEE International Conference on Artificial Intelligence and Virtual Reality, AIVR 2020, Virtual Event, 14–18 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 394–396.

- Ferroni, F.; Gallese, V.; Soccini, A.M.; Langiulli, N.; Rastelli, F.; Ferri, D.; Bianchi, F.; Ardizzi, M. The Remapping of Peripersonal Space in a Real but Not in a Virtual Environment. Brain Sci. 2022, 12, 1125.

- Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Kourtesis, P.; Collina, S.; Doumas, L.A.; MacPherson, S.E. Validation of the virtual reality neuroscience questionnaire: Maximum duration of immersive virtual reality sessions without the presence of pertinent adverse symptomatology. Front. Hum. Neurosci. 2019, 13, 417.

- Loomes, R.; Hull, L.; Mandy, W.P.L. What Is the Male-to-Female Ratio in Autism Spectrum Disorder? A Systematic Review and Meta-Analysis. J. Am. Acad. Child Adolesc. Psychiatry 2017, 56, 466–474.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).