1. Introduction

In recent years, the Internet has grown into the world’s largest communication platform, supported by rapid progress in networking technologies that affect almost every aspect of daily life. Millions of users now rely on it for activities such as e-commerce, social networking, and online banking. However, the open and largely uncontrolled nature of the Internet makes it a common target for cyberattacks, where weaknesses in its infrastructure can be exploited. One of the most widespread threats is phishing, in which attackers trick users into revealing personal information by clicking on malicious Uniform Resource Locators (URLs) [1].

The main goal of information security is to protect systems and prevent unauthorized access to valuable resources through effective measures and policies. Despite these efforts, illegitimate URLs remain a serious threat. As global identifiers of online resources, URLs can easily be manipulated to host phishing content and mislead users [2]. Reports show that phishing incidents increased from about 114,702 cases in 2019 to more than 241,000 in 2020 during the COVID-19 pandemic. Verizon’s 2021 Data Breach Investigations Report [3] further highlighted that phishing was the most common type of breach, accounting for 43% of incidents across 88 countries.

Malicious URLs are designed to deceive users and steal sensitive data such as usernames, passwords, and financial details. Numerous researchers have investigated artificial intelligence techniques, particularly deep learning approaches, to identify and block malicious URLs efficiently, and these approaches have achieved good results [4]. A key advantage of deep learning is its ability to process raw data directly, without requiring manual feature engineering [5]. Most studies on URL attack classification and detection have relied on traditional deep learning models such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Long Short-Term Memory (LSTM) networks. Recently, some cybersecurity research has begun exploring the use of Graph Neural Networks (GNNs) in areas like network protection and fake content detection on web pages, due to their ability to capture complex structural relationships, model interdependencies among entities, and generalize across irregular data formats.

Despite these advancements, existing approaches still suffer from several limitations. Some are restricted to capturing sequential patterns, while others rely heavily on external page content such as HTML. Phishing attacks remain the preferred technique for attackers because they exploit both system vulnerabilities and human behavior. Many users become victims of phishing due to a lack of awareness, carelessness, or accidental mistakes. At the same time, attackers are constantly improving their techniques, often using machine learning to conduct large-scale phishing attacks that specifically target users with high-level access to sensitive data [6].

In this study, we present a hybrid framework that combines Graph Convolutional Networks (GCN), an attention mechanism, and Long Short-Term Memory (LSTM) to improve the classification of malicious and benign URLs. This architecture is designed to capture both the structural patterns and sequential behaviors within URLs, enabling more intelligent and reliable threat detection.

Experiments were conducted on a large-scale dataset exceeding 100,000 labeled samples, with evaluation performed before and after dataset augmentation. The proposed model consistently outperformed both classical machine learning and recent deep learning baselines, achieving over 90% accuracy.

The contributions of this paper are summarized as follows:

Use a hybrid architecture that combines GCN, Attention Mechanism and LSTM to jointly capture structural and sequential URL features.

Evaluate the model on large-scale real-world datasets, including augmented samples.

Provide a comprehensive comparison with traditional and state-of-the-art baselines to demonstrate the advantage of the hybrid models.

Highlight the significance of integrating deep learning techniques into cybersecurity tasks to enhance intelligent defense mechanisms.

Demonstrate the effectiveness of graph-based representations in phishing URL classification and emphasize their promising potential as a specialized defense mechanism in cybersecurity.

The rest of this paper is organized as follows. Section 2 reviews related work on URL classification. Section 3 describes the dataset and preprocessing steps. Section 4 presents the proposed methodology. Section 5 discusses the experimental results. Finally, Section 6 concludes the paper and outlines future directions.

2. Related Works

A variety of approaches have been proposed to address phishing URL challenges, ranging from traditional machine learning (ML) classifiers to more advanced deep learning (DL) models, including those based on graph structures. In this section, we review the most relevant studies, organized into three categories: ML-based approaches, DL-based models, and hybrid graph-based architectures.

Zhang et al. [7] proposed CANTINA, a TF–IDF–based method that compares webpage keywords with search engine results to identify phishing pages. Building on similar principles, Ma et al. [8] employed lexical and host-based features with classifiers such as Naïve Bayes, SVM, and logistic regression to detect malicious URLs with high accuracy. Chen et al. [9] further improved detection reliability using a reputation-based two-stage model that combines domain trust evaluation and sandbox inspection, achieving 94% accuracy.

Subsequent studies have shifted toward deep learning to overcome the limitations of manual feature extraction. A CNN-based model [6] was developed with multiple convolutional and pooling layers followed by a sigmoid classifier, achieving 94.31% accuracy, while Singh et al. [10] proposed a text-based CNN architecture that processes tokenized and embedded URL sequences through convolutional and dense layers, attaining 98% accuracy. Similarly, RNN-based architectures have been utilized for sequential data modeling. A comparative study [11] revealed that LSTM networks outperform Random Forest classifiers by effectively capturing long-term dependencies, reaching 98.7% accuracy. An improved AB-BiLSTM with an attention mechanism [12] further enhanced performance by capturing bidirectional sequence semantics and anomalous URL segments, achieving 98.06% accuracy. Likewise, Su [13] proposed a deep LSTM optimized with stochastic gradient descent, demonstrating 99.1% accuracy on Yahoo Directory and PhishTank datasets.

Recently, hybrid and graph-based architectures have been investigated to further boost detection robustness. Yao et al. [14] introduced an improved Feature Pyramid Network combined with Faster R-CNN for QR code–based phishing detection, where logo extraction and recognition verified website legitimacy, achieving 91.4% precision. Similarly, Adebowale et al. [15] developed the Intelligent Phishing Detection System (IPDS), which integrates CNN and LSTM to analyze image, text, and frame features, achieving 93.28% accuracy. In another study, Pooja and Sridhar [16] proposed a multidimensional hybrid model employing deep learning and XGBoost to enhance detection speed and scalability, also achieving 93.28% accuracy.

In the context of graph learning, Ouyang and Zhang [17] introduced an enhanced Graph Convolutional Network (GCN) utilizing DOM tree structures and RNN-based node embeddings for phishing detection, achieving 95.5% accuracy. Huang et al. [18] applied GNNs with cross-protocol feature integration and random forest pre-selection to detect malicious IP addresses, obtaining 85.28% accuracy. Moreover, Ariyadasa et al. [19] developed PhishDet, a hybrid model combining Long-term Recurrent Convolutional Networks (LRCN) with GCN to jointly analyze URL and HTML features, achieving 96.42% accuracy.

Overall, the literature demonstrates that while traditional machine learning methods perform well with handcrafted features, deep learning and graph-based architectures provide superior adaptability and feature-learning capabilities, making them more effective for modern phishing detection.

Table 1 provides a summary of previous studies in terms of dataset, model type, and achieved accuracy.

In summary, existing approaches to malicious URL detection either rely on manually engineered features, treat URLs as flat sequential data, or depend heavily on external page content such as HTML and JavaScript. Attention mechanisms have been employed to help models focus on the most informative parts of the URL, yet most DL-based methods still fail to capture the complex structural relationships between the components of the URL string. This gap has recently led to increased interest in graph-based methods, which are better suited to modeling such dependencies. While hybrid and graph-based models have shown promising results, there remains a lack of methods that jointly capture both the structural relationships within a URL and its sequential behavior using graph-based deep learning. Moreover, attention mechanisms have rarely been integrated with GCN and LSTM in the context of malicious URL attacks.

To address this gap, our study presents a hybrid deep learning model that integrates GCN, Attention Mechanism and LSTM, using only the raw URL string as input. This architecture combines structural and sequential learning capabilities, leveraging the strengths of each component to improve classification accuracy without relying on external webpage content. This model serves as a foundational step toward future research that aims to further integrate graph-based learning into the development of more advanced solutions for malicious URL detection and prevention.

3. Dataset and Preprocessing

A large-scale dataset of URLs was collected from publicly available sources, comprising both malicious and benign examples. The data were structured in two columns: one containing the URL string and the other containing its corresponding binary label. To ensure quality, duplicates were removed, and basic preprocessing steps were applied to retain only meaningful and well-structured entries. The resulting dataset included over 100,000 unique URLs, representing a diverse set of patterns relevant to malicious link analysis.

The dataset was collected from several publicly available sources, including Alexa [20], PhishTank [21], and OpenPhish [22].

Given that this study focuses on the textual nature of URLs, Term Frequency-Inverse Document Frequency (TF-IDF) was employed to transform raw strings into meaningful numerical vectors. This encoding method highlights the significance of individual tokens within URLs without relying on manually crafted features. To enhance the model’s sensitivity to subtle variations common in phishing attempts, TF-IDF was applied at both the character and word levels. The resulting vectors served as inputs to the learning models in subsequent stages [23].
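The character-level and word-level encoding described above can be illustrated with a minimal, dependency-free sketch. The tokenization rule, the trigram size, the smoothed IDF formula, and the example URLs are assumptions made for illustration; they are not taken from the implementation used in this study.

```python
import math
import re
from collections import Counter


def char_ngrams(url, n=3):
    """Return the overlapping character n-grams of a URL string."""
    return [url[i:i + n] for i in range(len(url) - n + 1)]


def word_tokens(url):
    """Split a URL on non-alphanumeric delimiters such as '/', '.', '-' and '?'."""
    return [t for t in re.split(r"[^A-Za-z0-9]+", url.lower()) if t]


def tfidf(docs_tokens):
    """Compute smoothed TF-IDF weights; returns one {token: weight} dict per document."""
    n_docs = len(docs_tokens)
    doc_freq = Counter()
    for tokens in docs_tokens:
        doc_freq.update(set(tokens))  # count each token once per document
    vectors = []
    for tokens in docs_tokens:
        counts = Counter(tokens)
        total = len(tokens) or 1
        vectors.append({
            tok: (cnt / total) * (math.log((1 + n_docs) / (1 + doc_freq[tok])) + 1.0)
            for tok, cnt in counts.items()
        })
    return vectors


# Hypothetical URLs, used only to exercise the functions.
urls = [
    "example.com/login",
    "example.com/about",
    "secure-login.example.xyz/verify",
]

word_vectors = tfidf([word_tokens(u) for u in urls])
char_vectors = tfidf([char_ngrams(u.lower()) for u in urls])
```

In this toy corpus, a token that occurs in every URL (such as `example`) receives a lower weight than a token that occurs in only one (such as `about`), which is the behavior the encoding relies on.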

3.1. Data Collection

The initial dataset consisted of approximately 100,000 labeled URLs, collected from a publicly available source. To increase the variety and improve generalization, additional phishing and benign URLs were later gathered from multiple repositories, including recent threat intelligence feeds and well-known public datasets such as Alexa, OpenPhish and PhishTank. This process aimed to expose the model to diverse URL patterns and reduce overfitting. Unlike artificial oversampling techniques, dataset expansion was achieved through direct data gathering without duplicating any entries.

3.2. Model Input Preparation

The TF-IDF vectors were reshaped to align with the input requirements of the hybrid GCN-Attention-LSTM model, ensuring architectural compatibility without modifying its core structure.

For evaluation, two experimental phases were conducted:

Run 1: The model was trained and tested on approximately 100,000 unique samples.

Run 2: The dataset was expanded by including additional non-redundant samples, nearly doubling its size, and the model was re-evaluated under the same conditions.

In both phases, the dataset was divided into training and testing subsets to maintain balanced class distributions.

Table 2 summarizes the dataset distribution across the two runs.

Both datasets used in this study were balanced, containing an equal number of benign and malicious URLs. The average URL length was approximately 52 characters. Each URL consisted of an average of 7 tokens, indicating moderate structural diversity. To ensure data reliability and fair evaluation, several standard measures were applied during preprocessing. The dataset was cleaned to remove duplicates and normalized by stripping redundant components such as protocol identifiers, query strings, and “www” subdomains. The data were then divided into training and testing subsets using an 80/20 split, ensuring representative coverage across domains and a balanced distribution of classes. These precautions were taken to maintain consistency and prevent bias in the evaluation process.
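The cleaning and splitting steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the regular expressions, the function names, the random seed, and the synthetic sample URLs are hypothetical and are not taken from the study's code.

```python
import random
import re


def normalize_url(url):
    """Strip the protocol, a leading 'www.' and any query string from a URL."""
    url = url.strip().lower()
    url = re.sub(r"^[a-z][a-z0-9+.-]*://", "", url)  # protocol identifier
    url = re.sub(r"^www\.", "", url)                 # 'www' subdomain
    url = url.split("?", 1)[0]                       # query string
    return url.rstrip("/")


def deduplicate(samples):
    """Keep the first occurrence of each normalized URL."""
    seen, unique = set(), []
    for url, label in samples:
        key = normalize_url(url)
        if key and key not in seen:
            seen.add(key)
            unique.append((key, label))
    return unique


def stratified_split(samples, test_ratio=0.2, seed=42):
    """Split per class so that both subsets keep the original class balance."""
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted({lbl for _, lbl in samples}):
        group = [s for s in samples if s[1] == label]
        rng.shuffle(group)
        cut = int(round(len(group) * test_ratio))
        test.extend(group[:cut])
        train.extend(group[cut:])
    return train, test


# Synthetic (URL, label) pairs; the last entry duplicates the first after normalization.
raw = [(f"http://www.site{i}.com/page?id={i}", i % 2) for i in range(10)]
raw.append(("https://site0.com/page", 0))

data = deduplicate(raw)
train, test = stratified_split(data)
```

Splitting each class separately is one simple way to keep the 80/20 subsets balanced; a library routine with a stratification option would serve the same purpose.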

4. Proposed Methodology

4.1. Problem Formulation

The task of identifying malicious URLs is framed as a binary classification problem. Given an input URL string $x$, the objective is to predict its corresponding label $y \in \{0, 1\}$, where $y = 1$ denotes a malicious URL and $y = 0$ indicates a benign one. Formally, the model learns a function $f: \mathcal{X} \rightarrow \{0, 1\}$ that maps URL strings to their respective classes. The goal is to minimize classification errors on unseen URLs by effectively capturing both sequential patterns and structural dependencies present in the input. This study also aims to assess the model’s overall effectiveness in accurately distinguishing between malicious and benign links based on structural and sequential patterns present in the data.

4.2. Data Representation

Each URL in the dataset was treated as raw text and encoded into numerical vectors using the Term Frequency–Inverse Document Frequency (TF-IDF) technique. This method assigns higher weights to informative tokens that frequently appear in malicious URLs but are uncommon in benign ones. Unlike handcrafted lexical or content-based features, TF-IDF offers a scalable, text-only representation that does not rely on external metadata such as HTML or JavaScript [23].

The tokenized input was represented as a graph, where nodes represent tokens and edges indicate their relative positions within the string. This structure helps the model learn how different parts of the URL are arranged, supporting further processing by GCN and LSTM components. To effectively process the structured and sequential representations of URLs, this study employs a hybrid architecture that combines GCN, Attention Mechanism and LSTM. Each component was selected for its ability to handle a specific aspect of the data, enabling the model to extract richer patterns and improve classification accuracy.
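One possible reading of this token graph is sketched below: each token occurrence becomes a node, and an undirected edge links tokens that are adjacent in the string. The `window` parameter, the delimiter rule, and the example URL are assumptions for illustration; the exact edge definition used in the study is not specified beyond relative position.

```python
import re


def url_to_graph(url, window=1):
    """Build a token graph: one node per token occurrence, with edges between
    tokens that lie within `window` positions of each other."""
    tokens = [t for t in re.split(r"[^A-Za-z0-9]+", url.lower()) if t]
    n = len(tokens)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, min(i + window, n - 1) + 1):
            adjacency[i][j] = adjacency[j][i] = 1  # undirected positional edge
    return tokens, adjacency


# Hypothetical URL: five tokens connected as a chain.
tokens, adjacency = url_to_graph("login.example.com/account/verify")
```

With `window=1` the graph is a simple chain that mirrors token order; a larger window would add edges between tokens that are further apart.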

4.3. Model Architecture

This section reviews each component of the proposed model: the GCN, the attention mechanism, and the LSTM. The proposed GCN-Attention-LSTM (GCA-LSTM) model is then explained.

4.3.1. Graph Convolutional Network (GCN)

The Graph Convolutional Network (GCN) is designed to analyze data organized as a graph, that is, a set of vertices connected by edges. In this study, the GCN is used to classify URLs as either benign or malicious by analyzing the structural relationships within the URL dataset that are relevant to this task. It distinguishes harmless from dangerous URLs by examining the relationships and patterns between them, which allows it to find connections between dangerous URLs or to identify linking patterns shared among harmless ones.

The model operates on data represented as a graph of nodes and edges. Before the data enter the model, all characters are transformed into numerical values so that they can be processed as feature vectors. The relationships between the feature vectors in the graph are stored in an adjacency matrix.

The adjacency matrix $A$ of a graph $G = (V, E)$ with $n$ vertices and $m$ edges contains $1$ on the diagonal to represent each vertex being connected to itself, and $1$ at position $(i, j)$ to indicate an edge connecting node $v_i$ to node $v_j$ [24].
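To make the role of the adjacency matrix concrete, the sketch below applies one graph-convolution step to a three-node toy graph. It assumes the commonly used symmetric normalization $D^{-1/2} A D^{-1/2}$ and a ReLU activation, neither of which is spelled out in the text; the feature and weight values are hypothetical.

```python
import math


def add_self_loops(adjacency):
    """Return a copy of the adjacency matrix with 1 on the diagonal."""
    n = len(adjacency)
    return [[1 if i == j else adjacency[i][j] for j in range(n)] for i in range(n)]


def normalize_adjacency(adjacency):
    """Symmetric normalization D^{-1/2} A D^{-1/2} of a self-looped matrix."""
    degrees = [sum(row) for row in adjacency]
    n = len(adjacency)
    return [
        [adjacency[i][j] / math.sqrt(degrees[i] * degrees[j]) for j in range(n)]
        for i in range(n)
    ]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adjacency, features, weights):
    """One propagation step: ReLU(A_norm @ H @ W)."""
    a_norm = normalize_adjacency(add_self_loops(adjacency))
    hidden = matmul(matmul(a_norm, features), weights)
    return [[max(0.0, v) for v in row] for row in hidden]


# Path graph v1 - v2 - v3 with 2-dimensional node features (toy values).
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
H = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
W = [[0.5, -0.5],
     [0.5, 0.5]]  # hypothetical weights; learned during training in practice

H_next = gcn_layer(A, H, W)
```

Each output row mixes a node's own features with those of its neighbors, which is how structural context enters the representation before any sequential modeling.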

4.3.2. Attention Mechanism

Attention mechanisms have proven highly effective in several deep learning applications, including image recognition, natural language processing, and voice recognition. An attention function maps a query and a set of key-value pairs to an output, computed as a weighted sum of the values. The form of the output varies depending on the kind of information to be highlighted, such as spatial, temporal, or other elements [25].

The attention technique dynamically allocates importance to different URL features and temporal sequences that are relevant to the classification of URLs as either benign or harmful. The purpose is to prioritize important indicators or sequences that have significant effects on the classification process, increasing its focus on highlighting the fundamental components of URLs that determine their benign or harmful nature. For instance, it may give priority to specific URL features or time-related patterns that reflect harmful intent.

Attention mechanisms are commonly incorporated into hybrid neural models to enhance feature selection and representation. There are several forms of attention that can be integrated and applied depending on the model structure and the nature of the data. For example, temporal attention is often used alongside models such as LSTM to highlight important time steps within a sequence. In graph-based models, graph attention mechanisms enable the model to assign different weights to neighboring nodes during the aggregation process. The graph attention module follows a multi-head structure, where multiple attention heads jointly learn the importance of node connections and aggregate their representations through concatenation or averaging.

Figure 1 displays the multi-head attention technique with a vertex in its neighborhood. The features obtained from both the neighboring vertices and the vertex itself are combined either by concatenation or by averaging in order to form the attention vector [25]. In this study, a single-head attention mechanism was implemented to dynamically assign weights to graph-encoded URL features before sequential modelling. Unlike multi-head structures that aggregate multiple attention subspaces, this configuration applies one adaptive weighting process across all node representations.

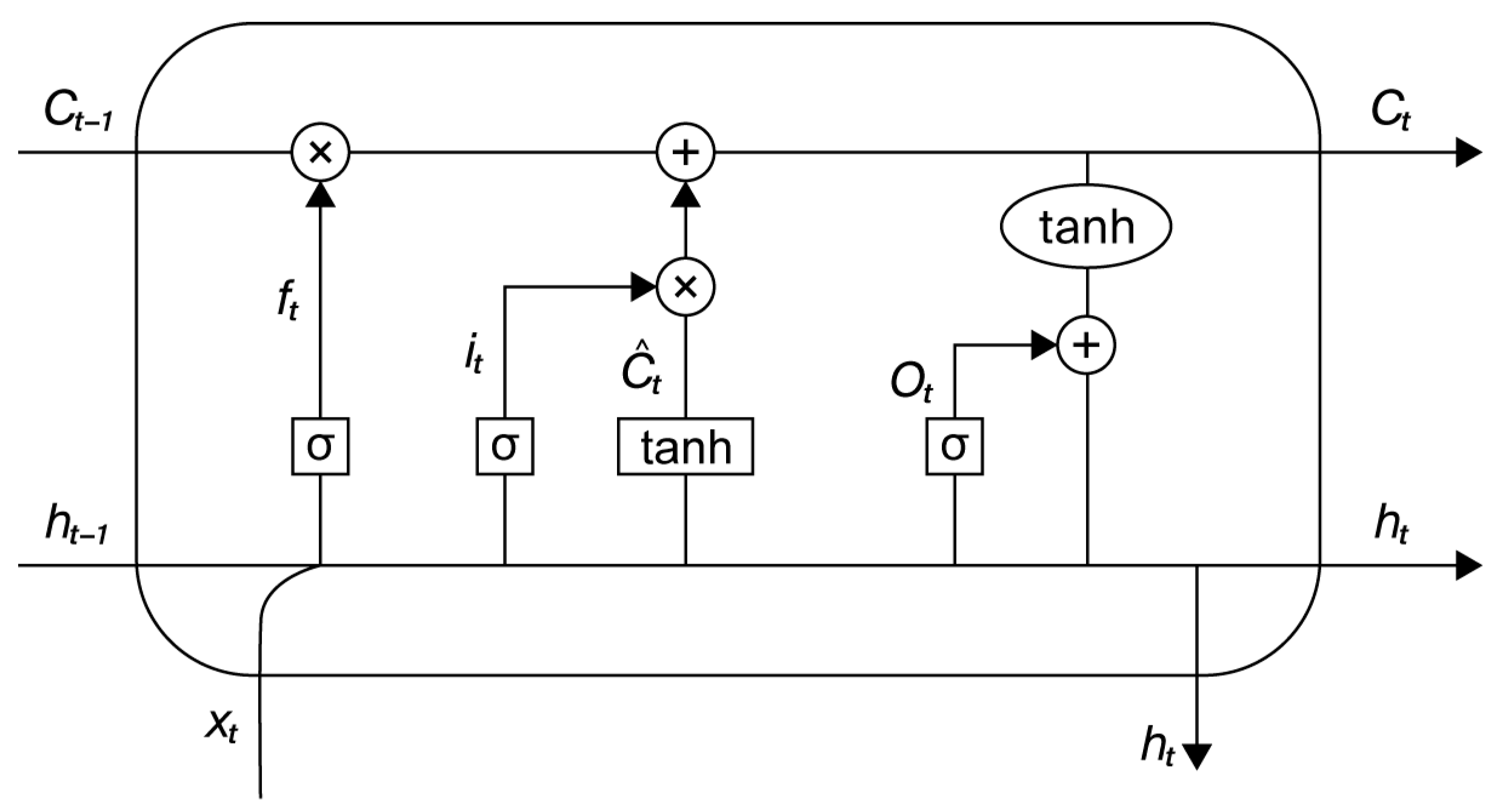
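A single-head weighting of node representations can be sketched as below. The scaled dot-product scoring, the `query` vector, and the toy node features are assumptions chosen for illustration; the scoring function used in the study is not specified.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def single_head_attention(node_features, query):
    """Score each node against a query vector, then reweight the nodes.

    Returns the attention weights and the reweighted node features.
    """
    dim = len(query)
    scores = [sum(h * q for h, q in zip(node, query)) / math.sqrt(dim)
              for node in node_features]
    weights = softmax(scores)
    weighted = [[w * h for h in node] for w, node in zip(weights, node_features)]
    return weights, weighted


nodes = [[0.2, 0.1], [1.5, 0.9], [0.3, 0.4]]  # toy GCN outputs for three tokens
query = [1.0, 1.0]                             # hypothetical learned query vector
weights, weighted = single_head_attention(nodes, query)
```

The weights sum to one, so the node with the highest score (the second one here) contributes most to whatever layer consumes the reweighted sequence.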

4.3.3. Long Short-Term Memory (LSTM)

The Recurrent Neural Network (RNN) is frequently employed for predicting sequential data. However, RNN models can suffer from unstable gradients when capturing long-term dependencies in time-series data, because the derivatives are multiplied repeatedly across time steps during backpropagation. Small derivatives cause the gradient to shrink rapidly, whereas large derivatives cause it to grow rapidly. These two conditions are known as the vanishing and exploding gradient problems. LSTM, an improved version of the original RNN, mitigates these problems and captures long-range relationships efficiently. The main difference is that LSTM maintains a cell state and includes three gates in each recurrent unit. These structures help the model capture long-term interactions [26].
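For reference, the cell state and the three gates can be written in the standard LSTM formulation below. The notation is an assumption, since the text does not fix one: $x_t$ is the input at step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive update of $c_t$ is what lets gradients flow across many steps without being repeatedly squashed.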

Figure 2 displays an individual LSTM cell.

The proposed model incorporates an LSTM component to effectively capture temporal relationships and sequences of events. This enables the model to accurately identify URLs as either benign or malicious in the binary classification. The system will analyze temporal patterns of user activity and URL accesses to detect specific sequences of events and user interactions that determine whether a URL is classified as benign or harmful. This may involve analyzing common trends of behavior that occur when accessing malicious URLs or recognizing normal user activities before visiting harmless URLs.

4.3.4. Graph Convolutional Network-Attention-Long Short-Term Memory (GCA-LSTM)

The proposed classification model is based on the approach described by Ma et al. [27], who present a graph framework for predicting the short-term demand of a bike-sharing system at the station level. Their framework utilizes multi-source data, including historical bike-sharing trip data, land-use data, meteorological data, and users’ personal information. Inspired by this structure, our proposed model implements an architecture tailored to a different application domain: binary URL classification. It aligns with the spatial-temporal graph learning framework, where Graph Convolutional Networks (GCNs) capture the structural relationships between URL components, Long Short-Term Memory (LSTM) layers model their sequential dependencies, and the attention mechanism enhances representation by emphasizing the most informative features. This hybrid design is theoretically grounded in spatial-temporal graph learning, which integrates structural and temporal dimensions to jointly optimize feature representation. By modeling URLs in this unified framework, the proposed model effectively captures both the structural correlations and temporal dynamics underlying evolving malicious behaviors, thereby strengthening its ability to detect complex threat patterns.

Our proposed model is specifically designed for the classification of URLs as either benign or malicious. It integrates Graph Convolutional Networks (GCNs) to capture the structural dependencies within URL components, Attention mechanism to emphasize the most informative features and Long Short-Term Memory (LSTM) networks to model the sequential characteristics of the data. These components work together to enable accurate and robust classification of harmful and benign URLs.

In this paper, we refer to our proposed hybrid model as GCA-LSTM, a variant of GCN-LSTM in which an attention mechanism is explicitly integrated into the GCN and LSTM layers. The name reflects the key architectural enhancement (Graph Convolution + Attention + LSTM) while maintaining a consistent naming convention.

The proposed model was selected for its robustness and performance [27], with the goal of effectively addressing the problem of malicious URL classification, which requires capturing both structural and sequential characteristics from input data.

As shown in Figure 3, URLs are fed into the model through the input layer, which defines the input shape. The second layer is a Reshape layer, which reshapes the input data so that it aligns with the format expected by the subsequent layers.

Graph Convolutional Networks (GCNs) are used first to extract the underlying structural relationships between different URL components, such as subdomains, paths, and query parameters.

Next, an attention mechanism is applied to focus on the most informative features, helping the model prioritize critical patterns that may indicate malicious behavior. Long Short-Term Memory (LSTM) networks are then used to analyze the sequential flow of URL components, enabling the model to detect irregularities or abnormal repetition patterns.

A Flatten layer was applied to convert the output of the GCA-LSTM layers into a one-dimensional vector, which is often required before passing it to a traditional neural network layer. A dense layer with a sigmoid activation function, which is commonly used for binary classification tasks, follows. It produces the final output of the model, which represents the probability of the input URL being malicious or benign based on the features learned by the previous layers.

To compile the model, binary cross-entropy was used as the loss function together with the Adam optimizer, which adapts the learning rate during training. The model was trained for 10 epochs, with early stopping after 3 epochs without improvement in the loss.

All experiments were conducted on Google Colab. The implementation was developed in Python v3.8.8 with TensorFlow v2.13.0 and Keras v2.13.1. The models were trained and tested on the following configuration: Windows 10; Processor: Intel(R) Core(TM) i5-1035G4 CPU @ 1.10 GHz 1.50 GHz; RAM: 8 GB.

The model was trained using the Adam optimizer to minimize the binary cross-entropy loss function. Hyperparameters were empirically selected as follows: a batch size of 32, a learning rate of 0.001, and 10 training epochs.
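The loss function and the stopping rule used during training can be illustrated without any deep learning framework. The sketch below is an assumption-laden illustration: the clipping constant, the function names, and the loss curve are hypothetical, and only the patience of 3 and the limit of 10 epochs come from the text.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy between labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def epochs_until_stop(losses, max_epochs=10, patience=3):
    """Return how many epochs run before early stopping triggers.

    `losses` is the monitored loss per epoch; training stops once the loss
    has not improved for `patience` consecutive epochs.
    """
    best, stale = float("inf"), 0
    for epoch, loss in enumerate(losses[:max_epochs], start=1):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return min(len(losses), max_epochs)


loss = binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
history = [0.60, 0.45, 0.40, 0.41, 0.42, 0.43, 0.39]  # hypothetical loss curve
stopped_at = epochs_until_stop(history)
```

With this hypothetical curve the best loss is reached at epoch 3, so training halts after epoch 6 and never sees the later improvement at epoch 7, which is the usual trade-off of a short patience.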

The dataset was randomly divided into training and testing subsets using an 80% and 20% ratio. This split was consistently applied across both experimental phases, regardless of dataset size. The same split strategy was applied across baseline models used for comparison to ensure an objective assessment.

The proposed GCA-LSTM model integrates graph convolution, attention, and sequential learning in a unified structure. The embeddings produced by the graph convolution (GCN) layer are passed sequentially into the LSTM unit to capture temporal dependencies. An attention mechanism is applied between these components to assign adaptive importance weights to graph-structured features before sequential modelling. The training objective encourages the model to jointly learn both spatial structure from the GCN and temporal behaviour from the LSTM, so that both sources of information contribute to the final binary cross-entropy loss used for classification. The dimensional flow of the model progresses from the input layer (1000 features × 1) through the GCN layer (1000 features × 128 nodes) and the attention layer (128 nodes × 64 units), followed by the LSTM (64 units × 32 units) and the final dense layer (32 units × 1), which produces the classification output.

4.4. Evaluation Metrics

To comprehensively assess the performance of the proposed model, several standard classification metrics were employed. These include:

Accuracy: Measures the overall proportion of correctly classified URLs.

Precision: Represents the fraction of URLs predicted as malicious that are actually malicious.

Recall: Indicates the model’s ability to identify all malicious URLs in the dataset.

F1-Score: The harmonic mean of precision and recall, balancing false positives and false negatives.

All metrics were computed based on the confusion matrix generated from the held-out test set. In addition, the training time was recorded to evaluate the computational efficiency of the proposed hybrid architecture.
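The four metrics follow directly from the confusion-matrix counts, as the sketch below shows. It assumes that label 1 denotes the malicious (positive) class; the function names and the toy labels are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn), treating label 1 as the positive class."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == 1 and truth == 1:
            tp += 1
        elif pred == 1 and truth == 0:
            fp += 1
        elif pred == 0 and truth == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall and F1 from the confusion matrix."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy labels: 3 true positives, 1 false positive, 3 true negatives, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

The zero-denominator guards matter for degenerate cases, such as a model that never predicts the malicious class, where precision would otherwise be undefined.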

To further validate robustness, the model’s performance was compared with baseline machine learning and deep learning models under the same experimental settings.

5. Experimental Results and Discussion

5.1. Experimental Runs

Two experimental settings were considered to evaluate the proposed model.

Table 3. Performance of the baseline machine learning models on dataset A.

| Input | Dataset | Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| URLs | Dataset A | XG-Boost | 0.9930 | 0.9989 | 0.9873 | 0.9931 |
| | | SVM | 0.9929 | 0.9987 | 0.9873 | 0.9929 |
| | | LDA | 0.9863 | 0.9938 | 0.9790 | 0.9864 |
| | | KNN | 0.9805 | 0.9883 | 0.9731 | 0.9806 |
| | | QDA | 0.9890 | 0.9962 | 0.9821 | 0.9891 |
| | | Gaussian NB | 0.9853 | 0.9870 | 0.9841 | 0.9855 |

Table 4. Performance of the baseline machine learning models on dataset B.

| Input | Dataset | Algorithm | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| URLs | Dataset B | XG-Boost | 0.8429 | 0.8182 | 0.8815 | 0.8487 |
| | | SVM | 0.8742 | 0.8402 | 0.9239 | 0.8801 |
| | | LDA | 0.8492 | 0.8165 | 0.9009 | 0.8565 |
| | | KNN | 0.8531 | 0.8080 | 0.9260 | 0.8630 |
| | | QDA | 0.7544 | 0.9605 | 0.5304 | 0.6834 |
| | | Gaussian NB | 0.7897 | 0.9283 | 0.6312 | 0.7499 |

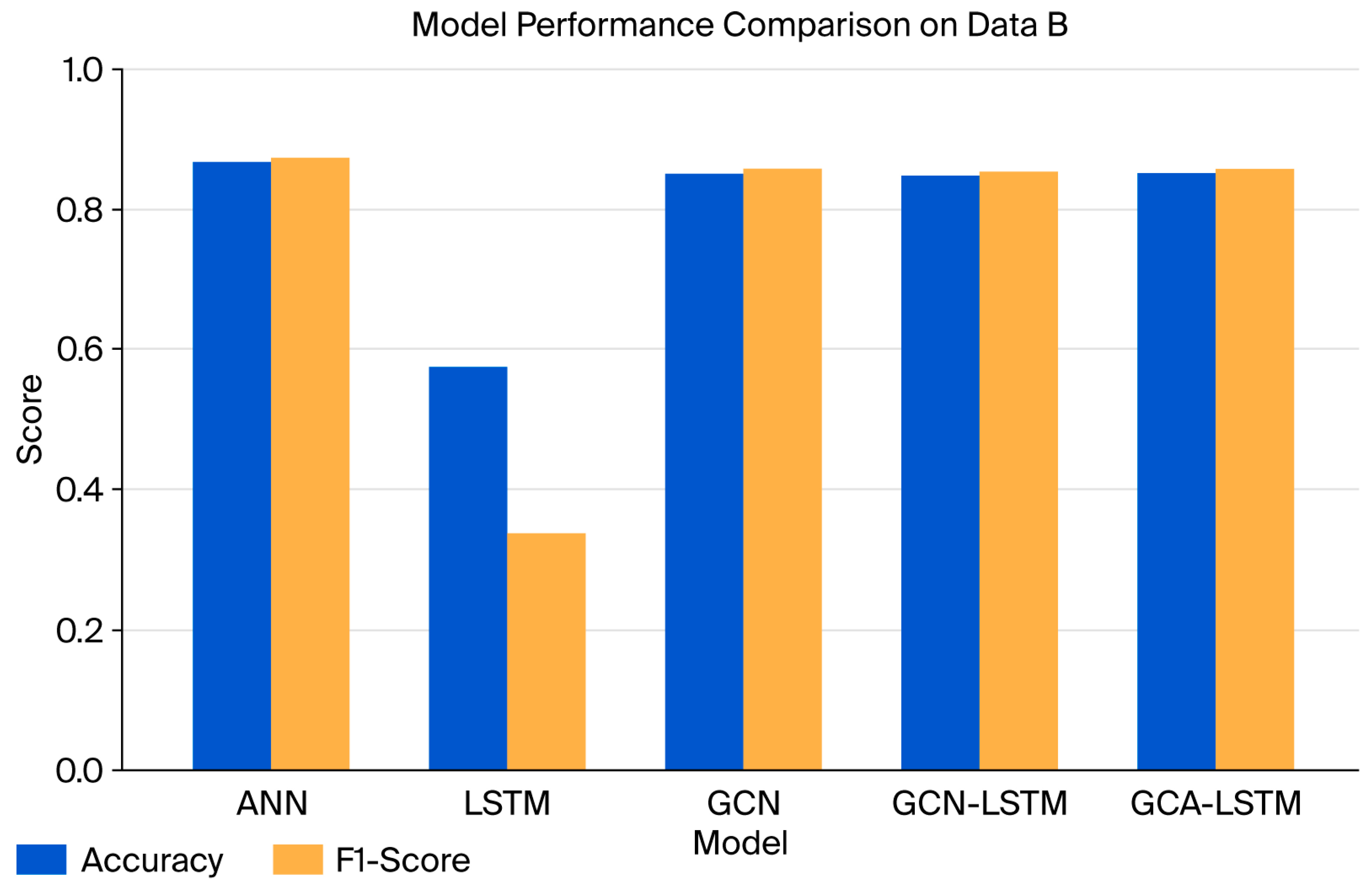

Table 5 and Table 6 summarize the results obtained in both runs for the recent deep learning-based models used for comparison.

The results demonstrate that the proposed model consistently outperformed the baselines in both experimental runs, achieving an accuracy above 85% and higher F1-scores on the larger evaluation dataset. Graph-based approaches reliably outperformed purely sequential or feature-engineering-based methods, highlighting the importance of incorporating structural relationships into URL classification tasks.

5.2. Performance Comparison

The proposed hybrid model was compared against baseline approaches that include both classical ML algorithms and recent DL architectures: six ML models (XG-Boost, SVM, LDA, KNN, QDA, and Gaussian NB) and the DL models LSTM, GCN, and GCN-LSTM.

5.2.1. Comparison Against GCN-LSTM

To assess the value of integrating attention mechanisms into graph-based models, a direct comparison was conducted between the proposed GCA-LSTM model and the GCN-LSTM baseline. GCN-LSTM combines graph convolutional layers with LSTM units to capture both local structures using GCN and temporal features using LSTM.

However, the proposed GCA-LSTM enhances this architecture by incorporating an attention mechanism, allowing the model to focus on the most informative parts of the input. While GCN-LSTM processes the input uniformly, the attention mechanism in GCA-LSTM prioritizes critical patterns, which improves classification accuracy.

Based on the experiments, GCA-LSTM consistently outperformed GCN-LSTM in both accuracy and F1-score across both datasets. This confirms the advantage of integrating attention to improve the model’s focus and adaptability to complex patterns, especially when classifying URL-based textual inputs.

5.2.2. Comparison Against Deep Learning Models

To further validate the impact of combining temporal and graph-based features, we compared GCA-LSTM to standalone deep learning models: LSTM and GCN.

LSTM: Although capable of modeling sequential dependencies, its performance was limited due to the non-sequential nature of URL data. URLs often encode structured patterns rather than time-based sequences, making LSTM less effective in this context. To ensure fair comparisons, all baseline models were trained under identical experimental settings, including the same input features (TF-IDF vectors), binary classification targets, and consistent train–test splits. The LSTM model exhibited notable training difficulties on Dataset B, likely due to the dataset’s high dimensionality and large size. Its sequential architecture and recurrent dependencies make it more sensitive to long input sequences and memory constraints, which may explain its lower performance in this context despite being trained under the same conditions as the other baseline models. Furthermore, unlike natural language, URLs are characterized by structural rather than linguistic dependencies, making graph-based and attention-driven approaches more effective for capturing their underlying patterns.

GCN: GCN captures structural relationships between data points but lacks the ability to model sequential context or prioritize important parts of the input without an attention mechanism.

The results showed that GCA-LSTM achieved superior performance compared to both LSTM and GCN individually, validating the benefit of combining attention-based enhancement with hybrid modeling strategies.

ANN: To establish a fair baseline for comparison, an Artificial Neural Network (ANN) was included as a simple reference model. The ANN represents a conventional feed-forward network, suitable for testing textual datasets and verifying the model’s learning efficiency under minimal architectural complexity. Although ANN achieved slightly higher accuracy on Dataset A, this outcome is expected given its simplicity and the dataset’s limited structural diversity. Nevertheless, the proposed GCA-LSTM demonstrated competitive performance while incorporating graph-based and sequential components, indicating its ability to generalize across both structural and temporal dependencies—an essential characteristic for real-world malicious URL analysis.
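The shared experimental setup described above (TF-IDF inputs and a consistent train-test split for every baseline) can be sketched as follows. This is an illustrative sketch only: the tokenization rule, the smoothed IDF variant, the 80/20 split ratio, the seed, and the toy URLs are assumptions and are not taken from the study.

```python
import math
import random
import re
from collections import Counter

def tokenize(url):
    """Split a URL into lowercase alphanumeric tokens (an assumed scheme)."""
    return [t for t in re.split(r"[^A-Za-z0-9]+", url.lower()) if t]

def fit_idf(token_lists):
    """Compute a smoothed inverse document frequency for each token."""
    n_docs = len(token_lists)
    doc_freq = Counter(tok for tokens in token_lists for tok in set(tokens))
    return {tok: math.log((1 + n_docs) / (1 + df)) + 1.0
            for tok, df in doc_freq.items()}

def tfidf_vector(tokens, idf, vocab):
    """Return a dense TF-IDF vector ordered by `vocab`; unseen tokens are ignored."""
    counts = Counter(tokens)
    return [counts[tok] * idf[tok] for tok in vocab]

def split_indices(n, test_ratio=0.2, seed=42):
    """Shuffle indices once with a fixed seed so every model sees the same split."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = max(1, int(n * test_ratio))
    return idx[n_test:], idx[:n_test]

# Hypothetical toy data: (URL, label) with 1 = malicious, 0 = benign.
samples = [
    ("http://example.com/login", 0),
    ("http://example.com/about", 0),
    ("http://secure-login.example.test/verify/account", 1),
    ("http://example.org/news/today", 0),
    ("http://free-prize.example.test/claim/login", 1),
]
train_idx, test_idx = split_indices(len(samples))

# Fit the vocabulary and IDF on the training portion only to avoid leakage.
train_tokens = [tokenize(samples[i][0]) for i in train_idx]
idf = fit_idf(train_tokens)
vocab = sorted(idf)
X_train = [tfidf_vector(toks, idf, vocab) for toks in train_tokens]
X_test = [tfidf_vector(tokenize(samples[i][0]), idf, vocab) for i in test_idx]
```

Reusing the same `train_idx` and `test_idx` for every model is what keeps the comparison between baselines fair.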

5.2.3. Comparison with Machine Learning Algorithms

In alignment with prior work, several traditional machine learning models were also used as baselines, including SVM, XG-Boost, LDA, QDA, KNN, and GaussianNB. Below is a brief description of each and a summary of their behavior on the dataset:

XG-Boost: A powerful gradient boosting method that performed strongly on the dataset due to its ability to handle structured data like TF-IDF representations of URLs [28]. It consistently ranked among the top-performing models.

SVM: Well-suited for high-dimensional text data, SVM also achieved competitive results and showed reliable performance in classification tasks [29].

LDA & QDA: These discriminant analysis methods performed reasonably well, especially LDA. QDA required more data to generalize effectively, which affected its consistency [30].

KNN: Performance dropped significantly due to the high dimensionality and large volume of the dataset, which KNN struggles with [31].

GaussianNB: This model assumes a Gaussian distribution of features, which does not align well with TF-IDF vectors, resulting in lower performance [32].

Overall, while some traditional models like XG-Boost and SVM delivered competitive results, especially on Dataset A, their performance declined as the dataset scale increased. Notably, models like QDA, KNN, and GaussianNB exhibited significant drops in accuracy and F1-score on Dataset B, suggesting limitations in handling high-dimensional, large-scale textual data.

In contrast, our proposed hybrid model consistently maintained high performance across both datasets, particularly excelling after dataset expansion. This highlights the robustness and scalability of the model, making it more suitable for real-world applications where data complexity and volume are significant.

5.2.4. Graph-Based Models

Both GCA-LSTM and GCN-LSTM, as graph-enhanced models, showed better alignment with the characteristics of the data. Their ability to handle structured relationships across different URL segments enabled better pattern extraction and classification accuracy. Additionally, the inclusion of attention in GCA-LSTM provided further advantage by guiding the model to focus on the most informative parts of the TF-IDF input, rather than treating all features equally.

Figure 4 and Figure 5 illustrate the accuracy and F1-scores obtained from various deep learning models, including the proposed hybrid model and the GCN-LSTM model. Accuracy and F1-score were selected for the comparative charts because they provide the most balanced and interpretable assessment of model performance in classification tasks.

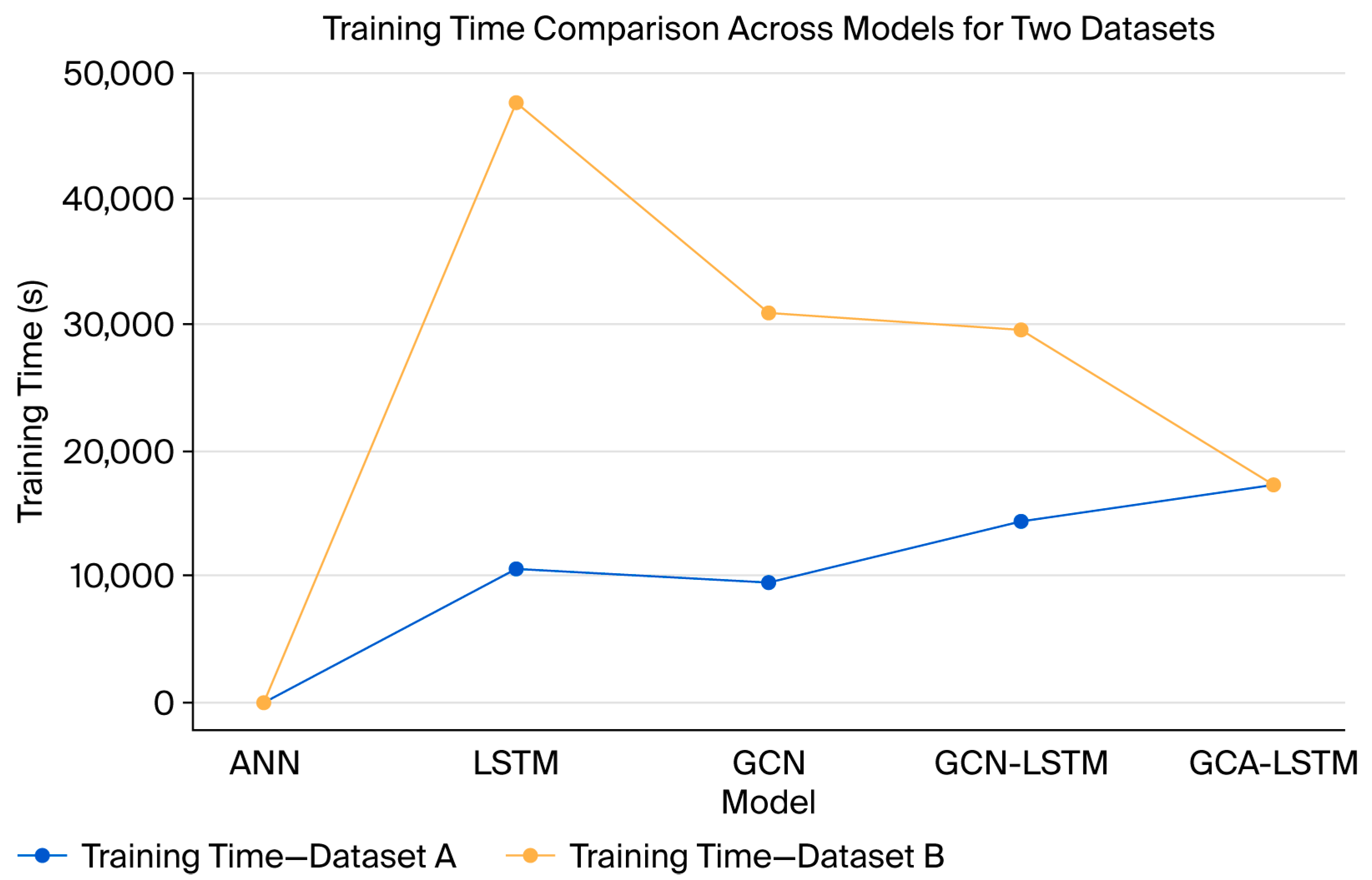
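For reference, accuracy and F1-score can be computed from the confusion counts of a binary classifier as in the short sketch below. The function names and the toy labels are illustrative assumptions, with label 1 taken to denote the malicious class.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def accuracy(y_true, y_pred):
    """Fraction of all predictions that are correct."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)

def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall; 0.0 if either is undefined."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Toy labels: 3 malicious and 5 benign URLs, with one miss and one false alarm.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(f"accuracy = {accuracy(y_true, y_pred):.3f}")
print(f"F1-score = {f1_score(y_true, y_pred):.3f}")
```

Accuracy summarizes overall correctness, while the F1-score balances missed malicious URLs against false alarms, which matters when the classes are imbalanced.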

5.2.5. Evaluation of Training Time

Following initial experiments, Dataset B was introduced to evaluate model scalability. This helped to assess whether increased data volume impacts performance and training dynamics.

As shown in Figure 6, the training time analysis revealed that while GCA-LSTM had a slightly higher training time on Dataset A, it demonstrated greater efficiency and faster convergence on Dataset B, outperforming models like GCN-LSTM in training speed. This suggests that the attention mechanism improves scalability and training efficiency as data complexity increases.
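The study does not state how training time was measured; one simple and common approach is to time the training call with a wall-clock timer, as in the hedged sketch below. The helper `time_training` and the stand-in workload `dummy_train` are hypothetical and only illustrate the measurement pattern.

```python
import time

def time_training(train_fn, *args, repeats=3):
    """Run `train_fn` several times; return (best_seconds, last_result)."""
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = train_fn(*args)
        best = min(best, time.perf_counter() - start)
    return best, result

def dummy_train(n_samples):
    """Stand-in for a real training loop; its cost grows with dataset size."""
    total = 0.0
    for i in range(n_samples):
        total += (i % 7) * 0.5
    return total

# Compare a smaller and a larger hypothetical workload.
for name, size in [("small", 10_000), ("large", 100_000)]:
    seconds, _ = time_training(dummy_train, size)
    print(f"{name}: {seconds:.4f} s")
```

Taking the best of several repeats reduces the influence of background load, although for long training runs a single timed run per model is often all that is practical.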

5.3. Discussion

The effective performance of the proposed hybrid architecture can be attributed to three key factors:

Structural modeling by GCN model: Effectively captures complex relationships within URL components that sequential models fail to capture.

Sequential dependency modeling by LSTM model: Preserves the order of characters, which is critical for detecting subtle malicious patterns.

Feature prioritization by Attention mechanism: Focuses on the most discriminative parts of the URL, improving robustness to noise and irrelevant tokens.
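As an illustration of the first factor, the sketch below implements one standard graph convolution step, H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W), in plain Python. It is a sketch under stated assumptions, not the architecture used in this study: the three-node chain graph, the feature values, and the weight matrix are hypothetical, and the attention and LSTM stages are omitted.

```python
import math

def normalized_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a square adjacency matrix without self-loops."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def gcn_layer(adj, features, weights):
    """One graph convolution: aggregate neighbours, project, apply ReLU."""
    propagated = matmul(normalized_adjacency(adj), features)
    projected = matmul(propagated, weights)
    return [[max(0.0, v) for v in row] for row in projected]

# Toy graph: three nodes in a chain 0 - 1 - 2 (e.g. hypothetical URL segments).
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = [[1.0, -1.0], [0.5, 1.0]]  # hypothetical; learned in a real model
hidden = gcn_layer(adj, features, weights)
for node, row in enumerate(hidden):
    print(node, row)
```

Each node's output mixes its own features with those of its neighbours, which is how a graph layer captures relationships that a purely sequential model would not see directly.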

Moreover, the results demonstrate that using a larger dataset in Run 2 led to more consistent model behavior and improved generalization performance, without relying on synthetic duplication techniques.

While the integration of GCN, attention, and LSTM significantly increased training time, especially during the second experimental run with a larger dataset, the resulting model achieved high classification accuracy. This indicates that, despite the higher training cost, the model remains well-suited for deployment in real-world and automated URL filtering systems.

Although this study specifically focused on applications related to URLs and web-based content, other domains have also adopted the spatial-temporal framework to address diverse prediction challenges, such as traffic flow forecasting [27], complex traffic network security [33], and environmental monitoring [34]. Our work stands alongside these studies by extending the spatial-temporal concept to contribute a novel perspective that can guide future research on malicious URL detection and model enhancement.

6. Conclusions and Future Work

In this paper, we proposed a hybrid deep learning framework for malicious URL classification that integrates Graph Convolutional Networks (GCN), an attention mechanism, and Long Short-Term Memory (LSTM) networks. The model leverages both structural and sequential representations of URLs, enabling more accurate and robust classification compared to traditional ML approaches and recent DL baselines. Experimental results on large-scale datasets demonstrated the effectiveness of the proposed model, achieving accuracy exceeding 99% and 85% across different datasets and consistently outperforming recent deep learning-based methods across multiple evaluation metrics. This study addresses the growing need to enhance URL filtering technologies, given the significant risks that malicious URLs pose to both users and security infrastructures. It explores the application of graph-based deep learning, particularly Graph Convolutional Networks (GCNs), due to their ability to model the structural characteristics of URLs. In addition, the study demonstrates how integrating structural learning, sequential modeling, and feature weighting within a unified architecture can enhance URL classification and support more effective filtering of malicious links. Beyond its experimental contributions, this work establishes a foundational baseline for future research, providing a flexible architecture that can be extended, combined, or adapted to advance the development of more robust solutions for malicious URL classification.

Although the current evaluation did not specifically test the model on shortened or dynamically generated URLs, these types of URLs often present unique challenges in real-world environments. Future work will therefore consider extending the model to handle such cases more effectively. One potential direction involves experimenting with additional data sources that incorporate HTML content and JavaScript-based features. Alongside this, future work will include general improvements in data preprocessing and validation strategies. These enhancements, combined with alternative feature extraction techniques, aim to determine the most effective approach for modeling and analyzing URL-related components. Lastly, future efforts will focus on deploying the model in real-time environments and evaluating its robustness against adversarial attacks, with the goal of assessing its practical readiness for use in cybersecurity systems.