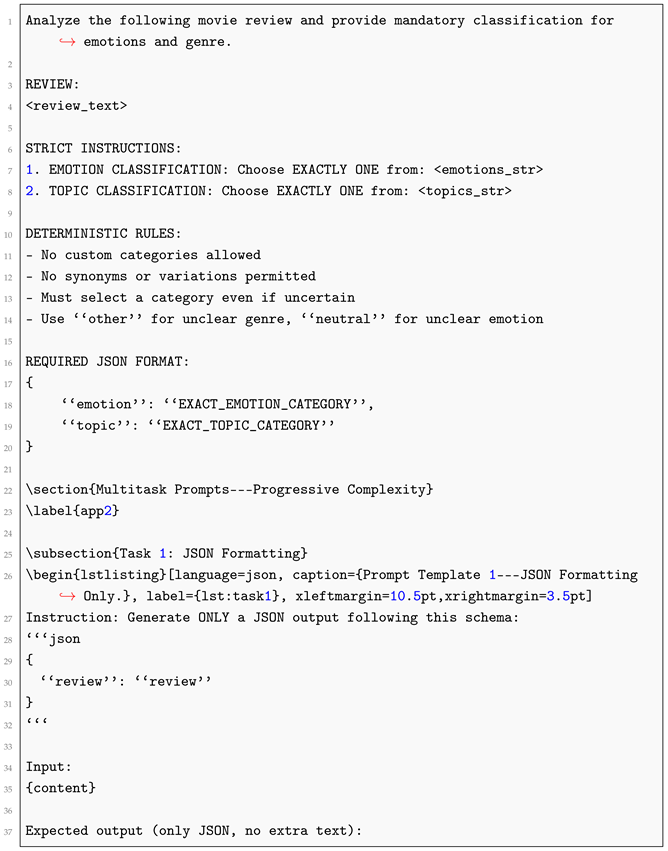

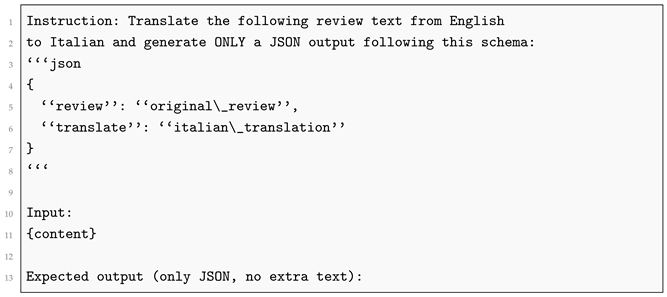

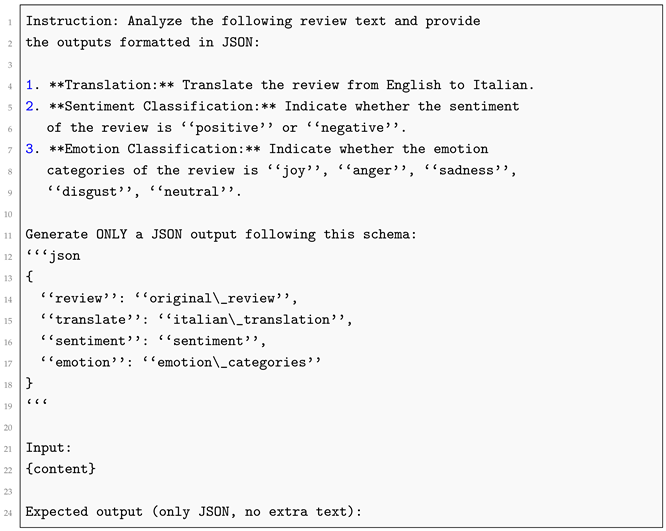

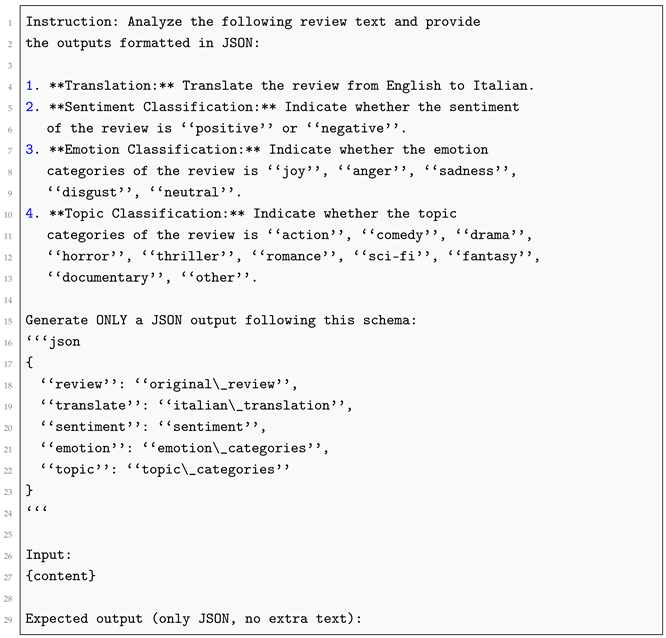

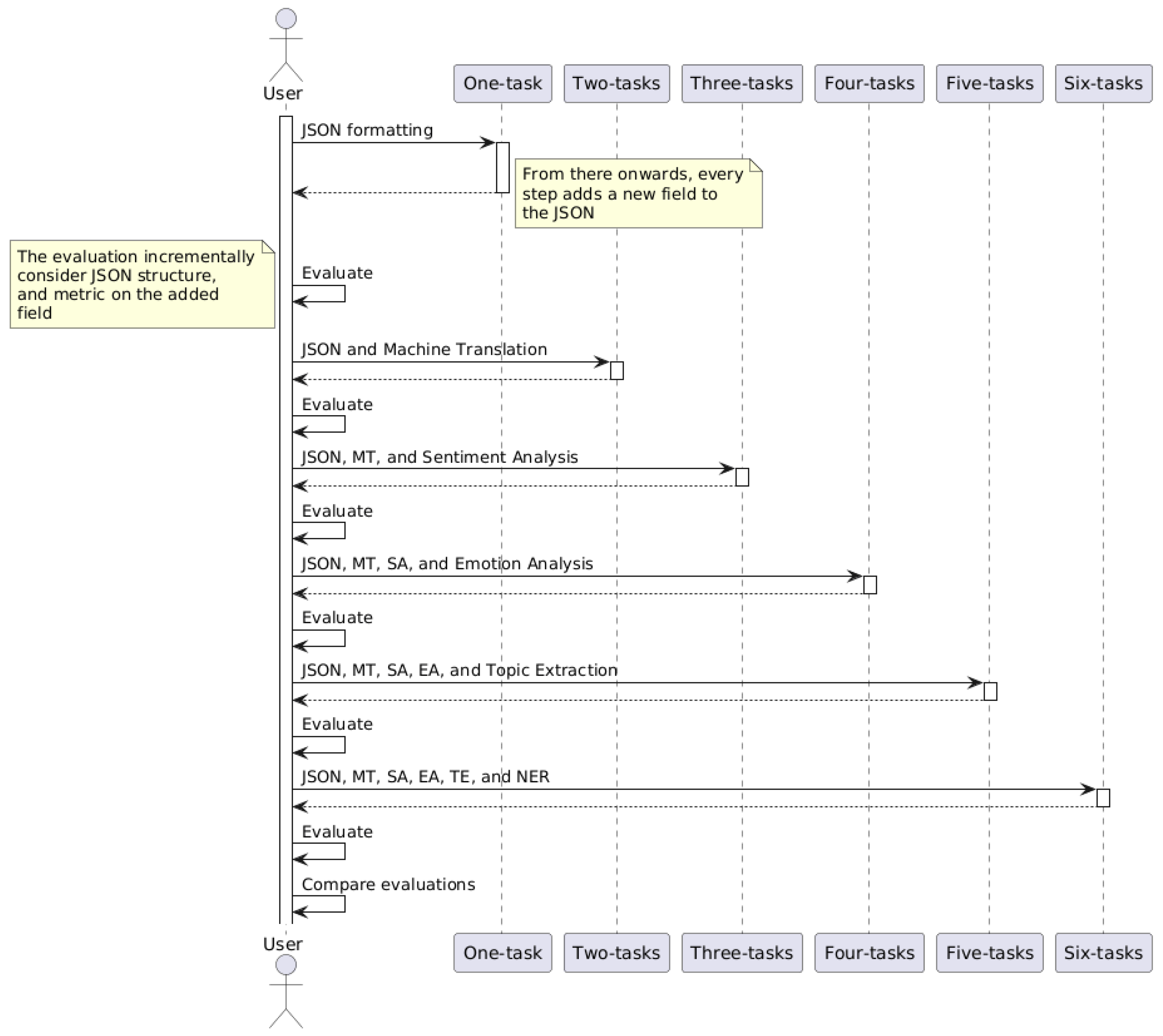

In this section, we present the empirical findings from our systematic evaluation of six representative LLMs under an incremental multitask prompting framework. The results are organized by model, with detailed performance metrics reported for each of the six NLP tasks as complexity increases. We focus on both absolute performance levels and relative degradation patterns, highlighting key trends and anomalies observed across different architectures.

3.1. Standard Order Results

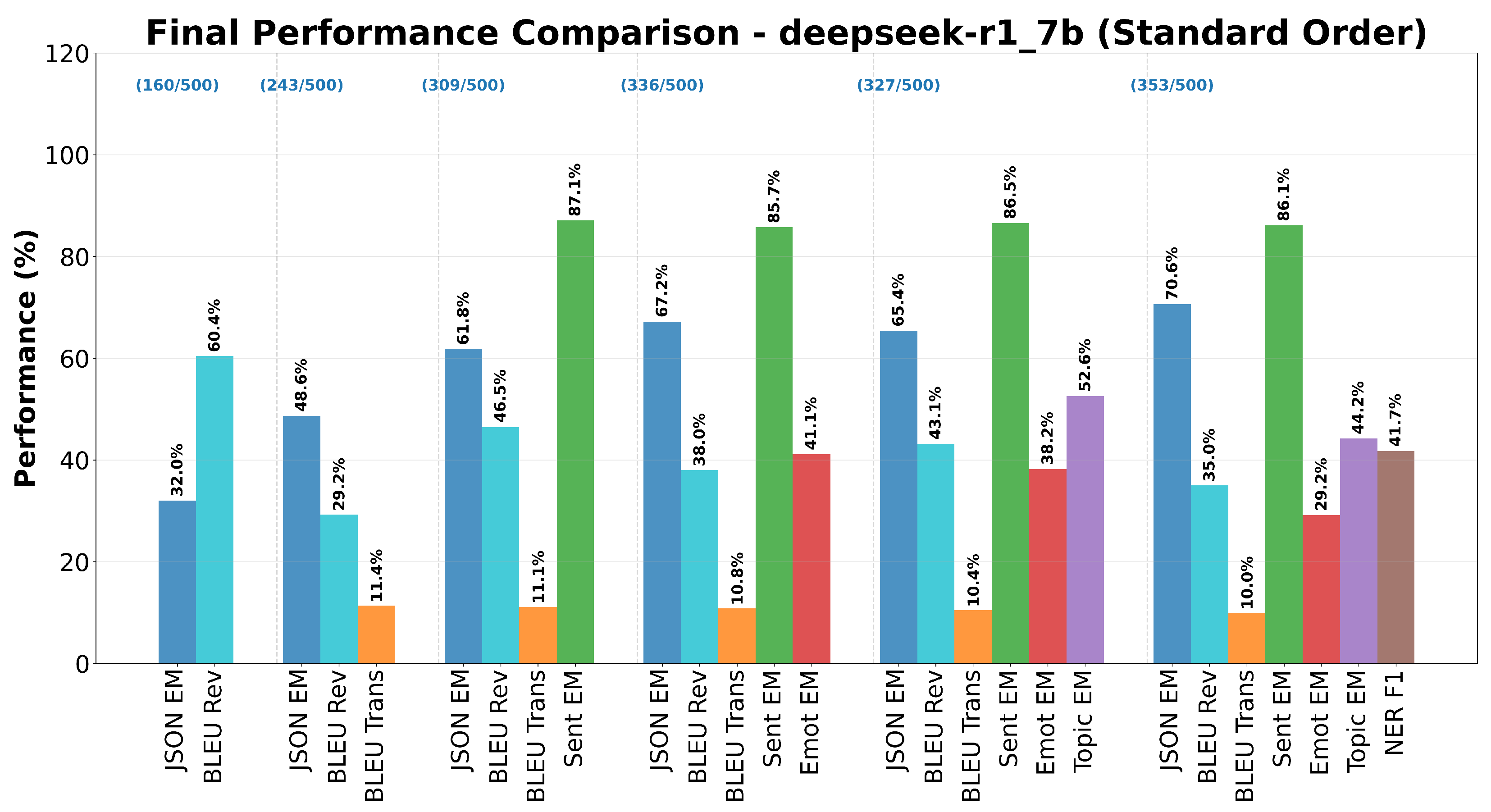

The DeepSeek R1 7B model demonstrated distinct performance characteristics across the six-task incremental evaluation framework. The model’s response to increasing prompt complexity revealed both architectural strengths and notable degradation patterns that warrant detailed examination.

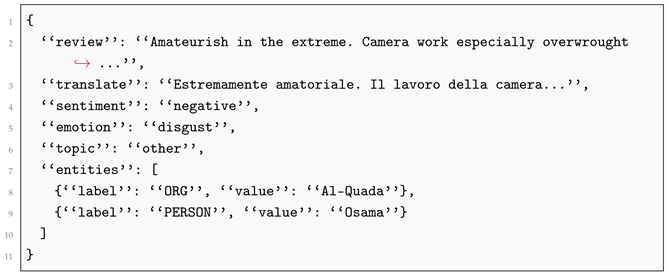

As shown in Figure 2, the model's JSON formatting accuracy improved substantially as task complexity increased, with exact match rising from the single-task condition (Task 1) to the complete six-task configuration (Task 6). The increase is not strictly monotonic: exact match drops between the fourth and fifth tasks, when Topic Extraction is added. This counterintuitive improvement suggests that the additional task constraints in multitask prompts may have provided structural guidance that helped the model maintain proper JSON formatting; the corresponding increase in valid JSON outputs from 160 to 353 out of 500 samples further supports this interpretation.
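The two JSON metrics discussed here (exact match and valid JSON count) can be sketched as follows. The section does not define them precisely, so this sketch assumes that an output is valid when it parses as JSON and that exact match compares the parsed output with a reference object; the function `json_metrics` and the sample outputs are hypothetical.

```python
import json


def json_metrics(outputs, references):
    """Return (valid_count, exact_match_rate) for parallel lists of raw model
    outputs (strings) and reference objects (already-parsed Python values).

    Assumption: "valid" means the string parses as JSON, and "exact match"
    means the parsed value equals the reference value exactly.
    """
    valid = 0
    exact = 0
    for raw, ref in zip(outputs, references):
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # unparseable output: neither valid nor an exact match
        valid += 1
        if parsed == ref:
            exact += 1
    rate = exact / len(references) if references else 0.0
    return valid, rate


# Hypothetical example: one correct output, one wrong value, one malformed string.
refs = [{"sentiment": "positive"}, {"sentiment": "negative"}, {"sentiment": "positive"}]
outs = ['{"sentiment": "positive"}', '{"sentiment": "positive"}', '{"sentiment": positive}']
print(json_metrics(outs, refs))  # → (2, 0.3333333333333333)
```

Under these assumptions an output can be valid without being an exact match, which is why the valid JSON count and the exact match score can move independently across configurations.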

In contrast, Machine Translation quality degraded consistently across task configurations: BLEU scores declined from Task 2 to Task 6. This pattern suggests that the MoE architecture may struggle to maintain translation quality when cognitive resources are distributed across multiple concurrent tasks.

The model showed remarkable stability in Sentiment Classification across all multitask configurations, with exact match accuracy varying only within a narrow range. This consistency indicates that sentiment analysis, a relatively straightforward binary classification task, remains robust to increasing prompt complexity in DeepSeek R1 7B.

Emotion Analysis showed significant susceptibility to task interference, with performance declining from Task 4 to Task 6.

Topic Extraction showed moderate resilience to increasing complexity, declining only modestly from Task 5 to Task 6.
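This section reports both relative decreases and percentage-point losses, which are easy to conflate. The sketch below states the usual definitions (relative change = (new - old) / old; percentage-point change = new - old for scores expressed in percent); the scores are hypothetical placeholders, not values from this study.

```python
def percentage_point_change(old_pct: float, new_pct: float) -> float:
    """Absolute change between two scores expressed in percent."""
    return new_pct - old_pct


def relative_change(old: float, new: float) -> float:
    """Change relative to the starting score, as a fraction (negative = decrease)."""
    if old == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (new - old) / old


# Hypothetical scores: a task drops from 40% (Task 4) to 30% (Task 6).
print(percentage_point_change(40.0, 30.0))  # → -10.0
print(relative_change(40.0, 30.0))          # → -0.25, i.e. a 25% relative decrease
```

The same 10-point loss from a 20% baseline would be a 50% relative decrease, so relative figures for different tasks are only comparable when read together with their baselines.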

Because NER is introduced only in Task 6, its F1 score in the complete six-task configuration is the only NER measurement and serves as the baseline for this most complex scenario.

Table 3 details the precision, recall, and F1 scores for each entity category. Notably, while precision for the PERSON category was relatively high, recall was substantially lower, indicating that the model was conservative in its entity predictions. Moreover, the ORG and LOC categories exhibited both low precision and low recall, resulting in low F1 scores. A total of 109 entities out of 3060 (about 3.6%) were classified as out of scope, indicating challenges in accurately identifying and categorizing named entities within the multitask context. This relatively modest performance suggests that NER, which requires precise linguistic analysis and categorization, may be particularly challenging in highly complex multitask contexts.
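Table 3 reports per-category precision, recall, and F1. Assuming the standard definitions computed from true positives (TP), false positives (FP), and false negatives (FN) per entity category, the scores can be obtained as in this sketch; the counts are hypothetical and chosen only to reproduce the high-precision, low-recall profile described for PERSON.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int  # predicted entities that match a gold entity of this category
    fp: int  # predicted entities with no matching gold entity
    fn: int  # gold entities the model missed


def prf1(c: Counts) -> tuple[float, float, float]:
    """Precision, recall and F1 for one entity category (0.0 when undefined)."""
    precision = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    recall = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts: few false positives but many misses -> conservative model.
person = Counts(tp=80, fp=10, fn=120)
p, r, f = prf1(person)
print(round(p, 3), round(r, 3), round(f, 3))  # → 0.889 0.4 0.552
```

A conservative predictor keeps FP small, which props up precision, while the large FN count drags recall and therefore F1 down.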

The degradation overview in Figure 3 reveals asymmetric interference patterns across tasks. While the structural task (JSON formatting) generally improved with increased complexity, semantic tasks (emotion analysis, translation) showed clear degradation.

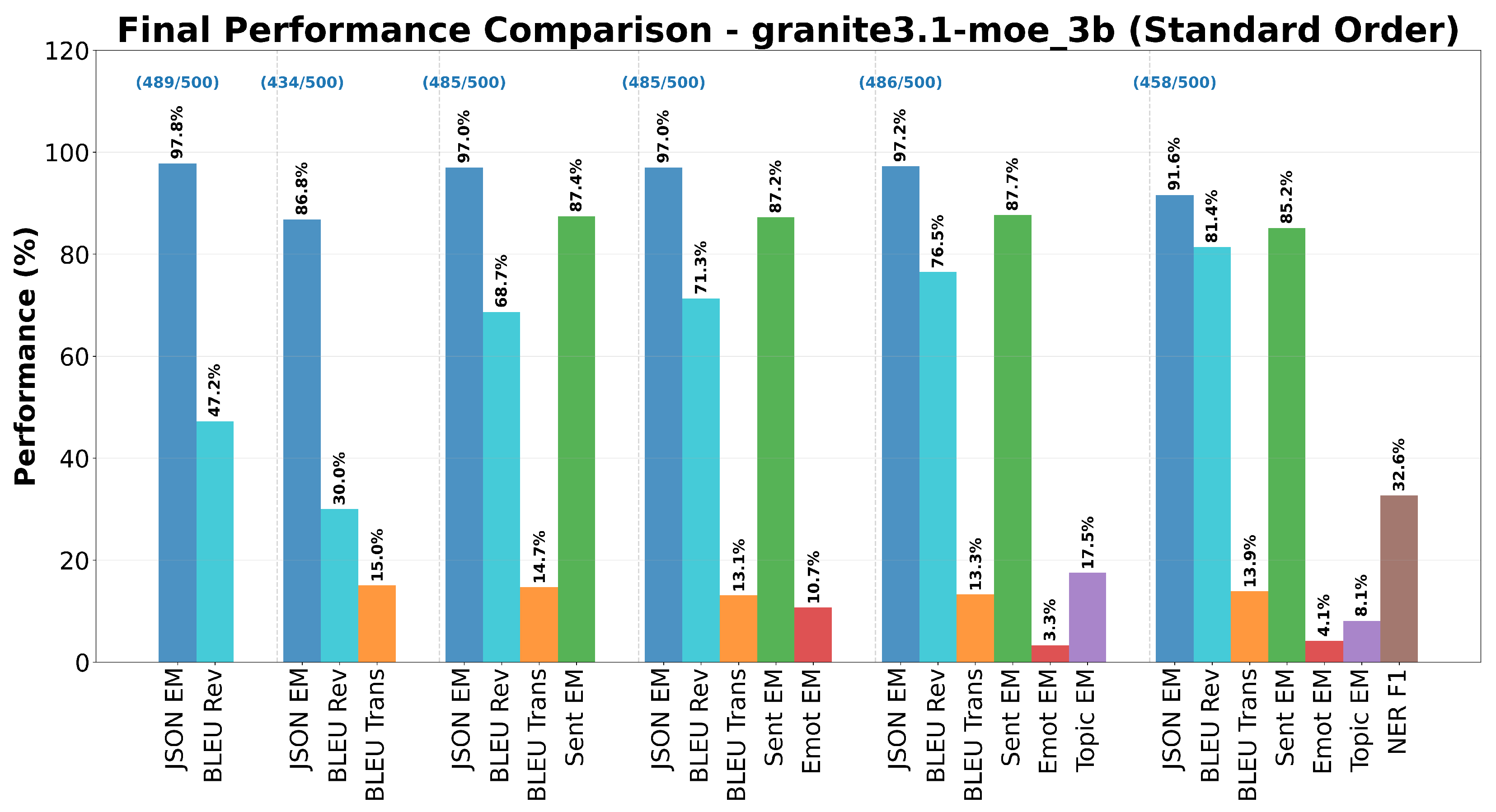

Figure 4 illustrates the performance of the Granite 3.1 3B model across the six-task incremental evaluation framework. The model achieved exceptionally high baseline JSON formatting performance in Task 1. However, performance varied as complexity increased: it dropped in Task 2, recovered and stayed consistently high (97.0 or above) through Tasks 3–5, and then declined in the final six-task configuration. This pattern suggests that the addition of NER interferes with JSON generation.

Machine Translation performance started from its Task 2 baseline and then remained relatively stable, with BLEU scores of roughly 13 or higher across Tasks 3–6.
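The BLEU scores in this section come from the study's evaluation pipeline, whose exact implementation and tokenization are not stated here. As a rough illustration only, the sketch below computes corpus-level BLEU-4 in its standard form (clipped n-gram precisions, geometric mean, brevity penalty), assuming whitespace tokenization, one reference per sentence, and no smoothing; established tools such as sacreBLEU apply their own tokenization, so scores from this sketch are not directly comparable with those reported.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) occurring in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: list[str], references: list[str], max_n: int = 4) -> float:
    """Corpus-level BLEU on a 0-100 scale with one reference per sentence,
    uniform n-gram weights, whitespace tokenization and no smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngram_counts(h, n), ngram_counts(r, n)
            # Clipping: an n-gram is credited at most as often as it occurs in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # without smoothing, any zero n-gram precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize hypotheses that are shorter than the references overall.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


print(corpus_bleu(["il gatto è sul tappeto"], ["il gatto è sul tappeto"]))  # → 100.0
```

Because BLEU is computed at corpus level, a few very short or truncated translations can lower the brevity penalty for the whole set, one mechanism by which multitask prompts could depress translation scores.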

Sentiment Classification remained robust across all multitask configurations, with consistently high exact match scores. This consistency shows that sentiment analysis is a well-preserved capability, resilient to the interference generated by increasing prompt complexity.

Emotion Analysis revealed severe limitations within the multitask framework, with extremely low scores across all configurations; these indicate fundamental difficulties in discriminating between emotional categories.

Topic Extraction showed constrained performance, with consistently low scores across tasks. These low levels indicate that genre-based thematic categorization is particularly challenging for this model, especially when combined with other semantic processing requirements.

The NER F1 score obtained in the complete six-task configuration indicates that entity extraction and categorization remain achievable within the model's architectural constraints, although the task represents a considerable computational challenge.

Table 4 details the precision, recall, and F1 scores for each entity category for Granite 3.1 3B. The PERSON category exhibited the highest precision but relatively low recall, indicating a conservative prediction strategy. As with DeepSeek R1 7B, the ORG and LOC categories showed low precision and recall, resulting in low F1 scores. A total of 46 entities out of 3424 (about 1.3%) were classified as out of scope, a smaller share than in other models such as DeepSeek R1 7B.

The performance profile reveals a clear hierarchy in the model's capability allocation: structural tasks (JSON formatting) and basic semantic classification (sentiment analysis) maintain high performance, while more nuanced semantic discriminations (emotion analysis, topic classification) show severe degradation. This pattern suggests a resource management strategy that prioritizes fundamental functionalities.

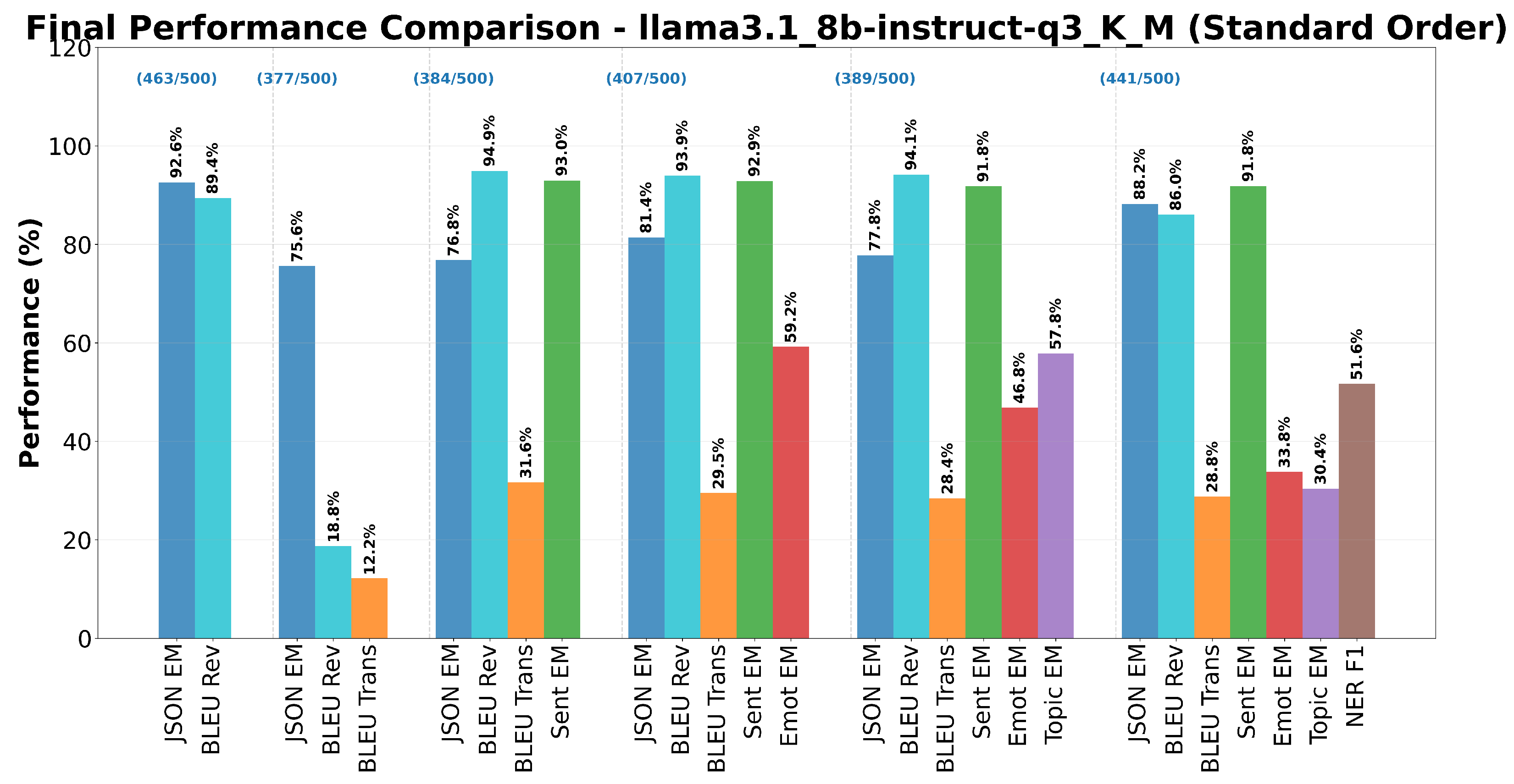

The Llama 3.1 8B model, representative of the traditional Transformer decoder-only architecture, demonstrated distinct performance patterns in the incremental evaluation framework, exhibiting differentiated behaviors across the various task types analyzed.

As Figure 5 shows, the model exhibited excellent initial JSON formatting performance in Task 1, indicating strong baseline capability for data structuring tasks. As complexity increased, however, performance first degraded and then partially recovered: it dropped in Task 2, stabilized at roughly 77 or higher in Tasks 3–5, and rose again in Task 6.

Machine Translation presented a particularly interesting pattern. After its initial BLEU score in Task 2, translation improved notably in Task 3 and then stabilized at around 28–29 points in subsequent tasks. This behavior suggests that the introduction of Sentiment Analysis may have provided additional semantic context that facilitates translation, pointing to positive synergies between semantically related tasks.

Sentiment Analysis maintained excellent and stable performance across all multitask configurations, with exact match scores in a narrow range. This consistency shows that sentiment classification is a robust, well-consolidated capability in Llama 3.1 8B, resistant to the interference generated by increasing prompt complexity.

Emotion Classification was markedly susceptible to increasing task complexity: performance decreased from Task 4 to Task 6, a substantial loss in percentage points. This significant degradation suggests that recognizing more nuanced emotional categories requires specialized attentional resources that are compromised by competition with other cognitively demanding tasks.

The Topic Extraction task degraded substantially from Task 5 to Task 6. This marked decrease indicates that thematic categorization, which requires high-level semantic analysis to identify film genres, is particularly vulnerable to interference when combined with NER.

In the complete six-task configuration, NER achieved a moderate F1 score. Table 5 shows the detailed metrics for each entity category identified by the model. The PERSON category exhibited high precision but moderate recall, indicating a tendency towards accurate but conservative entity recognition. The ORG and LOC categories showed lower precision and recall, and correspondingly lower F1 scores. A total of 43 entities out of 3073 (about 1.4%) were classified as out of scope, in line with the previous models. This performance, while moderate, represents what is attainable under maximum prompt complexity, where the model must simultaneously manage entity identification, semantic and emotional categorization, translation, and structural formatting.

The results summarized in Figure 6 reveal both positive and negative effects of task interactions in Llama 3.1 8B. The positive effect is the improvement in translation quality when sentiment analysis is introduced, suggesting that correlated semantic information can create beneficial synergies. However, negative effects prevail in tasks requiring fine-grained semantic discrimination, such as emotion analysis and topic classification, where competition for limited attentional resources leads to significant performance degradation. Overall, the Llama 3.1 8B results show that the decoder-only Transformer architecture behaves in complex ways under increasing task complexity, trading off structural maintenance against precision in specialized semantic processing.

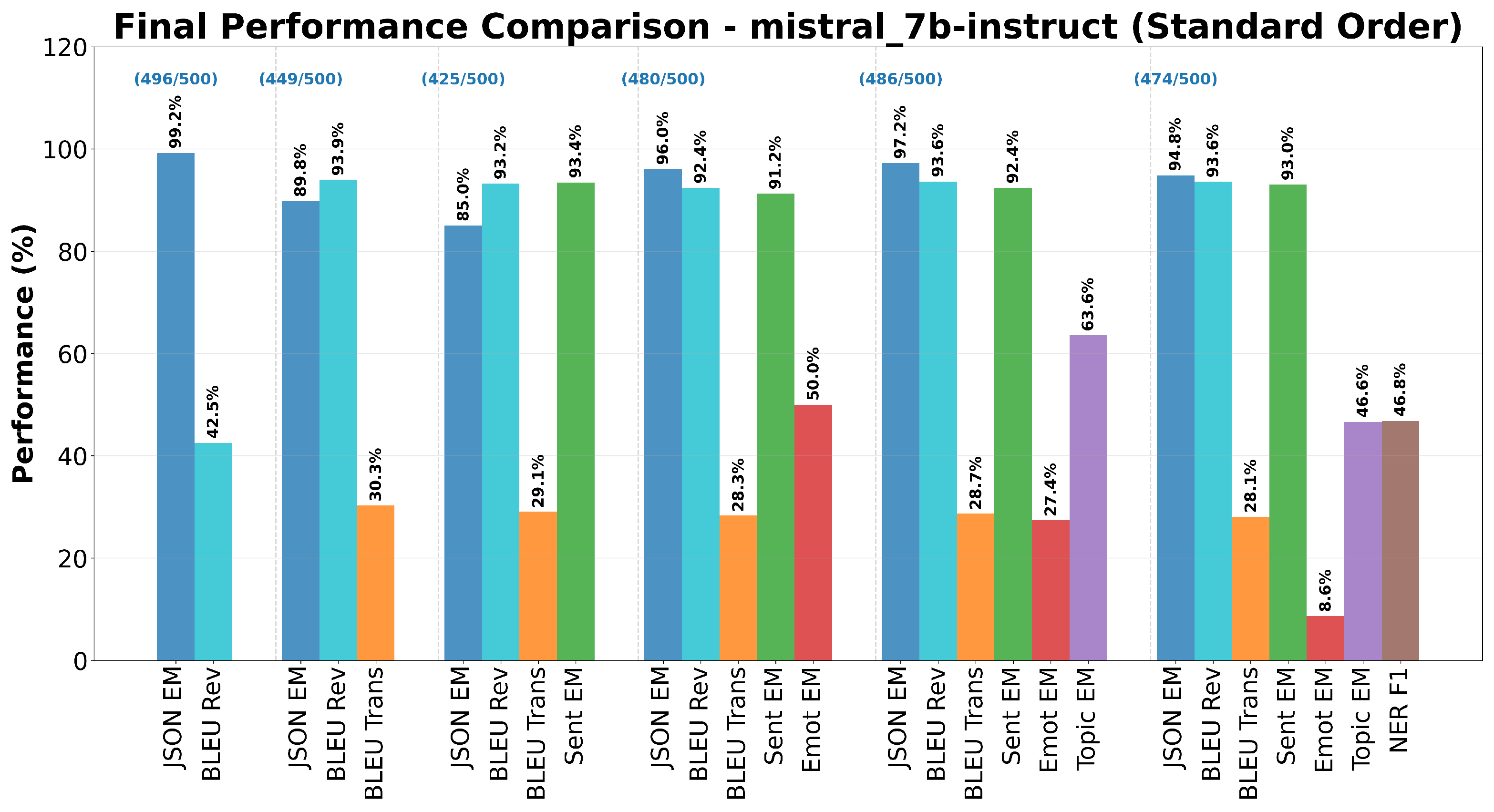

The Mistral 7B model demonstrated robust performance characteristics across the incremental evaluation framework, exhibiting distinctive patterns in handling multitask complexity with notable strengths in structural and semantic processing capabilities.

As reported in Figure 7, the model achieved an exceptional, nearly perfect baseline exact match in JSON formatting in Task 1. Performance varied somewhat across task configurations, dropping in Task 3 and then recovering substantially to maintain high levels through Tasks 4–6. The corresponding valid JSON count ranged from 425 to 496 samples, indicating that structural formatting remains a core strength even under increasing cognitive demands.

Machine Translation output remained consistently high in quality across all configurations: BLEU scores were stable from Task 2 through Task 6, showing minimal degradation despite increasing task complexity. This stability suggests robust translation capabilities that resist interference from concurrent semantic processing requirements.

Sentiment Analysis was highly stable across all multitask configurations, with exact match scores in a narrow range. This consistency indicates that binary sentiment classification is a well-consolidated capability within the architecture, maintaining high accuracy regardless of increases in prompt complexity.

Emotion Analysis varied significantly across task configurations. Performance peaked in Task 4 (four-task configuration) but decreased substantially in Task 6, a dramatic degradation coinciding with the introduction of Named Entity Recognition. This pattern suggests that emotion classification is particularly susceptible to resource competition from entity extraction.

Topic Extraction showed moderate performance with notable sensitivity to task complexity: scores dropped by 17 percentage points from Task 5 (five-task configuration) to Task 6, when NER was added. This degradation indicates that thematic categorization and entity recognition compete for similar cognitive resources.

Detailed Mistral 7B NER performance is summarized in Table 6. The NER F1 score in the complete six-task configuration indicates that the model can effectively identify and categorize named entities even within complex multitask contexts, although adding this task created notable interference with other semantic processing capabilities. Precision for the PERSON category was high, while recall was moderate, indicating a fairly balanced approach to entity recognition. The ORG and LOC categories exhibited lower precision and recall, and correspondingly lower F1 scores, confirming the general trend observed in other models. Mistral 7B produced the largest share of entities classified as out of scope, 118 out of 3135 (about 3.8%), indicating greater output variability despite the same temperature setting being used for every model.

The results reveal specific interference patterns between tasks, as illustrated in Figure 8. The introduction of NER in Task 6 caused the most significant disruption, particularly to emotion classification, while performance in other areas remained relatively stable. This suggests that entity extraction requires specialized attention mechanisms that compete directly with emotion processing. Structural tasks (JSON formatting) and fundamental semantic classification (sentiment analysis) remain robust, whereas more specialized semantic discrimination (emotion analysis) is sensitive to resource competition, particularly when combined with linguistically demanding tasks such as NER.

Despite showing some degradation in specific areas, the model maintained functional performance across all evaluated tasks simultaneously. The ability to achieve moderate-to-high performance across six diverse NLP tasks concurrently demonstrates the architectural capacity for complex multitask processing, albeit with predictable trade-offs in specialized semantic processing capabilities. The Mistral 7B results demonstrate a balanced approach to multitask processing, with strong foundational capabilities in structural formatting and basic semantic classification, while showing selective vulnerability in fine-grained semantic discriminations under resource competition scenarios.

Figure 9 illustrates the performance of the Gemma 3 4B model across the six-task incremental evaluation framework. The model achieved high baseline JSON formatting performance in Task 1. Performance was relatively stable across configurations, remaining consistent through Task 5 with a slight decline in Task 6. The number of valid JSON samples varied from 464 to 484, indicating that structural formatting is a consolidated capability even under increasing cognitive complexity.

Machine Translation showed an interesting pattern: after the initial BLEU score in Task 2, performance remained substantially stable, at roughly 40 BLEU points or higher, across subsequent configurations. Translation quality stayed relatively high, suggesting robust translation capabilities that moderately resist interference from increasing task complexity.

Sentiment Analysis remained consistently high across all multitask configurations, with exact match scores in a narrow range. This consistency indicates that sentiment analysis is a well-preserved capability within the architecture, maintaining high accuracy despite increasing prompt complexity.

Emotion Analysis revealed a dramatic vulnerability to increasing task complexity: performance decreased from Task 4 to Task 6, an almost total loss of the ability to discriminate between emotions.

Topic Extraction degraded equally severely from Task 5 to Task 6. This collapse suggests that genre-based thematic categorization competes directly for the same cognitive resources required by Named Entity Recognition.

NER achieved a relatively high F1 score in the complete six-task configuration, indicating that the model maintains effective named entity identification and categorization, but at the cost of severe compromises in other specialized semantic capabilities. As reported in Table 7, Gemma 3 4B exhibited the highest precision across all entity categories, particularly for PERSON and LOC. However, recall was moderate to low, especially for ORG. Gemma 3 4B classified 85 entities out of 3228 (about 2.6%) as out of scope, in line with previous models. Its performance profile reveals a pronounced trade-off between maintaining core structural and basic semantic capabilities and performing specialized semantic discriminations, while entity recognition itself remains conservative: the recall rates indicate that many entities go undetected.

While structural tasks (JSON formatting), basic semantics (sentiment), and translation maintain reasonable performance, tasks requiring fine-grained semantic discriminations (emotions, topics) suffer devastating interference. This suggests a rigid hierarchy in cognitive resource management.

Figure 10 illustrates the degradation patterns observed in the Gemma 3 4B model. The analysis highlights how the Gemma 3 4B architecture rapidly reaches operational limits when confronted with multiple tasks requiring sophisticated semantic processing. The ability to maintain some functionalities while others collapse completely indicates binary rather than graduated resource management. The Gemma 3 4B results demonstrate an architecture with strong fundamental capabilities but severe limitations in simultaneously managing complex semantic tasks, evidencing drastic trade-offs rather than gradual degradations under incremental cognitive pressure.

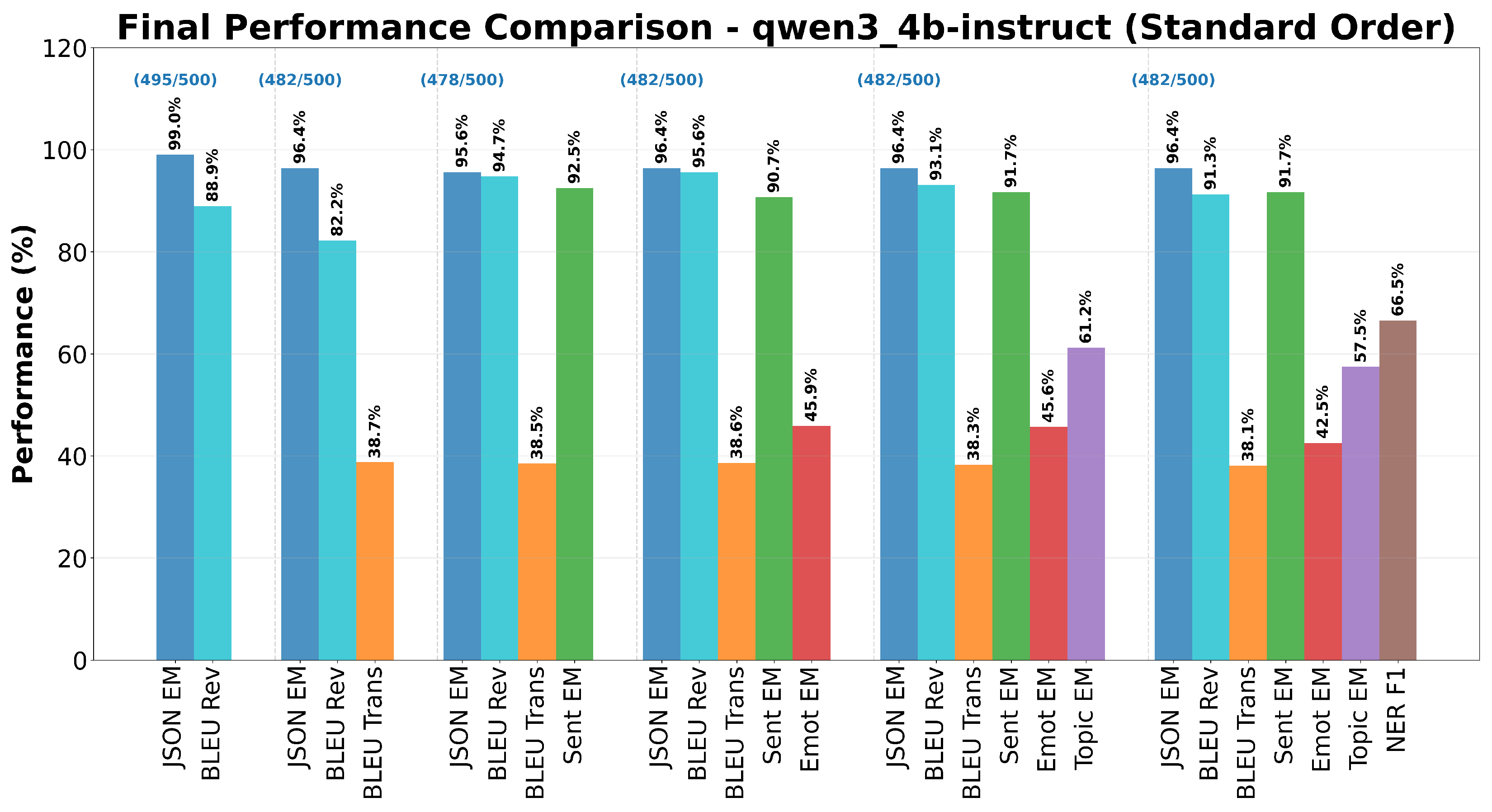

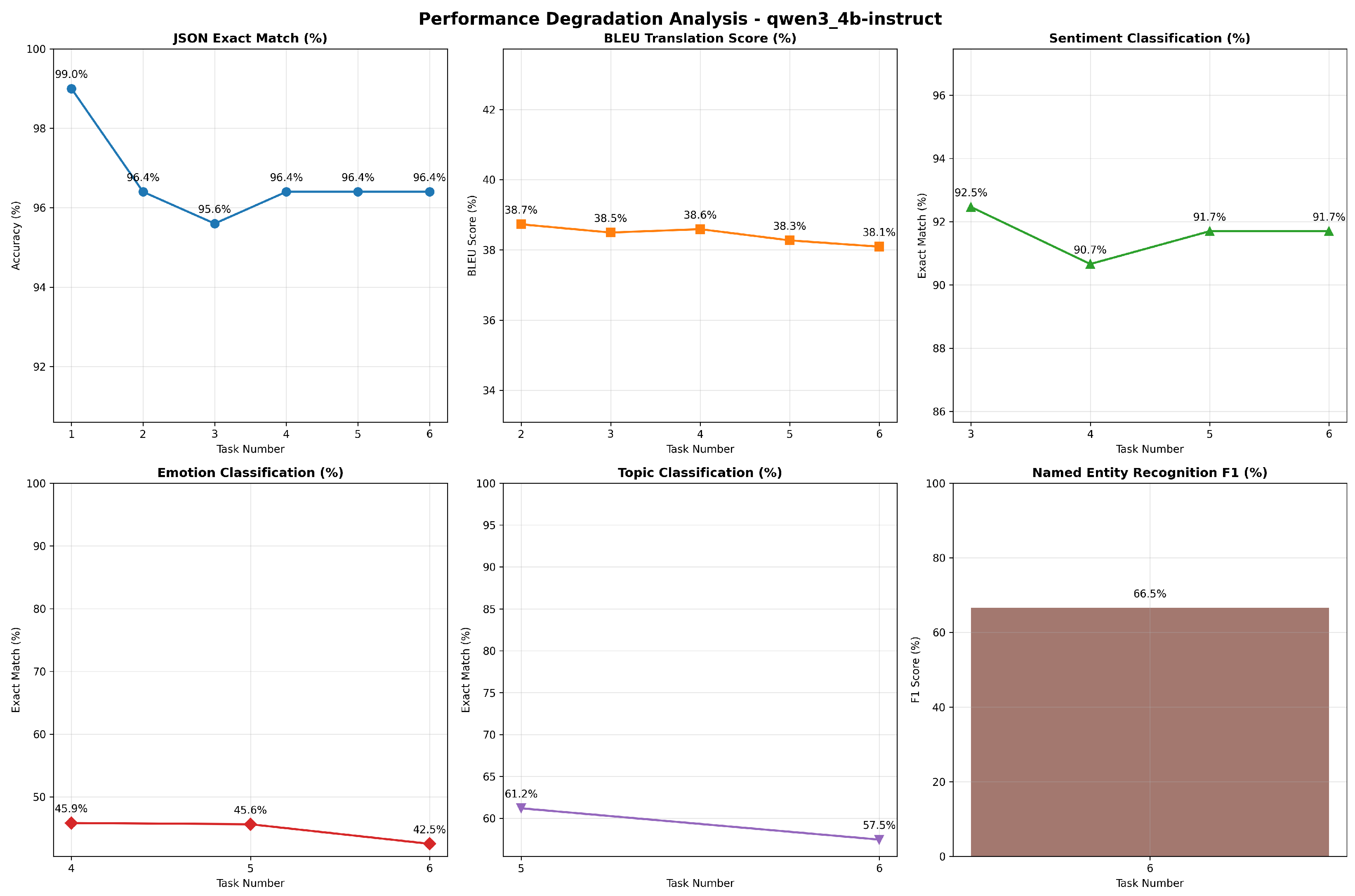

3.1.1. Qwen3 4B

Figure 11 illustrates the performance of the Qwen3 4B model across the six-task incremental evaluation framework. The model achieved a near-perfect baseline exact match in JSON formatting in Task 1, the highest initial structural formatting capability observed. Performance remained remarkably stable across all configurations throughout Tasks 2–6, with the valid JSON count consistently between 478 and 495 samples, indicating that structural formatting is an exceptionally robust capability that resists interference from concurrent cognitive demands.

Machine Translation output was consistently high in quality and notably stable: BLEU scores remained at roughly 38 points or higher across all multitask configurations from Task 2 through Task 6, with minimal variation. This stability suggests that translation capabilities are well insulated from interference by additional semantic processing requirements.

Sentiment Analysis was highly stable across all multitask configurations, with minimal variation in exact match scores. This indicates that binary sentiment classification is a highly consolidated capability within the architecture, strongly resistant to degradation under increasing prompt complexity.

Emotion Analysis showed moderate but consistent performance, remaining stable from Task 4 through Task 6 and demonstrating resilience to the interference effects that severely impacted other models. This stability suggests effective resource management for fine-grained emotional discrimination.

Topic Extraction remained robust, decreasing only marginally from Task 5 to Task 6. This minimal degradation indicates that thematic categorization remains well preserved even when combined with Named Entity Recognition, suggesting efficient allocation of cognitive resources.

Finally, as detailed in Table 8, Qwen3 4B shows the highest recall scores across all models, particularly for the PERSON and LOC categories, indicating a more balanced approach to entity recognition that captures a larger proportion of true entities. Only 14 entities out of 3322 (about 0.4%) were classified as out of scope, the lowest among all evaluated models, indicating superior precision in entity recognition within the multitask context. NER achieved a strong F1 score in the complete six-task configuration, representing the highest performance level among all evaluated capabilities. This indicates that the model maintains excellent entity identification and categorization abilities even under maximum cognitive load.
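The out-of-scope counts reported for each model can be converted into comparable percentages with simple arithmetic. The sketch below uses only the counts stated in this section and ranks the models; the helper name `out_of_scope_rates` is illustrative.

```python
# Out-of-scope entity counts and entity totals as reported in Section 3.1.
OUT_OF_SCOPE = {
    "DeepSeek R1 7B": (109, 3060),
    "Granite 3.1 3B": (46, 3424),
    "Llama 3.1 8B": (43, 3073),
    "Mistral 7B": (118, 3135),
    "Gemma 3 4B": (85, 3228),
    "Qwen3 4B": (14, 3322),
}


def out_of_scope_rates(table: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each model to the percentage of its entities labelled out of scope."""
    return {model: 100 * k / n for model, (k, n) in table.items()}


rates = out_of_scope_rates(OUT_OF_SCOPE)
for model, pct in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{model:15s} {pct:5.2f}%")
```

Sorted in ascending order, the shares are about 0.42% (Qwen3 4B), 1.34% (Granite 3.1 3B), 1.40% (Llama 3.1 8B), 2.63% (Gemma 3 4B), 3.56% (DeepSeek R1 7B) and 3.76% (Mistral 7B), consistent with Qwen3 4B having the lowest and Mistral 7B the highest out-of-scope rate.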

The results shown in Figure 12 reveal exceptional architectural stability under increasing task complexity. Unlike other models, which showed dramatic degradation in specific areas, Qwen3 4B maintained functional performance across all evaluated tasks simultaneously, with gradual rather than catastrophic degradation. This profile points to highly effective resource allocation within the architecture: the model sustains high performance across diverse task types without the severe trade-offs observed in other architectures, suggesting sophisticated attention mechanisms and resource distribution. The absence of catastrophic interference indicates robust parallel processing that effectively manages competing demands for attention and processing resources. Overall, the Qwen3 4B results describe an architecture well suited to multitask scenarios, with exceptional stability across diverse task types even under maximum cognitive complexity.

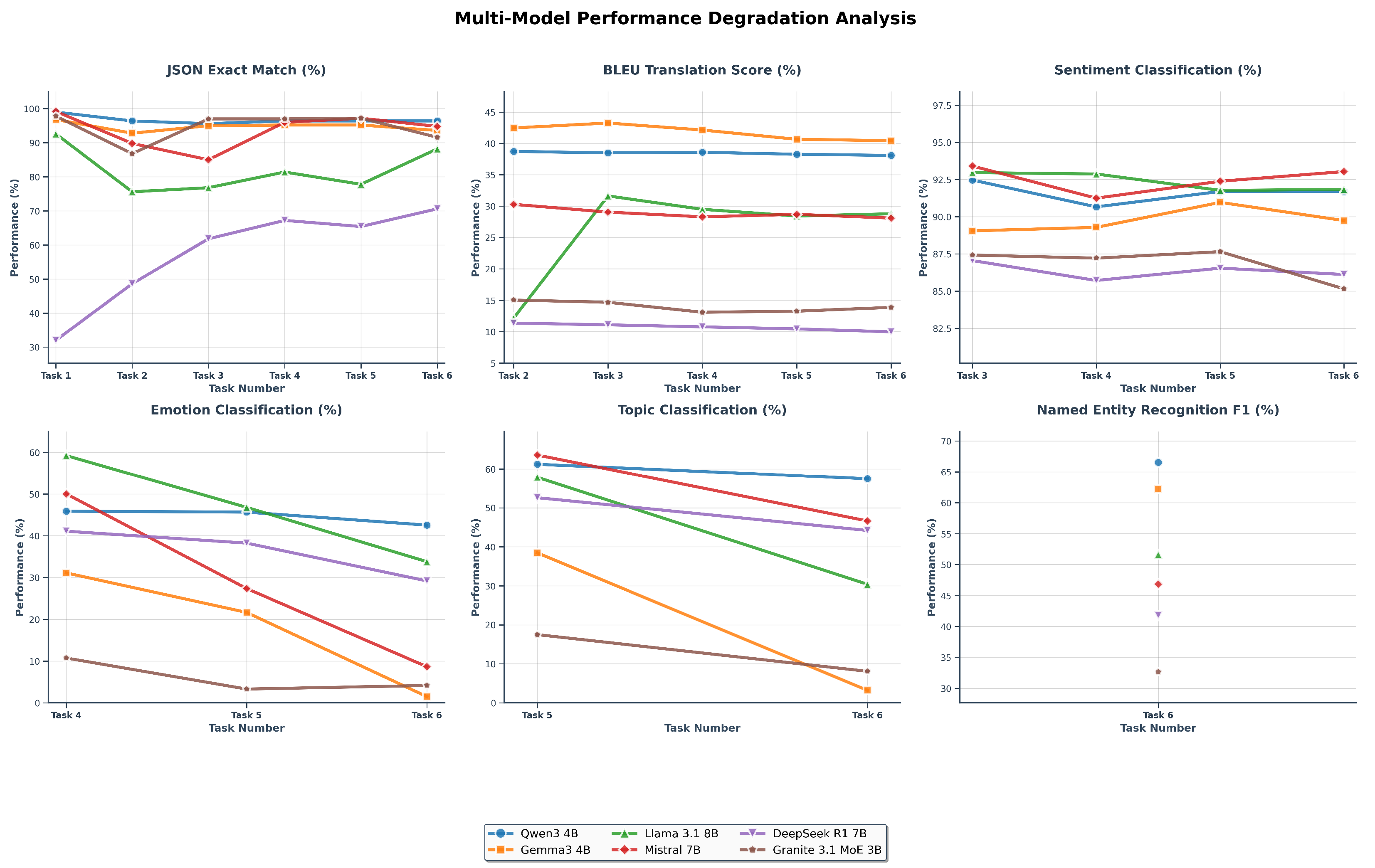

3.1.2. Overall Performance Comparison

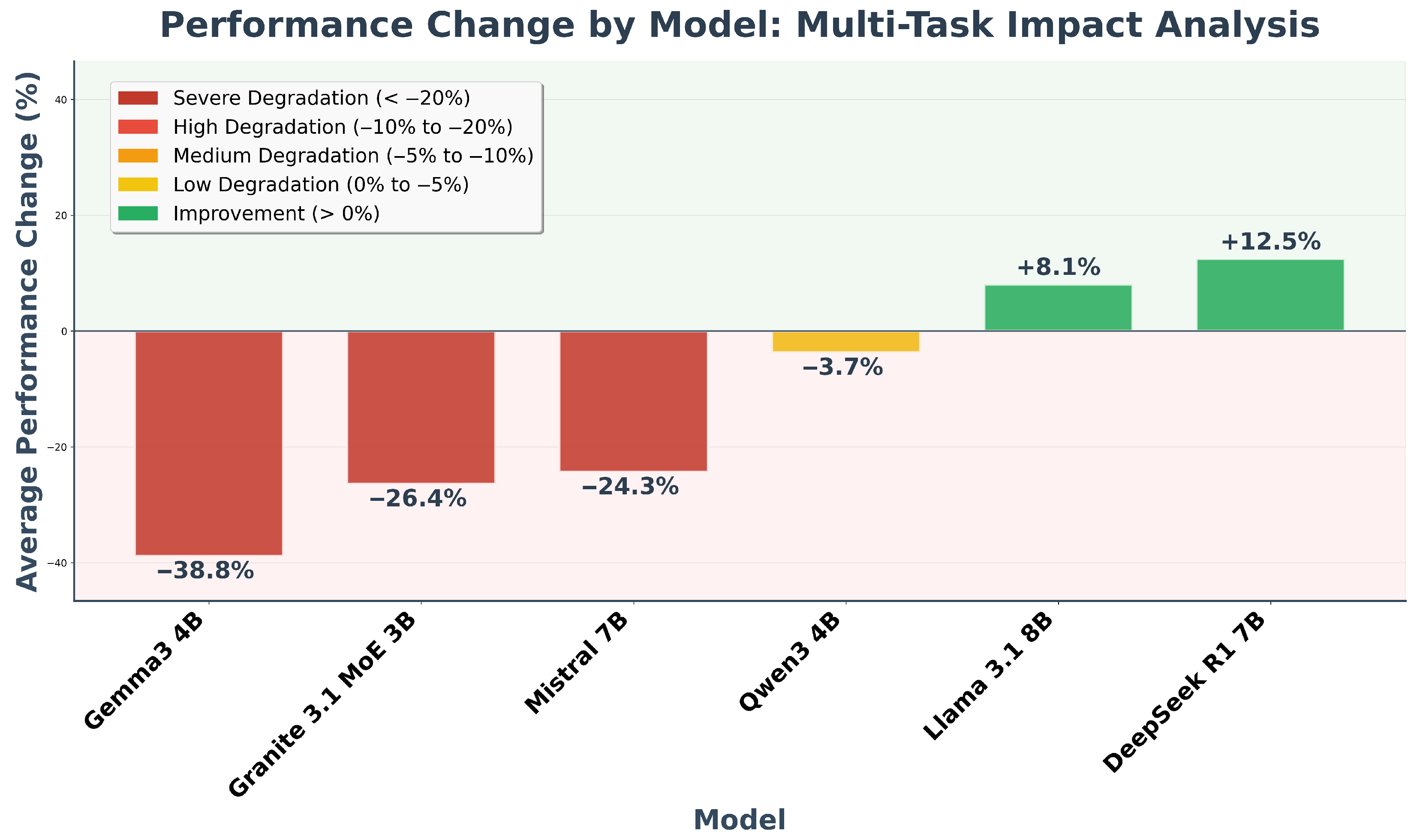

The multi-model performance analysis in Figure 13 reveals a high degree of heterogeneity in how language models handle task sequence transitions. The task-specific view shows that performance degradation is not uniform across tasks. While highly structured generative tasks such as JSON Exact Match remain relatively stable, classification tasks, particularly Emotion Classification and Topic Extraction, are highly susceptible to significant performance drops as models progress through the task sequence. This suggests that the complexity of the task structure and the potential for catastrophic forgetting are major determinants of multitask performance vulnerability. The vulnerability is most pronounced in smaller models such as Gemma 3 4B and Granite 3.1 MoE 3B, which show severe degradation from the initial to the final task.

In contrast, the overall performance change analysis in Figure 14 highlights a critical split in model behavior. A subset of models, most notably DeepSeek R1 7B and Llama 3.1 8B, exhibited an average performance improvement across the task sequence. This counter-intuitive outcome suggests significant positive transfer or knowledge synergy within their architectures and training methodologies, where the presence of earlier tasks in the prompt implicitly benefits performance on later tasks. The stability of Qwen3 4B further emphasizes that model architecture and training regimen are pivotal factors governing multitask resilience, polarizing outcomes between catastrophic degradation and beneficial knowledge transfer.
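Figure 14 summarizes each model's overall performance change, but the aggregation is not spelled out in this section. One plausible reading, assumed here for illustration only, is the mean over tasks of the difference between a task's score in its first configuration and in the final one, with all metrics on a comparable 0-100 scale. The helper `overall_change` and the scores below are hypothetical.

```python
from statistics import mean


def overall_change(first: dict[str, float], final: dict[str, float]) -> float:
    """Mean per-task change (final - first) over tasks scored in both configs.

    Assumes every metric (exact match, BLEU, F1) is on a comparable 0-100 scale;
    raises statistics.StatisticsError if the two dicts share no task.
    """
    shared = sorted(first.keys() & final.keys())
    return mean(final[task] - first[task] for task in shared)


# Hypothetical scores: one large gain can outweigh two smaller losses.
first = {"json": 30.0, "translation": 40.0, "emotion": 50.0}
final = {"json": 70.0, "translation": 35.0, "emotion": 30.0}
print(overall_change(first, final))  # → 5.0
```

Under this reading, a positive average can coexist with sizeable losses on individual tasks (here a 20-point drop on emotion), so an average improvement is best interpreted alongside the per-task view in Figure 13.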

3.2. Inverted Order Results

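To assess whether task sequence influences multitask performance, we conducted an additional experiment in which the order of task introduction was rearranged (hereafter, the inverted order): JSON Formatting stays first, Machine Translation moves from second to last, and each remaining task moves up one position. The inverted sequence was as follows: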

JSON Formatting: kept as the first task because it is a precondition for the subsequent tasks.

Sentiment Analysis: moved from third to second place.

Emotion Analysis: moved from fourth to third place.

Topic Extraction: moved from fifth to fourth place.

NER: moved from sixth (last) to fifth place.

Machine Translation (English-to-Italian): moved from second to sixth and last place.

The sequence was chosen to test the hypothesis that placing structurally simpler tasks earlier might alleviate cognitive load and improve overall performance. In this section, we analyze the results obtained with the inverted task order for the same models evaluated previously.
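The incremental protocol and the two task orders can be summarized in a short sketch, assuming, as the Task 1 to Task 6 labels in this section imply, that configuration k contains the first k tasks of the chosen order. The task lists follow the text; the prompt template in `build_prompt` is a hypothetical stand-in, since the actual prompts are not reproduced in this section.

```python
# Task orders as described in Sections 3.1 and 3.2.
STANDARD_ORDER = [
    "JSON Formatting", "Machine Translation", "Sentiment Analysis",
    "Emotion Analysis", "Topic Extraction", "NER",
]
INVERTED_ORDER = [
    "JSON Formatting", "Sentiment Analysis", "Emotion Analysis",
    "Topic Extraction", "NER", "Machine Translation",
]


def incremental_configs(order: list[str]) -> list[list[str]]:
    """Configuration k (Task k) bundles the first k tasks of the given order."""
    return [order[:k] for k in range(1, len(order) + 1)]


def build_prompt(tasks: list[str], text: str) -> str:
    """Hypothetical prompt template: one numbered instruction per task."""
    steps = "\n".join(f"{i}. {task}" for i, task in enumerate(tasks, start=1))
    return f"Perform the following tasks on the input text:\n{steps}\n\nInput: {text}"


# The inverted order moves Machine Translation to the end; it is not a strict reversal.
assert INVERTED_ORDER == STANDARD_ORDER[:1] + STANDARD_ORDER[2:] + STANDARD_ORDER[1:2]

for k, (std, inv) in enumerate(zip(incremental_configs(STANDARD_ORDER),
                                   incremental_configs(INVERTED_ORDER)), start=1):
    print(f"Task {k}: standard adds {std[-1]!r}, inverted adds {inv[-1]!r}")
```

Both orders end in the same six-task bundle, so any difference in final scores comes from the position of each instruction within the prompt rather than from the set of tasks.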

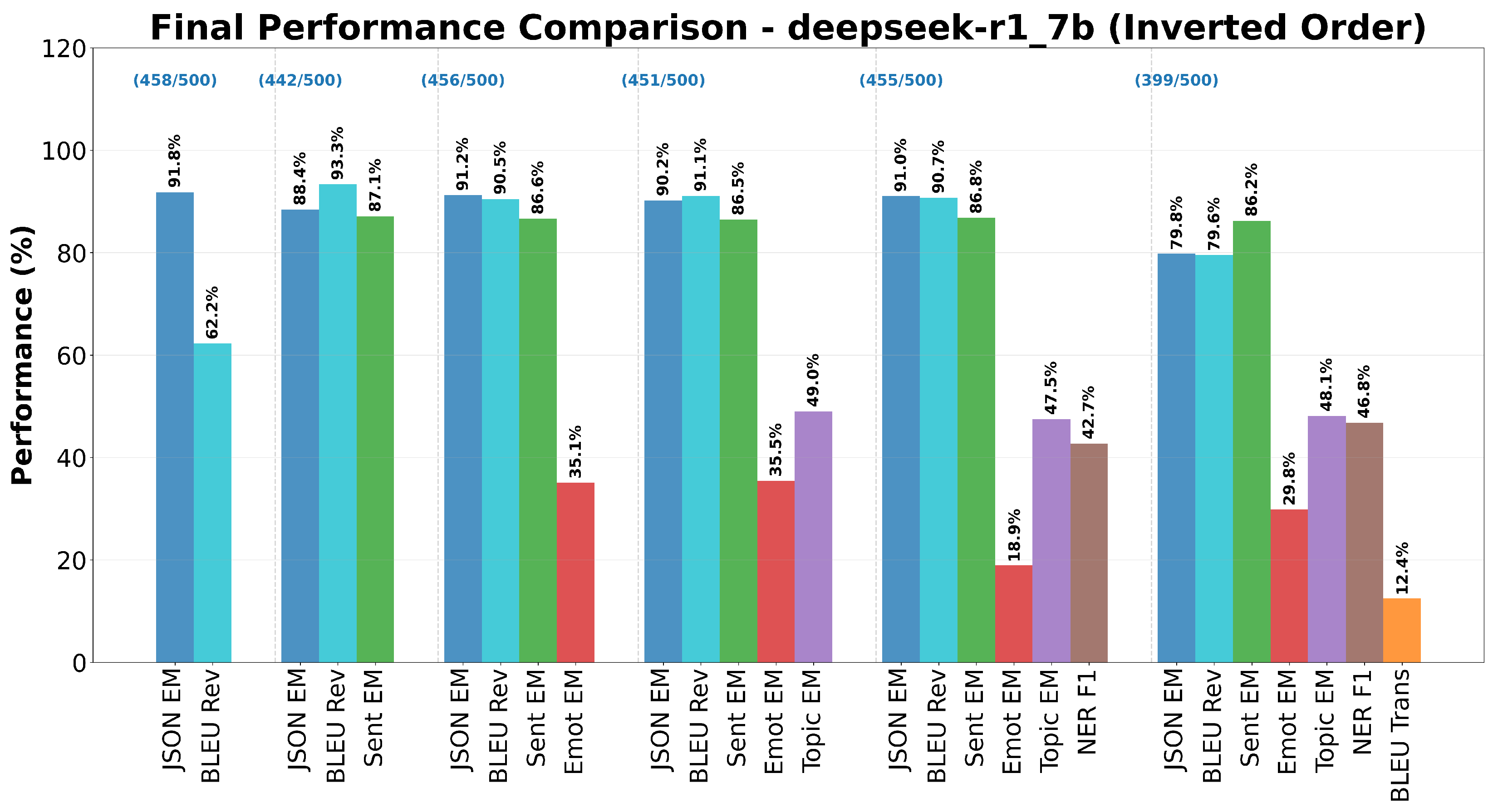

Under the inverted task sequence, DeepSeek R1 7B displayed behavioral trends similar to those of the standard order, but with accentuated volatility in fine-grained semantic tasks. Figure 15 summarizes the final performance across all six tasks.

JSON Formatting, still introduced first, reached an exact-match accuracy slightly below its peak in the standard sequence, suggesting that the model benefits marginally from the structural reinforcement provided by later tasks. Sentiment Analysis remained stable, whereas Emotion Analysis degraded sharply once NER was introduced. Topic Extraction remained moderately stable, while Translation, now the final task, partially recovered compared with the standard order. Overall, changing the order slightly alleviated the translation decline but accentuated the degradation in emotion classification, suggesting that DeepSeek's multitask fragility stems primarily from semantic interference rather than from sequence position.

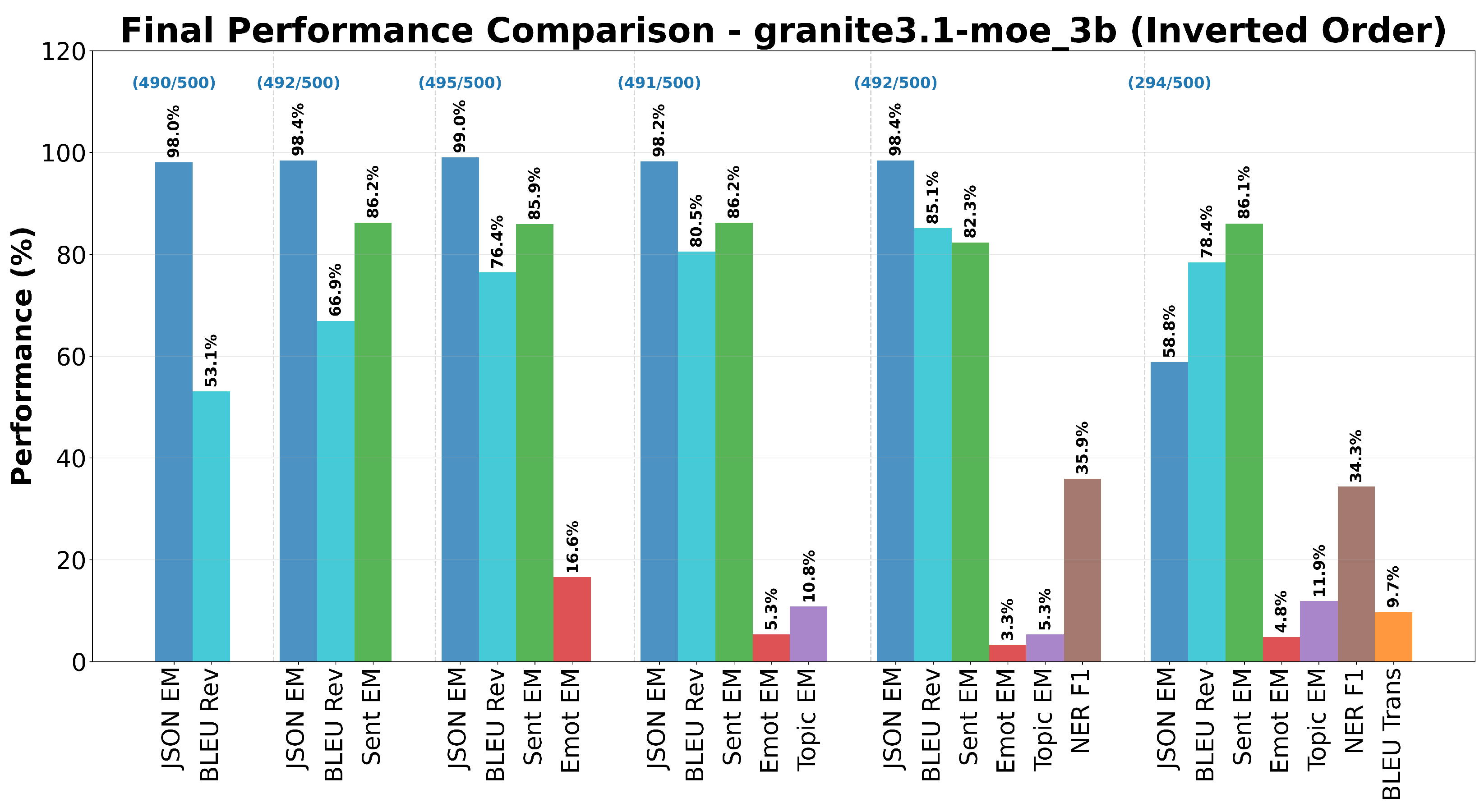

Granite 3.1 3B exhibited consistent degradation regardless of task order, reinforcing its sensitivity to multitask interference. The final performance comparison is illustrated in Figure 16.

JSON Formatting retained high performance but showed greater variance than in the standard order. Sentiment Analysis remained stable, whereas Emotion Analysis and Topic Extraction again collapsed to single-digit accuracies. Placing NER before Translation yielded marginal gains in entity recognition but did not prevent losses from propagating to the semantic subtasks. Translation, executed last, improved modestly, suggesting that isolating the generative load at the end of the sequence mitigates, but does not resolve, interference.

Inverted sequencing had mixed but interpretable effects on Llama 3.1 8B, as shown in Figure 17.

JSON Formatting remained strong, confirming its structural resilience. Placing the Sentiment and Emotion tasks earlier produced smoother degradation curves: Emotion Analysis still declined across configurations but ended higher than in the standard order. Topic Extraction also benefited, while Translation, now appended last, dropped slightly. NER performance remained comparable to the standard run. The overall trend suggests that introducing semantically related tasks earlier provides contextual scaffolding that stabilizes affective reasoning but slightly penalizes generative precision.

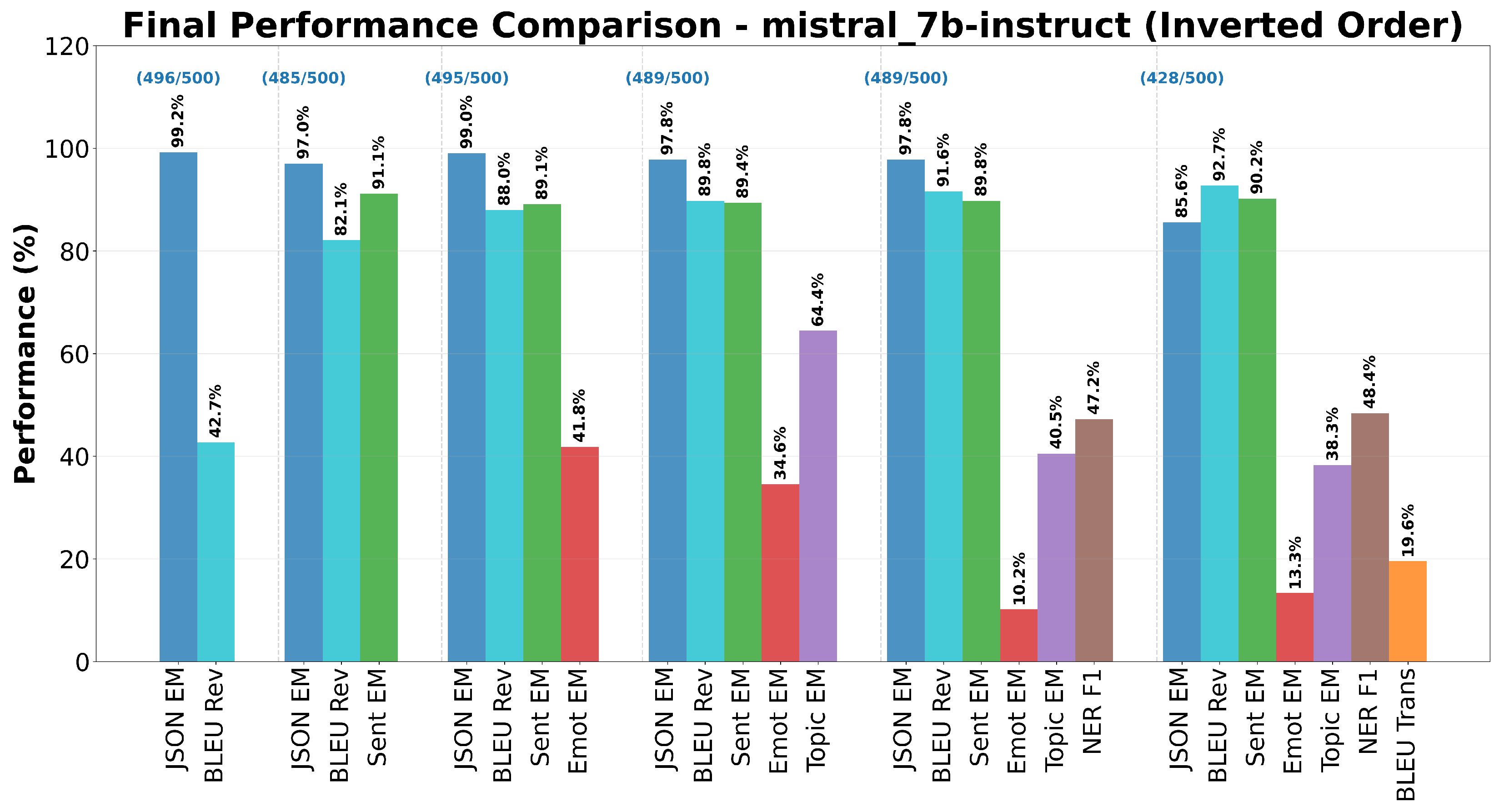

Mistral 7B preserved its general robustness in the inverted setup.

As reported in Figure 18, JSON Formatting accuracy remained high and Sentiment Analysis held steady. Notably, Emotion Analysis improved markedly in the final configuration compared with the standard order, suggesting that placing this task earlier reduces interference from NER. Topic Extraction likewise improved slightly, while NER F1 remained similar. Translation, concluding the sequence, showed a mild reduction.

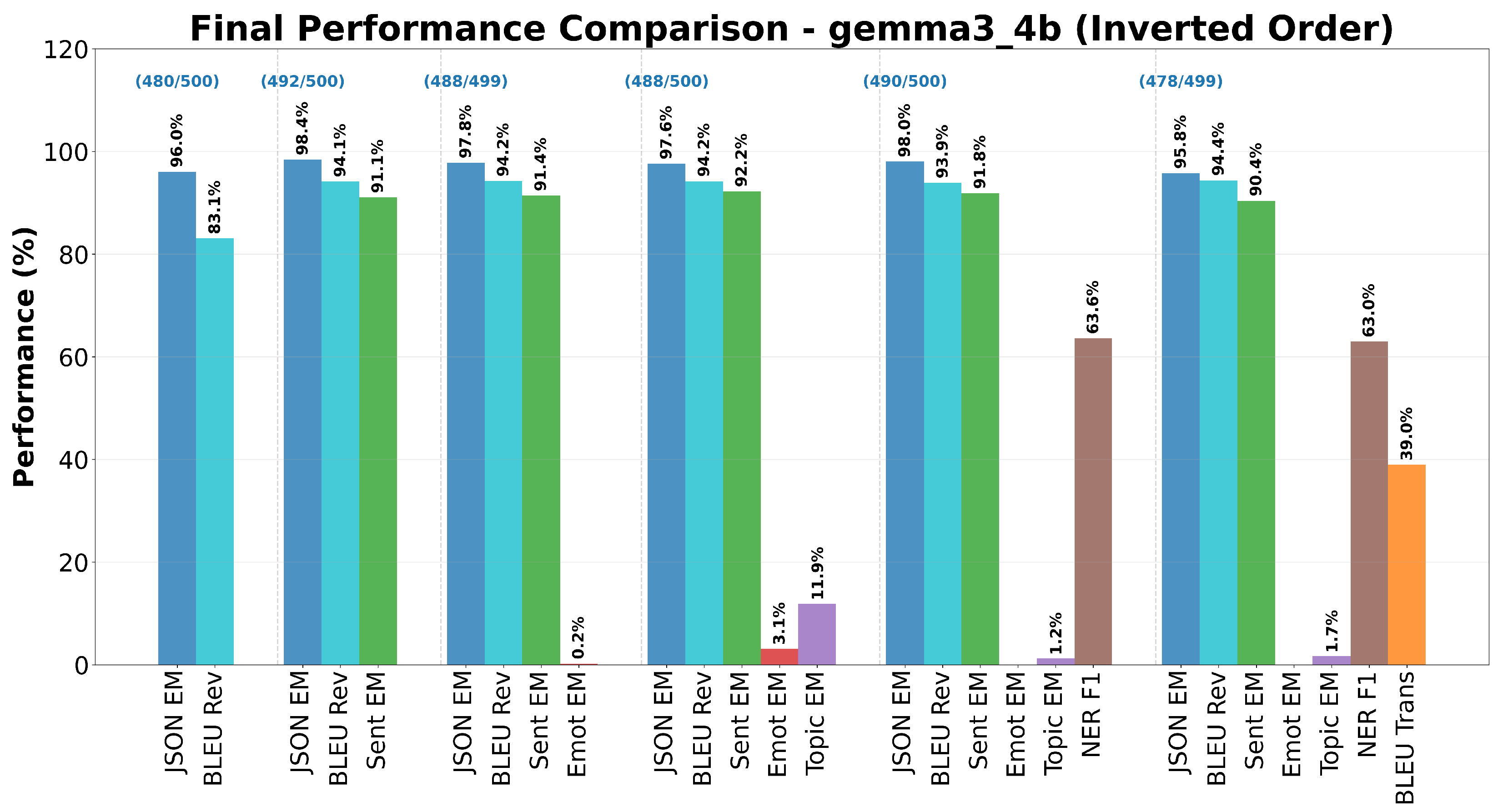

Gemma 3 4B again showed catastrophic degradation, albeit with subtle shifts in which tasks collapsed first.

Figure 19 illustrates that JSON Formatting remained solid, but Emotion Analysis degraded earlier in the sequence, while Topic Extraction also declined. NER F1 shifted relative to the standard run, and Translation, now final, improved slightly.

Qwen3 4B remained the most stable architecture under order inversion. JSON Formatting preserved near-perfect accuracy; Sentiment Analysis, Emotion Analysis, Topic Extraction, and Translation remained stable; and NER F1 was virtually identical to the standard run. Degradation slopes across steps were nearly flat, suggesting that Qwen's balanced attention and memory gating mitigate interference effects irrespective of sequence.

In summary, the inverted-order experiment revealed no qualitative change in degradation dynamics. Minor quantitative shifts emerged, notably improved emotion recognition in Llama 3.1 8B and Mistral 7B and a slight translation recovery in DeepSeek R1 7B and Granite 3.1 3B, but the overall stability rankings remained unchanged: Qwen3 4B preserved top-level consistency, Gemma 3 4B experienced early semantic collapse, and the MoE architectures continued to show amplified volatility. These outcomes suggest that model-intrinsic resource allocation mechanisms, rather than prompt sequence, principally determine multitask degradation behavior.