Abstract

This study investigates how increasing prompt complexity affects the performance of Large Language Models (LLMs) across multiple Natural Language Processing (NLP) tasks. We introduce an incremental evaluation framework where six tasks—JSON formatting, English-Italian translation, sentiment analysis, emotion classification, topic extraction, and named entity recognition—are progressively combined within a single prompt. Six representative open-source LLMs from different families (Llama 3.1 8B, Gemma 3 4B, Mistral 7B, Qwen3 4B, Granite 3.1 3B, and DeepSeek R1 7B) were systematically evaluated using local inference environments to ensure reproducibility. Results show that performance degradation is highly architecture-dependent: while Qwen3 4B maintained stable performance across all tasks, Gemma 3 4B and Granite 3.1 3B exhibited severe collapses in fine-grained semantic tasks. Interestingly, some models (e.g., Llama 3.1 8B and DeepSeek R1 7B) demonstrated positive transfer effects, improving in certain tasks under multitask conditions. Statistical analyses confirmed significant differences across models for structured and semantic tasks, highlighting the absence of a universal degradation rule. These findings suggest that multitask prompting resilience is shaped more by architectural design than by model size alone, and they motivate adaptive, model-specific strategies for prompt composition in complex NLP applications.

1. Introduction

Generative Artificial Intelligence (GenAI), powered by advances in large language models (LLMs), is transforming the way humans and machines interact. The rapid integration of these models into consumer applications, enterprise systems, and research workflows is reshaping multiple sectors, including healthcare, insurance, education, and the arts. Their widespread adoption has created both opportunities for innovation and challenges in ensuring transparency, reliability, and safety in AI-driven processes.

Humans typically interact with GenAI via natural language, which, while expressive, introduces ambiguity that can hinder control and explainability. Prompt engineering has emerged as a discipline aimed at reducing this ambiguity by optimizing the formulation of prompts to achieve more predictable and accurate outcomes. However, our previous research [1] did not find a universal strategy for effective prompting, highlighting the need for further investigation into the factors that influence prompt effectiveness.

In this work, we focus on the role of prompt complexity as a determinant of LLM performance. Specifically, we investigate how progressively increasing the number of tasks within a prompt affects output quality. To guide our investigation, we address the following research questions:

- How does the performance of LLMs evolve when multiple NLP tasks are incrementally combined into a single prompt?

- Do different LLM architectural families (dense Transformer vs. Mixture-of-Experts) exhibit distinct resilience or vulnerability patterns under increasing prompt complexity?

- Are specific NLP tasks (e.g., structured vs. semantic and coarse-grained vs. fine-grained) more susceptible to degradation in multitask settings?

- Can positive transfer effects emerge, where the addition of tasks enhances performance rather than causing degradation?

- To what extent do model size and family explain differences in multitask robustness compared to task-specific interference?

We propose an incremental inference pipeline that systematically adds tasks to an initial prompt, enabling a controlled evaluation of performance degradation. To ensure robustness, we evaluate models from multiple families, architectures, and sizes. Then, we apply statistical analysis to quantify the significance and consistency of our results.

The main contributions of this work are the following:

- We introduce a controlled evaluation framework to assess the impact of prompt complexity on LLM performance.

- We quantify performance degradation patterns across models of varying sizes, families, and architectures.

- We provide statistical analysis to strengthen the validity of our findings.

Recent studies have examined the interplay between LLM characteristics, prompting strategies, and task performance. Model size has emerged as a critical factor: larger models typically achieve stronger baseline performance, whereas smaller models often show higher sensitivity to prompt engineering techniques [2]. Although advanced LLMs can be more robust to suboptimal prompts, they often show diminishing gains from additional prompt optimization, suggesting that scaling alone does not eliminate the need for careful prompt design.

Task complexity and model capabilities have also been widely investigated. Techniques such as chain-of-thought (CoT) reasoning [3] and in-context learning (ICL) [4] have shown particular benefits in smaller or earlier-generation models, but their effectiveness appears less pronounced in the latest large-scale LLMs [2]. This indicates a nuanced interaction between model architecture and the utility of specific prompting methods.

Beyond manual prompt design, automated approaches have gained attention. Small-to-Large Prompt Prediction (S2LPP), for example, leverages performance patterns across 14 different LLMs to predict effective prompts for larger models based on smaller ones, demonstrating promising cross-model consistency [5]. Such methods highlight the potential of leveraging prompt-performance relationships for transferability.

Performance variability is also strongly task-dependent. Mathematical and logical reasoning tasks consistently benefit from CoT approaches, while language understanding and open-domain question answering often achieve better results with ICL or retrieval-augmented prompting [6]. These findings reinforce that both task type and domain are key determinants of optimal prompting strategies.

Although this body of research offers valuable insights into the relationships between model size, prompting technique, and task characteristics, relatively little attention has been paid to how progressive increases in prompt complexity affect performance across diverse LLM families. To the best of our knowledge, no prior study has introduced a controlled incremental evaluation framework to systematically quantify performance degradation under increasing prompt complexity. This work addresses that gap by providing a statistically grounded, cross-model analysis of this phenomenon.

Our previous research [1] investigated single-task versus multitask prompt performance across multiple LLM families. The study revealed that prompt strategy effectiveness is highly model-dependent, with no universal approach emerging as consistently superior across different architectures and tasks. Specifically, we observed that while some models (e.g., Llama 3.1 8B) showed improved performance with multitask prompts in certain tasks, others (e.g., Gemma 2 9B) exhibited degradation when multiple tasks were combined in a single prompt. These model-specific response patterns highlighted the need for further investigation into the mechanisms underlying multitask prompt performance.

However, our prior work compared discrete single-task versus multitask configurations, without examining how performance evolves as tasks are incrementally added. The present study addresses this limitation by introducing a controlled incremental evaluation framework that quantifies performance changes as tasks are sequentially added to a prompt. This approach enables fine-grained analysis of degradation dynamics and identification of critical task combinations that trigger performance drops.

Furthermore, architectural diversity introduces additional complexity. The distinction between dense Transformer [7] models and sparse Mixture-of-Experts (MoE) [8] architectures reflects fundamentally different resource allocation strategies. Yet relatively little attention has been paid to how these architectural differences influence a model’s response to increasing prompt complexity.

To the best of our knowledge, no prior study has systematically quantified performance degradation under progressive prompt complexity across both dense and MoE architectures. This work addresses that gap by evaluating six representative models—five dense and one MoE—across six NLP tasks with incremental task addition, enabling cross-architectural comparison of multitask resilience patterns.

2. Materials and Methods

This study was designed to systematically evaluate the effect of increasing prompt complexity on the performance of LLMs across six NLP tasks. Building on prior findings that prompt sensitivity can vary with both model architecture and task type [1], we adopted a controlled incremental evaluation framework in which tasks were progressively added to a single prompt. This approach allowed us to observe how performance changes as the number and diversity of tasks increase, thereby isolating the degradation patterns attributable to prompt complexity rather than to dataset or evaluation variability.

We selected six representative LLMs covering diverse architectural families, as reported in Table 1. The group comprised five dense Transformer decoder-only models (Llama 3.1 8B [9], Gemma 3 4B [10], Mistral 7B [11], Qwen3 4B [12], and DeepSeek R1 7B [13]), chosen for their prevalence in open-source deployments and their competitive performance in recent benchmarks. To represent MoE architectures, we included Granite 3.1 3B. Model selection was guided by the following criteria:

- Parameter range: Models from 3B to 8B parameters to represent architectures deployable locally on consumer-grade hardware

- Architectural diversity: Comparison between dense (standard Transformer) and sparse (MoE) resource allocation strategies

- Benchmark performance: Models with competitive performance on enterprise-oriented and general NLP tasks

- Availability and reproducibility: Open-source models accessible through standard platforms (Hugging Face, Ollama)

Table 1.

Large Language Models used in this study.


| Name | Ollama ID | Family |
|---|---|---|
| qwen3:4b-instruct | 088c6bc07f1d | Alibaba |
| gemma3:4b | a2af6cc3eb7f | Google DeepMind |
| llama3.1:8b-instruct-q3_K_M | 4faa21fca5a2 | Meta |
| mistral:7b-instruct | 6577803aa9a0 | Mistral AI |
| deepseek-r1:7b | 755ced02ce7b | DeepSeek AI |
| granite3.1-moe:3b | b43d80d7fca7 | IBM |

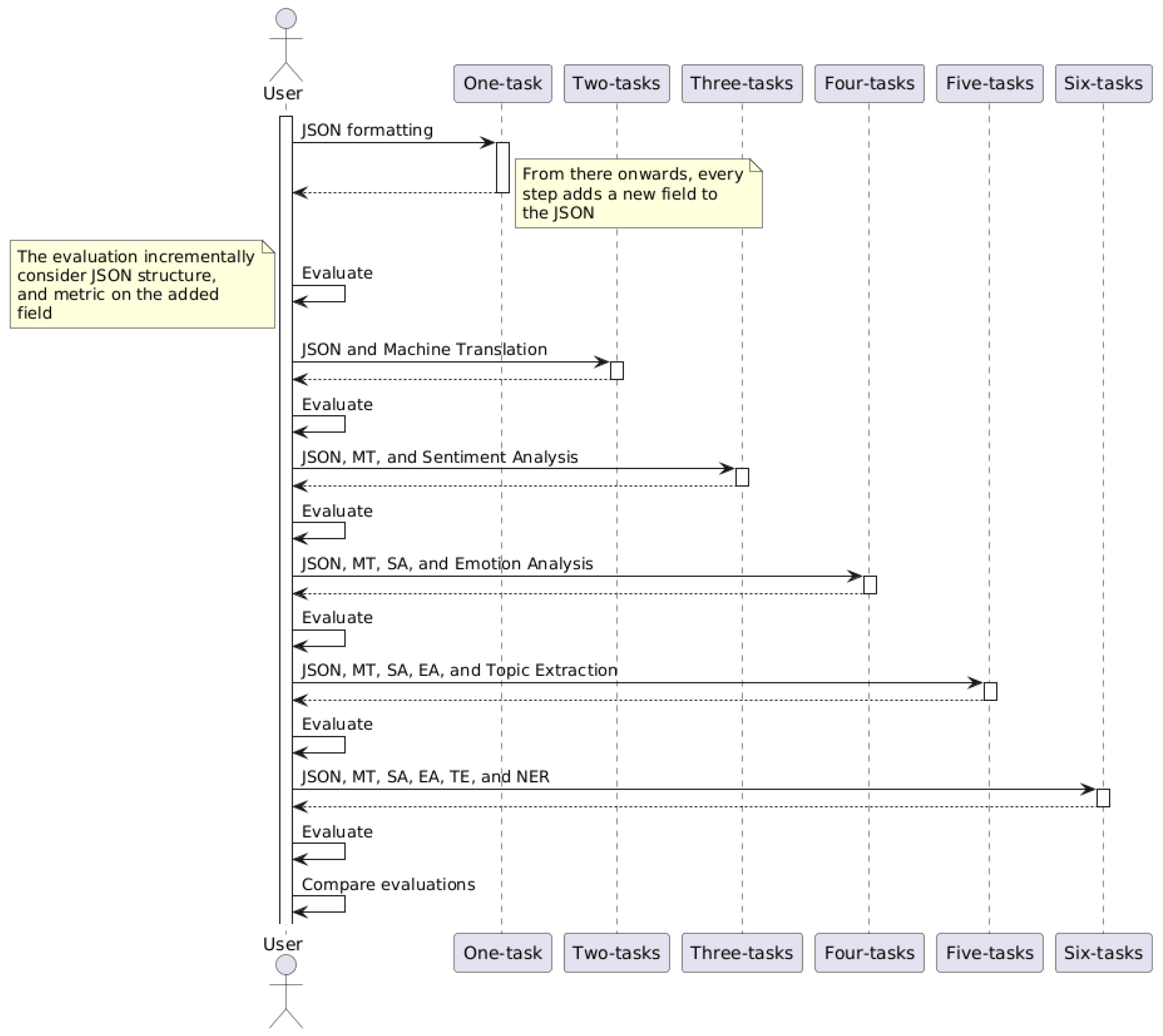

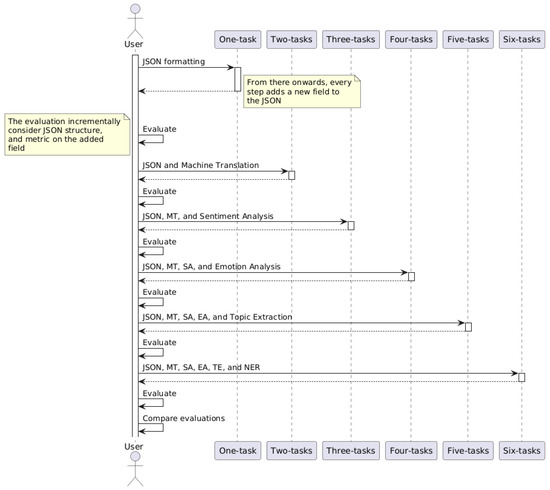

Model evaluation was conducted on six distinct NLP tasks: JSON formatting, Machine Translation (English-to-Italian), Sentiment Analysis, Emotion Analysis, Topic Extraction, and Named Entity Recognition (NER). These tasks were selected to represent a spectrum of cognitive and structural demands, ranging from semantic interpretation to strict output formatting. In the incremental prompting setup, a single-task baseline was established for each model, after which additional tasks were sequentially appended to the prompt in a fixed order. Performance was recorded at each stage to quantify both absolute accuracy and relative degradation. Figure 1 presents a UML sequence diagram that formally describes the experimental workflow.

Figure 1.

UML Sequence Diagram describing experiment workflow.

All experiments were conducted in local environments using consumer-grade hardware on both Windows 11 and macOS 14. The primary workstation for model inference was equipped with an Apple M2 Pro chip (12-core CPU, 19-core GPU, 16 GB unified memory), while additional runs were replicated on a Windows machine with an AMD Ryzen 9 5900HX CPU (integrated Radeon Graphics) and an NVIDIA GeForce RTX 3060 Laptop GPU with 6 GB of VRAM. This dual-hardware setup was intended to ensure that results were not biased by a single computational architecture.

Model execution was managed through Ollama, chosen for its efficiency in running LLMs locally without requiring external APIs or cloud-based inference. This allowed for consistent experimental conditions, reproducibility, and reduced dependency on network latency. Python (v3.11) served as the orchestration layer, enabling the automation of prompt generation, inference requests, and metric computation. Core libraries employed included NumPy, Pandas, and scikit-learn for statistical analysis and evaluation metrics, along with sacreBLEU for translation scoring. To ensure reproducibility, all random seeds were fixed and identical datasets were used across all runs.
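As an illustration of this orchestration layer, the following Python sketch sends a single prompt to a locally running Ollama server through its REST endpoint (`/api/generate`, default port 11434) using only the standard library. The seed value (42) and the zero temperature are illustrative assumptions: the text above states that seeds were fixed but does not specify the decoding options, and the helper names `build_payload` and `generate` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint


def build_payload(model: str, prompt: str, seed: int = 42, temperature: float = 0.0) -> dict:
    """Build a non-streaming generation request with fixed decoding options.

    The seed and temperature defaults are illustrative assumptions.
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return the whole completion as one JSON object
        "options": {"seed": seed, "temperature": temperature},
    }


def generate(model: str, prompt: str, seed: int = 42) -> str:
    """Send the request to the local Ollama server and return the generated text."""
    data = json.dumps(build_payload(model, prompt, seed)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

For example, `generate("qwen3:4b-instruct", prompt)` would return the raw completion for one review, provided the model has been pulled into the local Ollama instance. Disabling streaming makes the server return the full completion in a single JSON object, which simplifies downstream parsing.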

The choice of local execution, as opposed to API-based evaluation, was deliberate. It allowed us to maintain full control over model parameters, avoid hidden fine-tuning or throttling mechanisms from third-party providers, and replicate experiments under transparent conditions. This setup ensures that the reported results reflect the intrinsic performance of each model architecture under incremental multitask prompting.

2.1. NLP Tasks Employed

The evaluation framework was designed around six NLP tasks, selected to represent a broad spectrum of linguistic, semantic, and structural challenges. This diversity ensures that the incremental prompt methodology captures performance variations across tasks requiring different cognitive and representational abilities.

- JSON Formatting: A structural task aimed at verifying the ability of LLMs to produce outputs conforming to strict syntactic constraints. Each model was required to generate a valid JSON object containing a single field (“review”) with the full IMDB review text. Evaluation focused on exact structural correctness and fidelity of text preservation.

- Machine Translation (English-to-Italian): A semantic and generative task requiring accurate translation of IMDB reviews into Italian. This task assesses a model’s capability to preserve both meaning and fluency when transferring across languages. Quality was evaluated using the BLEU metric against reference translations generated with GPT-4.1 and checked through human validation on a sample.

- Sentiment Analysis: A coarse-grained classification task where models were asked to assign a binary label (positive/negative) to each review. This task measures the ability to capture global polarity, representing a relatively stable and well-established NLP benchmark.

- Emotion Analysis: A fine-grained classification task designed to stress-test the models’ ability to recognize nuanced emotional states beyond simple polarity. The categories considered include joy, anger, sadness, disgust, and neutral. Compared to sentiment, this task introduces ambiguity and requires deeper contextual interpretation.

- Topic Extraction: A multi-class categorization task in which models are asked to identify the main thematic domain of a review. The categories considered include action, comedy, drama, horror, thriller, romance, sci-fi, fantasy, documentary, and other. This task emphasizes high-level semantic abstraction, as it requires linking narrative content to broader conceptual categories.

- Named Entity Recognition: A sequence-labeling task where models needed to extract named entities and classify them into standard categories (PER, ORG, LOC). NER demands fine-grained linguistic analysis, precise boundary detection, and contextual disambiguation, making it one of the most challenging tasks in the evaluation.
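The scoring of the structural and classification tasks above can be sketched as follows. This is a minimal illustration, not the exact evaluation code: `json_check` and `exact_match_accuracy` are hypothetical helpers, and we assume that validity only requires a parseable JSON object containing the "review" key (later incremental steps may add further fields to the same object) and that labels are compared case-insensitively after trimming whitespace.

```python
import json


def json_check(raw_output: str, reference_text: str) -> tuple[bool, bool]:
    """Return (is_valid, is_exact) for the JSON formatting task.

    is_valid: the output parses as a JSON object that contains a "review" key
    (assumption: extra keys added in later steps are tolerated).
    is_exact: additionally, the "review" value equals the reference text
    after stripping surrounding whitespace (an assumed normalization).
    """
    try:
        parsed = json.loads(raw_output)
    except json.JSONDecodeError:
        return False, False
    if not isinstance(parsed, dict) or "review" not in parsed:
        return False, False
    value = parsed["review"]
    is_exact = isinstance(value, str) and value.strip() == reference_text.strip()
    return True, is_exact


def exact_match_accuracy(predictions: list[str], gold: list[str]) -> float:
    """Share of predicted labels equal to the gold label, ignoring case and spaces."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        return 0.0
    hits = sum(p.strip().lower() == g.strip().lower() for p, g in zip(predictions, gold))
    return hits / len(gold)
```

The same exact-match helper applies to sentiment, emotion, and topic labels; only the label set differs between tasks.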

Taken together, these tasks form a balanced experimental setting. JSON Formatting and Translation introduce structural and generative requirements, while Sentiment and Emotion Analysis focus on affective semantics. Topic Extraction and NER demand high-level thematic reasoning and fine-grained token-level processing, respectively. By progressively increasing the number of tasks in a single prompt, the experimental design captures not only performance baselines but also degradation dynamics across different task typologies.

JSON formatting and English-to-Italian translation were selected as the initial tasks in the incremental sequence because they represent, respectively, the most structurally constrained and the most semantically generative subtasks in the evaluation. JSON formatting establishes a deterministic syntactic baseline that constrains the model’s output structure, ensuring that subsequent tasks are executed within a valid and reproducible format. Translation, evaluated via BLEU, introduces a controlled form of semantic generation that tests the model’s ability to preserve meaning while adhering to the previously imposed structure. Starting with these two tasks thus provides a balanced initialization between low-entropy (formatting) and high-entropy (semantic) operations. If other tasks, such as emotion classification or topic extraction, were used as the starting point, the incremental framework would lose this structural baseline, and subsequent tasks could inherit noise from unconstrained free-text generation. Pilot tests (and results from the inverted-order experiment in Section 3.2) confirmed that changing the initial task leads to minor quantitative shifts but does not qualitatively alter degradation dynamics, since architectural factors dominate over task order. JSON formatting and translation therefore serve primarily as controlled anchor tasks, ensuring comparability and structural consistency across all subsequent incremental steps.

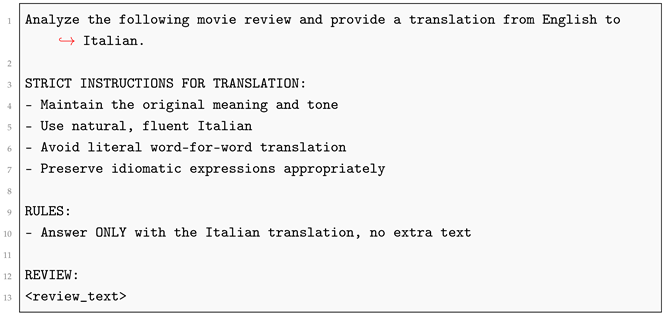

To establish a reliable ground truth for the Machine Translation task (English-to-Italian), we generated the Italian reference sentences synthetically using GPT-4.1. This approach ensured consistency across the dataset and avoided potential noise from heterogeneous human annotations. However, using automatically generated translations as references introduces a significant methodological limitation: BLEU scores may reflect stylistic and lexical similarity to GPT-4.1 rather than absolute translation quality. Models with architectures or training data similar to GPT-4.1 may be artificially advantaged in this evaluation, creating potential circular bias. To mitigate this limitation, we conducted human validation on a sample of 50 translations (10% of the dataset), comparing GPT-4.1 translations with professional human translations. The evaluation revealed high correspondence in terms of fluency and semantic fidelity (average agreement of ), supporting the use of synthetic translations as a reasonable reference in this controlled experimental setting. Nevertheless, the translation results should be interpreted with this potential source of bias in mind.
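To make the single-reference dependence concrete, the sketch below implements a simplified corpus-level BLEU (clipped n-gram precisions up to 4-grams, geometric mean, brevity penalty). It is an assumption-laden stand-in for sacreBLEU, which the study actually used: it tokenizes on whitespace and applies no smoothing, so its scores will not match sacreBLEU exactly. The function names are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: list[str], references: list[str], max_n: int = 4) -> float:
    """Simplified corpus BLEU (0-100) with exactly one reference per sentence.

    Whitespace tokenization and no smoothing: a rough illustration of the
    metric, not a replacement for sacreBLEU.
    """
    if len(hypotheses) != len(references):
        raise ValueError("need one reference per hypothesis")
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # clipped counts: an n-gram is credited at most as often as it occurs in the reference
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # some n-gram order has no match: the unsmoothed geometric mean is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)
```

Because an n-gram is credited only if it appears in the single GPT-4.1 reference, a hypothesis that uses different but equally valid wording is penalized; this is the mechanism behind the potential bias discussed above.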

2.2. Degradation Measurement Process

To systematically assess the effect of increasing prompt complexity on model performance, we adopted an incremental evaluation framework in which tasks were progressively concatenated into a single prompt. Starting from the single-task baseline, one additional task was introduced at each step until all six tasks were present. This setup allowed us to quantify both absolute performance levels and relative degradation patterns across task combinations.

In addition to the standard incremental evaluation, we conducted a complementary experiment in which the task order was inverted. To limit computational costs, this was applied only to two representative models (Granite 3.1 3B and Qwen3 4B), chosen arbitrarily from the pool of six. The results of this test are reported in Section 4.
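The incremental prompt construction, in both the standard and the inverted order, can be sketched as follows. The task identifiers and the one-line instructions in `INSTRUCTIONS` are hypothetical placeholders, not the prompts used in the study; only the cumulative structure (step k contains the first k tasks of the chosen order) reflects the procedure described above.

```python
# Standard task order (Section 2.1); identifiers are hypothetical.
TASKS = [
    "json_formatting",
    "translation_en_it",
    "sentiment",
    "emotion",
    "topic",
    "ner",
]

# Hypothetical one-line instructions, for illustration only.
INSTRUCTIONS = {
    "json_formatting": 'Return a JSON object whose "review" field contains the full review text.',
    "translation_en_it": "Translate the review from English to Italian.",
    "sentiment": "Classify the sentiment of the review as positive or negative.",
    "emotion": "Classify the dominant emotion as joy, anger, sadness, disgust, or neutral.",
    "topic": "Assign one topic: action, comedy, drama, horror, thriller, romance, "
             "sci-fi, fantasy, documentary, or other.",
    "ner": "Extract named entities and label each as PER, ORG, or LOC.",
}


def incremental_prompts(review: str, order: list[str]) -> list[str]:
    """Return one prompt per step; step k contains the first k tasks of `order`."""
    prompts = []
    for k in range(1, len(order) + 1):
        steps = "\n".join(
            f"{i}. {INSTRUCTIONS[task]}" for i, task in enumerate(order[:k], start=1)
        )
        prompts.append(f"{steps}\n\nReview:\n{review}")
    return prompts
```

Passing `list(reversed(TASKS))` yields the inverted-order pipeline, so both experiments share the same prompt-building logic.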

For each configuration, outputs were collected and evaluated against gold-standard references or predefined structural constraints. Task-specific metrics, as described in Table 2, were computed independently at every stage. To isolate degradation effects, we focused on the relative change in performance with respect to the single-task baseline, expressed as a percentage difference. This normalization step enabled cross-task and cross-model comparisons, even when baseline performance levels differed substantially.
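The baseline normalization can be sketched as a signed percentage change. Two assumptions are made here: the baseline for each task is its score at the first step in which it appears (Task 1 for JSON formatting, Task 4 for emotion analysis, and so on), and, following the sign convention of the degradation score, a drop is reported as a positive value. The sketch does not attempt to reproduce the cumulative score of Equation (1), and the helper names are hypothetical.

```python
def relative_change(baseline: float, score: float) -> float:
    """Signed percentage change with respect to the baseline; a drop is positive."""
    if baseline == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (baseline - score) / baseline * 100


def degradation_trajectory(scores: list[float]) -> list[float]:
    """Relative change at every step, using the first step in which the task
    appears as its baseline (assumed baseline definition)."""
    if not scores:
        raise ValueError("scores must not be empty")
    baseline = scores[0]
    return [relative_change(baseline, s) for s in scores]
```

For instance, a task scoring 0.60 at its baseline step and 0.45 at Task 6 shows a relative degradation of about 25%, whereas a rise from 0.50 to 0.60 yields a negative value, i.e. an improvement.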

Table 2.

NLP Tasks and Evaluation Metrics.

Formally, degradation was computed as shown in Equation (1), in which performance is indexed by model m, task t, and incremental step k, and n is the number of incremental steps in which task t appears (1 ≤ n ≤ 6). Because n depends on the task, JSON formatting, which is present in all six steps, has n = 6, whereas NER, which appears only in the final step, has n = 1; the remaining tasks fall in between. The degradation score thus captures the cumulative performance loss (or gain) across all incremental steps for each task-model pair: a positive score indicates a net performance drop, while a negative score indicates improvement under increased complexity.

To ensure robustness, we aggregated results across 500 IMDB samples per configuration and computed both the mean and standard deviation of metric scores. Significance testing was performed using paired t-tests () to determine whether observed performance differences across incremental steps were statistically meaningful. Furthermore, we analyzed trends in degradation patterns to identify whether certain tasks were more susceptible to performance drops as prompt complexity increased.
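A minimal sketch of the per-configuration summary and of the paired t statistic between two incremental steps follows, using only the Python standard library. It assumes per-sample scores for the same 500 reviews at each step (e.g., 1/0 exact-match outcomes), and the helper names are hypothetical. Obtaining a p-value additionally requires the t distribution with n - 1 degrees of freedom, for instance via `scipy.stats.ttest_rel`, which computes the same statistic for paired samples.

```python
import math
import statistics


def summarize(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of per-sample metric scores."""
    return statistics.mean(scores), statistics.stdev(scores)


def paired_t_statistic(before: list[float], after: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for the same samples at two steps."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    diffs = [x - y for x, y in zip(before, after)]
    sd = statistics.stdev(diffs)
    if sd == 0:
        # identical differences everywhere: the statistic is undefined or infinite
        raise ValueError("all paired differences are identical")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

Pairing by review is what makes the test appropriate here: every configuration is evaluated on the same 500 samples, so per-review differences remove between-review variability.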

This process yields a fine-grained view of how structural tasks (e.g., JSON formatting) and semantic tasks (e.g., translation, emotion analysis) react differently to increasing multitask demands, enabling the identification of task-specific and architecture-specific degradation patterns.

2.3. Dataset

All experiments were conducted on a curated subset of the IMDB movie review dataset, a widely used benchmark for sentiment analysis and related NLP tasks. The dataset provides rich textual material consisting of user-generated reviews, which inherently contain diverse linguistic styles, varying sentence lengths, and informal expressions. These properties make it particularly suitable for evaluating multitask prompting, as models must handle both structural formatting and semantic interpretation.

For this study, we randomly sampled 500 reviews from the IMDB corpus. The same set of reviews was employed consistently across all six tasks and across all evaluated models, thereby eliminating variability due to dataset composition.
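A reproducible draw of this kind can be sketched with a dedicated random generator. The seed value and the function name `sample_reviews` are illustrative assumptions.

```python
import random


def sample_reviews(corpus: list[str], k: int = 500, seed: int = 42) -> list[str]:
    """Draw k distinct reviews reproducibly, to be reused for every task and model."""
    rng = random.Random(seed)  # local generator, so the global random state is untouched
    return rng.sample(corpus, k)
```

Calling the function twice with the same corpus and seed returns the same list, which is the property the study relies on when reusing one review set across all tasks and models.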

To extend the dataset for the Machine Translation task, Italian reference translations were generated synthetically with GPT-4.1, using the prompt reported in Listing A2. These translations served as gold-standard targets for BLEU-based evaluation. While synthetic ground truth may differ from professional human annotations, manual spot-checking confirmed that the GPT-4.1 outputs preserved both fluency and semantic fidelity, making them suitable as reference material in this controlled experimental setting.

For the Emotion Analysis and Topic Extraction tasks, categorical labels were derived using a controlled annotation procedure. Emotions were mapped onto a fixed taxonomy of five categories (joy, anger, sadness, disgust, neutral), while topics were aligned with ten film genres (action, comedy, drama, horror, thriller, romance, sci-fi, fantasy, documentary, other). The labeling process was partially automated through LLM-based weak supervision with GPT-4.1 (prompt reported in Listing A5), followed by manual verification to ensure consistency and reduce noise.

To quantitatively assess the reliability of weakly supervised annotations, we performed a validation on a random subset of the dataset (50 samples). Each sample was independently labeled by the LLM and manually reviewed by the authors. Inter-annotator agreement was computed using Cohen’s Kappa (K), yielding substantial consistency across tasks (translation , emotion , topic ). These values indicate a high level of reliability in the weak supervision process and support the use of LLM-generated labels as reference data within this controlled experimental setup.
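For reference, Cohen’s kappa compares the observed agreement p_o with the agreement p_e expected from the two annotators’ marginal label frequencies, κ = (p_o - p_e) / (1 - p_e). The following standard-library sketch implements this definition; `cohens_kappa` is a hypothetical helper, and scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Unweighted Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # chance agreement: product of each annotator's marginal label frequencies
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)
```

For example, if the LLM and the reviewer agree on three of four emotion labels, with joy used twice by the LLM, observed agreement is 0.75 but chance agreement may already be 0.25, giving κ ≈ 0.67; kappa therefore discounts agreement that frequent labels would produce by chance.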

Finally, for the NER task, gold annotations were obtained through automated pre-annotation with an open-source NER pipeline, followed by manual correction on the same set of 500 reviews. Entities were categorized into three standard classes: PER (person), ORG (organization), and LOC (location).
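Per-category scoring of the NER output can be sketched as exact matching of (entity text, label) pairs per review. This is a simplified illustration under stated assumptions: entities are compared as sets (duplicates within a review collapse), predicted entities whose label is not PER, ORG, or LOC are counted as out of scope and excluded from the per-category scores, and a micro-averaged overall score is added for convenience. The helper names are hypothetical.

```python
from collections import Counter

LABELS = ("PER", "ORG", "LOC")  # in-scope entity classes


def _prf(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def ner_scores(gold: list[set], pred: list[set]) -> tuple[dict, int]:
    """Per-label and micro scores plus the number of out-of-scope predictions.

    gold and pred hold one set of (entity_text, label) pairs per review.
    """
    if len(gold) != len(pred):
        raise ValueError("need one prediction set per gold set")
    tp, fp, fn = Counter(), Counter(), Counter()
    out_of_scope = 0
    for g, p in zip(gold, pred):
        in_scope = {e for e in p if e[1] in LABELS}
        out_of_scope += len(p) - len(in_scope)  # e.g. MISC or invented labels
        for _, label in in_scope & g:
            tp[label] += 1
        for _, label in in_scope - g:
            fp[label] += 1
        for _, label in g - in_scope:
            fn[label] += 1
    scores = {label: _prf(tp[label], fp[label], fn[label]) for label in LABELS}
    scores["micro"] = _prf(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return scores, out_of_scope
```

Under this scheme, a correctly located entity with the wrong class (e.g., a location labeled ORG) counts as both a false positive for the predicted class and a false negative for the gold class.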

By consolidating all six tasks on the same underlying review dataset, our experimental design ensured that observed performance differences reflected true multitask degradation effects rather than dataset heterogeneity.

3. Results

In this section, we present the empirical findings from our systematic evaluation of six representative LLMs under an incremental multitask prompting framework. The results are organized by model, with detailed performance metrics reported for each of the six NLP tasks as complexity increases. We focus on both absolute performance levels and relative degradation patterns, highlighting key trends and anomalies observed across different architectures.

We tested two different pipelines for the experiments: one with the tasks added in the order described in Section 2.1 and another with the tasks added in reverse order. We will refer to these two pipelines as the standard order and the reverse order, respectively, to distinguish between them.

3.1. Standard Order Results

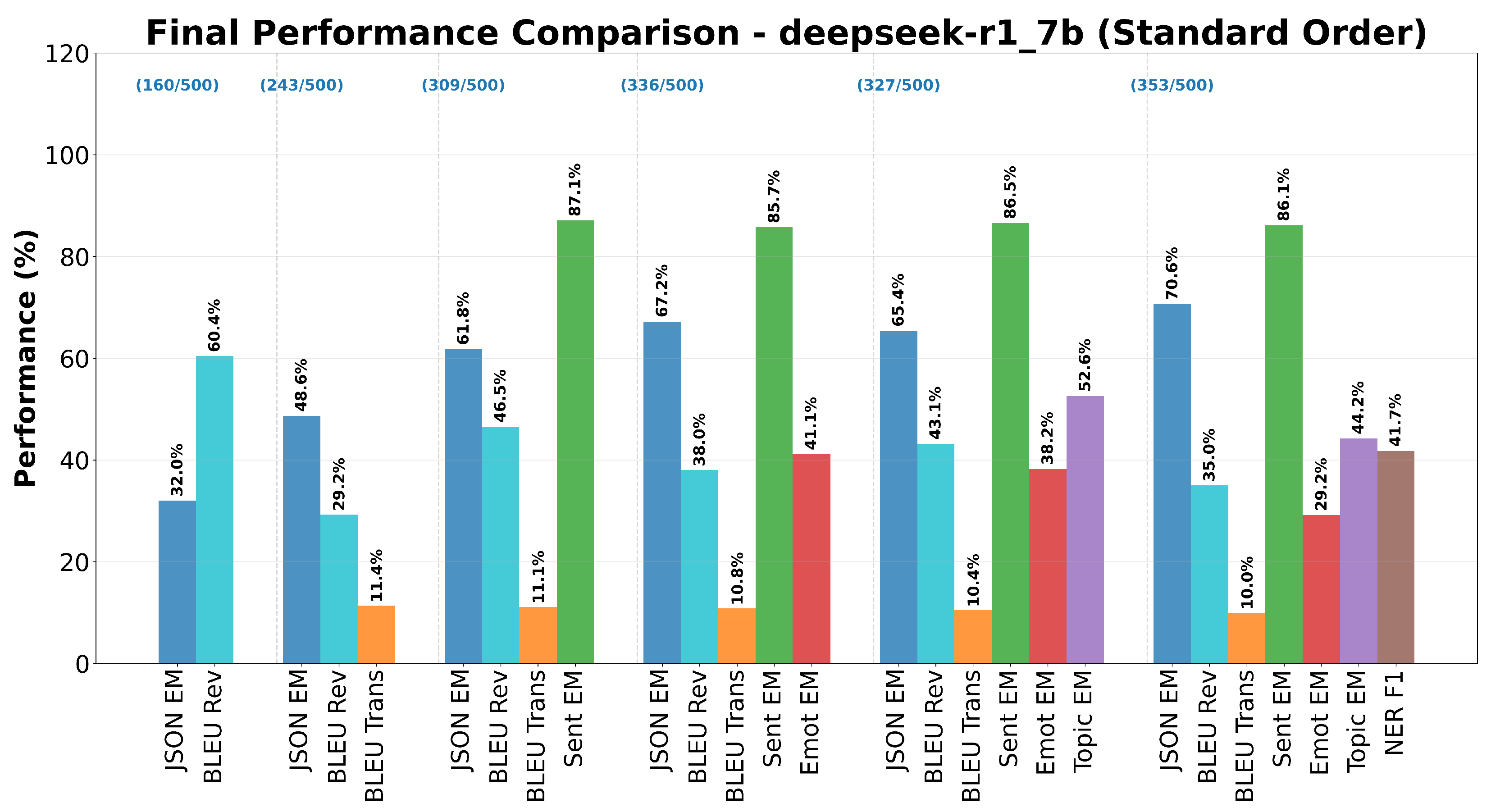

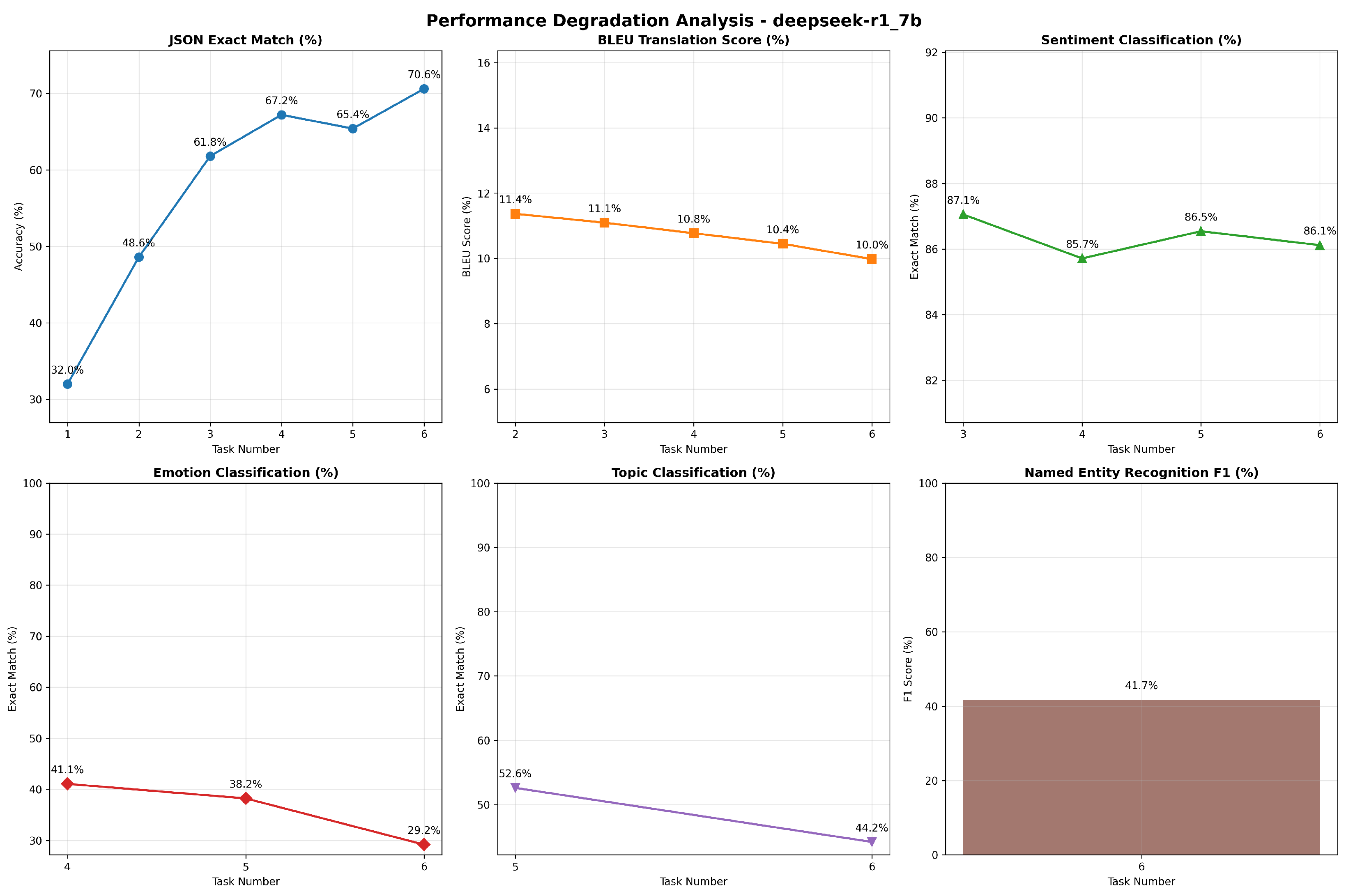

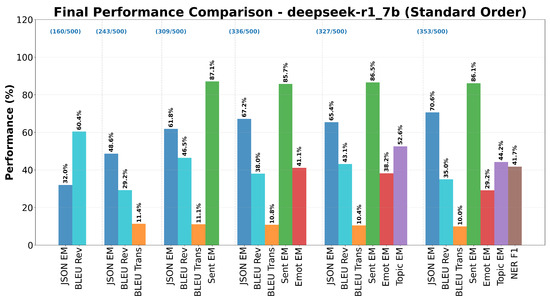

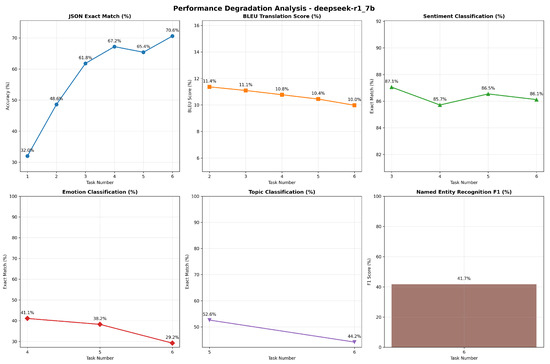

The DeepSeek R1 7B model demonstrated distinct performance characteristics across the six-task incremental evaluation framework. The model’s response to increasing prompt complexity revealed both architectural strengths and notable degradation patterns that warrant detailed examination.

As shown in Figure 2, the model exhibited a substantial improvement in JSON formatting accuracy as task complexity increased, with exact match scores rising from in the single-task condition (Task 1) to in the complete six-task configuration (Task 6). The trend is not strictly monotonic: exact match drops by around between Task 4 and Task 5, when Topic Extraction is added. This counterintuitive improvement suggests that the additional task constraints in multitask prompts may have provided structural guidance that helped the model maintain proper JSON formatting. The corresponding increase in the number of valid JSON outputs, from 160 to 353 out of 500 samples (32.0% to 70.6%), further supports this interpretation.

Figure 2.

Final performance comparison for DeepSeek R1 7B across six tasks in Standard Order.

In contrast to the JSON formatting improvements, Machine Translation quality degraded consistently across task configurations: BLEU scores declined from (Task 2) to (Task 6), representing a relative decrease. This pattern suggests that the model may struggle to maintain translation quality when its capacity is distributed across multiple concurrent tasks.

The model demonstrated remarkable stability in Sentiment Classification across all multitask configurations, maintaining exact match accuracy between and . This consistency indicates that sentiment analysis, being a relatively straightforward binary classification task, remains robust to increasing prompt complexity in DeepSeek R1 7B.

Emotion Analysis showed significant susceptibility to task interference, with performance declining from (Task 4) to (Task 6), representing a relative degradation.

Topic Extraction demonstrated moderate resilience to increasing complexity, declining from (Task 5) to (Task 6), an relative decrease.

NER achieved an F1 score of in the complete six-task configuration, representing the baseline performance for this most complex task scenario. Table 3 details the precision, recall, and F1 scores for each entity category. Notably, while precision for the PERSON category was relatively high (), recall was substantially lower (), indicating that the model was conservative in its entity predictions. The ORG and LOC categories exhibited both low precision and low recall, resulting in F1 scores below . A total of 109 out of 3060 entities (approximately 3.6%) were classified as out of scope, indicating difficulties in accurately identifying and categorizing named entities within the multitask context. This relatively modest performance suggests that NER, which requires precise linguistic analysis and categorization, may be particularly challenging in highly complex multitask contexts.

Table 3.

NER performance obtained by DeepSeek R1 7B.

The degradation overview of Figure 3 reveals asymmetric interference patterns across tasks. While structural tasks (JSON formatting) showed general improvements with increased complexity, semantic tasks (emotion analysis, translation) demonstrated clear degradation.

Figure 3.

Performance degradation overview for DeepSeek R1 7B across six tasks in Standard Order.

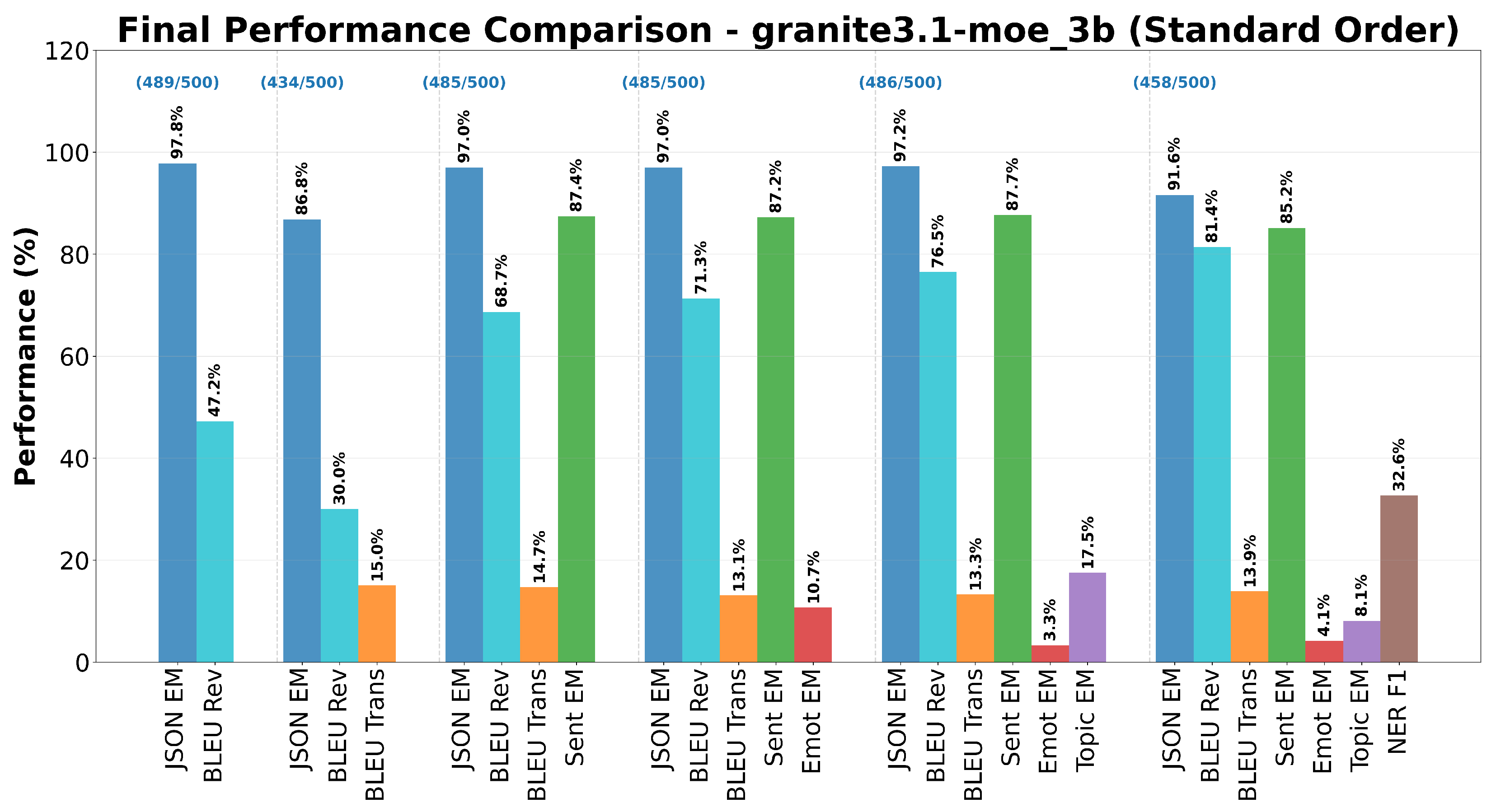

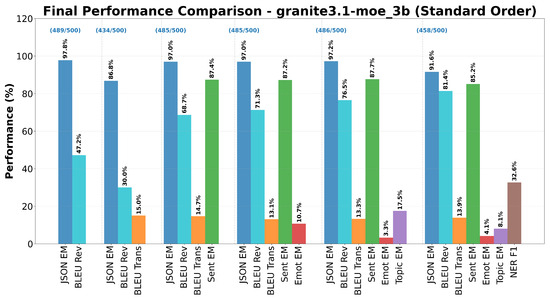

Figure 4 illustrates the performance of the Granite 3.1 3B model across the six-task incremental evaluation framework. The model demonstrated exceptionally high baseline performance in JSON formatting, with a exact match in Task 1. However, performance varied as complexity increased: it dropped to in Task 2, recovered and remained consistently high (97.0–) through Tasks 3–5, and then declined to in the final six-task configuration. This pattern suggests that the addition of NER may interfere with JSON generation.

Figure 4.

Final performance comparison for Granite 3.1 3B across six tasks in Standard Order.

Machine Translation yielded a baseline BLEU score of in Task 2, followed by relatively stable performance around 13– in subsequent configurations, with translation quality remaining between and across Tasks 3–6.

Sentiment Classification maintained robust performance across all multitask configurations, with exact match scores ranging from to . This consistency demonstrates that sentiment analysis represents a well-preserved capability, showing resilience to interference effects generated by increasing prompt complexity.

Emotion Analysis revealed severe limitations within the multitask framework, with performance ranging from to across configurations. These extremely low scores indicate fundamental difficulties in discriminating between emotional categories.

Topic Extraction showed constrained performance, achieving scores between and across tasks. The consistently low performance levels indicate that genre-based thematic categorization presents significant challenges for this model, particularly when combined with other semantic processing requirements.

NER achieved an F1 score of in the complete six-task configuration, indicating that entity extraction and categorization remain achievable within the model’s architectural constraints, although the task remains a considerable challenge. Table 4 details the precision, recall, and F1 scores for each entity category observed for Granite 3.1 3B. Notably, the PERSON category exhibited the highest precision () but relatively low recall (), indicating a conservative prediction strategy. As with DeepSeek R1 7B, the ORG and LOC categories showed low precision and recall, resulting in F1 scores below . A total of 46 out of 3424 entities (approximately 1.3%) were classified as out of scope, a smaller proportion than in other models such as DeepSeek R1 7B.

Table 4.

NER performance obtained by Granite 3.1 3B.

The performance profile reveals a clear hierarchy in the model’s capability allocation: structural tasks (JSON formatting) and basic semantic classification (sentiment analysis) maintain high performance levels, while more nuanced semantic discriminations (emotion analysis, topic classification) show severe degradation. This pattern is consistent with a form of resource management that prioritizes fundamental capabilities.

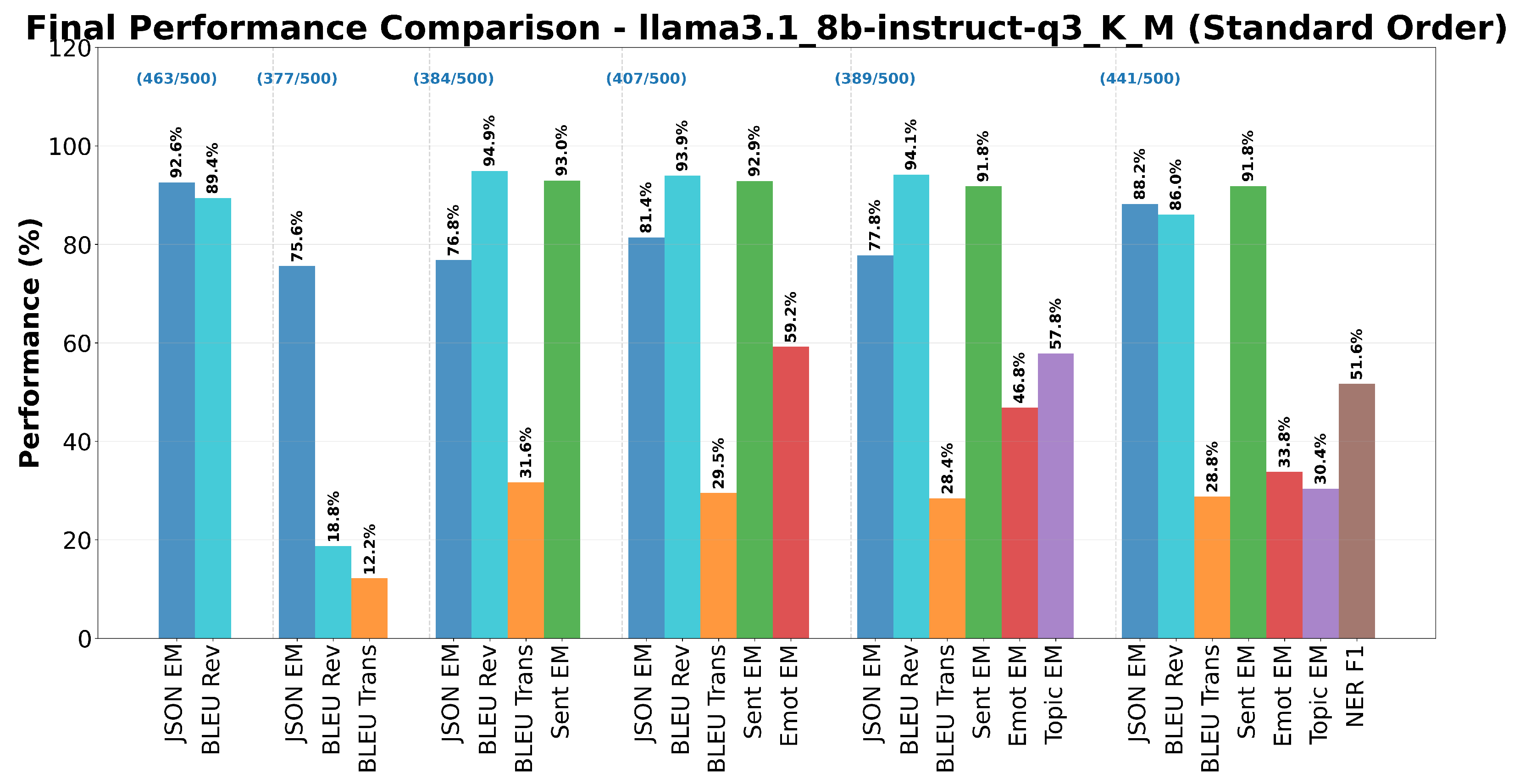

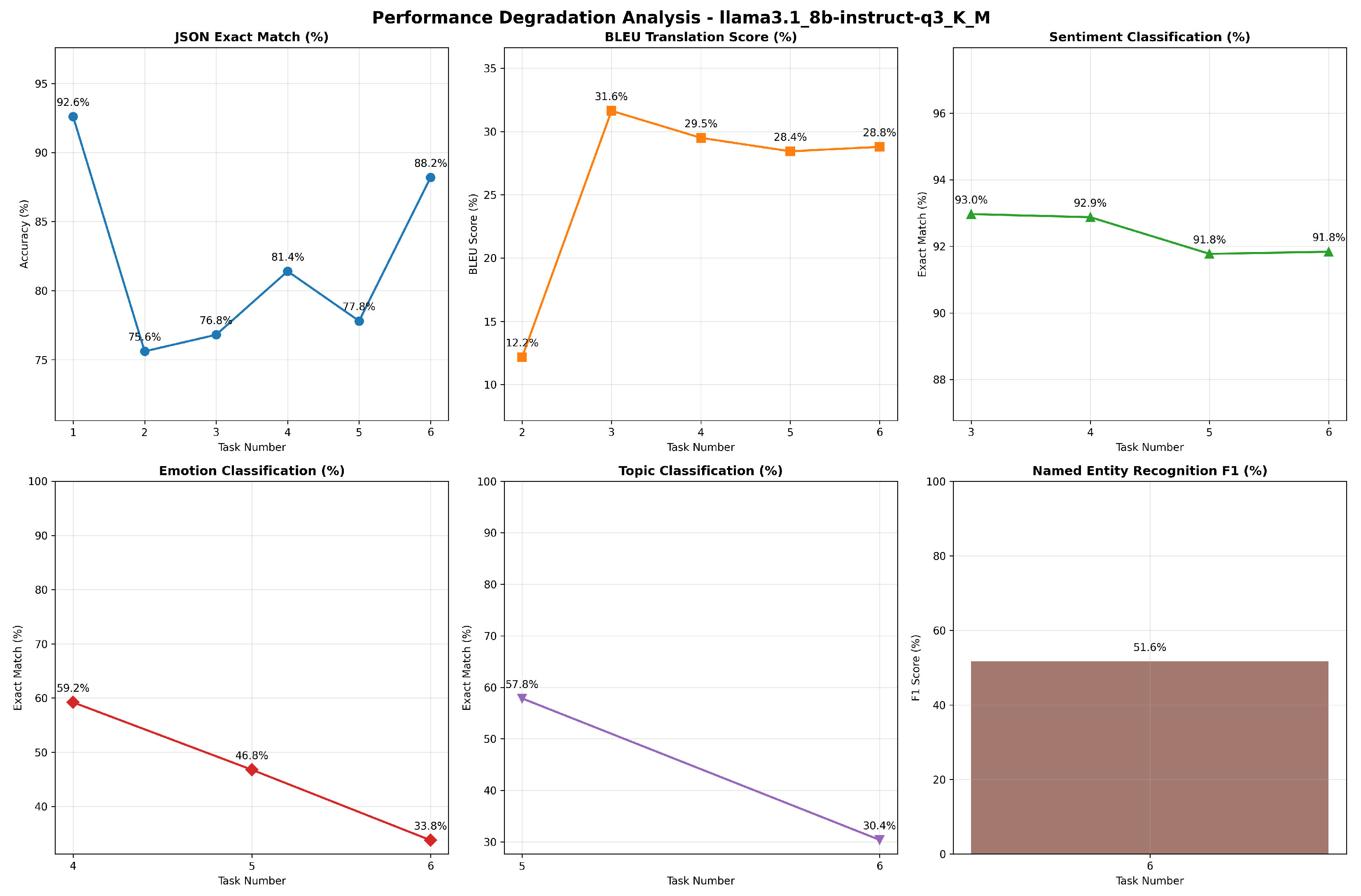

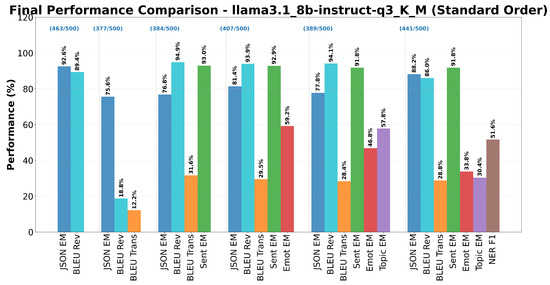

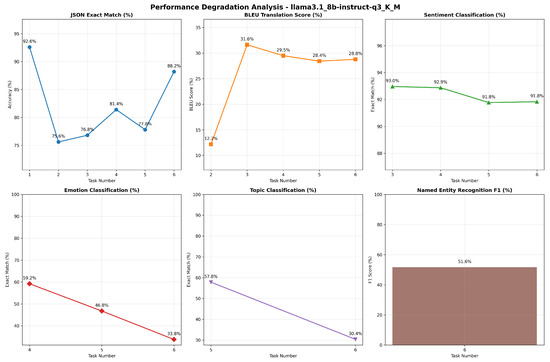

The Llama 3.1 8B model, representative of the traditional Transformer decoder-only architecture, demonstrated distinct performance patterns in the incremental evaluation framework, exhibiting differentiated behaviors across the various task types analyzed.

As Figure 5 shows, the model exhibited excellent initial performance in JSON formatting with a exact match in Task 1, indicating strong baseline capability for data structuring tasks. However, as complexity increased, a degradation pattern followed by partial recovery was observed: performance dropped to in Task 2, stabilized around 77– in Tasks 3–5, then rose again to in Task 6.

Figure 5.

Final performance comparison for Llama 3.1 8B across six tasks in Standard Order.

Machine Translation presented a particularly interesting pattern. BLEU scores started at in Task 2, improved notably to in Task 3, and then stabilized around 28–29 points in subsequent tasks. This behavior suggests that the introduction of Sentiment Analysis may have provided additional semantic context that facilitates translation, pointing to positive synergies between semantically related tasks.
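For readers less familiar with the metric, corpus-level BLEU combines clipped n-gram precisions (n = 1 to 4) through a geometric mean and multiplies the result by a brevity penalty. The sketch below is a simplified, unsmoothed implementation with naive whitespace tokenization and a single reference per hypothesis; standard tools such as sacreBLEU apply their own tokenization and settings, so its output is not directly comparable with the scores reported here.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def corpus_bleu(hypotheses, references, max_n=4):
    """Unsmoothed corpus BLEU on a 0-100 scale, one reference per hypothesis."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()  # naive whitespace tokenization
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            overlap = ngram_counts(h, n) & ngram_counts(r, n)  # clipped counts
            matches[n - 1] += sum(overlap.values())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # a zero precision makes the geometric mean zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    brevity = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity * math.exp(log_precision)

print(round(corpus_bleu(["il gatto dorme sul divano"], ["il gatto dorme sul tappeto"]), 1))
```

Because BLEU is aggregated over the whole corpus, a change of a few points such as the Task 2 to Task 3 jump described above reflects shifts in n-gram overlap across many reviews rather than in any single translation.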

Sentiment Analysis maintained excellent and stable performance across all multitask configurations, with exact match scores varying between and . This consistency suggests that sentiment classification is a robust and well-consolidated capability in Llama 3.1 8B, resistant to the interference generated by increasing prompt complexity.

Emotion Classification showed marked susceptibility to increasing task complexity: performance decreased from (Task 4) to (Task 6), a loss of percentage points. This substantial degradation suggests that recognizing more nuanced emotional categories may require specialized attentional resources that are compromised by competition with other demanding tasks.
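Because the degradations in this section are expressed as differences between accuracies, it helps to separate absolute changes in percentage points from relative changes. The hypothetical helpers below compute both for scores in [0, 1]; the example values are illustrative only and are not taken from the results.

```python
def change_in_points(before, after):
    """Absolute change in percentage points, for accuracies in [0, 1]."""
    return (after - before) * 100

def relative_change(before, after):
    """Change relative to the starting score, in percent."""
    if before == 0:
        raise ValueError("relative change is undefined for a starting score of 0")
    return (after - before) / before * 100

# Hypothetical example: accuracy falls from 0.40 to 0.10.
print(f"{change_in_points(0.40, 0.10):.1f} pp, {relative_change(0.40, 0.10):.1f}% relative")
```

A drop from 40% to 10% is therefore a 30-percentage-point loss but a 75% relative loss, so the two measures can rank the same degradations differently when starting levels differ across models.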

The Topic Extraction task also degraded substantially, declining from (Task 5) to (Task 6), a loss of percentage points. This marked decrease indicates that thematic categorization, which requires high-level semantic analysis to identify film genres, is particularly vulnerable to interference when combined with NER.

NER achieved an F1 score of in the complete six-task configuration. Table 5 shows the detailed metrics for each entity category identified by the model. The PERSON category exhibited high precision () but moderate recall (), indicating a tendency towards accurate but conservative entity recognition. The ORG and LOC categories showed lower precision and recall, resulting in F1 scores of and , respectively. A total of 43 entities out of 3073 () were classified as out of scope, in line with the previous models. This performance, while moderate, represents the result attained under maximum prompt complexity, where the model must simultaneously handle entity identification, semantic and emotional categorization, translation, and structural formatting.

Table 5.

NER performance obtained by Llama 3.1 8B.

The results for Llama 3.1 8B, summarized in Figure 6, reveal both positive and negative effects of task interactions. The positive effect is visible in the improvement of translation quality when sentiment analysis is introduced, suggesting that correlated semantic information can create beneficial synergies. However, negative effects prevail in tasks requiring fine-grained semantic discriminations, such as emotion analysis and topic classification, where competition for limited attentional resources appears to cause substantial performance degradation. Overall, the Llama 3.1 8B results show that the decoder-only Transformer architecture exhibits complex behavior under increasing task complexity, characterized by trade-offs between maintaining structure and preserving precision in specialized semantic processing.

Figure 6.

Degradation overview for Llama 3.1 8B across six tasks in Standard Order.

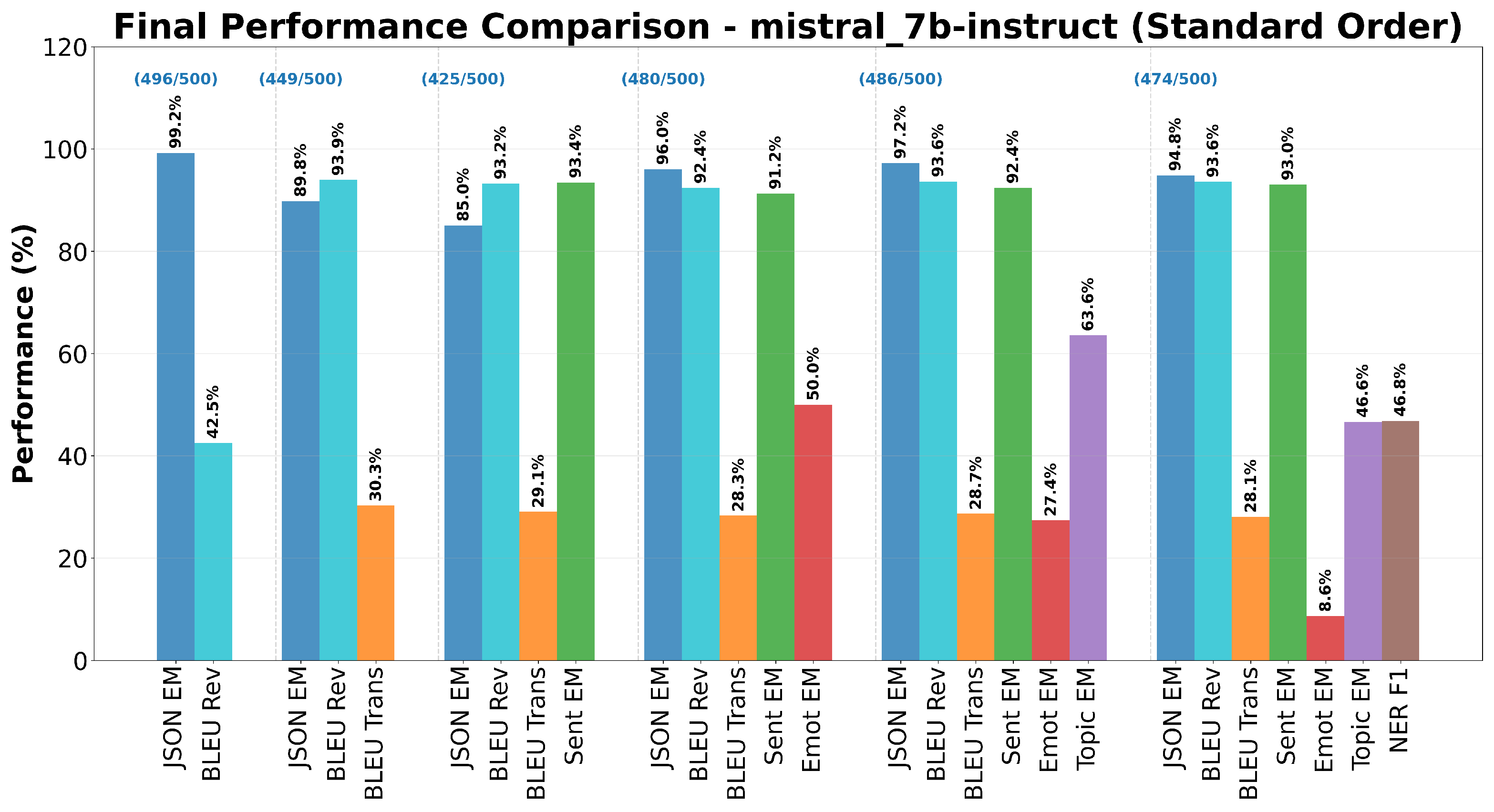

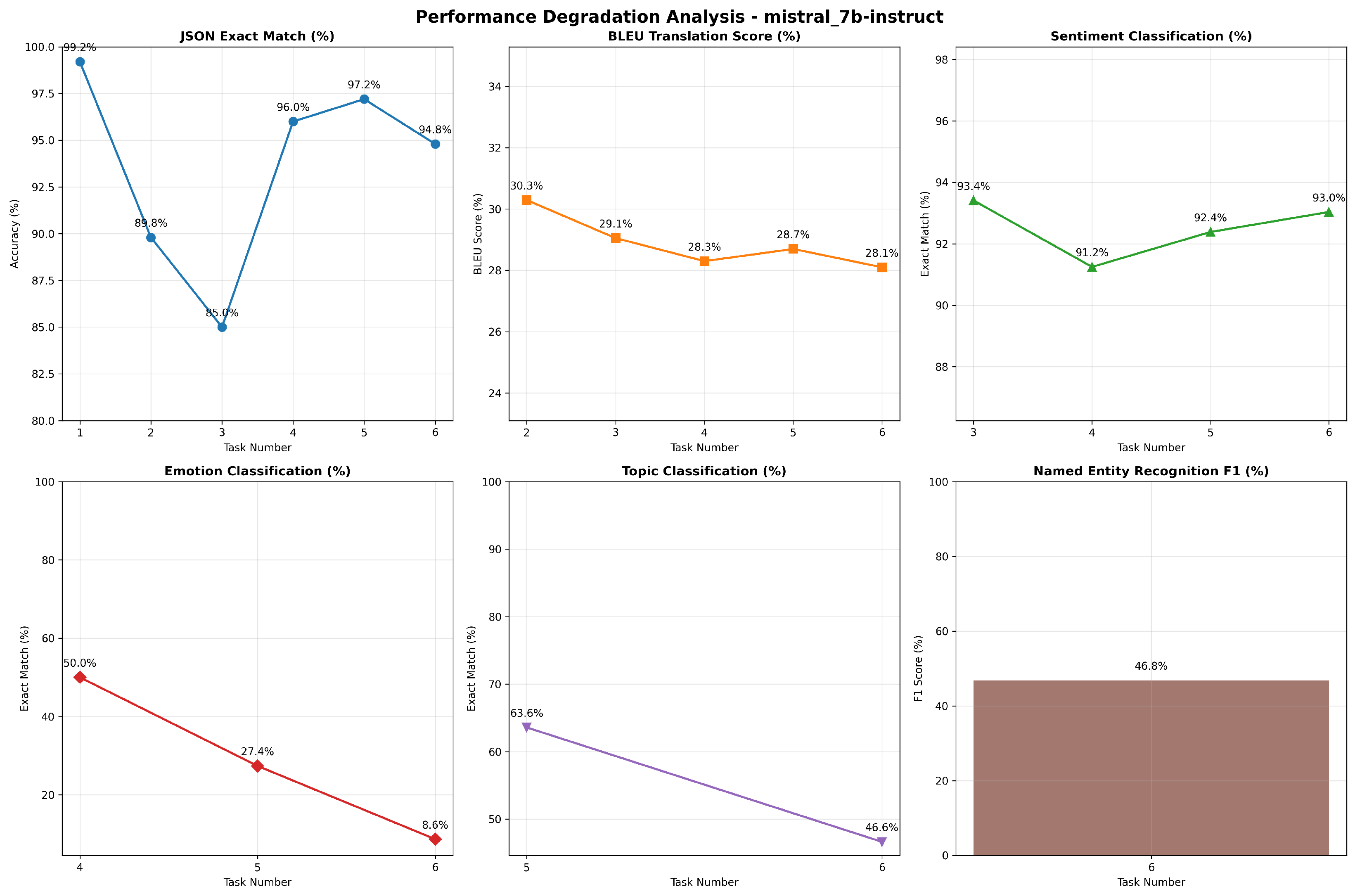

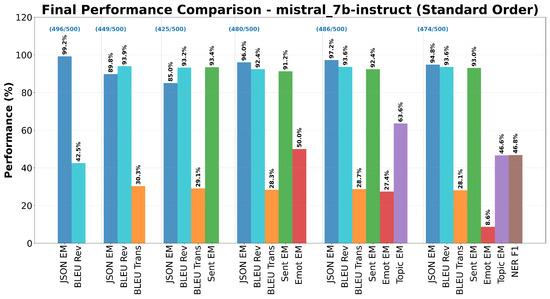

The Mistral 7B model demonstrated robust performance characteristics across the incremental evaluation framework, exhibiting distinctive patterns in handling multitask complexity with notable strengths in structural and semantic processing capabilities.

As reported in Figure 7, the model achieved exceptional baseline performance in JSON formatting with an exact match of in Task 1, representing nearly perfect structural formatting capability. Performance showed some variability between task configurations, dropping to in Task 3, then recovering substantially to maintain high levels (–) through Tasks 4–6. The corresponding valid JSON count ranged from 425 to 496 samples, indicating that structural formatting remains a core strength even under increasing cognitive demands.
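The two structural metrics used throughout this section, the valid JSON count and JSON exact match, can be sketched as follows. This assumes that a response is valid when Python's `json.loads` parses it, that exact match compares the parsed object with a reference object, and that the exact-match rate is computed over all responses; the actual matching rules of the evaluation pipeline are not specified here, and the helper names are hypothetical.

```python
import json

def parse_response(text):
    """Return the parsed JSON value, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None

def json_metrics(responses, references):
    """Return (valid_count, exact_match_rate) over paired responses and references."""
    valid = exact = 0
    for text, reference in zip(responses, references):
        parsed = parse_response(text)
        if parsed is None:
            continue  # invalid JSON is neither valid nor an exact match
        valid += 1
        if parsed == reference:  # dict equality ignores key order and whitespace
            exact += 1
    return valid, (exact / len(responses) if responses else 0.0)

responses = ['{"sentiment": "positive"}', 'Sure! Here is the JSON:', '{"sentiment": "negative"}']
references = [{"sentiment": "positive"}] * 3
print(json_metrics(responses, references))
```

Separating the two counts shows why a model can produce many syntactically valid outputs (as in the 425 to 496 range above) while its exact-match rate still fluctuates: validity only checks syntax, whereas exact match also checks content.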

Figure 7.

Final performance comparison for Mistral 7B across six tasks in Standard Order.

Machine Translation produced consistently high-quality output in all configurations. BLEU scores remained stable between and from Task 2 through Task 6, showing minimal degradation despite increasing task complexity. This stability suggests robust translation capabilities that resist interference from concurrent semantic processing requirements.

Sentiment Analysis demonstrated excellent stability across all multitask configurations, with exact match scores ranging from to . This consistency indicates that binary sentiment classification is a well-consolidated capability within the architecture, maintaining high accuracy regardless of increases in prompt complexity.

Emotion Analysis varied considerably across task configurations. Performance peaked at in Task 4 (four-task configuration) but decreased substantially to in Task 6, a dramatic degradation once named entity recognition was introduced. This pattern suggests that emotion classification is particularly susceptible to resource competition from entity extraction.

Topic Extraction exhibited moderate performance with notable sensitivity to task complexity. Scores ranged from in Task 5 (five-task configuration) to in Task 6, a 17-percentage-point decrease when NER was added. This degradation suggests that thematic categorization and entity recognition compete for similar resources.

Detailed Mistral 7B NER performance is summarized in Table 6. NER achieved an F1 score of in the complete six-task configuration. This level indicates that the model can effectively identify and categorize named entities even within complex multitask contexts, although adding this task created notable interference with other semantic capabilities. Precision for the PERSON category was high (), while recall was moderate (), indicating a relatively balanced approach to entity recognition. The ORG and LOC categories exhibited lower precision and recall, resulting in F1 scores of and , respectively, confirming the general trend observed in other models. Mistral 7B produced the largest number of out-of-scope entities, 118 out of 3135 (), indicating greater output variability despite the temperature being set to for every model.

Table 6.

NER performance obtained by Mistral 7B.

The results reveal specific patterns of interference between tasks, as illustrated in Figure 8. The introduction of NER in Task 6 caused the most significant disruption, particularly affecting emotion classification, while performance in other areas remained relatively stable. This suggests that entity extraction may rely on attention mechanisms that compete directly with those used for emotion processing. Structural tasks (JSON formatting) and fundamental semantic classification (sentiment analysis) maintain robust performance, whereas more specialized semantic discrimination (emotion analysis) is sensitive to resource competition, particularly when combined with linguistically demanding tasks such as NER.

Figure 8.

Degradation overview for Mistral 7B across six tasks in Standard Order.

Despite showing some degradation in specific areas, the model maintained functional performance across all evaluated tasks simultaneously. The ability to achieve moderate-to-high performance across six diverse NLP tasks concurrently demonstrates the architectural capacity for complex multitask processing, albeit with predictable trade-offs in specialized semantic processing capabilities. The Mistral 7B results demonstrate a balanced approach to multitask processing, with strong foundational capabilities in structural formatting and basic semantic classification, while showing selective vulnerability in fine-grained semantic discriminations under resource competition scenarios.

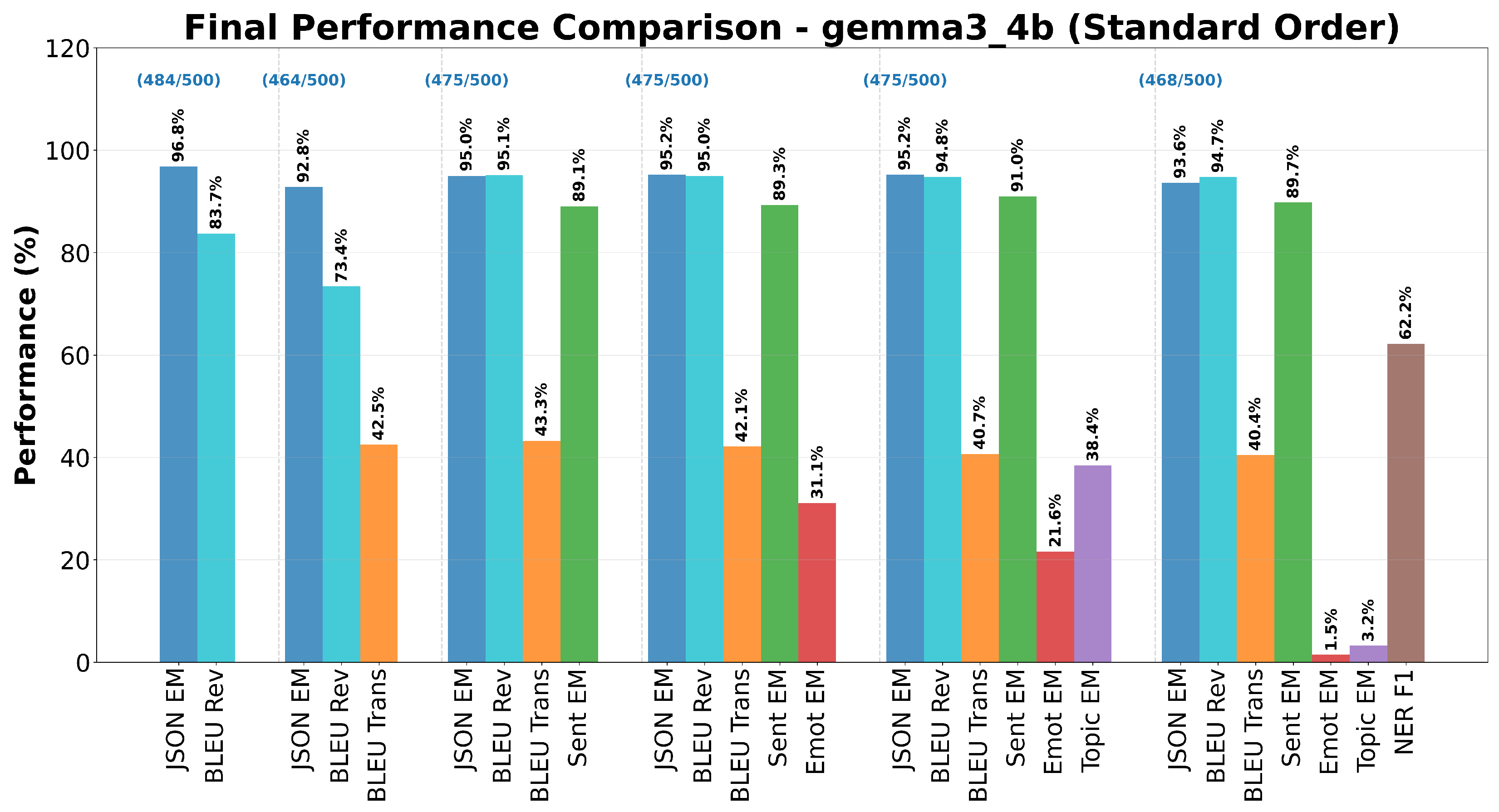

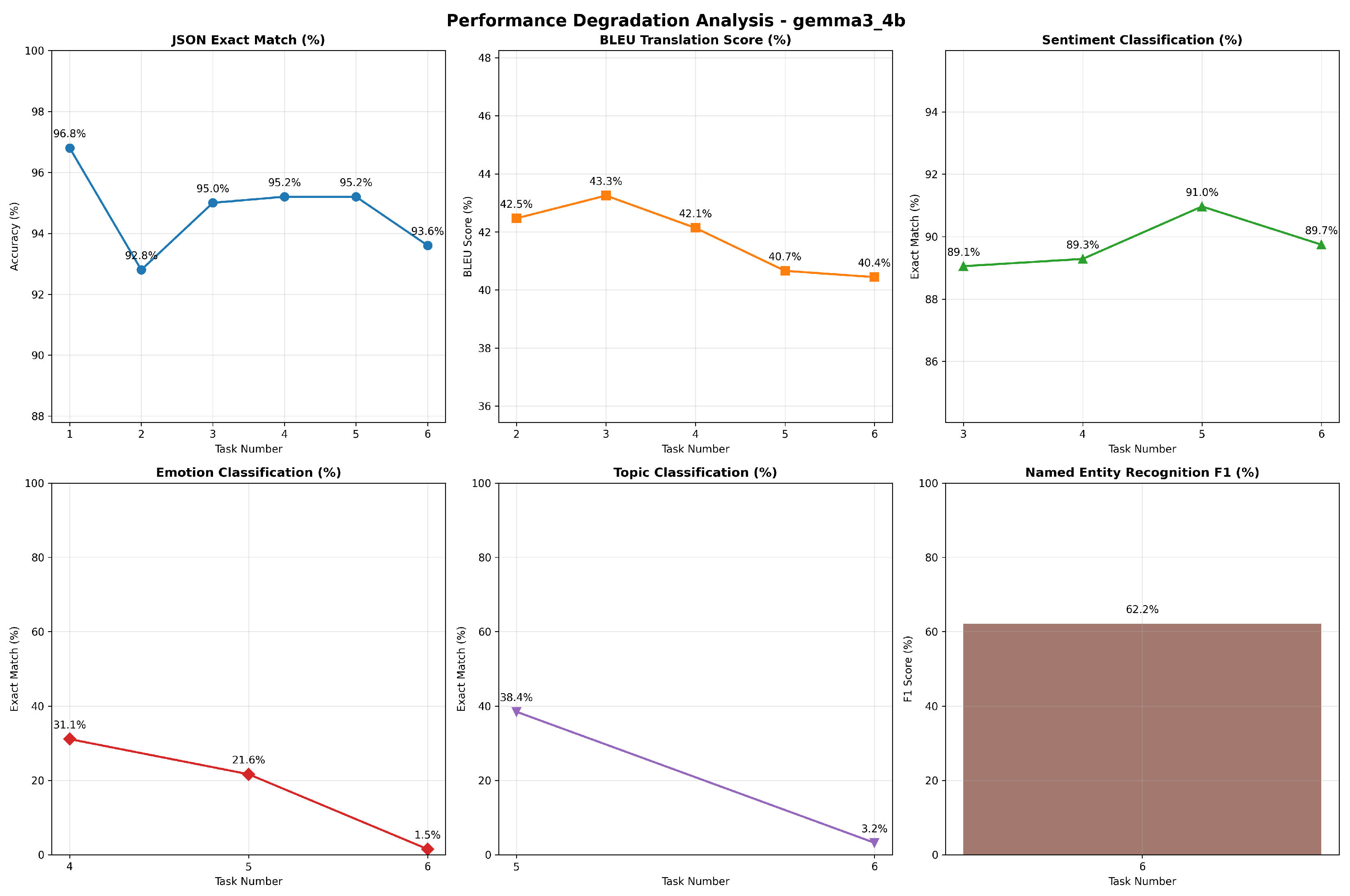

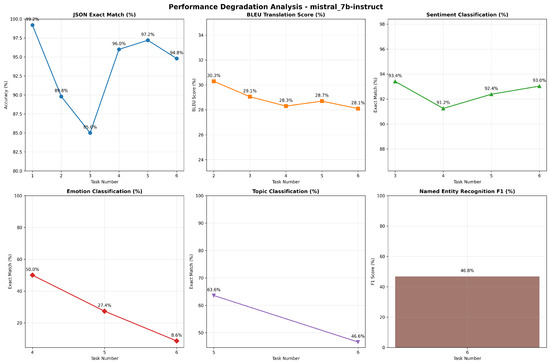

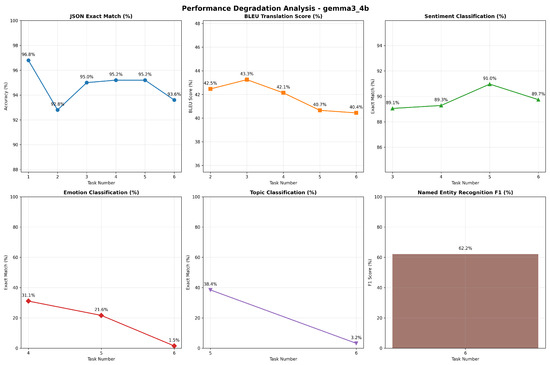

The Gemma 3 4B model achieved high baseline performance in JSON formatting, with a exact match in Task 1. Performance remained relatively stable across configurations, between and through Task 5, with a slight decline to in Task 6. The number of valid JSON samples varied from 464 to 484, indicating that structural formatting is a consolidated capability even under increasing complexity.

Figure 9 illustrates the performance of the Gemma 3 4B model across the six-task incremental evaluation framework. Machine Translation started with a BLEU score of in Task 2 and remained largely stable around 40– in subsequent configurations. Translation quality stayed relatively high, varying between and , suggesting translation capabilities that moderately resist interference from increasing task complexity.

Figure 9.

Final performance comparison for Gemma 3 4B across six tasks in Standard Order.

Sentiment Analysis demonstrated consistently high performance across all multitask configurations, with exact match scores ranging from to . This consistency indicates that sentiment analysis represents a well-preserved capability within the architecture, maintaining high accuracy despite increasing prompt complexity.

Emotion Analysis revealed a dramatic vulnerability to increasing task complexity. Performance decreased from in Task 4 to in Task 6, an almost complete loss of the ability to discriminate between emotions.

The Topic Extraction task showed an equally severe degradation, dropping from in Task 5 to in Task 6. This collapse suggests that genre-based thematic categorization competes directly for the same resources required by Named Entity Recognition.

NER achieved an F1 score of in the complete six-task configuration. This relatively high level indicates that the model maintains effective named entity identification and categorization, but at the cost of severe compromises in other specialized semantic capabilities. As reported in Table 7, Gemma 3 4B exhibited the highest precision across all entity categories, particularly for PERSON () and LOC (). However, recall was moderate to low, especially for ORG (). Gemma 3 4B classified 85 entities out of 3228 () as out of scope, in line with the previous models. Its performance profile reveals a pronounced trade-off between maintaining core structural and basic semantic capabilities and performing specialized semantic discriminations, while its entity recognition remains conservative: the recall rates indicate that many entities go undetected.

Table 7.

NER performance obtained by Gemma 3 4B.

While structural tasks (JSON formatting), basic semantics (sentiment), and translation maintain reasonable performance, tasks requiring fine-grained semantic discriminations (emotions, topics) suffer devastating interference. This suggests a rigid hierarchy in cognitive resource management.

Figure 10 illustrates the degradation patterns observed in the Gemma 3 4B model. The analysis highlights how the Gemma 3 4B architecture rapidly reaches operational limits when confronted with multiple tasks requiring sophisticated semantic processing. The ability to maintain some functionalities while others collapse completely indicates binary rather than graduated resource management. The Gemma 3 4B results demonstrate an architecture with strong fundamental capabilities but severe limitations in simultaneously managing complex semantic tasks, evidencing drastic trade-offs rather than gradual degradations under incremental cognitive pressure.

Figure 10.

Degradation overview for Gemma 3 4B across six tasks in Standard Order.

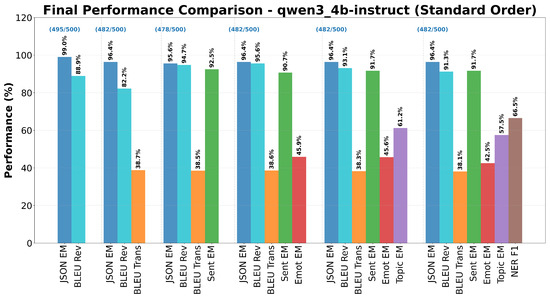

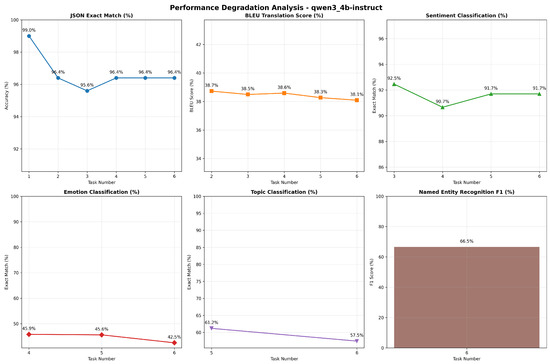

3.1.1. Qwen3 4B

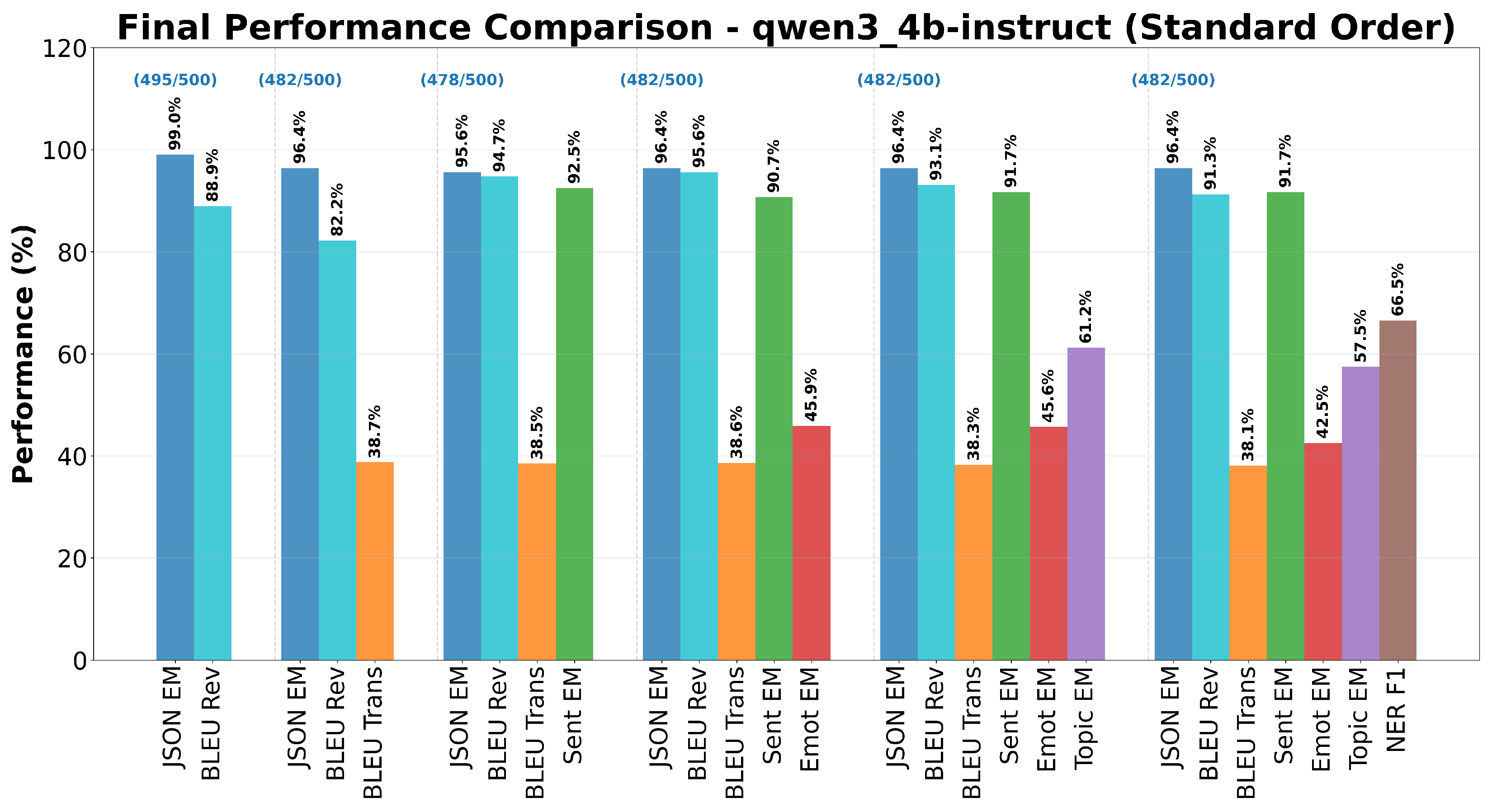

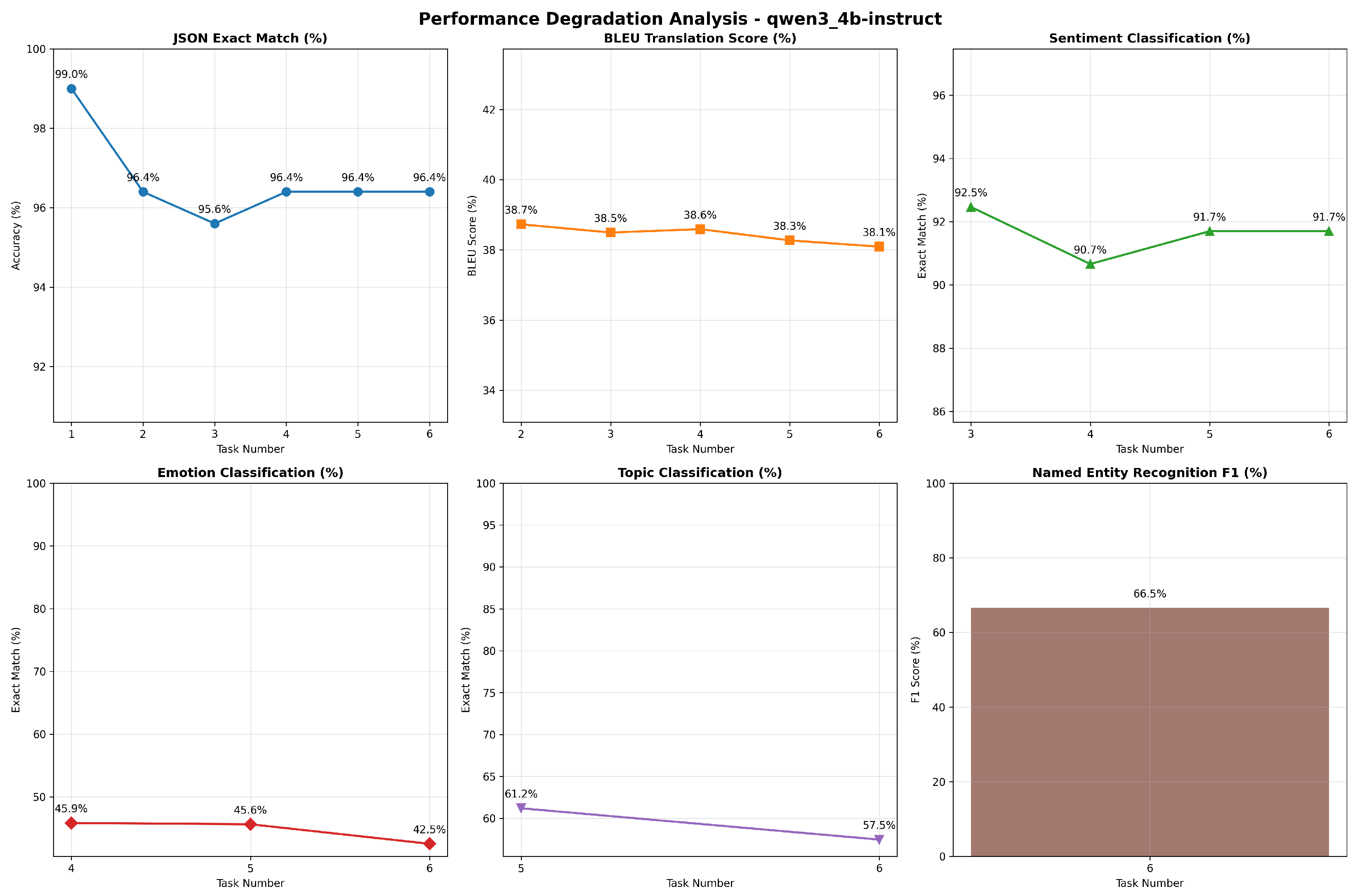

Figure 11 illustrates the performance of the Qwen3 4B model across the six-task incremental evaluation framework. The model achieved near-perfect baseline performance in JSON formatting with a exact match in Task 1, the highest initial structural formatting score observed. Performance remained remarkably stable across all configurations, between and throughout Tasks 2–6. The valid JSON count consistently remained between 478 and 495 samples, indicating that structural formatting is an exceptionally robust capability that resists interference from concurrent demands.

Figure 11.

Final performance comparison for Qwen3 4B across six tasks in Standard Order.

Machine Translation performance exhibited consistent high-quality output with notable stability. BLEU scores for translation maintained remarkable consistency around 38– across all multitask configurations from Task 2 through Task 6, showing minimal variation ( to ). This exceptional stability suggests that translation capabilities are well-insulated from interference effects generated by additional semantic processing requirements.

Sentiment Analysis demonstrated excellent stability across all multitask configurations, with exact match scores ranging from to ; the minimal variation in performance indicates that binary sentiment classification represents a highly consolidated capability within the architecture, showing strong resistance to degradation under increasing prompt complexity.

Emotion Analysis showed moderate but consistent performance across task configurations, remaining stable around – from Task 4 through Task 6 and thus resisting the interference effects that severely impacted other models. This stability suggests effective resource management for fine-grained emotional discrimination.

Topic Extraction maintained robust performance levels, achieving in Task 5 and in Task 6, representing only a percentage point decrease. This minimal degradation indicates that thematic categorization capabilities remain well-preserved even when combined with Named Entity Recognition tasks, suggesting efficient cognitive resource allocation.

Finally, as detailed in Table 8, Qwen3 4B shows the highest recall scores across all models, particularly for the PERSON () and LOC () categories, indicating a more balanced approach to entity recognition that captures a larger proportion of true entities. Only 14 entities out of 3322 () were classified as out of scope, the lowest count among all evaluated models, indicating closer adherence to the requested entity categories within the multitask context. NER achieved a strong F1 score of in the complete six-task configuration, the highest NER performance among the evaluated models. This indicates that the model maintains excellent entity identification and categorization abilities even under maximum load.
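The out-of-scope counts reported above can be placed on a common scale by dividing each count by the corresponding total number of extracted entities. The snippet below reuses only the counts stated in this section (DeepSeek R1 7B is omitted because its count is not restated here) and assumes that the second number of each pair is the denominator of the ratio.

```python
# Out-of-scope entity counts and totals as stated in the text: (count, total).
OUT_OF_SCOPE = {
    "Granite 3.1 3B": (46, 3424),
    "Llama 3.1 8B": (43, 3073),
    "Mistral 7B": (118, 3135),
    "Gemma 3 4B": (85, 3228),
    "Qwen3 4B": (14, 3322),
}

def out_of_scope_ratios(counts):
    """Return (model, percentage) pairs sorted from lowest to highest ratio."""
    ratios = {model: 100 * c / total for model, (c, total) in counts.items()}
    return sorted(ratios.items(), key=lambda item: item[1])

for model, pct in out_of_scope_ratios(OUT_OF_SCOPE):
    print(f"{model:<16} {pct:5.2f}%")
```

Sorting by ratio rather than by raw count matters because the total number of extracted entities differs across models; here the ordering runs from Qwen3 4B (lowest) to Mistral 7B (highest), consistent with the text.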

Table 8.

NER performance obtained by Qwen 3 4B.

The results shown in Figure 12 reveal exceptional stability under increasing task complexity. Unlike other models, which showed dramatic degradation in specific areas, Qwen3 4B maintained functional performance across all evaluated tasks simultaneously, with gradual rather than catastrophic degradation. The performance profile points to highly effective resource allocation within the architecture: the model sustains high performance across diverse task types without the severe trade-offs observed in other architectures, suggesting effective attention and resource distribution mechanisms. Its consistently high performance across six diverse NLP tasks performed concurrently, together with the absence of catastrophic interference, indicates a robust capacity to manage competing demands for attention and processing resources. Overall, the Qwen3 4B results describe an architecture well suited to multitask scenarios, with exceptional stability across task types and resource management that enables high-quality output even under maximum complexity.

Figure 12.

Degradation overview for Qwen3 4B across six tasks in Standard Order.

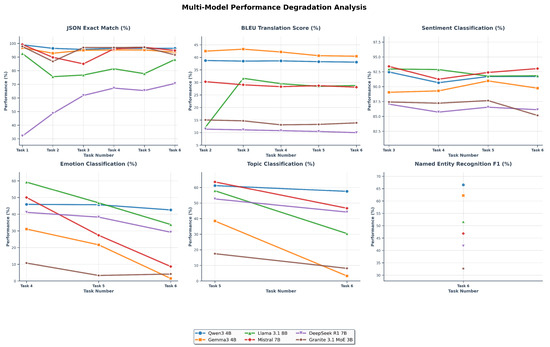

3.1.2. Overall Performance Comparison

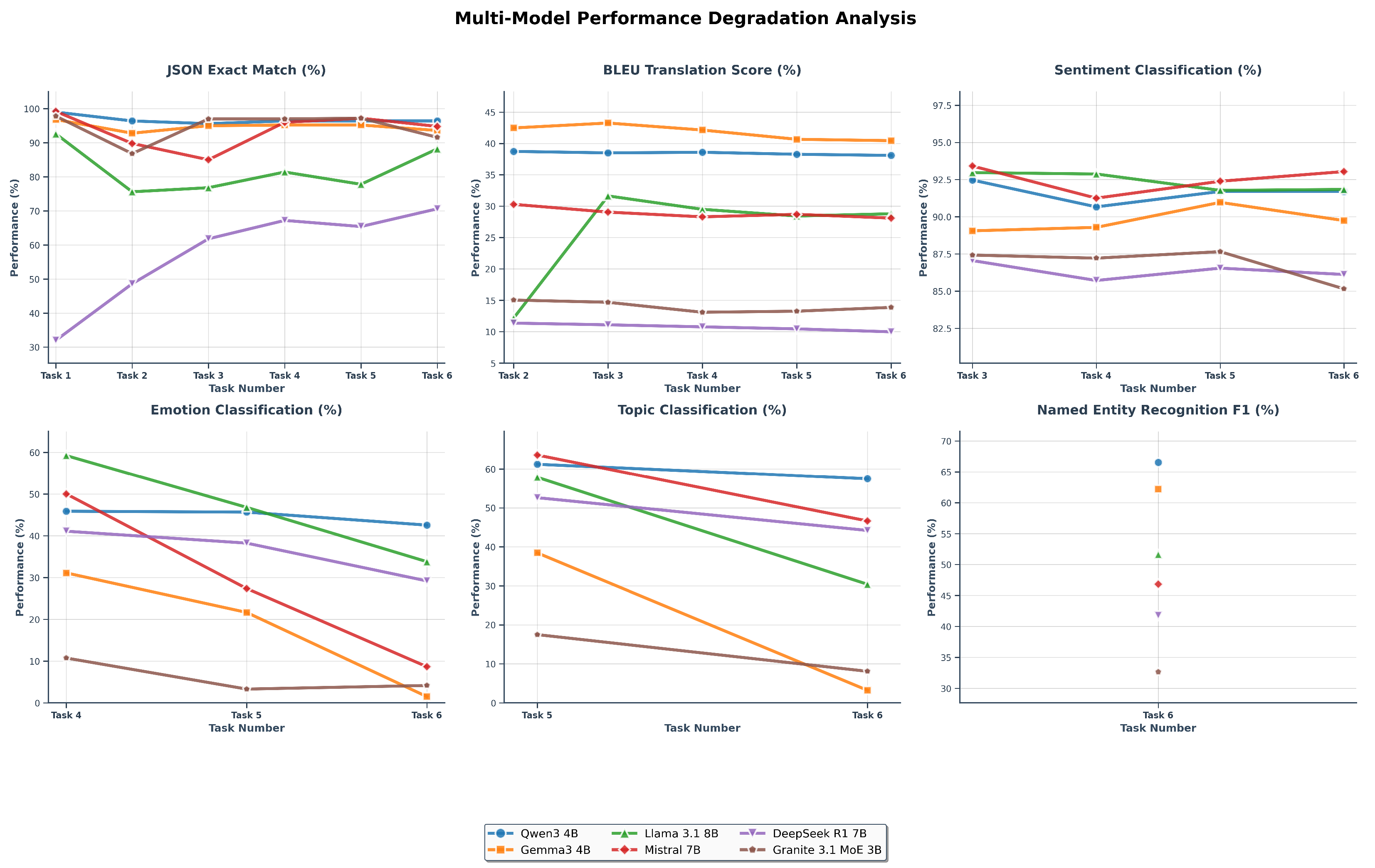

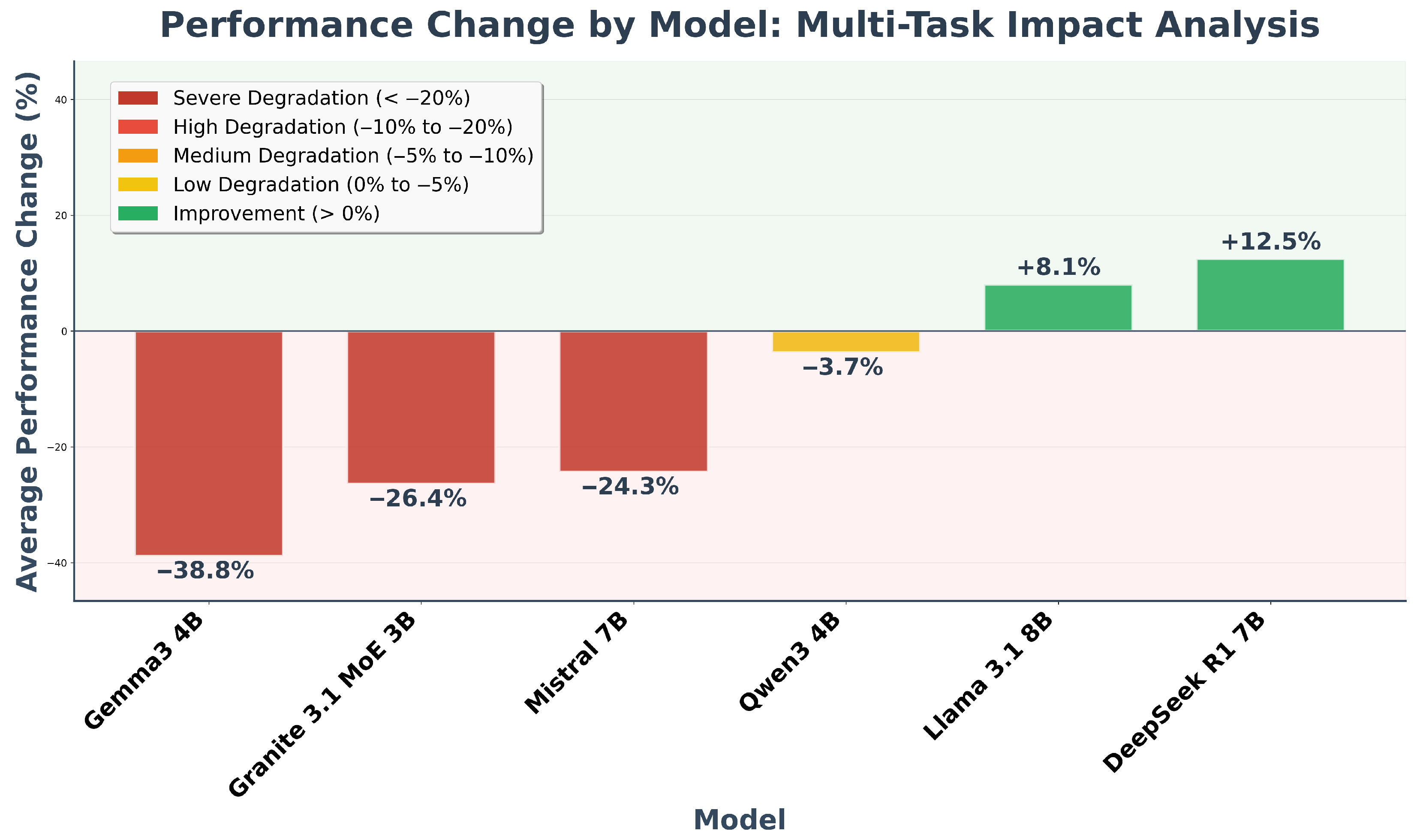

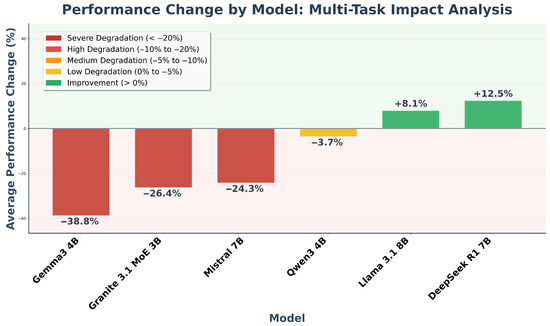

The multi-model performance analysis reveals a high degree of heterogeneity in how language models handle task sequence transitions, as reported in Figure 13. The task-specific view shows that performance degradation is not uniform across tasks. While highly structured generative tasks, such as JSON Exact Match, remain relatively stable, classification tasks, particularly Emotion Classification and Topic Extraction, are highly susceptible to large performance drops as models progress through the task sequence. This suggests that task structure and susceptibility to cross-task interference (loosely analogous to catastrophic forgetting) are major determinants of multitask vulnerability. The vulnerability is most pronounced in smaller models such as Gemma 3 4B () and Granite 3.1 MoE 3B (), which degrade severely from the initial to the final task.

Figure 13.

Overall performance degradation overview across six tasks for all evaluated models.

In contrast to this degradation, the overall performance change analysis highlights a critical split in model behavior, as shown in Figure 14. A subset of models, most notably DeepSeek R1 7B () and Llama 3.1 8B (), exhibited an average performance improvement across the task sequence. This counter-intuitive outcome may reflect positive transfer or knowledge synergy arising from their architectures and training methodologies, whereby earlier tasks in the prompt implicitly benefit performance on later ones. The stability of Qwen3 4B () further emphasizes that architecture and training regimen are pivotal factors in multitask resilience, with outcomes polarized between catastrophic degradation and beneficial knowledge transfer.
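The section does not spell out how the overall performance change is aggregated. One plausible definition, assumed here purely for illustration, averages each task's change between the first and the last configuration in which it appears, after mapping all metrics onto a common 0 to 1 scale (for example, dividing BLEU by 100). The function name and data layout are hypothetical.

```python
def mean_task_change(history, scale=None):
    """Average (last - first) change per task.

    history: task name -> scores, one per configuration that includes the task.
    scale: optional task name -> divisor mapping that task's scores onto [0, 1].
    """
    scale = scale or {}
    deltas = []
    for task, scores in history.items():
        if len(scores) < 2:
            continue  # a task seen in a single configuration has no change to measure
        divisor = scale.get(task, 1.0)
        deltas.append((scores[-1] - scores[0]) / divisor)
    return sum(deltas) / len(deltas) if deltas else 0.0

# Hypothetical scores for one model; BLEU is on a 0-100 scale.
history = {"json_em": [0.95, 0.90, 0.92], "bleu": [30.0, 35.0], "ner_f1": [0.6]}
print(round(mean_task_change(history, {"bleu": 100.0}), 3))
```

Normalizing before averaging matters: without it, a 5-point BLEU gain would outweigh any change in accuracy-based metrics, and a model could appear to improve overall even while its classification tasks degrade.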

Figure 14.

Overall performance change analysis across six tasks for all evaluated models in Standard Order.

3.2. Inverted Order Results

To assess whether task sequence influences multitask performance, we conducted an additional experiment in which the order of task introduction was reversed. The inverted sequence was as follows:

- JSON Formatting: we kept JSON formatting as the first task because it is a precondition for the subsequent tasks.

- Sentiment Analysis: we moved Sentiment Analysis from third to second place.

- Emotion Analysis: moved from fourth to third place.

- Topic Extraction: moved from fifth to fourth place.

- NER: moved from sixth (last) to fifth place.

- Machine Translation (English-to-Italian): shifted from second to sixth and last position.
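The incremental construction of the two task orders can be summarized in code. In the sketch below, the task lists follow the standard and inverted orders described above, while the prompt template and helper names are hypothetical and do not reproduce the prompts actually used in the study.

```python
# Task lists follow the orders described in the text; the prompt wording is hypothetical.
STANDARD_ORDER = [
    "JSON formatting",
    "English-to-Italian machine translation",
    "sentiment analysis",
    "emotion analysis",
    "topic extraction",
    "named entity recognition",
]
# Inverted order: JSON stays first, translation moves from second to last.
INVERTED_ORDER = [STANDARD_ORDER[0]] + STANDARD_ORDER[2:] + [STANDARD_ORDER[1]]

def incremental_configurations(order):
    """Task 1 uses only the first task; Task k combines the first k tasks."""
    return [order[:k] for k in range(1, len(order) + 1)]

def build_prompt(review, tasks):
    """Assemble a single multitask prompt for one movie review."""
    lines = ["Perform the following tasks on the movie review and answer with one JSON object:"]
    lines += [f"{i}. {task}" for i, task in enumerate(tasks, start=1)]
    lines.append(f"Review: {review}")
    return "\n".join(lines)

for config in incremental_configurations(INVERTED_ORDER):
    print(len(config), "->", config[-1])
```

Generating every configuration from a single ordered list guarantees that each step adds exactly one task to the previous prompt, so performance differences between consecutive steps can be attributed to the newly added task.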

The sequence was chosen arbitrarily rather than by a formal criterion, with the aim of testing the hypothesis that placing structurally simpler tasks earlier might alleviate cognitive load and improve overall performance. In this section, we analyze the results obtained in the inverted task order scenario for the same models evaluated previously.

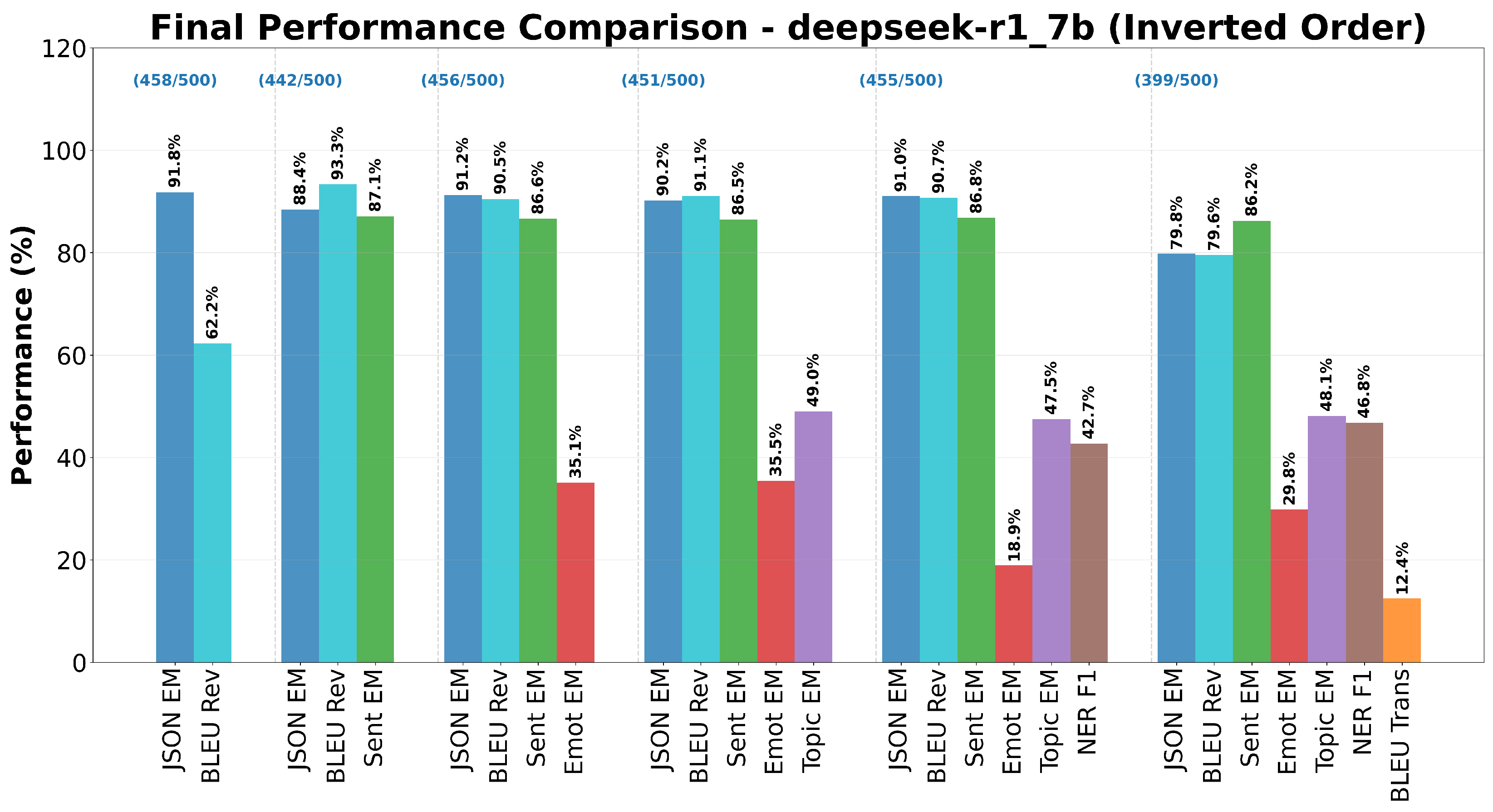

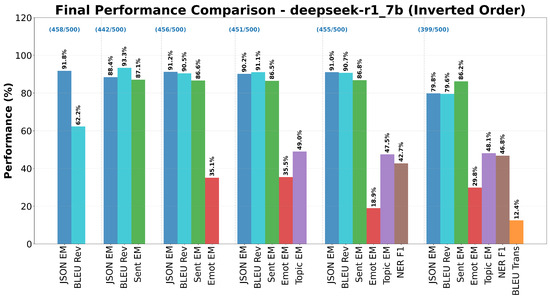

Under the inverted task sequence, DeepSeek R1 7B displayed similar behavioral trends to the standard order but with accentuated volatility in fine-grained semantic tasks. Figure 15 summarizes the final performance across all six tasks.

Figure 15.

Final performance comparison for DeepSeek R1 7B across six tasks in Inverted Order.

JSON Formatting, still introduced first, reached exact-match accuracy, slightly below its peak in the standard sequence, suggesting that the model benefits marginally from the structural reinforcement of later tasks. Sentiment Analysis maintained stable performance (–), whereas Emotion Analysis degraded sharply once NER was introduced, falling from to . Topic Extraction remained moderately stable (–), while Translation, now the final task, recovered partially () compared with previously. Overall, changing the order slightly alleviated the translation decline but accentuated the degradation in emotion classification, suggesting that DeepSeek R1 7B’s multitask fragility stems primarily from semantic interference rather than sequence positioning.

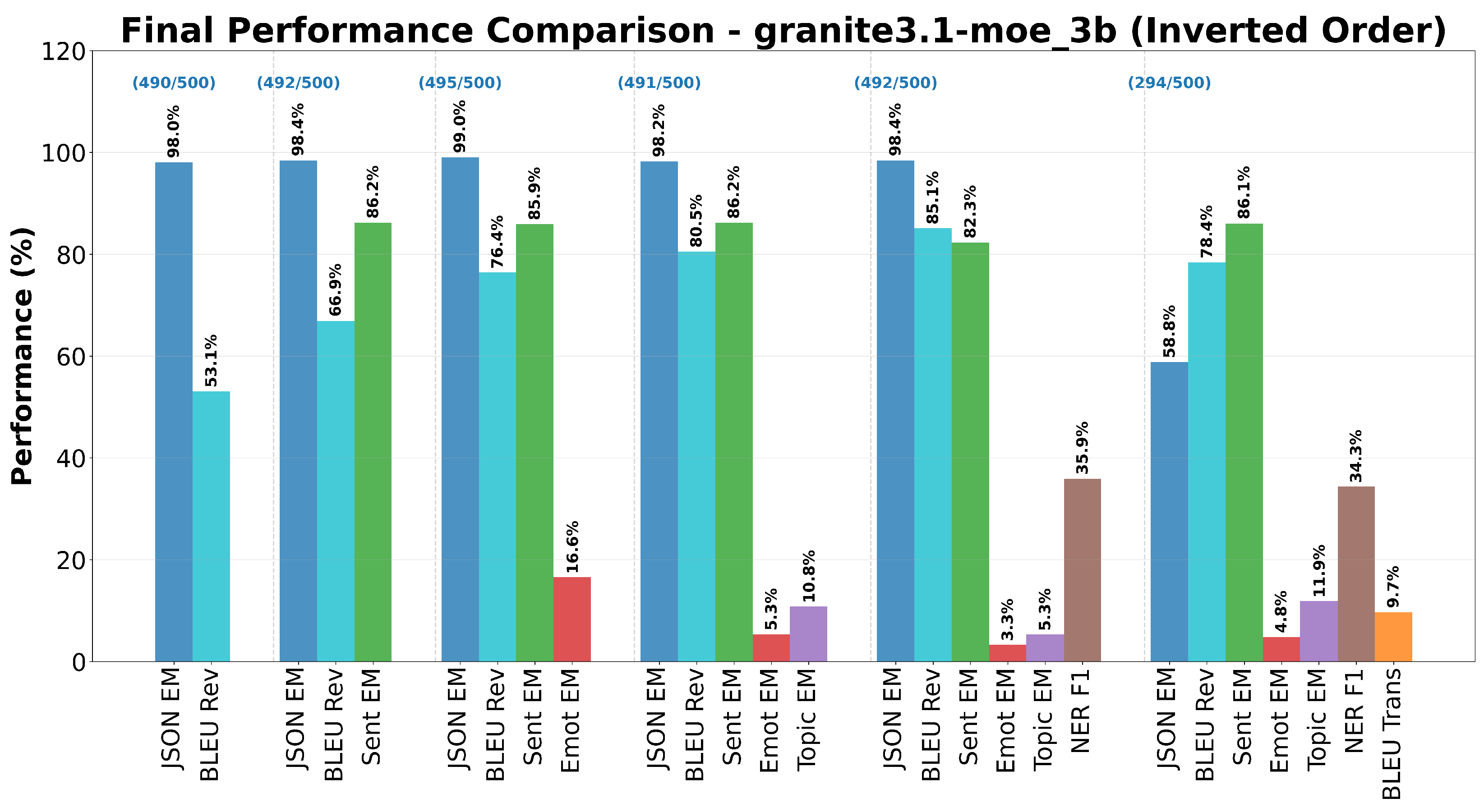

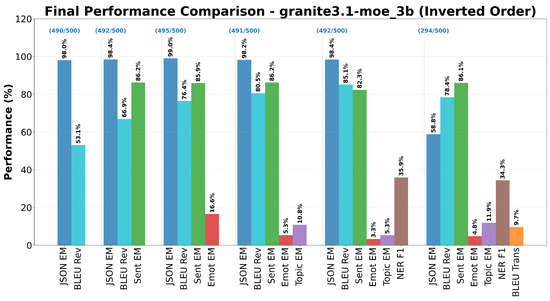

Granite 3.1 3B exhibited consistent degradation regardless of sequence direction, reinforcing its sensitivity to multitask interference. The final performance comparison is illustrated in Figure 16.

Figure 16.

Final performance comparison for Granite 3.1 3B across six tasks in Inverted Order.

JSON Formatting retained high performance () but showed increased variance () compared with the standard order. Sentiment Analysis remained stable around , whereas Emotion Analysis and Topic Extraction again collapsed to single-digit accuracies ( and , respectively). Placing NER before Translation yielded marginal gains in entity recognition ( vs. ) but did not prevent loss propagation to semantic subtasks. Translation, executed last, improved modestly (), suggesting that isolating the generative load at the end of the sequence mitigates—but does not resolve—interference.

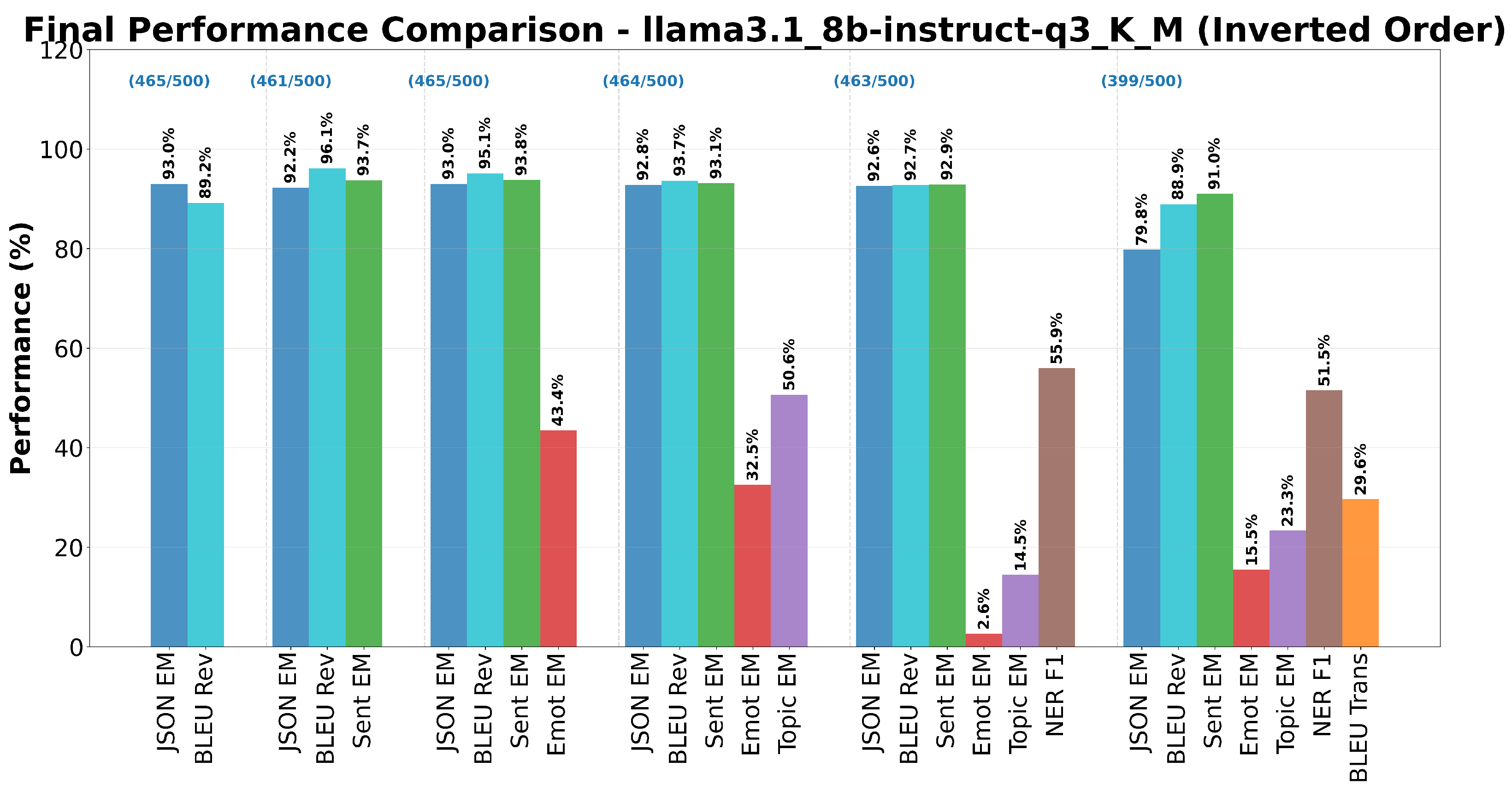

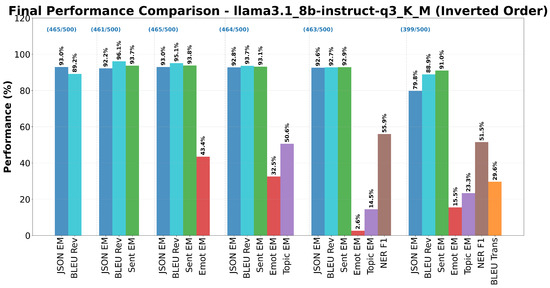

Inverted sequencing induced mixed but interpretable effects in Llama 3.1 8B, as shown in Figure 17.

Figure 17.

Final performance comparison for Llama 3.1 8B across six tasks in Inverted Order.

JSON Formatting remained strong (), confirming structural resilience. Placing the Sentiment and Emotion tasks earlier produced smoother degradation curves: Emotion Analysis declined from to , an improvement of points compared with the standard order. Topic Extraction also benefited ( vs. ), while Translation, now appended last, dropped slightly ( vs. ). NER F1 remained comparable (). The overall trend suggests that introducing semantically related tasks earlier provides contextual scaffolding that stabilizes affective reasoning but slightly penalizes generative precision.

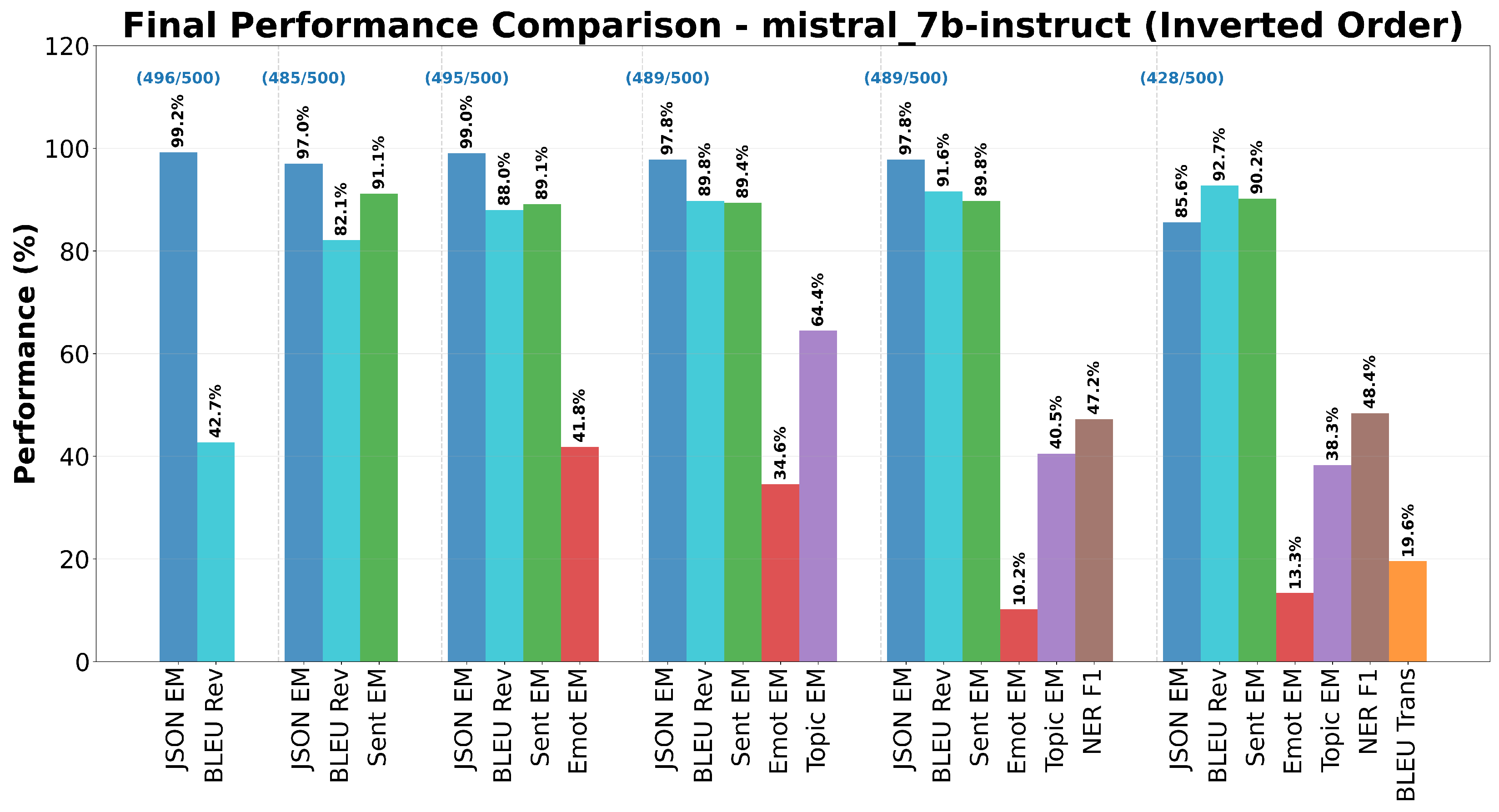

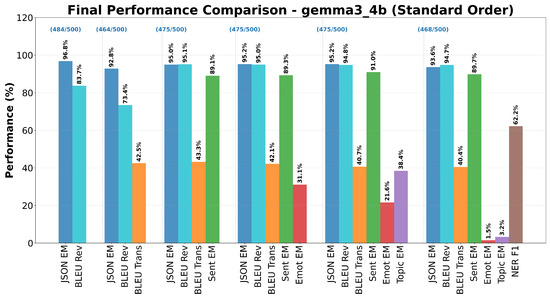

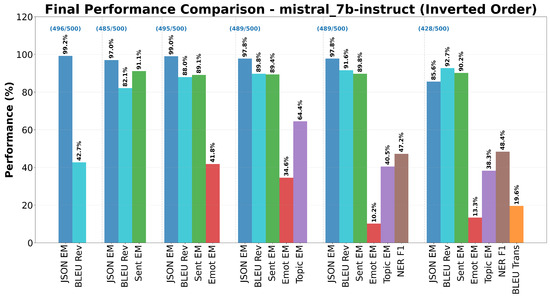

Mistral 7B preserved its general robustness in the reversed setup.

As reported in Figure 18, JSON Formatting accuracy remained high (), and Sentiment Analysis held steady (). Notably, Emotion Analysis improved markedly, reaching in the final configuration compared with previously, indicating that placing this task earlier reduces interference from NER. Topic Extraction likewise improved slightly ( vs. ), while NER F1 remained similar (). Translation, which concludes the sequence, showed a mild reduction ().

Figure 18.

Final performance comparison for Mistral 7B across six tasks in Inverted Order.

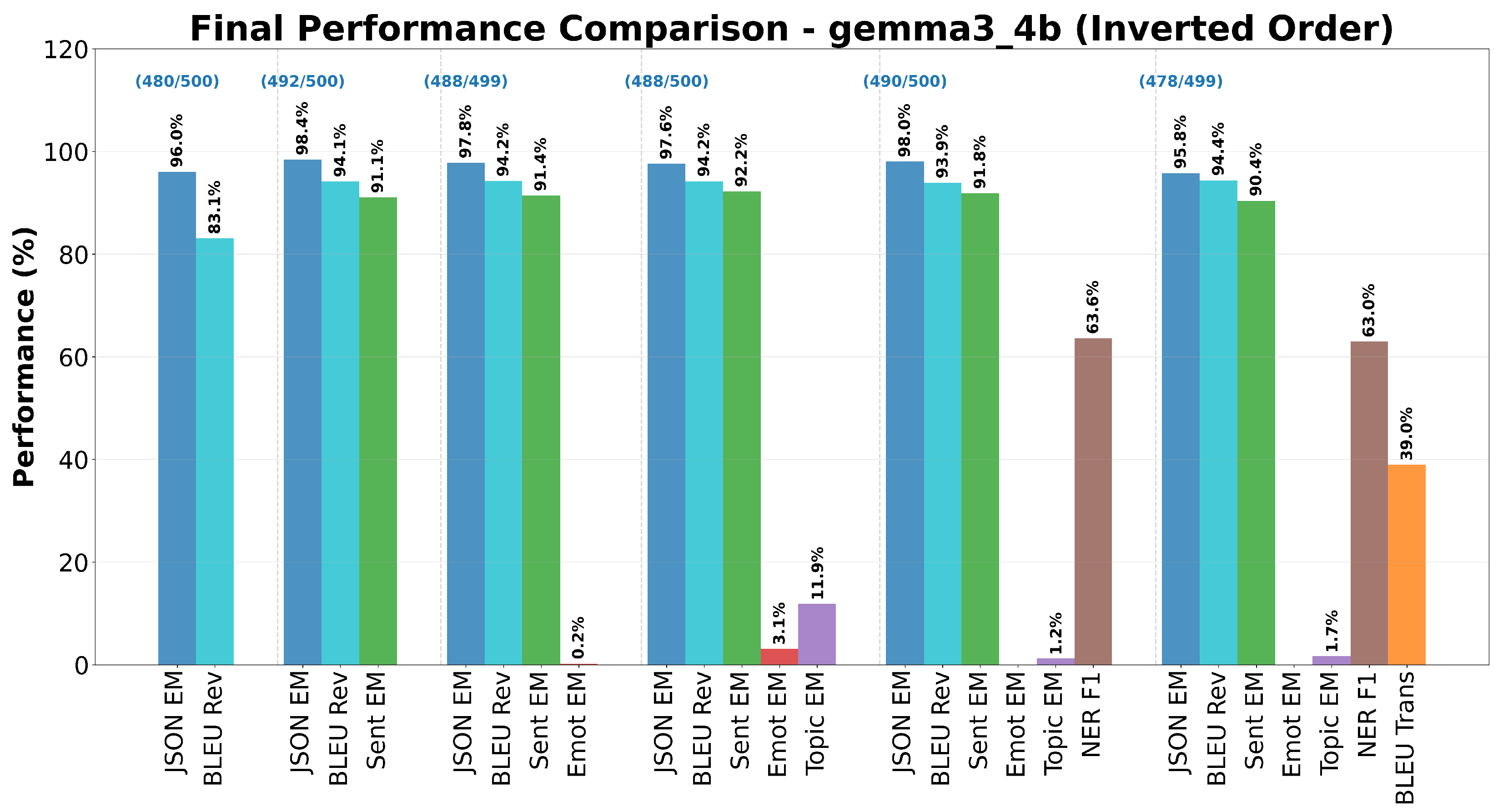

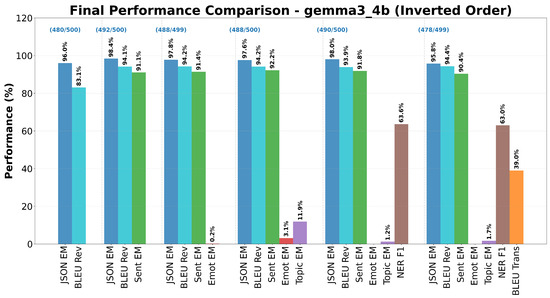

Gemma 3 4B again revealed catastrophic degradation, albeit with subtle shifts in which tasks collapsed first.

Figure 19 illustrates that JSON Formatting remained solid (), but Emotion Analysis degraded earlier (from to ), while Topic Extraction declined to . NER reached an F1 of ( points vs. the standard order), and Translation, now the final task, improved slightly ().

Figure 19.

Final performance comparison for Gemma 3 4B across six tasks in Inverted Order.

Qwen3 4B remained the most stable architecture under order inversion. JSON Formatting preserved near-perfect accuracy (), Sentiment Analysis reached , Emotion Analysis , and Topic Extraction . NER F1 was , virtually identical to the standard run, while Translation scored . Degradation slopes across steps were nearly flat, suggesting that Qwen3 4B’s balanced attention and memory gating mitigate interference effects irrespective of sequence.

In summary, the inverted-order experiment revealed no qualitative change in degradation dynamics. While minor quantitative shifts emerged—particularly improved emotion recognition in Llama 3.1 and Mistral 7B and slight translation recovery in DeepSeek R1 and Granite 3.1—overall stability rankings remained unchanged. Qwen 3 4B preserved top-level consistency, Gemma 3 4B experienced early semantic collapse, and MoE architectures continued to show amplified volatility. These outcomes suggest that model-intrinsic resource allocation mechanisms, rather than prompt sequence, principally determine multitask degradation behavior.

3.3. Statistical Analysis

To verify the robustness of the observed performance, a comprehensive statistical analysis was conducted, including both normality tests and model comparisons. As reported in Tables 9–14, the Shapiro–Wilk test revealed that most metric distributions do not follow a normal distribution ( in the majority of cases), indicating the need for non-parametric tests. The Friedman test was then applied to assess differences between models.

Table 9.

Shapiro–Wilk normality test results for DeepSeek R1 7B.

Table 10.

Shapiro–Wilk normality test results for Gemma3 4B.

Table 11.

Shapiro–Wilk normality test results for Granite 3.1 3B.

Table 12.

Shapiro–Wilk normality test results for Llama 3.1 8B.

Table 13.

Shapiro–Wilk normality test results for Mistral 7B.

Table 14.

Shapiro–Wilk normality test results for Qwen3 4B.

The Shapiro–Wilk test was applied to each model-metric combination to assess whether the performance distributions follow a normal distribution.

The results of the Friedman test, presented in Table 15, show statistically significant differences for several metrics: JSON Exact Match (, best model Qwen3 4B), Emotion Accuracy (, best model Qwen3 4B), BLEU score on translation (, best model Gemma 3 4B), BLEU score on review reporting (, best model Gemma 3 4B), and Sentiment Analysis Accuracy (, best model Mistral 7B). In contrast, NER F1 () and Topic Extraction Accuracy () did not show significant differences among the models considered.
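The Friedman test ranks the models within each block and compares their rank sums. The pure-Python sketch below computes the statistic Q = 12 / (n k (k + 1)) · Σ R_j² − 3 n (k + 1) for n blocks and k models, which is approximately chi-square distributed with k − 1 degrees of freedom. Since the tests in this section were run on aggregated task-level means, the sketch assumes, for illustration only, that rows are task configurations and columns are models; the scores are hypothetical. It omits the tie correction that library implementations such as `scipy.stats.friedmanchisquare` may apply.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = lowest), averaging the ranks of ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks

def friedman_statistic(blocks):
    """Return (Q, rank_sums) without tie correction; blocks is an n x k score matrix."""
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    sum_sq = sum(r * r for r in rank_sums)
    q = 12 * sum_sq / (n * k * (k + 1)) - 3 * n * (k + 1)
    return q, rank_sums

# Upper 5% point of the chi-square distribution with 5 degrees of freedom
# (k - 1 = 5 when comparing six models).
CHI2_CRIT_DF5_ALPHA05 = 11.0705

# Hypothetical scores: 4 configurations (rows) x 6 models (columns).
scores = [
    [0.91, 0.62, 0.75, 0.80, 0.70, 0.95],
    [0.88, 0.40, 0.70, 0.78, 0.66, 0.94],
    [0.90, 0.35, 0.72, 0.79, 0.60, 0.93],
    [0.86, 0.30, 0.69, 0.81, 0.58, 0.95],
]
q, sums = friedman_statistic(scores)
print(f"Q = {q:.2f}, significant at 5%: {q > CHI2_CRIT_DF5_ALPHA05}")
```

Because the test only uses within-block ranks, it is insensitive to the different scales of accuracy and BLEU, which is one reason it suits the non-normal distributions reported above; the price is that it says nothing about the size of the differences between models.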

Table 15.

Friedman test results (multi-model comparison).

It is important to note that both the Shapiro–Wilk and Friedman tests were applied to aggregated task-level metrics (mean scores per task and model), rather than per-sample distributions. This approach was chosen to ensure computational feasibility and consistency across models, but it inherently limits the granularity of the statistical inference. Future analyses could extend these tests to per-sample scores to better capture within-task variability and potential heteroscedastic effects across configurations. As the statistical tests operate on aggregated means, p-values should be interpreted as indicators of between-model trends rather than strict per-sample significance levels.

The power analysis, based on a sample size of per configuration, confirmed that the experimental design ensures adequate statistical sensitivity, sufficient to detect medium-to-small effects with . Overall, the results indicate that some tasks sharply discriminate the capabilities of the models (particularly JSON Exact Match, BLEU score on Translation, and Sentiment Analysis Accuracy), whereas others (e.g., NER F1 and Topic Extraction Accuracy) do not reveal relevant differences. This suggests that the choice of the most suitable model should be guided not only by aggregate metrics but also by the type of task and the application context.

4. Discussion

This Discussion contextualizes the heterogeneous multitask behaviors observed across models, synthesizing how task ordering, interference patterns, and architectural design jointly shape degradation or transfer effects. We first examine sequence effects, then relate stability profiles to putative architectural mechanisms, and finally outline implications for adaptive prompt composition and future evaluation methodology. Our findings reveal that performance degradation under multitask prompting is not uniform across tasks or architectures, but follows identifiable patterns that highlight both model-specific strengths and vulnerabilities.

Across the models evaluated in both orders, the inverted sequence did not introduce fundamentally new degradation dynamics but rather amplified existing tendencies. For instance, Granite 3.1 3B remained highly sensitive to multitask interference, while Qwen3 4B preserved stable performance with only minor losses. This suggests that the primary driver of degradation lies in architectural characteristics rather than task order itself, with order effects modulating but not redefining each model’s inherent stability profile.

Although this study explored two representative task order configurations (standard and inverted), the space of possible task sequences grows factorially with the number of subtasks (six subtasks admit 6! = 720 orderings, or 5! = 120 if JSON formatting is kept first), making exhaustive evaluation computationally impractical. As such, the present findings capture only a subset of potential order effects. Future research should systematically investigate additional permutations, such as Sentiment-first or Translation-first sequences, to determine whether degradation dynamics depend on the initial task type or on the semantic relationships between consecutive subtasks.

Our results indicate that structural and deterministic tasks, such as JSON formatting and binary sentiment classification, are generally more resilient to increasing prompt complexity than fine-grained semantic tasks like Emotion Analysis or Topic Extraction. This resilience likely stems from their lower ambiguity and clearer decision boundaries, which leave less room for interference from competing tasks. However, this trend is not universal: for example, DeepSeek R1 7B showed an atypical improvement in JSON formatting as complexity increased. More broadly, the notion of “complexity” used in human-centered task descriptions does not map directly to LLM processing. While we treat complexity in terms of cognitive load or fine-grained semantic discrimination, LLMs operate through statistical associations and causal language modeling objectives, so what is “complex” for humans may not align with what is “complex” for these models. This gap underscores the need for caution when transferring cognitive terminology to the evaluation of LLM performance.

Our results indicate that no universal golden rule governs performance behavior under incremental multitask prompting across current LLM families. Rather than exhibiting a consistent monotonic degradation or predictable pattern of interference, each model manifests a distinct interaction profile shaped by architectural biases, training regime, and internal resource allocation strategies. Improvements in one task (e.g., translation gains in Llama 3.1 after sentiment conditioning) co-exist with sharp collapses in others (e.g., emotion and topic erosion in Gemma 3 4B once NER is introduced), while models such as Qwen3 4B maintain broad stability with only marginal cross-task trade-offs. This heterogeneity suggests that multitask prompt composition cannot be reliably optimized through static heuristics (e.g., ordering simple before complex tasks or privileging structural tasks first); instead, effectiveness appears model-contingent and emergent. Collectively, these findings caution against prescriptive, model-agnostic prompting strategies in multitask settings and motivate adaptive, diagnostics-driven approaches that infer a model’s interference topology before deployment.

The present findings are based on the IMDB movie review dataset, which primarily reflects informal and creative language. While this domain is suitable for studying the interaction between semantic diversity and task interference, it inherently limits generalizability to other textual genres. In non-creative domains such as legal contracts, scientific abstracts, or biomedical reports, linguistic entropy is arguably lower and semantic density higher, so task-specific features may be more stable and less contextually dispersed. Under these conditions, structural and semantic resilience to multitask prompting may manifest differently: degradation could be less pronounced for classification-oriented tasks but potentially more pronounced for generation or reasoning tasks. Future studies should therefore test whether the observed patterns of task interference and relative degradation hold across text types with lower lexical variability and stronger structural regularities.
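The notions of “linguistic entropy” and lexical variability used above can be made operational in several ways. A minimal sketch, assuming a naive lowercase word tokenizer (not the tokenizer of any evaluated model), computes the Shannon entropy of the unigram distribution and the type-token ratio of a text; `lexical_profile` is a hypothetical helper and not part of the study’s code.

```python
import math
import re
from collections import Counter


def lexical_profile(text: str) -> tuple[float, float]:
    """Return (unigram Shannon entropy in bits, type-token ratio) for a text.

    Uses a naive lowercase word tokenizer, which is an illustrative assumption.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0, 0.0
    counts = Counter(tokens)
    n = len(tokens)
    # H = -sum over word types of p(w) * log2 p(w)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    # Number of distinct word types divided by the number of tokens
    ttr = len(counts) / n
    return entropy, ttr


review = "What a film! The acting was superb and the score was haunting."
abstract = "The protein binds the receptor. The receptor activates the protein."
print(lexical_profile(review))
print(lexical_profile(abstract))
```

Both measures grow or shrink with text length, so any cross-domain comparison along these lines would need equal-length samples (for instance, fixed token windows).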

It should be noted that all evaluated models share the same Transformer foundation, differing mainly in scale and parameter sparsity (e.g., dense vs. MoE configurations). Therefore, the conclusions regarding architecture-dependent degradation are restricted to this family of models. Architectures that incorporate retrieval mechanisms, extended context windows, or explicit reasoning modules may exhibit different resilience patterns under multitask prompting, and should be investigated in future work.
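The dense versus MoE distinction can be illustrated with a toy example. In a dense feed-forward block every parameter participates in processing every token; in a sparse MoE layer a gating function scores all experts, but only the top k are executed and their outputs are mixed with renormalized gate weights. The pure-Python sketch below assumes experts are plain functions and that gate logits are supplied directly rather than computed by a learned projection; `top_k_gate` and `moe_forward` are hypothetical names, and the code does not reproduce the routing of any model evaluated here.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def top_k_gate(logits: Sequence[float], k: int) -> dict[int, float]:
    """Keep the k largest gate logits and softmax-normalize them."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: Vector, experts: Sequence[Callable[[Vector], Vector]],
                gate_logits: Sequence[float], k: int) -> tuple[Vector, list[int]]:
    """Mix the outputs of the k selected experts; unselected experts are never run."""
    weights = top_k_gate(gate_logits, k)
    out = [0.0] * len(x)
    for i, w in weights.items():
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, sorted(weights)


# Four toy experts, each a simple elementwise map
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
    lambda v: [a * a for a in v],
]
y, active = moe_forward([1.0, 2.0], experts, gate_logits=[0.1, 2.0, -1.0, 1.5], k=2)
print(active)  # [1, 3]: experts 0 and 2 are never executed
```

With k equal to the number of experts, every expert contributes to every input, as in a dense layer; with k smaller than that, the total parameter count exceeds the parameters active per token, which is the sense of “parameter sparsity” used above.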

5. Conclusions

This work introduced an incremental multitask prompting evaluation framework across six heterogeneous NLP tasks and six LLM families, revealing that performance degradation is architecture-contingent rather than governed by a universal heuristic. Instead of a monotonic loss profile, we observed model-specific interference signatures ranging from severe collapse (e.g., Gemma 3 4B in fine-grained semantic tasks) to near-stable retention (Qwen3 4B) and selective positive transfer (Llama 3.1 8B translation gains under sentiment co-conditioning). These findings reinforce the view that static prompt composition rules (e.g., “simpler first” and “structural before semantic”) are insufficient for robust multitask deployment and should be replaced by adaptive, diagnostics-driven strategies.

This study offers a controlled incremental task protocol that separates cumulative from stepwise interference, enabling fine-grained attribution of degradation dynamics. A cross-family comparison spanning dense and MoE Transformer architectures exposes divergent resilience profiles rather than scale-driven regularities. All six tasks draw on the same IMDB review subset, which removes cross-corpus variance and helps ensure that observed shifts are attributable to prompt accretion rather than domain drift. A partial task-order inversion experiment further probes ordering sensitivity, while fully local, API-independent execution supports reproducibility and rules out silent provider-side model changes. Collectively, these design choices provide a transparent, architecture-aware baseline for future multitask prompting research.
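The distinction between stepwise and cumulative interference can be expressed as two differences over the sequence of incremental prompts: for a task t at step k, the stepwise change is its score at step k minus its score at step k-1 (attributable to the task added at step k), and the cumulative change is its score at step k minus its score at the step where t was introduced. A minimal sketch, assuming scores are stored per step as a mapping from task name to a metric on a common scale, and that every task, once introduced, stays in all later prompts; `interference_deltas` is a hypothetical name and the numbers are made up, not results from this study.

```python
def interference_deltas(scores: list[dict[str, float]]) -> list[dict]:
    """For each prompt step, report the newly added task(s) and, for every task
    already present, its stepwise and cumulative score change."""
    first_score: dict[str, float] = {}
    rows = []
    for k, step in enumerate(scores):
        added = [t for t in step if t not in first_score]
        # Stepwise: change since the previous prompt, i.e. the effect of `added`
        stepwise = {t: round(v - scores[k - 1][t], 4)
                    for t, v in step.items() if t in first_score}
        # Cumulative: change since the task first appeared in a prompt
        cumulative = {t: round(v - first_score[t], 4)
                      for t, v in step.items() if t in first_score}
        for t in added:
            first_score[t] = step[t]
        rows.append({"step": k + 1, "added": added,
                     "stepwise": stepwise, "cumulative": cumulative})
    return rows


# Made-up scores for three incremental prompts (illustrative only)
toy = [
    {"json": 1.00},
    {"json": 0.98, "translation": 0.30},
    {"json": 0.99, "translation": 0.33, "sentiment": 0.90},
]
for row in interference_deltas(toy):
    print(row)
```

The two views can disagree: in the toy data, json has a positive stepwise change at step 3 while its cumulative change is still negative, i.e. it partially recovers from the drop caused by adding translation.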

The scope is constrained to a single domain (movie reviews), limiting extrapolation to technical, legal, biomedical, or multilingual settings. Reference translations were generated synthetically with GPT-4.1 and may encode stylistic bias in the absence of professionally curated parallel data. Weak supervision combined with only partial manual verification of emotion and topic labels introduces residual annotation noise that may lower the achievable performance ceiling. Evaluation breadth is narrow: translation relies solely on BLEU (omitting chrF, COMET, or hallucination auditing), and NER is summarized with a single span-level F1 without decomposing boundary and type errors. Architectural coverage is incomplete, excluding retrieval-augmented, long-context specialized, or explicit reasoning-enhanced variants. A single instruction template per task precludes analysis of phrasing sensitivity or compression trade-offs. Finally, the space of task orderings remains largely unexplored beyond one canonical sequence and a single inversion on two models, which prevents characterization of combinatorial interference surfaces.
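For reference, a span-level F1 of the kind summarized here can be computed by treating each entity as a (label, value) pair and counting exact matches. The minimal sketch below assumes entities in the label/value format described in Appendix A and normalizes values by trimming and case-folding (an assumption; the study’s matching rule may differ). It also counts type-only errors, one simple step toward the boundary/type decomposition noted above as missing. `span_f1` is a hypothetical helper, and the PER/LOC/ORG labels in the example are illustrative.

```python
from collections import Counter


def _pairs(entities):
    """Multiset of (label, normalized value) pairs."""
    return Counter((e["label"], e["value"].strip().casefold()) for e in entities)


def span_f1(gold, pred):
    """Exact-match span-level precision, recall, F1, plus a type-only error count.

    Empty inputs score 0.0 by convention here.
    """
    g, p = _pairs(gold), _pairs(pred)
    tp = sum((g & p).values())  # multiset intersection = exact (label, value) matches
    precision = tp / sum(p.values()) if p else 0.0
    recall = tp / sum(g.values()) if g else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Among unmatched items, a shared value means the span was found but mislabeled
    gold_left = Counter()
    for (_, value), n in (g - p).items():
        gold_left[value] += n
    pred_left = Counter()
    for (_, value), n in (p - g).items():
        pred_left[value] += n
    type_errors = sum((gold_left & pred_left).values())
    return precision, recall, f1, type_errors


gold = [{"label": "PER", "value": "Tom Hanks"}, {"label": "LOC", "value": "Paris"}]
pred = [{"label": "PER", "value": "tom hanks"}, {"label": "ORG", "value": "Paris"}]
print(span_f1(gold, pred))  # (0.5, 0.5, 0.5, 1)
```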

The absence of a generalizable degradation rule across architectures underscores the necessity of model-specific multitask governance. Rather than seeking a universal recipe, effective multitask prompting will likely rely on adaptive orchestration informed by empirical interference profiling. This study establishes a reproducible baseline for such profiling and motivates a shift from heuristic prompt stacking toward principled, measurement-driven composition.

Author Contributions

Conceptualization, F.D.M. and M.G.; methodology, F.D.M. and M.G.; software, F.D.M. and M.G.; validation, F.D.M. and M.G.; formal analysis, F.D.M. and M.G.; investigation, F.D.M. and M.G.; resources, F.D.M. and M.G.; data curation, F.D.M. and M.G.; writing—original draft preparation, F.D.M. and M.G.; writing—review and editing, F.D.M. and M.G.; visualization, F.D.M. and M.G.; supervision, F.D.M. and M.G.; project administration, F.D.M. and M.G.; funding acquisition, F.D.M. and M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the results reported in this study are available online in a publicly accessible GitHub repository. All datasets analyzed or generated during the research can be found at the following link: https://github.com/gozus19p/Comparative-Analysis-of-Prompt-Strategies-for-LLMs (accessed on 2 October 2025). We encourage further exploration and verification of our findings using these data.

Conflicts of Interest

The authors declare no conflicts of interest.

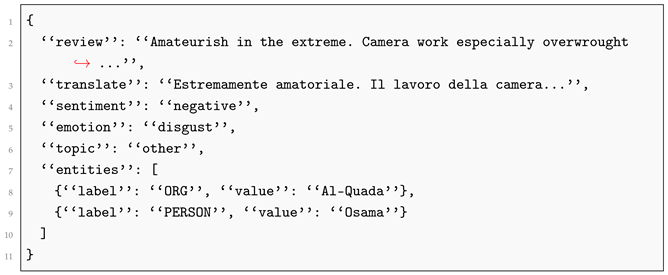

Appendix A. Dataset Structure Example

The dataset used contains movie reviews with the following columns:

- ID: Unique identifier for the review

- Review: Original review text in English

- Sentiment: Sentiment classification (positive/negative)

- Entities: List of extracted named entities (with label and value fields)

- Translation: Italian translation of the review (generated using GPT-4.1)

- Emotion: Emotion classification (joy, anger, sadness, disgust, neutral)

- Topic: Topic/genre classification (action, comedy, drama, horror, thriller, romance, sci-fi, fantasy, documentary, other)

- Row Number: Progressive row number

Listing A1. Example of a ground truth JSON from Dataset.
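The column list above implies a simple record schema. The following sketch shows one way a JSON record could be checked against it. The snake_case key names (`id`, `review`, `sentiment`, `entities`, `translation`, `emotion`, `topic`) are assumptions that mirror the column list rather than the exact keys used in the repository, Row Number is omitted as bookkeeping, and the label sets are copied from the list above; `validate_record` is a hypothetical helper.

```python
import json

SENTIMENTS = {"positive", "negative"}
EMOTIONS = {"joy", "anger", "sadness", "disgust", "neutral"}
TOPICS = {"action", "comedy", "drama", "horror", "thriller", "romance",
          "sci-fi", "fantasy", "documentary", "other"}
# Hypothetical key names mirroring the columns listed above (Row Number omitted)
REQUIRED_KEYS = ("id", "review", "sentiment", "entities", "translation", "emotion", "topic")


def validate_record(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the record conforms."""
    try:
        record = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return ["top-level value is not a JSON object"]
    problems = [f"missing key: {k}" for k in REQUIRED_KEYS if k not in record]
    # Closed label sets for the three classification fields
    for key, allowed in (("sentiment", SENTIMENTS), ("emotion", EMOTIONS), ("topic", TOPICS)):
        value = record.get(key)
        if key in record and (not isinstance(value, str) or value not in allowed):
            problems.append(f"{key} {value!r} not in allowed set")
    # Entities: a list of objects, each with label and value fields
    entities = record.get("entities", [])
    if not isinstance(entities, list) or not all(
        isinstance(e, dict) and "label" in e and "value" in e for e in entities
    ):
        problems.append("entities must be a list of objects with label and value")
    return problems


example = json.dumps({
    "id": 1, "review": "A gripping, well-acted thriller.", "sentiment": "positive",
    "entities": [], "translation": "Un thriller avvincente e ben recitato.",
    "emotion": "joy", "topic": "thriller",
})
print(validate_record(example))  # []
print(validate_record('{"sentiment": "mixed"}'))
```

Checks of this kind are deterministic: a record either parses and matches the closed label sets or it does not, which illustrates why structural compliance is easier to score than open-ended semantic judgments.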

Appendix B. GPT-4.1 Prompts

This section provides the exact prompt templates used with GPT-4.1 to generate reference translations and ground-truth annotations.

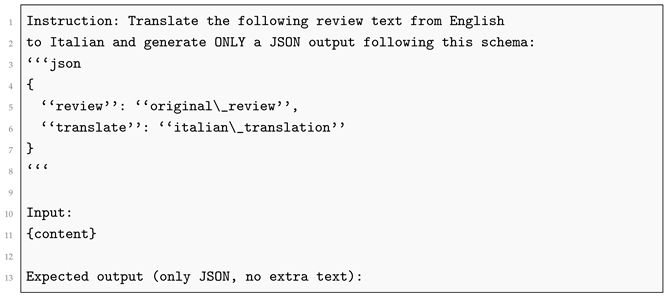

Listing A2. Prompt used to translate review text from English to Italian.

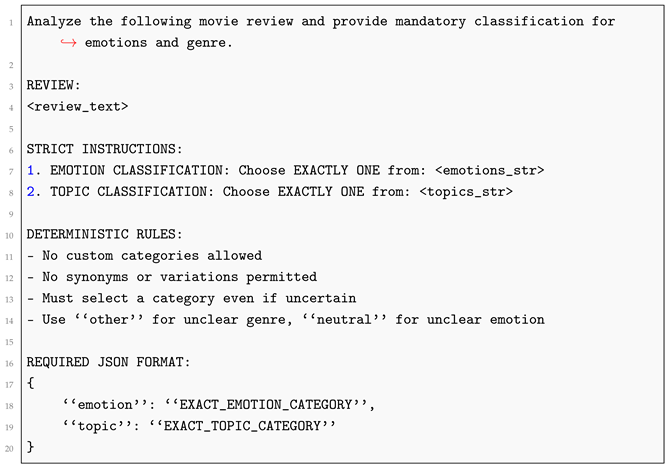

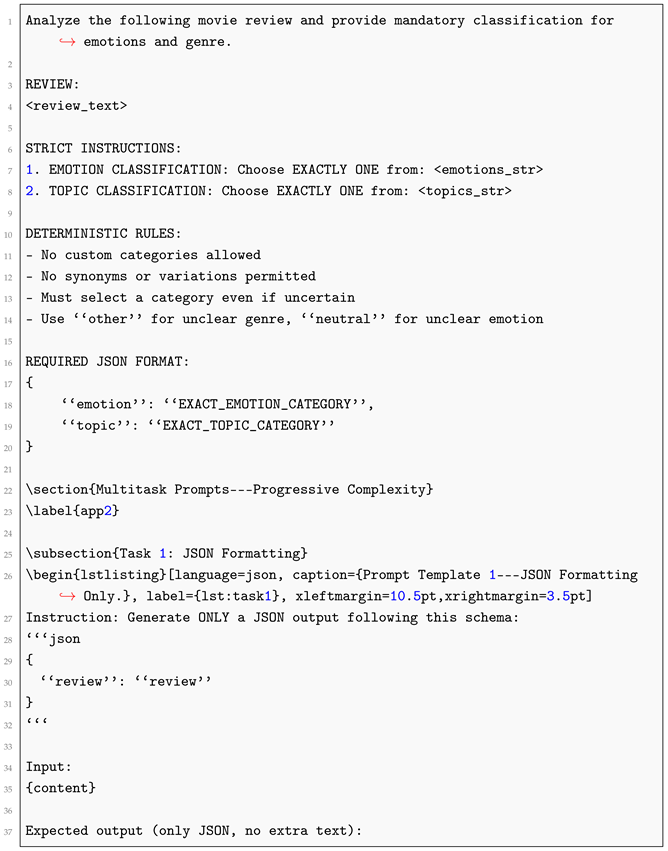

Listing A3. Prompt used to generate emotion and topic classification ground truth.

Appendix C. Multitask Prompts—Progressive Complexity

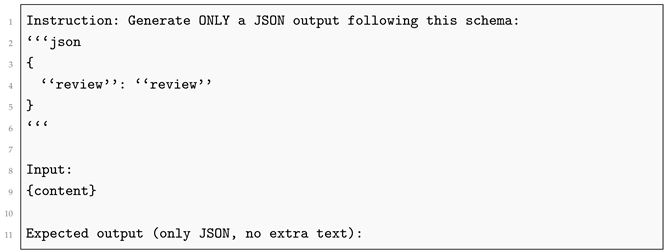

Appendix C.1. Task 1: JSON Formatting

Listing A4. Prompt Template 1: JSON Formatting Only.

Listing A5. Prompt used to generate emotion and topic classification ground truth.

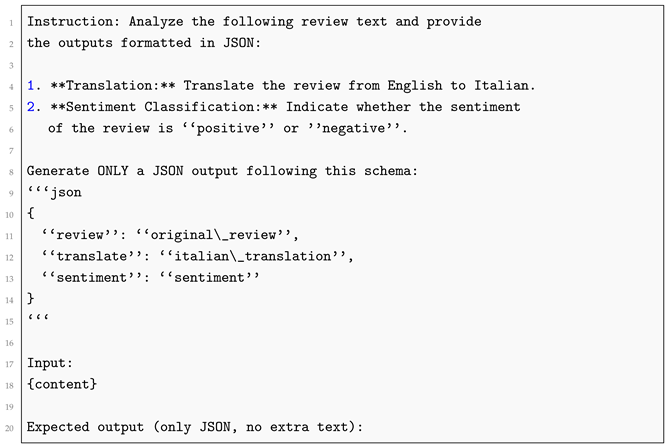

Appendix C.2. Task 2: JSON + Translation

Listing A6. Prompt Template 2: JSON and Translation.

Appendix C.3. Task 3: JSON + Translation + Sentiment Analysis

Listing A7. Prompt Template 3: JSON, Translation and Sentiment.

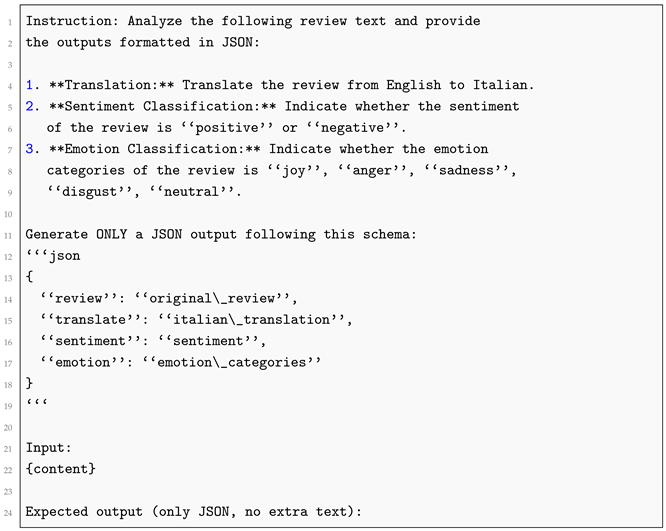

Appendix C.4. Task 4: JSON + Translation + Sentiment + Emotion

Listing A8. Prompt Template 4: Adding Emotion Classification.

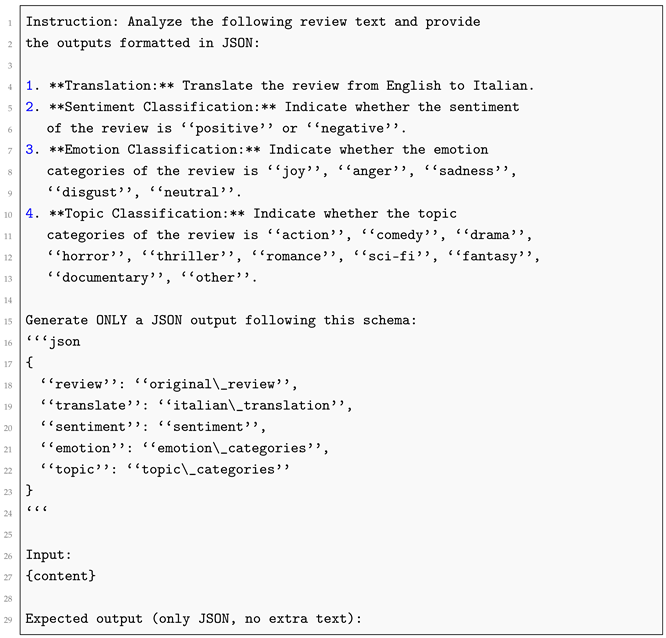

Appendix C.5. Task 5: JSON + Translation + Sentiment + Emotion + Topic

Listing A9. Prompt Template 5: Adding Topic Classification.

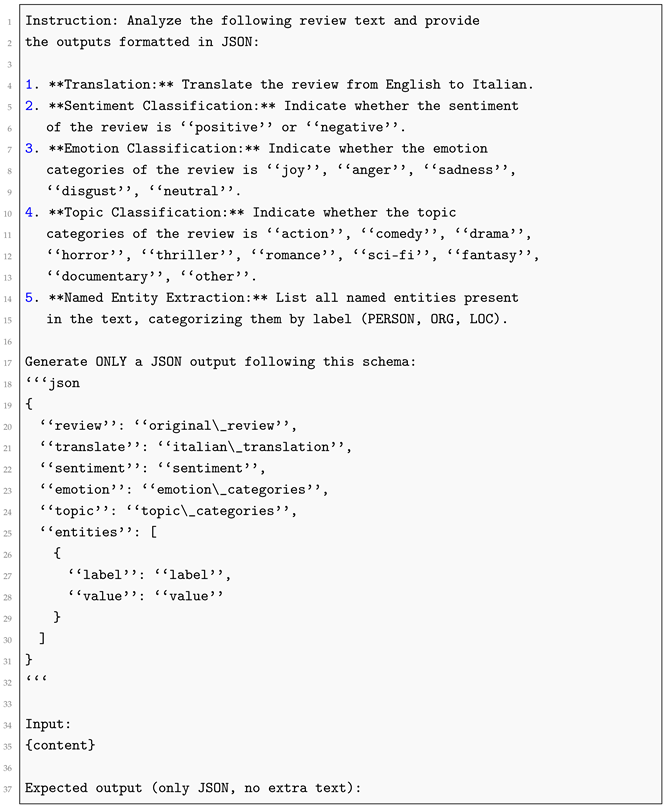

Appendix C.6. Task 6: Complete Multitask—All Features

Listing A10. Prompt Template 6: All Features.
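The six templates in Appendix C are nested: each one repeats the instructions of the previous template and adds one task (JSON, translation, sentiment, emotion, topic, and finally the full feature set including named entities). The sketch below reproduces only this accumulation logic. The instruction strings, the task keys, and the `build_templates` name are hypothetical stand-ins, not the wording of the templates listed above.

```python
# Hypothetical instruction snippets, one per task, in the order of Appendix C.1 to C.6
TASKS = [
    ("json", "Return a single valid JSON object and nothing else."),
    ("translation", 'Add a "translation" field with the review translated into Italian.'),
    ("sentiment", 'Add a "sentiment" field: "positive" or "negative".'),
    ("emotion", 'Add an "emotion" field: joy, anger, sadness, disgust, or neutral.'),
    ("topic", 'Add a "topic" field with the genre of the reviewed film.'),
    ("ner", 'Add an "entities" list of objects with "label" and "value" fields.'),
]


def build_templates(review: str) -> list[str]:
    """Return six prompts; prompt k contains the instructions for tasks 1..k."""
    prompts = []
    for k in range(1, len(TASKS) + 1):
        instructions = "\n".join(
            f"{i}. {text}" for i, (_, text) in enumerate(TASKS[:k], start=1)
        )
        prompts.append(f"Instructions:\n{instructions}\n\nReview:\n{review}")
    return prompts


templates = build_templates("An uneven but charming comedy.")
print(templates[2])  # JSON + translation + sentiment instructions
```

Because each prompt strictly extends the previous one, any score change between consecutive templates can be attributed to the single task that was added.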

References

1. Gozzi, M.; Di Maio, F. Comparative Analysis of Prompt Strategies for Large Language Models: Single-Task vs. Multitask Prompts. Electronics 2024, 13, 4712.

2. Son, M.; Won, Y.J.; Lee, S. Optimizing Large Language Models: A Deep Dive into Effective Prompt Engineering Techniques. Appl. Sci. 2025, 15, 1430.

3. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. arXiv 2023, arXiv:2201.11903.

4. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

5. Cheng, L.; Li, T.; Wang, Z.; Steedman, M. S2LPP: Small-to-Large Prompt Prediction across LLMs. arXiv 2025, arXiv:2505.20097.

6. Sivarajkumar, S.; Kelley, M.; Samolyk-Mazzanti, A.; Visweswaran, S.; Wang, Y. An Empirical Evaluation of Prompting Strategies for Large Language Models in Zero-Shot Clinical Natural Language Processing: Algorithm Development and Validation Study. JMIR Med. Inform. 2024, 12, e55318.

7. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

8. Jacobs, R.A.; Jordan, M.I.; Nowlan, S.J.; Hinton, G.E. Adaptive Mixtures of Local Experts. Neural Comput. 1991, 3, 79–87.

9. Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

10. Gemma Team; Kamath, A.; Ferret, J.; Pathak, S.; Vieillard, N.; Merhej, R.; Perrin, S.; Matejovicova, T.; Ramé, A.; Rivière, M.; et al. Gemma 3 Technical Report. arXiv 2025, arXiv:2503.19786.

11. Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; de las Casas, D.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.

12. Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 Technical Report. arXiv 2025, arXiv:2505.09388.

13. DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025, arXiv:2501.12948.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).