Abstract

Manual pixel-level annotation remains a major bottleneck in deploying deep learning models for dense prediction and semantic segmentation tasks across domains. This challenge is especially pronounced in applications involving fine-scale structures, such as cracks in infrastructure or lesions in medical imaging, where annotations are time-consuming, expensive, and subject to inter-observer variability. To address these challenges, this work proposes a weakly supervised and annotation-efficient segmentation framework that integrates sparse bounding-box annotations with a limited subset of strong (pixel-level) labels to train robust segmentation models. The fundamental element of the framework is a lightweight Bounding Box Encoder that converts weak annotations into multi-scale attention maps. These maps guide a ConvNeXt-Base encoder, and a lightweight U-Net–style convolutional neural network (CNN) decoder—using nearest-neighbor upsampling and skip connections—reconstructs the final segmentation mask. This design enables the model to focus on semantically relevant regions without relying on full supervision, drastically reducing annotation cost while maintaining high accuracy. We validate our framework on two distinct domains, road crack detection and skin cancer segmentation, demonstrating that it achieves performance comparable to fully supervised segmentation models using only 10–20% of strong annotations. Given the ability of the proposed framework to generalize across varied visual contexts, it has strong potential as a general annotation-efficient segmentation tool for domains where strong labeling is costly or infeasible.

1. Introduction

Semantic segmentation is widely used in various computer vision applications, including biomedical analysis [1], autonomous driving [2], and infrastructure inspection [3,4]. It performs dense, pixel-level classification to localize target regions such as tumors, road signs, or surface cracks. However, obtaining strong performance with deep learning models in this domain typically requires substantial manually annotated data, particularly for fully supervised models such as U-Net [1] and DeepLab [5]. Producing pixel-level annotations is labor-intensive, time-consuming, and prone to inconsistencies—especially for small or ambiguous structures (e.g., pavement cracks [6,7] and skin lesions [4,8]).

To alleviate the annotation burden, researchers have studied weakly supervised segmentation methods based on coarse labels, such as image-level tags [9], scribbles [10], points [11], or bounding boxes [12], instead of full supervision. Among these, bounding-box annotations offer a good compromise between annotation effort and spatial localization. However, models trained only from boxes often produce imprecise boundaries or over-segmentation, because they lack fine-grained guidance [13].

Meanwhile, semi-supervised and hybrid methods seek to combine weak and strong supervision to enhance segmentation quality. While promising, many of these methods still handle the two label types separately and do not enforce alignment during training [14]. Moreover, most approaches are designed for a single domain and may not generalize well to visually different tasks, particularly when domain-specific features vary in scale, texture, or structure.

To address these limitations, we propose an annotation-efficient and domain-general segmentation framework that integrates sparse bounding box annotations with a small subset of strong (pixel-level) labels in a unified model. At the core of our approach is a lightweight Bounding Box Encoder, which transforms box-level labels into multi-scale spatial attention maps. These attention maps steer the encoder stages of a ConvNeXt–U-Net backbone [15], helping the network attend to semantically relevant regions, even with limited strong supervision.

Our framework is evaluated on two distinct and challenging domains: road crack detection and skin cancer segmentation. The results indicate that our method achieves segmentation performance on par with fully supervised models using only 10–20% of strong annotations, and it generalizes well across domains. These findings support bounding box-guided attention as a cost-effective and robust supervisory signal for training segmentation models with limited annotations. The contributions of this paper are:

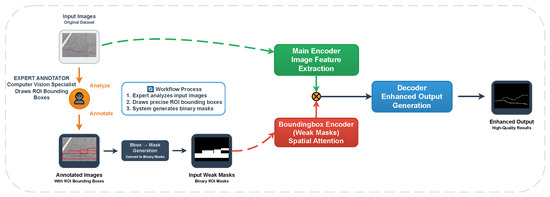

- A novel segmentation framework is proposed that combines weak bounding box annotations with a small subset of strong pixel-level labels in an end-to-end training pipeline. The overall structure of the framework is shown in Figure 1.

Figure 1. General workflow of the proposed bounding box-guided segmentation framework. Annotators first draw bounding boxes over regions of interest (ROIs). These annotations are automatically converted into binary weak masks, which are processed by the Bounding Box (weak) encoder to produce multi-scale spatial attention maps. These maps modulate the main encoder for more accurate segmentation, and a U-Net–style CNN decoder reconstructs the final segmentation mask. Training: images with bounding boxes (all N) and strong masks (available for a subset only) are used for supervision. Inference: image + bounding boxes → predicted pixel-level masks.

- A lightweight Bounding Box Encoder is introduced to convert binary box masks into multi-scale spatial attention maps, which guide the ConvNeXt-U-Net encoder to focus on semantically relevant regions.

- The framework significantly reduces annotation costs, achieving performance comparable to fully supervised models while requiring only 10–20% of strong labels.

- Extensive experiments across two structurally distinct domains—road crack detection and skin lesion segmentation—demonstrate the method’s generalizability and effectiveness in real-world, annotation-limited settings.

This work extends our conference paper [16] with a fuller description of the Bounding Box Encoder and training objectives, expanded cross-domain experiments, comprehensive ablations, and an efficiency analysis.

The rest of this paper is organized as follows: Section 2 reviews related work. Section 3 details the proposed segmentation framework. Section 4 describes the datasets and training setup. Section 5 presents experimental results and analysis. Finally, Section 6 concludes the paper and discusses future directions.

2. Related Work

Semantic segmentation with limited supervision is increasingly important due to the high cost of dense, pixel-level annotations. We summarize recent advances in four relevant areas: (i) weakly supervised semantic segmentation with coarse annotations, (ii) methods guided by bounding-box supervision, (iii) feature modulation in segmentation, and (iv) frameworks for annotation efficiency and domain generalization.

2.1. Weakly Supervised Semantic Segmentation

Weakly supervised semantic segmentation (WSSS) aims to reduce the need for dense pixel-level annotations by utilizing lower-cost forms of supervision, such as image-level tags, points, or scribbles. Early approaches used image-level labels to train image classifiers and derive class activation maps (CAMs), which were then refined using saliency cues or post-processing techniques such as conditional random fields (CRFs) [9,14]. While effective at coarse localization of objects, these methods often struggle to delineate fine-grained boundaries, particularly for thin or discontinuous structures.

To improve spatial fidelity, researchers have explored scribble-based [10] and point-based [11] supervision strategies. Although these methods provide localized cues at minimal annotation cost, they often require additional spatial priors or heuristic region-growing schemes to complete object shapes. Bounding boxes offer a practical compromise, striking a favorable balance between annotation effort and spatial specificity [12]. Given this, box-based approaches are examined in more detail in Section 2.2.

The advent of vision foundation models such as the Segment Anything Model (SAM) [17] and Grounding-DINO [18] has opened new avenues for WSSS. These models enable prompt-driven segmentation and localization across diverse tasks. For example, Ravanbakhsh et al. [19] used SAM and Grounding-DINO to automatically convert bounding boxes into accurate segmentation masks, showing the potential of large-scale pre-trained models to reduce annotation burden.

2.2. Bounding Box-Guided Segmentation

Bounding box-guided segmentation offers a practical compromise between annotation efficiency and spatial supervision. Early box-guided pipelines such as BoxSup [12] and the GrabCut-based refinement by Khoreva et al. [13] convert box-level annotations into pseudo-masks, which are subsequently used as surrogate ground truth during model training. However, these pipelines are typically multi-stage, rely on handcrafted heuristics, and can be sensitive to initialization quality.

To address these limitations, recent efforts have focused on integrating box-derived priors directly into learning objectives. Wang et al. [20] introduced a multiple-instance-learning (MIL)-based tightness constraint, encouraging predictions to remain within box regions and improving spatial consistency. Other studies, such as BBAM [21], use detector-driven attribution maps to highlight discriminative pixels within bounding boxes, thus guiding mask prediction without requiring manual refinement.

Bounding box guidance has also been explored for 3D instance segmentation. For instance, Deng et al. [22] proposed Sketchy-3DIS, which initializes segmentation from coarse 3D boxes and iteratively refines instance masks by enforcing geometric consistency.

Unlike the above approaches, the method proposed in this work does not rely on pseudo-mask generation, external prompting, or multi-step refinement. Instead, we introduce a lightweight Bounding Box Encoder that maps binary box masks into multi-scale spatial attention maps. These maps are aligned (via resizing) with the encoder’s feature stages and applied through element-wise modulation to influence the encoding process. This design enables the network to focus on semantically relevant regions under weak supervision while supporting end-to-end optimization without auxiliary modules or post-processing steps.

2.3. Feature Modulation in Segmentation

Feature modulation is widely used in computer vision to bias models toward informative regions. Attention modules such as SE-Net [23] and CBAM [24] use channel and/or spatial attention to improve feature selectivity. In segmentation tasks, spatial priors have been used to strengthen weak supervision, as seen in [25,26]. Feature conditioning approaches inject external cues (e.g., masks or priors) into the backbone to guide learning.

Our method follows this line of work by modulating encoder features using spatial attention maps generated from bounding-box masks. Unlike prior work, our design injects attention early within the encoder via element-wise modulation, enabling end-to-end guidance without additional refinement stages.

2.4. Annotation-Efficient and Domain-General Segmentation

A complementary direction is to reduce annotation costs by combining weak and strong labels through semi-supervised or hybrid training paradigms. Several methods have investigated confidence-weighted fusion or label propagation from a small subset of fully labeled images. A recent study by Kweon and Yoon [27] proposed WISH, a unified framework capable of training from image tags, points, scribbles, and bounding boxes by leveraging promptable foundation models like SAM. Although flexible, WISH relies on pre-trained vision–language models and is not optimized end-to-end for a specific segmentation target.

In addition to annotation efficiency, generalization across domains remains a critical challenge. Many segmentation frameworks are tailored for either medical imaging or natural scenes and struggle to transfer due to changes in scale, color distribution, and structural complexity [28]. Domain adaptation methods attempt to mitigate this through adversarial learning or feature alignment, but they typically require access to both source and target domain data during training.

In contrast, our approach demonstrates strong applicability across two structurally different domains—road crack detection and skin lesion segmentation—without modification to the architecture or supervision strategy. By directly incorporating weak bounding-box cues into the encoder and fusing them with a small set of strong labels, our framework achieves competitive performance under constrained annotation budgets and across diverse data distributions.

3. Methodology

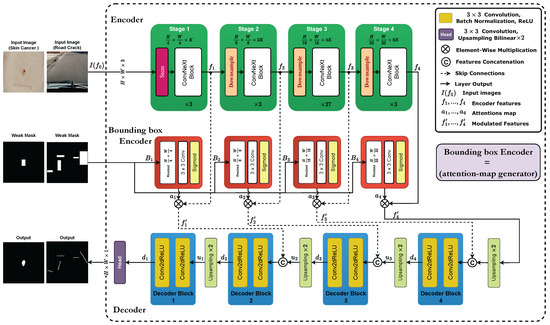

This section outlines our proposed annotation-efficient segmentation framework, which combines a small number of strong pixel-level annotations with auxiliary spatial cues derived from bounding box masks. The architecture follows an encoder–decoder design based on ConvNeXt, enhanced by an attention mechanism that modulates feature encoding. Strong annotations are used exclusively for supervision, while bounding boxes serve as auxiliary spatial context. We describe the problem setup, model components, and training strategy in the following subsections. Figure 2 illustrates the overall architecture and data flow.

Figure 2.

Overview of the proposed bounding box-guided segmentation framework. The input is an RGB image and its bounding-box mask. A ConvNeXt-Base encoder extracts multi-scale features that are spatially modulated by attention maps generated by the Bounding Box Encoder; a U-Net–style CNN decoder then reconstructs the final segmentation mask. The framework supports training with both strong (pixel-level) and weak (bounding-box) annotations and generalizes across domains such as road-crack and skin lesion segmentation. Inference: given image + bounding boxes, the model produces refined pixel-level masks.

3.1. Problem Formulation

We address the task of semantic segmentation under constrained supervision, where full pixel-level annotations are available only for a subset of the training data. Each image in the dataset is accompanied by a binary bounding box mask that provides weak localization cues. Formally, the training set is defined as $\mathcal{D} = \{(I_i, B_i, M_i)\}_{i=1}^{N}$, where:

- $I_i$ denotes the input RGB image,

- $B_i$ is a binary mask derived from bounding box annotations, available for all images,

- $M_i$ is a pixel-level segmentation mask, provided only for a subset of the data.

The objective is to learn a segmentation function $f_\theta$, parameterized by $\theta$, that predicts dense segmentation masks for unseen inputs. During training, bounding box masks are used to generate spatial attention maps that guide the encoder to focus on semantically relevant regions.
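The conversion from box annotations to a binary weak mask can be sketched as follows. This is a minimal illustration rather than the implementation used in the experiments; the function name `boxes_to_mask` and the box format `(x_min, y_min, x_max, y_max)` with exclusive maxima are assumptions made for the example.

```python
def boxes_to_mask(boxes, height, width):
    """Rasterize axis-aligned boxes into a binary mask (1 inside any box, 0 elsewhere).

    Assumed box format: (x_min, y_min, x_max, y_max) in pixels, maxima exclusive.
    """
    mask = [[0] * width for _ in range(height)]
    for x_min, y_min, x_max, y_max in boxes:
        # Clip to the image so boxes that extend past the border remain usable.
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(width, x_max), min(height, y_max)
        for y in range(y0, y1):
            for x in range(x0, x1):
                mask[y][x] = 1
    return mask


weak_mask = boxes_to_mask([(1, 1, 3, 2)], height=3, width=4)
```

Overlapping boxes simply merge into one foreground region, which matches the role of the mask as a coarse localization cue.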

3.2. Model Components

Our framework adopts an encoder–decoder architecture and introduces a lightweight mechanism to integrate spatial cues derived from bounding box masks. It comprises three main modules: (i) a ConvNeXt-Base encoder for hierarchical feature extraction, (ii) a lightweight Bounding Box Encoder for generating spatial attention maps, and (iii) a U-Net-style decoder for dense mask prediction. This design is task-agnostic and has been validated across two structurally different domains: road crack detection and skin lesion segmentation, demonstrating its robustness to varying image modalities, textures, and object scales.

Relation to prior work. This article is an extension of our preliminary conference version [16], providing a fuller methodological description (including the Bounding Box Encoder and training objectives), expanded experiments on two domains (RCFD and ISIC 2018) with stronger baselines, comprehensive ablations, and an efficiency analysis.

3.2.1. Encoder: ConvNeXt-Base

We employ ConvNeXt-Base [15] as the encoder backbone to extract multi-level semantic features from input images. Designed with insights from both convolutional networks and transformers, ConvNeXt achieves strong performance on dense prediction tasks while maintaining computational efficiency. Its architecture includes large kernel convolutions, inverted bottlenecks, and layer normalization, making it particularly well-suited for segmentation problems involving fine structures and complex textures.

Given an input image $I$, the encoder processes it through four sequential stages. Each stage reduces spatial resolution and increases feature abstraction via convolutional downsampling and stacked ConvNeXt blocks:

$$F_l = \mathrm{Stage}_l(F_{l-1}), \quad l = 1, \dots, 4,$$

with $F_0 = I$. Each output $F_l$ captures features at a distinct semantic and spatial scale.

These hierarchical features are subsequently modulated using bounding box-driven attention maps before being passed to the decoder.
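To make the feature hierarchy concrete, the sketch below lists the shape of each stage output for a 512 × 512 input. It assumes the standard ConvNeXt-Base layout (output strides 4, 8, 16, 32 and channel widths 128, 256, 512, 1024); the helper name `stage_shapes` is hypothetical.

```python
# Assumed ConvNeXt-Base layout: output stride and channel width of each stage.
STRIDES = (4, 8, 16, 32)
CHANNELS = (128, 256, 512, 1024)


def stage_shapes(height, width):
    """Return the (channels, height, width) shape of each encoder stage output."""
    return [(c, height // s, width // s) for c, s in zip(CHANNELS, STRIDES)]


shapes = stage_shapes(512, 512)
# [(128, 128, 128), (256, 64, 64), (512, 32, 32), (1024, 16, 16)]
```

These are the resolutions to which the bounding box mask must be resized before modulation.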

3.2.2. Bounding Box Encoder and Modulation

To incorporate auxiliary spatial cues, we introduce a lightweight Bounding Box Encoder, inspired by [29], which transforms binary bounding box masks into attention maps aligned with the encoder’s feature hierarchy. For each input image, its corresponding bounding box mask is first resized to match the resolution of each encoder stage.

The encoder applies a single convolution followed by a sigmoid activation to produce a spatial attention map:

$$A_l = \sigma\left(\mathrm{Conv}(B_l)\right),$$

where $B_l$ denotes the resized mask at stage $l$, $\sigma$ is the sigmoid function, and $A_l$ is the corresponding attention map.

Each attention map is then broadcast and multiplied element-wise with the output feature map $F_l$ from the ConvNeXt encoder:

$$\tilde{F}_l = F_l \odot A_l,$$

where ⊙ denotes element-wise multiplication. This modulation highlights semantically relevant regions indicated by the bounding boxes while suppressing irrelevant background noise.

The modulated features are subsequently passed to the corresponding decoder stages, enabling attention-guided segmentation without relying on full pixel-level labels for the entire dataset.
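The resize, attention, and modulation steps can be sketched in plain Python as below. This is an illustration under stated assumptions, not the trained module: the convolution is taken to be 1 × 1 on a single-channel mask (so it reduces to a scalar `weight` and `bias`, both hypothetical values here), and the resize uses nearest-neighbor sampling.

```python
import math


def resize_nearest(mask, out_h, out_w):
    """Resize a 2D mask to (out_h, out_w) with nearest-neighbor sampling."""
    in_h, in_w = len(mask), len(mask[0])
    return [[mask[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def attention_map(mask, weight, bias):
    """Assumed 1x1 convolution on a one-channel mask, followed by a sigmoid."""
    return [[1.0 / (1.0 + math.exp(-(weight * v + bias))) for v in row]
            for row in mask]


def modulate(features, attention):
    """Broadcast an (H, W) attention map over (C, H, W) features and multiply."""
    return [[[f * a for f, a in zip(f_row, a_row)]
             for f_row, a_row in zip(channel, attention)]
            for channel in features]


box = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
small = resize_nearest(box, 2, 2)                    # mask at a coarser stage
att = attention_map(small, weight=4.0, bias=-2.0)    # hypothetical parameters
out = modulate([[[1.0, 1.0], [1.0, 1.0]]], att)      # one feature channel
```

With these illustrative parameters, features inside the box are scaled by about 0.88 and features outside by about 0.12, so background responses are attenuated rather than zeroed out.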

3.2.3. Decoder: U-Net-Style Reconstruction

The decoder follows a U-Net-style design that progressively upsamples and fuses features from the encoder to reconstruct the segmentation mask at full resolution. It operates on the four modulated encoder outputs $\tilde{F}_1, \tilde{F}_2, \tilde{F}_3, \tilde{F}_4$, ordered from the highest to the lowest spatial resolution.

Let the decoder block at level $l$ be defined as follows:

$$D_l = \mathrm{Dec}_l(U_l, S_l),$$

where $U_l$ is the upsampled feature from the previous decoder block, and $S_l$ is the skip-connected modulated feature from the encoder and Bounding Box Encoder at the corresponding scale. Decoder block 4 is initialized as:

$$D_4 = \mathrm{Dec}_4\left(\mathrm{Up}(\tilde{F}_4), \tilde{F}_3\right),$$

where $\mathrm{Up}(\cdot)$ denotes 2× nearest-neighbor upsampling, and subsequent blocks fuse the output of the previous decoder block with the next encoder feature. The final decoder block (block 1) produces the low-level decoder output $D_1$.

Each decoder block $\mathrm{Dec}_l$ consists of:

- Nearest-neighbor upsampling to double the spatial resolution.

- Concatenation with the corresponding encoder feature (except for the last block, which receives no skip).

- Two sequential convolution layers, each followed by BatchNorm and ReLU activation.

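The final segmentation prediction is obtained by applying a convolution to the output of the final decoder block, $D_1$, followed by bilinear upsampling by a factor of 2:

$$P = \mathrm{Up}^{\mathrm{bilinear}}_{\times 2}\left(\mathrm{Conv}(D_1)\right).$$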

This architecture enables spatially accurate mask reconstruction without a bottleneck, maintaining fine details critical for thin or discontinuous structures.
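The resolution flow through the decoder can be traced with a small sketch. It assumes encoder outputs at 128, 64, 32, and 16 pixels per side (a 512 × 512 input with strides 4 to 32) and that each block doubles the resolution before concatenating the skip feature; `upsample_nearest_2x` and `decoder_resolutions` are hypothetical helper names.

```python
def upsample_nearest_2x(fmap):
    """Nearest-neighbor upsampling: repeat every pixel twice along both axes."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def decoder_resolutions(encoder_sizes):
    """Trace the output side length of each decoder block, deepest block first.

    encoder_sizes: side lengths of the four modulated encoder outputs,
    ordered from the highest to the lowest resolution.
    """
    size = encoder_sizes[-1]
    skips = encoder_sizes[-2::-1] + [None]  # the last block receives no skip
    trace = []
    for skip in skips:
        size *= 2  # nearest-neighbor upsampling inside the block
        if skip is not None and skip != size:
            raise ValueError("skip feature does not match the upsampled size")
        trace.append(size)
    return trace


trace = decoder_resolutions([128, 64, 32, 16])  # [32, 64, 128, 256]
```

Under these assumptions the last block outputs a 256 × 256 map, and the final 2× bilinear upsampling restores the 512 × 512 input resolution.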

3.3. Training Strategy

The proposed model is trained using a hybrid loss that combines strong supervision from a limited set of pixel-level annotations with an auxiliary consistency loss based on bounding box masks. Let N be the total number of training images, among which only a subset of size $N_s \le N$ is associated with strong pixel-level labels.

1. Binary Cross-Entropy Loss.

For each strongly labeled image $i$, let $M_i$ denote the ground truth binary segmentation mask, and $P_i$ the predicted probability map. The binary cross-entropy (BCE) loss is defined as:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N_s}\sum_{i=1}^{N_s}\frac{1}{|\Omega|}\sum_{p \in \Omega}\Bigl[M_i(p)\log P_i(p) + \bigl(1 - M_i(p)\bigr)\log\bigl(1 - P_i(p)\bigr)\Bigr],$$

where $M_i(p)$ is the ground-truth label and $P_i(p)$ is the estimated output at pixel $p$, and $\Omega$ is the set of image pixels.

2. Box-Mask Consistency Loss.

To reduce false positive predictions outside regions marked by bounding boxes, we introduce a consistency loss applied to all N training images. Let $B_i$ be the binary bounding box mask for image i, where 1 indicates the interior of the box and 0 denotes the background. The consistency loss penalizes predicted activations that fall outside the bounding box, which encourages the model to suppress foreground predictions (e.g., cracks) in areas not supported by bounding box evidence.
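One expression consistent with this description is the mean predicted probability outside the boxes. It is given here as an assumption, since the exact penalty (for example, linear versus squared) and its normalization may differ:

$$\mathcal{L}_{\mathrm{box}} = \frac{1}{N}\sum_{i=1}^{N}\frac{1}{|\Omega|}\sum_{p \in \Omega}\bigl(1 - B_i(p)\bigr)\,P_i(p),$$

where $B_i(p) \in \{0, 1\}$ is the box mask of image $i$ at pixel $p$, $P_i(p)$ is the predicted foreground probability, and $\Omega$ is the set of image pixels. Pixels inside a box contribute nothing, so the term constrains only the background.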

3. Total Loss.

The final training objective combines both terms, with a scalar $\lambda$ that weights the Box-Mask Consistency loss relative to the supervised segmentation loss. Setting $\lambda = 0$ removes the box term; setting $\lambda$ close to 1 makes the box term dominant and degrades performance by over-constraining predictions to the boxes. We select $\lambda$ based on a small validation sweep (cf. [30]) and observe stable performance over a range of values (see Appendix A, Table A1).

4. Strong-Weak Supervision.

In our setting, a subset of images carries pixel-level masks (strong labels), which supervise the primary segmentation loss (BCE) on those samples. Bounding boxes are available for all N images and provide guidance through the auxiliary Box-Mask Consistency term. Within each training mini-batch, the BCE loss is averaged only over samples with pixel-level masks, whereas the Box-Mask Consistency loss is averaged over all samples in the batch. If a batch contains no strong samples, the update is computed solely from the Box-Mask Consistency loss scaled by its loss weight.
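The per-batch averaging rule can be sketched as follows. The sketch makes several assumptions that are not confirmed by the text: the two terms are combined additively as BCE plus a weight `lam` times the box term, the box term is the mean predicted probability outside the box, and `lam=0.1` is a purely illustrative value. Images are represented as flat lists of per-pixel values, and all function names are hypothetical.

```python
import math


def bce(prob, target, eps=1e-7):
    """Mean binary cross-entropy over the pixels of one image (flat lists)."""
    total = 0.0
    for p, t in zip(prob, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() stays finite
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(prob)


def box_consistency(prob, box):
    """Mean predicted probability outside the box (assumed form of the box term)."""
    return sum(p * (1 - b) for p, b in zip(prob, box)) / len(prob)


def hybrid_loss(batch, lam=0.1):
    """batch: list of dicts with 'prob', 'box', and an optional strong 'mask'."""
    strong = [s for s in batch if s.get("mask") is not None]
    # The box term is averaged over every sample in the batch.
    box_term = sum(box_consistency(s["prob"], s["box"]) for s in batch) / len(batch)
    if not strong:
        return lam * box_term  # no strong samples: box term only
    # The BCE term is averaged over strongly labeled samples only.
    bce_term = sum(bce(s["prob"], s["mask"]) for s in strong) / len(strong)
    return bce_term + lam * box_term


weak_only = [{"prob": [0.5, 0.5], "box": [1, 0]}]
mixed = weak_only + [{"prob": [0.5, 0.5], "box": [1, 0], "mask": [1, 0]}]
loss_weak, loss_mixed = hybrid_loss(weak_only), hybrid_loss(mixed)
```

Averaging the BCE term over strong samples only keeps its scale independent of how many weakly labeled images happen to fall in a batch.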

4. Datasets and Experimental Setup

This section presents the datasets used to evaluate the proposed framework, along with the data augmentation strategies employed during training. It also outlines the implementation details of the training setup and describes the evaluation metrics used to assess segmentation performance across tasks.

4.1. Datasets

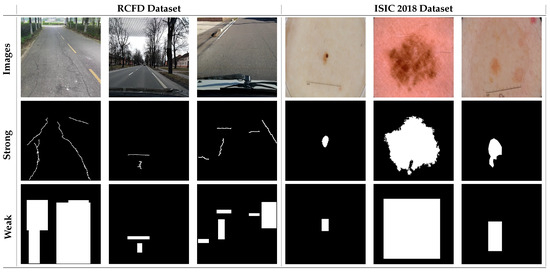

We evaluate the proposed framework on two structurally distinct datasets: the Road Crack Full-Scene Dataset [31,32] (RCFD) for road crack detection, and the ISIC 2018 [33] dataset for skin lesion segmentation. Both datasets contain bounding box annotations and strong pixel-level masks. In each case, bounding boxes are converted into binary mask format for use in the bounding box encoder. Figure 3 provides representative examples from both datasets, including the original images, strong pixel-level annotations, and weak bounding box masks. Dataset statistics are summarized in Table 1.

Figure 3.

Sample images from the RCFD and ISIC 2018 datasets. For each dataset, the first row shows the original images, the second row shows the strong pixel-level ground truth annotations, and the third row shows the weak bounding box annotations.

Table 1.

Details of the crack and skin lesion segmentation datasets used in this study. All images are resized to 512 × 512 pixels to ensure uniformity and facilitate robust model training and evaluation.

- RCFD Dataset: The RCFD [31,32] dataset contains 1428 full-scene RGB images of roads with visible cracks, captured in China, India, the Czech Republic, and Japan using drones, motorcycles, and dashboard-mounted cameras. The images cover a wide range of lighting, weather, and surface conditions. The dataset is split into 973 training, 170 validation, and 285 test images. For weak supervision, bounding box annotations from the source dataset were converted into binary masks aligned with the image resolution.

- ISIC 2018 Dataset: The ISIC 2018 Skin Lesion Segmentation Challenge dataset [33] comprises dermoscopic images of skin lesions acquired under diverse imaging conditions. We use a total of 3694 images, split into 2594 for training, 100 for validation, and 1000 for testing. Similar to the RCFD dataset, bounding box annotations were generated from lesion outlines and converted into binary mask format for integration with the bounding box encoder.
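Deriving a weak box mask from a lesion outline can be sketched as below. The sketch assumes a single tight box around all foreground pixels; images with several separate regions would need one box per connected component, which is omitted here. The names `mask_to_box` and `weak_mask_from_strong` are hypothetical.

```python
def mask_to_box(mask):
    """Tight box (x_min, y_min, x_max, y_max), maxima exclusive, or None if empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return cols[0], rows[0], cols[-1] + 1, rows[-1] + 1


def weak_mask_from_strong(mask):
    """Fill the tight bounding box of a pixel-level mask to obtain a box mask."""
    box = mask_to_box(mask)
    if box is None:
        return [[0] * len(row) for row in mask]
    x0, y0, x1, y1 = box
    return [[1 if x0 <= x < x1 and y0 <= y < y1 else 0 for x in range(len(row))]
            for y, row in enumerate(mask)]


lesion = [[0, 0, 0, 0], [0, 1, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
weak = weak_mask_from_strong(lesion)
```

The box mask always contains the strong mask, so the weak cue never excludes true foreground pixels.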

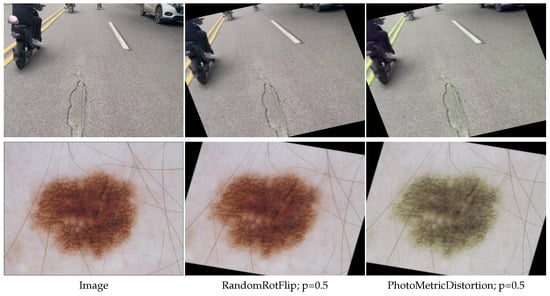

4.2. Data Augmentation

To enhance model generalization and mitigate overfitting, we apply a set of augmentations provided by the MMSegmentation framework [34]. These augmentations increase data diversity and help simulate real-world variations in lighting, orientation, and contrast:

- Resizing: All images and corresponding masks are resized to a fixed resolution of 512 × 512 pixels to ensure consistent input dimensions for the network.

- Random rotations and flips: Using RandomRotFlip, each image undergoes random rotation by one of three preset angles and horizontal/vertical flipping with a combined probability of 0.5.

- Photometric distortions: Includes random brightness and contrast adjustments, HSV perturbations (saturation and hue), and conversion back to RGB, all applied with a probability of 0.5.

Figure 4 presents sample augmented images, illustrating how these transformations enhance the model’s robustness to variations in lighting and imaging conditions.
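For geometric augmentations, the image, the strong mask, and the box mask must receive the same transform so that they stay aligned. The sketch below illustrates this point only; it is not the MMSegmentation implementation. It assumes rotations by multiples of 90° and an even split between rotation and flipping, and the function names are hypothetical.

```python
import random


def rot90(grid, k):
    """Rotate a 2D grid counter-clockwise by k * 90 degrees."""
    for _ in range(k % 4):
        grid = [list(col) for col in zip(*grid)][::-1]
    return grid


def flip(grid, horizontal):
    """Mirror a 2D grid left-right (horizontal) or top-bottom (vertical)."""
    return [row[::-1] for row in grid] if horizontal else grid[::-1]


def random_rot_flip(image, strong_mask, box_mask, rng, prob=0.5):
    """Apply one shared random rotation or flip to an image and its masks."""
    grids = (image, strong_mask, box_mask)
    if rng.random() >= prob:
        return grids  # leave the sample unchanged
    if rng.random() < 0.5:
        k = rng.choice((1, 2, 3))  # assumed: 90, 180, or 270 degrees
        return tuple(rot90(g, k) for g in grids)
    horizontal = rng.random() < 0.5
    return tuple(flip(g, horizontal) for g in grids)


grid = [[1, 2], [3, 4]]
image, strong, box = random_rot_flip(grid, grid, grid, random.Random(0))
```

Photometric distortions, by contrast, apply to the image only and leave both masks untouched.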

Figure 4.

Examples of data augmentation transformations applied to the images in the training sets.

4.3. Implementation Details

All experiments were conducted using the MMSegmentation framework [34]. Models were trained on an NVIDIA RTX 3090 GPU (24 GB) using the AdamW optimizer [35]. A two-phase learning rate schedule was used: linear warm-up for the first 3000 iterations, followed by polynomial decay until iteration 20,000. Input images were resized to 512 × 512 pixels with a batch size of 4.

All models were trained for 20,000 iterations on each dataset. The same configuration was used for both crack and skin lesion segmentation tasks, ensuring fair comparison across domains.
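The two-phase schedule can be sketched as a function of the iteration index. Only the 3000-iteration warm-up and the 20,000-iteration horizon come from the setup above; the learning rate values used in the example, the decay power of 1.0, and the final rate of 0 are assumptions chosen for illustration.

```python
def learning_rate(step, base_lr, warmup_start_lr, warmup_iters=3000,
                  max_iters=20000, power=1.0, min_lr=0.0):
    """Linear warm-up to base_lr, then polynomial decay towards min_lr."""
    if step < warmup_iters:
        frac = step / warmup_iters
        return warmup_start_lr + frac * (base_lr - warmup_start_lr)
    # Clamp so that steps beyond max_iters stay at min_lr.
    progress = min(1.0, (step - warmup_iters) / (max_iters - warmup_iters))
    return (base_lr - min_lr) * (1.0 - progress) ** power + min_lr


# Hypothetical values, for illustration only.
schedule = [learning_rate(s, base_lr=1e-4, warmup_start_lr=1e-6)
            for s in (0, 1500, 3000, 11500, 20000)]
```

With `power=1.0` the decay phase is linear; larger powers make the rate drop faster early in the decay phase.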

4.4. Evaluation Metrics

We evaluate segmentation performance using five widely adopted pixel-wise measures, each capturing a different aspect of prediction quality. Let $M$ be the ground-truth mask and $P$ the predicted probability map. We binarize $P$ at threshold $\tau$ to obtain $\hat{M}$ and then compute pixel-level counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN) by comparing $\hat{M}$ with $M$.

- Intersection over Union (IoU): Quantifies the overlap between predicted and ground truth masks: $\mathrm{IoU} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}$, where TP, FP, and FN represent true positives, false positives, and false negatives, respectively.

- Recall: The proportion of actual positive pixels correctly identified: $\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}$. High recall indicates that most of the true positive pixels are detected, though it does not penalize false positives.

- Precision: The proportion of predicted positive pixels that are correct: $\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}$. High precision means fewer false positives, but it may come at the cost of lower recall.

- F1-score: The harmonic mean of precision and recall: $\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$. This balances precision and recall into a single metric.

- Mean Intersection over Union (mIoU): The average IoU calculated over all C classes: $\mathrm{mIoU} = \frac{1}{C}\sum_{c=1}^{C}\mathrm{IoU}_c$. For binary segmentation ($C = 2$), this is the mean IoU of the foreground and background classes.

Unless stated otherwise, we report micro-averaged results, i.e., pixel counts are accumulated across all images before computing the measures.
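Micro-averaging can be sketched as follows: counts are summed over all images first, and each measure is computed once from the totals. The binarization threshold of 0.5 is an assumed default for the example, images are flat lists of per-pixel values, and the function names are hypothetical.

```python
def confusion_counts(pred, target):
    """Count (TP, FP, FN, TN) for one image given flat binary lists."""
    tp = sum(1 for p, t in zip(pred, target) if p and t)
    fp = sum(1 for p, t in zip(pred, target) if p and not t)
    fn = sum(1 for p, t in zip(pred, target) if not p and t)
    return tp, fp, fn, len(pred) - tp - fp - fn


def ratio(num, den):
    """Division that returns 0.0 for an empty denominator."""
    return num / den if den else 0.0


def micro_metrics(pairs, threshold=0.5):
    """pairs: iterable of (probabilities, ground_truth) flat lists, one per image."""
    tp = fp = fn = tn = 0
    for prob, target in pairs:
        pred = [p >= threshold for p in prob]
        d_tp, d_fp, d_fn, d_tn = confusion_counts(pred, target)
        tp, fp, fn, tn = tp + d_tp, fp + d_fp, fn + d_fn, tn + d_tn
    precision, recall = ratio(tp, tp + fp), ratio(tp, tp + fn)
    iou_fg = ratio(tp, tp + fp + fn)
    iou_bg = ratio(tn, tn + fp + fn)  # background IoU: TN plays the role of TP
    return {
        "IoU": iou_fg,
        "Precision": precision,
        "Recall": recall,
        "F1": ratio(2 * precision * recall, precision + recall),
        "mIoU": (iou_fg + iou_bg) / 2,
    }


scores = micro_metrics([
    ([0.9, 0.8, 0.1, 0.2], [1, 0, 0, 0]),
    ([0.3, 0.7, 0.1, 0.0], [1, 1, 0, 0]),
])
```

In this toy example the totals are TP = 2, FP = 1, FN = 1, and TN = 4, giving an IoU of 0.5 and precision, recall, and F1 of 2/3 each.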

5. Experimental Results and Discussion

This section presents a comprehensive evaluation of the proposed framework from two perspectives: (i) an ablation study assessing the contribution of each architectural component and the impact of varying proportions of weak and strong annotations, and (ii) a comparative analysis against state-of-the-art segmentation methods. Experiments are conducted on both crack detection (RCFD dataset) and skin lesion segmentation (ISIC 2018) datasets, enabling performance assessment under different annotation budgets and across structurally distinct domains.

5.1. Ablation Study

We perform an ablation study to quantify the contribution of the proposed bounding box encoder and to evaluate the effect of varying proportions of weak (bounding box) and strong (pixel-level) annotations. The baseline model consists of a ConvNeXt-Base encoder and a U-Net-style decoder trained exclusively with strong annotations. Our full model augments this baseline with the bounding box encoder, which modulates encoder features using spatial attention maps derived from weak masks.

Table 2 and Table 3 report the results on the RCFD and ISIC 2018 datasets, respectively. For both datasets, incorporating the bounding box encoder improves performance across all metrics, even when the proportion of strong labels is small. We further examine annotation coverage by varying the strong-label ratio and the weak-label (bounding-box) ratio, and report the resulting performance to quantify the trade-off between annotation cost and accuracy.
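A strong-label ratio can be realized by choosing which training images keep their pixel-level masks. The sketch below assumes uniform random sampling with a fixed seed and rounding to the nearest image count; the selection procedure actually used is not specified here, and `select_strong_subset` is a hypothetical name.

```python
import random


def select_strong_subset(num_images, strong_ratio, seed=0):
    """Return the indices of training images that keep their pixel-level mask."""
    count = round(num_images * strong_ratio)
    return set(random.Random(seed).sample(range(num_images), count))


rcfd_strong = select_strong_subset(973, 0.10)    # RCFD training split
isic_strong = select_strong_subset(2594, 0.20)   # ISIC 2018 training split
```

Under these assumptions, a 10% ratio corresponds to 97 of the 973 RCFD training images and a 20% ratio to 519 of the 2594 ISIC 2018 training images.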

Table 2.

Ablation study results on the RCFD dataset. Weak = bounding box annotations, Strong = pixel-level annotations.

Table 3.

Ablation study results on the ISIC 2018 dataset. Weak = bounding box annotations, Strong = pixel-level annotations.

On the RCFD dataset, using only 10% strong annotations and weak masks for all images achieves an IoU of 61.97%, which is 1.48 percentage points lower than the fully supervised baseline (63.45%), and an F1-score of 76.52%, which is just 1.12 percentage points lower than the baseline (77.64%). The mIoU difference is minimal at −0.82 percentage points (80.15% vs. 80.97%). With 20% strong annotations, performance improves substantially to an IoU of 69.29% (+5.84), F1-score of 81.86% (+4.22), and mIoU of 83.99% (+3.02) compared to the baseline, with differences given in percentage points. Further increases in strong annotations lead to consistent improvements, reaching 87.81% mIoU with full supervision.

A similar trend is observed on ISIC 2018. With only 10% strong annotations and full weak masks, our method achieves an IoU of 87.41% (+8.06%), F1-score of 93.28% (+4.79%), and mIoU of 90.92% (+5.61%) compared to the fully supervised baseline. Using 20% strong annotations, the IoU rises to 87.95% (+8.60%), F1-score to 93.59% (+5.10%), and mIoU to 91.29% (+5.98%). The best performance of 92.38% mIoU is obtained with 100% strong and weak annotations.

Overall, these results confirm that: (i) the bounding box encoder consistently boosts segmentation accuracy across IoU, Precision, Recall, F1-score, and mIoU; (ii) the proposed framework can achieve near full-supervision or better performance with as little as 10–20% strong annotations, reducing annotation cost without sacrificing quality.

5.2. Comparative Experiments to the State of the Art

We compare the proposed framework with fully supervised segmentation methods and recent semi-/weakly supervised approaches that utilize both weak (bounding box) and strong (pixel-level) annotations. Table 4 and Table 5 summarize the results for the RCFD and ISIC 2018 datasets.

For methods whose original papers did not report results on RCFD or ISIC 2018 (e.g., EfficientCrackNet [36], Self-Correcting [29], DCR [37], Strong-Weak [38], Xiong et al. [39]), we re-trained the authors’ implementations—when available—or faithful re-implementations on our data splits using the unified configuration described in Section 4.3 and the evaluation protocol in Section 4.4.

RCFD dataset: Among fully supervised methods, CrackRefineNet [32] achieves the highest score with 65.41% IoU, 79.09% F1-score, and 81.97% mIoU. Our framework, with only 10% strong labels and full weak annotations, achieves 61.97% IoU, 76.52% F1-score, and 80.15% mIoU. This is just 3.44 percentage points of IoU and 2.57 points of F1 below CrackRefineNet despite using only one-tenth of the strong annotations. It also outperforms the best mixed-supervision competitor (Self-Correcting [29]) by +13.83 points IoU, +11.53 points F1, and +7.23 points mIoU.

Table 4.

Comparison with state-of-the-art methods on the RCFD dataset. Weak = bounding box annotations, Strong = pixel-level annotations. Bold values denote the highest results.

| Model | Supervision Type | Weak | Strong | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| U-Net [1] | Full | – | 100% | 48.82 | 58.42 | 74.82 | 65.61 | 73.03 |
| MobileNetv3 [40] | Full | – | 100% | 51.69 | 71.57 | 65.04 | 68.15 | 74.78 |
| SwinT [41] | Full | – | 100% | 53.94 | 73.84 | 66.68 | 70.08 | 75.97 |
| EfficientCrackNet [36] | Full | – | 100% | 35.47 | 39.42 | 77.93 | 52.36 | 65.24 |
| CrackMaster [42] | Full | – | 100% | 63.53 | 79.37 | 76.09 | 77.7 | 81.0 |
| CrackRefineNet [32] | Full | – | 100% | 65.41 | 79.2 | 78.98 | 79.09 | 81.97 |
| Self-Correcting [29] | Mixed (Box + Full) | 100% | 10% | 48.14 | 69.65 | 60.92 | 64.99 | 72.92 |
| Macro-Micro [43] | Mixed (Box + Full) | 100% | 10% | 40.35 | 63.16 | 52.78 | 57.5 | 68.82 |
| DCR [37] | Mixed (Box + Full) | 100% | 10% | 40.62 | 64.88 | 52.07 | 57.77 | 68.98 |
| Strong-Weak [38] | Mixed (Box + Full) | 100% | 10% | 24.39 | 26.25 | 77.47 | 39.22 | 57.97 |
| Xiong et al. [39] | Mixed (Box + Full) | 100% | 10% | 16.99 | 18.35 | 69.66 | 29.05 | 52.53 |
| Ours | Mixed (Box + Full) | 100% | 10% | 61.97 | 75.75 | 77.30 | 76.52 | 80.15 |
| Ours | Mixed (Box + Full) | 100% | 20% | **69.29** | **80.24** | **83.54** | **81.86** | **83.99** |

When increasing to 20% strong labels, the performance reaches 69.29% IoU, 81.86% F1-score, and 83.99% mIoU, surpassing CrackRefineNet by +3.88% IoU, +2.77% F1, and +2.02% mIoU, and significantly outperforming all other baselines.

Table 5.

Comparison with state-of-the-art methods on the ISIC 2018 dataset. Weak = bounding box annotations, Strong = pixel-level annotations. Bold values denote the highest results.

| Model | Supervision Type | Weak | Strong | IoU (%) | Precision (%) | Recall (%) | F1-Score (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| U-Net [1] | Full | – | 100% | 58.39 | 77.41 | 70.38 | 73.73 | 69.81 |
| MobileNetv3 [40] | Full | – | 100% | 78.41 | 89.68 | 86.18 | 87.9 | 84.49 |
| SwinT [41] | Full | – | 100% | 78.31 | 90.9 | 84.97 | 87.84 | 84.50 |
| EfficientCrackNet [36] | Full | – | 100% | 71.4 | 80.42 | 86.43 | 83.31 | 78.81 |
| CrackMaster [42] | Full | – | 100% | 81.81 | 92.15 | 87.94 | 89.99 | 86.99 |
| CrackRefineNet [32] | Full | – | 100% | 83.11 | 89.97 | 91.6 | 90.78 | 87.77 |
| Self-Correcting [29] | Mixed (Box + Full) | 100% | 10% | 74.81 | 86.8 | 84.41 | 85.59 | 81.79 |
| Macro-Micro [43] | Mixed (Box + Full) | 100% | 10% | 71.98 | 85.15 | 82.31 | 83.7 | 79.71 |
| DCR [37] | Mixed (Box + Full) | 100% | 10% | 73.56 | 86.81 | 82.82 | 84.77 | 80.94 |
| Strong-Weak [38] | Mixed (Box + Full) | 100% | 10% | 66.28 | 79.47 | 79.97 | 79.72 | 75.25 |
| UCMT (U-Net) [44] | Mixed (Box + Full) | 100% | 10% | 69.81 | 84.75 | 81.60 | 83.33 | 80.67 |
| DSBD [45] | Mixed (Box + Full) | 100% | 10% | 71.42 | 84.71 | 87.54 | 86.31 | 78.05 |
| Xiong et al. [39] | Mixed (Box + Full) | 100% | 10% | 65.0 | 82.56 | 75.34 | 78.79 | 74.77 |
| EGE-UDSMT [46] | Mixed (Box + Full) | 100% | 10% | 74.62 | 89.65 | 86.36 | 88.65 | 81.63 |
| Ours | Mixed (Box + Full) | 100% | 10% | 87.41 | **92.35** | 94.23 | 93.28 | 90.92 |
| Ours | Mixed (Box + Full) | 100% | 20% | **87.95** | 91.99 | **95.24** | **93.59** | **91.29** |

ISIC 2018 dataset: In the fully supervised setting, CrackRefineNet [32] achieves the highest score with 83.11% IoU, 90.78% F1-score, and 87.77% mIoU.

Among mixed-supervision methods using 10% strong labels, Self-Correcting [29] achieves 74.81% IoU, 85.59% F1-score, and 81.79% mIoU, while EGE-UDSMT [46] achieves 74.62% IoU, 88.65% F1-score, and 81.63% mIoU.

Our framework, with 10% strong labels and full weak annotations, achieves 87.41% IoU, 93.28% F1-score, and 90.92% mIoU—outperforming Self-Correcting by +12.60% IoU, +7.69% F1, and +9.13% mIoU, and surpassing EGE-UDSMT by +12.79% IoU, +4.63% F1, and +9.29% mIoU. Compared to the best fully supervised model (CrackRefineNet), our method improves by +4.30% IoU, +2.50% F1, and +3.15% mIoU, despite using only one-tenth of the strong annotations.

When increasing to 20% strong labels, our method further boosts performance to 87.95% IoU, 93.59% F1-score, and 91.29% mIoU, the best IoU, Recall, F1-score, and mIoU in Table 5; the highest Precision (92.35%) is obtained by our 10% setting. The IoU is 4.84% higher than that of CrackRefineNet, and the mIoU is 3.52% higher, demonstrating that the proposed framework scales effectively with additional strong labels while maintaining a substantial lead over both mixed- and fully supervised baselines.

These results show that the proposed framework not only bridges the performance gap between weakly and fully supervised segmentation but also outperforms state-of-the-art fully supervised models in multiple settings while using as little as 10–20% of strong annotations. This confirms the effectiveness of the bounding box encoder in leveraging weak supervision to achieve competitive and even superior performance across different domains and metrics.

5.3. Qualitative Analysis of Results

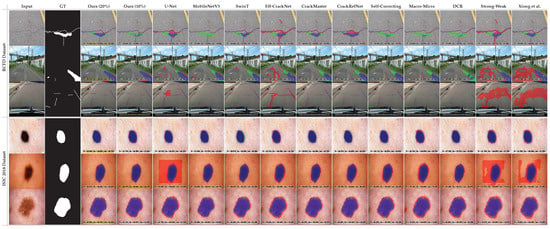

To further illustrate the effectiveness of the proposed framework, Figure 5 presents qualitative comparisons on both the RCFD and ISIC 2018 datasets. Each column shows the input image, ground truth mask, and segmentation outputs from representative state-of-the-art methods alongside our approach with 10% and 20% strong annotations. Blue regions correspond to true positives, red regions indicate false positives, and green regions mark false negatives, with the best IoU scores highlighted in yellow.

Figure 5.

Qualitative comparison of crack detection and skin lesion segmentation across two datasets: RCFD (top) and ISIC 2018 (bottom). Each column shows the Input, Ground Truth (GT), and predicted overlays from multiple models. Blue regions indicate true positives, red regions are false positives, and green regions are false negatives. Best IoU scores are highlighted in yellow.

On the RCFD dataset, our method produces more precise crack delineations with fewer spurious predictions compared to baselines such as Strong-Weak [38] and Xiong et al. [39], which tend to generate noisy or fragmented masks. Even under limited supervision (10% strong), our framework captures fine crack structures and suppresses background noise more effectively than fully supervised models such as U-Net [1] and MobileNetv3 [40]. Increasing the proportion of strong annotations to 20% further refines the predictions, yielding continuous and complete crack regions that closely match the ground truth.

On ISIC 2018, lesion boundaries obtained by our method align more faithfully with ground truth masks compared to competing approaches. For example, Macro-Micro [43] and DCR [37] often under-segment lesion areas, while Self-Correcting [29] and SwinT [41] occasionally introduce false positives along the lesion border. In contrast, our framework with only 10% strong labels already achieves compact and accurate delineations, and the 20% setting produces the sharpest lesion boundaries with minimal false positives and negatives.

These visual results reinforce the quantitative findings: the bounding box encoder effectively leverages weak supervision to guide feature learning, enabling the model to outperform both fully and weakly supervised baselines while reducing annotation requirements.
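The colour coding used in the qualitative figures (blue for true positives, red for false positives, green for false negatives) can be reproduced with a short sketch. The function name `error_overlay` and the choice to return `None` for true-negative pixels (so that the underlying image stays visible) are assumptions for illustration, not the authors' plotting code.

```python
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]
BLUE: RGB = (0, 0, 255)    # true positive
RED: RGB = (255, 0, 0)     # false positive
GREEN: RGB = (0, 255, 0)   # false negative


def error_overlay(
    pred: Sequence[Sequence[int]], gt: Sequence[Sequence[int]]
) -> List[List[Optional[RGB]]]:
    """Map each pixel to an overlay colour, or None where nothing is drawn."""
    overlay: List[List[Optional[RGB]]] = []
    for pred_row, gt_row in zip(pred, gt):
        row: List[Optional[RGB]] = []
        for p, g in zip(pred_row, gt_row):
            if p and g:
                row.append(BLUE)
            elif p:
                row.append(RED)
            elif g:
                row.append(GREEN)
            else:
                row.append(None)  # true negative: keep the input pixel
        overlay.append(row)
    return overlay


# 2x2 toy example covering all four cases.
overlay = error_overlay([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```

Blending the non-`None` colours onto the input image then yields overlays of the kind described for Figure 5.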

5.4. Efficiency Analysis

To complement the quantitative and qualitative results, Table 6 reports the model size and inference efficiency of the compared segmentation models in terms of trainable parameters, per-image inference time, and floating-point operations (FLOPs), measured under the implementation setup described in Section 4.3.

Table 6.

Comparative analysis of model size and inference efficiency. Columns report trainable parameters (M), per-image inference time (s), and FLOPs per forward pass, measured under the hardware/software configuration in Section 4.3 (batch size 1; no test-time augmentation; times averaged after warm-up).

Lightweight baselines such as MobileNetv3 [40] and EfficientCrackNet [36] demonstrate small model size (3.28 M and 0.35 M parameters, respectively) with very low compute (FLOPs). However, this efficiency comes at the cost of accuracy, as confirmed in previous evaluations. In contrast, fully supervised architectures such as SwinT [41] and CrackRefineNet [32] achieve higher accuracy but at a higher compute and model-size cost, requiring up to 99.66 M parameters and 0.206 T FLOPs, with longer inference times.

Among the semi-supervised frameworks, the Self-Correcting network [29] has a relatively low compute footprint (48.77 M parameters and 0.061 T FLOPs) due to its streamlined design. However, this reduced compute budget comes with limitations in segmentation accuracy. Our proposed model, which integrates a ConvNeXt-Base backbone with a lightweight U-Net-style CNN decoder and a dedicated bounding box encoder, has a higher parameter count (92.67 M) but keeps the computation tractable at 0.092 T FLOPs. Importantly, its inference time (0.0201 s per image) remains close to that of U-Net (0.0192 s) and is substantially shorter than that of CrackRefineNet (0.0387 s), despite the stronger backbone.

This efficiency analysis highlights that the proposed framework strikes an effective balance between accuracy and efficiency in the semi-supervised setting. By leveraging bounding box guidance through the encoder, our model achieves superior segmentation performance while remaining efficient at inference, demonstrating its suitability for both research and real-world deployment.
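The timing protocol stated in the Table 6 caption (batch size 1, no test-time augmentation, times averaged after warm-up) can be sketched as a small harness. The helper name `mean_inference_time` and the warm-up and run counts are assumptions, since the text does not give them, and the dummy workload merely stands in for a single forward pass on one image.

```python
import time
from typing import Any, Callable


def mean_inference_time(
    run_once: Callable[[], Any], warmup: int = 10, runs: int = 50
) -> float:
    """Average wall-clock seconds per call, measured after warm-up calls."""
    for _ in range(warmup):
        run_once()  # warm-up iterations are executed but not timed
    start = time.perf_counter()
    for _ in range(runs):
        run_once()
    return (time.perf_counter() - start) / runs


def dummy_forward() -> int:
    # Placeholder workload standing in for one forward pass (batch size 1).
    return sum(i * i for i in range(10_000))


seconds_per_image = mean_inference_time(dummy_forward, warmup=3, runs=20)
```

On a GPU, asynchronous kernels would also need to be synchronized before each clock read (for example with `torch.cuda.synchronize()` in PyTorch); whether the reported times were measured this way is not stated.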

5.5. Analysis of Failure Cases

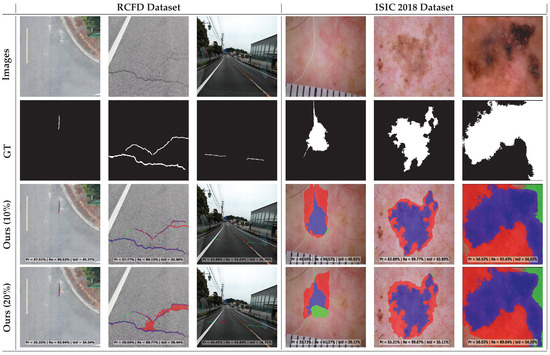

Although the proposed framework achieves strong overall performance, certain limitations remain in challenging scenarios. Figure 6 highlights representative failure cases from the RCFD and ISIC 2018 datasets. The main sources of error can be grouped into three categories.

Figure 6.

Illustration of representative failure cases on the RCFD (left) and ISIC 2018 (right) datasets. Each column presents an input image, the corresponding ground truth mask, and predictions from our model trained with 10% and 20% strong annotations. Common errors include boundary ambiguity, missed fine structures (false negatives in green), and spurious detections in textured regions (false positives in red). Despite these challenges, the predictions remain reasonably aligned with ground truth (in blue), demonstrating robustness under limited supervision.

First, boundary ambiguity arises in regions where cracks or lesion edges are poorly defined. In such cases, the model may either under-segment the structure or include irrelevant background, leading to false positives or false negatives. Second, low-contrast or fine-scale details are sometimes missed, particularly when only a small fraction of strong annotations is available. This results in incomplete predictions, with thin or subtle structures not being fully captured. Finally, textured or noisy regions occasionally cause the model to over-segment, producing spurious detections that do not correspond to true target regions.

Despite these challenges, the overall alignment between predictions and ground truth remains high, even with as little as 10–20% strong supervision. These observations suggest that while the bounding box encoder effectively leverages weak annotations, future work could focus on improving boundary refinement and robustness to noise, for example through boundary-aware losses or post-processing modules.

6. Conclusions

In this work, we introduced a weakly supervised segmentation framework that combines a ConvNeXt-Base encoder with a lightweight bounding box encoder and a U-Net-style decoder. The key novelty lies in leveraging bounding box annotations to generate spatial attention maps that guide feature modulation, thereby reducing the reliance on dense pixel-level annotations.

Extensive experiments on two challenging datasets, RCFD and ISIC 2018, demonstrate that our method not only outperforms conventional fully supervised baselines but also achieves competitive or superior results compared to state-of-the-art semi- and weakly supervised approaches. Notably, with only 10–20% strong annotations and full weak supervision, the proposed framework surpasses the performance of fully supervised baselines, highlighting its annotation efficiency. For RCFD (Table 4), our model achieves IoU 69.29%, F1 81.86%, and mIoU 83.99% with 20% strong labels (vs. CrackRefineNet at 65.41% IoU, 79.09% F1, 81.97% mIoU using 100% strong). Even at 10% strong, ours attains 61.97% IoU, 76.52% F1, 80.15% mIoU, exceeding mixed baselines at 10% strong (e.g., Self-Correcting: 48.14% IoU, 64.99% F1, 72.92% mIoU). On ISIC 2018 (Table 5), our method reaches 87.41–87.95% IoU, 93.28–93.59% F1, and 90.92–91.29% mIoU with 10–20% strong labels, outperforming fully supervised CrackRefineNet (83.11% IoU, 90.78% F1, 87.77% mIoU) and other baselines.

Ablation studies further confirmed the contribution of the bounding box encoder and the effectiveness of integrating weak masks during training. Complexity analysis showed that the model achieves a favorable trade-off between accuracy and computational cost, maintaining efficiency while delivering high performance. Qualitative analyses also revealed the strengths and remaining challenges, particularly in handling boundary ambiguity and noisy textures. In terms of efficiency (Table 6), our model uses 92.67 M parameters with 0.092 T FLOPs and achieves 0.0201 s per-image inference—close to U-Net (0.0192 s) and faster than CrackRefineNet (0.0387 s) under the setup in Section 4.3.

Future work will focus on improving boundary refinement and generalizing the framework to other domains where annotation costs are high. We also aim to explore the integration of advanced loss functions and post-processing strategies to further mitigate errors in challenging cases. Overall, this study demonstrates that bounding box-guided feature modulation offers a practical and effective path toward annotation-efficient semantic segmentation.

Author Contributions

Conceptualization, A.M.O., H.A.R., S.C. and D.P.; methodology, A.M.O., H.A.R. and S.C.; software, A.M.O.; validation, A.M.O., H.A.R. and S.C.; formal analysis, A.M.O. and H.A.R.; investigation, A.M.O.; resources, S.C. and D.P.; data curation, A.M.O.; writing—original draft preparation, A.M.O.; writing—review and editing, A.M.O., H.A.R., S.C. and D.P.; visualization, A.M.O.; supervision, H.A.R. and D.P.; project administration, D.P.; funding acquisition, D.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work is part of the PECT “Cuidem el que ens uneix” project, Operation 4, within the framework of the RIS3CAT and ERDF Catalonia Operational Programme 2014–2020. PECT is co-financed by the Catalan Government, the Provincial Council of Tarragona, “Diputació de Tarragona” and Universitat Rovira i Virgili.

Data Availability Statement

The datasets used in this study are as follows: RCFD [31,32], which is a private dataset and not publicly available, and ISIC 2018 [33], which is publicly accessible. The code is publicly available at: https://github.com/AmmarOkran/Bbox-Guided-Segmentation (accessed on 25 September 2025).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Additional Hyperparameter Sensitivity

Table A1.

Sensitivity of performance to the loss weight on the test split (20% strong, 100% weak; threshold ). Bold values denote the highest results.

| Loss weight | RCFD IoU (%) | RCFD F1-Score (%) | ISIC 2018 IoU (%) | ISIC 2018 F1-Score (%) |
|---|---|---|---|---|
| 0.00 | 67.40 | 80.40 | 86.60 | 92.60 |
| 0.05 | 68.20 | 80.90 | 87.00 | 92.90 |
| 0.10 | **68.73** | **81.30** | **87.30** | **93.10** |
| 0.20 | 68.40 | 81.05 | 87.10 | 93.00 |
| 0.50 | 65.02 | 78.80 | 85.40 | 91.80 |
| 0.70 | 63.80 | 77.60 | 84.70 | 91.10 |
| 1.00 | 61.90 | 76.10 | 83.60 | 90.20 |

References

1. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

2. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

3. Okran, A.M.; Saleh, A.; Puig, D.; Rashwan, H.A. Stacking Up for Success: A Cascade Network Model for Efficient Road Crack Segmentation. In Artificial Intelligence Research and Development; IOS Press: Amsterdam, The Netherlands, 2023; pp. 38–47.

4. Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature pyramid and hierarchical boosting network for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1525–1535.

5. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

6. Okran, A.M.; Abdel-Nasser, M.; Rashwan, H.A.; Puig, D. Effective deep learning-based ensemble model for road crack detection. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 6407–6415.

7. Okran, A.M.; Rashwan, H.A.; Puig, D. Enhanced Crack Segmentation Network: Leveraging Multi-Dimensional Attention. In Artificial Intelligence Research and Development; IOS Press: Amsterdam, The Netherlands, 2024; pp. 94–96.

8. Codella, N.C.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (isbi), hosted by the international skin imaging collaboration (isic). In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 168–172.

9. Pathak, D.; Krahenbuhl, P.; Darrell, T. Constrained convolutional neural networks for weakly supervised segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1796–1804.

10. Lin, D.; Dai, J.; Jia, J.; He, K.; Sun, J. Scribblesup: Scribble-supervised convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3159–3167.

11. Bearman, A.; Russakovsky, O.; Ferrari, V.; Fei-Fei, L. What’s the point: Semantic segmentation with point supervision. In Computer Vision—ECCV 2016, Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 549–565.

12. Dai, J.; He, K.; Sun, J. Boxsup: Exploiting bounding boxes to supervise convolutional networks for semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1635–1643.

13. Khoreva, A.; Benenson, R.; Hosang, J.; Hein, M.; Schiele, B. Simple does it: Weakly supervised instance and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 876–885.

14. Ahn, J.; Kwak, S. Learning pixel-level semantic affinity with image-level supervision for weakly supervised semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4981–4990.

15. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

16. Okran, A.M.; Rashwan, H.A.; Abdulwahab, S.; Chambon, S.; Puig, D. Annotation-Efficient Crack Segmentation in Full Scene Images via Bounding Box-Guided Feature Modulation. In Artificial Intelligence Research and Development; IOS Press: Amsterdam, The Netherlands, 2025.

17. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 4015–4026.

18. Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. In Computer Vision—ECCV 2024, Proceedings of the 18th European Conference, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 38–55.

19. Ravanbakhsh, E.; Niu, C.; Liang, Y.; Ramanujam, J.; Li, X. Enhancing Weakly Supervised Semantic Segmentation with Multi-Modal Foundation Models: An End-to-End Approach. arXiv 2024, arXiv:2405.06586.

20. Wang, J.; Xia, B. Bounding box tightness prior for weakly supervised image segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2021, Proceedings of the 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Springer: Cham, Switzerland, 2021; pp. 526–536.

21. Lee, J.; Yi, J.; Shin, C.; Yoon, S. Bbam: Bounding box attribution map for weakly supervised semantic and instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2643–2652.

22. Deng, Q.; Hui, L.; Xie, J.; Yang, J. Sketchy Bounding-box Supervision for 3D Instance Segmentation. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 8879–8888.

23. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

24. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

25. Hong, S.; Oh, S.J.; Han, B. Spatial priors for weakly supervised semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

26. Li, Z.; Zhao, Y.; Xie, E.; Yu, Z. Mask-distillation: Enhancing weakly supervised segmentation with mask consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

27. Kweon, H.; Yoon, K.J. WISH: Weakly Supervised Instance Segmentation using Heterogeneous Labels. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 25377–25387.

28. Valindria, V.V.; Lavdas, I.; Bai, W.; Kamnitsas, K.; Aboagye, E.O.; Rockall, A.G.; Rueckert, D.; Glocker, B. Domain adaptation for MRI organ segmentation using reverse classification accuracy. arXiv 2018, arXiv:1806.00363.

29. Ibrahim, M.S.; Vahdat, A.; Ranjbar, M.; Macready, W.G. Semi-supervised semantic image segmentation with self-correcting networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12715–12725.

30. Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. 2017, 30, 1195–1204.

31. Okran, A.M.; Abdel-Nasser, M.; Rashwan, H.A.; Puig, D. A Curated Dataset for Crack Image Analysis: Experimental Verification and Future Perspectives; IOS Press: Amsterdam, The Netherlands, 2022; pp. 225–228.

32. Okran, A.M.; Rashwan, H.A.; AL Khalidy, S.K.M.; Chambon, S.; Puig Valls, D.S. Crackrefinenet: A Context-and Refinement-Driven Convolutional Architecture for Robust Crack Segmentation Under Real-World and Zero-Shot Conditions. 2025. Available online: https://ssrn.com/abstract=5360396 (accessed on 25 September 2025).

33. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

34. Contributors, M. MMSegmentation: OpenMMLab Semantic Segmentation Toolbox and Benchmark. 2020. Available online: https://github.com/open-mmlab/mmsegmentation (accessed on 25 September 2025).

35. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

36. Zim, A.H.; Iqbal, A.; Al-Huda, Z.; Malik, A.; Kuribayashi, M. EfficientCrackNet: A lightweight model for crack segmentation. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 28 February–4 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 6279–6289.

37. Pan, J.; Bi, Q.; Yang, Y.; Zhu, P.; Bian, C. Label-efficient hybrid-supervised learning for medical image segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 2026–2034.

38. Luo, W.; Yang, M. Semi-supervised semantic segmentation via strong-weak dual-branch network. In Computer Vision—ECCV 2020, Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 784–800.

39. Xiong, X.; Wang, C.; Li, W.; Li, G. Semi-and Weakly-Supervised Learning for Mammogram Mass Segmentation with Limited Annotations. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.

40. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

41. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Los Alamitos, CA, USA, 11–17 October 2021; pp. 9992–10002.

42. Okran, A.M.; Rashwan, H.A.; Saleh, A.; Puig, D. Efficient crack segmentation with multi-decoder networks and enhanced feature fusion. Eng. Appl. Artif. Intell. 2025, 152, 110697.

43. Ning, M.; Bian, C.; Lu, D.; Zhou, H.Y.; Yu, S.; Yuan, C.; Guo, Y.; Wang, Y.; Ma, K.; Zheng, Y. A macro-micro weakly-supervised framework for as-oct tissue segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020, Proceedings of the 23rd International Conference, Lima, Peru, 4–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 725–734.

44. Shen, Z.; Cao, P.; Yang, H.; Liu, X.; Yang, J.; Zaiane, O.R. Co-training with high-confidence pseudo labels for semi-supervised medical image segmentation. arXiv 2023, arXiv:2301.04465.

45. Huang, Z.; Gai, D.; Min, W.; Wang, Q.; Zhan, L. Dual-stream-based dense local features contrastive learning for semi-supervised medical image segmentation. Biomed. Signal Process. Control 2024, 88, 105636.

46. Zhang, G.; Lu, J.; Chen, Y.; Deng, Y.; Zhao, B.; Chen, H.; Xue, L. A Lightweight Dual-Student Mean Teacher Semi-Supervised Semantic Segmentation Method for Skin Lesions. Neural Netw. 2025, 192, 107882.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).