Fusion of Electrical and Optical Methods in the Detection of Partial Discharges in Dielectric Oils Using YOLOv8

Abstract

1. Introduction

- Data source automation: the first and fundamental improvement lies in automating the data source itself. Conventional approaches typically start from data that are already digitized. Our system, by contrast, uses computer vision not only to analyze data but also to generate the structured data in the first place. By reading the display of a conventional, non-smart instrument directly, it turns that instrument into a self-contained source of structured digital data. This removes the dependence on manual interpretation by an operator or on proprietary data interfaces, offering an innovative way to modernize and automate existing equipment.

- Novel bimodal fusion for contextualized diagnosis: the second key improvement is a novel bimodal fusion that provides diagnostic context unattainable with unimodal models. Our approach combines the quantitative electrical information automatically extracted from the detector (the “how much”) with the qualitative, morphological characterization obtained from direct optical images (the “where” and the “how”). The improvement therefore lies not only in the classification algorithm itself but in the contextual richness of the fused data supplied to it. This synergy enables a more comprehensive diagnosis, correlating the electrical severity of an event with its precise physical manifestation in space.
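The fusion above requires the electrical readings and the optical detections to be paired record by record. The pairing mechanism is not specified here, so the sketch below assumes that both streams carry a capture timestamp (hypothetical `t` fields) and attaches to each optical event the closest electrical reading within a tolerance. The output keys reuse attribute names from the correlation table (`ocr_pd_level`, `ocr_voltage`, `CenterX`, `CenterY`, `Area`); the dataclasses and `fuse` are illustrative, not the authors' code.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class ElectricalReading:      # one OCR'd reading of the DDX display (hypothetical)
    t: float                  # capture time in seconds
    pd_level_nc: float
    voltage_kv: float


@dataclass
class OpticalEvent:           # one PD detected in the camera images (hypothetical)
    t: float
    center_x: float
    center_y: float
    area: float


def fuse(readings, events, max_dt=0.5):
    """Pair each optical event with the electrical reading closest in time.

    Events with no reading within max_dt seconds are discarded.
    """
    readings = sorted(readings, key=lambda r: r.t)
    times = [r.t for r in readings]
    fused = []
    for ev in events:
        i = bisect_left(times, ev.t)
        # Only the readings just before and just after ev.t can be the closest.
        candidates = [readings[j] for j in (i - 1, i) if 0 <= j < len(readings)]
        if not candidates:
            continue
        best = min(candidates, key=lambda r: abs(r.t - ev.t))
        if abs(best.t - ev.t) <= max_dt:
            fused.append({"t": ev.t, "ocr_pd_level": best.pd_level_nc,
                          "ocr_voltage": best.voltage_kv, "CenterX": ev.center_x,
                          "CenterY": ev.center_y, "Area": ev.area})
    return fused
```

A nearest-timestamp join is the simplest option; if the detector display refreshes slowly, `max_dt` could be set to roughly one refresh period.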

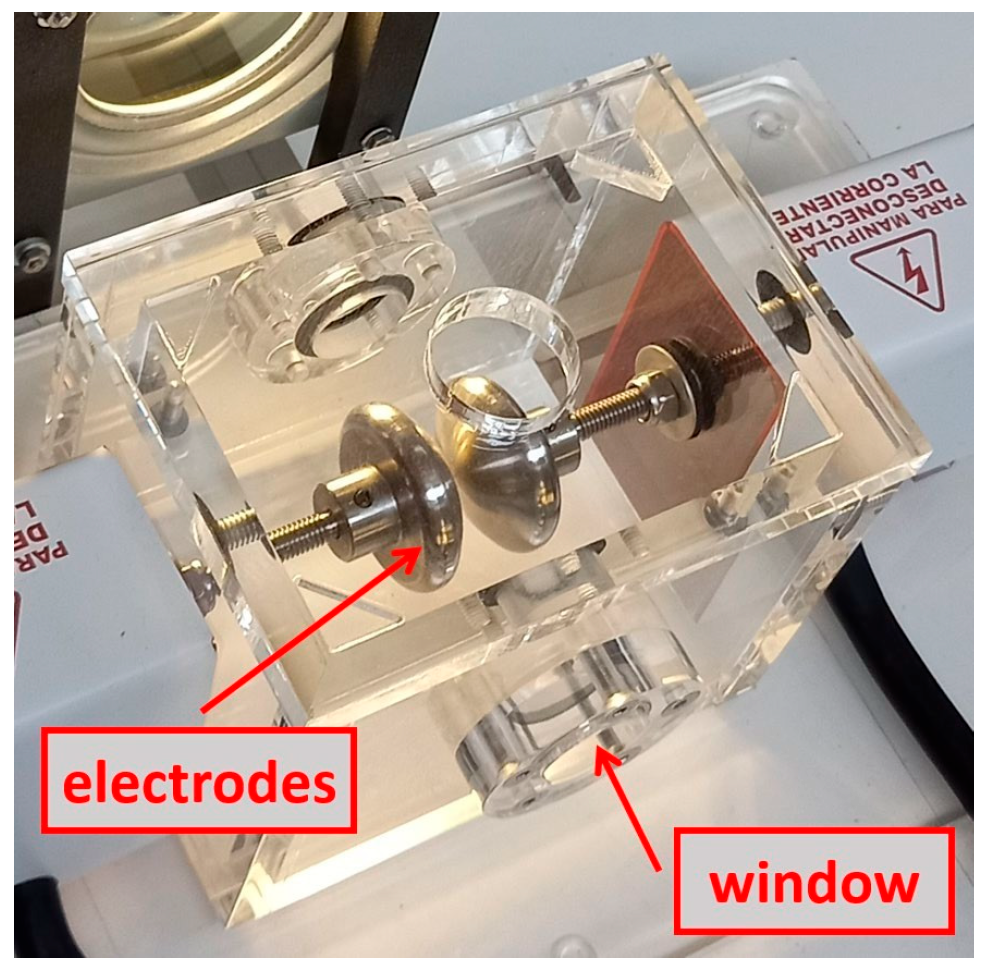

2. Experimental Setup

- Sensitivity to lighting conditions and optical environment: The performance of both YOLOv8 object detection and OCR depends intrinsically on the quality of the captured images, so the methodology is sensitive to variations in ambient lighting. Reflections, shadows, or uneven lighting can introduce noise and reduce the accuracy of the algorithms. A controlled environment was maintained in our laboratory, but an in situ implementation would require more robust solutions, such as controlled, polarized light sources or more advanced image preprocessing to normalize the captured images.

- Transparency of the dielectric medium: The effectiveness of optical discharge detection relies on the assumption that the dielectric medium, in this case the oil, is optically transparent. In real-world applications, insulating oils degrade over time due to thermal and electrical stress, which can increase their turbidity, change their color, or generate suspended byproducts. This degradation would cause attenuation of the optical signal through scattering or absorption, making it difficult or even impossible to capture the discharge morphology, especially for low-intensity events.

- Direct optical access requirement: A fundamental requirement of this technique is a direct line of sight to the area where the discharges occur. Our experimental setup used a vessel with transparent walls, simulating an inspection window. However, most high-voltage electrical equipment in service is enclosed in sealed metal tanks. Widespread application of this method would therefore require equipment fitted with inspection windows, or significant structural modifications to install them, which is not always feasible, safe, or economically viable.

- Scalability to field equipment: The transition from a laboratory environment to on-site diagnostics on large equipment, such as power transformers, presents considerable challenges. The large internal volume of this equipment makes it difficult to determine the optimal location of one or more cameras to cover all potential risk areas. Furthermore, integrating a vision system into the existing monitoring infrastructure and ensuring its durability in the harsh environmental conditions of an electrical substation are engineering hurdles that must be addressed before practical, large-scale implementation.

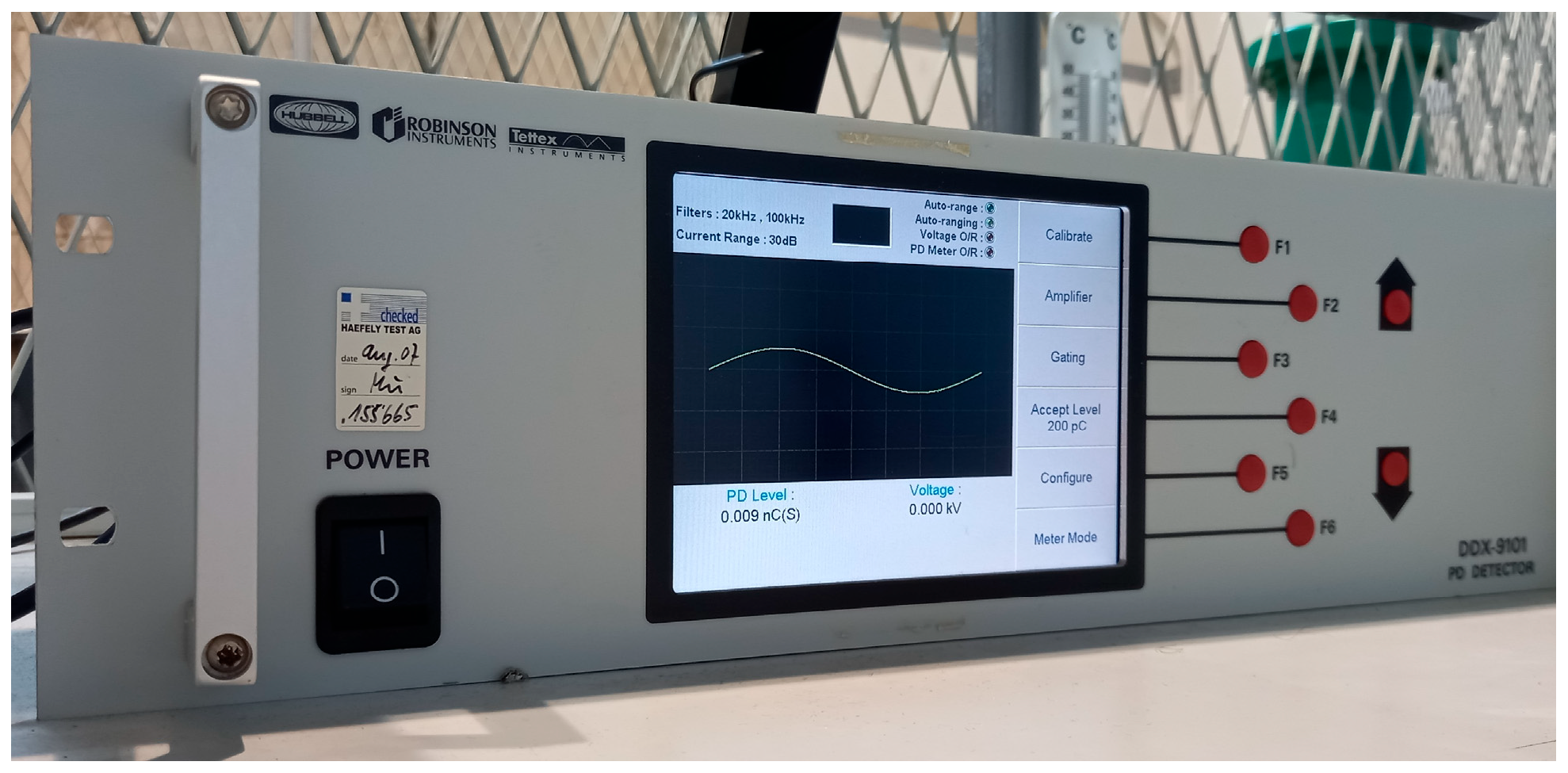

3. Method 1: Automating the Electrical Detector via Computer Vision

3.1. CNN Training

3.1.1. Training Environment

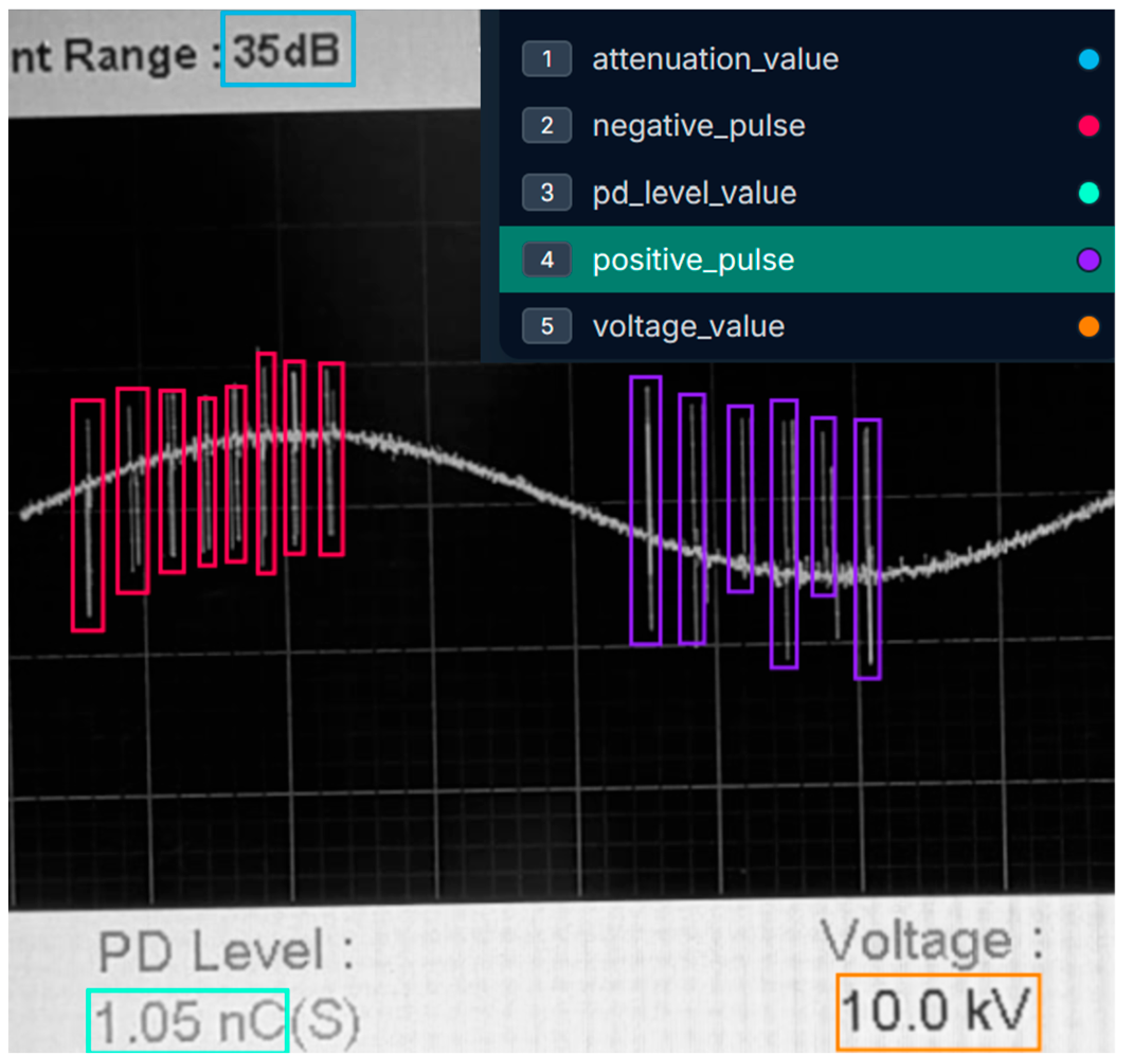

3.1.2. Manual Labeling of DDX Images

- attenuation_value

- negative_pulse

- pd_level_value

- positive_pulse

- voltaje_value
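In YOLO format, each labeled image is stored as a text file with one line per object: a class index followed by the box center, width and height, all normalized to the image size. The sketch below converts such a line back to pixel corners for the five DDX classes listed above. The index order is an assumption (it simply follows the alphabetical order of the list); the exported `data.yaml` is the authoritative mapping.

```python
# Assumed index order (alphabetical, as listed above); check the exported data.yaml.
DDX_CLASSES = ["attenuation_value", "negative_pulse", "pd_level_value",
               "positive_pulse", "voltaje_value"]


def parse_label_line(line, img_w, img_h):
    """Convert a YOLO label line 'cls cx cy w h' (normalized) to (name, pixel corners)."""
    cls_id, cx, cy, w, h = line.split()
    cx, w = float(cx) * img_w, float(w) * img_w   # horizontal values in pixels
    cy, h = float(cy) * img_h, float(h) * img_h   # vertical values in pixels
    box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
    return DDX_CLASSES[int(cls_id)], box
```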

3.1.3. Dataset Setup and Training

- Training: 594 images (71%).

- Validation: 146 images (17%).

- Test: 100 images (12%).
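The three subsets add up to 840 images, and the percentages above are rounded. A minimal sketch of a reproducible split with exactly these counts (hypothetical file names, fixed seed) follows; it is not necessarily how the authors or their labeling tool performed the split.

```python
import random


def split_dataset(items, n_train, n_val, seed=0):
    """Reproducibly shuffle items and split them; the test set gets the remainder."""
    items = list(items)
    random.Random(seed).shuffle(items)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


images = [f"ddx_{i:04d}.jpg" for i in range(840)]  # hypothetical file names
train_set, val_set, test_set = split_dataset(images, 594, 146)
for name, part in (("train", train_set), ("val", val_set), ("test", test_set)):
    print(f"{name}: {len(part)} images ({100 * len(part) / len(images):.1f}%)")
```

It prints 70.7%, 17.4% and 11.9%, which round to the 71%, 17% and 12% quoted above.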

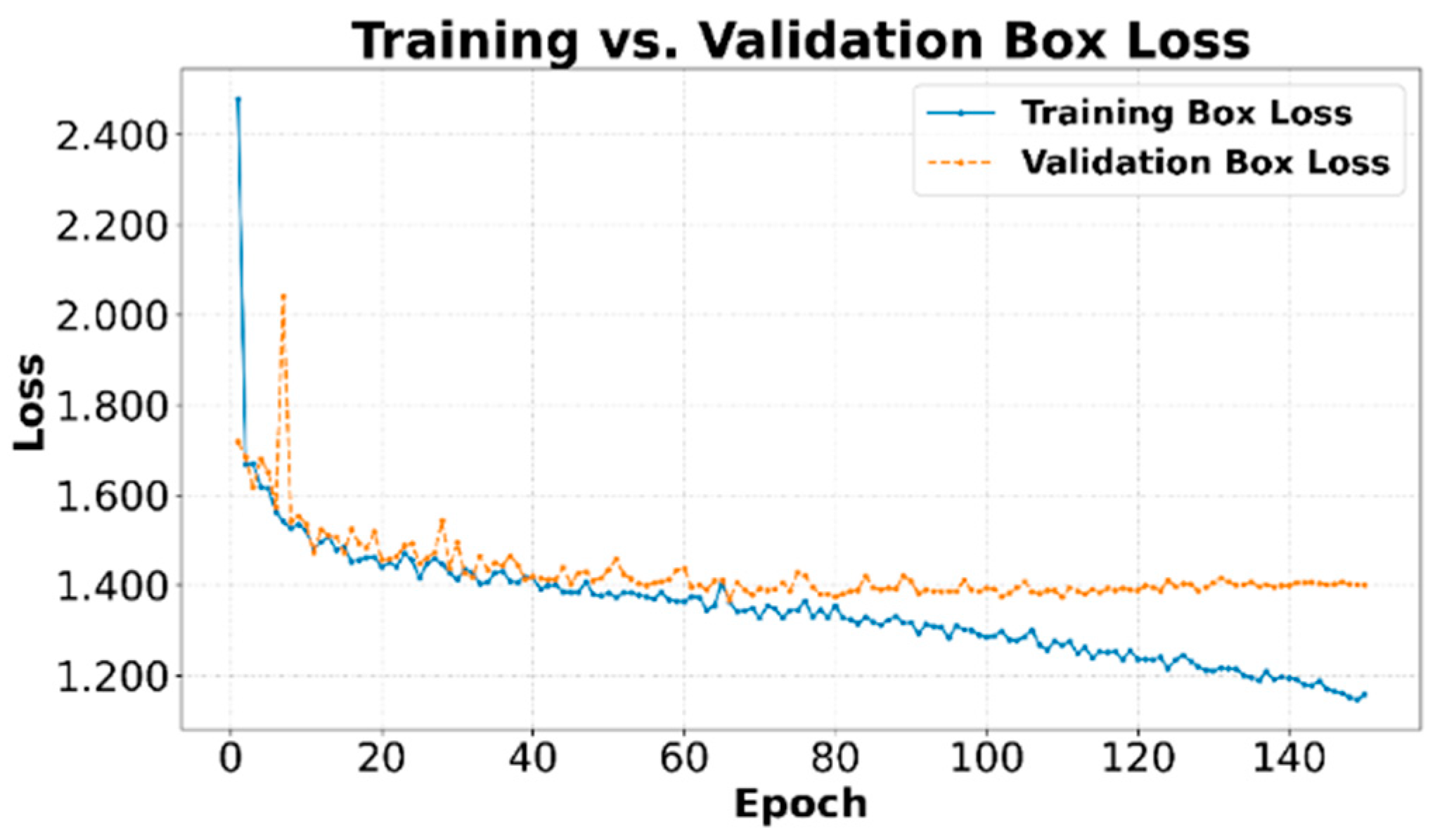

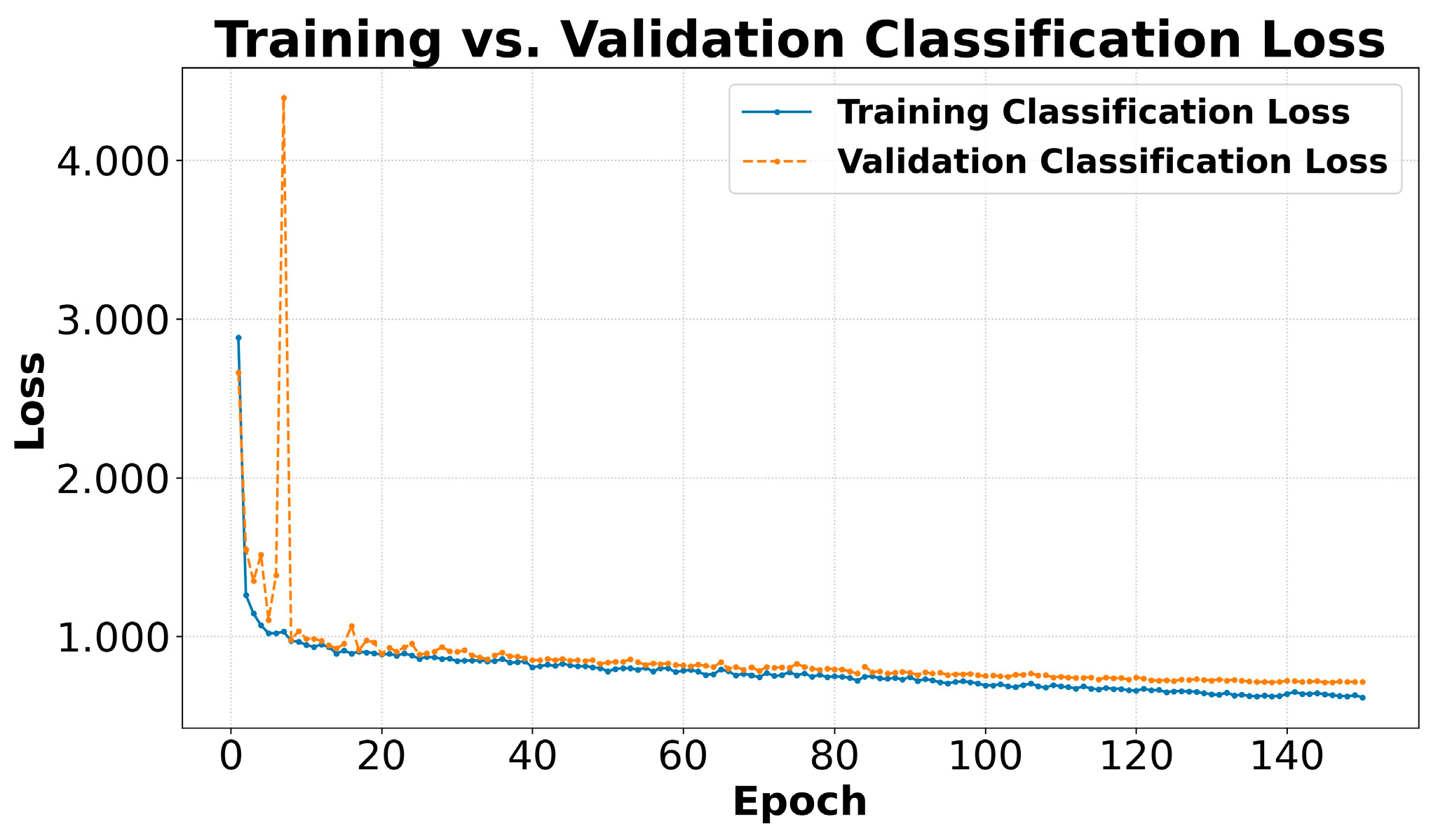

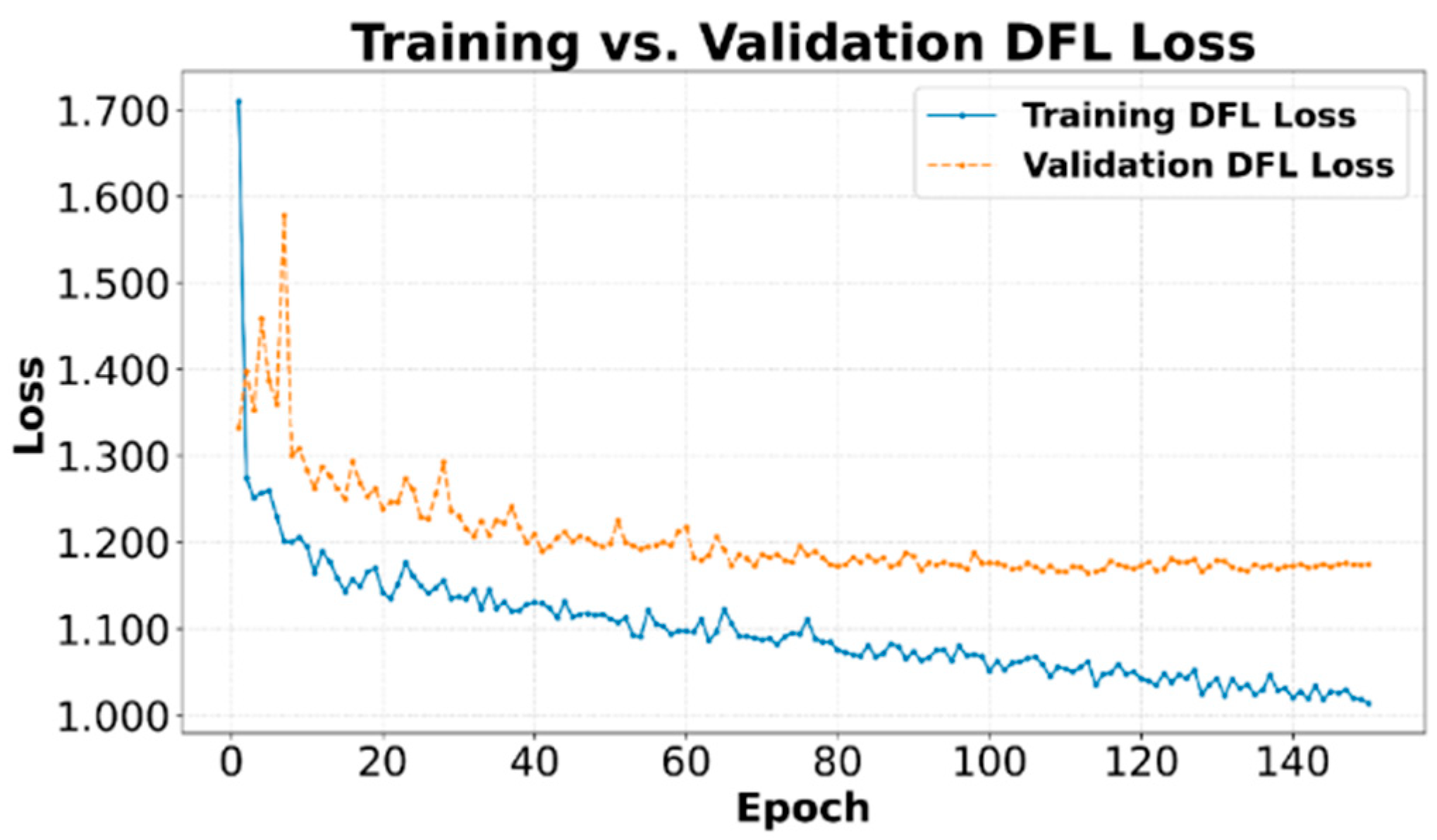

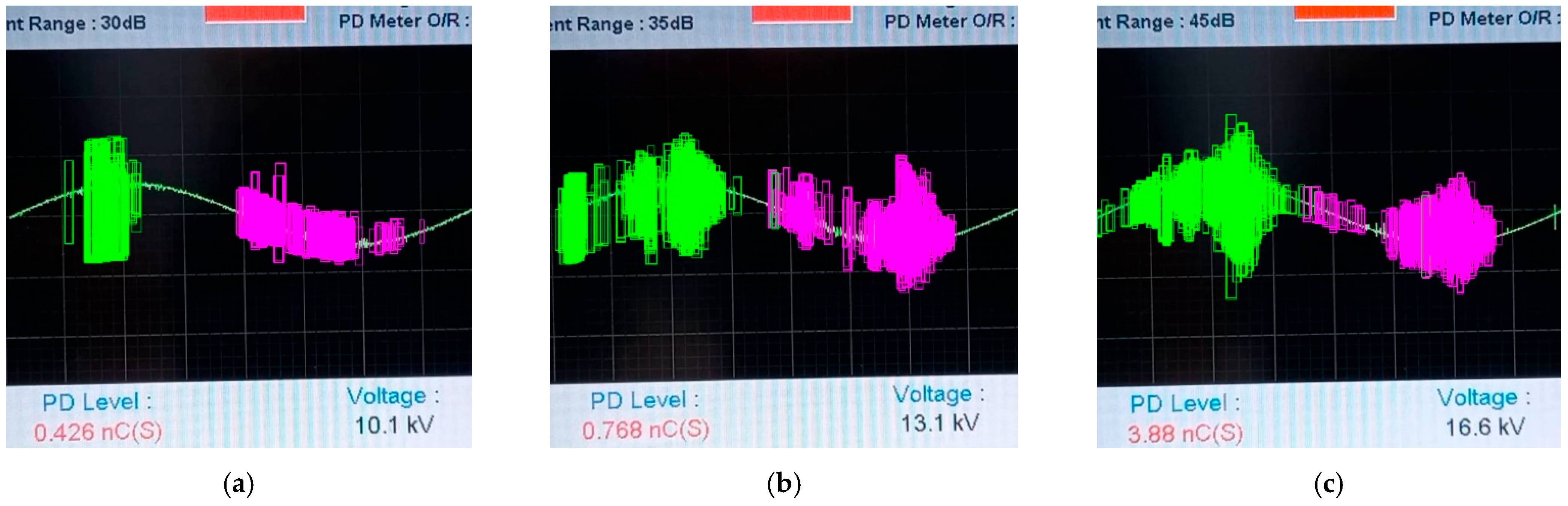

3.1.4. Analysis of Loss Curves

Box Loss
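The box loss measures how well predicted boxes overlap the labeled ones. The sketch below implements the Distance-IoU (DIoU) loss of Zheng et al. (cited in the references), `1 - IoU + rho^2 / c^2`, where `rho` is the distance between box centers and `c` the diagonal of the smallest box enclosing both. To our knowledge, the Ultralytics YOLOv8 code uses the CIoU variant from the same paper, which adds an aspect-ratio penalty, so this is a simplified illustration rather than the exact training loss.

```python
def diou_loss(box_p, box_g):
    """DIoU loss for a predicted and a ground-truth box, both (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = box_p
    gx1, gy1, gx2, gy2 = box_g
    # Intersection over union.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    iou = inter / union
    # Squared distance between the two box centers.
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both.
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2
    return 1.0 - iou + rho2 / c2
```

Unlike a plain IoU loss, the distance term still gives a useful signal when the boxes do not overlap at all.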

Classification Loss
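For classification, YOLOv8 scores each class independently with a sigmoid and, as far as we know, trains those scores with binary cross-entropy. A minimal, numerically stable version computed directly from logits is sketched below. In the real trainer the targets are soft (IoU-aware) rather than strictly 0 or 1; the sketch accepts such targets but does not reproduce how they are assigned.

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over classes, computed from raw logits.

    Uses the stable form max(z, 0) - z*y + log(1 + exp(-|z|)), which equals
    -y*log(sigmoid(z)) - (1 - y)*log(1 - sigmoid(z)).
    """
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)
```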

Distribution Focal Loss
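The Distribution Focal Loss (DFL) comes from the Generalized Focal Loss paper by Li et al. (cited in the references). Instead of regressing each box edge as a single number, the head predicts a discrete distribution over integer bins, and DFL pushes probability mass onto the two bins that bracket the continuous target `y`: `DFL = -((y_r - y) * log(p_l) + (y - y_l) * log(p_r))`, with `y_l = floor(y)` and `y_r = y_l + 1`. The sketch below evaluates it for one edge; the bin count and inputs are illustrative.

```python
import math


def dfl(logits, target):
    """Distribution Focal Loss for one box edge.

    logits: unnormalized scores over integer bins 0..n-1.
    target: continuous distance, required to satisfy 0 <= target < n - 1.
    """
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]     # softmax, shifted for stability
    total = sum(exps)
    log_p = [math.log(e / total) for e in exps]
    y_l = math.floor(target)
    y_r = y_l + 1
    if not (0 <= y_l and y_r < len(logits)):
        raise ValueError("target must lie in [0, n_bins - 1)")
    w_l = y_r - target                           # weight of the left bin
    w_r = target - y_l                           # weight of the right bin
    return -(w_l * log_p[y_l] + w_r * log_p[y_r])
```

The loss is smallest when the predicted distribution splits its mass between the two neighboring bins in proportion to how close the target is to each of them.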

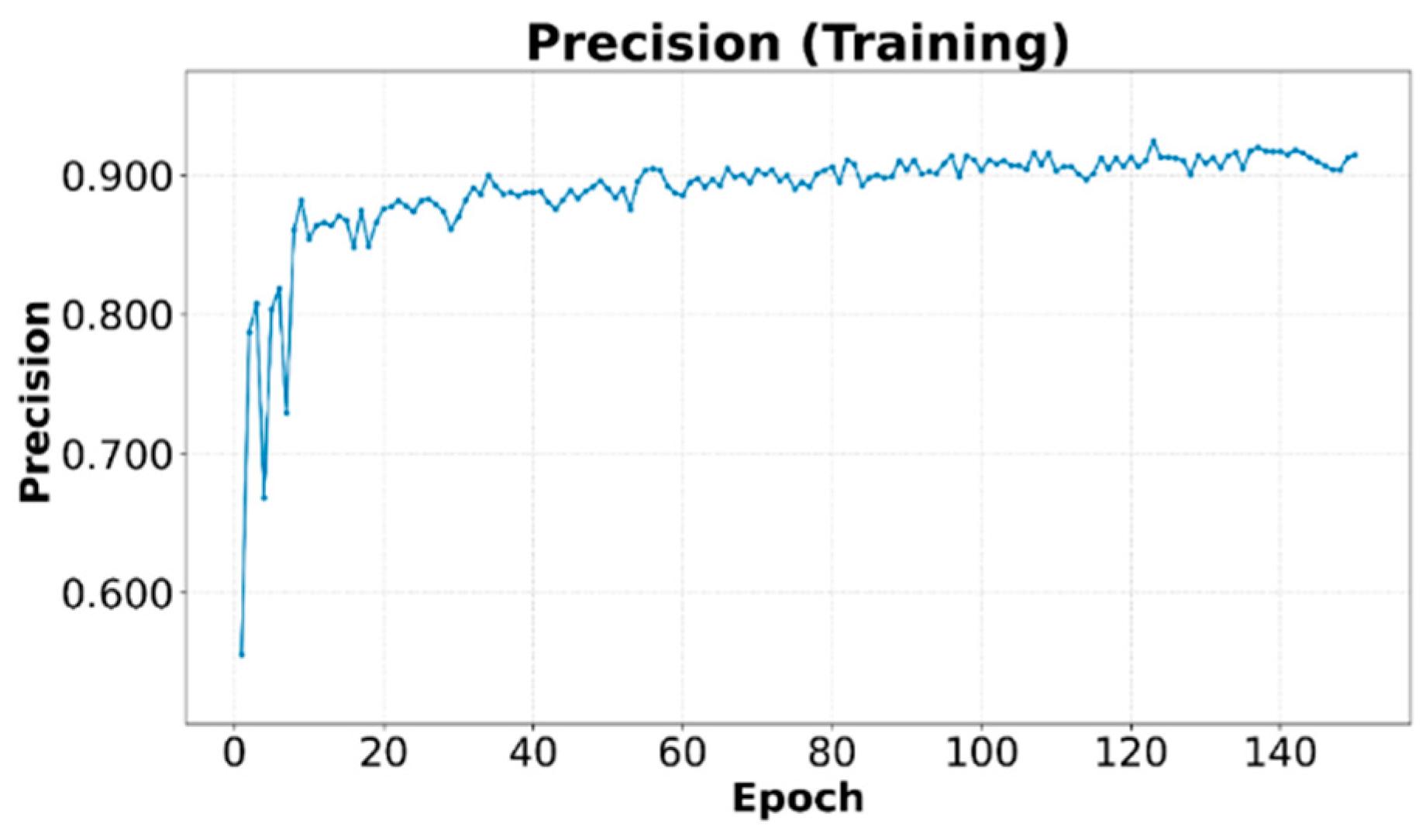

3.1.5. Performance Metrics

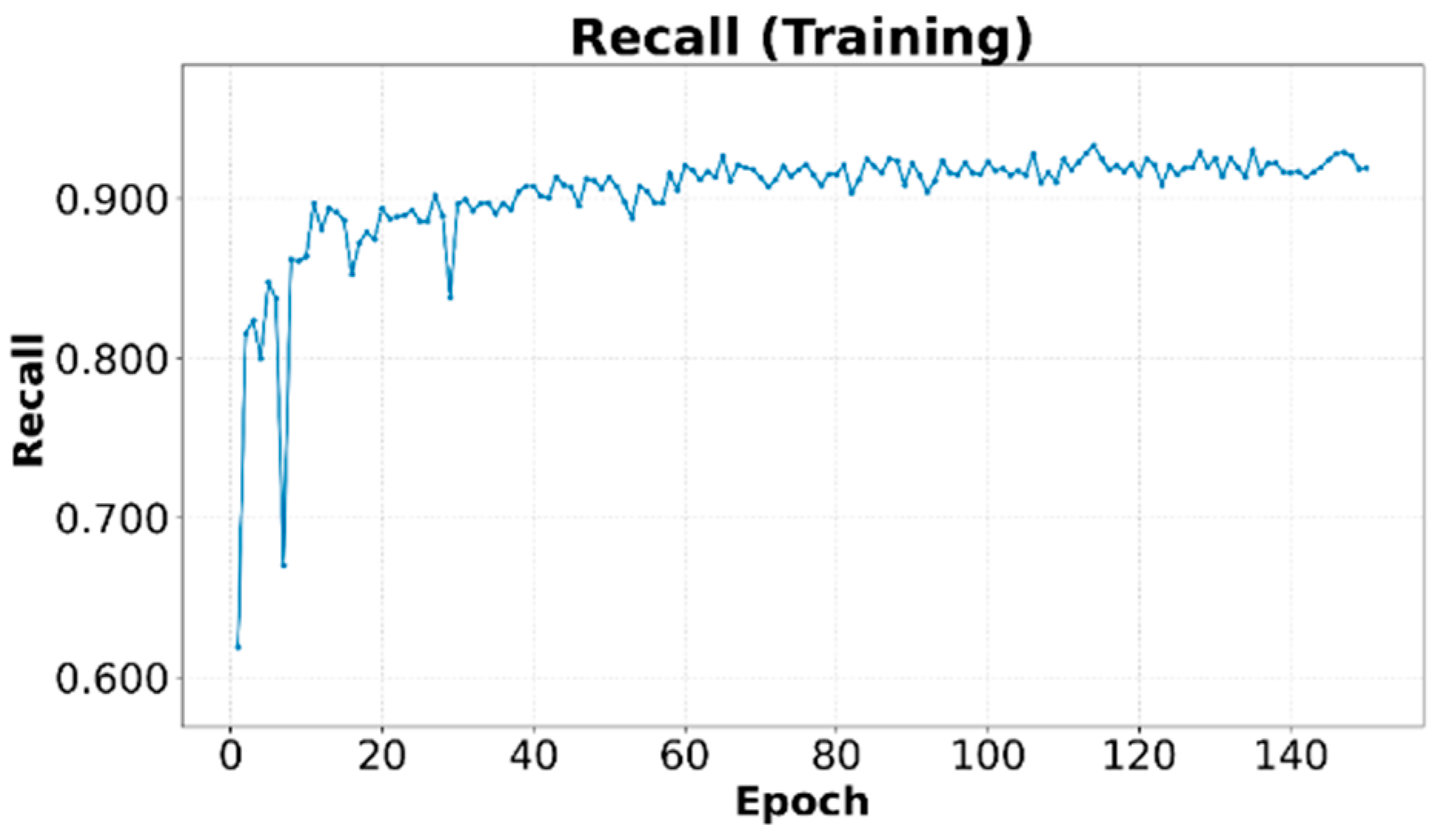

Precision and Recall in Training
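Precision is the fraction of predicted boxes that are correct, and recall the fraction of labeled boxes that are found. In detection, a prediction counts as correct only if it matches a not-yet-claimed ground-truth box of the same class with an IoU at or above a threshold. The sketch below uses a greedy, confidence-ordered matching at IoU 0.5; the tuple layout is an assumption for illustration, and the Ultralytics evaluator may differ in its matching details.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """preds: [(cls, conf, box)], gts: [(cls, box)]; returns (precision, recall)."""
    matched = set()
    tp = 0
    # Highest-confidence predictions claim ground-truth boxes first.
    for cls, _conf, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, (g_cls, g_box) in enumerate(gts):
            if j in matched or g_cls != cls:
                continue
            overlap = iou(box, g_box)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```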

mAP on the Validation Set
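mAP50 averages, over classes, the area under the precision-recall curve obtained at IoU 0.5; mAP50-95 further averages that value over the IoU thresholds 0.50, 0.55, ..., 0.95. The sketch below computes the per-class average precision with all-point interpolation, taking as input the confidences and true/false-positive flags produced by a matching step. Other evaluators (including, to our knowledge, Ultralytics) sample the precision envelope on a fixed recall grid, so their values can differ slightly.

```python
def average_precision(confidences, is_tp, n_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    confidences, is_tp: one entry per detection; n_gt: number of labeled boxes.
    """
    order = sorted(range(len(confidences)), key=lambda i: confidences[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                      # walk down the ranked detections
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum the rectangles where recall increases.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap
```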

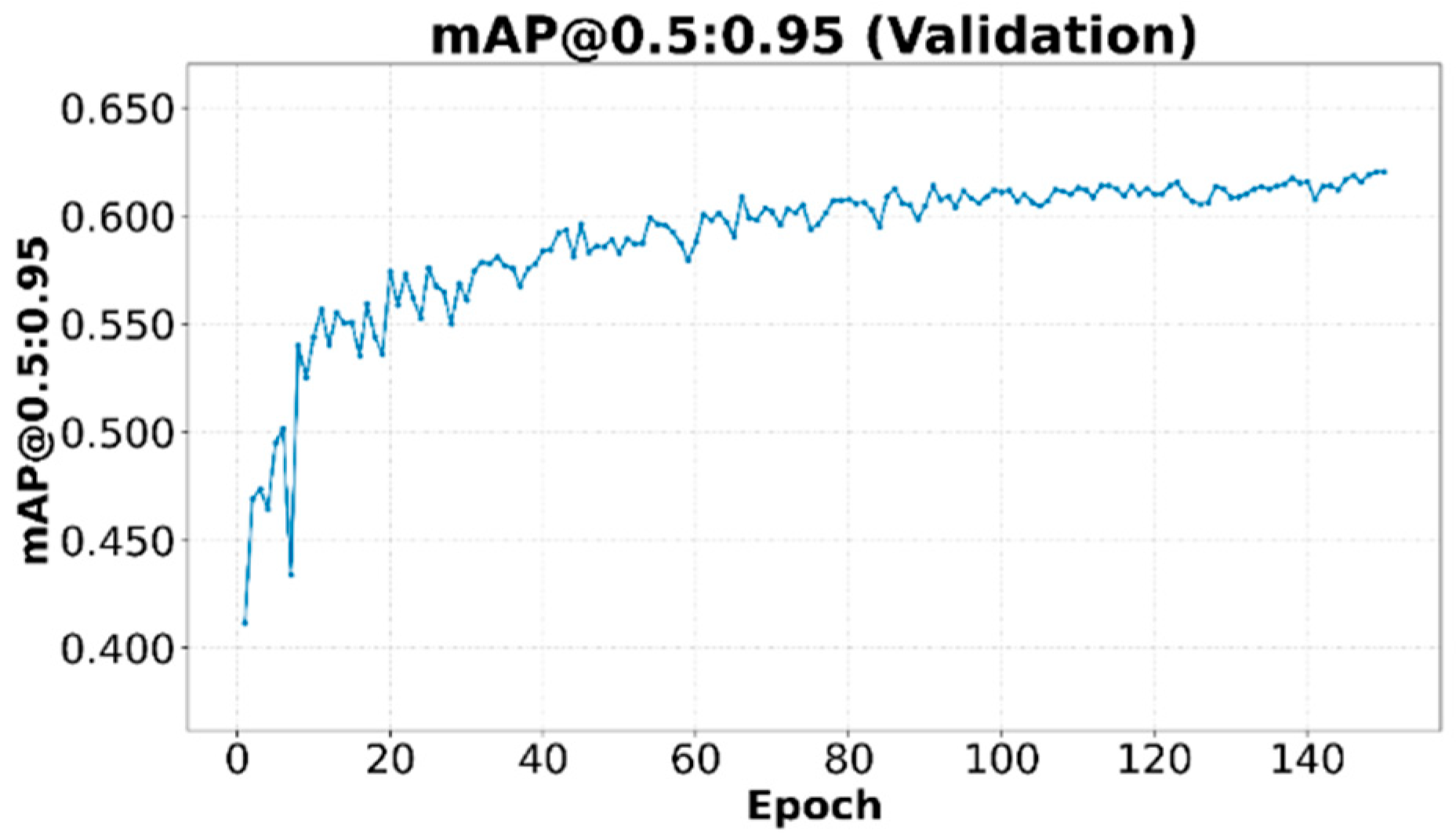

3.2. Confusion Matrix

3.2.1. Confusion Matrix on the Validation Set

3.2.2. Confusion Matrix on the Test Set
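In a detection confusion matrix, an extra background class captures predictions that match no labeled object (false positives) and labeled objects that no prediction matched (missed detections). The sketch below builds such a matrix from already matched (true, predicted) pairs, with rows for predicted classes and columns for true classes; this layout and the use of `None` for background are assumptions for illustration.

```python
CLASSES = ["attenuation_value", "negative_pulse", "pd_level_value",
           "positive_pulse", "voltaje_value"]


def detection_confusion_matrix(pairs, classes=CLASSES):
    """Return an (n+1) x (n+1) count matrix; the last row/column is background.

    pairs: [(true_cls, pred_cls)]; an unmatched prediction has true_cls=None,
    a missed object has pred_cls=None.
    """
    idx = {c: i for i, c in enumerate(classes)}
    bg = len(classes)
    m = [[0] * (bg + 1) for _ in range(bg + 1)]
    for true_cls, pred_cls in pairs:
        col = idx[true_cls] if true_cls is not None else bg
        row = idx[pred_cls] if pred_cls is not None else bg
        m[row][col] += 1
    return m
```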

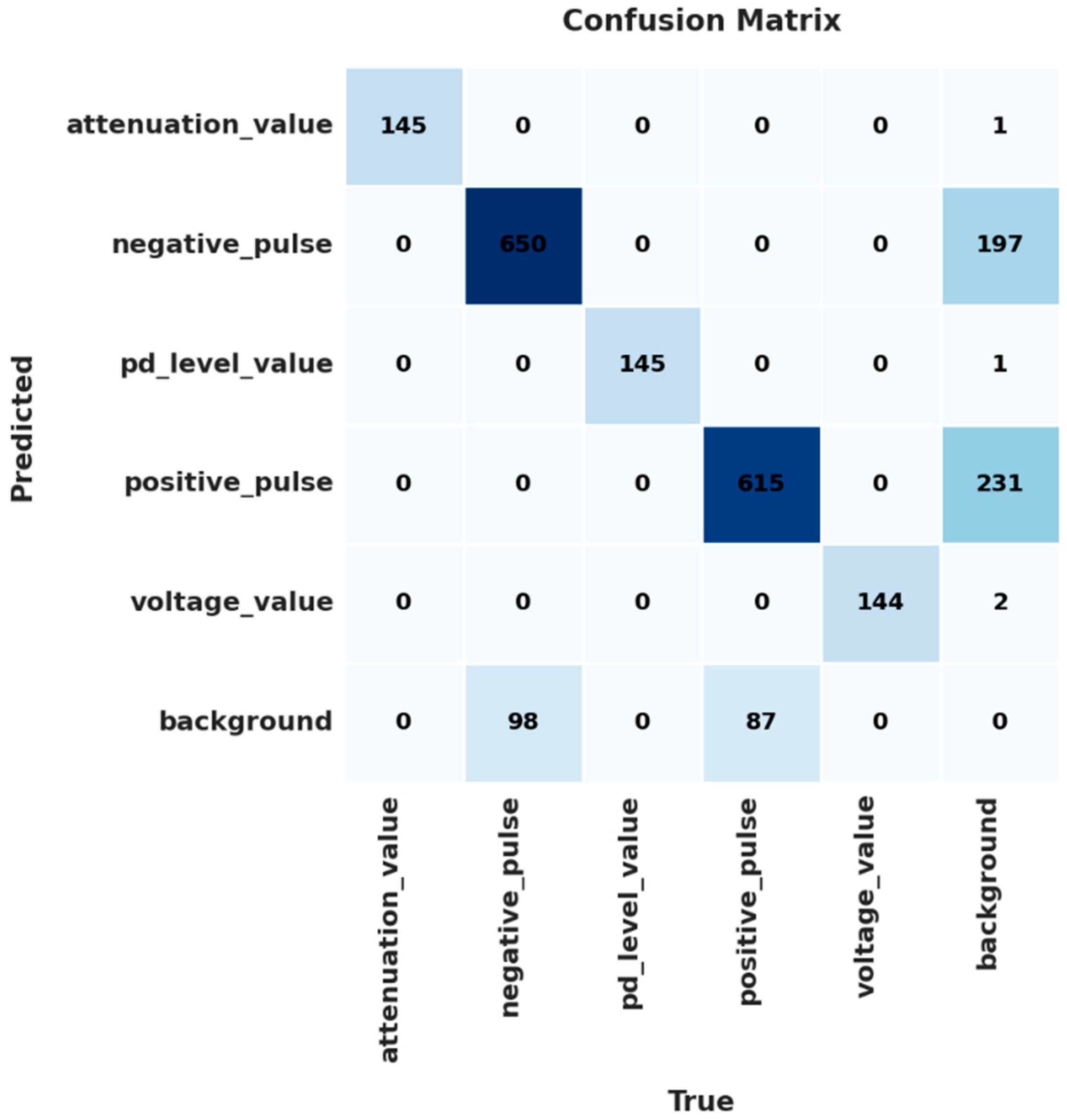

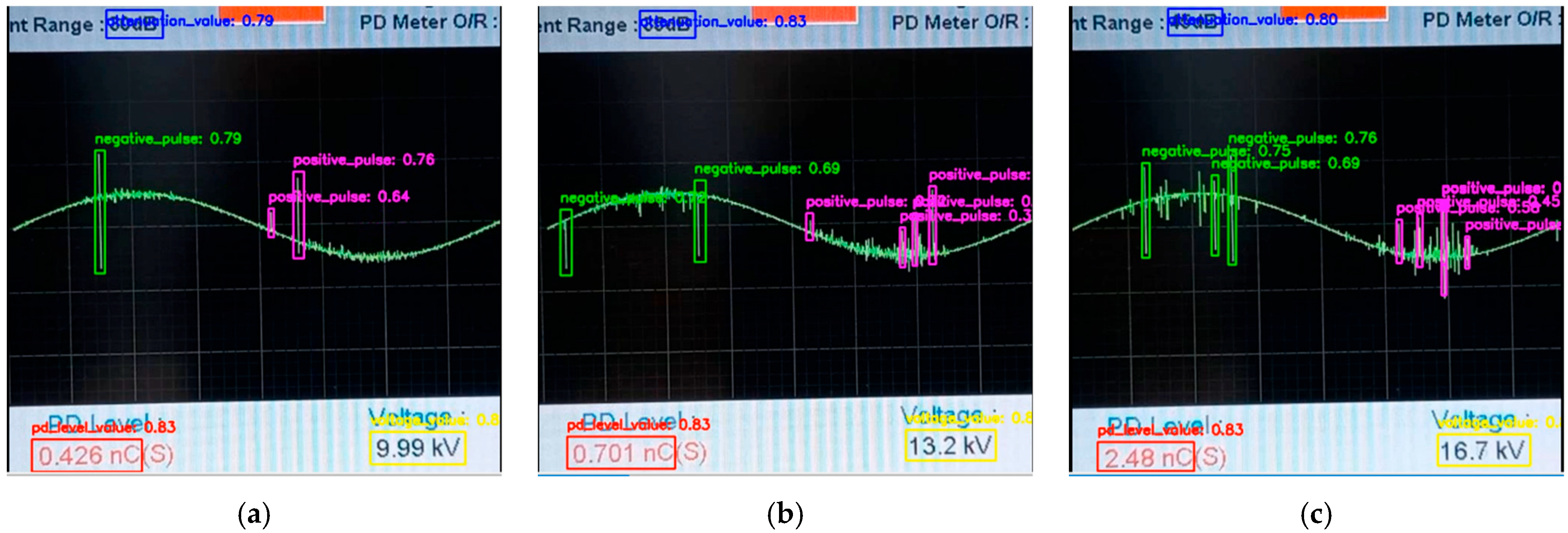

3.3. Inference in Operational Scenarios

3.3.1. Inference Flowchart
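As a sketch of one plausible per-frame step in this inference flow, the code below turns the detector output for one DDX frame into a structured row: it counts pulse detections and passes the most confident crop of each numeric field to an OCR routine. In practice the detections could be read from the `boxes` of an Ultralytics `Results` object; here they are plain tuples, `ocr` is a hypothetical callable standing in for whichever OCR engine is used, and `num_pulse_positives` and `min_conf` are illustrative names and values.

```python
from collections import Counter


def frame_record(detections, ocr, min_conf=0.5):
    """Build one structured row from a DDX frame's detections.

    detections: [(class_name, confidence, crop)], crop being the image region of the box.
    ocr: hypothetical callable that reads a number from a crop.
    """
    kept = [d for d in detections if d[1] >= min_conf]
    counts = Counter(name for name, _conf, _crop in kept)
    row = {
        "num_pulse_negatives": counts["negative_pulse"],
        "num_pulse_positives": counts["positive_pulse"],  # hypothetical column
    }
    # Read each numeric field from its most confident box, if any was found.
    for cls_name, field in (("pd_level_value", "ocr_pd_level"),
                            ("voltaje_value", "ocr_voltage")):
        boxes = [d for d in kept if d[0] == cls_name]
        row[field] = ocr(max(boxes, key=lambda d: d[1])[2]) if boxes else None
    return row
```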

3.3.2. Results and Discussion

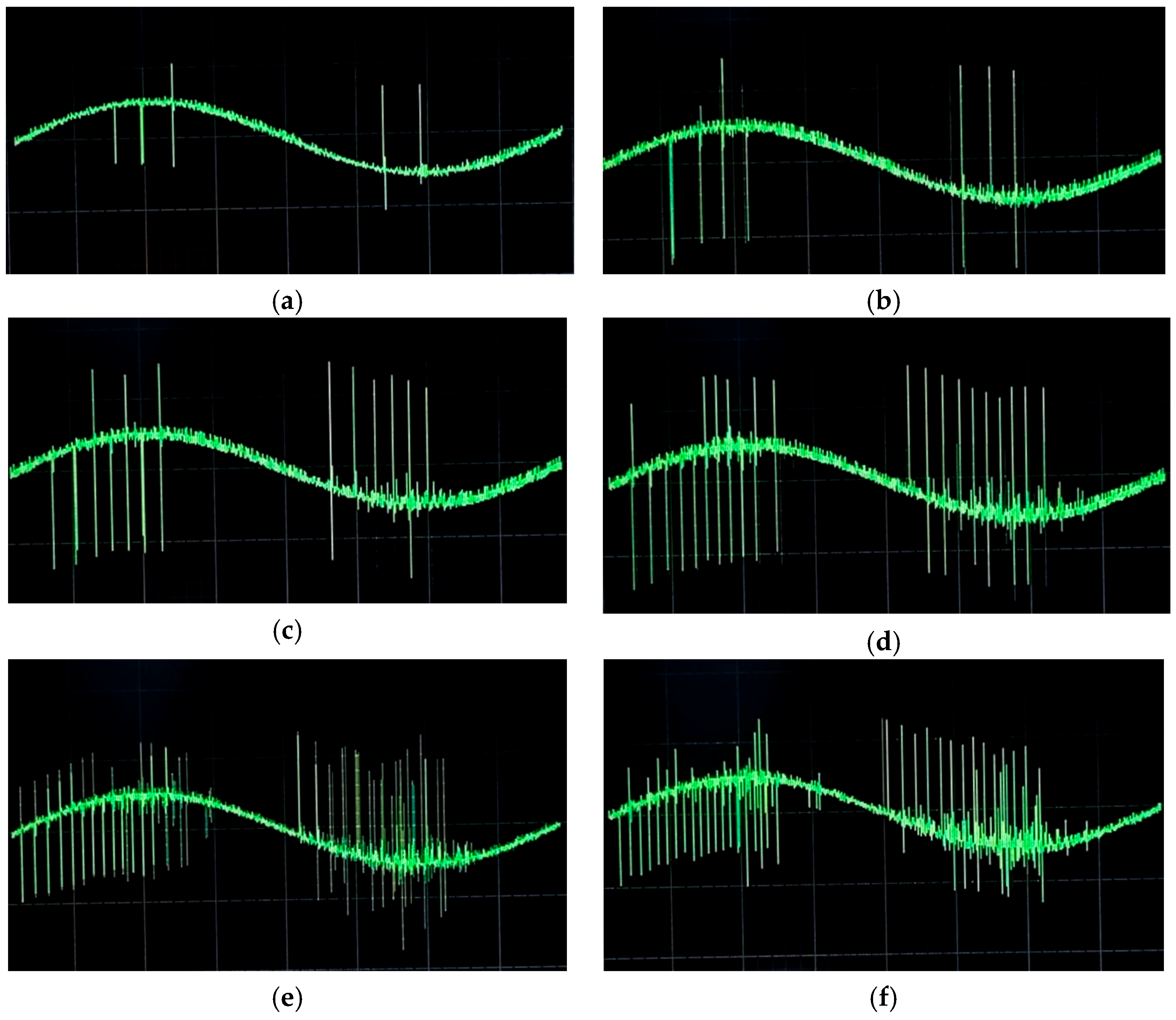

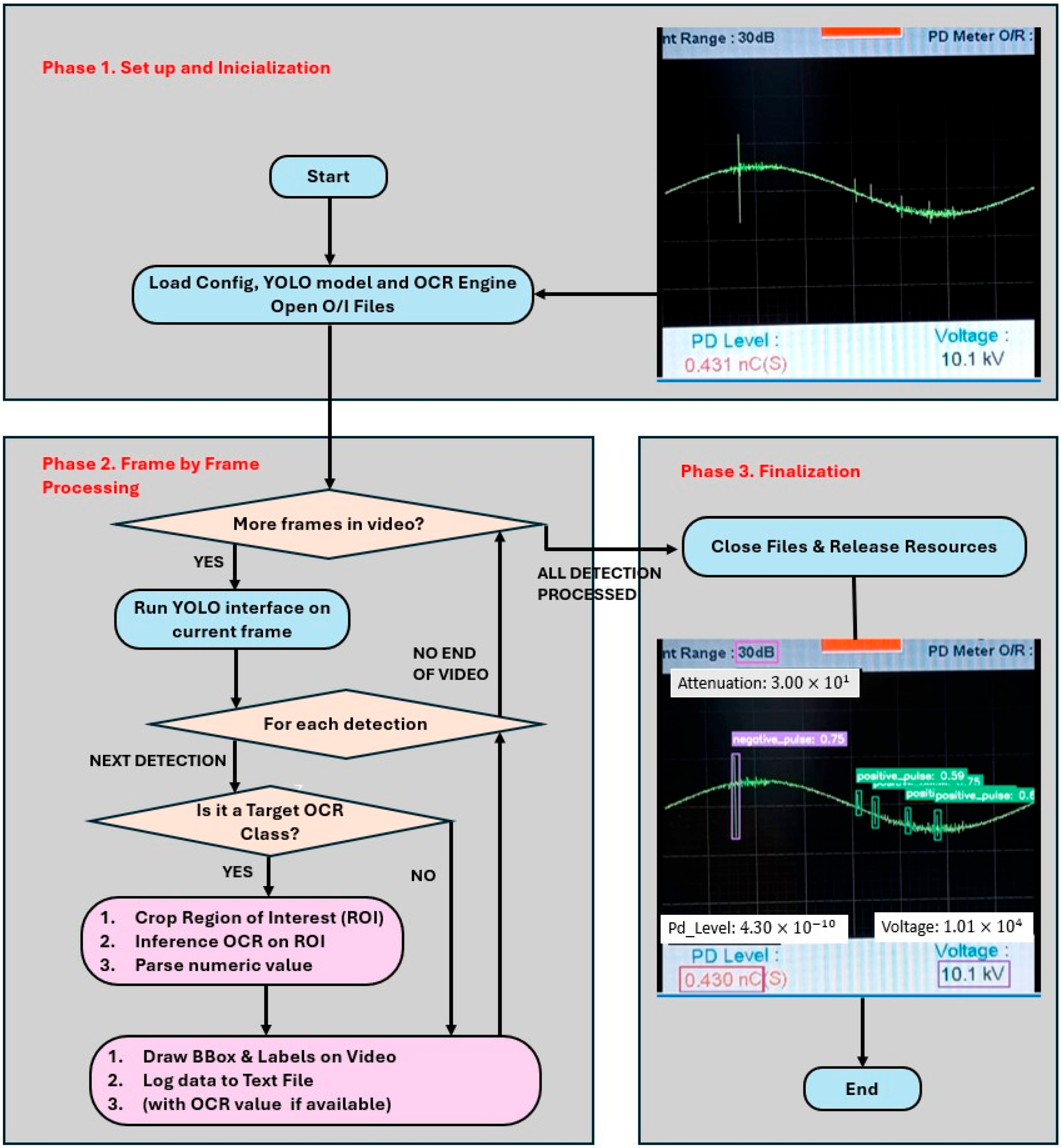

- At 10 kV, the model detects moderate discharge activity, with well-defined but relatively compact clusters of negative pulses (green) and positive pulses (magenta). This corresponds to an instrument reading of PD level 0.426 nC at 10.1 kV (Figure 17a).

- At 13 kV, the density and spatial extent of the detections increase significantly. Both the negative and positive cumulative pulse clusters are visibly larger and denser. This increased visual activity correlates directly with the higher discharge level measured by the instrument, which now reads PD level 0.701 nC at 13.1 kV (Figure 17b).

- At 16 kV, the phenomenon intensifies dramatically. The cumulative image shows a much larger and more saturated area of activity, indicating a very severe PD regime. This steep, nonlinear increase in visual activity is consistent with the instrument reading, which reaches a PD level of 3.88 nC at 16.6 kV (Figure 17c).
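The instrument values above reach the pipeline as OCR strings. The sketch below shows one tolerant way to parse them (assumed formats such as `0.426 nC` or `10,1 kV`, with a comma accepted as decimal separator) and computes the step-to-step ratio of the PD level, using only the three readings quoted in this section.

```python
import re

# First signed decimal number; a comma is accepted as the decimal separator.
_NUMBER = re.compile(r"[-+]?\d+(?:[.,]\d+)?")


def parse_reading(text):
    """Return the first number in an OCR string such as '0.426 nC', or None."""
    match = _NUMBER.search(text)
    return float(match.group().replace(",", ".")) if match else None


# The three instrument readings quoted above (Figure 17a-c).
readings = [("0.426 nC", "10.1 kV"), ("0.701 nC", "13.1 kV"), ("3.88 nC", "16.6 kV")]
previous = None
for pd_text, kv_text in readings:
    pd_nc, kv = parse_reading(pd_text), parse_reading(kv_text)
    note = "" if previous is None else f"  ratio to previous step: {pd_nc / previous:.2f}"
    print(f"{kv:5.1f} kV  {pd_nc:.3f} nC{note}")
    previous = pd_nc
```

It reports ratios of about 1.65 (10 to 13 kV) and 5.53 (13 to 16 kV), which quantifies the sharp rise in apparent charge at the highest voltage.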

4. Method 2: Optical Characterization of PDs via Computer Vision

4.1. CNN Training

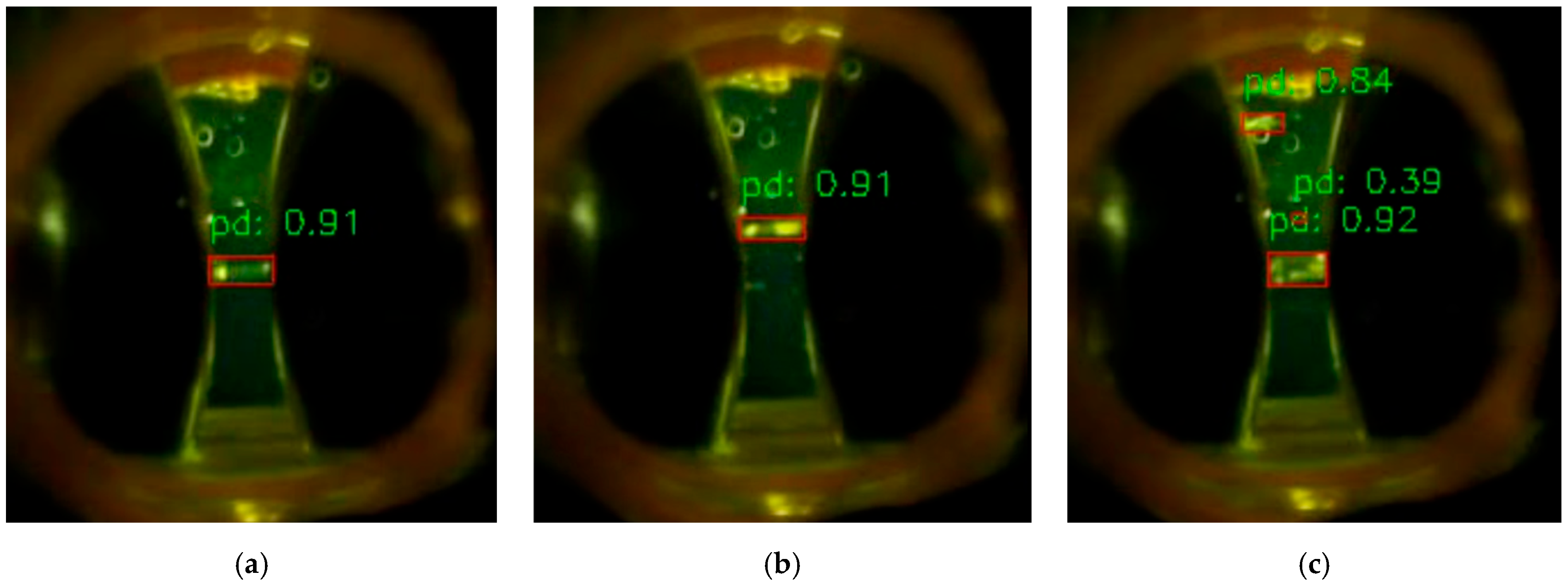

4.1.1. Semi-Automatic Generation of the Dataset

Configuration and Initialization

PD Detection and Extraction
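The detection step (background subtraction, binarization, boundary extraction) can be sketched without OpenCV as follows. A static reference frame stands in for a background subtractor such as `cv2.createBackgroundSubtractorMOG2`, a fixed threshold binarizes the difference, and a 4-connected flood fill stands in for `cv2.findContours`. The threshold value and the static-background assumption are illustrative, not the authors' settings.

```python
from collections import deque

import numpy as np


def detect_blobs(frame, background, thresh=30):
    """Find connected regions where `frame` differs from a static `background`.

    frame, background: uint8 arrays of shape (H, W, 3).
    Returns (blobs, mask); each blob has a pixel bbox (x, y, w, h), an area in
    pixels and the list of its (row, col) pixels.
    """
    # Background subtraction: largest per-channel absolute difference.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16)).max(axis=2)
    mask = diff > thresh                      # binarization
    seen = np.zeros_like(mask)
    h, w = mask.shape
    blobs = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        # Flood fill (4-connectivity) collects one connected region.
        seen[y0, x0] = True
        queue, pixels = deque([(int(y0), int(x0))]), []
        while queue:
            y, x = queue.popleft()
            pixels.append((y, x))
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        ys, xs = zip(*pixels)
        blobs.append({"bbox": (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1),
                      "area": len(pixels), "pixels": pixels})
    return blobs, mask
```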

Filtering, Validation and Data Collection

- The bounding box.

- The centroid coordinates, area, and average RGB color intensity, which are written to a .dat text file for further analysis.

- A copy of the original, unprocessed frame together with the list of bounding boxes of all valid events found in it. This (image, labels) pair is one input sample in YOLOv8 format.
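For each candidate PD, the filtering and data-collection stage can be sketched as below: a minimum-area filter, a manual exclusion-zone filter, and one text line with the centroid, area and mean RGB color for the .dat file. The column order, the centroid-based zone test, and the assumption that the frame is already in RGB order (OpenCV loads frames as BGR) are illustrative choices.

```python
import numpy as np


def in_zone(cx, cy, zone):
    """True if the point (cx, cy) lies inside the rectangle zone = (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = zone
    return x1 <= cx <= x2 and y1 <= cy <= y2


def blob_record(frame_idx, frame_rgb, blob_mask, min_area, exclusion_zones):
    """Return a .dat line 'frame cx cy area R G B' for a valid PD, or None."""
    ys, xs = np.nonzero(blob_mask)
    area = len(xs)
    if area < min_area:
        return None                               # minimum-area filter
    cx, cy = xs.mean(), ys.mean()                 # centroid of the blob pixels
    if any(in_zone(cx, cy, z) for z in exclusion_zones):
        return None                               # manual exclusion-zone filter
    r, g, b = frame_rgb[ys, xs].mean(axis=0)      # mean color inside the blob
    return f"{frame_idx} {cx:.2f} {cy:.2f} {area} {r:.1f} {g:.1f} {b:.1f}"
```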

Generating the Dataset in YOLOv8 Format
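YOLOv8 label files store each box as normalized center coordinates and size. Converting a pixel box in the (x, y, width, height) form returned by bounding-rectangle routines is sketched below; the single class index 0 for PDs is an assumption.

```python
def to_yolo_line(cls_id, x, y, w, h, img_w, img_h):
    """Pixel box (top-left x, y, width w, height h) -> 'cls cx cy w h', normalized to [0, 1]."""
    cx = (x + w / 2) / img_w
    cy = (y + h / 2) / img_h
    return f"{cls_id} {cx:.6f} {cy:.6f} {w / img_w:.6f} {h / img_h:.6f}"
```

For example, a 20 x 10 pixel box at (100, 50) in a 200 x 100 image becomes `0 0.550000 0.550000 0.100000 0.100000`.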

Summary Flowchart of the Process

- Setup and loading: in this initial phase, all resources are prepared. The script reads the file paths, loads the video, and sets the manually defined exclusion zones, which are key to filtering out known false positives.

- Video processing loop: this is the core of the script. It operates frame by frame, performing two main tasks in sequence:

  - (a) PD detection and filtering: this block contains all the computer vision logic. It subtracts the background (see Figure 19a) to find what changes in the scene, binarizes the image, extracts the PD contours, and applies two filters: a minimum-area filter and the manual exclusion-zone filter.

  - (b) Temporary storage: if a frame contains at least one PD that has passed all filters, the script saves the original image of that frame together with the bounding-box coordinates of the valid PDs (see Figure 19b,c).

- YOLOv8 dataset generation: once the entire video has been analyzed, this final phase takes all the valid data collected and organizes it into the folder structure and file formats required by YOLOv8. This includes splitting the data into training/validation/test sets, normalizing the coordinates, and creating the .yaml configuration file.
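The final phase writes the dataset configuration. A minimal `data.yaml` of the kind Ultralytics accepts, with class names given as an index-to-name mapping, can be produced as below; the root folder, the class name `pd` and the folder layout are hypothetical.

```python
def data_yaml_text(root, class_names):
    """Minimal dataset config; images and labels are expected under
    root/images/{train,val,test} and root/labels/{train,val,test}."""
    lines = [f"path: {root}",
             "train: images/train",
             "val: images/val",
             "test: images/test",
             "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


print(data_yaml_text("pd_dataset", ["pd"]))
```

Writing it to disk is then a single call, for example `Path("pd_dataset/data.yaml").write_text(data_yaml_text("pd_dataset", ["pd"]))` after `from pathlib import Path`.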

4.1.2. Training Results

- Training set: 2967 images.

- Validation set: 982 images.

- Test set: 508 images.

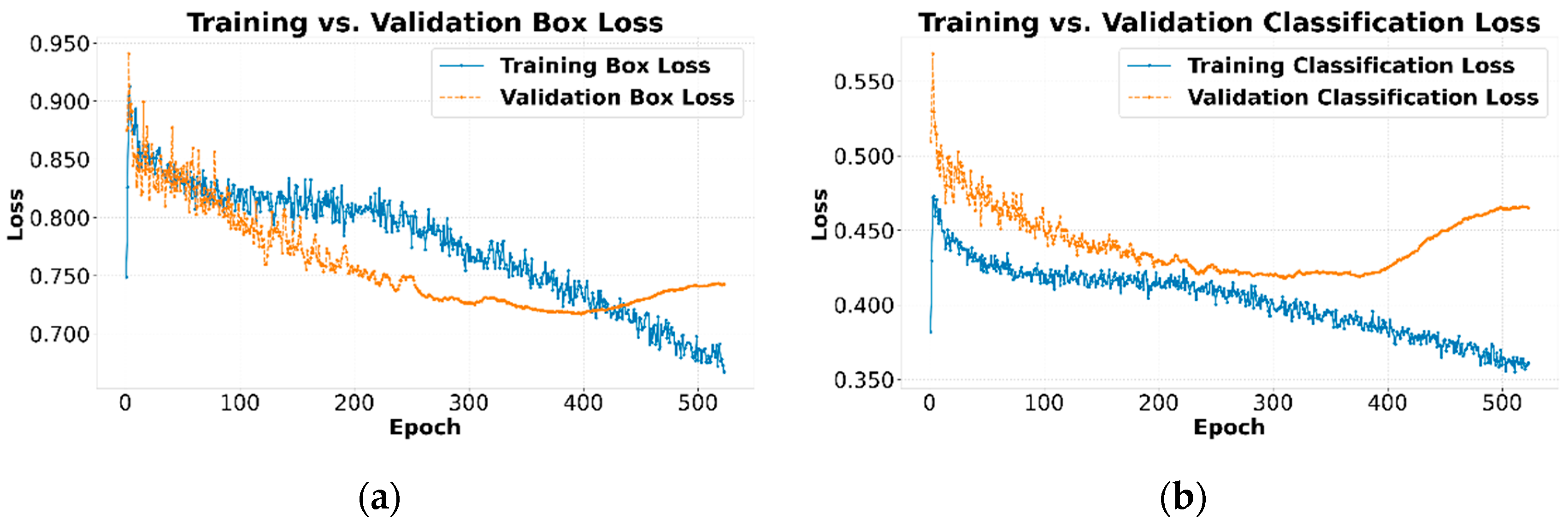

4.2. Inference in Operational Scenarios

- (a)

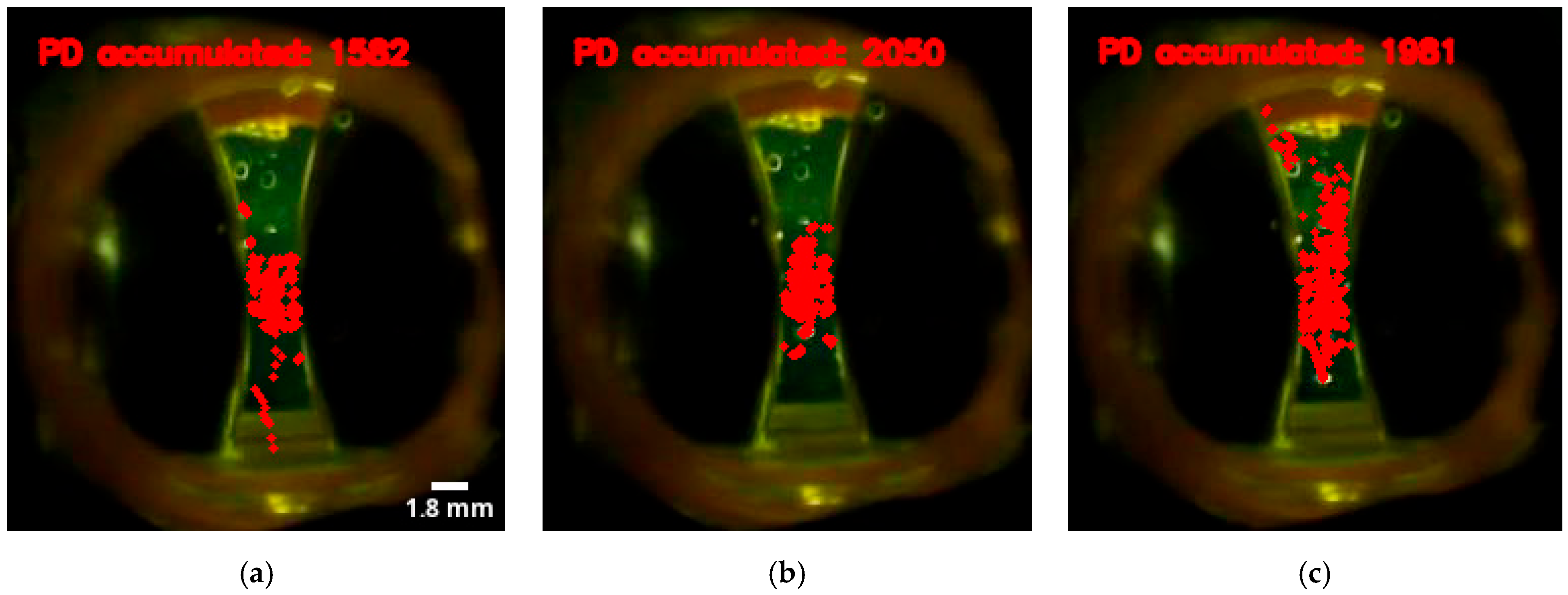

- Correlation between voltage and discharge activity: there is a clear relationship between the voltage applied to the electrodes and the number of detected PDs. At 10 kV, 1582 PDs were accumulated (Figure 23a). As the voltage is increased to 13 kV, the activity increases significantly, recording 2050 PDs (Figure 23b). However, at 16 kV, the total number of detected PD drops slightly to 1981 (Figure 23c). A reasonable hypothesis for this small decrease is that at higher energies the PDs are larger and may merge, being detected by the model as a single PD with a larger area instead of multiple smaller PDs.

- (b)

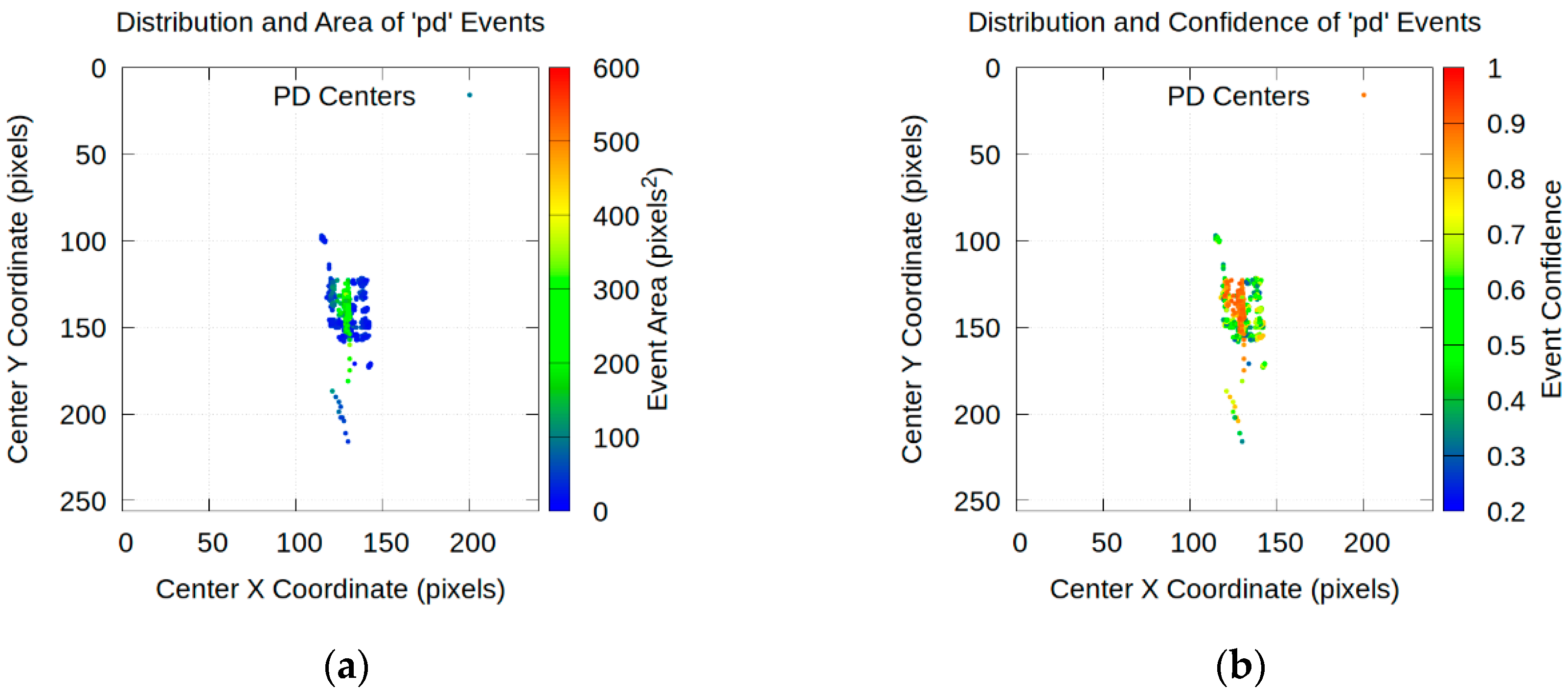

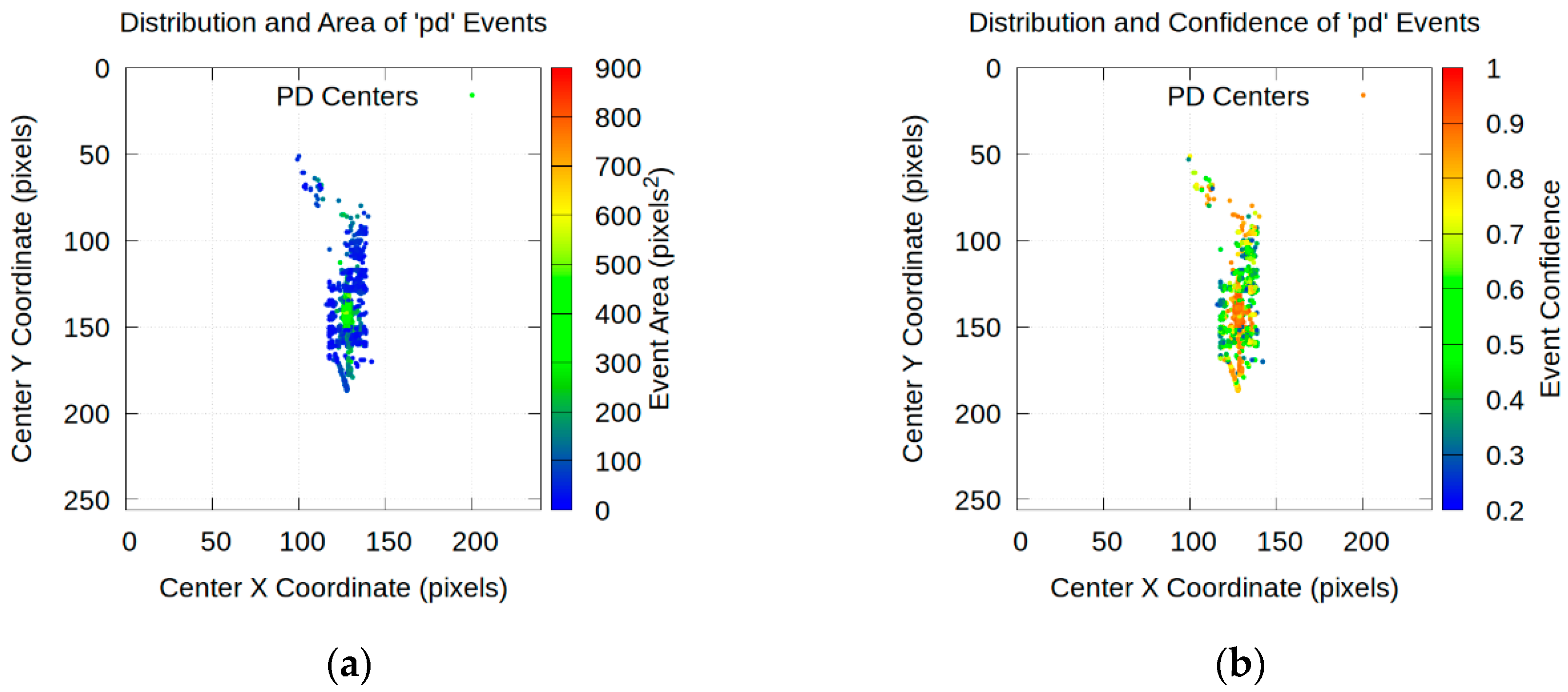

- Spatial expansion of activity: the scatter plots shown in Figure 24, Figure 25 and Figure 26 visually confirm that the area of discharge activity expands with increasing voltage. The cluster of points, initially highly concentrated in the dielectric space at 10 kV, expands both vertically and horizontally at 13 kV and, more pronouncedly, at 16 kV. This suggests that at higher voltage levels in the dielectric, PDs are not only more frequent but also occupy a larger volume.

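The observations above can be quantified with a few summary statistics per voltage level: the number of detections and the spread of the PD centroids. The sketch below computes them for a list of centroid coordinates (the input format is an assumption) and the relative change in the PD totals reported in this section.

```python
from statistics import pstdev


def activity_summary(centroids):
    """Count and spatial spread of PD centroids [(x, y), ...] at one voltage level."""
    xs = [x for x, _ in centroids]
    ys = [y for _, y in centroids]
    return {
        "count": len(centroids),
        "std_x": pstdev(xs),             # horizontal spread (population std. dev.)
        "std_y": pstdev(ys),             # vertical spread
        "extent_x": max(xs) - min(xs),   # width of the occupied region
        "extent_y": max(ys) - min(ys),   # height of the occupied region
    }


def relative_change(before, after):
    return (after - before) / before


totals = {10: 1582, 13: 2050, 16: 1981}  # PD totals reported for Figure 23a-c
print(f"10 -> 13 kV: {relative_change(totals[10], totals[13]):+.1%}")
print(f"13 -> 16 kV: {relative_change(totals[13], totals[16]):+.1%}")
```

With these totals it prints +29.6% for 10 to 13 kV and -3.4% for 13 to 16 kV.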

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Saravanan, B.; Kumar, P.; Vengateson, A. Benchmarking Traditional Machine Learning and Deep Learning Models for Fault Detection in Power Transformers. arXiv 2025, arXiv:2505.06295.

2. Zhang, R.; Zhang, Q.; Zhou, J.; Wang, S.; Sun, Y.; Wen, T. Partial Discharge Characteristics and Deterioration Mechanisms of Bubble-Containing Oil-Impregnated Paper. IEEE Trans. Dielectr. Electr. Insul. 2022, 29, 1282–1289.

3. IS IEC 60270:2000+AMD1:2015 CSV; High-Voltage Test Techniques – Partial Discharge Measurements. Edition 3.1, 2015-11. Consolidated Version. International Electrotechnical Commission: Geneva, Switzerland, 2015.

4. Thobejane, L.T.; Thango, B.A. Partial Discharge Source Classification in Power Transformers: A Systematic Literature Review. Appl. Sci. 2024, 14, 6097.

5. Madhar, S.A.; Mor, A.R.; Mraz, P.; Ross, R. Study of DC Partial Discharge on Dielectric Surfaces: Mechanism, Patterns and Similarities to AC. Int. J. Electr. Power Energy Syst. 2021, 126, 106600.

6. Wotzka, D.; Sikorski, W.; Szymczak, C. Investigating the Capability of PD-Type Recognition Based on UHF Signals Recorded with Different Antennas Using Supervised Machine Learning. Energies 2022, 15, 3167.

7. Sikorski, W. Development of Acoustic Emission Sensor Optimized for Partial Discharge Monitoring in Power Transformers. Sensors 2019, 19, 1865.

8. Ren, M.; Zhou, J.; Song, B.; Zhang, C.; Dong, M.; Albarracín, R. Towards Optical Partial Discharge Detection with Micro Silicon Photomultipliers. Sensors 2017, 17, 2595.

9. Riba, J.-R.; Gómez-Pau, Á.; Moreno-Eguilaz, M. Experimental Study of Visual Corona under Aeronautic Pressure Conditions Using Low-Cost Imaging Sensors. Sensors 2020, 20, 411.

10. Monzón-Verona, J.M.; González-Domínguez, P.; García-Alonso, S. Characterization of Partial Discharges in Dielectric Oils Using High-Resolution CMOS Image Sensor and Convolutional Neural Networks. Sensors 2024, 24, 1317.

11. Xia, C.; Ren, M.; Chen, R.; Yu, J.; Li, C.; Chen, Y.; Wang, K.; Wang, S.; Dong, M. Multispectral Optical Partial Discharge Detection, Recognition, and Assessment. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

12. Guo, J.; Zhao, S.; Huang, B.; Wang, H.; He, Y.; Zhang, C.; Zhang, C.; Shao, T. Identification of Partial Discharge Based on Composite Optical Detection and Transformer-Based Deep Learning Model. IEEE Trans. Plasma Sci. 2024, 52, 4935–4942.

13. Shahsavarian, T.; Pan, Y.; Zhang, Z.; Pan, C.; Naderiallaf, H.; Guo, J.; Li, C.; Cao, Y. A Review of Knowledge-Based Defect Identification via PRPD Patterns in High Voltage Apparatus. IEEE Access 2021, 9, 77705–77728.

14. Khan, M.A.M. AI and Machine Learning in Transformer Fault Diagnosis: A Systematic Review. Am. J. Adv. Technol. Eng. Solut. 2025, 1, 290–318.

15. Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor Data Fusion: A Review of the State-of-the-Art. Inf. Fusion 2013, 14, 28–44.

16. Deng, X.; Jiang, Y.; Yang, L.T.; Lin, M.; Yi, L.; Wang, M. Data Fusion Based Coverage Optimization in Heterogeneous Sensor Networks: A Survey. Inf. Fusion 2019, 52, 90–105.

17. Xing, Z.; He, Y. Multi-Modal Information Analysis for Fault Diagnosis with Time-Series Data from Power Transformer. Int. J. Electr. Power Energy Syst. 2023, 144, 108567.

18. Yin, K.; Wang, Y.; Liu, S.; Li, P.; Xue, Y.; Li, B.; Dai, K. GIS Partial Discharge Pattern Recognition Based on Multi-Feature Information Fusion of PRPD Image. Symmetry 2022, 14, 2464.

19. Abubakar, A.; Zachariades, C. Phase-Resolved Partial Discharge (PRPD) Pattern Recognition Using Image Processing Template Matching. Sensors 2024, 24, 3565.

20. Dempster, A.P. Upper and Lower Probabilities Induced by a Multivalued Mapping. In Classic Works of the Dempster-Shafer Theory of Belief Functions; Yager, R.R., Liu, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 57–72.

21. Sentz, K.; Ferson, S. Combination of Evidence in Dempster-Shafer Theory; United States Department of Energy: Washington, DC, USA, 2002.

22. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

23. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

24. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

25. Opencv-Python 4.12.0.88. Available online: https://pypi.org/project/opencv-python/ (accessed on 21 July 2025).

26. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

27. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

28. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

29. Ultralytics. Ultralytics/Yolov5: V7.0–YOLOv5 SOTA Realtime Instance Segmentation; Ultralytics: Frederick, MD, USA, 2022.

30. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

31. Jocher, G.; Qiu, J.; Chaurasia, A. Ultralytics YOLO. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 30 August 2025).

32. Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

33. Alif, M.A.R.; Hussain, M. YOLOv1 to YOLOv10: A Comprehensive Review of YOLO Variants and Their Application in the Agricultural Domain. arXiv 2024, arXiv:2406.10139.

34. Kang, S.; Hu, Z.; Liu, L.; Zhang, K.; Cao, Z. Object Detection YOLO Algorithms and Their Industrial Applications: Overview and Comparative Analysis. Electronics 2025, 14, 1104.

35. Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2024, arXiv:2305.09972.

36. Raspberry Pi HQ Camera. Available online: https://www.raspberrypi.com/documentation/accessories/camera.html#hq-camera (accessed on 17 June 2025).

37. Rasband, W. ImageJ. 1997. Available online: https://imagej.net/ij/ (accessed on 21 July 2025).

38. Roboflow. Available online: https://www.roboflow.com (accessed on 21 July 2025).

39. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

40. Janocha, K.; Czarnecki, W.M. On Loss Functions for Deep Neural Networks in Classification. arXiv 2017, arXiv:1702.05659.

41. Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized Focal Loss: Learning Qualified and Distributed Bounding Boxes for Dense Object Detection. arXiv 2020, arXiv:2006.04388.

42. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

43. Che, Q.; Wen, H.; Li, X.; Peng, Z.; Chen, K.P. Partial Discharge Recognition Based on Optical Fiber Distributed Acoustic Sensing and a Convolutional Neural Network. IEEE Access 2019, 7, 101758–101764.

44. Rodgers, J.L.; Nicewander, W.A. Thirteen Ways to Look at the Correlation Coefficient. Am. Stat. 1988, 42, 59–66.

| Approach | Sensors/Data Source | Information Obtained | Key Limitations |
|---|---|---|---|
| Traditional electrical (IEC 60270) | Coupling capacitor, Measuring impedance [3] | Quantitative (Apparent Charge) | No spatial/morphological info. Requires expert interpretation. |
| Acoustic & UHF Fusion | Acoustic sensors, UHF antennas [4] | Localization, Event detection | Indirect correlation with charge magnitude. Can be affected by noise/barriers. |
| Optical (e.g., SiPMs, multispectral cameras) | Optical [8,11] | High sensitivity, Morphological | Often qualitative. Less direct quantification of electrical severity. |
| Our proposed method | DDX detector screen as image source and High-Resolution CMOS camera | Quantitative (charge, voltage) and Spatial & Morphological | Discussed in Section 2 |

| Attribute 1 | Attribute 2 | Coefficient (r) |
|---|---|---|
| Strong positive correlations (r > 0.7) | | |
| ocr_voltage | ocr_pd_level | 0.90 |
| num_pulse_negatives | ocr_voltage | 0.77 |
| Area | Height | 0.90 |
| Area | Width | 0.78 |
| CenterX | CenterY | 0.77 |
| Significant negative correlations (r < −0.3) | | |
| ocr_voltage | Width | −0.41 |
| ocr_pd_level | Width | −0.39 |
| num_pulsos_negativos | Width | −0.34 |
| Other moderate positive correlations (0.5 < r < 0.7) | | |
| num_pulse_negatives | ocr_pd_level | 0.59 |
| Confidence | Width | 0.55 |
| Area | Confidence | 0.53 |
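The coefficients in this table appear to be Pearson correlation coefficients between pairs of fused attributes (see Rodgers and Nicewander in the references). A minimal sketch that computes r and lists the pairs above a threshold is shown below; the column dictionary is a hypothetical stand-in for the fused per-detection table, and constant columns must be removed first, since r is undefined for them.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)  # division by zero for a constant column


def strong_pairs(columns, threshold=0.7):
    """Yield (name_a, name_b, r) for every attribute pair with |r| > threshold."""
    for a, b in combinations(columns, 2):
        r = pearson(columns[a], columns[b])
        if abs(r) > threshold:
            yield a, b, round(r, 2)
```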

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Monzón-Verona, J.M.; García-Alonso, S.; Santana-Martín, F.J. Fusion of Electrical and Optical Methods in the Detection of Partial Discharges in Dielectric Oils Using YOLOv8. Electronics 2025, 14, 3916. https://doi.org/10.3390/electronics14193916
