Abstract

Three-dimensional (3D) reconstruction from images has significantly advanced due to recent developments in deep learning, yet methodological variations and diverse application contexts pose ongoing challenges. This systematic review examines the state-of-the-art deep learning techniques employed for image-based 3D reconstruction from 2019 to 2025. Through an extensive analysis of peer-reviewed studies, predominant methodologies, performance metrics, sensor types, and application domains are identified and assessed. Results indicate multi-view stereo and monocular depth estimation as prevailing methods, while hybrid architectures integrating classical and deep learning techniques demonstrate enhanced performance, especially in complex scenarios. Critical challenges remain, particularly in handling occlusions, low-texture areas, and varying lighting conditions, highlighting the importance of developing robust, adaptable models. Principal conclusions highlight the efficacy of integrated quantitative and qualitative evaluations, the advantages of hybrid methods, and the pressing need for computationally efficient and generalizable solutions suitable for real-world applications.

1. Introduction

Three-dimensional (3D) reconstruction has emerged as a crucial area in computer vision, significantly impacting various fields, including autonomous navigation [1,2], underwater scenes [3,4], medical imaging [5,6], agriculture [7,8], civil engineering [9,10], and robotics [11,12], among others. These application scenarios span a wide range of conditions, from structured indoor environments to challenging domains such as underwater imaging, which introduce unique obstacles like refraction, turbidity, and low-contrast textures, prompting advances in preprocessing techniques like color correction [13] and denoising [14].

The capability of reconstructing accurate three-dimensional representations from images or video sequences enables better decision making, spatial understanding, and interaction with real-world environments. Recently, deep learning techniques have led to notable advancements in this domain [15,16], enabling more robust, precise, and computationally efficient solutions compared to traditional methods.

However, the rapid growth and diversity of deep learning-based approaches have led to widely varying performance and applicability, sparking ongoing debates regarding the best methodologies, metrics, and practical implementations. Although methods employing convolutional neural networks (CNNs) [17], transformers [18], and hybrid architectures [19] show significant promise, discrepancies exist regarding their efficacy, computational cost, and adaptability across different contexts. These divergent perspectives emphasize the need for systematic evaluations and comparative analyses of existing methods.

The objective of this study is to systematically review recent developments (2019–2025) in deep learning techniques applied to image-based 3D reconstruction. By rigorously analyzing peer-reviewed articles, we aim to identify predominant methodologies, application domains, and performance metrics utilized within the field. Our comprehensive review includes deep learning models applied to monocular, stereo, and multiview imaging systems, providing insights into the strengths and limitations of current approaches.

Ultimately, this work highlights critical trends and identifies gaps in existing research, contributing to the advancement and refinement of deep learning methodologies for 3D reconstruction tasks. Principal conclusions emphasize the importance of integrated quantitative and qualitative evaluations, the effectiveness of hybrid deep learning models, and the increasing need for computational efficiency and accuracy in real-world applications.

2. Methods

This section outlines our systematic approach to evaluating deep learning methods for 3D reconstruction using image-based systems. We describe the methodology applied in this review, including the inclusion and exclusion criteria used to identify relevant studies, the databases and search strategies used, and the screening, full-text evaluation, and data extraction procedures. Our aim is to ensure transparency and reproducibility, enabling other researchers to replicate and build upon this work in the field of computer vision and 3D scene understanding.

The central research question guiding this study is: What are the prevailing deep learning-based techniques employed for 3D reconstruction from image-based systems?

2.1. Data Selection

The period from 2019 to 2025 was selected for this study to capture the most recent advances in 3D reconstruction using deep learning techniques applied to image-based systems. This timeframe reflects the rapid evolution of computer vision architectures, datasets, and applications relevant to stereo vision, catadioptric cameras, and aerial imagery. By focusing on this period, we ensure the inclusion of innovative methodologies that align with the current state of the art in deep learning-based 3D scene understanding.

To complete this systematic review, we conducted a search for relevant articles following the exclusion and inclusion criteria listed below.

- Inclusion criteria:

- Articles that apply deep learning models for 3D reconstruction, depth estimation, or structure-from-motion tasks;

- Articles that use images or video frames (from monocular, stereo, catadioptric, omnidirectional, or drone-based cameras);

- Articles that aim to reconstruct or estimate 3D geometry, depth maps, disparity maps, or point clouds;

- Only peer-reviewed conference papers and journal articles;

- Articles written in English;

- Articles published from 2019 to 2025.

- Exclusion criteria:

- Articles not available in English, as this language is required to ensure understanding of the content and proper analysis;

- Articles published before 2019, in order to focus on recent advancements and relevance;

- Documents other than peer-reviewed conference papers and journal articles, including theses and reports, which are excluded to maintain a consistent level of academic rigor;

- Articles behind a high-cost paywall, to ensure that all selected studies were readily accessible for a comprehensive review.

The following search formulas were created, as shown in Table 1.

Table 1.

Search strings used for retrieving articles and theses from Google Scholar and Scopus databases.

2.2. Data Extraction

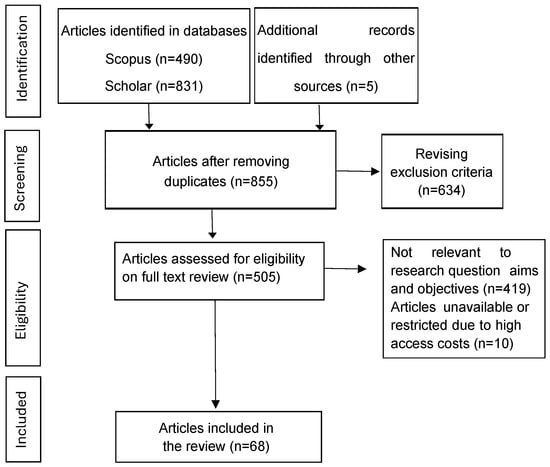

In June 2025, a search of the Scopus and Google Scholar databases returned 1321 articles. In addition, five relevant studies were identified through other sources, including expert recommendations and manual inspection of recently published literature. After removing duplicates, we were left with 855 articles. We screened the title and abstract of each article and excluded 634 articles that did not meet the initial criteria. We were left with 505 articles to be evaluated by full text. To reduce bias and provide accurate results, two independent reviewers assessed every article for eligibility. At this stage, 428 articles were excluded because they did not address the research question. Furthermore, 10 articles were excluded because they were not available on Scopus or were available only by subscription. Thus, 67 articles were left for the systematic review. Data extraction was aimed at collecting useful information, such as the deep learning models used, reported evaluation metrics, dataset details, and performance results. A predetermined data extraction form was used to ensure consistency and reliability across all included studies. Figure 1 provides a detailed description of these steps.

Figure 1.

PRISMA flow diagram illustrating the process of identifying, screening, and selecting articles from various databases, culminating in the inclusion of articles for the review.

3. Results

3.1. Evaluation Metrics

Quantitative evaluation under standardized metrics is a fundamental pillar for comparing the performance of 3D reconstruction models; nearly all reviewed articles report at least one quantitative metric to assess their models, reflecting a high degree of rigor in result validation. The main reported metrics are:

RMSE (Root Mean Square Error) is the most commonly used metric to evaluate depth estimation errors. However, its units and magnitude vary between studies. For example, Ref. [20] reports an RMSE of 0.430 m, while [21] achieves a much lower RMSE of 0.0091 m, indicating significantly higher accuracy. In contrast, [22] (RMSE of 2.4 mm) and [23] (RMSE of 1.84 mm) demonstrate millimeter-level precision, which is essential for high-resolution applications such as engineering or biomedicine.

MAE (Mean Absolute Error) is also reported: Ref. [24] obtains an MAE of 1.5 mm, while [23] presents a marginally lower MAE of 1.49 mm.
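As a minimal sketch of how these two depth-error metrics are usually computed, the following example evaluates RMSE and MAE on a small set of depth values. The function names and the sample depths are hypothetical and are not taken from any reviewed study.

```python
import math

def rmse(pred, gt):
    """Root mean square error between two equal-length sequences of depths."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(pred))

def mae(pred, gt):
    """Mean absolute error between two equal-length sequences of depths."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(pred)

# Hypothetical predicted and ground-truth depths (metres); one pixel is off by 2 m.
pred_depth = [1.0, 2.0, 3.0, 4.0]
gt_depth = [1.0, 2.0, 3.0, 6.0]

example_rmse = rmse(pred_depth, gt_depth)
example_mae = mae(pred_depth, gt_depth)
```

Because RMSE squares each residual before averaging, the single outlier in this toy example weighs more heavily in the RMSE than in the MAE, which is one reason the two metrics are often reported together.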

IoU (Intersection over Union) is primarily used in segmentation tasks. Ref. [25] reports an IoU of 0.8793, and [26] achieves 89.7% (0.897), both indicating high overlap between predictions and ground truth.

Dice Similarity Coefficient (DSC) is widely used in medical segmentation. For example, Ref. [27] reports a DSC of 0.912, suggesting excellent alignment between real and reconstructed anatomical structures.
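The two overlap metrics above are closely related. The sketch below computes both on flat binary masks, using hypothetical mask values and an assumed convention for the case where both masks are empty.

```python
def iou_and_dice(pred_mask, gt_mask):
    """Return (IoU, Dice) for two flat binary masks of equal length."""
    if len(pred_mask) != len(gt_mask):
        raise ValueError("masks must have the same length")
    inter = sum(1 for p, g in zip(pred_mask, gt_mask) if p and g)
    pred_n = sum(1 for p in pred_mask if p)
    gt_n = sum(1 for g in gt_mask if g)
    union = pred_n + gt_n - inter
    if union == 0:
        # Both masks are empty: treated here as perfect agreement (an assumed convention).
        return 1.0, 1.0
    return inter / union, 2 * inter / (pred_n + gt_n)

# Hypothetical six-pixel masks: two pixels overlap, each mask has three foreground pixels.
pred = [1, 1, 1, 0, 0, 0]
gt = [0, 1, 1, 1, 0, 0]
iou, dice = iou_and_dice(pred, gt)
```

For any pair of masks, Dice equals 2·IoU / (1 + IoU), so the Dice score is never lower than the IoU for the same prediction. Values of the two metrics should therefore not be compared directly across studies.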

Accuracy, Precision, and Recall are common metrics in classification and segmentation tasks. Ref. [28] achieves 0.88 in precision and 0.83 in recall, compared to its single-view baseline (precision: 0.74), highlighting the benefits of multi-view input.
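For reference, precision and recall can be derived from true-positive, false-positive, and false-negative counts as sketched below. The counts are hypothetical and are not the data behind the figures reported in [28].

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts; 0.0 when a ratio is undefined."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical counts: 8 correct detections, 2 spurious detections, 4 missed targets.
precision, recall = precision_recall(tp=8, fp=2, fn=4)
```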

Chamfer Distance (CD) is used in 3D mesh reconstruction. Ref. [29] achieves a CD of 0.000384, outperforming Pixel2Mesh++ (0.000471). Similarly, Ref. [30] reports a CD of 0.79 mm, which is better than PIFuHD or ICON.

The work presented in [31] compares several methods using RMSE: COLMAP (0.58 m), NeuralRecon (3.14 m), and MiDaS (10.24 m), which showcase significant precision differences between classical and modern techniques. Ref. [32] does not report any metrics, limiting its inclusion in objective comparisons. Refs. [33,34] report similar RMSEs (0.353 mm), but differ in model complexity (114.6M vs. 143M parameters), introducing additional factors such as computational efficiency.

Table 2 provides a consolidated view of the quantitative evidence reported by the primary studies included in our review. For each work, we list the exact performance metric(s) published by the authors: root mean square error (RMSE) and mean absolute error (MAE) for depth accuracy, intersection over union (IoU) and Dice similarity coefficient (DSC) for segmentation overlap, and the conventional classification scores of accuracy, precision, and recall. To enable direct comparison, all distance-based metrics were converted to meters and, where necessary, inverted so that lower values uniformly indicate better performance. Dashes denote metrics not reported by a given study.

Table 2.

Performance metrics reported across selected studies rounded to four decimal places.
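The unit normalization described for Table 2 can be sketched as follows. The conversion table and function name are assumptions made for illustration; the sample values are the RMSE figures quoted earlier in this section for [20], [22], and [23].

```python
# Conversion factors to metres (assumed unit labels; "um" stands for micrometres).
TO_METERS = {"m": 1.0, "cm": 1e-2, "mm": 1e-3, "um": 1e-6}

def to_meters(value, unit, ndigits=4):
    """Convert a distance-based metric to metres, rounded to `ndigits` decimals."""
    if unit not in TO_METERS:
        raise ValueError(f"unknown unit: {unit}")
    return round(value * TO_METERS[unit], ndigits)

# RMSE values as quoted in the text, with their reported units.
reported_rmse = {"[20]": (0.430, "m"), "[22]": (2.4, "mm"), "[23]": (1.84, "mm")}
rmse_in_meters = {ref: to_meters(v, u) for ref, (v, u) in reported_rmse.items()}
```

One consequence of rounding to four decimal places in metres is that differences below 0.1 mm are no longer visible, so sub-millimetre results may be better compared in their original units.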

Table 3 zooms in on the Chamfer distance. Because this metric measures the mean bidirectional distance between the predicted and ground-truth point clouds, smaller values indicate a more accurate surface reconstruction. For ease of comparison, we express all figures in millimeters.

Table 3.

Reported Chamfer distance (CD) across selected studies. All values are in millimeters (mm).

According to [29], their method achieves the lowest absolute CD of 0.384 mm, roughly 18% lower than the Pixel2Mesh++ baseline cited above. Similarly, Refs. [30,59] also achieve submillimeter CDs, indicating high accuracy in surface reconstruction. Ref. [58] also shows competitive performance in 3D shape detection, reducing the CD from 0.74 mm for its baseline to 0.68 mm. Although [55] reports only a relative rather than an absolute value, the study documents a relative performance gain of 23%, which is another indication of the method’s effectiveness.
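A brute-force sketch of the Chamfer distance between two small point clouds is given below. Conventions differ between papers (squared versus unsquared distances, sum versus average of the two directions), so this version, which averages the two directional means of unsquared Euclidean distances, is an assumption and not necessarily the variant used by the cited studies. The point coordinates are hypothetical.

```python
import math

def chamfer_distance(a, b):
    """Average of the mean nearest-neighbour distances a->b and b->a (unsquared)."""
    if not a or not b:
        raise ValueError("both point clouds must be non-empty")

    def one_way(src, dst):
        # For each source point, the distance to its closest point in dst.
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return 0.5 * (one_way(a, b) + one_way(b, a))

# Hypothetical predicted and ground-truth clouds (units are whatever the data use).
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
cd = chamfer_distance(predicted, ground_truth)
```

The nested loops cost O(N·M) distance evaluations, which is fine for illustration. Practical pipelines typically rely on spatial indexing or GPU batching for dense clouds.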

Quantitative metrics are crucial for evaluating 3D reconstruction models, but their validity also requires established benchmarks and qualitative evaluations. Most studies use a multifaceted approach that combines numerical metrics with qualitative validation. Understanding benchmarks and reference systems is essential. The KITTI Odometry Benchmark [60] provides real-world driving sequences with precise trajectories and depth maps, establishing a standard for comparing visual odometry and depth estimation methods in autonomous navigation. KITTI and NYU Depth v2 are standard datasets to evaluate the accuracy of monocular and stereo models. Although not a formal benchmark, COLMAP [61,62] serves as a reference to evaluate new methods in terms of precision and speed. Benchmarks enable reproducible comparisons of studies.

Experiments often employ standard baselines, such as Monodepth2 [63] and PackNet [64], for depth estimation tasks. Some studies demonstrate superior performance compared to these baselines. For example, Ref. [38] achieves 88.3% precision on the KITTI benchmark, a depth MAE of 0.72 m, and a processing speed of 28.4 FPS, making it suitable for embedded autonomous systems.

Similarly, Ref. [20] reports a better RMSE (4.632) compared to higher baseline values and 85.7% precision, demonstrating stereo vision capability. Ref. [65], focusing on efficiency, shows that its model is four times faster than COLMAP with similar accuracy.

In addition to numerical scores, several papers complement their analysis with qualitative ratings of visual outputs to facilitate intuitive perception of reconstruction quality. For example, Ref. [29] indicates sharper reconstructions with enhanced edge preservation, corroborated by superior Chamfer distance. Ref. [40] demonstrates uniform visual results under varying lighting conditions, and [50] reports structural completeness of 98% with millimeter spatial consistency.

Some research studies further represent their advances through combined visual and quantitative improvements. Ref. [66], for example, sees a 5.3-point increase in peak signal-to-noise ratio (PSNR) and a 21% reduction in RMSE with its HDR pipeline, with clearly superior visual detail. Ref. [21] achieves a 20% improvement in accuracy compared to CNN baselines, with reduced noise and smoother depth estimations. Likewise, Ref. [67] delivers a 44% improvement in RMSE with the addition of stereo data in a monocular architecture, demonstrating the capability of hybrid models.

Ref. [68] reports ADD(-S) scores of 97.45% and 84.38% on the Linemod and Occlusion Linemod datasets, respectively, indicating strong pose estimation accuracy.

Ref. [69] reports an absolute error not exceeding 8 μm and a standard deviation below 3 μm in 3D reconstruction tasks.

Ref. [70], for the NYUv2 dataset, achieves an RMSE of 0.131 in normalized units.

The quantitative evaluation of the SSSR-FPP system in [46] demonstrates high accuracy for 3D shape measurements. The root mean square (RMS) error for two standard ceramic spheres was reported as 77.74 μm and 57.34 μm, respectively, with a measured center-to-center distance of 100.21 mm, closely matching the ground truth of 100.0532 mm. These results confirm the system’s precision in capturing fine 3D details, with overall measurement accuracy better than 80 μm.

3.2. Emerging Research and Topical Landscape

Table 4 condenses the shifting technical priorities identified in the surveyed articles. Two patterns emerge; the first is bridging the lab–field gap. The most common emphasis is on real-world validation: datasets collected outdoors, on embedded hardware, or on-site robots, and comparisons against industry baselines. This trend signals a maturing field in which demonstrating robustness outside controlled benchmarks now outranks incremental metric gains.

Table 4.

Emerging technical focus areas in deep learning-based 3D reconstruction.

The second pattern is hybridisation over replacement. Rather than discarding geometry-aware heuristics, authors graft CNN/transformer modules onto well-established workflows to curb failure modes such as scale drift or matching in texture-poor areas.

Biomedical imaging, low-texture or repetitive-pattern handling, and resource-aware deployment (edge optimisation, compression) form a tight second tier.

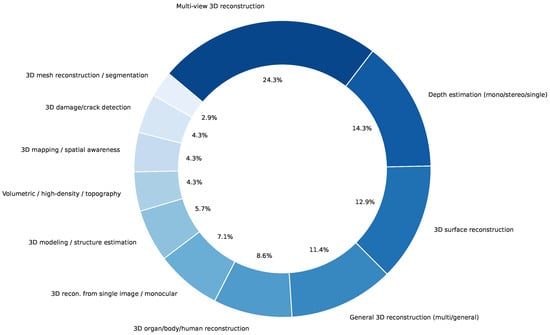

Figure 2 shows the distribution of 3D reconstruction tasks in the literature. Multiview 3D reconstruction is the most common at 24.3%, followed by monocular, stereo, and hybrid depth estimation at 14.3%, 3D surface reconstruction at 12.9%, and general-purpose reconstruction at 11.4% of the sample. Organ or human body and monocular single-image reconstructions are also notable at 8.6% and 7.1%, respectively. Less frequent tasks, such as structural modeling and crack detection, each account for 5.7% or less. This highlights a research focus on multiview and depth estimation approaches due to their versatility and ease of use with deep learning models.

Figure 2.

Percentage distribution of 3D reconstruction tasks reported in the literature.

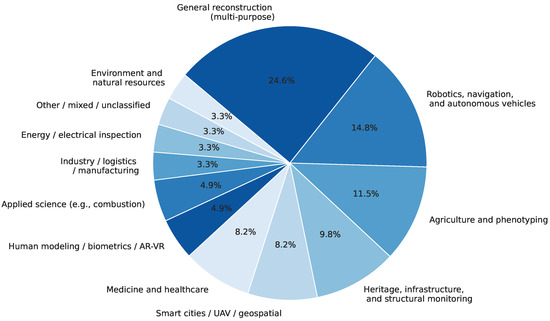

Figure 3 shows the frequency distribution of the application domain among the surveyed literature. The predominant category is general-purpose 3D reconstruction with 24.6% of the entries, reflecting the cross-domain and general applicability of reconstruction algorithms. Robotics, navigation, and autonomous vehicles are the second-largest domain, reflecting the importance of spatial knowledge in smart and mobile systems. Medicine, agriculture, and structural monitoring indicate the increased use of 3D reconstruction in high-impact areas such as healthcare, phenotyping, and infrastructure inspection. Additional areas with moderate representation are smart cities and geospatial analysis, and human modeling and AR/VR applications.

Figure 3.

Frequency distribution of application domains in 3D reconstruction literature.

3.3. Network Architectures Employed

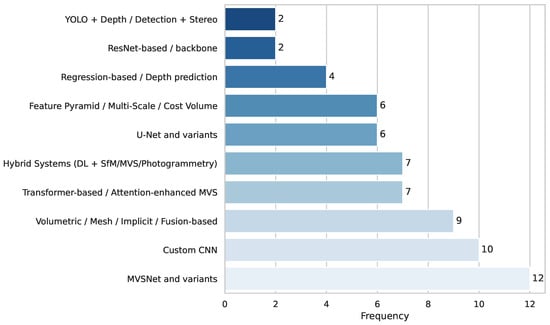

Several deep learning frameworks have been proposed and utilized for 3D reconstruction tasks, each designed to address specific challenges and data modalities. Such diversity is an indication of the emerging nature of the field, where architectural innovation is directed towards enhancing depth precision, scalability, and performance for diverse applications. Figure 4 shows the most widely used network architectures in the reviewed literature.

Figure 4.

Distribution of network architectures employed. Categories were grouped by architectural family or functional paradigm as reported by the authors.

Figure 4 illustrates the prevalence of architectures used in 3D reconstruction papers. Multi-view stereo network (MVSNet) and its variants are the most prevalent, as expected of a cornerstone technique in multi-view stereo reconstruction. Custom CNNs are also prevalent, indicating persistent efforts to optimize networks for specific datasets or constraints. Other common designs include volumetric and fusion models, as well as cost volume and multiscale feature designs, all of which address issues in 3D reasoning and depth estimation. Hybrid architectures, which combine classical photogrammetry or SfM techniques with deep learning, also appear, alongside transformer and U-Net architectures. Less frequently used categories include review/comparative studies, regression-based approaches, and ResNet- or You Only Look Once (YOLO)-based extensions, suggesting emerging or specialized use in the discipline.

3.4. Cameras or Sensor Types

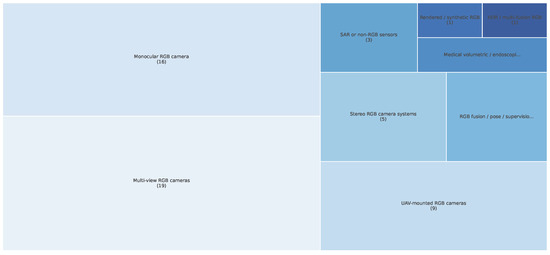

The selection of sensor and camera modalities is critical to the performance and application of 3D reconstruction systems. The literature reviewed in this work indicates a heavy bias towards RGB-image-based modalities, particularly multi-view and monocular camera configurations. RGB cameras on UAVs are also widely used, paralleling their growing adoption in aerial and geospatial applications. In contrast, highly specialized modalities such as SAR sensors or volumetric medical imaging appear far less often, reflecting their narrower application domains. These trends reveal the current state of data acquisition methods and their alignment with various reconstruction objectives. Figure 5 summarizes this distribution.

Figure 5.

Camera and sensor types in 3D reconstruction studies. Note: RGB-based sensors dominate the landscape, with limited representation from synthetic and non-visible spectrum modalities.

3.5. Principal Challenges

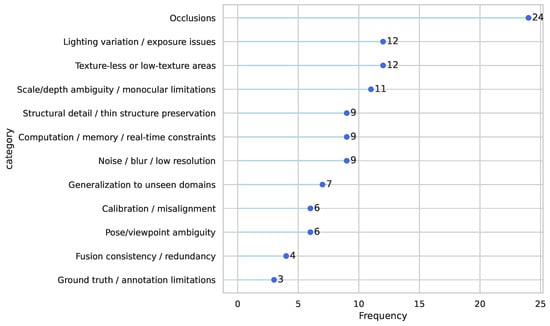

In the context of 3D reconstruction systems, numerous challenges hinder the development of accurate and efficient models. These challenges range from intrinsic issues in visual information, such as occlusions [24,28,50], textureless surfaces [71], and scale ambiguity [76], to technical issues, including computational demands [21,42] and real-time processing requirements [65]. There are also environmental challenges, such as changing lighting [66,71], and domain-specific issues like misalignment [83], annotation errors [22], and noise [84]. In addition, generalization to unknown domains remains a persistent challenge, especially for deployment beyond controlled laboratory settings [48,52]. These diverse challenges not only recur across the literature, but are also key to defining the performance and usefulness of reconstruction techniques. Figure 6 shows how often each challenge occurs in recent work, confirming the prominence of occlusions and low-texture scenes as major roadblocks to existing methods.

Figure 6.

Key challenges in 3D reconstruction systems.

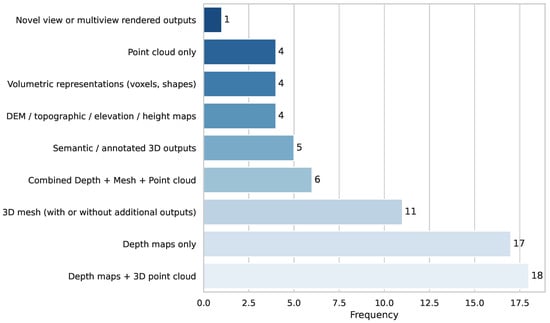

3.6. Output Formats in 3D Reconstruction Studies

The form in which reconstruction results are delivered plays a critical role in assessing the effectiveness and applicability of 3D reconstruction techniques. Depending on the intended use case, such as visualization, navigation, simulation, or quantitative analysis, different types of output may be required. The most commonly reported outputs across the reviewed literature are depth maps, either standalone or in conjunction with 3D point clouds, reflecting a focus on efficient and scalable depth estimation methods. In addition to these, more comprehensive representations, such as 3D meshes, volumetric structures, or semantic 3D scenes, are also presented, particularly in applications involving simulation, robotics, or scene understanding. Less frequently, studies produce topographic elevation maps or novel view-synthesis outputs, which are typically tailored to specific domains such as remote sensing or augmented reality. Figure 7 presents the distribution of the output formats reported in the analyzed studies, highlighting the dominance of depth-based and point-cloud representations in current 3D reconstruction research.

Figure 7.

Distribution of output formats.

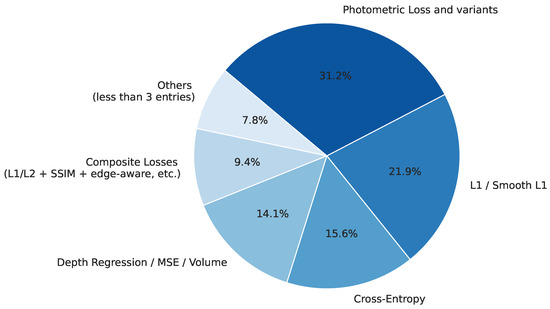

3.7. Loss Functions

Loss functions play a crucial role in the training of deep learning models for 3D reconstruction by guiding the optimization of model predictions. Among the reviewed literature, photometric loss and its variants are the most widely adopted [34,52,85], as they directly penalize photometric discrepancies between projected and reference images. This is followed by standard L1/Smooth L1 losses [42,48,54], which are valued for their simplicity and robustness. Cross-entropy loss and depth regression losses, such as MSE or volumetric error metrics, are also frequently employed [22,26,51,72]. Composite approaches that integrate multiple components, such as SSIM, edge-aware terms, or perceptual constraints, appear in multiple studies, indicating an effort to balance multiple aspects of reconstruction quality [23,86]. Less common but still relevant are losses like Dice, Chamfer distance/surface consistency, perceptual/adversarial losses, uncertainty-aware techniques, and classical methods. For simplicity and clarity, these less frequent techniques are grouped under “Others” in the figure [25,28]. Figure 8 shows the distribution of loss functions reported in the selected 3D reconstruction studies.

Figure 8.

Loss functions.
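To make the loss families above concrete, the following sketch implements a Smooth L1 term, a simple L1 photometric term, and a weighted combination of the two. The weights, function names, and sample values are hypothetical. SSIM and edge-aware terms are omitted for brevity, and the image warping that precedes a photometric loss is assumed to have been done already.

```python
def smooth_l1(pred, target, beta=1.0):
    """Mean Smooth L1 loss: quadratic for |error| < beta, linear otherwise."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total / len(pred)

def photometric_l1(warped, reference):
    """Mean absolute intensity difference between a warped source image and the reference."""
    return sum(abs(w - r) for w, r in zip(warped, reference)) / len(reference)

def composite_loss(pred_depth, gt_depth, warped, reference, w_depth=1.0, w_photo=0.5):
    """Weighted sum of the two terms; the weights are illustrative, not tuned values."""
    return w_depth * smooth_l1(pred_depth, gt_depth) + w_photo * photometric_l1(warped, reference)

# Hypothetical flattened depth maps and image intensities.
depth_term = smooth_l1([0.0, 0.5, 3.0], [0.0, 0.0, 0.0])
photo_term = photometric_l1([0.2, 0.4], [0.1, 0.6])
total = composite_loss([0.0, 0.5, 3.0], [0.0, 0.0, 0.0], [0.2, 0.4], [0.1, 0.6])
```

The linear branch of Smooth L1 limits the influence of large depth errors, which is one reason it is often regarded as more robust to outliers than a purely quadratic loss.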

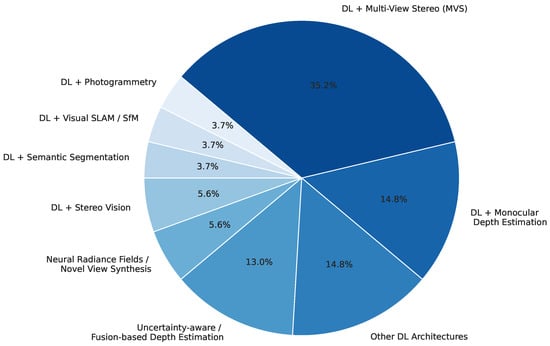

3.8. Methods Observed

The methodological landscape of 3D reconstruction has become more diverse as methods from various deep learning-based approaches have been integrated with conventional computer vision paradigms. In the interest of conceptual consistency and comparative ease, the methods described in the reviewed literature were recast into more uniform categories based on their technical focus.

The most frequently used method is multi-view stereo integration with deep learning. This compound method leverages the strength of traditional MVS pipelines and adds deep feature extraction or depth regularization modules to significantly improve reconstruction accuracy. The second most frequent are monocular depth estimation models based on a single RGB image, found in eight studies, and often used because they are convenient and usable in constrained environments.

Depth estimation methods with uncertainty modeling, input fusion, or loss functions specifically designed for damage detection were classified as uncertainty-aware and fusion-based methods. Stereo vision deep learning indicates an increased interest in calibrated dual-camera systems. The most recent advancements are represented by neural radiance fields and novel view synthesis techniques.

Other hybrid methods include the integration of photogrammetry pipelines with deep learning, as well as visual SLAM and structure-from-motion. Other work integrates semantic segmentation modules into the reconstruction process itself. Fewer studies rely exclusively on conventional, non-deep-learning methods; these are noted here for contrast. Figure 9 illustrates this information.

Figure 9.

Combinations of methods reported.

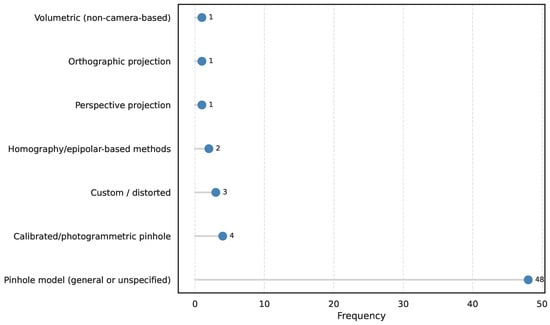

3.9. Geometric Model

The geometric model used to simulate the image formation process is essential in establishing the precision and realism of 3D reconstruction pipelines. The reviewed literature shows a dominant use of the pinhole camera model, which applies a simplified perspective projection and serves as the de facto standard for most traditional and deep learning-based methods.
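As an illustration of the pinhole model, the sketch below projects a 3D point in camera coordinates to pixel coordinates and back-projects a pixel with known depth. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the sample point are hypothetical, and lens distortion is deliberately ignored.

```python
def project_pinhole(point, fx, fy, cx, cy):
    """Project a camera-frame 3D point (X, Y, Z) to pixel coordinates (u, v)."""
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return fx * X / Z + cx, fy * Y / Z + cy

def backproject_pinhole(u, v, depth, fx, fy, cx, cy):
    """Recover the camera-frame 3D point for pixel (u, v) at the given depth."""
    return (u - cx) * depth / fx, (v - cy) * depth / fy, depth

# Hypothetical intrinsics for a 640x480 image.
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0
u, v = project_pinhole((0.2, -0.1, 2.0), fx, fy, cx, cy)
recovered = backproject_pinhole(u, v, 2.0, fx, fy, cx, cy)
```

The back-projection step is what turns a predicted depth map into a point cloud, which is why depth maps and point clouds so often appear together as outputs.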

Some work utilized directly calibrated or photogrammetric versions of the pinhole model, which allow for more precise modeling of intrinsic camera parameters. Some utilized non-pinhole or distorted projection models to account for lens-specific distortions or special optics, as observed in three studies.

Other geometrical techniques involving homography or epipolar-based projection were found in some studies, typically pertaining to stereo matching or multi-view alignment.

Figure 10 displays the prevalence of projection models in the reviewed literature and indicates the dominance of the pinhole model and the limited examination of other projection paradigms.

Figure 10.

Geometric models used to simulate the image formation process.

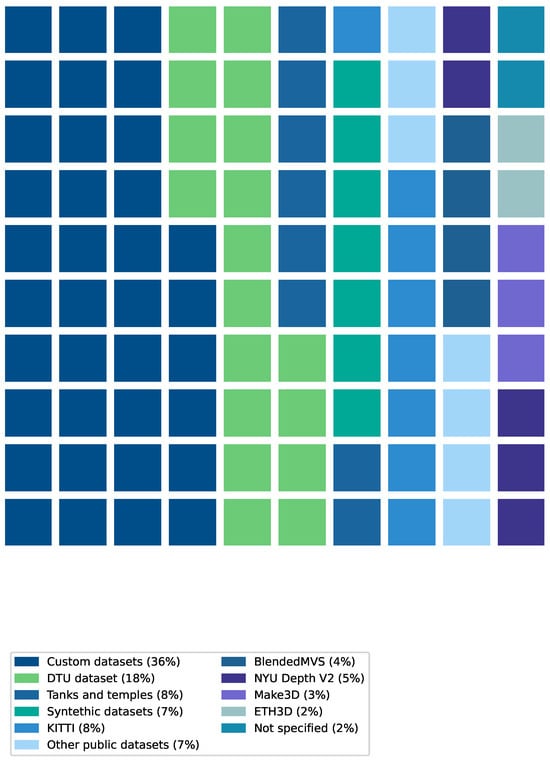

3.10. Distribution of Dataset Sources

The datasets used in the studied articles were collected from various sources, as explained below and depicted in Figure 11. Several studies used multiple datasets for model training or testing. This distribution shows that the research community prefers some datasets due to their reliability, diversity, or representativeness. Custom datasets were used most frequently, especially for study-specific objectives, and were often collected using UAVs, specialized imaging devices, or field experiments. Many studies used the DTU dataset, which is frequently chosen for training and evaluating 3D reconstruction models because it includes high-quality ground truth.

Figure 11.

Dataset sources.

Synthetic data, such as ShapeNet or generated data, is often used to assess model generalization or when real data is scarce. Datasets such as KITTI, ETH3D, BlendedMVS, NYU Depth V2, and Make3D are frequently used. Other public datasets (e.g., AMOS, 300W-LP, SinHuman3D) and some unknown sources comprise the rest. This diversity represents the range of usage in 3D reconstruction and the trade-off between generic benchmarks and domain-specific custom data.

The use of the reviewed datasets in 3D reconstruction modeling shows a close match between data characteristics and task-specific requirements. KITTI [60], commonly employed in outdoor depth estimation tasks, offers large-scale scenes with real illumination variation, occlusion, and vehicle motion, conditions that mimic autonomous driving scenarios. In contrast, NYU Depth v2 [87] is used primarily for indoor scenes, offering fine-grained depth annotations, regular lighting, and compact indoor layouts. This selection strategy, while practical, introduces domain bias: models trained on one domain may struggle to generalize to the other due to differences in scene structure, scale, and texture. For instance, models evaluated on NYU Depth v2 often report lower errors on indoor data, whereas performance degrades when they are applied to unstructured or expansive outdoor scenarios. As observed in multiple studies within the compendium, this emphasizes the need for cross-domain evaluation protocols and the inclusion of hybrid datasets to improve model robustness and adaptability across diverse environments.

3.11. Performance Trade-Offs

Transformer-based MVS approaches such as [23] are highly efficient, with inference times as low as 0.08 s per view. Multi-stage refinement networks such as the Multi-Step Depth Enhancement Refine Network (MSDERNet) [88], conversely, take up to 1.2 s per view, trading speed for higher accuracy. Lightweight CNN-based models such as those proposed in [47,89] run in real time at 25–35 ms/frame (roughly 30–40 FPS), suitable for tasks like self-driving navigation. More complex models, such as those with transformer blocks or disparity refinement, e.g., [41,90], are more computationally intensive, requiring 40–50 ms/frame or more. This contrast highlights the trade-off between architectural sophistication and real-time performance: less complex models dominate latency-critical applications, while more sophisticated implementations serve accuracy-critical operations such as high-fidelity reconstruction or dense mapping, as shown in Table 5.

Table 5.

Inference time reported in the state of the art.
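Because the reviewed studies report runtime either as latency (ms/frame or s/view) or as throughput (FPS), a simple conversion helps when reading the inference times side by side. The helper below is an illustrative sketch; it assumes frames are processed one at a time with no batching or pipelining, and it treats per-view and per-frame times as comparable, which may not hold across different pipelines.

```python
def fps_from_latency_ms(latency_ms):
    """Throughput in frames per second for a given per-frame latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms

# Latencies quoted in the text, expressed in milliseconds.
fast_cnn = fps_from_latency_ms(25)       # lower bound of the 25-35 ms/frame range
slow_cnn = fps_from_latency_ms(35)       # upper bound of the same range
transformer_mvs = fps_from_latency_ms(80)    # 0.08 s per view
refinement_net = fps_from_latency_ms(1200)   # 1.2 s per view
```

Under these assumptions, 25 ms/frame corresponds to 40 FPS and 35 ms/frame to slightly under 30 FPS, while per-view times on the order of a second fall well below one view per second.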

Three representative models were selected for their outstanding trade-offs between accuracy, inference time, and architectural innovation, as detailed in Table 5.

Edge-MVSFormer [23] is a transformer-enhanced MVS optimized for high-precision plant structure reconstruction. It achieves the fastest inference time among the MVS-based approaches (0.08 s/view). The architecture includes a convolutional backbone enhanced with transformer layers for capturing both local textures and global spatial dependencies. These features are refined via an edge-enhanced fusion transformer that preserves structural boundaries, followed by a multiscale cost volume regularization stage and depth regression. This modular interaction—feature extraction, attention-based fusion, cost volume construction, and depth estimation—results in high reconstruction fidelity, robustness to occlusions, and adaptability to natural environments.

RGB-FusionNet [47] targets monocular 3D reconstruction by inferring depth from a single RGB image. The architecture follows an encoder–decoder structure enriched with skip connections, attention modules, and multiscale fusion blocks. Semantic and structural features are first extracted and then fused across modalities and spatial resolutions to reduce scale ambiguity and increase robustness to occlusions. The decoder synthesizes the final depth map. With an inference speed of 25 ms/frame, this architecture is well-suited for real-time applications, offering a solid compromise between efficiency and prediction quality, particularly in indoor or resource-constrained scenarios.

GARNet [34] introduces a hybrid pipeline that combines 2D CNNs, a global-aware feature encoder, and a 3D cost volume module. Initially, 2D features are extracted and globally contextualized across multiple views to ensure spatial coherence. These features are warped into a shared reference frame to build a cost volume via depth-wise variance, refined through a 3D CNN decoder. While it has a higher runtime (1.205 s/view), its fusion of local and global information enables high accuracy in occluded or low-texture regions. GARNet excels in scenarios requiring depth precision at scale, exemplifying cost–performance optimization.
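The variance-based cost described above can be sketched for a single pixel. The code assumes that, for each depth hypothesis, the features of all views have already been warped into the reference frame, and that the cost is the variance across views averaged over channels, as in MVSNet-style pipelines. The function names are hypothetical, and the final argmin stands in for the learned 3D CNN refinement, which is not reproduced here.

```python
from typing import List, Sequence, Tuple


def variance_across_views(view_features: Sequence[Sequence[float]]) -> List[float]:
    """Per-channel variance of feature vectors sampled from several views."""
    num_views = len(view_features)
    if num_views < 2:
        raise ValueError("at least two views are required")
    channels = len(view_features[0])
    if any(len(f) != channels for f in view_features):
        raise ValueError("all feature vectors must have the same length")
    means = [sum(f[c] for f in view_features) / num_views for c in range(channels)]
    return [
        sum((f[c] - means[c]) ** 2 for f in view_features) / num_views
        for c in range(channels)
    ]


def best_depth_index(warped: Sequence[Sequence[Sequence[float]]]) -> Tuple[int, List[float]]:
    """warped[d][v] is the feature vector of view v at depth hypothesis d.

    Returns the index of the lowest-cost hypothesis and the cost per hypothesis.
    """
    costs = []
    for views in warped:
        variances = variance_across_views(views)
        costs.append(sum(variances) / len(variances))
    return min(range(len(costs)), key=costs.__getitem__), costs


# Two hypotheses, two views, two channels: views agree only at the second hypothesis.
warped = [
    [[1.0, 2.0], [3.0, 2.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
print(best_depth_index(warped))
```

Low variance indicates that the views agree photometrically at that depth, which is why the variance serves as a matching cost regardless of the number of input views.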

4. Discussion and Conclusions

One of the most notable findings is the wide range of application domains in which 3D reconstruction techniques are implemented. Around sixty unique domains were identified, including medicine, where organs or anatomical structures were reconstructed; agriculture, which involves estimating phenotypic crop characteristics; civil engineering, used for infrastructure and structural monitoring; and automotive and robotics, for autonomous navigation and spatial perception.

In reconstruction tasks, more than fifty 3D tasks were found; those that stand out are depth estimation (monocular or multi-view), as described in [20,23,24,31,38,40,41,42,44,45,47,55,67,77,82], mesh or point cloud reconstruction [29,30,58,59,71,73,74,92], 3D detection and segmentation [25,28,72,84,90,93], and surface reconstruction [21,23,50,74].

4.1. Core Contributions and Performance Highlights

This review provides a range of new contributions that enhance the field of 3D reconstruction in several areas. Most techniques displayed clear improvements over baselines such as MVSNet, PatchMatchNet, and Cascade MVSNet, particularly in depth accuracy, reconstruction quality, and memory consumption. A few studies successfully integrated deep learning with existing pipelines (e.g., stereo vision, photogrammetry, SfM) and delivered more robust performance under challenging conditions such as textureless regions, occlusions, and lighting changes. Medical and agricultural applications were prominent, with DL-supported segmentation and phenotyping showing reliable measurement capability. UAV-based methods benefited from effective view planning and fusion strategies, exhibiting real-time processing capability in large scenes.

The architectures compared in this review are relevant examples of competing yet complementary strategies for 3D reconstruction. Their design reflects how task-specific module interaction leads to substantial performance gains. Future work will explore modular hybrids that fuse monocular and multi-view signals, as well as lightweight transformer architectures that capture global context while reducing latency.

In addition, improvements in network architecture such as attention refinement, adaptive cost volumes, and geometric consistency consistently led to better accuracy on indoor and outdoor scenes. Generalizability to unseen data, applicability on edge devices, and support for high-resolution input were also emphasized in most of the studies as further evidence of the practical value of recent advances.

4.2. Methodological Gaps, Limitations, and Future Work

Three common weaknesses emerged. First, only about one in five studies reports variance or confidence intervals, which hinders a rigorous risk assessment. Second, ablation studies are often fragmented, making it difficult to isolate the contribution of individual pipeline components. A standard protocol that disables one module at a time would clarify the causal gains. Third, dataset opacity remains a barrier: roughly a third of custom datasets are withheld from the community, limiting reproducibility and cross-study comparison.
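The one-module-at-a-time protocol suggested above can be written as a small harness. This is an illustrative sketch: the module names and the `evaluate` callable are hypothetical placeholders that a study would replace with its own training and evaluation routine, and the numbers in the usage example are toy values, not results from any reviewed paper.

```python
from typing import Callable, Dict, FrozenSet, Iterable, Tuple


def leave_one_out_ablation(
    modules: Iterable[str],
    evaluate: Callable[[FrozenSet[str]], float],
) -> Tuple[float, Dict[str, float]]:
    """Disable one module at a time and report the change in an error metric.

    `evaluate` receives the set of enabled modules and returns an error
    (lower is better). A positive delta means the error rose when the
    module was removed, i.e., the module contributed to accuracy.
    """
    module_list = list(modules)
    full = frozenset(module_list)
    baseline = evaluate(full)
    deltas = {m: evaluate(full - {m}) - baseline for m in module_list}
    return baseline, deltas


# Toy stand-in for a real evaluation: each enabled module removes a fixed error.
toy_gain = {"attention": 2.0, "refinement": 3.0}


def toy_evaluate(enabled: FrozenSet[str]) -> float:
    return 10.0 - sum(toy_gain[m] for m in enabled)


print(leave_one_out_ablation(toy_gain, toy_evaluate))
```

Repeating each configuration over several random seeds and reporting the spread alongside the deltas would also address the first weakness noted above.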

The present review is also constrained. Although we surveyed two major bibliographic databases and identified 68 articles, gray literature released after our cut-off date was excluded. Performance metrics were harmonized to meters or millimeters, but differences in masking rules or depth truncation may still bias comparisons. Qualitative coding, for example, deciding whether a study counts as "real-world validation", inevitably involves subjective judgment, even though two authors cross-checked all tags.

Looking ahead, three priorities stand out. First, uncertainty-aware depth heads or model ensembles should become standard practice, particularly in situations where reconstruction errors could compromise human safety. Second, resource-adaptive architectures that integrate quantization and pruning with geometry-aware losses are needed to maintain accuracy on edge devices. Third, cross-domain generalization benchmarks—testing a single model on indoor scenes, outdoor urban imagery, and aerial farmland without fine tuning—would offer a more realistic measure of robustness.
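As an illustration of the first priority, a model ensemble yields a per-pixel uncertainty estimate from the disagreement between its members. The sketch below assumes that each ensemble member outputs a depth map flattened to a list of depths in meters; the function names and the threshold are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def ensemble_depth(predictions: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-pixel mean and population standard deviation across ensemble members."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    num_pixels = len(predictions[0])
    if any(len(p) != num_pixels for p in predictions):
        raise ValueError("all depth maps must have the same number of pixels")
    means = [statistics.fmean(p[i] for p in predictions) for i in range(num_pixels)]
    stds = [statistics.pstdev([p[i] for p in predictions]) for i in range(num_pixels)]
    return means, stds


def flag_uncertain(stds: Sequence[float], threshold_m: float) -> List[bool]:
    """Mark pixels whose ensemble spread exceeds an assumed threshold in meters."""
    return [s > threshold_m for s in stds]


# Two ensemble members, two pixels: the members disagree on the second pixel.
means, stds = ensemble_depth([[2.0, 5.0], [2.0, 7.0]])
print(means, stds, flag_uncertain(stds, threshold_m=0.5))
```

Pixels flagged in this way could be excluded from downstream decisions or passed to a slower, more accurate model in safety-critical settings.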

The reviewed studies consistently adhere to the pinhole camera model. Thus, the use of non-pinhole or wide-angle imaging systems remains unexplored in the reviewed literature. Future research should examine the trade-off between the increased scene coverage of such optics and the geometric distortions they introduce. Incorporating distortion correction techniques and hybrid calibration mechanisms appears essential for improving reconstruction quality with wide-angle optics. Addressing these points could accelerate the transition from impressive laboratory demonstrations to reliable 3D reconstruction systems deployed at scale.
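To make the distortion correction step concrete, the following sketch applies and inverts a two-coefficient polynomial radial distortion model on normalized image coordinates. The model choice, the coefficient values, and the fixed-point inversion are assumptions for illustration. Strongly wide-angle or fisheye lenses generally require a dedicated projection model, which is not covered here.

```python
from typing import Tuple


def distort(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Apply radial distortion to an ideal (pinhole) normalized point."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd: float, yd: float, k1: float, k2: float,
              iterations: int = 20) -> Tuple[float, float]:
    """Invert the radial model by fixed-point iteration.

    Starts from the distorted point and repeatedly divides by the scale
    factor evaluated at the current estimate. This converges for moderate
    distortion but is not guaranteed to converge for extreme coefficients.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


# Round trip with hypothetical barrel-distortion coefficients.
xd, yd = distort(0.3, 0.2, k1=-0.2, k2=0.05)
print(undistort(xd, yd, k1=-0.2, k2=0.05))
```

Once points are mapped back to ideal pinhole coordinates in this way, pipelines built on the pinhole assumption can be applied without modification, at the cost of resampling artifacts near the image border.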

Author Contributions

Conceptualization, D.-C.R.-L. and D.-M.C.-E.; methodology, D.-C.R.-L., D.-M.C.-E., J.T., J.-A.R.-G., J.M.A.-A., J.-J.G.-B., and A.R.-P.; formal analysis, D.-C.R.-L., D.-M.C.-E., J.T., J.-A.R.-G., J.M.A.-A., J.-J.G.-B., and A.R.-P.; investigation, D.-C.R.-L., D.-M.C.-E., J.T., J.-A.R.-G., J.M.A.-A., J.-J.G.-B., and A.R.-P.; resources, D.-M.C.-E., J.T., J.-A.R.-G., J.M.A.-A., J.-J.G.-B., and A.R.-P.; writing—original draft preparation, D.-C.R.-L. and D.-M.C.-E.; writing—review and editing, D.-C.R.-L., D.-M.C.-E., J.T., J.-A.R.-G., J.M.A.-A., J.-J.G.-B., and A.R.-P.; supervision, D.-M.C.-E.; project administration, D.-C.R.-L. and D.-M.C.-E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

We thank the Autonomous University of Querétaro for its support in conducting this research, and the Ministry of Science, Humanities, Technology, and Innovation (SECIHTI) for support through the National System of Researchers (SNII).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kompis, Y.; Bartolomei, L.; Mascaro, R.; Teixeira, L.; Chli, M. Informed sampling exploration path planner for 3d reconstruction of large scenes. IEEE Robot. Autom. Lett. 2021, 6, 7893–7900. [Google Scholar] [CrossRef]

- Ren, R.; Fu, H.; Xue, H.; Sun, Z.; Ding, K.; Wang, P. Towards a fully automated 3d reconstruction system based on lidar and gnss in challenging scenarios. Remote Sens. 2021, 13, 1981. [Google Scholar] [CrossRef]

- Hu, S.; Liu, Q. Fast underwater scene reconstruction using multi-view stereo and physical imaging. Neural Netw. 2025, 189, 107568. [Google Scholar] [CrossRef]

- Yu, Z.; Shen, Y.; Zhang, Y.; Xiang, Y. Automatic crack detection and 3D reconstruction of structural appearance using underwater wall-climbing robot. Autom. Constr. 2024, 160, 105322. [Google Scholar] [CrossRef]

- Maken, P.; Gupta, A. 2D-to-3D: A review for computational 3D image reconstruction from X-ray images. Arch. Comput. Methods Eng. 2023, 30, 85–114. [Google Scholar] [CrossRef]

- Zi, Y.; Wang, Q.; Gao, Z.; Cheng, X.; Mei, T. Research on the application of deep learning in medical image segmentation and 3d reconstruction. Acad. J. Sci. Technol. 2024, 10, 8–12. [Google Scholar] [CrossRef]

- Yu, S.; Liu, X.; Tan, Q.; Wang, Z.; Zhang, B. Sensors, systems and algorithms of 3D reconstruction for smart agriculture and precision farming: A review. Comput. Electron. Agric. 2024, 224, 109229. [Google Scholar] [CrossRef]

- Gu, W.; Wen, W.; Wu, S.; Zheng, C.; Lu, X.; Chang, W.; Xiao, P.; Guo, X. 3D reconstruction of wheat plants by integrating point cloud data and virtual design optimization. Agriculture 2024, 14, 391. [Google Scholar] [CrossRef]

- Lu, Y.; Wang, S.; Fan, S.; Lu, J.; Li, P.; Tang, P. Image-based 3D reconstruction for Multi-Scale civil and infrastructure Projects: A review from 2012 to 2022 with new perspective from deep learning methods. Adv. Eng. Inform. 2024, 59, 102268. [Google Scholar] [CrossRef]

- Muhammad, I.B.; Omoniyi, T.M.; Omoebamije, O.; Mohammed, A.G.; Samson, D. 3D Reconstruction of a Precast Concrete Bridge for Damage Inspection Using Images from Low-Cost Unmanned Aerial Vehicle. Disaster Civ. Eng. Archit. 2025, 2, 46–62. [Google Scholar] [CrossRef]

- Banerjee, D.; Yu, K.; Aggarwal, G. Robotic arm based 3D reconstruction test automation. IEEE Access 2018, 6, 7206–7213. [Google Scholar] [CrossRef]

- Sumetheeprasit, B.; Rosales Martinez, R.; Paul, H.; Shimonomura, K. Long-range 3D reconstruction based on flexible configuration stereo vision using multiple aerial robots. Remote Sens. 2024, 16, 234. [Google Scholar] [CrossRef]

- Wang, H.; Sun, S.; Ren, P. Underwater color disparities: Cues for enhancing underwater images toward natural color consistencies. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 738–753. [Google Scholar] [CrossRef]

- Wang, H.; Sun, S.; Chang, L.; Li, H.; Zhang, W.; Frery, A.C.; Ren, P. INSPIRATION: A reinforcement learning-based human visual perception-driven image enhancement paradigm for underwater scenes. Eng. Appl. Artif. Intell. 2024, 133, 108411. [Google Scholar] [CrossRef]

- Samavati, T.; Soryani, M. Deep learning-based 3D reconstruction: A survey. Artif. Intell. Rev. 2023, 56, 9175–9219. [Google Scholar] [CrossRef]

- Vinodkumar, P.K.; Karabulut, D.; Avots, E.; Ozcinar, C.; Anbarjafari, G. Deep learning for 3d reconstruction, augmentation, and registration: A review paper. Entropy 2024, 26, 235. [Google Scholar] [CrossRef] [PubMed]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; Volume 30, pp. 5998–6008. [Google Scholar]

- Shi, Z.; Meng, Z.; Xing, Y.; Ma, Y.; Wattenhofer, R. 3d-retr: End-to-end single and multi-view 3d reconstruction with transformers. arXiv 2021, arXiv:2110.08861. [Google Scholar]

- Ding, C.; Dai, Y.; Feng, X.; Zhou, Y.; Li, Q. Stereo vision SLAM-based 3D reconstruction on UAV development platforms. J. Electron. Imaging 2023, 32, 013041. [Google Scholar] [CrossRef]

- Huang, J.; Xu, F.; Chen, T.; Zeng, Y.; Ming, J.; Niu, X. Real-Time Ocean Waves Three-Dimensional Reconstruction Research Based on Deep Learning. In Proceedings of the 2023 2nd International Conference on Robotics, Artificial Intelligence and Intelligent Control (RAIIC), Mianyang, China, 11–13 August 2023; pp. 334–339. [Google Scholar] [CrossRef]

- Lu, G. Bird-view 3d reconstruction for crops with repeated textures. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 4263–4270. [Google Scholar] [CrossRef]

- Cheng, Y.; Liu, Z.; Lan, G.; Xu, J.; Chen, R.; Huang, Y. Edge_MVSFormer: Edge-Aware Multi-View Stereo Plant Reconstruction Based on Transformer Networks. Sensors 2025, 25, 2177. [Google Scholar] [CrossRef]

- Chen, Q.; Song, L.; Zhang, X.; Zhang, G.; Lu, H.; Liu, J.; Pan, Y.; Luo, W. Accurate 3D anthropometric measurement using compact multi-view imaging. Measurement 2025, 247, 116777. [Google Scholar] [CrossRef]

- Ma, D.; Wang, N.; Fang, H.; Chen, W.; Li, B.; Zhai, K. Attention-optimized 3D segmentation and reconstruction system for sewer pipelines employing multi-view images. Comput.-Aided Civ. Infrastruct. Eng. 2025, 40, 594–613. [Google Scholar] [CrossRef]

- Yu, J.; Yin, W.; Hu, Z.; Liu, Y. 3D reconstruction for multi-view objects. Comput. Electr. Eng. 2023, 106, 108567. [Google Scholar] [CrossRef]

- Liu, J.; Bajraktari, F.; Rausch, R.; Pott, P.P. 3D Reconstruction of Forearm Veins Using NIR-Based Stereovision and Deep Learning. In Proceedings of the 2023 IEEE 36th International Symposium on Computer-Based Medical Systems (CBMS), L’Aquila, Italy, 22–24 June 2023; pp. 57–60. [Google Scholar] [CrossRef]

- Niri, R.; Gutierrez, E.; Douzi, H.; Lucas, Y.; Treuillet, S.; Castañeda, B.; Hernandez, I. Multi-view data augmentation to improve wound segmentation on 3D surface model by deep learning. IEEE Access 2021, 9, 157628–157638. [Google Scholar] [CrossRef]

- Chen, R.; Yin, X.; Yang, Y.; Tong, C. Multi-view Pixel2Mesh++: 3D reconstruction via Pixel2Mesh with more images. Vis. Comput. 2023, 39, 5153–5166. [Google Scholar] [CrossRef]

- Kui, X.; Xiaoran, G. SinHuman3D: Novel Multi-View Synthesis and 3D Reconstruction from a Single Human Image. In Proceedings of the 2024 IEEE 4th International Conference on Data Science and Computer Application (ICDSCA), Dalian, China, 22–24 November 2024; pp. 355–359. [Google Scholar] [CrossRef]

- Hermann, M.; Weinmann, M.; Nex, F.; Stathopoulou, E.; Remondino, F.; Jutzi, B.; Ruf, B. Depth estimation and 3D reconstruction from UAV-borne imagery: Evaluation on the UseGeo dataset. ISPRS Open J. Photogramm. Remote Sens. 2024, 13, 100065. [Google Scholar] [CrossRef]

- Zhang, S.; Yang, Y.; Sun, Y.; Liu, N.; Sun, F.; Fang, B. Artificial Skin Based on Visuo-Tactile Sensing for 3D Shape Reconstruction: Material, Method, and Evaluation. Adv. Funct. Mater. 2025, 35, 2411686. [Google Scholar] [CrossRef]

- Gao, T.; Hong, Z.; Tan, Y.; Sun, L.; Wei, Y.; Ma, J. HC-MVSNet: A probability sampling-based multi-view-stereo network with hybrid cascade structure for 3D reconstruction. Pattern Recognit. Lett. 2024, 185, 59–65. [Google Scholar] [CrossRef]

- Zhu, Z.; Yang, L.; Lin, X.; Yang, L.; Liang, Y. Garnet: Global-aware multi-view 3d reconstruction network and the cost-performance tradeoff. Pattern Recognit. 2023, 142, 109674. [Google Scholar] [CrossRef]

- de Queiroz Mendes, R.; Ribeiro, E.G.; dos Santos Rosa, N.; Grassi Jr, V. On deep learning techniques to boost monocular depth estimation for autonomous navigation. Robot. Auton. Syst. 2021, 136, 103701. [Google Scholar] [CrossRef]

- Wang, J.; Chen, Y.; Dong, Z.; Gao, M.; Lin, H.; Miao, Q. SABV-Depth: A biologically inspired deep learning network for monocular depth estimation. Knowl.-Based Syst. 2023, 263, 110301. [Google Scholar] [CrossRef]

- Madhuanand, L.; Nex, F.; Yang, M.Y. Deep learning for monocular depth estimation from UAV images. In Proceedings of the XXIVth ISPRS Congress 2020. International Society for Photogrammetry and Remote Sensing (ISPRS), Nice, France, 31 August–2 September 2020; pp. 451–458. [Google Scholar]

- Abdullah, R.M. A deep learning-based framework for efficient and accurate 3D real-scene reconstruction. Int. J. Inf. Technol. 2024, 16, 4605–4609. [Google Scholar] [CrossRef]

- Teng, J.; Sun, H.; Liu, P.; Jiang, S. An Improved TransMVSNet Algorithm for Three-Dimensional Reconstruction in the Unmanned Aerial Vehicle Remote Sensing Domain. Sensors 2024, 24, 2064. [Google Scholar] [CrossRef] [PubMed]

- Nex, F.; Zhang, N.; Remondino, F.; Farella, E.; Qin, R.; Zhang, C. Benchmarking the extraction of 3D geometry from UAV images with deep learning methods. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2023, 48, 123–130. [Google Scholar] [CrossRef]

- Dhanushkodi, K.; Bala, A.; Chaplot, N. Single-View Depth Estimation: Advancing 3D Scene Interpretation with One Lens. IEEE Access 2025, 13, 20562–20573. [Google Scholar] [CrossRef]

- Hu, Y.; Fu, T.; Niu, G.; Liu, Z.; Pun, M.O. 3D map reconstruction using a monocular camera for smart cities. J. Supercomput. 2022, 78, 16512–16528. [Google Scholar] [CrossRef]

- Izadmehr, Y.; Satizábal, H.F.; Aminian, K.; Perez-Uribe, A. Depth estimation for egocentric rehabilitation monitoring using deep learning algorithms. Appl. Sci. 2022, 12, 6578. [Google Scholar] [CrossRef]

- Hong, Z.; Yang, Y.; Liu, J.; Jiang, S.; Pan, H.; Zhou, R.; Zhang, Y.; Han, Y.; Wang, J.; Yang, S.; et al. Enhancing 3D reconstruction model by deep learning and its application in building damage assessment after earthquake. Appl. Sci. 2022, 12, 9790. [Google Scholar] [CrossRef]

- Li, G.; Li, K.; Zhang, G.; Zhu, Z.; Wang, P.; Wang, Z.; Fu, C. Enhanced multi view 3D reconstruction with improved MVSNet. Sci. Rep. 2024, 14, 14106. [Google Scholar] [CrossRef]

- Wang, B.; Chen, W.; Qian, J.; Feng, S.; Chen, Q.; Zuo, C. Single-shot super-resolved fringe projection profilometry (SSSR-FPP): 100,000 frames-per-second 3D imaging with deep learning. Light Sci. Appl. 2025, 14, 70. [Google Scholar] [CrossRef]

- Duan, Z.; Chen, Y.; Yu, H.; Hu, B.; Chen, C. RGB-Fusion: Monocular 3D reconstruction with learned depth prediction. Displays 2021, 70, 102100. [Google Scholar] [CrossRef]

- Dai, L.; Chen, Z.; Zhang, X.; Wang, D.; Huo, L. CPH-Fmnet: An Optimized Deep Learning Model for Multi-View Stereo and Parameter Extraction in Complex Forest Scenes. Forests 2024, 15, 1860. [Google Scholar] [CrossRef]

- Dalai, R.; Dalai, N.; Senapati, K.K. An accurate volume estimation on single view object images by deep learning based depth map analysis and 3D reconstruction. Multimed. Tools Appl. 2023, 82, 28235–28258. [Google Scholar] [CrossRef]

- Knyaz, V.A.; Kniaz, V.V.; Remondino, F.; Zheltov, S.Y.; Gruen, A. 3D reconstruction of a complex grid structure combining UAS images and deep learning. Remote Sens. 2020, 12, 3128. [Google Scholar] [CrossRef]

- Jiang, S.; Zhang, Y.; Wang, F.; Xu, Y. Three-dimensional reconstruction and damage localization of bridge undersides based on close-range photography using UAV. Meas. Sci. Technol. 2024, 36, 015423. [Google Scholar] [CrossRef]

- Wang, J.; Ma, D.; Wang, Q.; Wang, J. CSA-MVSNet: A Cross-Scale Attention Based Multi-View Stereo Method With Cascade Structure. IEEE Trans. Consum. Electron. 2025, 1. [Google Scholar] [CrossRef]

- Song, L.; Liu, T.; Jiang, D.; Li, H.; Zhao, D.; Zou, Q. 3D multi views reconstruction of flame surface based on deep learning. J. Phys. Conf. Ser. 2023, 2593, 012006. [Google Scholar] [CrossRef]

- Gao, Y.; Wang, Q.; Rao, X.; Xie, L.; Ying, Y. OrangeStereo: A navel orange stereo matching network for 3D surface reconstruction. Comput. Electron. Agric. 2024, 217, 108626. [Google Scholar] [CrossRef]

- Wei, R.; Guo, J.; Lu, Y.; Zhong, F.; Liu, Y.; Sun, D.; Dou, Q. Scale-aware monocular reconstruction via robot kinematics and visual data in neural radiance fields. Artif. Intell. Surg. 2024, 4, 187–198. [Google Scholar] [CrossRef]

- Niknejad, N.; Bidese-Puhl, R.; Bao, Y.; Payn, K.G.; Zheng, J. Phenotyping of architecture traits of loblolly pine trees using stereo machine vision and deep learning: Stem diameter, branch angle, and branch diameter. Comput. Electron. Agric. 2023, 211, 107999. [Google Scholar] [CrossRef]

- Zhao, G.; Cai, W.; Wang, Z.; Wu, H.; Peng, Y.; Cheng, L. Phenotypic parameters estimation of plants using deep learning-based 3-D reconstruction from single RGB image. IEEE Geosci. Remote Sens. Lett. 2022, 19, 2506705. [Google Scholar] [CrossRef]

- Engin, S.; Mitchell, E.; Lee, D.; Isler, V.; Lee, D.D. Higher order function networks for view planning and multi-view reconstruction. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 11486–11492. [Google Scholar] [CrossRef]

- Wu, J.; Thomas, D.; Fedkiw, R. Sparse-View 3D Reconstruction of Clothed Humans via Normal Maps. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 11–22. [Google Scholar] [CrossRef]

- Zhang, Z.; Cheng, J.; Xu, G.; Wang, X.; Zhang, C.; Yang, X. Leveraging consistent spatio-temporal correspondence for robust visual odometry. Proc. AAAI Conf. Artif. Intell. 2025, 39, 10367–10375. [Google Scholar] [CrossRef]

- Gao, S.; Xu, Z. Efficient 3DGS object segmentation via COLMAP point cloud. In Proceedings of the Eighth International Conference on Computer Graphics and Virtuality (ICCGV 2025), Chengdu, China, 21–23 February 2025; Volume 13557, pp. 65–76. [Google Scholar] [CrossRef]

- Medhi, M.; Ranjan Sahay, R. Adversarial learning for unguided single depth map completion of indoor scenes. Mach. Vis. Appl. 2025, 36, 30. [Google Scholar] [CrossRef]

- Cai, Y.; Cao, Y. Positional tracking study of greenhouse mobile robot based on improved Monodepth2. IEEE Access 2025, 13, 106690–106702. [Google Scholar] [CrossRef]

- Habib, Y.; Papadakis, P.; Le Barz, C.; Fagette, A.; Gonçalves, T.; Buche, C. Densifying SLAM for UAV navigation by fusion of monocular depth prediction. In Proceedings of the 2023 9th International Conference on Automation, Robotics and Applications (ICARA), Abu Dhabi, United Arab Emirates, 10–12 February 2023; pp. 225–229. [Google Scholar] [CrossRef]

- Fu, W.; Lv, B.; Tong, S. DTR-Map: A Digital Twin-Enabled Real-Time Mapping System Based on Multi-View Stereo. In Proceedings of the 2023 China Automation Congress (CAC), Chongqing, China, 17–19 November 2023; pp. 4273–4278. [Google Scholar] [CrossRef]

- Feng, Y.; Wu, R.; Li, P.; Wu, W.; Lin, J.; Liu, X.; Chen, L. Multi-view high-dynamic-range 3D reconstruction and point cloud quality evaluation based on dual-frame difference images. Appl. Opt. 2024, 63, 7865–7874. [Google Scholar] [CrossRef]

- Zhang, J.; Fu, X.D.T.; Srigrarom, S. Depth Estimation in Static Monocular Vision with Stereo Vision Assisted Deep Learning Approach. In Proceedings of the 2024 4th International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 19–21 April 2024; pp. 101–107. [Google Scholar] [CrossRef]

- Al-Selwi, M.; Ning, H.; Gao, Y.; Chao, Y.; Li, Q.; Li, J. Enhancing object pose estimation for RGB images in cluttered scenes. Sci. Rep. 2025, 15, 8745. [Google Scholar] [CrossRef]

- Lu, L.; Bu, C.; Su, Z.; Guan, B.; Yu, Q.; Pan, W.; Zhang, Q. Generative deep-learning-embedded asynchronous structured light for three-dimensional imaging. Adv. Photonics 2024, 6, 046004. [Google Scholar] [CrossRef]

- Zuo, Y.; Hu, Y.; Xu, Y.; Wang, Z.; Fang, Y.; Yan, J.; Jiang, W.; Peng, Y.; Huang, Y. Learning Guided Implicit Depth Function with Scale-aware Feature Fusion. IEEE Trans. Image Process. 2025, 34, 3309–3322. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Liu, T.; Wang, X. Advanced pavement distress recognition and 3D reconstruction by using GA-DenseNet and binocular stereo vision. Measurement 2022, 201, 111760. [Google Scholar] [CrossRef]

- Padkan, N.; Battisti, R.; Menna, F.; Remondino, F. Deep learning to support 3d mapping capabilities of a portable vslam-based system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 48, 363–370. [Google Scholar] [CrossRef]

- Zhang, R.; Jing, M.; Yi, X.; Li, H.; Lu, G. Dense reconstruction for tunnels based on the integration of double-line parallel photography and deep learning. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, 43, 1117–1123. [Google Scholar] [CrossRef]

- Liu, L.; Wang, C.; Feng, C.; Gong, W.; Zhang, L.; Liao, L.; Feng, C. Incremental SFM 3D Reconstruction Based on Deep Learning. Electronics 2024, 13, 2850. [Google Scholar] [CrossRef]

- Luo, W.; Lu, Z.; Liao, Q. LNMVSNet: A low-noise multi-view stereo depth inference method for 3D reconstruction. Sensors 2024, 24, 2400. [Google Scholar] [CrossRef] [PubMed]

- Zhou, L. Multi-View Reconstruction of Landscape Images Considering Image Registration and Feature Mapping. In Proceedings of the 2025 International Conference on Multi-Agent Systems for Collaborative Intelligence (ICMSCI), Erode, India, 20–22 January 2025; pp. 1349–1354. [Google Scholar] [CrossRef]

- Ding, Y.; Lin, L.; Wang, L.; Zhang, M.; Li, D. Digging into the multi-scale structure for a more refined depth map and 3D reconstruction. Neural Comput. Appl. 2020, 32, 11217–11228. [Google Scholar] [CrossRef]

- Yang, Y.; Cao, J.; Zhao, H.; Chang, Z.; Wang, W. High frequency domain enhancement and channel attention module for multi-view stereo. Comput. Electr. Eng. 2025, 121, 109855. [Google Scholar] [CrossRef]

- Wang, T.; Gan, V.J. Multi-view stereo for weakly textured indoor 3D reconstruction. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 1469–1489. [Google Scholar] [CrossRef]

- Kamble, T.U.; Mahajan, S.P. 3D Image reconstruction using C-dual attention network from multi-view images. Int. J. Wavelets, Multiresolut. Inf. Process. 2023, 21, 2250044. [Google Scholar] [CrossRef]

- Gong, L.; Gao, B.; Sun, Y.; Zhang, W.; Lin, G.; Zhang, Z.; Li, Y.; Liu, C. preciseSLAM: Robust, Real-Time, LiDAR–Inertial–Ultrasonic Tightly-Coupled SLAM With Ultraprecise Positioning for Plant Factories. IEEE Trans. Ind. Inform. 2024, 20, 8818–8827. [Google Scholar] [CrossRef]

- Kumar, R.; Luo, J.; Pang, A.; Davis, J. Disjoint pose and shape for 3d face reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3107–3117. [Google Scholar] [CrossRef]

- Chuah, W.; Tennakoon, R.; Hoseinnezhad, R.; Bab-Hadiashar, A. Deep learning-based incorporation of planar constraints for robust stereo depth estimation in autonomous vehicle applications. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6654–6665. [Google Scholar] [CrossRef]

- Hu, G.; Zhao, H.; Huo, Q.; Zhu, J.; Yang, P. Multi-view 3D Reconstruction by Fusing Polarization Information. In Pattern Recognition and Computer Vision–7th Chinese Conference, PRCV 2024, Urumqi, China, October 18–20, 2024, Proceedings, Part XIII; Lecture Notes in Computer Science; Springer: Singapore, 2024; Volume 15043, pp. 181–195. [Google Scholar] [CrossRef]

- Yang, R.; Miao, W.; Zhang, Z.; Liu, Z.; Li, M.; Lin, B. SA-MVSNet: Self-attention-based multi-view stereo network for 3D reconstruction of images with weak texture. Eng. Appl. Artif. Intell. 2024, 131, 107800. [Google Scholar] [CrossRef]

- Lai, H.; Ye, C.; Li, Z.; Yan, P.; Zhou, Y. MFE-MVSNet: Multi-scale feature enhancement multi-view stereo with bi-directional connections. IET Image Process. 2024, 18, 2962–2973. [Google Scholar] [CrossRef]

- Ignatov, D.; Ignatov, A.; Timofte, R. Virtually Enriched NYU Depth V2 Dataset for Monocular Depth Estimation: Do We Need Artificial Augmentation? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 6177–6186. [Google Scholar] [CrossRef]

- Ding, Y.; Li, K.; Zhang, G.; Zhu, Z.; Wang, P.; Wang, Z.; Fu, C.; Li, G.; Pan, K. Multi-step depth enhancement refine network with multi-view stereo. PLoS ONE 2025, 20, e0314418. [Google Scholar] [CrossRef] [PubMed]

- Abderrazzak, S.; Omar, S.; Abdelmalik, B. Supervised depth estimation for visual perception on low-end platforms. In Proceedings of the 2020 IEEE International Conference on Technology Management, Operations and Decisions (ICTMOD), Marrakech, Morocco, 24–27 November 2020; pp. 1–5. [Google Scholar] [CrossRef]

- Qiao, J.; Hong, H.; Cui, G. Spatial Ranging and Distance Recognition of Electrical Targets and Distribution Lines Based on Stereo Vision and Deep Learning. In Proceedings of the 2024 5th International Conference on Clean Energy and Electric Power Engineering (ICCEPE), Yangzhou, China, 9–11 August 2024; pp. 815–819. [Google Scholar] [CrossRef]

- Xie, Q.; Xin, Y.; Sun, K.; Zeng, X. Fusion Multi-scale Features and Attention Mechanism for Multi-view 3D Reconstruction. In Proceedings of the 2023 IEEE 3rd International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 26–28 May 2023; pp. 1120–1123. [Google Scholar] [CrossRef]

- Freller, A.; Turk, D.; Zwettler, G.A. Using deep learning for depth estimation and 3d reconstruction of humans. In Proceedings of the 32nd European Modeling and Simulation Symposium, Vienna, Austria, 16–18 September 2020; pp. 281–287. [Google Scholar] [CrossRef]

- Cui, D.; Liu, P.; Liu, Y.; Zhao, Z.; Feng, J. Automated Phenotypic Analysis of Mature Soybean Using Multi-View Stereo 3D Reconstruction and Point Cloud Segmentation. Agriculture 2025, 15, 175. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).