Abstract

Because unmanned aerial vehicles (UAVs) collect a large amount of circular synthetic aperture radar (CSAR) echo data, the raw echo data usually have to be transmitted to the ground for processing, or processing must be deferred until after the flight. It is therefore difficult to exploit edge computing resources, such as a UAV onboard computer, for image formation. The commonly used back projection (BP) algorithm and its improved variants require a large amount of computation and image slowly, which further limits the realization of CSAR 3D imaging on edge nodes. To improve the speed of CSAR 3D imaging, this paper proposes a CSAR 3D imaging method suitable for edge computation. First, an improved Crazy Climber algorithm extracts the ridges of the sinusoidal tracks that represent the amplitude changes in the range-compressed echo. Second, two-dimensional (2D) CSAR profiles at different heights are obtained via the inverse Radon transform (IRT). Third, the Hough transform is used to extract the intersection points of the defocused circles across heights in the X and Y directions. Finally, the 3D point cloud is extracted through voting screening. In this paper, image detection methods such as ridge extraction, the IRT, and the Hough transform replace the phase compensation processing of the traditional BP 3D imaging method, which significantly reduces the CSAR 3D imaging time. The correctness and effectiveness of the proposed method are verified by 3D imaging results for simulated data of ideal targets and for X-band CSAR outfield flight raw data collected by a small rotor unmanned aerial vehicle (SRUAV). The proposed method provides a new direction for fast 3D imaging on edge nodes such as aircraft and small ground terminals. Because the resulting image can be transmitted directly, the method can improve the information transmission efficiency of the Internet of Things (IoT).

1. Introduction

As a remote sensing method, airborne CSAR is an important part of the Internet of Things (IoT) sensing system. Making full use of the computing power at edge nodes, e.g., aircraft or small ground stations, can reduce the transmission load on the IoT and improve the efficiency of information acquisition [1,2]. Compared with linear SAR, CSAR imaging on UAVs requires a long observation time and produces a large amount of echo data, so imaging requires more computing resources. In general, the raw data must be transmitted to the ground for processing, or processed after the flight. It is therefore challenging to use edge computing power for real-time imaging on a UAV platform, and target information is acquired slowly.

Because the Back Projection (BP) imaging algorithm operates in the time domain [3], CSAR observation and imaging consume significant computing resources, and the computational efficiency of CSAR imaging is low. Consider X-band CSAR imaging on a small rotor unmanned aerial vehicle (SRUAV). With a flight radius of 600 m, there are 180,000 azimuth (heading) sampling points and 3000 range sampling points. The BP imaging algorithm must perform phase compensation on the echo signal for every cell of the 3D imaging grid at every sampling point, which requires many calculations and results in slow imaging. The authors of [4] proposed an improved BP algorithm (IBP). It constructs a geometric interpolation kernel to turn 3D interpolation operations into 1D interpolations and range-vector searches. This method reduced the BP 3D imaging time by two-thirds; however, the imaging speed was still not ideal. Other CSAR sub-aperture 3D imaging methods based on BP imaging [5,6,7] did not optimize imaging speed. Instead, they focused on partitioning sub-apertures according to the scattering characteristics of the target. Overall, the long latency and low speed of current BP 3D imaging algorithms severely limit the realization of CSAR 3D imaging at edge nodes.

In this paper, a CSAR 3D imaging method suitable for edge computation is proposed. The method uses the inverse Radon transform (IRT) and the Hough transform to extract a 3D point cloud. This replaces the BP algorithm's phase compensation, which is performed pulse by pulse in azimuth and cell by cell over the 3D imaging grid. The approach greatly increases the speed of CSAR 3D imaging and reduces imaging delay. The 3D imaging results for simulated data and for measured CSAR echo data collected by an SRUAV demonstrate the correctness and effectiveness of the proposed method.

This paper is organized as follows. Section 2 introduces the principle of BP 3D imaging. Then, the processes and key technologies of the CSAR 3D imaging method suitable for edge computation are described in Section 3. Section 4 presents the results and analyses of the proposed method for ideal targets and the X-band CSAR echo dataset carried by an SRUAV. Finally, in Section 5, conclusions are drawn.

2. BP 3D Imaging

2.1. Imaging Geometric Model of CSAR

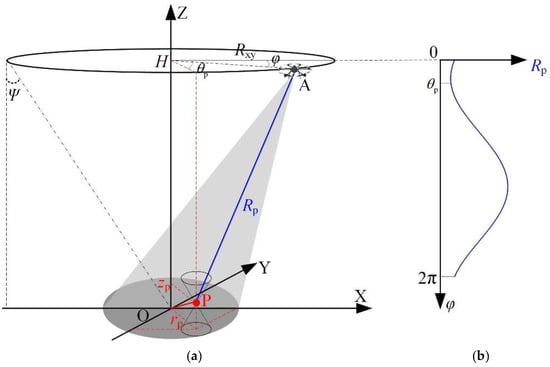

The geometric model of the CSAR imaging system is shown in Figure 1a. The SAR, carried by an SRUAV, flies in a circle around the observation scene at a fixed height. The beam center of the SAR antenna always points to the center of the imaging scene. The instantaneous slant range from the SAR platform to the point target P during the motion is as follows [8]:

where is the radius of the circular trajectory; is the position coordinate of the carrier platform flying at point A, and is the angle with the positive half of the X axis as the reference direction; incident angle is the angle between the center of the SAR beam and the negative half axis of the Z axis; and is the coordinate of any point target P in the observation scene.

Figure 1.

Geometric model of airborne CSAR imaging; (a) CSAR imaging geometry diagram; (b) diagram of the relationship between and .

The instantaneous slant range of point target P measured in CSAR mode changes with the position changes in the SAR platform, as shown in Figure 1b.
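The short Python sketch below makes the geometry concrete by evaluating the exact slant range over one circle. It assumes its own notation, not necessarily the paper's: the platform sits at (r0 cos φ, r0 sin φ, h), and r0 = 600 m and h = 300 m are taken from Section 4. The output shows that the range to the scene center stays constant, while an off-center target has a range history that oscillates with azimuth.

```python
import math

def slant_range(phi, target, r0=600.0, h=300.0):
    """Exact instantaneous slant range from the platform at azimuth phi to a point target.
    Assumed geometry: platform at (r0 cos phi, r0 sin phi, h), target at (x, y, z)."""
    x, y, z = target
    return math.sqrt((r0 * math.cos(phi) - x) ** 2
                     + (r0 * math.sin(phi) - y) ** 2
                     + (h - z) ** 2)

phis = [math.radians(k) for k in range(360)]
center = [slant_range(p, (0.0, 0.0, 0.0)) for p in phis]
offset = [slant_range(p, (5.0, 5.0, 0.0)) for p in phis]
print(max(center) - min(center))   # essentially zero: constant range to the scene center
print(max(offset) - min(offset))   # about 12.6 m for this geometry
```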

2.2. BP 3D Imaging

Here, the radar transmits a frequency-modulated continuous wave (FMCW) signal. The echo obtained after de-chirp reception and range compression can be expressed as follows [9,10]:

where is fast time; is the scattering coefficient of point target P; is the sinc function, which can be expressed as ; denotes the transmission signal bandwidth; indicates the slant range between SAR and the center of the scene; m/s indicates the propagation speed of electromagnetic waves; and is the frequency of the transmitted signal, is the center frequency of the transmitted signal, is the range frequency, and is the linear modulation frequency.

2.2.1. Traditional BP 3D Imaging

The traditional BP 3D imaging algorithm used to obtain 3D images via azimuth pulse coherent accumulation [11] can be expressed by the following formula:

where is the scattering intensity value of the grid point in the imaging space region. BP 3D imaging can only be realized when every grid point in the 3D imaging space is processed by Formula (3).
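A minimal toy version of the BP accumulation in Formula (3) is sketched below. It makes several illustrative assumptions:

- The range-compressed echo is modeled as a sinc envelope times a residual carrier phase exp(−j4πf_cΔR/c), where ΔR is the slant range minus the scene-center range.
- The helper names, the 0.2 m resolution, the 0.02 m bin spacing, and the phase sign convention are all hypothetical.

For every grid point, each pulse is read at the nearest range bin and phase-compensated before accumulation. This per-pulse, per-cell work is what makes BP slow.

```python
import cmath
import math

C = 3e8                   # propagation speed (m/s)
FC = 9.6e9                # carrier frequency used in Section 4 (Hz)
R0, H = 600.0, 300.0      # circle radius and altitude (m)
RC = math.hypot(R0, H)    # range from the platform to the scene center
RHO = 0.2                 # toy range resolution (m), roughly c / (2 * 750 MHz)
DR = 0.02                 # hypothetical range-bin spacing (m)
BINS = [-5.0 + DR * k for k in range(501)]

def diff_range(phi, p):
    """Exact slant range minus the scene-center range, for platform azimuth phi."""
    x, y, z = p
    return math.sqrt((R0 * math.cos(phi) - x) ** 2
                     + (R0 * math.sin(phi) - y) ** 2 + (H - z) ** 2) - RC

def sinc(u):
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def simulate(target, phis):
    """Toy range-compressed echoes: sinc envelope times a residual carrier phase."""
    data = []
    for phi in phis:
        d = diff_range(phi, target)
        ph = cmath.exp(-4j * math.pi * FC * d / C)
        data.append([sinc((b - d) / RHO) * ph for b in BINS])
    return data

def bp_pixel(data, phis, p):
    """Coherently accumulate every pulse at grid point p (nearest-bin lookup)."""
    acc = 0j
    for row, phi in zip(data, phis):
        d = diff_range(phi, p)
        k = round((d - BINS[0]) / DR)
        if 0 <= k < len(BINS):
            acc += row[k] * cmath.exp(4j * math.pi * FC * d / C)  # phase compensation
    return acc

phis = [2 * math.pi * n / 360 for n in range(360)]
target = (3.0, -2.0, 0.0)
data = simulate(target, phis)
print(abs(bp_pixel(data, phis, target)))             # close to 360 (coherent sum)
print(abs(bp_pixel(data, phis, (3.5, -2.0, 0.0))))   # much smaller (incoherent)
```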

2.2.2. IBP 3D Imaging

The IBP 3D imaging method constructs a geometric interpolation kernel to transform the 3D processing of the BP algorithm into 1D phase compensation and range-vector searches [4]. The geometric interpolation kernel is as follows:

where is the slant range vector, which can be expressed as follows:

where and are the minimum and maximum values of the slant range, respectively, and is the slant range interval.

In the IBP 3D Imaging method, firstly, the slant range between the coordinate unit and the platform is calculated. Secondly, the sequence number of is calculated as the closest element in . Thirdly, the element in the slant range vector is searched, and coherent accumulation is achieved.
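The sketch below illustrates the search step of IBP under stated assumptions. Each pulse is phase-compensated once on a uniform slant range vector by a hypothetical `build_kernel`. After that, each grid cell needs only a 1D nearest-element search per pulse (`nearest_index`, via `bisect`) and a sum.

The kernel of [4] is not reproduced here. Snapping to the nearest element also leaves a residual phase error of up to 4πf_c(Δr/2)/c, so a practical kernel would need a fine range step or interpolation.

```python
import bisect
import cmath
import math

C, FC = 3e8, 9.6e9   # propagation speed (m/s) and carrier frequency (Hz)

def range_vector(r_min, r_max, dr):
    """Slant range vector r_min, r_min + dr, ..., up to r_max."""
    n = int(round((r_max - r_min) / dr)) + 1
    return [r_min + k * dr for k in range(n)]

def nearest_index(vec, r):
    """Index of the element of the sorted range vector closest to r."""
    k = bisect.bisect_left(vec, r)
    if k == 0:
        return 0
    if k == len(vec):
        return len(vec) - 1
    return k if vec[k] - r < r - vec[k - 1] else k - 1

def build_kernel(profile, vec):
    """Phase-compensate one range-compressed pulse once, on the range vector."""
    return [s * cmath.exp(4j * math.pi * FC * r / C) for s, r in zip(profile, vec)]

def ibp_cell(kernels, cell_ranges, vec):
    """Accumulate one grid cell: a 1D nearest-element search per pulse, then a sum."""
    return sum(g[nearest_index(vec, r)] for g, r in zip(kernels, cell_ranges))

vec = range_vector(0.0, 10.0, 0.5)
print(nearest_index(vec, 3.3))  # 7, i.e. vec[7] = 3.5
```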

3. A CSAR 3D Imaging Method Suitable for Edge Computation

3.1. Principle of the Method

Let . When the radar and the target meet the far-field conditions, i.e., , , then Formula (1) can be expressed as follows:

During the CSAR movement, the incident angle of the beam remains unchanged. Substituting Formula (6) into Formula (2) yields the following:

where represents the position track of target point P in the range-compressed echo. When point P is located at the center of the scene, Formula (7) can be expressed as follows:

Here, the position trajectory of the target is constant; that is, the trajectory of the center point of the scene will not change with a position change in the SAR platform after the target range is compressed. Next, ignore the change in the scattering coefficient for target . Except for the central point of the scene, the trajectories of all other target points have the characteristics of a sinusoidal curve change, and the change frequency is , which is consistent with the CSAR motion platform. The amplitude of oscillation is , which is related to the bandwidth of the radar signal , the distance between the target point and the center of the scene , and the incident angle of the radar beam . When the CSAR observation geometry is determined, the amplitude of the sinusoidal change in the trajectory of a fixed-point target is only related to . The larger is, the greater the amplitude of sinusoidal oscillation becomes. The initial phase is , which is the inversion of the azimuth angle of the target point.
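The sinusoidal behavior can be checked numerically. The sketch below assumes the standard far-field expansion ΔR ≈ −sin θ (x cos φ + y sin φ) − z cos θ. This is our reconstruction, not a formula quoted from the paper, and θ is measured from the −Z axis as in Section 2.1. The code compares this form with the exact range. It also reports the oscillation amplitude r sin θ and the azimuth at which the track peaks; the platform is then on the opposite side of the target.

```python
import math

R0, H = 600.0, 300.0            # circle radius and flight altitude (Section 4)
RC = math.hypot(R0, H)          # range to the scene center
THETA = math.atan2(R0, H)       # incident angle, measured from the -Z axis

def exact_track(phi, p):
    """Exact slant range minus the scene-center range for platform azimuth phi."""
    x, y, z = p
    return math.sqrt((R0 * math.cos(phi) - x) ** 2
                     + (R0 * math.sin(phi) - y) ** 2 + (H - z) ** 2) - RC

def farfield_track(phi, p):
    """Assumed far-field form: -sin(theta)(x cos phi + y sin phi) - z cos(theta)."""
    x, y, z = p
    return (-math.sin(THETA) * (x * math.cos(phi) + y * math.sin(phi))
            - z * math.cos(THETA))

def sinusoid_params(p):
    """Amplitude r*sin(theta) and the azimuth (alpha + pi) at which the track peaks."""
    x, y, _ = p
    alpha = math.atan2(y, x)
    return math.hypot(x, y) * math.sin(THETA), (alpha + math.pi) % (2 * math.pi)

phis = [2 * math.pi * k / 3600 for k in range(3600)]
target = (3.0, 4.0, 0.0)
amp, phi_peak = sinusoid_params(target)
err = max(abs(exact_track(f, (5.0, 5.0, 5.0)) - farfield_track(f, (5.0, 5.0, 5.0)))
          for f in phis)
print(amp, math.degrees(phi_peak), err)   # amplitude (m), peak azimuth (deg), model error (m)
```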

When there are multiple targets located at different positions and azimuth angles in the scene, the range-compressed echoes form multiple sinusoidal curves that have the same frequency, different amplitudes, and different initial phases. These sinusoids overlap each other with the locus of the central point of the scene as the symmetrical center . By detecting the sinusoidal curve of range-compressed CSAR echo data along the azimuth direction, the position of the target in the scene can be extracted, and 2D imaging of the scene can be realized.
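The focusing step introduced next can be illustrated with a simplified, unfiltered back projection over a binary sinogram. This sketch rests on three assumptions:

- Ranges are already scaled to ground-range pixels, i.e., divided by sin θ.
- There are 72 look angles.
- No ramp filter is applied, whereas a full IRT would normally apply one first.

Two targets draw two overlapping sinusoids. Summing the sinogram along each pixel's own sinusoid returns the full vote count (72) only at the two target positions.

```python
import math

def make_sinogram(targets, n_angles=72, half_width=15):
    """Binary sinogram: each target (x, y) marks the bin nearest to
    rho(phi) = x cos(phi) + y sin(phi) at every look angle phi."""
    phis = [2 * math.pi * k / n_angles for k in range(n_angles)]
    sino = [[0.0] * (2 * half_width + 1) for _ in phis]
    for k, phi in enumerate(phis):
        for x, y in targets:
            j = math.floor(x * math.cos(phi) + y * math.sin(phi) + 0.5) + half_width
            sino[k][j] = 1.0
    return phis, sino

def backproject(phis, sino, half_width, grid):
    """Unfiltered back projection: every pixel sums the sinogram along its own sinusoid."""
    image = {}
    for x in grid:
        for y in grid:
            acc = 0.0
            for phi, row in zip(phis, sino):
                j = math.floor(x * math.cos(phi) + y * math.sin(phi) + 0.5) + half_width
                if 0 <= j < len(row):
                    acc += row[j]
            image[(x, y)] = acc
    return image

phis, sino = make_sinogram([(5, -3), (-4, 6)])
img = backproject(phis, sino, 15, range(-10, 11))
print(sorted(img, key=img.get, reverse=True)[:2])  # the two target pixels
```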

The IRT accumulates and focuses a sinusoidal curve in an image, transforming it into a point on the imaging plane [12]. Ignoring changes in the scattering coefficient of the target, the sinusoidal track of an ideal point target echo after range compression is as follows:

The IRT of is as follows:

where and . When the height of the imaging plane equals the actual height of the target, that is, , the IRT transforms a sinusoidal curve in the range-compressed echo signal into a point in the imaging plane. The amplitude and initial phase of the original sinusoid are related to the coordinates of that point as follows:

The IRT of the range-compressed echo of N ideal point targets is:

Now suppose that the height of the imaging plane differs from the actual height of the target, that is, . According to CSAR confocal 3D imaging theory [13], a target in the 2D image is accurately focused only when the imaging height equals the target height. At other imaging heights, the target is defocused into a circle whose radius is linearly related to the deviation between the imaging height and the target height. In this case, the IRT transforms the sinusoidal curve into a circle in the imaging plane, and the relationship between the radius of the circle and the height deviation can be defined as follows:

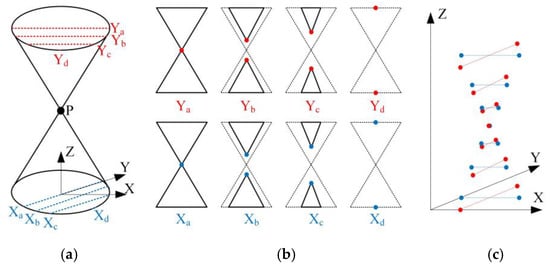

In CSAR imaging mode, the incident angle is constant, so the radius of the circle is linearly related to the height deviation. The defocused circles at different heights therefore form a cone whose vertex is the focused target point P. As shown in Figure 1, P is the intersection point of the cone generatrices passing through the vertex.
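The linear relation can be derived in a few lines, assuming the far-field model ΔR ≈ −sin θ (x cos φ + y sin φ) − z cos θ, with θ measured from the −Z axis. This model is an assumption here; the paper's own expression is not reproduced. A pixel (x′, y′) on an imaging plane at height z₀ matches the target track when the condition below holds. For each φ, this condition defines a line at distance |z − z₀| cot θ from (x, y). As φ sweeps a full circle, these lines envelop a circle of that radius. The z₀ cos θ term also explains why shifting the range center during the IRT selects a different imaging height.

```latex
\begin{aligned}
\Delta R(\varphi) &\approx -\sin\theta\,(x\cos\varphi + y\sin\varphi) - z\cos\theta
  && \text{(target at } (x, y, z)\text{)}\\
\Delta R_{0}(\varphi) &\approx -\sin\theta\,(x'\cos\varphi + y'\sin\varphi) - z_{0}\cos\theta
  && \text{(pixel } (x', y') \text{ at height } z_{0}\text{)}\\
\Delta R_{0} = \Delta R
  &\iff (x'-x)\cos\varphi + (y'-y)\sin\varphi = -(z - z_{0})\cot\theta\\
r_{\mathrm{def}} &= |z - z_{0}|\cot\theta
\end{aligned}
```

With R0 = 600 m and H = 300 m as in Section 4, tan θ = 2, so r_def = 0.5|z − z₀|. A target 2.5 m from the imaging plane would therefore be defocused into a circle of radius about 1.25 m.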

The Hough transform is an effective method for detecting the intersection of straight lines [14]. In the Hough-transformed space of a binary image, each line segment maps to a peak. The intersection point of two line segments corresponds to the sinusoidal curve that passes through both peak points. Given the coordinates and of the two peak points, the intersection coordinates of the two line segments can be calculated from the Hough transformation formula as follows:
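The sketch below illustrates this on a binary slice containing two crossing segments. It builds a standard (θ, ρ) Hough accumulator, picks peaks greedily, and computes the intersection of the two peak lines in closed form using Cramer's rule. The function names, the 1° angular step, and the peak-suppression window are illustrative assumptions, not the authors' implementation.

```python
import math

def hough_lines(points, n_theta=180):
    """Vote every edge pixel into (theta index, rho) bins, rho = x cos(t) + y sin(t).
    theta spans [0, pi) in n_theta steps; rho is rounded to whole pixels."""
    acc = {}
    for t in range(n_theta):
        a = math.pi * t / n_theta
        c, s = math.cos(a), math.sin(a)
        for x, y in points:
            key = (t, math.floor(x * c + y * s + 0.5))
            acc[key] = acc.get(key, 0) + 1
    return acc

def top_peaks(acc, k, guard=5):
    """Greedy peak picking, suppressing a +/-guard neighbourhood after each pick
    (no wrap-around at theta = 0 / pi, which is fine for this example)."""
    votes = dict(acc)
    peaks = []
    for _ in range(k):
        best = max(votes, key=votes.get)
        peaks.append(best)
        for key in [q for q in votes
                    if abs(q[0] - best[0]) <= guard and abs(q[1] - best[1]) <= guard]:
            del votes[key]
    return peaks

def intersect(p1, p2, n_theta=180):
    """Solve x cos(t1) + y sin(t1) = rho1, x cos(t2) + y sin(t2) = rho2 (Cramer's rule)."""
    (t1, r1), (t2, r2) = p1, p2
    a1, a2 = math.pi * t1 / n_theta, math.pi * t2 / n_theta
    det = math.sin(a2 - a1)          # = cos(a1) sin(a2) - sin(a1) cos(a2)
    if abs(det) < 1e-9:
        return None                  # parallel lines: no unique intersection
    x = (r1 * math.sin(a2) - r2 * math.sin(a1)) / det
    y = (r2 * math.cos(a1) - r1 * math.cos(a2)) / det
    return x, y

# Binary slice with two crossing line segments meeting at (20, 20).
edges = {(i, i) for i in range(41)} | {(i, 40 - i) for i in range(41)}
peaks = top_peaks(hough_lines(edges), 2)
print(peaks, intersect(*peaks))   # intersection close to (20, 20)
```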

3.2. Algorithm Flow

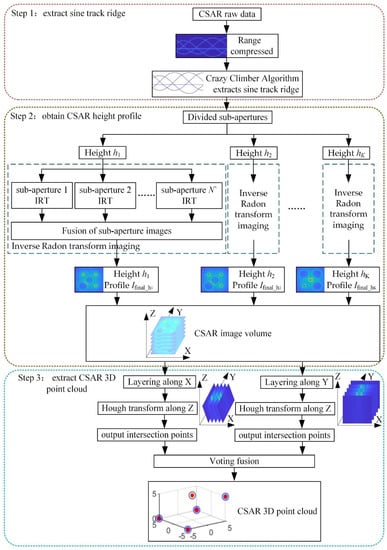

A flow chart of the CSAR fast 3D imaging method based on image detection presented in this paper is shown in Figure 2. This method includes three steps: (1) using the Crazy Climber algorithm to extract the sine track ridge [15], (2) using IRT to obtain the CSAR height profile, and (3) applying Hough transform to extract a CSAR 3D point cloud. The details are as follows.

Figure 2.

Flowchart of the proposed method.

Step 1: Extract the sinusoidal track ridge. The sinusoidal trajectories of different targets overlap and cross in the range-compressed echo signal, so high-quality CSAR images cannot be obtained directly by the IRT. The improved Crazy Climber algorithm [15] is therefore used to extract the ridge of each target's sinusoidal trajectory, which effectively improves CSAR image quality. The specific steps of the improved Crazy Climber algorithm are as follows.

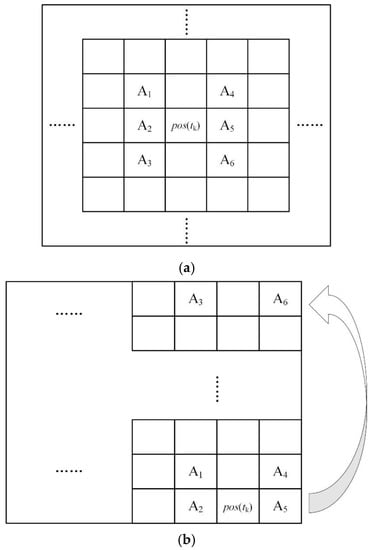

(1) Initialization. is the observation matrix, the observation matrix and the measurement matrix are zero matrices with the same dimensions as , and the Climbers are evenly distributed over the observation matrix . Let the number of Climber moves be .

(2) Move the Climber. The time corresponding to the movement of the Climber is . If the position of the Climber at time is , the rule for estimating the position of the Climber at time involves calculating the probability of the Climber moving to the adjacent six positions:

where , and is the amplitude increment of each of the six adjacent positions relative to the position . When is located inside the matrix, that is, and , the six adjacent positions are , , , , , and . When is located at the edge of the matrix, the matrix is treated as periodic: its opposite ends are connected before the shift is applied. For example, if and , the six adjacent positions are , , , , , and , as shown in Figure 3.

Figure 3.

Schematic diagram of climber position prediction: (a) at a non-boundary; (b) at the boundary.

(3) According to the moving result, add 1 to the corresponding position of the measurement matrix .

(4) Repeat the above steps until all Climber moves have been made, which yields the final measurement matrix .

(5) Trace the measurement matrix along the slow-time direction to form sinusoidal ridge lines, and eliminate ridge lines that are too short, to obtain the ridge line matrix .
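A compact Python sketch of steps (1) to (5) follows. The paper's exact move-probability formula is not reproduced here, so the sketch makes these assumptions:

- Moves use Boltzmann-type weights exp(Δ/T), where T is a hypothetical temperature.
- The six neighbours are one slow-time step back or forward, combined with a range shift of −1, 0, or +1.
- Indexing wraps around at the matrix edges.
- Step (5) is simplified to taking the most-visited range bin in each slow-time row, without removing short ridges.

```python
import math
import random

def crazy_climber(amp, n_moves=100, temperature=0.1, seed=0):
    """Sketch of a crazy-climber ridge detector on amp[t][r]
    (rows: slow time, columns: range bins). Both axes wrap around."""
    rng = random.Random(seed)
    nt, nr = len(amp), len(amp[0])
    measure = [[0] * nr for _ in range(nt)]
    # (1) Initialization: one climber per cell, i.e. evenly spread over the matrix.
    climbers = [(t, r) for t in range(nt) for r in range(nr)]
    for _ in range(n_moves):                      # (4) repeat for n_moves steps
        moved = []
        for t, r in climbers:
            # (2) Six neighbours: one slow-time step back or forward and a range
            # shift of -1, 0 or +1, with periodic indexing at the matrix edges.
            cands = [((t + dt) % nt, (r + dr) % nr)
                     for dt in (-1, 1) for dr in (-1, 0, 1)]
            # Assumed Boltzmann-type rule: larger amplitude increments are favoured.
            weights = [math.exp((amp[ct][cr] - amp[t][r]) / temperature)
                       for ct, cr in cands]
            nxt = rng.choices(cands, weights=weights)[0]
            # (3) Record the visit in the measurement matrix.
            measure[nxt[0]][nxt[1]] += 1
            moved.append(nxt)
        climbers = moved
    return measure

def ridge_by_row(measure):
    """Simplified step (5): most-visited range bin in every slow-time row."""
    return [max(range(len(row)), key=row.__getitem__) for row in measure]

# Toy observation matrix: a sinusoidal ridge (amplitude 1) on a zero background.
NT, NR = 48, 16
track = [round(8 + 4 * math.sin(2 * math.pi * t / NT)) for t in range(NT)]
amp = [[1.0 if r == track[t] else 0.0 for r in range(NR)] for t in range(NT)]
ridge = ridge_by_row(crazy_climber(amp))
hits = sum(r == c for r, c in zip(ridge, track))
print(hits, "of", NT, "rows recovered")
```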

Step 2: Obtain the CSAR height profile. Two-dimensional profiles of CSAR with different heights are obtained via IRT.

(1) Divide the sub-apertures. According to the scattering characteristics of the target in the scene, is divided into sub-apertures along the slow time, where , is the number of sub-apertures, and is the sub-aperture width;

(2) Set the height of the imaging plane . By changing the range center of the data during the IRT, 2D imaging of the CSAR at different height planes can be realized;

(3) Sub-aperture IRT imaging. IRT is performed on along the slow time direction to obtain , which is a 2D image of each sub-aperture at height :

where denotes the IRT.

(4) Sub-aperture image fusion. Incoherent sub-aperture processing tends to average out the target response, and scattering-center intensity varies across sub-apertures. To reduce both effects, the sub-aperture images are fused based on the generalized likelihood ratio test (GLRT) [16]: for each pixel, the largest value among the sub-aperture images is taken as the pixel value of the final 2D image:

where is the CSAR 2D image representing the height . CSAR 2D images with different heights are then superimposed to form a CSAR image volume .
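Sub-aperture division and the maximum-based fusion rule in (4) reduce to a few lines. The sketch below assumes equal-width contiguous sub-apertures, dropping any remainder pulses, and represents images as plain nested lists. With the 179,520 slow-time samples and eight sub-apertures used in Section 4.2, each sub-aperture holds 22,440 samples.

```python
def split_subapertures(n_pulses, n_sub):
    """Contiguous, non-overlapping slow-time index blocks of equal width.
    Any remainder pulses (n_pulses % n_sub) are simply dropped in this sketch."""
    width = n_pulses // n_sub
    return [range(k * width, (k + 1) * width) for k in range(n_sub)]

def fuse_max(images):
    """Pixel-wise maximum over equally sized 2D sub-aperture images."""
    return [[max(pixel) for pixel in zip(*rows)] for rows in zip(*images)]

def image_volume(images_per_height):
    """Stack fused 2D images (one list of sub-aperture images per height) into a volume."""
    return [fuse_max(images) for images in images_per_height]

subs = split_subapertures(179_520, 8)
print([len(s) for s in subs])                               # eight blocks of 22440
print(fuse_max([[[1, 5], [3, 0]], [[2, 4], [0, 7]]]))       # [[2, 5], [3, 7]]
```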

Step 3: Extract the CSAR 3D point cloud. Hough transform is used to extract the intersection points of the height slices of the defocused circle in the X and Y directions, respectively. Lastly, 3D point cloud extraction is completed after voting fusion.

(1) Layering along the X (Y) direction. First, the CSAR image volume obtained in Step 2 is layered along the X direction and the Y direction, respectively, as shown in Figure 4a. Each layer's data () form a slice in the Z–Y (Z–X) plane, where . Then, the Canny edge detector is used to binarize each layer of data () to obtain (), which prepares the data for the Hough transform;

Figure 4.

Schematic diagram of extracting a 3D point cloud via Hough transform; (a) layering along the X (Y) direction; (b) Hough transform output intersection point; and (c) voting fusion of the intersecting points.

(2) Hough transform along the Z direction. Carry out a one-dimensional Hough transform on () along the Z direction and extract the peak points of the Hough transform in each layer, where . The upper limit of can be set according to the number of targets to be extracted in this layer;

(3) Output intersection points. According to Formula (14), calculate the intersection points of the straight lines in (), as shown in Figure 4b;

(4) Voting fusion to extract the 3D point cloud. Each intersection point output by the Hough transform casts a vote in the corresponding cell of the 3D grid of the CSAR image volume . As shown in Figure 4c, the intersection coordinates from the X and Y directions coincide only when the height of the imaging plane equals the actual height of the target, i.e., when the target is fully focused. Grid cells whose vote count exceeds a certain threshold are taken as points of the CSAR 3D point cloud, which completes the extraction.
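The voting step can be sketched with a counter over grid indices. The cell indices below are hypothetical. A cell is kept when its vote count reaches the threshold (>=). This comparison is an assumption: with the threshold of 2 used in Section 4.1, it ensures that a cell hit once from the X direction and once from the Y direction survives.

```python
from collections import Counter

def vote_fusion(points_x, points_y, threshold=2):
    """Count votes per 3D grid cell (ix, iy, iz) from the X- and Y-direction
    intersection points and keep the cells that reach the threshold."""
    votes = Counter(points_x)
    votes.update(points_y)
    return sorted(cell for cell, n in votes.items() if n >= threshold)

# Hypothetical intersection points (grid indices) from the two slicing directions.
from_x = [(10, 12, 0), (10, 12, 1), (4, 4, 5)]
from_y = [(10, 12, 0), (4, 4, 5), (7, 7, 3)]
print(vote_fusion(from_x, from_y))  # [(4, 4, 5), (10, 12, 0)]
```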

3.3. Algorithm Complexity Analysis

The traditional 3D BP imaging algorithm must perform phase compensation for every 3D grid cell at every azimuth pulse, so its imaging speed is extremely low. In this paper, the IRT and the Hough transform are used to extract the 3D point cloud, which greatly increases the speed of CSAR 3D imaging. Let the number of azimuth pulses of the full CSAR aperture data be , the number of range samples be , and the 3D imaging grid size be .

The traditional 3D BP algorithm needs phase compensation operations, and the algorithm time is as follows:

where is the time consumption of one phase compensation operation using the traditional 3D BP algorithm.
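The product of the pulse count and the grid size gives the number of phase compensations, which is a rough illustration of why BP scales poorly. The sketch below assumes one compensation per pulse per cell, as stated above. It uses a hypothetical 100 × 100 × 50 grid because the paper's grid size is not reproduced here.

```python
def bp_phase_compensations(n_azimuth, nx, ny, nz):
    """Traditional 3D BP: one phase compensation per azimuth pulse per grid cell."""
    return n_azimuth * nx * ny * nz

def bp_time(n_azimuth, nx, ny, nz, t_pc):
    """Estimated BP time given the cost t_pc (s) of one phase compensation."""
    return bp_phase_compensations(n_azimuth, nx, ny, nz) * t_pc

# 179,520 pulses (Section 4) and a hypothetical 100 x 100 x 50 grid.
n_ops = bp_phase_compensations(179_520, 100, 100, 50)
print(f"{n_ops:.3e}")  # 8.976e+10
```

Even for this modest grid, one circle of data already requires about 9 × 10^10 phase compensations.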

The IBP 3D imaging method requires one geometric interpolation kernel construction, interpolation and phase compensation operations, and vector search operations. The algorithm time is as follows:

where denotes the time consumption of one geometric interpolation kernel construction, and denotes the time consumption of one vector search operation.

The proposed method extracts the ridge line of the sinusoidal trajectory once, which involves Climber motion predictions. For IRT sub-aperture fusion imaging, the full-aperture data are divided into sub-apertures. Each imaging dataset then requires IRTs and one GLRT-based sub-aperture image fusion. The Hough transform is then used to extract the intersection points, followed by one voting fusion. The time consumption is as follows:

where is the time consumption of one Climber motion prediction, indicates the time consumption of one IRT, is the time consumption of one sub-aperture image fusion based on GLRT, indicates the time consumption of one Hough transform to extract intersection points, and is the time consumption of one vote for fusion.

The proposed algorithm simplifies the phase compensation operation of the traditional 3D BP or IBP algorithm into Climber motion prediction, IRT, sub-aperture image fusion, Hough transform to extract the intersection points, and one voting fusion, which effectively simplifies the computational complexity of the model and improves the imaging speed.

4. Data Processing

4.1. Simulation Data Processing

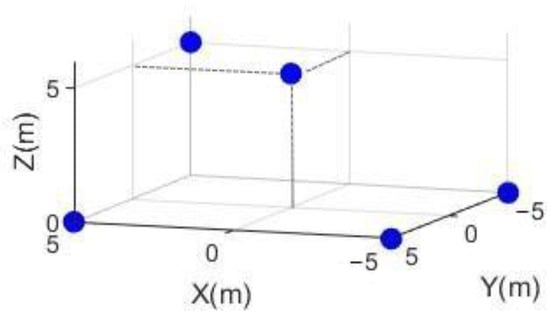

This section uses simulated data to analyze the performance of the proposed algorithm. The radar transmits FMCW signals with a carrier frequency of 9.6 GHz and a bandwidth of 750 MHz. The flight radius of the SRUAV is 600 m, the flight altitude is 300 m, and the flight speed is 7 m/s. One circle of flight contains 179,520 slow-time sampling points and 1502 fast-time sampling points. The size of the imaging scene is , which is divided into 3D imaging grids of based on the range resolution of the echo. The simulation scene contains five point targets located in two height planes and distributed at the center and periphery of the scene. The distribution of the simulated point targets is shown in Figure 5, and their coordinates are provided in Table 1.

Figure 5.

Simulated point target distribution diagram.

Table 1.

Coordinates of the five point targets.

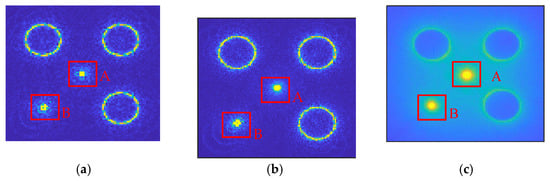

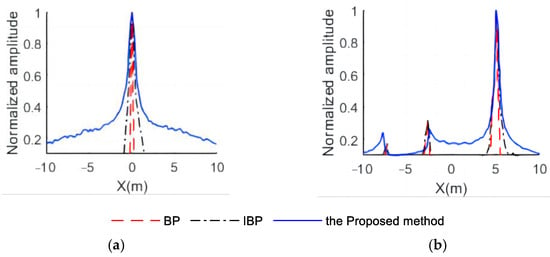

The 2D CSAR imaging results obtained by the IRT at height z = 5 m are shown in Figure 6. Like the traditional BP and IBP algorithms, the proposed method focuses the targets at the correct positions. X slices through points A and B are shown in Figure 7. Point A is located at the center of the scene, and its focus position is the same across the compared images. However, the resolution of point A obtained by the proposed method is lower than that of the traditional BP and IBP algorithms. Point B is far from the scene center, and its focus position is also the same across the compared images; its resolution under the proposed method is similar to that of the traditional BP and IBP algorithms. The resolution of a target is taken as the main-lobe width at a normalized amplitude of 0.707. A resolution comparison of points A and B is given in Table 2. The resolution of the proposed method (0.36 m for both point A and point B) is slightly coarser than that of the traditional BP algorithm (0.2 m for point A and 0.3 m for point B) and the IBP algorithm (0.24 m for point A and 0.32 m for point B). Nevertheless, the target positions agree with those of the BP algorithm, so the method can be used for fast CSAR image processing.
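The sketch below computes the resolution criterion above from a sampled slice. The criterion is the main-lobe width at a normalized amplitude of 0.707, i.e., −3 dB. The code interpolates linearly between the samples that bracket each crossing. The triangular test profile is synthetic and is not data from Figure 7.

```python
def width_at_level(samples, spacing, level=0.707):
    """Main-lobe width (same unit as `spacing`) at a normalised amplitude `level`,
    with linear interpolation between the samples that bracket each crossing."""
    peak = max(range(len(samples)), key=lambda i: samples[i])
    norm = [s / samples[peak] for s in samples]
    i = peak
    while i > 0 and norm[i - 1] >= level:          # walk left while above the level
        i -= 1
    j = peak
    while j < len(norm) - 1 and norm[j + 1] >= level:  # walk right while above the level
        j += 1
    if i == 0 or j == len(norm) - 1:
        raise ValueError("main lobe not bracketed by the samples")
    left = (i - 1) + (level - norm[i - 1]) / (norm[i] - norm[i - 1])
    right = j + (norm[j] - level) / (norm[j] - norm[j + 1])
    return (right - left) * spacing

# Example: a triangular slice sampled every 0.1 m; width = 2 * (1 - 0.707) m.
xs = [-2 + 0.1 * k for k in range(41)]
profile = [max(0.0, 1 - abs(x)) for x in xs]
print(round(width_at_level(profile, 0.1), 3))  # 0.586
```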

Figure 6.

Two-dimensional imaging comparison of the three methods when height z = 5 m: (a) 2D image using the traditional BP algorithm when z = 5 m; (b) 2D image using the IBP algorithm when z = 5 m; and (c) 2D image using the proposed imaging algorithm when z = 5 m.

Figure 7.

Comparison of point target slices: (a) X slices of point A; (b) X slices of point B.

Table 2.

Comparison of point target resolution.

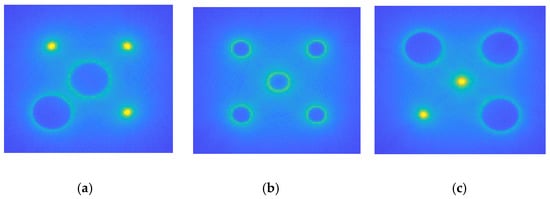

The 2D CSAR images at typical heights obtained by the IRT are shown in Figure 8. At height z = 0 m, three points are well focused, and the other two are defocused into circles. At z = 5 m, two points are well focused, and the other three are defocused into circles. The plane z = 2.5 m contains no point target and is equidistant from the two target planes (z = 0 m and z = 5 m), so all five point targets are defocused into circles of approximately equal size. These circles are fully consistent with the point targets set in the scene.

Figure 8.

Typical height images based on IRT: (a) image when the height z = 0 m; (b) image when the height z = 2.5 m; and (c) image when the height z = 5 m.

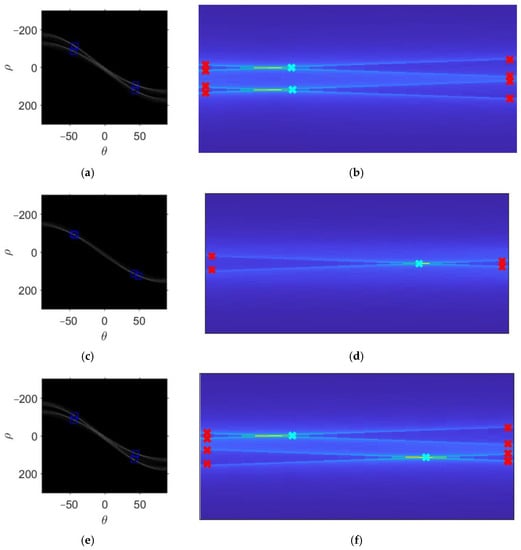

After the image volume is layered by X position, the Hough transform is used to extract the intersection points of the defocused circles, which change with height to form a cone. The extraction results for typical X-position slices are shown in Figure 9. For x = −5 m, the peak points detected after the Hough transform are shown in Figure 9a. The endpoints of the extracted lines are shown as red markers in Figure 9b, and the intersection points of the detected lines are shown as cyan markers in Figure 9b. Here, the two intersection points are consistent with the two point targets at height z = 0 m in the scene. For x = 0 m, the detected peak points are shown in Figure 9c, and the extracted lines and their intersection points are shown in Figure 9d. For x = 5 m, the detected peak points are shown in Figure 9e, and the extracted lines and their intersection points are shown in Figure 9f. The two intersection points lie at different heights, consistent with the target heights z = 0 m and z = 5 m in the scene.

Figure 9.

Intersection points extracted from slices at different X positions via Hough transform: (a) peak points detected at x = −5 m; (b) end points and intersection points detected at x = −5 m; (c) peak points detected at x = 0 m; (d) end points and intersection points detected at x = 0 m; (e) peak points detected at x = 5 m; and (f) end points and intersection points detected at x = 5 m.

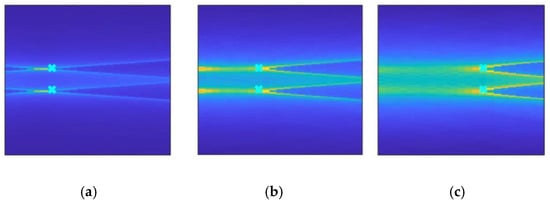

Taking the point targets (−5, 5, 0) and (−5, −5, 0) as examples, the intersection points in the x = −5 m, x = −6.5 m, and x = −8 m slices are extracted, as shown in Figure 10. As the slice moves away from the target slice x = −5 m, the extracted intersection points shift to the right, that is, to greater heights and away from the focusing height. This is consistent with the analysis in Figure 4b.

Figure 10.

Intersection points extracted from different X position slices via Hough transform for the same target points: (a) intersection points detected at x = −5 m; (b) intersection points detected at x = −6.5 m; and (c) intersection points detected at x = −8 m.

Extracting the intersection points of the defocused-circle height slices in the Y direction via the Hough transform works in the same way as in the X direction and is therefore not repeated.

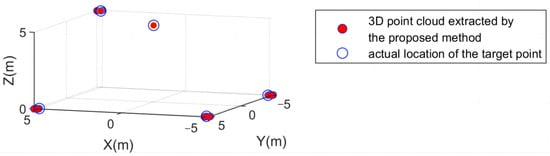

For voting fusion, the threshold is set to 2. The extracted 3D point cloud (Figure 11) demonstrates that the proposed method can effectively extract CSAR 3D point clouds. However, the limited slant-range resolution of the system means that target positions do not fall exactly on the imaging grid. This causes a deviation of about one grid cell when the intersection points are extracted via the Hough transform. Consequently, target points far from the imaging center are somewhat scattered.

Figure 11.

Result of 3D point cloud extraction.

The above data processing was performed in MATLAB R2019b on an i7-10750H processor. The imaging times of the traditional BP 3D imaging algorithm, the IBP 3D imaging method, and the proposed algorithm are compared in Table 3. The proposed algorithm took 582.1 s, which is about half the time of the IBP 3D imaging method and one-sixth that of the traditional BP 3D imaging algorithm.

Table 3.

Time consumption comparison.

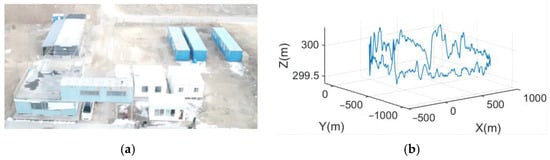

4.2. Measured Data Processing

In this section, measured echo data are used to analyze the performance of the proposed algorithm. The radar mounted on the SRUAV transmits FMCW signals with a carrier frequency of 9.6 GHz and a bandwidth of 750 MHz. The flight radius of the SRUAV is 600 m, the flight altitude is 300 m, and the flight speed is 7 m/s. One flight circle contains 179,520 slow-time sampling points and 1502 fast-time sampling points. The size of the imaging scene is , which is divided into 3D imaging grids based on the range resolution of the echo. The center of the scene is a house composed of multiple containers, as shown in Figure 12a; the flight path is shown in Figure 12b. Because of system performance limitations, the three-axis self-stabilizing platform of the airborne CSAR was switched off. Therefore, the beam center direction of the CSAR changes with the attitude of the SRUAV platform and cannot be guaranteed to always point to the observation center.

Figure 12.

CSAR experiment scene and flight trajectory: (a) optical image of the scene center; (b) flight trajectory of the SRUAV using the airborne recorder.

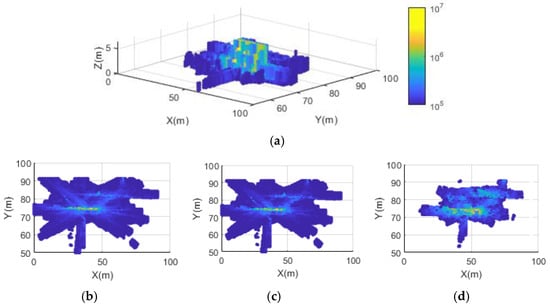

The scattering characteristics of the containers change with the observation angle. Using the sinusoidal track ridge of , the full aperture is evenly divided into eight non-overlapping sub-apertures, and the sub-aperture images are obtained by the IRT. The full-aperture image is obtained by GLRT fusion of the sub-aperture images, and the full-aperture images at different heights constitute the CSAR image volume. The Hough transform is used to extract the intersection points of the defocused-circle height slices at different positions in the X and Y directions, and the voting fusion threshold is set to 10. The results of extracting the CSAR 3D point cloud are shown in Figure 13.

Figure 13.

Three-dimensional point cloud extraction results of the measured scene: (a) 3D imaging results of the method proposed in this paper; (b) top view of the 3D point cloud using the traditional BP algorithm; (c) top view of the 3D point cloud using the IBP algorithm; and (d) top view of the 3D point cloud using the proposed method.

The reconstructed geometric shape of the container house in the center of the scene is shown in Figure 13a. The height of the second floor is 6.36 m, which is consistent with the height of the actual scene. Because there are many containers on the first floor that influence each other, the geometric shape of the point cloud on the first floor is seriously distorted. However, this method can still provide a reasonable estimation of the height. Figure 13b–d shows that compared to traditional BP and IBP 3D imaging methods, the proposed method offers a better 3D focusing effect.

The above data processing was performed in MATLAB R2019b on an Intel Core i7-10750H processor. The imaging times of the traditional BP 3D imaging algorithm, the IBP 3D imaging method, and the proposed algorithm are compared in Table 4. The proposed algorithm took 3809.32 s, which is about half the time of the IBP 3D imaging method and about one-sixth that of the traditional BP 3D imaging algorithm.

Table 4.

Time consumption comparison.

5. Conclusions

CSAR is a typical 3D imaging mode of SAR that can achieve 3D imaging from a small airborne platform by observing targets in . However, the low efficiency of BP 3D imaging algorithms limits their implementation on edge nodes, which hinders their adoption in practical engineering applications. To fill this gap, this paper proposed a CSAR 3D imaging method suitable for edge computing. By imaging the target directly with image processing methods such as track ridge extraction, IRT, and the Hough transform, our method replaces the pulse-by-pulse phase compensation and accumulation in azimuth of traditional BP imaging and, in the measured-data experiment, shortens the imaging time to about one-sixth of that of traditional BP 3D imaging. The correctness and effectiveness of the proposed method were verified by the imaging results for simulated and measured data. The proposed method can be applied to aircraft, small ground terminals, and other edge nodes to realize fast CSAR 3D imaging, providing a technical means for the efficient transmission and application of SAR perception information in the IoT.

Author Contributions

Conceptualization and writing—original draft, L.C.; methodology and funding acquisition, Y.M.; validation, Z.H.; formal analysis, B.L.; resources, Y.S.; writing review and editing, W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Research of Military Internal Scientific Project under grant number KYSZQZL2019.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CSAR | Circular synthetic aperture radar |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| BP | Back projection |
| FMCW | Frequency-modulated continuous wave |
| GLRT | Generalized likelihood ratio test |
| IBP | Improved BP |
| IRT | Inverse Radon transform |
| IoT | Internet of Things |
| SRUAV | Small rotor unmanned aerial vehicle |

References

1. Hu, X.; Liu, Z.; Yu, X.; Zhao, Y.; Chen, W.; Hu, B.; Du, X.; Li, X.; Helaoui, M. Convolutional Neural Network for Behavioral Modeling and Predistortion of Wideband Power Amplifiers. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3923–3937.

2. Hu, X.; Zhang, Y.; Liao, X.; Liu, Z.; Wang, W.; Ghannouchi, F.M. Dynamic Beam Hopping Method Based on Multi-Objective Deep Reinforcement Learning for Next Generation Satellite Broadband Systems. IEEE Trans. Broadcast. 2020, 66, 630–646.

3. Zuo, F.; Li, J.; Hu, R.; Pi, Y. Unified coordinate system algorithm for terahertz video-SAR image formation. IEEE Trans. Terahertz Sci. Technol. 2018, 8, 725–735.

4. Han, D.; Zhou, L.; Jiao, Z.; Wu, Y. A coherent 3-D imaging method for multi-circular SAR based on an improved 3-D back projection algorithm. J. Electron. Inf. Technol. 2021, 43, 131–137.

5. Li, Y.; Chen, L.; An, D.; Zhou, Z. A novel DEM extraction method based on chain correlation of CSAR subaperture images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8718–8728.

6. Zhang, J.; Suo, Z.; Li, Z.; Bao, Z. Joint cross-correlation DEM extraction method for CSAR subaperture image sequences. Syst. Eng. Electron. 2018, 40, 1939–1944.

7. Feng, S.; Lin, Y.; Wang, Y.; Teng, F.; Hong, W. 3D Point Cloud Reconstruction Using Inversely Mapping and Voting from Single Pass CSAR Images. Remote Sens. 2021, 13, 3534.

8. Zhang, X.; Shi, J.; Wei, S. Three Dimensional Synthetic Aperture Radar; National Defense Industry Press: Beijing, China, 2017.

9. Bao, Z.; Xing, M.; Wang, T. Radar Imaging Technique; Electronic Industry Press: Beijing, China, 2005.

10. Ma, Y.; Chu, L.; Yang, X.; Hou, X. SAR autofocusing method based on slant range wavenumber subband division. J. Eng. Univ. PLA 2022, 1, 12–21.

11. Kan, X.; Li, Y.; Wang, H.; Wang, Y.; Fu, C. A new algorithm of fast back projection in circular SAR. Guid. Fuze 2018, 39, 10–14.

12. Stankovic, L.; Dakovic, M.; Thayaparan, T.; Vesna, P. Inverse Radon transform–based micro-Doppler analysis from a reduced set of observations. IEEE Trans. Aerosp. Electron. Syst. 2015, 51, 1155–1169.

13. Ishimaru, A.; Chan, T.K.; Kuga, Y. An imaging technique using confocal circular synthetic aperture radar. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1524–1530.

14. Jiang, J.; Shen, J.; Zhou, Z.; Han, P. Research on rotary drum assembly and adjustment technology based on improved probabilistic Hough transform. J. Appl. Opt. 2020, 41, 394–399.

15. Chen, J.; Yang, B.; Huang, K.; Liu, Y.; Liu, X. Applications of a ridgeline extraction method in bearing fault diagnosis. China Mech. Eng. 2021, 32, 1157–1163.

16. Feng, D.; An, D.; Chen, L.; Huang, X. Holographic SAR tomography 3-D reconstruction based on iterative adaptive approach and generalized likelihood ratio test. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 59, 305–315.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).