Abstract

A general lack of understanding pertaining to deep feedforward neural networks (DNNs) can be attributed partly to a lack of tools with which to analyze the composition of non-linear functions, and partly to a lack of mathematical models applicable to the diversity of DNN architectures. In this study, we analyze DNNs using directed acyclic graphs (DAGs) under a number of basic assumptions pertaining to activation functions, non-linear transformations, and DNN architectures. DNNs that satisfy these assumptions are referred to as general DNNs. Our construction of an analytic graph was based on an axiomatic method in which DAGs are built from the bottom–up through the application of atomic operations to basic elements in accordance with regulatory rules. This approach allowed us to derive the properties of general DNNs via mathematical induction. We demonstrate that the proposed analysis method enables the derivation of some properties that hold true for all general DNNs, namely that DNNs “divide up” the input space, “conquer” each partition using a simple approximating function, and “sparsify” the weight coefficients to enhance robustness against input perturbations. This analysis provides a systematic approach with which to gain theoretical insights into a wide range of complex DNN architectures.

1. Introduction

Deep feedforward neural networks (DNNs) have revolutionized the use of machine learning in many fields, such as computer vision and signal processing, where they have been used to resolve ill-posed inverse problems and sparse recovery problems [1,2]. Much of the deep learning literature describes the construction of neural networks capable of attaining a desired level of performance for a given task. However, researchers have yet to elucidate several fundamental issues that are critical to the function of DNNs. Predictions based on non-explainable and non-interpretable models raise trust issues pertaining to the deployment of those neural networks in practical applications [3]. This lack of understanding can be attributed, at least partially, to a lack of tools with which to analyze the composition of non-linear activation functions in DNNs, as well as a lack of mathematical models applicable to the diversity of DNN architectures. This paper reports on a preliminary study of fundamental issues pertaining to function approximation and the inherent stability of DNNs.

Simple series-connected DNN models, in which affine linear mappings alternate with activation functions, have been widely adopted for analysis [4,5,6]. According to this model, the input domain is partitioned into a collection of polytopes in a tree-like manner. Each node of the tree can be associated with a polytope and one affine linear mapping, which gives a local approximation of an unknown target function with a domain restriction on the polytope [7,8]. We initially considered whether the theoretical results derived in those papers were intrinsic/common to all DNNs or unique to this type of network. However, our exploration of this issue was hindered by problems encountered in representing a large class of DNN architectures and in formulating the computation spaces for activation functions and non-linear transformations. In our current work, we addressed the latter problem by assuming that all activation functions can be expressed as networks with point-wise continuous piecewise linear (CPWL) activation functions and that all non-linear transforms are Lipschitz functions. The difficulties involved in covering all possible DNNs prompted us to address the former problem by associating DNNs with graphs that can be described in a bottom–up manner using an axiomatic approach, thereby allowing for analysis of each step in the construction process. This approach made it possible for us to build complex networks from simple ones and derive their intrinsic properties via mathematical induction.

In the current study, we sought to avoid the generation of graphs with loops by describing DNNs using directed acyclic graphs (DAGs). The arcs are associated with basic elements that correspond to operations applied to the layers of a DNN (e.g., linear matrix, affine linear matrix, non-linear activation function/transformation), while nodes that delineate basic elements are used to relay and reshape the dimension of an input or combine outputs from incoming arcs to outgoing arcs. We refer to DNNs that can be constructed using the proposed axiomatic approach as general DNNs. It is unclear whether general DNNs are equivalent to all DNNs that are expressible using DAGs. Nevertheless, general DNNs include modules widely employed in well-known DNN architectures. The proposed approach makes it possible to extend the theoretical results for series-connected DNNs to general DNNs, as follows:

- A DNN DAG divides the input space via partition refinement using either a composition of activation functions along a path or a fusion operation combining inputs from more than one path in the graph. This makes it possible to approximate a target function in a coarse-to-fine manner by applying a local approximating function to each partitioning region in the input space. Furthermore, the fusion operation means that domain partition tends not to be a tree-like process.

- Under mild assumptions related to point-wise CPWL activation functions and non-linear transformations, the stability of a DNN against local input perturbations can be maintained using sparse/compressible weight coefficients associated with incident arcs to a node.

Accordingly, we can conclude that a general DNN “divides” the input space, “conquers” the target function by applying a simple approximating function over each partition region, and “sparsifies” weight coefficients to enhance robustness against input perturbations.

In the literature, graphs are commonly used to elucidate the structure of DNNs; however, they are seldom used to further the analysis of DNNs. Both graph DNNs [9] and the proposed approach adopt graphs for analysis; however, graph DNNs focus on the operations of neural networks in order to represent real-world datasets in graphs (e.g., social networks and molecular structure [10]), whereas our approach focuses on the construction of analyzable graph representations by which to deduce intrinsic properties of DNNs.

The remainder of this paper is organized as follows. In Section 2, we present a review of related works. Section 3 outlines our bottom–up axiomatic approach to the construction of DNNs. Section 4 outlines the function approximation and stability of general DNNs. Concluding remarks are presented in Section 5.

Notation 1.

Matrices are denoted using bold upper case, and vectors are denoted using bold lower case. We also use $x_i$ to denote the i-th entry of a vector ($\mathbf{x} \in \mathbb{R}^n$), $\|\mathbf{x}\|_2$ to denote its Euclidean norm, and $\operatorname{diag}(\mathbf{x})$ to denote a diagonal matrix with diagonal $\mathbf{x}$.

2. Related Works

Below, we review analytic methods that are applicable to the derivation of network properties with the aim of gaining a more complete understanding of DNNs.

The ordinary differential equation (ODE) approach was originally inspired by a residual network (ResNet) [11], which is regarded as a discrete implementation of an ODE [12]. The ODE approach can be used to interpret networks by treating them as different discretizations of different ODEs. Note that the process of developing numerical methods for ODEs makes it possible to develop new network architectures [13]. The tight connection between the ODE and dynamic systems [14] makes it possible to study the stability of forward inference in a DNN and the well-posedness of learning a DNN (i.e., whether a DNN can be generalized by adding appropriate regularizations or training data), in which the stability of the DNN is related to initial conditions, while network design is related to the design of the ODE system. The ODE approach can also be used to study recurrent networks [15]. Nevertheless, when adopting this approach, one must bear in mind that the conclusions of an ODE cannot be applied to the corresponding DNN in a straightforward manner because a numerical ODE may undergo several discretization approximations (e.g., forward/backward Euler approximations), which can generate inconsistent results [16].

Some researchers have sought to use existing knowledge of signal processing in the design of DNN-like networks that are more comprehensible without sacrificing performance. This can often be achieved by replacing non-linear activation functions with interpretable non-linear operations in the form of non-linear transforms. Representative examples include the scattering transform [17,18] and the Saak transform [19]. The scattering transform takes advantage of the wavelet transform and scattering operations in physics. The Saak transform employs statistical methods with invertible approximations.

Network design was also inspired by the methods used in optimization algorithms to solve ill-posed inverse problems [20]. The unrolling approach involves the systematic transformation of an iterative algorithm (for an ill-posed inverse problem) into a DNN. The number of iterations becomes the number of layers, and the matrix in any given iteration is relaxed through the use of affine linear operations and activation functions. This makes it possible to infer the solution of the inverse problem by using a DNN. This is an efficient approach to deriving a network for an inverse problem and often achieves performance exceeding the theoretical guarantees for conventional methods [21,22]; however, it does not provide sufficient insight into the properties of DNNs that are capable of solving the inverse problem. A thorough review of this topic can be found in [2].

The un-rectifying method is closely tied to the problem-solving method used for piecewise functions, wherein the domain is partitioned into intervals to be analyzed separately. This approach takes advantage of the fact that a piecewise function is generally difficult to analyze as a whole but is tractable when the domain is restricted to a partitioned interval. When applying the un-rectifying method, a point-wise CPWL activation function is replaced with a finite number of data-dependent linear mappings. This makes it possible to associate different inputs with different functions. The method replaces the point-wise CPWL activation function $\rho$ with a data-dependent linear mapping, as follows:

$$\rho(\mathbf{x}) = \mathbf{D}^{\rho}_{\mathbf{x}} \mathbf{x}, \qquad (1)$$

where $\mathbf{D}^{\rho}_{\mathbf{x}}$ is the un-rectifying matrix for $\rho$ at $\mathbf{x}$. If $\rho$ is the ReLU, then $\mathbf{D}^{\rho}_{\mathbf{x}}$ is a diagonal matrix with diagonal entries in $\{0, 1\}$. The un-rectifying variables in the matrix provide crucial clues with which to characterize the function of the DNN. For example, comparing the un-rectifying representations of $M_2 \rho M_1$ with inputs $\mathbf{x}$ and $\mathbf{y}$ yields

$$M_2 \rho(M_1 \mathbf{x}) = M_2 \mathbf{D}^{\rho}_{M_1 \mathbf{x}} M_1 \mathbf{x}, \qquad (2)$$

$$M_2 \rho(M_1 \mathbf{y}) = M_2 \mathbf{D}^{\rho}_{M_1 \mathbf{y}} M_1 \mathbf{y}. \qquad (3)$$

Note that the sole difference between (2) and (3) lies in the un-rectifying matrices ($\mathbf{D}^{\rho}_{M_1 \mathbf{x}}$ and $\mathbf{D}^{\rho}_{M_1 \mathbf{y}}$, respectively). This is illustrated in the following example involving the application of the un-rectifying method to the analysis of domain partitioning in a neural network. Refer to [5] for more examples of the approach.

Un-Rectifying Analysis

Consider a simple regression model comprising a sequence of composition operations:

where denotes affine linear mappings and . Theoretical results for this network were derived using affine spline insights [4] and the un-rectifying method [5,23].

When considering an input space partitioned using (4), it is important to consider the ReLU activation functions due to the fact that affine linear mappings are global continuous functions. Let denote the finest input domain partition generated by . Appending an additional layer () to refines partition , which results in (i.e., every partitioning region in is contained in one of the partitioning regions in ) [5]. Below, we demonstrate that partitioning regions can be expressed via induction using an un-rectifying representation of ReLUs.

Consider the basic case of a single ReLU layer:

where state vector lists diagonal entries in , the values of which depend on . Input space is partitioned by hyperplanes derived from the rows of into no more than partitioning regions. Let denote the collection of all possible vectors, and let denote its size. The fact that each partitioning region in can be associated with precisely one element in means that partitioning regions can be treated as indices using vector . The partitioning region indexed by vector as for can be characterized as an intersection of half-spaces:

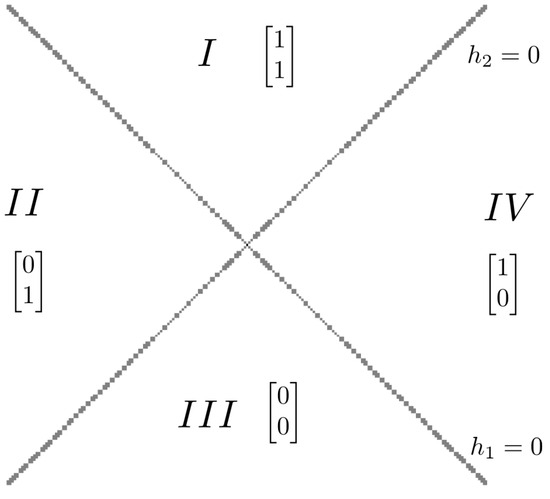

Thus, regression function comprises partitioning regions. When restricted to region , the function is . Figure 1 illustrates the input domain partitioning of , where

Figure 1.

Partitioning regions of regression function are indexed by the lists of diagonal entries of un-rectifying matrices , and corresponding to I, , , and , respectively.

consists of two hyperplanes ( and ), which divide into partition comprising regions I, , , and . Region I is characterized by and , while region is characterized by and , etc.
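The indexing of partitioning regions by un-rectifying state vectors can be checked numerically. The following is a minimal sketch and not the example shown in Figure 1: the weight matrix `W` and bias `b` are hypothetical values chosen so that the two hyperplanes coincide with the coordinate axes, and all function names are illustrative.

```python
# Hypothetical 2x2 layer: rows of W (with bias b) define two hyperplanes in R^2.
W = [[1.0, 0.0],
     [0.0, 1.0]]
b = [0.0, 0.0]

def affine(W, b, x):
    """Return W x + b for a list-of-rows matrix W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    return [max(0.0, vi) for vi in v]

def state_vector(v):
    """Diagonal of the un-rectifying matrix of ReLU at pre-activation v."""
    return tuple(1 if vi > 0 else 0 for vi in v)

def unrectified_relu(v):
    """Apply ReLU as the data-dependent linear map D_v v."""
    return [d * vi for d, vi in zip(state_vector(v), v)]

# Sample points away from the hyperplanes and collect the region indices.
points = [(i + 0.5, j + 0.5) for i in range(-3, 3) for j in range(-3, 3)]
regions = {state_vector(affine(W, b, p)) for p in points}

# ReLU and its un-rectified form agree at every sampled point.
agree = all(relu(affine(W, b, p)) == unrectified_relu(affine(W, b, p))
            for p in points)
print(sorted(regions), agree)
```

Each distinct state vector found by the sampling corresponds to one partitioning region, so two hyperplanes in general position yield four indices.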

Now, consider two layers of ReLUs: , where the finest partition of as ; indicates the size of , and refers to a collection of all possible vectors (, where ). Each partitioning region of can be associated with precisely one element in and one affine linear mapping. Given that , the partitioning region indexed by is denoted as , where any can be characterized as an intersection of half-spaces as follows:

The last two inequalities in (8) are equivalent to , such that

The first two inequalities in (9) divide according to the value of . This means that , which allows us to express , where vector is dependent/conditional on . Regression function comprises partitioning regions. When restricted to with , the function is .
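The claim that the two-layer regression function reduces to a single affine mapping once the state vectors are fixed can be illustrated with a short sketch. The weights below are hypothetical and do not correspond to Example 1; `d1` and `d2` stand for the diagonals of the un-rectifying matrices of the first and second ReLU layers.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def relu(v):
    return [max(0.0, vi) for vi in v]

def diag_state(v):
    return [1.0 if vi > 0 else 0.0 for vi in v]

# Hypothetical affine maps M1(x) = W1 x + b1 and M2(z) = W2 z + b2.
W1, b1 = [[1.0, -1.0], [0.5, 1.0]], [0.0, -1.0]
W2, b2 = [[1.0, 1.0], [-1.0, 2.0]], [-0.5, 0.25]

def network(x):
    """Direct evaluation of ReLU(M2 ReLU(M1 x))."""
    return relu(add(matvec(W2, relu(add(matvec(W1, x), b1))), b2))

def region_index(x):
    """State vectors (d1, d2); d2 depends on d1 through the layer-1 output."""
    z1 = add(matvec(W1, x), b1)
    z2 = add(matvec(W2, relu(z1)), b2)
    return tuple(diag_state(z1)), tuple(diag_state(z2))

def unrectified(x, d1, d2):
    """Evaluate D2 (W2 D1 (W1 x + b1) + b2) with fixed diagonals d1, d2."""
    h = [d * z for d, z in zip(d1, add(matvec(W1, x), b1))]
    return [d * z for d, z in zip(d2, add(matvec(W2, h), b2))]

x = [2.0, 0.5]
d1, d2 = region_index(x)
print(d1, d2, network(x), unrectified(x, d1, d2))
```

With `d1` and `d2` held fixed, `unrectified` contains no non-linearity, which is why the function restricted to one partitioning region is affine.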

Example 1.

Given , where is given in (7) and

Let be the list of diagonal entries in . Then,

In region I, where , we obtain

In region , where , we obtain

the components of which are parallel to .

In region , where , we obtain

the components of which are parallel to .

In region , where ,

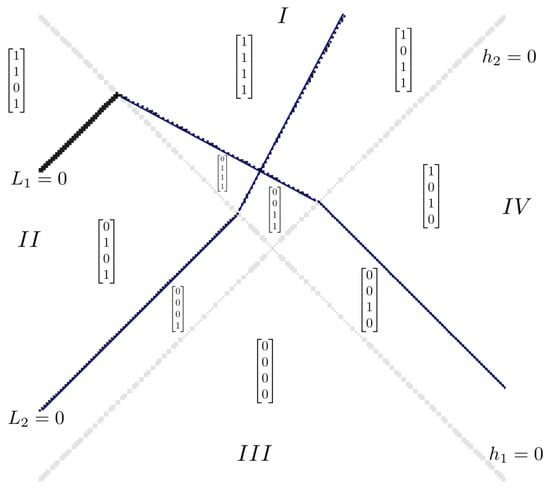

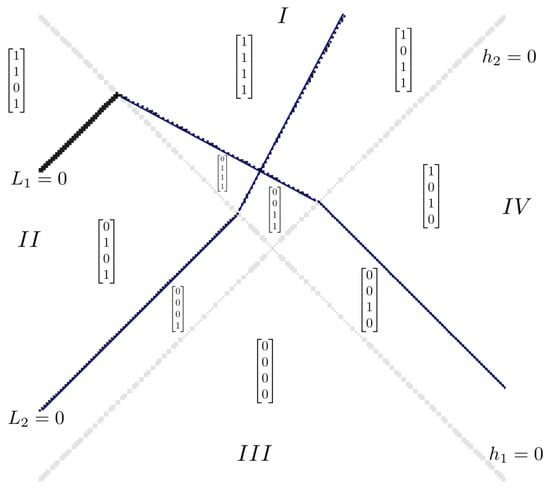

All points in this region are mapped to the zero vector. Figure 2 shows input domain partition of .

Figure 2.

Partitioning regions of , where and corresponds to the first and second components in (12), (13) and (14), respectively. The second component of does not fall within region . Each region is indexed by . Note that p denotes region of , and , and (the expression is not optimal because is irrelevant to the region). We adopted the conventional notation in which the values obtained by substituting points in the half-space above a hyperplane are positive, while the values obtained below the hyperplane are negative. Thus, points in region satisfy and .

3. DNNs and DAG Representations

The class of DNNs addressed in this study is defined by specific activation functions, non-linear transformations, and underlying architectures. Note that legitimate activation functions, non-linear transformations, and architectures should be analyzable and provide sufficient generalizability to cover all DNNs in common use.

Activation functions and non-linear transformations are both considered functions; however, we differentiate between them due to differences in the way they are treated under un-rectifying analysis. A non-linear transformation is a function in the conventional sense when mapping $\mathbb{R}^n$ to $\mathbb{R}^m$, in which different inputs are evaluated using the same function. This differs from activation functions, in which different inputs can be associated with different functions. For example, for ReLU applied to a scalar $x$, un-rectifying considers $\operatorname{ReLU}(x) = dx$, where $d \in \{0, 1\}$, as two functions depending on whether $x > 0$ (where $d = 1$) or $x \le 0$ (where $d = 0$).

3.1. Activation Functions and Non-Linear Transformations

In this paper, we focus on activation functions that can be expressed as networks of point-wise CPWL activation functions (). Based on this assumption and the following lemma, we assert that the activation functions of concern are ReLU networks.

Lemma 1

([24]). Any point-wise CPWL activation function () of m pieces can be expressed as follows:

where and indicate the slopes of segments and and are breakpoints of the corresponding segments.
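One standard way to see that a point-wise CPWL function is a ReLU network is the hinge representation sketched below. It is offered only as an illustration and is not necessarily the exact form of (16); the breakpoints, slopes, and function names are hypothetical.

```python
def relu(t):
    return max(0.0, t)

def cpwl_as_relu(x, breakpoints, slopes, value_at_first):
    """Evaluate an m-piece CPWL function using only ReLUs.

    breakpoints: a_1 < ... < a_{m-1}; slopes: s_0, ..., s_{m-1};
    value_at_first: function value at x = a_1.
    """
    a1 = breakpoints[0]
    # Linear term s_0 (x - a_1), written with two ReLUs.
    y = value_at_first + slopes[0] * (relu(x - a1) - relu(a1 - x))
    # Each breakpoint adds a hinge whose weight is the change in slope.
    for a, s_prev, s_next in zip(breakpoints, slopes, slopes[1:]):
        y += (s_next - s_prev) * relu(x - a)
    return y

def hard_tanh(x):
    """Reference 3-piece CPWL function: clip x to [-1, 1]."""
    return max(-1.0, min(1.0, x))

xs = [-3.0, -1.0, -0.5, 0.0, 0.75, 1.0, 2.5]
ys = [cpwl_as_relu(x, [-1.0, 1.0], [0.0, 1.0, 0.0], -1.0) for x in xs]
print(ys)
```

The three-piece clipping function is used here because its slopes (0, 1, 0) and breakpoints (-1, 1) make the slope changes easy to verify by hand.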

Note that the max-pooling operation (arguably the most popular non-linear pooling operation), which outputs the largest value in the block and maintains the selected location [25], is also a ReLU network. The max pooling of a block of any size can be recursively derived by max pooling a block of size 2. For example, let and denote the max pooling of blocks of sizes 4 and 2, respectively. Then, and . The max pooling of a block of size 2 can be expressed as follows:

where is
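The recursive derivation of max pooling from blocks of size 2 can be sketched as follows. The identity used for the size-2 case, max(a, b) = ReLU(a - b) + ReLU(b) - ReLU(-b), is a well-known one chosen here for illustration; it may differ in form from the expression given in the text.

```python
def relu(t):
    return max(0.0, t)

def max2(a, b):
    """max(a, b) = ReLU(a - b) + ReLU(b) - ReLU(-b), a small ReLU network."""
    return relu(a - b) + relu(b) - relu(-b)

def max_pool(block):
    """Max pooling of a block of any size by recursive pairwise max."""
    if len(block) == 1:
        return block[0]
    mid = len(block) // 2
    return max2(max_pool(block[:mid]), max_pool(block[mid:]))

block = [0.5, -2.0, 3.0, 1.5]
print(max_pool(block), max(block))
```

For a block of size 4, the recursion evaluates `max2(max2(x1, x2), max2(x3, x4))`, which mirrors the decomposition described above.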

In this paper, we make two assumptions pertaining to activation function :

- (A1)

- can be expressed as (16).

This assumption guarantees that for any input (), the layer of can be associated with diagonal un-rectifying matrix with real-valued diagonal entries, where the value of the l-th diagonal entry is , while and denote the sets in which ReLUs are active.

- (A2)

- There exists a bound that for any activation function () regardless of input (). This corresponds to the assumption that

This assumption is used to establish the stability of a network against input perturbations.

The outputs of a non-linear transformation layer can be interpreted as coefficient vectors related to that domain of the transformation. We make the following assumption pertaining to non-linear transformation () addressed in the current paper.

- (A3)

- There exists a uniform Lipschitz constant bound () with respect to norm for any non-linear transformation function () and any inputs ( and ) in :

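This assumption is used to establish the stability of a network against input perturbations. Sigmoid and tanh functions are 1-Lipschitz [26]. The softmax layer from $\mathbb{R}^n$ to $\mathbb{R}^n$ is defined as $\operatorname{softmax}(\mathbf{x})_i = \exp(\beta x_i) / \sum_{j=1}^{n} \exp(\beta x_j)$, where $\beta > 0$ is the inverse temperature constant. The output is the estimated probability distribution of the input vector in the simplex of $\mathbb{R}^n$ and $i = 1, \ldots, n$. The softmax function is $\beta$-Lipschitz [27], as follows:

$$\|\operatorname{softmax}(\mathbf{x}) - \operatorname{softmax}(\mathbf{y})\|_2 \le \beta \|\mathbf{x} - \mathbf{y}\|_2.$$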
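The Lipschitz property of the softmax layer can be probed empirically. The sketch below samples random input pairs and records the largest observed ratio between output and input distances; the inverse temperature `beta`, the dimension, and the sampling scheme are assumptions made for illustration, and a numerical check of this kind does not constitute a proof.

```python
import math
import random

def softmax(x, beta=1.0):
    """Softmax with inverse temperature beta (shifted for numerical stability)."""
    m = max(x)
    exps = [math.exp(beta * (xi - m)) for xi in x]
    total = sum(exps)
    return [e / total for e in exps]

def l2(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def worst_ratio(beta, n=5, trials=500, seed=0):
    """Largest observed ||softmax(x) - softmax(y)|| / ||x - y|| over random pairs."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        y = [xi + rng.gauss(0.0, 0.1) for xi in x]
        worst = max(worst, l2(softmax(x, beta), softmax(y, beta)) / l2(x, y))
    return worst

for beta in (0.5, 1.0, 4.0):
    print(beta, worst_ratio(beta))
```

The observed ratios should stay below `beta` in every run, which is consistent with the stated bound.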

3.2. Proposed Axiomatic Method

Let denote the class of DNNs that can be constructed using the following axiomatic method with activation functions that satisfy (A1) and (A2) and non-linear transformations that satisfy (A3). This axiomatic method employs three atomic operations (O1–O3), the basic set , and a regulatory rule (R) describing a legitimate method by which to apply an atomic operation to elements in in order to yield another element in .

Basis set comprises the following operations:

where denotes the identity operation; denotes any finite-dimensional linear mapping with a bounded spectral norm; denotes any affine linear mapping, where and refer to the linear and bias terms, respectively; denotes activation functions satisfying (A1) and (A2); denotes functions with ; denotes non-linear transformations satisfying (A3); and denotes functions with .

Assumptions pertaining to and are combined to obtain the following:

(A) The assumption of uniform bounding is based on the existence of a uniform bound, where for any activation function (), any input (), and any non-linear transformation (), such that

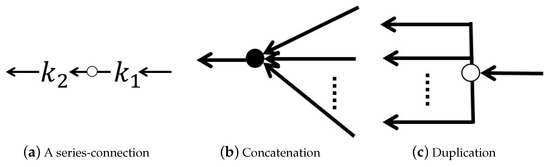

Let denote the input space for any elements in . The results of the following atomic operations belong to . The corresponding DAG representations are depicted in Figure 3 (a reshaping of input or output vectors is implicitly applied at nodes to validate these operations).

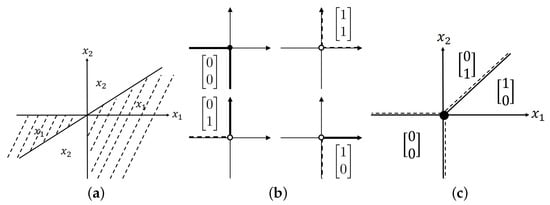

Figure 3.

Graphical representation of atomic operations O1–O3, where the functions attached to the arcs of concatenation and duplication are identity operations (omitted for brevity).

- O1.

- Series connection (∘): We combine and by letting the output of be the input of , where

- O2.

- Concatenation: We combine multichannel inputs into a vector as follows:

- O3.

- Duplication: We duplicate an input to generate m copies of itself as follows:

From the basic set and O1–O3, regulatory rule R generates other elements in by regulating the application of atomic operations on . The aim of R is to obtain DNNs that can be represented as DAGs; therefore, this rule precludes the generation of graphs that contain loops.

- R.

- DAG closure: We apply O1–O3 to in accordance with

The DAGs of comprise nodes and arcs, each of which belongs to one of operations O1–O3. Rule R mandates that any member in can be represented as a DAG, in which arcs are associated with members in and nodes coordinate the inlets and outlets of arcs. The rule pertaining to the retention of DAGs after operations on DAGs is crucial to our analysis based on the fact that nodes in a DAG can then be ordered (see Section 4). To achieve a more comprehensive understanding, we use figures to express DAGs. Nevertheless, a formal definition of a DAG must comprise triplets of nodes, arcs, and functions associated with arcs. An arc can be described as , where and refer to the input and output nodes of the arc, respectively, and is the function associated with the arc.
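The description of a DAG as triplets of nodes, arcs, and arc functions can be made concrete with a small evaluator. This is a minimal sketch under stated assumptions: the arc format `(u, v, f)`, the node names, and the convention that a node concatenates incoming contributions in arc order are illustrative choices and are not taken from the text. Loop detection relies on the standard-library `graphlib` module.

```python
from graphlib import TopologicalSorter, CycleError

def evaluate_dag(arcs, source, sink, x):
    """Evaluate a network given as arcs (u, v, f): f maps the value at node u
    to a contribution at node v. A node concatenates incoming contributions
    in arc order (an illustrative convention)."""
    preds = {source: set()}
    for u, v, _ in arcs:
        preds.setdefault(u, set())
        preds.setdefault(v, set()).add(u)
    # Ordering the nodes fails with CycleError if the graph contains a loop.
    order = list(TopologicalSorter(preds).static_order())
    values = {source: list(x)}
    for node in order:
        if node == source:
            continue
        incoming = [f(values[u]) for u, v, f in arcs if v == node]
        values[node] = [t for part in incoming for t in part]
    return values[sink]

identity = lambda v: list(v)
relu = lambda v: [max(0.0, t) for t in v]
negate = lambda v: [-t for t in v]          # a simple linear map
total = lambda v: [sum(v)]                  # linear fusion of concatenated inputs

# Duplication at "in", two channels, concatenation at "cat", then fusion.
arcs = [
    ("in", "a", relu),          # channel 1
    ("in", "b", negate),        # channel 2: series connection negate -> relu
    ("b", "c", relu),
    ("a", "cat", identity),     # concatenation arcs carry the identity
    ("c", "cat", identity),
    ("cat", "out", total),
]
print(evaluate_dag(arcs, "in", "out", [1.5, -2.0]))
```

Because the nodes of a DAG admit a topological order, each node is evaluated only after all of its predecessors, which is the property that later permits arguments by induction over the nodes.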

We provide the following definition for the class of DNNs considered in this paper.

Definition 1.

General DNNs (denoted as ) are DNNs constructed using the axiomatic method involving point-wise CPWL activation functions and non-linear transformations, which, together, satisfy assumption (A).

Note that DNNs comprise hidden layers and an output layer. For the remainder of this paper, we do not consider the output layers in DNNs because adding a layer of continuous output functions does not alter our conclusion.

3.3. Useful Modules

Generally, the construction of a DNN is based on modules. Below, we illustrate some modules that are useful in practical applications of general DNNs.

(1) MaxLU module: Pooling is an operation that reduces the dimensionality of an input block. The operation can be linear or non-linear. For example, average pooling is linear (outputs the average of the block), whereas max pooling is non-linear. Max pooling is usually implemented in conjunction with the ReLU layer (i.e., maxpooling ∘ ReLU) [28] to obtain a MaxLU module. The following analysis is based on the MaxLU function of block size 2 using un-rectification, where the MaxLU function of another block size can be recursively derived using the MaxLU of block size 2 and analyzed in a similar manner [5]. The MaxLU is a CPWL activation function that partitions $\mathbb{R}^2$ into three polygons. By representing the MaxLU layer with un-rectifying, we obtain the following:

where is a diagonal matrix with entries and

Figure 4 compares the domain partition of max pooling, ReLU, and MaxLU.
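The three-region partition induced by a MaxLU of block size 2 can be sketched as follows. The selector pair `(s1, s2)` is a hypothetical encoding introduced here for illustration and is not claimed to match the diagonal entries of the un-rectifying matrix defined above.

```python
def maxlu(x1, x2):
    """MaxLU of block size 2: max pooling applied after ReLU."""
    return max(max(0.0, x1), max(0.0, x2))

def maxlu_selector(x1, x2):
    """Return (s1, s2) with MaxLU(x) = s1*x1 + s2*x2 and s1, s2 in {0, 1}.

    Ties are assigned to the first coordinate (an arbitrary convention).
    """
    if x1 <= 0 and x2 <= 0:
        return (0, 0)      # both inputs rectified to zero
    if x1 >= x2:
        return (1, 0)      # first input is selected
    return (0, 1)          # second input is selected

points = [(i + 0.5, j + 0.25) for i in range(-3, 3) for j in range(-3, 3)]
selectors = {maxlu_selector(*p) for p in points}
consistent = all(
    maxlu(*p) == s[0] * p[0] + s[1] * p[1]
    for p in points for s in [maxlu_selector(*p)]
)
print(sorted(selectors), consistent)
```

Only three selector values occur, in contrast to the four sign patterns of a ReLU layer on the same inputs: the whole third quadrant maps to zero, so no boundary is needed inside it.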

Figure 4.

Comparisons of max pooling, ReLU, and MaxLU partitioning of $\mathbb{R}^2$, where vectors in (b,c) are diagonal vectors of the un-rectifying matrices of ReLU and MaxLU layers and dashed lines denote open region boundaries: (a) max pooling partitions $\mathbb{R}^2$ into two regions; (b) ReLU partitions $\mathbb{R}^2$ into four polygons; (c) MaxLU partitions $\mathbb{R}^2$ into three polygons. Note that the region boundary in the third quadrant of (a) is removed in (c).

(2) Series module: This module is a composition of and (denoted as ), which differs from operation O1 ().

The series-connected network () is derived using a sequence of series modules. We consider that is a ReLU. The theoretical underpinnings of this module involving MaxLU activation functions can be found in [5]. We first present an illustrative example of , then extend our analysis to . Note that input space is partitioned into a finite number of regions using , where is an affine mapping. The partition is denoted as . The composition of and (i.e., ) refines such that the resulting partition can be denoted as . Figure 5 presents a tree partition of using , where , in which , , and . The affine linear functions over can be expressed as , where and indicate un-rectifying matrices of ReLU and ReLU using and as inputs, respectively.

Figure 5.

(Top-left) partitioned using ReLU and (bottom-left) refined using ReLU. (Right) Tree partition on from composition of ReLUs, where the function with domain over a region is indicated beneath the leaf and regions are named according to vectors obtained by stacking diagonal elements of the un-rectifying matrix of ReLU over the un-rectifying matrix of ReLU.

For a series-connected network (), we let denote the partition and corresponding functions. The relationship between and is presented as follows.

Lemma 2

([5]). Let denote the partition of input space χ and the collection of affine linear functions of . Furthermore, let the domain of the affine linear function () be . Then,

- (i)

- refines (any partition region in can be subsumed to one and only one partition region in ).

- (ii)

- The affine linear mappings of can be expressed as , where is an un-rectifying matrix of ReLU. This means that if , then there must be a j in which , such that , where the un-rectifying matrix () depends on .

refines (i.e., ), which means that if a directed graph is based on the partition refinement in the leftmost subfigure of Figure 6, then the node corresponding to is the only child node of node , as well as the only parent node of node . The graph of this module is a tree with precisely one child at each node.

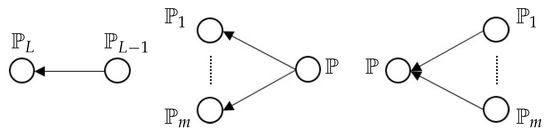

Figure 6.

Graph based on partition refinement in which nodes denote partitions and arc denotes that the partition of node b is a refinement of the partition of node a. (Left) Graph of a serial module; (middle) graph of a parallel module; (right) graph of a fusion module.

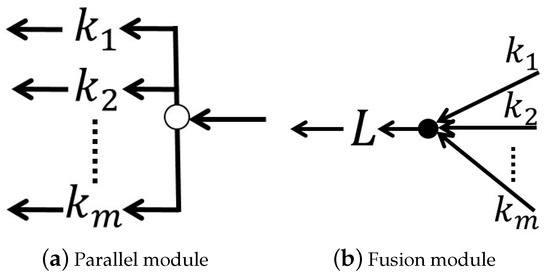

(3) Parallel module (Figure 7a): This module is a composition comprising an element in to each output of the duplication operation, denoted as follows:

Figure 7.

Graphical representations of (a) a parallel module (which becomes a duplication operation when ) and (b) a fusion module (which becomes a concatenation operation when and ).

When expressed in matrix form, we obtain the following:

In the literature on DNNs, this module is also referred to as a multifilter ( is typically a filtering operation followed by an activation function) or multichannel module. If partition is associated with the input (i.e., ) in (22) and partition is associated with the output of the i-th channel (i.e., ), then is a refinement of in accordance with (2). As shown in the middle subgraph of Figure 6, the node corresponding to is a child node of the node corresponding to . This graph is a tree in which the node of has m child nodes corresponding to partitions .

(4) Fusion module (Figure 7b): This type of module can be used to combine different parts of a DNN and uses linear operations to fuse inputs. The module is denoted as follows:

In matrix form, we obtain the following:

Note that a non-linear fusion can be obtained by applying a composition of / to the fusion module in which .

We denote the domain partition of associated with the i-th channel as , where is the number of partition regions. Partition of generated by the fusion module can be expressed as the union of non-empty intersections of partition regions in for all i, as follows:

Any partition region in is contained in precisely one region in any . In other words, is a refinement of for . An obvious bound for partition regions of is . We let denote the affine mappings associated with the i-th channel and be the affine mapping with the domain restricted to partition region . The affine mapping of the fusion module over partition region is derived as follows:

Lemma 3.

Suppose that a fusion module comprises m channels. Let denote the partition associated with the i-th channel of the module and let denote the partition of the fusion module. Then, is a refinement of for . Moreover, let denote the collection of affine linear mappings over . Thus, the affine linear mapping over a partition region of can be obtained in accordance with (25).

As shown in the rightmost subfigure of Figure 6, is a refinement of any , which means that the node corresponding to partition is a child node of the node corresponding to partition . The fact that the node associated with partition has more than one parent node means that the graph for this module is not a tree.
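The refinement produced by a fusion module can be illustrated by counting activation patterns. In the sketch below, the two channel matrices are hypothetical (they are not the mappings of Example 2), and regions are estimated by sampling grid points that avoid all region boundaries.

```python
# Hypothetical channels on R^2 (no bias): rows are hyperplane normals.
W_top = [[1.0, 0.0], [0.0, 1.0]]       # boundaries x1 = 0 and x2 = 0
W_bottom = [[1.0, 1.0], [1.0, -1.0]]   # boundaries x1 + x2 = 0 and x1 - x2 = 0

def pattern(W, x):
    """ReLU activation pattern of W x; it indexes the partition region of x."""
    return tuple(int(sum(w * xi for w, xi in zip(row, x)) > 0) for row in W)

# Grid points chosen to avoid all four boundaries.
points = [(i + 0.25, j + 0.6) for i in range(-5, 5) for j in range(-5, 5)]

top = {pattern(W_top, p) for p in points}
bottom = {pattern(W_bottom, p) for p in points}
# A region of the fusion module is a non-empty intersection of one region per channel.
fused = {(pattern(W_top, p), pattern(W_bottom, p)) for p in points}

print(len(top), len(bottom), len(fused))
```

For these particular channels, each channel yields four sampled regions and the fusion yields eight, which is larger than either channel alone and below the product bound of sixteen because many intersections are empty.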

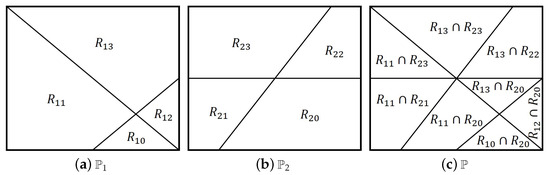

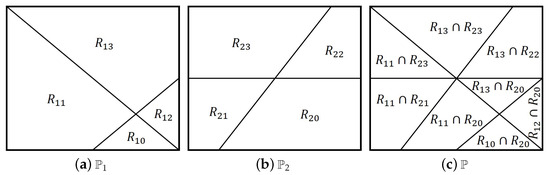

Example 2.

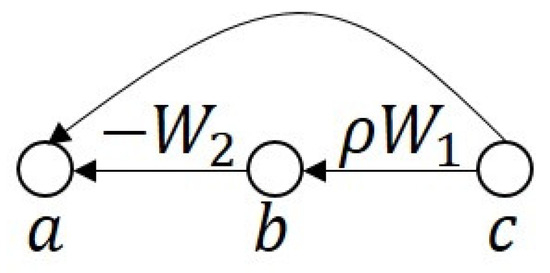

Figure 8 illustrates the fusion of two channels, as follows:

where , and and are ReLUs. The partition induced by comprises eight polytopes, each of which is associated with an affine linear mapping.

Figure 8.

Fusion of two channels in which each channel partitions into four regions: (a) is the partition due to , where ; (b) is the partition due to , where ; (c) is a refinement of and .

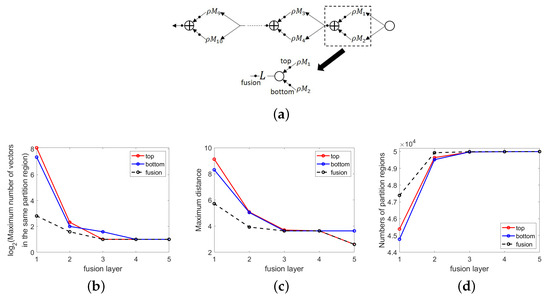

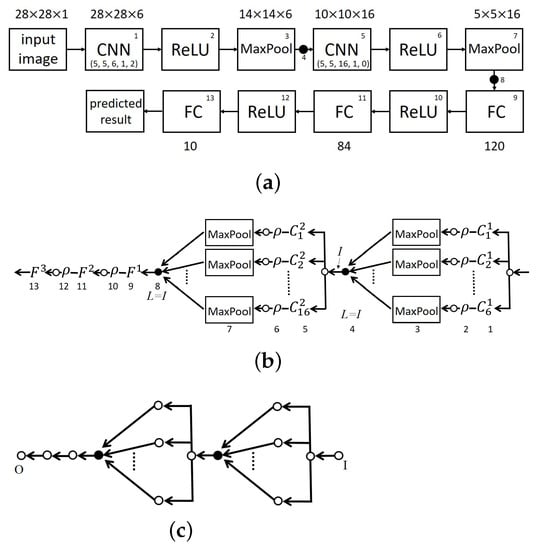

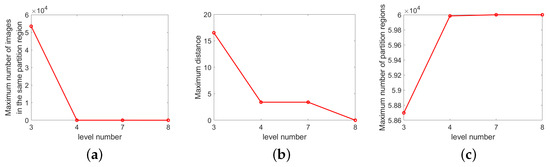

Figure 9 illustrates the refinement of partitions in the network in Figure 9a, which involves a series connection of five fusion layers. Each fusion layer includes a fusion module derived by concatenating the outputs of the top and bottom channels. The result of the concatenation is subsequently input into a linear function (the fusion channel). The curves in Figure 9b–d for the top, bottom, and fusion channels are consistent with the assertion of Lemma 3, wherein the partitions of the top and bottom channels are refined by the fusion channel. Because the partition of the fusion channel refines those of the top and bottom channels, the maximum number of points in a given partition region and the maximum distance between pairs of points in the same partition region are smaller than in the top and bottom channels, as shown in Figure 9b,c. On the other hand, the number of partition regions in the fusion channel is larger than in the top and bottom channels, as shown in Figure 8 and Figure 9d.

Figure 9.

Simulation of partition refinement using fusion modules with 50,000 random points as inputs, with entries sampled independently and identically distributed (i.i.d.) from the standard normal distribution. (a) Network comprising five layers of fusion modules, each of which comprises three channels (top, bottom, and fusion). The dimensions of weight matrix and bias of in the top and bottom channels are and , respectively, with coefficients in sampled i.i.d. from the standard normal distribution and . (b) The number of points in partition regions containing at least two elements versus fusion layers. (c) The maximum distance between pairs of points located in the same partition region versus fusion layers. (d) The number of partition regions versus fusion layers. In (b,c), the curves corresponding to the fusion channels lie beneath those of the other channels, and in (d), the curve of the fusion channel lies above those of the other channels. Both observations are consistent with the analysis that the partitions at the fusion channels are finer than those at the bottom and top channels.

(5) The following DNN networks were derived by applying the DAG closure rule (R) to the modules.

Example 3.

As shown in Figure 10, the ResNet module [11] comprises ReLU and a fusion module:

Figure 10.

ResNet module featuring a direct link and the same domain partitions at points a and b. In DenseNet, the addition node is replaced with the concatenation node.

Using matrix notation, we obtain the following:

where and are affine mappings. The unique feature of ResNet is the direct link, which enhances resistance to the vanishing gradient problem in back-propagation algorithms [29]. Direct linking and batch normalization [30,31] have become indispensable elements in the learning of very deep neural networks using back-propagation algorithms.

Let denote an L-layer DNN. A residual network [11] extends from L layers to layers as follows: . Repetition of this extension allows a residual network to maintain an arbitrary number of layers. As noted in the caption of Figure 10, domain partitioning is the same at a and b. This can be derived in accordance with the following analysis. Let denote the domain partitioning of χ at the input of the module. The top channel of the parallel module retains the partition, whereas in the bottom channel, the partition is refined as using . In accordance with (24), the domain of the fusion function is (i.e., ). Thus, the domain partitions at a and b are equivalent. Note that the DenseNet module [32] replaces the addition node in Figure 10 with the concatenation node. The partitions of DenseNet at b and a are the same, as in the ResNet case.
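The observation that the direct link does not change the domain partition can be illustrated with a small residual block. This is a minimal sketch with hypothetical affine maps `M1` and `M2`; the state vector `d` is determined by the bottom channel alone, since the direct link contains no activation function and therefore introduces no additional region boundaries.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

# Hypothetical affine maps M1(x) = W1 x + b1 and M2(z) = W2 z + b2.
W1, b1 = [[1.0, -2.0], [0.5, 0.5]], [0.5, -1.0]
W2, b2 = [[2.0, 0.0], [1.0, -1.0]], [0.0, 0.25]

def residual_block(x):
    """Direct link plus bottom channel: x + M2 ReLU(M1 x)."""
    z = add(matvec(W1, x), b1)
    h = [max(0.0, t) for t in z]
    return add(x, add(matvec(W2, h), b2))

def residual_block_unrectified(x):
    """Same block with ReLU replaced by its un-rectifying diagonal D at M1 x."""
    z = add(matvec(W1, x), b1)
    d = [1.0 if t > 0 else 0.0 for t in z]
    h = [di * t for di, t in zip(d, z)]
    return add(x, add(matvec(W2, h), b2)), tuple(d)

for x in ([1.0, 0.0], [-1.0, 1.0], [0.0, 3.0]):
    y, d = residual_block_unrectified(x)
    print(x, d, y, residual_block(x))
```

Within each region indexed by `d`, the block acts as the affine mapping obtained by adding the identity to the bottom-channel mapping, so the regions before and after the addition node coincide.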

Example 4.

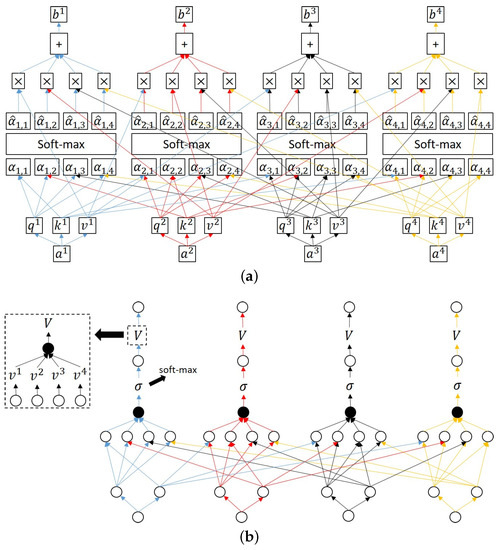

Transformers are used to deal with sequence-to-sequence conversions, wherein the derivation of long-term correlations between tokens in a sequence is based on the attention module [33,34]. A schematic illustration of a self-attention module is presented in Figure 11a, where the input vectors are , , , and and the output vectors are , , , and . The query, key, and value vectors for are generated from matrices , , and , respectively, where , , and for all i. Attention score indicates the inner product between the normalized vectors of and . The vector of attention scores , , , and is input into the soft-max layer to obtain the probability distribution , , , , where . Output vector is derived via multiplications and additions as a linear combination of value vectors with coefficients derived from the probability distribution. Figure 11b presents a graphical representation of (a), wherein non-linear transformation σ is the soft-max function. Dictionary V of value vectors can be obtained by performing a concatenation operation, which implicitly involves reshaping the dimensions of the resulting vector to the matrix (see dashed box in the figure).

Figure 11.

Self-attention module: (a) network; (b) graph in which the highlighted dashed box represents the graph used to obtain dictionary V from value vectors. Different colors correspond to different inputs .
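The self-attention computation described above can be sketched in a few lines of NumPy. This is only an illustrative sketch: the names `Wq`, `Wk`, and `Wv`, the dimensions, and the scaling of the scores by the square root of the vector length are assumptions made here (the text refers to normalized vectors, and other normalizations are possible).

```python
import numpy as np

def softmax(z):
    """Numerically stable soft-max over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head self-attention.

    X          : (n_tokens, d_in) input vectors, one per row.
    Wq, Wk, Wv : (d_in, d) projection matrices (hypothetical names).
    Returns the outputs (n_tokens, d) and the attention probabilities.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv           # queries, keys, values
    # Assumption: scores are inner products scaled by sqrt(d).
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    P = softmax(scores)                         # each row sums to one
    return P @ V, P                             # linear combination of values

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 8))                 # four input vectors
Wq, Wk, Wv = (rng.standard_normal((8, 8)) for _ in range(3))
Y, P = self_attention(X, Wq, Wk, Wv)
print(Y.shape, P.sum(axis=1))
```

Each output row is a convex combination of the value vectors, which is the role played by the soft-max node σ in the graph of Figure 11b.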

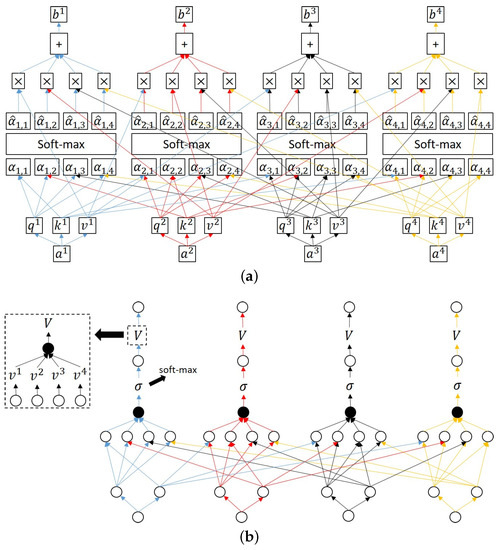

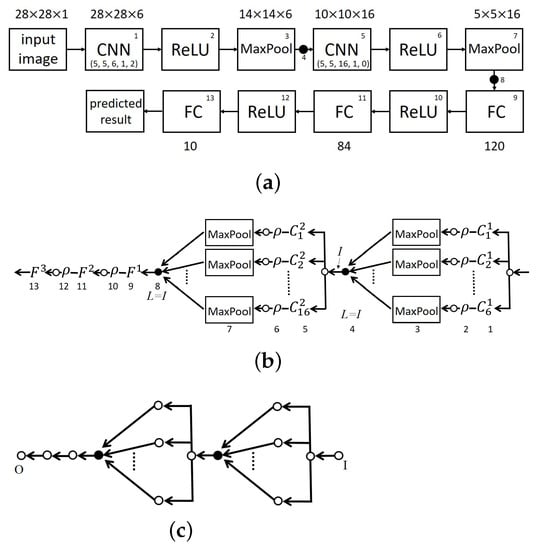

Example 5.

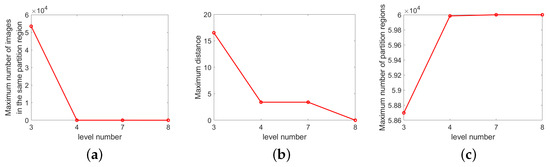

Figure 12 illustrates the well-known LeNet-5 network [35]. Figure 12a presents a block diagram of the network in which the input is an image ( px) and the output is one of ten digit classes (0 to 9). CNN block number 1 is a convolution layer with the following parameters: filter size, ; stride, 1; padding, 2; and six-channel output (six images). Block numbers 2 and 3 indicate MaxLU operations with six-channel output (six images). Black circle 4 indicates a concatenation operation (O2), the output of which is an image. Block number 5 indicates a convolution layer with the following parameters: filter size, ; stride, 1; and padding, 0. This layer outputs sixteen images. Block numbers 6 and 7 indicate MaxLU operations, the output of which is sixteen images. Black circle 8 indicates a concatenation operation (O2), the output of which is a vector. Block number 9 indicates a fully connected network with an input dimension of 400 and an output dimension of 120. Block number 10 indicates a ReLU activation function. Block number 11 is a fully connected network with an input dimension of 120 and an output dimension of 84. Block number 12 indicates a ReLU activation function. Block number 13 indicates a fully connected network with an input dimension of 84, where the output is a prediction of 1 of the 10 classes. Figure 12b presents a graphical representation of (a), and (c) presents a simplified graphical representation of (a). In Figure 12c, we can see that LeNet-5 begins with a sequence of compositions of modules (featuring a parallel module followed by a fusion module), followed by a sequence of MaxLU layers. Here, we apply the LeNet-5 network to the MNIST dataset with images, although the approach is not limited to this dataset. This is a simple implementation illustrating the proposed approach. Figure 13 illustrates the properties of partitions at the outputs of levels 3, 4, 7, and 8 in Figure 12b. The curves in Figure 13a–c are consistent with the assertion of Lemma 3, which indicates that the partitions of the previous channels are refined by the fusion channel. The results are similar to those in Figure 9.
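The layer dimensions listed above can be checked with a short shape calculation. The sketch below assumes values that are standard for LeNet-5 on MNIST but are not stated explicitly in the text: a 28 × 28 input, 5 × 5 convolution filters, and 2 × 2 pooling with stride 2 in the MaxLU blocks. Under these assumptions, the flattened vector at black circle 8 has the 400 entries expected by block 9.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

# Assumed values (not stated in the text): 28 x 28 MNIST input,
# 5 x 5 convolution filters, 2 x 2 max pooling with stride 2.
size = 28
size = conv_out(size, kernel=5, stride=1, padding=2)   # block 1      -> 28
size = conv_out(size, kernel=2, stride=2)              # blocks 2, 3  -> 14
size = conv_out(size, kernel=5, stride=1, padding=0)   # block 5      -> 10
size = conv_out(size, kernel=2, stride=2)              # blocks 6, 7  -> 5
flattened = 16 * size * size                           # sixteen channels
print(size, flattened)  # 5 400
```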

Figure 12.

LeNet-5: (a) network; (b) graph in which the numbers beneath the nodes and arcs correspond to block numbers in (a) and solid nodes indicate fusion module/concatenation; (c) simplification of (b) illustrating composition of modules, where I and O denote input and output, respectively.

Figure 13.

Illustrations of partition refinements in LeNet-5 at output levels 3, 4, 7, and 8 (as shown in Figure 12b), where the input is 60,000 images from the MNIST dataset (note that levels 3 and 7 are parallel connections linking several channels; therefore, only the maximum values of all channels at those levels are plotted): (a) maximum number of images in a given partition region; (b) maximum distance between pairs of images located in the same partition region (distance at level 8 is zero, which means that each partitioning region contains no more than one image); (c) maximum number of partition regions in a channel (note that as the level increases, the curves in (a,b) decrease, while the curve in (c) increases, which is consistent with our assertion that the partitions of the previous channels are refined by the fusion channel).

Remark 1.

Graphs corresponding to partitions generated by series and parallel modules are trees. Accordingly, the partitions created by a network comprising only series and parallel modules also form a tree. According to [36], trees learned for regression or classification purposes suffer from overfitting due to the bias–variance tradeoff dilemma. However, in [37,38], it was reported that this tradeoff is not an overriding concern (referred to as benign overfitting) when learning a deep neural network in which the number of parameters exceeds the number of training data by a sufficient margin. This suggests that benign overfitting is relevant to the fusion module, considering that the graph for the module is not a tree.

4. Properties of General DNNs

In accordance with the axiomatic approach, we define general DNNs (i.e., ) as those that can be represented using DAGs. In the following, we outline the properties belonging to all members in the class.

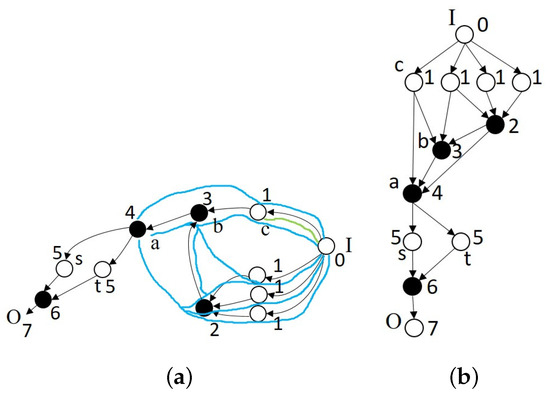

4.1. Function Approximation via Partition Refinement

We define the computable subgraph of node a as the subgraph of the DAG containing precisely all the paths from the input node of the DAG to node a. Clearly, the computable subgraph defines a general DNN, the output node of which is a, such that it computes a CPWL function. In Figure 14a, the computable subgraph of node a (highlighted in light blue) contains node numbers 0, 1, 2, 3, and 4. The computable subgraph of node c (highlighted in light green) is contained in the subgraph of a.
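As a minimal sketch of this definition, the computable subgraph of a node can be collected by a reverse search over the arcs. The graph below is a hypothetical example (it is not the graph of Figure 14), and the function name `computable_subgraph` is introduced here purely for illustration. The sketch assumes that every node is reachable from the input node, so that the subgraph consists of the target node together with all of its ancestors.

```python
from collections import defaultdict

def computable_subgraph(arcs, target):
    """Nodes and arcs on all paths that end at `target`.

    arcs : iterable of (u, v) pairs, one per directed arc u -> v.
    Assumes every node is reachable from the input node, so the
    subgraph is `target` together with all of its ancestors.
    """
    preds = defaultdict(set)
    for u, v in arcs:
        preds[v].add(u)
    nodes, stack = {target}, [target]
    while stack:                        # reverse depth-first search
        v = stack.pop()
        for u in preds[v]:
            if u not in nodes:
                nodes.add(u)
                stack.append(u)
    sub_arcs = {(u, v) for u, v in arcs if u in nodes and v in nodes}
    return nodes, sub_arcs

# Hypothetical DAG (not the graph of Figure 14); "I" is the input node.
arcs = [("I", "c"), ("I", "d"), ("c", "b"), ("d", "b"), ("b", "a"), ("d", "e")]
nodes_a, arcs_a = computable_subgraph(arcs, "a")
nodes_c, arcs_c = computable_subgraph(arcs, "c")
print(sorted(nodes_a), sorted(nodes_c))
```

In this example, the computable subgraph of c is contained in that of a, which mirrors the nesting highlighted in Figure 14a.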

Figure 14.

DAG representation of a DNN illustrating the computable subgraphs and levels of nodes (solid nodes denote fusion/concatenation nodes, and I and O denote input and output, respectively). (a) Computable subgraph associated with each node. Light blue denotes the computable subgraph of node a, wherein the longest path from the input node to node a contains four arcs (i.e., ). Light green denotes the computable subgraph of c, wherein the longest path from the input node to node c contains one arc (i.e., ). (b) Nodes in (a) are partially ordered in accordance with levels (e.g., level 1 has four nodes, and level 2 has one node).

In the following, we outline the domain refinement of a general DNN. Specifically, if node b is contained in a computable subgraph of a, then the domain partition imposed by that subgraph is a refinement of the partition imposed by the computable subgraph of b.

Theorem 1.

The domain partitions imposed by computable subgraphs (at node a) and (at node b) of a general DNN are denoted as and , respectively. If node b is contained in subgraph , then refines .

Proof.

Without loss of generality, we suppose that arcs in the general DNN are atomic operations and that the functions applied to arcs are included in base set . Furthermore, we suppose that p is a path from the input node to node a, which also passes through node b. Let denote the subpath of p from node b to node a (), and let denote the partition of input space defined using the computable subgraph at node . In the following, we demonstrate that if , then is refined by . Note that arc corresponds to one of the three atomic operations. If it is a series-connection operation (O1), then the refinement can be obtained by referring to Lemma 2. If it is a concatenation operation (O2), then the refinement is obtained by referring to Lemma 3. If it is a duplication operation (O3), then the partitions for nodes and are the same. Thus, is a refinement of . □

Figure 14a presents the DAG representation of a DNN. Node b is contained in the computable subgraph of node a, and node c is contained in the computable subgraph of node b. By Theorem 1, the domain partition of a is a refinement of the partition of b, and the domain partition of b is a refinement of the partition of c. Thus, by transitivity, the domain partition of node a is a refinement of the domain partition of node c.
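The refinement can be observed numerically on a small series-connected ReLU network. In the sketch below, which is an illustration under assumptions rather than the simulation reported in this paper, two input points are treated as lying in the same partition region at a given layer when they share the same cumulative ReLU activation pattern up to that layer. The network width, depth, and random weights are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points, dim, depth = 2000, 2, 4
X = rng.standard_normal((n_points, dim))

h = X
codes = np.zeros((n_points, 0), dtype=np.uint8)   # cumulative activation pattern
region_counts, labels_per_layer = [], []
for _ in range(depth):
    W = rng.standard_normal((dim, dim))
    b = rng.standard_normal(dim)
    pre = h @ W.T + b
    codes = np.hstack([codes, (pre > 0).astype(np.uint8)])  # append this layer
    h = np.maximum(pre, 0.0)                                # ReLU
    # Points sharing a cumulative pattern lie in the same partition region.
    _, labels = np.unique(codes, axis=0, return_inverse=True)
    labels = labels.ravel()
    labels_per_layer.append(labels)
    region_counts.append(int(labels.max()) + 1)

print(region_counts)   # number of occupied regions per layer
```

The number of occupied regions never decreases with depth, and every region at a deeper layer lies inside exactly one region of the preceding layer, which is the refinement relation asserted by Theorem 1 for this simple case.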

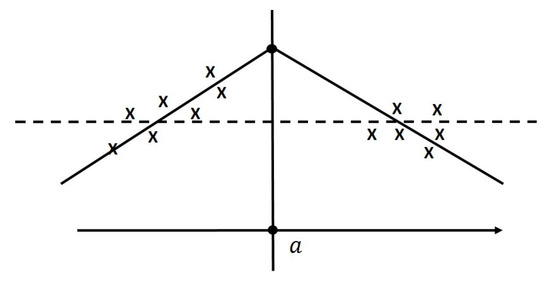

As implied by Theorem 1, general DNNs are implemented using a data-driven “divide and conquer” strategy when performing function approximation. In other words, when traveling along a path from the input node to a node of the DNN, we can envision the progressive refinement of the input space partition along the path, where each partition region is associated with an affine mapping. Thus, computing a function using a general DNN is equivalent to approximating the function using local simple mappings over regions in a partition derived using the DNN. A finer approximation of the function can be obtained by increasing the lengths of paths from the input node to the output node. Figure 15 illustrates the conventional and general DNN approaches to the problem of linear regression. The conventional approach involves fitting “all” of the data to obtain a dashed hyperplane, whereas the general DNN approach involves dividing the input space into two parts and fitting each part using hyperplanes.

Figure 15.

Approaches to linear regression. The dashed line indicates the regression derived from all data (i.e., conventional approach), and the solid lines indicate regressions derived from data of and data of continuous at (i.e., general DNN approach).
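The contrast between the two approaches can be reproduced on synthetic data. The following sketch is only an illustration: the target function, the noise level, and the breakpoint at x = 0 are assumptions chosen here, and the breakpoint is fixed by hand, whereas a DNN would learn the partition from data. The piece-wise fit uses a single ReLU term so that the two pieces join continuously.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 200)
y = np.abs(x) + 0.05 * rng.standard_normal(200)    # synthetic target

# Conventional approach: one hyperplane fitted to all of the data.
A_global = np.column_stack([np.ones_like(x), x])
coef_global, *_ = np.linalg.lstsq(A_global, y, rcond=None)
mse_global = np.mean((A_global @ coef_global - y) ** 2)

# Divide and conquer: two pieces joined continuously at x = 0.
# The breakpoint is fixed by hand here; a DNN would learn it from data.
A_cpwl = np.column_stack([np.ones_like(x), x, np.maximum(x, 0.0)])
coef_cpwl, *_ = np.linalg.lstsq(A_cpwl, y, rcond=None)
mse_cpwl = np.mean((A_cpwl @ coef_cpwl - y) ** 2)

print(mse_global, mse_cpwl)
```

Because the single-hyperplane model is a special case of the piece-wise model, the piece-wise fit can never have a larger training error.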

4.2. Stability via Sparse/Compressible Weight Coefficients

We introduce the l function of node a to denote the number of arcs along the longest path from input node I to node a of a DAG, where is referred to as the level of node a. According to this definition, the level of the input node is zero.

Lemma 4.

The levels of the nodes in a general DNN are consecutive integers. In other words, if node a is not the output node, then there must exist a node b where .

Proof.

Clearly, for input node I. This lemma can be proven via contradiction. Suppose that the levels are non-consecutive integers. Without loss of generality, the nodes can be divided into two groups (A and B), where A includes all of the nodes with level and B includes all of the nodes with level and . is assigned the lowest level in group B, and . Furthermore, denotes the longest path from the input node to node b, and a is a node along the path with arc . Thus, ; otherwise, , which violates the assumption that . If , then (since a is on the longest path () to b and a has a direct link to b). This violates the assumption that with . Thus, , according to which and . This violates the assumption that all nodes can be divided into two groups (A and B). We obtain a contradiction and, hence, complete the proof. □
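The level of each node, and the statement of Lemma 4, can be checked on a small example. The sketch below computes longest-path levels in topological order. The function name `node_levels` and the example graph are hypothetical (the graph is not the one in Figure 14), and the input node is assumed to be the only node without incoming arcs.

```python
from collections import defaultdict

def node_levels(arcs, input_node):
    """Level of each node: number of arcs on the longest path from the input."""
    succs, indeg = defaultdict(list), defaultdict(int)
    nodes = {input_node}
    for u, v in arcs:
        succs[u].append(v)
        indeg[v] += 1
        nodes.update((u, v))
    level = {v: 0 for v in nodes}
    ready = [v for v in nodes if indeg[v] == 0]
    while ready:                         # Kahn's topological order
        u = ready.pop()
        for v in succs[u]:
            level[v] = max(level[v], level[u] + 1)
            indeg[v] -= 1
            if indeg[v] == 0:
                ready.append(v)
    return level

# Hypothetical DAG with a direct link from the input to node a.
arcs = [("I", "c"), ("I", "d"), ("c", "b"), ("d", "b"), ("b", "a"), ("I", "a")]
levels = node_levels(arcs, "I")
print(levels)
# Lemma 4: every integer between 0 and the highest level is occupied.
print(sorted(set(levels.values())) == list(range(max(levels.values()) + 1)))
```

Note that the direct arc from I to a does not lower the level of a, because the level is defined by the longest path.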

The nodes in a DAG can be ordered in accordance with their levels. Assume that there is only one output node, denoted as O. Clearly, is the highest level associated with that DAG. The above lemma implies that the nodes can be partitioned into levels from 0 to L. We introduce notation (referring to the nodes at level n) to denote the collection of nodes with levels equal to n and let denote the number of nodes at that level. As shown in Figure 14b, the nodes in Figure 14a are ordered in accordance with their levels, as indicated by the numbers beside the nodes. For any DNN (), we can define DNN function (with ) by stacking the DNN functions of nodes at level n into a vector as follows:

where is the function derived using the computable subgraph of node . Clearly, because it is formed by the concatenation of . For example, in Figure 14b, and . The order of components in is irrelevant to subsequent analysis of stability conditions.

The stability of a DNN can be measured as the output perturbation against the input perturbation such that

where L is the level of the output node for DNN . A stable deep architecture implies that a deep forward inference is well-posed and robust in noisy environments. A sufficient condition for the stability of a DNN requires that be a bounded, non-increasing function of L when .

Lemma 5.

Let d be the uniform bound defined in (21), (the nodes directly linking to the node a), and denote the number of nodes in . Further, let and denote the restriction of over partition region . Suppose that and denote the CPWL function associated with the computable subgraph of node a and the restriction of the function over , respectively. As defined in (27), denotes the function derived by nodes at level , and denotes the restriction of on domain partition ; i.e.,

(i) For a given n and , there exists such that for any ,

where is referred to as the Lipschitz constant in at level n.

(ii) If there exists level m such that for ,

where is the weight matrix associated with the atomic operation on arc , then the Lipschitz constant is a bounded function of n on .

Proof.

See Appendix A for the proof. □

This lemma establishes local stability in a partition region of a DNN. To achieve global stability in the input space, we invoke the lemma in [5], which indicates that piece-wise functions that maintain local stability have global stability, provided that the functions are piece-wise continuous.

Lemma 6 ([5]).

Let be a partition and with domain be -Lipschitz continuous with for . Let f be defined by for . Then, f is -Lipschitz continuous.
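A one-dimensional example makes the content of this lemma concrete. The function below is a hypothetical continuous piece-wise linear function on three regions with local Lipschitz constants 0.5, 2, and 1. The sketch estimates the largest gain over random pairs of points, including pairs that straddle region boundaries, and compares it with the largest local constant.

```python
import numpy as np

def f(x):
    """Continuous piece-wise linear function with slopes 0.5, 2, and -1."""
    return np.where(x < 0, 0.5 * x, np.where(x < 1, 2.0 * x, 3.0 - x))

local_constants = [0.5, 2.0, 1.0]         # Lipschitz constant on each region
rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, 10000)
y = rng.uniform(-3, 3, 10000)
keep = np.abs(x - y) > 1e-6               # avoid division by tiny distances
gain = np.abs(f(x[keep]) - f(y[keep])) / np.abs(x[keep] - y[keep])
print(gain.max(), max(local_constants))
```

The observed gain does not exceed the largest local constant. Continuity at the breakpoints is essential here: a jump between regions would make the gain unbounded for pairs close to the jump.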

Theorem 2.

For the sake of stability, we adopt the assumptions of Lemma 5. Let DNN with the domain in input space (χ) and let denote the function with nodes of up to level . If there exists level m such that for ,

where d is the uniform bound defined in (21), and is the weight matrix associated with arc ; then, for any partitioning region p of χ, is a bounded, non-increasing function of L on χ. Note that is defined in (28). Hence, is a stable architecture.

Proof.

See Appendix B for the proof. □

This theorem extends the stability of the series-connected DNNs in [5] to general DNNs. According to , the condition determining the stability of a DNN can be expressed as follows:

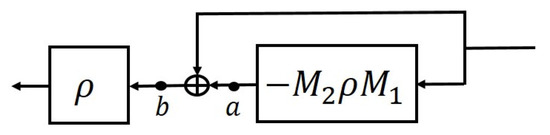

This condition holds, regardless of the size of . Thus, if the matrix is large, then (33) implies that the weight coefficients are sparse/compressible. Note that (32) is a sufficient condition for a DNN to achieve stability; however, it is not a necessary condition. The example in Figure 16 demonstrates that satisfying (32) is not necessary.

Figure 16.

Conditions for stability of ResNet module , where : If (32) is satisfied, then (hence, ) due to the fact that if and only if . In fact, it is sufficient for the model to achieve stability if with (all eigenvalues of lie within ).

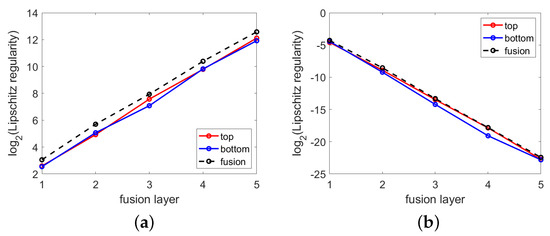

Figure 17 illustrates simulations pertaining to Theorem 2 for the network presented in Figure 9a, which comprises five layers of fusion modules. The simulations illustrate how the weight coefficients affect the Lipschitz constant. In Figure 17a, the upper bound for Lipschitz constant , where (presented as the maximum gain for all pairs of training data), increases with the number of fusion layers when the weight coefficients do not satisfy the stability condition (32). When the weight coefficients are scaled to be compressible in accordance with (32), the upper bound decreases as the number of fusion layers increases, as shown in Figure 17b. This is an indication that the network is stable relative to the input perturbation.

Figure 17.

Stability simulation using the network shown in Figure 9a, in which the maximum gain () for all training pairs is plotted against each fusion layer (j), where (dimensions of the weight matrices and biases in in the top, bottom, and fusion channels of each module are and , respectively; coefficients in are sampled i.i.d. from standard normal distribution (mean zero and variance of one); inputs are 2000 random vectors (each of size ) with entries sampled i.i.d. from the standard normal distribution): (a) maximum gain increases with an increase in the number of fusion layers; (b) maximum gain remains bounded when weight coefficients in are scaled to meet (33) for Theorem 2. Note that for ReLU activation functions.
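The qualitative behavior reported in Figure 17 can be illustrated with a much simpler experiment. The sketch below is not the simulation of this paper: it uses a plain series-connected ReLU network instead of the fusion network of Figure 9a, and, as a stand-in for the scaling required by the stability condition, it divides each weight matrix by its spectral norm so that every layer is 1-Lipschitz. The dimensions and the number of input pairs are arbitrary assumptions.

```python
import numpy as np

def max_gain_per_layer(weights, biases, X, Y):
    """Largest ||f_j(x) - f_j(y)|| / ||x - y|| over the pairs, per layer j."""
    base = np.linalg.norm(X - Y, axis=1)
    hx, hy, gains = X, Y, []
    for W, b in zip(weights, biases):
        hx = np.maximum(hx @ W.T + b, 0.0)     # ReLU layer
        hy = np.maximum(hy @ W.T + b, 0.0)
        gains.append(float(np.max(np.linalg.norm(hx - hy, axis=1) / base)))
    return gains

rng = np.random.default_rng(0)
dim, depth, n_pairs = 20, 5, 1000
weights = [rng.standard_normal((dim, dim)) for _ in range(depth)]
biases = [rng.standard_normal(dim) for _ in range(depth)]
X = rng.standard_normal((n_pairs, dim))
Y = rng.standard_normal((n_pairs, dim))

# Stand-in for the scaling in the text (an assumption): divide each
# matrix by its spectral norm so that every layer is 1-Lipschitz.
scaled = [W / np.linalg.norm(W, 2) for W in weights]

gains_raw = max_gain_per_layer(weights, biases, X, Y)
gains_scaled = max_gain_per_layer(scaled, biases, X, Y)
print(gains_raw)
print(gains_scaled)
```

With the scaled weights, the maximum gain is at most one and cannot increase from one layer to the next, because each layer contracts the distance between every pair of points.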

5. Conclusions

Using an axiomatic approach, we established a systematic method for representing deep feedforward neural networks (DNNs) as directed acyclic graphs (DAGs). The class of DNNs constructed using this approach is referred to as general DNNs, which includes DNNs with pragmatic modules, activation functions, and non-linear transformations. We demonstrated that general DNNs approximate a target function in a coarse-to-fine manner by learning a directed graph (generally not in a tree configuration) through the refinement of input space partitions. Furthermore, if the weight coefficients become increasingly sparse along any path of a graph, then the DNN function gains stability with respect to perturbations in the input space due to a bounded global Lipschitz constant. This study provides a systematic approach to studying a wide range of DNNs with architectures that are more complex than those of simple series-connected DNN models. In future work, we will explore applications of the analysis and conclusions reported in this paper. One such direction is network pruning, for which we refer to [39].

Author Contributions

Conceptualization, W.-L.H.; Software, S.-S.T.; Validation, S.-S.T.; Formal analysis, W.-L.H.; Data curation, S.-S.T.; Writing—original draft, W.-L.H.; Writing—review & editing, W.-L.H.; Visualization, S.-S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The MNIST dataset can be found at https://www.kaggle.com/datasets/oddrationale/mnist-in-csv.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Lemma 5

Proof.

(i) Without loss of generality, the arcs in can be treated as atomic operations associated with functions in base set . The base step is on input node I (i.e., ). Clearly, (30) holds when .

The induction step proceeds over levels. Suppose that (30) holds for all nodes in levels lower than n. If a is a node in level n, then node must be in level . Thus, for ,

where , in accordance with the fact that functions associated with atomic operations on arcs are members of . If the atomic operation is a duplication, then , and if the atomic operation is a series connection/concatenation, then . Furthermore,

Case 1. Consider . For , the bias term in (if any) can be canceled. Applying the uniform bound assumption on activation functions (18) and applying (29) and (30) to levels lower than n results in the following:

Case 2. Consider . Similarly, for , we can obtain the following:

where is the linear part of the affine function (), and is the Lipschitz constant bound defined in (19). Finally, (A3) and (A4) are combined using (21) to yield

where . This concludes the proof of (i).

Hence, . Considering the fact that (31) holds for , we obtain

The fact that leads to the conclusion that is a bounded sequence of n on . □

Appendix B. Proof of Theorem 2

Proof.

In accordance with (32) and Lemma 5, is bounded for any p and n. The fact that activation functions of satisfy (A1) implies that the total number of partitions induced by an activation function is finite. Thus, the number of partition regions induced by is finite. Hence,

is defined and bounded above for any n. In accordance with Lemma 6 and the definition of , we obtain

We define and, for any , obtain

The sequence is bounded for any L such that is stable as . □

References

1. Yang, Y.; Sun, J.; Li, H.; Xu, Z. Deep ADMM-Net for compressive sensing MRI. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

2. Monga, V.; Li, Y.; Eldar, Y.C. Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Process. Mag. 2021, 38, 18–44.

3. Molnar, C. Interpretable Machine Learning. Lulu.com. 2020. Available online: https://christophmolnar.com/books/interpretable-machine-learning/ (accessed on 9 September 2023).

4. Balestriero, R.; Cosentino, R.; Aazhang, B.; Baraniuk, R. The Geometry of Deep Networks: Power Diagram Subdivision. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 15806–15815.

5. Hwang, W.L.; Heinecke, A. Un-rectifying non-linear networks for signal representation. IEEE Trans. Signal Process. 2019, 68, 196–210.

6. Li, Q.; Lin, T.; Shen, Z. Deep learning via dynamical systems: An approximation perspective. arXiv 2019, arXiv:1912.10382.

7. Baraniuk, R. The local geometry of deep learning (PowerPoint slides). In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (Plenary Speech), Rhodes Island, Greece, 4–10 June 2023.

8. Sun, W.; Tsiourvas, A. Learning Prescriptive ReLU Networks. arXiv 2023, arXiv:2306.00651.

9. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

10. Duvenaud, D.; Maclaurin, D.; Aguilera-Iparraguirre, J.; Gómez-Bombarelli, R.; Hirzel, T.; Aspuru-Guzik, A.; Adams, R.P. Convolutional networks on graphs for learning molecular fingerprints. arXiv 2015, arXiv:1509.09292.

11. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

12. Weinan, E. A proposal on machine learning via dynamical systems. Commun. Math. Stat. 2017, 1, 1–11.

13. Lu, Y.; Zhong, A.; Li, Q.; Dong, B. Beyond finite layer neural networks: Bridging deep architectures and numerical differential equations. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 3276–3285.

14. Haber, E.; Ruthotto, L. Stable architectures for deep neural networks. Inverse Probl. 2017, 34, 014004.

15. Chang, B.; Chen, M.; Haber, E.; Chi, E.H. AntisymmetricRNN: A dynamical system view on recurrent neural networks. arXiv 2019, arXiv:1902.09689.

16. Ascher, U.M.; Petzold, L.R. Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations; SIAM: Philadelphia, PA, USA, 1998; Volume 61.

17. Mallat, S. Group invariant scattering. Commun. Pure Appl. Math. 2012, 65, 1331–1398.

18. Bruna, J.; Mallat, S. Invariant scattering convolution networks. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1872–1886.

19. Kuo, C.C.J.; Chen, Y. On data-driven saak transform. J. Vis. Commun. Image Represent. 2018, 50, 237–246.

20. Gregor, K.; LeCun, Y. Learning fast approximations of sparse coding. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 399–406.

21. Zhang, J.; Ghanem, B. ISTA-Net: Interpretable optimization-inspired deep network for image compressive sensing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1828–1837.

22. Chan, S.H. Performance analysis of plug-and-play ADMM: A graph signal processing perspective. IEEE Trans. Comput. Imaging 2019, 5, 274–286.

23. Heinecke, A.; Ho, J.; Hwang, W.L. Refinement and universal approximation via sparsely connected ReLU convolution nets. IEEE Signal Process. Lett. 2020, 27, 1175–1179.

24. Arora, R.; Basu, A.; Mianjy, P.; Mukherjee, A. Understanding deep neural networks with rectified linear units. arXiv 2016, arXiv:1611.01491.

25. Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout networks. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1319–1327.

26. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

27. Gao, B.; Pavel, L. On the properties of the softmax function with application in game theory and reinforcement learning. arXiv 2017, arXiv:1704.00805.

28. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

29. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

30. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

31. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

32. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

34. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

35. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

36. Geman, S.; Bienenstock, E.; Doursat, R. Neural networks and the bias/variance dilemma. Neural Comput. 1992, 4, 1–58.

37. Belkin, M.; Hsu, D.; Ma, S.; Mandal, S. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proc. Natl. Acad. Sci. USA 2019, 116, 15849–15854.

38. Cao, Y.; Chen, Z.; Belkin, M.; Gu, Q. Benign overfitting in two-layer convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 25237–25250.

39. Hwang, W.L. Representation and decomposition of functions in DAG-DNNs and structural network pruning. arXiv 2023, arXiv:2306.09707.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).