Vehicle Detection Based on Information Fusion of mmWave Radar and Monocular Vision

Abstract

1. Introduction

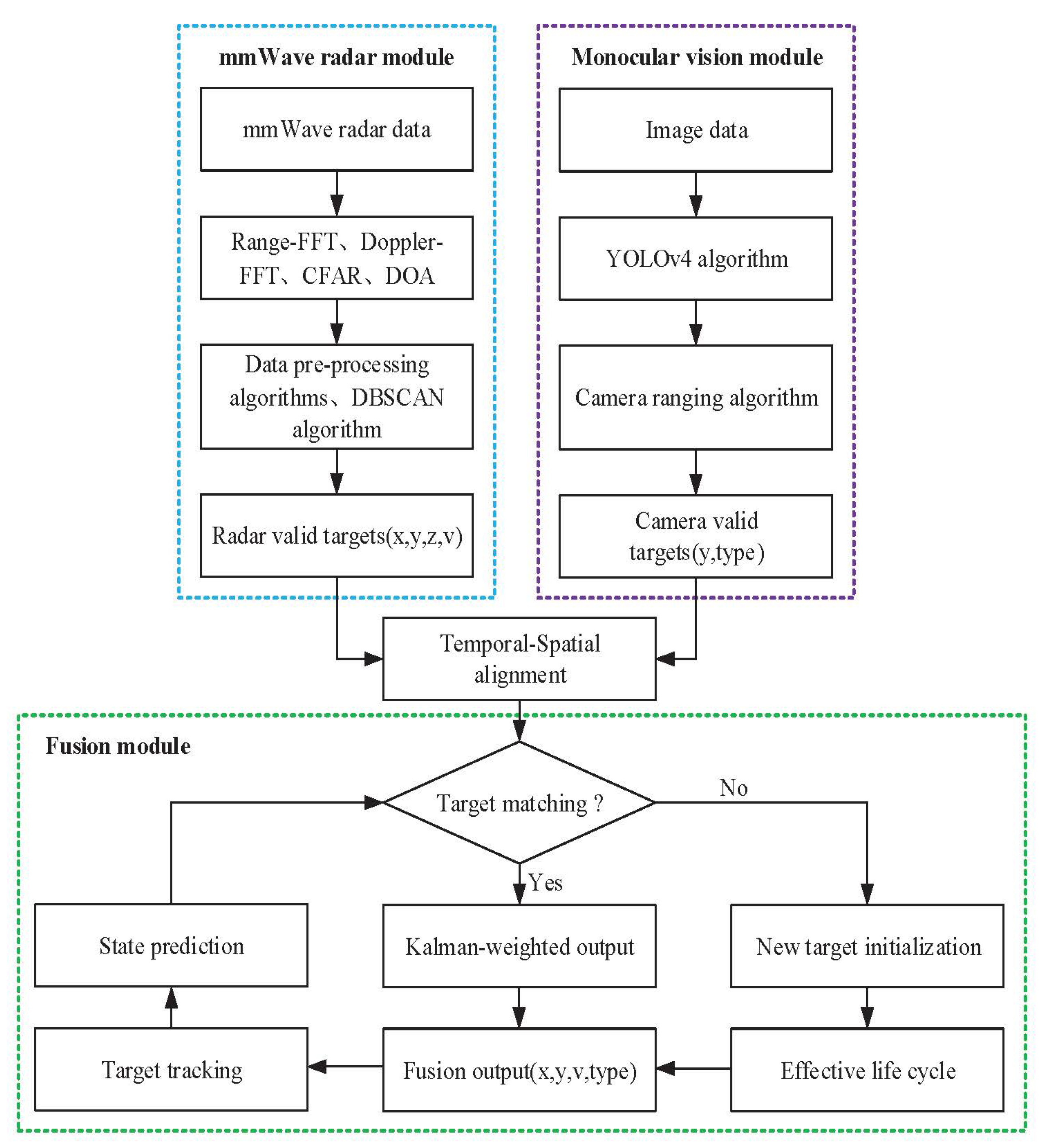

2. Data Fusion Model

3. Realization of Fusion

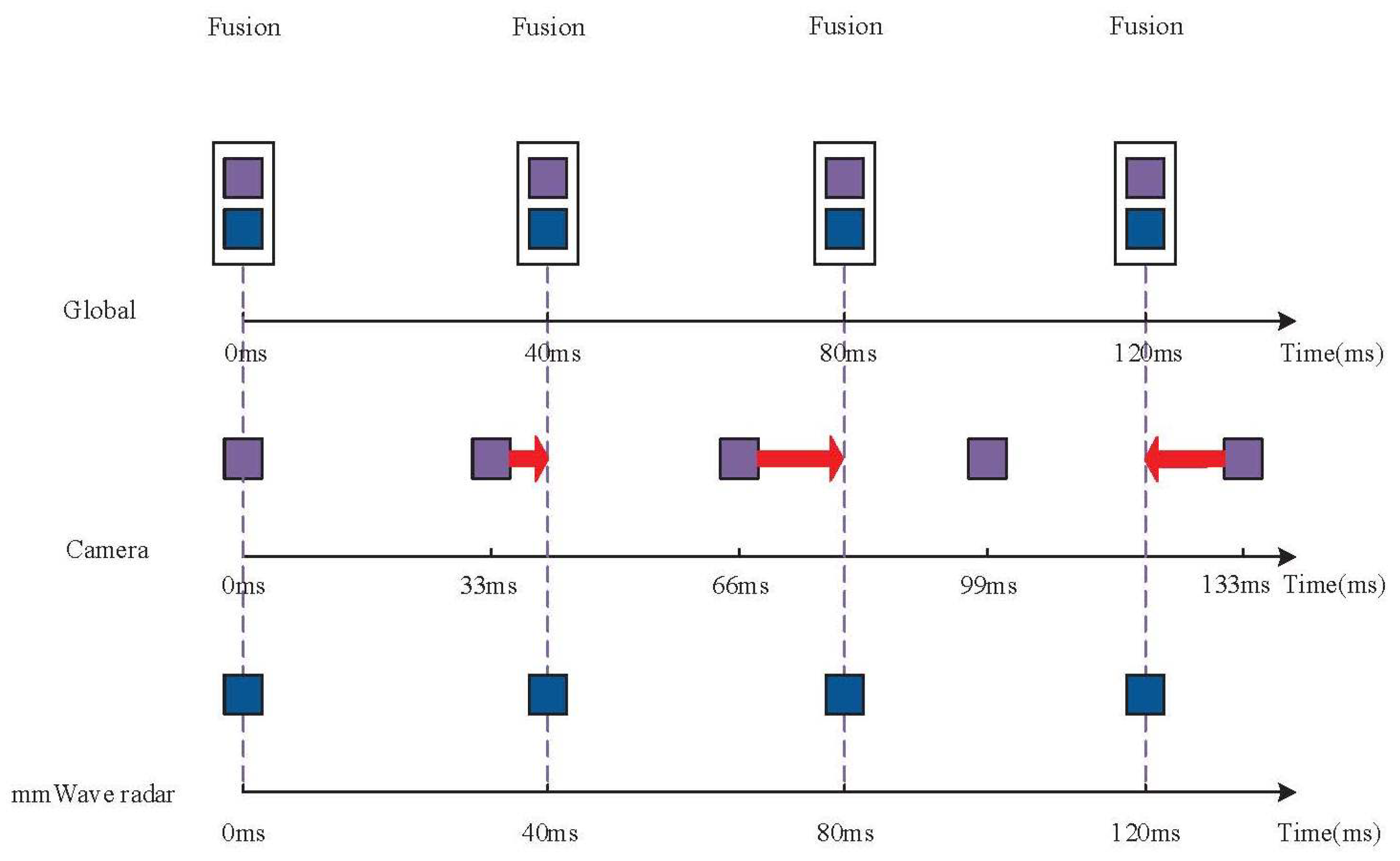

3.1. Temporal Alignment

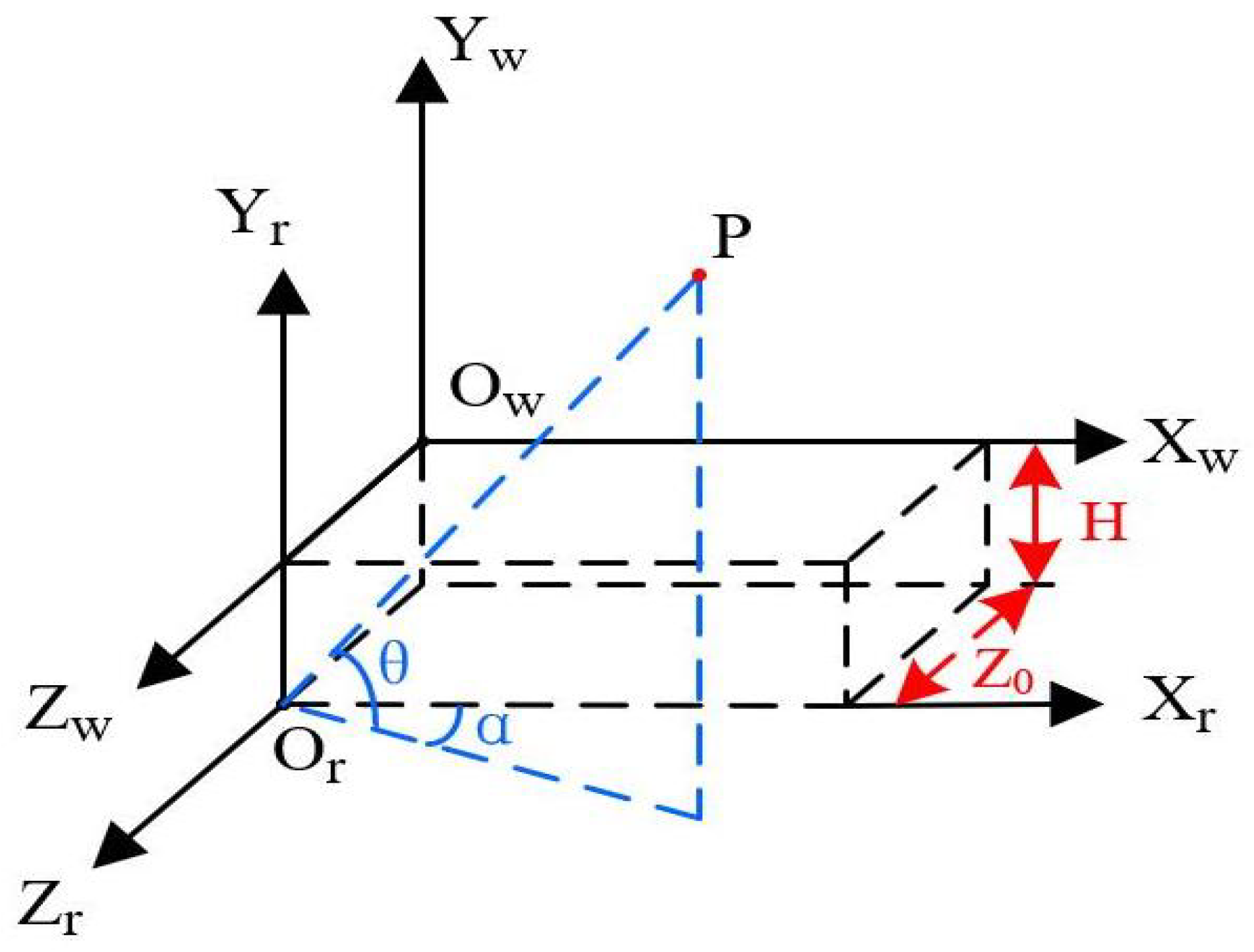

3.2. Spatial Calibration

3.3. mmWave Radar Data Processing

3.3.1. mmWave Radar Data Pre-Processing Algorithms

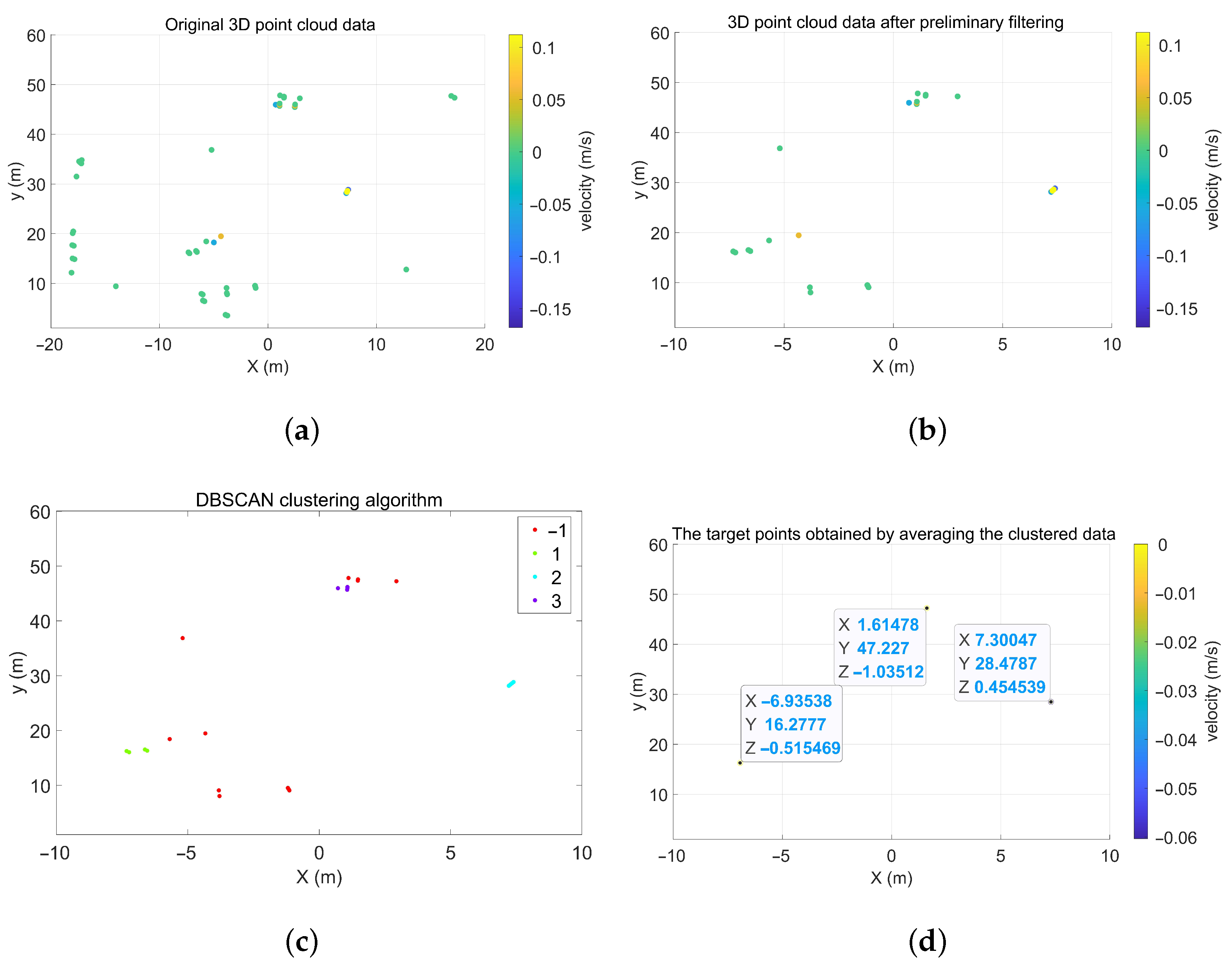

3.3.2. Acquisition of Effective mmWave Radar Targets

- (1) An improved algorithm based on DBSCAN clustering fusion.

- (2) Data association algorithm based on the nearest neighbor method.

3.4. Monocular Camera Data Processing

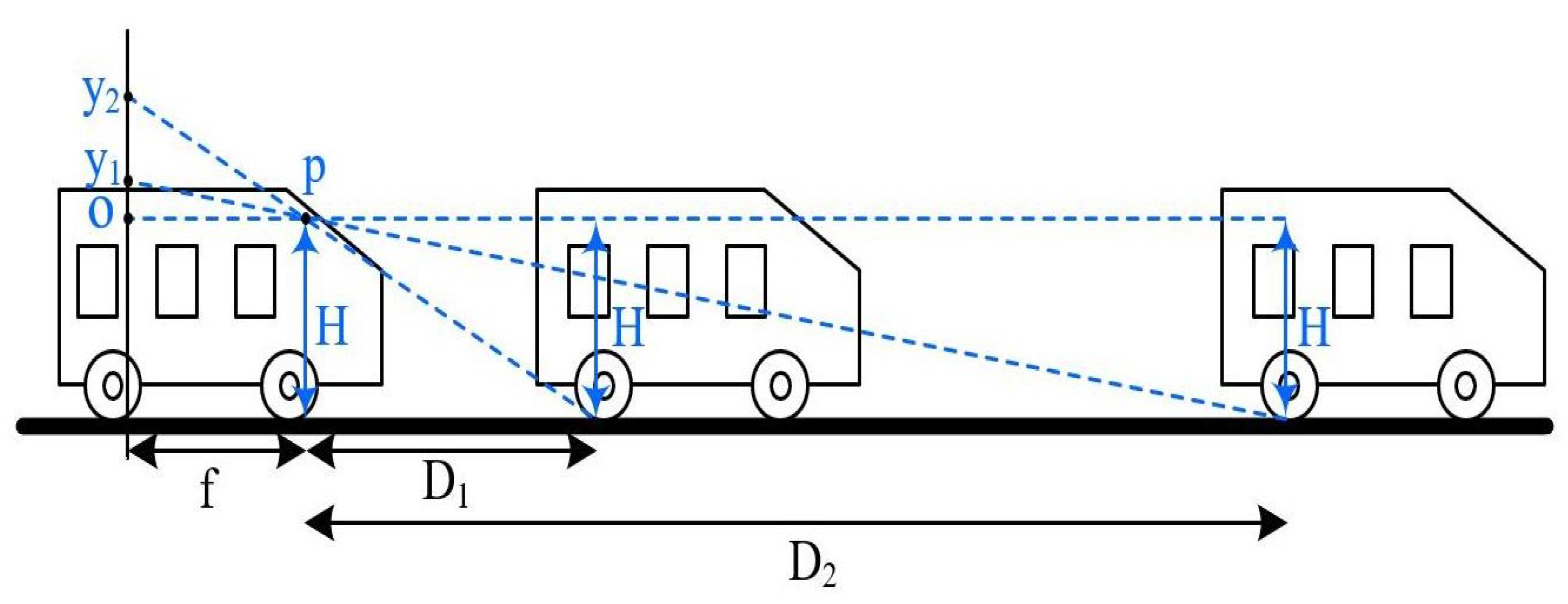

3.4.1. Monocular Camera Ranging Algorithm

3.4.2. Target Detection of the Camera

3.5. Fusion of Sensor Data

3.5.1. Target Matching

3.5.2. Target Fusion

- (1) Target is detected by the monocular camera but not by the mmWave radar.

- (2) Target is detected by the mmWave radar but not by the camera.

4. Experiments and Results

4.1. Validation of mmWave Radar Algorithms

4.2. Validation of YOLOv4 Algorithm and Monocular Camera Ranging Algorithm

4.3. Validation of Data Fusion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Actual Distance (m) | Estimated Distance (m) | Relative Error |
|---|---|---|
| 38 | 44.38 | 16.78% |
| 42 | 45.81 | 9.07% |
| 46 | 45.81 | 0.43% |
| 50 | 47.24 | 5.52% |
| 54 | 51.65 | 4.35% |
| 58 | 52.40 | 9.65% |
| 62 | 52.83 | 14.79% |
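
The error column in the ranging table can be cross-checked from the two distance columns. The sketch below assumes the error is the absolute relative error, |estimated − actual| / actual, which the source does not state explicitly; the names `ROWS` and `relative_error_pct` are illustrative only. Under that assumption the recomputed values agree with the reported ones to within a few hundredths of a percentage point, and the small gaps are plausibly due to rounding of the estimated distances.

```python
# Rows copied from the ranging table:
# (actual distance in m, estimated distance in m, reported error in %).
ROWS = [
    (38, 44.38, 16.78),
    (42, 45.81, 9.07),
    (46, 45.81, 0.43),
    (50, 47.24, 5.52),
    (54, 51.65, 4.35),
    (58, 52.40, 9.65),
    (62, 52.83, 14.79),
]


def relative_error_pct(actual, estimated):
    """Absolute relative error in percent (assumed definition of the error column)."""
    return abs(estimated - actual) / actual * 100.0


# Recompute every row and measure the largest gap to the reported value.
recomputed = [relative_error_pct(actual, est) for actual, est, _ in ROWS]
max_gap = max(abs(calc - row[2]) for calc, row in zip(recomputed, ROWS))

if __name__ == "__main__":
    for (actual, est, reported), calc in zip(ROWS, recomputed):
        print(f"{actual:>3} m  est {est:>6.2f} m  reported {reported:>6.2f}%  recomputed {calc:>6.2f}%")
    print(f"largest gap to reported values: {max_gap:.3f} percentage points")
```

In this table the estimate is too high at the shortest distances (38 m and 42 m) and too low from 46 m onward, with the smallest error near 46 m.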

| | Scene A | Scene B | Scene C |
|---|---|---|---|
| Number of data frames | 186 | 114 | 167 |
| Testing environment | night, fog | night, strong light | day, covered |
| Total targets | 744 | 228 | 708 |
| Targets by vision-only detection | 504 | 114 | 483 |
| Accuracy of vision-only detection | 67.74% | 50.00% | 68.22% |
| Targets by fusion detection | 672 | 178 | 568 |
| Accuracy by fusion detection | 90.32% | 78.07% | 80.23% |
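
The accuracy rows of the scene table follow from the target counts. The sketch below assumes accuracy is defined as detected targets divided by total targets, a definition that reproduces the reported percentages; the names `SCENES`, `accuracy_pct`, and `summarize` are illustrative and not taken from the source.

```python
# Target counts copied from the scene table.
SCENES = {
    "A": {"total": 744, "vision": 504, "fusion": 672},
    "B": {"total": 228, "vision": 114, "fusion": 178},
    "C": {"total": 708, "vision": 483, "fusion": 568},
}


def accuracy_pct(detected, total):
    """Detection accuracy in percent, assumed to be detected / total."""
    return 100.0 * detected / total


def summarize(scenes):
    """Return {scene: (vision accuracy, fusion accuracy, gain in percentage points)}."""
    summary = {}
    for name, counts in scenes.items():
        vision = accuracy_pct(counts["vision"], counts["total"])
        fusion = accuracy_pct(counts["fusion"], counts["total"])
        summary[name] = (vision, fusion, fusion - vision)
    return summary


if __name__ == "__main__":
    for name, (vision, fusion, gain) in summarize(SCENES).items():
        print(f"Scene {name}: vision-only {vision:.2f}%, fusion {fusion:.2f}%, gain {gain:.2f} points")
```

Under this definition, fusion improves on vision-only detection in all three scenes, with the largest gain in Scene B (night, strong light) and the smallest in Scene C (day, covered).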


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cai, G.; Wang, X.; Shi, J.; Lan, X.; Su, T.; Guo, Y. Vehicle Detection Based on Information Fusion of mmWave Radar and Monocular Vision. Electronics 2023, 12, 2840. https://doi.org/10.3390/electronics12132840
