A Motion-Direction-Detecting Model for Gray-Scale Images Based on the Hassenstein–Reichardt Model

Abstract

1. Introduction

2. Methods

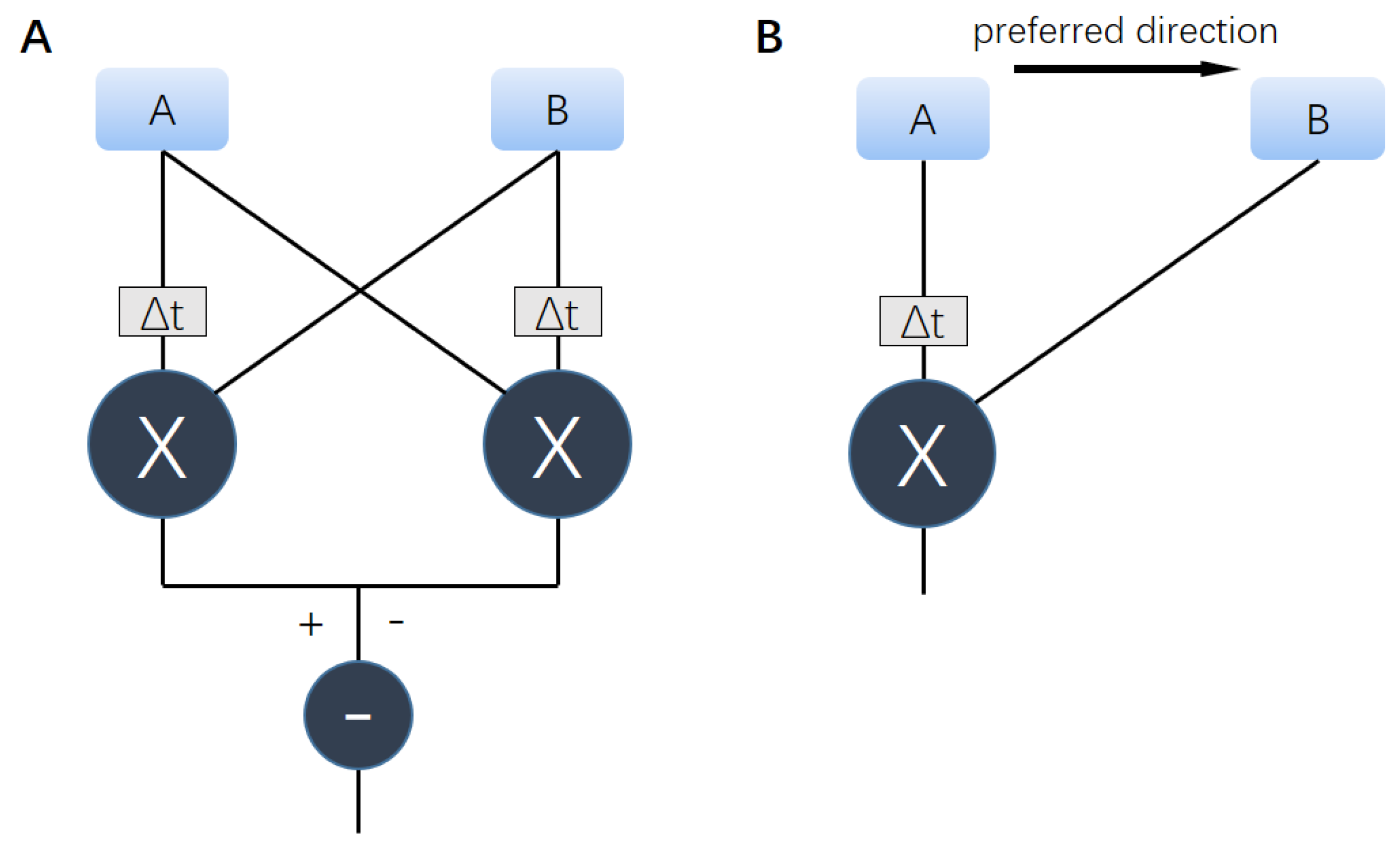

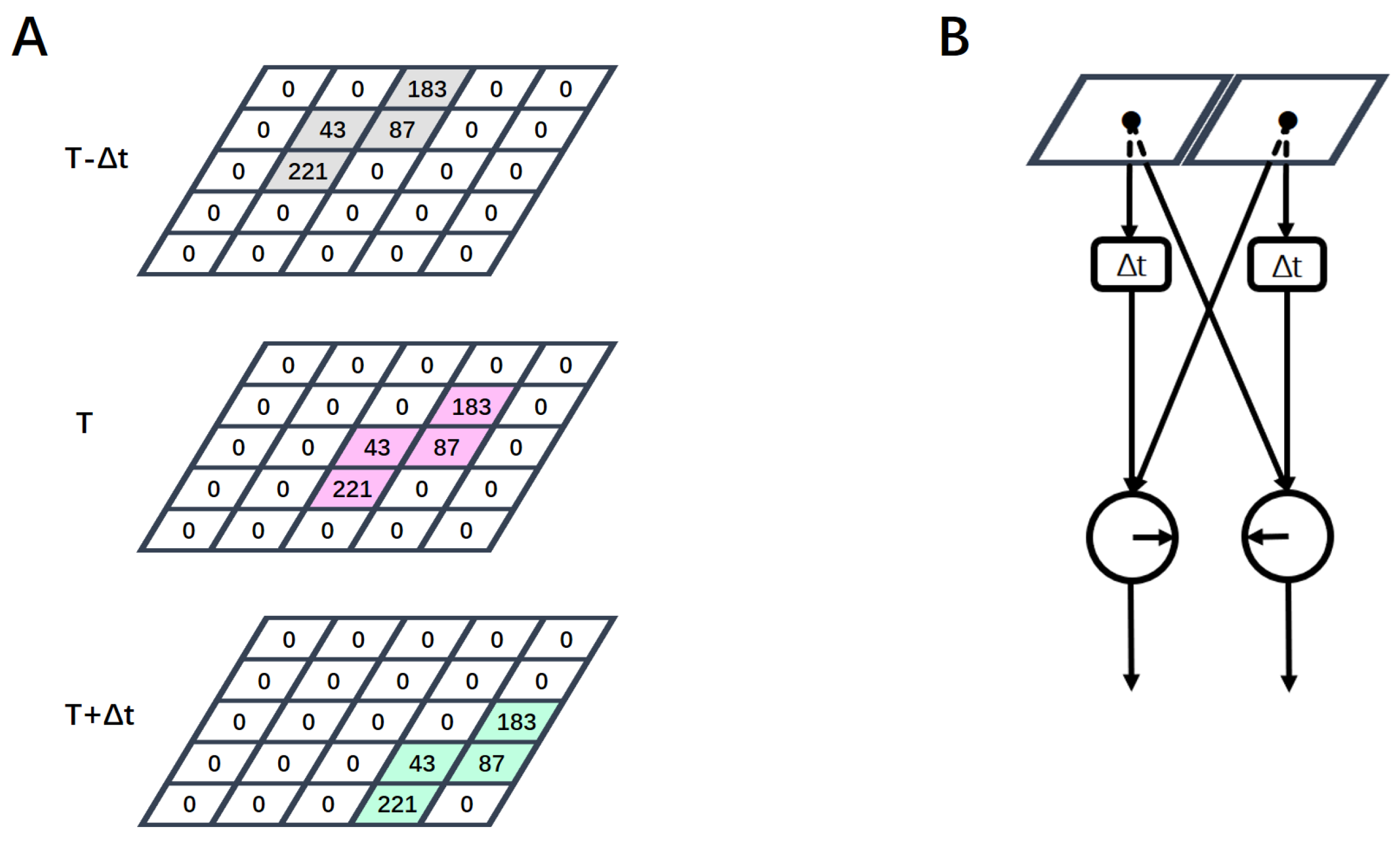

2.1. Hassenstein–Reichardt Correlator Model

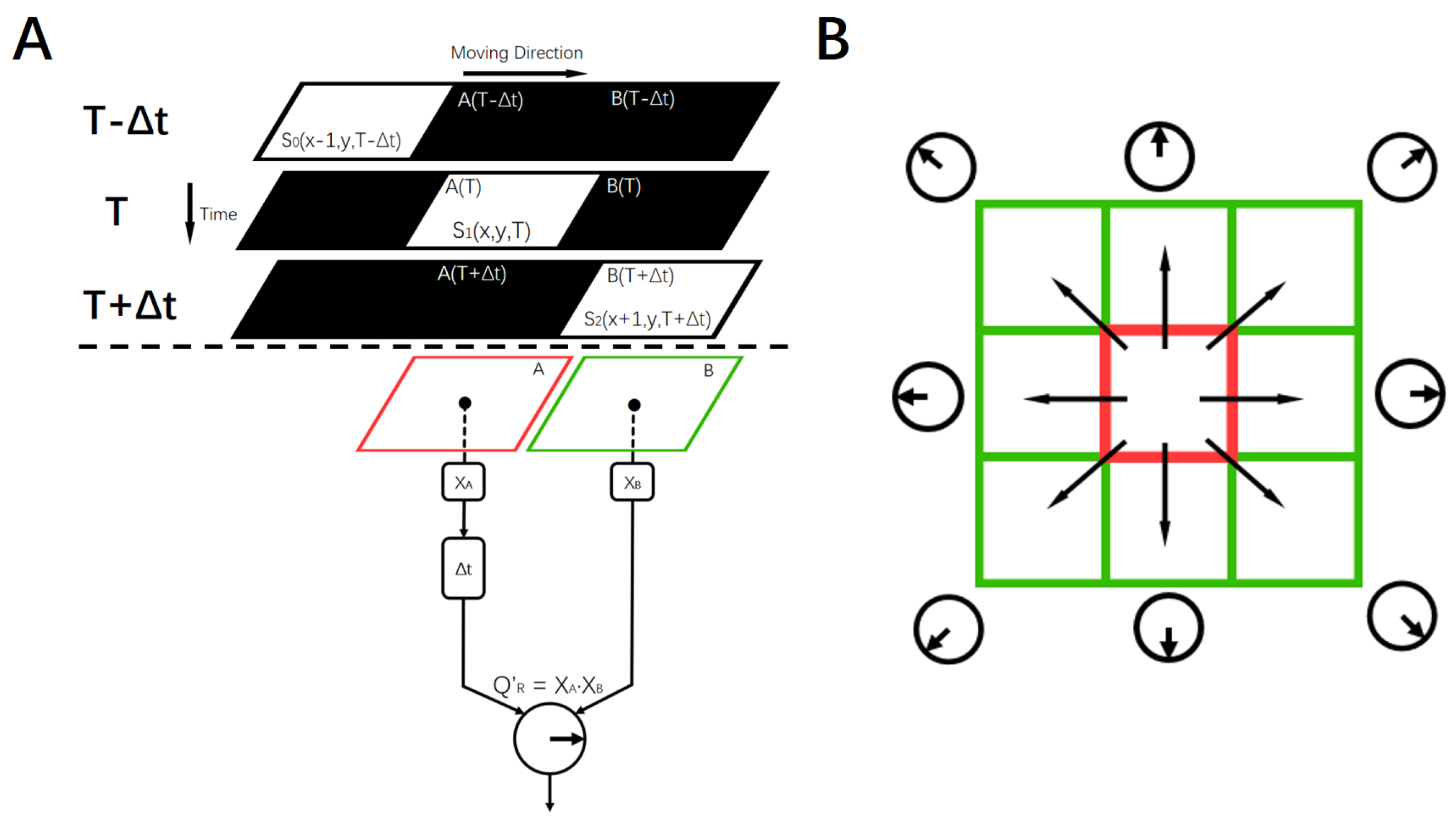

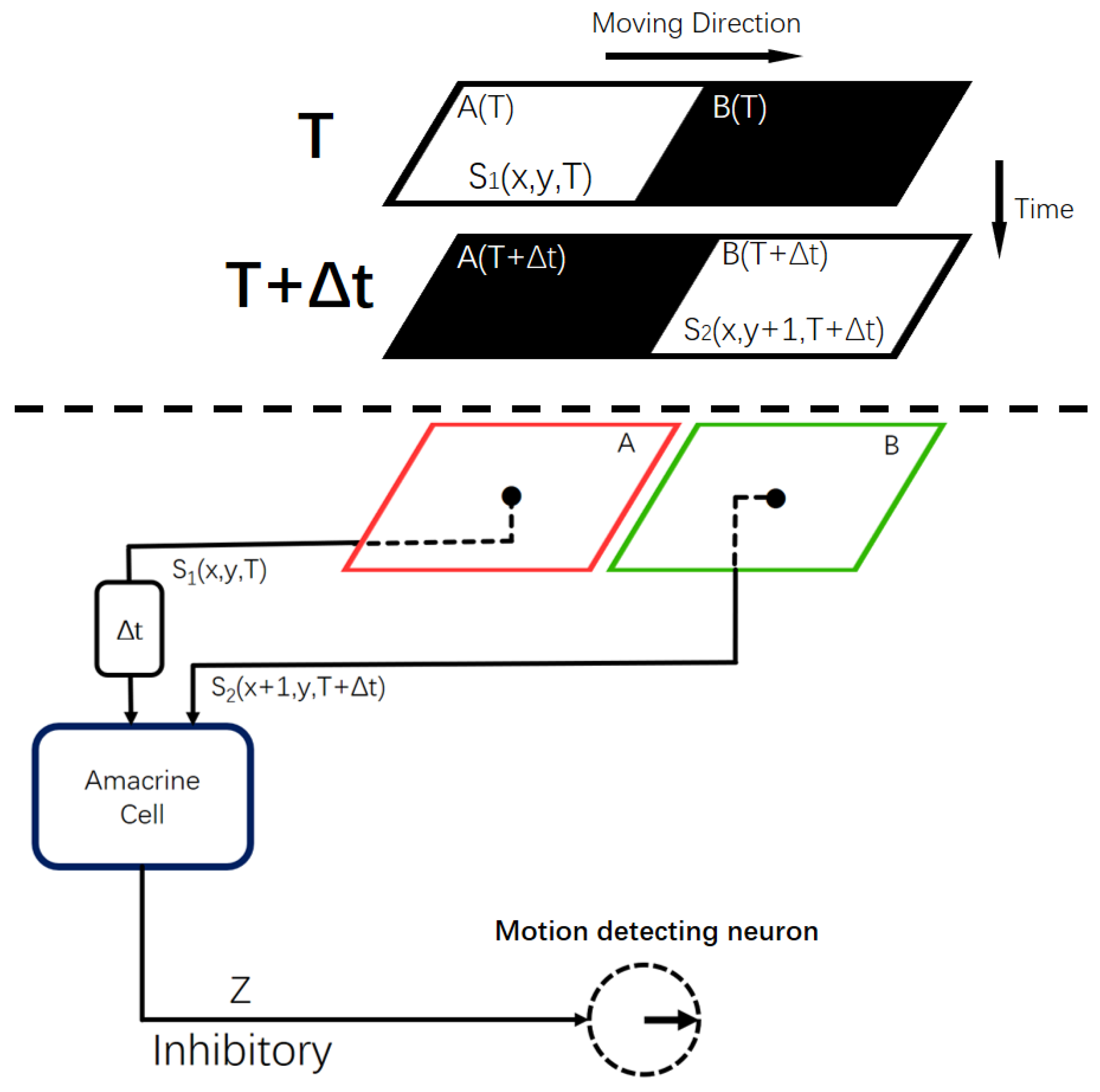

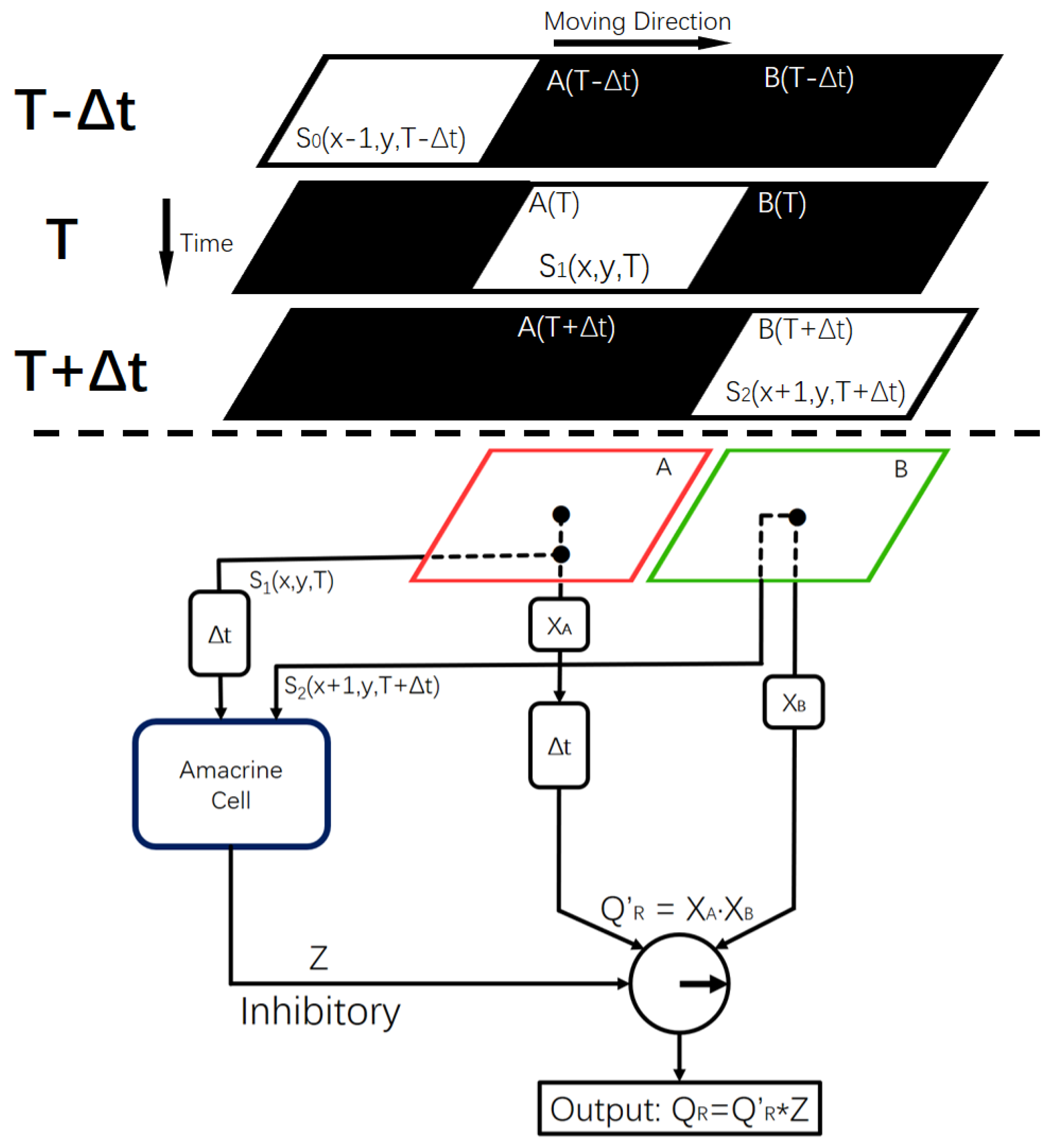

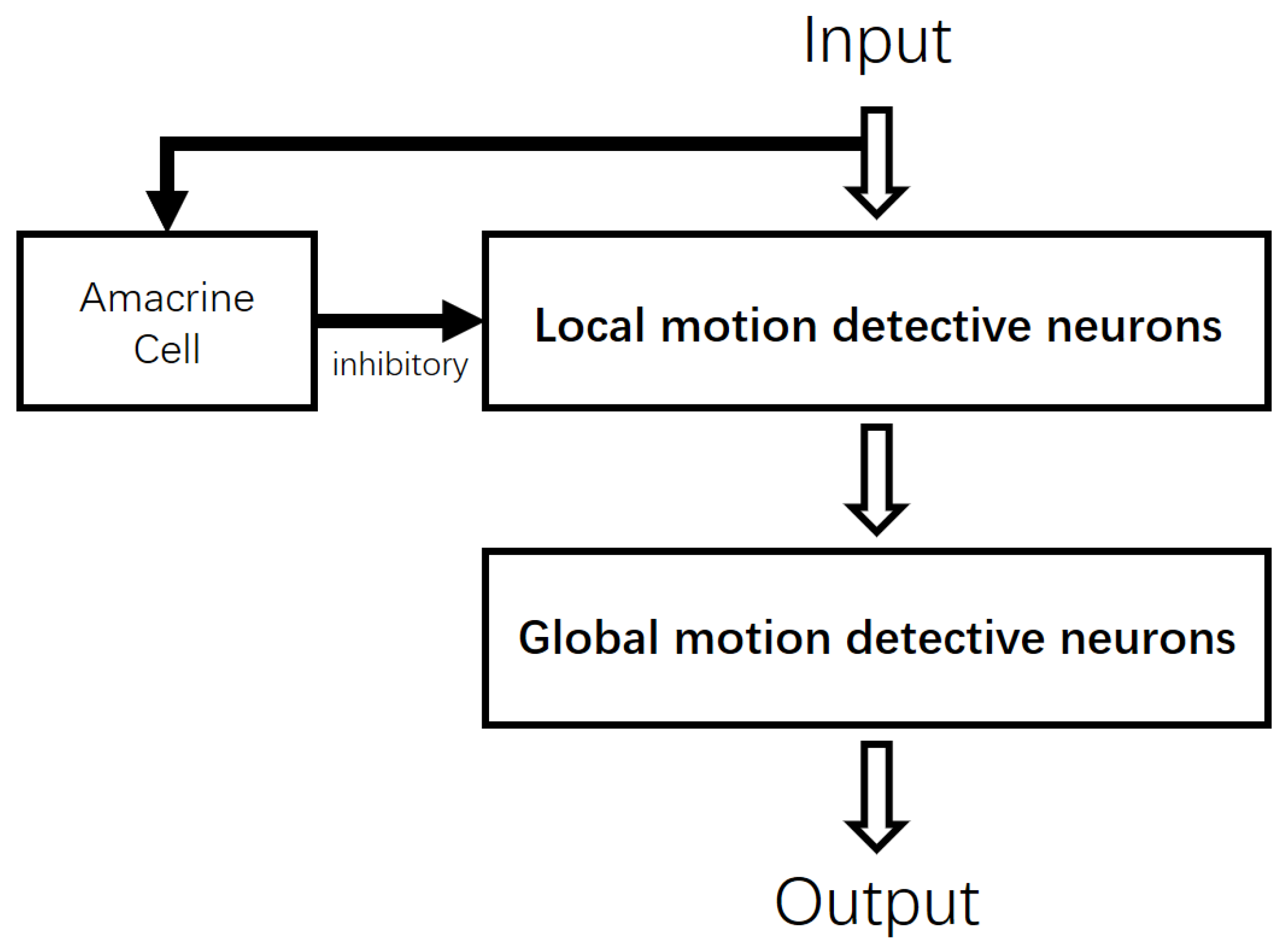

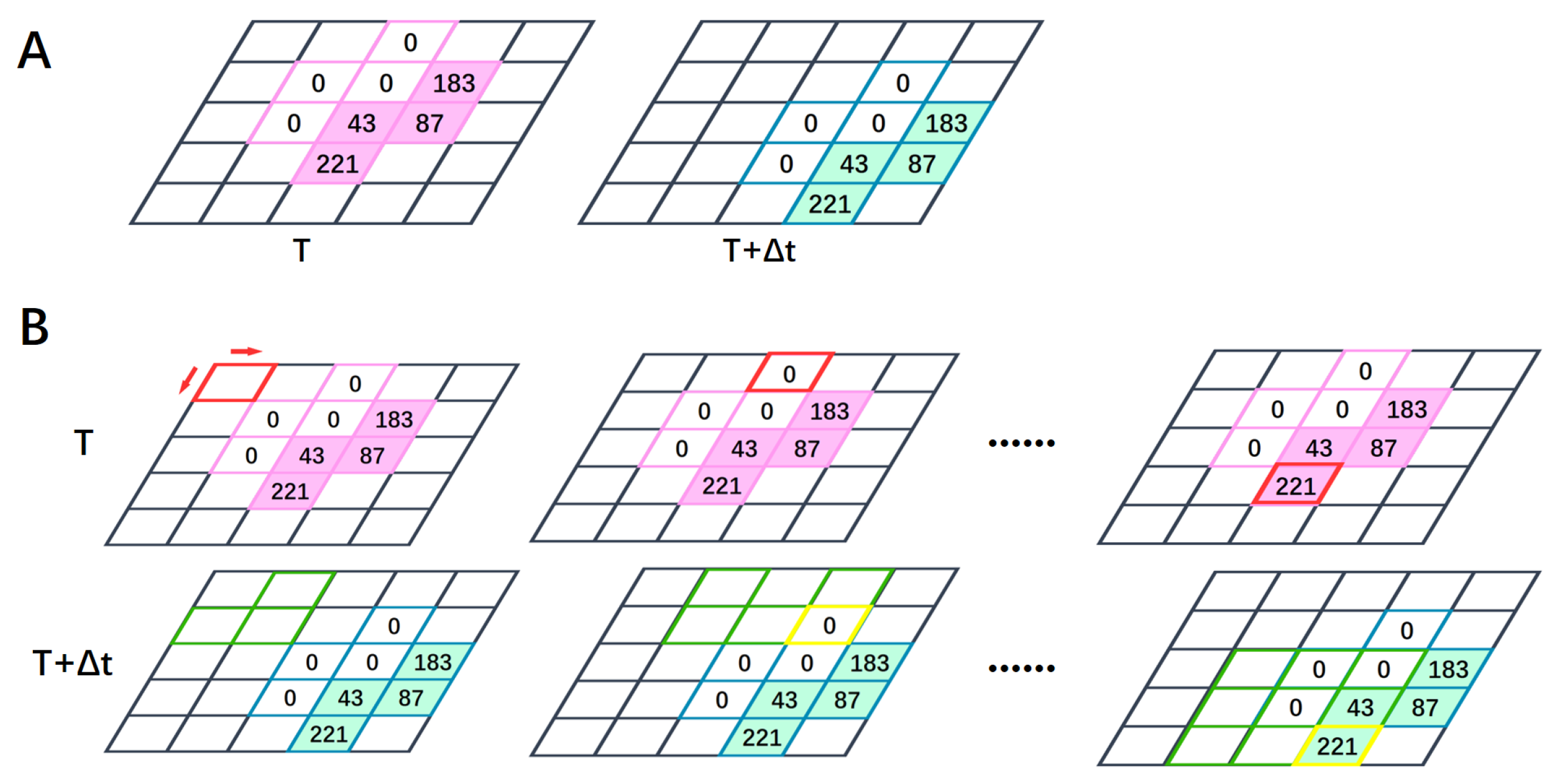

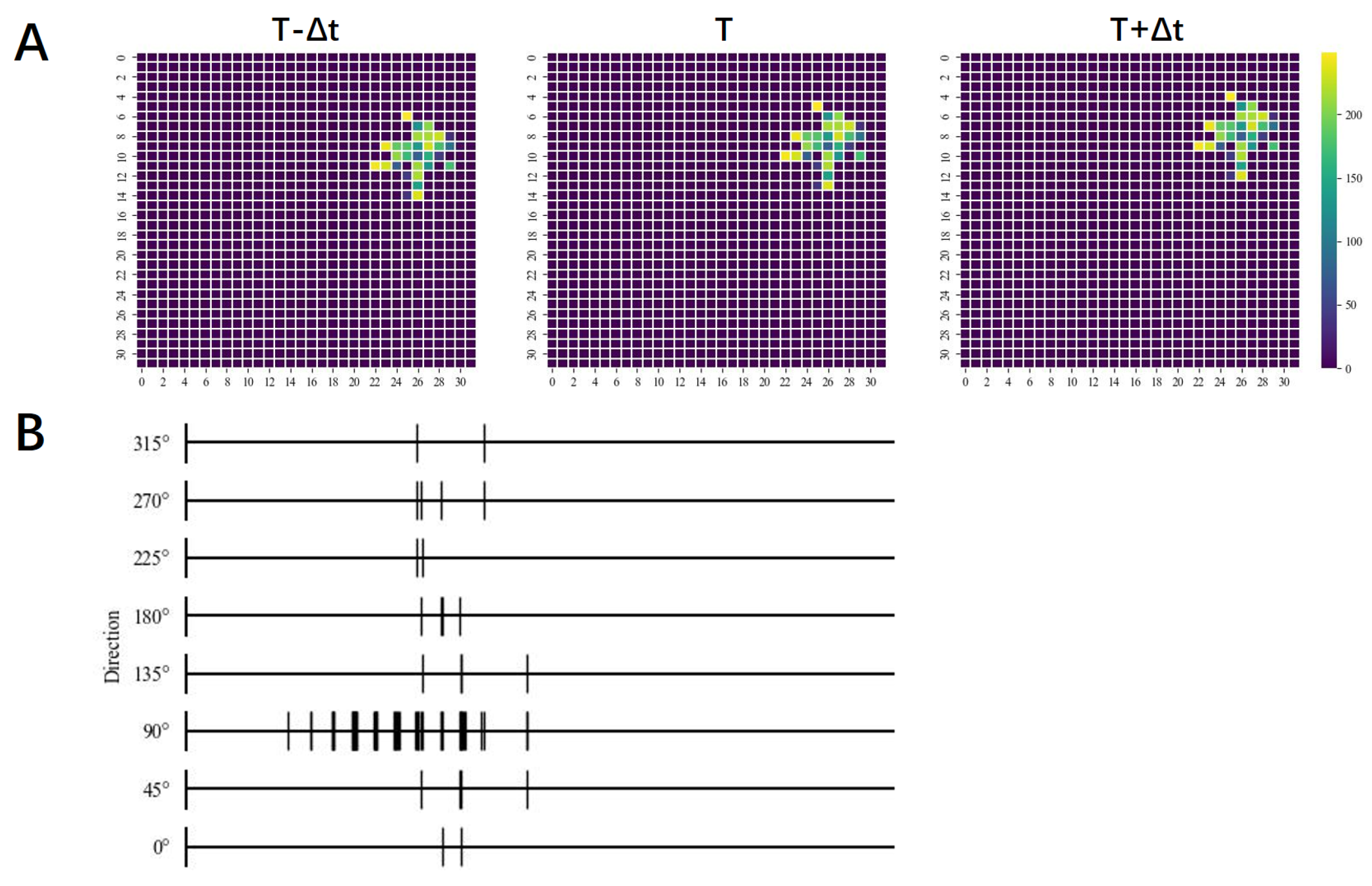

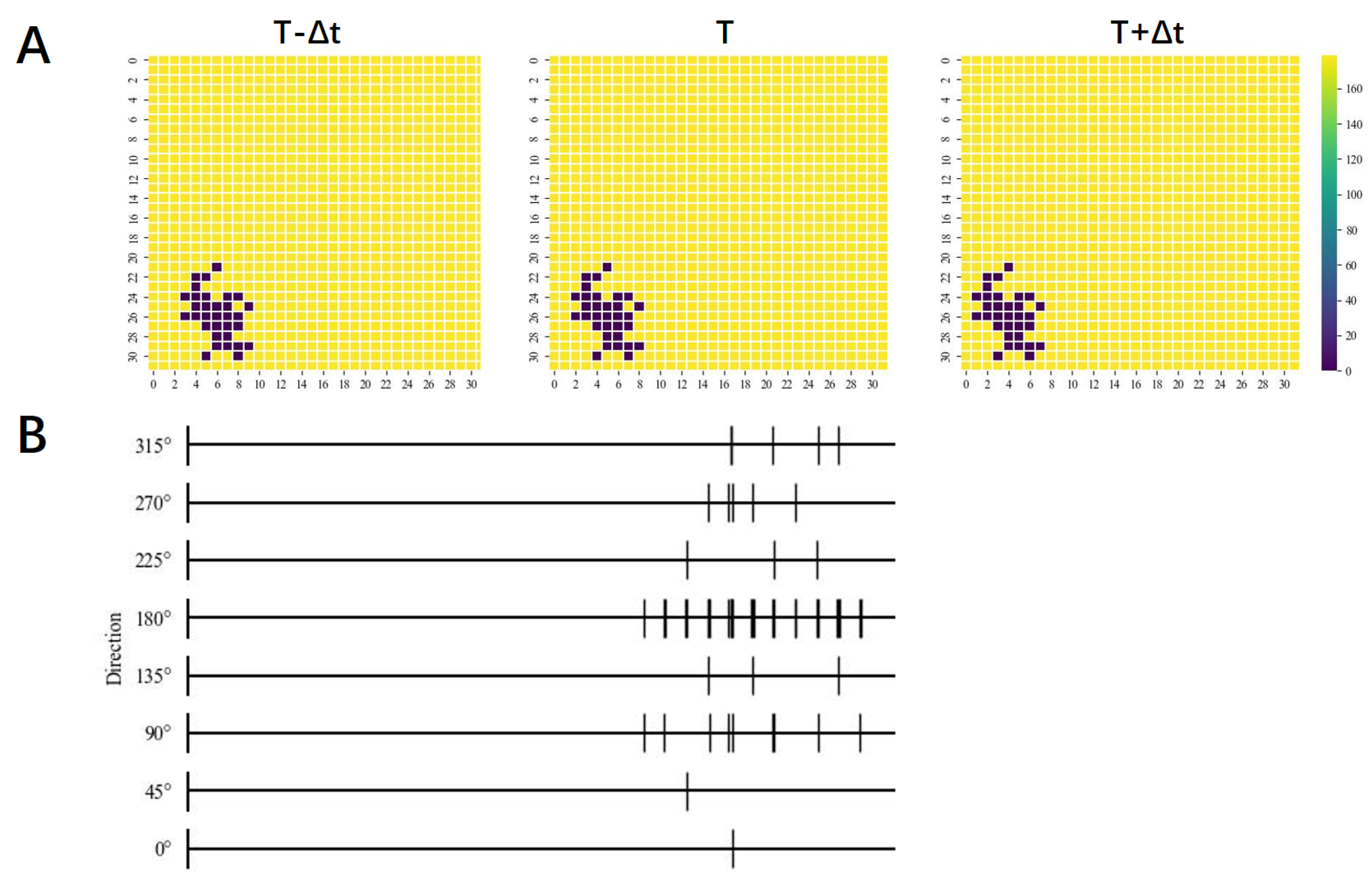

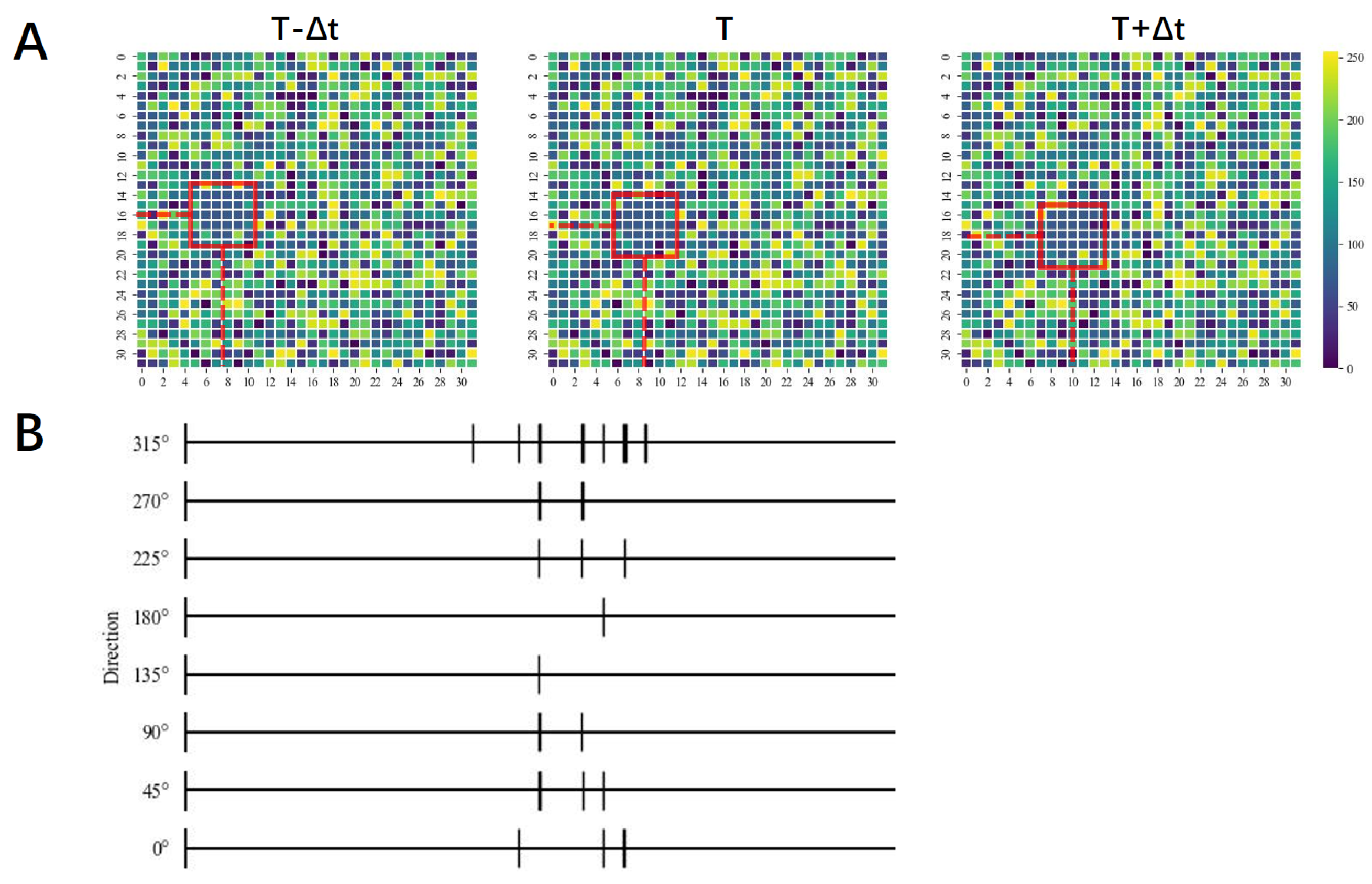

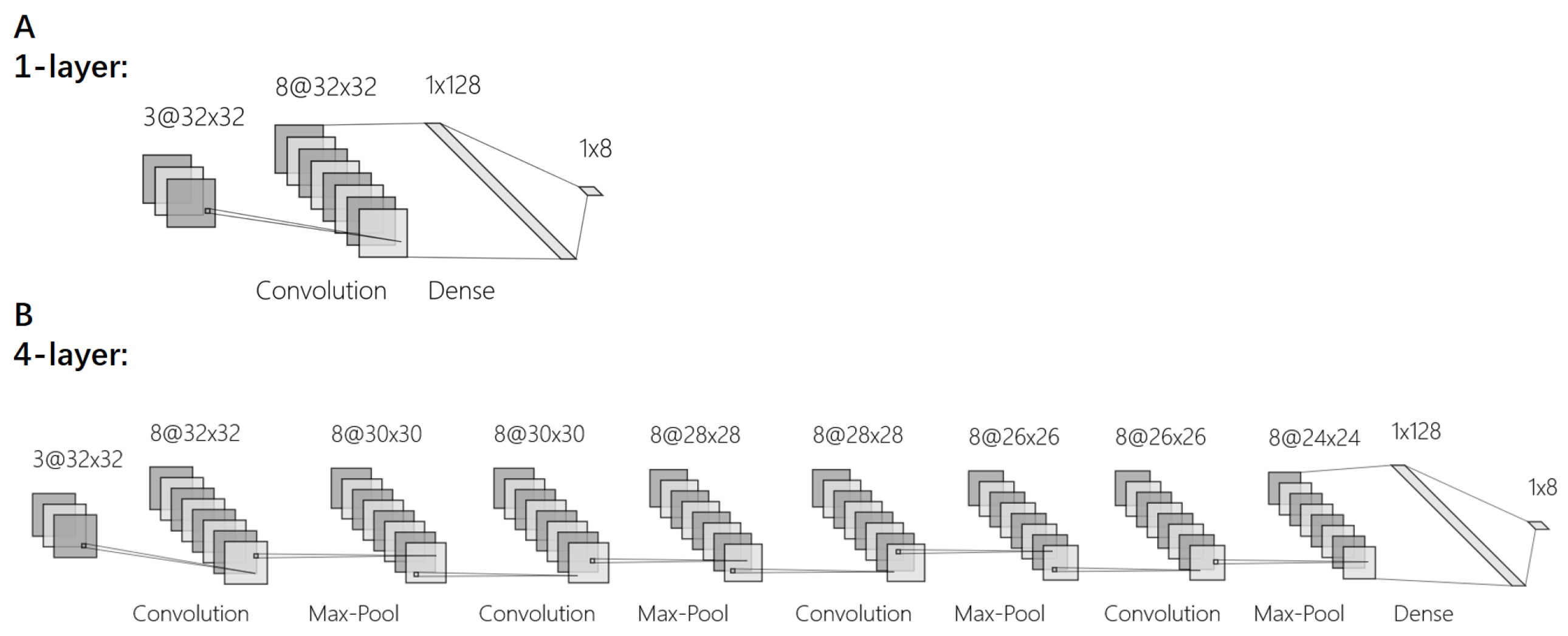
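The classical Hassenstein–Reichardt correlator, introduced in 1956 for the weevil Chlorophanus, compares the signals of two neighboring receptors. Each half-detector multiplies one receptor's delayed signal by the other receptor's undelayed signal. The two mirror-symmetric half-detectors are then subtracted, so the sign of the output encodes the direction of motion. The following is a minimal Python/NumPy sketch of that opponent correlator under stated assumptions. The delay is modeled as a pure shift of `delay` discrete time steps, whereas the classical model uses a low-pass filter, and the function name `hr_correlator` is hypothetical rather than taken from this paper.

```python
import numpy as np


def hr_correlator(a, b, delay=1):
    """Opponent Hassenstein-Reichardt correlator for two neighboring receptors.

    a, b: equal-length 1-D signals over discrete time. Motion from receptor A
    toward receptor B gives a positive output, and the reverse gives a negative one.
    Assumption: the delay is a pure shift of `delay` samples (the classical model
    uses a low-pass filter). The first `delay` output samples are zero because
    the delayed signals are not defined there yet.
    """
    if delay < 1:
        raise ValueError("delay must be at least one time step")
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    out = np.zeros_like(a)
    # Half-detector tuned to A -> B: delayed A times current B.
    # Mirror half-detector tuned to B -> A: delayed B times current A.
    out[delay:] = a[:-delay] * b[delay:] - b[:-delay] * a[delay:]
    return out


if __name__ == "__main__":
    a = [0, 1, 0, 0]  # a bright spot passes receptor A at t = 1 ...
    b = [0, 0, 1, 0]  # ... and receptor B at t = 2
    print(hr_correlator(a, b).sum())  # positive: motion from A toward B
    print(hr_correlator(b, a).sum())  # negative: the reverse direction
```

Subtracting the mirror half-detector is what makes the unit direction-selective. A stimulus that reaches both receptors at once drives both halves equally, so their contributions cancel.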

2.2. Local Motion-Direction-Detecting Neuron for Gray-Scale Images

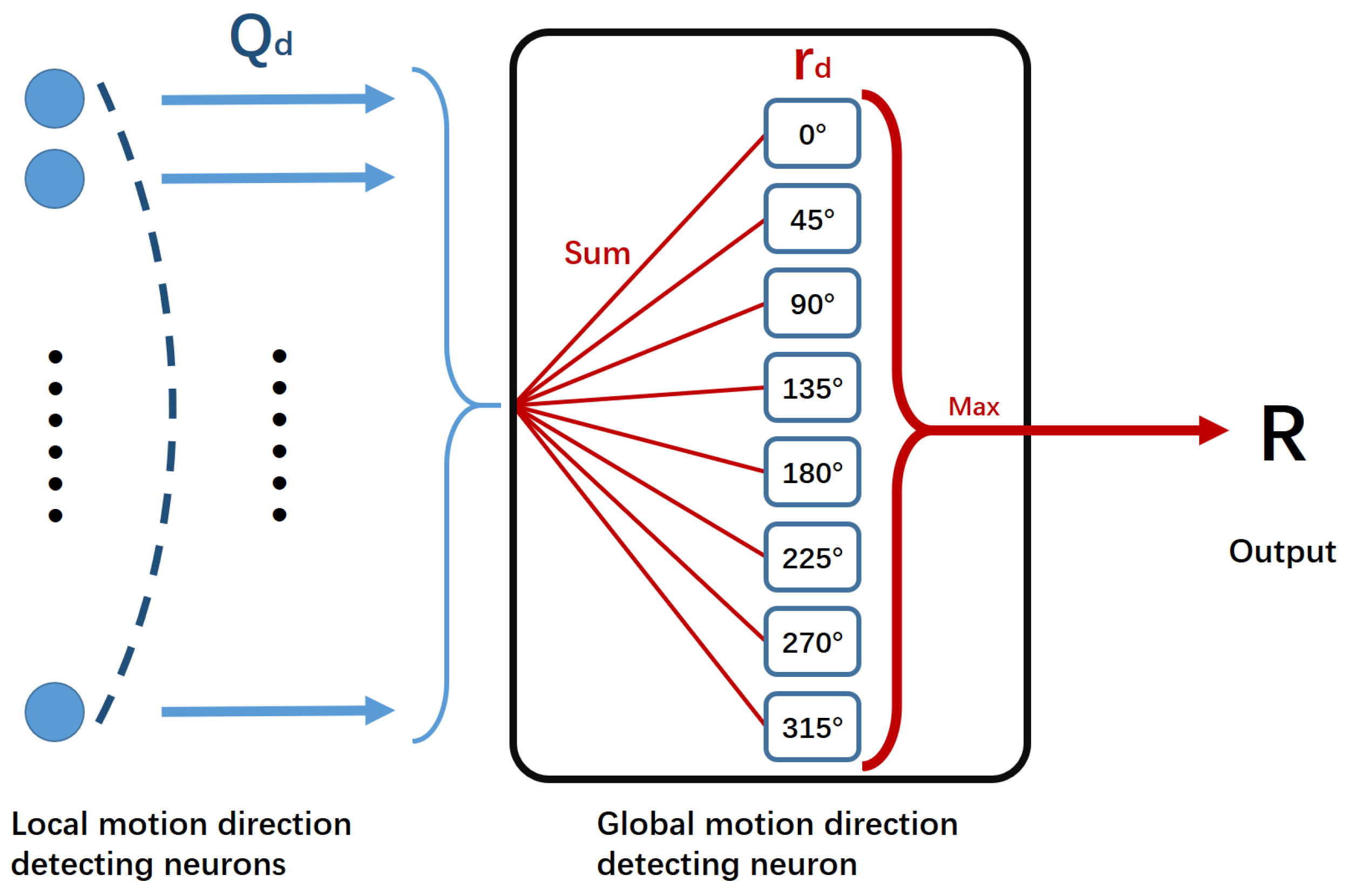
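One way to carry the correlator over to gray-scale frames is to treat each pixel as a receptor and compare it with one of its eight neighbors across two consecutive frames. Multiplication alone does not test whether the same gray level has moved. The sketch below therefore replaces it with a hypothetical match function, which reports whether two gray levels are equal within a tolerance `tol`, and keeps the opponent subtraction of the two half-detectors. The names `DIRECTIONS`, `match` and `local_response`, the tolerance rule, and the row/column offset convention are all assumptions for illustration. They are not the local neuron defined in this paper.

```python
import numpy as np

# Hypothetical (row, col) offsets of eight preferred directions; rows grow downward.
DIRECTIONS = {
    "right": (0, 1), "left": (0, -1), "up": (-1, 0), "down": (1, 0),
    "up-right": (-1, 1), "up-left": (-1, -1),
    "down-right": (1, 1), "down-left": (1, -1),
}


def match(x, y, tol=0.0):
    """Hypothetical gray-scale replacement for the correlator's product:
    1 if the two gray levels agree within `tol`, otherwise 0."""
    return int(abs(float(x) - float(y)) <= tol)


def local_response(f0, f1, i, j, direction, tol=0.0):
    """Opponent response of the local unit at pixel (i, j) for one direction.

    f0, f1: consecutive gray-scale frames (2-D arrays). The neighbor
    (i + di, j + dj) is assumed to lie inside the frame. Returns +1, 0 or -1.
    """
    di, dj = DIRECTIONS[direction]
    ni, nj = i + di, j + dj
    forward = match(f0[i, j], f1[ni, nj], tol)   # gray level moved pixel -> neighbor
    backward = match(f0[ni, nj], f1[i, j], tol)  # gray level moved neighbor -> pixel
    return forward - backward


if __name__ == "__main__":
    f0 = np.zeros((3, 6)); f0[1, 1:3] = 200  # object at columns 1-2
    f1 = np.zeros((3, 6)); f1[1, 2:4] = 200  # object shifted one pixel right
    print(local_response(f0, f1, 1, 2, "right"))
    print(local_response(f0, f1, 1, 2, "left"))
```

In a uniform region both half-detectors match and cancel, so in this sketch only pixels at the edges of a moving object produce a nonzero response.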

2.3. Global Motion-Direction-Detecting System
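A global stage can then pool the local units. The sketch below is an assumption, not the paper's exact system. It evaluates an opponent, match-based local response at every pixel whose neighbor lies inside the frame, sums the responses per direction, and reports the direction with the largest total, in the spirit of counting activated local detectors. It is vectorized with NumPy slicing. The names `OFFSETS` and `global_direction` and the exact-match default `tol=0.0` are hypothetical.

```python
import numpy as np

# Hypothetical (row, col) offsets of the eight preferred directions; rows grow downward.
OFFSETS = {
    "right": (0, 1), "left": (0, -1), "up": (-1, 0), "down": (1, 0),
    "up-right": (-1, 1), "up-left": (-1, -1),
    "down-right": (1, 1), "down-left": (1, -1),
}


def global_direction(f0, f1, tol=0.0):
    """Sum opponent local responses per direction and return the winner.

    f0, f1: consecutive gray-scale frames of equal shape.
    Returns (best_direction, totals), where totals maps direction -> summed response.
    """
    f0 = np.asarray(f0, dtype=float)
    f1 = np.asarray(f1, dtype=float)
    h, w = f0.shape
    totals = {}
    for name, (di, dj) in OFFSETS.items():
        # Center pixels whose neighbor (i + di, j + dj) lies inside the frame ...
        c = (slice(max(0, -di), h - max(0, di)), slice(max(0, -dj), w - max(0, dj)))
        # ... and the corresponding neighbor pixels.
        n = (slice(max(0, di), h - max(0, -di)), slice(max(0, dj), w - max(0, -dj)))
        forward = np.abs(f0[c] - f1[n]) <= tol   # gray level moved center -> neighbor
        backward = np.abs(f0[n] - f1[c]) <= tol  # gray level moved neighbor -> center
        totals[name] = int(forward.sum()) - int(backward.sum())
    best = max(totals, key=totals.get)
    return best, totals


if __name__ == "__main__":
    f0 = np.zeros((5, 8)); f0[1:4, 2:4] = 200  # 3x2 object at columns 2-3
    f1 = np.zeros((5, 8)); f1[1:4, 3:5] = 200  # same object one pixel to the right
    print(global_direction(f0, f1)[0])  # right
```

For this example the total for `"right"` is 12 and the total for `"left"` is -12. Each opponent unit for a direction has a mirrored counterpart for the opposite direction, so away from the frame border the two totals cancel pairwise.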

3. Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Size∖Type | C0 | CC | CR | R0 | RC | RR | 0C | 0R |
|---|---|---|---|---|---|---|---|---|
| 1 | 100% | 100% | 99.38% | 100% | 100% | 99.26% | 100% | 100% |
| 2 | 100% | 100% | 99.5% | 100% | 100% | 99.99% | 100% | 99.82% |
| 4 | 100% | 100% | 99.8% | 100% | 100% | 100% | 100% | 99.95% |
| 8 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 99.99% |
| 16 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| 32 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| 64 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| 128 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |

| Size∖Type | C0 | CC | CR | R0 | RC | RR | 0C | 0R |
|---|---|---|---|---|---|---|---|---|
| 1 | 98.57% | 59.33% | 12.95% | 98.94% | 66.52% | 12.38% | 96.58% | 21.32% |
| 2 | 99.29% | 51.04% | 12.64% | 99.47% | 69.2% | 12.19% | 96.5% | 38.49% |
| 4 | 99.53% | 41.61% | 13.06% | 99.46% | 72.32% | 12.64% | 88.23% | 36.96% |
| 8 | 99.72% | 67.94% | 13.93% | 99.64% | 89.45% | 21.05% | 89.66% | 26.73% |
| 16 | 99.93% | 74.12% | 14.23% | 99.72% | 92.54% | 12.08% | 90.58% | 36.07% |
| 32 | 99.95% | 80.24% | 14.3% | 99.99% | 92.81% | 28.1% | 82.32% | 29.01% |
| 64 | 99.99% | 80.11% | 13.56% | 100% | 99.29% | 28.57% | 91.12% | 38.43% |
| 128 | 100% | 69.95% | 17.24% | 100% | 99.38% | 19.32% | 91.16% | 47.26% |

| Size∖Type | C0 | CC | CR | R0 | RC | RR | 0C | 0R |
|---|---|---|---|---|---|---|---|---|
| 1 | 99.98% | 96.1% | 60.08% | 99.87% | 88.36% | 43.7% | 99.37% | 92.41% |
| 2 | 99.93% | 96.69% | 47.58% | 99.98% | 98.11% | 51.58% | 99.62% | 96.96% |
| 4 | 99.84% | 96.99% | 74.69% | 99.98% | 99.02% | 45.37% | 99.33% | 97.03% |
| 8 | 99.99% | 95.55% | 21.71% | 99.95% | 99.51% | 70.99% | 99.47% | 98.04% |
| 16 | 99.93% | 93.59% | 68.68% | 99.91% | 99.53% | 65.18% | 99.86% | 97.96% |
| 32 | 99.88% | 95.4% | 68.98% | 99.89% | 99.66% | 58.05% | 99.54% | 98.46% |
| 64 | 99.86% | 96.38% | 69.44% | 99.87% | 99.71% | 49.72% | 99.87% | 99.18% |
| 128 | 99.88% | 92.22% | 67.39% | 99.61% | 99.71% | 50.46% | 99.76% | 98.75% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Todo, Y.; Yan, C.; Tang, Z. A Motion-Direction-Detecting Model for Gray-Scale Images Based on the Hassenstein–Reichardt Model. Electronics 2023, 12, 2481. https://doi.org/10.3390/electronics12112481
