Exploring Lightweight Deep Learning Solution for Malware Detection in IoT Constraint Environment

Abstract

1. Introduction

Contributions

- Exploring a lightweight deep learning-based approach that is applicable to resource-constrained IoT devices.

- Proposing deep learning models with a minimal number of parameters and hidden layers.

- Evaluating the proposed approach on an existing state-of-the-art malware dataset.

- Outperforming the existing baselines in terms of accuracy, precision and recall.

2. Literature Review

3. Proposed Approach

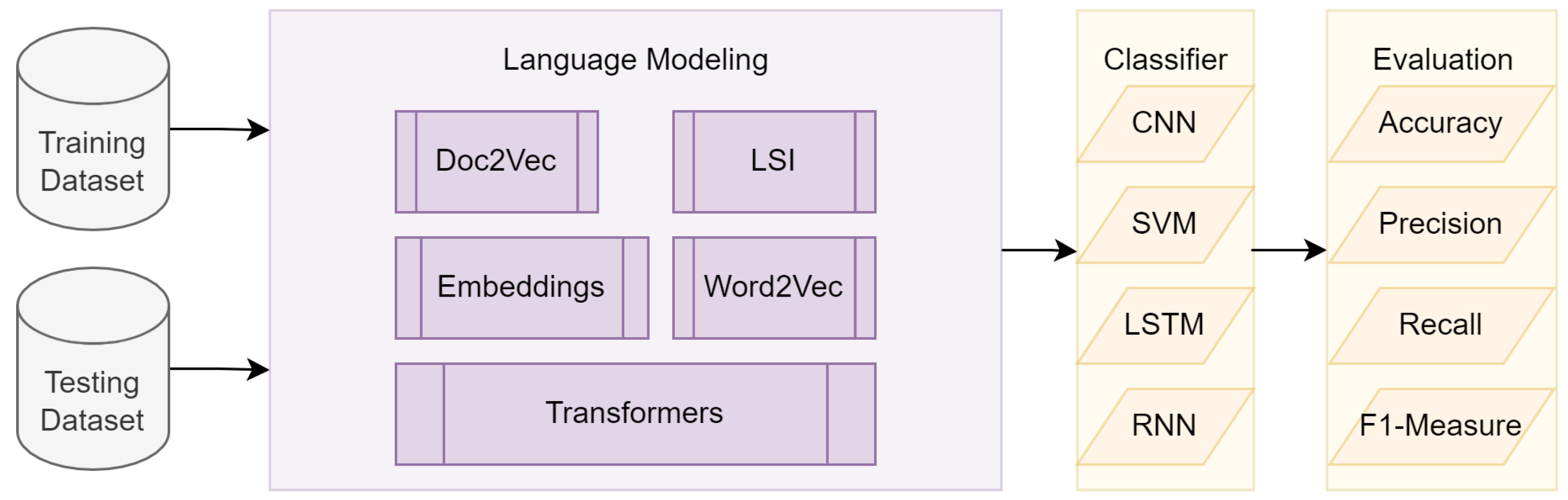

3.1. Overview of the Approach

3.2. Architectural Design

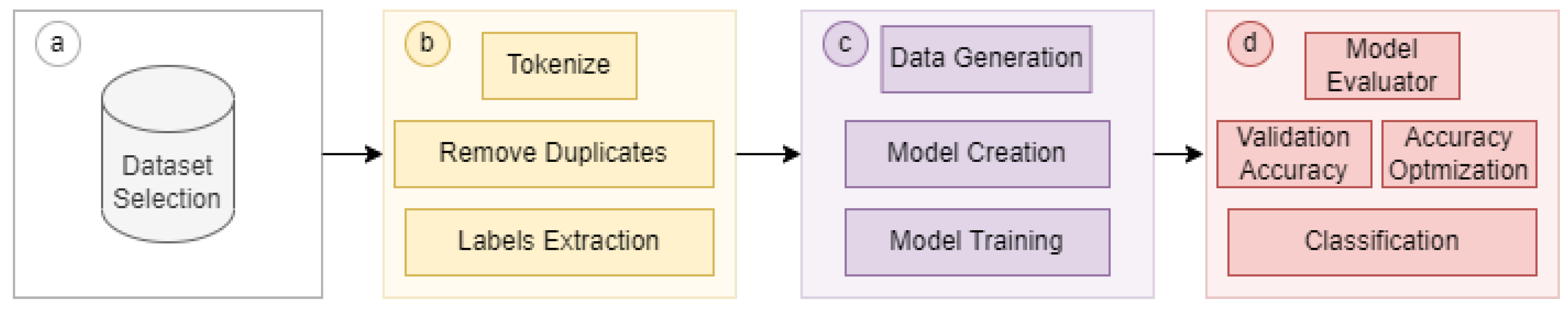

3.2.1. Data Layer

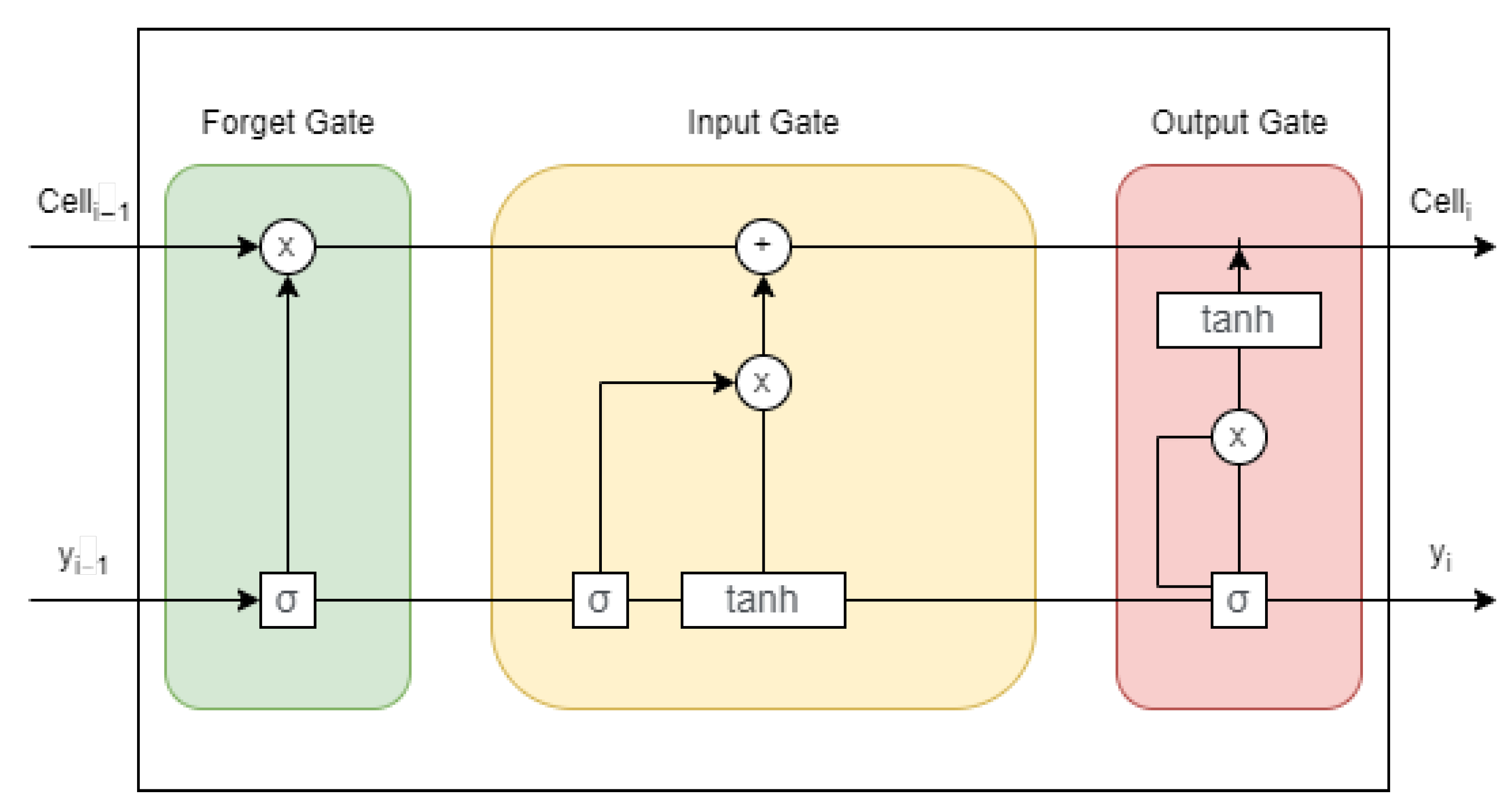

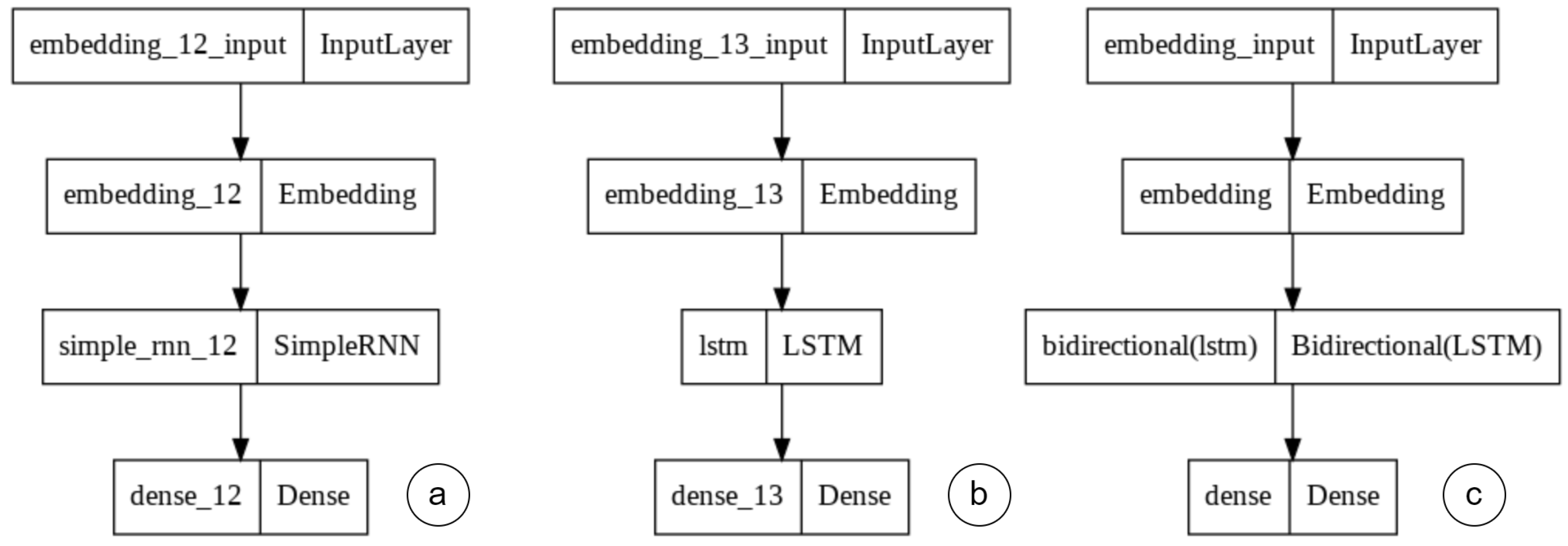

3.2.2. Model Layer

3.2.3. Optimization Layer

4. Implementation Details

4.1. Benchmark

4.2. Instantiation

4.3. Parameterization

5. Results and Discussion

6. Conclusions and Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Al-Fuqaha, A.; Guizani, M.; Mohammadi, M.; Aledhari, M.; Ayyash, M. Internet of things: A survey on enabling technologies, protocols, and applications. IEEE Commun. Surv. Tutor. 2015, 17, 2347–2376.

2. Naveed, M.; Arif, F.; Usman, S.M.; Anwar, A.; Hadjouni, M.; Elmannai, H.; Hussain, S.; Ullah, S.S.; Umar, F. A Deep Learning-Based Framework for Feature Extraction and Classification of Intrusion Detection in Networks. Wirel. Commun. Mob. Comput. 2022, 2022, 2215852.

3. Sonar, K.; Upadhyay, H. A survey: DDOS attack on Internet of Things. Int. J. Eng. Res. Dev. 2014, 10, 58–63.

4. Zohora, F.T.; Khan, M.R.R.; Bhuiyan, M.F.R.; Das, A.K. Enhancing the capabilities of IoT based fog and cloud infrastructures for time sensitive events. In Proceedings of the 2017 International Conference on Electrical Engineering and Computer Science (ICECOS), Palembang, Indonesia, 22–23 August 2017; pp. 224–230.

5. Naveed, M.; Usman, S.M.; Satti, M.I.; Aleshaiker, S.; Anwar, A. Intrusion Detection in Smart IoT Devices for People with Disabilities. In Proceedings of the 2022 IEEE International Smart Cities Conference (ISC2), Paphos, Cyprus, 26–29 September 2022; pp. 1–5.

6. Ko, S.W.; Kim, S.L. Impact of node speed on energy-constrained opportunistic Internet-of-Things with wireless power transfer. Sensors 2018, 18, 2398.

7. Rebelo Moreira, J.L.; Ferreira Pires, L.; Van Sinderen, M. Semantic interoperability for the IoT: Analysis of JSON for linked data. In Enterprise Interoperability: Smart Services and Business Impact of Enterprise Interoperability; Wiley: Hoboken, NJ, USA, 2018; pp. 163–169.

8. Lu, Y.; Da Xu, L. Internet of Things (IoT) cybersecurity research: A review of current research topics. IEEE Internet Things J. 2018, 6, 2103–2115.

9. Alhakami, W.; Alharbi, A.; Bourouis, S.; Alroobaea, R.; Bouguila, N. Network Anomaly Intrusion Detection Using a Nonparametric Bayesian Approach and Feature Selection. IEEE Access 2019, 7, 52181–52190.

10. Hassan, W.H. Current research on Internet of Things (IoT) security: A survey. Comput. Netw. 2019, 148, 283–294.

11. Lee, I. Internet of Things (IoT) cybersecurity: Literature review and IoT cyber risk management. Future Internet 2020, 12, 157.

12. Thakur, K.; Hayajneh, T.; Tseng, J. Cyber security in social media: Challenges and the way forward. IT Prof. 2019, 21, 41–49.

13. Gopal, T.S.; Meerolla, M.; Jyostna, G.; Reddy Lakshmi Eswari, P.; Magesh, E. Mitigating Mirai Malware Spreading in IoT Environment. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 2226–2230.

14. Vengatesan, K.; Kumar, A.; Parthibhan, M.; Singhal, A.; Rajesh, R. Analysis of Mirai Botnet Malware Issues and Its Prediction Methods in Internet of Things. In Proceedings of the International Conference on Computer Networks, Big Data and IoT (ICCBI-2018), Madurai, India, 19–20 December 2018; Pandian, A., Senjyu, T., Islam, S.M.S., Wang, H., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 120–126.

15. Humayun, M.; Jhanjhi, N.; Alsayat, A.; Ponnusamy, V. Internet of things and ransomware: Evolution, mitigation and prevention. Egypt. Inform. J. 2021, 22, 105–117.

16. Sarker, I.; Kayes, A.S.M.; Badsha, S.; Alqahtani, H.; Watters, P.; Ng, A. Cybersecurity data science: An overview from machine learning perspective. J. Big Data 2020, 7, 41.

17. Phandi, P.; Silva, A.; Lu, W. SemEval-2018 task 8: Semantic extraction from CybersecUrity REports using natural language processing (SecureNLP). In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 697–706.

18. Mahdavifar, S.; Ghorbani, A.A. Application of deep learning to cybersecurity: A survey. Neurocomputing 2019, 347, 149–176.

19. Ushmani, A. Machine learning pattern matching. J. Comput. Sci. Trends Technol. 2019, 7, 4–7.

20. Bourouis, S.; Bouguila, N. Nonparametric learning approach based on infinite flexible mixture model and its application to medical data analysis. Int. J. Imaging Syst. Technol. 2021, 31, 1989–2002.

21. Vinayakumar, R.; Soman, K.; Poornachandran, P.; Menon, V.K. A deep-dive on machine learning for cyber security use cases. In Machine Learning for Computer and Cyber Security; CRC Press: Boca Raton, FL, USA, 2019; pp. 122–158.

22. Lim, S.K.; Muis, A.O.; Lu, W.; Ong, C.H. MalwareTextDB: A database for annotated malware articles. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1557–1567.

23. Tariq, M.I.; Memon, N.A.; Ahmed, S.; Tayyaba, S.; Mushtaq, M.T.; Mian, N.A.; Imran, M.; Ashraf, M.W. A review of deep learning security and privacy defensive techniques. Mob. Inf. Syst. 2020, 2020, 6535834.

24. Shurman, M.M.; Khrais, R.M.; Yateem, A.A. IoT denial-of-service attack detection and prevention using hybrid IDS. In Proceedings of the 2019 International Arab Conference on Information Technology (ACIT), Al Ain, United Arab Emirates, 3–5 December 2019; pp. 252–254.

25. Tawalbeh, L.; Muheidat, F.; Tawalbeh, M.; Quwaider, M. IoT Privacy and security: Challenges and solutions. Appl. Sci. 2020, 10, 4102.

26. Zhou, W.; Jia, Y.; Peng, A.; Zhang, Y.; Liu, P. The effect of IoT new features on security and privacy: New threats, existing solutions, and challenges yet to be solved. IEEE Internet Things J. 2018, 6, 1606–1616.

27. Perwej, Y.; Parwej, F.; Hassan, M.M.M.; Akhtar, N. The internet-of-things (IoT) security: A technological perspective and review. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2019, 5, 2456–3307.

28. Yu, Y.; Li, Y.; Tian, J.; Liu, J. Blockchain-based solutions to security and privacy issues in the internet of things. IEEE Wirel. Commun. 2018, 25, 12–18.

29. Syed, N.F.; Baig, Z.; Ibrahim, A.; Valli, C. Denial of service attack detection through machine learning for the IoT. J. Inf. Telecommun. 2020, 4, 482–503.

30. Sheikhan, M.; Jadidi, Z.; Farrokhi, A. Intrusion detection using reduced-size RNN based on feature grouping. Neural Comput. Appl. 2012, 21, 1185–1190.

31. Althubiti, S.A.; Jones, E.M.; Roy, K. LSTM for anomaly-based network intrusion detection. In Proceedings of the 2018 28th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 21–23 November 2018; pp. 1–3.

32. Mirza, A.H.; Cosan, S. Computer network intrusion detection using sequential LSTM neural networks autoencoders. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4.

33. Mimura, M.; Ito, R. Applying NLP techniques to malware detection in a practical environment. Int. J. Inf. Secur. 2022, 21, 279–291.

34. Wang, A.; Liang, R.; Liu, X.; Zhang, Y.; Chen, K.; Li, J. An inside look at IoT malware. In Proceedings of the International Conference on Industrial IoT Technologies and Applications, WuHu, China, 25–26 March 2017; pp. 176–186.

35. Wang, H.; Zhang, W.; He, H.; Liu, P.; Luo, D.X.; Liu, Y.; Jiang, J.; Li, Y.; Zhang, X.; Liu, W.; et al. An evolutionary study of IoT malware. IEEE Internet Things J. 2021, 8, 15422–15440.

36. Jaramillo, L.E.S. Malware detection and mitigation techniques: Lessons learned from Mirai DDOS attack. J. Inf. Syst. Eng. Manag. 2018, 3, 19.

37. Akhtar, M.S.; Feng, T. Detection of Malware by Deep Learning as CNN-LSTM Machine Learning Techniques in Real Time. Symmetry 2022, 14, 2308.

38. Bourouis, S.; Sallay, H.; Bouguila, N. A Competitive Generalized Gamma Mixture Model for Medical Image Diagnosis. IEEE Access 2021, 9, 13727–13736.

39. Alharithi, F.S.; Almulihi, A.H.; Bourouis, S.; Alroobaea, R.; Bouguila, N. Discriminative Learning Approach Based on Flexible Mixture Model for Medical Data Categorization and Recognition. Sensors 2021, 21, 2450.

40. Almulihi, A.H.; Alharithi, F.S.; Bourouis, S.; Alroobaea, R.; Pawar, Y.; Bouguila, N. Oil Spill Detection in SAR Images Using Online Extended Variational Learning of Dirichlet Process Mixtures of Gamma Distributions. Remote Sens. 2021, 13, 2991.

41. Li, H. Deep learning for natural language processing: Advantages and challenges. Natl. Sci. Rev. 2017, 5, 24–26.

42. Smagulova, K.; James, A.P. A survey on LSTM memristive neural network architectures and applications. Eur. Phys. J. Spec. Top. 2019, 228, 2313–2324.

43. Che, C.; Xiao, C.; Liang, J.; Jin, B.; Zhou, J.; Wang, F. An RNN architecture with dynamic temporal matching for personalized predictions of Parkinson’s disease. In Proceedings of the 2017 SIAM International Conference on Data Mining, Houston, TX, USA, 27–29 April 2017; pp. 198–206.

44. Amudha, S.; Murali, M. Deep learning based energy efficient novel scheduling algorithms for body-fog-cloud in smart hospital. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 7441–7460.

45. Roopak, M.; Tian, G.Y.; Chambers, J. Deep learning models for cyber security in IoT networks. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 452–457.

46. Taiwo, O.; Ezugwu, A.E.; Oyelade, O.N.; Almutairi, M.S. Enhanced Intelligent Smart Home Control and Security System Based on Deep Learning Model. Wirel. Commun. Mob. Comput. 2022, 2022, 9307961.

47. Loyola, P.; Gajananan, K.; Watanabe, Y.; Satoh, F. Villani at SemEval-2018 Task 8: Semantic Extraction from Cybersecurity Reports using Representation Learning. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 885–889.

48. Sikdar, U.K.; Barik, B.; Gambäck, B. Flytxt_NTNU at SemEval-2018 task 8: Identifying and classifying malware text using conditional random fields and Naive Bayes classifiers. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 890–893.

49. Ma, C.; Zheng, H.; Xie, P.; Li, C.; Li, L.; Si, L. DM_NLP at SemEval-2018 Task 8: Neural sequence labeling with linguistic features. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 707–711.

50. Fu, M.; Zhao, X.; Yan, Y. HCCL at SemEval-2018 Task 8: An End-to-End System for Sequence Labeling from Cybersecurity Reports. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 874–877.

51. Brew, C. Digital Operatives at SemEval-2018 Task 8: Using dependency features for malware NLP. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 894–897.

52. Ravikiran, M.; Madgula, K. Fusing Deep Quick Response Code Representations Improves Malware Text Classification. In Proceedings of the ACM Workshop on Crossmodal Learning and Application, Ottawa, ON, Canada, 10 June 2019; pp. 11–18.

53. Pfeiffer, J.; Simpson, E.; Gurevych, I. Low Resource Multi-Task Sequence Tagging–Revisiting Dynamic Conditional Random Fields. arXiv 2020, arXiv:2005.00250.

54. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

55. Jeon, J.; Jeong, B.; Baek, S.; Jeong, Y.S. Hybrid Malware Detection Based on Bi-LSTM and SPP-Net for Smart IoT. IEEE Trans. Ind. Inform. 2021, 18, 4830–4837.

56. Banerjee, K.; Gupta, R.R.; Vyas, K.; Mishra, B. Exploring alternatives to softmax function. arXiv 2020, arXiv:2011.11538.

| Year | System | Authors | Features | Model | Accuracy (%) | Limitations |
|---|---|---|---|---|---|---|
| 2017 | SemEval | Lim et al. [22] | POS | SVM | 69.70 | Used only statistical method |
| 2017 | SemEval | Lim et al. [22] | POS | Naive Bayes | 59.50 | Used only statistical method |
| 2018 | Villani | Loyola et al. [47] | Wikipedia tokens | Word embeddings initialized using GloVe vectors | 84.47 | Trained solely on Wikipedia text data |
| 2018 | Flytxt | Sikdar et al. [48] | NER labels | Ensemble of CRF and NB classifiers | 85.28 | Used only statistical method |
| 2018 | DM | Ma et al. [49] | NER labels | BiLSTM-CNN-CRF | 79.45 | Overly complex model configuration |
| 2018 | HCCL | Fu et al. [50] | POS | BiLSTM-CNN-CRF | 86.41 | Overly complex model configuration |
| 2018 | Digital | Brew [51] | POS, lemma, bigrams | Linear SVM classifier | 79.45 | Used only statistical method |
| 2018 | NLP | Phandi et al. [17] | Tokens | Convolutional neural network with original GloVe embeddings | 76.86 | Low accuracy scores |
| 2019 | Neural | Ravikiran et al. [52] | NLP labels | Multimodal convolutional neural network | - | Accuracy scores not reported |
| 2020 | CRF | Pfeiffer et al. [53] | POS | Dynamic conditional random fields | - | Accuracy scores not reported |

| Item | Number | Dataset | Instances |
|---|---|---|---|
| Sentences | 6819 | Training | 4877 |
| Words | 168,138 | Testing | 1045 |
| Tags | 7 | Validation | 1045 |
| Unique words | 13,730 | Total | 6967 |
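The instance counts above (4877 / 1045 / 1045 out of 6967) are consistent with a split of roughly 70% training, 15% validation, and 15% testing. The source does not state how the partition was produced, so the following is only a minimal sketch of one way to obtain splits of that size; the function name `split_dataset`, the shuffle, and the seed are assumptions made for illustration.

```python
import random

def split_dataset(items, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle items and cut them into train/validation/test partitions.

    The fractions and the seed are illustrative assumptions, not values
    taken from the paper.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # deterministic shuffle for repeatability
    n_train = round(len(items) * train_frac)
    n_val = round(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test

# Stand-in for the 6967 instances listed in the table.
train, val, test = split_dataset(range(6967))
print(len(train), len(val), len(test))
```

With 6967 items, these fractions reproduce the partition sizes listed in the table.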

| Model | Layer (type) | Output Shape | Param # |
|---|---|---|---|
| LSTM & RNN | embedding_13 (Embedding) | (None, 141, 32) | 439,392 |
| LSTM & RNN | lstm (LSTM) | (None, 141, 32) | 8320 |
| LSTM & RNN | dense_13 (Dense) | (None, 141, 8) | 264 |
| LSTM & RNN | Total params | | 447,976 |
| LSTM & RNN | Trainable params | | 447,976 |
| Bi-LSTM | embedding_13 (Embedding) | (None, 141, 32) | 439,392 |
| Bi-LSTM | lstm (LSTM) | (None, 141, 32) | 2080 |
| Bi-LSTM | dense_13 (Dense) | (None, 141, 8) | 264 |
| Bi-LSTM | Total params | | 441,736 |
| Bi-LSTM | Trainable params | | 441,736 |
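The parameter counts in the summary above can be checked by hand with the standard formulas for embedding, recurrent, and dense layers. The sketch below assumes a vocabulary of 13,731 entries (the 13,730 unique words plus one extra index, for example for padding), an embedding width of 32, 32 recurrent units, and 8 output units; the vocabulary offset is an assumption, chosen because it reproduces the reported 439,392. Under these assumptions, 8320 matches an LSTM layer and 2080 matches what the same formula gives for a plain (non-gated) recurrent layer of the same width.

```python
def embedding_params(vocab_size: int, dim: int) -> int:
    # One dim-sized vector per vocabulary entry.
    return vocab_size * dim

def simple_rnn_params(input_dim: int, units: int) -> int:
    # Input weights + recurrent weights + bias for a single gate-free cell.
    return units * (input_dim + units) + units

def lstm_params(input_dim: int, units: int) -> int:
    # An LSTM has four such weight sets (input, forget, cell, output).
    return 4 * simple_rnn_params(input_dim, units)

def dense_params(input_dim: int, units: int) -> int:
    # Weight matrix plus bias vector.
    return input_dim * units + units

VOCAB = 13_730 + 1  # assumption: unique words plus one reserved index
emb = embedding_params(VOCAB, 32)
lstm = lstm_params(32, 32)
rnn = simple_rnn_params(32, 32)
dense = dense_params(32, 8)

print(emb, lstm, rnn, dense)   # per-layer counts
print(emb + lstm + dense)      # total with the LSTM-sized recurrent layer
print(emb + rnn + dense)       # total with the 2080-parameter recurrent layer
```

The dominant cost in both configurations is the embedding table, which accounts for about 98% of all parameters; the recurrent and dense layers together contribute fewer than 9000.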

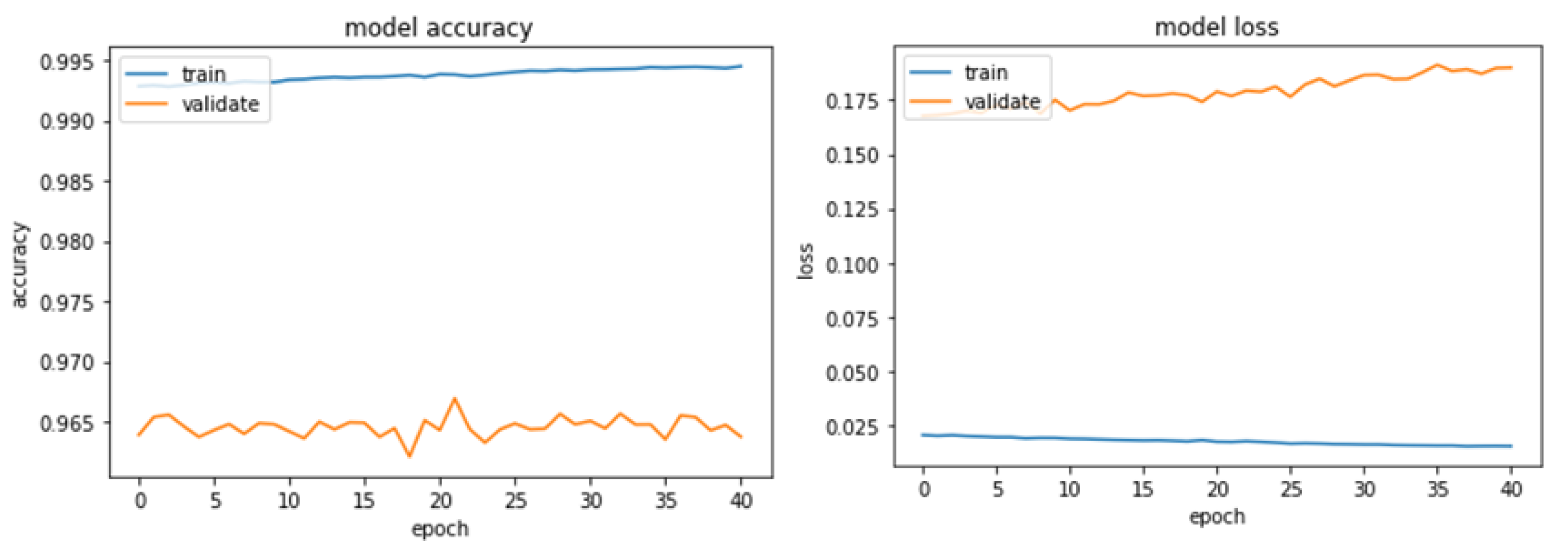

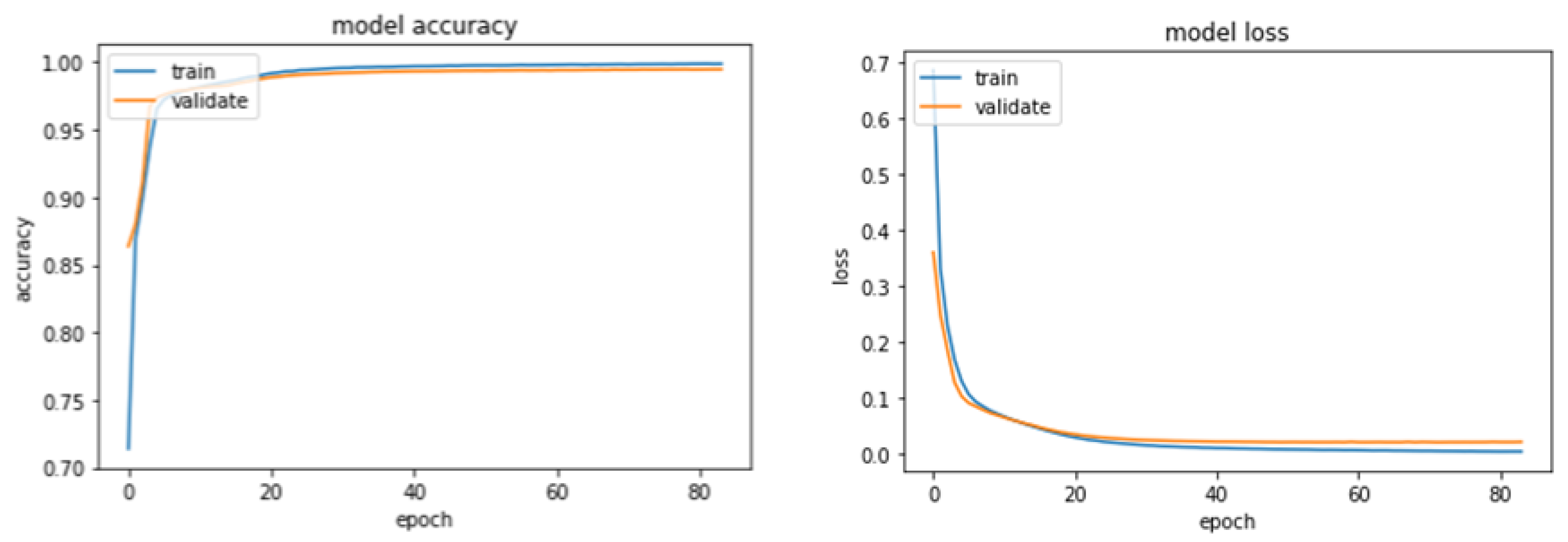

| Model Parameters | LSTM | BiLSTM | RNN |
|---|---|---|---|
| Layers | 1 | 1 | 1 |
| Embedding Size | 141 | 141 | 141 |
| Dropout Rate | 0.2 | 0.2 | 0.2 |
| Epochs (Till Convergence) | 41 | 136 | 79 |
| Learning Rate | 0.0001 | 0.0001 | 0.0001 |
| Batch Size | 128 | 128 | 128 |
| Accuracy | 0.9945 | 0.9988 | 0.9987 |
| Training Loss | 0.0154 | 0.0040 | 0.0042 |
| Validation Loss | 0.1899 | 0.1977 | 0.0214 |
| Optimizer | Adam | Adam | Adam |
| Loss Function | Cross entropy | Cross entropy | Cross entropy |
| Activation Function | Softmax | Softmax | Softmax |
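All three models use a softmax output with a cross-entropy loss. As a minimal illustration of how these two pieces fit together for a single token, the sketch below turns a vector of raw scores into class probabilities and computes the loss against the true class index. The eight-way output matches the Dense layer width in the model summary; the function names and the example scores are hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[target_index])

# Hypothetical scores for one token over 8 output classes.
logits = [2.0, 0.5, 0.1, -1.0, 0.0, 0.3, -0.5, 1.2]
probs = softmax(logits)
loss = cross_entropy(probs, target_index=0)

# A model that is maximally unsure (uniform over 8 classes) has loss ln(8).
uniform_loss = cross_entropy(softmax([0.0] * 8), target_index=0)
print(round(loss, 4), round(uniform_loss, 4))
```

The uniform case gives a useful reference point: a loss near ln(8), about 2.08, means the model is no better than guessing, so the training and validation losses in the table sit far below that baseline.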

| Metric | LSTM | BiLSTM | RNN |
|---|---|---|---|
| Test Accuracy | 0.9631 | 0.9681 | 0.9946 |
| Test Loss | 0.2023 | 0.2274 | 0.0212 |

| Year | System | Authors | Model | Accuracy (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| 2017 | SemEval | Lim et al. | SVM | 69.70 | 49.55 | 62.22 |
| 2017 | SemEval | Lim et al. | Naive Bayes | 59.50 | 38.17 | 78.89 |
| 2018 | Villani | Loyola et al. | Word embeddings initialized using GloVe vectors | 84.47 | 47.76 | 71.11 |
| 2018 | Flytxt | Sikdar et al. | Ensemble of CRF and NB classifiers | 85.28 | 49.59 | 66.67 |
| 2018 | DM | Ma et al. | BiLSTM-CNN-CRF | 79.45 | 39.43 | 76.67 |
| 2018 | HCCL | Fu et al. | BiLSTM-CNN-CRF | 86.41 | 53.57 | 50.00 |
| 2018 | Digital | Brew | Linear SVM classifier | 79.45 | 39.31 | 75.56 |
| 2018 | NLP | Phandi et al. | Convolutional neural network with original GloVe embeddings | 76.86 | 38.46 | 72.22 |
| 2019 | Neural | Ravikiran et al. | Multimodal convolutional neural network | - | 54.00 | 68.00 |
| 2020 | CRF | Pfeiffer et al. | Dynamic conditional random fields | - | - | - |
| 2022 | Proposed | This work | LSTM | 96.31 | 96.58 | 96.31 |
| 2022 | Proposed | This work | BiLSTM | 96.81 | 96.78 | 96.75 |
| 2022 | Proposed | This work | RNN | 99.46 | 96.79 | 96.63 |
| 2022 | Proposed | This work | HMM | 78.33 | - | - |
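For readers who want to reproduce this kind of comparison, the sketch below computes accuracy together with support-weighted precision and recall from a list of true and predicted labels. The source does not state which averaging scheme was used, so the weighting here is an assumption; the function name `weighted_scores` and the toy labels are hypothetical and are not taken from the dataset.

```python
from collections import Counter

def weighted_scores(y_true, y_pred):
    """Return (accuracy, precision, recall), averaging per-class
    precision and recall weighted by each class's share of y_true."""
    n = len(y_true)
    support = Counter(y_true)
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / n
    precision = 0.0
    recall = 0.0
    for label, count in support.items():
        tp = sum(t == label and p == label for t, p in pairs)
        predicted = sum(p == label for p in y_pred)
        weight = count / n
        # A class that is never predicted contributes zero precision.
        precision += weight * (tp / predicted if predicted else 0.0)
        recall += weight * (tp / count)
    return accuracy, precision, recall

# Toy example with hypothetical tag names.
y_true = ["O", "O", "Entity", "Action", "O", "Entity"]
y_pred = ["O", "O", "Entity", "O", "O", "Action"]
accuracy, precision, recall = weighted_scores(y_true, y_pred)
print(accuracy, precision, recall)
```

Under support weighting, recall is mathematically equal to accuracy, because each class's recall is scaled by exactly the fraction of examples it covers. Precision has no such identity and can differ from both.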

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, A.R.; Yasin, A.; Usman, S.M.; Hussain, S.; Khalid, S.; Ullah, S.S. Exploring Lightweight Deep Learning Solution for Malware Detection in IoT Constraint Environment. Electronics 2022, 11, 4147. https://doi.org/10.3390/electronics11244147
