Implementing a Timing Error-Resilient and Energy-Efficient Near-Threshold Hardware Accelerator for Deep Neural Network Inference

Abstract

1. Introduction

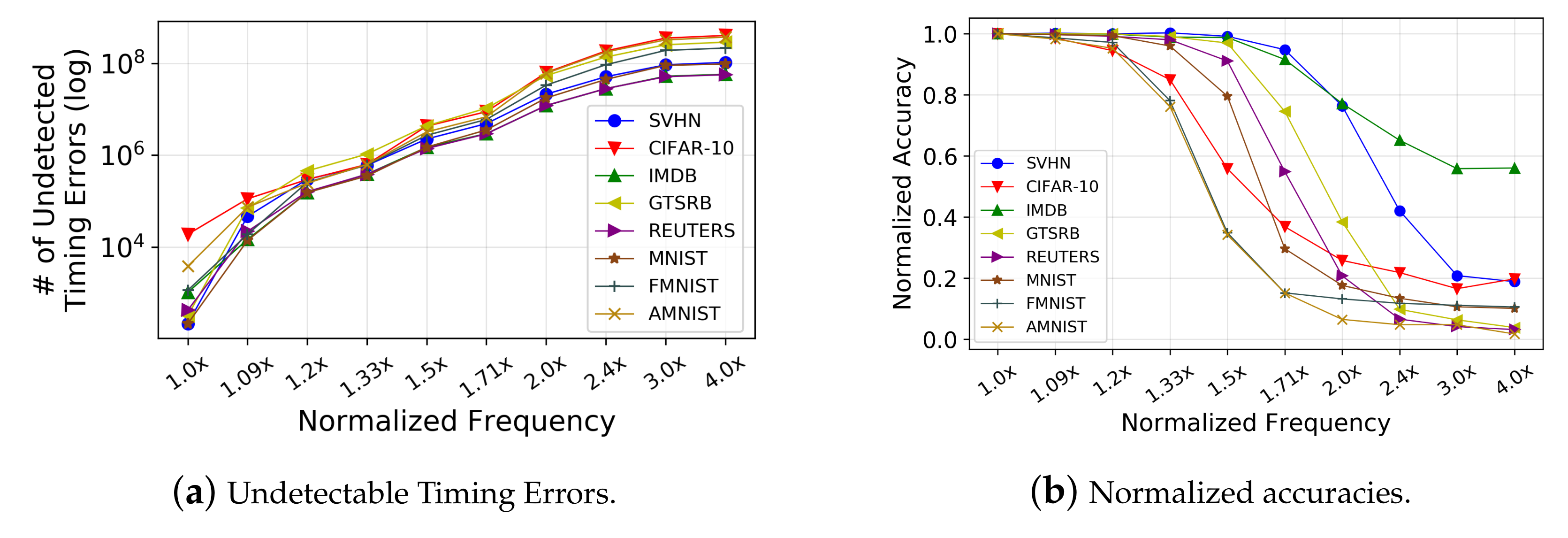

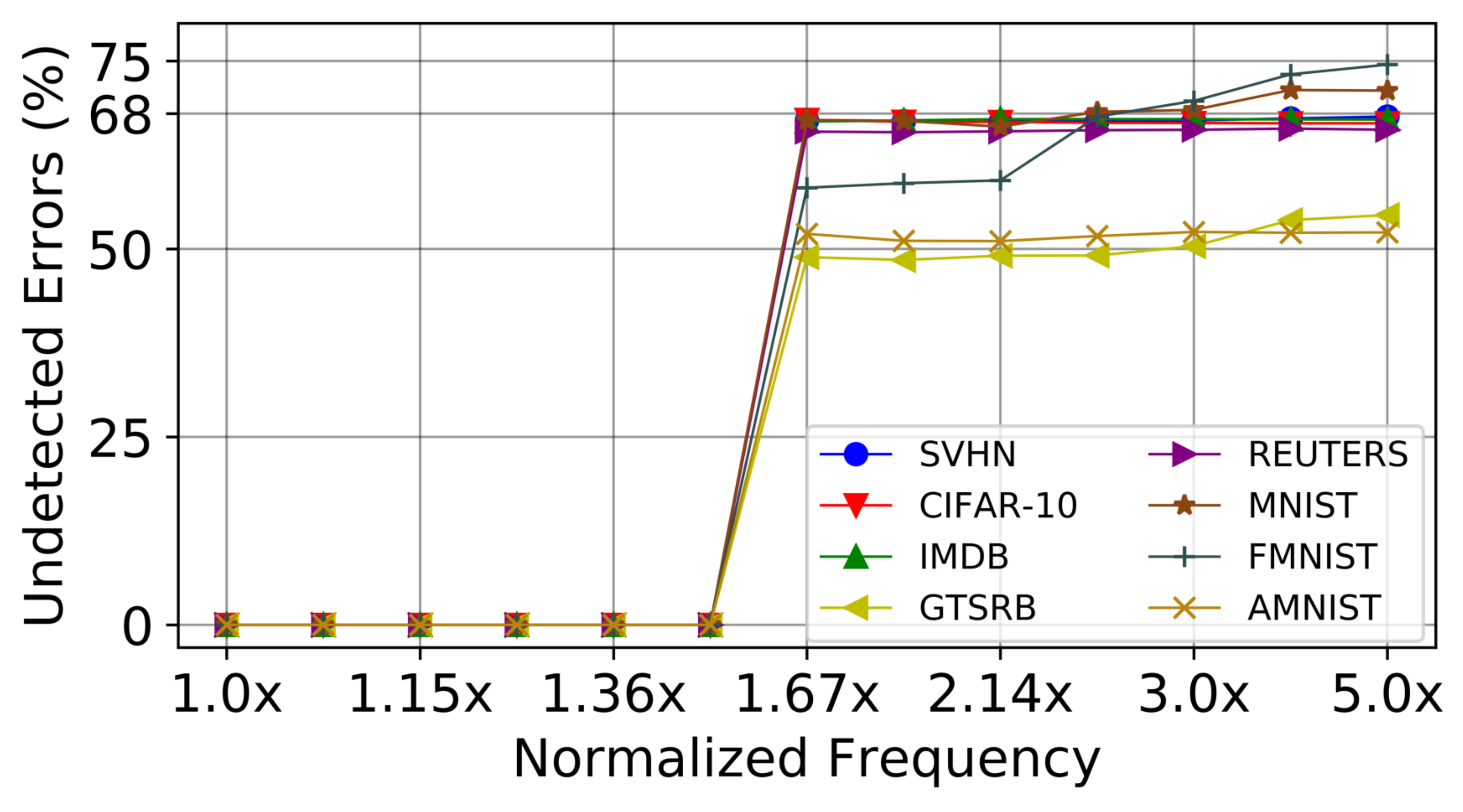

- We observe a sharp increase in undetected timing errors at higher frequencies and ultra-low NTC voltages (Section 2).

- We propose PREDITOR—a low-power TPU design paradigm that predicts and mitigates the timing errors over a range of operational cycles using an effective voltage boost mechanism (Section 3).

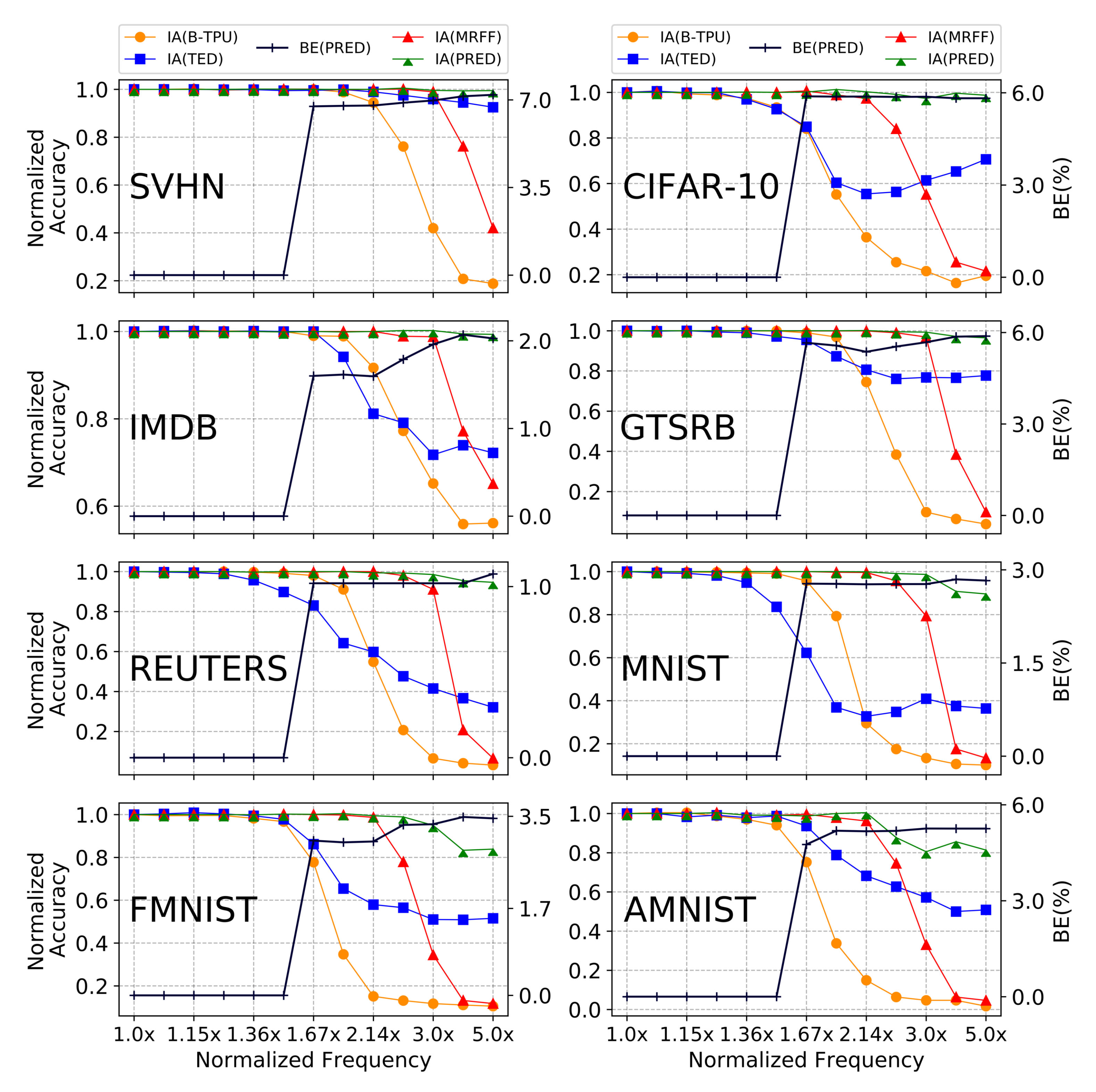

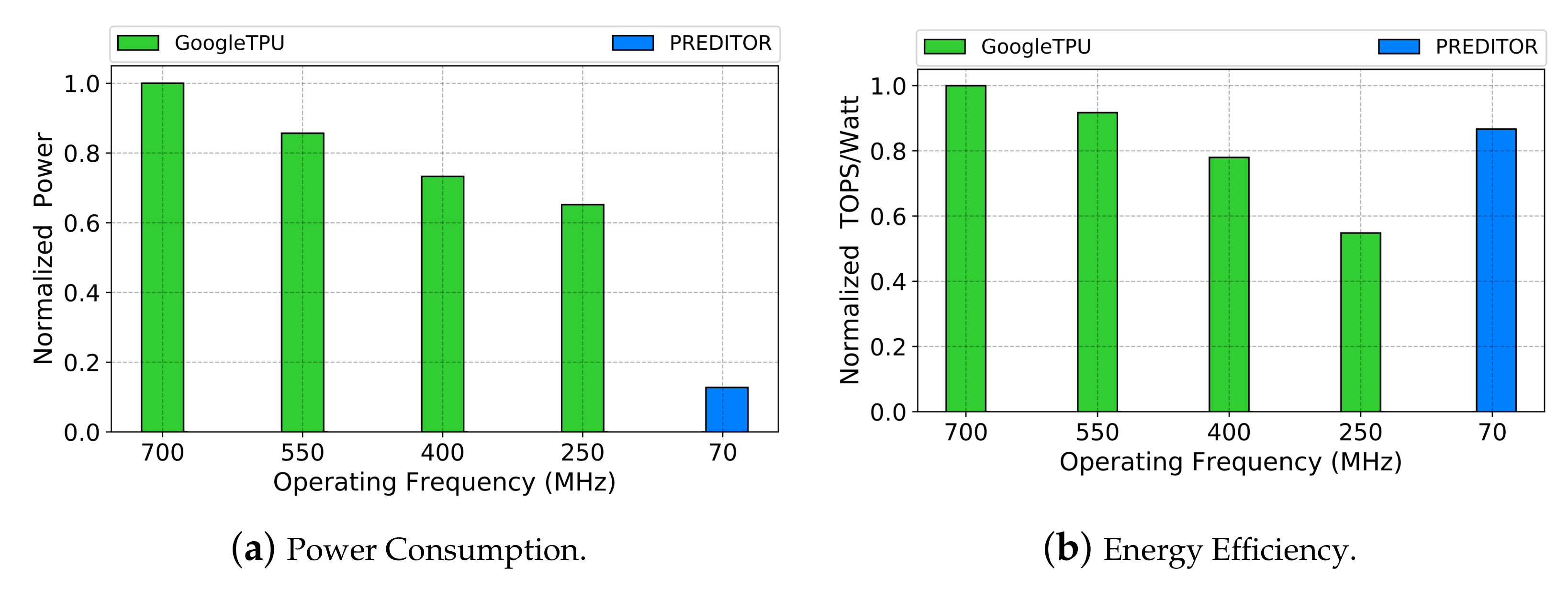

- We demonstrate that PREDITOR tackles up to ∼ of the undetectable timing errors, thereby preserving the inference accuracy of the DNN datasets. PREDITOR offers 3×–5× better performance in comparison to TE-DROP and Modified Razor Flip-Flop, with under 3% average loss in accuracy for five out of eight DNN datasets and incurs an area and power overhead of ∼ and ∼, respectively (Section 5).

- We illustrate that PREDITOR offers up to energy-efficiency gain relative to a TPU operating in the Super-Threshold Computing (STC) realm, while utilizing only of its power (Section 5).

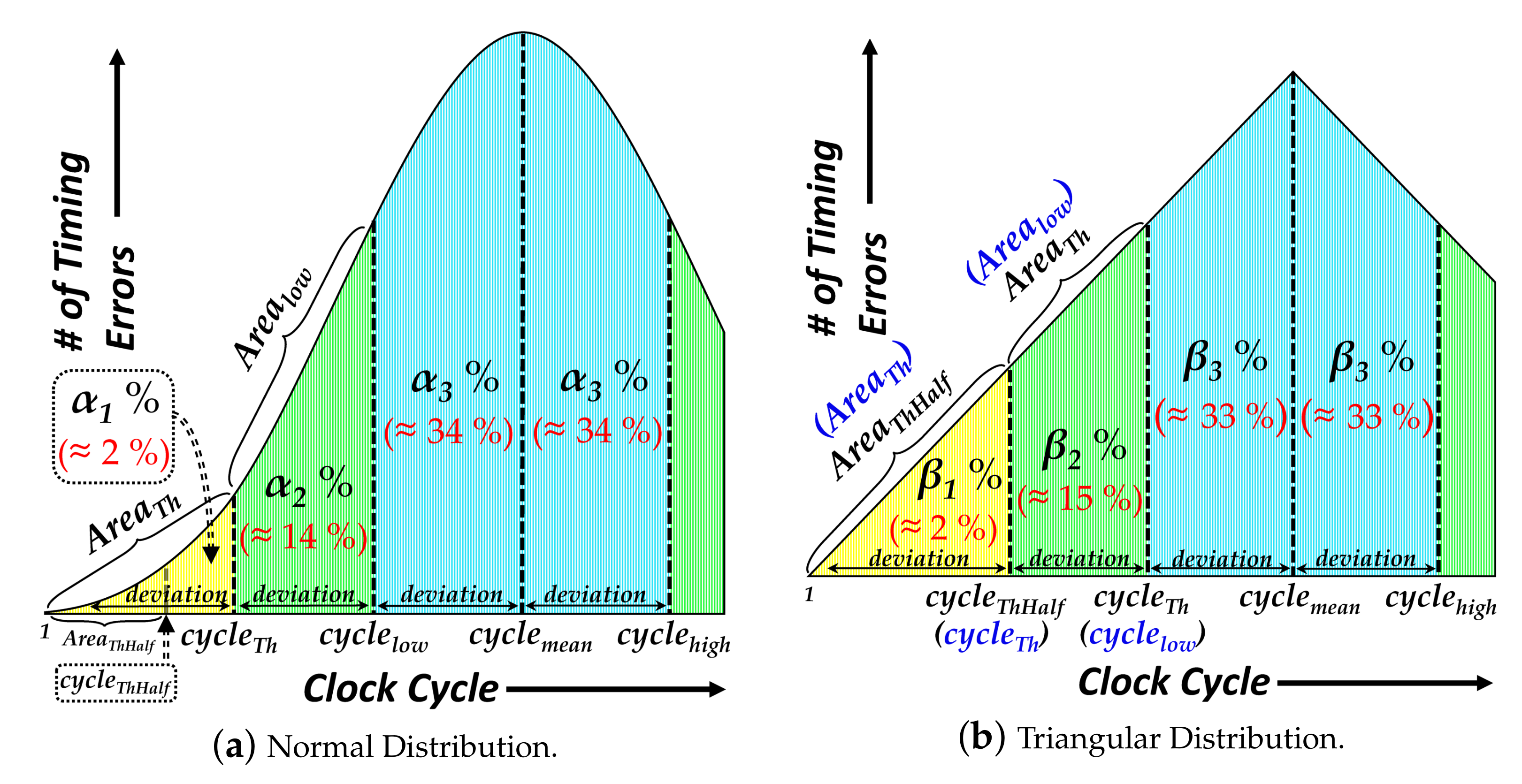

2. Motivation

2.1. Background and Limitations

2.1.1. Systolic Array Based DNN Accelerator

2.1.2. Limitations to NTC Performance

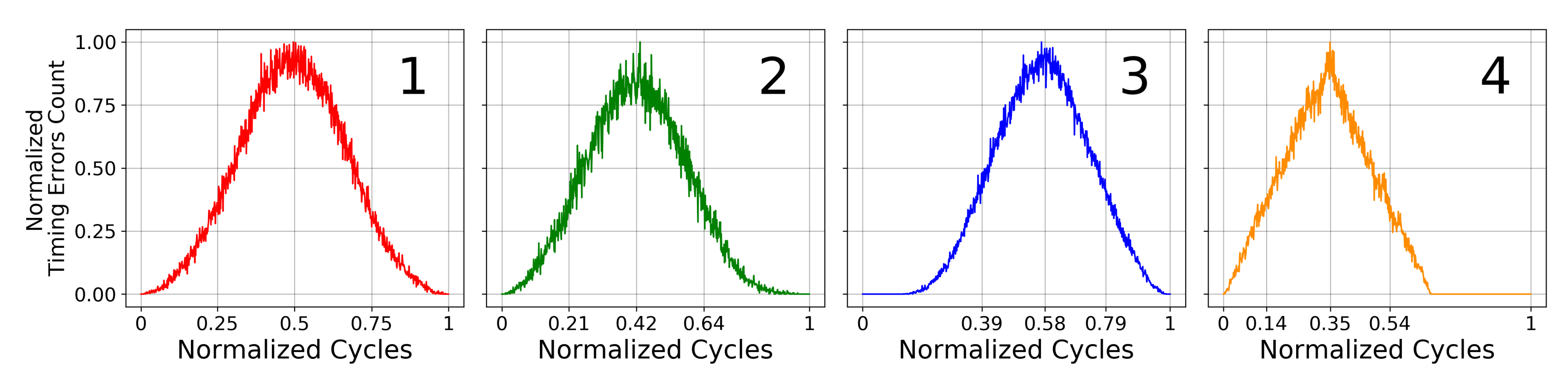

2.2. Predictive Systolic Array Dataflow

2.3. Methodology

2.4. Results and Significance

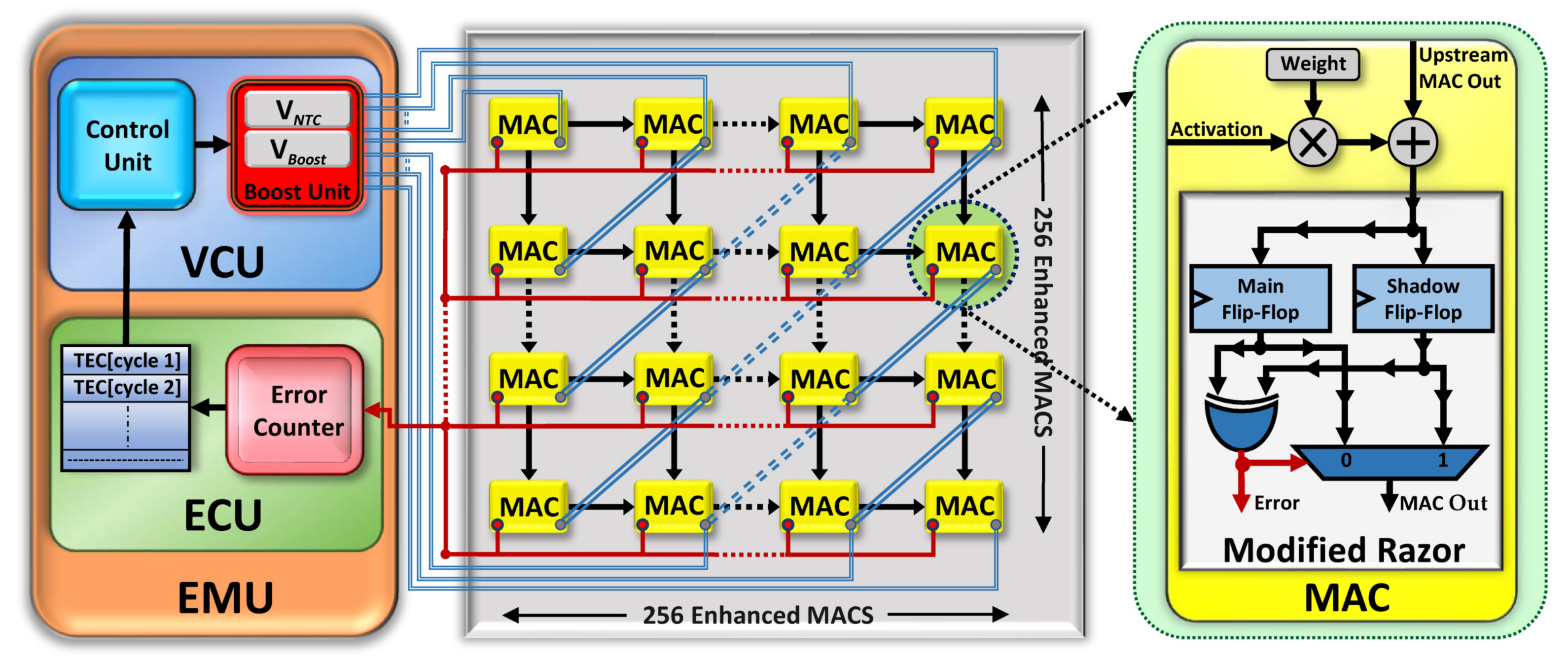

3. Design

3.1. Design Overview

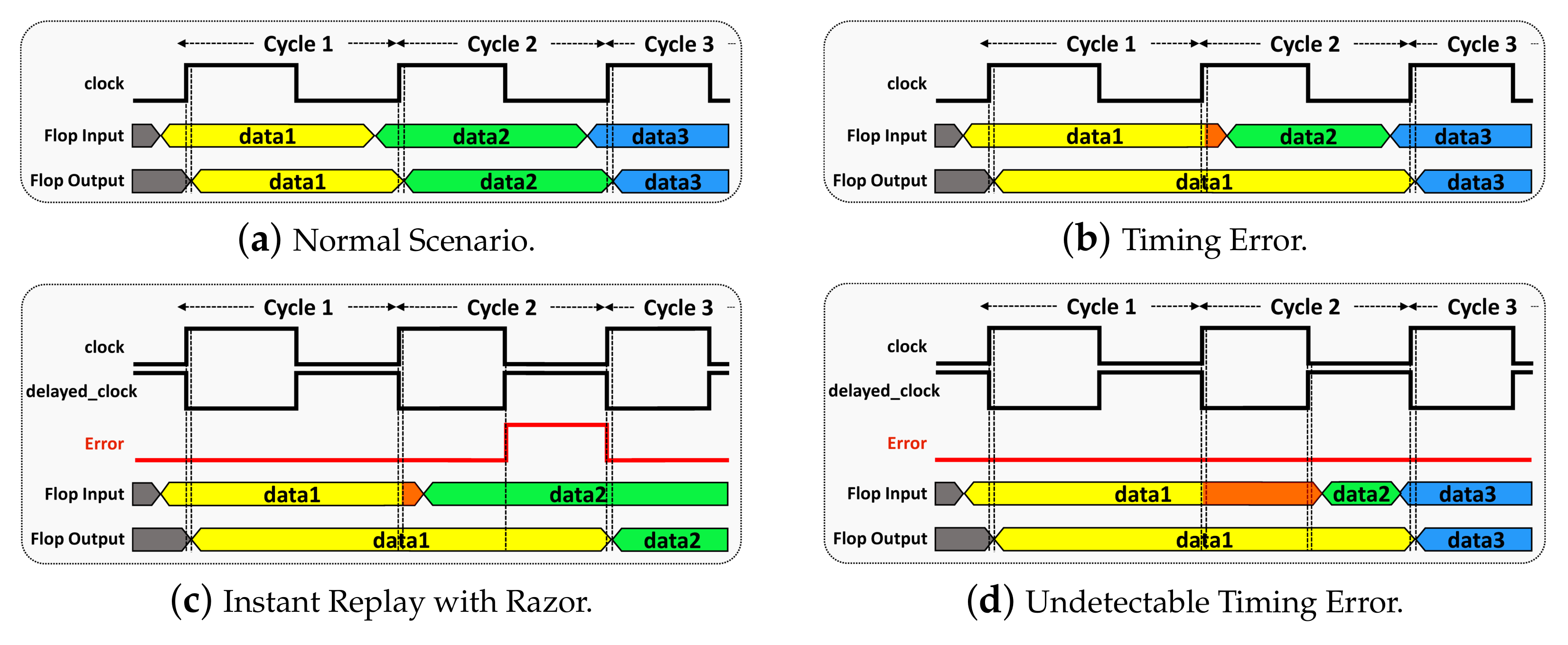

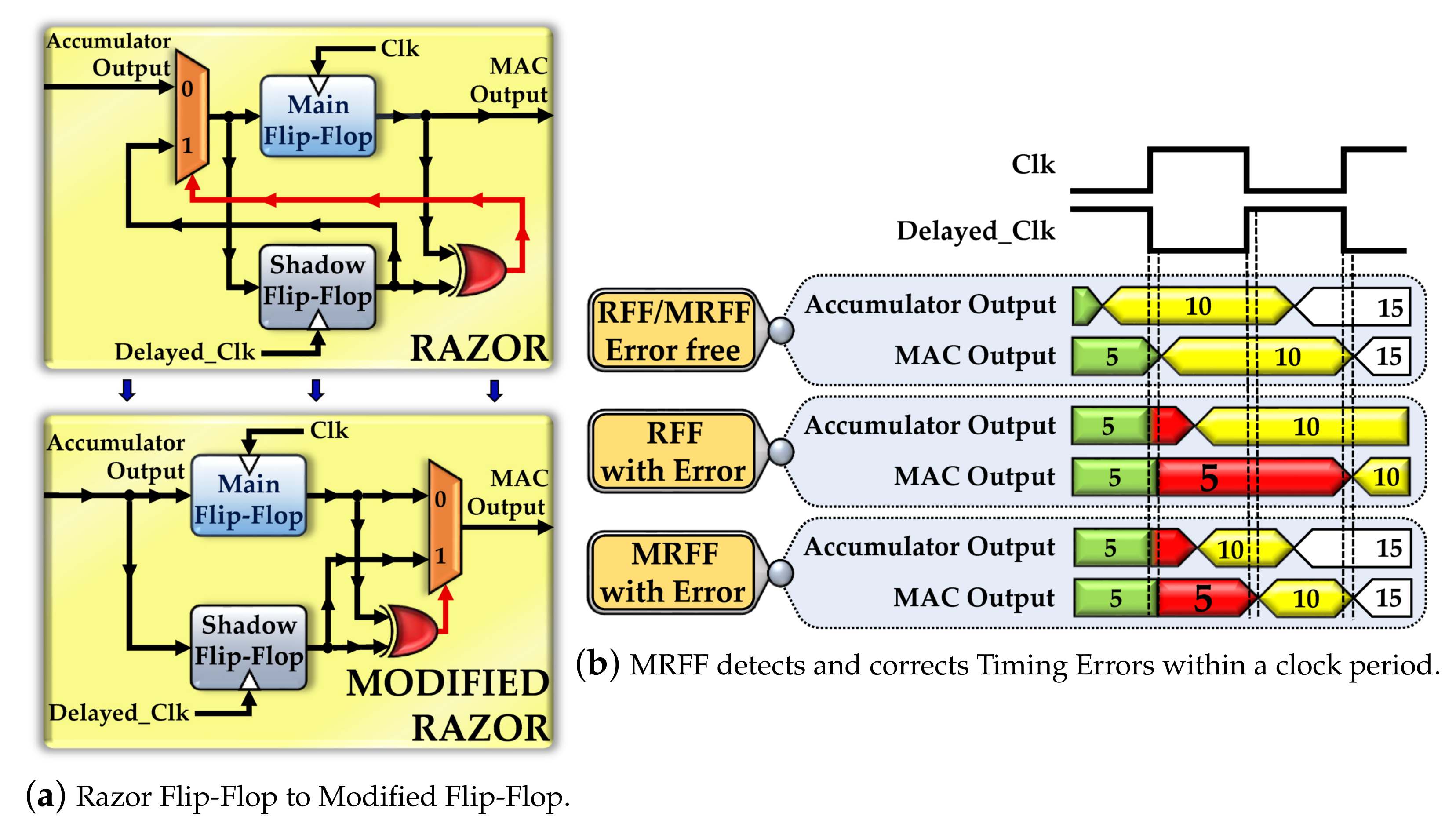

3.2. Modified Razor Flip-Flop (MRFF)

3.3. Error Collection Unit (ECU)

3.4. Voltage Control Unit (VCU)

3.4.1. Control Unit (CU)

Algorithm 1: Boost Cycles Prediction Algorithm

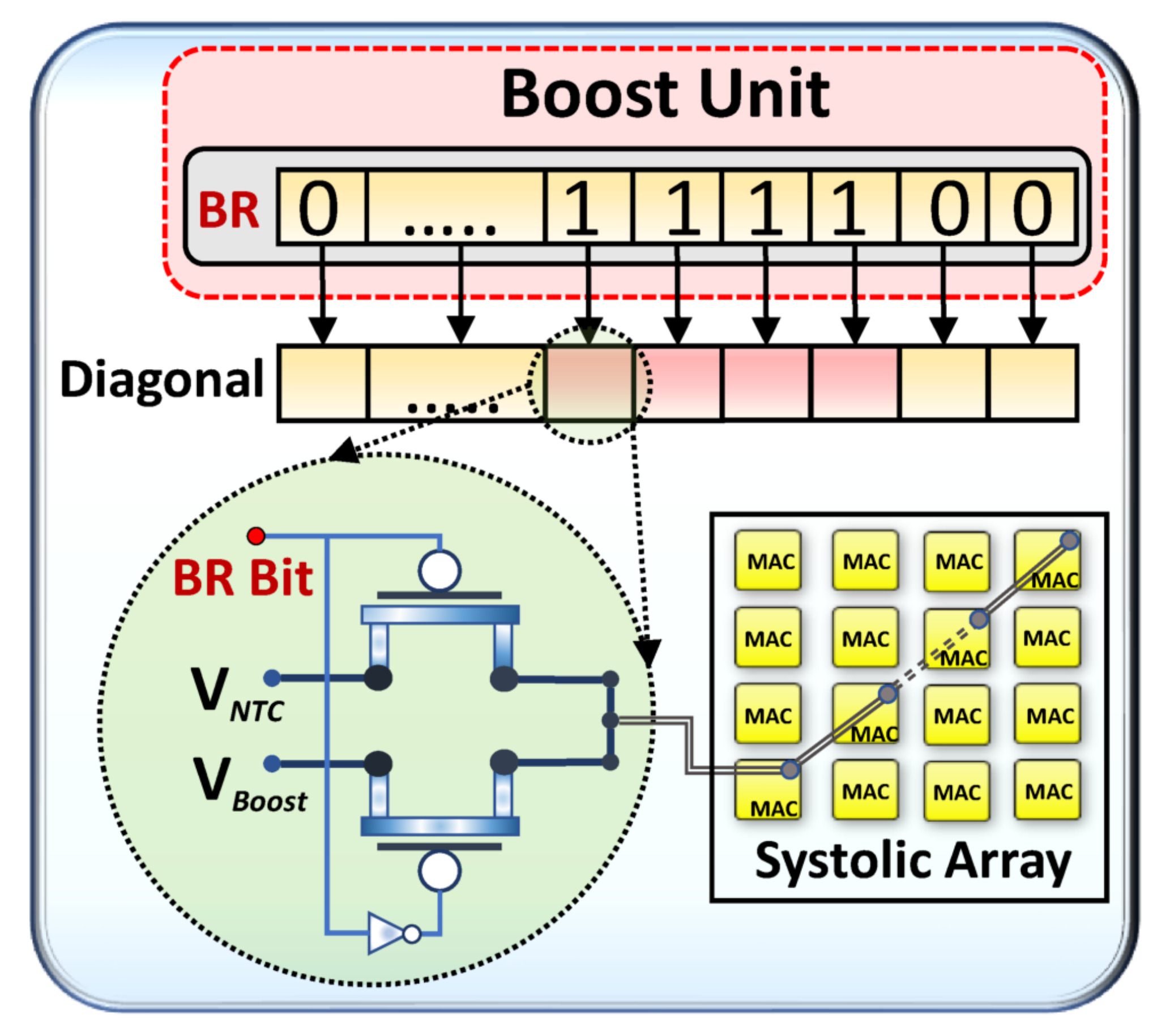

3.4.2. Boost Unit (BU)

Algorithm 2: Voltage Boost Algorithm

4. Methodology

4.1. Device Layer

4.2. Circuit Layer

4.3. Architecture Layer

5. Experimental Results

5.1. Comparative Schemes

- Baseline TPU (B-TPU): This technique does not employ any timing speculation methodologies and propagates the erroneous values down the systolic array computation stages [25].

- TE-DROP (TED): In this scheme, a MAC encountering a timing error recomputes the correct value by borrowing a clock cycle from the downstream MAC. The downstream MAC annuls its own operation and passes the recomputed upstream MAC output on to the next stage [9].

- MRFF: This scheme exploits the timing aperture to drive the delayed output onto the downstream MAC. Delayed output beyond detection results in an erroneous value being propagated down the systolic array.

- PREDITOR (PRED): Our proposed scheme uses the timing error information obtained from the timing speculation mechanism to predict and mitigate imminent timing errors by boosting the operating voltage of the MAC units for a definite period of operation (Section 3).
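
The four schemes above can be contrasted with a toy per-cycle model of a single MAC. This is only a sketch under stated assumptions, not the evaluation setup of this work: path delays are normalised to the clock period, and the detection `window`, `boost_cycles`, and `boost_speedup` parameters, along with every name in the code, are hypothetical values chosen for illustration.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    CORRECT = "correct value forwarded"
    DROPPED = "downstream MAC operation dropped"
    CORRUPT = "erroneous value propagated"


def simulate_mac(scheme, delays, window=0.2, boost_cycles=3, boost_speedup=0.7):
    """Classify each cycle of one MAC under a given scheme.

    delays: per-cycle path delay as a fraction of the clock period.
    window: assumed width of the timing-speculation detection window.
    boost_cycles / boost_speedup: assumed length and delay scaling of a
    voltage boost (hypothetical numbers, not taken from the paper).
    """
    outcomes = []
    boost_left = 0
    for delay in delays:
        if scheme == "PRED" and boost_left > 0:
            delay *= boost_speedup  # boosted voltage shortens the path delay
            boost_left -= 1
        if delay <= 1.0:
            outcomes.append(Outcome.CORRECT)  # no timing error
            continue
        detectable = delay <= 1.0 + window
        if scheme == "B-TPU" or not detectable:
            # No speculation, or the delay is beyond the detection window.
            outcomes.append(Outcome.CORRUPT)
        elif scheme == "TED":
            # Recompute by borrowing the downstream MAC's cycle.
            outcomes.append(Outcome.DROPPED)
        else:
            # MRFF and PRED drive the delayed (correct) output downstream.
            outcomes.append(Outcome.CORRECT)
            if scheme == "PRED":
                boost_left = boost_cycles  # boost for a definite period
    return outcomes


if __name__ == "__main__":
    example_delays = [0.9, 1.1, 1.3, 1.3, 0.95, 1.3]
    for name in ("B-TPU", "TED", "MRFF", "PRED"):
        counts = Counter(o.name for o in simulate_mac(name, example_delays))
        print(name, dict(counts))
```

In this toy model the only difference between MRFF and PRED is that a detected error arms a boost for the following cycles, which is what lets PRED avoid errors that would otherwise fall outside the detection window.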

5.2. Error Resilience

5.3. Inference Accuracy and Voltage Boost

5.4. Is NTC TPU Worth It?

5.5. Hardware Overheads

6. Related Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Long, Y.; She, X.; Mukhopadhyay, S. Design of Reliable DNN Accelerator with Un-reliable ReRAM. In Proceedings of the 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE), Florence, Italy, 25–29 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1769–1774.

- Reagen, B.; Whatmough, P.; Adolf, R.; Rama, S.; Lee, H.; Lee, S.K.; Hernández-Lobato, J.M.; Wei, G.Y.; Brooks, D. Minerva: Enabling low-power, highly-accurate deep neural network accelerators. In Proceedings of the ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), Seoul, Korea, 22 June 2016; Volume 44, pp. 267–278.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–12.

- Creating an AI can be Five Times Worse for the Planet Than a Car. Available online: https://www.newscientist.com/article/2205779-creating-an-ai-can-be-five-times-worse-for-the-planet-than-a-car/ (accessed on 22 May 2022).

- Seok, M.; Chen, G.; Hanson, S.; Wieckowski, M.; Blaauw, D.; Sylvester, D. CAS-FEST 2010: Mitigating Variability in Near-Threshold Computing. IEEE J. Emerg. Sel. Top. Circuits Syst. 2011, 1, 42–49.

- Jiao, X.; Luo, M.; Lin, J.H.; Gupta, R.K. An assessment of vulnerability of hardware neural networks to dynamic voltage and temperature variations. In Proceedings of the 36th International Conference on Computer-Aided Design, Irvine, CA, USA, 13–16 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 945–950.

- Karpuzcu, U.; Kim, N.S.; Torrellas, J. Coping with Parametric Variation at Near-Threshold Voltages. IEEE Micro 2013, 33, 6–14.

- Ernst, D.; Kim, N.S.; Das, S.; Pant, S.; Rao, R.R.; Pham, T.; Ziesler, C.H.; Blaauw, D.; Austin, T.M.; Flautner, K.; et al. Razor: A Low-Power Pipeline Based on Circuit-Level Timing Speculation. In Proceedings of the 36th Annual IEEE/ACM International Symposium on Microarchitecture, San Diego, CA, USA, 5 December 2003; pp. 7–18.

- Zhang, J.; Rangineni, K.; Ghodsi, Z.; Garg, S. ThunderVolt: Enabling Aggressive Voltage Underscaling and Timing Error Resilience for Energy Efficient Deep Neural Network Accelerators. In Proceedings of the 55th Annual Design Automation Conference, San Francisco, CA, USA, 24–29 June 2018.

- Karpuzcu, U.R.; Kolluru, K.B.; Kim, N.S.; Torrellas, J. VARIUS-NTV: A microarchitectural model to capture the increased sensitivity of manycores to process variations at near-threshold voltages. In Proceedings of the DSN, Boston, MA, USA, 25–28 June 2012; pp. 1–11.

- NanGate. Available online: http://www.nangate.com/?page_id=2328 (accessed on 22 May 2022).

- Sarangi, S.; Greskamp, B.; Teodorescu, R.; Nakano, J.; Tiwari, A.; Torrellas, J. VARIUS: A Model of Process Variation and Resulting Timing Errors for Microarchitects. IEEE Trans. Semicond. Manuf. 2008, 21, 3–13.

- Gundi, N.D.; Shabanian, T.; Basu, P.; Pandey, P.; Roy, S.; Chakraborty, K.; Zhang, Z. EFFORT: Enhancing Energy Efficiency and Error Resilience of a Near-Threshold Tensor Processing Unit. In Proceedings of the 2020 25th Asia and South Pacific Design Automation Conference (ASP-DAC), Beijing, China, 13–16 January 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 241–246.

- Miller, T.N.; Pan, X.; Thomas, R.; Sedaghati, N.; Teodorescu, R. Booster: Reactive Core Acceleration for Mitigating the Effects of Process Variation and Application Imbalance in Low-Voltage Chips. In Proceedings of the HPCA, New Orleans, LA, USA, 25–29 February 2012; pp. 1–12.

- Khatamifard, S.K.; Resch, M.; Kim, N.S.; Karpuzcu, U.R. VARIUS-TC: A modular architecture-level model of parametric variation for thin-channel switches. In Proceedings of the ICCD, Scottsdale, AZ, USA, 2–5 October 2016; pp. 654–661.

- Keras. 2015. Available online: https://keras.io (accessed on 22 May 2022).

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. In Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning, Granada, Spain, 12–17 December 2011.

- Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. Man vs. computer: Benchmarking machine learning algorithms for traffic sign recognition. Neural Networks 2012, 32, 323–332.

- Reuters-21578 Dataset. 2021. Available online: http://kdd.ics.uci.edu/databases/reuters21578/reuters21578.html (accessed on 22 May 2022).

- Maas, A.L.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning Word Vectors for Sentiment Analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150.

- LeCun, Y.; Cortes, C. MNIST Handwritten Digit Database. 2010. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 22 May 2022).

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. Tech. Rep. 2009, 7.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747.

- Free Spoken Digit Dataset (FSDD). 2021. Available online: https://github.com/Jakobovski/free-spoken-digit-dataset (accessed on 22 May 2022).

- Whatmough, P.N.; Das, S.; Bull, D.M.; Darwazeh, I. Circuit-level timing error tolerance for low-power DSP filters and transforms. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2012, 21, 989–999.

- Koppula, S.; Orosa, L.; Yağlıkçı, A.G.; Azizi, R.; Shahroodi, T.; Kanellopoulos, K.; Mutlu, O. EDEN: Enabling energy-efficient, high-performance deep neural network inference using approximate DRAM. In Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture, Columbus, OH, USA, 12–16 October 2019; pp. 166–181.

- Zhao, K.; Di, S.; Li, S.; Liang, X.; Zhai, Y.; Chen, J.; Ouyang, K.; Cappello, F.; Chen, Z. FT-CNN: Algorithm-based fault tolerance for convolutional neural networks. IEEE Trans. Parallel Distrib. Syst. 2020, 32, 1677–1689.

- Ozen, E.; Orailoglu, A. SNR: Squeezing Numerical Range Defuses Bit Error Vulnerability Surface in Deep Neural Networks. ACM Trans. Embed. Comput. Syst. (TECS) 2021, 20, 1–25.

- Shafique, M.; Marchisio, A.; Putra, R.V.W.; Hanif, M.A. Towards Energy-Efficient and Secure Edge AI: A Cross-Layer Framework. arXiv 2021, arXiv:2109.09829.

- Yu, J.; Lukefahr, A.; Palframan, D.; Dasika, G.; Das, R.; Mahlke, S. Scalpel: Customizing DNN pruning to the underlying hardware parallelism. In Proceedings of the ACM SIGARCH Computer Architecture News, Toronto, ON, Canada, 24–28 June 2017; ACM: New York, NY, USA, 2017; Volume 45, pp. 548–560.

- Ozen, E.; Orailoglu, A. Boosting bit-error resilience of DNN accelerators through median feature selection. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 39, 3250–3262.

- Ye, H.; Zhang, X.; Huang, Z.; Chen, G.; Chen, D. HybridDNN: A framework for high-performance hybrid DNN accelerator design and implementation. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), Virtual, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

- Choi, W.; Shin, D.; Park, J.; Ghosh, S. Sensitivity Based Error Resilient Techniques for Energy Efficient Deep Neural Network Accelerators. In Proceedings of the 56th Annual Design Automation Conference, Las Vegas, NV, USA, 2–6 June 2019; ACM: New York, NY, USA, 2019; pp. 204:1–204:6.

- Lin, Y.; Zhang, S.; Shanbhag, N.R. Variation-tolerant architectures for convolutional neural networks in the near threshold voltage regime. In Proceedings of the 2016 IEEE International Workshop on Signal Processing Systems (SiPS), Dallas, TX, USA, 26–28 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 17–22.

- Kim, S.; Howe, P.; Moreau, T.; Alaghi, A.; Ceze, L.; Sathe, V.S. Energy-Efficient Neural Network Acceleration in the Presence of Bit-Level Memory Errors. IEEE Trans. Circuits Syst. Regul. Pap. 2018, 65, 4285–4298.

- Wang, X.; Hou, R.; Zhao, B.; Yuan, F.; Zhang, J.; Meng, D.; Qian, X. DNNGuard: An elastic heterogeneous DNN accelerator architecture against adversarial attacks. In Proceedings of the Twenty-Fifth International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 19–34.

- Pandey, P.; Gundi, N.D.; Basu, P.; Shabanian, T.; Patrick, M.C.; Chakraborty, K.; Roy, S. Challenges and opportunities in near-threshold DNN accelerators around timing errors. J. Low Power Electron. Appl. 2020, 10, 33.

| Dataset Name | Layer Architecture |
|---|---|
| SVHN [17] | CONV: (32, 32, 3) × (32, 32, 32) × (32, 32, 32) × (14, 14, 64) × (14, 14, 64) × (5, 5, 128) × (5, 5, 128), FC: 512 × 512 × 10 |
| GTSRB [18] | CONV: (3, 48, 48) × (32, 48, 48) × (32, 46, 46) × (64, 23, 23) × (64, 21, 21) × (128, 10, 10) × (128, 8, 8), FC: 2048 × 512 × 43 |
| Reuters [19] | FC: 2048 × 256 × 256 × 46 |
| IMDB [20] | CONV: 400 × (400x50) × (398, 256), FC: 256 × 1 |
| MNIST [21] | FC: 784 × 256 × 256 × 10 |
| CIFAR-10 [22] | CONV: (32, 32, 3) × (32, 32, 32) × (32, 32, 32) × (16, 16, 64) × (16, 16, 64) × (8, 8, 128) × (8, 8, 128), FC: 2048 × 512 × 10 |
| FMNIST [23] | FC: 784 × 256 × 512 × 10 |
| AMNIST [24] | CONV: (20, 25, 1) × (20, 25, 128) × (20, 25, 64), FC: 32000 × 256 × 128 × 40 |
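
As a rough illustration of how the FC entries in the table translate into accelerator work, the sketch below counts multiply-accumulate (MAC) operations per inference for the three fully connected networks. It assumes that each `×`-separated FC entry is a chain of consecutive layer widths and that bias additions are ignored. The function name `fc_mac_count` is hypothetical, and the counts are simple arithmetic on the table, not figures reported in this work.

```python
def fc_mac_count(widths):
    """MACs for one inference through a chain of dense layers.

    A dense layer mapping `a` inputs to `b` outputs needs a * b
    multiply-accumulates (bias additions are ignored here).
    """
    return sum(a * b for a, b in zip(widths, widths[1:]))


# FC-only networks, widths copied from the dataset table.
fc_networks = {
    "Reuters": [2048, 256, 256, 46],
    "MNIST": [784, 256, 256, 10],
    "FMNIST": [784, 256, 512, 10],
}

if __name__ == "__main__":
    for name, widths in fc_networks.items():
        print(f"{name}: {fc_mac_count(widths)} MACs per inference")
```

For MNIST, for example, this is 784·256 + 256·256 + 256·10 = 268,800 MACs, each of which is mapped onto a MAC unit of the systolic array.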

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gundi, N.D.; Pandey, P.; Roy, S.; Chakraborty, K. Implementing a Timing Error-Resilient and Energy-Efficient Near-Threshold Hardware Accelerator for Deep Neural Network Inference. J. Low Power Electron. Appl. 2022, 12, 32. https://doi.org/10.3390/jlpea12020032
