Manufacturing Readiness Assessment Technique for Defense Systems Development Using a Cybersecurity Evaluation Method

Abstract

1. Introduction

2. Literature Review

2.1. Acquisition Process of a Weapon System with Respect to MRLA: Comparison Between the US and South Korea

2.2. Risk Assessment Model Based on the MITRE Framework

3. Quality Engineering Approach for Considering Cybersecurity Capabilities in an MRLA Evaluation Based on Systems Engineering

3.1. Overview

3.2. Cybersecurity Evaluation Method for Weapon Systems in MRLA

3.2.1. Identifying Cybersecurity Requirements for AT/DT

3.2.2. AT/DT Configuration-Based Design Plan (Procedure)

3.2.3. Review of the AT/DT-Based Cyber Test Scenario Configuration

3.2.4. System Engineering with the Modified MRLA: AT/DT-Based Cybersecurity Capability Test

4. Case Study

4.1. Identifying Cybersecurity Requirements for AT/DT

4.2. Procedure for an AT/DT Configuration-Based Design Plan

- Attack and defense tree designs confirmed that component-specific attack scenarios and their corresponding defense strategies can be systematically derived.

- Mapping across MITRE ATT&CK, D3FEND, and NIST SP 800-53 Rev. 5 demonstrates the feasibility of aligning security requirements with applicable controls.

- This case illustrates the practical potential of establishing a security design process that spans from vulnerability-based threat analysis to the evaluation of mitigation measures.

4.3. Review of the AT/DT-Based Cyber Test Scenario Configuration

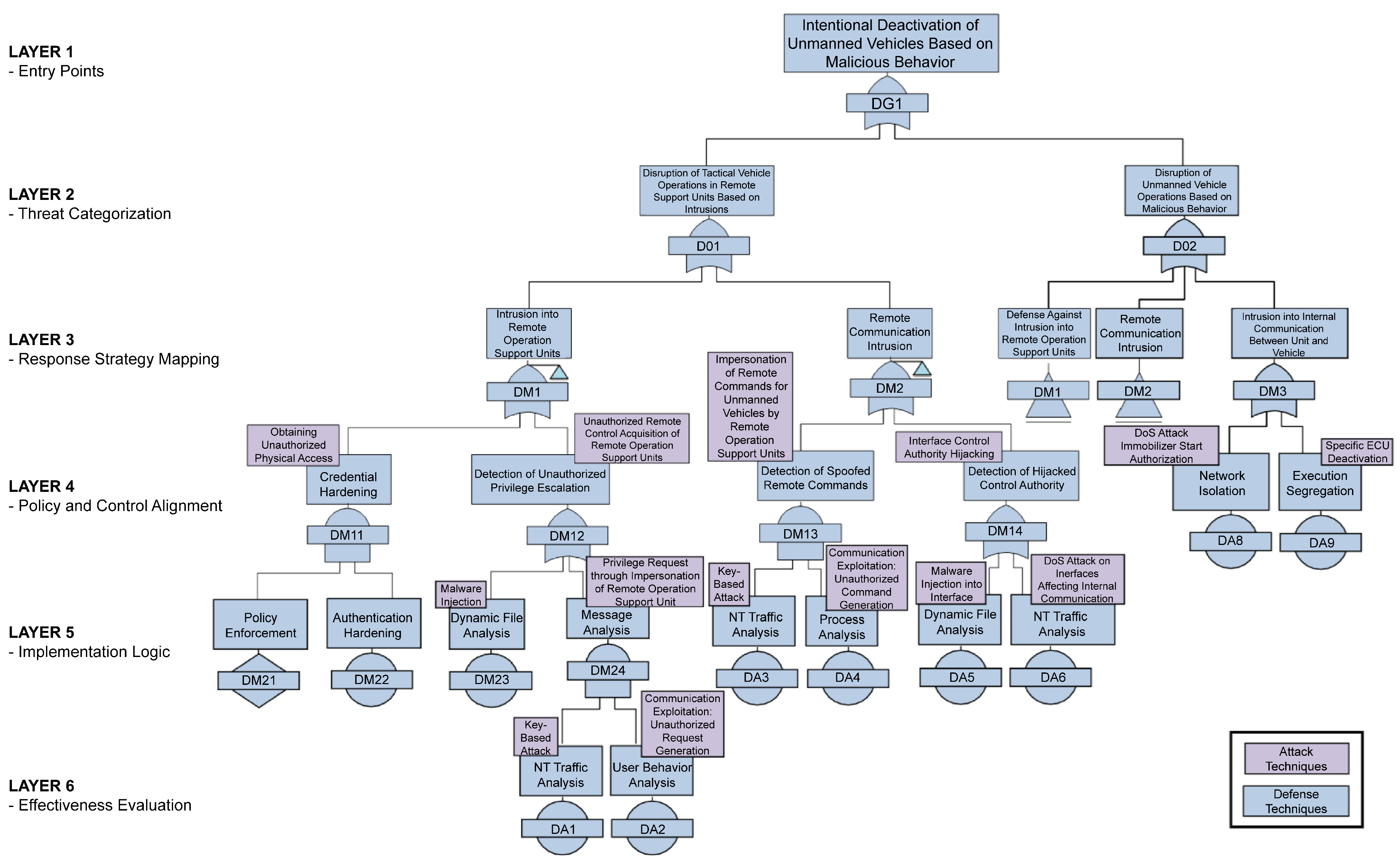

- LAYER 1 (Entry Points): Represents initial access attempts through physical access or social engineering targeting remote operational units.

- LAYER 2 (Threat Categorization): Groups potential threats, including privilege escalation, malware injection, and command hijacking, into distinct types.

- LAYER 3 (Response Strategy Mapping): Associates each threat category with defensive actions defined in the MITRE D3FEND framework.

- LAYER 4 (Policy and Control Alignment): Aligns defense techniques with NIST SP 800-53 Rev. 5 security controls to ensure policy compliance.

- LAYER 5 (Implementation Logic): Converts each mapped defense strategy into specific technical implementations (e.g., multi-factor authentication, access restriction, file analysis) to form connected defense paths.

- LAYER 6 (Effectiveness Evaluation): Assesses defense performance using metrics such as detection, response, and recovery time, and incorporates feedback for improvement.

- Attack and defense scenarios were constructed with clearly defined objectives and procedures, and their validity was verified through alignment with the MITRE ATT&CK and D3FEND frameworks as well as the NIST SP 800-53 security controls.

- By incorporating the latest CVE and RTOS vulnerabilities, the scenarios gained practical relevance and showed that logically connected paths, spanning multiple attack vectors and their corresponding defense strategies, can be constructed.

- This case highlights the applicability of cyber-test configurations based on attack–defense scenarios, supporting verification of security capabilities during the TRR phase.
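
The chain from Layer 2 (threat category) through Layer 3 (D3FEND technique) to Layer 4 (NIST SP 800-53 Rev. 5 control) can be sketched as two lookup tables. The following is a minimal illustration, not the authors' implementation: the dictionary and function names are hypothetical, and the entries are limited to the rows that are visible in this article's defense tree tables. Because those tables are elided, an empty control list here means "not shown in the excerpt", not "no applicable control".

```python
# Layer 2 -> Layer 3: threat category to D3FEND techniques
# (only the rows visible in the defense tree table; names are illustrative).
THREAT_TO_D3FEND = {
    "unauthorized physical access": ["D3-DTP", "D3-SPP", "D3-UAP"],
    "malware injection": ["D3-DA", "D3-FH"],
}

# Layer 3 -> Layer 4: D3FEND technique to NIST SP 800-53 Rev. 5 controls
# (only the rows visible in the D3FEND/NIST mapping table).
D3FEND_TO_NIST = {
    "D3-DTP": ["AC-4"],
    "D3-UAP": ["AC-2"],
    "D3-MFA": ["AC-2", "IA-2"],
    "D3-OTP": [],  # the table lists no control for this technique
}


def defense_path(threat):
    """Return (technique, controls) pairs for one threat category."""
    paths = []
    for technique in THREAT_TO_D3FEND.get(threat, []):
        controls = D3FEND_TO_NIST.get(technique, [])
        paths.append((technique, controls))
    return paths


def unmapped_techniques(threat):
    """List techniques with no control in the excerpted mapping."""
    return [tech for tech, controls in defense_path(threat) if not controls]


if __name__ == "__main__":
    for tech, controls in defense_path("unauthorized physical access"):
        print(tech, "->", controls or "no control shown")
```

Listing the unmapped techniques is one simple way to flag gaps for reviewers when checking Layer 4 alignment.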

4.4. System Engineering with the Modified MRLA: AT/DT-Based Cybersecurity Capability Test

- The case study confirmed that realistic threat identification and the establishment of defense strategies for unmanned vehicle components are achievable through the application of the MITRE ATT&CK and D3FEND frameworks.

- The cybersecurity evaluation items were systematically defined for each phase, demonstrating the applicability of threat-based security designs throughout the lifecycle.

- This case illustrates the practical validity of cybersecurity requirements identification by linking theoretical frameworks with implementable technical security controls.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| MRA | Manufacturing Readiness Assessment |

| MRLA | Manufacturing Readiness Level Assessment |

| AT/DT | Attack/Defense Trees |

| MCA | Major Capability Acquisition |

| MRL | Manufacturing Readiness Level |

| R&D | Research and Development |

| ATT&CK | Adversarial Tactics, Techniques, and Common Knowledge |

| ICS | Industrial Control Systems |

| CPS | Cyber-Physical Systems |

| D3FEND | Detection, Denial, and Disruption Framework Empowering Network Defense |

| SRR | System Requirements Review |

| SFR | System Functional Review |

| SSR | Software Specification Review |

| PDR | Preliminary Design Review |

| DT/OT | Developmental/Operational Tests |

| DoS | Denial of Service |

| CVE | Common Vulnerabilities and Exposures |

| ECU | Electronic Control Unit |

| OS | Operating System |

Appendix A

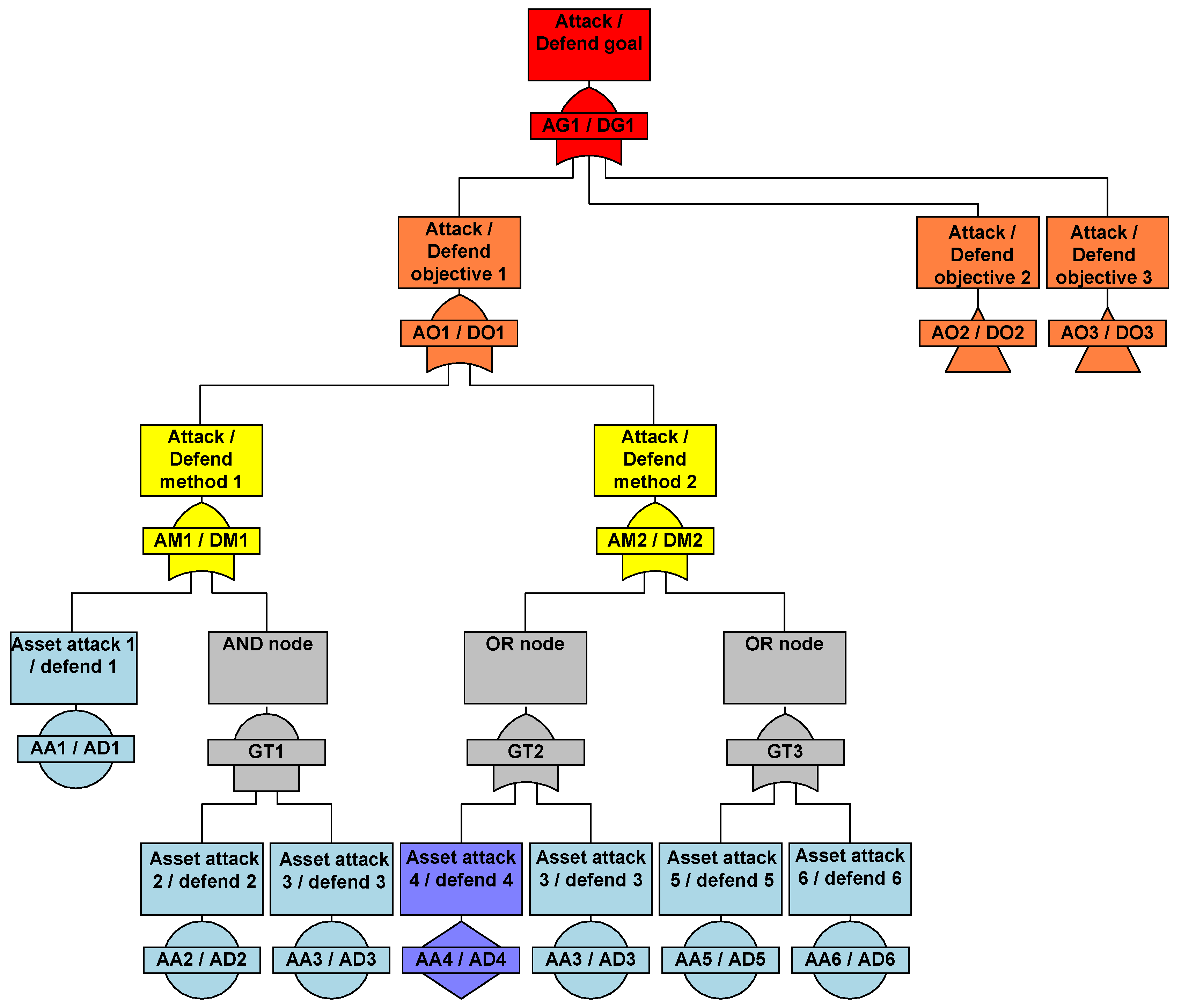

- AG/DG (attack/defense goal): The root of the tree, representing the ultimate goal of the attacker or the objective to be defended.

- AO/DO (attack/defense objective): Intermediate targets that must be reached to achieve the overall goal.

- AM/DM (attack/defense method): Specific techniques used to accomplish attack or defense objectives, mapped to MITRE ATT&CK and D3FEND.

- AA1/AD1 and AA2/AD2 (actions): Concrete actions taken to execute methods, allowing multiple branches in a scenario.

- GT1–GT3 (goal trees): Subtrees representing separate or parallel attack–defense scenario flows.
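
The node types above lend themselves to a simple tree data structure. The sketch below is an illustration under stated assumptions rather than the authors' tooling: the `TreeNode` class and `leaf_paths` helper are hypothetical names, the goal and objective labels are placeholders, and only the method labels (AM1, AM11, AM21) are taken from the attack tree table in this article.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TreeNode:
    node_id: str  # e.g. "AM1"
    kind: str     # "goal", "objective", "method", or "action"
    label: str
    children: List["TreeNode"] = field(default_factory=list)

    def add(self, child: "TreeNode") -> "TreeNode":
        """Attach a child node and return it so calls can be chained."""
        self.children.append(child)
        return child


def leaf_paths(node: TreeNode, prefix: Tuple[str, ...] = ()) -> List[Tuple[str, ...]]:
    """Enumerate every root-to-leaf path as a tuple of node IDs."""
    path = prefix + (node.node_id,)
    if not node.children:
        return [path]
    paths: List[Tuple[str, ...]] = []
    for child in node.children:
        paths.extend(leaf_paths(child, path))
    return paths


# Placeholder goal and objective; method labels follow the article's table.
root = TreeNode("AG", "goal", "placeholder attack goal")
ao1 = root.add(TreeNode("AO1", "objective", "placeholder attack objective"))
am1 = ao1.add(TreeNode("AM1", "method", "Intrusion into Remote Operation Support Units"))
am1.add(TreeNode("AM11", "method", "Obtaining Unauthorized Physical Access"))
am12 = am1.add(TreeNode("AM12", "method", "label not shown in the source table"))
am12.add(TreeNode("AM21", "method", "Malware Injection"))

if __name__ == "__main__":
    for p in leaf_paths(root):
        print(" -> ".join(p))
```

Each enumerated path corresponds to one candidate scenario flow; a defense tree can reuse the same structure with DG/DO/DM/AD identifiers.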

Appendix B

| Category | Content | Evaluation Method |
|---|---|---|
| Defense rate | Evaluate how often attacks succeed during testing. | Calculate the attack success rate for each scenario and analyze the successful and unsuccessful attack paths to identify key vulnerabilities. |
| Detection and response time | Evaluate how long it takes for the security system to detect and respond to an attack. | Measure detection and response times by analyzing logs and alert messages from the security system after an attack scenario is executed. |
| System resiliency | Evaluate the ability of the system to return to a healthy state after an attack. | Evaluate how quickly the system returns to normal operations after the attack is over, and analyze problems in the recovery process. |
| Effectiveness of security controls | Evaluate how well existing security controls block attacks. | Observe the operation of security controls during the attack scenario and analyze the attack vectors that were effectively blocked versus those that failed. |
| Adequacy of risk response plans | Evaluate whether a risk response plan has been developed based on the test results. | Analyze and evaluate whether a response plan has been developed for the vulnerabilities discovered, the feasibility of the plan, and the status of implementation within the organization. |
| Compliance with security policies | Evaluate whether the defense testing process complies with existing security policies and regulations. | Review defensive testing scenarios and procedures for alignment with the security policies and applicable regulations of the organization, and adjust the test plan if necessary. |

| Category | No. | MRL 8 Standard Metrics |
|---|---|---|
| 1. Technical and industry capabilities | 1 | Are technical risks documented, and are plans and actions in place to reduce them? |
| | 2 | Have you identified single/monopoly/overseas sources of supply, assessed their reliability, and established alternatives? |
| | 3 | By monitoring and analyzing your suppliers, do you have a plan in place for any delays to the overall business timeline due to supplier issues? |
| | 4 | Have you developed a contingency plan in case of business delays or disruptions? |
| | 5 | Has the supplier analysis considered the production capacity of each company? |
| | 6 | Is there a domestic industrial base with experience in producing similar components, or are there plans to expand new production facilities to produce the components? |
| | 7 | Has the environmental impact of the manufacturing process been analyzed and a plan of action developed? |
| | 8 | Has the company analyzed the potential for joint development and co-production? |
| 2. Design | 9 | Are production and manufacturing experts formally involved in shape control, and is this formalized and practiced? |
| | 10 | Have the individual product designs, including parts, components, and assemblies, been verified to meet the overall system design specification? |
| | 11 | Was the design completed on schedule? |
| | 12 | Have critical processes been identified and manufacturing process flowcharts defined? |
| | 13 | Has a technical acceptance criterion (acceptance criteria for checking physical/functional shape) been established and practiced? |
| | 14 | Has the key technology been demonstrated? |
| | 15 | Has the system development process verified that the key design characteristics can be achieved through the manufacturing process? |
| | 16 | Are geometry controls and geometry control history systematically managed and reflected in the technical data? |
| | 17 | Do the prime contractor and subcontractors have procedures and systems for shape tracking and control? |
| | 18 | Has the process design for manufacturing the product been completed and feasibility analyzed? |
| | 19 | Has the production process, including components produced by subcontractors, been stabilized and proven to be reliable? |
| | 20 | Are the requirements for firmware and software documented? |
| | 21 | Have all production and test equipment and facilities been designed and verified? |
| 3. Expenses and funding | 22 | (For projects where the guidelines for the scientific business management practice are applied) Has the forecast of production costs been reviewed and analyzed to meet the target cost? |
| | 23 | Are cost guidelines reflected in the design process, and are target values for design cost and manufacturing cost set? |
| | 24 | Are design cost targets for subsystems established and evaluated, and are costs traceable to the WBS? |
| | 25 | Does the prime contractor have cost-tracking procedures in place and cost-control metrics for subcontractors and suppliers? |
| 4. Materials | 26 | Have the materials used been validated during the system development phase? |
| | 27 | Have low-risk substitutes been considered in case of shortages of applied raw materials and their impact on performance? |
| | 28 | Are discontinued parts analyzed and acted upon, and is a material acquisition plan in place for mass production? |
| | 29 | Are procedures for part standardization in place and operational? |
| | 30 | Has the need to use new materials been identified, and an action plan developed based on material cost, delivery time, production capacity, etc.? |
| | 31 | Has the department that manages subcontracts developed and implemented a vendor management plan to ensure smooth procurement of parts/materials? |
| | 32 | Has a bill of materials (M-BOM) been completed for production? |
| | 33 | Have the issues of storage and handling of materials been considered, and storage and handling procedures put in place? |
| | 34 | Has the environmental impact of materials been analyzed and an action plan developed? |
| | 35 | Has a material management procedure and system been established and operated, including a parts management plan? |
| | 36 | Have you completed an analysis of long lead time items and developed a plan to acquire long lead time items for the first production? |
| 5. Process capabilities and process control | 37 | Is manufacturing technology acquisition for new and similar processes completed and validated? |
| | 38 | Are processes that require manufacturing process changes when transitioning from development to mass production identified and validated? |
| | 39 | Are process variables defined for key process control? |
| | 40 | Have factors that can cause schedule delays been identified and improved through simulation? |
| | 41 | Has software for simulating the production process been identified and demonstrated? |
| | 42 | Has yield data been collected and analyzed during system development? |
| | 43 | Have facilities and test equipment been validated through system development? |
| 6. Quality | 44 | Are activities for continuous process and quality improvement being performed? |
| | 45 | Are procedures for quality improvement in place and operationalized? |
| | 46 | Are checkpoints defined in the manufacturing process? |
| | 47 | Has a quality management plan for suppliers been established and implemented during the system development phase? |
| | 48 | Is the quality assurance history documented and utilized in the system development phase? |
| | 49 | Are calibration/inspection procedures for measuring equipment in place and applied? |
| | 50 | Are procedures in place to incorporate failure information from the development phase into the process, and have they been operationalized during the system development phase? |
| | 51 | Are statistical process control (SPC) activities performed? |
| 7. Workforce | 52 | Has the organization identified the manpower required to carry out the project and established a plan to expand it? |
| | 53 | Has the company identified the specialized technical personnel required, and has a plan to secure them been established? |
| | 54 | Is there a program for training personnel in production, quality, etc.? |
| 8. Facilities | 55 | Has the production facility been verified? |
| | 56 | Has the production capacity of the facility been analyzed, and necessary measures taken to achieve the required production rate for mass production? |
| | 57 | Are activities being conducted to increase the capacity of existing facilities? |
| | 58 | Has the company identified whether government-owned facilities/equipment, etc., are needed for production and taken measures? |
| 9. Manufacturing planning and scheduling | 59 | Has a production plan and management method been established and operated to enable low-rate initial production? |
| | 60 | Has the risk management plan been updated? |
| | 61 | Are all necessary resources specified in the production plan? |
| | 62 | Is a plan for improving the maintenance performance of manufacturing equipment established and managed? |
| | 63 | Has the need for special equipment or test equipment been analyzed? |
| | 64 | Is the development of manufacturing standards, technical documentation, and manufacturing documentation required for production complete? |
| 10. Cybersecurity capabilities | 65 | Are the attack mitigation rate and the time to detect and respond to attacks adequate? |
| | 66 | Is the system resilient to attacks, and is the effectiveness of security controls adequate? |
| | 67 | Does the defense testing process comply with existing security policies and regulations? |


| MRL | US | South Korea |

|---|---|---|

| 1–3 | Criteria address manufacturing maturity and risks, beginning with pre-systems acquisition | A basic level of analyzing manufacturing issues, manufacturing concepts, or feasibility to achieve the objectives of the weapon system development project, or verifying the manufacturing concept |

| 4 | continue through the selection of a solution | At the preliminary research level, reviewing manufacturability |

| 5–6 | Criteria address manufacturing maturation of the needed technologies through early prototypes of components or subsystems/systems, culminating in a preliminary design. | Exploratory development level. A level that involves reviewing manufacturing capabilities for prototype production and assessing manufacturability of critical technologies or components |

| 7 | The criteria continue by providing metrics for an increased capability to produce systems, subsystems, or components in a production-representative environment, leading to a critical design review. | Completion stage of system development. A phase to confirm feasibility for initial production, conducting a manufacturing maturity assessment based on level 8 |

| 8 | The next level of criteria encompasses proving manufacturing process, procedure, and techniques on the designated “pilot line” | |

| 9 | Once a decision is made to begin LRIP, the focus is on meeting quality, throughput, and rate to enable transition to FRP | Entry level for initial mass production |

| 10 | The final MRL measures aspects of lean practices and continuous improvement for systems in production | Mass production phase |

| Area | Components | Security Threats | Security Risk | Security Technologies |
|---|---|---|---|---|
| Weapon system | ECU | ECU disable | C(H), I(M), A(H) | Hardware-based process isolation, IO ports restricted |
| | Immobilizer | DoS attack for immobilizer start permissions | C(M), I(M), A(H) | |
| Communication system | Control command receiver | Execution of unauthorized command | C(H), I(H), A(M) | Signature-based detection, multi-factor authentication, and command verification |
| | | Internal communication DoS attack | C(M), I(M), A(H) | Behavior-based detection, IPS, and firewall rules |
| Backend infrastructure | Interface system | Malware injection | C(H), I(H), A(H) | Anomaly detection, memory analysis, and network traffic analysis |
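
The C/I/A ratings in the table above are written as compact strings such as `C(H), I(M), A(H)`. As a hedged illustration of how such ratings could be parsed and compared, the sketch below converts each string into a dictionary and sums numeric weights. The weights (H=3, M=2, L=1) and the function names are assumptions made for this example; the article does not define a numeric scoring scheme.

```python
import re

# Assumed weights for illustration only; not defined in the article.
LEVEL_WEIGHT = {"L": 1, "M": 2, "H": 3}


def parse_cia(rating):
    """Parse a string like 'C(H), I(M), A(H)' into {'C': 'H', 'I': 'M', 'A': 'H'}.

    The order of the three dimensions in the string does not matter.
    """
    pairs = re.findall(r"([CIA])\(([HML])\)", rating)
    return {dimension: level for dimension, level in pairs}


def risk_score(rating):
    """Sum the assumed weights over the parsed C, I, and A levels."""
    return sum(LEVEL_WEIGHT[level] for level in parse_cia(rating).values())


# Threats and ratings copied from the table above.
THREATS = {
    "ECU disable": "C(H), I(M), A(H)",
    "DoS attack for immobilizer start permissions": "C(M), I(M), A(H)",
    "Execution of unauthorized command": "C(H), I(H), A(M)",
    "Internal communication DoS attack": "C(M), I(M), A(H)",
    "Malware injection": "C(H), I(H), A(H)",
}

if __name__ == "__main__":
    ranked = sorted(THREATS, key=lambda name: risk_score(THREATS[name]), reverse=True)
    for name in ranked:
        print(risk_score(THREATS[name]), name)
```

A summed score is only one possible ordering; a review team might instead prioritize by the highest single dimension, depending on the mission profile.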

| Domain | Evaluation Criteria | Evaluation Methods |
|---|---|---|
| Weapon system | Has the security vulnerability of the ECU been identified? | Review whether it is possible to map ECU vulnerabilities and attack paths using the MITRE ATT&CK framework and generate related technical scenarios. |
| | Has the latest technology related to ECU security vulnerabilities been identified? | Check whether it is possible to map the latest attack techniques using the MITRE ATT&CK framework. |
| | Is it possible to establish security controls for the ECU? | Review whether there are applicable security control items for the ECU using the MITRE ATT&CK and D3FEND frameworks based on NIST SP 800-53 Rev. 5. |
| | .......... | .......... |
| Communication system | Has the security vulnerability of the control command receiver been identified? | Check whether it is possible to map network protocol vulnerabilities using the MITRE ATT&CK framework. |
| | Does the control command receiver’s security protocol respond to the latest threats? | Review whether the security technology related to protocol communication threats or cryptographic module application can be mapped using the MITRE ATT&CK framework. |
| | Is data integrity verification for the control command receiver possible? | Check whether it is possible to map measures for maintaining data integrity using the MITRE D3FEND framework. |
| | .......... | .......... |

Evaluation metrics: mapping based on NIST SP 800-53 Rev. 5/MITRE ATT&CK

| | Level 3 | Level 4 | Level 5 | Level 6 | Control ID | Control Name | Mapping Type | Technique ID | Priority Order (C, I, A) |
|---|---|---|---|---|---|---|---|---|---|
| ATTACK TREE | [AM1] Intrusion into Remote Operation Support Units (Parent: [A01/A02]) …………. | [AM11] Obtaining Unauthorized Physical Access (Parent: [AM1]) …………. | [AM21] Malware Injection (Parent: [AM12]) | …………. [AA1] Key-based attack; illegal acquisition, modification, or destruction (Parent: [AM22]) | AC-16 | Security and privacy attributes | mitigates | T1550.001 | C(H), I(L), A(L) |
| | | | | | CA-7 | Continuous monitoring | mitigates | T1204.001/T1204.002/T1204.003 | C(H), A(M), I(L) |
| | | | | | CA-8 | Penetration testing | mitigates | T1204.003 | C(H), A(M), I(L) |
| | | | | | CM-2 | Baseline configuration | mitigates | T1204.001/T1204.002/T1204.003 | C(H), A(M), I(L) |
| | | | | | RA-5 | Vulnerability monitoring and scanning | mitigates | T1204.003 | C(H), A(M), I(L) |
| | ………. | ………. | ………. | ………. | ………. | ………. | ………. | ………. | ………. |

Evaluation metrics: mapping based on NIST SP 800-53 Rev. 5/MITRE ATT&CK

| Level 3 ~ Level 6 | Control ID | … | Technique ID | Priority Order (C, I, A) | Technique Name | General CVE Vulnerabilities | RTOS Vulnerabilities |
|---|---|---|---|---|---|---|---|
| ATTACK TREE | AC-16 | … | T1550.001 | C(H), I(L), A(L) | Stealing application access tokens | CVE-2018-15801, CVE-2019-5625, CVE-2022-39222, CVE-2022-46382 | x |
| | AC-2 | … | T1052 | C(H), I(M), A(L) | Application access tokens | CVE-2023-3497, CVE-2022-3312, CVE-2021-4122, CVE-2022-47578 | CVE-2021-36133 |
| | CA-7 | … | T1204.001/T1204.002/T1204.003 | C(H), A(M), I(L) | Malicious link/Malicious file/Malicious image | CVE-2019-16009, CVE-2019-1838, CVE-2019-15287, CVE-2019-1772 | CVE-2021-31566, CVE-2022-35260, CVE-2017-1000100, CVE-2018-18439 |
| | CA-8 | … | T1204.003 | C(H), A(M), I(L) | Malicious link/Malicious file/Malicious image | CVE-2016-8867, CVE-2021-35497, CVE-2022-23584, CVE-2022-20829 | CVE-2018-18439 |
| | ……… | …… | …………. | …………. | …………. | …………. | …………. |

Evaluation metrics: mapping based on MITRE D3FEND

| | Level 3 | Level 4 | Level 5~6 | Control ID | Control Name | ATT&CK ID/ATT&CK Mitigation |
|---|---|---|---|---|---|---|
| DEFEND TREE | [DM1] Defense Against Intrusion into Remote Operation Support Units (Parent: [D01/D02]) | [DM11] Credential Hardening (Obtaining Unauthorized Physical Access) (Parent: [DM1]) | ………… | D3-DTP | Policy Enforcement: Domain Trust Policy | M1015/Active Directory Configuration |
| | | | | D3-SPP | Policy Enforcement: Strong Password Policy | M1026/Privileged Account Management; M1027/Password Policies |
| | | | | D3-UAP | Policy Enforcement: User Account Privileges | M1015/Active Directory Configuration |
| | | | | ………… | ………… | ………… |
| | | [DM12] Unauthorized Privilege Escalation (Unauthorized Remote Control Acquisition of Remote Operation Support Units) (Parent: [DM1]) | [DM23] File analysis (malware injection) (Parent: [DM12]) | D3-DA | File Analysis: Dynamic Analysis | M1026/Privileged Account Management; M1047/Audit; M1048/Application Isolation and Sandboxing |
| | | | | D3-FH | File Analysis: File Hash | M1049/Antivirus/Antimalware |
| | …………. | …………. | …………. | …………. | …………. | …………. |

Evaluation metrics: mapping based on MITRE D3FEND and NIST SP 800-53 Rev. 5

| Level 3 ~ Level 6 | D3FEND Control ID | ATT&CK ID/ATT&CK Mitigation | Contents | NIST SP 800-53 Rev. 5 Control ID | NIST SP 800-53 Rev. 5 Control Name |
|---|---|---|---|---|---|
| DEFEND TREE | D3-DTP | M1015/Active Directory Configuration | Modify domain configurations to limit trust between domains | AC-4 | Information flow enforcement |
| | D3-UAP | M1015/Active Directory Configuration | Restrict resource access for user accounts | AC-2 | Account management |
| | D3-MFA | M1032/Multi-factor Authentication | Require two or more proofs of evidence to authenticate users | AC-2, IA-2 | Account management; identification and authentication (organizational users) |
| | D3-OTP | M1027/Password Policies | One-time passwords are valid for only one user authentication | - | - |
| …… | … | …………. | …………. | …………. | …………. |

| Domain | Evaluation Item | Evaluation Method |
|---|---|---|
| Offensive Tree for Autonomous Vehicles | Does the offensive tree align with the MITRE ATT&CK framework and scenarios/TTPs? | Verify whether the offensive tree is aligned with scenarios and TTPs through MITRE ATT&CK mapping. |
| | Is the offensive tree appropriately mapped to NIST SP 800-53? | Analyze the NIST SP 800-53 mapping results to assess whether the offensive tree is properly linked to the corresponding regulation. |
| | Are the impacts on Confidentiality (C), Integrity (I), and Availability (A) clearly reflected? | Check whether the C, I, A priority is properly reflected in the offensive scenarios. |
| | Are vulnerabilities in the CVE and RTOS lists adequately reflected? | Compare the CVE database and RTOS vulnerability lists to determine whether the offensive scenarios reflect these vulnerabilities. |
| | .......... | .......... |
| Defensive Tree for Autonomous Vehicles | Does the defensive strategy align with the MITRE D3FEND framework? | Verify whether the defensive strategy aligns with MITRE D3FEND mapping. |
| | Does the defensive strategy include effective mitigation measures? | Compare the defensive strategy against the NIST SP 800-53 criteria to assess the inclusion of effective mitigation measures. |
| | Is the defensive strategy appropriately mapped to NIST SP 800-53? | Analyze the NIST SP 800-53 mapping results to assess whether the defensive strategy is properly linked to the corresponding regulation. |
| | Does the defensive strategy adequately address vulnerabilities from CVE and RTOS lists? | Compare the CVE database and RTOS vulnerability lists to check if the defensive strategy properly addresses these vulnerabilities. |
| | .......... | .......... |

| Domain | Evaluation Item | Evaluation Method |
|---|---|---|
| Construction of attack scenarios | Specificity and clarity of attack objectives | Evaluate whether the final objectives of the attack scenario and the steps to achieve them are clear and specific. |
| | Diversity and complexity of attack techniques | Analyze the diversity and complexity of techniques used in the attack scenario to assess the realism of the scenario. |
| | Completeness and connectivity of the attack path | Evaluate whether each step of the attack is logically connected and whether the overall path is well-constructed. |
| | Mapping to the MITRE ATT&CK framework | Review whether the attack scenario is properly mapped to the MITRE ATT&CK framework. |
| | Mapping to NIST SP 800-53 security controls | Verify if each stage of the attack scenario is consistently mapped to the NIST SP 800-53 Rev. 5 security control items. |
| | Application of CVE and RTOS vulnerabilities | Assess whether the latest CVE and RTOS vulnerabilities are appropriately mapped within the attack scenario. |
| Construction of defense scenarios | Specificity and clarity of defense objectives | Evaluate whether the final objectives of the defense scenario and the steps to achieve them are clear and specific. |
| | Diversity and complexity of defense techniques | Assess the diversity and complexity of the defense techniques used in the scenario to determine the ability to respond to various attacks. |
| | Completeness and connectivity of the defense path | Evaluate whether each step of the defense scenario is logically connected and whether the overall path is well-constructed. |
| | Mapping to the MITRE D3FEND framework | Review whether the defense scenario is properly mapped to the MITRE D3FEND framework. |
| | Effectiveness of mitigation techniques | Assess whether the mitigation techniques defined in the defense scenario operate effectively in real environments. |
| | Mapping to NIST SP 800-53 security controls | Verify if each stage of the defense scenario is consistently mapped to the NIST SP 800-53 Rev. 5 security control items. |

| Evaluation Item | Description | Evaluation Method | Evaluation Results |

|---|---|---|---|

| Attack success rate | Assesses how often an attack succeeds during defense testing. | Calculate the success rate for each attack scenario and analyze major vulnerabilities. | Out of five scenarios, three attacks succeeded (60% success rate). |

| Detection and response time | Evaluates the time it takes for the security system of the autonomous vehicle to detect and respond to an attack. | Measure the time by analyzing logs and alert messages from the security system after executing the attack scenario. | Average detection time: 30 s, average response time: 45 s. |

| System resilience | Assesses the ability of the autonomous vehicle to return to normal state after an attack. | Measure system recovery time after the attack and analyze any issues in the recovery process. | Average recovery time: 3 min, with some errors occurring during the recovery process. |

| Effectiveness of security controls | Evaluates how well existing security controls block attacks. | Observe the operation of security controls during attack testing and analyze both successfully blocked and failed attacks. | 2 out of 5 attacks were blocked by security controls (40% blocking rate). |

| Vulnerability exposure | Assesses the number and severity of identified vulnerabilities during testing. | Analyze the final vulnerability results based on the severity and scores of CWE and CVSS mapped to NIST SP 800-53 Rev. 5. | 6 major vulnerabilities identified, 2 of which were rated as severe. |

| Appropriateness of risk response plan | Evaluates whether the risk response plan is appropriately established based on test results. | Review whether a response plan for vulnerabilities is established and assess its feasibility. | Response plan for vulnerabilities was established and rated as feasible. |

| Compliance with security policies | Evaluates whether the defense testing process complies with existing security policies and regulations. | Review whether the test scenarios and procedures conform to security policies. | Test conducted in accordance with security policies, with some adjustments needed for certain regulations. |
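
The rate figures in the results table follow directly from scenario counts, and the timing figures are averages over per-scenario measurements. The sketch below illustrates that arithmetic under stated assumptions: the function names are hypothetical, the counts (three of five attacks succeeded, two of five blocked) come from the table above, and the timestamp pairs are invented sample values, since the article reports only the averages.

```python
from statistics import mean


def rate_percent(count, total):
    """Return count/total as a percentage; total must be positive."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * count / total


def mean_latency(events):
    """Average elapsed time over (start_s, end_s) pairs, in seconds."""
    return mean(end - start for start, end in events)


# Counts reported in the results table.
attack_success_rate = rate_percent(3, 5)  # three of five attacks succeeded
blocking_rate = rate_percent(2, 5)        # two of five attacks were blocked

# Hypothetical (attack start, detection) timestamps in seconds,
# chosen only to show how an average detection time would be derived from logs.
sample_detection_events = [(0, 20), (10, 50)]

if __name__ == "__main__":
    print(f"attack success rate: {attack_success_rate:.0f}%")
    print(f"blocking rate: {blocking_rate:.0f}%")
    print(f"mean detection time: {mean_latency(sample_detection_events)} s")
```

In practice the event pairs would be extracted from security system logs and alert messages, as the evaluation method column describes.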

| No. | Evaluation Item | Pass Status |

|---|---|---|

| 65 | Are the attack mitigation rate and the time to detect and respond to attacks adequate? | [v] Yes/[ ] No |

| 66 | Is the system resilient to attacks, and is the effectiveness of security controls adequate? | [v] Yes/[ ] No |

| 67 | Does the defense testing process comply with existing security policies and regulations? | [v] Yes/[ ] No |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sung, S.-I.; Kim, D. Manufacturing Readiness Assessment Technique for Defense Systems Development Using a Cybersecurity Evaluation Method. Systems 2025, 13, 738. https://doi.org/10.3390/systems13090738
