Abstract

In the manufacturing supply chain, management reports often begin with concise messages that summarize key inventory insights. Traditionally, human analysts crafted these summary messages manually by sifting through complex data, a process that is both time-consuming and prone to inconsistency. In this study, we present an AI-based system that automatically generates high-quality inventory insight summaries, referred to as “headline messages,” from real-world inventory data. The proposed system leverages lightweight natural language processing (NLP) and machine learning models to achieve accurate and efficient performance. Historical messages are first clustered using a MiniLM sentence-transformer model that provides fast semantic embedding. The resulting clusters are used to derive key message categories and to define structured input features. Then, an explainable, low-complexity classifier is trained to predict the appropriate headline message from current inventory metrics using minimal computational resources. Through empirical experiments with real enterprise data, we demonstrate that this approach can reproduce expert-written headline messages with high accuracy while reducing report generation time from hours to minutes. This study makes three contributions. First, it introduces a lightweight approach that transforms inventory data into concise messages. Second, the proposed approach mitigates confusion by maintaining interpretability and fact-based control, and aligns wording with domain-specific terminology. Third, it reports an industrial validation and deployment case study, demonstrating that the system can be integrated with enterprise data pipelines to generate large-scale weekly reports. These results demonstrate the practical applicability and technological novelty of combining small-scale language models with interpretable machine learning to deliver inventory insights.

1. Introduction

Inventory management is the process of supervising, controlling, and tracking a company’s goods and materials throughout the supply chain, from raw materials to finished goods. Effective inventory management in the manufacturing industry is widely recognized as a key element of operational and financial performance [1,2], for several reasons. First, inventory often represents a significant portion of a manufacturer’s assets and operating costs, so optimizing inventory levels can lead to significant cost savings and improved cash flow [3]. Second, proper inventory management directly impacts production efficiency by providing the right materials and parts at the right time, thereby preventing costly delays or downtime [4,5]. Third, it plays a pivotal role in meeting customer demand and maintaining service quality [6]. Well-managed inventory prevents stockouts and delivery delays, thereby supporting consistent customer satisfaction [7]. Inventory management is a balance between holding enough inventory to keep operations running smoothly and minimizing excess inventory that drains capital [8]. Practices such as just-in-time (JIT) manufacturing exemplify this balance [9]: JIT minimizes inventory holding costs but requires very precise management to avoid production interruptions. However, achieving optimal inventory management is a complex task [10]. This complexity arises from the many dynamic and interrelated factors that must be considered in the decision-making process [10,11]. For example, managers must consider future demand forecasts and production plans, supplier lead times and reliability, the availability of replacement or substitute parts, and the current progress of various processes on the factory floor. Each of these factors can change over time: customer demand can spike or drop unexpectedly, suppliers can face delays, and production lines can experience unexpected issues [12].
Moreover, inventory itself can be multilayered, including raw materials, work-in-process, and finished goods, each with its own unique set of considerations. Integrating all of these aspects into a coherent decision requires not only data from multiple sources but also contextual knowledge of the manufacturing process. Because so many data streams and domain-specific insights must be synthesized, companies have traditionally relied on skilled planners and inventory managers who have the deep expertise and know-how to make these decisions [12].

In practice, these skilled field personnel continuously monitor inventory-related information and provide analysis results in reports or recommendations to decision makers [7]. For example, an inventory planner on the shop floor may observe that inventory levels for a critical component are declining faster than expected and may discover that deliveries from a key supplier are being delayed. In such cases, the planner reports this information to management and makes recommendations (e.g., expediting the ordering of the relevant parts or temporarily adjusting the production schedule). These reports are then forwarded to higher-level decision makers (e.g., operations managers or supply chain managers), who decide on actions such as acquiring additional inventory, investigating discrepancies, recalibrating safety stock levels, or modifying production plans to prevent potential problems. Practices vary across countries and firms. In many contexts, enterprise systems such as enterprise resource planning (ERP) and material requirements planning (MRP) already generate exception or advisory messages, whereas in others, expert analysts compile narrative briefings.

These expert-generated reports and messages play a critical role in the decision-making process, essentially acting as a bridge between raw data from the field and strategic management activities. While the value of human-generated reports is undeniable, relying solely on an individual’s expertise has clear drawbacks. The process is time-consuming, subjective, and prone to inconsistency and error [13,14,15,16]. Furthermore, important knowledge often remains in the minds of experts, making it difficult to communicate or standardize [17]. These issues create a strong incentive to automate and formalize the generation of inventory management reports. Automated systems can analyze relevant data consistently, generate recommendations or alerts much faster than humans, and base their output on defined logic or learned patterns rather than individual intuition. Such systems provide speed, objectivity, and reproducibility in decision support. However, developing automated solutions for this task is far from easy. Traditional machine learning approaches struggle because decision making requires holistic reasoning over diverse, high-dimensional data sources. Feature spaces are vast, and relationships between variables are complex and context-dependent. Moreover, supervised learning is often difficult due to the lack of labeled examples that map specific inventory conditions to appropriate textual advice [18,19,20]. Emerging AI techniques offer potential solutions to this problem. In particular, the advent of large language models (LLMs) has opened up the possibility of generating consistent textual summaries and recommendations from data. In theory, LLMs could be given prompts containing relevant inventory and production data and asked to produce reports similar to those written by human experts.
In practice, LLMs are adept at processing language and have shown impressive results on a variety of text-generation tasks [21,22,23]. However, applying LLMs directly to this problem faces serious challenges. The most pressing is the sheer volume of data required to make informed decisions, which far exceeds the current input size limits of LLMs and makes this approach computationally infeasible [24]. Even if all relevant data could be fed into an LLM, the computation would be prohibitively slow and expensive. Furthermore, without careful grounding in the data, LLMs could generate recommendations that appear fluent and plausible but are not reliably supported by real-world facts [25]. To address these challenges, we reframe the report generation task as a prediction problem. Instead of generating free-form text from scratch, we first cluster a collection of historical inventory reports written by experts using natural language processing techniques to limit the output space. This clustering effectively captures common types of advisory reports by grouping similar messages into representative categories or scenarios. Once these typical message categories are identified, generating new reports is reduced to selecting the appropriate category for the current situation. Based on selected input features, a model is trained to predict the report category most appropriate for the given system state. Once the model selects a category, the corresponding message is produced from templates or examples associated with that category, with details such as item name and quantity filled in as needed. By recasting report creation as classification followed by template filling, this approach dramatically reduces the computational burden and helps avoid hallucination problems. The model uses compact feature vectors and a limited set of outputs, making generation fast and inexpensive.
Furthermore, because the output is based on clusters of real expert reports, the generated messages are consistent with human judgment and domain knowledge. To emphasize industrial relevance, the system’s outputs are explicitly linked to standard planning KPIs, working capital (via days inventory outstanding (DIO) and carrying cost), and service level, ensuring that each flagged exception supports profitability and supply–demand matching in routine operations.

2. Related Works

2.1. Headline Message Generation from Inventory Data

Early inventory management software primarily provided data tracking and static reporting capabilities. However, modern ERP and “inventory information” systems have evolved to generate proactive insights and recommendations from inventory data [26,27]. For example, modern ERP and supply chain platforms often include modules that automatically flag inventory anomalies or suggest actions (such as reorder prompts or surplus alerts). Even traditional material requirements planning (MRP) systems and manufacturing resource management systems (MRP II) generated simple exception messages, templated alerts that instructed planners to expedite or cancel orders based on inventory status [28]. Building on this concept, modern industry solutions integrate advanced analytics to generate more sophisticated headline messages. They not only identify issues such as potential stock shortages or overstocks, but also contextualize those issues (such as linking stock shortage risks to upcoming promotions or supplier delays). These inventory insight systems aim to transform raw data into concise, actionable information [29,30]. In practice, this often takes the form of dashboards or reports that highlight key inventory metrics along with generated narratives or recommendations. Existing research on inventory decision support follows suit, emphasizing the use of automated analysis of inventory levels, demand forecasts, and lead times to support decision making. Overall, the research clearly demonstrates a shift from passive inventory monitoring to active insight generation, with systems presenting important observations to users in a form that is close to natural language.

2.2. Data-to-Text Generation in Operational Context

The core underlying technology for automated inventory messaging is data-to-text generation, the process of converting structured data into fluent natural language [31,32,33,34]. Data-to-text generation has been extensively studied in areas such as weather reporting, finance, and personalized journalism; however, its application in manufacturing and operations is relatively recent [35,36,37]. Unlike general text summaries or open-ended chatbot conversations, operational data-to-text generation focuses on conveying concise, factual, and domain-specific information. In a manufacturing environment, this can include production line performance summaries, inventory trend descriptions, and automated daily operational briefings based on database and sensor inputs. Previous studies have explored several approaches. Template-based approaches have long been used for operational reporting in industry [38,39]. For example, pre-written sentences with slots for inputting values (e.g., “X stock is below safety stock. Replenishment by date”) can ensure reliability and clarity. These rule-based natural language generation systems, used in early decision support prototypes, offer high precision and control but lack flexibility. Recent studies have introduced neural generative models into these environments, fine-tuning language models on structured operational datasets to generate more diverse and fluent narratives. Research on neural natural language generation demonstrates the feasibility of producing readable reports on assembly line metrics or supply chain KPIs. However, pure end-to-end neural network methods face challenges in operations, as they need to avoid hallucinations (stated facts that do not exist in the data) and adhere to the jargon and formal tone expected in manufacturing reports [25].
In short, data-to-text transformation technologies are increasingly tailored to operations management, enabling systems to automatically explain the meaning of numbers on the factory floor or in the warehouse. Such research aids in the development of automated headline messages by providing algorithms that map complex data inputs to plain text outputs.

2.3. Lightweight AI Models and Scalable Implementations

Large-scale neural language models have demonstrated impressive text-generation capabilities. However, manufacturing applications often require lightweight, scalable solutions due to practical constraints [40]. In inventory management, systems must generate reports for thousands of items, and thus, the generation method must be highly efficient. Deploying a very large model for every inventory check or update would be too slow or cost-prohibitive. Additionally, many manufacturing companies favor on-premises or edge computing for reasons of data privacy and reliability, which imposes strict limits on the size and complexity of models that can be deployed [41].

As a result, researchers have explored various strategies to compress or streamline natural language generation (NLG) models for operational use. Common approaches include knowledge distillation, which is training a compact model to replicate a larger model’s behavior, model quantization, architecture optimizations, and using smaller pre-trained language models fine-tuned on domain-specific data [42]. The goal of these techniques is to reduce memory footprint and computation cost while preserving the model’s ability to produce consistent and relevant text for inventory reports.

Furthermore, template-based or rule-based generation methods are inherently lightweight and remain competitive for certain routine reporting tasks. These systems involve minimal computation—essentially inserting data into pre-defined sentence templates—so they can easily scale to produce large volumes of reports with negligible overhead. The obvious drawback is that purely template-driven approaches lack adaptability; they cannot easily handle unforeseen scenarios or linguistic variations beyond their pre-scripted rules. However, in stable domains like inventory reporting, this consistency and predictability can be advantageous, ensuring that critical information is delivered uniformly and without error.

Reflecting these trade-offs, some modern NLG platforms adopt hybrid architectures. For example, a system might use fixed templates or rule-based components for the most critical, high-precision parts of a message, while employing simpler neural models or statistical methods to generate the more variable, descriptive portions. This ensures that key facts are represented with guaranteed accuracy, while still maintaining a degree of linguistic flexibility to avoid overly formulaic text. An additional facet of scalability is maintainability: a text-generation system should be easy to update as data sources, business rules, or product lines evolve, without requiring extensive retraining or reprogramming. To this end, NLG engineering research often advocates modular pipeline designs, where separate components handle data processing, content selection, and linguistic realization. Such modularity facilitates adaptability and makes the overall system easier to maintain over time.

3. Methods

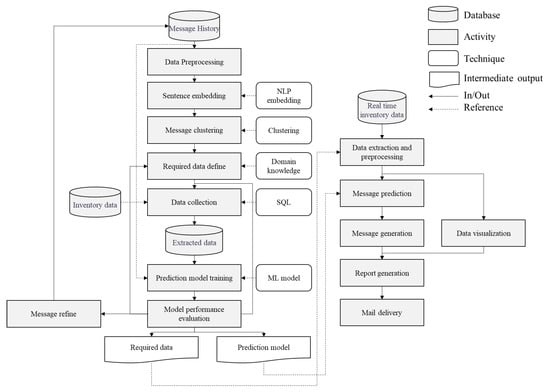

The proposed system converts raw inventory data into concise, informative message summaries through a series of sequential processing stages. This proposed system is policy-independent. It captures existing policy objectives (e.g., target DIO and service level settings) and transparently flags deviations, allowing corrective action to be taken without changing the organization’s ordering policies. Figure 1 presents a schematic overview of the end-to-end framework, from data ingestion to automated message generation.

Figure 1. Proposed framework for automated message generation for inventory management.

The proposed system automates the generation of insight messages for manufacturing inventory management by integrating natural language processing and machine learning. The pipeline transforms the status of inventory into human-readable recommendation messages that reflect expert assessments. The methodology consists of four main steps: (1) sentence embedding of past expert comments, (2) message clustering to identify standard insight categories, (3) development of a predictive model to map inventory metrics to these categories, and (4) message generation to produce the final text insights.

On the left of Figure 1, message history is processed through NLP embedding and clustering to define message categories. On the right, real-time inventory data is extracted, aggregated, and fed into an ML model to predict applicable message types. The predicted messages are generated in natural language, embedded in automated reports (e.g., PowerPoint slides), and emailed to stakeholders. This end-to-end pipeline encompasses data collection, model training, message generation, report writing, and distribution.

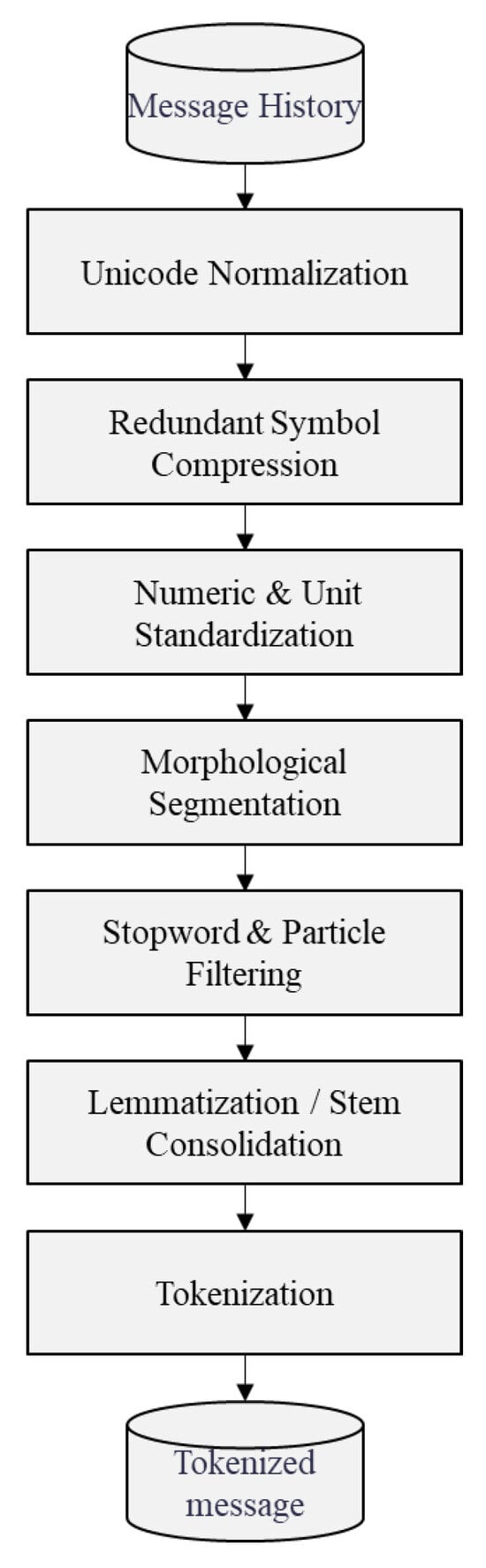

3.1. Data Preprocessing

Prior to embedding, the Korean message text was processed through a multi-stage normalization and tokenization pipeline to ensure consistency and reduce noise (Figure 2). All text was first normalized to a standard Unicode form so that canonically equivalent characters had a uniform representation. This step eliminated discrepancies between visually identical characters (such as pre-composed Korean syllables and their decomposed counterparts) and provided a consistent encoding foundation. Next, extraneous whitespace and redundant symbols were removed or compressed. In practice, consecutive spaces were collapsed into a single space, and sequences of identical punctuation marks or special characters were reduced to a single instance, thereby eliminating noise from repetitive symbols. Numeric expressions and units of measurement were then standardized to a uniform format. This included ensuring a standard representation for quantities (e.g., consistently formatting currency or measurement units and, where appropriate, converting different unit abbreviations to a common notation). These normalization steps collectively regularized the text, preparing it for linguistic analysis.

Figure 2. Data preprocessing process for sentence embedding.
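The normalization steps described above (Unicode normalization, symbol and whitespace compression, and numeric and unit standardization) can be illustrated with a minimal sketch. The alias table `UNIT_ALIASES`, the function name `normalize_message`, and the choice of NFC as the target form are assumptions made for illustration; the actual normalization form and unit mappings used in the study are not specified.

```python
import re
import unicodedata

# Hypothetical alias table: the actual unit mappings are not given in the text.
UNIT_ALIASES = {"ea": "EA", "pcs": "EA", "kgs": "kg", "kg": "kg"}
_UNIT_PATTERN = re.compile(r"(\d)\s*(ea|pcs|kgs|kg)(?![A-Za-z])", re.IGNORECASE)


def normalize_message(text: str) -> str:
    """Apply Unicode, numeric/unit, symbol, and whitespace normalization."""
    # 1) NFC (assumed form) composes decomposed Hangul jamo into syllables.
    text = unicodedata.normalize("NFC", text)
    # 2) Drop thousands separators so "1,200" and "1200" look the same.
    text = re.sub(r"(?<=\d),(?=\d{3}(?!\d))", "", text)
    # 3) Reduce runs of an identical punctuation mark or symbol to one.
    text = re.sub(r"([^\w\s])\1+", r"\1", text)
    # 4) Map unit abbreviations that follow a number to a single notation.
    text = _UNIT_PATTERN.sub(
        lambda m: f"{m.group(1)} {UNIT_ALIASES[m.group(2).lower()]}", text
    )
    # 5) Collapse consecutive whitespace into a single space.
    return re.sub(r"\s+", " ", text).strip()
```

Applying the steps in a fixed order keeps the function idempotent on already-clean text, which is convenient when historical and newly written messages pass through the same pipeline.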

After the above normalization, morphological segmentation was performed using a Korean morphological analyzer to address the language’s agglutinative nature. This process split each eojeol (i.e., each space-delimited Korean word form) into its constituent morphemes, effectively separating lexical stems from inflectional endings and particles. By analyzing each token at the morpheme level, the system identified meaningful units such as root nouns, verbs, and modifiers, as well as grammatical morphemes (e.g., case markers and conjugation suffixes). The result of this segmentation was a sequence of morpheme-level tokens (often accompanied by part-of-speech tags), which provided a finer-grained representation of the sentence. Following segmentation, high-frequency function words and particles were filtered out to reduce noise. In particular, common Korean stopwords, including grammatical particles (such as subject and object markers) and other function morphemes that carry little semantic content, were removed from the token list. This stopword and particle filtering step ensured that the remaining tokens predominantly consisted of semantically salient terms. Concurrently, lemmatization was applied to normalize each token’s form. All inflected or conjugated morphemes were converted to their base dictionary form (lemma) so that different surface forms of the same word would be treated uniformly. For example, conjugated verb forms and honorific or tense variations were mapped to a single canonical stem. This consolidation of stems under their lemma reduced sparsity in the token set and ensured that semantically identical word forms did not appear as distinct tokens in the embedding training data.

To handle out-of-vocabulary (OOV) words and extremely rare tokens, a robust fallback strategy was employed before finalizing the token list. Tokens that were not present in the training vocabulary (or fell below a minimum frequency threshold) were identified, and special measures were taken to mitigate their impact. In general, such tokens were either replaced with a generic “unknown word” symbol (e.g., an <UNK> token) or decomposed into smaller subword units that were likely to be in-vocabulary. For instance, an unseen compound word could be broken into known morphemes or syllable-level segments using a subword tokenization algorithm (such as byte-pair encoding), allowing partial information to be retained rather than discarding the token entirely. By applying this OOV handling procedure, the preprocessing ensured that no token passed to the embedding model was truly out-of-vocabulary; every token was either in the known vocabulary or reduced to sub-components that the model could recognize. Finally, with the text fully normalized, cleaned, and analyzed, the tokenization was executed to produce the final sequence of tokens for the sentence embedding model. In this final step, the processed text was transformed into a list of token indices. Each token in the sequence corresponded to a vocabulary element.
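The OOV fallback can be sketched as follows. Note that this sketch substitutes a simple greedy longest-match segmentation for the byte-pair encoding mentioned above, purely to keep the example short; the function name `segment_oov` and the toy vocabulary are hypothetical.

```python
UNK = "<UNK>"


def segment_oov(token: str, vocab: set) -> list:
    """Return the token if known, else known sub-pieces, else [UNK].

    Greedy longest-match segmentation (a stand-in for BPE): at each
    position, take the longest substring that is in the vocabulary.
    """
    if token in vocab:
        return [token]
    pieces, start = [], 0
    while start < len(token):
        end = len(token)
        # Shrink the candidate until it is a known vocabulary entry.
        while end > start and token[start:end] not in vocab:
            end -= 1
        if end == start:
            # No known piece begins here: fall back to the unknown symbol.
            return [UNK]
        pieces.append(token[start:end])
        start = end
    return pieces
```

With a vocabulary containing "안전" and "재고", the unseen compound "안전재고" is split into those two known pieces, so partial information is retained rather than discarded.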

3.2. Sentence Embedding

First, we encoded a corpus of past inventory insight messages written by supply chain experts into a numerical representation suitable for clustering. For this purpose, we used a pretrained MiniLM sentence-transformer model [43].

This model captures the semantic content of messages by mapping each message to a dense 384-dimensional vector. Although it is a lightweight transformer with about 22 million parameters, it produces high-quality sentence embeddings for clustering and similarity tasks. Using these embeddings, messages with similar meanings (e.g., warnings about overstocks expressed in different words) are placed close together in vector space, allowing the subsequent clustering step to group messages based on true topical similarity rather than exact wording.

Each historical message, which was preprocessed above, was fed through the sentence transformer model to obtain a fixed-length embedding vector. The result is a set of semantic vectors for all historical insight sentences. These embeddings are used as input for the next step of unsupervised clustering analysis.

3.3. Message Clustering

After obtaining the message embeddings, we applied k-means clustering [44] to group similar inventory insight messages into distinct categories. To determine an appropriate number of clusters k, we evaluated values of k from 2 to 15 using both quantitative metrics and qualitative visualization techniques [45]. Our goal was to find a clustering granularity that balanced within-cluster cohesion and between-cluster separation without over- or under-clustering the messages.

We first performed a silhouette coefficient analysis for each candidate k. The silhouette coefficient provides a measure of clustering quality by assessing how well each data point fits within its assigned cluster compared to other clusters. For each message embedding i in a cluster $C_I$, we define the average intra-cluster distance:

$$a(i) = \frac{1}{|C_I| - 1} \sum_{j \in C_I,\; j \neq i} d(i, j),$$

where d(i, j) is the distance between embeddings i and j. This quantity is the mean pairwise distance between i and all other members of its own cluster (a measure of cluster cohesion). A lower a(i) indicates that i is closely packed with others in its cluster. We then compute the silhouette value s(i) for each point by comparing this intra-cluster distance to the nearest-cluster distance b(i) (the mean distance from i to the closest cluster of which it is not a part):

$$b(i) = \min_{J \neq I} \frac{1}{|C_J|} \sum_{j \in C_J} d(i, j), \qquad s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}}.$$

The silhouette ranges from −1 to +1, where values close to +1 indicate that the point is much closer to points in its own cluster than to points in other clusters. We adopt this classical silhouette index because it directly balances cohesion and separation. The silhouette index is a widely recommended internal validity criterion, and mainstream toolkits support its use with cosine distance for vector embedding spaces [46]. We calculated the average silhouette score for each clustering solution (k = 2 to 15) to quantify overall clustering quality. A higher average silhouette score signifies more compact, well-separated clusters. In practice, we looked for an “elbow” or peak in the silhouette score curve as a clue to the optimal number of clusters.
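As a worked illustration of the silhouette computation, the following sketch evaluates the per-point values and their mean under cosine distance. It is a plain-Python illustration rather than the toolkit implementation used in the study; the function names are hypothetical, and assigning 0 to singleton clusters is an assumed convention.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity for two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def silhouette_values(points, labels, dist=cosine_distance):
    """Per-point silhouette: (b - a) / max(a, b)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    values = []
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            values.append(0.0)  # assumed convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(dist(points[i], points[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        values.append((b - a) / denom if denom > 0 else 0.0)
    return values


def mean_silhouette(points, labels):
    """Average silhouette over all points, used to compare candidate k."""
    vals = silhouette_values(points, labels)
    return sum(vals) / len(vals)
```

Computing `mean_silhouette` for the label assignment produced at each candidate number of clusters yields the curve that is inspected for a peak or elbow.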

In addition to the quantitative metric, we qualitatively assessed cluster separation using two-dimensional t-distributed Stochastic Neighbor Embedding (t-SNE) plots [47]. For representative values of k (such as 2, 4, 6, and 10), we generated t-SNE projections of the message embeddings colored by their k-means cluster assignment. This allowed us to visually inspect how distinct or overlapping the clusters appeared in a reduced 2D space. Well-defined, tight groupings in the t-SNE plot would indicate cohesive clusters, whereas significant overlap or indistinct boundaries would suggest that the clustering might be too coarse or otherwise suboptimal. By comparing t-SNE plots at different k values, we could observe at what point increasing the number of clusters started to yield diminishing returns (e.g., splitting naturally coherent groups into arbitrary sub-groups).

Using the combination of silhouette analysis and t-SNE visualization, we selected an optimal cluster count for our data. We aimed to choose a k that maximized cluster quality metrics and produced interpretable groupings of messages. This optimal k was then used to train a final k-means model on all message embeddings, and the resulting clusters were carried forward for interpretation in the results.

3.4. Prediction Model Development

The next step was to develop a predictive model that could determine the appropriate insight cluster based on current inventory data. To train a multi-class classification model, we first extracted several features from the raw inventory records. Each feature captured an aspect of weekly inventory performance based on structured tabular inventory records, where each entry corresponds to a distinct inventory item characterized by a comprehensive set of features. These features span general item metadata, current stock levels, target inventory levels, computed coverage metrics (estimated days-of-inventory remaining under current consumption rates), and forward-looking demand indicators (such as short-term demand forecasts or other leading demand signals). For reasons of company confidentiality, the exact field names and detailed schema specifications are intentionally not disclosed. Table 1 is an example of pseudonymized and simplified input data.

Table 1. Example of pseudonymized and simplified input data.

We evaluated several candidate machine learning algorithms to learn the mapping from weekly data to the correct insight categories. In particular, we tested a support vector machine (SVM) with an RBF kernel, a multilayer perceptron (MLP), Extra Trees, a single Decision Tree, and XGBoost [48,49,50,51,52]. We compared the performance of these models using stratified five-fold cross-validation on the training set, evaluating them primarily with overall classification accuracy and macro-average F1-score. Accuracy is calculated as follows:

$$\mathrm{Accuracy} = \frac{1}{M} \sum_{m=1}^{M} \frac{TP_m + TN_m}{TP_m + TN_m + FP_m + FN_m},$$

where $M$ is the number of classes and $TP_m$, $TN_m$, $FP_m$, and $FN_m$ denote true positives, true negatives, false positives, and false negatives for class $m$. Because several clusters show extreme class imbalance, accuracy alone could mask poor minority class performance. Therefore, we report the macro-average F1-score, which is defined as

$$\mathrm{F1}_{\mathrm{macro}} = \frac{1}{M} \sum_{m=1}^{M} \mathrm{F1}_m,$$

where $\mathrm{F1}_m = 2 P_m R_m / (P_m + R_m)$, and $P_m = TP_m / (TP_m + FP_m)$ (precision) and $R_m = TP_m / (TP_m + FN_m)$ (recall) are computed independently for each class. Macro-averaging gives equal weight to all classes regardless of their prevalence, making the macro-average F1-score a more reliable indicator of class-wise performance under imbalance than accuracy alone.
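The effect of class imbalance on the two metrics can be seen in a small worked example. The sketch below assumes that accuracy is averaged over one-vs-rest confusion counts per class, as suggested by the per-class true/false positive and negative counts described above; the function names are hypothetical.

```python
def per_class_counts(y_true, y_pred, classes):
    """One-vs-rest (TP, TN, FP, FN) counts for each class."""
    n = len(y_true)
    counts = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        counts[c] = (tp, n - tp - fp - fn, fp, fn)
    return counts


def mean_class_accuracy(y_true, y_pred, classes):
    """Average of (TP + TN) / (TP + TN + FP + FN) over classes."""
    counts = per_class_counts(y_true, y_pred, classes)
    return sum((tp + tn) / (tp + tn + fp + fn)
               for tp, tn, fp, fn in counts.values()) / len(classes)


def macro_f1(y_true, y_pred, classes):
    """Unweighted mean of per-class F1 (0 when precision+recall is 0)."""
    f1_scores = []
    for tp, _tn, fp, fn in per_class_counts(y_true, y_pred, classes).values():
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        f1_scores.append(2 * precision * recall / total if total else 0.0)
    return sum(f1_scores) / len(f1_scores)


# A classifier that always predicts the majority class (8 of 10 samples):
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 10
accuracy = mean_class_accuracy(y_true, y_pred, classes=[0, 1])
f1 = macro_f1(y_true, y_pred, classes=[0, 1])
```

In this toy case the accuracy is 0.8 although the minority class is never predicted, whereas the macro-average F1 falls to about 0.44 because the minority class contributes an F1 of zero.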

We assessed statistical significance on fold-wise scores using non-parametric tests. Pairwise comparisons (best vs. runner-up within a cluster) used one-sided Wilcoxon signed-rank tests (exact, n = 5 folds) aligned with a directional hypothesis. Multiple-model comparisons used the Friedman test, followed by the Nemenyi post hoc test (α = 0.05). We also report Holm-adjusted p values across the six clusters per metric.
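To make the exact test concrete, the sketch below enumerates all sign assignments for a paired one-sided Wilcoxon signed-rank test and applies a Holm step-down adjustment. It is an illustrative reimplementation, not the statistical software used in the study; the function names and the fold scores are hypothetical.

```python
from itertools import product


def wilcoxon_exact_greater(x, y):
    """Exact one-sided p-value for H1: paired x tends to exceed y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Tied absolute differences share the average of their ranks.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # Under H0 every sign pattern is equally likely: enumerate all 2^n.
    hits = sum(
        1
        for signs in product((0, 1), repeat=n)
        if sum(r for r, s in zip(ranks, signs) if s) >= w_plus
    )
    return w_plus, hits / 2 ** n


def holm_adjust(pvals):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted, running = [0.0] * m, 0.0
    for rank, idx in enumerate(order):
        running = max(running, min(1.0, (m - rank) * pvals[idx]))
        adjusted[idx] = running
    return adjusted


# Hypothetical fold-wise scores for two models over five folds.
best = [0.91, 0.89, 0.93, 0.90, 0.92]
runner_up = [0.88, 0.87, 0.885, 0.86, 0.915]
w_plus, p_value = wilcoxon_exact_greater(best, runner_up)
```

When the first model wins on all five folds, the statistic reaches its maximum of 15 and the exact one-sided p value is 1/32 ≈ 0.031, which is the smallest value attainable with five paired observations.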

We performed hyperparameter tuning on all the candidate models using grid search. After selecting the optimal hyperparameters, we trained the final model using the entire training dataset and evaluated the model on a separate holdout test set containing several months of previously unseen weekly data.

The classifier operates on weekly tabular features such as current inventory levels, target levels, and coverage metrics (days inventory outstanding (DIO), weekly changes, and short-term demand indicators) to assign observations to predefined insight categories (e.g., shortage risk, overstock). Because the mapping is learned from observed data, it is distribution-independent. Therefore, parametric assumptions about the demand generation process, such as Gaussian, intermittent, or chaotic, are not required. Rapid demand changes are reflected in the feature vectors and can be captured through the learned decision boundary. The system is intentionally decoupled from inventory management and does not calculate order quantities. Instead, it takes as input policy objectives, such as service level settings in a newsvendor formula or buffer profiles in demand-driven MRP (DDMRP), and issues transparent messages when deviations are detected. This structural separation allows organizations to maintain existing control policies while utilizing proposed condition assessment and exception management methods, thereby aligning the generated narratives with profitability and supply–demand objectives.
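The kind of weekly feature vector described above can be sketched as follows. Because the actual schema is confidential, every field name and formula here is a hypothetical placeholder; coverage is approximated as stock divided by average daily consumption, in line with the description of days-of-inventory remaining under current consumption rates.

```python
from dataclasses import dataclass


@dataclass
class WeeklyRecord:
    """Hypothetical, simplified item-week record (not the real schema)."""
    stock_units: float
    prev_week_stock_units: float
    weekly_consumption_units: float
    target_dio_days: float


def build_features(rec: WeeklyRecord) -> dict:
    """Derive coverage, deviation-from-target, and weekly-change features."""
    daily_use = rec.weekly_consumption_units / 7
    # Coverage: days of inventory remaining at the current consumption rate.
    coverage_days = rec.stock_units / daily_use if daily_use > 0 else float("inf")
    change = rec.stock_units - rec.prev_week_stock_units
    return {
        "coverage_days": coverage_days,
        "coverage_gap_days": coverage_days - rec.target_dio_days,
        "stock_change_ratio": (
            change / rec.prev_week_stock_units
            if rec.prev_week_stock_units > 0
            else 0.0
        ),
    }


features = build_features(
    WeeklyRecord(stock_units=900, prev_week_stock_units=1000,
                 weekly_consumption_units=140, target_dio_days=30)
)
```

Here the target coverage enters only as an input against which deviations are measured, which mirrors the separation between condition assessment and the ordering policy itself.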

3.5. Message Generation

The final step in the pipeline is to generate human-readable insight messages based on the predicted categories and current data context. Instead of generating text freely, we take a template-based natural language generation (NLG) approach. Template-based NLG uses pre-written sentence patterns with slots for data values. We worked with domain experts to create a set of message templates, one for each of the six insight categories, to ensure that the tone and terminology matched the expert’s language. Each template encodes the general structure of the insight and includes placeholders where one can insert specific metrics or item details. For example, the excess inventory template states, “Current inventory is a {current_DIO}-day supply compared to the {target_DIO}-day supply.” It then recommends actions, such as reducing orders or increasing demand. In this template, the placeholders {current_DIO} and {target_DIO} are filled in with actual values for the week before output. As another example, the long-term inventory template quantifies the percentage of long-term inventory and suggests interventions (such as price reductions on obsolete items) when the percentage is high. At runtime, when the predictive model assigns a cluster label to the data for that week, the system selects that template and fills in the placeholders with the latest data values for that week. This rule-based generation strategy ensures that the message is consistent (similar situations generate similarly worded insights) and accurate (messages do not deviate from the facts provided by the data). It also improves explainability, since each generated sentence explicitly references the data (e.g., DIO values or percentages) that generated the insight. Using expert-validated, fixed templates, the system delivers insights that are reliable, understandable, and meet inventory managers’ expectations.
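A minimal sketch of the template-filling step is shown below. The first sentence of the excess inventory template is the wording quoted above; the recommendation sentence, the long-term inventory wording, the category keys, and the function name `render_message` are hypothetical placeholders rather than the expert-validated templates.

```python
from string import Formatter

# Illustrative templates keyed by predicted category (keys are hypothetical).
TEMPLATES = {
    "excess_inventory": (
        "Current inventory is a {current_DIO}-day supply compared to the "
        "{target_DIO}-day supply. Consider reducing orders or increasing demand."
    ),
    "long_term_inventory": (
        "Long-term inventory accounts for {long_term_pct}% of total stock. "
        "Consider price reductions on obsolete items."
    ),
}


def render_message(category: str, values: dict) -> str:
    """Fill the template of the predicted category with current values."""
    template = TEMPLATES[category]
    # Collect placeholder names so a missing value fails loudly instead
    # of producing a message that is not backed by data.
    required = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = required - set(values)
    if missing:
        raise KeyError(f"missing values for placeholders: {sorted(missing)}")
    return template.format(**values)


message = render_message(
    "excess_inventory", {"current_DIO": 45, "target_DIO": 30}
)
```

Checking the placeholders before formatting means that every number in the output is traceable to a supplied data value, which is the property that keeps the generated text tied to the facts.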

4. Results and Analysis

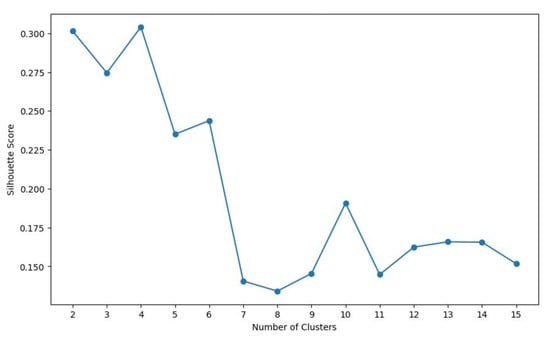

We analyzed 4280 item-week records from one global manufacturer, covering approximately thirty plants over 52 consecutive weeks. Using the methodology above, we evaluated clustering outcomes for k = 2 through 15 and identified six clusters as the most suitable grouping for the inventory insight messages. The average silhouette coefficient as a function of k (illustrated in Figure 3) showed an initial rise and peaked at a lower cluster count (around k = 3 or 4). However, those low-k solutions were too coarse; they merged several semantically distinct topics into the same cluster. At k = 6, the silhouette score was slightly below the absolute peak, but this point marked a clear inflection in the curve. In other words, k = 6 appeared to be an “elbow point”: beyond six clusters, the average silhouette coefficient declined steadily. Increasing the number of clusters past six yielded only marginal gains in cohesion at the cost of fragmenting previously coherent groups. This indicated that adding more clusters (k > 6) mostly split existing categories into smaller sub-groups without markedly improving between-cluster separation. Thus, from a quantitative standpoint, six clusters offered the best trade-off between intra-cluster compactness and inter-cluster isolation.
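For readers unfamiliar with the metric, the sketch below computes the average silhouette coefficient for a given labelling using only the Python standard library. It is a minimal illustration on four made-up 2D points, not the sentence embeddings or the k = 2 to 15 scan used in the study; the function name and the toy data are assumptions.

```python
from math import dist
from statistics import mean


def silhouette_score(points, labels):
    """Mean of s = (b - a) / max(a, b) over all points (needs >= 2 clusters)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            mean(dist(p, q) for q in members)
            for other, members in clusters.items()
            if other != lab
        )
        scores.append((b - a) / max(a, b))
    return mean(scores)


# Toy data: two well-separated pairs of points.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_score(points, [0, 0, 1, 1])  # labels follow the geometry
bad = silhouette_score(points, [0, 1, 0, 1])   # labels cut across the groups
```

A labelling that follows the geometry scores close to 1, while one that cuts across the groups scores below 0, which is why the coefficient can be compared across candidate values of k.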

Figure 3.

Silhouette coefficient as a function of the number of clusters.

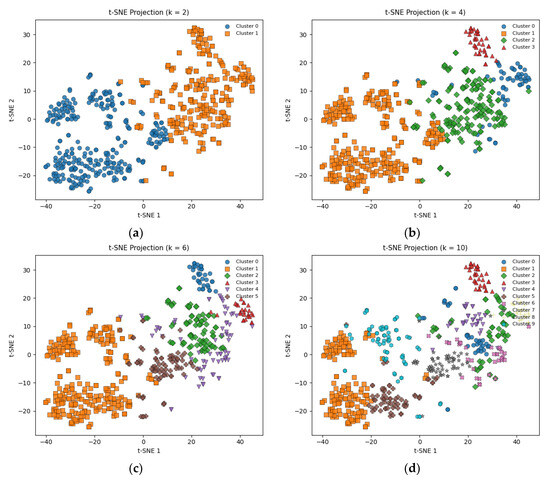

Qualitative visualization of the embedding space further supported this choice. The t-SNE projections for different k values are shown in Figure 4 (with subplots for k = 2, 4, 6, and 10). Consistent with the silhouette analysis, the t-SNE plot for k = 2 (Figure 4a) displayed only two very broad clusters in the 2D space, within which multiple distinct sub-patterns were visible, indicating that two clusters were overly broad and were each capturing several disparate message topics. At k = 4 (Figure 4b), the clusters became more distinct than at k = 2, yet we still observed some semantically different message types appearing within the same cluster region in the plot. This suggested that four clusters were still insufficient to capture all the key thematic variations in the insights. In contrast, the t-SNE visualization for k = 6 (Figure 4c) showed six well-separated, cohesive clusters with minimal overlap between them. Each cluster appeared as a tight grouping of points in the 2D projection, indicating that messages within each cluster were very similar to each other and dissimilar from those in other clusters. Finally, when increasing to k = 10 (Figure 4d), some of the clusters in the t-SNE plot began to split into adjacent smaller groupings. This over-segmentation at k = 10 suggested that a few of the six core topics were being broken down into less meaningful sub-clusters. These qualitative observations reinforced that k = 6 was capturing the natural structure in the data without over-clustering.

Figure 4.

t-SNE projections of the sentence-embedding space under varying k-means cluster cardinalities: (a) k = 2, (b) k = 4, (c) k = 6, (d) k = 10.

In summary, both the quantitative metric (silhouette scores) and the qualitative examination (t-SNE plots) indicated that six clusters were the optimal choice for our dataset. We therefore set k = 6 and applied k-means to partition all message embeddings into six distinct clusters. This produced a manageable number of groups, each corresponding to a unique insight topic category present in the inventory management messages. We then examined representative messages in each cluster to interpret and label the theme of that cluster. The six identified insight clusters were defined as follows:

- Long-term inventory: Messages about the accumulation of obsolete or slow-moving stock, typically items held in inventory beyond a certain age threshold, indicating low turnover;

- Excess inventory: Messages highlighting overstocked items or situations where inventory levels substantially exceed the targets or expected demand;

- Risk of shortage: Warnings about potential near-term stockouts or critically low inventory levels for certain items, suggesting a risk of not meeting demand;

- Inventory performance: General assessments of overall inventory status and efficiency, such as evaluations of days inventory outstanding (DIO) or turnover performance against defined targets;

- Item-specific issues: Insights flagging anomalies or noteworthy conditions for particular items or SKUs (stock-keeping units), for example, items with exceptionally high or low inventory metrics compared to norms;

- Data/target gaps: Alerts about missing reference data, configuration issues, or undefined targets that hinder proper inventory assessment (e.g., an item missing a target DIO value, making it impossible to evaluate its performance against a benchmark).

Historical insight messages were authored in Korean free text. To construct a learnable dataset, each message was annotated against the six defined clusters, yielding a set of six binary labels per message. The value one denotes the presence of the corresponding insight, and the value zero denotes its absence. Because a message can include several sentences, multiple labels may be positive for the same message. We trained an independent binary classifier for each cluster using a common set of structured features derived from weekly inventory records. Each classifier produced a calibrated probability for its cluster, and the decision threshold on the validation folds was chosen to maximize macro F1 for that cluster. At inference time the system allows multiple positive predictions for a single message.
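The per-cluster threshold choice and the multi-label decision can be sketched as follows. This is a minimal standard-library illustration: the candidate grid, the toy labels and probabilities, and the function names are assumptions, and probability calibration is not shown.

```python
def f1(tp, fp, fn):
    """F1 of one class from its confusion counts (0.0 when undefined)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(y_true, y_pred):
    """Average of the F1 scores of the positive and the negative class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    return (f1(tp, fp, fn) + f1(tn, fn, fp)) / 2


def best_threshold(y_true, probs, candidates):
    """Candidate threshold with the highest macro F1 (first one wins ties)."""
    return max(
        candidates,
        key=lambda th: macro_f1(y_true, [int(p >= th) for p in probs]),
    )


def predict_labels(probs_by_cluster, thresholds):
    """Multi-label decision: every cluster whose probability clears its threshold."""
    return [c for c, p in probs_by_cluster.items() if p >= thresholds[c]]


# Toy validation data for one cluster (values are illustrative only).
y_val = [0, 0, 0, 1, 1, 0, 1, 0]
p_val = [0.10, 0.20, 0.35, 0.40, 0.80, 0.30, 0.45, 0.05]
threshold = best_threshold(y_val, p_val, candidates=[0.3, 0.4, 0.5])

labels = predict_labels(
    {"excess_inventory": 0.72, "risk_of_shortage": 0.10, "long_term_inventory": 0.55},
    {"excess_inventory": 0.40, "risk_of_shortage": 0.40, "long_term_inventory": 0.50},
)
```

Because each cluster has its own classifier and threshold, a single week can trigger several insight categories at once, matching the multi-label annotation of the historical messages.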

Table 2 compares the accuracy and F1-scores of five different classifiers on six distinct inventory-related message clusters. We used group-blocked five-fold cross-validation by week to avoid temporal leakage and stratified folds by cluster label. Hyperparameters were tuned by inner grid search. We report the mean and standard deviation across five folds for accuracy and macro-F1. A held-out eight-week set was reserved for system validation. The key observation is that no single model consistently outperforms the others across all clusters. Each message cluster exhibits a different pattern of which model performs best, indicating a balanced, cluster-by-cluster comparison without a clear overall favorite. Below is a breakdown of the performance in each cluster:
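A week-blocked split of the kind described above can be sketched as follows. The assignment of weeks to contiguous folds is an assumption (the text does not say how weeks were allocated), stratification by cluster label is omitted for brevity, and the function name is hypothetical.

```python
def week_blocked_folds(weeks, n_folds=5):
    """Yield (train_idx, test_idx) pairs in which no week is split across sets.

    `weeks` holds the week identifier of each record. Weeks are assigned to
    folds in contiguous blocks, which is an assumption of this sketch.
    """
    unique_weeks = sorted(set(weeks))
    fold_of_week = {
        w: i * n_folds // len(unique_weeks) for i, w in enumerate(unique_weeks)
    }
    for fold in range(n_folds):
        test = [i for i, w in enumerate(weeks) if fold_of_week[w] == fold]
        train = [i for i, w in enumerate(weeks) if fold_of_week[w] != fold]
        yield train, test


# Toy index: 52 weeks with three records each (the real data are item-week records).
weeks = [w for w in range(1, 53) for _ in range(3)]
folds = list(week_blocked_folds(weeks))
```

Keeping all records of a week on the same side of the split prevents a model from being evaluated on a week it has partly seen during training.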

Table 2.

Comparative accuracy and F1-scores of five machine learning classifiers across six inventory message clusters. Values are fold means reported as proportions.

Long-Term Inventory: In this cluster, XGBoost, Extra Trees, and Decision Tree achieved the highest accuracy and F1-score (each 0.95). These three models were perfectly tied as top performers, indicating they were equally effective at classifying long-term inventory messages. By comparison, the SVM and Neural Network slightly trailed (around 0.91 accuracy and 0.89 F1), suggesting a modest drop in performance for those methods on this cluster.

Excess Inventory: The Extra Trees classifier excelled on excess inventory messages, attaining nearly perfect performance (0.99 accuracy and 0.99 F1-score). This is markedly higher than the other models on this cluster. The Neural Network and Decision Tree also performed well (both with F1 around 0.91 and accuracy in the mid-0.90s). In contrast, XGBoost and SVM achieved about 0.93 accuracy but only 0.80 F1. This disparity (high accuracy but lower F1) suggests that XGBoost and SVM frequently predicted the majority class (perhaps classifying many messages as not indicating excess inventory), thus achieving high overall accuracy but missing a number of true excess inventory cases, which lowers the F1 for the positive class. Extra Trees’ superior performance here indicates that it was especially adept at capturing the characteristics of excess inventory messages, with both precision and recall being very high.
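The gap between accuracy and F1 under class imbalance can be made concrete with a small worked example. The confusion counts below are hypothetical (100 records, 10 of them positive) and are not taken from Table 2; they only illustrate the mechanism.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all records that are classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def f1_positive(tp, fp, fn):
    """F1 of the positive class: harmonic mean of precision and recall."""
    return 2 * tp / (2 * tp + fp + fn)


# Hypothetical counts: the model finds only half of the 10 positive records.
acc = accuracy(tp=5, fp=2, fn=5, tn=88)  # 93 of 100 records correct
f1 = f1_positive(tp=5, fp=2, fn=5)       # 10 / 17, about 0.59

print(f"accuracy={acc:.2f}, F1={f1:.2f}")
```

The 88 correctly rejected negatives dominate the accuracy, whereas F1 ignores them and is pulled down by the five missed positives.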

Risk of Shortage: For messages signaling a risk of stock shortage, XGBoost was the top performer with 0.90 accuracy and 0.90 F1, indicating robust, balanced predictions for this category. SVM was the next best, though notably lower (around 0.83 on both metrics). Both the Extra Trees and Decision Tree models achieved about 0.81 accuracy and slightly lower F1 (around 0.79), indicating moderate performance. The Neural Network struggled on this cluster, only reaching 0.67 accuracy and F1—substantially worse than the other methods. This poor result suggests the Neural Network had difficulty recognizing the “risk of shortage” patterns, potentially due to the cluster’s features or limited data, whereas XGBoost’s ensemble approach captured them more effectively.

Inventory Performance: In this cluster (which likely aggregates messages about overall inventory KPIs or status), XGBoost, Extra Trees, and Decision Tree again delivered excellent and identical results (each about 0.95 for both accuracy and F1). This three-way tie at high performance implies that even a simple Decision Tree can perform as well as more complex ensemble models for this type of message, possibly because the patterns in this cluster are easier to learn or more easily separable. SVM also performed strongly, with 0.91 accuracy and an F1 of 0.94, nearly matching the leaders. Interestingly, the Neural Network attained 0.91 accuracy (comparable to SVM), but its F1-score was much lower (0.70). This gap between accuracy and F1 suggests that the Neural Network predicted the majority class correctly most of the time, hence the high accuracy, but failed to identify the minority class instances within this cluster. In other words, it likely had lower recall or precision for the minority class, dragging down the F1-score despite high accuracy.

Item-Specific Issues: For messages focused on individual item problems, the SVM classifier performed best, achieving about 0.89 accuracy and 0.89 F1. XGBoost was the next closest (around 0.86 accuracy and 0.88 F1), showing comparable effectiveness. The Neural Network and Extra Trees classifiers yielded moderate performance in this cluster (roughly 0.82 accuracy and low-to-mid 0.80s F1). The Decision Tree lagged behind the others here, with the lowest scores (~0.78 for both metrics). The fact that SVM slightly outperformed the ensemble methods in this category might indicate that the decision boundary for item-specific issue messages is well-suited to SVM’s capabilities (perhaps the data is relatively high-dimensional or the class separation is suited to SVM’s kernel), whereas the randomness in tree-based methods did not confer much advantage for this particular cluster.

Data/Target Gaps: For messages concerning data or target gaps (issues where reference data are missing or targets are undefined, which prevents a proper assessment), XGBoost and Decision Tree achieved the top performance, each with 0.86 accuracy and 0.88 F1. It is noteworthy that a simple Decision Tree matched XGBoost here, implying that the pattern of these gap-related messages might be captured with straightforward rules. SVM and Extra Trees both followed with slightly lower results (around 0.82 accuracy, and F1 of 0.84 for SVM and 0.82 for Extra Trees). The Neural Network again had the lowest performance on this cluster (approximately 0.78 accuracy, 0.81 F1), though the gap between it and the others was not as large as in the risk of shortage cluster. This trend reinforces that the Neural Network, in this study, often did not outperform simpler models and, in some clusters, clearly underperformed.

Overall, the results in Table 2 show that performance depends strongly on the message cluster. Each algorithm had strengths in certain clusters and weaknesses in others, and no single classifier dominated across all categories of inventory messages. Tree-based ensemble methods (XGBoost and Extra Trees) excelled in several clusters (such as long-term inventory and excess inventory), whereas SVM was the top choice for item-specific issues. Even the single Decision Tree held its own in a few clusters (tying for best in long-term inventory, inventory performance, and data/target gaps), and XGBoost was unmatched for risk of shortage. The Neural Network, despite usually being a powerful classifier, did not generalize as well in this application, possibly because of limited data per cluster or the need for more tuning.

Across all six clusters, Friedman tests indicated statistically significant differences among classifiers (p ≤ 0.008). In clusters exhibiting two-decimal ties (long-term inventory, inventory performance, data/target gaps), the Nemenyi test did not detect differences among the co-best models, although each outperformed the weaker baseline. Table 3 presents one-sided Wilcoxon signed-rank tests that compare the unique best model with the runner-up in the clusters without ties (excess inventory, risk of shortage, item-specific issues). In excess inventory and risk of shortage, the raw p values equal 0.031 for both accuracy and F1, favoring Extra Trees and XGBoost, respectively. In item-specific issues, the SVM advantage over XGBoost is smaller, with ΔAccuracy 0.031 and ΔF1 0.011; the raw p values are 0.063 for accuracy and 0.313 for F1, neither of which is significant at the 0.05 level. After Holm adjustment across the six pairwise comparisons, all adjusted p values exceed 0.05, so the advantages in excess inventory and risk of shortage are significant only before correction. The report therefore includes both adjusted p values and effect magnitudes to convey practical relevance alongside statistical evidence.
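The interplay between five folds, the raw p values, and the Holm adjustment can be checked with a short standard-library sketch. It assumes that the tests are exact one-sided Wilcoxon signed-rank tests on five paired fold scores and that the reported raw values 0.031, 0.063, and 0.313 correspond to 1/32, 2/32, and 10/32; both points are assumptions, and the fold differences used below are made up.

```python
from itertools import product


def wilcoxon_one_sided_exact(diffs):
    """Exact one-sided p-value (H1: differences tend to be positive).

    Assumes no zero differences and no ties among the absolute differences.
    """
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    rank = {i: r for r, i in enumerate(order, start=1)}
    w_plus = sum(rank[i] for i, d in enumerate(diffs) if d > 0)
    # Under H0 all 2**n sign patterns are equally likely: enumerate them.
    as_extreme = sum(
        sum(r for r, s in zip(range(1, n + 1), signs) if s) >= w_plus
        for signs in product([0, 1], repeat=n)
    )
    return as_extreme / 2 ** n


def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for step, i in enumerate(order):
        running_max = max(running_max, (m - step) * p_values[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


# Best model ahead of the runner-up in all five folds (made-up differences).
p_min = wilcoxon_one_sided_exact([0.01, 0.02, 0.03, 0.04, 0.05])  # 1/32

# Six comparisons: two clusters at 1/32 for both metrics, then 2/32 and 10/32.
raw = [1 / 32, 1 / 32, 1 / 32, 1 / 32, 2 / 32, 10 / 32]
adjusted = holm_adjust(raw)
```

With five folds the smallest attainable one-sided p value is 1/32, about 0.031, so multiplying it by the number of comparisons necessarily pushes the Holm-adjusted value above 0.05.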

Table 3.

Pairwise one-sided Wilcoxon signed-rank test results comparing the best model with the runner-up in clusters with a unique best model.

Table 4 summarizes multiple-model comparisons using Friedman tests and Nemenyi post hoc analysis. The Friedman tests detect significant differences among classifiers for both metrics in every cluster, with p values at or below 0.008. The Nemenyi analysis uses five models and five folds, and the critical difference equals 2.728. The top-ranked model is consistently superior to weaker baselines such as Decision Tree or Neural Network, while differences relative to the runner-up are generally not significant in clusters with small performance gaps or two-decimal ties. Taken together, these findings support the conclusion that no single classifier dominates across all categories and that selecting the classifier on a per-cluster basis yields the highest fidelity of the generated headline messages.
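Per-cluster selection amounts to a lookup of the best-scoring model in each category. The sketch below uses the approximate F1 values quoted in the text for two clusters (several are reported only as "around" a value) and picks the top model for each; the dictionary layout and function name are assumptions.

```python
# Approximate F1 values as quoted in the cluster-by-cluster discussion.
F1_BY_CLUSTER = {
    "excess_inventory": {
        "Extra Trees": 0.99,
        "Neural Network": 0.91,
        "Decision Tree": 0.91,
        "XGBoost": 0.80,
        "SVM": 0.80,
    },
    "risk_of_shortage": {
        "XGBoost": 0.90,
        "SVM": 0.83,
        "Extra Trees": 0.79,
        "Decision Tree": 0.79,
        "Neural Network": 0.67,
    },
}


def select_models(scores):
    """Map each cluster to the model with the highest score."""
    return {cluster: max(models, key=models.get) for cluster, models in scores.items()}


selected = select_models(F1_BY_CLUSTER)
print(selected)
```

In clusters with tied scores, `max` simply returns the first model listed, so a tie-breaking rule (for example, preferring the simpler model) would have to be chosen separately.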

Table 4.

Friedman tests and Nemenyi post hoc summary (k = 5, N = 5, CD = 2.728).
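The critical difference in the caption follows from the standard Nemenyi formula, CD = q_α · sqrt(k(k + 1) / (6N)), where k is the number of models (not the number of clusters) and N the number of folds. The sketch below assumes α = 0.05, for which the tabulated critical value for five models is 2.728; the significance level is not stated in the text.

```python
from math import sqrt


def nemenyi_cd(q_alpha: float, k: int, n: int) -> float:
    """Critical difference in average ranks for k models compared on n folds."""
    return q_alpha * sqrt(k * (k + 1) / (6 * n))


# k = 5 models, N = 5 folds; q_alpha = 2.728 assumes alpha = 0.05.
cd = nemenyi_cd(q_alpha=2.728, k=5, n=5)
print(round(cd, 3))
```

With k = N = 5 the square-root term equals 1, so the critical difference coincides with the critical value itself: two models differ significantly only if their average ranks are more than 2.728 apart on a scale from 1 to 5.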

The system was deployed with a multinational manufacturer operating multiple plants. Weekly reports cover item-level KPIs and headline messages.

5. Conclusions

This study introduced and industrially validated an end-to-end framework that transforms heterogeneous inventory data into concise, actionable insights through (i) embedding-based message clustering, (ii) cluster-aware predictive classification, and (iii) template-driven natural language generation. By employing semantic sentence embeddings, we discovered six latent themes in historical inventory narratives without manual keyword engineering. Each message was subsequently labeled with its cluster membership, creating a rich training set that links textual insights to structured inventory indicators.

A comparative evaluation of five classifiers across the six clusters demonstrated that model performance is cluster-specific rather than globally uniform. Extra Trees achieved near-perfect accuracy in the excess inventory cluster, whereas XGBoost provided the most reliable predictions for risk of shortage and tied for best performance in long-term inventory and inventory performance. SVM excelled for item-specific issues, and both XGBoost and a single Decision Tree performed best for data/target gaps. Neural Network performance was competitive in some clusters but lagged in others, illustrating that deep models do not universally dominate tabular industrial data. These findings confirm that a cluster-dependent modelling strategy, deploying the classifier that best fits each insight category, can yield higher overall fidelity than a single, monolithic model.

The rule-based NLG component converted each model output into interpretable sentences whose placeholders are populated with real-time metrics. Expert review confirmed that the generated messages accurately reflected the underlying data and adopted a domain-appropriate tone, enhancing trust and facilitating rapid managerial uptake.

The entire system has been deployed at scale within a global manufacturing enterprise. Integrated with the firm’s live data pipeline, it continuously clusters new messages, selects the most suitable classifier for each cluster, and generates weekly insight reports. Operational users report improved visibility into stock anomalies such as emerging shortages, overstock trends, and data quality gaps, enabling proactive interventions that were previously manual and time-consuming.

Future work will quantify business impact, such as avoided stockouts and reduced working capital, evaluate human-in-the-loop review at scale, study concept drift and adaptive template revision, and assess a structured message-entry interface with predefined text modules to improve data quality at source.

Author Contributions

Conceptualization, B.J. and Y.C.; methodology, B.J.; software, Y.H., D.K., M.R. and J.P.; validation, Y.H., D.K., J.P. and Y.C.; data curation, Y.H.; writing—original draft preparation, B.J.; writing—review and editing, Y.C.; funding acquisition, B.J. and Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the 2025 Specialization Project of Pusan National University. This work was supported by the IITP (Institute of Information & Communications Technology Planning & Evaluation)-ICAN (ICT Challenge and Advanced Network of HRD) grant funded by the Korea government (Ministry of Science and ICT) (IITP-2024-00436954). This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. RS2023-00209978). This work was supported by the GRRC program of Gyeonggi province [GRRC KGU 2023-B01, Research on Intelligent Industrial Data Analytics].

Data Availability Statement

The datasets presented in this article are not publicly available because they are proprietary internal data and subject to corporate confidentiality restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kleindorfer, P.R.; Singhal, K.; Van Wassenhove, L.N. Sustainable operations management. Prod. Oper. Manag. 2005, 14, 482–492.

- Chan, S.W.; Tasmin, R.; Aziati, A.N.; Rasi, R.Z.; Ismail, F.B.; Yaw, L.P. Factors influencing the effectiveness of inventory management in manufacturing SMEs. In Proceedings of the IOP Conference Series: Materials Science and Engineering, International Research and Innovation Summit (IRIS2017), Melaka, Malaysia, 6–7 May 2017; IOP Publishing: Bristol, UK, 2017; Volume 226, p. 012024.

- Adeyemi, S.L.; Salami, A.O. Inventory management: A tool of optimizing resources in a manufacturing industry—A case study of Coca-Cola Bottling Company, Ilorin plant. J. Soc. Sci. 2010, 23, 135–142.

- Elsayed, K. Exploring the relationship between efficiency of inventory management and firm performance: An empirical research. Int. J. Serv. Oper. Manag. 2015, 21, 73–86.

- Chen, L.; Bhaumik, A.; Wang, X.; Wu, J. The effects of inventory management on business efficiency. Int. J. Multidiscip. Res. 2023, 5, 1–17.

- Mpwanya, M.F. Inventory Management as a Determinant for Improvement of Customer Service. Ph.D. Thesis, University of Pretoria, Pretoria, South Africa, 2007.

- Beheshti, H.M. A decision support system for improving performance of inventory management in a supply chain network. Int. J. Prod. Perform. Manag. 2010, 59, 452–467.

- Wisner, J.D.; Tan, K.C.; Leong, G.K. Principles of Supply Chain Management; South-Western Cengage Learning: Mason, OH, USA, 2008; pp. 95–110.

- Balkhi, B.; Alshahrani, A.; Khan, A. Just-in-time approach in healthcare inventory management: Does it really work? Saudi Pharm. J. 2022, 30, 1830–1835.

- Giannoccaro, I.; Pontrandolfo, P. Inventory management in supply chains: A reinforcement learning approach. Int. J. Prod. Econ. 2002, 78, 153–161.

- Ryciuk, U.; Zabrocka, A. Investigating challenges and responses in supply chain management amid unforeseen events. Eng. Manag. Prod. Serv. 2024, 16, 30–50.

- Singh, N.; Adhikari, D. AI in inventory management: Applications, challenges, and opportunities. Int. J. Res. Appl. Sci. Eng. Technol. 2023, 11, 2049–2053.

- Daniels-Koch, O.; Freedman, R. The expertise problem: Learning from specialized feedback. arXiv 2022, arXiv:2211.06519.

- Alur, R.; Laine, L.; Li, D.; Raghavan, M.; Shah, D.; Shung, D. Auditing for human expertise. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 10–16 December 2023; Volume 36, pp. 79439–79468.

- Madamidola, O.A.; Daramola, O.A.; Akintola, K.G.; Adeboje, O.T. A review of existing inventory management systems. Int. J. Res. Eng. Sci. 2024, 12, 40–50.

- Ghazi, M.; Salih, H.S. Implementing an automated inventory management system for small and medium-sized enterprises. Iraqi J. Comput. Sci. Math. 2023, 4, 21–30.

- Bartholomew, D. Building on Knowledge: Developing Expertise, Creativity and Intellectual Capital in the Construction Professions; Wiley-Blackwell: Oxford, UK, 2009.

- Gutierrez, J.C.; Polo Triana, S.I.; León Becerra, J.S. Benefits, challenges and limitations of inventory control using machine learning algorithms: Literature review. OPSEARCH 2024, 1–33.

- Gijsbrechts, J.; Boute, R.N.; Van Mieghem, J.A.; Zhang, D. Can deep reinforcement learning improve inventory management performance on dual sourcing, lost-sales and multi-echelon problems? Manuf. Serv. Oper. Manag. 2022.

- Kumar, P.; Bhonde, V.N.; Rajak, S.; Sundararaman, M. Machine learning applications in inventory management. In Industry 4.0 for Manufacturing Systems: Concepts, Technologies, and Applications; Springer: Singapore, 2025; pp. 137–160.

- Shu, L.; Luo, L.; Hoskere, J.; Zhu, Y.; Liu, Y.; Tong, S.; Chen, J.; Meng, L. ReWriteLM: An instruction-tuned large language model for text rewriting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 18970–18980.

- Kumar, S.V.; Saroo Raj, R.B.; Praveenchandar, J.; Vidhya, S.; Karthick, S.; Madhubala, R. Future prospects of large language models: Enabling natural language processing in educational robotics. Int. J. Interact. Mob. Technol. 2024, 18, 204–215.

- Peng, B.; Galley, M.; He, P.; Cheng, H.; Xie, Y.; Hu, Y.; Huang, Q.; Liden, L.; Yu, Z.; Chen, W.; et al. Check your facts and try again: Improving large language models with external knowledge and automated feedback. arXiv 2023, arXiv:2302.12813.

- Wang, Z.; Kodner, J.; Rambow, O. Exploring limitations of LLM capabilities with multi-problem evaluation. In Proceedings of the 6th Workshop on Insights from Negative Results in NLP, Punta Cana, Dominican Republic, 1–3 May 2025; pp. 121–140.

- Jiang, L.; Jiang, K.; Chu, X.; Gulati, S.; Garg, P. Hallucination detection in LLM-enriched product listings. In Proceedings of the 7th Workshop on e-Commerce and NLP @ LREC-COLING 2024, Turin, Italy, 21–22 May 2024; pp. 29–39.

- Purwasih, R.; Candana, D.M. Development of inventory management information system in a retail company. J. Sains Inform. Terap. 2024, 3, 133–137.

- Fika, M.; Hutagalung, R. Application of inventory information systems as a work practice management strategy in improving technology learning in education management. Edumaniora 2024, 3, 1–9.

- Pekarcíková, M.; Trebuna, P.; Kliment, M.; Trojan, J. Demand driven material requirements planning: Some methodical and practical comments. Manag. Prod. Eng. Rev. 2019, 10, 14–24.

- Kortabarria, A.; Apaolaza, U.; Lizarralde, A.; Amorrortu, I. Material management without forecasting: From MRP to demand driven MRP. J. Ind. Eng. Manag. 2018, 11, 632–650.

- Roden, S.; Nucciarelli, A.; Li, F.; Graham, G. Big data and the transformation of operations models: A framework and a new research agenda. Prod. Plan. Control 2017, 28, 929–944.

- Puduppully, R.; Dong, L.; Lapata, M. Data-to-text generation with content selection and planning. Proc. AAAI Conf. Artif. Intell. 2019, 33, 6908–6915.

- Chen, W.; Su, Y.; Yan, X.; Wang, W.Y. KGPT: Knowledge-grounded pre-training for data-to-text generation. arXiv 2020, arXiv:2010.02307.

- Ferreira, T.C.; van der Lee, C.; Van Miltenburg, E.; Krahmer, E. Neural data-to-text generation: A comparison between pipeline and end-to-end architectures. arXiv 2019, arXiv:1908.09022.

- Phillips, W.D.; Sankar, R. Improved transient weather reporting using people-centric sensing. In Proceedings of the IEEE 10th Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 11–14 January 2013; pp. 920–925.

- Lin, Y.; Ruan, T.; Liu, J.; Wang, H. A survey on neural data-to-text generation. IEEE Trans. Knowl. Data Eng. 2024, 36, 1431–1449.

- Osuji, C.C.; Ferreira, T.C.; Davis, B. A systematic review of data-to-text NLG. arXiv 2024, arXiv:2402.08496.

- Riza, L.S.; Putra, B.; Wihardi, Y.A.Y.A.; Paramita, B.E.T.A. Data-to-text for generating information of weather and air quality in the R programming language. J. Eng. Sci. Technol. 2019, 14, 498–508.

- Loizides, A. Development of a SaaS Inventory Management System. Master’s Thesis, Kemi-Tornio University of Applied Sciences, Tornio, Finland, 2013.

- Sanyal, C. Development of a Web-Based Inventory Management System for a Small Retail Business. Master’s Thesis, Regis University, Denver, CO, USA, 2005.

- Peng, J.; Wang, D.; Kreuzwieser, S.; Kimmig, A.; Tao, X.; Wang, L.; Ovtcharova, J. Product quality recognition and its industrial application based on lightweight machine learning. Eng. Optim. 2024, 56, 2242–2267.

- Chen, B.; Wan, J.; Celesti, A.; Li, D.; Abbas, H.; Zhang, Q. Edge computing in IoT-based manufacturing. IEEE Commun. Mag. 2018, 56, 103–109.

- Zhang, D.; Listiyani, D.; Singh, P.; Mohanty, M. Distilling wisdom: A review on optimizing learning from massive language models. IEEE Access 2025, 13, 56296–56325.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the EMNLP-IJCNLP 2019, Hong Kong, 3–7 November 2019; pp. 3982–3992.

- MacQueen, J.B. Some methods of classification and analysis of multivariate observations. In Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1965; pp. 281–297.

- Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Kaufman, L.; Rousseeuw, P.J. Finding Groups in Data: An Introduction to Cluster Analysis; Wiley: New York, NY, USA, 1990.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).