Self-Organized Structuring of Recurrent Neuronal Networks for Reliable Information Transmission

Abstract

Simple Summary


1. Introduction

2. Methods and Materials

2.1. Model Network

2.1.1. Neuron Model

2.1.2. Neural Adaptation

2.1.3. Synaptic Plasticity and Normalization

2.2. Training and Testing Paradigm

- (1) Warm-up: The network is simulated without input for 50 s to allow all dynamical variables to converge to an equilibrium distribution.

- (2) Training: Subsequently, five non-overlapping stimulation groups, each consisting of 40 excitatory neurons, are driven through strong connections (20 nS) from group-specific Poisson spike sources that fire at 50 Hz when active. Every 200 ms, another source is activated for 100 ms.

- (3) Relaxation: Afterwards, plasticity and inputs are turned off, and the homeostatic mechanisms are allowed to re-equilibrate for 50 s.

- (4) Testing: The network is presented with recall cues, each consisting of a single precisely timed input spike delivered to all neurons of one stimulation group through a strong (20 nS) connection. To give the network enough time to relax between cues, only one recall stimulus is presented every 500 ms, over a total of 100 s.

2.3. Evaluation Measures

2.3.1. Classification Accuracy

2.3.2. Analytical Approximation of the Decoding Accuracy Depending on Response Probabilities

2.4. Number of Long-Range Connections Needed to Decode Partly Tuned Networks

2.4.1. Mutual Information

2.4.2. Correlation-Dependent Densities

3. Results

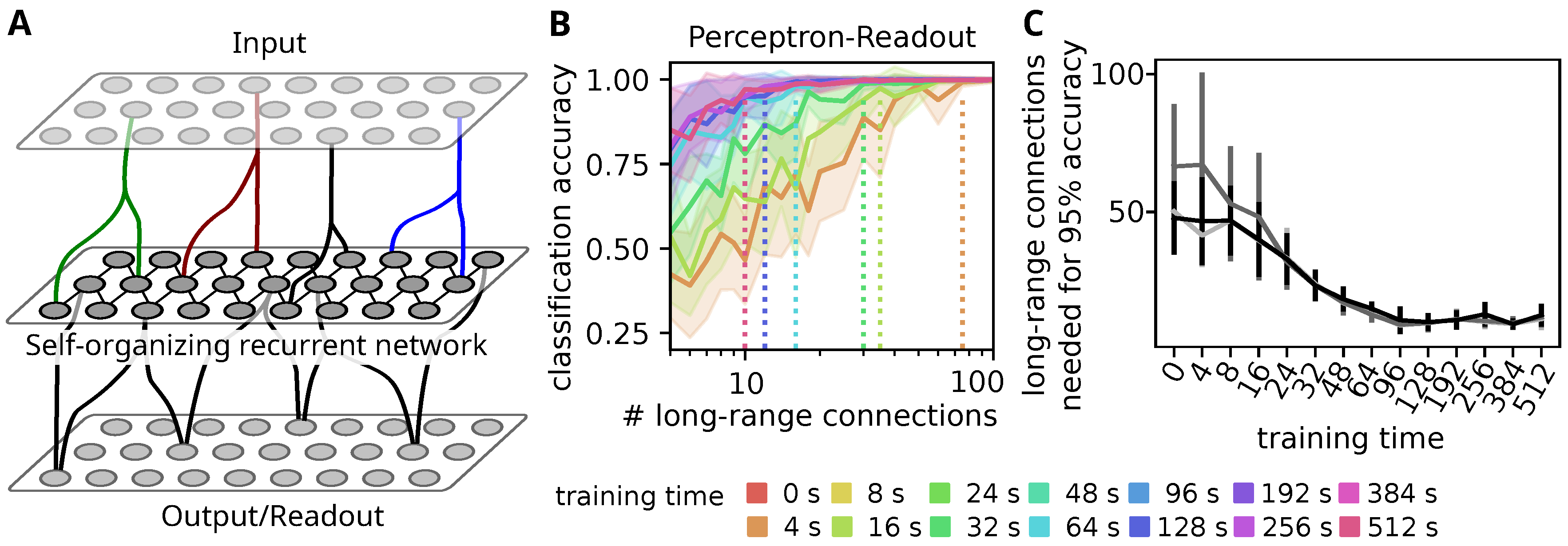

3.1. Self-Organization Improves Stimulus Decoding through Sparse Readouts

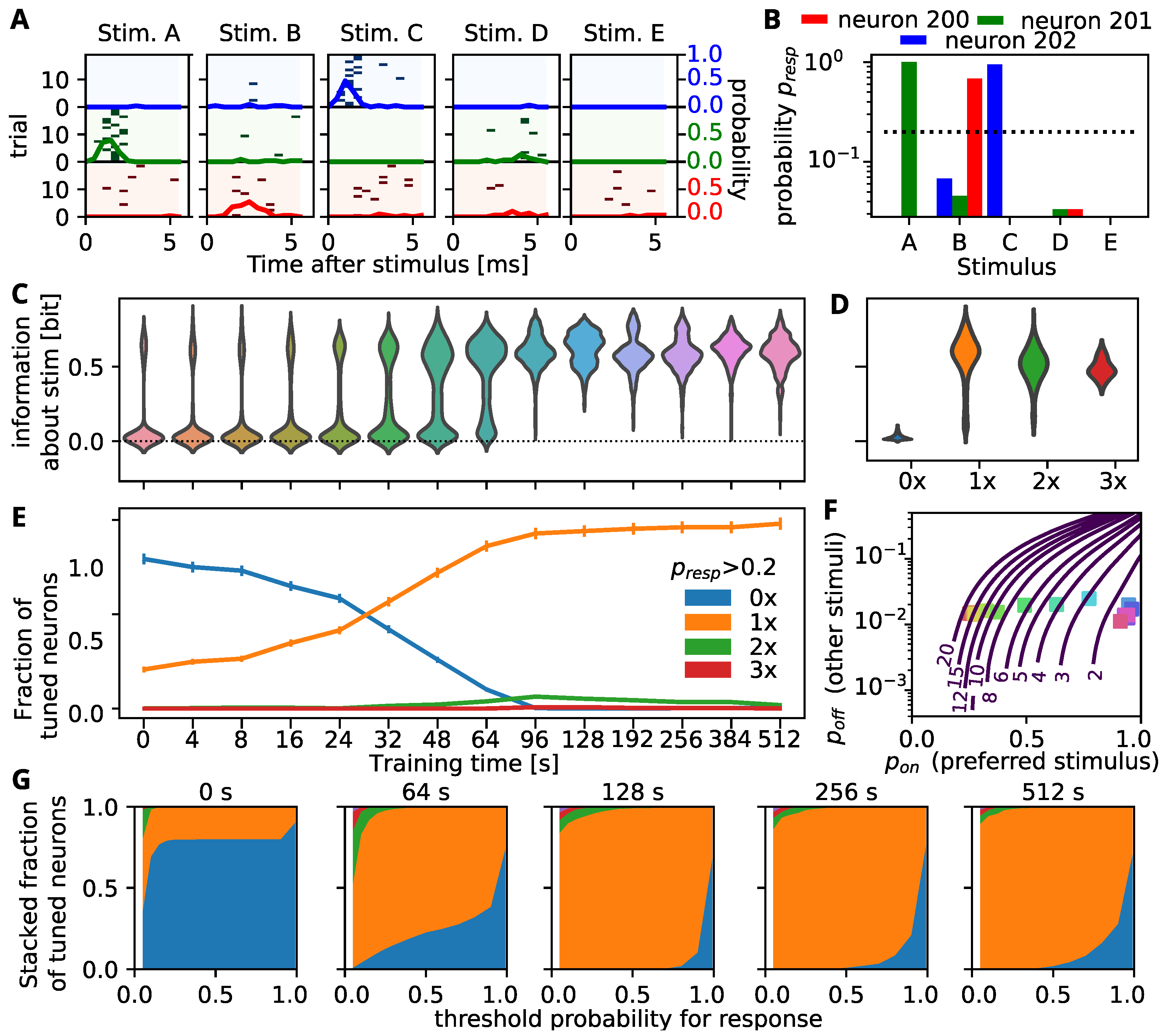

3.2. Self-Organization Distributes Information by Tuning All Neurons to a Single Stimulus

3.3. Decodability Improves through Increasing the Response to the Preferred Stimulus

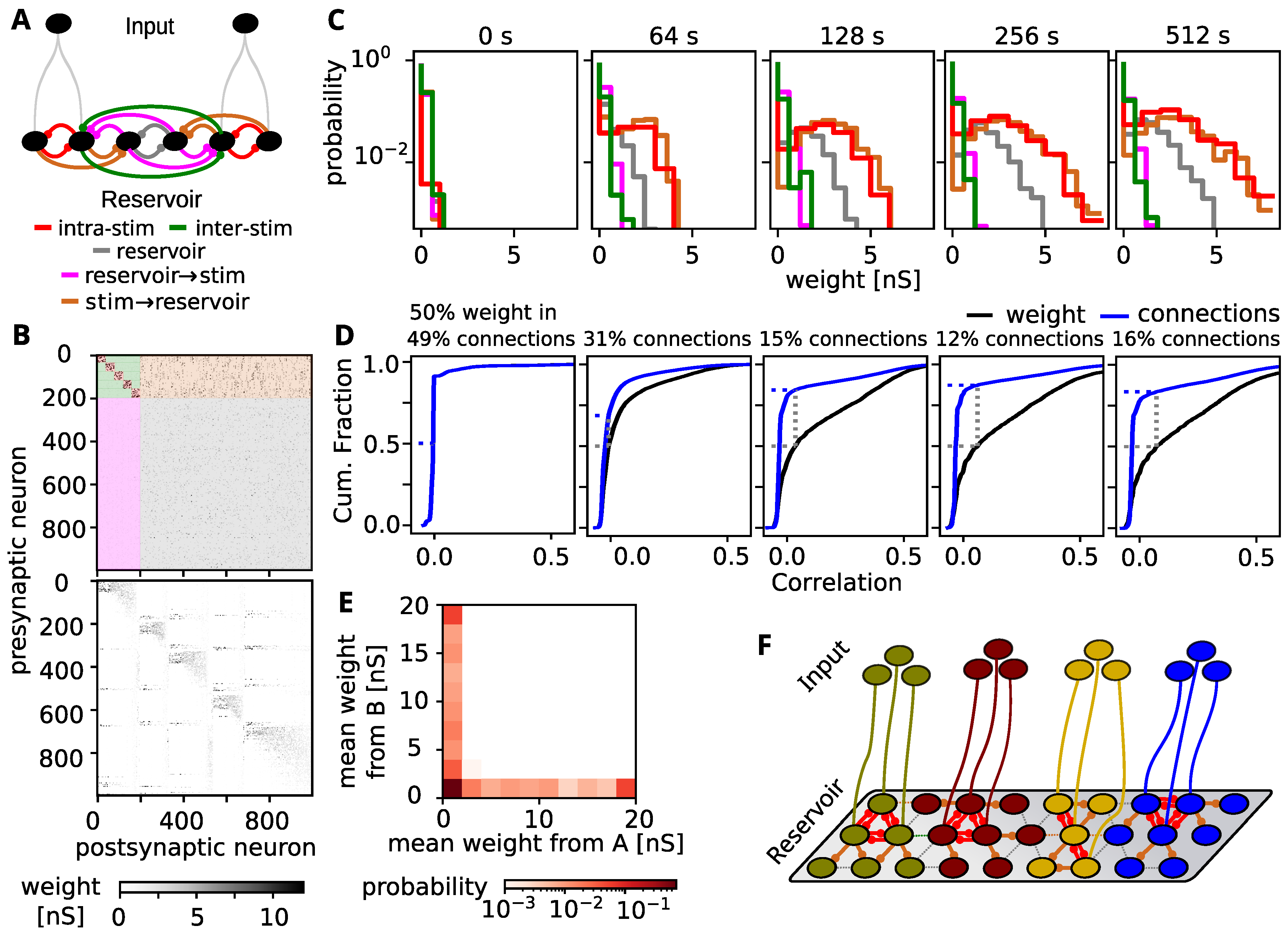

3.4. Tuning Spreads Due to Strong Feed-Forward Connections

3.5. Comparison to Experimental Findings

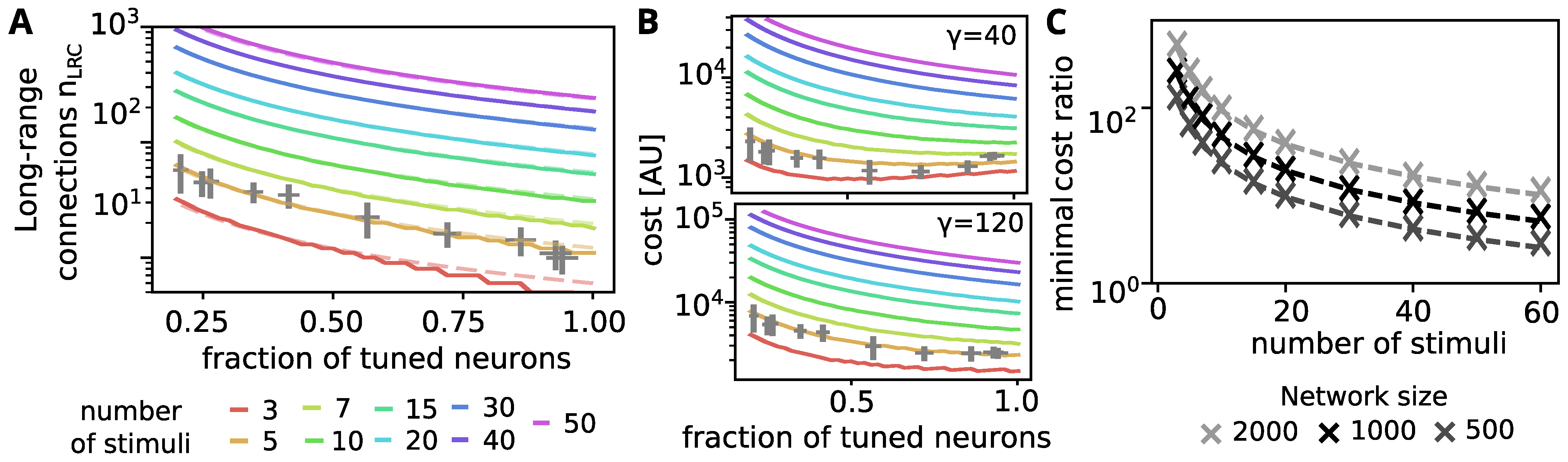

3.6. Is Spreading Out the Stimulus Representation Energy-Efficient?

4. Discussion

4.1. Model Predictions

4.2. Limitations and Possible Extensions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| STDP | Spike-timing-dependent plasticity |

Appendix A. Estimating the Number of Long-Range Connections Needed

References

- Fauth, M.; Tetzlaff, C. Opposing effects of neuronal activity on structural plasticity. Front. Neuroanat. 2016, 10, 75.

- Sperry, R.W. Chemoaffinity in the orderly growth of nerve fiber patterns and connections. Proc. Natl. Acad. Sci. USA 1963, 50, 703.

- De Wit, J.; Ghosh, A. Specification of synaptic connectivity by cell surface interactions. Nat. Rev. Neurosci. 2016, 17, 4.

- Seiradake, E.; Jones, E.Y.; Klein, R. Structural perspectives on axon guidance. Annu. Rev. Cell Dev. Biol. 2016, 32, 577–608.

- Stoeckli, E.T. Understanding axon guidance: Are we nearly there yet? Development 2018, 145, dev151415.

- Hassan, B.A.; Hiesinger, P.R. Beyond molecular codes: Simple rules to wire complex brains. Cell 2015, 163, 285–291.

- Fares, T.; Stepanyants, A. Cooperative synapse formation in the neocortex. Proc. Natl. Acad. Sci. USA 2009, 106, 16463–16468.

- Hill, S.L.; Wang, Y.; Riachi, I.; Schürmann, F.; Markram, H. Statistical connectivity provides a sufficient foundation for specific functional connectivity in neocortical neural microcircuits. Proc. Natl. Acad. Sci. USA 2012, 109, E2885–E2894.

- Reimann, M.W.; King, J.G.; Muller, E.B.; Ramaswamy, S.; Markram, H. An algorithm to predict the connectome of neural microcircuits. Front. Comput. Neurosci. 2015, 9, 28.

- Hebb, D.O. The Organization of Behavior: A Neuropsychological Theory; Wiley Book in Clinical Psychology; Wiley: New York, NY, USA, 1949.

- Bliss, T.V.; Lomo, T. Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. J. Physiol. 1973, 232, 331–356.

- Dudek, S.M.; Bear, M.F. Homosynaptic long-term depression in area CA1 of hippocampus and effects of N-methyl-D-aspartate receptor blockade. Proc. Natl. Acad. Sci. USA 1992, 89, 4363–4367.

- Markram, H.; Lübke, J.; Frotscher, M.; Sakmann, B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 1997, 275, 213–215.

- Bi, G.Q.; Poo, M.M. Synaptic modifications in cultured hippocampal neurons: Dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci. 1998, 18, 10464–10472.

- Song, S.; Miller, K.D.; Abbott, L.F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 2000, 3, 919–926.

- van Rossum, M.C.; Bi, G.Q.; Turrigiano, G.G. Stable Hebbian learning from spike timing-dependent plasticity. J. Neurosci. 2000, 20, 8812–8821.

- Markram, H.; Gerstner, W.; Sjöström, P.J. Spike-timing-dependent plasticity: A comprehensive overview. Front. Synaptic Neurosci. 2012, 4, 2.

- Turrigiano, G.G.; Leslie, K.R.; Desai, N.S.; Rutherford, L.C.; Nelson, S.B. Activity-dependent scaling of quantal amplitude in neocortical neurons. Nature 1998, 391, 892–896.

- Tetzlaff, C.; Kolodziejski, C.; Timme, M.; Wörgötter, F. Analysis of Synaptic Scaling in Combination with Hebbian Plasticity in Several Simple Networks. Front. Comput. Neurosci. 2012, 6.

- Desai, N.S.; Rutherford, L.C.; Turrigiano, G.G. Plasticity in the intrinsic excitability of cortical pyramidal neurons. Nat. Neurosci. 1999, 2, 515–520.

- Triesch, J. A Gradient Rule for the Plasticity of a Neuron’s Intrinsic Excitability. In Artificial Neural Networks: Biological Inspirations–ICANN 2005; Duch, W., Kacprzyk, J., Oja, E., Zadrożny, S., Eds.; Springer: Berlin, Germany, 2005; pp. 65–70.

- Zenke, F.; Agnes, E.J.; Gerstner, W. Diverse synaptic plasticity mechanisms orchestrated to form and retrieve memories in spiking neural networks. Nat. Commun. 2015, 6, 6922.

- Klos, C.; Miner, D.; Triesch, J. Bridging structure and function: A model of sequence learning and prediction in primary visual cortex. PLoS Comput. Biol. 2018, 14, 1–22.

- Hartmann, C.; Miner, D.C.; Triesch, J. Precise Synaptic Efficacy Alignment Suggests Potentiation Dominated Learning. Front. Neural Circuits 2015, 9, 90.

- Miner, D.; Triesch, J. Plasticity-Driven Self-Organization under Topological Constraints Accounts for Non-random Features of Cortical Synaptic Wiring. PLoS Comput. Biol. 2016, 12, e1004759.

- Zheng, P.; Dimitrakakis, C.; Triesch, J. Network self-organization explains the statistics and dynamics of synaptic connection strengths in cortex. PLoS Comput. Biol. 2013, 9, e1002848.

- Stimberg, M.; Brette, R.; Goodman, D.F. Brian 2, an intuitive and efficient neural simulator. eLife 2019, 8, e47314.

- Miner, D.; Tetzlaff, C. Hey, look over there: Distraction effects on rapid sequence recall. PLoS ONE 2020, 15, e0223743.

- Gerstner, W.; Kistler, W.M. Spiking Neuron Models: Single Neurons, Populations, Plasticity; Cambridge University Press: Cambridge, UK, 2002.

- Benda, J. Neural adaptation. Curr. Biol. 2021, 31, R110–R116.

- Gerstner, W.; Kempter, R.; van Hemmen, J.L.; Wagner, H. A neuronal learning rule for sub-millisecond temporal coding. Nature 1996, 383, 76–78.

- Kempter, R.; Gerstner, W.; van Hemmen, J.L. Hebbian learning and spiking neurons. Phys. Rev. E 1999, 59, 4498–4514.

- Elliott, T. An Analysis of Synaptic Normalization in a General Class of Hebbian Models. Neural Comput. 2003, 15, 937–963.

- Lazar, A.; Pipa, G.; Triesch, J. SORN: A self-organizing recurrent neural network. Front. Comput. Neurosci. 2009, 3, 23.

- Kriegeskorte, N.; Douglas, P.K. Interpreting encoding and decoding models. Curr. Opin. Neurobiol. 2019, 55, 167–179.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Emigh, T.H. On the Number of Observed Classes from a Multinomial Distribution. Biometrics 1983, 39, 485–491.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; Wiley Series in Telecommunications and Signal Processing; Wiley-Interscience: Hoboken, NJ, USA, 2006.

- MacKay, D.J.C. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

- Fauth, M.; Wörgötter, F.; Tetzlaff, C. Formation and Maintenance of Robust Long-Term Information Storage in the Presence of Synaptic Turnover. PLoS Comput. Biol. 2015, 11, e1004684.

- Ko, H.; Cossell, L.; Baragli, C.; Antolik, J.; Clopath, C.; Hofer, S.B.; Mrsic-Flogel, T.D. The emergence of functional microcircuits in visual cortex. Nature 2013, 496, 96.

- Ko, H.; Hofer, S.B.; Pichler, B.; Buchanan, K.A.; Sjöström, P.J.; Mrsic-Flogel, T.D. Functional specificity of local synaptic connections in neocortical networks. Nature 2011, 473, 87–91.

- Ko, H.; Mrsic-Flogel, T.D.; Hofer, S.B. Emergence of Feature-Specific Connectivity in Cortical Microcircuits in the Absence of Visual Experience. J. Neurosci. 2014, 34, 9812–9816.

- Cuntz, H.; Forstner, F.; Borst, A.; Häusser, M. One rule to grow them all: A general theory of neuronal branching and its practical application. PLoS Comput. Biol. 2010, 6.

- Betzel, R.F.; Bassett, D.S. Specificity and robustness of long-distance connections in weighted, interareal connectomes. Proc. Natl. Acad. Sci. USA 2018, 115, E4880–E4889.

- Attwell, D.; Laughlin, S.B. An Energy Budget for Signaling in the Grey Matter of the Brain. J. Cereb. Blood Flow Metab. 2001, 21, 1133–1145.

- Harris, J.J.; Jolivet, R.; Attwell, D. Synaptic energy use and supply. Neuron 2012, 75, 762–777.

- Harris, J.J.; Attwell, D. The energetics of CNS white matter. J. Neurosci. 2012, 32, 356–371.

- Keck, T.; Mrsic-Flogel, T.D.; Vaz Afonso, M.; Eysel, U.T.; Bonhoeffer, T.; Hubener, M. Massive restructuring of neuronal circuits during functional reorganization of adult visual cortex. Nat. Neurosci. 2008, 11, 1162–1167.

- Bazhenov, M.; Stopfer, M.; Sejnowski, T.J.; Laurent, G. Fast Odor Learning Improves Reliability of Odor Responses in the Locust Antennal Lobe. Neuron 2005, 46, 483–492.

- Rigotti, M.; Barak, O.; Warden, M.R.; Wang, X.J.; Daw, N.D.; Miller, E.K.; Fusi, S. The importance of mixed selectivity in complex cognitive tasks. Nature 2013, 497, 585–590.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
|  | 30 nS |  | −70 mV |
|  | 300 pF |  | 20 ms |
|  | 2 ms |  | 5 ms |
|  | 0 mV |  | −85 mV |
|  | 0.2 mV/s |  | 0.066 mV |
|  | 1 mV |  | 20 ms |
|  | 0.05 nS |  | 0.05 nS |
|  | 20 ms |  | 20 ms |
|  | 50 nS |  |  |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Miner, D.; Wörgötter, F.; Tetzlaff, C.; Fauth, M. Self-Organized Structuring of Recurrent Neuronal Networks for Reliable Information Transmission. Biology 2021, 10, 577. https://doi.org/10.3390/biology10070577
