Abstract

Software-defined networking (SDN) is a transformative approach for managing modern network architectures, particularly in Internet-of-Things (IoT) applications. However, ensuring optimal SDN performance and security often requires a robust sensitivity analysis (SA). To complement existing SA methods, this study proposes a new SA framework that integrates design of experiments (DOE) and machine-learning (ML) techniques. Although existing SA methods have been shown to be effective and scalable, most of them have yet to combine anomaly detection and classification (ADC) and data augmentation into a single, unified framework. To fill this gap, a targeted hybridization of well-established techniques is proposed to undertake a more robust SA of a typified SDN-reliant IoT network. The proposed hybrid framework combines Latin hypercube sampling (LHS)-based DOE and generative adversarial network (GAN)-driven data augmentation to improve SA and support ADC in SDN-reliant IoT networks; hence, it is called DOE-GAN-SA. In DOE-GAN-SA, LHS is used to ensure uniform parameter sampling, while the GAN generates synthetic data to augment data derived from typified real-world SDN-reliant IoT network scenarios. DOE-GAN-SA also employs a classification and regression tree (CART) to validate the GAN-generated synthetic dataset. Through the proposed framework, ADC is implemented, and an artificial neural network (ANN)-driven SA of an SDN-reliant IoT network is carried out. The performance of the SDN-reliant IoT network is analyzed under two conditions, a normal operating scenario and a distributed-denial-of-service (DDoS) flooding attack scenario, using throughput, jitter, and response time as performance metrics. To statistically validate the experimental findings, hypothesis tests are conducted to confirm the significance of all the inferences.
The results demonstrate that integrating LHS and GAN significantly enhances SA, enabling the identification of critical SDN parameters affecting the performance of the modeled SDN-reliant IoT network. ADC is also better supported, achieving higher DDoS flooding attack detection accuracy through the incorporation of synthetic network observations that emulate real-time traffic. Overall, this work highlights the potential of hybridizing LHS-based DOE, GAN-driven data augmentation, and ANN-assisted SA within a new hybrid framework for robust network behavioral analysis and characterization.

1. Introduction

Recent projections have indicated that the number of connected devices globally will reach several billion in the coming years, due to the proliferation of internet technologies, such as the Internet of Things (IoT) and its associated systems and devices [1,2]. A parallel trend is also noticeable in the growth of cloud technologies, including cloud-based software, surveillance solutions, real-time network automation, and distributed connections [3]. In relation to this, software-defined networking (SDN), which decouples the control and data planes in IoT networks, has also become a prevalent technology, highlighting the need for scalable and intelligent network management solutions [4]. Both past and ongoing research point to SDN as a foundational component of IoT architectures [5,6,7]. One early contribution in this area employed multilayer SDN controllers specifically designed for heterogeneous vehicular IoT traffic, demonstrating end-to-end quality of service (QoS) guarantees through network-calculus-based scheduling mechanisms [8].

SDN, which leverages the OpenFlow protocol, is a rapidly evolving technology that significantly enhances network management, administration, and monitoring processes [4]. By enabling programmable interaction with data plane elements and providing network administrators with a holistic view of the network through a controller, SDN positions the controller as the network’s intelligence, which is responsible for managing devices in the data plane via the application-programming interface (API) [4]. This capability has led to the adoption of SDN-based frameworks in advanced IoT network topologies, including fifth-generation (5G) and next-generation network architectures, such as sixth-generation (6G) [9,10,11].

While the programmability, flexibility, and centralized control of SDN are critical to its effective implementation, these same features can introduce significant security challenges [12]. IoT networks and other SDN-reliant systems are susceptible to a number of security risks, such as an increased likelihood of distributed-denial-of-service (DDoS) flooding attacks [13,14]. These inherent vulnerabilities highlight the pressing need for advanced and intelligent security frameworks capable of mitigating evolving threats, such as DDoS flooding attacks, while preserving the operational benefits of SDN-based architectures in network environments such as IoT systems.

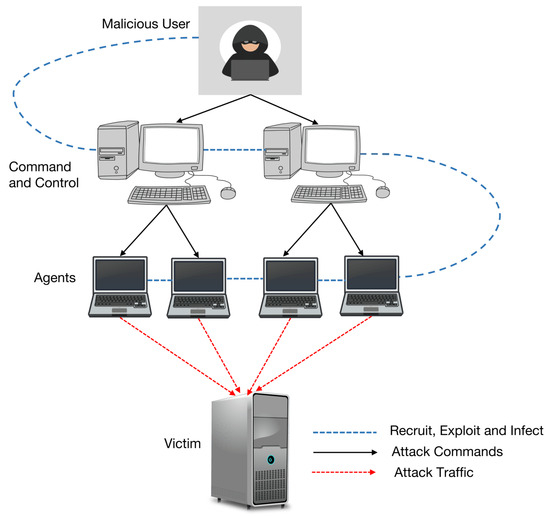

DDoS flooding attacks are carefully planned assaults that use a network of compromised devices, called a botnet, to overwhelm a system. The goal is to exhaust the network’s bandwidth or shut down specific servers and devices, making them unusable for legitimate users [15]. These attacks constitute a significant portion of the most dangerous malicious traffic on the internet [16]. Attackers typically operate these botnets covertly [17]; as a result, end users of the affected device nodes often remain unaware that their devices and internet protocol (IP) addresses are being used to perpetrate DDoS flooding attacks. IoT devices are especially vulnerable to coordinated DDoS flooding attacks because of their design and because the internet does not enforce strict traffic control on individual devices [16], a combination that makes it easier for attackers to target and overwhelm them. This vulnerability is further aggravated by the rapid growth in the number of deployed IoT devices, even though their security is improving over time [18]. As DDoS flooding attacks grow increasingly complex, innovative and proactive security techniques that address the particular vulnerabilities of IoT networks become imperative.

As IoT networks that utilize SDN architectures expand in complexity and scale, optimizing their performance and security presents significant challenges that demand robust and well-optimized network configurations [19]. Identifying critical network performance metrics and assessing their influence on overall network performance through sensitivity analysis (SA) have consistently been put forward as pivotal tools for enhancing the reliability of network configurations [20]. This is primarily because developing a reliable IoT network model requires a comprehensive understanding of how variations in model parameters influence the network’s behavior and the accuracy of its predictive outcomes. SA plays a critical role in this process by systematically analyzing the importance of input parameters, evaluating their contributions to the network model’s outputs, and quantifying the impacts of individual inputs on overall system performance [21].

SA techniques are commonly categorized based on their scope, as either local or global, and their framework, as either deterministic or statistical [22,23]. As discussed in [21,24,25], statistical frameworks for SA are generally derived from principles associated with the design of experiments (DOE). These frameworks also classify SA methods into local and global approaches, depending on the parameter space under consideration and the specific objectives to be achieved [26]. SA, both local (LSA) and global (GSA), is critical for understanding the influences of different parameters on the performance of IoT networks that are SDN reliant [27]. Therefore, advancing the application of SA in SDN-reliant IoT networks is vital for developing adaptive and data-driven models that enhance predictive accuracy and system resilience.

The application of artificial intelligence (AI) techniques, machine-learning (ML) techniques in particular, is growing significantly in numerous fields and disciplines, including SA within SDN [10,26]. In the context of LSA, ML-based approaches often rely on gradient-based methods to evaluate how specific parameters affect performance metrics [28]. Techniques such as gradient-boosting machines (GBMs) and artificial neural networks (ANNs) are widely used to approximate gradients efficiently in support of parameter tuning and optimization [28,29]. ANNs, in particular, offer enhanced capabilities to capture complex dependencies between parameters and performance for ML-assisted LSA in SDN environments [30]. These capabilities not only facilitate the identification of critical parameters but also provide actionable insights for improving SDN configurations for IoT networks.
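To make the gradient-based view of LSA concrete, the sketch below estimates normalized local sensitivities (elasticities) $S_i = (\partial y/\partial x_i)(x_i/y)$ at a nominal operating point. It is a minimal illustration under stated assumptions, not the ANN-assisted procedure used in this work: central finite differences stand in for the learned gradient, and the function `local_sensitivities`, its `rel_step` parameter, and the toy model are all hypothetical.

```python
from typing import Callable, List, Sequence


def local_sensitivities(
    f: Callable[[Sequence[float]], float],
    x0: Sequence[float],
    rel_step: float = 1e-4,
) -> List[float]:
    """Normalized local sensitivities (elasticities) of f at the point x0.

    S_i = (df/dx_i) * x0_i / f(x0), where df/dx_i is estimated with a
    central finite difference whose step scales with |x0_i|.
    """
    y0 = f(x0)
    if y0 == 0:
        raise ValueError("normalization requires f(x0) != 0")
    sens = []
    for i, xi in enumerate(x0):
        h = rel_step * max(abs(xi), 1.0)  # never a zero step, even if xi == 0
        up, down = list(x0), list(x0)
        up[i], down[i] = xi + h, xi - h  # perturb one input, hold the rest fixed
        grad_i = (f(up) - f(down)) / (2.0 * h)
        sens.append(grad_i * xi / y0)
    return sens


# Usage with a hypothetical toy model y = x1^2 * x2 at the nominal point (3, 2).
print(local_sensitivities(lambda x: x[0] ** 2 * x[1], [3.0, 2.0]))  # approximately [2.0, 1.0]
```

For this toy model, a 1% change in the first input moves the output by roughly 2%, twice as much as the same relative change in the second input; ranking inputs by such coefficients is the essence of LSA.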

For GSA, ML techniques, such as Gaussian processes [31], can play an important role in improving traditional methods, such as Monte Carlo simulations [32]. By training ML models on subsets of data, predictions can be extended across a larger parameter space, enabling a more comprehensive analysis [33]. Gaussian processes are also effective for modeling the distribution of network performance metrics under varying parametric conditions, providing a probabilistic framework that supports GSA [34]. Furthermore, Monte Carlo simulations, when combined with these ML models, provide a detailed evaluation of how parameter variations affect overall network performance [35]. ML techniques, such as regression methods for surrogate modeling, further streamline GSA by approximating the behaviors of complex network systems, reducing computational costs and improving scalability [26,35]. These ML-based approaches emphasize the potential of ML-assisted SA methodologies to optimize SDN configurations for IoT network applications and improve their performance and reliability in increasingly complex IoT network environments.

Practitioners often aim to enhance the effectiveness and efficiency of ML models by regulating and optimizing key processes in SDN environments [36]. This practice inherently aligns with the principles of DOE, a data-driven approach that plays a crucial role in SA [25,37,38]. DOE provides a structured experimental framework that is essential for addressing the complexities of both SA and ML challenges, particularly in the behavioral analysis of IoT networks [39]. For instance, QoS is a vital metric in IoT networks [40]. DOE can be used to systematically identify relationships between factors that influence QoS in IoT networks, thus improving network performance while minimizing the need for extensive experimental trials [25,41]. Furthermore, DOE can facilitate a targeted investigation of cause-and-effect relationships in scenarios involving the application of ANNs and evolutionary algorithms (EAs), both of which are commonly applied in IoT networks [42,43]. This structured approach reduces reliance on trial-and-error methods, saving time and computational resources. Hence, the integration of DOE with ML clearly offers great potential for enhancing model interpretability, reproducibility, and optimization efficiency within complex network environments, such as IoT networks.
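As a minimal illustration of how a DOE quantifies factor effects, the sketch below runs a two-level full factorial design and computes main and two-factor interaction effects. The `response` callable is a hypothetical stand-in for a measured QoS metric, and the factor names are placeholders; this is textbook factorial analysis, not a step of DOE-GAN-SA itself.

```python
from itertools import product
from typing import Callable, Dict, Tuple


def factorial_effects(
    response: Callable[..., float], factors: Tuple[str, ...]
) -> Dict[str, float]:
    """Main and two-factor interaction effects from a 2^k full factorial design.

    Each factor is run at coded levels -1 and +1; an effect is the mean response
    where its contrast column is +1 minus the mean response where it is -1.
    """
    runs = list(product((-1, 1), repeat=len(factors)))  # all 2^k level combinations
    ys = [response(*run) for run in runs]

    def contrast(sign_of_run) -> float:
        hi = [y for run, y in zip(runs, ys) if sign_of_run(run) == 1]
        lo = [y for run, y in zip(runs, ys) if sign_of_run(run) == -1]
        return sum(hi) / len(hi) - sum(lo) / len(lo)

    effects: Dict[str, float] = {}
    # Main effects: the contrast column is the factor's own coded level.
    for j, name in enumerate(factors):
        effects[name] = contrast(lambda run, j=j: run[j])
    # Two-factor interactions: the contrast column is the product of two levels.
    for j in range(len(factors)):
        for k in range(j + 1, len(factors)):
            effects[f"{factors[j]}*{factors[k]}"] = contrast(
                lambda run, j=j, k=k: run[j] * run[k]
            )
    return effects


# Usage with a hypothetical response surface in two coded factors.
print(factorial_effects(lambda a, b: 3 + 2 * a - b + 0.5 * a * b, ("a", "b")))
# {'a': 4.0, 'b': -2.0, 'a*b': 1.0}
```

With ±1 coding, each effect equals twice the corresponding regression coefficient, which is why the four runs above recover the structure of the response exactly.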

A popular example of DOE in practice is Latin hypercube sampling (LHS) [44,45]. LHS is a widely used technique for addressing complex and high-dimensional problems, including ML-assisted global optimization of complex models [46,47]. LHS works by stratifying the sample space to ensure uniform sampling of each variable across its range, which is particularly advantageous for simulation-based optimization tasks in computational intelligence [46]. This approach significantly improves the convergence rates of ML models, such as ANNs, making it a valuable tool in data-driven analytics [47]. By integrating DOE methodologies, such as LHS, with ML for SA, more robust insights into model behavior can be obtained, enabling the development of more reliable and resilient network systems. For this reason, extending the application of DOE within ML-assisted SA frameworks remains a promising direction for research aimed at optimizing the performance and reliability of network systems, such as SDN-reliant IoT networks.
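The stratification idea can be sketched in a few lines of NumPy. This is a minimal, non-optimized LHS (no maximin or correlation-reduction criterion), and the helper names and example parameter ranges are hypothetical; for practical use, SciPy provides a Latin hypercube sampler in `scipy.stats.qmc.LatinHypercube`.

```python
import numpy as np


def latin_hypercube(n: int, d: int, rng: np.random.Generator) -> np.ndarray:
    """Draw n points in [0, 1)^d by basic (non-optimized) Latin hypercube sampling.

    Each axis is split into n equal-width strata; every stratum of every axis
    receives exactly one point, placed at a uniformly random offset within it.
    """
    # Column j holds an independent random permutation of the stratum indices 0..n-1.
    strata = np.column_stack([rng.permutation(n) for _ in range(d)])
    offsets = rng.random((n, d))  # uniform jitter inside each stratum
    return (strata + offsets) / n


def scale(unit: np.ndarray, lower, upper) -> np.ndarray:
    """Map unit-cube samples linearly onto per-parameter [lower, upper] ranges."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return lower + unit * (upper - lower)


# Usage: 10 samples of three hypothetical network parameters.
rng = np.random.default_rng(0)
unit = latin_hypercube(n=10, d=3, rng=rng)
samples = scale(unit, lower=[10.0, 1.0, 0.0], upper=[100.0, 50.0, 5.0])
```

Projecting any column of `unit` onto its 10 strata finds exactly one point per stratum; this one-per-stratum property is what distinguishes LHS from plain random sampling, which can leave some strata empty and others crowded.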

Generative adversarial networks (GANs), a widely used class of ML frameworks, have also become quite popular in recent years due to their ability to generate synthetic data by learning the underlying distribution of real-world datasets [48]. Originally introduced in [49], GANs typically comprise two neural networks: a generator, which creates synthetic data, and a discriminator, which aims to differentiate between generated and real data [50]. These networks are trained simultaneously in a zero-sum game, where the generator continuously and iteratively refines its output to deceive the discriminator [51]. Due to this continuous iterative process, the generator can create extremely realistic synthetic data, making GANs an effective tool for a variety of computing and networking applications [52,53]. The growing roles of GANs in optimizing the performance and addressing the complexities of modern network systems [54] further emphasize their potential to support ML-driven SA in SDN-reliant IoT networks.
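The zero-sum game described above is usually written as the minimax value function of the original GAN formulation [49], reproduced here for reference. Here, $D(x)$ is the discriminator’s estimate of the probability that $x$ is real, $G(z)$ maps a noise vector $z$ drawn from a prior $p_z$ to a synthetic sample, and $p_{\text{data}}$ is the real-data distribution:

$$
\min_{G}\max_{D} V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
$$

For a fixed generator with output distribution $p_g$, the optimal discriminator is $D^{*}(x) = p_{\text{data}}(x) / \left(p_{\text{data}}(x) + p_g(x)\right)$, so training pushes the generator toward $p_g = p_{\text{data}}$, at which point $D^{*}(x) = 1/2$ everywhere and the discriminator can no longer tell real from synthetic samples.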

This work introduces a new data-driven framework that hybridizes LHS-based DOE, GAN-based synthetic data generation, and ANN-assisted SA to facilitate an enhanced behavioral study, including anomaly detection and classification (ADC), of SDN-reliant IoT networks. Termed DOE-GAN-SA, the proposed hybrid framework leverages simulated network scenarios, LHS-driven augmentation of network performance data, GAN-enabled synthetic data generation, and ANN-assisted SA to provide a comprehensive approach to behavioral analysis (including ADC) within SDN-reliant IoT networks. DOE-GAN-SA explores how LHS-based DOE, GAN-driven synthetic data generation, and ANN-based supervised learning work together, focusing on their complementary roles in supporting ADC and SA in SDN-reliant IoT network environments. The key contributions of this work include:

- Using LHS and GAN for data augmentation in SDN-reliant IoT networks;

- Showing the application of a newly augmented SDN-reliant IoT network dataset for detecting and classifying DDoS flooding attacks;

- Improving the mitigation of DDoS flooding attacks through improved detection accuracy and ANN-assisted SA;

- Demonstrating DOE-GAN-SA as a new hybrid ADC and SA framework for SDN-reliant IoT networks.

To the best of our knowledge, and based on the literature reviewed in Section 2, this study is the first to introduce a hybrid approach combining DOE and ML techniques for the specific purpose of conducting SA on network performance metrics within SDN-reliant IoT networks. Although the individual methodologies employed are well established within their respective domains, this work represents the first documented integration of these techniques for this targeted application. The remainder of this paper is structured as follows: Section 2 reviews the recent literature relevant to the research conducted to provide a foundation for the study. Section 3 outlines the SDN architecture and IoT network topology utilized to simulate various network scenarios to establish the technical context. The basic techniques guiding the formulation of the proposed DOE-GAN-SA framework are introduced in Section 4, and the proposed DOE-GAN-SA framework is detailed in Section 5, highlighting its components and functionalities. Section 6 describes the experimental setup, presents the results, and discusses the findings, offering insights into DOE-GAN-SA’s performance, scope, and recommended approach for its practical adoption. Finally, Section 7 provides the concluding remarks, summarizing the key contributions and potential directions for future work.

2. Related Work

SDN is a widely used approach for scaling heterogeneous IoT deployments, primarily due to its decoupling of control and data planes. However, much of the existing research in this area still relies heavily on heuristic-based parameter tuning, often overlooking the importance of feedback mechanisms that link anomaly detection with flow-rule adaptation [55]. Early contributions, such as [8], demonstrated the feasibility of SDN for vehicular IoT infrastructures via the multipurpose infrastructure for network applications (MINA)-SDN controller, yet their study did not address the critical constraints related to sensor energy consumption and embedded security mechanisms. Subsequent works, including the survey by [56], emphasized the necessity of slice isolation and controller scalability in supporting large-scale IoT deployments. Similarly, Ref. [5] introduced software-defined APIs designed for smart city infrastructures to enable the shared use of gateways and cloud services, accompanied by some quantitative analysis using a case study. More recently, Ref. [6] showcased a multilevel architecture that effectively reduced packet loss in smart home networks; however, several challenges persist. Further highlighting these challenges, a comprehensive meta-analysis, conducted by [7] and spanning over 160 studies, identified microservice-based controllers, flow table compression techniques, and energy-efficient routing as pivotal components in the secure deployment of SDN-reliant IoT systems for smart communities. Collectively, these studies highlight the importance of programmability and slice isolation, among other design strategies, in supporting the development of secure and scalable IoT infrastructures. Nonetheless, none of the reviewed approaches adequately addresses data-scarce SA or integrates ADC mechanisms directly within SDN architectures, an evident gap that the DOE-GAN-SA framework proposed in this work seeks to bridge.

While GANs have found applications across multiple disciplines [57], their adoption for ADC and SA, particularly in SDN-reliant IoT networks, is arguably still in its early stages. Since the introduction of GANs in [49], numerous studies have demonstrated their practicality for data augmentation. For instance, the work in [58] investigated the use of GANs to generate realistic network traffic data, enabling the simulation of network behaviors under various conditions. However, this study, like other similar recent works [59], did not specifically address ADC and SA, highlighting the untapped potential of GAN-based synthetic data generation to support robust ADC and SA within SDN-reliant IoT network environments. This perspective is supported by a recent study that demonstrated the effectiveness of GANs in augmenting the datasets needed to analyze complex high-dimensional systems and in improving classification performance [60]. Despite these promising developments, the current literature indicates that the full potential of GANs for ADC and SA within SDN-reliant IoT networks remains largely unexplored, offering significant opportunities for further research. Hence, this work explores the integration of GANs into ADC and SA workflows, with the aim of exploiting their synthetic data generation capabilities to gain deeper insight into parameter-driven behaviors within complex network environments.

SA in SDN-reliant networks, such as IoT networks, has traditionally relied on standard techniques, like variance-based methods and Monte Carlo simulations [24,61]. These conventional approaches, while effective for several applications, often require large datasets and substantial computational resources [62], making them less practical for low-latency, real-time, large-scale systems, such as IoT networks [63]. Variance-based SA methods, for example, are well suited to measuring the contribution of each input factor to the overall variance of the output, which helps to identify key influential parameters [30,64]. However, in scenarios involving a large number of parameters or complex interaction effects, they can become impractical due to increased computational demands and unstable estimates [65]. To address these challenges, researchers have explored alternative techniques, such as sample-based estimations, that reduce computational overhead without sacrificing analytical accuracy [66], although this often comes at the cost of introducing new design parameters [66].
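A variance-based SA of this kind can be illustrated with a Monte Carlo estimate of Sobol indices. The sketch assumes independent inputs uniform on $[0,1]^d$ and a vectorized model; it uses the Saltelli (2010) estimator for first-order indices and the Jansen estimator for total-effect indices, and the toy model is hypothetical. Its cost of $n(d+2)$ model evaluations also illustrates why such methods become expensive as the number of parameters grows.

```python
import numpy as np


def sobol_indices(f, d: int, n: int, rng: np.random.Generator):
    """Monte Carlo estimates of first-order (S1) and total-effect (ST) Sobol indices.

    Assumes d independent inputs uniform on [0, 1]. `f` must map an (n, d)
    array to a length-n array of outputs. Uses n * (d + 2) model evaluations.
    """
    A = rng.random((n, d))
    B = rng.random((n, d))
    fA, fB = f(A), f(B)
    var_y = np.var(np.concatenate([fA, fB]))  # total output variance
    s1, st = np.empty(d), np.empty(d)
    for i in range(d):
        ABi = A.copy()
        ABi[:, i] = B[:, i]  # A with its i-th column taken from B
        fABi = f(ABi)
        s1[i] = np.mean(fB * (fABi - fA)) / var_y      # Saltelli (2010) first-order
        st[i] = 0.5 * np.mean((fA - fABi) ** 2) / var_y  # Jansen total-effect
    return s1, st


# Usage: hypothetical additive model with a third, inert input.
s1, st = sobol_indices(lambda X: X[:, 0] + 2 * X[:, 1], d=3, n=20000,
                       rng=np.random.default_rng(42))
```

For the model $y = x_1 + 2x_2$, the analytical first-order indices are 0.2, 0.8, and 0; the estimates should land close to these values, and the inert third input receives exactly zero because swapping its column leaves the output unchanged.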

Statistical sampling methods, such as LHS, are well known for their efficiency in parameter selection and analysis [67]. Unlike random sampling, LHS ensures a more uniform coverage of the input space, improving robustness and minimizing computational costs [47]. Despite its advantages, LHS is not without limitations. One significant drawback is its inability to fully account for statistical relationships between input variables [47,67], which can compromise SA accuracy in systems such as SDN-reliant IoT networks, where inputs may be highly correlated [39]. Combining LHS with GANs offers a promising solution to this limitation. GANs are capable of generating synthetic datasets that tend to preserve some statistical properties of real-world network data while reasonably covering the input space of real-world network traffic [68]. By combining LHS-based data and GAN-generated data in a unified framework, as done in this work, their complementary advantages in data augmentation can be harnessed. This hybrid approach has the potential to improve SA in SDN-reliant IoT networks, enabling more effective analyses of such complex, high-dimensional systems while meeting the demands of real-time applications.

Although GANs have demonstrated significant potential in synthetic data generation across various applications [69], as established earlier, their application for SA in SDN-reliant networks, such as IoT networks, remains underexplored. Much of the existing research in this area has concentrated on enhancing GAN architectures for data generation or refining GAN-based synthetic data generation techniques [69,70]. Therefore, there is a gap in studies that harness the complementary strengths of GANs and LHS to perform SA in SDN-reliant IoT networks. Conventional SA frameworks, while effective in many scenarios, are often purpose-built and may not offer additional insights into other operations, such as data augmentation and ADC, when implemented. These limitations also emphasize the need for innovative approaches that can efficiently address the complexities of modern network systems by providing more robust insights while maintaining analytical precision and complementing existing SA methods. Hence, exploring a hybrid SA framework that combines DOE and ML techniques, as typified in this work, could reshape the methodological landscape for analyzing intelligent, large-scale network infrastructures in a resource-efficient and scalable manner.

Specifically, this work addresses the existing gaps discussed above by proposing the DOE-GAN-SA framework, which integrates LHS-based DOE with GAN-driven synthetic data generation to enable efficient and scalable ADC and ANN-assisted SA in SDN environments. GANs are leveraged to generate high-quality synthetic datasets that preserve some properties of real-world network data while enhancing data diversity. This capability is paired with the structured uniform sampling offered by LHS, which ensures comprehensive coverage of the input parameter space with reduced computational costs. By combining these methodologies, the DOE-GAN-SA framework facilitates a more robust and comprehensive approach to SA and ADC, enabling the precise identification of critical parameters that impact the SDN performance. As a result, the DOE-GAN-SA framework introduced in this study is expected to help create more efficient and reliable IoT network setups by enhancing ADC and improving SA for SDN-reliant IoT networks.

3. SDN Architecture and IoT Network Topology

This section introduces the SDN architecture and IoT network topology employed in this work. Additionally, it outlines the simulation of traffic scenarios conducted using the SDN-reliant IoT network model and methodologies established in the authors’ earlier works to make the study more self-contained [30,39,71]. The subsections cover the architecture and topology setup of the modeled SDN-reliant IoT network, the network traffic simulation approach, and the integration of insights from previous research to validate the SDN-reliant IoT network model.

3.1. SDN Architecture

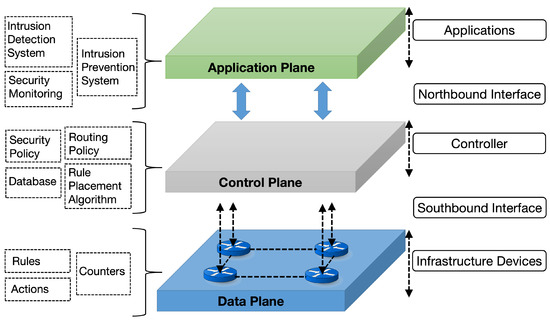

In contrast to conventional networking architectures, SDN architectures are built around the decoupling of the control and data planes, which enables more centralized and intelligent management of network devices [72]. This architecture is critical for achieving programmability and dynamic network configuration. As depicted in Figure 1, the SDN architecture typically consists of three distinct planes, the application, control, and data planes, each serving a specialized role. The application plane encompasses applications, such as intrusion detection systems, intrusion prevention systems, and security-monitoring tools, which provide critical inputs for decision making [73]. The control plane, dominated by the SDN controller, governs network operations through security policies, routing strategies, databases, and rule placement algorithms [10]. Finally, the data plane comprises infrastructure devices responsible for executing network operations based on predefined rules, actions, and counters [10].

Figure 1.

Typical SDN architecture.

In the SDN architecture typified in Figure 1, the controller operates as the centralized decision-making entity, bridging the application and data planes [4]. By leveraging inputs from applications in the application plane, the controller determines appropriate actions for the data plane [14]. Forwarding devices within the data plane, implemented in hardware or software, execute these instructions by handling packet routing and forwarding based on established forwarding policies [14]. This arrangement, illustrated in Figure 1, allows for efficient policy implementation and dynamic network adaptability. For this study, the described SDN architecture forms the foundational framework, enabling the exploration of SDN in enhancing IoT network security and operational efficiency.
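The division of labor described above, in which the controller installs rules and forwarding devices apply them, can be sketched as a priority-ordered match/action table with per-rule counters. This is a simplified, hypothetical model for illustration only: it is not the OpenFlow wire protocol or the Floodlight API, field names such as `ip_src` are placeholders, and exact-match comparison stands in for OpenFlow’s richer matching (e.g., masks and prefixes).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class FlowRule:
    priority: int
    match: Dict[str, object]  # header fields that must be equal; omitted fields are wildcards
    action: str               # e.g. "output:2" or "drop"
    packets: int = 0          # per-rule counter, incremented on every hit


@dataclass
class FlowTable:
    rules: List[FlowRule] = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        """Controller-side operation: add a rule, keeping highest priority first."""
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority, reverse=True)  # stable for ties

    def process(self, packet: Dict[str, object]) -> Optional[str]:
        """Data-plane lookup: apply the first matching rule, or return None on a miss."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                rule.packets += 1
                return rule.action
        # Table miss: handling is configurable; a common choice is to send the packet to the controller.
        return None


# Usage (hypothetical addresses): forward web traffic, but drop a suspected attacker.
table = FlowTable()
table.install(FlowRule(priority=10, match={"ip_dst": "10.0.0.1", "tcp_dst": 80}, action="output:1"))
table.install(FlowRule(priority=100, match={"ip_src": "10.0.0.6"}, action="drop"))
print(table.process({"ip_src": "10.0.0.6", "ip_dst": "10.0.0.1", "tcp_dst": 80}))  # drop
```

The per-rule counters are the kind of statistics a controller can poll to observe traffic, which is why counter-derived metrics are a natural input for monitoring and ADC in SDN.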

3.2. IoT Network Topology

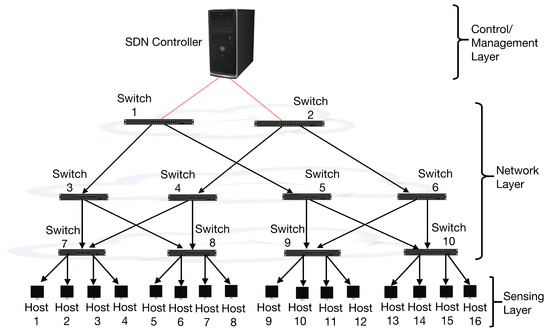

IoT networks are characterized by their diverse topologies (mostly inherited from traditional data and communication networks) that enable flexible arrangements and interconnections of devices [74]. Among the common topologies employed are mesh, star, tree, and point-to-point configurations, each offering specific advantages depending on the application context [75]. Within IoT systems, the data center (DC) serves as the central hub, which manages substantial traffic and ensures efficient data processing. For DC architectures, the Fat-Tree and BCube topologies are widely utilized [76]. The Fat-Tree topology shown in Figure 2, in particular, is favored in this study due to its scalability, consistent bandwidth distribution, and cost-effective design [77].

Figure 2.

Illustration of the modeled SDN-reliant IoT network architecture (a typification).

By utilizing switches of uniform capacity, Fat-Tree networks support predictable performance and enable line-speed transmission when packets are evenly distributed [78]. These characteristics provide an ideal foundation for IoT networks that require efficient and scalable data management. IoT networks are generally multilayered [79] and highly reliant on DCs that are also a part of the network infrastructure. This is especially true when taking into account the data-processing, storage, and management (including traffic management) levels in IoT networks [80]. The network topology used in this work, therefore, closely resembles a typical IoT network as previously established [71]. Similar IoT architectures, featuring tree topologies, hierarchical layouts, and multilayer network designs, have also been reported in previous studies [6,7,56,81]. Therefore, the custom SDN architecture in this work adopts an IoT network with a tree topology (see Figure 2), facilitating wide-area network adaptability and scalability.
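The scalability argument can be quantified with the standard k-ary Fat-Tree construction, in which every switch has k ports. The short function below counts its elements; it illustrates the general construction and does not describe the network modeled here. For comparison, a complete k = 4 Fat-Tree already needs 20 switches to serve 16 hosts, whereas the modeled network uses 10 switches in a tree arrangement.

```python
def fat_tree_size(k: int) -> dict:
    """Element counts of a k-ary Fat-Tree built from identical k-port switches.

    There are k pods, each with k/2 edge and k/2 aggregation switches, plus
    (k/2)^2 core switches; each edge switch serves k/2 hosts, giving k^3/4 hosts.
    """
    if k < 2 or k % 2:
        raise ValueError("k must be an even integer >= 2")
    half = k // 2
    edge = agg = k * half  # k pods x k/2 switches per layer
    core = half * half
    hosts = k * half * half  # k pods x k/2 edge switches x k/2 hosts each
    return {"hosts": hosts, "edge": edge, "aggregation": agg, "core": core,
            "switches": edge + agg + core}


print(fat_tree_size(4))
# {'hosts': 16, 'edge': 8, 'aggregation': 8, 'core': 4, 'switches': 20}
```

Because the host count grows as $k^3/4$ while every switch stays identical, the same commodity switch model scales the network; with 48-port switches, the construction supports 27,648 hosts.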

Using emulators or simulation techniques to analyze network behavior and characteristics before deployment is a common practice in network management [6,82,83]. Among these tools, Mininet is widely recognized in SDN research for its effectiveness [6,71,82,83], and numerous studies have demonstrated that networks modeled within the Mininet environment accurately represent real-world SDN scenarios [6,82,83]. The SDN-reliant IoT network model adopted in this work was implemented using the Mininet emulator on a system with 32 GB of RAM and an Intel Xeon E3-1220 CPU running Kali Linux. The Floodlight controller [71] was hosted in VirtualBox, running on Ubuntu 18.10 LTS, while the Mininet environment operated on Ubuntu 16.10 LTS. The experimental setup consisted of 10 OpenFlow switches and 16 hosts interconnected by 100 Mbps links. Though relatively small, this network configuration is representative of real-world scenarios [30,39,71], such as enterprise or campus networks. By bridging the simplicity of a tree topology with the advanced features of SDN, this study explores the sensitivity and vulnerabilities of IoT networks under DDoS flooding attacks, highlighting the adaptability of tree and Fat-Tree approaches in designing resilient network infrastructures. The same network topology was also adopted in the authors’ previous works [30,39,71].

3.3. Simulation of Traffic Scenarios in the SDN-Reliant IoT Network Model

To simulate non-malicious and malicious traffic in the SDN environment, the tools and were employed to generate legitimate network communication between nodes, while the Low-Orbit Ion Cannon (LOIC) was utilized to execute DDoS flooding attacks. The experiments involved six compromised hosts (hosts six to eight and ten to twelve; see Figure 2) that targeted the network server with sequential HTTP (hypertext transfer protocol), TCP (transmission control protocol), and UDP (user datagram protocol) DDoS flooding attacks, each lasting 15 min, for a total attack duration of 45 min per experimental round. During these periods, critical system properties (throughput, jitter, and response time) were recorded every second, yielding 900 observations per DDoS flooding attack (15 min × 60 s/min). These properties represent essential performance metrics: throughput quantifies the actual data transfer rate within the network, jitter measures the variation in packet transmission delays, and response time indicates the latency between task initiation and completion [39]. The data were collected under both normal traffic conditions (900 observations) and DDoS flooding attack conditions (2700 observations), providing comprehensive insights into network performance variations.
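The observation counts above follow directly from the sampling schedule. The sketch below rebuilds the per-second label sequence that a labeled ADC dataset would carry; the label strings, the `SCHEDULE` constant, and the helper `label_timeline` are hypothetical, and the 15 min normal baseline is assumed to be recorded once before the attacks, as described in Section 3.4.

```python
from collections import Counter
from typing import List, Tuple

SAMPLING_INTERVAL_S = 1  # metrics recorded once per second

# Hypothetical labels: (scenario, duration in minutes), in recording order.
SCHEDULE: List[Tuple[str, int]] = [
    ("normal", 15),
    ("ddos_http", 15),
    ("ddos_tcp", 15),
    ("ddos_udp", 15),
]


def label_timeline(schedule: List[Tuple[str, int]],
                   interval_s: int = SAMPLING_INTERVAL_S) -> List[str]:
    """Return one scenario label per recorded observation, in time order."""
    labels: List[str] = []
    for scenario, minutes in schedule:
        labels.extend([scenario] * (minutes * 60 // interval_s))
    return labels


counts = Counter(label_timeline(SCHEDULE))
attack_total = sum(v for k, v in counts.items() if k.startswith("ddos"))
print(counts["normal"], attack_total)  # 900 2700
```

Keeping an explicit label per observation makes the class balance visible at a glance (one normal class against three attack classes of equal size), which matters when the data are later used for ADC.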

3.4. Performance Metrics of the SDN-Reliant IoT Network Model

To generate the dataset for the performance metrics of the SDN-reliant IoT network model, a structured methodology was followed, starting with a confirmation of connectivity using the command. was then used to create TCP and UDP servers at different ports, allowing hosts to send traffic to measure baseline (normal) throughput, jitter, and response time over a 15 min interval. Subsequently, LOIC was employed from compromised hosts to launch successive HTTP, UDP, and TCP DDoS flooding attacks to emulate a typical DDoS flooding attack strategy (Figure 3). During each attack, the server’s performance metrics were recorded, and port numbers were dynamically adjusted to ensure no interference between scenarios. The data, initially captured in .txt format, were processed using Konstanz Information Miner (KNIME) to extract the target metrics and eliminate duplicates, ensuring a clean and structured dataset [39]. This dataset not only included normal and anomalous traffic patterns but also addressed limitations of existing SDN benchmarks by incorporating modern attack footprints and traffic variations reflective of the IoT era [30,39,71,84]. The resulting dataset formed the basis for the ML-based ADC and SA carried out in this study, supporting a more robust behavioral analysis of SDN-reliant IoT networks and the mitigation of modern DDoS flooding attacks using the proposed DOE-GAN-SA framework. These simulation scenarios were also adopted in our previous works [30,39,71]. The statistical distributions of the generated data for each SDN-reliant IoT network traffic scenario are presented in Section 6.
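The extraction and de-duplication step performed in KNIME can be mirrored in plain Python. The raw .txt format is not specified here, so the sketch assumes a hypothetical layout with one whitespace-separated `time throughput jitter response_time` record per line; the function name and the sample values are illustrative only and are not data from this study.

```python
from typing import List, Tuple

Row = Tuple[float, float, float]  # (throughput, jitter, response_time)


def extract_metrics(text: str) -> List[Row]:
    """Parse a hypothetical 'time throughput jitter response_time' log.

    Skips headers, blank lines, and malformed lines, and drops exact duplicate
    records (same timestamp and values) while preserving first-seen order.
    """
    seen = set()
    rows: List[Row] = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 4:
            continue  # blank or malformed line
        try:
            t, thr, jit, rt = (float(p) for p in parts)
        except ValueError:
            continue  # non-numeric line, e.g. a column header
        key = (t, thr, jit, rt)
        if key in seen:
            continue  # duplicate record, e.g. a repeated log line
        seen.add(key)
        rows.append((thr, jit, rt))
    return rows


# Usage with illustrative (not measured) values.
log = "time thr jit rt\n0 94.1 0.02 1.5\n0 94.1 0.02 1.5\n1 93.8 0.03 1.6\n"
print(extract_metrics(log))  # [(94.1, 0.02, 1.5), (93.8, 0.03, 1.6)]
```

Keying duplicates on the timestamp as well as the values avoids discarding genuinely repeated measurements taken at different seconds, which could otherwise distort the per-second observation counts.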

Figure 3.

Typification of the DDoS attack strategy.

4. Basic Techniques

To support the development of the proposed DOE-GAN-SA framework, several fundamental techniques have been explored and adopted in this work. Although these techniques are well established in the literature, they are described here, with a strong focus on how they have been applied in this work, so that the paper is self-contained. These techniques are briefly discussed as follows.

4.1. Design of Experiments (DOE)

One popular strategy for building a surrogate model is to sample the design space with a well-suited DOE method and then approximate the computationally costly model from the sampled data. More generally, any strategy that directs the allocation of samples in the design space to maximize the amount of information obtained is referred to as a DOE method [25,85]. To create the training data needed to construct the surrogate model, the computationally costly models are evaluated at these sampled points [25]. Because of the inherent tradeoff between the number of sample points and the amount of information available from them, an efficient DOE approach spreads the samples as far apart as possible to better capture global trends in the design space [25,85]. Available DOE approaches include classical factorial designs, Hammersley sampling, LHS, and quasi-Monte Carlo sampling [44,86].

Latin Hypercube Sampling (LHS)

For uniform sampling distributions, LHS is possibly the most popular DOE technique [44]. Numerous works in mathematics, engineering, and computational science have adopted LHS [25,46,87]. LHS has also been shown to offer superior qualities that support effective filtering and significant variance reduction in numerous applications compared with other DOE approaches [25,87,88]. To allocate n samples over d input variables, LHS divides the range of each variable into n equally probable bins, giving a total of $n^d$ cells in the design space; the n samples are then placed so that every bin of every variable contains exactly one sample. Numerous LHS variations have been proposed to minimize the possibility of non-uniform distributions [89], improve space filling [90], optimize projective properties [91], lessen spurious correlations [92], minimize least-square error, and maximize entropy [93]. As a statistical method, LHS samples input variables efficiently, ensuring that the entire range of each input parameter is represented. In the context of SDN-reliant IoT networks, LHS can be used to generate representative samples of network parameters, such as those indicative of bandwidth, latency, and packet loss, which are then used for SA, as in the proposed DOE-GAN-SA framework. By efficiently covering the input space, LHS reduces the number of simulations required to assess the effects of input parameters on the network’s performance [94]. Since LHS is a well-known technique [44,95,96], its full algorithmic details are not provided herein. However, the essential steps in the implementation of LHS, in the context of this work, are described as follows:

- Step 1: Suppose there are n input parameters ($x_1, x_2, \dots, x_n$), each with a specified range ($[a_i, b_i]$), where $i = 1, 2, \dots, n$. In this work, the input parameters are any of the SDN performance metrics being considered (throughput, jitter, and response time) and their ranges for a given network scenario. LHS then generates k sample points, with the range of each input parameter divided into k equally probable intervals. It should be noted that k is typically specified by the user, as discussed later;

- Step 2: For each input parameter ($x_i$), the range is divided into k equally spaced intervals. For example, if the range of $x_i$ is $[a_i, b_i]$, it is divided into the intervals $[a_i + (j-1)\Delta_i,\ a_i + j\Delta_i]$, where $\Delta_i = (b_i - a_i)/k$ and $j = 1, 2, \dots, k$. This step is essential because it ensures that the samples are distributed uniformly across their respective ranges;

- Step 3: A value is randomly selected from each interval for each input parameter ($x_i$). This ensures that all the parts of the parameter space are represented. The resulting sample points for each parameter are then arranged in a Latin hypercube structure, which allows for a more even and representative sampling than conventional random sampling, hence the name LHS;

- Step 4: The sampled values for each parameter are shuffled to form k distinct sample points, with each sample point having one value from each parameter’s range.
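The four steps above can be sketched in plain Python as follows. This is a minimal illustration rather than the implementation used in this work. The function name `latin_hypercube`, the fixed seed, k = 30, and the example ranges are assumptions; the ranges are taken loosely from the approximate normal-scenario values reported in Section 6.

```python
import random


def latin_hypercube(ranges, k, seed=0):
    """Return k Latin hypercube sample points over the given parameter ranges.

    ranges: one (a_i, b_i) tuple per input parameter x_i.
    Each returned point is a list holding one value per parameter.
    """
    rng = random.Random(seed)
    columns = []
    for a, b in ranges:
        delta = (b - a) / k  # Step 2: width of each of the k equal intervals
        # Step 3: one uniformly random value inside every interval j = 0..k-1
        values = [a + (j + rng.random()) * delta for j in range(k)]
        # Step 4: shuffle so that intervals are paired at random across parameters
        rng.shuffle(values)
        columns.append(values)
    # Transpose the per-parameter columns into k sample points
    return [list(point) for point in zip(*columns)]


# Hypothetical ranges for throughput, jitter, and response time (normal scenario)
points = latin_hypercube([(95.0, 96.0), (0.0, 0.3), (0.0, 0.5)], k=30)
```

Each parameter's k values fall in k distinct intervals, which is the Latin hypercube property. The pairing across parameters is random, so this basic version does not control correlations between columns; the LHS variants cited above address that.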

It should be noted that, whatever value of k the user specifies, the sample size affects the results of techniques such as LHS that seek to distribute samples uniformly across the range of feature values [96]. In this work, the sample space has been divided into bins (30 bins, to be specific), and new samples have been drawn at a predetermined bin fraction, as recommended by [95]. This procedure ensures that LHS effectively covers the space of the SDN performance metrics across the various scenarios within the SDN-reliant IoT network, thereby maximizing insight into parameter trends. The primary objective of this approach is to progressively expand the sample size while maintaining consistent marginal distributions of the SDN performance metrics [95].
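One common way to make LHS samples follow the observed distribution of a metric, rather than a uniform range, is to draw one value per equal-probability stratum of [0, 1) and map it through the empirical quantile (inverse cumulative distribution) function of the simulated data. The sketch below assumes this inverse-CDF approach with linear interpolation between order statistics. It is one plausible reading of the marginal-preserving goal stated above, not a reproduction of the procedure in [95]. The function names and the observation values are made up.

```python
import random


def empirical_quantile(sorted_data, u):
    """Linearly interpolated empirical quantile of sorted_data at u in [0, 1)."""
    pos = u * (len(sorted_data) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_data) - 1)
    return sorted_data[lo] + (pos - lo) * (sorted_data[hi] - sorted_data[lo])


def lhs_from_observations(observed, n_samples, seed=0):
    """Draw n_samples stratified values that follow the marginal of `observed`."""
    rng = random.Random(seed)
    data = sorted(observed)
    # One uniform draw inside each of the n_samples equal strata of [0, 1)
    u = [(j + rng.random()) / n_samples for j in range(n_samples)]
    rng.shuffle(u)  # random order, as in the shuffling step of LHS
    return [empirical_quantile(data, ui) for ui in u]


# Hypothetical stand-in for 900 simulated throughput observations
observed = [95.0 + 0.001 * i for i in range(900)]
synthetic = lhs_from_observations(observed, n_samples=900)
```

Applying the function separately to throughput, jitter, and response time preserves each marginal distribution but not the dependence between the metrics.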

4.2. Machine Learning (ML)

ML is a key component of contemporary IoT systems, providing data-driven methods for automated analysis and decision making [97,98]. ML gives systems the ability to learn and improve from experience automatically, without being explicitly programmed, and it is essential for analyzing data intelligently and robustly and for developing the corresponding real-world data-driven paradigms. ML techniques can be broadly categorized into four archetypes: supervised, unsupervised, semi-supervised, and reinforcement learning [99]. Among these, supervised learning techniques stand out for their ability to model complex relationships between input features and desired outcomes using labeled data. This makes them particularly well suited for the proposed DOE-GAN-SA framework, which requires precise predictions to support ADC and SA in the typified SDN-reliant IoT network considered in this work [99]. The main supervised learning techniques that underpin the proposed DOE-GAN-SA framework are neural networks (specifically, ANNs and GANs) and the classification and regression tree (CART). These techniques are discussed as follows.

4.2.1. Neural Networks

Neural networks (NNs) can be viewed as computational frameworks designed to simulate learning and data processing, inspired by the structure of the human brain, in which interconnected nodes or neurons work collaboratively to process inputs and generate outputs [100]. Commonly referred to as universal approximators, NNs play a crucial role in the characterization of complex systems, such as IoT networks, by enabling the analysis and optimization of system responses based on learned patterns and knowledge [101]. Their ability to model and process information mirrors biological neural systems, supporting advances in network analysis and system optimization. Two NN architectures are used in this work, namely, ANNs for structured data processing and GANs for generating and enhancing data representations, as discussed below.

Artificial Neural Networks (ANNs)

ANNs have been used to address several real-world problems, such as classification, prediction, optimization, and pattern recognition, including distinguishing traffic in intrusion detection and prevention systems (IDPSs) [102]. The efficiency and effectiveness of ANNs generally depend on data improvement, prior information, data representation, and feedback [103,104,105,106]. By adjusting the connection weights through training algorithms, like backpropagation, the performance of ANNs can be optimized to make them more suited for target applications [107]. Other algorithms exist for training neural networks, each differing in speed, precision, and memory requirements [108,109].

To develop a robust ANN model that fits the given data (see Section 3.3), the available learning strategies can be combined or modified. In this work, the Levenberg–Marquardt training algorithm, widely regarded as one of the most efficient for ANNs [110], has been employed. The Levenberg–Marquardt algorithm combines gradient descent and the Gauss–Newton method, addressing the limitations of each [110,111]. Its primary drawback is computational cost, which stems from inverting the (approximate) Hessian matrix and storing the Jacobian matrix, whose size depends on the number of patterns, outputs, and weights [111]. For large networks, this can become prohibitively expensive. However, for our dataset of a few thousand observations with three inputs and one output, requiring fewer than 250 training epochs on average, the Levenberg–Marquardt algorithm offered a balance of speed, stable convergence, and manageable memory consumption for all the ANN models built, making it well suited for this work (see Section 6.2). Since ANNs are a well-known technique [30,100], their full algorithmic details are not provided herein. However, the essential steps in the ANN implementation in the proposed DOE-GAN-SA framework are described as follows:

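- Step 1: Initialize the weights ($W^{[l]}$) and biases ($b^{[l]}$) randomly or using a predefined scheme for all the layers (l), where the activation or neuron output of layer l ($A^{[l]}$) is fed to the next layer;

- Step 2: For each layer (l), compute the weighted sum of the inputs as follows:
$$Z^{[l]} = W^{[l]} A^{[l-1]} + b^{[l]}$$
Apply an activation function ($g^{[l]}$) to get
$$A^{[l]} = g^{[l]}\left(Z^{[l]}\right)$$
where $A^{[0]}$ denotes the input features;

- Step 3: Calculate the loss function ($\mathcal{L}$) to measure the error between the predicted output ($\hat{Y}$) and the actual target (Y) as follows:
$$\mathcal{L} = \frac{1}{m}\sum_{i=1}^{m} \ell\left(\hat{Y}_i, Y_i\right)$$
where ℓ represents a chosen loss function, such as the mean-squared error (MSE) or cross-entropy loss, and m is the number of training observations;

- Step 4: Compute the gradients of the loss with respect to the parameters using the chain rule as follows:
$$\frac{\partial \mathcal{L}}{\partial W^{[l]}} = \delta^{[l]}\left(A^{[l-1]}\right)^{\top}, \qquad \frac{\partial \mathcal{L}}{\partial b^{[l]}} = \delta^{[l]}$$
where $\delta^{[l]}$ is the error term, computed for the hidden layers as follows:
$$\delta^{[l]} = \left(\left(W^{[l+1]}\right)^{\top}\delta^{[l+1]}\right) \odot g^{[l]\prime}\left(Z^{[l]}\right)$$

- Step 5: Update the weights and biases using the Levenberg–Marquardt algorithm as follows:
$$\mathbf{w}_{k+1} = \mathbf{w}_k - \left(\mathbf{J}^{\top}\mathbf{J} + \mu\mathbf{I}\right)^{-1}\mathbf{J}^{\top}\mathbf{e}$$
where $\mathbf{w}$ is the vector of all weights and biases, $\mathbf{J}$ is the Jacobian of the network errors ($\mathbf{e}$) with respect to $\mathbf{w}$, $\mathbf{I}$ is the identity matrix, and $\mu$ is the damping factor, which plays the role of a learning rate: a large $\mu$ makes the update behave like a small gradient-descent step, whereas a small $\mu$ makes it approach the Gauss–Newton method.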
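The Levenberg–Marquardt update replaces the weight vector $\mathbf{w}$ by $\mathbf{w} - (\mathbf{J}^{\top}\mathbf{J} + \mu\mathbf{I})^{-1}\mathbf{J}^{\top}\mathbf{e}$, where $\mathbf{J}$ is the Jacobian of the errors $\mathbf{e}$. The plain-Python sketch below applies this update to a deliberately tiny model: one tanh hidden neuron and a linear output, for three parameters. It uses a common $\mu$ schedule, dividing $\mu$ by 10 after a step that lowers the squared error and multiplying it by 10 after a step that does not. The model, data, starting point, and schedule are assumptions for illustration. The sketch does not reproduce the toolbox implementation used to train the ANNs in this work.

```python
import math


def model(w, x):
    """Tiny network: one tanh hidden neuron and a linear output, w0*tanh(w1*x) + w2."""
    return w[0] * math.tanh(w[1] * x) + w[2]


def jacobian_row(w, x):
    """Partial derivatives of the model output with respect to (w0, w1, w2)."""
    t = math.tanh(w[1] * x)
    return [t, w[0] * (1.0 - t * t) * x, 1.0]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def lm_fit(xs, ys, w, mu=0.01, epochs=100):
    """Levenberg–Marquardt: w <- w - (J^T J + mu I)^-1 J^T e, with adaptive mu."""
    def residuals(params):
        return [model(params, x) - y for x, y in zip(xs, ys)]

    def sse(e):
        return sum(v * v for v in e)

    e = residuals(w)
    n = len(w)
    for _ in range(epochs):
        jac = [jacobian_row(w, x) for x in xs]
        jtj = [[sum(r[i] * r[j] for r in jac) for j in range(n)] for i in range(n)]
        jte = [sum(r[i] * ei for r, ei in zip(jac, e)) for i in range(n)]
        while True:
            a = [[jtj[i][j] + (mu if i == j else 0.0) for j in range(n)] for i in range(n)]
            step = solve(a, jte)
            w_new = [wi - si for wi, si in zip(w, step)]
            e_new = residuals(w_new)
            if sse(e_new) < sse(e):
                w, e, mu = w_new, e_new, mu / 10  # accept: lean toward Gauss–Newton
                break
            mu *= 10  # reject: lean toward a small gradient-descent step
            if mu > 1e10:
                return w  # no further improvement is possible
    return w


xs = [i / 10 - 2 for i in range(41)]
ys = [model([2.0, 1.5, 0.5], x) for x in xs]  # noise-free synthetic targets
w_fit = lm_fit(xs, ys, [1.0, 1.0, 0.0])
```

Because a rejected step only increases $\mu$, the squared error never increases from one accepted step to the next, which is part of what gives the method its stable convergence.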

Generative Adversarial Networks (GANs)

GANs, introduced by [49], are a class of generative models that operate through an adversarial learning process. They consist of two NNs: a generator (G), which creates synthetic data intended to mimic real-world samples, and a discriminator (D), which evaluates the data and distinguishes between real and generated samples [49]. These two NNs are trained simultaneously in a dynamic competition, in which the generator improves its ability to produce realistic data, while the discriminator becomes more adept at identifying differences. This adversarial interplay drives both neural networks toward a state where the generator’s outputs are virtually indistinguishable from real data, making GANs particularly effective for modeling complex and high-dimensional data distributions [49,70]. GANs are particularly relevant to this work because of their proven ability to generate high-fidelity synthetic data that capture intricate patterns and structures in various data types, including images, text, and time series [49,70]. Their adaptability also makes them versatile tools for several applications requiring synthetic data [112,113].

Aside from GANs, several deep learning architectures have been explored for data generation, classification, and modeling. Convolutional neural networks (CNNs) excel at extracting visual features but are less effective for sequential network traffic [114]. Recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) and gated recurrent unit (GRU) networks, are better suited for time-series data [115]. However, GANs outperform them in generating high-quality synthetic data [116]. Due to their adversarial training process, GANs are highly effective in synthesizing realistic network traffic [49]. Unlike conventional methods for synthetic data generation, they also excel in scenarios involving challenging data landscapes, providing a robust mechanism for modeling and synthesizing realistic datasets [49,113]. These attributes justify their adoption for data augmentation in the proposed DOE-GAN-SA framework.

In the context of SDN-reliant IoT networks, GANs can be used to generate synthetic network traffic data that mimic the characteristics of real traffic. These data can then be used to explore how different input parameters affect the network’s performance without the need for extensive and computationally expensive real-world simulations. The generated data can serve as input for SA, providing a more scalable, cost-effective, and flexible way to explore the network parameter space [117,118]. To do this, the generator ($G$) takes a random noise vector ($z$), sampled from a noise distribution ($p_z(z)$), and generates synthetic data ($G(z)$) that resemble the real network traffic data. The discriminator ($D$) then takes either real data ($x$) from the distribution ($p_{\text{data}}(x)$) or synthetic data ($G(z)$) and outputs the probability that the input is real rather than synthetic. Since GANs are a well-known technique [49,70,119], their full algorithmic details are not provided herein. However, the essential steps in the GAN architecture featured in the proposed DOE-GAN-SA framework are briefly described as follows:

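- Step 1: Initialize the parameters $\theta_D$ and $\theta_G$ of $D$ and $G$, respectively;

- Step 2: For each training step, sample a minibatch of m real observations ($x^{(1)}, \dots, x^{(m)}$ from $p_{\text{data}}(x)$) and m noise vectors ($z^{(1)}, \dots, z^{(m)}$ from $p_z(z)$). Update $D$ by maximizing the likelihood that it correctly classifies real and synthetic data as follows:
$$\max_{\theta_D}\ \frac{1}{m}\sum_{i=1}^{m}\left[\log D\left(x^{(i)}\right) + \log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right)\right]$$

- Step 3: Update $G$ to minimize the ability of $D$ to distinguish real from synthetic data as follows:
$$\min_{\theta_G}\ \frac{1}{m}\sum_{i=1}^{m}\log\left(1 - D\left(G\left(z^{(i)}\right)\right)\right)$$

- Step 4: Alternate between training $D$ and $G$ until $G$ produces data indistinguishable from real data.

The min–max game between $D$ and $G$ can be summarized using the following objective function:
$$\min_{G}\max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]$$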
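The GAN value function $V(D, G) = \mathbb{E}[\log D(x)] + \mathbb{E}[\log(1 - D(G(z)))]$ can be estimated on a minibatch from the discriminator's outputs alone. The short sketch below computes this estimate and the corresponding discriminator and generator losses. The function names and the discriminator outputs are hypothetical, and the networks and optimizers themselves are omitted.

```python
import math


def gan_value(d_real, d_fake):
    """Minibatch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each strictly in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    """D maximizes V, which is the same as minimizing -V."""
    return -gan_value(d_real, d_fake)


def generator_loss(d_fake):
    """G minimizes the only term of V that depends on it."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


# When D cannot tell real from synthetic data it outputs 1/2, and V = -log 4.
v_equilibrium = gan_value([0.5] * 8, [0.5] * 8)
```

At the theoretical equilibrium analyzed in [49], $D$ outputs 1/2 for every input and $V = -\log 4$. The same work notes that, early in training, letting $G$ maximize $\log D(G(z))$ instead gives stronger gradients. The sketch keeps the min–max form.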

4.2.2. Classification and Regression Tree (CART)

CART is a vital supervised learning technique within the proposed DOE-GAN-SA framework, mainly because of its robust and interpretable decision-making capabilities. Compared with other classification methods, CART is renowned for its simplicity, its efficiency, and its ability to handle both the categorical and continuous data that are commonplace in complex SDN-reliant network scenarios [71]. Its relevance is further highlighted by its inclusion among the top 10 data-mining algorithms [120] and its demonstrated high accuracy in predictive tasks within SDN-reliant network scenarios [71]. CART constructs decision trees (DTs) by recursively partitioning the input feature space to maximize information gain at each split. The technique is non-parametric, meaning that it makes no assumptions about the data distribution, which enhances its applicability across diverse datasets. For a given set of input attributes, the CART algorithm selects the optimal split at each node by evaluating the impurity reduction using a metric such as Gini’s diversity index [121]. Since CART is a well-known technique [71,122], its full algorithmic details are not provided herein. However, the essential steps of the CART implementation in the proposed DOE-GAN-SA framework are described as follows:

- Step 1: Determine the impurity (I) of each node by applying Gini’s diversity index as follows:
$$I = 1 - \sum_{n=1}^{N} p_n^2$$
where N is the number of classes, and $p_n$ is the proportion of observations in class n;

- Step 2: Evaluate all the possible splits and select the one that maximizes the impurity gain as follows:
$$\Delta I = I(T) - \frac{|T_L|}{|T|}\,I(T_L) - \frac{|T_R|}{|T|}\,I(T_R)$$
where T is the set of observations at the current node, and $T_L$ and $T_R$ are the subsets resulting from the split;

- Step 3: Continue splitting recursively until a stopping criterion is met, such as reaching a maximum depth or a minimum number of samples per leaf;

- Step 4: Prune the tree (if enabled) to reduce overfitting and improve the generalization.
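Steps 1 and 2 can be illustrated for a single numeric feature with the plain-Python sketch below. The function names are hypothetical. The jitter-like values and scenario labels are made up, loosely mirroring the normal (about 0 to 0.3) and UDP-attack (around 10) ranges reported in Section 6. Full CART implementations also search over all features and apply the stopping and pruning rules of Steps 3 and 4.

```python
from collections import Counter


def gini(labels):
    """Gini's diversity index I = 1 - sum_n p_n^2 for a list of class labels."""
    total = len(labels)
    return 1.0 - sum((c / total) ** 2 for c in Counter(labels).values())


def impurity_gain(parent, left, right):
    """Impurity reduction obtained by splitting `parent` into `left` and `right`."""
    n = len(parent)
    return gini(parent) - len(left) / n * gini(left) - len(right) / n * gini(right)


def best_threshold(values, labels):
    """Exhaustively search split thresholds on one numeric feature (Step 2)."""
    pairs = sorted(zip(values, labels))
    best = (0.0, None)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        gain = impurity_gain(labels, left, right)
        if gain > best[0]:
            best = (gain, threshold)
    return best


# Made-up jitter values for a normal and a UDP-attack scenario
jitter = [0.1, 0.2, 0.15, 10.2, 9.8, 10.5]
scenario = ["normal", "normal", "normal", "udp", "udp", "udp"]
gain, threshold = best_threshold(jitter, scenario)
```

Here the best split separates the two scenarios perfectly, so the gain equals the parent's impurity of 0.5.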

It should be emphasized that ML techniques generally achieve superior performance after their hyperparameters have been properly optimized [71]. This is because the reliability of ML models is inherently tied to the quality of the data and methodologies used during training, and spurious correlations that are interpretable by human analysts after evaluation are often inevitable [71]. In practice, a tradeoff between high precision (positive predictive value (PPV)) and high recall (sensitivity or true positive rate (TPR)) is typically unavoidable, as achieving optimality in both metrics simultaneously is rarely feasible [123]. Hyperparameter tuning is one approach to optimize (i.e., maximize) either the precision or the recall on the validation set (the set of observations used for hyperparameter optimization) [123]. However, this process falls outside the scope of the current study, so this paper does not investigate hyperparameter optimization. Instead, all the ML techniques employed within the DOE-GAN-SA framework use the default hyperparameter settings of MATLAB (Matrix Laboratory) R2023b and the Scikit-learn library [124,125]. It is presumed that the default configurations in MATLAB and the Scikit-learn library are unlikely to be modified by the majority of network engineers, particularly those without substantial expertise in ML. The default values of a few critical hyperparameters are provided in Table 1.

Table 1.

Table of hyperparameters.

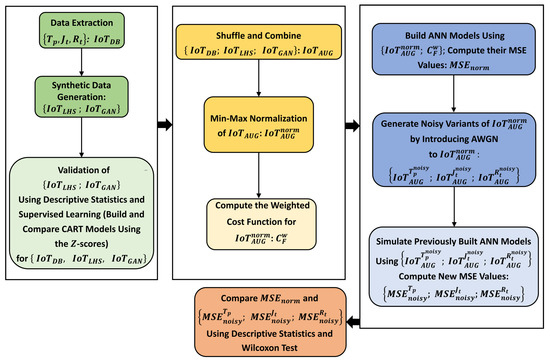

5. The Proposed DOE-GAN-SA Framework

The flow diagram for the proposed DOE-GAN-SA framework is shown in Figure 4, and the principal steps in the framework are discussed as follows:

Figure 4.

Flow diagram of the proposed DOE-GAN-SA framework.

- Step 1: Collect the simulated metric data describing the behavior and/or performance of the SDN-reliant IoT network (see Section 3). In this case, the dataset formed by collecting the throughput, jitter, and response time metrics for all the scenarios in the SDN-reliant IoT network can be described as follows:

- Step 2: Use the data collected in Step 1 to implement LHS-based DOE (see Section 4.1) and GAN-based synthetic data generation (see Section “Generative Adversarial Networks (GANs)”) concurrently, enlarging the parameter space by N samples for each network scenario to mimic on-the-fly throughput, jitter, and response time metrics for the SDN-reliant IoT network. The new datasets from the LHS-based DOE and GAN-based synthetic data generation are described by Equations (13) and (14), respectively. In this step, N is set at 900 to match the number of observations per scenario for all the SDN-reliant IoT network scenarios considered (see Section 3.3);

- Step 3: Validate the new LHS-generated and GAN-generated throughput, jitter, and response time metrics from Step 2 (Equations (13) and (14), respectively) for each of the considered SDN-reliant IoT network scenarios (i.e., normal, HTTP DDoS flooding attack, TCP DDoS flooding attack, and UDP DDoS flooding attack operating conditions) using descriptive statistics and by building and comparing classification models. These models use the standard scores (Z-scores) of the simulated, LHS-generated, and GAN-generated metric sets, respectively, and a suitable supervised learning technique (CART in this particular instance; see Section 4.2.2). The standardization procedure in this step is carried out as suggested in [39,71,126], and it can be described as follows:
$$z_i = \frac{x_i - \mu_x}{\sigma_x}$$
where $z_i$ is the Z-score of the ith observation ($x_i$) of any metric x (throughput, jitter, or response time) having a mean and a standard deviation of $\mu_x$ and $\sigma_x$, respectively;

- Step 4: Shuffle the validated LHS and GAN metrics from Step 3 and combine them with the simulated metrics from Step 1 (i.e., stack the simulated, LHS-generated, and GAN-generated values of throughput, of jitter, and of response time, respectively) for each of the SDN-reliant IoT network scenarios considered (i.e., normal, HTTP DDoS flooding attack, TCP DDoS flooding attack, and UDP DDoS flooding attack operating conditions) to obtain an augmented dataset for the network metrics, described as follows:

- Step 5: Normalize the combined metrics from Step 4 using min–max normalization. The min–max (linear transformation) normalization in this step is carried out as suggested in [30,126,127], and it can be described as follows:
$$x'_i = \frac{x_i - x_{\min}}{x_{\max} - x_{\min}}$$
where $x_i$ is the ith observation in the augmented SDN-reliant IoT network dataset, whose minimum and maximum values are $x_{\min}$ and $x_{\max}$, respectively, and $x'_i \in [0, 1]$ is the normalized value. In this step, min–max normalization is preferred to ensure that each metric (throughput, jitter, and response time) contributes a similar relative numerical weight, reducing data redundancy and ensuring that all the target input values share a comparable scale prior to the implementation of ML-driven SA. The resulting normalized augmented dataset can be described mathematically as follows:

- Step 6: Generate response variables for every observation in the normalized augmented dataset by deriving a weighted cost function, as recommended in [30]. Specifically, the weighted cost of the ith observation can be derived as follows: where w is set at 1, 2, 3, and 4 when the SDN-reliant IoT network is operating normally, under a TCP DDoS flooding attack, under a UDP DDoS flooding attack, and under an HTTP DDoS flooding attack, respectively, as recommended in [30];

- Step 7: Build ANN models (see Section “Artificial Neural Networks (ANNs)”) using the normalized augmented dataset from Step 5 as the explanatory variables and the weighted cost values from Step 6 as the corresponding response variables for all the IoT network scenarios. Then, compute the MSE values for the respective ANN models and store them;

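- Step 8: Generate noisy variants of the normalized augmented dataset by introducing additive white Gaussian noise (AWGN) separately to the throughput, jitter, and response time metrics, as carried out in [30]. The new noisy datasets can be described mathematically as follows:

- Step 9: Repeat Step 7 with each of the noisy datasets from Step 8 as the explanatory variables, and compute the MSE values for the respective ANN models;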

- Step 10: Compare the MSE values from Steps 7 and 9 using descriptive statistics and hypothesis testing (specifically, the Wilcoxon test [128]) to ascertain and statistically validate the sensitivities of throughput, jitter, and response time in the respective noisy datasets. The comparisons, from which the SA inferences are drawn, are described mathematically in Equation (23).
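Two of the data-preparation operations in the framework are simple enough to sketch directly: the min–max rescaling of Step 5 and the one-metric-at-a-time AWGN perturbation of Step 8. In the plain-Python sketch below, the noise level is expressed as a signal-to-noise ratio in decibels. That parameterization, the 20 dB value, the example rows, and the function names are hypothetical, since the noise settings of [30] are not restated here. The weighted cost function of Step 6 is not sketched because its exact form is defined in [30].

```python
import math
import random


def min_max(column):
    """Step 5: rescale one metric column linearly to [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]  # constant column: nothing to rescale
    return [(x - lo) / (hi - lo) for x in column]


def add_awgn(column, snr_db, seed=0):
    """Step 8: add white Gaussian noise to one column at a target SNR in dB."""
    rng = random.Random(seed)
    signal_power = sum(x * x for x in column) / len(column)
    noise_std = math.sqrt(signal_power / 10 ** (snr_db / 10))
    return [x + rng.gauss(0.0, noise_std) for x in column]


def perturb_one_metric(rows, index, snr_db, seed=0):
    """Return a copy of `rows` in which only metric `index` carries noise."""
    noisy_column = add_awgn([r[index] for r in rows], snr_db, seed)
    out = []
    for r, v in zip(rows, noisy_column):
        new_row = list(r)
        new_row[index] = v
        out.append(new_row)
    return out


# Hypothetical normalized rows: [throughput, jitter, response time]
rows = [[0.1, 0.5, 0.9], [0.2, 0.4, 0.8], [0.3, 0.6, 0.7]]
noisy_jitter = perturb_one_metric(rows, index=1, snr_db=20.0)
```

Perturbing one column at a time while leaving the others untouched is what allows the resulting change in ANN error to be attributed to that metric.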

In Step 3, standardization (Z-score normalization) is preferred over min–max normalization because the classifiers used to solve classification problems are commonly assumed to operate on features whose distribution is normal or close to normal [129]. As discussed in Section 4.2.2, the classifier used to implement Step 3 is CART, owing to its popularity and demonstrated ability to outperform other popular classifiers in predicting SDN-reliant network scenarios [71,120]. To assess the predictive accuracy of the fitted CART models in Step 3 and mitigate the risk of overfitting, a cross-validation approach has been employed. This choice of validation method is informed by the dataset size of around 11,000 observations. In line with established practices for classification problems, fivefold nested cross-validation has been applied uniformly across all the classifiers and experiments [71,130]. This approach aligns with widely recognized methodologies for enhancing model generalization and reliability [71,130].
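The Z-score standardization of Step 3 and the fold logic of fivefold nested cross-validation can be sketched as follows. This illustrates the general technique under stated assumptions: the population standard deviation, shuffled rather than stratified folds, and hypothetical function names. It does not reproduce the MATLAB or Scikit-learn routines used in this work.

```python
import random
import statistics


def z_scores(column):
    """Step 3: standardize one metric column to zero mean and unit standard deviation."""
    mu = statistics.fmean(column)
    sigma = statistics.pstdev(column)  # population standard deviation (assumption)
    return [(x - mu) / sigma for x in column]


def k_fold_indices(n, k=5, seed=0):
    """Split range(n) into k shuffled folds and return (train, test) index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    return [
        (sorted(j for f in folds[:i] + folds[i + 1:] for j in f), sorted(folds[i]))
        for i in range(k)
    ]


def nested_splits(n, outer_k=5, inner_k=5, seed=0):
    """Yield (outer_train, outer_test, inner_folds) for nested cross-validation.

    The inner folds partition only the outer training set, so the outer test
    fold is never seen while a model is being selected or fitted.
    """
    for train, test in k_fold_indices(n, outer_k, seed):
        inner = [
            ([train[i] for i in tr], [train[i] for i in te])
            for tr, te in k_fold_indices(len(train), inner_k, seed + 1)
        ]
        yield train, test, inner
```

Because the inner folds never touch the outer test fold, the outer accuracy estimates are not biased by any choices made in the inner loop.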

In Steps 7 and 9, an ANN is preferred because of its robustness and popularity as a universal function approximator [131], and AWGN is used to alter the states of throughput, jitter, and response time separately in Step 8, as recommended in [30] for the ML-driven SA of SDN parameters. The number of processing elements in each layer, as well as the number of layers, significantly affects the training of the ANN models built in Step 7: too few processing elements may hinder learning, while too many can result in overfitting to the training dataset [105,132]. For this study, the dataset was partitioned in accordance with methodologies suggested in prior research [30,133]. Specifically, 7560 data samples, representing 70% of the total dataset, were allocated for training. The remaining data were divided equally, with 1620 data samples (15%) designated for validation and another 1620 data samples (15%) reserved for testing. This partitioning strategy ensures a balanced approach to model development and evaluation.
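The partition sizes are consistent with the other counts in this paper. Nine hundred observations per scenario for four scenarios gives 3600 simulated observations. Adding the same number of LHS-generated and GAN-generated observations gives 10,800 in total, of which 70% is 7560 and 15% is 1620. The sketch below reproduces these sizes with a random shuffle and a hypothetical helper. The actual division routine used in the experiments may assign observations differently.

```python
import random


def train_val_test_split(n, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle range(n) and split it into train, validation, and test index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train = round(n * train_frac)
    n_val = round(n * val_frac)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


# 900 observations x 4 scenarios x 3 sources (simulated, LHS, GAN)
n_total = 900 * 4 * 3
train_idx, val_idx, test_idx = train_val_test_split(n_total)
```

Rounding the fractional counts, rather than truncating them, avoids losing an observation to floating-point error in products such as 10,800 × 0.70.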

Unless otherwise noted, all the experiments carried out in this work to implement the DOE-GAN-SA framework were conducted on a workstation equipped with an Intel 6-core i7-8700 3.20 GHz CPU and 32.0 GB of RAM. All the independent experimental runs are repeated 50 times, a sample size large enough for a z-statistic to be used to determine the probability values for all the hypothesis tests performed [71,127]. For the generated synthetic datasets (LHS-based and GAN-based), the median of the 50 generated instances is employed to minimize the potential impact of extreme values and to account for the stochasticity of the LHS and GAN procedures [44,49].
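The two statistical operations described for the repeated runs can be sketched in plain Python. The first is an observation-by-observation median over repeated generation runs. The second is a two-sided Wilcoxon signed-rank test using the normal (z) approximation, which is reasonable for 50 paired values. Two readings here are interpretations: that "the median of the 50 instances" is taken elementwise, and that the MSE values from Steps 7 and 9 are paired by run. If the runs are independent, the Wilcoxon rank-sum variant would apply instead. The function names are hypothetical.

```python
import math
import statistics


def elementwise_median(runs):
    """Median across repeated runs, taken observation by observation."""
    return [statistics.median(values) for values in zip(*runs)]


def wilcoxon_signed_rank(a, b):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Zero differences are discarded and tied absolute differences share their
    average rank; no tie correction is applied to the variance.
    Returns (w_plus, z, p_value).
    """
    d = [x - y for x, y in zip(a, b) if x != y]
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p_value
```

With the normal approximation, the p-value comes directly from the z-statistic, matching the rationale given above for using 50 repetitions.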

6. Experimental Results and Discussion

Before the LHS-generated and GAN-generated datasets are employed for the ML-driven SA carried out in this work, they are first validated to ascertain how well they represent the modeled SDN-reliant IoT network with respect to the performance metrics and the network scenarios considered. The validation procedure and the subsequent ML-driven SA are detailed as follows, in line with the proposed DOE-GAN-SA framework (see Section 5).

6.1. Validation of Generated Synthetic Data

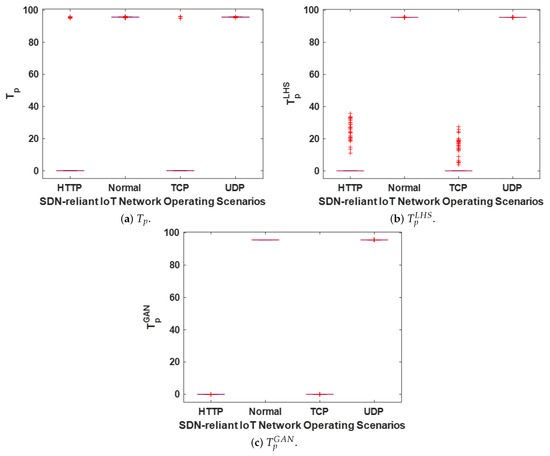

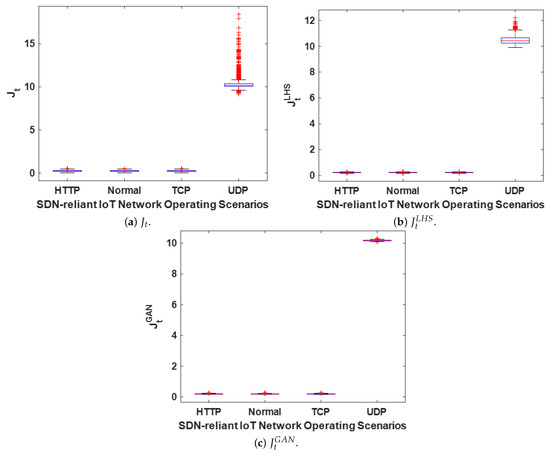

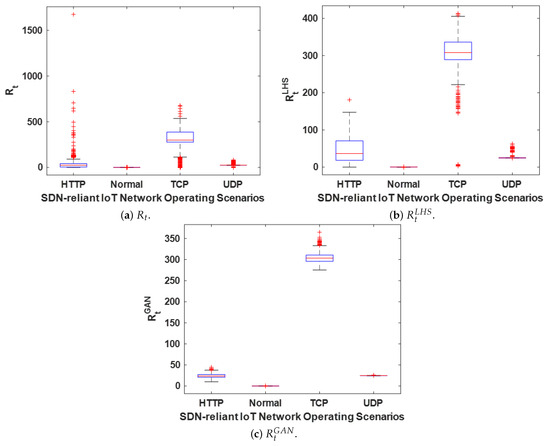

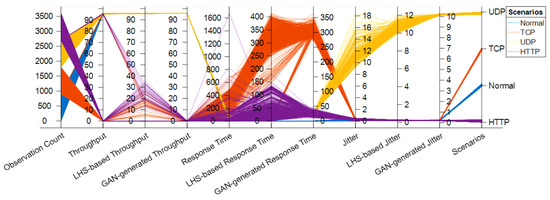

To validate the generated synthetic datasets (LHS-generated and GAN-generated) carefully, a systematic comparison with the originally simulated dataset is conducted using both descriptive statistics and supervised learning techniques. The statistical characteristics of the simulated, LHS-generated, and GAN-generated datasets are detailed in Table 2, Table 3, Table 4 and Table 5, and their distributions are visually represented in Figure 5, Figure 6, Figure 7 and Figure 8. A thorough analysis of these tables and figures reveals that both LHS and GAN successfully generate synthetic instances of throughput, jitter, and response time, which can be used to augment the original metrics for emulating real-time traffic scenarios within the modeled SDN-reliant IoT network. To ensure a robust comparison, the median values from 50 generated instances of the LHS-generated and GAN-generated datasets have been employed rather than individual generated instances, for the reasons stated earlier. With this methodological choice, both generated datasets exhibit a high degree of similarity to the simulated dataset in terms of statistical attributes and overall trends. Furthermore, across all the analyzed network scenarios, the descriptive statistics and distributions of the three datasets remain strongly aligned, as evidenced by Table 2, Table 3, Table 4 and Table 5 and Figure 5, Figure 6, Figure 7 and Figure 8.

Table 2.

Descriptive statistics of all the metrics over 900 observations (normal network scenario).

Table 3.

Descriptive statistics for all the metrics over 900 observations (TCP DDoS flooding attack network scenario).

Table 4.

Descriptive statistics for all the metrics over 900 observations (UDP DDoS flooding attack network scenario).

Table 5.

Descriptive statistics for all the metrics over 900 observations (HTTP DDoS flooding attack network scenario).

Figure 5.

Box plots showing the distributions of the original and synthetic throughput metrics for all the scenarios in the modeled SDN-reliant IoT network.

Figure 6.

Box plots showing the distributions of the original and synthetic jitter metrics for all the scenarios in the modeled SDN-reliant IoT network.

Figure 7.

Box plots showing the distributions of the original and synthetic response time metrics for all the scenarios in the modeled SDN-reliant IoT network.

Figure 8.

Parallel coordinates of the simulated, LHS-based, and GAN-generated throughput, jitter, and response time metrics for all the network scenarios.

Based on the results presented in Table 2, Table 3, Table 4 and Table 5 and Figure 5, several conclusions can be drawn regarding the behavior of the simulated, LHS-generated, and GAN-generated throughput metrics: (1) A DDoS flooding attack on the SDN-reliant IoT network significantly affects all three throughput metrics. (2) When the SDN is subjected to a UDP DDoS flooding attack, no substantial change is observed in their distributions. (3) In contrast, exposure to TCP and HTTP DDoS flooding attacks results in noticeable shifts in their distributions, with the majority of the values clustered around 0. This differs from the typical range of approximately 95 to 96 observed under normal conditions or during UDP DDoS flooding attacks. From a practical standpoint, the throughput metrics (illustrated in Figure 5 and reported in Table 2, Table 3, Table 4 and Table 5) appear to be more vulnerable to TCP and HTTP DDoS flooding attacks. This susceptibility is attributed to the operational characteristics of these attacks, which saturate the target server with excessive connection requests or numerous browser-based HTTP requests. These actions deplete network resources and ultimately lead to denial-of-service conditions for legitimate traffic [39,134,135].

Based on the results presented in Table 2, Table 3, Table 4 and Table 5 and Figure 6, several conclusions can be drawn regarding the behavior of the simulated, LHS-generated, and GAN-generated jitter metrics: (1) A DDoS flooding attack on the SDN-reliant IoT network alters the jitter values. (2) The jitter distributions remain largely unchanged when the SDN is subjected to HTTP or TCP DDoS flooding attacks. (3) When the SDN is exposed to a UDP DDoS flooding attack, the jitter distributions exhibit significant variation, with most values clustering around 10. In contrast, under normal operating conditions or during TCP and HTTP DDoS flooding attacks, the values are typically distributed within the range from approximately 0 to 0.3. Jitter is highly influenced by the timing and sequence of packet arrivals: high values occur when packets arrive out of order or in bursts separated by irregular gaps. From a practical standpoint, Figure 6 and Table 2, Table 3, Table 4 and Table 5 indicate that the jitter metrics are particularly susceptible to UDP DDoS flooding attacks. This vulnerability arises from the operational nature of such attacks, which require no handshake and thus flood the target server with unsolicited UDP traffic without any initial consent from the server [39,136].

Based on the results presented in Table 2, Table 3, Table 4 and Table 5 and Figure 7, several conclusions can be drawn regarding the behavior of the simulated, LHS-generated, and GAN-generated response time metrics: (1) A DDoS flooding attack on the SDN-reliant IoT network has measurable impacts on response time. (2) Under TCP, UDP, and HTTP DDoS flooding attacks, the response time distributions exhibit significant variation. (3) The majority of the values fall within the ranges from approximately 0 to 500 for TCP DDoS flooding attacks, approximately 10 to 50 for UDP DDoS flooding attacks, and approximately 0 to 200 for HTTP DDoS flooding attacks. These distributions differ markedly from the typical range of approximately 0 to 0.5 observed under normal operating conditions. From a practical standpoint, Figure 7 and Table 2, Table 3, Table 4 and Table 5 indicate that the response time metrics are susceptible to all the investigated DDoS flooding attacks, owing to the inherent operational characteristics of these attacks, as previously discussed [39,134,135,136]. Consequently, response time is a critical network-monitoring metric that can be significantly degraded by DDoS flooding attacks. This is particularly relevant in applications that rely on acknowledgment-based communication before subsequent packets are transmitted. Under such conditions, the unified communication systems within SDN-reliant IoT networks may experience substantial disruption.

A closer examination of Table 2, Table 3, Table 4 and Table 5 shows that the mean and median values of the corresponding metrics in the simulated, LHS-generated, and GAN-generated datasets remain largely consistent (with minimal or acceptable deviations), except for some large deviations observed in the HTTP DDoS flooding attack scenario. These discrepancies can be attributed to the inherent distributional properties of throughput and response time under the HTTP DDoS flooding attack, where noticeable outliers are present (see Figure 5 and Figure 7). Similarly, Figure 5, Figure 6, Figure 7 and Figure 8 demonstrate that under normal network conditions, the distributions of the three datasets are in strong agreement, while in most DDoS flooding attack scenarios, their distributions exhibit reasonable conformity. Additionally, like the simulated dataset, both the LHS-generated and GAN-generated datasets effectively distinguish between normal and DDoS flooding attack scenarios, as observed in Figure 5, Figure 6, Figure 7 and Figure 8. This attribute is particularly advantageous for the implementation of ADC, which is further explored in Section 6.1.

Comparisons and Hypothesis Testing for the Validation of Generated Datasets

Where discrepancies are observed between the generated datasets ( and ) and , it is important to consider the collective behavior of and . Combined, these synthetic datasets are expected to capture the distributional patterns present in more effectively, demonstrating their complementary nature and making them particularly valuable for augmenting to improve its utility in the predictive modeling of diverse scenarios on SDN-reliant IoT networks. To verify this, supervised learning is used to compare how well the SDN-reliant IoT network scenarios can be classified using and using the combination of and . The supervised learning technique employed is CART, for the reasons stated in Section 4.2.2, which also describes the implementation framework and settings. To compare the two datasets for the predictive modeling of the SDN-reliant IoT network scenarios, with a strong focus on ADC, 50 CART models are built with each dataset (i.e., and the combination of and ), and the descriptive statistics of their predictive accuracy (PA) and F1-scores are compared.

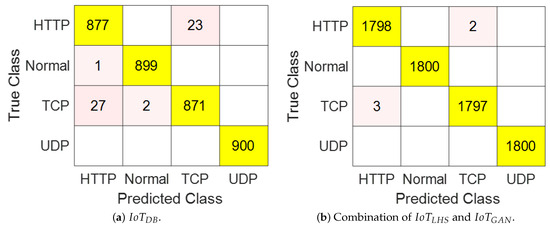

The PA of a classifier is generally defined as the ratio of correctly classified observations to the total number of observations classified. The PA values of the CART models constructed using and the combination of and are derived from their confusion matrices. Figure 9 shows the confusion matrices of typical CART models among the 50 models built using each of the two datasets (i.e., and the combination of and ). From a given confusion matrix, the PA of the CART classifier can be evaluated as follows [71,123]:

$$
\mathrm{PA} = \frac{TP + TN}{TP + TN + FP + FN}
$$

where TP is the number of true positives (observations assigned to a given class that actually belong to it), TN is the number of true negatives (observations not assigned to the class that do not belong to it), FP is the number of false positives (observations assigned to the class that do not belong to it), and FN is the number of false negatives (observations not assigned to the class that actually belong to it).

Figure 9.

Typical confusion matrices for the CART models built.
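As a minimal sketch of how PA can be read off a confusion matrix, the Python snippet below assumes that rows hold the true scenarios and columns the predicted ones. The four-class matrix (normal, TCP, UDP, and HTTP scenarios) is hypothetical and is not taken from Figure 9, and the helper name `one_vs_rest_counts` is illustrative; it shows how TP, TN, FP, and FN can be obtained when one scenario is treated as the positive class.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def predictive_accuracy(cm: Matrix) -> float:
    """PA = correctly classified observations / all classified observations.

    Assumes rows are true classes and columns are predicted classes, so the
    diagonal holds the correctly classified observations.
    """
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def one_vs_rest_counts(cm: Matrix, k: int) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) when class index k is treated as the positive class."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                        # truly k, predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, truly another class
    tn = total - tp - fn - fp                   # neither truly k nor predicted k
    return tp, tn, fp, fn


# Hypothetical 4-class matrix: normal, TCP, UDP, HTTP (illustrative numbers only).
cm = [
    [50, 0, 0, 0],
    [0, 48, 2, 0],
    [0, 1, 49, 0],
    [0, 0, 0, 50],
]
print(predictive_accuracy(cm))   # 197 / 200 = 0.985
tp, tn, fp, fn = one_vs_rest_counts(cm, 1)
print(tp, tn, fp, fn)            # 48 149 1 2
print((tp + tn) / (tp + tn + fp + fn))
```

For a multiclass matrix, the overall PA and the one-vs-rest value for a single class coincide only when every misclassification involves that class, as happens for the TCP class in this toy matrix.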

The descriptive statistics of PA over the 50 independent statistical runs, in each of which a CART model was constructed using and the combination of and , are reported in Table 6. Consistent with the typical confusion matrices shown in Figure 9, these results indicate that PA is consistently high for both and the combination of and , suggesting that both datasets are suitable for CART-based ADC for the modeled SDN-reliant IoT network, even in the worst-case runs. Furthermore, the PA achieved using the combination of and is slightly higher than that obtained using , hinting that the synthetic dataset may possess features that better distinguish between the scenarios in the modeled SDN-reliant IoT network, likely owing to its larger number of observations. Additionally, the very low standard deviations observed for both datasets indicate that this performance is consistent across runs, further supporting their suitability for CART-based ADC in the investigated SDN-reliant IoT network scenarios.

Table 6.

Descriptive statistics of PA over 50 independent runs.

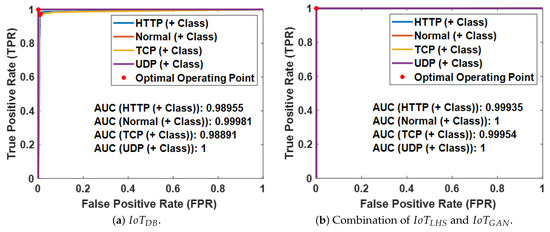
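Summary statistics of the kind discussed for Table 6 (mean, spread, and worst- and best-case values over the runs) can be computed with the Python standard library, as in the sketch below. The 50 PA values are random placeholders rather than the study's results, the exact set of statistics in Table 6 is not restated here, and the use of the sample (n − 1) standard deviation is an assumption.

```python
import random
import statistics
from typing import Dict, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Summary statistics of one metric collected over independent runs."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "std": statistics.stdev(values),  # sample standard deviation (n - 1)
        "min": min(values),               # worst case for PA or F1-score
        "max": max(values),               # best case for PA or F1-score
    }


# Placeholder PA values for 50 runs (random numbers, not results from the study).
rng = random.Random(42)
pa_runs = [0.98 + 0.01 * rng.random() for _ in range(50)]
for name, value in describe(pa_runs).items():
    print(f"{name}: {value:.4f}")
```

The same helper applies unchanged to F1-scores or to the MSE values of the ANN models, although for MSE the minimum is the best case rather than the worst.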

Since all the CART models provide confidence scores (i.e., posterior probabilities) for their predictions, their receiver-operating characteristic (ROC) curves and the areas under these curves (AUCs) can also be used to summarize the performance of the individual models [71,123]. Figure 10 shows the ROC curves of typical CART models among the 50 models built using each of the two datasets (i.e., and the combination of and ). To construct an ROC curve, the distinct confidence scores of the classification model (the CART model in this case) are used as prediction thresholds: at each threshold, the observations in the dataset are classified, and the resulting sensitivity (also known as recall or true positive rate (TPR)) is paired with the false positive rate (FPR). Plotting TPR against FPR over all thresholds yields the ROC curve.

Figure 10.

Typical ROC curves for the CART models built.
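The threshold sweep described above can be sketched in Python as follows for one scenario treated as the positive class. This one-vs-rest view is an assumption about how the multiclass ROC curves in Figure 10 are drawn, and the scores and labels are toy values. Each distinct score serves as a threshold, and the AUC is approximated with the trapezoidal rule.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def roc_curve(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """(FPR, TPR) points obtained by using every distinct score as a threshold.

    An observation is predicted positive when its score >= threshold.
    labels: 1 for the positive class (e.g., one attack scenario), 0 otherwise.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points: List[Point] = [(0.0, 0.0)]  # threshold above every score: nothing positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points: Sequence[Point]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]  # toy posterior probabilities
labels = [1, 1, 0, 1, 0, 0]
print(auc(roc_curve(scores, labels)))      # 8/9, about 0.889
```

As a sanity check, the trapezoidal AUC equals the fraction of positive/negative pairs in which the positive observation receives the higher score (8 of 9 pairs here).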

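For a typical ROC curve, TPR, FPR, the positive predictive value (PPV, also known as precision), and the resulting F1-score can be estimated as follows [71,123]:

$$
\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}, \qquad \mathrm{PPV} = \frac{TP}{TP + FP}
$$

$$
\mathrm{F1} = \frac{2 \cdot \mathrm{PPV} \cdot \mathrm{TPR}}{\mathrm{PPV} + \mathrm{TPR}}
$$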
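These formulas translate directly into code. The Python sketch below assumes that the counts come from a one-vs-rest reading of a confusion matrix, and the counts used (TP = 48, TN = 149, FP = 1, FN = 2) are illustrative only. Macro-averaging the per-class F1-scores is shown as one common way to obtain a single multiclass F1-score; it is an assumption, not necessarily the averaging behind Table 7.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple


def rates(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """TPR, FPR, PPV, and F1-score from one-vs-rest confusion counts."""
    tpr = tp / (tp + fn)              # sensitivity / recall
    fpr = fp / (fp + tn)              # false positive rate
    ppv = tp / (tp + fp)              # positive predictive value / precision
    f1 = 2 * ppv * tpr / (ppv + tpr)  # harmonic mean of PPV and TPR
    return {"TPR": tpr, "FPR": fpr, "PPV": ppv, "F1": f1}


def macro_f1(per_class: Sequence[Tuple[int, int, int, int]]) -> float:
    """Unweighted mean of per-class F1-scores (one assumed averaging scheme)."""
    return mean(rates(*counts)["F1"] for counts in per_class)


print(rates(48, 149, 1, 2))  # F1 = 96/99, about 0.9697
print(macro_f1([(50, 150, 0, 0), (48, 149, 1, 2), (49, 148, 2, 1), (50, 150, 0, 0)]))
```

Because F1 is the harmonic mean of PPV and TPR, it equals 2TP / (2TP + FP + FN), which makes it insensitive to the (usually large) TN count.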

The descriptive statistics of the F1-scores over the 50 independent runs, in each of which a CART model was constructed using and the combination of and , are reported in Table 7. Consistent with the typical ROC curves shown in Figure 10, these results indicate that the F1-scores are consistently high for both datasets, suggesting that both are suitable for CART-based ADC for the modeled SDN-reliant IoT network, even in the worst-case runs. As with PA, the F1-score achieved using the combination of and is slightly higher than that obtained using , again hinting that the synthetic dataset, with its larger number of observations, may better capture the features that distinguish between the scenarios in the modeled SDN-reliant IoT network. The very low standard deviations observed for both datasets likewise indicate consistent performance across runs, supporting their suitability for CART-based ADC in the investigated scenarios.

Table 7.

Descriptive statistics of F1 over 50 independent runs.

To statistically verify that the CART models built using the combination of and are slightly more robust for ADC in the modeled SDN-reliant IoT network than those built using , a Wilcoxon rank-sum test [128] is carried out. The PA values and F1-scores obtained with the two datasets (i.e., and the combination of and ) over the 50 independent statistical runs are used as the data samples. The null hypothesis is that the data samples of and the combination of and have equal medians, tested at the 5% significance level (i.e., the 95% confidence level), against the alternative that they do not. The null hypothesis is rejected if the two-sided probability value (p-value) of the test is less than or equal to 0.05.
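For readers who want to reproduce the test outside a statistics package, the Python sketch below computes a two-sided Wilcoxon rank-sum p-value using the large-sample normal approximation, with average ranks for ties, a tie-corrected variance, and a 0.5 continuity correction. These implementation details are assumptions (the software used for Table 8 is not restated here), so small numerical differences from a given package are possible; `scipy.stats.mannwhitneyu` is one established cross-check. The sample values are toy data.

```python
import math
from typing import List, Sequence, Tuple


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> float:
    """Two-sided Wilcoxon rank-sum p-value (normal approximation).

    Tied values receive average ranks, the variance of the rank sum is
    tie-corrected, and a 0.5 continuity correction is applied.
    """
    nx, ny = len(x), len(y)
    n = nx + ny
    # Pool both samples; the second tuple element records the sample of origin.
    pooled: List[Tuple[float, int]] = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)  # rank sum of x
    mean_w = nx * (n + 1) / 2
    var_w = nx * ny / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:  # all values identical: no evidence of a shift
        return 1.0
    z = max(abs(w - mean_w) - 0.5, 0.0) / math.sqrt(var_w)
    return min(1.0, math.erfc(z / math.sqrt(2)))  # erfc(z / sqrt 2) = 2 * (1 - Phi(z))


x = [1, 2, 3, 4, 5]
y = [6, 7, 8, 9, 10]
p = rank_sum_test(x, y)
print(p, p <= 0.05)  # p is about 0.012, so H0 is rejected at the 5% level
```

The rank-sum form suits this setting because the two groups of 50 runs are independent samples rather than matched pairs.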

Table 8 shows that the slight improvement in the PA values and F1-scores of the CART models built using the combination of and over those of the CART models built using is statistically significant, with p-values of 6.0493 × 10⁻¹⁸ and 2.9193 × 10⁻²², respectively. Overall, the validation procedure and comparisons suggest that the synthetic data from the combination of and closely reflect the characteristics of the modeled SDN-reliant IoT network, both under normal operating conditions and in the presence of DDoS flooding attacks. In particular, their combined use is a promising way to enrich existing datasets and improve the fidelity of SDN-reliant IoT network simulations. In the next section, an ML-driven SA is carried out using a combination of the original simulated dataset () and the validated synthetic dataset (the combination of and ), i.e., .

Table 8.

Hypothesis test: vs. the combination of and .

6.2. ML-Driven SA

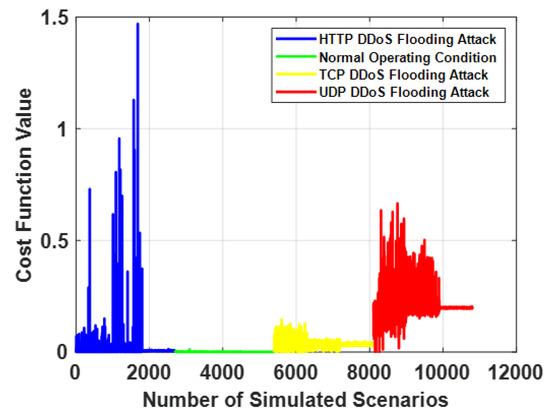

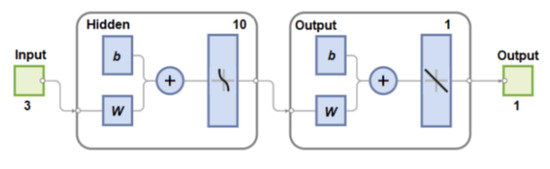

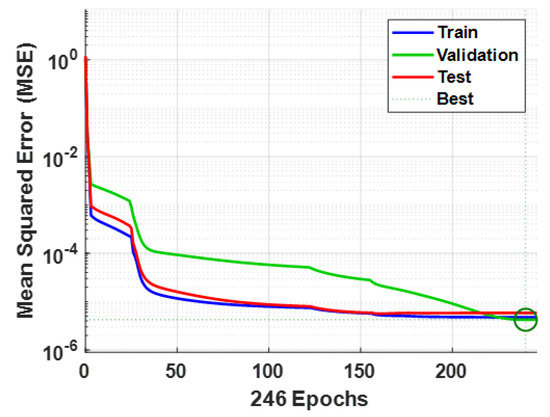

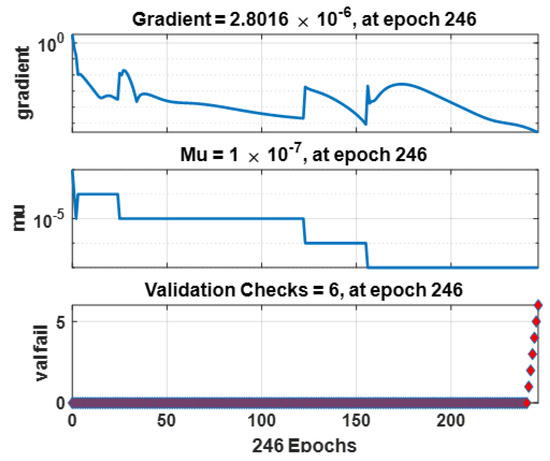

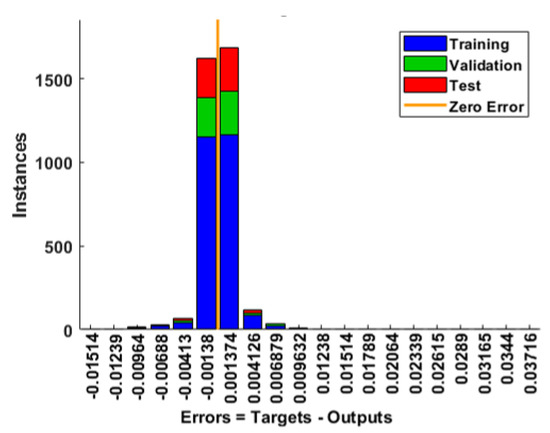

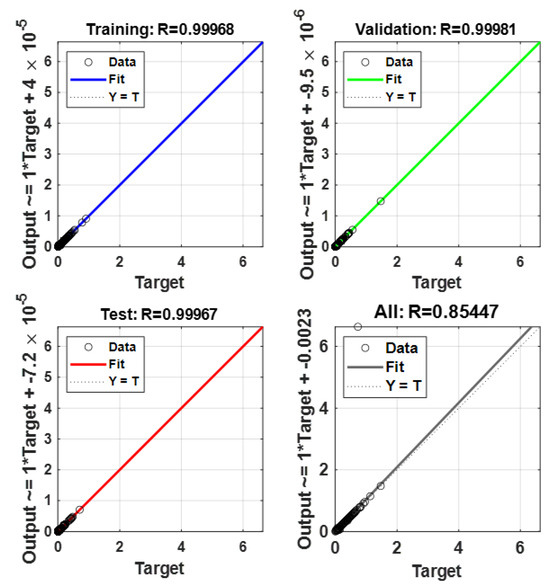

The ML-driven SA component of the proposed DOE-GAN-SA framework is implemented as described in Section 5. Figure 11 illustrates the trend of (see Equation (11)) across all the scenarios in the modeled SDN-reliant IoT network, considering (see Equation (18)). Figure 11 shows that using as the response (target) variable of the ANN models provides an additional layer of distinction between the scenarios in the modeled SDN-reliant IoT network: remains close to zero when the network operates normally, whereas it takes higher values when the network is subjected to DDoS flooding attacks. The same structure, comprising three input nodes, ten hidden layers, and one output node (Figure 12), is used for all the ANN models. Using , a total of 50 ANN models were built over 50 independent statistical runs. The training details of a typical ANN model trained with , namely the MSE trend, gradient plot, error histogram, and regression plots, are presented in Figures 13, 14, 15, and 16, respectively.

Figure 11.

Plot of against the IoT network scenarios.

Figure 12.

Layout of all the ANN models built.

Figure 13.

Typical convergence trend of the MSE values for the ANN models built using (the best validation performance is 4.2194 × 10⁻⁶ at epoch 240).

Figure 14.

Typical gradient information trend for the ANN models built using .

Figure 15.

Typical error histogram (with 20 bins) for the ANN models built using .

Figure 16.

Typical regression plots for the ANN models built using .

Figures 13 and 14 show that training the ANN models was not computationally expensive, as they typically converged to low MSE values in fewer than 250 epochs. The ANN models also generally performed well, with typically low errors and high correlation coefficients (Figures 15 and 16). As described in Section 5, to evaluate the sensitivity of the performance metrics of the modeled SDN-reliant IoT network (i.e., , , and ), the ANN models built using were re-evaluated over 50 independent statistical runs using , , and , respectively. The descriptive statistics of the MSE values for all the datasets used to evaluate the ANN models are reported in Table 9.
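The re-evaluation step can be illustrated with a minimal Python sketch under stated assumptions: a fixed toy function stands in for a trained ANN (it is not the model of Figure 12), additive zero-mean Gaussian noise with a hypothetical standard deviation of 0.1 is applied to one input at a time, and the MSE is computed against reference targets (here, the toy model's outputs on the clean inputs). How the noisy variants were actually generated is described in Section 5 and is not reproduced here; `perturbation_mse` and `toy_model` are hypothetical names.

```python
import random
import statistics
from typing import Callable, List, Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def perturbation_mse(model: Callable[[List[float]], float], X: List[List[float]],
                     y: Sequence[float], feature: int, noise_sd: float,
                     runs: int = 50, seed: int = 0) -> List[float]:
    """MSE per run when only column `feature` of X receives Gaussian noise."""
    rng = random.Random(seed)
    out = []
    for _ in range(runs):
        noisy = [list(row) for row in X]  # copy so the clean inputs stay intact
        for row in noisy:
            row[feature] += rng.gauss(0.0, noise_sd)
        out.append(mse([model(row) for row in noisy], y))
    return out


def toy_model(row: List[float]) -> float:
    """Hypothetical stand-in for a trained ANN with three inputs."""
    a, b, c = row
    return 2.0 * a + 0.1 * b + 0.5 * c


rng = random.Random(1)
X = [[rng.random() for _ in range(3)] for _ in range(200)]
y = [toy_model(r) for r in X]  # reference targets from the clean inputs
for j in range(3):
    runs = perturbation_mse(toy_model, X, y, feature=j, noise_sd=0.1)
    # Expected MSE is roughly (weight * noise_sd) ** 2: about 0.04, 0.0001, 0.0025
    print(j, statistics.mean(runs), statistics.stdev(runs))
```

The input whose perturbation produces the largest mean MSE is the one to which the model output is most sensitive, which is the comparison summarized in Table 9.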

Table 9.

Descriptive statistics of the MSE values of the ANN models built over 50 independent runs.

Table 9 supports the following observations: (1) The MSE values of the ANN models are generally sensitive to changes in , , and , as seen by comparing with , , and , respectively. (2) On average, appears to be the most sensitive, with a mean MSE value of 6.9898 for , while is the least sensitive, with a mean MSE value of 0.0200 for . (3) In the best case, induced the least change in , with a minimum MSE value of 0.0108 for (similar to , with a minimum MSE value of 0.0109 for ), whereas induced the most change, with a minimum MSE value of 0.0146 for . (4) In the worst case, caused the most disruption to , with a maximum MSE value of 22.3078 for , while caused the least disruption, with a maximum MSE value of 0.0302 for . (5) In terms of consistency, induced the most consistent changes in , with a standard deviation of 0.0520 for , whereas induced the most inconsistent changes, with a standard deviation of 5.0983 for . These observations, which indicate that , , and are all sensitive, with being more sensitive than and , are consistent with findings in the literature [39], thereby supporting the validity of the approach.

Hypothesis Testing for Sensitivity Analysis

Similar to the hypothesis test reported in Section 6.1, a Wilcoxon rank-sum test [128] is also conducted to statistically verify whether is affected by changes in , , and when their respective noisy variants (i.e., , , and ) are used to evaluate the ANN models built using . The results for , , , and , obtained over 50 independent statistical runs, are used as the data samples. The null hypothesis in this case is that the data samples of and those of , , and have equal medians, tested at the 5% significance level (i.e., the 95% confidence level), against the alternative hypothesis that they do not. As before, the null hypothesis is rejected if the two-sided probability value (p-value) of the test is less than or equal to 0.05.

Table 10 shows that , , and are all statistically significantly different from , with a p-value of . This further supports the conclusion that , , and are sensitive performance metrics for the modeled SDN-reliant IoT network.

Table 10.

Hypothesis test: () vs. other datasets.

6.3. Comparisons with Other Methods

Given the scope of this study and time limitations, a comprehensive comparative analysis between the proposed DOE-GAN-SA framework and alternative approaches has not been conducted. However, given the successful implementation of the DOE-GAN-SA framework demonstrated in this work and the reported effectiveness of alternative methodologies in the literature [66,137,138,139], useful insights can be drawn from the comparisons presented in Table 11. As noted in Table 11, the proposed framework aligns with existing methods while offering distinct advantages. Specifically, its purpose-built hybridization of DOE and ML techniques broadens the applicability of hybrid approaches to understanding the behaviors of SDN-reliant IoT networks. Rather than serving as a direct replacement, the DOE-GAN-SA framework complements existing methods by addressing specific challenges and facilitating a more robust adoption of ML-based solutions.

Table 11.

Comparative assessment.

6.4. Recommended Approach for Future Practical Implementation