1. Introduction

Using technology in education, especially through virtual labs (VLs), transforms students from passive listeners into active investigators and enhances conceptual mastery [1,2,3]. The teaching of physics in the Democratic Republic of the Congo (DRC) plays a central role in students’ scientific education. In the towns of Inkisi and Kimpese, schools face significant challenges, such as a lack of hands-on laboratories, a shortage of well-trained educators, frequent power outages, and poor internet connectivity.

This situation has forced physics teachers to favor a strictly theoretical approach, thereby limiting students’ experience to a bookish and abstract assimilation of fundamental concepts. The repercussions of this educational shortfall are numerous, including a series of common conceptual and terminological confusions in mechanics, such as confusing path with trajectory, speed with acceleration, or weight with mass [4,5,6]. These difficulties are further exacerbated by misconceptions, which act as cognitive obstacles and make the acquisition of key concepts even more challenging [7].

Moreover, as some studies confirm, students’ performance in physics in sub-Saharan African countries lags significantly behind that of students in developed nations. This gap is attributed, among other factors, to the lack of material resources, teachers’ pedagogical shortcomings, the prioritization of theory over practice, and insufficient mastery of technology [8].

VLs are often considered a reliable solution to the shortage of physics laboratories in schools across sub-Saharan Africa, as they help overcome the economic challenges of acquiring equipment to build physical hands-on labs [8,9]. However, most existing VLs are global, meaning they are designed for practical physics education in a general context. As a result, they overlook the specific needs of physics education in the DRC and do not always align with its current curriculum.

In this article, we propose Bazin-R VirtLab (BRVL), a VL that addresses both the economic challenges faced by developing countries and the curricular requirements of the DRC. Comparable approaches have already been proposed by several researchers in various parts of the world [1,10,11,12,13].

However, most publications on VLs focus solely on their technical aspects or on their pedagogical and didactic implications. The evaluations of VLs they present overlook the robust comparative methodologies offered by multi-criteria aggregation functions.

In our study, we not only propose a custom-designed VL tailored for physics education in the DRC but also, more importantly, evaluate it against other VLs using multi-criteria analysis methods based on pedagogical criteria established by professionals.

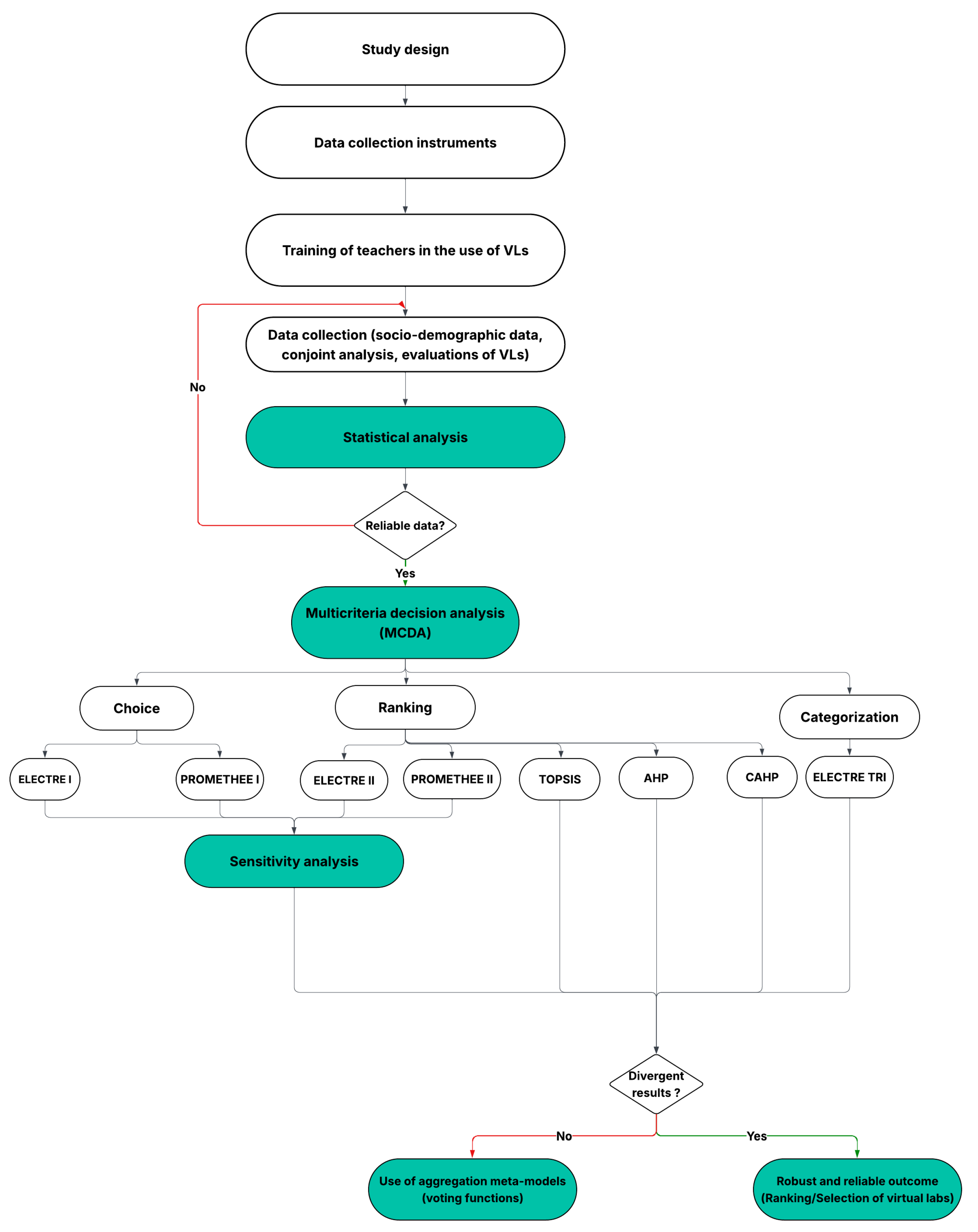

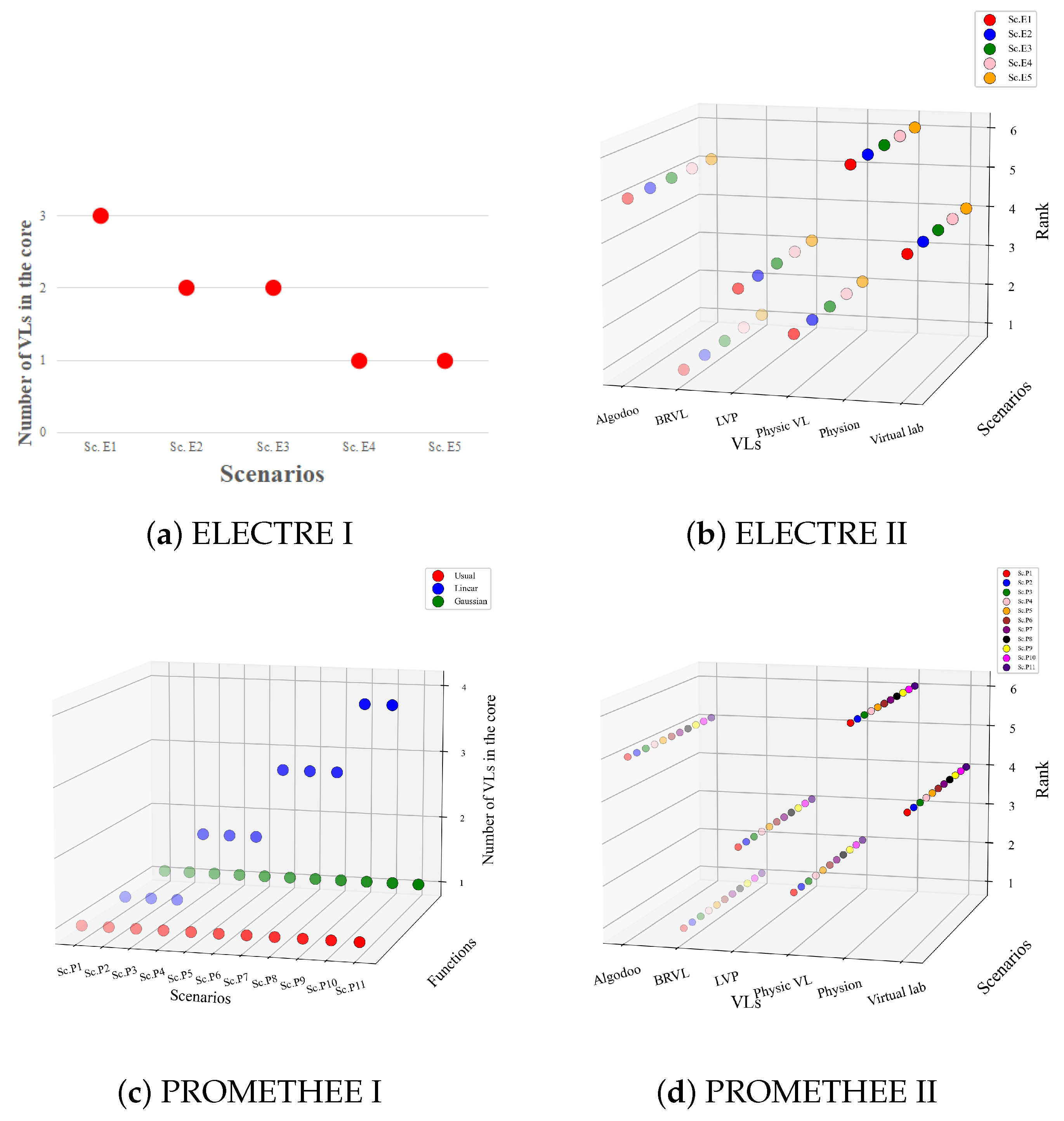

To validate BRVL, we used the ELECTRE I, ELECTRE II, ELECTRE TRI, PROMETHEE I, PROMETHEE II, TOPSIS, CAHP, and AHP methods, applying each independently to compare BRVL with several global, free, and offline VLs. Beforehand, the weights of the selected criteria were determined using Conjoint Analysis (CA). The TOPSIS, AHP, CAHP, ELECTRE II, and PROMETHEE II methods rank alternatives (VLs) from best to worst. Although ELECTRE I and PROMETHEE I are designed for a different type of decision problem (choice), they help identify a set of non-dominated alternatives called the “core.” ELECTRE TRI, in turn, assigns alternatives to ordered categories (“High”, “Medium”, and “Low”). The advantage of ELECTRE methods is that they capture non-compensatory effects: a very low performance on a single criterion can prevent an alternative from outranking others, despite excellent performance on the remaining criteria.
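To make the non-compensatory idea concrete, the following Python sketch tests whether one alternative outranks another in the spirit of ELECTRE I. It is a simplification under stated assumptions: the performance scores, weights, concordance level `c_hat`, and per-criterion `veto` threshold are all hypothetical, and discordance is modeled as a single veto threshold rather than the normalized discordance index of classical ELECTRE I.

```python
# Simplified ELECTRE I-style outranking test (illustrative sketch).
# Scores, weights, and thresholds below are hypothetical, not study data.

def concordance(a, b, weights):
    """Share of total weight on criteria where a performs at least as well as b."""
    agreeing = sum(w for x, y, w in zip(a, b, weights) if x >= y)
    return agreeing / sum(weights)


def vetoed(a, b, veto):
    """True if b beats a by more than the veto threshold on any single criterion."""
    return any(y - x > veto for x, y in zip(a, b))


def outranks(a, b, weights, c_hat=0.7, veto=4.0):
    """a outranks b when concordance is high enough and no criterion vetoes it."""
    return concordance(a, b, weights) >= c_hat and not vetoed(a, b, veto)


# Two hypothetical VLs scored 0-10 on four criteria (higher is better).
weights = [0.4, 0.3, 0.2, 0.1]
vl_a = [9, 9, 9, 1]  # excellent on three criteria, very poor on the fourth
vl_b = [5, 5, 5, 8]

# A weighted sum would favor vl_a (8.2 vs 5.3), but the veto on the
# fourth criterion blocks vl_a from outranking vl_b.
print(concordance(vl_a, vl_b, weights))  # about 0.9
print(outranks(vl_a, vl_b, weights))     # False
print(outranks(vl_b, vl_a, weights))     # False: the two VLs are incomparable
```

Raising `veto` above 7 (the gap on the fourth criterion) lets the high concordance prevail again, which is the kind of threshold a sensitivity analysis would vary.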

The main objectives pursued in our study are:

- Performing a statistical analysis of the collected data to assess its reliability and consistency.
- Comparing and ranking competing VLs using multicriteria decision-making methods.
- Assessing the robustness and reliability of VL rankings against parameter shifts using sensitivity analysis.
- Examining the consistency of outcomes across MCDA methods and proposing aggregation strategies for divergent results.
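The sensitivity-analysis objective above can be illustrated with a one-at-a-time weight perturbation. The Python sketch below is a hypothetical illustration: it uses a simple weighted-sum model (not one of the eight methods applied in this study), and the VL names, scores, weights, and scaling factors are invented. Each criterion weight is scaled in turn, the weights are renormalized, and any change in the top-ranked alternative is recorded.

```python
# One-at-a-time weight sensitivity check on a simple weighted-sum model.
# The alternatives, scores, weights, and scaling factors are hypothetical.

def weighted_score(scores, weights):
    """Additive (compensatory) aggregate of one alternative's scores."""
    return sum(w * s for w, s in zip(weights, scores))


def best_alternative(performance, weights):
    """Name of the alternative with the highest weighted score."""
    return max(performance, key=lambda name: weighted_score(performance[name], weights))


def one_at_a_time(performance, weights, factors=(0.5, 0.75, 1.25, 1.5)):
    """Scale each weight by each factor, renormalize, and record winner changes."""
    baseline = best_alternative(performance, weights)
    flips = []
    for j in range(len(weights)):
        for f in factors:
            perturbed = list(weights)
            perturbed[j] *= f
            total = sum(perturbed)
            perturbed = [w / total for w in perturbed]  # keep weights summing to 1
            winner = best_alternative(performance, perturbed)
            if winner != baseline:
                flips.append((j, f, winner))
    return baseline, flips


performance = {
    "VL_A": [8, 9, 6, 7],
    "VL_B": [6, 5, 8, 8],
    "VL_C": [5, 6, 7, 9],
}
weights = [0.3, 0.3, 0.2, 0.2]

print(one_at_a_time(performance, weights))
# ('VL_A', []): no +/-50% single-weight shift changes the winner
print(one_at_a_time(performance, weights, factors=(10,)))
# ('VL_A', [(2, 10, 'VL_B'), (3, 10, 'VL_C')]): only extreme shifts do
```

An empty list of flips over a realistic range of factors is the kind of evidence that would support stability; outranking methods would instead vary thresholds such as concordance and veto levels.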

Our study considers only hypotheses that are documented in the literature, even implicitly, provided they are testable and relevant to stakeholders. The hypotheses framing our decision-making are:

H1: Using multiple MCDA methods makes VL assessments more robust and reduces methodological bias [14,15,16].

H2: Statistically validated input data improves the reliability and consistency of VL rankings across decision models [17].

H3: Rankings of VL alternatives can differ significantly depending on the MCDA method used, revealing how sensitive the results are to methodology [18].

H4: Sensitivity analysis should confirm whether the preferred VL solution stays stable across different parameter settings [19].

H5: When rankings disagree, combining them using meta-decision models (e.g., voting rules) can help reach consensus [20,21,22].
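When rankings do disagree, the situation H5 anticipates, one of the simplest voting rules for building a meta-ranking is the Borda count. The Python sketch below is a hypothetical illustration rather than the study's procedure: the method names and rankings are invented, and each ranking is assumed to be complete, best-first, and tie-free.

```python
# Borda-count meta-ranking of several complete rankings (illustrative sketch).
# Method names and rankings are hypothetical; rankings are best-first, no ties.

def borda(rankings):
    """Return (aggregate ranking best-first, Borda scores) for a list of rankings."""
    scores = {}
    for ranking in rankings:
        n = len(ranking)
        for position, alternative in enumerate(ranking):
            # First place earns n - 1 points, last place earns 0.
            scores[alternative] = scores.get(alternative, 0) + (n - 1 - position)
    # Sort by descending score; break ties alphabetically for determinism.
    order = sorted(scores, key=lambda alt: (-scores[alt], alt))
    return order, scores


rankings_by_method = {
    "method_1": ["VL1", "VL2", "VL3"],
    "method_2": ["VL2", "VL1", "VL3"],
    "method_3": ["VL1", "VL3", "VL2"],
}
order, scores = borda(list(rankings_by_method.values()))
print(order)   # ['VL1', 'VL2', 'VL3']
print(scores)  # {'VL1': 5, 'VL2': 3, 'VL3': 1}
```

The alphabetical tie-break is an arbitrary design choice for reproducibility; other voting rules (e.g., majority judgment) handle ties and intensity of preference differently.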

The remainder of this paper is organized as follows: Section 2 presents the literature review. In Section 3, we outline our research methodology. Section 4 summarizes the key findings of our work (Results). The results are discussed in Section 5, followed by the conclusion of our study in Section 6.

6. Conclusions

Our study has demonstrated that BRVL significantly outperforms competing global alternatives, particularly concerning the two criteria with the highest weights: misconception correction (weighted at 28.8%) and curricular alignment with the fourth-year scientific physics program in the Democratic Republic of the Congo (weighted at 26.1%). This superiority is further reinforced by the robustness of the results obtained through the eight multicriteria methods employed in this study, as well as by the sensitivity analysis, which confirms BRVL’s resilience to extremely strict thresholds. These results lead us to revisit our initial hypotheses:

H1—Confirmed. All eight MCDA methods used converged on the same result: BRVL is part of the core set in outranking methods and ranks first in total aggregation methods. This convergence suggests that methodological bias is negligible and reinforces the overall reliability of the outcome.

H2—Partially confirmed. Although statistical validation confirmed the general reliability of the data, we identified and analyzed respondent-related biases using linear regression.

H3—Refuted. Unexpectedly, the results remained stable despite the methodological diversity of the MCDA approaches and the range of parameter configurations tested during the sensitivity analysis.

H4—Confirmed. Even under varied threshold and preference settings, the outcome showed no significant change, underscoring its stability.

H5—Not applicable. Since all methods pointed to the same result, meta-ranking mechanisms were simply unnecessary.
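The convergence behind H1 can also be quantified with a rank-correlation measure. The Python sketch below computes Kendall's tau-a between two rankings and checks whether they share the same top choice. It is an illustrative assumption: the rankings are hypothetical, and the function applies only to complete, tie-free rankings (such as those produced by TOPSIS or PROMETHEE II), not to the cores produced by ELECTRE I and PROMETHEE I.

```python
# Kendall's tau-a between two complete, tie-free rankings (illustrative sketch).
from itertools import combinations


def kendall_tau(rank_a, rank_b):
    """Return tau in [-1, 1]: 1 for identical orders, -1 for reversed orders."""
    pos_a = {alt: i for i, alt in enumerate(rank_a)}
    pos_b = {alt: i for i, alt in enumerate(rank_b)}
    concordant = discordant = 0
    for x, y in combinations(rank_a, 2):
        # Same sign of position differences means both rankings order x, y alike.
        sign = (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n = len(rank_a)
    return (concordant - discordant) / (n * (n - 1) / 2)


# Hypothetical rankings from two methods that agree on the winner
# but swap two lower-ranked alternatives.
method_1 = ["A", "B", "C", "D"]
method_2 = ["A", "C", "B", "D"]
print(kendall_tau(method_1, method_2))  # 4/6, about 0.667
print(method_1[0] == method_2[0])       # True: same top choice
```

A tau close to 1 across all pairs of ranking methods, together with a shared top choice, is one simple way to report the degree of methodological convergence.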

From a pedagogical standpoint, unlike other physics VLs, BRVL is specifically designed to suit the local educational context in the DRC—a developing country facing multiple challenges in equipping its scientific schools with modern laboratory facilities and materials.

From a theoretical perspective, our work contributes a new MCDA evaluation framework for physics VLs in resource-constrained settings. Practically, because of its accessible architecture, offline functionality, low cost, and alignment with local curricula, BRVL can be replicated in other countries with similar contexts that seek to integrate ICT into their national curricula. The responsibility now lies with policymakers to allocate substantial budgets for the design and implementation of locally tailored virtual laboratories. Moreover, BRVL could be integrated into the Congolese national curriculum to support not only the correction of misconceptions among young learners but also the teaching, learning, and assessment of physics.

Among the study's limitations, we highlight the restricted study area and its dependence on regions with easy access to electricity and smartphones. Moving forward, this research should be extended to other cities and provinces in the DRC, as well as to other STEM disciplines (such as mathematics, chemistry, and biology). Furthermore, versions adapted to Congolese and African regions where populations lack access to electricity should be developed. BRVL could also serve as an interface for conducting practical physics examinations within the Congolese National Baccalaureate. Over several years of longitudinal study, BRVL’s results could serve as the basis for future research aimed at refining its capabilities, including automating evaluations by integrating appropriate algorithms (e.g., a Python-based MCDA toolkit).
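As an example of the kind of algorithm such a toolkit could automate, the following Python sketch implements the standard TOPSIS steps: vector normalization, weighting, ideal and anti-ideal points, and relative closeness. It is a hypothetical illustration, not BRVL's or this study's implementation; the decision matrix and weights are invented, and the function assumes positive scores and at least two distinct alternatives (otherwise a division by zero occurs). It requires Python 3.8+ for `math.dist`.

```python
# Minimal TOPSIS sketch (vector normalization, Euclidean distances).
# The decision matrix, weights, and criterion directions are hypothetical.
import math


def topsis(matrix, weights, benefit):
    """Return the closeness coefficient of each alternative (higher is better).

    matrix  -- one row per alternative, one column per criterion (positive values)
    weights -- criterion weights summing to 1
    benefit -- True for criteria to maximize, False for criteria to minimize
    """
    n_crit = len(weights)
    # 1. Vector-normalize each criterion column, then apply the weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix]
    # 2. Ideal and anti-ideal points, per criterion direction.
    columns = list(zip(*weighted))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(columns)]
    anti = [min(c) if benefit[j] else max(c) for j, c in enumerate(columns)]
    # 3. Relative closeness to the ideal point.
    closeness = []
    for row in weighted:
        d_plus = math.dist(row, ideal)
        d_minus = math.dist(row, anti)
        closeness.append(d_minus / (d_plus + d_minus))
    return closeness


# Three hypothetical VLs scored on four benefit criteria.
matrix = [
    [9, 8, 9, 7],
    [6, 7, 5, 6],
    [3, 4, 2, 5],
]
weights = [0.4, 0.3, 0.2, 0.1]
scores = topsis(matrix, weights, benefit=[True] * 4)
ranking = sorted(range(len(matrix)), key=lambda i: -scores[i])
print(scores)   # first is 1.0 (dominates everywhere), last is 0.0
print(ranking)  # [0, 1, 2]
```

Because TOPSIS is compensatory, it complements rather than replaces the outranking methods, which can reject an alternative for a single very poor criterion score.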

This work suggests several directions for future research. An immediate next step is to adapt the proposed evaluation framework to other educational settings, especially resource-constrained systems. When national curricula are aligned with pedagogical relevance, policymakers can craft integration strategies and policy decisions that serve learners more effectively. Advanced technologies such as AI-enabled knowledge synthesis, augmented reality, and mobile-based applications could boost both accessibility and educational impact. Future research should pursue gender equity and geographical inclusion simultaneously, while guaranteeing resource access in rural areas. Combining continued long-term research with advances in MCDA methods will improve our ability to evaluate the lasting benefits of VLs and support their sustainable integration.

Author Contributions

Conceptualization, R.M.B., R.-B.M.N. and J.-R.M.B.; Data curation, R.M.B., R.-B.M.N. and J.-R.M.B.; Formal analysis, R.-B.M.N., J.-R.M.B. and G.K.K.; Investigation, R.M.B.; Methodology, R.M.B. and R.-B.M.N.; Software, R.-B.M.N. and J.-R.M.B.; Supervision, R.-B.M.N., G.K.K. and B.N.M.; Validation, R.-B.M.N., R.M.B., J.-R.M.B. and B.N.M.; Visualization, R.-B.M.N., R.M.B., G.K.K. and B.N.M.; Writing—original draft, R.M.B. and R.-B.M.N.; Writing—review & editing, R.-B.M.N., J.-R.M.B. and B.N.M. All authors have read and agreed to the published version of the manuscript.

Funding

There was no external funding for this study.

Data Availability Statement

Data and materials are available from the authors upon request.

Acknowledgments

The authors thank the referees for their valuable suggestions for revising and improving the manuscript.

Conflicts of Interest

The authors declare that none of the work reported in this paper could have been influenced by any known competing financial interests or personal relationships.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| Algo_Us | Performance of Algodoo on usability |
| Betw. subj. | Between subjects |
| BRVL | Bazin-R VirtLab |
| BRVL_Curr | Performance of BRVL on curriculum compliance |
| BRVL_Misc | Performance of BRVL on misconceptions correction |
| Curr. compl. | Curriculum compliance |
| Dep. var. | Dependent variable |
| Dev. Tech. | Development Technology |
| DRC | Democratic Republic of the Congo |
| df | Degrees of freedom |
| Educ. level | Education level |
| Know. build. | Knowledge building |
| ICC | Intraclass Correlation Coefficient |
| ICT | Information and Communication Technology |
| Ind. var. | Independent variable |
| Intra pop. | Intra population |
| Mast. | Master’s equivalent |
| Misc. corr. | Misconceptions correction |
| MCDA | Multi-Criteria Decision Aiding |
| Nonadd. | Nonadditivity |
| Phys. Back. | Physics Teaching Background in 4th Grade Science |
| Phys_Curr | Performance of Physics Virtual lab on curriculum compliance |
| Phys_Know | Performance of Physics Virtual lab on knowledge building |
| Phys_Misc | Performance of Physics Virtual lab on misconceptions correction |
| Phys_Us | Performance of Physics Virtual lab on usability |
| Physion_Misc | Performance of Physion on misconceptions correction |
| Sec. | Upper secondary level |
| Sig. | Significance threshold |
| Sig. post hoc comp. | Significant post hoc comparisons |
| STEM | Science, Technology, Engineering, and Mathematics |
| Sum Sq. | Sum of squares |
| TPD | Teacher Professional Development |
| VL | Virtual lab |

References

- Dori, Y.J.; Belcher, J. How does technology-enabled active learning affect undergraduate students’ understanding of electromagnetism concepts? J. Learn. Sci. 2005, 14, 243–279.

- Kefalis, C.; Skordoulis, C.; Drigas, A. Digital Simulations in STEM Education: Insights from Recent Empirical Studies, a Systematic Review. Encyclopedia 2025, 5, 10.

- Haberbosch, M.; Deiters, M.; Schaal, S. Combining Virtual and Hands-on Lab Work in a Blended Learning Approach on Molecular Biology Methods and Lab Safety for Lower Secondary Education Students. Educ. Sci. 2025, 15, 123.

- Bar, V.; Brosh, Y.; Sneider, C. Weight, Mass, and Gravity: Threshold Concepts in Learning Science. Sci. Educ. 2016, 25, 22–34.

- Taibu, R.; Rudge, D.; Schuster, D. Textbook presentations of weight: Conceptual difficulties and language ambiguities. Phys. Rev. Spec. Top.-Phys. Educ. Res. 2015, 11, 010117.

- Taibu, R.; Schuster, D.; Rudge, D. Teaching weight to explicitly address language ambiguities and conceptual difficulties. Phys. Rev. Phys. Educ. Res. 2017, 13, 010130.

- Astolfi, J.P.; Peterfalvi, B. Obstacles et construction de situations didactiques en sciences expérimentales. Aster Rech. Didact. Sci. Exp. 1993, 16, 103–141.

- Babalola, F.E.; Ojobola, F.B. Improving Learning of Practical Physics in Sub-Saharan Africa: System Issues. Can. J. Sci. Math. Technol. Educ. 2022, 22, 278–300.

- Babalola, F. Advancing Practical Physics in Africa’s Schools; Open University: Milton Keynes, UK, 2017.

- Aljuhani, K.; Sonbul, M.; Althabiti, M.; Meccawy, M. Creating a Virtual Science Lab (VSL): The adoption of virtual labs in Saudi schools. Smart Learn. Environ. 2018, 5, 16.

- Darrah, M.; Humbert, R.; Finstein, J.; Simon, M.; Hopkins, J. Are virtual labs as effective as hands-on labs for undergraduate physics? A comparative study at two major universities. J. Sci. Educ. Technol. 2014, 23, 803–814.

- Laseinde, O.T.; Dada, D. Enhancing teaching and learning in STEM Labs: The development of an android-based virtual reality platform. Mater. Today Proc. 2024, 105, 240–246.

- Lynch, T.; Ghergulescu, I. NEWTON virtual labs: Introduction and teacher perspective. In Proceedings of the 2017 IEEE 17th International Conference on Advanced Learning Technologies (ICALT), Timisoara, Romania, 3–7 July 2017; pp. 343–345.

- Youssef, A.E.; Saleem, K. A hybrid MCDM approach for evaluating web-based e-learning platforms. IEEE Access 2023, 11, 72436–72447.

- Al-Gerafi, M.A.; Goswami, S.S.; Khan, M.A.; Naveed, Q.N.; Lasisi, A.; AlMohimeed, A.; Elaraby, A. Designing of an effective e-learning website using inter-valued fuzzy hybrid MCDM concept: A pedagogical approach. Alex. Eng. J. 2024, 97, 61–87.

- Alshamsi, A.M.; El-Kassabi, H.; Serhani, M.A.; Bouhaddioui, C. A multi-criteria decision-making (MCDM) approach for data-driven distance learning recommendations. Educ. Inf. Technol. 2023, 28, 10421–10458.

- Leskinen, P.; Kangas, J. Rank reversals in multi-criteria decision analysis with statistical modelling of ratio-scale pairwise comparisons. J. Oper. Res. Soc. 2005, 56, 855–861.

- Wątróbski, J.; Jankowski, J.; Ziemba, P.; Karczmarczyk, A.; Zioło, M. Generalised framework for multi-criteria method selection. Omega 2019, 86, 107–124.

- Ishizaka, A.; Nemery, P. Multi-Criteria Decision Analysis: Methods and Software; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Dias, L.C.; Kadziński, M. Meta-Rankings of Journals Publishing Multiple Criteria Decision Aiding Research: Benefit-of-Doubt Composite Indicators for Heterogeneous Qualitative Scales. In Intelligent Decision Support Systems: Combining Operations Research and Artificial Intelligence-Essays in Honor of Roman Słowiński; Springer: Berlin/Heidelberg, Germany, 2022; pp. 245–268.

- Ferretti, V. Convergencies and Divergencies in Collaborative Decision-Making Processes. In Proceedings of the International Conference on Group Decision and Negotiation, Toronto, ON, Canada, 6–10 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 155–169.

- Luque-Martínez, T.; Faraoni, N. Meta-ranking to position world universities. Stud. High. Educ. 2020, 45, 819–833.

- Sellberg, C.; Nazari, Z.; Solberg, M. Virtual laboratories in STEM higher education: A scoping review. Nord. J. Syst. Rev. Educ. 2024, 2, 58–75.

- Sypsas, A.; Paxinou, E.; Zafeiropoulos, V.; Kalles, D. Virtual Laboratories in STEM Education: A Focus on Onlabs, a 3D Virtual Reality Biology Laboratory. In Online Laboratories in Engineering and Technology Education: State of the Art and Trends for the Future; May, D., Auer, M.E., Kist, A., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 323–337.

- August, S.E.; Hammers, M.L.; Murphy, D.B.; Neyer, A.; Gueye, P.; Thames, R.Q. Virtual engineering sciences learning lab: Giving STEM education a second life. IEEE Trans. Learn. Technol. 2015, 9, 18–30.

- Murdan, A.P. Tailoring STEM Education for Slow Learners Through Artificial Intelligence. In Proceedings of the 2024 5th International Conference on Emerging Trends in Electrical, Electronic and Communications Engineering (ELECOM), Port Louis, Mauritius, 20–22 November 2024; pp. 1–7.

- Gnesdilow, D.; Puntambekar, S. Middle School Students’ Application of Science Learning From Physical Versus Virtual Labs to New Contexts. Sci. Educ. 2025, early view.

- Yordanov, T.; Mihailov, N.; Gabrovska-Evstatieva, K. Low-cost Remote Lab on Renewable Energy Sources with a Focus on STEM Education. In Proceedings of the 2023 18th Conference on Electrical Machines, Drives and Power Systems (ELMA), Varna, Bulgaria, 29 June–1 July 2023; pp. 1–5.

- Nedungadi, P.; Raman, R.; McGregor, M. Enhanced STEM learning with Online Labs: Empirical study comparing physical labs, tablets and desktops. In Proceedings of the 2013 IEEE Frontiers in Education conference (FIE), Oklahoma City, OK, USA, 23–26 October 2013; pp. 1585–1590.

- El Kharki, K.; Berrada, K.; Burgos, D. Design and implementation of a virtual laboratory for physics subjects in Moroccan universities. Sustainability 2021, 13, 3711.

- Hassan, J.; Devi, A.; Ray, B. Virtual laboratories in tertiary education: Case study analysis by learning theories. Educ. Sci. 2022, 12, 554.

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Pract. 2002, 41, 212–218.

- Sonje, S.A.; Pawar, R.S.; Shukla, S. Assessing blockchain-based innovation for the “right to education” using MCDA approach of value-focused thinking and fuzzy cognitive maps. IEEE Trans. Eng. Manag. 2021, 70, 1945–1965.

- Jeong, J.S.; González-Gómez, D. MCDA/F-DEMATEL/ICTs Method Under Uncertainty in Mathematics Education: How to Make a Decision with Flipped, Gamified, and Sustainable Criteria. In Decision Making Under Uncertainty Via Optimization, Modelling, and Analysis; Springer: Berlin/Heidelberg, Germany, 2025; pp. 91–113.

- Ransikarbum, K.; Leksomboon, R. Analytic hierarchy process approach for healthcare educational media selection: Additive manufacturing inspired study. In Proceedings of the 2021 IEEE 8th International Conference on Industrial Engineering and Applications (ICIEA), Virtual Conference, 23–26 April 2021; pp. 154–158.

- Kurilovas, E.; Kurilova, J. Several decision support methods for evaluating the quality of learning scenarios. In Proceedings of the 2015 IEEE 3rd Workshop on Advances in Information, Electronic and Electrical Engineering (AIEEE), Riga, Latvia, 13–14 November 2015; pp. 1–6.

- Hisamuddin, M.; Faisal, M. Exploring Effective Decision-Making Techniques in Learning Environment: A Comprehensive Review. In Proceedings of the 2024 Second International Conference Computational and Characterization Techniques in Engineering & Sciences (IC3TES), Lucknow, India, 15–16 November 2024; pp. 1–8.

- Kuzmanovic, M.; Savic, G. Avoiding the privacy paradox using preference-based segmentation: A conjoint analysis approach. Electronics 2020, 9, 1382.

- Green, P.E.; Rao, V.R. Conjoint measurement for quantifying judgmental data. J. Mark. Res. 1971, 8, 355–363.

- Green, P.E.; Srinivasan, V. Conjoint analysis in consumer research: Issues and outlook. J. Consum. Res. 1978, 5, 103–123.

- Green, P.E.; Srinivasan, V. Conjoint analysis in marketing: New developments with implications for research and practice. J. Mark. 1990, 54, 3–19.

- Van Etten, B.; Smit, K. Learning material in compliance with the Revised National Curriculum Statement: A dilemma. Pythagoras 2005, 2005, 48–58.

- Abbasi-Ghahramanloo, A.; Abedi, M.; Shirdel, Y.; Moradi-Asl, E. Examining the Degree of Compliance of the Continuing Public Health Bachelor’s Curriculum with the Job Needs of Healthcare Networks. J. Health 2024, 15, 180–186.

- Fazeli, S.; Esmaeili, A.; Mohammadi, Y.; Raeisoon, M. Investigating the Compliance of the Curriculum Content of the Psychiatric Department of Medicine (Externship and Internship) with the Future Job Needs from the Perspective of General Practitioners. Res. Med. Educ. 2021, 13, 72–79.

- Reyes, R.L.; Isleta, K.P.; Regala, J.D.; Bialba, D.M.R. Enhancing experiential science learning with virtual labs: A narrative account of merits, challenges, and implementation strategies. J. Comput. Assist. Learn. 2024, 40, 3167–3186.

- Kilani, H.; Markov, I.V.; Francis, D.; Grigorenko, E.L. Screens and Preschools: The Bilingual English Language Learner Assessment as a Curriculum-Compliant Digital Application. Children 2024, 11, 914.

- Queiroz-Neto, J.P.; Sales, D.C.; Pinheiro, H.S.; Neto, B.O. Using modern pedagogical tools to improve learning in technological contents. In Proceedings of the 2015 IEEE Frontiers in Education Conference (FIE), Washington, DC, USA, 21–24 October 2015; pp. 1–8.

- Gutiérrez-Braojos, C.; Montejo-Gámez, J.; Marín-Jiménez, A.E.; Poza-Vilches, F. A review of educational innovation from a knowledge-building pedagogy perspective. In The Future of Innovation and Technology in Education: Policies and Practices for Teaching and Learning Excellence; Emerald Publishing: Leeds, UK, 2018; pp. 41–54.

- Mishra, S. The world in the classroom: Using film as a pedagogical tool. Contemp. Educ. Dialogue 2018, 15, 111–116.

- Lee, H.Y.; Chen, P.H.; Wang, W.S.; Huang, Y.M.; Wu, T.T. Empowering ChatGPT with guidance mechanism in blended learning: Effect of self-regulated learning, higher-order thinking skills, and knowledge construction. Int. J. Educ. Technol. High. Educ. 2024, 21, 16.

- Liu, G.; Fang, N. The effects of enhanced hands-on experimentation on correcting student misconceptions about work and energy in engineering mechanics. Res. Sci. Technol. Educ. 2023, 41, 462–481.

- Kowalski, P.; Taylor, A.K. Reducing students’ misconceptions with refutational teaching: For long-term retention, comprehension matters. Scholarsh. Teach. Learn. Psychol. 2017, 3, 90.

- Liu, G.; Fang, N. Student misconceptions about force and acceleration in physics and engineering mechanics education. Int. J. Eng. Educ. 2016, 32, 19–29.

- Thomas, C.L.; Kirby, L.A. Situational interest helps correct misconceptions: An investigation of conceptual change in university students. Instr. Sci. 2020, 48, 223–241.

- Moosapoor, M. New teachers’ awareness of mathematical misconceptions in elementary students and their solution provision capabilities. Educ. Res. Int. 2023, 2023, 4475027.

- Kapenieks, J. User-friendly e-learning environment for educational action research. Procedia Comput. Sci. 2013, 26, 121–142.

- Navas, C. User-Friendly Digital Tools: Boosting Student Engagement and Creativity in Higher Education. Eur. Public Soc. Innov. Rev. 2025, 10, 1–17.

- Park, H.; Song, H.D. Make e-learning effortless! Impact of a redesigned user interface on usability through the application of an affordance design approach. J. Educ. Technol. Soc. 2015, 18, 185–196.

- Pham, M.; Singh, K.; Jahnke, I. Socio-technical-pedagogical usability of online courses for older adult learners. Interact. Learn. Environ. 2023, 31, 2855–2871.

- Rakic, S.; Softic, S.; Andriichenko, Y.; Turcin, I.; Markoski, B.; Leoste, J. Usability Platform Test: Evaluating the Effectiveness of Educational Technology Applications. In Proceedings of the International Conference on Interactive Collaborative Learning, Tallinn, Estonia, 24–27 September 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 250–258.

- Lefkos, I.; Mitsiaki, M. Users’ preferences for pedagogical e-content: A utility/usability survey on the Greek illustrated science dictionary for school. In Research on e-Learning and ICT in Education: Technological, Pedagogical and Instructional Perspectives; Springer International Publishing: Cham, Switzerland, 2021; pp. 197–217.

- Balanyà Rebollo, J.; De Oliveira, J.M. Teachers’ evaluation of the usability of a self-assessment tool for mobile learning integration in the classroom. Educ. Sci. 2024, 14, 1.

- Almusharraf, A.I. An Investigation of University Students’ Perceptions of Learning Management Systems: Insights for Enhancing Usability and Engagement. Sustainability 2024, 16, 10037.

- Uchima-Marin, C.; Murillo, J.; Salvador-Acosta, L.; Acosta-Vargas, P. Integration of Technological Tools in Teaching Statistics: Innovations in Educational Technology for Sustainable Education. Sustainability 2024, 16, 8344.

- Ngoie, R.B.M.; Bansimba, J.R.; Mpolo, F.; Bazangika, R.; Sakulu, J.A.; Mbaka, R.; Bonkile, F. A Hybrid approach combining Conjoint Analysis and the Analytic Hierarchy Process for multicriteria group decision-making. Int. J. Anal. Hierarchy Process 2025, 17.

- Ngoie, R.B.M.; Dibakidi, O.; Mbaka, R.; Sakulu, J.A.; Musoni, D. Combining AHP, TOPSIS and Conjoint Analysis to rank shopping centers in the locality of Mbanza-Ngungu. In Proceedings of the International Symposium on the Analytic Hierarchy Process, Virtual Conference, 15–18 December 2022. Paper presentation, DRC.

- Hong, B.X.; Ichihashi, M.; Ngoc, N.T.B. Analysis of Consumer Preferences for Green Tea Products: A Randomized Conjoint Analysis in Thai Nguyen, Vietnam. Sustainability 2024, 16, 4521.

- Ngoie, R.B.M.; Kamwa, E.; Ulungu, B. Joint use of the mean and median for multi criteria decision support: The 3MCD method. Econ. Bull. 2019, 39, 1602–1611.

- Balinski, M.; Laraki, R. Majority Judgment: Measuring, Ranking, and Electing; MIT Press: Cambridge, MA, USA, 2011.

- Saaty, T.L. The analytic hierarchy process (AHP). J. Oper. Res. Soc. 1980, 41, 1073–1076.

- Hwang, C.L. Multiple Attributes Decision Making. Methods and Applications; CRC Press: Boca Raton, FL, USA, 1981.

- Roy, B. Classement et choix en présence de points de vue multiples. Rev. Fr. Inform. Rech. Oper. 1968, 2, 57–75.

- Figueira, J.R.; Greco, S.; Roy, B.; Słowiński, R. ELECTRE methods: Main features and recent developments. In Handbook of Multicriteria Analysis; Springer: Berlin/Heidelberg, Germany, 2010; pp. 51–89.

- Roy, B.; Bertier, P. La Méthode ELECTRE II; Technical Report; METRA International: Paris, France, 1973.

- Mousseau, V.; Slowinski, R.; Zielniewicz, P. ELECTRE TRI 2.0a: Methodological Guide and User’s Manual; Document du Lamsade; Université Paris–Dauphine: Paris, France, 1999; Volume 111, pp. 263–275.

- Brans, J.P.; Vincke, P. Note: A Preference Ranking Organisation Method (The PROMETHEE Method for Multiple Criteria Decision-Making). Manag. Sci. 1985, 31, 647–656.

- Brans, J.P.; Vincke, P.; Mareschal, B. How to select and how to rank projects: The PROMETHEE method. Eur. J. Oper. Res. 1986, 24, 228–238.

- Figueira, J.; Greco, S.; Ehrgott, M.; Brans, J.P.; Mareschal, B. PROMETHEE methods. In Multiple Criteria Decision Analysis: State of the Art Surveys; Springer International Publishing: Cham, Switzerland, 2005; pp. 163–186.

- Greco, S.; Ehrgott, M.; Figueira, J. ELECTRE methods. In Multiple Criteria Decision Analysis: State of the Art Surveys; Springer: New York, NY, USA, 2016; pp. 155–185.

- Maystre, L.Y.; Pictet, J.; Simos, J. Méthodes Multicritères ELECTRE: Description, Conseils Pratiques et Cas d’Application à la Gestion Environnementale; EPFL Press: Lausanne, Switzerland, 1994; Volume 8.

- Roy, B. The outranking approach and the foundations of ELECTRE methods. Theory Decis. 1991, 31, 49–73.

- Behzadian, M.; Kazemzadeh, R.B.; Albadvi, A.; Aghdasi, M. PROMETHEE: A comprehensive literature review on methodologies and applications. Eur. J. Oper. Res. 2010, 200, 198–215.

- Brans, J.P.; Mareschal, B. Promethee Methods. Available online: https://link.springer.com/chapter/10.1007/0-387-23081-5_5 (accessed on 2 July 2025).

- Davies, J.; Katsirelos, G.; Narodytska, N.; Walsh, T.; Xia, L. Complexity of and algorithms for the manipulation of Borda, Nanson’s and Baldwin’s voting rules. Artif. Intell. 2014, 217, 20–42.

- Brams, S.J.; Fishburn, P.C. Voting procedures. In Handbook of Social Choice and Welfare; North Holland: Amsterdam, The Netherlands, 2002; Volume 1, pp. 173–236.

- Balinski, M.; Laraki, R. Majority judgment vs. majority rule. Soc. Choice Welf. 2020, 54, 429–461.

- Ngoie, R.B.M.; Kasereka, S.K.; Sakulu, J.A.B.; Kyamakya, K. Mean-Median Compromise Method: A Novel Deepest Voting Function Balancing Range Voting and Majority Judgment. Mathematics 2024, 12, 3631.

- Ngoie, R.B.M.; Savadogo, Z.; Ulungu, B.E.L. New Prospects in Social Choice Theory: Median and Average as Tools for Measuring, Electing and Ranking. Adv. Stud. Contemp. Math. 2015, 25, 19–38.

- Cardy, R.L.; Bernardin, H.J.; Abbott, J.G.; Senderak, M.P.; Taylor, K. The effects of individual performance schemata and dimension familiarization on rating accuracy. J. Occup. Psychol. 1987, 60, 197–205.

- Govaerts, M.J.; Schuwirth, L.W.; Van der Vleuten, C.P.; Muijtjens, A.M. Workplace-based assessment: Effects of rater expertise. Adv. Health Sci. Educ. 2011, 16, 151–165.

- UNESCO. UNESCO Spotlights How Digital Learning Can Promote Equity in Low-Resource Contexts. 2025. Available online: https://www.unesco.org/en/articles/unesco-spotlights-how-digital-learning-can-promote-equity-low-resource-contexts (accessed on 24 June 2025).

- Graham, C.R. Blended learning systems. Handb. Blended Learn. Glob. Perspect. Local Des. 2006, 1, 3–21.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).