A Map Information Collection Tool for a Pedestrian Navigation System Using Smartphone

Abstract

1. Introduction

- Usability in real-world settings: How intuitive and effective is the system for novice users navigating complex indoor environments (e.g., buildings with non-intuitive corridors), particularly where GPS or pre-mapped data are unavailable?

- Data quality assurance: How can the accuracy and completeness of the automatically collected map data (e.g., room numbers and occupant details) be validated and maintained, given inconsistencies in signage and web sources?

2. Related Works

2.1. Pedestrian Navigation System

2.2. Map Information Collection

3. Adopted Technologies

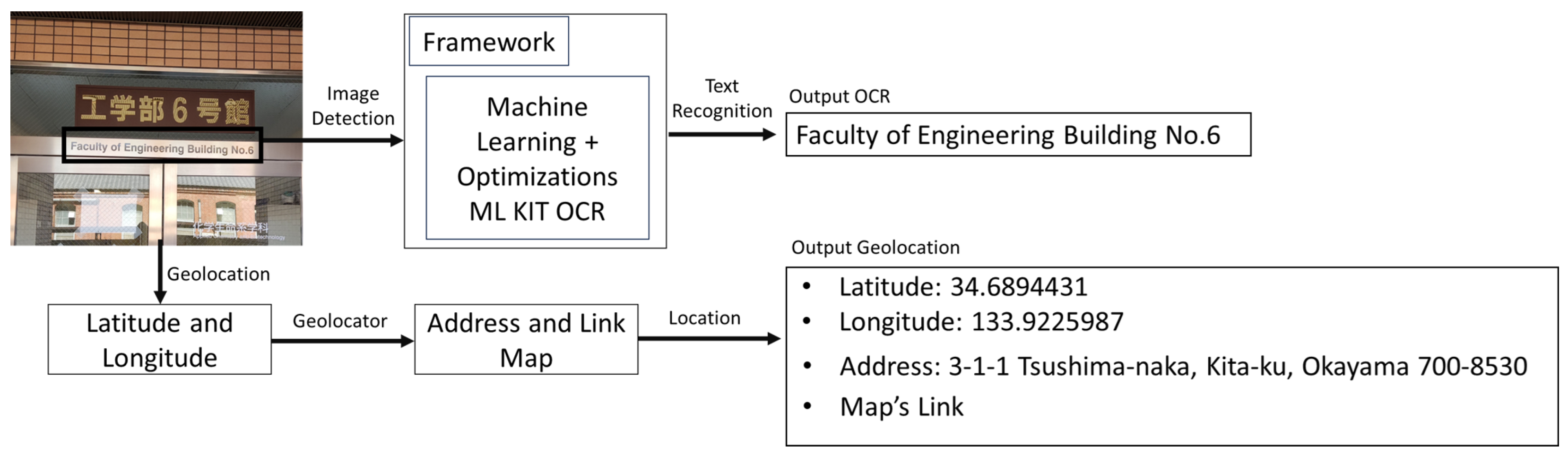

3.1. ML Kit for OCR and Geolocation for Data Collection

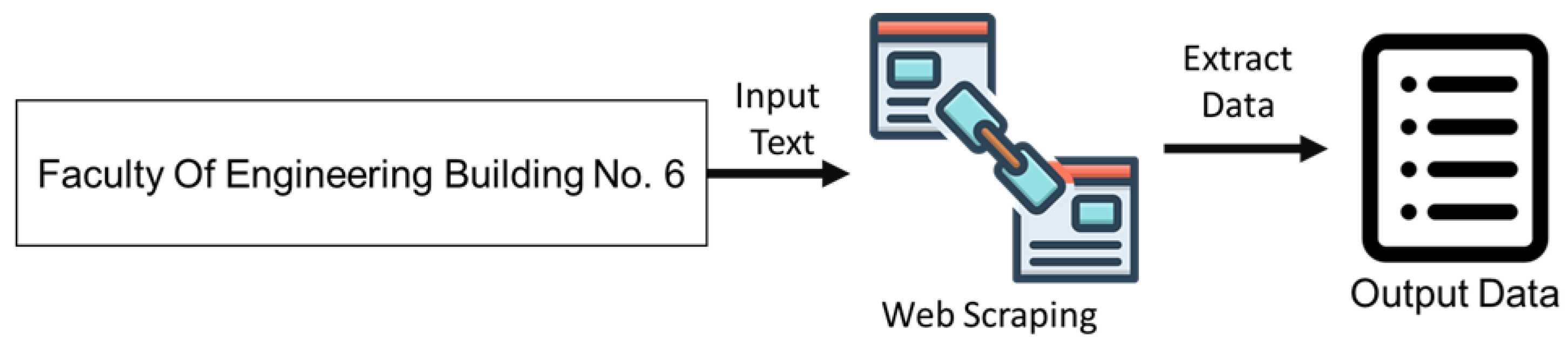

3.2. Web Scraping with Scrapy Framework

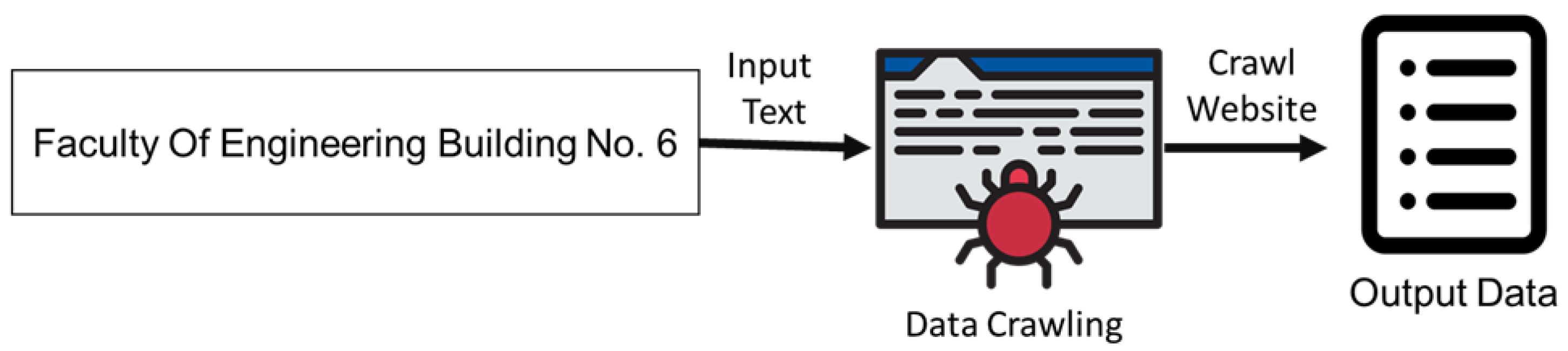

3.3. Data Crawling with Apache Nutch

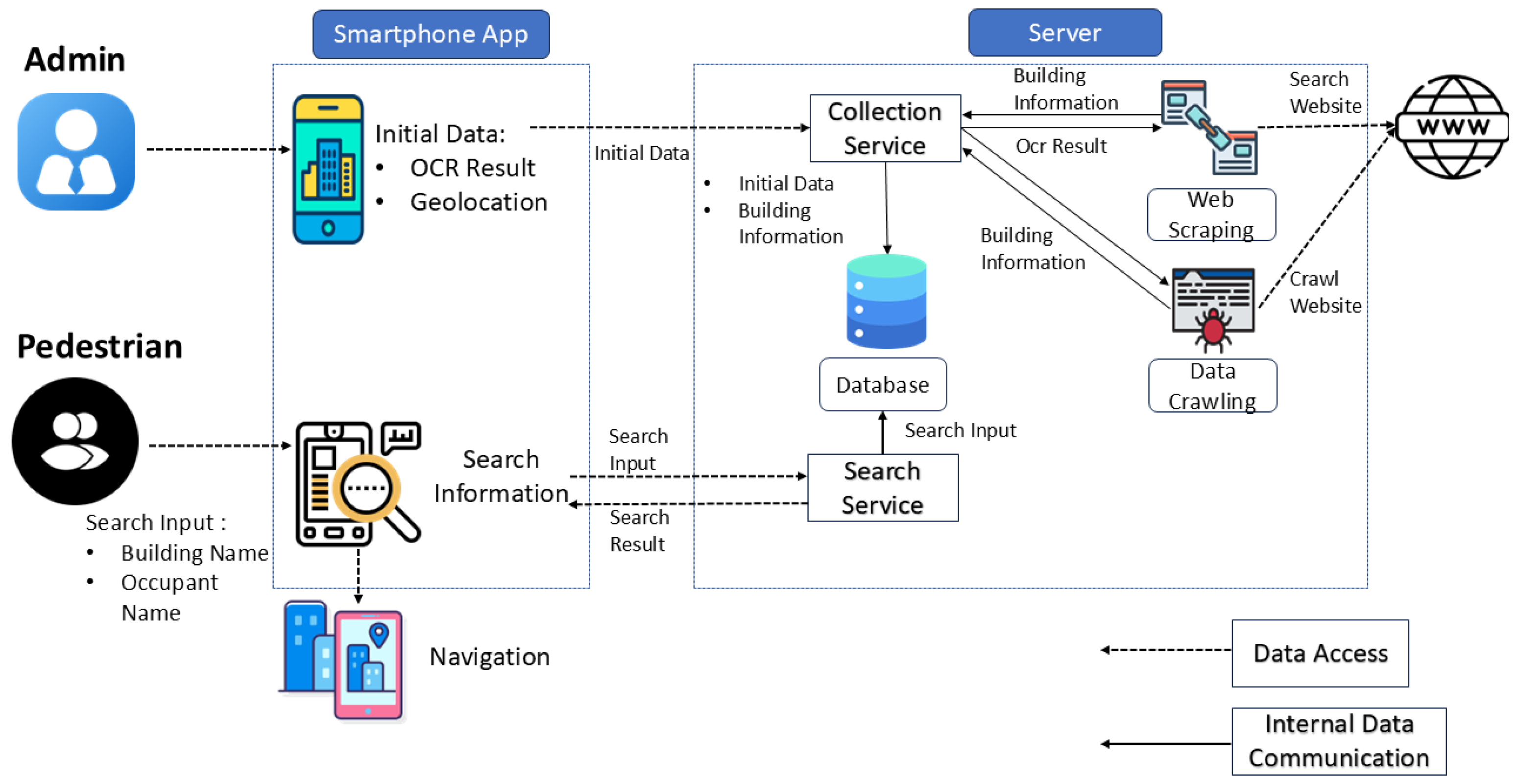

4. Design of the Map Information Collection Tool

4.1. Tool Overview

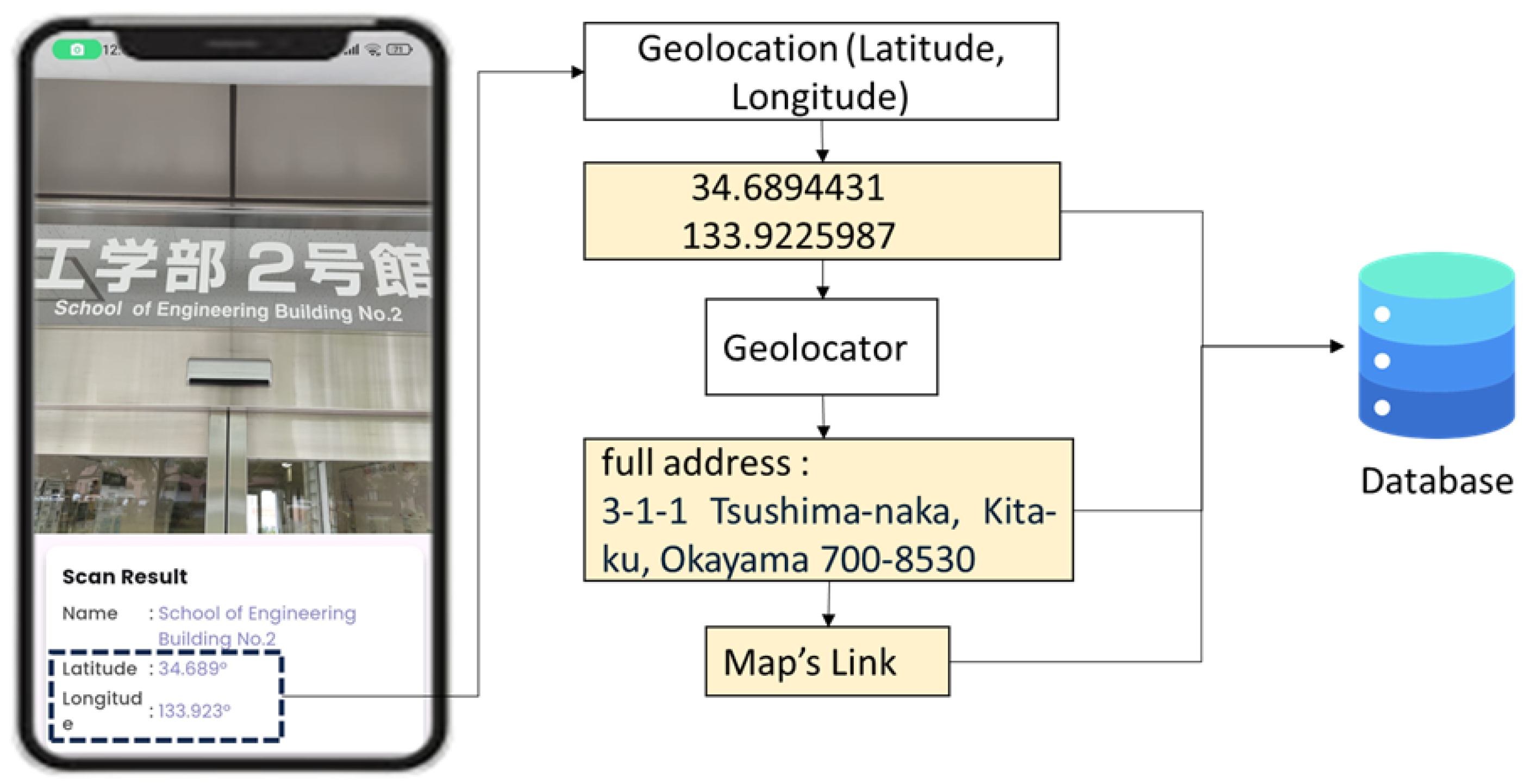

4.2. Image Capture and Geolocation Integration

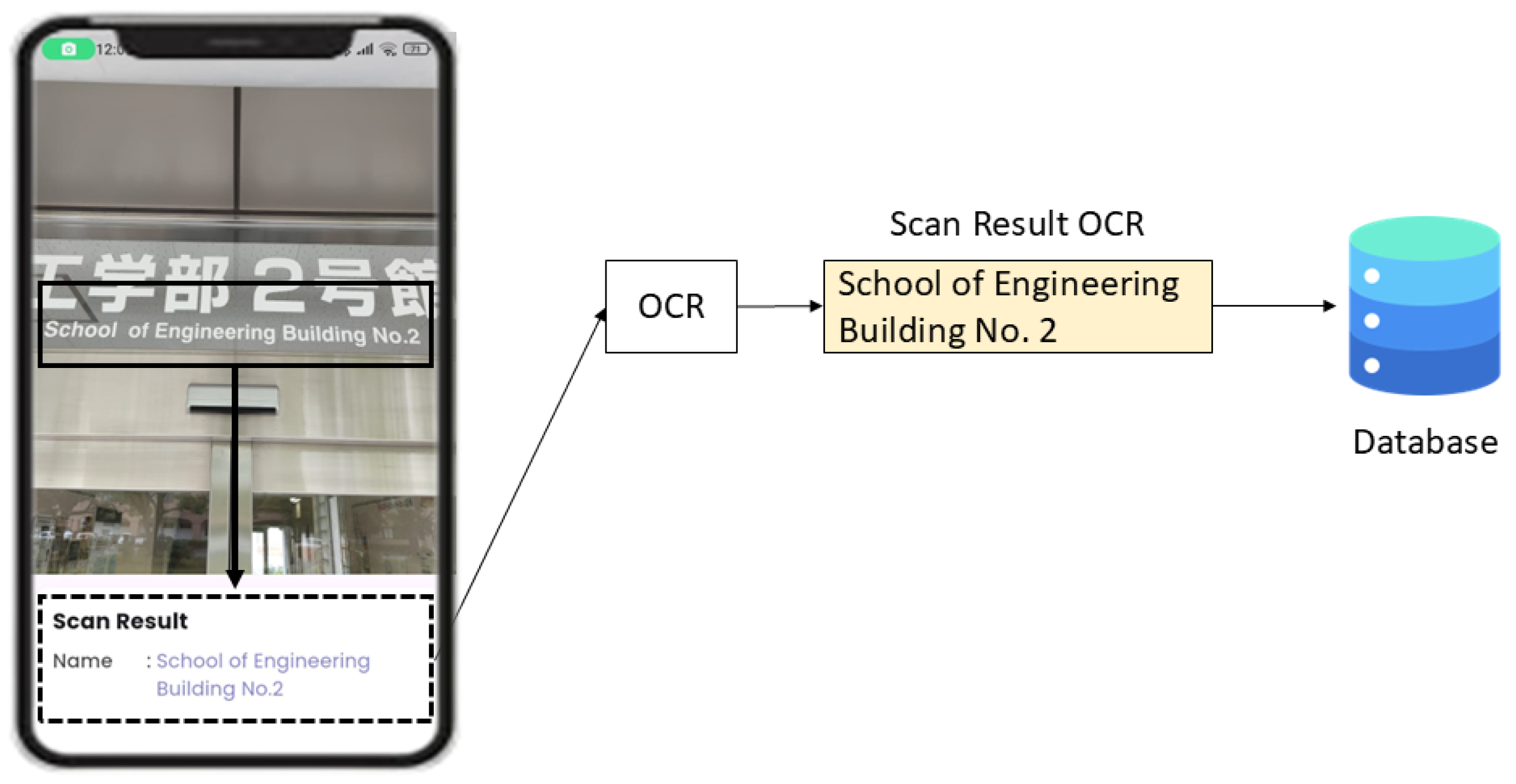

4.3. Text Extraction Using OCR

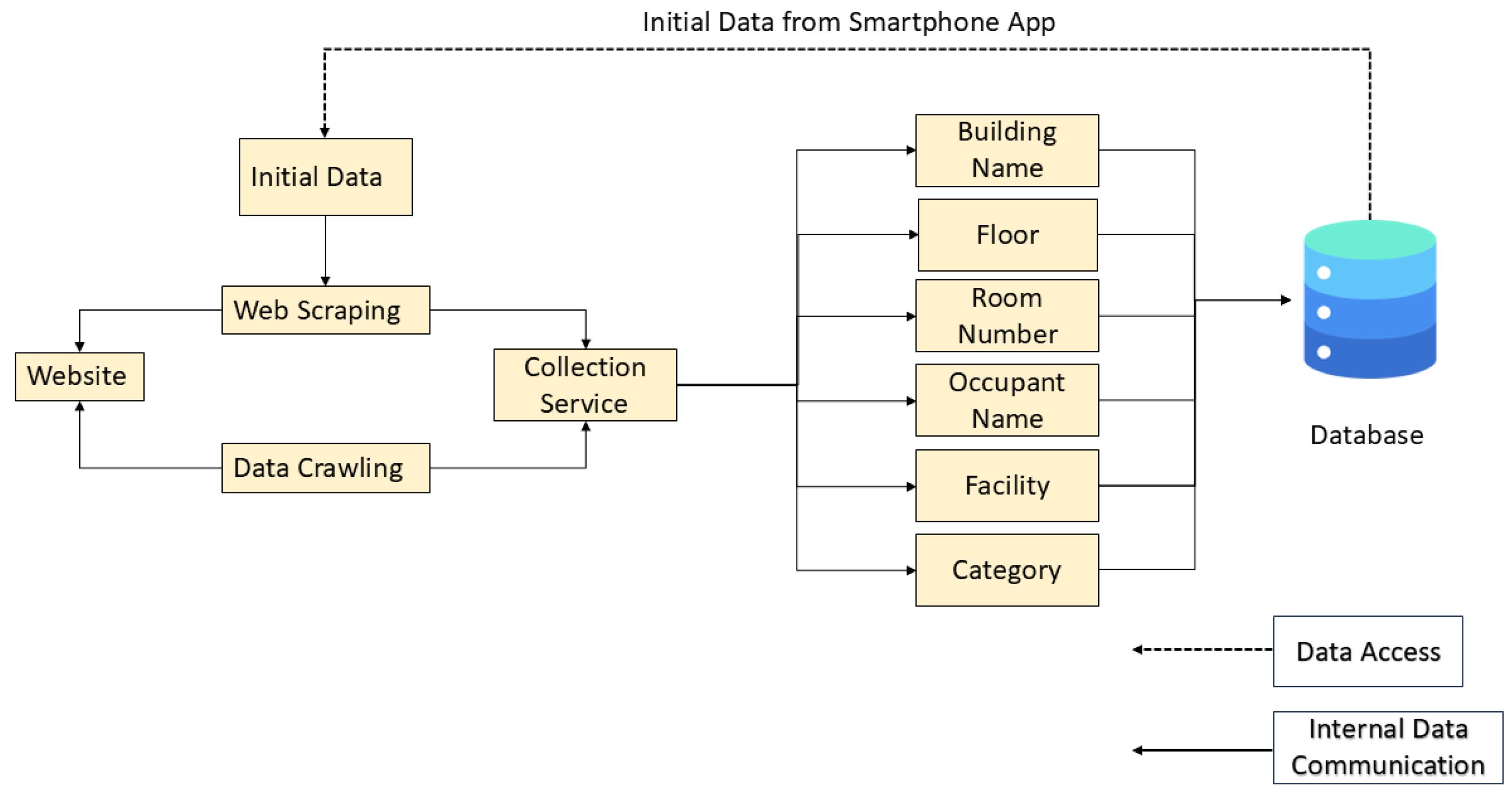

4.4. Information Collection Using Web Scraping and Crawling

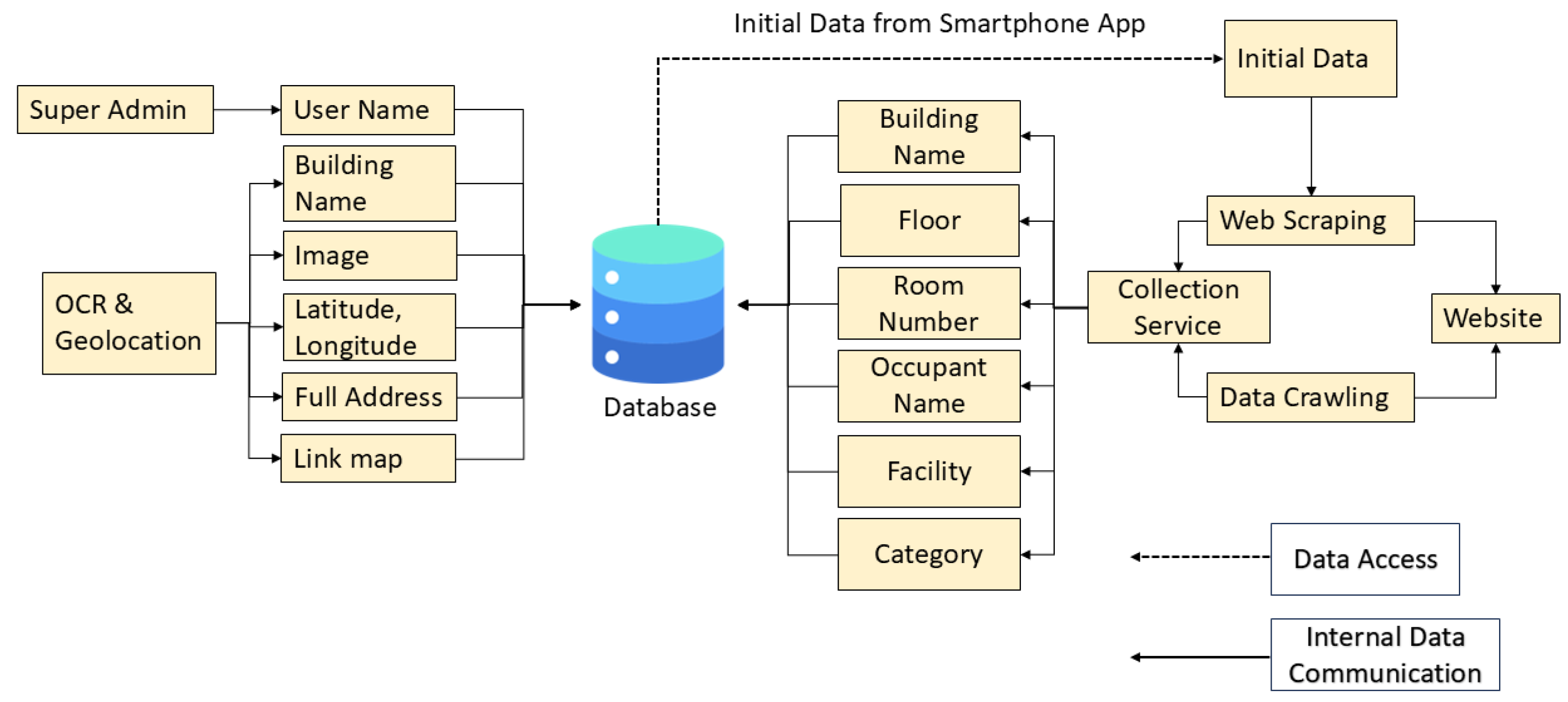

4.5. Data in Database

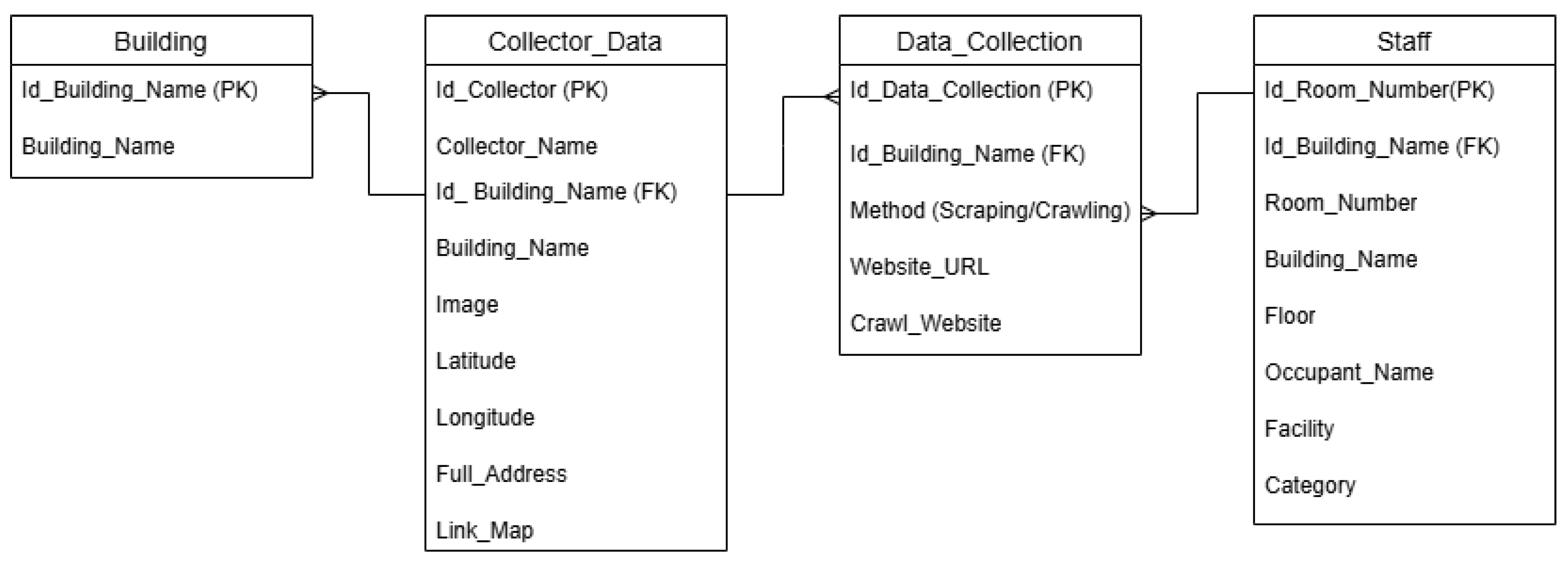

4.6. Database Schema for Building Data

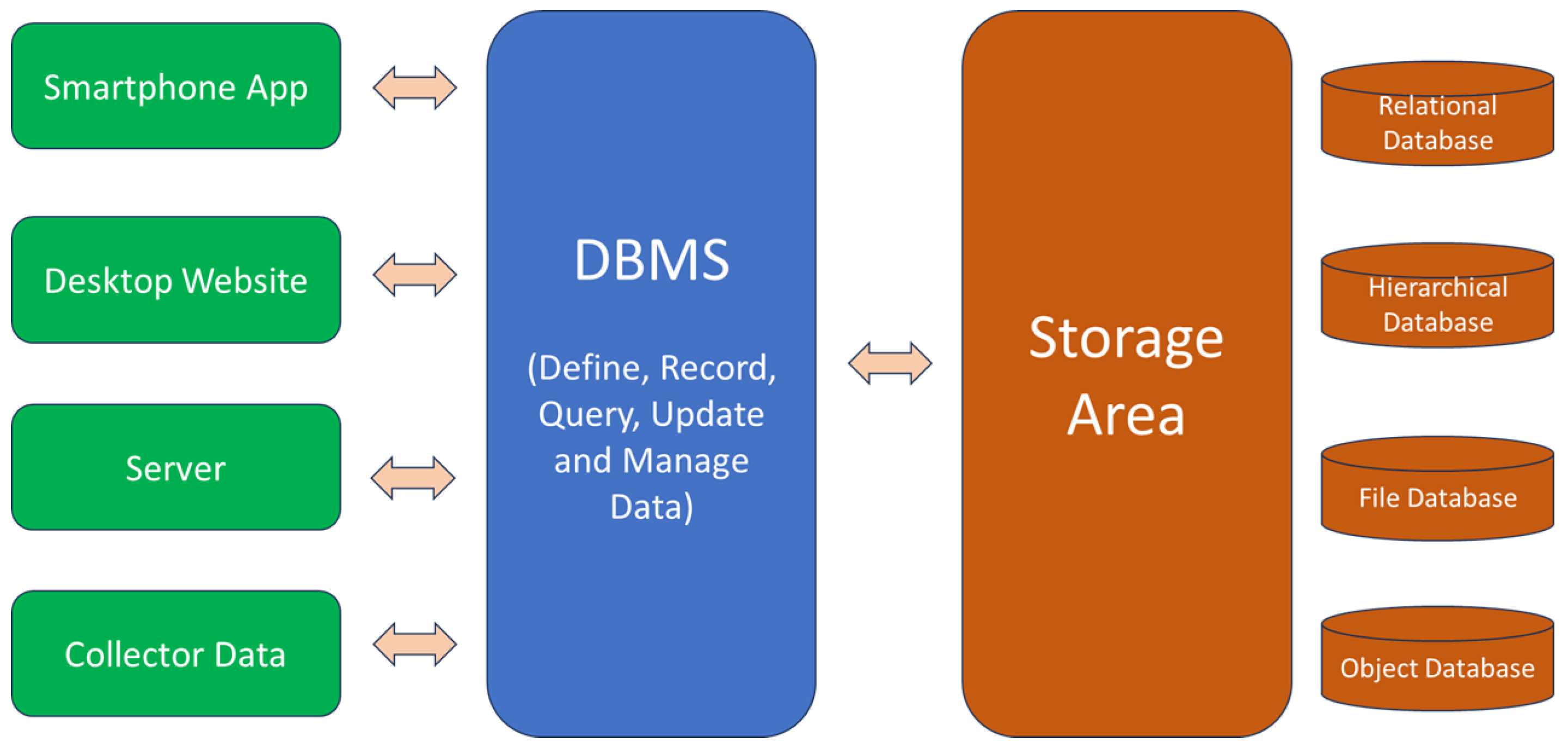

4.7. Data Management Overview of Database

5. Implementation of Map Information Collection Tool

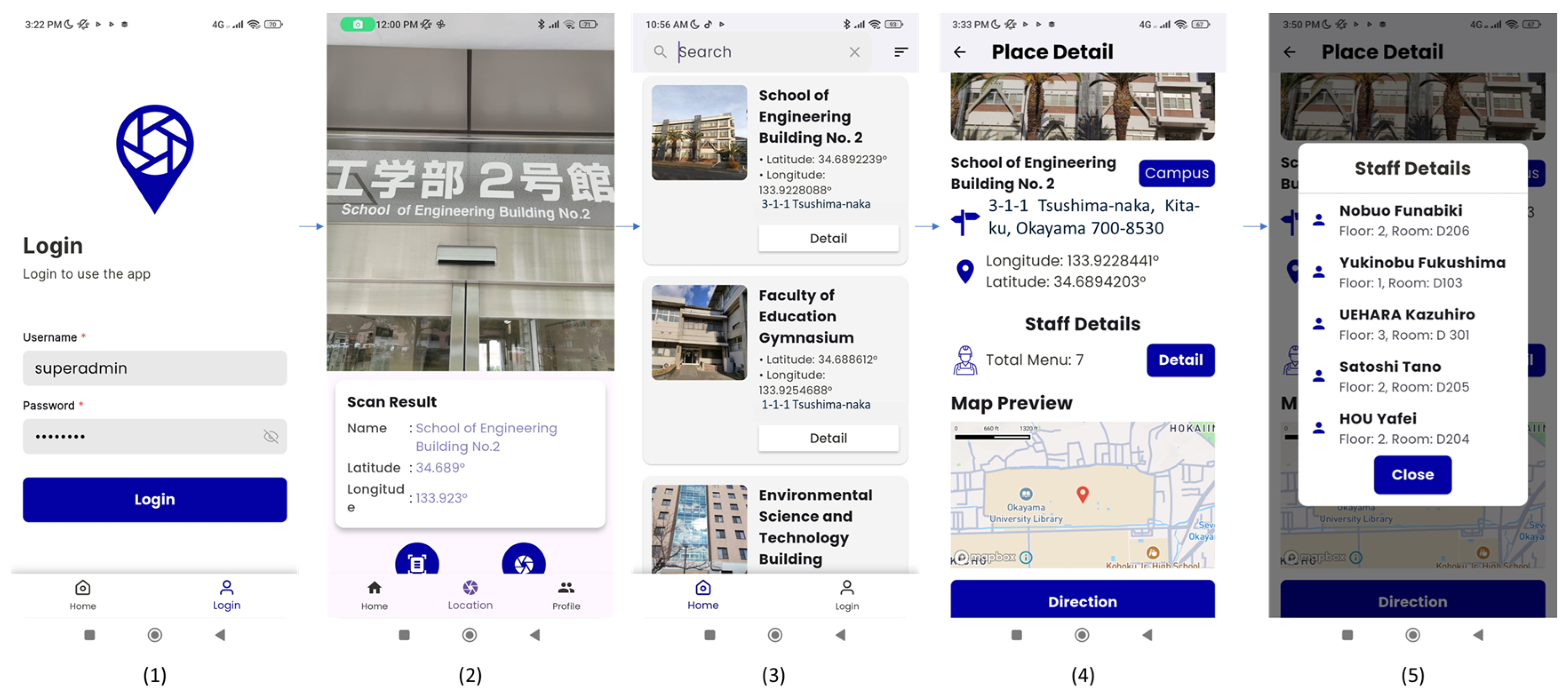

5.1. Data Collection Interface

5.2. Implementation of the Database Storage Interface

5.2.1. Integration of OCR and Geolocation for Campus Navigation

5.2.2. Integration Table Web Scraping and Crawling

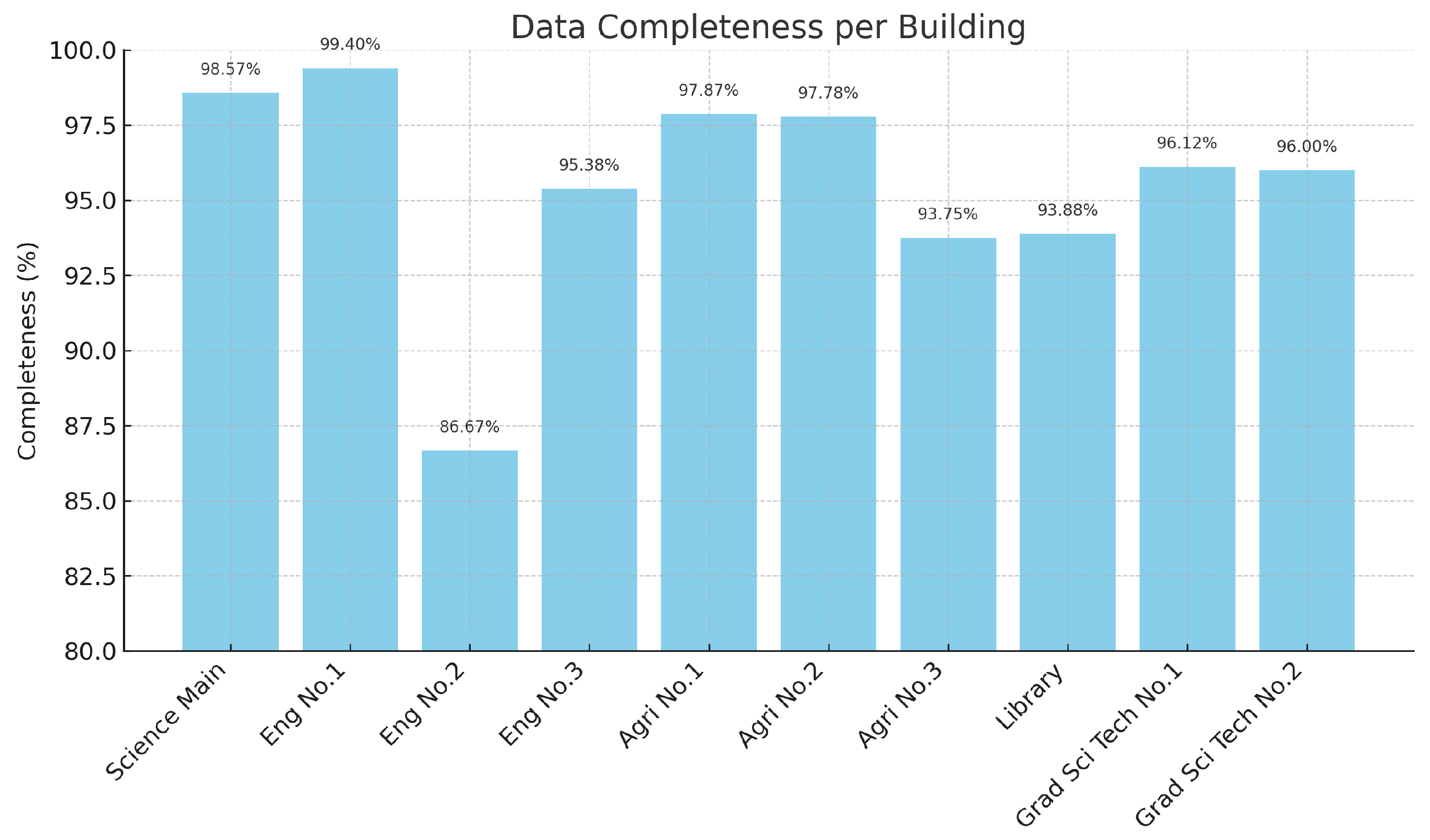

5.2.3. Data Collection Results

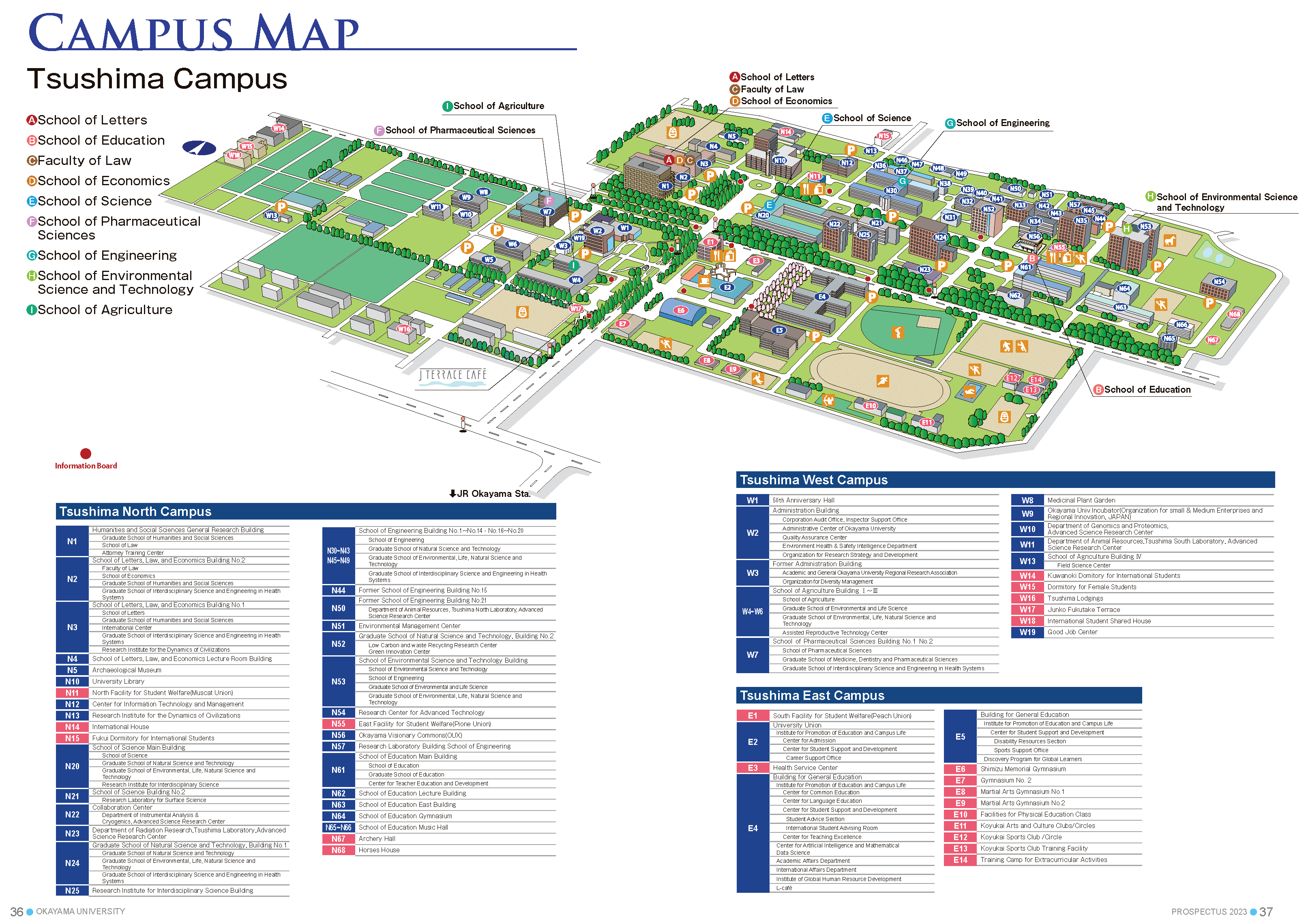

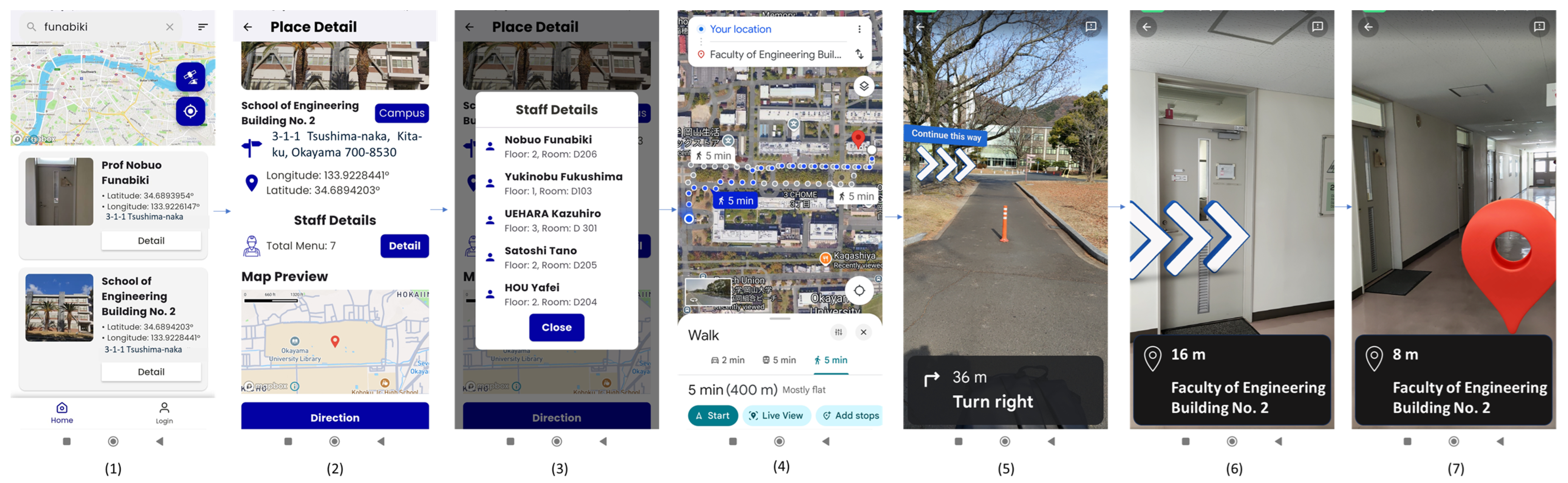

5.3. Pedestrian Navigation Interface

6. Evaluations

6.1. Data Collection Evaluation

6.2. Pedestrian Navigation Evaluation

6.2.1. Pre-Test and Post-Test

6.2.2. Pre-Test Result

6.2.3. System Usability Scale Result

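- For each odd-numbered item ($i = 1, 3, 5, 7, 9$), compute $x_i' = x_i - 1$, where $x_i$ is the respondent’s score for item $i$.

- For each even-numbered item ($i = 2, 4, 6, 8, 10$), compute $x_i' = 5 - x_i$.

- Sum all adjusted scores: $S = \sum_{i=1}^{10} x_i'$.

- Multiply the total score by 2.5 to obtain the SUS score for that respondent: $\mathrm{SUS} = 2.5 \times S$.

- If there are $n$ respondents, the average SUS score is computed as $\overline{\mathrm{SUS}} = \frac{1}{n} \sum_{j=1}^{n} \mathrm{SUS}_j$.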
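The scoring steps above can be expressed as a short Python function. This is a minimal sketch of the standard SUS calculation listed above, not the authors' own evaluation code; the function names `sus_score` and `average_sus` are illustrative, and responses are assumed to be integers on the 1–5 Likert scale, ordered Q1 to Q10.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """SUS score (0-100) for one respondent's ten answers on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("each response must be on the 1-5 scale")
    total = 0
    for i, x in enumerate(responses, start=1):
        # Odd (positively worded) items contribute x_i - 1;
        # even (negatively worded) items contribute 5 - x_i.
        total += (x - 1) if i % 2 == 1 else (5 - x)
    # Each adjusted score lies in 0..4, so the sum (0..40) times 2.5 gives 0..100.
    return total * 2.5


def average_sus(all_responses: list[list[int]]) -> float:
    """Mean SUS score over n respondents."""
    return mean(sus_score(r) for r in all_responses)
```

For example, `sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])` returns 100.0, matching participant 1 in the per-participant results table; applying the function to the other response rows of that table is a quick way to cross-check the reported scores.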

6.3. Overall Evaluation Results

6.4. Discussions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Aspect | Web Scraping | Data Crawling |
|---|---|---|
| Objective | To extract specific data elements from identified web pages | To discover and index web pages relevant to the input text |
| Function | To retrieve structured or unstructured data from page content | To navigate through multiple URLs to locate data sources |
| Input | Specific web pages (typically obtained from crawling results) | User-defined keywords or seed URLs |
| Output | Targeted data such as titles, prices, or metadata | A list of relevant URLs or page structures |
| Technology Used | Parsers and HTML extractors, e.g., BeautifulSoup or Scrapy | Crawlers, spiders, and URL explorers |
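The division of work in this table, where crawling discovers pages and scraping extracts specific fields from them, can be illustrated with Python's standard library alone. This is a hedged sketch rather than the tool's implementation: it does not use Scrapy or Apache Nutch (the adopted technologies, which add scheduling, politeness rules, and persistence), and the page layout (`td` cells with `class="name"` and `class="room"`), the `example.edu` URLs, and all class and function names are hypothetical. Pages are served from an in-memory dictionary so the example runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Crawling side: collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


class StaffRoomScraper(HTMLParser):
    """Scraping side: extract name/room pairs from a hypothetical table layout."""

    FIELDS = ("name", "room")

    def __init__(self):
        super().__init__()
        self.records: list[dict[str, str]] = []
        self._row: dict[str, str] = {}
        self._field = None
        self._buf: list[str] = []

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "td" and cls in self.FIELDS:
            self._field, self._buf = cls, []

    def handle_data(self, data):
        if self._field is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._field is not None:
            self._row[self._field] = "".join(self._buf).strip()
            self._field = None
        elif tag == "tr" and self._row:
            self.records.append(self._row)
            self._row = {}


def crawl(seed: str, fetch, max_pages: int = 50) -> list[str]:
    """Breadth-first URL discovery; `fetch(url)` returns HTML or None."""
    seen, queue, visited = {seed}, deque([seed]), []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        collector = LinkCollector(url)
        collector.feed(html)
        for link in collector.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


def scrape(html: str) -> list[dict[str, str]]:
    """Run the scraper over one page and return the extracted records."""
    scraper = StaffRoomScraper()
    scraper.feed(html)
    scraper.close()
    return scraper.records


if __name__ == "__main__":
    # Hypothetical offline "site" standing in for real HTTP fetches.
    pages = {
        "https://example.edu/": '<a href="/staff">Staff</a> <a href="/news">News</a>',
        "https://example.edu/staff": '<table><tr><td class="name">Nobuo Funabiki</td>'
                                     '<td class="room">D206</td></tr></table>',
        "https://example.edu/news": "<p>No staff data here.</p>",
    }
    for url in crawl("https://example.edu/", pages.get):
        for record in scrape(pages[url]):
            print(url, record)
```

The crawler visits all three pages, but only the staff page yields a record, which mirrors the table: crawling outputs a list of URLs, and scraping turns selected pages into targeted data.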

| No. | Building Name | Staff Name | Floor | Room |
|---|---|---|---|---|
| 1 | School of Science Main Building | Koji Yoshimura | 1 | 101 |
| 2 | School of Engineering Building No. 1 | Tomoya Miura | 3 | A306 |
| 3 | School of Engineering Building No. 2 | Nobuo Funabiki | 2 | D206 |
| 4 | School of Engineering Building No. 2 | Htoo Htoo Sandi Kyaw | 3 | D308 |
| 5 | School of Engineering Building No. 3 | Yasuki Nogami | 2 | E219 |
| 6 | Faculty of Agriculture Building No. 1 | Koichiro Ushijima | 2 | 1267 |
| 7 | Faculty of Agriculture Building No. 2 | Tamura Takashi | 3 | 2325 |
| 8 | Faculty of Agriculture Building No. 3 | Hiroaki Funahashi | 2 | 3203 |
| 9 | Graduate School of Natural Science and Technology Building No. 1 | Kondo Kei | 3 | D303 |
| 10 | Graduate School of Natural Science and Technology Building No. 2 | Shinichi Nishimura | 1 | 116 |
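Records like those in the table above could be stored and queried for navigation lookups, for example to find which building, floor, and room a staff member occupies. The following sketch uses Python's built-in `sqlite3` module with a hypothetical single-table layout named `staff_room`; the tool's actual database schema (Section 4.6) may differ, and the helper names are illustrative. Room labels are stored as text because they mix letters and digits (e.g., D206).

```python
import sqlite3

# Hypothetical single-table schema mirroring the columns of the sample table.
SCHEMA = """
CREATE TABLE IF NOT EXISTS staff_room (
    id       INTEGER PRIMARY KEY,
    building TEXT NOT NULL,
    staff    TEXT NOT NULL,
    floor    INTEGER NOT NULL,
    room     TEXT NOT NULL  -- text, since labels such as 'D206' mix letters and digits
)
"""

# A few rows copied from the sample table.
SAMPLE_ROWS = [
    ("School of Science Main Building", "Koji Yoshimura", 1, "101"),
    ("School of Engineering Building No. 1", "Tomoya Miura", 3, "A306"),
    ("School of Engineering Building No. 2", "Nobuo Funabiki", 2, "D206"),
    ("School of Engineering Building No. 2", "Htoo Htoo Sandi Kyaw", 3, "D308"),
]


def build_db(rows) -> sqlite3.Connection:
    """Create an in-memory database and load (building, staff, floor, room) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO staff_room (building, staff, floor, room) VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()
    return conn


def locate(conn: sqlite3.Connection, staff_name: str):
    """Return (building, floor, room) for a staff member, or None if unknown."""
    return conn.execute(
        "SELECT building, floor, room FROM staff_room WHERE staff = ?",
        (staff_name,),
    ).fetchone()


def rooms_in_building(conn: sqlite3.Connection, building: str) -> list[str]:
    """List the known room labels in one building, sorted."""
    rows = conn.execute(
        "SELECT room FROM staff_room WHERE building = ? ORDER BY room",
        (building,),
    ).fetchall()
    return [room for (room,) in rows]
```

With `conn = build_db(SAMPLE_ROWS)`, `locate(conn, "Nobuo Funabiki")` returns `("School of Engineering Building No. 2", 2, "D206")`, which is the kind of destination a navigation front end would then route to.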

| No. | Category | Total Entries |
|---|---|---|
| 1 | Professor’s Room | 402 |
| 2 | Room | 1225 |
| 3 | Building | 38 |
| 4 | Food Menu (Muscat) | 66 |
| 5 | Food Menu (PIONE) | 52 |
| 6 | Toilet | 340 |
| 7 | Canteen | 4 |
| 8 | Bus Stop | 6 |
| 9 | POS | 2 |
| 10 | Sports Venue | 15 |

| Building Name | Total Rooms | Captured Rooms | Completeness |
|---|---|---|---|
| School of Science Main Building | 349 | 344 | 98.57% |
| School of Engineering Building No. 1 | 336 | 334 | 99.40% |
| School of Engineering Building No. 2 | 30 | 26 | 86.67% |
| School of Engineering Building No. 3 | 65 | 62 | 95.38% |
| Faculty of Agriculture Building No. 1 | 141 | 138 | 97.87% |
| Faculty of Agriculture Building No. 2 | 45 | 44 | 97.78% |
| Faculty of Agriculture Building No. 3 | 48 | 45 | 93.75% |
| Okayama University Library | 49 | 46 | 93.88% |
| Graduate School of Natural Science and Technology Building No. 1 | 129 | 124 | 96.12% |
| Graduate School of Natural Science and Technology Building No. 2 | 75 | 72 | 96.00% |
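Every completeness value in this table is consistent with Completeness = Captured Rooms / Total Rooms × 100%, rounded to two decimal places. A minimal Python sketch of that calculation follows; the function name `completeness` is illustrative, and the three buildings are taken from the table.

```python
def completeness(captured: int, total: int) -> float:
    """Percentage of a building's rooms that were captured, rounded to 2 decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= captured <= total:
        raise ValueError("captured must lie between 0 and total")
    return round(100 * captured / total, 2)


# (total rooms, captured rooms) for three buildings from the table.
BUILDINGS = {
    "School of Science Main Building": (349, 344),
    "School of Engineering Building No. 2": (30, 26),
    "Faculty of Agriculture Building No. 3": (48, 45),
}

for name, (total, captured) in BUILDINGS.items():
    print(f"{name}: {completeness(captured, total):.2f}%")
```

Running it prints 98.57%, 86.67%, and 93.75% for the three buildings, reproducing the corresponding rows of the table.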

| No. | Question | Category |
|---|---|---|
| 1 | I think I would like to use this pedestrian navigation system frequently for finding rooms or destinations inside buildings. | Usefulness |
| 2 | I found the navigation system unnecessarily complex when trying to search for room or occupant information. | Ease of Use |
| 3 | I thought the system was easy to use when navigating through building interiors. | Ease of Use |
| 4 | I think I would need technical support to use this system effectively. | Learning Curve |
| 5 | I found that the system’s features, such as image capture, OCR results, and navigation integration, were well integrated. | Efficiency |
| 6 | I noticed inconsistencies in the system, such as mismatched or unclear room information. | Error Handling |
| 7 | I believe most people would quickly learn how to use this system for indoor navigation. | Learning Curve |
| 8 | I found the system cumbersome to use when collecting or navigating with map information. | Ease of Use |
| 9 | I felt confident using this system to locate rooms or persons inside campus buildings. | Usefulness |
| 10 | I had to learn a lot before I could start effectively using the system. | Learning Curve |

| Answer | Participants | Rate |
|---|---|---|
| Yes | 10 | 100% |
| No | 0 | 0% |

| Participant | Responses (Q1–Q10) | SUS Score |
|---|---|---|
| 1 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 2 | 5, 1, 5, 1, 5, 1, 1, 2, 4, 1 | 90.0 |
| 3 | 4, 3, 4, 3, 4, 4, 4, 2, 4, 1 | 72.5 |
| 4 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 5 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 6 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 7 | 4, 2, 4, 2, 4, 1, 5, 1, 4, 2 | 87.5 |
| 8 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 9 | 5, 1, 5, 1, 5, 1, 5, 1, 5, 1 | 100.0 |
| 10 | 4, 2, 5, 2, 4, 2, 5, 1, 4, 2 | 87.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Batubulan, K.S.; Funabiki, N.; Brata, K.C.; Kotama, I.N.D.; Kyaw, H.H.S.; Hidayati, S.C. A Map Information Collection Tool for a Pedestrian Navigation System Using Smartphone. Information 2025, 16, 588. https://doi.org/10.3390/info16070588
