The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management

Abstract

1. Introduction

1.1. Artificial Intelligence and Educational Transformation: New Horizons in Teaching and Learning

1.2. Transformation of Instructional Planning and the Impact of Generative AI on Education

1.3. Artificial Intelligence and APIs in Education: Transformation, Ethical Challenges and New Research Perspectives

2. Materials and Methods

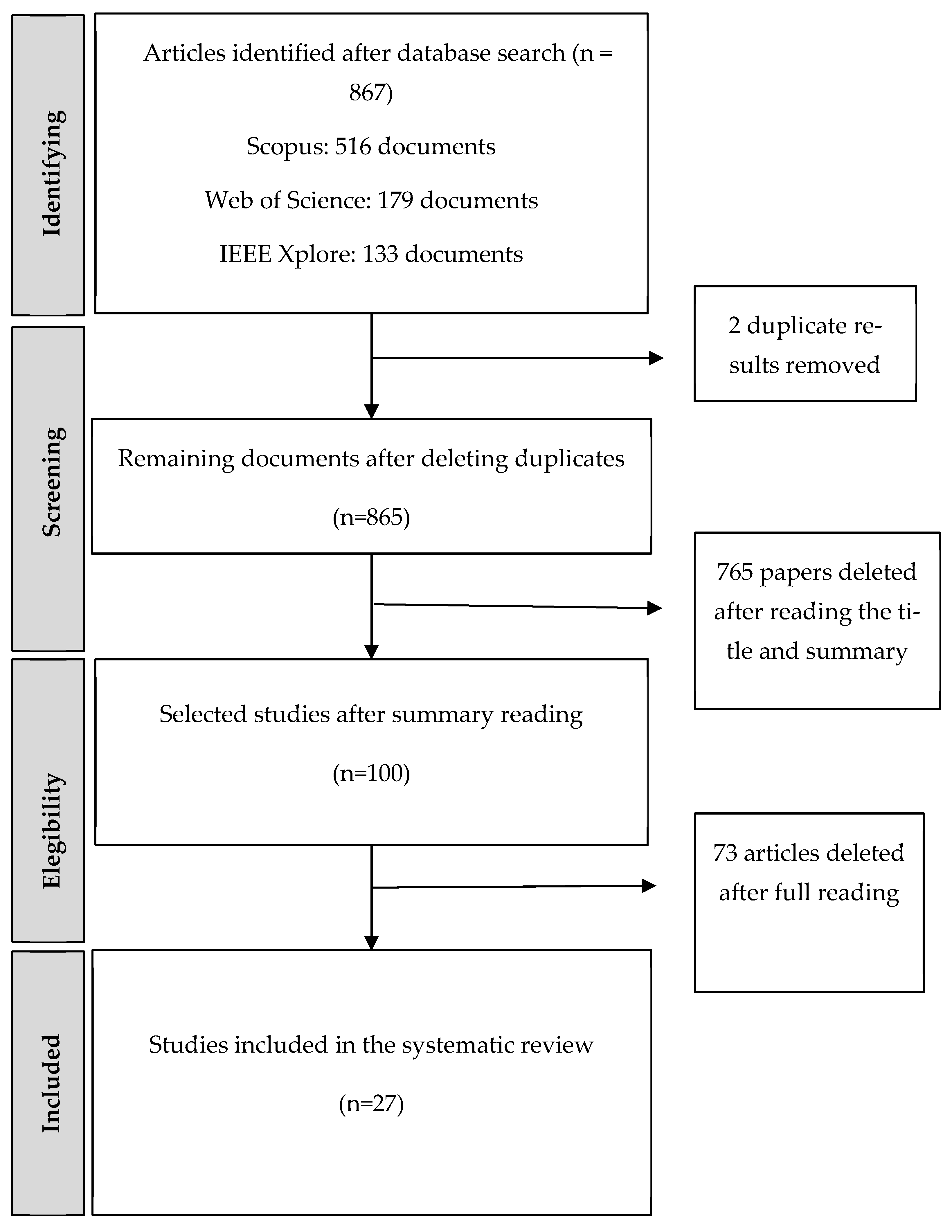

2.1. Design

2.2. Inclusion and Exclusion Criteria

2.3. Study Selection Procedure

2.4. Inter-Rater Agreement Analysis

- I = Reliability coefficient

- F = Number of judges or evaluators

- p_j = Proportion of judges who assigned a response to category j

- N = Number of items or units assessed

- k = Number of possible response categories
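The formula that combined these symbols did not survive extraction, so the exact coefficient the authors used cannot be recovered from this text. Its inputs (F judges, N items, k categories and the proportions p_j) match those of a Fleiss-type chance-corrected agreement coefficient, and the sketch below computes that coefficient under this assumption only. It is not necessarily the authors' formula. The `counts` layout and the example matrix are illustrative and are not data from this review.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss-type chance-corrected agreement (assumed form, see lead-in).

    counts[i][j] is the number of judges who assigned item i to category j,
    for N items (rows) and k categories (columns). Every row must sum to the
    same number of judges F >= 2.
    """
    N = len(counts)
    k = len(counts[0])
    F = sum(counts[0])
    if F < 2 or any(len(row) != k or sum(row) != F for row in counts):
        raise ValueError("every item needs k counts summing to the same F >= 2")

    # p_j: proportion of all N*F judgments that fall in category j
    p = [sum(row[j] for row in counts) / (N * F) for j in range(k)]

    # Observed agreement for item i: share of judge pairs that agree on it
    P_items = [sum(n * (n - 1) for n in row) / (F * (F - 1)) for row in counts]
    P_bar = sum(P_items) / N        # mean observed agreement across items
    P_e = sum(pj * pj for pj in p)  # agreement expected by chance
    if P_e == 1:
        raise ValueError("all judgments fall in one category; the coefficient is undefined")
    return (P_bar - P_e) / (1 - P_e)


# Illustrative matrix (not data from this review): N = 10 items,
# F = 14 judges, k = 5 categories.
ratings = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(ratings), 3))  # 0.21
```

A value of 1 indicates perfect agreement among the judges. A value of 0 indicates agreement no better than chance, given the category proportions p_j.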

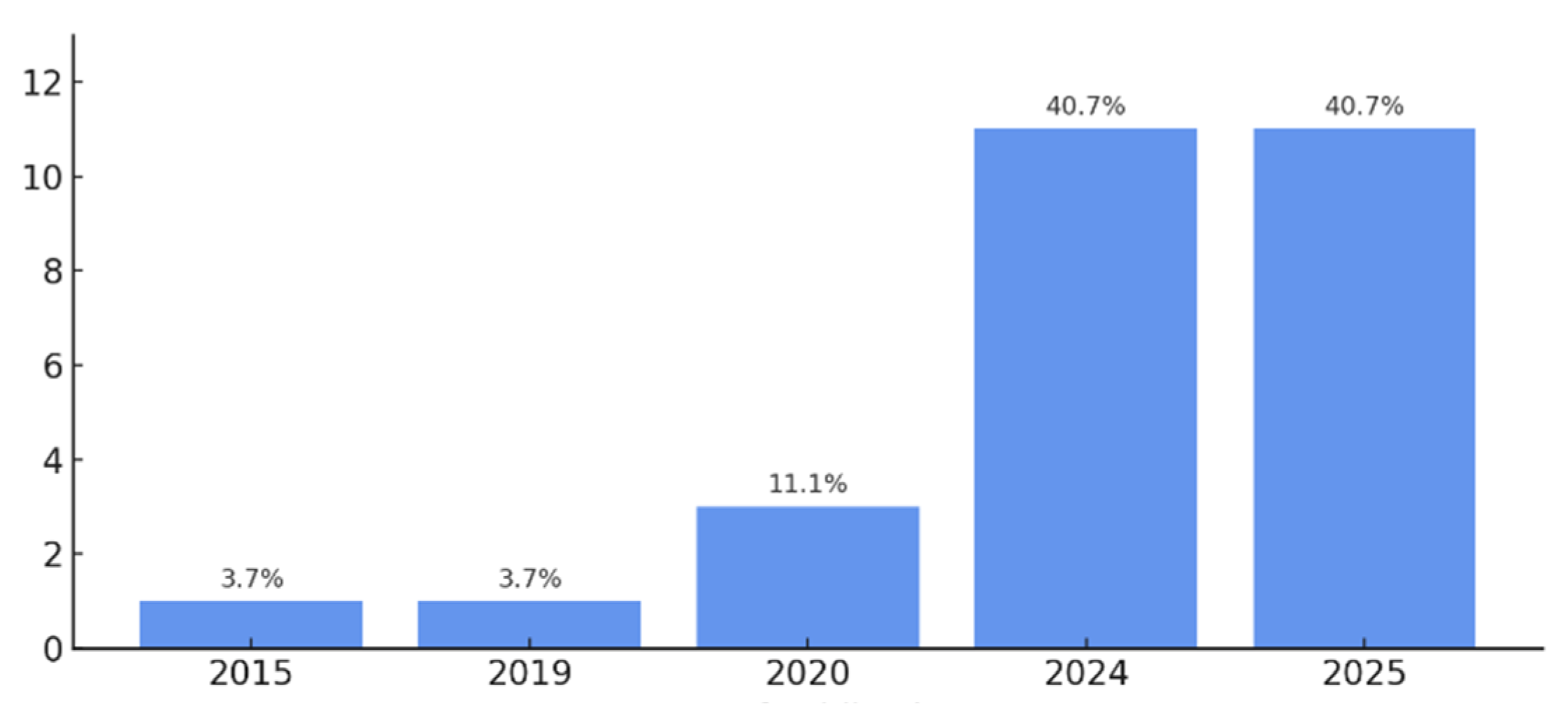

3. Results

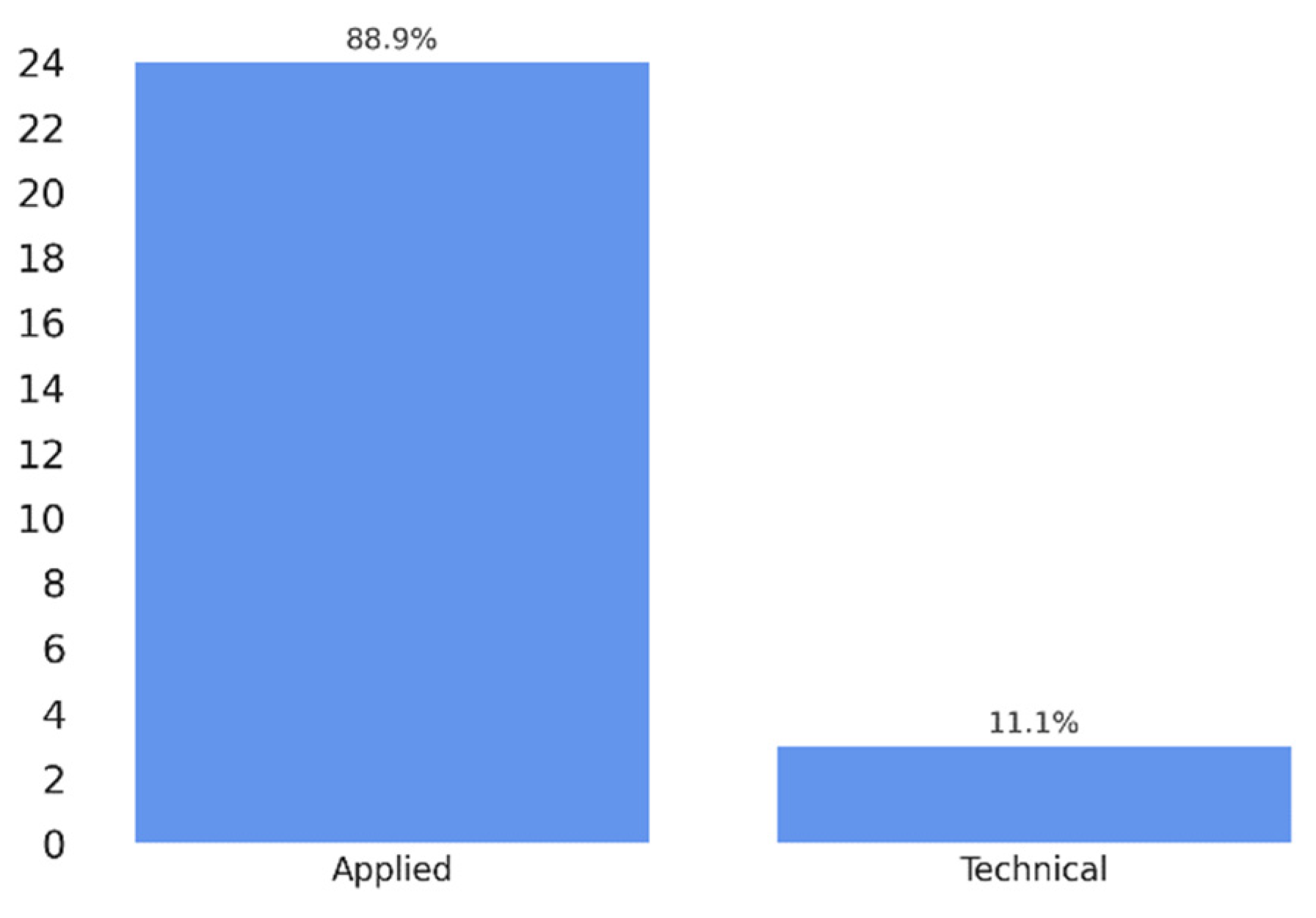

3.1. Study Objectives

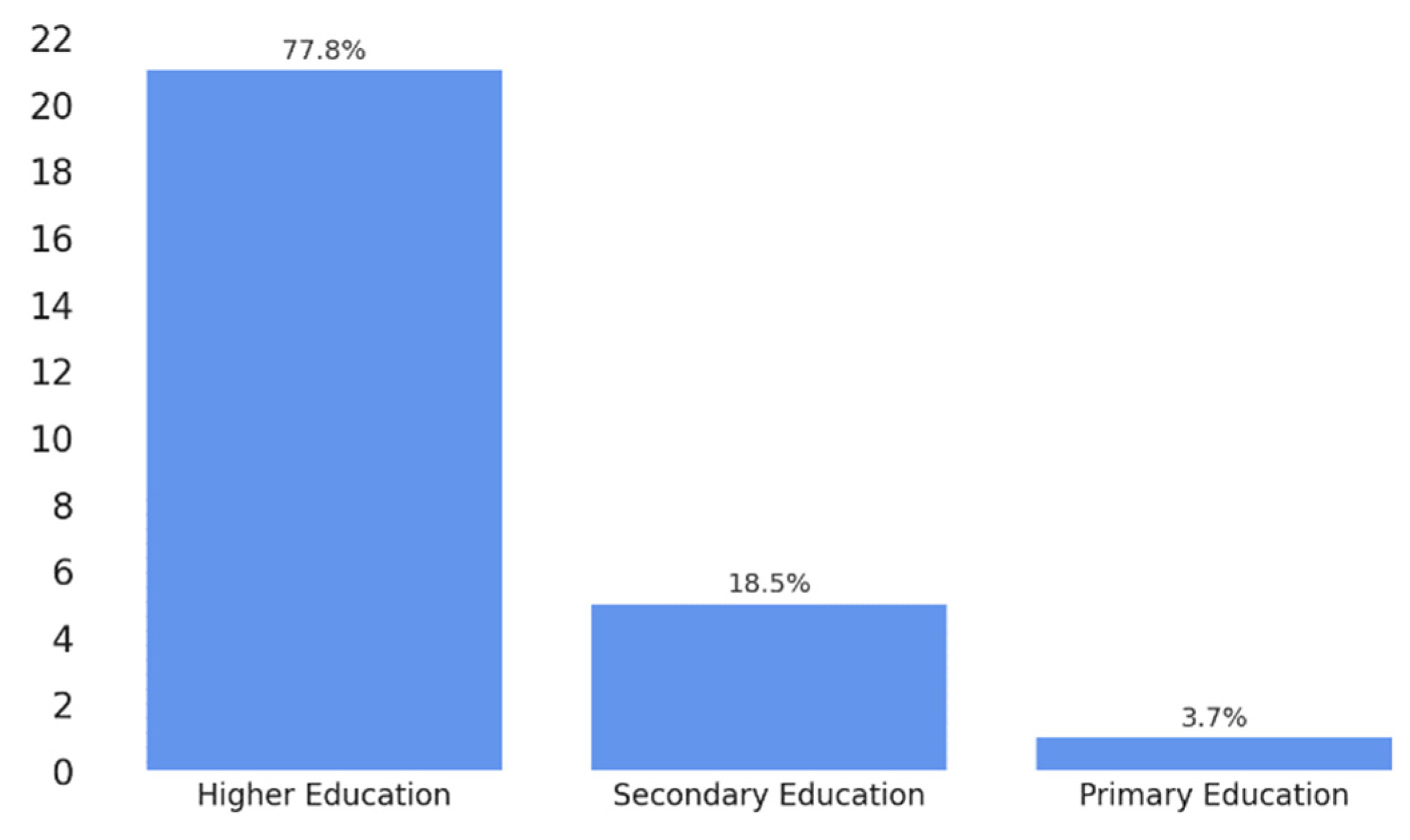

3.2. Educational Level

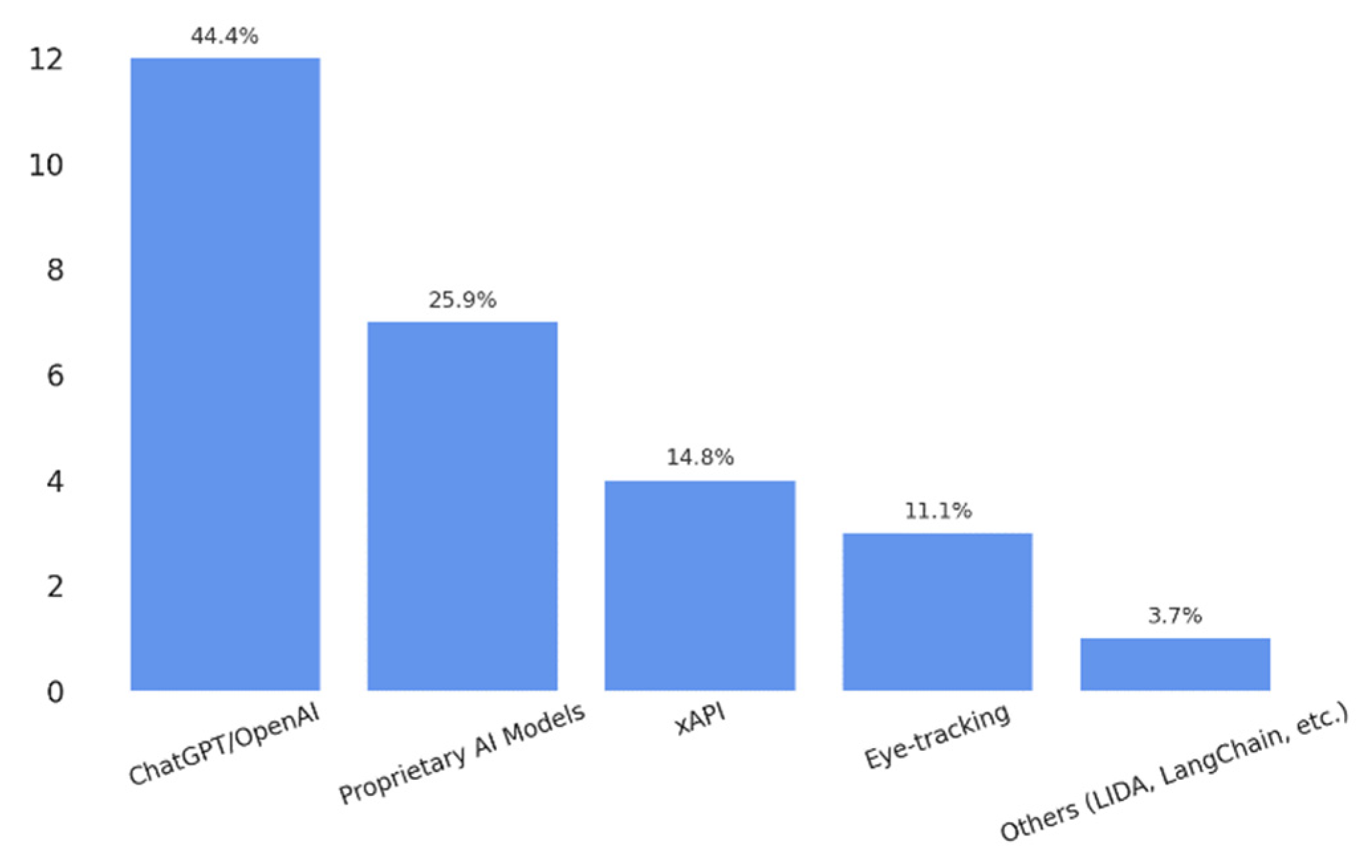

3.3. Technologies Used

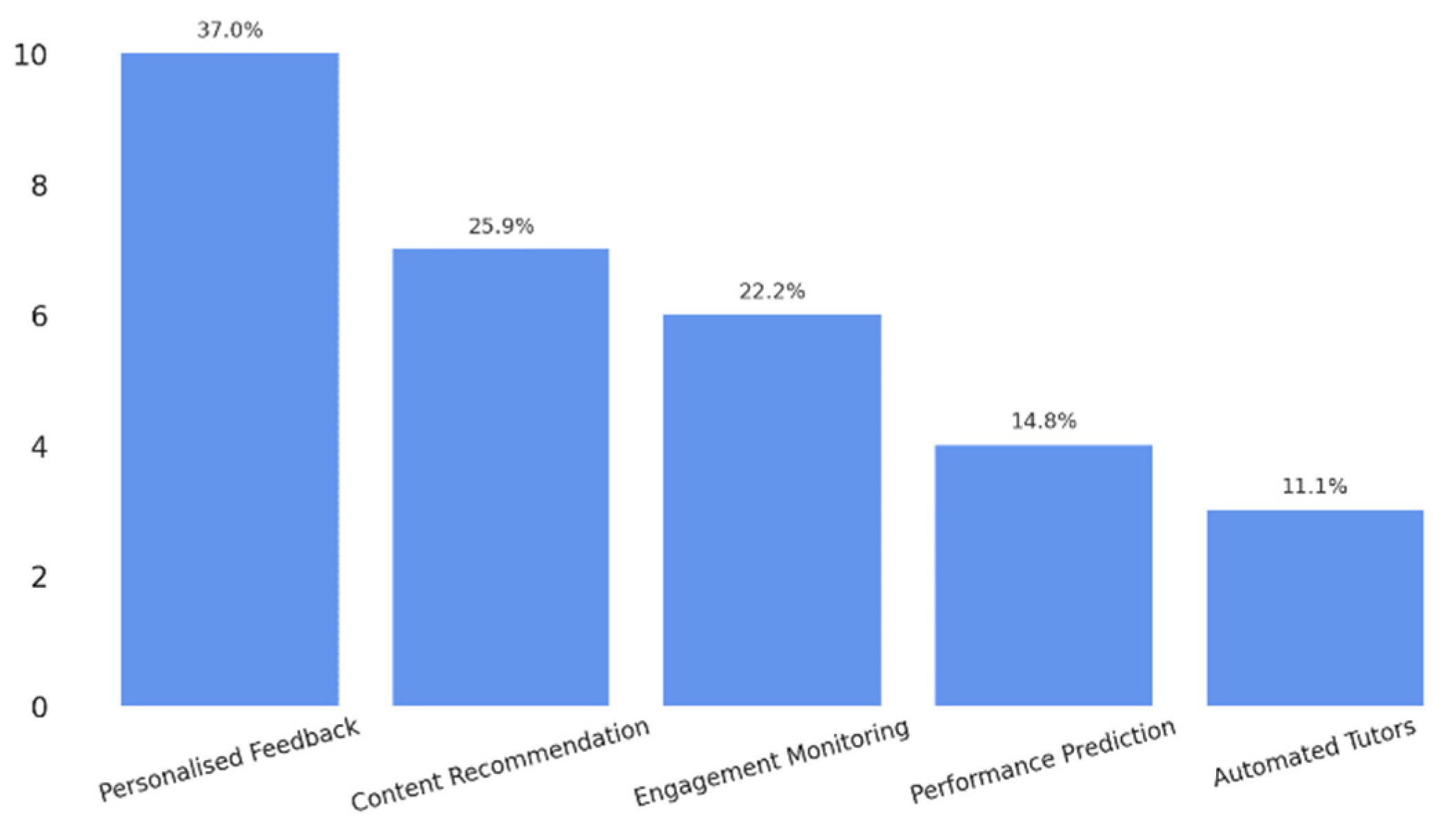

3.4. Functions of Applied APIs and AI

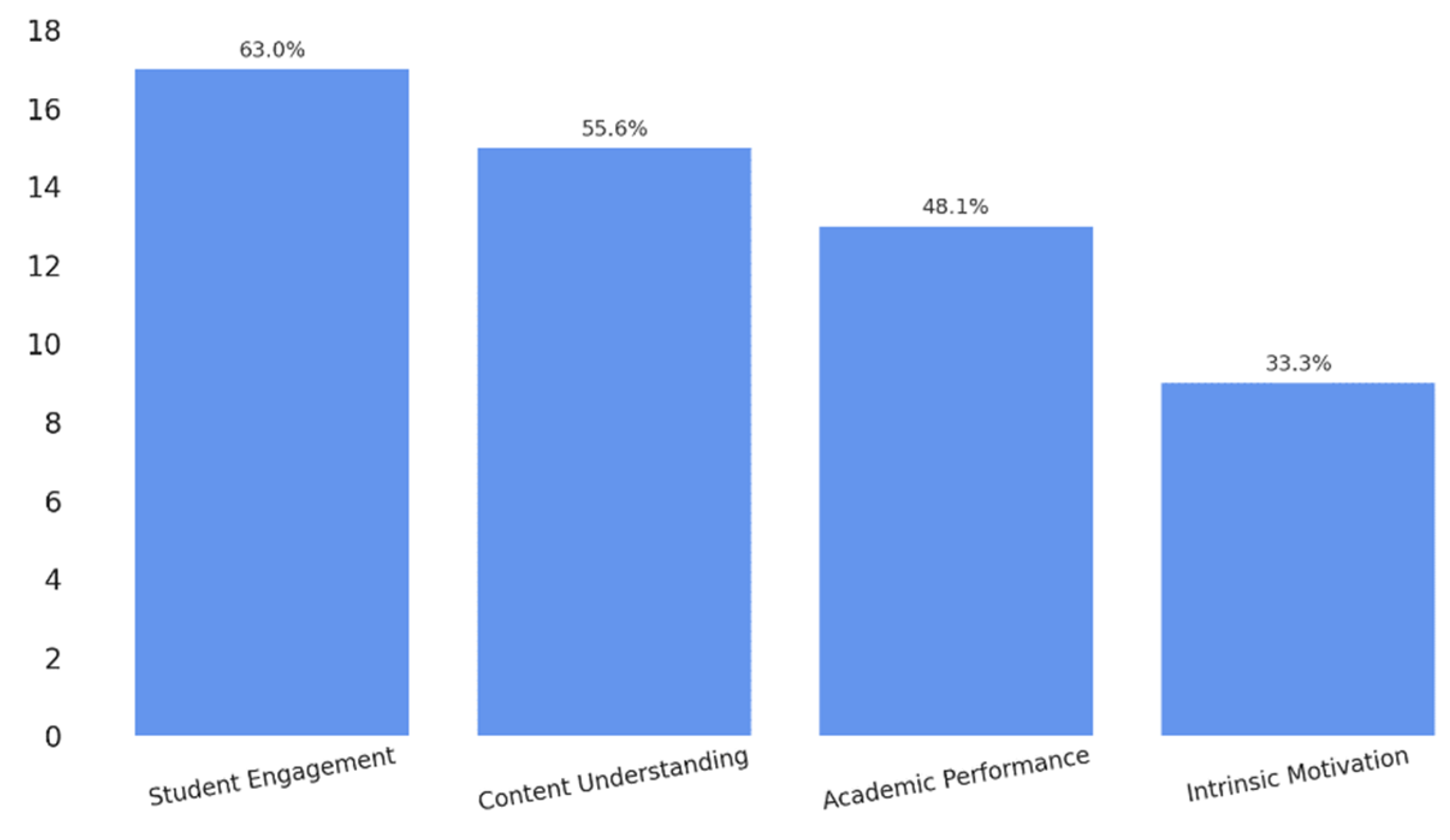

3.5. Observed Impact on Learning

3.6. Methodological Limitations

4. Discussion

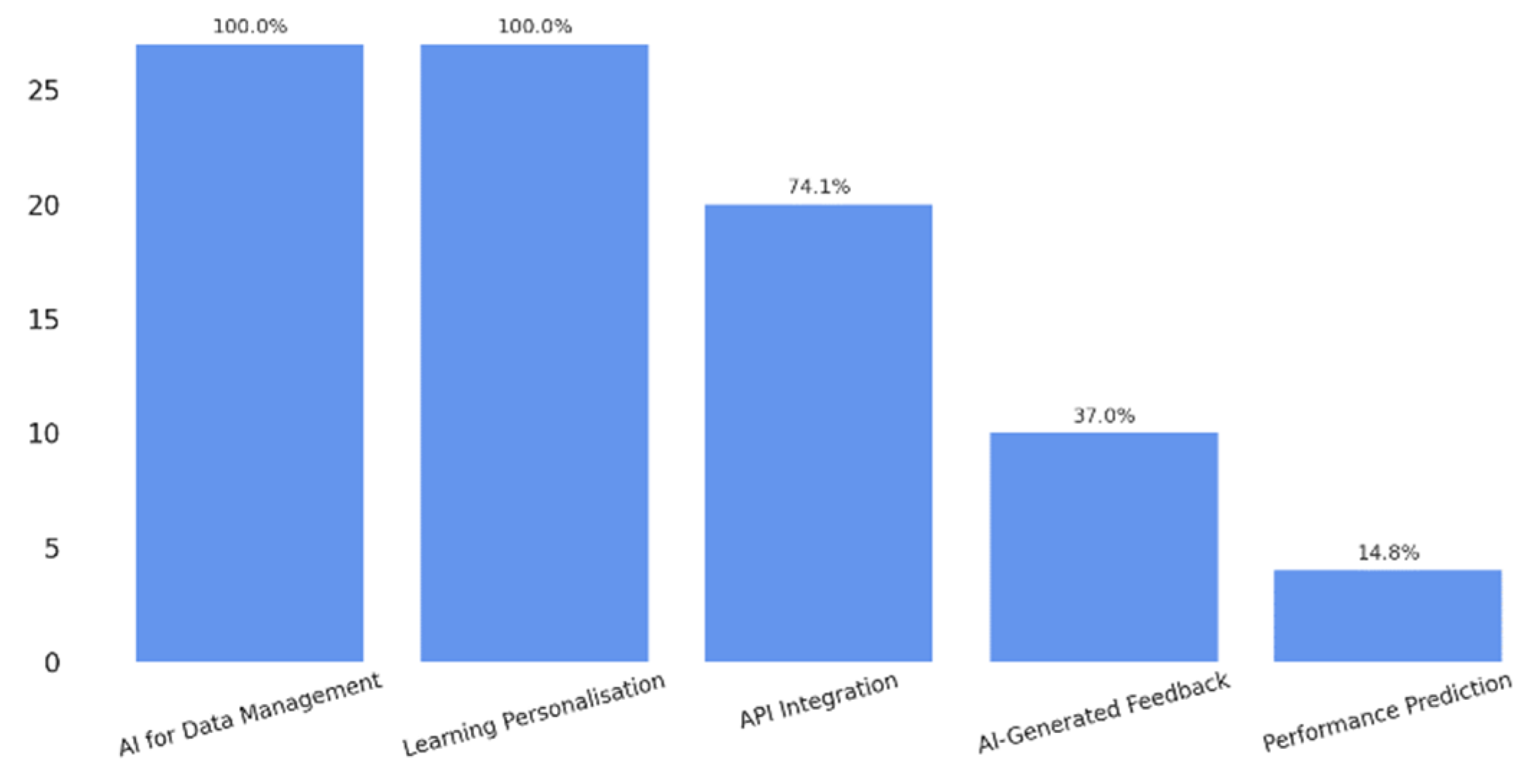

4.1. AI-Driven Educational APIs for Intelligent Information Management

4.2. Effective Integration Across Educational Platforms and Systems

4.3. Pedagogical Benefits of Automated Data Use

4.4. Ethical, Technical and Regulatory Challenges in the Management of Educational Data

4.5. A Conceptual Framework for Informed, Connected and Adaptive Educational Ecosystems

- Intelligent management of educational information

- API integration and platform interoperability

- Personalization through generative AI

- Automated adaptive feedback

- Performance prediction and support for decision-making

5. Conclusions

- Conducting large-scale longitudinal studies to evaluate the sustained impact of AI and APIs in diverse educational contexts.

- Designing inclusive frameworks for API integration that take into account infrastructure disparities and the need for pedagogical adaptation.

- Developing teacher training programs to strengthen digital competence and ethical awareness in the use of AI-based tools.

- Establishing clear guidelines to ensure transparency, data protection, and appropriate data use.

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, C.M.; Wang, W.F. Mining Effective Learning Behaviors in a Web-Based Inquiry Science Environment. J. Sci. Educ. Technol. 2020, 29, 519–535. [Google Scholar] [CrossRef]

- Zhang, M. Integrating Deep Learning into Educational Big Data Analytics for Enhanced Intelligent Learning Platforms. Inf. Technol. Control 2024, 53, 1060–1073. [Google Scholar] [CrossRef]

- Yilmaz, R.; Yurdugül, H.; Yilmaz, F.G.K.; Şahin, M.; Sulak, S.; Aydin, F.; Tepgeç, M.; Müftüoğlu, C.T.; Oral, Ö. Smart MOOC integrated with intelligent tutoring: A system architecture and framework model proposal. Comput. Educ. Artif. Intell. 2022, 3, 100092. [Google Scholar] [CrossRef]

- Gubareva, R.; Lopes, R.P. Virtual Assistants for Learning: A Systematic Literature Review. In Proceedings of the 12th International Conference on Computer Supported Education; Chad Lane, H., Zvacek, S., Uhomoibhi, J., Eds.; SciTePress: Setubal, Portugal, 2020; pp. 97–103. [Google Scholar] [CrossRef]

- Chng, E.; Tan, A.L.; Tan, S.C. Examining the Use of Emerging Technologies in Schools: A Review of Artificial Intelligence and Immersive Technologies in STEM Education. J. STEM Educ. Res. 2023, 6, 385–407. [Google Scholar] [CrossRef]

- Vázquez-Ingelmo, A.; García-Peñalvo, F.J.; Therón, R. Towards a Technological Ecosystem to Provide Information Dashboards as a Service: A Dynamic Proposal for Supplying Dashboards Adapted to Specific Scenarios. Appl. Sci. 2021, 11, 3249. [Google Scholar] [CrossRef]

- Prahani, B.K.; Rizki, I.A.; Jatmiko, B.; Suprapto, N.; Amelia, T. Artificial Intelligence in Education Research During the Last Ten Years: A Review and Bibliometric Study. Int. J. Emerg. Technol. Learn. 2022, 17, 169–188. [Google Scholar] [CrossRef]

- Andrey Bernate, J.; Puerto Garavito, S.C. Impacto de la Educación Física en las Competencias Ciudadanas: Una Revisión Bibliométrica. Cienc. Y Deporte 2023, 8, 507–522. Available online: http://scielo.sld.cu/scielo.php?script=sci_abstract&pid=S2223-17732023000300507&lng=en&nrm=iso&tlng=es (accessed on 12 May 2025).

- Moreno-Fernández, O.; Gómez-Camacho, A. Impact of the Covid-19 pandemic on teacher tweeting in Spain: Needs, interests, and emotional implications. Educ. XX1 2023, 26, 2. [Google Scholar] [CrossRef]

- Pérez Imaicela, R.H. Plataforma Educativa Asistida por el Modelo de Inteligencia Artificial GPT Para el Refuerzo Académico de Estudiantes del Módulo Tecnologías y Desarrollo Web en la Carrera de TI de la FISEI-UTA; Universidad Técnica de Ambato: Ambato, Ecuador, 2024. [Google Scholar]

- Alam, A. Possibilities and Apprehensions in the Landscape of Artificial Intelligence in Education. In Proceedings of the 2021 International Conference on Computational Intelligence and Computing Applications (ICCICA), Nagpur, India, 26–27 November 2021; pp. 1–8. Available online: https://ieeexplore.ieee.org/document/9697272 (accessed on 12 May 2025).

- Beaulac, C.; Rosenthal, J.S. Predicting university students’ academic success and major using random forests. Res. High. Educ. 2019, 60, 1048–1064. [Google Scholar] [CrossRef]

- Lloret, C.M.; González, A.H.; Raboso, D.D. Sistemas y recursos educativos basados en IA que apoyan y evalúan la educación. IA EñTM 2022. [Google Scholar] [CrossRef]

- Laupichler, M.C.; Aster, A.; Schirch, J.; Raupach, T. Artificial intelligence literacy in higher and adult education: A scoping literature review. Comput. Educ. Artif. Intell. 2022, 3, 100101. [Google Scholar] [CrossRef]

- Srinivasan, V. AI & learning: A preferred future. Comput. Educ. Artif. Intell. 2022, 3, 100062. [Google Scholar] [CrossRef]

- Wolters, A.; Arz Von Straussenburg, A.F.; Riehle, D.M. AI Literacy in Adult Education-A Literature Review. In Proceedings of the 57th Hawaii International Conference on System Sciences, Honolulu, HI, USA, 3–6 January 2024. [Google Scholar] [CrossRef]

- Zhai, X. ChatGPT User Experience: Implications for Education. SSRN 2022, 1–10. [Google Scholar] [CrossRef]

- Tuomi, I.; Cachia, R.; Villar Onrubia, D. On the Futures of Technology in Education: Emerging Trends and Policy Implications; JRC Science for Policy Report; Publications Office of the European Union: Luxembourg, 2023; Available online: https://publications.jrc.ec.europa.eu/repository/handle/JRC134308 (accessed on 27 May 2025).

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020. [Google Scholar] [CrossRef]

- Bozkurt, A.; Xiao, J.; Lambert, S.; Pazurek, A.; Crompton, H.; Koseoglu, S.; Farrow, R.; Bond, M.; Nerantzi, C.; Honeychurch, S.; et al. Speculative futures on ChatGPT and generative artificial intelligence (AI): A collective reflection from the educational landscape. Asian J. Distance Educ. 2023, 18, 53–130. [Google Scholar]

- Ng, D.T.K.; Lee, M.; Tan, R.J.Y.; Hu, X.; Downie, J.S.; Chu, S.K.W. A review of AI teaching and learning from 2000 to 2020. Educ. Inf. Technol. 2022, 28, 8445–8501. [Google Scholar] [CrossRef]

- García-Peñalvo, F.J.; Llorens-Largo, F.; Vidal, J. La nueva realidad de la educación ante los avances de la inteligencia artificial generativa. RIED-Rev. Iberoam. Educ. Distancia 2024, 27, 9–39. [Google Scholar] [CrossRef]

- Berggren, A.; Söderström, T. Virtual assistants in higher education—From research to practice. Educ. Inf. Technol. 2021, 26, 2865–2883. [Google Scholar]

- Murtaza, M.; Ahmed, Y.; Shamsi, J.A.; Sherwani, F.; Usman, M. AI-Based Personalized E-Learning Systems: Issues, Challenges, and Solutions. IEEE Access 2022, 10, 81323–81342. Available online: https://ieeexplore.ieee.org/document/9840390 (accessed on 12 May 2025). [CrossRef]

- Adako, O.P.; Adeusi, O.C.; Alaba, P.A. Enhancing education for children with ASD: A review of evaluation and measurement in AI tool implementation. Disabil. Rehabil. Assist. Technol. 2025, 1–22. [Google Scholar] [CrossRef]

- Almeida, C.; Kalinowski, M.; Feijó, B. A systematic mapping of negative effects of gamification in education/learning systems. In Proceedings of the 47th Euromicro Conference on Software Engineering and Advanced Applications (SEAA), Palermo, Italy, 1–3 September 2021. [Google Scholar] [CrossRef]

- Encalada Jumbo, F.C.; Cedeño Granda, S.A.; Córdova Macas, L.R.; Granda Guerrero, B.C. Aplicaciones educativas para mejorar el aprendizaje en el aula. Pedagogical Constellations 2025, 4, 92–109. [Google Scholar] [CrossRef]

- Castrillón, O.; Sarache, W.; Ruíz, S. Predicción del rendimiento académico por medio de técnicas de inteligencia artificial. Form. Univ. 2020, 13, 93–102. [Google Scholar] [CrossRef]

- Gašević, D.; Dawson, S.; Siemens, G. Let’s not forget: Learning analytics are about learning. TechTrends 2015, 59, 64–71. [Google Scholar] [CrossRef]

- Gilbert, S.B.; Blessing, S.B.; Guo, E. Authoring effective embedded tutors: An overview of the extensible problem specific tutor (xPST) system. Int. J. Artif. Intell. Educ. 2015, 25, 428–454. [Google Scholar] [CrossRef]

- Kinder, A.; Briese, F.J.; Jacobs, M.; Dern, N.; Glodny, N.; Jacobs, S.; Leßmann, S. Effects of adaptive feedback generated by a large language model: A case study in teacher education. Comput. Educ. Artif. Intell. 2025, 8, 100349. [Google Scholar] [CrossRef]

- Perrotta, C.; Gulson, K.N.; Williamson, B.; Witzenberger, K. Automation, APIs and the distributed labour of platform pedagogies in Google Classroom. Crit. Stud. Educ. 2021, 62, 97–113. [Google Scholar] [CrossRef]

- Sánchez-Vera, M.D.M. La inteligencia artificial como recurso docente: Usos y posibilidades para el profesorado. Educar 2023, 60, 33–47. [Google Scholar] [CrossRef]

- Venter, J.; Coetzee, S.A.; Schmulian, A. Exploring the use of artificial intelligence (AI) in the delivery of effective feedback. Assess. Eval. High. Educ. 2024, 50, 516–536. [Google Scholar] [CrossRef]

- Williamson, B.; Eynon, R. Historical threads, missing links, and future directions in AI in education. Learn. Media Technol. 2020, 45, 223–235. [Google Scholar] [CrossRef]

- Baidoo-Anu, D.; Owusu Ansah, L. Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 2023, 7, 52–62. [Google Scholar] [CrossRef]

- Hartong, S.; Förschler, A. Opening the black box of data-based school monitoring: Data infrastructures, flows and practices in state education agencies. Big Data Soc. 2019, 6, 2053951719853311. [Google Scholar] [CrossRef]

- Shibani, A.; Knight, S.; Buckingham Shum, S. Educator perspectives on learning analytics in classroom practice. Internet High. Educ. 2020, 46, 100730. [Google Scholar] [CrossRef]

- Tlili, A.; Shehata, B.; Adarkwah, M.A.; Bozkurt, A.; Hickey, D.T.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15. [Google Scholar] [CrossRef]

- García-López, I.M.; González, C.S.G.; Ramírez-Montoya, M.S.; Molina-Espinosa, J.M. Challenges of implementing ChatGPT on education: Systematic literature review. Int. J. Educ. Res. Open 2025, 8, 100401. [Google Scholar] [CrossRef]

- González-González, C. El impacto de la inteligencia artificial en la educación: Transformación de la forma de enseñar y de aprender. Rev. Qurriculum 2023, 2, 51–60. [Google Scholar] [CrossRef]

- Holmes, W.; Porayska-Pomsta, K. The Ethics of Artificial Intelligence in Education; Routledge: London, UK, 2023. [Google Scholar]

- Liebrenz, M.; Schleifer, R.; Buadze, A.; Bhugra, D.; Smith, A. Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. Lancet Digit. Health 2023, 5, e105–e106. [Google Scholar] [CrossRef]

- Xu, W.; Ouyang, F. The application of AI technologies in STEM education: A systematic review from 2011 to 2021. Int. J. STEM Educ. 2022, 9, 59. [Google Scholar] [CrossRef]

- Figaredo, D.D.; Reich, J.; Ruipérez-Valiente, J.A. Analítica del aprendizaje y educación basada en datos: Un campo en expansión. RIED-Rev. Iberoam. Educ. Distancia 2020, 23, 33–43. [Google Scholar] [CrossRef]

- Ifenthaler, D.; Schumacher, C. Reciprocal issues of artificial and human intelligence in education. J. Res. Technol. Educ. 2023, 55, 1–6. [Google Scholar] [CrossRef]

- Ifenthaler, D.; Majumdar, R.; Gorissen, P.; Judge, M.; Mishra, S.; Raffaghelli, J.; Shimada, A. Artificial intelligence in education: Implications for policymakers, researchers, and practitioners. Technol. Knowl. Learn. 2024, 29, 1693–1710. [Google Scholar] [CrossRef]

- Lee, U.; Jeong, Y.; Koh, J.; Byun, G.; Lee, Y.; Lee, H.; Kim, H. I see you: Teacher analytics with GPT-4 vision-powered observational assessment. Smart Learn. Environ. 2024, 11, 48. [Google Scholar] [CrossRef]

- Santamaría-Bonfil, G.; Escobedo-Briones, G.; Pérez-Ramírez, M.; Arroyo-Figueroa, G. A learning ecosystem for linemen training based on big data components and learning analytics. J. Univers. Comput. Sci. 2019, 25, 541–568. [Google Scholar] [CrossRef]

- Zambrano, P.L.; Bazurto, L.M.; Bazurto, G.M.; Llerena, T.R. El desarrollo de interfaces de programación de aplicaciones (APIs) dinamiza el acceso a contenidos en plataformas de educación virtual: The development of application programming interfaces (APIs) streamlines access to content on virtual education platforms. LATAM Rev. Latinoam. Cienc. Soc. Humanidades 2025, 6, 3039–3047. [Google Scholar] [CrossRef]

- Castillo, M.E. Impacto de la inteligencia artificial en el proceso de enseñanza aprendizaje en la educación secundaria. LATAM Rev. Latinoam. Cienc. Soc. Humanidades 2023, 4, 515–530. [Google Scholar] [CrossRef]

- Uzcátegui Pacheco, R.A.; Ríos Colmenárez, M.J. Inteligencia Artificial para la Educación: Formar en tiempos de incertidumbre para adelantar el futuro. Areté Rev. Digit. Dr. Educ. 2024, 10, 1–21. [Google Scholar] [CrossRef]

- Holmes, W.; Porayska-Pomsta, K.; Holstein, K.; Sutherland, E.; Baker, T.; Shum, S.B.; Santos, O.C.; Rodrigo, M.T.; Cukurova, M.; Bittencourt, I.I.; et al. Ethics of AI in Education: Towards a Community-Wide Framework. Int. J. Artif. Intell. Educ. 2022, 32, 504–526. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Alonso-Fernández, S. Declaración PRISMA 2020: Una guía actualizada para la publicación de revisiones sistemáticas. Rev. Española Cardiol. 2021, 74, 790–799. [Google Scholar] [CrossRef]

- Higgins, J.P.T.; Green, S. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions, Version 5.1.0; The Cochrane Collaboration: London, UK, 2011; Available online: https://handbook-5-1.cochrane.org (accessed on 12 May 2025).

- Perreault, W.D., Jr.; Leigh, L.E. Reliability of nominal data based on qualitative judgments. J. Mark. Res. 1989, 26, 135–148. [Google Scholar] [CrossRef]

- Peyton, K.; Unnikrishnan, S.; Mulligan, B. A review of university chatbots for student support: FAQs and beyond. Discov. Educ. 2025, 4, 21. [Google Scholar] [CrossRef]

- Huang, Y.M.; Chen, P.H.; Lee, H.Y.; Sandnes, F.E.; Wu, T.T. ChatGPT-Enhanced Mobile Instant Messaging in Online Learning: Effects on Student Outcomes and Perceptions. Comput. Hum. Behav. 2025, 168, 108659. [Google Scholar] [CrossRef]

- Yu, S.; Androsov, A.; Yan, H. Exploring the prospects of multimodal large language models for Automated Emotion Recognition in education: Insights from Gemini. Comput. Educ. 2025, 232, 105307. [Google Scholar] [CrossRef]

- Caccavale, F.; Gargalo, C.L.; Kager, J.; Larsen, S.; Gernaey, K.V.; Krühne, U. ChatGMP: A case of AI chatbots in chemical engineering education towards the automation of repetitive tasks. Comput. Educ. Artif. Intell. 2025, 8, 100354. [Google Scholar] [CrossRef]

- Castellanos-Reyes, D.; Olesova, L.; Sadaf, A. Transforming online learning research: Leveraging GPT large language models for automated content analysis of cognitive presence. Internet High. Educ. 2025, 65, 101001. [Google Scholar] [CrossRef]

- Bernasconi, E.; Redavid, D.; Ferilli, S. Enhancing Personalised Learning with a Context-Aware Intelligent Question-Answering System and Automated Frequently Asked Question Generation. Electronics 2025, 14, 1481. [Google Scholar] [CrossRef]

- Gharbi, M.; Mohtadi, M.T. Personalizing MOOC Assessments with AI: Fine-Tuning ChatGPT for Scalable Learning. Int. J. Tech. Phys. Probl. Eng. 2025, 17, 192–203. [Google Scholar]

- Jusoh, S.; Kadir, R.A. Chatbot in education: Trends, personalisation, and techniques. Multimed. Tools Appl. 2025, 1–24. [Google Scholar] [CrossRef]

- Takii, K.; Flanagan, B.; Li, H.; Yang, Y.; Koike, K.; Ogata, H. Explainable eBook recommendation for extensive reading in K-12 EFL learning. Res. Pract. Technol. Enhanc. Learn. 2024, 20, 027. [Google Scholar] [CrossRef]

- Bagci, M.; Mehler, A.; Abrami, G.; Schrottenbacher, P.; Spiekermann, C.; Konca, M.; Engel, J. Simulation-Based Learning in Virtual Reality: Three Use Cases from Social Science and Technological Foundations in Terms of Va.Si.Li-Lab. Technol. Knowl. Learn. 2025, 1–40. [Google Scholar] [CrossRef]

- Sajja, R.; Sermet, Y.; Demir, I.; Pursnani, V. AI-Assisted Educational Framework for Floodplain Manager Certification: Enhancing Vocational Education and Training Through Personalized Learning. IEEE Access 2025, 13, 42401–42413. [Google Scholar] [CrossRef]

- Farhood, H.; Joudah, I.; Beheshti, A.; Muller, S. Evaluating and enhancing artificial intelligence models for predicting student learning outcomes. Informatics 2024, 11, 46. [Google Scholar] [CrossRef]

- Naatonis, R.N.; Rusijono, R.; Jannah, M.; Malahina, E.A.U. Evaluation of Problem Based Gamification Learning (PBGL) Model on Critical Thinking Ability with Artificial Intelligence Approach Integrated with ChatGPT API: An Experimental Study. Qubahan Acad. J. 2024, 4, 485–520. [Google Scholar] [CrossRef]

- Pesovski, I.; Santos, R.; Henriques, R.; Trajkovik, V. Generative AI for Customizable Learning Experiences. Sustainability 2024, 16, 3034. [Google Scholar] [CrossRef]

- Wang, L.; Lou, Y.; Li, X.; Xiang, Y.; Jiang, T.; Che, Y.; Ye, C. GlyphGenius: Unleashing the potential of AIGC in Chinese character learning. IEEE Access 2024, 12, 136420–136434. [Google Scholar] [CrossRef]

- Valverde-Rebaza, J.; González, A.; Navarro-Hinojosa, O.; Noguez, J. Advanced large language models and visualization tools for data analytics learning. Front. Educ. 2024, 9, 1418006. [Google Scholar] [CrossRef]

- Garefalakis, M.; Kamarianakis, Z.; Panagiotakis, S. Towards a supervised remote laboratory platform for teaching microcontroller programming. Information 2024, 15, 209. [Google Scholar] [CrossRef]

- Hervás, R.; Francisco, V.; Concepción, E.; Sevilla, A.F.; Méndez, G. Creating an API Ecosystem for Assistive Technologies Oriented to Cognitive Disabilities. IEEE Access 2024, 12, 163224–163240. [Google Scholar] [CrossRef]

- Santhosh, J.; Dengel, A.; Ishimaru, S. Gaze-Driven Adaptive Learning System with ChatGPT-Generated Summaries. IEEE Access 2024, 12, 173714–173733. [Google Scholar] [CrossRef]

- Okonkwo, C.W.; Ade-Ibijola, A. Python-bot: A chatbot for teaching python programming. Eng. Lett. 2020, 29, 25. [Google Scholar]

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| a. Documents published between 2013–2025 | a. Documents published before 2013 |
| b. Documents in Spanish or English | b. Documents in languages other than Spanish or English |
| c. Peer-reviewed studies | c. Publications not peer-reviewed or lacking academic validation |
| d. Studies addressing the use of APIs with AI components in educational or training contexts | d. Studies that do not address the combination of APIs and AI or are not related to educational contexts |
| e. Studies focused on educational information management, learning personalization, or learning analytics | |
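The criteria above can be read as a screening filter that every retrieved record must pass. The following is a minimal sketch under stated assumptions. The `Record` fields, the ISO language codes and the `exclusion_reasons` helper are hypothetical, because the review does not describe any screening software. Criterion e is modelled as a simple overlap between a record's tagged focus areas and the three areas named in the table.

```python
from dataclasses import dataclass, field

# Hypothetical encodings of criteria b and e
ACCEPTED_LANGUAGES = {"es", "en"}
FOCUS_AREAS = {"information management", "personalization", "learning analytics"}


@dataclass
class Record:
    """Hypothetical bibliographic record, tagged by a human screener."""
    title: str
    year: int
    language: str              # ISO 639-1 code, e.g. "en" or "es"
    peer_reviewed: bool
    uses_api: bool
    uses_ai: bool
    educational_context: bool
    focus_areas: set[str] = field(default_factory=set)


def exclusion_reasons(r: Record) -> list[str]:
    """Return every criterion the record fails; an empty list means 'include'."""
    reasons = []
    if not 2013 <= r.year <= 2025:
        reasons.append("a: published outside 2013-2025")
    if r.language not in ACCEPTED_LANGUAGES:
        reasons.append("b: language other than Spanish or English")
    if not r.peer_reviewed:
        reasons.append("c: not peer-reviewed")
    if not (r.uses_api and r.uses_ai and r.educational_context):
        reasons.append("d: no API + AI combination in an educational context")
    if not (r.focus_areas & FOCUS_AREAS):
        reasons.append("e: outside the review's thematic focus")
    return reasons


candidate = Record("Example study", 2011, "en", True, True, True, True,
                   {"learning analytics"})
print(exclusion_reasons(candidate))  # ['a: published outside 2013-2025']
```

The helper returns all failed criteria rather than stopping at the first one. This makes it easier to report exclusion reasons in a PRISMA-style flow diagram.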

| Database | Truncated Terms/Search Equation |
|---|---|
| Scopus | TITLE-ABS-KEY(("artificial intelligence" OR "inteligencia artificial") AND ("application programming interface" OR "API") AND ("educational technology" OR "learning environment" OR "digital education" OR "plataformas educativas") AND ("information management" OR "learning analytics" OR "student data" OR "educational data") AND ("personalization" OR "integration" OR "interoperability" OR "decision making")) |
| WoS | TS = ("artificial intelligence" OR "AI") AND TS = ("API" OR "application programming interface") AND TS = ("education" OR "educational technology" OR "learning analytics") |
| IEEE Xplore | "artificial intelligence" AND API AND education |
| Dialnet | "inteligencia artificial" "educación" "plataforma digital" and "inteligencia artificial" "educación" "plataforma educativa" |
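The Scopus and WoS equations share one structure. Synonyms for a concept are joined with OR inside parentheses, and the concept groups are joined with AND. The sketch below rebuilds both strings from term lists so that the structure is explicit and the equations can be regenerated consistently. The helper names are hypothetical. `TITLE-ABS-KEY` and `TS` are the field codes that appear in the table, and the code only assembles strings; it does not call either database.

```python
def quoted(term: str) -> str:
    """Wrap a term in straight double quotes for exact-phrase matching."""
    return f'"{term}"'


def or_group(terms: list[str]) -> str:
    """Join the synonyms of one concept with OR inside parentheses."""
    return "(" + " OR ".join(quoted(t) for t in terms) + ")"


def scopus_query(groups: list[list[str]]) -> str:
    """All concept groups are ANDed inside a single TITLE-ABS-KEY field code."""
    return "TITLE-ABS-KEY(" + " AND ".join(or_group(g) for g in groups) + ")"


def wos_query(groups: list[list[str]]) -> str:
    """Each concept group gets its own TS (topic) tag; tags are ANDed."""
    return " AND ".join("TS = " + or_group(g) for g in groups)


# Term groups copied from the WoS row of the table
wos_groups = [
    ["artificial intelligence", "AI"],
    ["API", "application programming interface"],
    ["education", "educational technology", "learning analytics"],
]
print(wos_query(wos_groups))
```

Keeping the term lists as data makes it straightforward to check that each database received the same concepts, even though the field syntax differs between them.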

| Reference | Objectives | Sample | Country | Methodology | Results |

|---|---|---|---|---|---|

| Peyton et al. [57] | To review the use of chatbots in universities for student support beyond the classroom, evaluating implemented models, NLP technologies and platforms. | 8 university chatbot implementations | International (cases from universities in the US, UK, Australia and Asia; comparison with non-educational sectors) | Narrative review with targeted search on Google Scholar. Descriptive analysis of chatbot architectures (retrieval, AIML, GPT, etc.) and tools such as QnA Maker and Dialogflow. | Predominant use of FAQ-based models. Limited adoption of generative AI due to lack of educational datasets and low formal evaluation. Opportunities for improvement identified through RAG, LangChain and APIs such as OpenAI to enrich response systems and personalization. |

| Huang et al. [58] | To evaluate the impact of an AI messaging tool (ChatMIM) on student engagement, the development of higher-order thinking skills and student perceptions in online learning environments. | 63 postgraduate students (33 in the experimental group, 30 in the control group) enrolled in an advanced digital learning course | Taiwan | Experimental study with a pretest/posttest design and a mixed-methods approach. Combined questionnaires, log analysis, interviews and the use of ChatGPT (GPT-3.5) as a support tool. | Significant improvements in engagement (behavioral, cognitive and emotional), critical thinking, problem-solving and creativity. Students positively valued the system’s usefulness and its educational potential due to reduced cognitive load and automated feedback. |

| Yu et al. [59] | To evaluate the performance of the Gemini model (Multimodal LLM) in automatic emotion recognition (AER) tasks based on images in educational contexts, comparing accuracy, error patterns and emotional inference mechanisms. | 2.627 images extracted from five datasets: CK+, FER-2013, RAF-DB (basic emotions) and OL-SFED, DAiSEE (academic emotions) | China | Quantitative analysis of model performance using precision metrics (F1-score, recall). Coding of emotional inference patterns. | Gemini was effective in recognizing basic emotions (e.g., happiness, surprise), but less accurate with academic emotions (e.g., confusion, distraction). Preprocessing improved recognition quality. The model showed a reliance on facial features with insufficient contextual understanding. |

| Caccavale et al. [60] | To develop and implement ChatGMP, an LLM-based chatbot designed to conduct simulated audits in a postgraduate course, comparing its effectiveness to that of human instructors and evaluating its impact on the learning experience. | 21 master’s student groups (~120 students). 3 groups conducted the audit exercise using ChatGMP. | Denmark | Case study with a mixed-methods approach. Technical evaluation of the model (FLAN-T5), comparative testing with instructors, student surveys and analysis of response quality. | Similar experience and perceived quality across groups. ChatGMP automated repetitive tasks successfully and was well received, though it showed limitations in some responses and documents. |

| Kinder et al. [31] | To compare the impact of adaptive feedback generated by ChatGPT versus static expert feedback on students’ written performance, justification quality and perception of feedback in pre-service teacher education. | 269 master’s students in teacher training, randomly assigned to two groups: adaptive feedback (n = 132) and static feedback (n = 137). | Germany | Randomized controlled trial. Evaluation of written performance before and after receiving feedback. Quantitative analysis (ANCOVA, logistic regression, Wilcoxon) and perception measures. | Adaptive feedback improved justification quality and increased word count, but did not affect decision accuracy. It was perceived as more useful and engaging and students spent more time processing it. |

| Castellanos-Reyes et al. [61] | To explore the reliability and efficiency of GPT models in automating the analysis of cognitive presence (CP) in online discussions using an adapted LLM-based coding approach. | 293 paragraphs extracted from 180 forum messages in a postgraduate instructional design course. | United States | Comparative study of AI-assisted content coding (LACA) versus human coding, using GPT models with one-shot and few-shot prompts. Evaluation of reliability (Cohen’s k), time and cost. | The fine-tuned model using a one-shot prompt achieved moderate agreement with human coders (k = 0.59). High levels of accuracy were achieved in the integration phase, but low reliability was observed in phases such as resolution. The LACA approach significantly reduced analysis time and cost, though it requires prior knowledge of data processing and prompt engineering. |

| Bernasconi et al. [62] | To develop and implement an intelligent question-answering system (IQAS) with contextual sensitivity, along with an automatic FAQ generation tool, aimed at enhancing personalized learning. | 2.000 questions (1.200 factual and 800 inferential) evaluated using performance metrics. Students and experts participated in validation and feedback testing. | Italy | Hybrid design combining rule-based systems with transformer models (BERT, DistilBERT, BART), reasoning through knowledge graphs and automatic FAQ extraction. Quantitative evaluation (accuracy, F1) and qualitative assessment (usability, cognitive load, explainability). | The IQAS achieved 95% accuracy on factual questions and an F1 score of 0.85 on inferential ones. 80% of the generated FAQs were considered relevant by experts. Integration with the LMS proved feasible. User experience was rated positively (78% positive feedback, 4.1/5 in explainability). |

| Gharbi, M., & Mohtadi, M. T. [63] | To explore how personalized assessment in MOOCs can be achieved through the fine-tuning of ChatGPT to generate individualized feedback, adaptive quizzes and scalable learning pathways. | Experiments conducted across multiple MOOCs (mathematics, programming, English and data science), with control and experimental groups. Comparative data on performance, feedback and satisfaction. | Morocco | Development and validation of a system based on fine-tuned ChatGPT. Evaluation using quantitative metrics (accuracy, F1 score, response time) and qualitative indicators (engagement, cognitive load, satisfaction). Comparison between AI-supported and non-AI-supported groups. | Groups that received adaptive feedback through ChatGPT improved performance by 12% to 25%. Error rates decreased by up to 50% and student satisfaction exceeded 89%. The system proved effective in terms of scalability, personalization and real-time response, though ethical and technical challenges were noted. |

| Jusoh, S., & Abdul Kadir, R. [64] | To conduct a systematic review on the current state, personalization approaches and development techniques of chatbots in education, with special emphasis on their potential to enhance teaching and learning. | Review of 720 identified articles, of which 116 met quality criteria for final analysis (published between 2018 and 2024). | Malaysia | Systematic review following PRISMA guidelines. Search conducted in IEEE Xplore and ACM Digital Library. Application of inclusion, exclusion and quality assessment criteria with weighting for novelty, content, analysis and results. | The review identified trends in the use of chatbots as educational support tools, highlighting their capacity for personalization and usefulness in virtual environments. Development techniques such as programming, AIML and no-code platforms were analyzed. Challenges were noted, including limitations in natural language understanding, technological dependency and ethical concerns. |

| Takii et al. [65] | To develop and evaluate an explainable recommendation system for digital books in extensive reading programs in English as a Foreign Language (EFL) at the secondary level, tailored to students’ difficulty preferences. | 240 Japanese secondary school students (120 first-year and 120 s-year). | Japan | Mixed-methods design: algorithm based on TF-IDF and CEFR-J lexical profiles to estimate material difficulty and student preferences. Technical evaluation of the system, along with usage and perception analysis through log data and a TAM questionnaire (n = 203). | The system accurately estimated the difficulty of simpler texts. Although it did not improve overall performance or motivation, it was well received by already motivated students. A correlation was found between system use and increased reading activity. Improvements to the explanation of recommendations are suggested to enhance persuasiveness. |

| Bagci et al. [66] | To develop and evaluate the Va.Si.Li-Lab system for simulating social learning scenarios in virtual reality, exploring its ability to predict communicative contexts through multimodal data analysis. | 9 simulations across 6 sub-scenarios (school education, organizational pedagogy and social work), with 3 participants per simulation (total: 27). | Germany | Experimental design based on VR simulations with multimodal data collection (voice, gaze, movement, gestures). Analysis using multimodal interaction graphs, SVM classification and evolutionary feature selection. | Multimodal interaction patterns accurately predicted the type of educational scenario. Optimal F1 scores of 1.0 were achieved in selected configurations. The system demonstrated potential for identifying complex educational contexts in real time and fostering critical reflection in training environments. |

| Sajja et al. [67] | To develop and evaluate an AI-assisted educational tool for preparing for the Floodplain Manager (FPM) certification exam, offering personalized learning, adaptive quizzes and automated feedback. | Evaluation with 145 open-ended questions and 82 multiple-choice questions drawn from real certification preparation materials. | United States | Development of a platform based on ChatGPT-4o and RAG architecture. Evaluation through answer comparison, cosine similarity analysis and accuracy in closed-ended questions. Validation with experts and presentation at an international conference. | The system achieved 91.03% accuracy on open-ended questions and 95.12% on multiple-choice items. Experts rated it positively for applicability, scalability and personalization, although challenges were noted regarding integration of local data, deeper semantic evaluation and visualization of geospatial data. |

| Zhang [2] | To apply deep learning techniques to analyze large-scale educational data and predict students’ academic performance, incorporating variables such as engagement, resource usage and parental presence. | 480 student records from the LMS platform Kalboard 360, with 16 attributes related to behavior, performance and family involvement. | China | Predictive model based on LSTM networks. Elastic Net was used for feature selection and the model was trained using regularization techniques, hyperparameter tuning and cross-validation. | The LSTM model achieved 99% accuracy in predicting academic performance (high, medium and low categories), significantly outperforming traditional models (Random Forest, SVM, KNN). The predictive value of variables such as participation in announcements, resource use and parental involvement was confirmed. |

| Lee et al. [48] | To develop and evaluate the VidAAS system based on GPT-4V for automated classroom observation, aimed at enhancing teachers’ reflective practice through real-time teaching analytics. | 5 primary school teachers with experience in educational use of AI, selected as usability test experts. | South Korea | Exploratory study with six phases: theoretical review, design and implementation of VidAAS, usability testing, qualitative interviews, thematic analysis and SWOT analysis. GPT-4V, Whisper and LangChain were employed to integrate computer vision and text analysis. | VidAAS demonstrated high accuracy in evaluating psychomotor domains, provided detailed explanations and showed potential to support both reflection-in-action and reflection-on-action. Identified limitations included latency, scalability and the assessment of affective domains. Opportunities were noted to improve teacher training, personalize feedback and diversify assessment strategies, although ethical challenges related to privacy and evaluative authority were also highlighted. |

| Farhood et al. [68] | To compare and optimize ten machine learning (ML) and deep learning (DL) models for predicting student academic performance, also evaluating the impact of feature selection using Lasso and hyperparameter tuning. | Two public datasets: 395 Portuguese students (mathematics, secondary education); 480 students from 14 countries (primary to secondary education, various subjects). | Australia | Comparison of 7 ML models and 3 DL models (Random Forest, XGBoost, SVM, CNN, FFNN, GBNN, etc.). Evaluation using cross-validation and holdout validation. Application of Lasso regularization and Bayesian hyperparameter optimization. | The most accurate models were Random Forest and XGBoost (ML) and GBNN (DL). Lasso improved performance in several cases (e.g., logistic regression, CNN). Hyperparameter tuning increased model adaptability and accuracy. The study offers practical guidance for selecting predictive models applicable to real educational settings. |

| Naatonis & Acevedo [69] | To evaluate the impact of a personalized learning model based on the ChatGPT API on the academic performance and motivation of technical high school students in social sciences. | 46 s-year technical high school students, divided into an experimental group (n = 23) and a control group (n = 23). | Argentina | Quasi-experimental design with pretest and posttest. Evaluation of academic performance, motivation and learning perception. The ChatGPT API was integrated to provide personalized feedback during text study and practical activities. | The experimental group showed significant improvements in reading comprehension, problem-solving and intrinsic motivation. Students positively valued the interaction with AI, highlighting immediate feedback, natural language use and personalization. Limitations included response time and the need for teacher supervision to prevent excessive dependency. |

| Pesovski et al. [70] | To design, implement and evaluate a GPT-4-based system for generating personalized educational materials in three styles (traditional teacher, Batman and Wednesday Addams), automatically integrated into an LMS. | 20 first-year software engineering students (average age: 20). Longitudinal study with surveys administered at the end of the course and six months later. | North Macedonia and Portugal | Exploratory study with integration of the GPT-4 API into the LMS. Automatic generation of content in three styles, tracking of interaction time, two questionnaires (immediate and 6-month follow-up) and mixed-method analysis of use, preferences and performance. | Students primarily used the traditional style content, although the versions with fictional characters led to increased total study time. The system was positively rated in terms of accessibility, personalization and motivation—particularly among initially low-performing students. A long-term preference shift toward the traditional style was observed. The study highlights the technical and pedagogical feasibility of integrating generative AI to personalize learning via the LMS. |

| Venter et al. [34] | To design, implement and evaluate a web application integrated with GPT-4 to generate automated feedback on written assignments in a large university accounting course, based on the effective feedback principles of Nicol and Macfarlane-Dick. | 75 written assignments from second-year accounting students, selected from five different assessments. | South Africa | Exploratory study with iterative development of a no-code application (Bubble.io), prompt design and validation and feedback quality analysis using a rubric based on seven principles of effective feedback. Evaluation conducted at three levels (adherence: none, partial, or full). | GPT-4-generated feedback achieved an average adherence score of 2.67 out of 3. Strengths were noted in motivation, dialogue and closing feedback loops, while weaknesses appeared in content accuracy and promotion of self-reflection. Teacher supervision was recommended to ensure quality and avoid ethical risks or critical errors. |

| Wang et al. [71] | To develop GlyphGenius, a platform based on AIGC and visual redrawing of Chinese characters through a multi-stage generative model, aimed at improving semantic learning of characters among non-native learners. | 135 participants (121 Chinese language beginners) in a semantic recognition questionnaire, plus 21 volunteers in touchscreen usability tests. | China | Development of an interactive system with a graphical UI and customized generative modules (Stable Diffusion + LoRA + handwriting recognition). Technical evaluation (FID, CLIP, SSIM, OCR), quantitative A/B testing and satisfaction analysis. | The experimental group using redrawn characters improved semantic recognition by 12.76% compared to the control group. The average satisfaction score was 4.24/5. The multi-stage model enhanced structural and aesthetic recognizability of characters, though limitations were noted with unclear prompts and non-pictographic characters. |

| Valverde-Rebaza et al. [72] | To compare the effectiveness of three approaches (traditional programming, ChatGPT and LIDA + GPT) for developing data analytics projects among students and professionals without advanced computational training. | 59 participants (43 students and 16 professionals from various disciplines), with different levels of experience in programming and data analytics. | Mexico | Case study conducted through practical sessions with three sequential activities: traditional development, development assisted by ChatGPT and development using LIDA + GPT integration via API. Data were collected through questionnaires, logs and comparative analysis of time, ease of use, accuracy and result perception. | The LIDA + GPT approach was rated the most accurate and appropriate, although it involved higher initial technical complexity. ChatGPT stood out for its ease and speed of use. Both approaches outperformed traditional programming in perceived efficiency, especially among professionals. The study concludes that integrating generative tools can significantly enhance data analytics learning, provided technical and training barriers are addressed. |

| Garefalakis et al. [73] | To develop and evaluate a remote laboratory platform (HMU-RLP) for teaching Arduino microcontroller programming, integrating supervision and automated assessment systems using AI and xAPI. | No specific student sample reported. The study focuses on technical design, implementation and functional comparison with other RL platforms. | Greece | Technical design and comparative evaluation. Implementation of three types of automated assessment (user actions, shadow microcontroller control and AI-based evaluation). Use of xAPI to log learning analytics and enable personalization. | The HMU-RLP platform automates code evaluation, monitors user interactions and allows for hardware-level analysis. It uses xAPI to track learning and offer adaptive learning pathways. It overcomes limitations of other RLs by enabling real-time evaluation and differentiated teacher control. The platform is proposed as a model for supervised and ubiquitous learning. |

| Hervás et al. [74] | To design and evaluate a modular API ecosystem to support the development of assistive technologies for individuals with cognitive disabilities, promoting interoperability, personalization and service reusability. | 6 applications for cognitive support developed using this ecosystem: PICTAR, LeeFácil, AprendeFácil, ReadIt, Pict2Text and EmoTraductor. Validation with users, special education experts and technical integration tests. | Spain | Architectural design based on microservices and open APIs (REST and GraphQL), with real use cases. Qualitative evaluation of adaptability, scalability and development efficiency according to accessibility and reusability criteria. | The ecosystem enabled integration of features such as text simplification, pictograms and emotional analysis across multiple applications. It was noted for its personalization capabilities, low maintenance cost and high potential for collaborative innovation. A centralized platform is planned to enhance API management in terms of security, scalability and traceability. |

| Santhosh et al. [75] | To develop and evaluate an adaptive learning system based on real-time eye tracking, which generates personalized summaries using ChatGPT when low student engagement is detected. | 22 university students (11 experimental, 11 control), all with advanced English proficiency. | Germany and Japan | Mixed-method design using eye tracking (Tobii 4C, 90 Hz), InceptionTime and Transformer models for engagement prediction. The experimental group received summaries generated by ChatGPT when low attention was detected. Evaluation included questionnaires, objective and subjective metrics and statistical validation. | The experimental group demonstrated higher engagement, comprehension and confidence (p < 0.01). The system achieved 68.15% accuracy in engagement prediction. Adaptive interventions stabilized visual patterns, reduced cognitive load and improved focus on content. The study highlights the feasibility of integrating gaze data and LLMs to personalize learning experiences in real time. |

| Chen & Wang [1] | To identify the most effective learning behaviors in a web-based scientific inquiry environment through process data analysis, sequential pattern mining and lag-sequential analysis supported by xAPI. | 48 seventh-grade secondary education students. Divided into high- and low-performing groups for comparative analysis. | Taiwan | Pre-experimental design. Process data recorded via xAPI in CWISE. Analysis included correlations, sequential pattern mining and lag-sequential analysis to compare behavioral sequences between groups with different performance levels. | Inquiry competence and time spent on the buoyancy simulation significantly predicted performance. High-performing students revised their hypotheses after experiments, while low-performing students analyzed without experimenting. The analytical tools enabled identification of effective behavioral sequences to guide instructional interventions. |

| Okonkwo & Ade-Ibijola [76] | To design and evaluate Python-Bot, an educational chatbot developed using the SnatchBot platform to support beginner students in understanding fundamental Python programming concepts. | 205 university students (mostly first-year) enrolled in an introductory programming course at the University of Johannesburg. | South Africa | No-code chatbot design with a conversational interface. Evaluation through a perception survey covering ease of use, response accuracy, usefulness of feedback and learning improvement. Integrated features included algorithm explanations, code examples and tutorial scheduling. | 81.4% of students reported that Python-Bot facilitated their programming learning. Over 99% validated the accuracy of its responses and 73.7% found it easy to use. Benefits were noted in terms of accessibility, personalization and support in remote learning contexts (COVID-19). Improvements were suggested for advanced syntax support and teacher integration. |

| Santamaría-Bonfil et al. [49] | To develop a learning ecosystem for lineman training based on xAPI, Big Data and learning analytics, capable of integrating both legacy and new data to personalize learning pathways. | 43 maintenance procedures from the LMT training program and 9 graduate students involved in a proof-of-concept study on self-records (SR). | Mexico | Technical and experimental design. Development of a domain model using text mining (BoW, DTM, clustering) and exploratory analysis of informal and emotional self-records using xAPI and DEQ. Proof of concept conducted with bookmarklets and LRS. | The hierarchical model enabled the definition of personalized learning pathways based on procedure similarity. Informal self-records showed potential as a source of support content, while emotional records served as indicators of student engagement. Technical, ethical and standardization challenges were identified in using xAPI with emotional SRs, but the feasibility of the approach for personalized training in technical contexts was validated. |

| Gilbert et al. [30] | To describe the architecture, applications and evaluations of the xPST system, an authoring tool designed to create intelligent tutors integrated into third-party software without requiring advanced programming or cognitive science knowledge. | xPST was evaluated with 75 participants, including 49 statistics students and 16 content authors, across various educational and development environments. | United States | Series of exploratory and comparative studies (both quantitative and qualitative): implementation of tutors in real software environments (Paint.NET v3.5, CAPE v7, Torque 3D v3.5, Firefox v29), analysis of learning outcomes, usability, efficiency, user perception and authoring time. | xPST enables the creation of effective tutors at low training cost, making it accessible to non-programmers. In several contexts, xPST-generated tutors improved student performance and satisfaction. The tool was positively rated for ease of use, though it showed limitations in flexibility and generalizability compared to other systems such as CTAT. |
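
Sajja et al. [67] report comparing generated answers with reference answers through cosine similarity. The sketch below illustrates that comparison with plain term-frequency vectors. This is an assumption made to keep the example self-contained: the table does not state how the texts were vectorized, and sentence embeddings are a common alternative. The 0.7 threshold used to count an open-ended answer as correct is likewise hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Lowercased word counts; a simple stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine of the angle between the term-frequency vectors of two texts."""
    va, vb = term_vector(a), term_vector(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0  # an empty text is treated as dissimilar


def open_ended_accuracy(pairs, threshold=0.7):
    """Share of (generated, reference) pairs at or above a hypothetical similarity threshold."""
    if not pairs:
        return 0.0
    hits = sum(1 for gen, ref in pairs if cosine_similarity(gen, ref) >= threshold)
    return hits / len(pairs)


# Illustrative pairs only; not taken from the certification materials.
pairs = [
    ("The base flood elevation is the 1% annual chance flood level.",
     "Base flood elevation is the level of the 1% annual chance flood."),
    ("Zoning is a local matter.",
     "NFIP requires community participation."),
]
print(open_ended_accuracy(pairs))  # -> 0.5
```

Bag-of-words cosine rewards shared vocabulary rather than shared meaning, which is consistent with the study's own note that deeper semantic evaluation remained a challenge.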
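
Three of the tabulated studies (Garefalakis et al. [73], Chen & Wang [1] and Santamaría-Bonfil et al. [49]) record learning events as xAPI statements sent to a Learning Record Store (LRS). The sketch below shows what one such statement might look like in Python. It assumes a learner identified by e-mail, an ADL verb IRI and a hypothetical activity IRI under example.org; none of these identifiers comes from the studies, and the helper name `build_statement` is illustrative.

```python
import json
from datetime import datetime, timezone


def build_statement(learner_email, learner_name, verb_id, verb_display,
                    activity_id, activity_name, success=None, scaled_score=None):
    """Assemble a minimal xAPI statement (actor, verb, object, optional result)."""
    statement = {
        "actor": {"objectType": "Agent", "name": learner_name,
                  "mbox": f"mailto:{learner_email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_display}},
        "object": {"objectType": "Activity", "id": activity_id,
                   "definition": {"name": {"en-US": activity_name}}},
        # ISO 8601 timestamp in UTC, e.g. 2025-01-01T10:00:00.000000+00:00
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    result = {}
    if success is not None:
        result["success"] = success
    if scaled_score is not None:
        # The xAPI specification restricts score.scaled to the range [-1, 1].
        if not -1.0 <= scaled_score <= 1.0:
            raise ValueError("xAPI scaled scores must lie in [-1, 1]")
        result["score"] = {"scaled": scaled_score}
    if result:
        statement["result"] = result
    return statement


# Hypothetical example: a student completes a buoyancy simulation.
stmt = build_statement(
    "student01@example.org", "Student 01",
    "http://adlnet.gov/expapi/verbs/completed", "completed",
    "https://example.org/activities/buoyancy-simulation", "Buoyancy simulation",
    success=True, scaled_score=0.8,
)
print(json.dumps(stmt, indent=2))
```

In a deployment, statements like this would be POSTed to the LRS `statements` endpoint with the `X-Experience-API-Version` header set. Keeping actor, verb and object as separate IRIs is what lets an LRS aggregate events from different platforms, which is the interoperability property the studies rely on.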
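
Chen & Wang [1] used lag-sequential analysis to compare the behavioural sequences of high- and low-performing students. A common formulation counts lag-1 transitions between coded behaviours. For each transition from code $i$ to code $j$ it computes an adjusted residual $z_{ij} = (o_{ij} - e_{ij}) / \sqrt{e_{ij}(1 - r_i/N)(1 - c_j/N)}$ with $e_{ij} = r_i c_j / N$, where $r_i$ and $c_j$ are row and column totals and $N$ is the number of transitions. Transitions with $z > 1.96$ are treated as significant. The sketch below implements that formulation. The behaviour codes (H = hypothesis, E = experiment, A = analysis) are hypothetical and do not reproduce the study's coding scheme.

```python
import math
from collections import Counter


def lag1_adjusted_residuals(sequences):
    """Adjusted residuals (z-scores) for lag-1 transitions between behaviour codes."""
    transitions = Counter()
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            transitions[(a, b)] += 1
    total = sum(transitions.values())
    if total == 0:
        return {}
    row, col = Counter(), Counter()  # how often each code starts / ends a transition
    for (a, b), n in transitions.items():
        row[a] += n
        col[b] += n
    codes = sorted(set(row) | set(col))
    residuals = {}
    for a in codes:
        for b in codes:
            expected = row[a] * col[b] / total
            denom = math.sqrt(expected * (1 - row[a] / total) * (1 - col[b] / total))
            if denom > 0:  # skip cells where the residual is undefined
                residuals[(a, b)] = (transitions[(a, b)] - expected) / denom
    return residuals


# Hypothetical coded sessions for two students.
sessions = [["H", "E", "A", "H", "E", "A"], ["H", "E", "A"]]
res = lag1_adjusted_residuals(sessions)
significant = sorted(pair for pair, z in res.items() if z > 1.96)
print(significant)
```

Comparing the set of significant transitions between groups (for example, whether hypothesis revision follows experimentation) is what allows the kind of contrast the study reports between high- and low-performing students.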

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pérez-Jorge, D.; González-Afonso, M.C.; Santos-Álvarez, A.G.; Plasencia-Carballo, Z.; Perdomo-López, C.d.l.Á. The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management. Information 2025, 16, 540. https://doi.org/10.3390/info16070540

Pérez-Jorge D, González-Afonso MC, Santos-Álvarez AG, Plasencia-Carballo Z, Perdomo-López CdlÁ. The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management. Information. 2025; 16(7):540. https://doi.org/10.3390/info16070540

Chicago/Turabian Style: Pérez-Jorge, David, Miriam Catalina González-Afonso, Anthea Gara Santos-Álvarez, Zeus Plasencia-Carballo, and Carmen de los Ángeles Perdomo-López. 2025. "The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management" Information 16, no. 7: 540. https://doi.org/10.3390/info16070540

APA Style: Pérez-Jorge, D., González-Afonso, M. C., Santos-Álvarez, A. G., Plasencia-Carballo, Z., & Perdomo-López, C. d. l. Á. (2025). The Impact of AI-Driven Application Programming Interfaces (APIs) on Educational Information Management. Information, 16(7), 540. https://doi.org/10.3390/info16070540