Business Logic Vulnerabilities in the Digital Era: A Detection Framework Using Artificial Intelligence

Abstract

1. Introduction

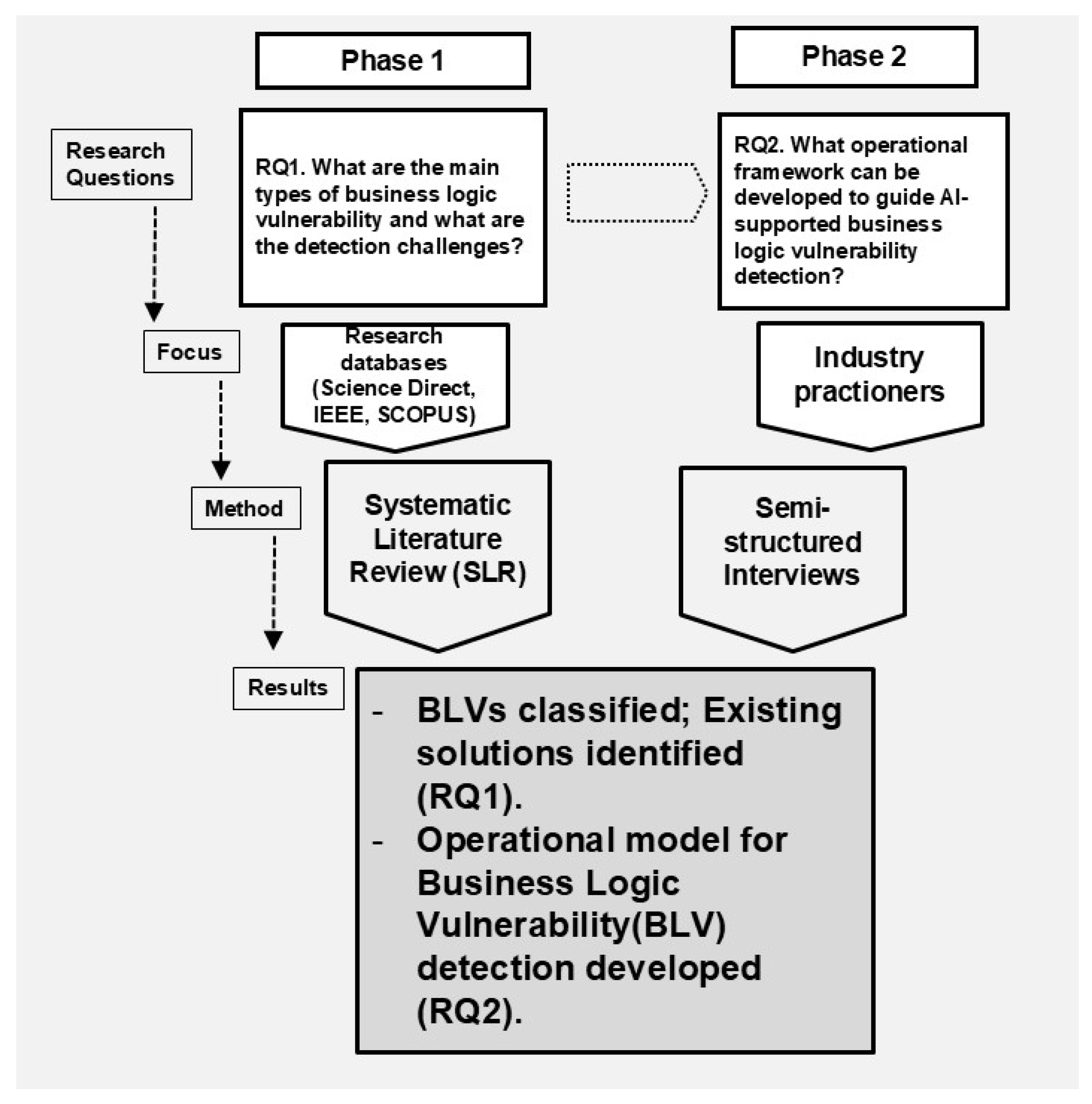

2. Research Method

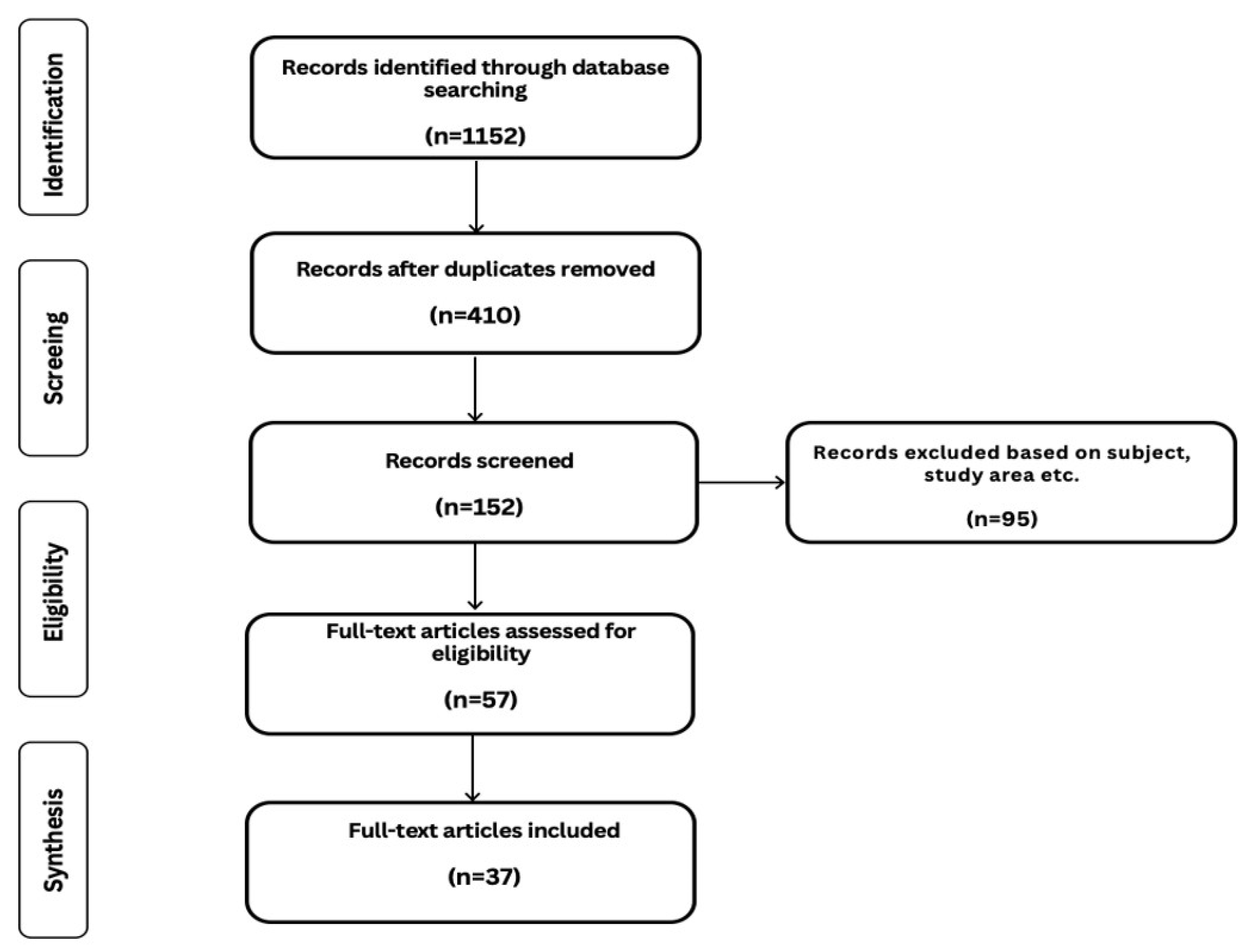

2.1. Systematic Literature Review

2.2. Qualitative Interviews

3. Results

3.1. Introduction

3.2. RQ1. What Are the Main Types of Business Logic Vulnerability and What Are the Detection Challenges?

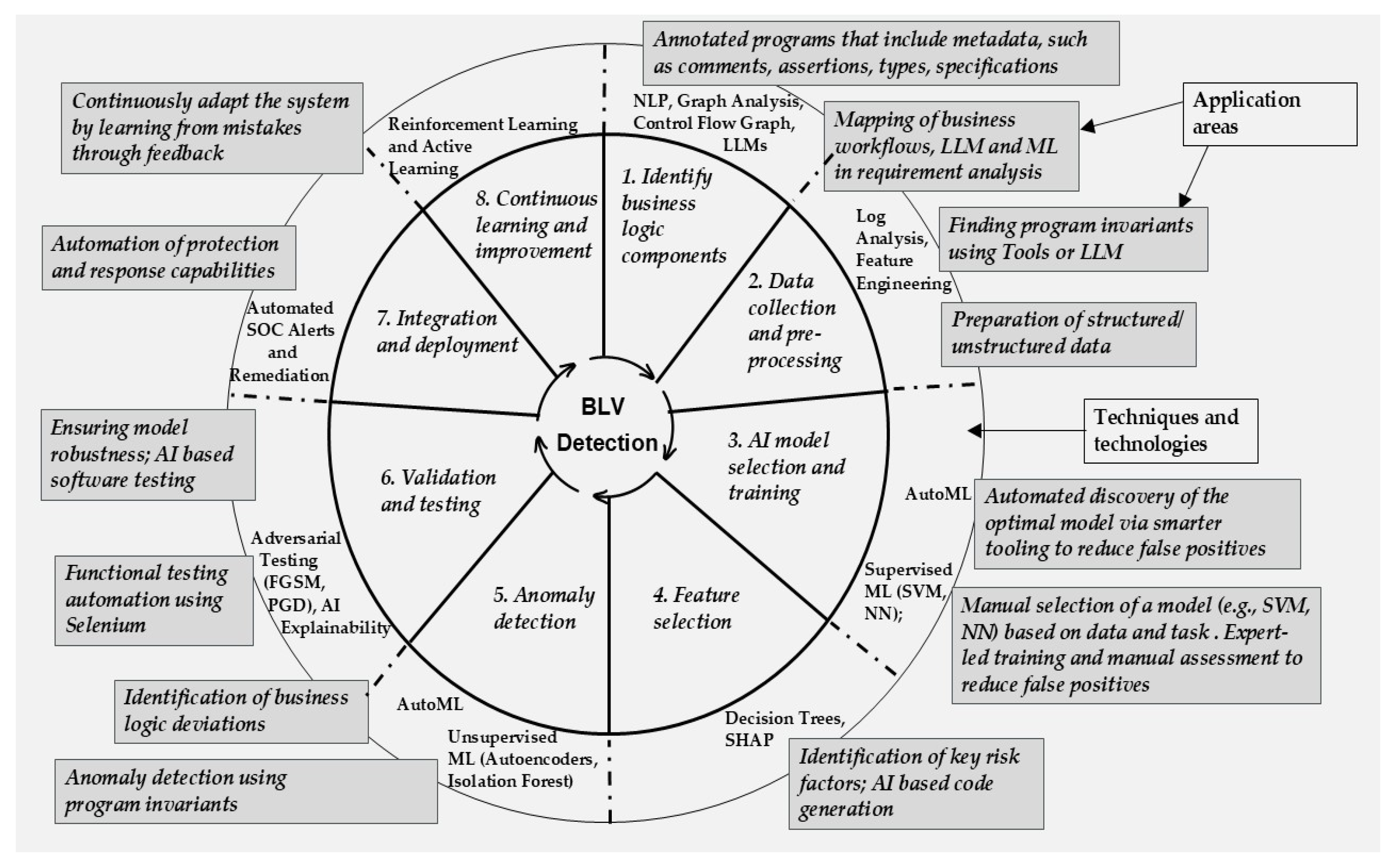

3.3. RQ2. What Operational Framework Can Be Developed to Guide AI-Supported Business Logic Vulnerability Detection?

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Systematic Literature Review Detail

| RQ | Search Terms | Count |
|---|---|---|
| **IEEE** | | |
| RQ1 | (“All Metadata”:“Business Logic Vulnerabilities”) | 1 |
| RQ1 | (“All Metadata”:“Logic Attacks”) | 10 |
| RQ1 | (“All Metadata”:Business Logic Vulnerabilities) AND (“All Metadata”:AI) | 0 |
| RQ1 | (“All Metadata”:Logic Attacks) AND (“All Metadata”:AI) | 188 |
| RQ1 | (“All Metadata”:AI) AND (“All Metadata”:business process) AND (“All Metadata”:cyber security) | 115 |
| RQ1 | (“All Metadata”:Artificial intelligence) AND (“All Metadata”:“process security”) | 39 |
| RQ1 | (“All Metadata”:Artificial intelligence) AND (“All Metadata”:“business security”) | 14 |
| RQ1 | (“All Metadata”:Artificial intelligence) AND (“All Metadata”:business process) AND (“All Metadata”:compromise) | 1 |
| RQ2 | (“All Metadata”:“Artificial intelligence”) AND (“All Metadata”:vulnerability scanner) | 20 |
| RQ2 | (“All Metadata”:“Artificial intelligence”) AND (“All Metadata”:Framework) AND (“All Metadata”:Business Security) AND (“All Metadata”:Vulnerability Detection) | 16 |
| RQ2 | (“All Metadata”:“Artificial intelligence”) AND (“All Metadata”:threat detection) AND (“All Metadata”:Business Process) | 89 |
| **Scopus** | | |
| RQ1 | ALL (“Business Logic Vulnerabilities”) | 20 |
| RQ1 | ALL (“Logic Attacks”) | 117 |
| RQ1 | ALL (“Business Logic Vulnerabilities”) AND ALL (“AI”) | 0 |
| RQ1 | ALL (“Logic Attacks”) AND ALL (“AI”) | 5 |
| RQ1 | ALL (“AI”) AND ALL (“Business Process”) AND ALL (“Cyber Security”) AND ALL (“Corporate”) | 25 |
| RQ1 | ALL (Artificial Intelligence) AND (“Business Process Security”) AND (“Business Vulnerability”) | 9 |
| RQ1 | ALL (Artificial Intelligence) AND (“Business Logic Vulnerability”) | 6 |
| RQ1 | ALL (Artificial intelligence) AND ALL (“business process”) AND ALL (“Generative AI”) AND (“Cybersecurity”) | 3 |
| RQ2 | ALL (“Artificial Intelligence”) AND (“Vulnerability Scanner”) AND (“Cybersecurity”) AND (“Detection”) | 64 |
| RQ2 | ALL (“Artificial Intelligence”) AND (“Framework”) AND (“Business Security”) AND (“Vulnerability”) | 23 |
| RQ2 | ALL (“Artificial Intelligence”) AND (“Threat Detection”) AND (“Business Process”) | 128 |
| **ScienceDirect** | | |
| RQ1 | “Business Logic Vulnerabilities” | 6 |
| RQ1 | “Logic Attacks” | 68 |
| RQ1 | “Business Logic Vulnerabilities” AND “AI” | 1 |
| RQ1 | “Logic Attacks” AND “AI” | 14 |
| RQ1 | “Artificial Intelligence” AND “Business Process” AND “Cyber Security” AND “Corporate Security” | 6 |
| RQ1 | “Artificial Intelligence” AND “Business Vulnerability” | 10 |
| RQ1 | “Artificial Intelligence” AND “Business Logic Vulnerability” | 1 |
| RQ1 | Artificial intelligence AND “business process” AND “Generative AI” AND “Cybersecurity” | 27 |
| RQ2 | “Artificial Intelligence” AND “Vulnerability Scanner” AND “Cybersecurity” | 42 |
| RQ2 | “Artificial Intelligence” AND “Threat Detection” AND “Business Process” | 55 |
| RQ2 | Artificial Intelligence AND “Framework” AND “Business Security” AND “Vulnerability” | 29 |

| Source | Definition |
|---|---|
| Li et al. [33] | “The business logic in the software design phase reflects the interaction between the objects. Such interactions may be exploited by an attacker, which can be used to break software system for illegitimate interests.” |
| Stergiopoulos et al. [8] | “‘Application Business Logic Vulnerability’ (BLV) is the flaw present in the faulty implementation of business logic rules within the application code.” |
| Stergiopoulos et al. [8] | “Business logic vulnerabilities are an important class of defects that are the result of faulty application logic. Business logic refers to requirements implemented in algorithms that reflect the intended functionality of an application.” |
| Pellegrino and Balzarotti [26] | “Logic vulnerabilities still lack a formal definition, but, in general, they are often the consequence of an insufficient validation of the business process of a web application.” |
| Deepa & Thilagam [2] | “Business Logic Vulnerabilities (BLVs) are weaknesses that commonly allow attackers to manipulate the business logic of an application. They are easily exploitable, and the attacks exploiting BLVs are legitimate application transactions used to carry out an undesirable operation that is not part of normal business practice.” |
| Ghorbanzadeh and Shahriari [11] | “Logic vulnerabilities are due to defects in the application logic implementation such that the application logic is not the logic that was expected. Indeed, such vulnerabilities pattern depends on the design and business logic of the application. There are no specific and common patterns for application logic vulnerabilities in commercial applications.” |
| Kim et al. [28] | “Business logic vulnerabilities occur when the application logic is exposed to the client-side, allowing attackers to tamper with the business flow and perform unintended operations.” |
| Zeller et al. [7] | “An important class of security problems are vulnerabilities in business rules. (…) Such attacks are called logical attacks and pose a distinct challenge to securing software applications. In logical attacks, weaknesses in the business rules are identified and exploited with the intent of disrupting services offered to legitimate users.” |
| OWASP [6] | “Weaknesses in this category identify some of the underlying problems that commonly allow attackers to manipulate the business logic of an application. Errors in business logic can be devastating to an entire application. They can be difficult to find automatically, since they typically involve legitimate use of the application’s functionality. However, many business logic errors can exhibit patterns that are similar to well-understood implementation and design weaknesses.” |
| PortSwigger [27] | “Business logic vulnerabilities are flaws in the design and implementation of an application that allow an attacker to elicit unintended behavior. This potentially enables attackers to manipulate legitimate functionality to achieve a malicious goal. These flaws are generally the result of failing to anticipate unusual application states that may occur and, consequently, failing to handle them safely.” |
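Several of the definitions above stress that a BLV is triggered by a syntactically legitimate transaction. The sketch below illustrates that idea with a hypothetical checkout helper; the function names, the price, and the `max_quantity` limit are assumptions made for illustration and do not come from the cited sources.

```python
def order_total_naive(unit_price_cents: int, quantity: int) -> int:
    """Computes a total without checking the business rule on quantity."""
    return unit_price_cents * quantity


def order_total_checked(unit_price_cents: int, quantity: int, max_quantity: int = 100) -> int:
    """Same computation, but enforces an assumed rule: 1 <= quantity <= max_quantity."""
    if not isinstance(quantity, int) or not 1 <= quantity <= max_quantity:
        raise ValueError(f"quantity must be an integer between 1 and {max_quantity}")
    return unit_price_cents * quantity


# A negative quantity is a well-formed integer, so no injection filter objects,
# yet the naive total becomes a credit owed to the customer.
naive_total = order_total_naive(1999, -3)

try:
    order_total_checked(1999, -3)
    rejected = False
except ValueError:
    rejected = True
```

Nothing in the request is malformed, which is one reason signature-based scanners tend to miss this class of flaw: the defect lies in an unstated business rule rather than in the input syntax.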

References

1. SiteLock. Statistics & Insights into Today’s Most Challenging Cybersecurity Threats. 2021. Available online: https://www.sitelock.com/resources/security-report/ (accessed on 23 April 2025).

2. Deepa, G.; Thilagam, P.S. Securing web applications from injection and logic vulnerabilities: Approaches and challenges. Inf. Softw. Technol. 2016, 74, 160–180.

3. Affia, A. A Black-Box Methodology for Attacking Business Logic Vulnerabilities in Web Applications. Research Paper. Faculty of Information Technology, Tallinn University of Technology. 2017. Available online: https://www.researchgate.net/publication/336367792_A_Black-box_Methodology_for_Attacking_Business_Logic_Vulnerabilities_in_Web_Applications (accessed on 23 April 2025).

4. HackerOne. The HackerOne Top 10 Vulnerability Types. 2023. Available online: https://www.hackerone.com/lp/top-ten-vulnerabilities (accessed on 5 May 2025).

5. Hofesh, B. Business Logic Vulnerabilities: Busting the Automation Myth. 2025. Available online: https://brightsec.com/blog/business-logic-vulnerabilities-busting-the-automation-myth/ (accessed on 10 April 2025).

6. OWASP Foundation. Introduction to Business Logic. Available online: https://owasp.org/www-project-web-security-testing-guide/stable/4-Web_Application_Security_Testing/10-Business_Logic_Testing/00-Introduction_to_Business_Logic (accessed on 10 May 2025).

7. Zeller, S.; Khakpour, N.; Weyns, D.; Deogun, D. Self-protection against business logic vulnerabilities. In Proceedings of the 2020 IEEE/ACM 15th International Symposium on Software Engineering for Adaptive and Self-Managing Systems (SEAMS), Seoul, Republic of Korea, 25–26 May 2020; pp. 174–180.

8. Stergiopoulos, G.; Tsoumas, B.; Gritzalis, D. On business logic vulnerabilities hunting: The APP_LogGIC framework. In Network and System Security: Proceedings of the 7th International Conference, NSS 2013, Madrid, Spain, 3–4 June 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 236–249.

9. Ramezan, C.A. Examining the cyber skills gap: An analysis of cybersecurity positions by sub-field. J. Inf. Syst. Educ. 2023, 34, 94–105.

10. Sheikh, A. Introduction to Ethical Hacking. In Certified Ethical Hacker (CEH) Preparation Guide: Lesson-Based Review of Ethical Hacking and Penetration Testing; Apress: Berkeley, CA, USA, 2021; pp. 1–9.

11. Ghorbanzadeh, M.; Shahriari, H.R. Detecting application logic vulnerabilities via finding incompatibility between application design and implementation. IET Softw. 2020, 14, 377–388.

12. OWASP. Web Security Testing Guide. 2021. Available online: https://owasp.org/www-project-web-security-testing-guide/ (accessed on 21 February 2025).

13. Selenium. Selenium WebDriver. 2025. Available online: https://www.selenium.dev/documentation/webdriver/ (accessed on 30 May 2025).

14. Felmetsger, V.; Cavedon, L.; Kruegel, C.; Vigna, G. Toward automated detection of logic vulnerabilities in web applications. In Proceedings of the 19th USENIX Security Symposium (USENIX Security 10), Washington, DC, USA, 11–13 August 2010.

15. Pei, K.; Bieber, D.; Shi, K.; Sutton, C.; Yin, P. Can large language models reason about program invariants? In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Volume 202, pp. 27496–27520. Available online: https://proceedings.mlr.press/v202/pei23a.html (accessed on 15 May 2025).

16. Maslak, O.I.; Maslak, M.V.; Grishko, N.Y.; Hlazunova, O.O.; Pererva, P.G.; Yakovenko, Y.Y. Artificial intelligence as a key driver of business operations transformation in the conditions of the digital economy. In Proceedings of the 2021 IEEE International Conference on Modern Electrical and Energy Systems (MEES), Kremenchuk, Ukraine, 21–24 September 2021; pp. 1–5.

17. Rawindaran, N.; Jayal, A.; Prakash, E. Machine Learning Cybersecurity Adoption in Small and Medium Enterprises in Developed Countries. Computers 2021, 10, 150.

18. Zhao, Z.C.; Li, D.X.; Dai, W.S. Machine-learning-enabled intelligence computing for crisis management in small and medium-sized enterprises (SMEs). Technol. Forecast. Soc. Change 2023, 191, 122492.

19. Cubric, M.; Li, F. Bridging the ‘Concept-Product’ gap in new product development: Emerging insights from the application of artificial intelligence in FinTech SMEs. Technovation 2024, 134, 103017.

20. Neupane, S.; Fernandez, I.A.; Mittal, S.; Rahimi, S. Impacts and Risk of Generative AI Technology on Cyber Defense. 2023. Available online: https://arxiv.org/pdf/2306.13033 (accessed on 14 February 2025).

21. Metin, B.; Özhan, F.G.; Wynn, M. Digitalisation and Cybersecurity: Towards an Operational Framework. Electronics 2024, 13, 4226.

22. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Keele University: Keele, UK; University of Durham: Durham, UK, 2007; Volume 2.

23. Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

24. Guest, G.; Bunce, A.; Johnson, L. How Many Interviews Are Enough? An Experiment with Data Saturation and Variability. Field Methods 2006, 18, 59–82.

25. Flick, U. An Introduction to Qualitative Research, 4th ed.; Sage Publications, Ltd.: London, UK, 2009.

26. Pellegrino, G.; Balzarotti, D. Toward Black-Box Detection of Logic Flaws in Web Applications. In Proceedings of the NDSS Symposium 2014, San Diego, CA, USA, 23–26 February 2014; Volume 14, pp. 23–26.

27. PortSwigger. Business Logic Vulnerabilities. Available online: https://portswigger.net/web-security/logic-flaws#what-are-business-logic-vulnerabilities (accessed on 7 April 2025).

28. Kim, I.L.; Zheng, Y.; Park, H.; Wang, W.; You, W.; Aafer, Y.; Zhang, X. Finding client-side business flow tampering vulnerabilities. In Proceedings of the 2020 IEEE/ACM 42nd International Conference on Software Engineering (ICSE), Seoul, Republic of Korea, 5–11 October 2020; pp. 222–233.

29. Alidoosti, M.; Nowroozi, A.; Nickabadi, A. Evaluating the web-application resiliency to business-layer DoS attacks. ETRI J. 2020, 42, 433–445.

30. Alidoosti, M.; Nowroozi, A.; Nickabadi, A. BLProM: A Black-Box Approach for Detecting Business-Layer Processes in the Web Applications. J. Comput. Secur. 2019, 6, 65–80.

31. Alidoosti, M.; Nowroozi, A.; Nickabadi, A. Business-Layer Session Puzzling Racer: Dynamic Security Testing Against Session Puzzling Race Conditions in Business Layer. ISC Int. J. Inf. Secur. 2022, 14, 83–104.

32. Li, X.; Xue, Y. LogicScope: Automatic discovery of logic vulnerabilities within web applications. In Proceedings of the 8th ACM SIGSAC Symposium on Information, Computer and Communications Security, Hangzhou, China, 8–10 May 2013; pp. 481–486.

33. Li, X.; Meng, G.; Feng, S.; Li, X.; Pan, D. A framework based security-knowledge database for vulnerabilities detection of business logic. In Proceedings of the 2010 International Conference on Optics, Photonics and Energy Engineering (OPEE), Wuhan, China, 10–11 May 2010; Volume 1, pp. 292–297.

34. Deepa, G.; Thilagam, P.S.; Praseed, A.; Pais, A.R. DetLogic: A black-box approach for detecting logic vulnerabilities in web applications. J. Netw. Comput. Appl. 2018, 109, 89–109.

35. Taubenberger, S.; Jürjens, J.; Yu, Y.; Nuseibeh, B. Resolving vulnerability identification errors using security requirements on business process models. Inf. Manag. Comput. Secur. 2023, 21, 202–223.

36. Merrell, S.A.; Stevens, J.F. Improving the vulnerability management process. EDPAC: EDP Audit Control Secur. Newsl. 2008, 38, 13–22.

37. Al-Turkistani, H.F.; Aldobaian, S.; Latif, R. Enterprise architecture frameworks assessment: Capabilities, cyber security and resiliency review. In Proceedings of the 2021 1st International Conference on Artificial Intelligence and Data Analytics (CAIDA), Riyadh, Saudi Arabia, 6–7 April 2021; pp. 79–84.

38. Chalvatzis, I.; Karras, D.; Papademetriou, R. Evaluation of Security Vulnerability Scanners for Small and Medium Enterprises Business Networks Resilience towards Risk Assessment. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 29–31 March 2019; pp. 52–58.

39. McMahon, E.; Patton, M.; Samtani, S.; Chen, H. Benchmarking Vulnerability Assessment Tools for Enhanced Cyber-Physical System (CPS) Resiliency. In Proceedings of the 2018 IEEE International Conference on Intelligence and Security Informatics (ISI), Miami, FL, USA, 9–11 November 2018; pp. 100–105.

40. Altaha, S.; Rahmann, M.M. A Mini Literature Review on Integrating Cybersecurity for Business Continuity. In Proceedings of the 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Bali, Indonesia, 20–23 February 2023; pp. 353–359.

41. López, M.T.G.; Gasca, R.M.; Pérez-Álvarez, J.M. Compliance validation and diagnosis of business data constraints in business processes at runtime. Inf. Syst. 2015, 48, 26–43.

42. Ernst, M.D.; Perkins, J.H.; Guo, P.J.; McCamant, S.; Pacheco, C.; Tschantz, M.S.; Xiao, C. The Daikon system for dynamic detection of likely invariants. Sci. Comput. Program. 2007, 69, 35–45.

43. Marin-Castro, H.; Tello-Leal, E. Event Log Preprocessing for Process Mining: A Review. Appl. Sci. 2021, 11, 10556.

44. Fiore, U.; Santis, A.D.; Perla, F.; Zanetti, P.; Palmieri, F. Using generative adversarial networks for improving classification effectiveness in credit card fraud detection. Inf. Sci. 2017, 479, 448–455.

45. Raychaudhuri, K.; Kumar, M.; Bhanu, S. A Comparative Study and Performance Analysis of Classification Techniques: Support Vector Machine, Neural Networks and Decision Trees. In Proceedings of the International Conference on Intelligent Computing, Communication and Convergence, Ghaziabad, India, 11–12 November 2016; pp. 13–21.

46. Gyimah, N.K.; Akinie, R.; Mwakalonge, J.; Izison, B.; Mukwaya, A.; Ruganuza, D.; Sulle, M. An AutoML-based approach for network intrusion detection. In Proceedings of the 2025 IEEE SoutheastCon, Concord, NC, USA, 22–30 March 2025; pp. 1177–1183.

47. LeDell, E.; Poirier, S. H2O AutoML: Scalable automatic machine learning. In Proceedings of the 7th ICML Workshop on Automated Machine Learning, Online, 17–18 July 2020. Available online: https://www.automl.org/wp-content/uploads/2020/07/AutoML_2020_paper_61.pdf (accessed on 12 May 2025).

48. Sabeel, U.; Heydari, S.S.; Elgazzar, K.; El-Khatib, K. Building an intrusion detection system to detect atypical cyberattack flows. IEEE Access 2021, 9, 94352–94370.

49. Dasgupta, S.; Yelikar, B.V.; Naredla, S.; Ibrahim, R.K.; Alazzam, M.B. AI-powered cybersecurity: Identifying threats in digital banking. In Proceedings of the 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 12–13 May 2023; pp. 2614–2619.

50. Tao, C.; Gao, J.; Wang, T. Testing and Quality Validation for AI Software–Perspectives, Issues, and Practices. IEEE Access 2019, 7, 120164–120175.

51. Raad, R.; Searah, S.A.; Saleem, M.; Al-Tahee, A.M.; Abbas, S.Q.; Kadhim, M. Research on Corporate Protection Systems using Advanced Protection Techniques and Information Security. In Proceedings of the 2023 International Conference on Emerging Research in Computational Science (ICERCS), Coimbatore, India, 7–9 December 2023; pp. 1–6.

52. Lethbridge, T.C. Low-code is often high-code, so we must design low-code platforms to enable proper software engineering. In Leveraging Applications of Formal Methods, Verification and Validation: Proceedings of the 10th International Symposium on Leveraging Applications of Formal Methods, ISoLA 2021, Rhodes, Greece, 17–29 October 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 202–212.

53. Kushwaha, M.K.; David, P.; Suseela, G. Automation and DevSecOps: Streamlining Security Measures in Financial System. In Proceedings of the 2024 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 12–14 July 2024; pp. 1–6.

54. Mengi, G.; Singh, S.K.; Kumar, S.; Mahto, D.; Sharma, A. Automated machine learning (AutoML): The future of computational intelligence. In International Conference on Cyber Security, Privacy and Networking (ICSPN 2022); Springer International Publishing: Cham, Switzerland, 2021; pp. 309–317.

| Included | Excluded |
|---|---|
| Papers published in English | Papers not published in English |
| Studies focusing on business logic vulnerabilities in organizations and AI implementation in order to reduce cyber risks | Studies focusing only on the negative impact of AI on organizational security |
| Conference papers, journal articles, and books from databases such as IEEE, Scopus, and ScienceDirect | Studies not focusing on business logic vulnerabilities in organizations and AI implementation in order to reduce cyber risks |
| Literature available through open access or through university library access to research databases | Sources not accessible through open access or through our university library |
| Literature sources published between 2010 and 2024 | Literature published before 2010 |

| Code | Experience (yrs) | Age | Gender | Role/Position | Team Size/Context |
|---|---|---|---|---|---|
| P1 | 3 | 26 | Female | Co-Founder & Chief Developer | 3-person team |
| P2 | 3 | 37 | Female | Co-Founder & Chief Developer | 3-person core start-up |
| P3 | 17 | 45 | Male | Senior Development Team Leader | 5-person team |
| P4 | 13 | 51 | Male | Software Development Team Leader | 28-person team |
| P5 | 10 | 38 | Male | Software Development Team Leader/Partner | 15-person team |
| P6 | 27 | 45 | Male | Software Development Team Manager & Company Owner | 6-person team |
| P7 | 23 | 47 | Male | R&D & Software-Quality Development Team Manager | 6-person team |

| Primary Theme | Sub-Theme | Relevant Interviewee Quotation | Interpretation |
|---|---|---|---|
| Detection Challenges | Manual-first detection culture | “We rely exclusively on manual assessments to detect BLVs … automated tools create too many false positives.” P7 | Reliance on human judgement introduces scalability, cost and consistency issues. |
| | Limited QA resources & time pressure | “In start-ups … many BLVs are discovered in production because we can’t cover every edge path.” P5 | Budget and schedule pressure shift discovery to post-release. |
| | Incomplete or misunderstood requirements | “BLVs often result from human error, such as incomplete or incorrectly communicated requirements.” P6 | Early analysis flaws propagate into hidden logic issues. |
| | Developer blindness/familiarity bias | “Developers may be unable to recognise flaws in the logic they themselves implemented.” P7 | Insider perspective masks hidden assumptions. |
| | Automation blind spots | “Automated testing and scanning tools are typically ineffective … the system behaves correctly under standard conditions.” P2 | Current scanners lack business-context understanding, forcing manual fallback. |
| Nature and Complexity of BLVs | Access-control & authorisation bypass | “A customer was able to view data they were not authorised to access due to a permission misconfiguration.” P4 | Mis-scoped privileges silently expose data. |
| | Time-sensitive financial logic gaps | “Quarter-based pricing logic was missed, so the system failed to reflect time-sensitive costs.” P7 | Overlooked temporal rules create direct revenue impact. |
| | Concurrency/race-condition exploits | “A race condition let the same balance be used multiple times, creating funds that do not actually exist.” P6 | State timing issues allow monetary abuse. |
| | Undetected fraud scenarios | “Failure to detect fraudulent credit-card use can lead to significant financial loss.” P3 | Fraud logic needs behavioural context as well as code checks. |
| | Hidden integration limits | “An undocumented 10 MB API limit silently truncated data, making the issue hard to detect.” P3 | Third-party constraints become latent BLVs when unvalidated. |
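The race condition described by P6, where one balance is spent more than once, can be reproduced deterministically. The following is a minimal sketch, not the interviewee's system: the wallet dictionary, the function names, and the generator-based interleaving are all assumptions chosen so that the stale read always occurs.

```python
import threading


def unsafe_spend(wallet: dict, amount: int):
    """Check-then-debit in two steps; the bare yield marks a point where another request can run."""
    snapshot = wallet["balance"]           # step 1: read the balance
    yield                                  # hypothetical scheduling point
    if snapshot >= amount:                 # step 2: decide using a possibly stale value
        wallet["balance"] = snapshot - amount
        wallet["paid_out"] += amount


def interleave(*requests):
    """Advance each generator one step at a time, round-robin, until all have finished."""
    pending = list(requests)
    while pending:
        for request in list(pending):
            try:
                next(request)
            except StopIteration:
                pending.remove(request)


def safe_spend(wallet: dict, amount: int, lock: threading.Lock) -> bool:
    """Performs the check and the debit as one critical section."""
    with lock:
        if wallet["balance"] >= amount:
            wallet["balance"] -= amount
            wallet["paid_out"] += amount
            return True
        return False


# Two requests both read a balance of 100 before either writes.
racy = {"balance": 100, "paid_out": 0}
interleave(unsafe_spend(racy, 100), unsafe_spend(racy, 100))

# With the lock, the second request sees the updated balance and is refused.
guarded = {"balance": 100, "paid_out": 0}
lock = threading.Lock()
results = [safe_spend(guarded, 100, lock) for _ in range(2)]
```

In the unguarded run, 200 is paid out from a balance of 100, which matches the "funds that do not actually exist" in the quotation. In a real multi-process deployment the lock would more plausibly be a database transaction or row-level lock; the in-process `threading.Lock` is used here only to keep the sketch self-contained.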

| Stage | Process | Objective | Consequences (Key Outcome) |
|---|---|---|---|
| 1. Identify business logic components | Map all business processes and their interactions. Analyze source code using data/control flow analysis and annotated programs. Utilize NLP, Graph Analysis, and LLMs for analysis. | To understand the complete structure and flow of operations to identify potential points of failure. | A clear and comprehensive model of the application’s intended logic (e.g., in UML diagrams), which serves as the “ground truth” for detecting future deviations. |
| 2. Data collection & pre-processing | Collect historical data including logs, user interactions, and transaction records. Use tools like Selenium to simulate user behavior and Daikon to collect execution traces and infer program invariants. | To gather comprehensive data from diverse sources and establish a behavioral baseline of normal program execution. | A rich, structured dataset and a “behavioral fingerprint” of the application, addressing issues like insufficient logging and providing the foundation for model training. |
| 3. AI model selection & training | Train AI models (e.g., Neural Networks, SVMs) using the collected data and records of known vulnerabilities. Use LLMs for program invariant prediction. | To train models on diverse data, either manually through supervised ML or automatically using AutoML, so that they accurately distinguish legitimate transactions from potential vulnerabilities. | A trained, context-aware AI model capable of identifying known vulnerability patterns, aiming to reduce the false positives that practitioners report with existing tools. |
| 4. Feature selection | Extract relevant features from data that indicate logic flaws. Use domain knowledge to select features that highlight anomalies in mission-critical processes. Utilize techniques like Decision Trees and SHAP. | To identify the most critical data characteristics (e.g., abnormal login frequency) that signal a potential vulnerability. | A refined set of key risk factors that allows the AI model to focus on the most important signals, improving detection accuracy and efficiency. |
| 5. Anomaly detection | Employ unsupervised learning methods (e.g., Autoencoders, Isolation Forest) to find deviations from normal patterns. Use violations of Daikon-inferred invariants to flag anomalies. AutoML can also be applied to anomaly detection. | To identify unexpected system behaviors and previously unknown vulnerabilities that deviate from established business logic. | The detection of novel and hidden logic flaws, such as credit-card fraud that may have previously gone unnoticed by other systems. |
| 6. Validation & testing | Test the model on unseen data to evaluate real-world performance. Use layered testing, including unit tests, manual penetration testing, and functional testing with Selenium. Employ adversarial testing techniques (e.g., FGSM, PGD). | To ensure the model accurately identifies true vulnerabilities while minimizing false positives and unnecessary alerts. | A robust, validated model with higher accuracy and lower false alarm rates, increasing trust in the automated detection system. |
| 7. Integration & deployment | Integrate the model into the security control system, sending automated alerts upon threat detection. Have a pre-defined action plan for which teams will be alerted and how they will respond. | To deploy the validated model into the live environment and automate the initial stages of incident response. | Faster threat response times and enhanced labor utilization, though manual oversight may still be needed for certain cases. |
| 8. Continuous learning & improvement | Establish a system that adapts to changing business processes and new threats. Use Reinforcement Learning and Active Learning to learn from feedback. Periodically retrain and review the model, embedding learning loops into maintenance. | To create a living, evolving defense system that maintains its relevance and effectiveness over time. | A resilient and adaptive security framework that can defend against emerging BLVs, reflecting the practice of continuous improvement seen in industry. |
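Stages 2 and 5 of the framework rely on invariants inferred from normal executions and on flagging records that violate them. The sketch below illustrates that idea in plain Python under strong simplifying assumptions: it infers only min/max range invariants per field, it does not use or imitate Daikon's actual interface, and the record fields (`quantity`, `discount_pct`, `total_cents`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RangeInvariant:
    """A likely invariant of the form low <= record[field] <= high."""
    field: str
    low: float
    high: float

    def holds(self, record: dict) -> bool:
        return self.low <= record[self.field] <= self.high


def infer_range_invariants(baseline: list) -> list:
    """Derives one range invariant per field from records of normal behaviour."""
    fields = baseline[0].keys()
    return [
        RangeInvariant(f, min(r[f] for r in baseline), max(r[f] for r in baseline))
        for f in fields
    ]


def violated_fields(record: dict, invariants: list) -> list:
    """Returns the names of fields whose inferred invariant the record breaks."""
    return [inv.field for inv in invariants if not inv.holds(record)]


# Baseline of (hypothetical) legitimate orders collected in stage 2.
baseline = [
    {"quantity": 1, "discount_pct": 0, "total_cents": 1999},
    {"quantity": 3, "discount_pct": 10, "total_cents": 5397},
    {"quantity": 2, "discount_pct": 5, "total_cents": 3798},
]
invariants = infer_range_invariants(baseline)

normal = {"quantity": 2, "discount_pct": 10, "total_cents": 3598}
suspicious = {"quantity": 2, "discount_pct": 100, "total_cents": 0}

normal_flags = violated_fields(normal, invariants)
suspicious_flags = violated_fields(suspicious, invariants)
```

Range invariants learned from a small baseline will also flag legitimate but previously unseen values, so the flags are best treated as candidates for the manual review and retraining described in stages 6 to 8 rather than as confirmed vulnerabilities.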

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Metin, B.; Wynn, M.; Tunalı, A.; Kepir, Y. Business Logic Vulnerabilities in the Digital Era: A Detection Framework Using Artificial Intelligence. Information 2025, 16, 585. https://doi.org/10.3390/info16070585
