Abstract

Public-health campaigns have to capture and hold visual attention, but little is known about how message framing and visual appeal influence attention to bowel-cancer screening adverts. In a within-subjects experiment, 42 UK adults aged 40 to 65 viewed 54 static adverts that varied by (i) slogan frame: anticipated regret (AR) vs. positive (P); (ii) image type: hand-drawn, older stock, AI-generated; and (iii) identity congruence: viewer ethnicity matched vs. unmatched to the depicted models. Remote eye-tracking measured time to first fixation (TTFF), dwell, fixations, and revisits on pre-defined regions of interest (ROIs); analyses employed linear mixed-effects models (LMMs), generalized estimating equations (GEEs), and median quantile regressions with clustering at the participant level. Across models, the AR slogans produced faster orienting (smaller TTFF) and stronger sustained attention (longer dwell, more fixations and revisits) than the P slogans. Image type set baseline attention (hand-drawn > older stock > AI) but did not substantially reduce the AR benefit, which was similar across visual styles. Identity congruence enhanced early capture (lower TTFF), with small effects for dwell-based measures, suggesting that tailoring mainly benefits the “first glance.” Anticipated-regret framing is a reliable, design-level lever for improving both initial capture and sustained processing of screening messages. In practice, the results indicate that advertisers should pair regret-based slogans with warm, human-centred imagery; place slogans in high-salience, low-competition spaces; and, when incorporating AI-generated imagery, reduce composition complexity and exclude uncanny details. These findings give regret framing an empirical grounding as a visual-attention mechanism for public-health campaigns and provide practical recommendations for testing and production.

1. Introduction

Bowel cancer, also known as colorectal cancer, is responsible for 10% of all cancer-related deaths in the UK [1] (more recent data are not fully available at the time of writing) and ranks as the fourth leading cause of cancer mortality worldwide [2]. Early detection is vital for effective treatment and reducing mortality rates associated with this disease [3]. One widely used screening tool is the Faecal Immunochemical Test (FIT), which identifies traces of blood in stool—a possible sign of bowel cancer [4]. Detecting the disease at an early stage through FIT enables healthcare providers to intervene promptly, improving patient outcomes and helping to lower overall mortality rates [4].

Systematic screening schemes are standard in many parts of the world and routinely use visual materials to encourage participation [5]. Despite this effort and promotion, wide inequalities in screening uptake persist. In a number of countries, completion rates for such schemes are still as low as 16% [5]. According to the latest available figures from the NHS Bowel Cancer Screening Programme (England, April 2023–March 2024), the uptake, or more precisely the completion rate, among the eligible age group of 60 to 74 years is 67.6%, but it varies markedly by region and socioeconomic group. For instance, in the most deprived wards, uptake was 55.8%, whereas in the least deprived wards, it was 75.8% [1].

Health marketing is the planned application of marketing and communication principles to encourage healthy behaviour, services, and interventions [6]. It entails crafting certain messages, via media, including YouTube and Facebook [7], as well as community mobilization in order to create awareness, change attitudes, and promote positive health actions. Health marketing has, over time, been a key driver of altering public behaviour, i.e., increasing vaccination rates, smoking cessation, balanced diets, and screening program initiation [8,9]. In making health more understandable and accessible, such campaigns have helped to bridge knowledge gaps, de-stigmatize conditions, and enable individuals to make informed choices about their health.

Numerous studies have used eye-tracking and other neuromarketing tools to investigate the impact of health promotion campaigns on behaviour change. For example, Skandali et al. [10] examined breast cancer advertisements using facial expression analysis software (Noldus FaceReader version 9); Champlin et al. [11] used eye-trackers to measure attention to, and the attractiveness of, adverts on obesity, pregnancy, and other topics; and Milošević et al. [12] used eye-trackers to evaluate the effect of monochromatic public health messages, particularly on alcohol consumption and tobacco use, among 58 adults. Our eye-tracking study builds on this research: we investigate individual elements of bowel-cancer screening advertisements, contrast anticipated-regret (AR) with positive (P) slogan framing, and assess whether these influences depend on image type. The complexity associated with AR must also be acknowledged, with some research suggesting that it can reduce bowel-cancer screening intentions [13].

Regret is an aversive emotion people experience when they perceive that their present circumstances could have been better had they made different choices earlier [14,15]. Scholars agree that regret can be felt retrospectively, when considering previous decisions, or anticipated, when contemplating future decisions. They note that regret is a multifaceted, comparative emotion that is normally based on self-blame. Landman [16] defines regret as a cognitively motivated reaction to irreversible choices that generate uncertainty. Similarly, ref. [17] defines regret as a painful awareness that one’s existing situation is not as desirable as it could otherwise have been.

At the centre of the regretful experience is counterfactual thinking: mentally simulating “what could have been” by comparing what has transpired with what could have transpired [18]. Counterfactuals normally take the form of “if-then” statements, where the “if” is a person’s action and the “then” is a valued outcome. They not only help people make sense of what has transpired in life but also shape future choices and behavioural change [19,20]. Therefore, counterfactual thinking plays a critical role in developing goal-directed behaviour [18], including cancer screening [2,21].

Research has revealed that regret about a future action—or inaction—can powerfully affect decision-making. In particular, the anticipation of regret about performing some specific action reduces one’s propensity to perform it, while anticipation of regret at failing to act raises one’s propensity for acting. Anticipated regret, for instance, has been found to predict healthy behaviour intentions, such as drinking alcohol within safe limits [22], avoiding junk food [23], and engaging in physical activity [24].

Importantly, anticipated regret adds to the prediction of health intention uniquely above the standard determinants of attitudes, perceived behavioural control, and subjective norms [14,15,25,26]. Contemporary meta-analysis confirmed that interventions aimed at increasing negative anticipated feelings such as regret have the potential to effect large changes in health behaviour [24,26,27]. Overall, this literature points to the healthy contribution of anticipated regret to better choice-making.

Historically, many public health campaigns have aimed to increase individuals’ awareness of disease risks, operating under the assumption that greater knowledge would lead to healthier behaviour. However, research suggests that emotional responses to health-related activities are often stronger predictors of behaviour than factual understanding of the associated risks. In particular, people tend to perceive greater threats to their health when they experience a decline in trust [8,9,10,28].

Although there has been extensive research on (i) message framing for health messages, (ii) visual style and imagery for advertisements, and (iii) visual salience/layout, existing research has the tendency to analyse these levers in isolation and very seldom in bowel-cancer screening using ROI-level eye-tracking. Framing papers usually include attention or intention, as opposed to overt attentional capture and maintenance; visual style comparison (e.g., illustration vs. photography vs. AI) usually does not investigate interaction with the cognitive process of the slogan; and salience/layout operations shift placement or contrast without theory-informed framing contrast. We therefore do not have cumulative evidence as to whether an anticipated-regret frame consistently accelerates early orienting (TTFF) and sustained processing (dwell, fixations, revisits) to all image types, or whether identity congruence only speeds up the “first glance” without embedding processing. Filling this cumulative gap is essential for practice because creative decisions (copy + imagery + placement) are made jointly; limited guidance to any one factor may lead to suboptimal campaign design.

Against this backdrop and the integrated gap outlined above, we examine how message framing and visual design influence attention to bowel-cancer screening advertisements at two processing stages: early orienting and maintenance. Anticipated-regret (AR) framing should increase vigilance by eliciting counterfactual thinking (“what if I miss screening?”), perhaps speeding up early orienting to the call-to-action slogan, while positive (P) framing should facilitate maintenance inspection via benefit-highlighting and reassurance. We therefore examine whether AR and P frames differentially direct attention over time, indexed by time to first fixation (TTFF) and by dwell-based measures, onto the slogan area of interest (AOI). We also examine how image category influences these effects. Visual form can modify perceptual fluency, novelty, and credibility cues. AI images can trigger fast orienting on the basis of novelty but cast doubt on authenticity; hand drawings can be intimate and prosocial, supporting fixation; older stock images can convey institutional credibility but compete less for attention. We manipulate AI-generated, hand-drawn, and older stock images to assess how the design form enhances or reduces framing effects on both initial (TTFF) and sustained (dwell, number of fixations, revisits) attention. Lastly, we treat identity congruence (the fit between the viewer’s ethnicity and the ethnicity depicted in the ad) as our boundary condition. Identity match should enhance perceived self-relevance and trust, two drivers of attention and compliance. If AR framing is stronger when self-relevance is high, identity congruence could enhance initial capture and lengthen engagement with the slogan; mismatch could hinder or even reverse these effects.
In general, our approach links the theory of anticipated regret and counterfactual thinking to empirically testable attention mechanisms in actual advertising copy, with the expectation that visual design and social identity content could affect these mechanisms systematically.

Our main goal is to measure how audiences distribute visual attention between the slogan and other ad components in bowel-cancer screening ads as a function of slogan frame (positive vs. anticipated-regret) and image type (hand-drawn, AI-generated, older stock photo). Early orienting is operationalised as TTFF to the slogan AOI, whereas sustained interest is indexed by total dwell time, number of fixations, and number of revisits to the slogan AOI, as compared with non-slogan AOIs (e.g., person/scene, logos, symbols). A further goal is to explore moderation by (a) image type, i.e., whether framing effects differ across AI-generated, hand-drawn, and stock images, and (b) identity congruence, i.e., whether a match between the viewer’s ethnicity and that of the depicted models increases or decreases framing effects on initial and sustained attention. On an exploratory basis, we investigate whether effects generalize over ad layouts and whether image-type differences reflect novelty (fast orienting) rather than credibility (extended looking). Collectively, these goals yield an evidence-based account of how creative decisions about message framing and imagery translate into measurable attention profiles that are theoretically associated with screening intentions. Thus, the following hypotheses were formulated:

- H1 (early attention): AR slogans will yield faster time to first fixation (TTFF) on the slogan AOI compared to P slogans.

- H2 (sustained attention): AR slogans will receive greater total dwell time on the slogan AOI compared to P slogans.

- H3 (image-type moderation): Attention to slogans (TTFF, dwell time, fixation count, revisits) will differ by image type (AI vs. hand-drawn vs. older stock).

2. Materials and Methods

2.1. Equipment and Software

Participants first completed the QuestionPro items up to and including the comprehension check. After successful completion, they were directed to the RealEyes study to view and rate pictures while their eye gaze was recorded. RealEyes participation URLs were set to capture Prolific IDs as external participant IDs to allow deterministic data linkage. Participants were asked to use Google Chrome, maintain steady, well-lit webcam conditions, take their time over calibration, and keep their laptops charged. Participants who failed calibration were sent to a screen-out link and then asked to retake the survey. After the eye-tracking session, participants were directed to another QuestionPro survey, where they answered the behavioural-intention questions. The eye-tracking experiment lasted 15–20 min on average.

2.2. Participants and Sampling

The final sample comprised N = 42 adults aged 40–65 years, recruited in the UK from an online research panel (Prolific) with pre-screeners for age and UK residency. We used convenience sampling, targeting this age group because it is the one to which the National Health Service (NHS) in the UK sends bowel-cancer screening invitations. We quota-sampled two ethnicity groups (White and Southeast Asian) with matched targets. Normal or corrected-to-normal vision and a working laptop/desktop with webcam were required for eligibility. Exclusion criteria included self-reported eye disease or recent eye surgery, inability to calibrate, and high track loss. RealEyes session IDs were deterministically matched with Prolific participant IDs to ensure data integrity. Age, gender, ethnicity, and education were recorded, and pseudonymous IDs P001–P042 were allocated. This is an experimental, within-subjects design with a purposive quota sample rather than a sample intended for population-prevalence estimation. Demographics served as covariates (where appropriate) to facilitate assessment of external validity. Electronic informed consent was obtained from all participants prior to any task; the study proceeded only after a click on “I Agree.” The research complied with the Declaration of Helsinki and met Liverpool John Moores University’s ethical standards (23/PSY/060; 7 August 2023).

2.3. Experimental Design, Procedure and Stimuli

In total, 54 static advertisements crossed image type (AI-created; hand drawing [HD]; older stock/archival [OLD]) × image population (White [W]; Southeast Asian [SA]) × slogan framing (anticipated regret [AR] vs. positive [P]), with multiple exemplars within each cell. The slogans were in English. The images were displayed for 5 s, followed by a 5 s grey inter-stimulus interval (ISI). Areas of interest (AOIs) were defined to facilitate slogan-focused and people-focused analyses: SLOGAN_AR, SLOGAN_P, PEOPLE (faces/persons; fine-grained labels such as “older white couple” or “middle-aged woman” were preserved), and OBJECTS (e.g., keys, remote). AOIs were defined as rectangles in RealEyes at native resolution, 1920 × 1080 px; code mappings and per-image AOI coordinates (px) were archived [29]. Low-level features and stimulus presentation were controlled as follows. All the ads were rendered on a 1920 × 1080 canvas with two fixed layout templates, counterbalanced and used equally across lists. Slogans were lexically fixed and set in a fixed font family/weight/size with high figure–ground contrast; their location was determined by the template constraints. Beyond the manipulated variables (framing, image type, identity), we did not experimentally balance low-level image features (e.g., texture, luminance/contrast, and visual complexity/clutter) across AI, hand-drawn, and older stock types. These features were treated as an inherent part of image type and are acknowledged as a limitation. Confounding was reduced through the within-subjects design (all conditions were presented to all participants), counterbalancing, and the robustness checks (winsorisation/log-transform options, alternative specifications) reported in Section 3.

We employed a within-subjects design, with the 54 pictures shown to all participants. Participants were randomly allocated to six counterbalanced lists (Latin-square across type × population × slogan blocks), and order was randomized within list [30]. Instructions were to look freely: “Please look at each picture naturally, as you would an advertisement.” No secondary task was provided. Nine-point calibration was accepted when validation error was ≤0.6° on average and <1.0° at maximum; re-calibration was performed when drift was >1.0° or about every 12 trials. A trial consisted of a 500 ms fixation cross (drift check), a 5000 ms stimulus presentation, and a 5000 ms grey ISI [31]. “Mean (average) error” and “max error” refer to the RealEyes nine-point validation summary: the mean angular deviation (degrees of visual angle) over the nine validation targets, and the largest single-point deviation, respectively. “Drift” is the mean angular offset over the 500 ms pre-stimulus fixation-cross period between the target fixation point (screen centre) and estimated gaze. Recalibration was initiated whenever validation exceeded thresholds (mean > 0.6° or max ≥ 1.0°) or whenever drift checks exceeded 1.0°; we also programmed preventive recalibrations every ~12 trials to avoid cumulative drift throughout the session.
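The calibration and recalibration thresholds described above can be written as a small decision rule. The following is a minimal sketch, not the RealEyes implementation: the function and constant names are hypothetical, and the preventive interval is treated as exactly 12 trials although the text gives it only approximately.

```python
# Thresholds taken from the text; names are illustrative assumptions.
MEAN_ERR_MAX_DEG = 0.6     # accept if mean validation error <= 0.6 deg
MAX_ERR_LIMIT_DEG = 1.0    # accept only if largest single-point error < 1.0 deg
DRIFT_LIMIT_DEG = 1.0      # recalibrate if the drift check exceeds 1.0 deg
RECAL_EVERY_N_TRIALS = 12  # preventive interval ("about every 12 trials")


def validation_ok(mean_err_deg: float, max_err_deg: float) -> bool:
    """Nine-point validation passes when both error summaries are within limits."""
    return mean_err_deg <= MEAN_ERR_MAX_DEG and max_err_deg < MAX_ERR_LIMIT_DEG


def needs_recalibration(mean_err_deg: float, max_err_deg: float,
                        drift_deg: float, trials_since_calibration: int) -> bool:
    """Recalibrate on failed validation, excessive drift, or the preventive interval."""
    if not validation_ok(mean_err_deg, max_err_deg):
        return True
    if drift_deg > DRIFT_LIMIT_DEG:
        return True
    return trials_since_calibration >= RECAL_EVERY_N_TRIALS
```

Writing the rule this way makes the boundary cases explicit: a mean error of exactly 0.6° passes, whereas a maximum error of exactly 1.0° does not.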

For each AOI in each trial, we obtained the following: AOI_FixationCount (fixations with centroid in AOI), AOI_FixationDuration (ms; total duration within AOI), AOI_TTFF (ms; image onset to first fixation in AOI), and AOI_RevisitCount (number of unique revisits after first exit). When no fixation landed in the AOI during exposure, TTFF was coded as censored for survival-type analyses and as NA for descriptive summaries. Fixations were detected with a velocity-threshold identification (I-VT) algorithm using a 30°/s velocity criterion and a minimum fixation duration of 60 ms; adjacent fixations separated by <75 ms and <0.5° were merged. AOI visits required ≥100 ms dwell within the AOI; fixations <100 ms contributed to fixation counts but not to visit/revisit counts [29,30].
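To make the four AOI measures concrete, the sketch below derives them from a list of already-detected fixations and one rectangular AOI. It is an illustration under stated assumptions rather than the RealEyes export logic: the `Fixation` and `Rect` types are hypothetical, onsets are measured from image onset, and a "visit" is taken to be an uninterrupted run of in-AOI fixations whose summed duration reaches 100 ms.

```python
from typing import NamedTuple, Optional, Sequence


class Fixation(NamedTuple):
    onset_ms: float      # time from image onset to fixation start
    duration_ms: float
    x: float             # fixation centroid, screen pixels
    y: float


class Rect(NamedTuple):
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(fixations: Sequence[Fixation], aoi: Rect,
                min_visit_ms: float = 100.0) -> dict:
    """Fixation count, total fixation duration, TTFF and revisit count for one AOI."""
    count = 0
    total_ms = 0.0
    ttff: Optional[float] = None   # stays None when the AOI is never fixated
    visits = 0
    run_ms = 0.0                   # dwell in the current uninterrupted in-AOI run
    for f in sorted(fixations, key=lambda f: f.onset_ms):
        if aoi.contains(f.x, f.y):
            count += 1
            total_ms += f.duration_ms
            if ttff is None:
                ttff = f.onset_ms
            run_ms += f.duration_ms
        else:
            if run_ms >= min_visit_ms:   # a run only counts as a visit at >= 100 ms
                visits += 1
            run_ms = 0.0
    if run_ms >= min_visit_ms:           # close a run that lasts until stimulus offset
        visits += 1
    return {
        "fixation_count": count,
        "fixation_duration_ms": total_ms,
        "ttff_ms": ttff,
        "revisit_count": max(visits - 1, 0),  # visits after the first one
    }
```

A `ttff_ms` of `None` corresponds to the censored/NA coding described above; a short in-AOI fixation still increments `fixation_count` even when its run is too brief to count as a visit.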

2.4. Data Pre-Processing

Data-quality requirements were defined a priori, before inspection of study results. Track loss was the percentage of samples per trial for which no valid gaze could be estimated (blinks, off-screen looking, missing frames). Trials with >40% track loss were excluded, and participants with >25% total track loss (across presented trials) were excluded from analysis [30,31].
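The two exclusion rules can be sketched as follows. This is a hedged illustration: the nested-dictionary input format and the function names are assumptions, and participant-level track loss is computed by pooling samples over all presented trials, which is one plausible reading of "total track loss".

```python
from typing import Dict, List


def track_loss(valid_flags: List[bool]) -> float:
    """Proportion of gaze samples without a valid estimate."""
    invalid = sum(1 for v in valid_flags if not v)
    return invalid / len(valid_flags)


def apply_quality_rules(samples: Dict[str, Dict[str, List[bool]]],
                        trial_max: float = 0.40,
                        participant_max: float = 0.25) -> Dict[str, List[str]]:
    """Return {participant_id: [kept trial ids]} after both exclusion rules.

    `samples[pid][trial_id]` is a list of per-sample validity flags
    (hypothetical input format).
    """
    kept: Dict[str, List[str]] = {}
    for pid, trials in samples.items():
        pooled = [flag for flags in trials.values() for flag in flags]
        if track_loss(pooled) > participant_max:   # participant-level rule (>25%)
            continue
        kept[pid] = [tid for tid, flags in trials.items()
                     if track_loss(flags) <= trial_max]   # trial-level rule (>40% out)
    return kept
```

Both thresholds are strict inequalities, so a trial with exactly 40% loss or a participant with exactly 25% loss is retained.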

Short gaps (≤100 ms) due to blinks were not interpolated to prevent inflation of fixation dwell; longer gaps were left missing [32,33]. Fixations were already detected at source using I-VT (30°/s threshold, minimum fixation duration ≥ 60 ms, merge if <75 ms and <0.5°), so pre-processing kept these events and eliminated remaining micro saccade-like events with a duration under 60 ms. For every trial we estimated frame-level drift (between fixation cross and measured gaze over the 500 ms pre-stimulus interval) and flagged trials greater than 1.0° for re-calibration [34]; drift estimates were saved as covariates for sensitivity analyses.

To limit the leverage of outlying observations, we winsorised at the 99th percentile for every metric × AOI type (e.g., TTFF-SLOGAN, dwell-PEOPLE). This retains rank order but constrains the upper tail in a metric-specific way [32]. As duration-based measures (e.g., dwell time) were heavy-tailed and positively skewed, we log-transformed such outcomes for analysis; estimates are presented on the transformed scale and, where possible, back-transformed (as geometric means with 95% CIs) for interpretability. Count outcomes (number of fixations and revisits) were retained on the natural scale and were monitored for over-dispersion in model diagnostics [30,35].
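A minimal sketch of the upper-tail winsorisation and the log/back-transform steps is given below. The percentile definition (linear interpolation between order statistics) is an assumption, since the text does not say which definition was used, and the function names are illustrative.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """q-th percentile with linear interpolation between order statistics."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def winsorise_upper(values: Sequence[float], q: float = 99.0) -> List[float]:
    """Cap values above the q-th percentile; rank order is preserved (ties aside)."""
    cap = percentile(values, q)
    return [min(v, cap) for v in values]


def geometric_mean(values: Sequence[float]) -> float:
    """Back-transformed mean of log values: exp(mean(log(x)))."""
    return math.exp(sum(math.log(v) for v in values) / len(values))
```

In practice the cap would be computed separately within each metric × AOI cell (for example, all TTFF values on slogan AOIs), and the geometric mean is the quantity reported when a log-scale estimate is back-transformed.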

3. Data Analysis and Results

We structure the results according to attention phase and hypothesis. We first test H1 (early orienting) with time to first fixation (TTFF) on the slogan AOI [36]. Second, we test H2 (sustained engagement) with dwell time on the slogan AOI, with fixation count and revisit count as further measures of persistence [37,38]. For H3, we examine ImageType (HD, OLD, AI) both as a main effect and as a moderator of the framing effect [39,40]; as the boundary condition, we examine IdentityMatch (match vs. non-match) on the same measures. All models were adjusted for ImagePopulation (W vs. SA), age (centred), gender, and education, and participant-level clustering or random effects were applied as needed [41,42].

We used complementary estimators to match outcome distributions and to test robustness rather than to duplicate findings. The base models were a linear mixed-effects model (REML) on log-TTFF (with random intercepts and slopes by participant), a Gamma GEE with a log link for dwell time, a Poisson GEE for number of fixations (switching to negative binomial where over-dispersion was identified), and a negative binomial GEE for number of revisits. Median (τ = 0.50) quantile regressions with cluster-bootstrap CIs offer distribution-robust estimates of central tendency. Convergent inferences across model families increase confidence that results are not artefacts of an initial modelling decision. Further robustness analyses comprise alternative random-slope LMM specifications and sensitivity to winsorisation/log-transform alternatives and TTFF censoring rules [43,44,45]. We present effects as time ratios (TRs) for TTFF, ratios of means (RMs) for the Gamma models, and incidence rate ratios (IRRs) for the count models. Model assumptions and diagnostics were checked as follows: residual scale–location plots and QQ-plots (normality/heteroscedasticity) and convergence notes for LMMs; deviance/Pearson residuals and appropriateness of the log link for Gamma GEEs; and over-dispersion tests (Pearson χ²/df) with negative binomial substitution where necessary, plus working-correlation comparison (QIC) with stability tests, for count GEEs. Figures show estimated marginal means and effect sizes with 95% CIs; tables show model coefficients and ratios (TR for TTFF; RM/IRR for dwell/counts). Model diagnostics (convergence notes, residual/over-dispersion tests, and working-correlation fit) are included with each result as appropriate.
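The Pearson χ²/df over-dispersion check mentioned above can be illustrated with a short sketch. It assumes that fitted means from a Poisson model are already available; the function name and the example data are illustrative and are not taken from the study.

```python
from typing import Sequence


def pearson_dispersion(observed: Sequence[float], fitted: Sequence[float],
                       n_params: int) -> float:
    """Pearson chi-square divided by residual degrees of freedom.

    For a Poisson model the variance equals the mean, so each squared
    residual is scaled by its fitted mean. Values near 1 are compatible
    with the Poisson assumption; values well above 1 suggest over-dispersion.
    """
    chi2 = sum((y - mu) ** 2 / mu for y, mu in zip(observed, fitted))
    df = len(observed) - n_params
    return chi2 / df


# Illustrative intercept-only example: the fitted mean is the sample mean.
counts = [0, 0, 1, 0, 20, 15]
mean_count = sum(counts) / len(counts)
ratio = pearson_dispersion(counts, [mean_count] * len(counts), n_params=1)
```

The text does not state the cut-off used to trigger the negative binomial substitution, so any threshold applied to `ratio` would be an additional assumption.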

3.1. H1—Early Attention (TTFF to Slogan AOI)

We fit a linear mixed-effects model (REML) of log-TTFF (ms) with fixed effects of SloganType (AR vs. P), ImageType (HD, OLD, AI), IdentityMatch (match vs. non-match), and interactions SloganType × ImageType and SloganType × IdentityMatch; covariates were ImagePopulation (W vs. SA), age (centred), gender, and education. Random effects were a by-participant intercept and a random slope for SloganType. Optimizer warnings suggested non-convergence, so fixed-effect estimates must be interpreted with caution, even though patterns were consistent with marginal means (Table 1).

Table 1.

Significant fixed effects on TTFF (MixedLM on log-TTFF).

Compared to AR, P slogans evoked slower TTFF to the slogan AOI, b = 0.342, SE = 0.014, z = 23.998, p < 0.001, time ratio (TR) = 1.41 [1.37, 1.45]. Relative to HD, OLD photos slowed TTFF (b = 0.141, SE = 0.013, z = 11.066, p < 0.001, TR = 1.15 [1.12, 1.18]) and AI photos slowed TTFF even more (b = 0.239, SE = 0.013, z = 18.760, p < 0.001, TR = 1.27 [1.24, 1.30]). Identity match predicted quicker TTFF (b = −0.147, SE = 0.011, z = −13.330, p < 0.001, TR = 0.86 [0.85, 0.88]). The interaction SloganType × ImageType was not significant (OLD: p = 0.602; AI: p = 0.076). Baseline (AR, HD, W, non-match, Age_c = 0) was exp(6.350) ≈ 572 ms.
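Because the outcome is log-TTFF, each coefficient converts to a time ratio by exponentiation, with Wald limits exp(b ± 1.96·SE). The sketch below recomputes two of the reported ratios from the rounded b and SE values given above; the function name is illustrative, and recomputation from rounded inputs can differ from the published interval in the last digit.

```python
import math
from typing import Tuple


def ratio_with_ci(b: float, se: float, z: float = 1.959964) -> Tuple[float, float, float]:
    """Exponentiate a log-scale coefficient and its Wald 95% limits."""
    return math.exp(b), math.exp(b - z * se), math.exp(b + z * se)


# P vs. AR slogans (b = 0.342, SE = 0.014) and OLD vs. HD images (b = 0.141, SE = 0.013)
tr_p, lo_p, hi_p = ratio_with_ci(0.342, 0.014)
tr_old, lo_old, hi_old = ratio_with_ci(0.141, 0.013)

# The same ratio read in the other direction: proportional reduction in TTFF under AR
ar_reduction = 1.0 - 1.0 / tr_p
```

Read this way, a time ratio of about 1.41 for P relative to AR corresponds to TTFF that is roughly 29% shorter under AR, since 1/1.41 ≈ 0.71.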

Support was found for H1: AR > P in early orienting (shorter TTFF). Large main effects of ImageType (HD < OLD < AI) were also found, and identity match helped orienting.

Specification: log_TTFF ~ C(SloganType) × C(ImageType) + C(SloganType) × IdentityMatch + C(ImagePopulation) + Age_c + C(Gender) + C(Education) + (1 + C(SloganType) | ParticipantID).

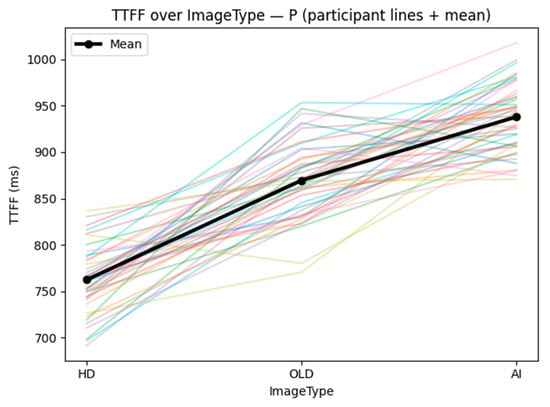

All except the bottom line slope upward (HD < OLD < AI), and the mean increases from ~760→~870→~940 ms, showing slower orienting to older and AI pictures. Small crossing and overall parallel lines indicate similar image-type effects across all groups, as might be expected from the significant ImageType main effects and the non-significant Slogan × ImageType interaction of the TTFF LMM. This trend confirms H3 (image-type differences) and the ECDF in Figure 1 (AR faster than P overall).

Figure 1.

Geometric mean time to first fixation (TTFF, ms) to the slogan area of interest (AOI) as a function of image type under positive (P) slogans. Each thin black line shows one participant’s geometric mean TTFF (milliseconds) to the slogan AOI for hand-drawn (HD), older stock/archival (OLD), and AI-generated (AI) images; the bold black line represents the across-participant mean. Lower values reflect quicker orienting.

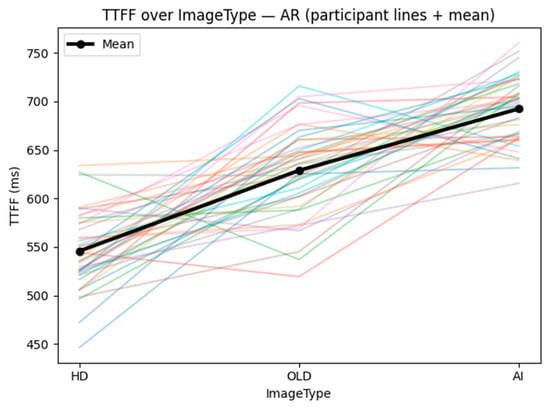

The participant traces in both panels run in the direction HD → OLD → AI, with progressively slower orienting for stock and AI images; this replicates the large ImageType main effects (HD < OLD < AI). Most notably, the AR panel (Figure 2) is lower than the P panel (Figure 1) for all image types, as would be expected for an AR benefit of roughly 30% (TR ≈ 1/1.41 ≈ 0.71) and for additivity rather than moderation. The approximately parallel slopes between panels reflect the non-significant Slogan × ImageType interaction: image type moves the baseline (vertical positioning of the lines), but AR yields a relatively stable gain on HD, OLD, and AI. Within each condition, the thin participant lines cluster near the bold mean with similar ordering (HD is fastest; AI is slowest), demonstrating that the group pattern is not driven by a small number of participants. Together, the plots provide a graphical check of H1 (AR < P in TTFF) and H3 (HD < OLD < AI), and account for why the interaction fell short of significance: the effects are additive rather than multiplicative.

Figure 2.

Geometric mean time to first fixation (TTFF, ms) to the slogan area of interest (AOI) for various image types under anticipated-regret (AR) slogans. As in Figure 1, thin lines indicate single participants and the thick line the across-participant mean for HD, OLD, and AI images. Lower TTFF (ms) indicates quicker orienting to the slogan.

In Figure 2, lines also slope upward (HD < OLD < AI) but with smaller overall means (~545→~630→~690 ms) than in the P panel, in line with quicker orienting under AR (supports H1), and with the same ordering of image types (supports H3). The visual similarity of the slopes in Figures 1 and 2 is consistent with the absence of Slogan × ImageType moderation in the LMM and quantile-regression tests.

The TTFF profiles in Figure 1 and Figure 2 exhibit approximately parallel HD→OLD→AI slopes for P and AR, which indicates an additive structure: image type alters the baseline latency, and AR provides a relatively stable benefit. This pattern is consistent with (i) an early, slogan-level orienting mechanism operating before image-style variation has much impact; and (ii) limited statistical power to detect cross-level interactions in within-subject latency data, where random-slope variance absorbs some of the cross-product signal. Small departures from parallelism (e.g., p = 0.076 for the weak AI term) are in line with this position: directionally present but below typical significance thresholds once participant heterogeneity is controlled.

3.2. H2—Sustained Attention (Dwell on Slogan AOI)

We fit a Gamma GEE with a log link (exchangeable working correlation; robust/clustered SEs by participant) to dwell time (ms) on the slogan AOI, with SloganType × ImageType as predictors and ImagePopulation, age (centred), gender, and education as covariates (Table 2).

Table 2.

Significant effects on dwell (Gamma GEE, log link; robust SEs).

Relative to AR slogans, P slogans attracted shorter dwell on the slogan AOI, b = −0.256, SE = 0.007, z = −35.11, p < 0.001, with a ratio of means (RM) of 0.77 [0.76, 0.79]. Compared with hand-drawn (HD) images, OLD images elicited shorter dwell, b = −0.092, SE = 0.006, z = −15.88, p < 0.001, RM = 0.91 [0.90, 0.92], and AI images elicited shorter dwell still, b = −0.209, SE = 0.008, z = −27.68, p < 0.001, RM = 0.81 [0.80, 0.82].

There was a small but statistically significant Slogan × ImageType interaction for AI images, b = 0.025, SE = 0.009, z = 2.82, p = 0.005, RM = 1.03 [1.01, 1.04]: the AR benefit (AR > P) in dwell was roughly 2–3% smaller for AI compared to HD images (P vs. AR RM in HD ≈ 0.77; in AI ≈ 0.79). The Slogan × ImageType interaction for OLD images was nonsignificant, p = 0.984. ImagePopulation (SA), gender, education, and age were not significant predictors (all p > 0.05). The baseline estimated dwell (AR, HD, W, non-match, Age_c = 0) was exp(6.894) ≈ 986 ms [975–998].
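The interaction arithmetic can be traced with a short worked sketch. On the log scale the P vs. AR contrast is b_P for HD images and b_P + b_P×AI for AI images, so the ratios of means are exp(−0.256) and exp(−0.256 + 0.025). The dictionary and function names below are illustrative; covariates are held at their reference levels, and the P × OLD coefficient, which is not reported in the text, is set to zero as an assumption.

```python
import math

# Reported log-scale estimates; the P:OLD term is not given and is assumed to be 0.
COEF = {"intercept": 6.894, "P": -0.256, "OLD": -0.092, "AI": -0.209, "P:AI": 0.025}


def predicted_dwell_ms(slogan: str, image: str) -> float:
    """Model-implied dwell (ms) for a slogan/image cell at reference covariates."""
    eta = COEF["intercept"]
    if slogan == "P":
        eta += COEF["P"]
    if image in ("OLD", "AI"):
        eta += COEF[image]
    eta += COEF.get(f"{slogan}:{image}", 0.0)   # interaction term, if one exists
    return math.exp(eta)


def p_vs_ar_ratio(image: str) -> float:
    """Ratio of means (P relative to AR) within one image type."""
    return predicted_dwell_ms("P", image) / predicted_dwell_ms("AR", image)


rm_hd = p_vs_ar_ratio("HD")   # exp(-0.256)
rm_ai = p_vs_ar_ratio("AI")   # exp(-0.256 + 0.025)
```

The ratio `rm_ai / rm_hd` equals exp(0.025), which is the interaction RM of about 1.03 and the source of the "2–3% smaller AR benefit" reading.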

The results confirm H2 (AR > P in dwell). Consistent with H3, dwell depends on ImageType (HD > OLD > AI), and the AR–P difference is slightly smaller for AI images, showing modest moderation by image type. Specification: Dwell_ms ~ C(SloganType) × C(ImageType) + C(SloganType) × C(ImagePopulation) + Age_c + C(Gender) + C(Education).

Dwell shows a small but consistent reduction in the AR benefit for AI images (P × AI RM ≈ 1.03), while OLD does not differ from HD in this respect. This suggests that image style influences maintenance rather than capture: AI imagery imposes a slight disfluency that reduces, but does not eliminate, the AR benefit. The modest size of this moderation (≈2–3%) is in line with an additive main effect of framing plus a mild maintenance-stage penalty for AI, not a complete reversal. Combined with the TTFF findings, it accounts for the mixed interaction pattern: AR functions mostly as a framing prior (additive over styles), with image category contributing stage-specific baseline shifts and only modest interaction under prolonged viewing.

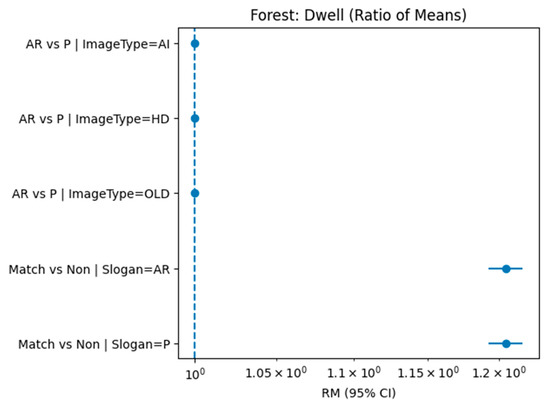

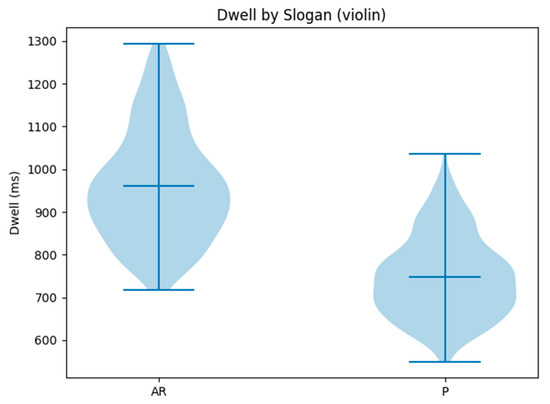

All the slogan-frame ratios (P relative to AR) are less than 1, supporting H2 (longer dwell under AR than P) for all image types and showing little moderation by ImageType (consistent with the small or nonsignificant Slogan × ImageType terms). These plots supplement the GEE table (effects on a log scale) and the EMM line graphs by providing effect sizes with uncertainty in one view (Figure 3 and Figure 4).

Figure 3.

Forest plot of ratios of means (RM) of dwell time on the slogan area of interest (AOI) by SloganType (AR vs. P) and IdentityMatch (match vs. non-match). Points are RM estimates from a log-link Gamma GEE model; horizontal bars are 95% confidence intervals. Values < 1 show lower dwell under P compared to AR; IdentityMatch rows > 1 show higher dwell when viewer and represented ethnicity match. Dwell time is in milliseconds.

Figure 4.

Kernel-density (“violin”) plots of dwell time (ms) on the slogan AOI by SloganType. Distributions (averaged within image types and image populations at the participant-cell level) are shifted toward longer dwell under AR compared to P. Central markers are medians; widths are kernel density. Units: milliseconds.

3.3. Supplementary Attention Metrics (Fixation Count and Revisit Count)

3.3.1. Fixation Count on Slogan AOIs (Exploratory Support to H2/H3)

We fitted a Poisson GEE with log link and exchangeable working correlation (cluster-robust SEs; 42 participants) to the number of fixations on the slogan AOI, with SloganType × ImageType and SloganType as predictors and ImagePopulation, age (centred), gender, and education as covariates. Dispersion diagnostics supported the Poisson specification (Table 3).

Table 3.

Significant effects on fixation count (Poisson GEE, log link; robust SEs).

Relative to the anticipated-regret (AR) slogans, the positive (P) slogans led to fewer fixations on the slogan AOI, b = −0.469, SE = 0.027, z = −17.10, p < 0.001, with an incidence rate ratio (IRR) of 0.63 [0.59, 0.66]. For images, the OLD images received fewer fixations than hand-drawn (HD) images, b = −0.112, SE = 0.017, z = −6.66, p < 0.001, IRR = 0.89 [0.87, 0.92], and the AI images received fewer still, b = −0.193, SE = 0.017, z = −11.16, p < 0.001, IRR = 0.83 [0.80, 0.85]. Slogan × ImageType interactions were not reliable (all p ≥ 0.148), suggesting that the AR–P difference in fixation counts was consistent across image types. There was also a small negative effect of age, b = −0.0018, SE = 0.0009, z = −1.98, p = 0.048, IRR ≈ 0.998 [0.996, 1.000] per year (centred), indicating a slight decrease in fixations with age.

The modelled baseline fixation rate (AR, HD, W, non-match, Age_c = 0, reference gender/education) was exp(2.286) ≈ 9.83 fixations.

The results are consistent with greater slogan attention under AR (more fixations), support H3 (identity match raises fixations to the slogan), and show main-effect differences by ImageType (HD > OLD > AI); we found no Slogan × ImageType moderation of fixation counts.

3.3.2. Revisit Count on Slogan AOIs (Exploratory Persistence Metric)

We fitted a negative-binomial GEE with log link and exchangeable working correlation (robust/clustered SEs; 42 participants) to model revisit counts on the slogan AOI as a function of SloganType × ImageType, with ImagePopulation, age (centred), gender, and education as covariates. The data had a 12.0% zero rate and minimal over-dispersion (α ≈ 0.001, method-of-moments) (Table 4).
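To illustrate what a method-of-moments dispersion check involves, the sketch below estimates α under the common NB2 variance function Var(Y) = μ + αμ², together with the share of zero counts. This is an assumption about the general approach, not a reproduction of the authors' procedure: the counts are hypothetical, the estimate is marginal (it ignores the covariates a model-based check would condition on), and the helper names `nb_alpha_moments` and `zero_rate` are illustrative.

```python
from statistics import mean, variance

def nb_alpha_moments(counts):
    """Method-of-moments dispersion under NB2: Var = mu + alpha * mu**2."""
    m = mean(counts)
    s2 = variance(counts)  # sample variance (n - 1 denominator)
    # Truncate at zero: a variance below the mean implies no over-dispersion
    return max(0.0, (s2 - m) / m**2)

def zero_rate(counts):
    """Proportion of observations with a count of zero."""
    return sum(c == 0 for c in counts) / len(counts)

# Hypothetical revisit counts, for illustration only
revisits = [0, 2, 3, 1, 4, 2, 3, 0, 5, 2, 1, 3]
alpha = nb_alpha_moments(revisits)
zeros = zero_rate(revisits)
print(f"alpha = {alpha:.3f}, zero rate = {zeros:.1%}")
```

An α close to zero, as reported for the revisit data, means the variance is close to the mean, so the negative-binomial model behaves almost like a Poisson model.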

Table 4.

Significant effects on RevisitCount (NegBin GEE, log link; robust SEs).

Relative to anticipated regret (AR), positive (P) messages resulted in fewer revisits, b = −0.318, SE = 0.069, z = −4.61, p < 0.001, IRR = 0.73 [0.64, 0.83] (Table 4). AI pictures resulted in fewer revisits than hand-drawn (HD) pictures, b = −0.152, SE = 0.061, z = −2.51, p = 0.012, IRR = 0.86 [0.76, 0.97], but OLD vs. HD was not significant, p = 0.650. Gender (M vs. reference) had a small negative effect, b = −0.094, SE = 0.042, z = −2.22, p = 0.026, IRR = 0.91 [0.84, 0.99]. Slogan × ImageType interactions did not reach significance (OLD: p = 0.061; AI: p = 0.148), indicating no stable moderation of the AR–P difference in revisits by image type or identity match. ImagePopulation (SA) and age were not significant. The reference predicted revisit rate (AR, HD, W, non-match, Age_c = 0, reference gender/education) was exp(0.941) ≈ 2.56 revisits (per collapsed condition cell).

3.4. Robustness and Complementary Analyses

3.4.1. TTFF: 3-Way Interaction with Random Slopes for ImageType

A linear mixed model of log-transformed TTFF (ms) on SloganType × ImageType, with covariates (ImagePopulation, age, gender, education) and by-participant random intercepts and random slopes for SloganType and ImageType, was fitted to 756 observations from 42 participants (18 per participant). The optimizer indicated non-convergence, so fixed-effect estimates should be interpreted cautiously, although the patterns are consistent with the reduced model (Table 5).

Table 5.

Significant fixed effects on TTFF (mixed LMM on log-TTFF).

In comparison to the anticipated-regret (AR) slogans, the positive (P) slogans showed slower TTFF to the slogan AOI, b = 0.343, SE = 0.016, z = 21.73, p < 0.001, with a time ratio (TR) of 1.41 [1.37, 1.45] (Table 5). In comparison to hand-drawn (HD) images, the OLD images had longer TTFF, b = 0.151, SE = 0.016, z = 9.48, p < 0.001, TR = 1.16 [1.13, 1.20], and the AI images had even longer TTFF, b = 0.254, SE = 0.016, z = 16.19, p < 0.001, TR = 1.29 [1.25, 1.33]. SloganType × ImageType (OLD, AI) interactions were nonsignificant (all p ≥ 0.12), and this model yielded no stable moderation of the AR–P difference in TTFF by image type or identity match. The trend toward quicker TTFF for ImagePopulation = SA approached but did not reach significance (p = 0.051). The estimated baseline geometric mean TTFF (AR, HD, W, non-match, Age_c = 0) was exp(6.342) ≈ 568 ms. Overall, the findings support H1 (AR is quicker than P).

3.4.2. Median Quantile Regression (τ = 0.50) with Cluster Bootstrap CIs for TTFF (log) and Dwell (log)

A median quantile regression on log(TTFF), with cluster-bootstrapped 95% CIs by participant, indicated that the positive (P) slogans led to slower first fixation to the slogan AOI than the anticipated-regret (AR) slogans, b = 0.347, 95% CI [0.311, 0.382], corresponding to a time ratio TR = exp(b) = 1.42 [1.36, 1.46]. Compared to the hand-drawn (HD) images, OLD increased median TTFF, b = 0.144, TR = 1.16 [1.11, 1.20], and AI increased it further, b = 0.242, TR = 1.27 [1.23, 1.31]. Slogan × ImageType terms were not significant at the median. These results support H1 (AR faster than P) and reveal image-type differences in line with H3 (HD < OLD < AI).

A median quantile regression on log(Dwell) with cluster-bootstrapped CIs showed that P slogans resulted in lower dwell on the slogan AOI compared to AR, b = −0.255, RM = exp(b) = 0.78 [0.76, 0.79] (Table 6). Compared with HD, both OLD and AI reduced dwell: OLD, b = −0.083, RM = 0.92 [0.90, 0.93]; AI, b = −0.206, RM = 0.81 [0.80, 0.83]. A small Slogan × ImageType (AI) interaction was observed, b = 0.031, RM = 1.03 [1.01, 1.05] (Table 6), indicating the AR advantage is slightly attenuated for AI relative to HD at the median; the OLD interaction was not significant. These results support H2 (AR > P in dwell), and they show robust image-type differences (HD > OLD > AI) consistent with H4, with only limited moderation by ImageType.
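The cluster-bootstrap logic behind such intervals can be sketched in a few lines. The example below is a deliberately simplified stand-in, not the authors' analysis: it uses simulated dwell times (all numbers are hypothetical), drops the covariates, and replaces the quantile regression with a difference in pooled medians of log dwell, which is what a median regression on a single binary predictor estimates. The names `simulate`, `median_log_ratio`, and `cluster_bootstrap_ci` are illustrative.

```python
import math
import random
from statistics import median

def simulate(n_participants=42, n_trials=9, seed=0):
    """Hypothetical dwell times (ms) per participant under AR and P slogans."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_participants):
        shift = rng.gauss(0, 0.10)  # participant-level offset on the log scale
        ar = [math.exp(math.log(1000) + shift + rng.gauss(0, 0.15)) for _ in range(n_trials)]
        p = [math.exp(math.log(780) + shift + rng.gauss(0, 0.15)) for _ in range(n_trials)]
        data.append({"AR": ar, "P": p})
    return data

def median_log_ratio(clusters):
    """Median log(dwell) under P minus median log(dwell) under AR, pooled over clusters."""
    ar = [math.log(x) for c in clusters for x in c["AR"]]
    p = [math.log(x) for c in clusters for x in c["P"]]
    return median(p) - median(ar)

def cluster_bootstrap_ci(clusters, stat, n_boot=1000, seed=1):
    """Percentile 95% CI from resampling whole participants with replacement."""
    rng = random.Random(seed)
    draws = sorted(
        stat([rng.choice(clusters) for _ in clusters]) for _ in range(n_boot)
    )
    return draws[int(0.025 * n_boot)], draws[int(0.975 * n_boot) - 1]

clusters = simulate()
b = median_log_ratio(clusters)
lo, hi = cluster_bootstrap_ci(clusters, median_log_ratio)
print(f"RM = {math.exp(b):.2f} [{math.exp(lo):.2f}, {math.exp(hi):.2f}]")
```

Resampling participants rather than individual trials keeps each person's repeated observations together, so the interval reflects between-participant variability instead of treating the trials as independent.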

Table 6.

Minimal supplementary table (ratios: TR for TTFF; RM for dwell).

4. Discussion

4.1. Aims and Principal Findings

The current research investigated the impact of appeal framing and visual design elements on attention to bowel-cancer screening advertisements. Specifically, we tested whether anticipated-regret (AR) slogans attracted more attention than positive (P) slogans, and whether image type (i.e., hand-drawn, older stock photo, AI) moderated these effects. Across eye-tracking measures (time to first fixation (TTFF), dwell time, fixation count, and revisit count), AR slogans were fixated more rapidly and with greater persistence than P slogans, and image type affected the overall level of attention but did not systematically moderate the AR–P contrast.

The benefit of AR framing was apparent on every measure. Participants oriented to AR slogans much more quickly, with TTFF about 29% shorter than for positive slogans (P vs. AR TR = 1.41), i.e., viewers were faster to find and direct attention to regret-based information [46,47]. Once the slogan was fixated, participants spent more time on AR slogans, with P slogans receiving about 23% less dwell time (RM = 0.77). Fixation analyses supported this trend: P slogans attracted about 37% fewer fixations than AR slogans (IRR = 0.63 for P vs. AR, i.e., roughly 59% more fixations under AR), indicating closer examination of the slogan text. Revisit analyses showed a further benefit, with viewers returning to P slogans about 27% less often (IRR = 0.73, i.e., roughly 37% more revisits under AR), suggesting that regret-based messages not only engaged viewers but also re-engaged them after disengagement. Lastly, TTFF analyses indicated an identity-match effect [48]: participants oriented more rapidly when the depicted models matched their own ethnicity (TR = 0.86), pointing to the added value of cultural tailoring [49].
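Because ratios are asymmetric, the percentage attached to an effect depends on which condition serves as the reference: a P vs. AR ratio of 0.63 means 37% fewer fixations under P, which corresponds to about 59% more under AR. The short sketch below makes the conversion explicit for the ratios quoted in this section; the helper names `pct_change` and `invert` are illustrative.

```python
def pct_change(ratio):
    """Percent difference of the numerator condition relative to the denominator."""
    return (ratio - 1.0) * 100.0

def invert(ratio):
    """Swap numerator and denominator (e.g., P vs. AR becomes AR vs. P)."""
    return 1.0 / ratio

# P vs. AR ratios quoted in the text
reported = {
    "TTFF (TR)": 1.41,
    "dwell (RM)": 0.77,
    "fixations (IRR)": 0.63,
    "revisits (IRR)": 0.73,
}

for name, r in reported.items():
    print(
        f"{name}: P is {pct_change(r):+.0f}% relative to AR; "
        f"AR is {pct_change(invert(r)):+.0f}% relative to P"
    )
```

Reporting the reference condition alongside each percentage avoids mixing "X% fewer under P" with "X% more under AR", which are different quantities whenever the ratio is not close to 1.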

4.2. Mechanism: Anticipated Regret as an Attentional Prior

The uniformity of such effects across TTFF, dwell time, fixation frequency, and re-visits indicates that regret appeals not only are more salient but are also processed in a manner so as to maintain and re-activate cognitive resources over time [10]. For instance, Gong et al. [49] showed that while rational appeals handle individual relevance in an attempt to manage cognitive load, emotional appeals (i.e., regret-based appeals) are better at maintaining attention where individual relevance would otherwise be low. Eye-tracking experiments corroborate this distinction as well. For example, ref. [28] found that gain-framed messages elicited more dwell time than loss-framed messages but warned that longer visual attention does not necessarily indicate higher cognitive elaboration. These preferences can be accounted for more precisely in the context of regret theory [15,17,20], which posits that people are driven to pre-experience and pre-avoid future negative affect.

In health contexts, anticipated regret is a powerful motivator because it highlights the unwanted outcomes of inaction relative to the possible gains from participation. Evidence supports this. A meta-analysis of 81 studies found that anticipated regret was strongly correlated with both health intentions and actual health behaviour, with anticipated inaction regret being a strong and stable predictor of behaviour across a variety of health contexts [24,50].

Notably, the impact of anticipated regret cannot be reduced to established motivational constructs. For example, in exercise behaviour, Abraham et al. [24] showed that anticipated regret accounted for a further 5% of variance in intentions over and above the established predictors of the Theory of Planned Behaviour and past behaviour. The current results add to this evidence by demonstrating that the influence of anticipated regret is not limited to intentions or self-report measures but is detectable at the very first stage of information processing. Participants oriented more quickly to the AR slogans and looked at them longer, indicating that regret-based messages prime attentional mechanisms to emphasize the potentially dangerous outcomes of not taking action. This synthesis of eye-tracking data with existing behavioural research thus addresses a gap regarding the dual role of regret [23,24,51]: as both a motivational force in health decisions and a perceptual cue that raises the salience of essential health information. In this manner, our research illustrates that anticipated regret affects not only what people end up choosing but also how they perceive and process persuasive health messages [52].

4.3. Visual Design: Processing Fluency, Disfluency, and Stage-Specific Effects

Whereas positive slogans predominantly cast screening as a good thing, AR slogans lead audiences to think counterfactually (i.e., to mentally project themselves into a future in which they regret their inaction) [8,14,20]. This counterfactual activation has been observed to enhance the cognitive salience of the message, which offers an explanation for both the faster orienting (i.e., shorter TTFF) and the more sustained engagement (i.e., longer dwell time, more fixations, and more revisits) obtained in the present study. That is, the mechanism hypothesized by regret theory, forewarning of the emotional cost of inaction, was reflected in our participants’ visual attention patterns. The importance of this result lies in demonstrating that regret cues are not merely affective stimuli but can act as attentional priors (in a Bayesian sense), reweighting and reranking the information taken in during perception [20,26]. In Bayesian terms, people come to a stimulus with prior beliefs regarding what information is most pertinent or salient; regret cues update these priors by raising the subjective probability that inaction will result in an undesirable outcome [53]. For instance, when a viewer considers an advertisement for bowel cancer screening, an AR slogan (“You may regret not booking your screening”) shifts the perceptual system’s prior from neutral or benefit-based expectations to a loss-framed mindset. This updating shifts the attentional distribution in favour of the slogan itself, speeding up orienting (i.e., faster TTFF) and maintaining engagement (longer dwell, more fixations and revisits). In this manner, anticipated regret acts as a cognitive gatekeeper [48], influencing not only how individuals feel about a message but also how their perceptual systems first determine which aspects of that message gain access to working memory and, in turn, to the decision-making process [47].

These results speak to the cognitive process by which regret-based framing exerts its impact, but they also show that attentional engagement depends on the visual form in which the message is presented [27,54]. That is, whereas AR slogans held an advantage across all measures, the baseline level of attention varied with the type of imagery used to present them. In particular, image type was an important predictor of overall attention levels. For instance, hand-drawn images consistently accelerated orienting and maximized dwell time compared to older stock images and AI-generated images. Conversely, AI-generated images attracted the least attention, with slower TTFF, shorter dwell time, and fewer revisits to the slogan.

These are in line with processing fluency theory [5,9], in that more easily processable stimuli lead to higher cognitive ease and therefore attract more sustained attention. In support of this, Reber et al. [55] found that fluent stimuli bring about short positive affective reactions, and therefore processing facilitation can affect affective state directly. However, the relationship between fluency and sustained attention is otherwise thought to be more complex. Research has demonstrated that those with greater sustained attention capacity were more sensitive to small stimulus similarities during a continuous performance task and proposed that attention could modulate best-fit perceptual template construction rather than be an index of ease of processing. Likewise, Martin et al. [56] discovered that sustained attention influences early visual stages of lexical categorization processing. What this implies is that although fluency during processing is one of the contributing factors in initial accessibility, facilitation of sustained attention is more likely to rely on general attentional control processes operating through, but not directly from, stimulus fluency.

Hand-drawn images may be perceived as being warm and authentic, while AI-generated images may introduce subtle perceptual disfluency [17,27,48]. We did not capture coder inspections or participant ratings of ‘uncanny’ characteristics (e.g., realism, face/hand irregularities), so any application of uncanny-valley processes is interpretive and not validated for this stimulus set. The pattern found—hand-drawn < older stock < AI in TTFF and decreased dwell/revisits for AI—is in line with fluency accounts but cannot here be independently ascribed to ‘uncanniness’. Interestingly, these stylistic differences shift baseline attention but do not nullify the AR advantage: anticipated-regret framing generates a largely additive benefit across image types.

4.4. Stage-Specific Disfluency: Stock vs. AI Imagery

Another notable finding is that the rank order of hand-drawn, stock, and AI pictures was the same on all four eye-tracking measures (TTFF, dwell, fixation counts, revisits), although the size of the effects varied [54,57,58]. For instance, while OLD and AI pictures both slowed orienting (TTFF TR = 1.16 and 1.29, respectively), only AI pictures reliably reduced revisits (IRR = 0.86, about 14% fewer). This implies that disfluency in AI imagery not only hinders initial orienting but also inhibits re-approach after attention has lapsed. Stock imagery, on the other hand, appears to act mostly at the point of attention entry, hindering orienting but not inhibiting subsequent returns to the message. Our findings therefore indicate that different kinds of disfluency influence attentional processing at different stages.

This stage-level specificity has parallels in linguistic disfluency research, where disfluency has likewise been found to influence attentional deployment differentially. For instance, Diachek et al. [59] observed that repetitions, fillers, and pauses can aid memory by directing attention to upcoming material, with short-lived effects at sentence boundaries. Analogously, Collard et al. [60] found that hesitations like “er” capture listeners’ attention and enhance recognition of the words that follow the hesitation. These results indicate that some disfluencies may enhance attentional focus at certain points in time. The magnitude of this effect is still debated. Other research failed to replicate disfluency advantages in advertisement word memorization and fact-learning tasks, even with images present, indicating that disfluency’s attentional benefits are task-specific [48,50,58]. Related work [23,49] demonstrated that the fluency of advertisement imagery affected consumer attitudes, again indicating that ease and difficulty of processing have downstream persuasive effects. On our account, the disfluency of AI-generated imagery may not only slow initial orienting but also lower the probability of attentional re-engagement, much as linguistic disfluencies can selectively raise or lower attention depending on task requirements and context. In other words, visual appeal does not act uniformly but works through distinct attentional channels, shaping both when and whether audiences redirect cognitive resources towards health information.

4.5. Boundary Condition: Identity Congruence

The research also indicated that viewer identity was an important factor in the early capture of attention. More specifically, participants oriented faster to slogans when the ethnicity of the people presented in the advert was congruent with their own. This influence was most pronounced in TTFF, indicating that identity congruence supports early attentional capture but not necessarily longer-term engagement. These findings are in line with social identity theory [47], which predicts that in-group cues increase perceptual salience and relevance, especially at the very initial stage of information processing. But as the influence did not carry over to dwell time, fixation counts, or revisits, it seems that identity congruence may be enough to attract the eye initially but not to hold repeated or prolonged attention on the message. For public health communication, this implies that although culturally targeted imagery can be used to gain an initial gaze, it works best when combined with motivational framing (i.e., anticipated regret) that elicits prolonged attention to essential health information.

4.6. Demographic Trends and Audience Segmentation

Although subsidiary to the primary results, small demographic influences were also observed. Older participants made fewer total fixations, and male participants were less likely to re-engage with slogans following disengagement. These trends indicate that attentional re-engagement processes might differ between groups. This finding is in line with earlier studies showing age-related differences in information-processing strategies, with older adults more likely to use attentionally selective or heuristic-based approaches [26,48,54]. Similarly, empirical evidence points to gender-based differences in response to health appeals, with men sometimes showing less re-engagement with preventive appeals than women. While modest in effect size, these results demonstrate the relevance of considering audience segmentation when constructing campaigns: adjusting message frame and visual design not only to cultural identity but also to demographic indicators can improve overall impact.

Lastly, the convergence of outcomes across linear mixed models for TTFF, Gamma GEEs for dwell time, Poisson and negative-binomial GEEs for fixations and revisits, and quantile regressions for median effects supports the robustness of the observed attentional patterns, that is, a systematic benefit of anticipated-regret frames and image-type-based baseline differences. For example, the fact that the AR advantage appeared on both latency-based (TTFF) and maintenance-based (dwell, fixations, and revisits) measures, and that the image-type effects were replicated across model specifications, reduces the chance that these findings are model-specification artifacts. Rather, they appear to indicate robust attentional processes that persist across analytic approaches [12,28,54].

For theory, this consistency favours the reading that regret cues act as attentional priors (above), producing a change in perceptual weighting that is quantifiable across eye-movement measures. It also implies that visual disfluency effects from stock or AI imagery are stage-specific and insensitive to alternative modelling assumptions. For practical application, the consistency of these effects indicates that the advantages found for AR framing and the baseline effect of image type do not hinge on particular methodological choices but reflect robust cognitive processes that can be relied upon in campaign design. Therefore, our findings have not just statistical but also translational implications, in that they show anticipated regret and image style to predict attention reliably across the analytic approaches tested.

5. Practical Implications

Framing in terms of anticipated regret should be the default where rapid capture and sustained processing of vital screening messages are the goals. On an operational level, copy can explicitly invoke the counterfactual (“If you don’t screen now, you’ll regret it”) and directly link it to a tangible, low-friction follow-up action (“Schedule your free test today”). To prevent reactance, keep the tone matter-of-fact rather than alarmist; anchor regret in something within the viewer’s control (booking); and place the call-to-action (CTA) next to the slogan to capitalize on the observed early-orienting benefit. Where possible, include a short efficacy cue (“It takes 5 min; early detection saves lives”) to translate attention into intention without losing the regret cue.

Visual style should be used as an attention multiplier. Hand-drawn, human-centric illustrations consistently increased baseline attention and held viewers on the slogans for longer, whereas AI artwork impaired early orienting and reduced returns to the message. Practically, this makes the case for commissioning uncomplicated, warm illustrations or choosing authentic stock imagery that features real individuals and real-world settings instead of artificial or hyper-stylized AI artwork. If AI image usage is unavoidable (e.g., because of budget or turnaround limitations), reducing disfluency by simplifying composition, avoiding uncanny faces/hands, softening contrast, and introducing human-made elements (e.g., hand-lettered flourishes) can enhance perceived authenticity [11,48].

Tailoring can be used to deliver the necessary “first glance”. Identity congruence sped up TTFF but did not, in itself, extend engagement [11,48]. This suggests that creative content should match local demographics so that local audiences see the slogan quickly, and then rely on regret framing and short CTAs to maintain attention. A practical workflow is as follows: (1) localize the imagery (age, ethnicity, setting), (2) keep the regret-based slogan constant across versions, and (3) A/B test only the visuals in each market to maintain message integrity without sacrificing the early-capture benefit of congruence [31,48].

Design and layout choices should actively support legibility of the slogan as the focal AOI. Position the slogan in the upper third or in a central high-salience area, apply high figure–ground contrast, and keep the typographic hierarchy clear (one strong headline, generous spacing). Reduce competing focal points close to the slogan (busy backgrounds, secondary icons). Given the consistent image-type ordering (HD < OLD < AI in TTFF), compensate for slower formats by making the slogan larger, further increasing contrast, and decreasing peripheral clutter. Where appropriate, pair the slogan with a minimal directional cue (gaze line, arrow) aimed at the CTA, without sacrificing orienting to the slogan.

Lastly, prioritize ethics, equity, and accessibility. Regret appeals should motivate without causing excessive worry: use them in combination with positive, encouraging wording [8] and tangible assistance (hotline telephone number, booking link, assurances of convenience and privacy). Use inclusive image design, alt text, high-contrast colour, and legible font sizes to ensure resources are accessible to people of every age and ability level. When targeting underserved groups, pair identity-congruent imagery with the reduction of practical barriers (free kits, easy booking, multilingual support); the research indicates that such a pairing can gain both the initial glance and the second.

6. Conclusions, Limitations, and Future Directions

On four eye-tracking measures (TTFF, dwell time, fixations, and revisits), anticipated-regret (AR) messages outperformed positive (P) messages, capturing viewers’ attention more quickly and holding it longer. Baseline attention depended on image type (hand-drawn > older stock > AI), but image type did not systematically alter the AR–P difference. Identity congruence between viewers and the depicted models also sped first orienting (shorter TTFF). These effects held across mixed-effects, GEE, and quantile-regression analyses.

This research is not without limitations. First, although the sample was small (N = 42), it accords with eye-tracking norms, where repeated-measures, trial-level data provide a large number of within-subject observations that offset smaller group-level samples [12,55]. The demographic restriction to UK residents aged 40–65 limits generalizability. Because visual attention may differ across cultures, and cognitive control and sensitivity to messages across age cohorts, replication with younger cohorts and in other cultures is needed to determine the broader applicability of regret-based framing effects. Second, the paradigm assessed only short, free-viewing presentations (5 s) [11,49]. Such exposure durations are appropriate for examining early-stage attentional capture and maintenance but do not speak to subsequent stages of persuasion, such as comprehension, recall, or behavioural uptake. Subsequent research may therefore use hybrid paradigms that combine eye-tracking with delayed recall tasks or behavioural choice paradigms, to relate attentional measures more directly to decision-making outcomes [11]. Lastly, since the comparison between AI, OLD, and HD images was intended to reflect differences in generation process, these types necessarily varied on other visual attributes such as texture, contrast, visual clutter, and face salience [48]. Thus, attentional differences cannot be attributed entirely to generation process. Subsequent work should cross manipulations of low-level visual features with framing manipulations and determine whether perceptual fluency contributions can be distinguished from stylistic or technological inferences. We also did not obtain coder audits or participant ratings of ‘uncanny’ attributes (e.g., realism, facial/hand anomalies), so any link to uncanny-valley mechanisms is interpretive and should be tested directly in future work.
Similarly, extending the set of AR wordings could help determine whether the observed attentional benefits are specific to regret per se or extend to more general categories of negatively framed appeals. Future research should, first, test generalizability in larger, preregistered samples spanning countries and age groups. Second, connect attention to downstream effects (recall, intentions, actual booking/kit return) with hybrid eye-tracking and follow-up designs. Third, dissociate low-level visual features (contrast, clutter, face salience) to tease apart image “generation” from perceived fluency. We did not standardize or quantify these low-level properties across image types, so residual confounding cannot be ruled out. The within-subjects design, counterbalancing, and robustness checks mitigate, but do not eliminate, this issue; future work should compute objective image metrics (e.g., luminance, RMS contrast, edge density, clutter) and include them as covariates. Fourth, contrast different negative frames and AR wordings to calibrate effect and reduce reactance. Fifth, test context effects (mobile feed vs. print; static vs. brief video) and CTA placement/duration. Lastly, investigate mechanisms with causal mediation and individual-difference moderators (trust, health anxiety, prior screening) to optimize targeting without compromising equity.
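As a minimal sketch of the kind of objective image metrics mentioned above, the following Python functions compute mean luminance, RMS contrast, and a simple edge density for a greyscale image stored as nested lists of intensities in [0, 1]. The definitions are common textbook ones chosen for illustration (RMS contrast as the standard deviation of intensities; edge density as the share of pixels whose forward-difference gradient exceeds a threshold); the function names and the 0.2 threshold are assumptions, and clutter measures are not covered here.

```python
from statistics import mean, pstdev

def mean_luminance(img):
    """Average intensity over all pixels."""
    return mean([p for row in img for p in row])

def rms_contrast(img):
    """Population standard deviation of pixel intensities."""
    return pstdev([p for row in img for p in row])

def edge_density(img, threshold=0.2):
    """Share of pixels whose forward-difference gradient magnitude exceeds threshold."""
    h, w = len(img), len(img[0])
    edges = 0
    for y in range(h - 1):
        for x in range(w - 1):
            gx = img[y][x + 1] - img[y][x]  # horizontal difference
            gy = img[y + 1][x] - img[y][x]  # vertical difference
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges += 1
    return edges / ((h - 1) * (w - 1))

# Toy 4 x 4 images: one with a single vertical edge, one uniformly grey
split = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
flat = [[0.5] * 4 for _ in range(4)]

for name, img in (("split", split), ("flat", flat)):
    print(name, mean_luminance(img), rms_contrast(img), round(edge_density(img), 3))
```

Both toy images have the same mean luminance but differ in contrast and edge density, which is why such metrics are useful as separate covariates when image types differ in more than their generation process.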

Overall, across models and image styles, anticipated-regret framing paired with identity-congruent imagery consistently drew eyes to the screening slogan. Hand-drawn, human-focused images encouraged close reading, whereas AI-generated images trailed behind. Across varying combinations and styles, the AR advantage was consistent, offering a dependable route for public health communications: a headline noticed quickly and held just long enough to matter.

Author Contributions

Conceptualization, I.Y., M.P. and C.V.W.; methodology, I.Y., S.B., B.M., C.V.W., C.S.B., M.S., J.S. and S.S.; software, I.Y. and M.S.; validation, M.P., L.S. and C.V.W.; formal analysis, I.Y., S.B. and B.M.; investigation, M.P., M.S., J.S. and S.S.; resources, I.Y., M.P., M.S. and J.S.; data curation, I.Y., S.B. and M.S.; writing—original draft preparation, I.Y., B.M. and S.B.; writing—review and editing, L.S. and M.P.; visualization, C.S.B. and S.B.; supervision, M.P., C.V.W. and I.Y.; project administration, M.P.; funding acquisition, M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CANCER RESEARCH UK, grant number EDDPMA-May23/100035.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and was approved by the Institutional Ethics Committee of LIVERPOOL JOHN MOORES UNIVERSITY (approval reference number: 23/PSY/060, 7 August 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study in the form of a pop-up window and participants had to click on ‘I Agree’ to give their consent and continue with the eye-tracking experiment.

Data Availability Statement

Data are openly available from the LJMU Repository at https://doi.org/10.17632/3pwm9yvygs.1 and https://doi.org/10.17632/rm6vv3xwj9.1.

Acknowledgments

The authors thank CANCER RESEARCH UK for funding this research project.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AR | Anticipated regret |
| P | Positive (message framing) |
| I-VT | Identification-by-velocity (fixation filter) |
| AOI | Area of interest |
| ROI | Region of interest |
| TTFF | Time-to-first-fixation |
| ISI | Inter-stimulus interval |
| LMM | Linear mixed-effects model |
| REML | Restricted maximum likelihood |
| GEE | Generalized estimating equations |
| TR | Time ratio (for TTFF; exp(b)) |
| RM | Ratio of means (for Gamma models; exp(b)) |
| IRR | Incidence rate ratio (for count models; exp(b)) |
| HD | Hand-drawn (image type) |
| OLD | Older stock/archival (image type) |
| AI | AI-generated (image type) |
| CTA | Call-to-action |
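TR, RM, and IRR are all defined above as exp(b), the exponentiated coefficient from a model on the log scale. The short Python sketch below shows how such a coefficient might be converted into a ratio, a percentage change, and a Wald-type interval. The function names and the use of a normal-approximation interval with z = 1.96 are assumptions for illustration, and any numbers passed in are hypothetical rather than estimates from this study.

```python
import math


def ratio_from_coefficient(b):
    """Exponentiate a log-scale coefficient to obtain a ratio (TR, RM, or IRR)."""
    return math.exp(b)


def percent_change(b):
    """Percentage change in the outcome implied by the ratio exp(b)."""
    return (math.exp(b) - 1.0) * 100.0


def ratio_ci(b, se, z=1.96):
    """Wald-type interval: built on the log scale, then exponentiated.

    Assumes approximate normality of the coefficient estimate.
    """
    return math.exp(b - z * se), math.exp(b + z * se)


if __name__ == "__main__":
    # Hypothetical coefficient and standard error, for illustration only.
    b, se = -0.20, 0.05
    print(ratio_from_coefficient(b), percent_change(b), ratio_ci(b, se))
```

A ratio below 1 for TTFF would indicate faster orienting, whereas a ratio above 1 for dwell time or fixation counts would indicate more sustained attention.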

References

- Bowel Cancer Mortality Statistics|Cancer Research UK. Available online: https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/bowel-cancer/mortality#heading-Zero (accessed on 29 September 2025).

- Ferlay, J.; Soerjomataram, I.; Dikshit, R.; Eser, S.; Mathers, C.; Rebelo, M.; Parkin, D.M.; Forman, D.; Bray, F. Cancer Incidence and Mortality Worldwide: Sources, Methods and Major Patterns in GLOBOCAN 2012. Int. J. Cancer 2015, 136, E359–E386.

- Levin, T.; Corley, D.; Jensen, C.; Schottinger, J.E.; Quinn, V.P.; Zauber, A.G.; Lee, J.K.; Zhao, W.K.; Udaltsova, N.; Ghai, N.R.; et al. Effects of Organized Colorectal Cancer Screening on Cancer Incidence and Mortality in a Large Community-Based Population. Gastroenterology 2018, 155, 1383–1391.e5.

- Zheng, S.; Schrijvers, J.; Greuter, M.; Kats-Ugurlu, G.; Lu, W.; de Bock, G.H. Effectiveness of Colorectal Cancer (CRC) Screening on All-Cause and CRC-Specific Mortality Reduction: A Systematic Review and Meta-Analysis. Cancers 2023, 15, 1948.

- Navarro, M.; Nicolas, A.; Ferrandez, A.; Lanas, A. Colorectal Cancer Population Screening Programs Worldwide in 2016: An Update. World J. Gastroenterol. 2017, 23, 3632–3642.

- Nutbeam, D.; Muscat, D.M. Health Promotion Glossary 2021. Health Promot. Int. 2021, 36, 1578–1598.

- Durkin, S.; Broun, K.; Guerin, N.; Morley, B.; Wakefield, M. Impact of a Mass Media Campaign on Participation in the Australian Bowel Cancer Screening Program. J. Med. Screen. 2020, 27, 18–24.

- Doherty, T.M.; Del Giudice, G.; Maggi, S. Adult Vaccination as Part of a Healthy Lifestyle: Moving from Medical Intervention to Health Promotion. Ann. Med. 2019, 51, 128–140.

- Golechha, M. Health Promotion Methods for Smoking Prevention and Cessation: A Comprehensive Review of Effectiveness and the Way Forward. Int. J. Prev. Med. 2016, 7, 7.

- Skandali, D.; Yfantidou, I.; Tsourvakas, G. Neuromarketing and Health Marketing Synergies: A Protection Motivation Theory Approach to Breast Cancer Screening Advertising. Information 2025, 16, 715.

- Champlin, S.; Lazard, A.; Mackert, M.; Pasch, K.E. Perceptions of Design Quality: An Eye Tracking Study of Attention and Appeal in Health Advertisements. J. Commun. Healthc. 2014, 7, 285–294.

- Milošević, M.; Kovačević, D.; Brozović, M. The Influence of Monochromatic Illustrations on the Attention to Public Health Messages: An Eye-Tracking Study. Appl. Sci. 2024, 14, 6003.

- Hunkin, H.; Turnbull, D.; Zajac, I.T. Considering Anticipated Regret May Reduce Colorectal Cancer Screening Intentions: A Randomised Controlled Trial. Psychol. Health 2020, 35, 555–572.

- Sandberg, T.; Conner, M. Anticipated Regret as an Additional Predictor in the Theory of Planned Behaviour: A Meta-analysis. Br. J. Soc. Psychol. 2008, 47, 589–606.

- Sandberg, T.; Conner, M. A Mere Measurement Effect for Anticipated Regret: Impacts on Cervical Screening Attendance. Br. J. Soc. Psychol. 2009, 48, 221–236.

- Landman, J. Regret and Elation Following Action and Inaction: Affective Responses to Positive versus Negative Outcomes. Pers. Soc. Psychol. Bull. 1987, 13, 524–536.

- Sugden, R. Regret, Recrimination and Rationality. Theory Decis. 1985, 19, 77–99.

- Smallman, R.; Roese, N.J. Counterfactual Thinking Facilitates Behavioral Intentions. J. Exp. Soc. Psychol. 2009, 45, 845–852.

- Markman, K.D.; McMullen, M.N. A Reflection and Evaluation Model of Comparative Thinking. Pers. Soc. Psychol. Rev. 2003, 7, 244–267.

- Saffrey, C.; Summerville, A.; Roese, N.J. Praise for Regret: People Value Regret above Other Negative Emotions. Motiv. Emot. 2008, 32, 46–54.

- Park, J.; Lim, F.; Prest, M.; Ferris, J.S.; Aziz, Z.; Agyekum, A.; Wagner, S.; Gulati, R.; Hur, C. Quantifying the Potential Benefits of Early Detection for Pancreatic Cancer through a Counterfactual Simulation Modeling Analysis. Sci. Rep. 2023, 13, 20028.

- Cooke, R.; Sniehotta, F.; Schüz, B. Predicting Binge-Drinking Behaviour Using an Extended TPB: Examining the Impact of Anticipated Regret and Descriptive Norms. Alcohol Alcohol. 2006, 42, 84–91.

- Richard, R.; Van der Pligt, J.; De Vries, N. Anticipated Regret and Time Perspective: Changing Sexual Risk-taking Behavior. J. Behav. Decis. Mak. 1996, 9, 185–199.

- Abraham, C.; Sheeran, P. Deciding to Exercise: The Role of Anticipated Regret. Br. J. Health Psychol. 2004, 9, 269–278.

- Choi, E.; Wan, E.Y. Attitude toward Prostate Cancer Screening in Hong Kong: The Importance of Perceived Consequence and Anticipated Regret. Am. J. Men’s Health 2021, 15, 15579883211051442.

- Rivis, A.; Sheeran, P.; Armitage, C.J. Expanding the Affective and Normative Components of the Theory of Planned Behavior: A Meta-analysis of Anticipated Affect and Moral Norms. J. Appl. Soc. Psychol. 2009, 39, 2985–3019.

- Sheeran, P.; Harris, P.; Epton, T. Does Heightening Risk Appraisals Change People’s Intentions and Behavior? A Meta-Analysis of Experimental Studies. Psychol. Bull. 2014, 140, 511–543.

- Bassett-Gunter, R.L.; Latimer-Cheung, A.E.; Martin Ginis, K.A.; Castelhano, M. I Spy with My Little Eye: Cognitive Processing of Framed Physical Activity Messages. J. Health Commun. 2014, 19, 676–691.

- Carter, B.T.; Luke, S.G. Best Practices in Eye Tracking Research. Int. J. Psychophysiol. 2020, 155, 49–62.

- Duchowski, A.T. Eye Tracking Methodology: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2017; ISBN 3-319-57883-9.

- Balaskas, S.; Rigou, M. The Effects of Emotional Appeals on Visual Behavior in the Context of Green Advertisements: An Exploratory Eye-Tracking Study. In Proceedings of the 27th Pan-Hellenic Conference on Progress in Computing and Informatics, Lamia, Greece, 24–26 November 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 141–149.

- Salvucci, D.D.; Goldberg, J.H. Identifying Fixations and Saccades in Eye-Tracking Protocols. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 71–78.

- Olsen, A. The Tobii I-VT Fixation Filter. Tobii Technol. 2012, 21, 4–19.

- Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; Van de Weijer, J. Eye Tracking: A Comprehensive Guide to Methods and Measures; Oxford University Press: Oxford, UK, 2011; ISBN 0-19-162542-6.

- Balaskas, S.; Koutroumani, M.; Rigou, M. The Mediating Role of Emotional Arousal and Donation Anxiety on Blood Donation Intentions: Expanding on the Theory of Planned Behavior. Behav. Sci. 2024, 14, 242.

- Barr, D.J. Analyzing ‘Visual World’ Eyetracking Data Using Multilevel Logistic Regression. J. Mem. Lang. 2008, 59, 457–474.

- Bates, D.; Mächler, M.; Bolker, B.; Walker, S. Fitting Linear Mixed-Effects Models Using lme4. J. Stat. Softw. 2015, 67, 1–18.

- Müller, H.-G.; Stadtmüller, U. Generalized Functional Linear Models. Ann. Statist. 2005, 33, 774–805.

- Khuri, A.I.; Mukherjee, B.; Sinha, B.K.; Ghosh, M. Design Issues for Generalized Linear Models: A Review. Stat. Sci. 2006, 21, 376–399.

- Pauler, D.K.; Escobar, M.D.; Sweeney, J.A.; Greenhouse, J. Mixture Models for Eye-tracking Data: A Case Study. Stat. Med. 1996, 15, 1365–1376.

- Mézière, D.C.; Yu, L.; Reichle, E.D.; Von Der Malsburg, T.; McArthur, G. Using Eye-tracking Measures to Predict Reading Comprehension. Read. Res. Q. 2023, 58, 425–449.

- Silva, B.B.; Orrego-Carmona, D.; Szarkowska, A. Using Linear Mixed Models to Analyze Data from Eye-Tracking Research on Subtitling. Transl. Spaces 2022, 11, 60–88.

- Ng, V.K.Y.; Cribbie, R.A. The Gamma Generalized Linear Model, Log Transformation, and the Robust Yuen-Welch Test for Analyzing Group Means with Skewed and Heteroscedastic Data. Commun. Stat.-Simul. Comput. 2019, 48, 2269–2286.

- Kiefer, C.; Mayer, A. Average Effects Based on Regressions with a Logarithmic Link Function: A New Approach with Stochastic Covariates. Psychometrika 2019, 84, 422–446.

- Hastie, T.J.; Pregibon, D. Generalized Linear Models. In Statistical Models in S; Routledge: Oxford, UK, 2017; pp. 195–247.

- Summerfield, C.; Egner, T. Expectation (and Attention) in Visual Cognition. Trends Cogn. Sci. 2009, 13, 403–409.

- Tajfel, H.; Turner, J.C. The Social Identity Theory of Intergroup Behavior. In Political Psychology; Psychology Press: Oxfordshire, UK, 2004; pp. 276–293.

- Yılmaz, N.G.; Timmermans, D.R.M.; Van Weert, J.C.M.; Damman, O.C. Breast Cancer Patients’ Visual Attention to Information in Hospital Report Cards: An Eye-Tracking Study on Differences between Younger and Older Female Patients. Health Inform. J. 2023, 29, 14604582231155279.

- Gong, Z.; Cummins, R.G. Redefining Rational and Emotional Advertising Appeals as Available Processing Resources: Toward an Information Processing Perspective. J. Promot. Manag. 2020, 26, 277–299.

- Zeelenberg, M.; Pieters, R. A Theory of Regret Regulation 1.0. J. Consum. Psychol. 2007, 17, 3–18.

- Brewer, N.; DeFrank, J.T.; Gilkey, M.B. Anticipated Regret and Health Behavior: A Meta-Analysis. Health Psychol. 2016, 35, 1264–1275.

- Liao, H.; Xu, Y.; Fang, R. A Regret Theory-Based Even Swaps Method with Complex Linguistic Information and Its Application in Early-Stage Lung Cancer Treatment Selection. Inf. Sci. 2024, 681, 121194.

- Knill, D.; Pouget, A. The Bayesian Brain: The Role of Uncertainty in Neural Coding and Computation. Trends Neurosci. 2004, 27, 712–719.

- Slessor, G.; Phillips, L.; Bull, R. Age-Related Declines in Basic Social Perception: Evidence from Tasks Assessing Eye-Gaze Processing. Psychol. Aging 2008, 23, 812–822.

- Reber, R.; Schwarz, N.; Winkielman, P. Processing Fluency and Aesthetic Pleasure: Is Beauty in the Perceiver’s Processing Experience? Pers. Soc. Psychol. Rev. 2004, 8, 364–382.

- Martin, C.D.; Thierry, G.; Démonet, J.F. ERP Characterization of Sustained Attention Effects in Visual Lexical Categorization. PLoS ONE 2010, 5, 9892.

- Heer, E.; Ruan, Y.; Pader, J.; Mah, B.; Ricci, C.; Nguyen, T.; Chow, K.; Ford-Sahibzada, C.; Gogna, P.; Poirier, A.; et al. Performance of the Fecal Immunochemical Test for Colorectal Cancer and Advanced Neoplasia in Individuals under Age 50. Prev. Med. Rep. 2023, 32, 102124.

- Granger, S.P.; Preece, R.A.D.; Thomas, M.G.; Dixon, S.W.; Chambers, A.C.; Messenger, D.E. Colorectal Cancer Incidence Trends by Tumour Location among Adults of Screening-Age in England: A Population-Based Study. Color. Dis. 2023, 25, 1771–1782.

- Diachek, E.; Brown-Schmidt, S. The Effect of Disfluency on Memory for What Was Said. J. Exp. Psychol. Learn. Mem. Cogn. 2023, 49, 1306–1324.

- Collard, P.; Corley, M.; MacGregor, L.J.; Donaldson, D.I. Attention Orienting Effects of Hesitations in Speech: Evidence from ERPs. J. Exp. Psychol. Learn. Mem. Cogn. 2008, 34, 696–702.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).