Abstract

Based on 350 visualizations, this paper examines the depiction of museum curators by the popular generative artificial intelligence (AI) model ChatGPT4o. While the AI-generated representations do not reiterate popular stereotypes of curators as nerdy, conservative in dress, and stuck in time, rummaging through collections, they contrast sharply with real-world demographics. The AI-generated imagery severely under-represents women (2.3% vs. 49–72% in reality) and does not depict any ethnicity other than Caucasian (0% vs. 18–36%). It not only over-represents young curators (79% vs. approx. 27%) but also renders curators as resembling yuppie professionals or people featured in fashion advertising. Stereotypical attributes are prevalent, with curators widely depicted as having beards and holding clipboards or digital tablets. The findings highlight biases in the data sets underlying generative AI image creation, which are poised to shape an inaccurate portrayal of museum professionals if the images are taken uncritically at ‘face value’.

1. Introduction

Some professions in the cultural industries are very public-facing, such as librarians, musicians, and actors, while others largely work away from the public eye, such as archivists and museum curators. It is the professions working in the background that tend to struggle with their public image and are thus prone to stereotypes. Museum curators are a good example. Yet, without the work of curators, there would be no exhibitions, no public education, no preservation of humanity’s cultural heritage, and no documentation and collection of the world’s natural history. How does the public perceive them and their roles? A considerable body of work exists on the nature and dangers of stereotyping narratives, objects, and their creators when displayed in museum exhibitions [1,2,3]. Yet, there is a surprisingly limited and incoherent body of work that considers the cultural stereotypes of museum curators themselves. A systematic review found only a handful of references that specifically comment on this topic, among them one that is dated [4] and one that is a blog post [5]. In this respect, curators differ from other cultural industry professions, such as archivists [6,7] and librarians [8,9,10].

The pervasive stereotypes of a curator, largely driven by the broader public’s ignorance of their actual work, are those of ‘icy, turtlenecked snobs or puttering old men toiling away in dusty tombs’ [11]. Curators are most commonly seen as stuck in time, boring, dusty, and old [12], or as dusty and dry [13]. They are ‘nerdy, conservative in dress and wear glasses’ [4], commonly a bearded, White male … shuffling through dusty boxes and curating specimens [14] in the basement. Other stereotypes of curators reflect perceptions of the spaces they work in, such as “dusty, gloomy, and nostalgic places frozen in time” [15]. An analysis of representations of curators in pop culture found that art curators are stereotyped as having the desirable attributes of being glamorous, powerful, and avant-garde, whereas “museum curator characters are all stuffiness, dogma, and tweed” [5]. In popular representations in literature and film, 62% of curators are portrayed as women, and 89% as White/Caucasian [5].

Stereotypes are oversimplified and often inaccurate generalizations about groups that ignore individual differences. Professions and their attire are frequently stereotyped (e.g., “men are builders” and “scientists wear lab coats”). Gender and ethnic stereotypes in careers can harm self-esteem, limit opportunities, and lead to discrimination. As a result, those who do not fit these molds may avoid certain professions, unintentionally reinforcing these biases [16]. Stereotypes form early, often in childhood, become difficult to change once firmly entrenched, and are frequently reinforced through visual imagery in advertisements, movies, and social media [17,18].

The recent development of generative AI text-to-image models, such as DALL-E, Stable Diffusion, or Midjourney, has enabled the rapid creation of realistic, copyright-free, and royalty-free images for various uses, reducing the reliance on stock photos. Moreover, the text-to-image process produces a similar but slightly different output every time a prompt is issued, even if the prompt text is the same [19], and a visually different output when the prompt text is tweaked. Consequently, generative AI-created images are always ‘fresh,’ which adds to the appeal of using them in lieu of stock photos. Generative AI models cannot be entirely free from bias due to factors such as model design, training data quality, and developer influence during design and training. Bias may stem from ideological leanings, the choice of language in training, the use of sources that may contain outdated or racially biased information, and the cultural and geographical origin and ideology of the programmers and evaluators in the red-teaming phase [20,21,22,23,24,25,26]. As generative AI-created images may contain subliminal biases that go unnoticed in uncritical use [27], such imagery may cause unintended harm.

Biases in multimodal generative AI models comprise biases in the interpretation of the user input (as above), which yields a prompt that is injected into the image-generation ‘engine’, and biases in how that engine interprets and visualizes the prompt [28,29]. The nature and effects of biases at the text-to-image stage vary depending on the point in the model development cycle at which they are introduced [30]. Studies have shown that AI-generated responses and images frequently depict professions in gender-stereotypical ways. A cross-professional study found that 60% of AI-generated professions aligned with societal stereotypes (curators were not assessed) [31]. Bias is also evident in text-to-image AI programs such as DALL-E, where prompts often lead to under-representation or misrepresentation of gender and ethnicity [32,33]. When gender is unspecified, generative AI tends to depict men in authoritative roles more often than women [8,34]. Similarly, AI-generated images predominantly feature Caucasian (White) individuals [8,34,35,36], while African American characters are often shown in service roles [33]. These biases extend to physical appearance, favoring young individuals and adhering to stereotypical beauty norms. Notably, pregnant women and people with disabilities are largely absent from images generated by single-shot prompts, highlighting the lack of diverse representation in AI-generated visuals [34].

While considerable work has been carried out on the representation of professions by generative AI, this has mainly focused on the medical and allied health professions [34,35,37,38,39,40,41], with limited work on educators [36,42], chemists [43], scientists and engineers [44], and IT specialists and software engineers [45,46]. In the cultural industries, only librarians have been assessed as a profession so far [8].

The question arises whether the observed generative AI biases are also reflected in the representation of museum curators and, if so, how these biases manifest themselves. Only one relevant generative AI-created data set exists, and it was generated specifically to examine how women working in the creative and cultural industries are represented [47]. That data set of 200 images of women included 50 women curators, who were depicted as exclusively Caucasian (White), predominantly young, and wearing blazers with primarily open blouses, as well as glasses.

This paper will examine and experimentally demonstrate how a popular generative AI model, ChatGPT4o, interprets and visualizes the following:

(1) The ethnicity, gender, appearance, and attire of curators when gender is not specified and in a variety of museum settings (art, fashion, maritime, natural history, science, social history, technology);

(2) The key exhibits in the setting of each museum type;

(3) The architecture of the museum interiors.

The focus on a single generative AI application, ChatGPT4o, is deliberate, as it is by far the most commonly used generative AI tool, with 18 billion messages (prompts and responses) from over 700 million users (in July 2025), a user base equivalent to approximately 10% of the world’s adult population [48].

2. Methodology

2.1. Data Collection Process

When interpreting a user’s request as written in the prompt, ChatGPT4o is liable to generate a tailored response shaped by linguistic cues that reflect the user’s values and ideological orientations, provided these do not contravene the ethical constraints and guardrails imposed by the system provider. To minimize the risk of responses being skewed toward a user’s particular ideological position or the perceptions derived from it, the prompts were deliberately formulated in an unconstrained fashion (zero-shot prompting). ChatGPT4o itself autogenerated the detailed textual prompt that was injected into DALL-E to render the image; no human intervention occurred in the image conceptualization and implementation process. The following prompt was used: “Think about [insert museum type] museums and the curators working in these. Provide me with a visualization that shows a typical curator against the background of the interior of the museum.”

For methodological consistency, the same prompt was used for each museum type. Each resulting image was saved to disk, and the AI-generated prompt was retrieved from the image panel and copied, together with the image, into a data file. After saving, the chat was deleted to ensure a clean and unbiased generation of each new image, without legacy information being available to ChatGPT4o. ChatGPT4o was deliberately not asked to generate multiple visualizations from the same prompt or within the same chat sequence, as repeated requests would allow it to infer that the user was not satisfied with the initial answer/rendering.

The museum settings were chosen to cover the conceptual range of museums, from art (art, fashion) and history (maritime, social history) to science (science, technology) and the natural environment (natural history). It was posited that, as these museums target different clienteles, differences in the representation of curators might be observable. Fifty images were generated for each of the seven key museum types: ‘art museum’ (AM), ‘fashion museum’ (FA), ‘maritime museum’ (MA), ‘natural history museum’ (NH), ‘science museum’ (SC), ‘social history museum’ (SH), and ‘technology museum’ (TE). Fifty images per data cell, with a total sample of 350, were considered an appropriate number to test for group differences and relationships [49].

The images were generated on 9–11 March 2025 (50 each of AM, FA, NH, and SH, and 10 each of MA, SC, and TE) and 20–22 September 2025 (40 each of MA, SC, and TE) (see Figure 1 for examples). The images, as well as the prompts that ChatGPT4o used to generate them, have been archived in two stand-alone data files at the author’s institution, following an established protocol [50], and can be accessed via that repository [51,52].

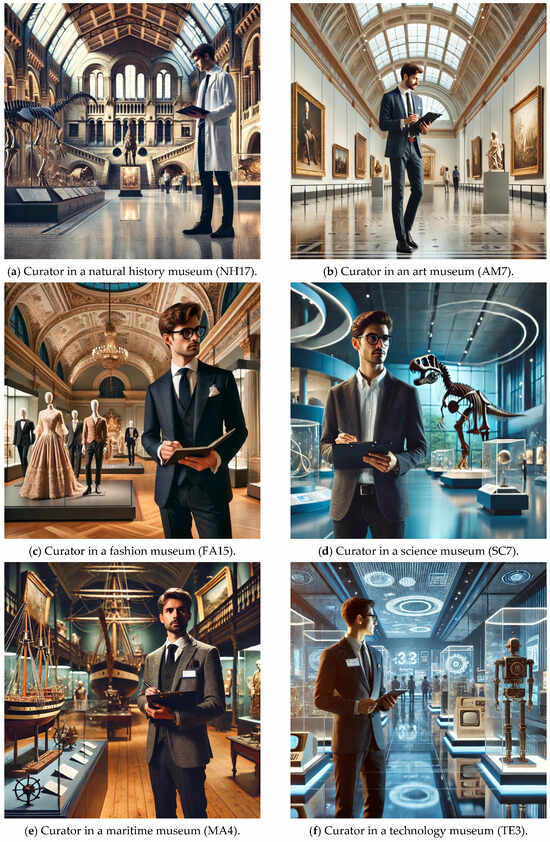

Figure 1.

Examples of museum curators depicted by ChatGPT4o.

2.2. Image Scoring

Visual cues of gender (male/female) and age were scored, with age classed as young (smooth skin texture, full cheeks), middle-aged (aged skin tone, some wrinkles), or old (wrinkles, folds, under-eye bags, gray hair, and gray beard). The boundaries between age classes were somewhat fluid, as they drew solely on facial features, skin tone, and hair coloring. Ethnicity was scored based on facial features (shape, eyes, and nose) and skin color. None of the ethnicity representations was ambiguous; had any been, validation would have been sought from an external validator. Additionally, the hair style of women (bob, open long hair, bun, ponytail) was scored, as was the presence or absence of spectacles, a name tag, a book, a tablet, or a clipboard, and of a beard (for men).

The museum setting in the background was scored in terms of the nature of exhibits, the design age, the positioning of windows, and the nature of showcases. The presumed age of a museum was inferred primarily from the ornamentation of the ceiling, with the design of the windows as a secondary indicator.

2.3. Luminosity and Colorfulness Determinations

The luminosity and colorfulness of the images were determined on 23 and 24 September 2025. For this analysis, the person in the foreground was masked, isolating the background for the luminosity and colorfulness determination. The images were uploaded to ChatGPT4o in batches of ten, and ChatGPT4o was given the following prompt:

Examine each image. For the following tasks disregard the person in front.

Task (1) calculate the average luminosity and the colorfulness of the remainder of the image.

Task (2) state the predominant color of the image in scientific color terminology

Output the values as table giving the file name, luminosity value, colorfulness value and predominant color (use a three-part response of lightness, temperature and hue such as “medium-light cold cyan hues”)

The perceived luminosity of each uploaded image was approximated by ChatGPT4o as the average, over all pixels outside the masked area, of a weighted sum of the RGB channels, following Equation (1) [53]:

L = 0.2126 × R + 0.7152 × G + 0.0722 × B

For 8-bit color channels, luminosity ranges from 0 (pure black) to 255 (pure white).
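To make the averaging step concrete, a minimal sketch in plain Python follows. It assumes, hypothetically, that an image is available as a flat list of 8-bit (R, G, B) tuples and that the masked foreground person is given as a parallel list of booleans; the function name `mean_luminosity` and this data layout are illustrative only and do not describe how ChatGPT4o performed the calculation internally. The weights are those of Equation (1) (the ITU-R BT.709 coefficients); applied directly to gamma-encoded 8-bit values, as here, they approximate perceived brightness rather than strict relative luminance.

```python
from typing import Sequence, Tuple

# Hypothetical data layout: one 8-bit (R, G, B) tuple per pixel.
Pixel = Tuple[int, int, int]


def mean_luminosity(pixels: Sequence[Pixel], person_mask: Sequence[bool]) -> float:
    """Average of L = 0.2126 R + 0.7152 G + 0.0722 B over background pixels.

    person_mask[i] is True where pixel i belongs to the masked foreground
    person; those pixels are excluded from the average.
    """
    if len(pixels) != len(person_mask):
        raise ValueError("pixels and person_mask must have the same length")
    values = [
        0.2126 * r + 0.7152 * g + 0.0722 * b  # Equation (1) for one pixel
        for (r, g, b), is_person in zip(pixels, person_mask)
        if not is_person
    ]
    if not values:
        raise ValueError("no background pixels remain after masking")
    return sum(values) / len(values)  # mean over the unmasked background


if __name__ == "__main__":
    # Toy 3-pixel "image": white, black, and one masked (person) pixel.
    demo_pixels = [(255, 255, 255), (0, 0, 0), (10, 200, 30)]
    demo_mask = [False, False, True]
    print(round(mean_luminosity(demo_pixels, demo_mask), 2))  # 127.5
```

Because the three weights sum to 1, a pure white pixel scores 255 and a pure black pixel 0, which is why the toy background above averages to the midpoint of the range.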

For the calculation of colorfulness, ChatGPT4o used the Hasler and Suesstrunk metric (Equation (2)), which is based on an opponent color space and mimics human perception [54]:

M = √(σrg² + σyb²) + 0.3 × √(μrg² + μyb²)

where σrg and σyb are the standard deviations of rg and yb, and μrg and μyb are their means, with the opponent channels defined as:

rg = R − G and yb = 0.5 × (R + G) − B

Colorfulness ranges from 0 (a grayscale image) to 469 (a highly synthetic image with extreme red–green and yellow–blue contrasts alternating at full intensity and variation). In natural photography, the maximum lies between 120 and 130 [54].
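The colorfulness computation can likewise be sketched in plain Python, again only as an illustration under assumptions: the pixel layout, the boolean person mask, and the function name `hasler_suesstrunk_colorfulness` are hypothetical, and population standard deviations are assumed (for images with many pixels, the choice between population and sample standard deviation has a negligible effect). The function forms the two opponent channels rg and yb for every background pixel and combines their spread and offset as in the Hasler and Suesstrunk metric.

```python
import math
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # hypothetical layout: one 8-bit (R, G, B) tuple per pixel


def hasler_suesstrunk_colorfulness(
    pixels: Sequence[Pixel], person_mask: Sequence[bool]
) -> float:
    """M = sqrt(sd_rg^2 + sd_yb^2) + 0.3 * sqrt(mean_rg^2 + mean_yb^2)."""
    if len(pixels) != len(person_mask):
        raise ValueError("pixels and person_mask must have the same length")
    rg: List[float] = []
    yb: List[float] = []
    for (r, g, b), is_person in zip(pixels, person_mask):
        if is_person:
            continue  # exclude the masked foreground person
        rg.append(r - g)  # red-green opponent channel
        yb.append(0.5 * (r + g) - b)  # yellow-blue opponent channel
    if not rg:
        raise ValueError("no background pixels remain after masking")
    # Spread term: population standard deviations of both opponent channels.
    sigma_rgyb = math.hypot(pstdev(rg), pstdev(yb))
    # Offset term: means of both opponent channels.
    mu_rgyb = math.hypot(fmean(rg), fmean(yb))
    return sigma_rgyb + 0.3 * mu_rgyb


if __name__ == "__main__":
    gray = [(128, 128, 128), (50, 50, 50)]
    print(hasler_suesstrunk_colorfulness(gray, [False, False]))  # 0.0
    red_green = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]  # blue pixel is masked
    print(round(hasler_suesstrunk_colorfulness(red_green, [False, False, True]), 2))  # 293.25
```

A grayscale background has R = G = B everywhere, so both opponent channels vanish and the score is 0; strongly alternating saturated colors push the spread term up quickly.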

2.4. Statistics

Multivariate Analyses

All multivariate analyses were based on 10,000 permutations and performed using PRIMER 7 (version 7.0.23) [55].

Curators: Visual cues extracted from each image were converted to presence/absence measures for 17 attributes (Table 1). To assess patterns in attributes within and between images of curators from the different museums, we used a multivariate analysis in three phases. Firstly, we calculated the Jaccard similarity coefficient between every pair of images. This coefficient is readily interpreted as the proportion of attributes present in either image that are shared by both, and ranges from 0.0 for two images with no attributes in common to 1.0 for two images with identical attributes. For the overall data set (n = 350), we performed a permutational multivariate analysis of variance (PERMANOVA) to determine whether the seven museum types had significantly different attributes [56]. That is, the PERMANOVA assesses whether images within museums were more or less similar to each other than they were to images from other museums. To complement the PERMANOVA, we performed similarity percentage analyses (SIMPER) to identify which attributes contributed most to similarity within each museum type. Secondly, we calculated the distances between the multivariate centroids of the museum types (n = 7) and performed group-average cluster analyses to identify groups of attributes that tended to co-occur across museum types and groups of museums that tended to have similar attributes. After inspecting the cluster outputs, we used arbitrary cut-off values of 75% similarity for grouping attributes and 70% similarity for grouping museums. Thirdly, we generated a two-way table with an 11-point shading scale based on average attribute occurrence to display the overall pattern of attribute co-occurrence and occurrence across museums. We present the two-way table in the same figure as the cluster analyses.
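As an illustration of the first phase, the following sketch computes Jaccard similarities between presence/absence profiles (represented as sets of attribute names) and a one-way PERMANOVA pseudo-F with a permutation p-value. It is written from the general definition of PERMANOVA (sums of squared distances within and among groups, with group labels shuffled to build the null distribution) and is not PRIMER's implementation. Jaccard dissimilarity (1 − similarity) serves as the distance; the function names, the fixed random seed, and the toy profiles are hypothetical. The default of 999 permutations is for speed; `n_perm` can be raised to 10,000 to match the analyses described above.

```python
import itertools
import random
from typing import Dict, List, Sequence, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Attributes shared by both images / attributes present in either image."""
    union = a | b
    if not union:
        raise ValueError("Jaccard similarity is undefined for two empty sets")
    return len(a & b) / len(union)


def pseudo_f(dist: List[List[float]], labels: Sequence[str]) -> float:
    """One-way PERMANOVA pseudo-F computed from a square distance matrix."""
    n = len(labels)
    groups: Dict[str, List[int]] = {}
    for i, label in enumerate(labels):
        groups.setdefault(label, []).append(i)
    a = len(groups)
    if a < 2 or n <= a:
        raise ValueError("need at least two groups and more images than groups")
    # Total sum of squares: squared distances over all pairs, divided by n.
    ss_total = sum(dist[i][j] ** 2 for i, j in itertools.combinations(range(n), 2)) / n
    # Within-group sum of squares: the same per group, divided by group size.
    ss_within = sum(
        sum(dist[i][j] ** 2 for i, j in itertools.combinations(idx, 2)) / len(idx)
        for idx in groups.values()
    )
    if ss_within == 0:
        return float("inf")  # every group is internally identical
    ss_among = ss_total - ss_within
    return (ss_among / (a - 1)) / (ss_within / (n - a))


def permanova(
    profiles: Sequence[Set[str]], labels: Sequence[str], n_perm: int = 999, seed: int = 1
) -> Tuple[float, float]:
    """Pseudo-F on Jaccard dissimilarities plus a permutation p-value."""
    dist = [[1.0 - jaccard(p, q) for q in profiles] for p in profiles]
    observed = pseudo_f(dist, labels)
    rng = random.Random(seed)
    shuffled = list(labels)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the link between images and museum type
        if pseudo_f(dist, shuffled) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


if __name__ == "__main__":
    # Invented toy profiles (not study data): three "AM" and three "TE" images.
    profiles = [
        {"male", "young", "clipboard", "note-taking"},
        {"male", "young", "clipboard"},
        {"male", "young", "clipboard", "beard"},
        {"male", "middle-aged", "tablet"},
        {"male", "middle-aged", "tablet", "glasses"},
        {"male", "middle-aged", "tablet", "beard"},
    ]
    labels = ["AM"] * 3 + ["TE"] * 3
    print(round(jaccard(profiles[0], profiles[1]), 2))  # 0.75
    f_stat, p_value = permanova(profiles, labels)
    print(f"pseudo-F = {f_stat:.2f}, permutation p = {p_value:.3f}")
```

With only six toy images there are few distinct ways to split them into two groups of three, so the smallest attainable p-value is coarse; with 350 images, as in this study, the permutation distribution is far finer.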

Table 1.

Visual cues of curators converted to presence and absence of attributes.

Museums: Museum visual scores for hue, lightness, and temperature were converted to presence/absence attributes (Table 2). Luminosity was retained as a continuous variable. As the data are of mixed format (presence/absence and continuous variables), we used Spearman’s rank correlation coefficient as the measure of similarity between images. We performed PERMANOVA to assess the similarity of image attributes within and between museum types, and cluster analyses to identify groups of similar museums and attributes with similar patterns of occurrence. To evaluate the relationship of the attributes to the museum types, we performed a non-metric multidimensional ordination of the centroids of the seven museum types, with a principal axis correlation to assess which attributes were associated with the ordination space [57].
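The similarity measure itself can be illustrated with a short sketch of Spearman's rank correlation between two images' attribute vectors, computed as the Pearson correlation of tie-averaged ranks. This shows only the coefficient; it does not reproduce PRIMER's resemblance calculation, and the attribute order and values in the example (five 0/1 scores followed by one luminosity value) are hypothetical.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run of ties that begins at position `start`.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # mean of 1-based ranks start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long vectors with at least two entries")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in rx))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in ry))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for a constant vector")
    return cov / (sd_x * sd_y)


if __name__ == "__main__":
    # Hypothetical attribute order: warm, cold, neutral, medium-light,
    # medium-dark, luminosity (0/1 scores plus one continuous value).
    image_a = [1, 0, 0, 1, 0, 142.0]
    image_b = [1, 0, 0, 1, 0, 120.0]
    image_c = [0, 1, 0, 0, 1, 95.0]
    print(round(spearman(image_a, image_b), 3))  # 1.0
    print(round(spearman(image_a, image_c), 3))  # 0.167
```

Because only ranks enter the calculation, images a and b are treated as identical even though their luminosity values differ; this insensitivity to scale is what makes a rank-based coefficient usable for mixed binary and continuous data.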

Table 2.

Visual cues of museums converted to presence and absence of attributes.

2.5. Limitations

The use of a single scorer for the assessment of personal attributes, exhibit content, and museum design introduces a level of subjectivity. That is offset by the extensive experience of the principal author in the analysis of AI-generated images of people [8,19,58,59].

3. Results

3.1. Representation of Curators

3.1.1. Gender

Of the 350 images of curators generated for this study, the overwhelming majority (97.7%) depicted men (Table 3). All eight women curators rendered by DALL-E are young and professionally dressed in an ensemble. None of them wears glasses or a name tag. Their hair is worn long and open (4), tied in a bun (2), tied back (1), or cut short (1). Given the small number of women, the remainder of the discussion addresses the portrayal of male curators.

Table 3.

Characteristics of male curators depicted by ChatGPT4o. Museum type: AM–art; FA–fashion; MA–maritime; NH–natural history; SC–science; SH–social history; and TE–technology.

3.1.2. Age

Most male curators are depicted as young men (Table 3), ranging from 100% of curators in fashion museums to 74% of curators in social history museums. The museums cluster into three groups: fashion, natural history, and science; art and social history; and maritime and technology. Art and social history museums have a significantly greater proportion of middle-aged staff than fashion or natural history museums (AM vs. NH χ2 = 4.921, df = 1, p = 0.0265; SH vs. NH χ2 = 5.683, df = 1, p = 0.0171), while curators in maritime and technology museums are, in turn, significantly older than those in social history museums (SH vs. MA χ2 = 10.503, df = 1, p = 0.0012; SH vs. TE χ2 = 7.139, df = 1, p = 0.0075).
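The pairwise age comparisons above are reported as χ2 statistics with one degree of freedom, which is consistent with 2 × 2 contingency tables (two museum types × two age classes). A minimal sketch of such a test follows, assuming a Pearson test without Yates' continuity correction (the variant used is not stated here). The function name is hypothetical, and the counts in the example are invented for illustration; they are not the study's data and do not reproduce the values reported above.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-squared test for the 2x2 table [[a, b], [c, d]].

    Assumes df = 1 and no Yates continuity correction; returns (chi2, p).
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    # Shortcut formula, equivalent to summing (observed - expected)^2 / expected.
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With df = 1, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Invented counts for illustration only (not the study's data):
    # museum A: 10 middle-aged, 40 young; museum B: 2 middle-aged, 48 young.
    chi2, p = chi_square_2x2(10, 40, 2, 48)
    print(f"chi2 = {chi2:.3f}, p = {p:.4f}")
```

The closed-form p-value works because a χ2 variable with one degree of freedom is the square of a standard normal variable, so no statistics library is needed for this special case.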

3.1.3. Curator Attributes

The generative text-to-image combination of ChatGPT and DALL-E produced several persistent curator attributes. For example, curators in natural history, maritime, and science museums are more likely than not to wear name tags or lanyards in the images rendered by ChatGPT/DALL-E, while curators in art and fashion museums eschew them altogether (Table 3). If the depictions by ChatGPT/DALL-E were to be believed, it is de rigueur for museum curators to walk through their exhibits armed with a clipboard (and notebooks) or a digital tablet. Clipboards are very ‘popular’ among curators in art and fashion museums (96–98%), while tablets dominate in technology museums (90%) (Table 1). Given that beards are a stereotypical attribute of male scientists [14,60,61], it is not surprising that three-quarters of the curators in art, natural history, and maritime museums are depicted with one. Fashion museums are the exception, with facial hair depicted in only 20.9% of their curators (Table 3). Glasses (spectacles), another common stereotypical attribute associated with learnedness and scientists [62,63,64], do not follow any coherent pattern. They are particularly common in the portrayals of curators in technology and art museums (71.4–80%) and less common among curators working in science and fashion museums (30–34.9%) (Table 3).

3.1.4. Attire

Curators in art museums are significantly more likely to wear informal clothing, such as a blazer and pants (Figure 1b), than formal wear, such as suits (with or without vests) (Figure 1c) (χ2 = 12.83, df = 1, p = 0.0003), as are curators in social history museums (χ2 = 5.702, df = 1, p = 0.0169) (Table 4). Curators working in natural history museums are significantly more likely to be depicted wearing formal clothing than laboratory coats (χ2 = 17.813, df = 1, p < 0.0001) (Figure 1a) or casual attire (χ2 = 26.009, df = 1, p < 0.0001) (Table 4).

Table 4.

Attire worn by curators depicted by ChatGPT4o (in %). Museum type: AM–art; FA–fashion; MA–maritime; NH–natural history; SC–science; SH–social history; and TE–technology.

3.1.5. Activity

All curators are depicted in some form of activity or interaction with the viewer of the image. Curatorial activities are primarily rendered as note-taking on clipboards or tablets, with a single instance of a curator arranging a showcase in a fashion museum (Table 5). The share of curators depicted taking notes ranges from 92% in art museums and 90% in natural history museums to 20% in science museums and none in technology museums. Additionally, many curators were holding notebooks, clipboards, or tablets while facing, and presumably interacting with, the viewer, suggesting that they had been interrupted in their note-taking. Setting aside art and natural history museums (given their note-taking figures of over 90%), these percentages ranged from 10% (fashion museums) to 24% (social history museums) (Table 5). All museum types had curators who engaged with visitors (facing the viewer), either casually (as indicated by a hand in a pocket) or more formally. In addition, curators were depicted looking pensively into the exhibition space, in proportions ranging from 10% in maritime and social history museums (again setting aside art and natural history museums) to 60% in technology museums.

Table 5.

Activities of curators depicted by ChatGPT4o (in %). Museum type: AM–art; FA–fashion; MA–maritime; NH–natural history; SC–science; SH–social history; and TE–technology.

3.1.6. Inter-Museum Comparison

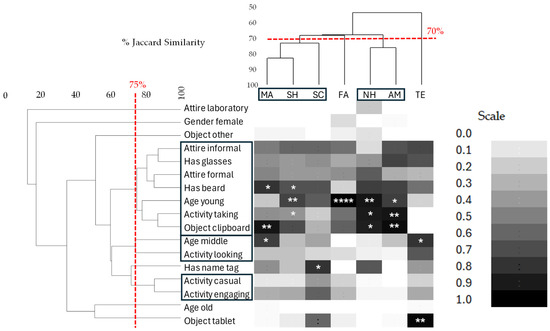

Image attributes of curators were more similar within museum types than between museum types overall (Pseudo F = 25.2, df = 6, 243, p < 0.0001), and images from each of the seven museum types differed significantly from those of every other type (all p < 0.0003). The most similar museum types were MA and SH (83% similar), which were grouped with SC (73% similar), whilst NH and AM were grouped together at 76% similarity (Figure 2). Curators from TE museums were the least similar to those of all other museums and joined the cluster at 54% similarity overall (Figure 2). NH and AM curators were characterized as similar because of the prevalence of young curators, note-taking, and carrying a clipboard (Figure 2). MA, SC, and SH curators were more likely to have a beard and less likely to be young or taking notes. TE curators were mainly characterized by having a tablet and being middle-aged. FA curators were predominantly depicted as young and without a beard (Figure 2). The less prevalent attributes, such as being female, wearing laboratory coats, holding other objects, and being older, tended not to share occurrence patterns with any other attributes (Figure 2). Tablets were also dissimilar in occurrence to all other attributes, but occurred in 25% of curators overall and in all but one of the TE museum curators.

Figure 2.

Two-way table showing the relationships between attributes (visual scores) of AI-generated curator images, by museum type. Shading scale is the proportion of images within each museum type that have the attribute. Dendrograms show relationships between the attributes (Left) and museums (Top) based on the Jaccard similarity coefficient. Boxes show groups of attributes that have similar occurrence profiles (Left) and museums that have similar attributes (Top) at 75% and 70% similarity, respectively. Asterisks identify attributes that define each museum based on percent contribution to similarity (* ≥ 15%, ** ≥ 20%, **** ≥ 40%).

3.2. Museum Setting

The prompt asked ChatGPT4o to place the curators in a museum setting, which allows us to examine how ChatGPT4o/DALL-E constructs these images in terms of museum architecture (Table 6) and exhibitions (Table 9).

Table 6.

Characteristics of museums depicted by ChatGPT4o.

Table 7.

Perceived brightness and colorfulness of the interior of the museums.

3.2.1. Museum Architecture

Most of the museum buildings are depicted as hallowed halls of the nineteenth century, with tall galleries, ornate walls, and large skylights (Table 6, Figure 1a–c). Science and technology museums (Figure 1d,f), on the other hand, are portrayed as modern exhibition spaces with clean lines. Social history museums occupy a design space intermediate between these two extremes. Some visualizations show visitors in the background: they are entirely absent from depictions of fashion museums but appear in 90% of depictions of science and technology museums (Table 6).

3.2.2. Light Conditions and Color of the Museum Spaces

The perceived brightness (luminosity) of the museum space in each image was approximated using a weighted sum of the RGB channels of the image outside the depicted person (Table 7). The formula (see Methods) is based on the physiology of human vision, in which the eye is most sensitive to green light, less to red, and least to blue [53]. All of the museum spaces barred those that show marked differences in lighting.

In addition to light conditions, we can consider the colorfulness of the interior design. The perceived colorfulness of an image, i.e., the intensity and diversity of color, can be calculated using the Hasler and Suesstrunk [54] metric, which mimics human perception. The calculated average values for the seven museum types range from 41.4 in the case of natural history museums to 160.6 in the case of technology museums (Table 7). Using the Hasler and Suesstrunk classification, the interiors of the maritime museums and natural history museums are ‘moderately colorful’, with art museums, science museums, and social history museums classified as ‘highly colorful’ and fashion museums and technology museums as ‘extremely colorful’ (Table 7 and Table 8).

Table 8.

Color tones of the interior of the museums (in %).

3.2.3. Museum Exhibits

While most of the dominant features of exhibition content are museum-specific, such as mannequins in fashion museums (e.g., Figure 1c) and boats in maritime museums, some features occur in multiple museum types (Table 9). Paintings, for example, appear not only in art museums but also prominently in maritime and social history museums. Dinosaur skeletons feature not only in all representations of natural history museums (e.g., Figure 1a) but also in science museums (Figure 1d), while robots and screens feature prominently in both science and technology museums (Figure 1d,f).

Table 9.

Museums: exhibition content depicted by ChatGPT4o (percentages are given in brackets).

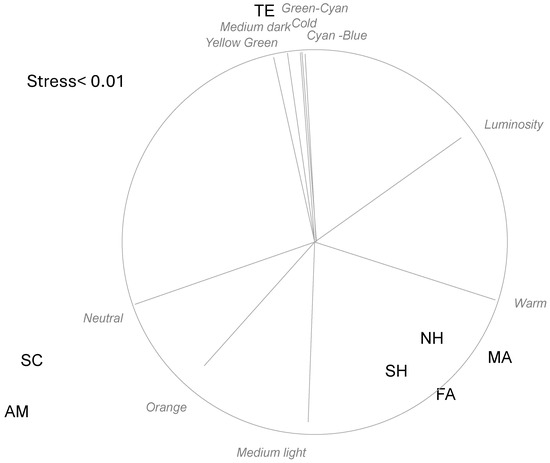

3.2.4. Inter-Museum Comparison

The museum images were also more similar to other images of the same museum type than to those representing other museum types (Pseudo F = 55.2, df = 6, 243, p < 0.0001), and each museum type’s images were, on average, more similar to one another than to those of every other museum type (all p < 0.004). MA museum images were invariant for all attributes except luminosity. All AM, FA, MA, and SC museum images scored medium-light for lightness; MA, NH, and SH museum images were all warm in temperature, and all MA images were red-yellow in hue. TE museum images scored much more often for cold, medium-dark, yellow-green, green-cyan, and cyan-blue, making them appear considerably different from all other museum images (Figure 3). AM and SC images were the only ones that scored for neutral temperature, and both also scored regularly for orange hue; this combination separated them from most other museum image types. NH, SH, FA, and MA museum images were generally associated with warm temperatures, medium-light lightness, and higher luminosity (Figure 3).

Figure 3.

Non-metric multidimensional ordination of the centroids of 50 images for each of seven museum types based on the attributes of the museum in the image. Similarity is Spearman’s correlation coefficient. Vectors show the correlation of the attributes with the ordination space; the circle indicates the maximum correlation value of 1.

4. Discussion

As there is no systematic overview of the demographic composition of curators employed in the world’s museums and galleries [65], comparing the representations by DALL-E with the real world is difficult. The available data are skewed towards Anglophone countries, as the national census bodies of populous nations such as India, China, and Russia have not compiled such data.

In Canada, 70.3% of all curators and conservators who identified their gender in 2021 were women [66], while in the United Kingdom, the figure was 49% in 2018 [67]. In the USA, women made up 58.6% of archivists, curators, and museum technicians in the 2022 census (2014: 63.1%; 2017: 63.9%; 2020: 60.4%) [68]. The representation of women among Australian gallery and museum curators has increased steadily over the past two decades, reaching 72% in the 2021 census (1996: 54.3%; 2001: 60.7%; 2006: 63.7%; 2011: 67.0%; 2016: 67.6%) [69,70,71,72,73], while the representation in New Zealand has been stable (2006: 57.7%; 2012: 57.5%) [74]. In the non-Anglophone world, data are available from Switzerland (2021: 62% women [75]), France (2003: 72% [76]), and Sweden (2022: 66% [77]). In Germany, the representation of women among museum directors increased from 21.2% in 1994 to 33.6% in 2014 [78]. As the German data are limited to museum directors rather than all curators, this low representation may be an artifact of gender representation in leadership roles overall.

Thus, the extremely biased gender representation in the generative AI visualizations of curators, with 97.7% presented as male, does not reflect the reality on the ground, where women make up 49% to 72% of curators, but perpetuates the stereotype that ‘responsible’ and ‘influential’ positions are held by men, a pattern also observed in other generative AI visualizations [8,31].

The age profile of archivists, curators, and museum technicians in the USA, when aggregated into the classes young (≤34), middle-aged (35–54), and old (≥55), showed a broadly even distribution in 2014 (30.3%:30.0%:39.8%), becoming more skewed by 2022, with a decrease in middle-aged and an increase in older curators (31.9%:26.4%:41.7%) [68]. The Australian data show that over 50% of curators were middle-aged, with the remainder split roughly evenly between young and older curators (2021: 22.3%:52.0%:25.9%). Overall, Australian gallery and museum curators form an increasingly aging workforce (≥55: 1996: 12.4%; 2011: 22.4%; 2021: 25.9%) [69,70,79]. As with the generative AI representation of curators’ gender, the visualizations of age profiles are heavily skewed towards younger staff, which reflects neither the reality in the institutions nor ChatGPT4o’s own ‘understanding’ of the curatorial universe (see below).

Few statistics exist for the ethnicity of museum curators. In the USA, 82.6% of archivists, curators, and museum technicians were Caucasian in 2022 (down from 86.1% in 2014) [68]. In the United Kingdom, of all museum staff who stated their ethnicity, 64% were identified as White [67]. The ethnic composition of the curatorial population depicted by ChatGPT/DALL-E was one hundred percent White/Caucasian. This representation is significantly higher than even the actual representation in the USA (χ2 = 18.605, df = 1, p < 0.0001). Other papers have also shown a strong bias towards Caucasian ethnicity, albeit not as extreme as in the current study [8,34,35,36].

This is not surprising if we posit that the training data are derived primarily from English-language, mainly US, sources [80], and that the red team providing output quality control and moderation during the training phase was drawn heavily from North American experts [27].

Not only are most curatorial staff represented as young Caucasians, but all curators are also depicted as elegantly dressed, even when wearing casual clothing, with coiffed, stylish hair and well-trimmed beards. The curatorial characters presented by ChatGPT/DALL-E are thus a far cry from the popular stereotype of curators as nerdy, conservative in dress, and stuck in time (see Introduction). Instead, they generally resemble yuppie professionals or people portrayed in fashion advertising (male ‘eye-candy’) rather than curators in real life.

Given these observations of dissonance, ChatGPT4o was asked to “describe a typical curator in terms of gender, ethnicity, age and attire” to assess whether its response conformed to the stereotypes that were visualized (see supplementary material, ‘conversations’ A–D) [51]. Consistent with training intended to make ChatGPT4o respond in a balanced fashion [81,82], the answers acknowledged a preponderance of Caucasian curators while noting increasing numbers of curators from other ethnic backgrounds, as well as the (possible) presence of non-binary individuals. ChatGPT4o stated that men dominate in art and natural history museums, while women are strongly represented in social history and fashion museums. When asked whether these observations would inform the creation of an image of a ‘typical curator,’ ChatGPT4o responded that it “wouldn’t rely solely on outdated stereotypes (e.g., an older white male in a tweed jacket), but rather create a plausible, modern representation that reflects the growing diversity in the field” (‘conversation’ A) and that it would “use the description as a general guide but avoid reinforcing stereotypes” (‘conversation’ C).

Clearly, there is a dissonance between ChatGPT’s ‘understanding’ of curators and the resulting visualizations. Analysis of the image prompts generated by ChatGPT showed that biases are introduced at two steps in the image generation process: first, in the prompt that ChatGPT generates autonomously, which may specify age, ethnicity, or gender or may remain silent on them; and second, in DALL-E’s interpretation of that injected prompt [19]. This ties back to the various points at which biases originate in the source data and are injected into the training data, as well as to the development of the algorithm [30]. Essentially, the original image–caption pairs that form the basis of the training data sets [30] were flawed. Public images of curators in their workspaces and museum settings are uncommon, a scarcity exacerbated by the fact that much of a curator’s work occurs out of the public eye. It can thus be speculated that the image bias derives from representations in generic stock photography that were incorporated into several of the large training data sets [30].
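
The two-step origin of bias can be illustrated by auditing prompts for whether they pin down an attribute at all: if ChatGPT's prompt states gender, age, or ethnicity, bias can enter at the first step; if it stays silent, DALL-E fills the gap at the second. The Python sketch below flags which attributes a prompt specifies using simple whole-word keyword matching. The keyword lists and example prompts are hypothetical and illustrative only; this is not the coding procedure used in the study, and keyword matching is crude (e.g., ‘white’ may describe clothing rather than ethnicity), so a real audit would need a validated, much richer vocabulary.

```python
import re

# Hypothetical, deliberately small keyword lists (illustration only).
ATTRIBUTE_TERMS = {
    "gender": ["man", "woman", "male", "female", "he", "she", "his", "her"],
    "age": ["young", "middle-aged", "older", "elderly", "senior"],
    "ethnicity": ["caucasian", "white", "black", "asian", "hispanic", "indigenous"],
}


def specified_attributes(prompt: str) -> dict[str, bool]:
    """Report, per attribute, whether the prompt names it explicitly.
    A False value means the attribute is left for the image model to decide."""
    text = prompt.lower()
    found = {}
    for attribute, terms in ATTRIBUTE_TERMS.items():
        # \b anchors ensure whole-word matches ("he" does not match "the").
        found[attribute] = any(
            re.search(r"\b" + re.escape(term) + r"\b", text) for term in terms
        )
    return found


prompts = [
    "A young curator in a tailored suit stands in a grand gallery",
    "A Caucasian man with a neatly trimmed beard holding a clipboard",
]
for p in prompts:
    print(specified_attributes(p))
```

In the first hypothetical prompt, gender and ethnicity are unspecified and would be decided by the image model; in the second, both are fixed by the text prompt before DALL-E is involved.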

The curatorial profession may need to consider the implications of these AI-generated stereotypes, which differ so dramatically from reality. It can be posited that the profession was either unaware of the misrepresentations in stock photography or did not see them as harmful. While these biases are now ‘baked’ into the model, they can be overcome by judiciously crafted prompts. That, however, requires the public at large to be aware of what curators are actually like, which may necessitate proactive image management by the profession.

The portrayal of the museums also follows existing stereotypes. The renderings of natural history museum interiors, replete with dinosaur skeletons (e.g., Figure 1a), strongly echo the main hall of the Natural History Museum in South Kensington (London, UK) designed by Alfred Waterhouse [83], while the interiors of the art museums, with their arched glass ceilings, strongly resemble the grand galleries of the Louvre (Paris, France) [84]. The renderings of the fashion museums echo these galleries, but on a less grandiose scale. While the renderings of the other museums are generally consistent within each museum type, their key architectural details are less consistent than in the two previous examples. Yet all museum interiors (understandably) echo existing museums. Broadly speaking, maritime museums have a ‘wooden look,’ while social history museums have a subdued appearance with compact exhibition spaces. In the depictions of science and technology museums, it is worth noting that ‘futurist’ technology is rendered in a translucent azure or cyan blue (Figure 1f). This has also been observed in other studies that examine futurist renderings by ChatGPT4o/DALL-E 3 [27,85,86].

This study has three limitations that need to be acknowledged. First, while there are a number of text-to-image generative AI applications, e.g., Midjourney, Stable Diffusion, Adobe Firefly, and ChatGPT/DALL-E, the study only examined the output generated by the latter. This was deliberate, as ChatGPT is by far the most commonly used generative AI tool, with over 700 million users [48]. Second, the study relies on prompts that request a single rendering rather than asking ChatGPT4o to provide a suite of, say, four different renderings. Forcing ChatGPT4o to provide a single rendering was deliberate in order to trigger underlying biases, whereas requesting multiple renderings at once would force ChatGPT4o to introduce variation by including other expressions of the same variables (e.g., gender, age). Third, the study drew on ChatGPT4o, which has since been superseded by ChatGPT5. The present study therefore serves as a firm baseline for future work evaluating whether subsequent versions produce less-biased output.

More generally, the findings of this study contribute to the broader dialogue on the societal implications of AI, with particular attention to education, representation, and the dissemination of information. The stereotypical depiction of curators not only diminishes public understanding of these crucial roles but also reinforces outdated narratives. This research highlights the urgent need for critical evaluation of images generated by AI, as the biases embedded within these outputs can have significant consequences, shaping public perception and perpetuating stereotypes that fail to reflect reality. When illustrating web content, authors seeking to generate copyright- and royalty-free images ‘on the fly’ need to be cognizant of the subliminal biases and stereotypes that seemingly ‘innocent’ imagery may convey. By drawing attention to the inaccuracies and constraints of AI-generated imagery, we can better prepare future generations to engage with technology critically and foster a more sophisticated understanding of its broader implications.

5. Conclusions

The study set out to examine how the popular generative AI model ChatGPT4o interprets and visualizes curators, and to what extent these visualizations reflect the popular stereotype of curators as boring, or at least nerdy, conservative in dress, and stuck in time, rummaging through collections in the basement. Based on 230 visualizations of curators in seven different museum types, each individually created by generative AI, the study found a strong bias toward depicting men, with only a handful of women included. The women portrayed were all young and professionally dressed. Most male curators were also shown as young, particularly in fashion and natural history museums, while social history, maritime, and technology museums included more middle-aged individuals. Curatorial attire varied, with art and social history curators more often in informal clothing, while natural history curators were dressed more formally.

The AI-generated representations contrast sharply with real-world demographics. Women, for example, were extremely under-represented, despite making up a significant portion of museum professionals in the English-speaking world. Likewise, young professionals dominated the age structure of the AI-generated curators, whereas most real-world curators are in their late 40s and 50s.

Stereotypical attributes, however, were consistently depicted. Name tags appeared mostly in science, natural history, and maritime museums but were absent in art and fashion museums. Curators in art and fashion museums were frequently depicted with clipboards, while those in technology museums often held tablets. While glasses appeared inconsistently, beards were common among curators from art, natural history, and maritime museums, but rare in fashion museums. Museum settings followed traditional representations, depicted as the hallowed halls of the nineteenth century, with tall galleries, ornate walls, and large ceiling windows. Only science and technology museums were portrayed as modern exhibition spaces.

The findings highlight biases introduced at multiple stages of AI content generation, which shape an inaccurate portrayal of museum professionals and museum interiors if the images are taken uncritically at ‘face value’. There is a risk that generative AI visualizations, being copyright-free, will be used as generic stand-in illustrations in media items, web pages, and public presentations, creating and perpetuating false impressions of curators and museum interiors.

Author Contributions

Conceptualization: D.H.R.S.; methodology: D.H.R.S.; data collection: D.H.R.S.; formal analysis: D.H.R.S. and W.R.; writing—original draft preparation: D.H.R.S.; writing—review and editing: D.H.R.S. and W.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available via doi: https://doi.org/10.26189/951bb16a-3dda-4a84-bd3d-994203d28c7e and doi: https://doi.org/10.26189/46f08bfc-a9f4-4172-8fea-96c4641fdef5.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sandell, R. Museums, Prejudice and the Reframing of Difference; Routledge: London, UK, 2007.

- Coffee, K. Cultural inclusion, exclusion and the formative roles of museums. Mus. Manag. Curatorship 2008, 23, 261–279.

- Bargna, I. The Anthropologist as Curator; Routledge: London, UK, 2020.

- Mayer, C.E. The contemporary curator: Endangered species or brave new profession? Muse 1991, 9, 34–43.

- Lawther, K. Who Are Museum Curators According to Pop Culture? 15 November 2020. Available online: http://acidfreeblog.com/curation/who-are-museum-curators-according-to-pop-culture/ (accessed on 12 March 2025).

- Aldred, T.; Burr, G.; Park, E. Crossing a librarian with a historian: The image of reel archivists. Archivaria 2008, 66, 57–93.

- Vilar, P.; Šauperl, A. Archives, quo vadis et cum quibus? Archivists’ self-perceptions and perceptions of users of contemporary archives. Int. J. Inf. Manag. 2015, 35, 551–560.

- Spennemann, D.H.R.; Oddone, K. What do librarians look like? Stereotyping of a profession by generative AI. J. Librariansh. Inf. Sci. 2025, 16, 09610006251357286.

- Seale, M. Old maids, policeman, and social rejects: Mass media representations and public perceptions of librarians. Electron. J. Acad. Spec. Librariansh. 2008, 9, 107.

- White, A. Not Your Ordinary Librarian: Debunking the Popular Perceptions of Librarians; Chandos Publishing: Oxford, UK, 2012.

- Carter, S. Review of: Rosalind, M. Pepall, Talking to a Portrait: Tales of an Art Curator. 5 October 2020. Available online: https://quillandquire.com/review/talking-to-a-portrait-tales-of-an-art-curator/#:~:text=But%20because%20most%20people%20aren,toiling%20away%20in%20dusty%20tombs (accessed on 12 March 2025).

- Moniz, M. Source of nostalgia and archival recreation: The South African Hellenic archive. De Arte 2021, 56, 82–106.

- Voris, H.K.; Resetar, A. Hymen Marx. Iguana 2007, 14, 135–136.

- Rogers, S.L.; Giles, S.; Dowey, N.; Greene, S.E.; Bhatia, R.; Van Landeghem, K.; King, C. “You just look at rocks, and have beards” Perceptions of Geology From the United Kingdom: A Qualitative Analysis From an Online Survey. Earth Sci. Syst. Soc. 2024, 4, 10078.

- Cabrera, R.M. Beyond Dust, Memories and Preservation: Roles of Ethnic Museums in Shaping Community Ethnic Identities; University of Illinois at Chicago: Chicago, IL, USA, 2008.

- Greenwald, A.G.; Banaji, M.R.; Rudman, L.A.; Farnham, S.D.; Nosek, B.A.; Mellott, D.S. A unified theory of implicit attitudes, stereotypes, self-esteem, and self-concept. Psychol. Rev. 2002, 109, 3.

- Dixon, T.L. Understanding how the internet and social media accelerate racial stereotyping and social division: The socially mediated stereotyping model. In Race and Gender in Electronic Media; Lind, R.A., Ed.; Routledge: Abingdon, UK, 2016; pp. 161–178.

- Lafky, S.; Duffy, M.; Steinmaus, M.; Berkowitz, D. Looking through gendered lenses: Female stereotyping in advertisements and gender role expectations. J. Mass Commun. Q. 1996, 73, 379–388.

- Spennemann, D.H.R. Who is to blame for the bias in visualizations, ChatGPT or Dall-E? AI 2025, 6, 92.

- Choudhary, T. Political Bias in AI-Language Models: A Comparative Analysis of ChatGPT-4, Perplexity, Google Gemini, and Claude. IEEE Access 2025, 13, 11341–11365.

- Spennemann, D.H.R. The origins and veracity of references ‘cited’ by generative artificial intelligence applications. Publications 2025, 13, 12.

- Tao, Y.; Viberg, O.; Baker, R.S.; Kizilcec, R.F. Cultural bias and cultural alignment of large language models. PNAS Nexus 2024, 3, pgae346.

- Chua, A.Y.; Chen, M.; Kan, M.; Seoh, W. Digital prejudices: An analysis of gender, racial and religious biases in generative AI chatbots. Internet Res. 2025, 1–27.

- Ye, S. Generative AI May Prefer to Present National-level Characteristics of Cities Based on Stereotypical Geographic Impressions at the Continental Level. arXiv 2023, arXiv:2310.04897.

- Hörmeyer, M. Verzerrte Datensätze-ein Dilemma für den wirksamen KI-Einsatz in Verwaltungen? Innov. Verwalt. 2024, 46, 9.

- Strasser, K.; Niedermayer, B. Unvoreingenommenheit von Künstliche-Intelligenz-Systemen. Die Rolle von Datenqualität und Bias für den verantwortungsvollen Einsatz von künstlicher Intelligenz. In CSR und Künstliche Intelligenz; Altenburger, R., Schmidpeter, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; pp. 121–135.

- Spennemann, D.H.R. Non-Responsiveness of DALL-E to Exclusion Prompts Suggests Underlying Bias Towards Bitcoin. 2025. Available online: https://ssrn.com/abstract=5199640 (accessed on 12 March 2025).

- Saleh, M.; Tabatabaei, A. Building Trustworthy Multimodal AI: A Review of Fairness, Transparency, and Ethics in Vision-Language Tasks. Int. J. Web Res. 2025, 8, 11–24.

- Narnaware, V.; Vayani, A.; Gupta, R.; Swetha, S.; Shah, M. SB-Bench: Stereotype bias benchmark for large multimodal models. arXiv 2025, arXiv:2502.08779.

- Spennemann, D.H.R. The Layered Injection Model of Algorithmic Bias as a Conceptual Framework to Understand Biases Impacting the Output of Text-to-Image Models. 2025. Available online: https://ssrn.com/abstract=5393987 (accessed on 12 March 2025).

- Melero Lázaro, M.; García Ull, F.J. Gender stereotypes in AI-generated images. El Prof. Inf. 2023, 32, e320505.

- Hacker, P.; Mittelstadt, B.; Borgesius, F.Z.; Wachter, S. Generative discrimination: What happens when generative AI exhibits bias, and what can be done about it. In The Oxford Handbook of the Foundations and Regulation of Generative AI; Hacker, P., Mittelstadt, B., Hammer, S., Engel, A., Eds.; Oxford University Press: Oxford, UK, 2025.

- Hosseini, D.D. Generative AI: A problematic illustration of the intersections of racialized gender, race, ethnicity. OSF Prepr. 2024.

- Currie, G.; John, G.; Hewis, J. Gender and ethnicity bias in generative artificial intelligence text-to-image depiction of pharmacists. Int. J. Pharm. Pract. 2024, 32, 524–531.

- Currie, G.; Hewis, J.; Hawk, E.; Rohren, E. Gender and ethnicity bias of text-to-image generative artificial intelligence in medical imaging, part 2: Analysis of DALL-E 3. J. Nucl. Med. Technol. 2024, 52, 356–359.

- Currie, G.; Hewis, J.; Wheat, J. Gender and ethnicity representation of university academics by generative artificial intelligence using DALL-E 3. J. Furth. High. Educ. 2025, 49, 1064–1078.

- Currie, G.; Chandra, C.; Kiat, H. Gender Bias in Text-to-Image Generative Artificial Intelligence When Representing Cardiologists. Information 2024, 15, 594.

- Currie, G.; Currie, J.; Anderson, S.; Hewis, J. Gender bias in generative artificial intelligence text-to-image depiction of medical students. Health Educ. J. 2024, 83, 732–746.

- Morcos, M.; Duggan, J.; Young, J.; Lipa, S.A. Artificial Intelligence Portrayals in Orthopaedic Surgery: An Analysis of Gender and Racial Diversity Using Text-to-Image Generators. J. Bone Jt. Surg. 2024, 106, 2278–2285.

- Cevik, J.; Lim, B.; Seth, I.; Sofiadellis, F.; Ross, R.J.; Cuomo, R.; Rozen, W.M. Assessment of the bias of artificial intelligence generated images and large language models on their depiction of a surgeon. ANZ J. Surg. 2024, 94, 287–294.

- Abdulwadood, I.; Mehta, M.; Carrion, K.; Miao, X.; Rai, P.; Kumar, S.; Lazar, S.; Patel, H.; Gangopadhyay, N.; Chen, W. AI Text-to-Image Generators and the Lack of Diversity in Hand Surgeon Demographic Representation. Plast. Reconstr. Surg. Glob. Open 2024, 12, 4–5.

- Thomson, E.; Chapman, R.; Cooper, G.; Cooper, Z. Exploring bias in artificial intelligence: Stereotypes and gendered narratives in digital imagery of early childhood educators. Gend. Educ. 2025, 1–20.

- Kaufenberg-Lashua, M.M.; West, J.K.; Kelly, J.J.; Stepanova, V.A. What does AI think a chemist looks like? An analysis of diversity in generative AI. J. Chem. Educ. 2024, 101, 4704–4713.

- Arriola-Mendoza, J.; Aleman de la Garza, L.Y.; Valerio-Urena, G. Biases in Generative AI in STEM Fields: An Analysis of ChatGPT-4, Bing, and Image Creator. In Proceedings of the 6th International Conference on Modern Educational Technology, Tokyo, Japan, 13–15 December 2024; pp. 71–77.

- d’Aloisio, G.; Fadahunsi, T.; Di Marco, A.; Sarro, F. How Do Generative Models Draw a Software Engineer? An Empirical Study on Implicit Bias of Open-Source Image Generation Models. Available online: https://ssrn.com/abstract=5247729 (accessed on 12 March 2025).

- Fadahunsi, T.; d’Aloisio, G.; Di Marco, A.; Sarro, F. How Do Generative Models Draw a Software Engineer? A Case Study on Stable Diffusion Bias. arXiv 2025, arXiv:2501.09014.

- Spennemann, D.H.R. Two Hundred Women Working in Cultural and Creative Industries: A Structured Data Set of Generative AI-Created Images; Charles Sturt University: Albury, Australia, 2025.

- Chatterji, A.; Cunningham, T.; Deming, D.J.; Hitzig, Z.; Ong, C.; Shan, C.Y.; Wadman, K. How People Use ChatGPT; National Bureau of Economic Research: Cambridge, MA, USA, 2025.

- VanVoorhis, C.W.; Morgan, B.L. Understanding power and rules of thumb for determining sample sizes. Tutor. Quant. Methods Psychol. 2007, 3, 43–50.

- Spennemann, D.H.R. Children of AI: A protocol for managing the born-digital ephemera spawned by Generative AI Language Models. Publications 2023, 11, 45.

- Spennemann, D.H.R. What do curators look like? Stereotyping of a profession by generative AI—Supplementary Data. 2025.

- Spennemann, D.H.R. What do curators look like? Stereotyping of a profession by generative AI—Additional Images. 2025.

- ITU. Parameter Values for the HDTV Standards for Production and International Programme Exchange. Recommendation ITU-R BT.709-6; Radiocommunication Sector, International Telecommunication Union: Geneva, Switzerland, 2015.

- Hasler, D.; Suesstrunk, S.E. Measuring colorfulness in natural images. In Proceedings of the Human Vision and Electronic Imaging VIII, Santa Clara, CA, USA, 20–24 January 2003; pp. 87–95.

- Clarke, K.R.; Gorley, R.N. PRIMER v7: User Manual/Tutorial; PRIMER-E Ltd.: Plymouth, UK, 2015.

- Anderson, M.J.; Gorley, R.N.; Clarke, K.R. PERMANOVA+ for PRIMER: Guide to Software and Statistical Methods; PRIMER-E: Plymouth, UK, 2008.

- Faith, D.; Norris, R. Correlation of environmental variables with patterns of distribution and abundance of common and rare freshwater macroinvertebrates. Biol. Conserv. 1989, 50, 77–98.

- Rosser, E.; Spennemann, D.H.R. Indigenous erasure in the age of generative Artificial Intelligence. AI Soc. under review.

- Spennemann, D.H.R.; Oddone, K. Creating ‘Margaret’: How does ChatGPT construct the image of a typical librarian? Aust. J. Teach. Educ. under review.

- Mitchell, M.; McKinnon, M. ‘Human’ or ‘objective’ faces of science? Gender stereotypes and the representation of scientists in the media. Public Underst. Sci. 2019, 28, 177–190.

- Newton, D.P.; Newton, L.D. Young children’s perceptions of science and the scientist. Int. J. Sci. Educ. 1992, 14, 331–348.

- Razina, T.; Volodarskaya, E. Opportunities and specificity of applying the draw-a-scientist test technique on Russian schoolchildren. In Early Childhood Care and Education, European Proceedings of Social and Behavioural Sciences; Sheridan, S., Veraksa, N., Eds.; Future Academy: Singapore, 2018; Volume 43, pp. 109–117.

- Yang, D.; Zhou, M. Exploring Lower-Secondary School Students’ Images and Opinions of the Biologist. J. Balt. Sci. Educ. 2017, 16, 855–872.

- Thomson, M.M.; Zakaria, Z.; Radut-Taciu, R. Perceptions of scientists and stereotypes through the eyes of young school children. Educ. Res. Int. 2019, 2019, 6324704.

- Boylan, P.J. The museum profession. In A Companion to Museum Studies; Macdonald, S., Ed.; Wiley: New York, NY, USA, 2006; pp. 415–430.

- Statistics Canada. Table 98-10-0449-01 Occupation Unit Group by Labour Force Status, Highest Level of Education, Age and Gender: Canada, Provinces and Territories, Census Metropolitan Areas and Census Agglomerations with Parts. 2022, 10 March 2025. Available online: https://www150.statcan.gc.ca/t1/tbl1/en/tv.action?pid=9810044901 (accessed on 30 September 2025).

- Arts Council England. Equality, Diversity and the Creative Case. A Data Report 2018–19; Arts Council England: London, UK, 2015.

- Data USA. Archivists, Curators, & Museum Technicians. 2025. Available online: https://datausa.io/profile/soc/archivists-curators-museum-technicians (accessed on 12 March 2025).

- Australian Bureau of Statistics. ABS Labour Force Survey. Occupation Data (November 2024); Australian Bureau of Statistics: Canberra, Australia, 2024.

- Australian Bureau of Statistics. 6273.0 Employment in Culture, Australia, 2011; Australian Bureau of Statistics: Canberra, Australia, 2012.

- Australian Bureau of Statistics. 62730DO001 Employment in Culture, Australia, 2006; Australian Bureau of Statistics: Canberra, Australia, 2008.

- Australian Bureau of Statistics. 6273.0 Employment in Culture, Australia, 2001; Australian Bureau of Statistics: Canberra, Australia, 2003.

- Western Australia. 6273.0 Employment in Culture, 2016; Western Australia: San Francisco, CA, USA, 2021.

- Whiteford, A.; van Seventer, D.; Patterson, B. A Profile of the Museums Sector in New Zealand; ServiceIQ: Wellington, New Zealand, 2014.

- Hagin, L. Museum Statistics: How Many People Work in the Museum. 2024. Available online: https://en.audio-cult.com/museumsstatistik-struktur-und-arbeitsbedingungen-im-museum (accessed on 22 September 2025).

- Benhamou, F.; Moureau, N. Les « Nouveaux Conservateurs » Enquête Auprès des Conservateurs Formés Par l’Institut National du Patrimoine (Promotions 1991 à 2003); Ministère de la Culture et de la Communication: Paris, France, 2006.

- Gordan, R. Sveriges Curatorer: “Vi Har Hamnat Mellan Stolarna”. 13 June 2022. Available online: https://svenskcuratorforening.se/en/magasin-k-swedens-curators-we-have-been-left-in-the-lurch/#:~:text=Only%20in%202014%20did%20Statistics,same%20since%20the%20measurements%20began (accessed on 20 September 2025).

- Schulz, G.; Ries, C.; Zimmermann, O. Frauen in Kultur und Medien. Ein Überblick über Aktuelle Tendenzen, Entwicklungen und Lösungsvorschläge; Deutscher Kulturrat e.V.: Berlin, Germany, 2016.

- Australian Bureau of Statistics. 6273.0 Employment in Selected Culture/Leisure Occupations, Australia; Australian Bureau of Statistics: Canberra, Australia, 1998.

- Garcia, N.; Hirota, Y.; Wu, Y.; Nakashima, Y. Uncurated image-text datasets: Shedding light on demographic bias. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6957–6966.

- OpenAI. GPT-4 System Card; OpenAI: San Francisco, CA, USA, 2024.

- OpenAI. GPT-4o System Card; OpenAI: San Francisco, CA, USA, 8 August 2024.

- Bullen, J.B. Alfred Waterhouse’s Romanesque ‘Temple of Nature’: The Natural History Museum, London. Archit. Hist. 2006, 49, 257–285.

- Connelly, J.L. The Grand Gallery of the Louvre and the Museum Project: Architectural Problems. J. Soc. Archit. Hist. 1972, 31, 120–132.

- Oddone, K.; Spennemann, D.H.R. Libraries in 2075. How does a generative artificial intelligence model envision a library in 50 years’ time? Int. Inf. Libr. Rev. under review.

- Spennemann, D.H.R. Wither Heritage? How Does a Generative Artificial Intelligence Model Envision Humanity’s Cultural Heritage in 30 Years’ Time?—Supplementary Data; Charles Sturt University: Albury, Australia, 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).