Driving across Markets: An Analysis of a Human–Machine Interface in Different International Contexts

Abstract

1. Introduction

1.1. Evaluation and Development of Human–Machine Interfaces

1.2. Benchmarking in Different Markets

1.3. Research Question

2. Materials and Methods

2.1. Participants

2.2. Human–Machine Interface

2.3. Material

2.3.1. Measurement of Satisfaction

2.3.2. Measurement of Hedonic Qualities

2.3.3. Measurement of Overall Evaluation

2.3.4. Measurement of Interaction Performance

2.4. Study Design and Procedure

2.5. Statistical Procedure

3. Results

3.1. Results: Study 1

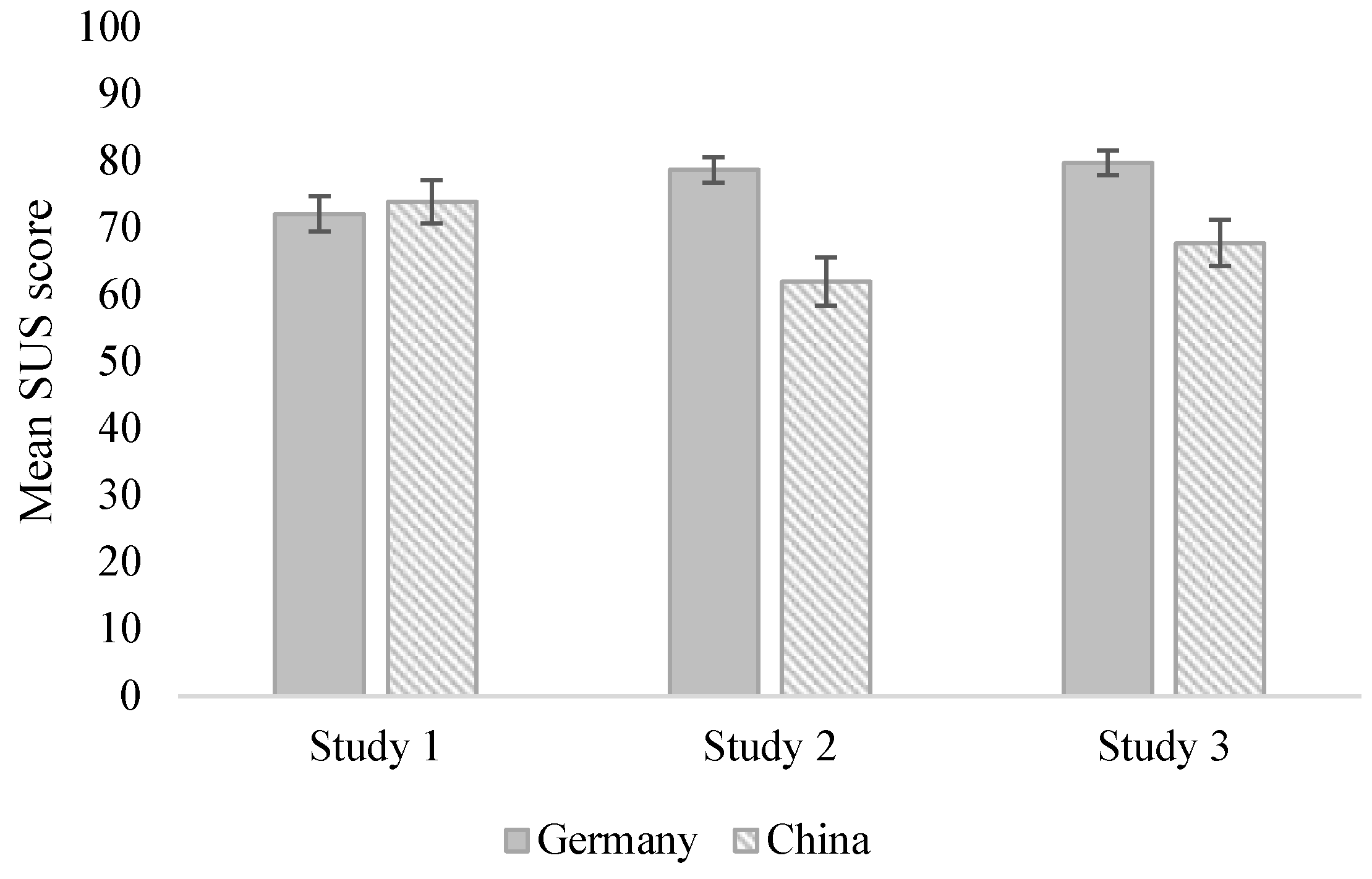

3.1.1. SUS

3.1.2. NPS

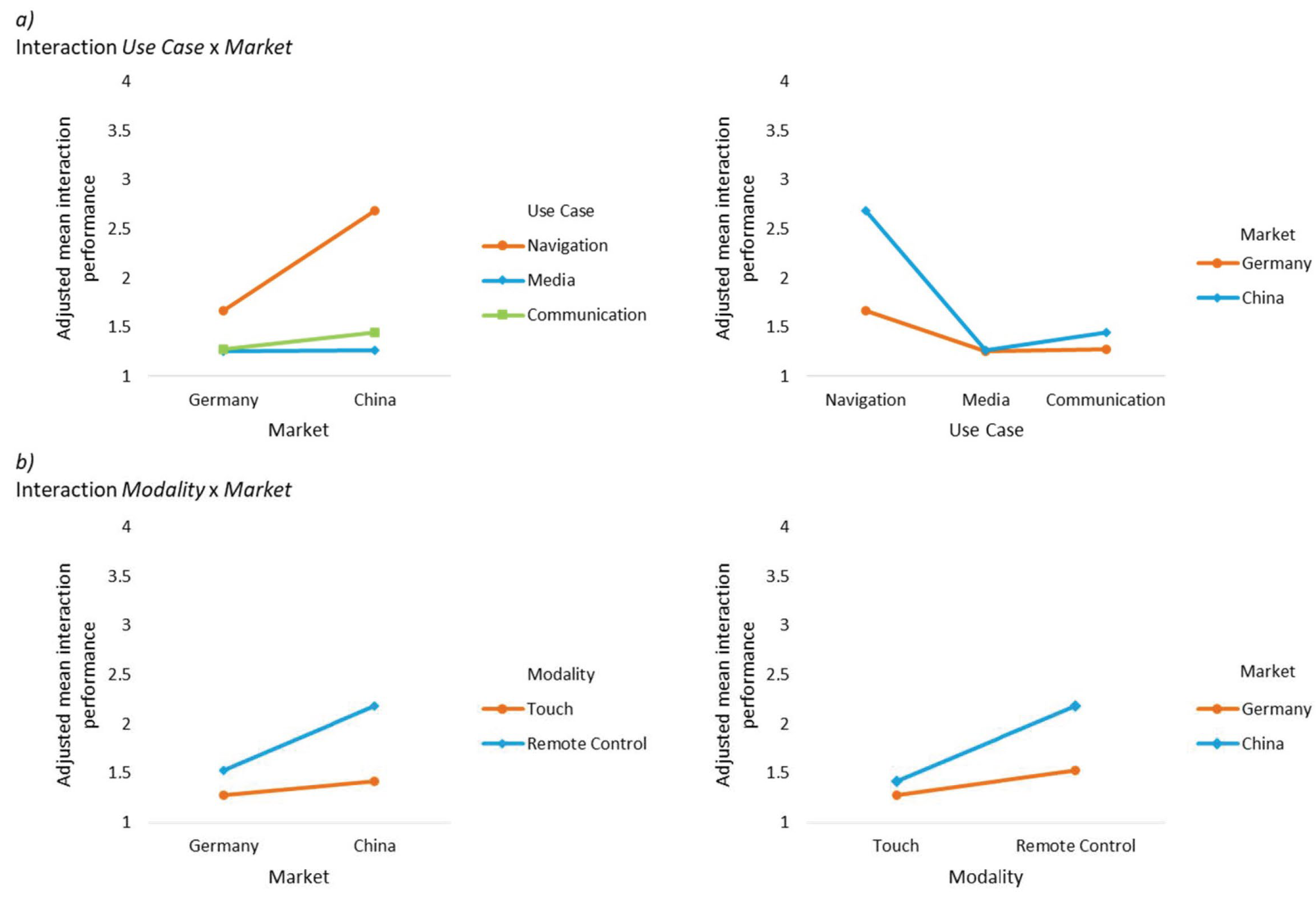

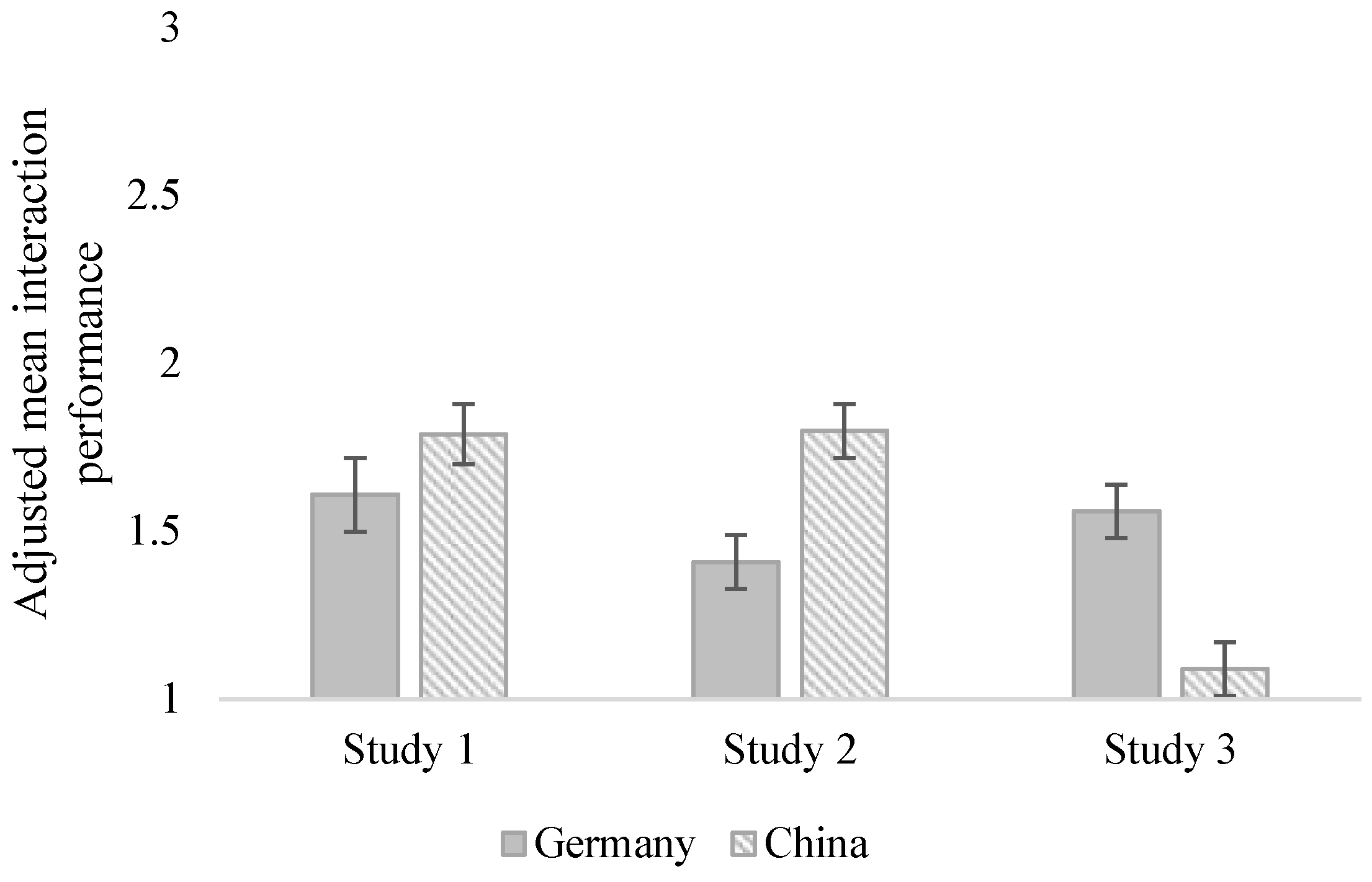

3.1.3. Experimenter Ratings

3.2. Results: Study 2

3.2.1. SUS

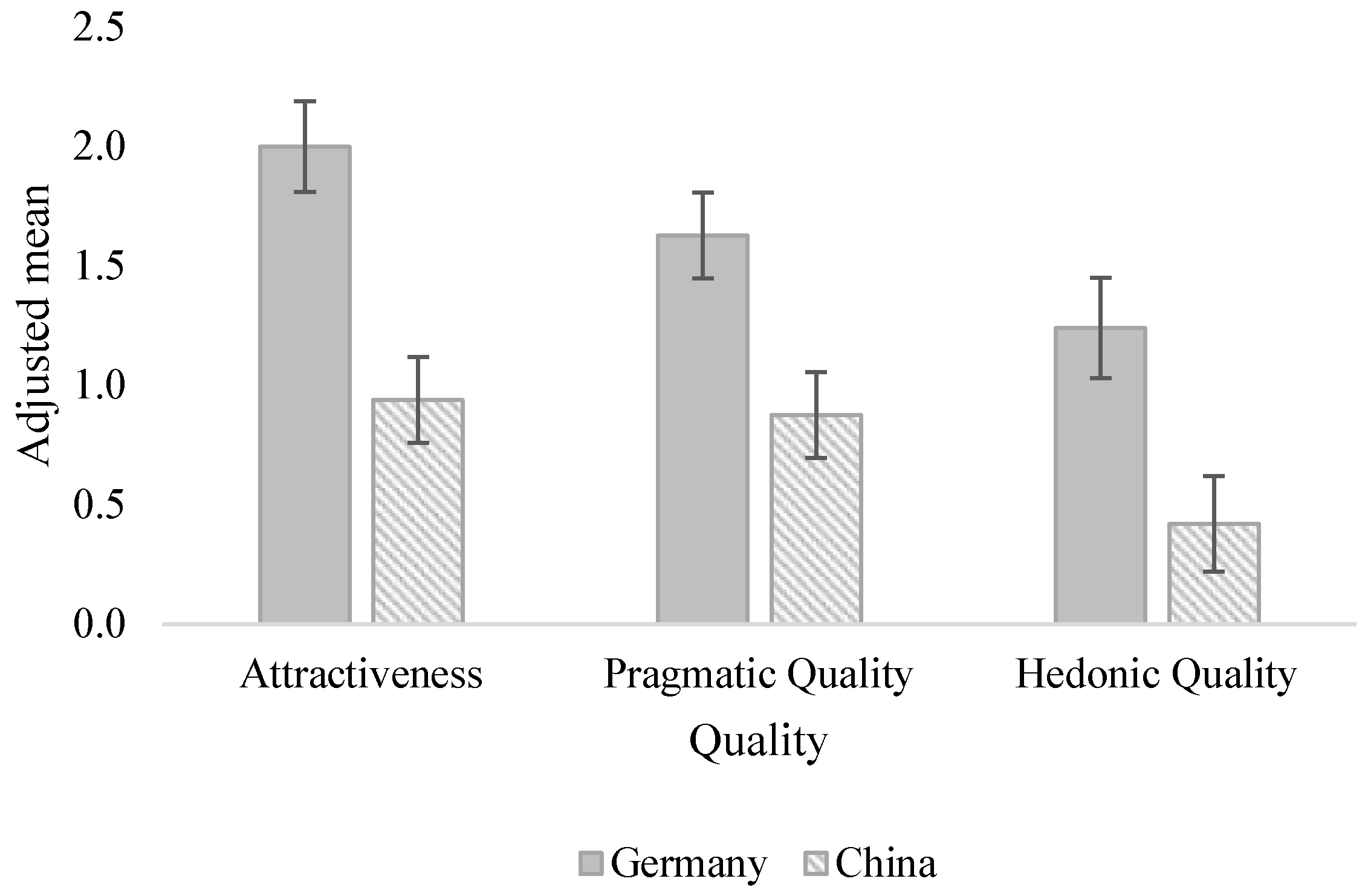

3.2.2. UEQ

3.2.3. NPS

3.2.4. Experimenter Ratings

3.3. Results: Study 3

3.3.1. SUS

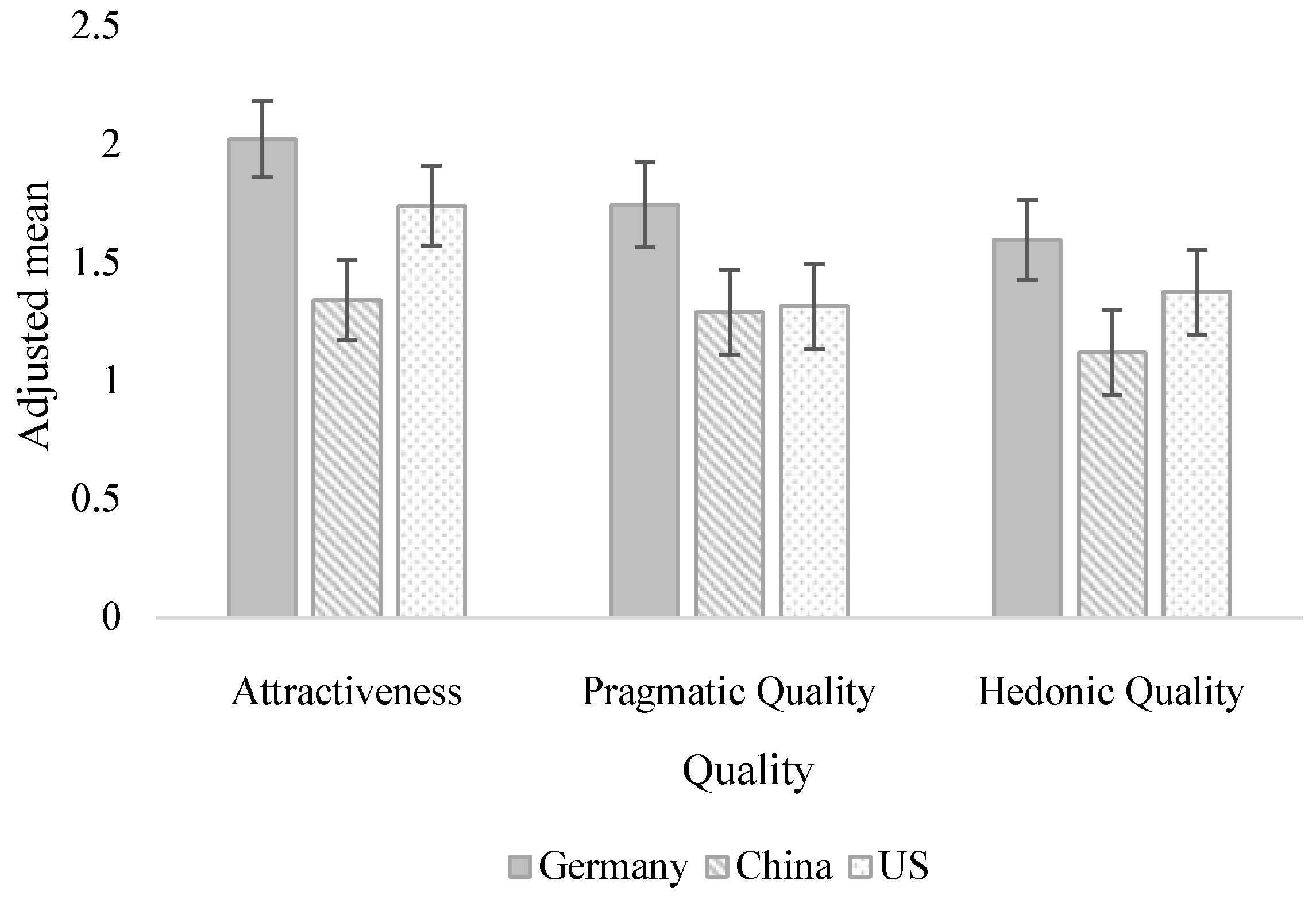

3.3.2. UEQ

3.3.3. NPS

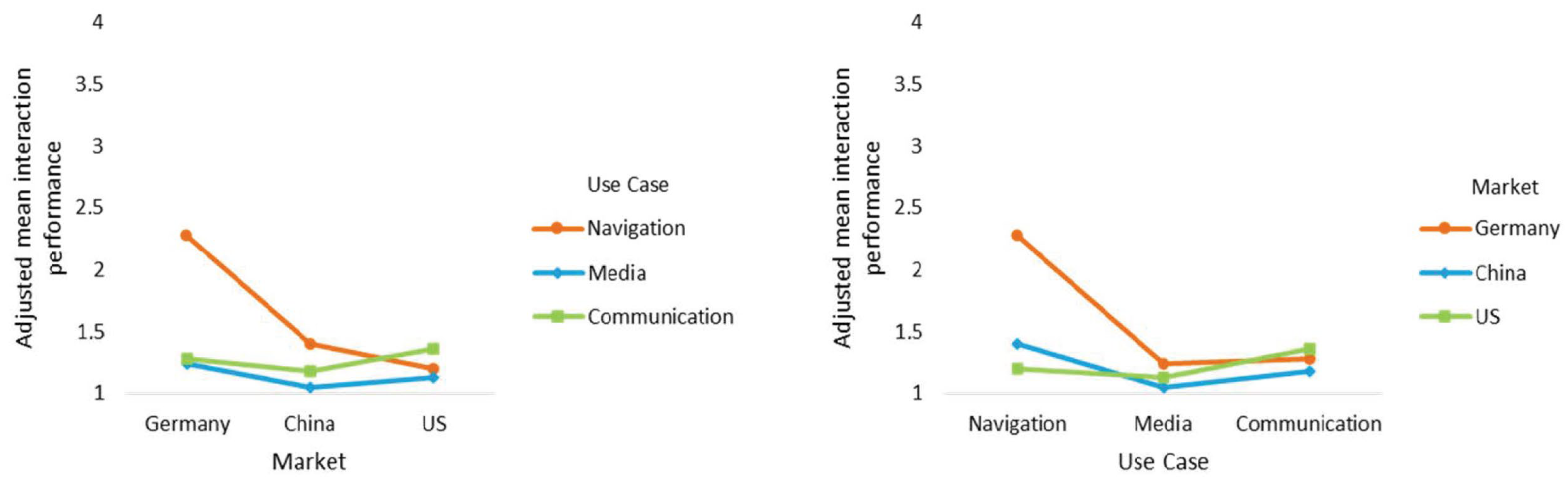

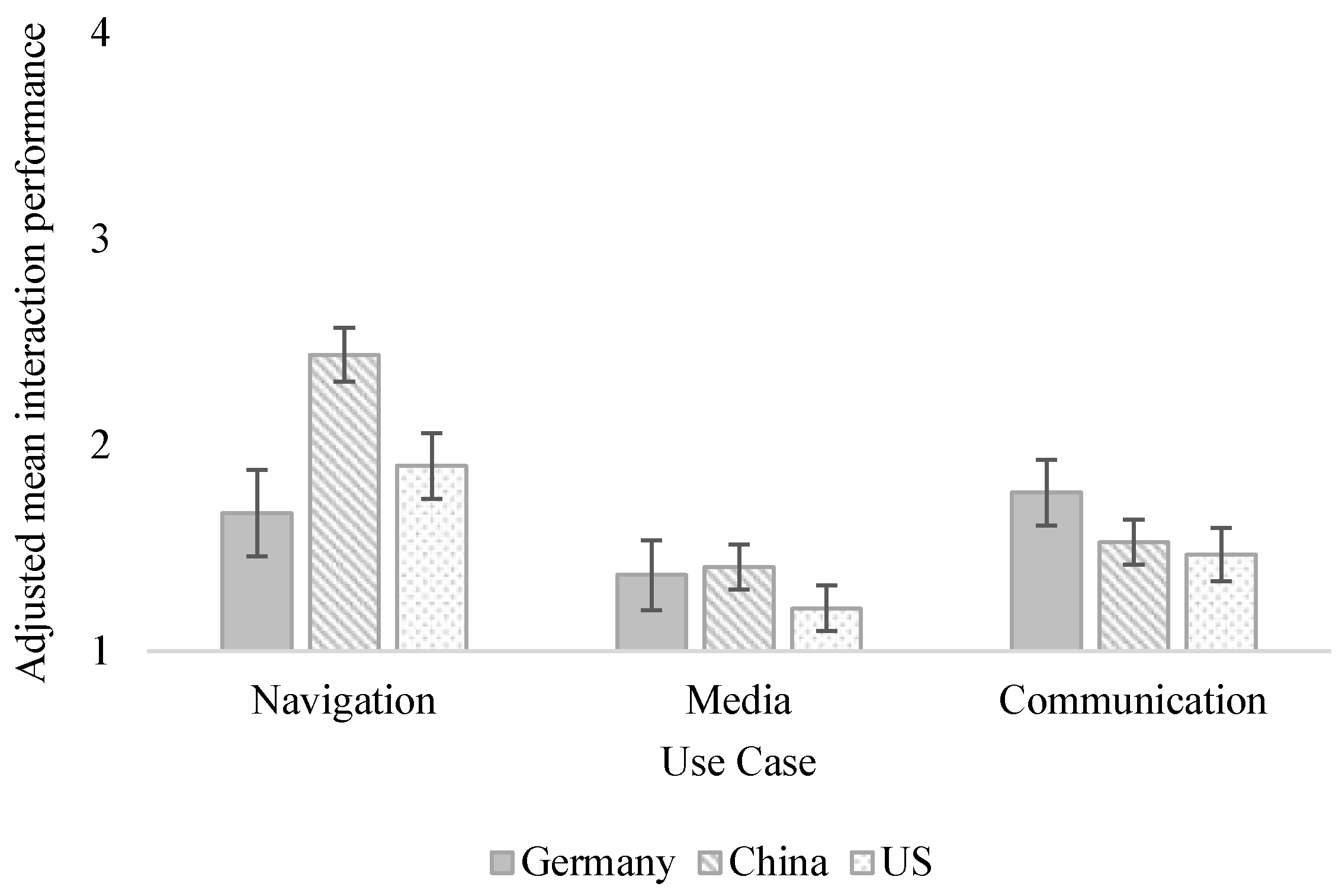

3.3.4. Experimenter Ratings

3.4. Overview of Satisfaction and Interaction Performance for German and Chinese Users across the Three Studies

4. General Discussion

4.1. Differences in Satisfaction

4.2. Differences in Hedonic Qualities

4.3. Differences in Overall Ratings

4.4. Differences in Interaction Performance

4.5. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

(a)

| Market | Use Case | Adjusted M | SE |
|---|---|---|---|
| Germany | Navigation | 1.67 | 0.14 |
| Germany | Media | 1.26 | 0.10 |
| Germany | Communication | 1.28 | 0.12 |
| China | Navigation | 2.68 | 0.14 |
| China | Media | 1.27 | 0.10 |
| China | Communication | 1.45 | 0.12 |

(b)

| Market | Modality | Adjusted M | SE |
|---|---|---|---|
| Germany | Touch | 1.28 | 0.08 |
| Germany | Remote | 1.53 | 0.13 |
| China | Touch | 1.42 | 0.08 |
| China | Remote | 2.18 | 0.13 |

Appendix B

| Market | Use Case | Adjusted M | SE |
|---|---|---|---|
| Germany | Navigation | 2.27 | 0.16 |
| Germany | Media | 1.23 | 0.07 |
| Germany | Communication | 1.28 | 0.11 |
| China | Navigation | 1.40 | 0.16 |
| China | Media | 1.05 | 0.08 |
| China | Communication | 1.18 | 0.12 |
| US | Navigation | 1.20 | 0.16 |
| US | Media | 1.13 | 0.07 |
| US | Communication | 1.36 | 0.11 |

Appendix C

| Effect | df1 | df2 | F | p | ηp² |
|---|---|---|---|---|---|
| Market | 1 | 79 | 0.04 | 0.842 | 0.001 |
| Age | 1 | 79 | 5.82 | 0.018 | 0.07 |
| UC | 1.38 | 109.30 | 1.49 | 0.230 | 0.02 |
| UC × Market | 2 | 158 | 0.02 | 0.982 | 0.00 |
| UC × Age | 2 | 158 | 0.54 | 0.585 | 0.01 |

References

- Watson, G.H. Strategic Benchmarking: How to Rate Your Company’s Performance Against the World’s Best; John Wiley & Sons Inc.: Hoboken, NJ, USA, 1993.

- Sweeney, M.; Maguire, M.; Shackel, B. Evaluating user-computer interaction: A framework. Int. J. Man-Mach. Stud. 1993, 38, 689–711.

- Kumar, A.; Antony, J.; Dhakar, T.S. Integrating quality function deployment and benchmarking to achieve greater profitability. BIJ 2006, 13, 290–310.

- Erdil, A.; Erbıyık, H. The Importance of Benchmarking for the Management of the Firm: Evaluating the Relation between Total Quality Management and Benchmarking. Procedia Comput. Sci. 2019, 158, 705–714.

- Anand, G.; Kodali, R. Benchmarking the benchmarking models. Benchmarking Int. J. 2008, 15, 257–291.

- Rössger, P. Intercultural HMIs in Automotive: Do We Need Them?—An Analysis. In HCI International 2021—Late Breaking Papers: Design and User Experience; Stephanidis, C., Soares, M.M., Rosenzweig, E., Marcus, A., Yamamoto, S., Mori, H., Rau, P.-L.P., Meiselwitz, G., Fang, X., Moallem, A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 584–596. ISBN 978-3-030-90237-7.

- Kern, D.; Schmidt, A. Design Space for Driver-based Automotive User Interfaces. In Proceedings of the AutomotiveUI’09: 1st International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Essen, Germany, 21–22 September 2009.

- Young, K.; Regan, M. Driver Distraction: A Review of the Literature. In Distracted Driving; Australasian College of Road Safety: Sydney, NSW, Australia, 2007.

- Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.; Keinath, A. User Education in Automated Driving: Owner’s Manual and Interactive Tutorial Support Mental Model Formation and Human-Automation Interaction. Information 2019, 10, 143.

- ISO 9241-210:2010; Prozess zur Gestaltung Gebrauchstauglicher Interaktiver Systeme. Deutsches Institut für Normung: Berlin, Germany, 2011.

- Forster, Y.; Kraus, J.M.; Feinauer, S.; Baumann, M. Calibration of Trust Expectancies in Conditionally Automated Driving by Brand, Reliability Information and Introductionary Videos. In Proceedings of the AutomotiveUI’18: 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada, 23–25 September 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 118–128, ISBN 9781450359467.

- Norman, D.A. Human-Centered Design Considered Harmful. Interactions 2005, 12, 14–19.

- Hassenzahl, M. The Effect of Perceived Hedonic Quality on Product Appealingness. Int. J. Hum. Comput. Interact. 2001, 13, 481–499.

- Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.F.; Keinath, A. Self-report measures for the assessment of human–machine interfaces in automated driving. Cogn. Technol. Work 2020, 22, 703–720.

- Pettersson, I.; Lachner, F.; Frison, A.-K.; Riener, A.; Butz, A. A Bermuda Triangle?—A Review of Method Application and Triangulation in User Experience Evaluation. In Proceedings of the CHI’18: CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; Mandryk, R., Hancock, M., Perry, M., Cox, A., Eds.; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–16, ISBN 9781450356206.

- Lindgren, A.; Chen, F.; Jordan, P.W.; Zhang, H. Requirements for the Design of Advanced Driver Assistance Systems—The Difference between Swedish and Chinese Drivers. Int. J. Des. 2008, 2, 41–54.

- Heimgaertner, R. Towards Cultural Adaptability in Driver Information and -Assistance Systems. In Usability and Internationalization Part II; Springer: Berlin/Heidelberg, Germany, 2007; pp. 372–381.

- Lesch, M.F.; Rau, P.-L.P.; Zhao, Z.; Liu, C. A cross-cultural comparison of perceived hazard in response to warning components and configurations: US vs. China. Appl. Ergon. 2009, 40, 953–961.

- Khan, T.; Williams, M. A Study of Cultural Influence in Automotive HMI: Measuring Correlation between Culture and HMI Usability. SAE Int. J. Passeng. Cars-Electron. Electr. Syst. 2014, 7, 430–439.

- Wang, M.; Lyckvi, S.L.; Chen, F. Same, Same but Different: How Design Requirements for an Auditory Advisory Traffic Information System Differ Between Sweden and China. In Proceedings of the AutomotiveUI’16: 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; Green, P., Boll, S., Gabbard, J., Osswald, S., Burnett, G., Borojeni, S.S., Löcken, A., Pradhan, A., Eds.; Association for Computing Machinery: New York, NY, USA, 2016; pp. 75–82, ISBN 9781450345330.

- Khan, T.; Williams, M. Cross-Cultural Differences in Automotive HMI Design: A Comparative Study Between UK and Indian Users’ Design Preferences. J. Usability Stud. 2016, 11, 45–65.

- Young, K.L.; Rudin-Brown, C.M.; Lenné, M.G.; Williamson, A.R. The implications of cross-regional differences for the design of In-vehicle Information Systems: A comparison of Australian and Chinese drivers. Appl. Ergon. 2012, 43, 564–573.

- Braun, M.; Li, J.; Weber, F.; Pfleging, B.; Butz, A.; Alt, F. What If Your Car Would Care? Exploring Use Cases For Affective Automotive User Interfaces. In Proceedings of the MobileHCI’20: 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services, Oldenburg, Germany, 5–8 October 2020; Association for Computing Machinery: New York, NY, USA, 2020. ISBN 9781450375160.

- Zhu, D.; Wang, D.; Huang, R.; Jing, Y.; Qiao, L.; Liu, W. User Interface (UI) Design and User Experience Questionnaire (UEQ) Evaluation of a To-Do List Mobile Application to Support Day-To-Day Life of Older Adults. Healthcare 2022, 10, 2068.

- Niklas, U.; von Behren, S.; Eisenmann, C.; Chlond, B.; Vortisch, P. Premium factor—Analyzing usage of premium cars compared to conventional cars. Res. Transp. Bus. Manag. 2019, 33, 100456.

- François, M.; Osiurak, F.; Fort, A.; Crave, P.; Navarro, J. Automotive HMI design and participatory user involvement: Review and perspectives. Ergonomics 2016, 60, 541–552.

- Brooke, J. SUS—A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Li, R.; Chen, Y.V.; Sha, C.; Lu, Z. Effects of interface layout on the usability of In-Vehicle Information Systems and driving safety. Displays 2017, 49, 124–132.

- Forster, Y.; Hergeth, S.; Naujoks, F.; Beggiato, M.; Krems, J.F.; Keinath, A. Learning to use automation: Behavioral changes in interaction with automated driving systems. Transp. Res. Part F Traff. Psychol. Behav. 2019, 62, 599–614.

- Gao, M.; Kortum, P.; Oswald, F.L. Multi-Language Toolkit for the System Usability Scale. Int. J. Hum. Comput. 2020, 36, 1883–1901.

- Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum. Comput. 2018, 34, 577–590.

- Loew, A.; Sogemeier, D.; Kulessa, S.; Forster, Y.; Naujoks, F.; Keinath, A. A Global Questionnaire? An International Comparison of the System Usability Scale in the Context of an Infotainment System. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, New York, NY, USA, 24–28 July 2022; pp. 224–232.

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In Proceedings of the HCI and Usability for Education and Work: 4th Symposium of the Workgroup Human-Computer Interaction and Usability Engineering of the Austrian Computer Society, Graz, Austria, 20–21 November 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.

- Schankin, A.; Budde, M.; Riedel, T.; Beigl, M. Psychometric Properties of the User Experience Questionnaire (UEQ). In Proceedings of the CHI’22: CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; Barbosa, S., Lampe, C., Appert, C., Shamma, D.A., Drucker, S., Williamson, J., Yatani, K., Eds.; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1–11, ISBN 9781450391573.

- Greve, G.; Benning-Rohnke, E. (Eds.) Kundenorientierte Unternehmensführung: Konzept und Anwendung des Net Promoter® Score in der Praxis, 1st ed.; Gabler: Wiesbaden, Germany, 2010; ISBN 9783834923196.

- Naujoks, F.; Wiedemann, K.; Schömig, N.; Jarosch, O.; Gold, C. Expert-based controllability assessment of control transitions from automated to manual driving. MethodsX 2018, 5, 579–592.

- Kenntner-Mabiala, R.; Kaussner, Y.; Hoffmann, S.; Volk, M. Driving performance of elderly drivers in comparison to middle-aged drivers during a representative, standardized driving test in real traffic. Z. Für Verkehrssicherheit 2016, 3, 73.

- Jarosch, O.; Bengler, K. Rating of Take-Over Performance in Conditionally Automated Driving Using an Expert-Rating System. In Advances in Human Aspects of Transportation; Stanton, N., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 283–294. ISBN 978-3-319-93884-4.

- Forster, Y. Preference versus Performance in Automated Driving: A Challenge for Method Development. Doctoral Thesis, University of Technology Chemnitz, Chemnitz, Germany, 2019.

- Field, A.P. Discovering Statistics Using SPSS, 3rd ed.; SAGE Publications: Los Angeles, CA, USA, 2009; ISBN 9781847879066.

- Armstrong, R.A. When to use the Bonferroni correction. Ophthalmic Physiol. Opt. 2014, 34, 502–508.

- Reichheld, F.F. The one number you need to grow. Harv. Bus. Rev. 2003, 81, 46–55.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; L. Erlbaum Associates: Hillsdale, NJ, USA, 1988.

- Heimgärtner, R. ISO 9241-210 and Culture?—The Impact of Culture on the Standard Usability Engineering Process. In Design, User Experience, and Usability. User Experience Design Practice; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Kobsa, A., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Rangan, C.P., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 39–48. ISBN 978-3-319-07637-9.

- Mehler, J.; Guo, Z.; Zhang, A.; Rau, P.-L.P. Quick Buttons on Map-Based Human Machine Interface in Vehicles is Better or Not: A Cross-Cultural Comparative Study Between Chinese and Germans. In Culture and Computing. Design Thinking and Cultural Computing; Rauterberg, M., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 432–449. ISBN 978-3-030-77430-1.

- Hofstede, G. Dimensionalizing Cultures: The Hofstede Model in Context. Psychol. Cult. 2011, 2, 8.

- Hofstede, G.J. Sixth Dimension Synthetic Culture Profiles. 2010. Available online: https://geerthofstede.com/wp-content/uploads/2016/08/sixth-dimension-synthetic-culture-profiles.doc (accessed on 11 May 2022).

- Trompenaars, F.; Hampden-Turner, C. Riding the Waves of Cultures: Understanding Cultural Diversity in Business; Nicholas Brealey: London, UK, 2011.

- Chang, S.; Kim, C.-Y.; Cho, Y.S. Sequential effects in preference decision: Prior preference assimilates current preference. PLoS ONE 2017, 12, e0182442.

- De Souza, T.R.C.B.; Bernardes, J.L. The Influences of Culture on User Experience. In Cross-Cultural Design; Rau, P.-L.P., Ed.; Springer International Publishing: Cham, Switzerland, 2016; pp. 43–52. ISBN 978-3-319-40092-1.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the User Experience Questionnaire (UEQ) in Different Evaluation Scenarios. In Design, User Experience, and Usability. Theories, Methods, and Tools for Designing the User Experience; Hutchison, D., Kanade, T., Kittler, J., Kleinberg, J.M., Kobsa, A., Mattern, F., Mitchell, J.C., Naor, M., Nierstrasz, O., Rangan, C.P., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 383–392. ISBN 978-3-319-07667-6.

- Sogemeier, D.; Forster, Y.; Naujoks, F.; Krems, J.F.; Keinath, A. How to Map Cultural Dimensions to Usability Criteria: Implications for the Design of an Automotive Human-Machine Interface. In Proceedings of the AutomotiveUI’22: 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seoul, Republic of Korea, 17–20 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 123–126, ISBN 9781450394284.

- Hofstede, G. Culture and Organizations. Int. Stud. Manag. Organ. 1980, 10, 15–41.

- Gong, Z.; Ma, J.; Zhang, Q.; Ding, Y.; Liu, L. Automotive HMI Guidelines For China Based On Culture Dimensions Interpretation. In HCI International 2020—Late Breaking Papers: Digital Human Modeling and Ergonomics, Mobility and Intelligent Environments Proceedings of the 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; LNCS; Springer: Cham, Switzerland, 2020; pp. 96–110.

- Hultsch, D.F.; MacDonald, S.W.; Dixon, R.A. Variability in Reaction Time Performance of Younger and Older Adults. J. Gerontol. B Psychol. Sci. Soc. Sci. 2002, 57B, 101–115.

- Sharit, J.; Czaja, S.J. Ageing, computer-based task performance, and stress: Issues and challenges. Ergonomics 1994, 37, 559–577.

- Dingus, T.A.; Hulse, M.C.; Mollenhauer, M.A.; Fleischman, R.N.; Mcgehee, D.V.; Manakkal, N. Effects of Age, System Experience, and Navigation Technique on Driving with an Advanced Traveler Information System. Hum. Factors 1997, 39, 177–199.

- Lerner, N.; Singer, J.; Huey, R. Driver Strategies for Engaging in Distracting Tasks Using In-Vehicle Technologies HS DOT 810 919; U.S. Department of Transportation: Washington, DC, USA, 2008.

- Totzke, I. Einfluss des Lernprozesses auf den Umgang mit Menügesteuerten Fahrerinformationssystemen. Doctoral Thesis, Julius-Maximilians-Universität Würzburg, Würzburg, Germany, 2013.

- Roberts, M.J.; Gray, H.; Lesnik, J. Preference versus performance: Investigating the dissociation between objective measures and subjective ratings of usability for schematic metro maps and intuitive theories of design. Int. J. Hum. Comput. 2017, 98, 109–128.

- Knapp, B. Mental Models of Chinese and German Users and Their Implications for MMI: Experiences from the Case Study Navigation System. In Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2007; pp. 882–890.

- Law, E.L.-C.; van Schaik, P.; Roto, V. Attitudes towards User Experience (UX) Measurement. Int. J. Hum. Comput. 2014, 72, 526–541.

- Forster, Y.; Naujoks, F.; Neukum, A. Increasing anthropomorphism and trust in automated driving functions by adding speech output. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 365–372, ISBN 978-1-5090-4804-5.

- Bortz, J.; Döring, N. Forschungsmethoden und Evaluation, 4th ed.; Springer: Berlin/Heidelberg, Germany, 2006; ISBN 978-3-540-33305-0.

- Boduroglu, A.; Shah, P.; Nisbett, R.E. Cultural Differences in Allocation of Attention in Visual Information Processing. J. Cross-Cult. Psychol. 2009, 40, 349–360.

- Hadders-Algra, M. Human face and gaze perception is highly context specific and involves bottom-up and top-down neural processing. Neurosci. Biobehav. Rev. 2022, 132, 304–323.

- Nisbett, R.E.; Miyamoto, Y. The influence of culture: Holistic versus analytic perception. Trends Cogn. Sci. 2005, 9, 467–473.

| Label | Market | Year |
|---|---|---|
| Study 1 | Germany | 2018 |
| Study 1 | China | 2019 |
| Study 1 | US | 2019 |
| Study 2 | Germany | 2020 |
| Study 2 | China | 2020 |
| Study 3 | Germany | 2021 |
| Study 3 | China | 2022 |
| Study 3 | US | 2022 |

| Study | Market | n | Sex: Female | Sex: Male | Age: Mean (M) | Age: Standard Deviation (SD) |
|---|---|---|---|---|---|---|
| 1 | Germany | 30 | 3 | 27 | 54.0 | 11.2 |
| 1 | China | 36 | 8 | 28 | 35.5 | 7.1 |
| 1 | US | 36 | 12 | 24 | 39.5 | 8.3 |
| 1 | Total | 102 | 23 | 79 | | |
| 2 | Germany | 36 | 10 | 26 | 41.5 | 11.8 |
| 2 | China | 37 | 9 | 28 | 35.2 | 7.9 |
| 2 | Total | 73 | 19 | 54 | | |
| 3 | Germany | 37 | 7 | 30 | 40.0 | 13.0 |
| 3 | China | 39 | 9 | 30 | 35.0 | 7.2 |
| 3 | US | 50 | 15 | 35 | 50.7 | 10.6 |
| 3 | Total | 126 | 31 | 95 | | |

| Category | Value | Description |
|---|---|---|
| No problem | 1 | |
| Hesitation | 2 | |
| Minor errors | 3 | |
| Massive errors | 4 | |
| Help of experimenter | 5 | |

| Use Case Number | Task | Mode |
|---|---|---|
| 1 | Start navigation | P |
| 2 | Cancel navigation | P |
| 3 | View call list | P |
| 4 | Change volume/mute | D |
| 5 | Restaurant list | D |
| 6 | Skip radio station | D |
| 7 | Adjust temperature | D |
| 8 | Call contact | D |
| 9 | Play song | P |
| 10 * | Send voice message | P |

| Data Type | Construct | Method | Subscales | Source | Studies | Analyses Applied |
|---|---|---|---|---|---|---|
| Self-report measures | Satisfaction | SUS | | Brooke [27] | 1, 2, 3 | t-test, ANOVA |
| Self-report measures | Pragmatic and hedonic qualities | UEQ | Attractiveness, Perspicuity, Efficiency, Dependability, Stimulation, Novelty | Laugwitz et al. [33] | 2, 3 | MANCOVA |
| Self-report measures | Overall evaluation | NPS | | Reichheld [42] | 1, 2, 3 | Mann–Whitney U test, Kruskal–Wallis test |
| Observational measures | Interaction performance | Experimenter rating | UC (Navigation, Media, Communication); Modality (Touch, Remote) | Naujoks et al. [36] | 1, 2, 3 | Mixed within-between ANCOVA |
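The overview above names the SUS and the NPS but does not state how raw answers are converted into scores. The following is a minimal sketch, assuming the standard scoring conventions usually attributed to Brooke [27] (ten items on a 1–5 scale, rescaled to 0–100) and Reichheld [42] (share of promoters minus share of detractors on a 0–10 scale). The function names `sus_score` and `net_promoter_score` and the example answers are hypothetical illustrations, not taken from the studies.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one 10-item SUS questionnaire (answers on a 1-5 scale) to 0-100.

    Assumes the standard item order: odd-numbered items are positively
    worded, even-numbered items are negatively worded.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:      # items 1, 3, 5, 7, 9
            total += response - 1
        else:                   # items 2, 4, 6, 8, 10
            total += 5 - response
    return total * 2.5


def net_promoter_score(ratings: Sequence[int]) -> float:
    """NPS = percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings or any(r not in range(0, 11) for r in ratings):
        raise ValueError("NPS needs at least one rating between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical answers, for illustration only.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 1, 4, 2]))          # 85.0
print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10]))     # 25.0
```

Because the NPS is a difference of proportions per group rather than a per-person score, it fits the rank-based tests listed in the table better than a t-test on individual values.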

| Market | M | SD |
|---|---|---|
| Germany | 72.08 | 14.26 |
| China | 73.89 | 19.50 |
| US | 78.89 | 21.22 |

| Effect | df1 | df2 | F | p | ηp² |
|---|---|---|---|---|---|
| Market | 2 | 94 | 2.45 | 0.092 | 0.05 |
| Age | 1 | 94 | 12.30 | <0.001 | 0.12 |
| UC | 2 | 188 | 1.27 | 0.283 | 0.01 |
| Modality | 1 | 94 | 0.92 | 0.340 | 0.01 |
| UC × Market | 4 | 188 | 2.53 | 0.042 | 0.05 |
| UC × Age | 2 | 188 | 0.71 | 0.495 | 0.007 |
| Modality × Market | 2 | 94 | 2.07 | 0.132 | 0.04 |
| Modality × Age | 1 | 94 | 0.02 | 0.904 | 0.00 |
| UC × Modality | 2 | 188 | 1.42 | 0.245 | 0.02 |
| UC × Modality × Market | 4 | 188 | 1.88 | 0.115 | 0.04 |
| UC × Modality × Age | 2 | 188 | 0.17 | 0.841 | 0.002 |
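The effect sizes in an ANCOVA table like the one above can be cross-checked from the F value and its degrees of freedom, since partial eta squared equals F·df1 / (F·df1 + df2). The sketch below applies that identity to three rows copied from the table. The helper name `partial_eta_squared` is a hypothetical illustration, and the check assumes the reported F values are the usual ratio of effect to error mean squares.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Uses eta_p^2 = SS_effect / (SS_effect + SS_error), rewritten in terms of
    F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# (effect, df1, df2, F) as listed in the table above.
rows = [
    ("Age", 1, 94, 12.30),
    ("UC × Market", 4, 188, 2.53),
    ("Modality × Market", 2, 94, 2.07),
]

for effect, df1, df2, f_value in rows:
    print(f"{effect}: {partial_eta_squared(f_value, df1, df2):.2f}")
# Age: 0.12
# UC × Market: 0.05
# Modality × Market: 0.04
```

The same identity holds for sphericity-corrected rows, because a Greenhouse–Geisser correction scales df1 and df2 by the same factor and leaves the ratio unchanged.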

| UEQ Scales | Market | Adjusted M | SE | df1 | df2 | F | p | ηp² |
|---|---|---|---|---|---|---|---|---|
| Attractiveness | Germany | 2.00 | 0.19 | 1 | 70 | 15.67 | <0.001 | 0.18 |
| Attractiveness | China | 0.94 | 0.18 | | | | | |
| Perspicuity | Germany | 1.69 | 0.18 | 1 | 70 | 12.34 | <0.001 | 0.15 |
| Perspicuity | China | 0.76 | 0.18 | | | | | |
| Efficiency | Germany | 1.62 | 0.19 | 1 | 70 | 6.68 | 0.012 | 0.09 |
| Efficiency | China | 0.90 | 0.19 | | | | | |
| Dependability | Germany | 1.97 | 0.18 | 1 | 70 | 15.05 | <0.001 | 0.18 |
| Dependability | China | 0.97 | 0.18 | | | | | |
| Stimulation | Germany | 1.67 | 0.19 | 1 | 70 | 17.68 | <0.001 | 0.20 |
| Stimulation | China | 0.54 | 0.18 | | | | | |
| Novelty | Germany | 0.81 | 0.23 | 1 | 70 | 2.52 | 0.117 | 0.04 |
| Novelty | China | 0.30 | 0.22 | | | | | |

| Effect | df1 | df2 | F | p | ηp² |
|---|---|---|---|---|---|
| Market | 1 | 67 | 12.79 | <0.001 | 0.16 |
| Age | 1 | 67 | 2.30 | 0.134 | 0.03 |
| UC | 2 | 134 | 4.60 | 0.012 | 0.06 |
| Modality | 1 | 67 | 1.47 | 0.230 | 0.02 |
| UC × Market | 2 | 134 | 9.87 | <0.001 | 0.13 |
| UC × Age | 2 | 134 | 2.62 | 0.077 | 0.04 |
| Modality × Market | 1 | 67 | 6.33 | 0.014 | 0.09 |
| Modality × Age | 1 | 67 | 0.01 | 0.916 | <0.01 |
| UC × Modality | 1.62 | 108.57 | 2.47 | 0.100 | 0.04 |
| UC × Modality × Market | 2 | 134 | 4.58 | 0.012 | 0.06 |
| UC × Modality × Age | 2 | 134 | 0.64 | 0.529 | 0.01 |

| Market | M | SD |
|---|---|---|
| Germany | 79.73 | 12.77 |
| China | 67.76 | 21.48 |
| US | 71.65 | 18.94 |

| UEQ Subscale | Market | Adjusted M | SE |
|---|---|---|---|
| Attractiveness | Germany | 2.02 | 0.16 |
| Attractiveness | China | 1.34 | 0.17 |
| Attractiveness | US | 1.74 | 0.17 |
| Perspicuity | Germany | 1.71 | 0.18 |
| Perspicuity | China | 1.34 | 0.19 |
| Perspicuity | US | 1.09 | 0.19 |
| Efficiency | Germany | 1.70 | 0.18 |
| Efficiency | China | 1.22 | 0.18 |
| Efficiency | US | 1.45 | 0.18 |
| Dependability | Germany | 1.82 | 0.17 |
| Dependability | China | 1.31 | 0.17 |
| Dependability | US | 1.40 | 0.17 |
| Stimulation | Germany | 1.67 | 0.17 |
| Stimulation | China | 1.20 | 0.17 |
| Stimulation | US | 1.56 | 0.17 |
| Novelty | Germany | 1.52 | 0.18 |
| Novelty | China | 1.04 | 0.18 |
| Novelty | US | 1.19 | 0.18 |

| Effect | df1 | df2 | F | p | ηp² |
|---|---|---|---|---|---|
| Market | 2 | 115 | 11.10 | <0.001 | 0.16 |
| Age | 1 | 115 | 22.47 | <0.001 | 0.16 |
| UC | 1.65 | 189.25 | 5.48 | 0.005 | 0.05 |
| UC × Market | 4 | 230 | 7.08 | <0.001 | 0.11 |
| UC × Age | 2 | 230 | 10.76 | <0.001 | 0.09 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sogemeier, D.; Forster, Y.; Naujoks, F.; Krems, J.F.; Keinath, A. Driving across Markets: An Analysis of a Human–Machine Interface in Different International Contexts. Information 2024, 15, 349. https://doi.org/10.3390/info15060349
