Abstract

Learnability in Automated Driving (LiAD) is a neglected research topic, especially considering the unpredictable and intricate ways in which humans learn to interact with and use automated driving systems (ADS) over time. Moreover, few publications are dedicated to LiAD (specifically to extended learnability methods) to guide the scientific paradigm. This generates scientific discord and leaves many facets of the long-term learning effects associated with automated driving in need of significant research attention. We believe this constrains knowledge discovery on quality interaction design phenomena. It is therefore imperative to abstract knowledge on how long-term effects and learning effects may affect (negatively and positively) users’ learning and mental models, as well as induce changes in behavioural configurations and performance. In view of that, it may be imperative to examine operational concepts that may help researchers envision future scenarios with automation by assessing users’ learning ability, how they learn and what they learn over time, and to construct a theory of effects (from micro, meso and macro perspectives) that may help profile ergonomic quality design aspects that stand the test of time. To this end, we reviewed the literature on learnability and mined it for LiAD knowledge discovery from the experience perspective of long-term learning effects. The paper offers the reader the resulting discussion points, formulated under the Learnability Engineering Life Cycle. Firstly, the contextualisation of LiAD, with emphasis on extended LiAD. Secondly, the conceptualisation and operationalisation of the operational mechanics of LiAD as a concept in ergonomic quality engineering (with an introduction of Concepts for Applying Learnability Engineering (CALE) research based on LiAD knowledge discovery).
Thirdly, the systemisation of implementable long-term research strategies towards comprehending behaviour modification associated with extended LiAD. As the vehicle industry moves rapidly towards automation and artificially intelligent (AI) systems, this knowledge is useful for informing quality interaction strategies and Quality Automated Driving (QAD).

1. Introduction

Vehicle automation is advancing to higher levels, with advanced systems set to reach the market in the near future. For example, the level 3 automated driving system (ADS) (L3, according to SAE J3016_202104 []) will soon be introduced on public roads. Thus, to comprehend human-automation interaction (HAI) and how humans learn to interact with or use automation over time, several aspects need to be considered. Arguably, automation systems should be designed in a way that encourages efficiency, satisfaction, and effectiveness, as well as safety-inducing trust, behaviour-based safety, agility, and comfort over time. Several user experience (UX) aspects are important to consider [], such as the power law of learning, trust, reliance, and acceptance. In the automotive field, trust is described in terms of automation, and reliance in terms of the ADS []. According to [], trust is a key element in continuous use and acceptance. Therefore, how well trust matches the true capabilities of automation affects inappropriate reliance, which is associated with misuse and disuse [,]. This raises questions about different user characteristics, demographic factors, perceived aims of learning and the context of use []. Consequently, assessing the changing aspects of automated driving may advance knowledge discovery on users’ behaviour adaptability and/or changeability (BAC) towards automation, for instance, by assessing whether adaptive cruise control (ACC) induces behavioural adaptation in drivers (see []). When considering change in behaviour, Ref. [] hypothesised that “change will be for the better and that performance will be more effective and decisions will be improved”. Moreover, it can be argued that states of ‘negative hedonicity’ (“which is frustration due to user-hostile aspects of the interface and surprise when the automation does things the person does not understand”) can be induced by intervention [].
Table 1 lists other aspects to consider.

Table 1.

Quality aspects.

As users are continuously exposed to automation, they learn new ways to interact with and use it. Thus, their behaviours, mental models/user models, expectations and preferences evolve with time. This is grounded in a process of reengineering or redesigning their own preferred interaction configurations with automation, keeping their personalised goals in mind. This has the potential to result in ‘resilient misbehaviours’ or escalated misuse based on ‘destructive learning effects’ and misdirected/misapplied trust, as a result of an undesirable change in behaviour towards automation. When discussing users’ misbehaviours with ADS, one expert stated that “they [users] learn the speed, they learn at speed—how fast do I have to act, how often does it flash, how fast do I have to look up, and they actually get a little faster as they learn, and then that makes them feel more comfortable” []. Thus, as long as the automated system affords the user the opportunity to misuse it, this may be stimulus enough for them to continue the misbehaviours. Some undesirable use behaviours are due to strongly held subjective expectations, peculiar beliefs about the systems, a lack of system transparency and understandability, easily modified system features, and erroneous functions, among others. Therefore, users’ subjective expectations may change based on system mechanisms that are unaccounted for. In addition, this may influence overall performance, with user dissatisfaction being the leading cause of procedural inaccuracies on the users’ part. Part of the issue is that users are subject to the power law of ‘learning and relearning’ new ways to interact with and use automation systems. This then leads them to ‘redesign’ their own personalised interaction patterns with the system. For example, as users gain experience with the system, new branches of ideas are formed and compartmentalised in their mental model.
Usually, these personalised interaction/use patterns fall outside the system’s design scope, as various YouTube videos of Tesla Autopilot use illustrate.

As a result, there is a pressing need for long-term research that assesses extended learnability, that is, research that considers the ease with which users learn to interact with and use automation, as well as how they behave and misbehave over time. This requires quality engineering researchers to assess the quality of the designed interaction strategy, how users ‘easily learn’ to redesign their own interaction patterns with automation, and how the automated system affords users specific learning configurations and behaviours, an aspect that is highly neglected in research. Principally, the automation affordances are fundamental to understanding users’ BAC, apathy, and inclinations. Therefore, it is important to assess and comprehend how users gradually learn new abilities and the influence of passive or active learning. Pioneering data on long-term learning effects and behaviour modification towards automation over time may thus substantially advance knowledge discovery in designing Quality Automated Driving (QAD) experiences. In view of that, it is imperative that researchers derive research strategies that link theory (e.g., on learning) and practice (e.g., behaviour modification). Ref. [] also argued that this is important in achieving optimal usability. Additionally, Ref. [] reasoned that it is important to choose the right set of metrics and questions when measuring UX towards a system design, for example, the following metrics: learnability, time/effort required to achieve maximum efficiency, previous efficiency metrics measured over time, and behavioural (verbal and nonverbal) and physiological metrics [].

The higher-automation design prototypes anticipated on the market in the near future create a need for useful long-term research that assists in developing safely usable systems and human–machine interfaces (HMIs), so that designs consistently limit misbehaviours, destructive learning effects, irresponsible usage, unexpected/undesirable behaviours, intended/unintended misuse, and incorrect mental models. Such research may also encourage interoperability across different original equipment manufacturer (OEM) products.

1.1. Motivation

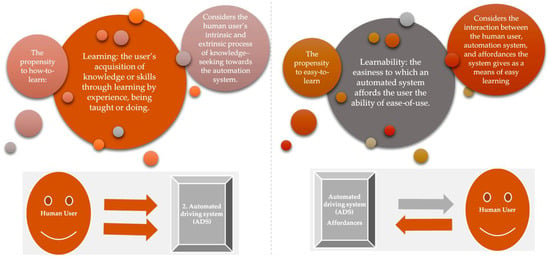

This paper aims to fill the knowledge gap explicitly on extended learnability and automated driving, a topic that should not be overshadowed by the general literature on technological systems and learning dynamics. We argue that not all technological systems will be experienced or learned in the same way, and that different technologies or automated systems will induce different learning effects and user behaviour outcomes. Thus, in this collection of reviews, the aim was to consider the extended learnability of automated driving as an operative concept and to understand how it is operationalised and defined, rather than addressing learnability in the general sense of technology. Learning, as a concept, can be seen merely as the user’s learning process towards a system; with learnability, however, there is an additional layer to that understanding, for example, the affordances the system design provides to users for “easy” learning (Figure 1). The most visible attempts to theorise ADS draw on the idea of affordance. Gibson (1979) described affordance in the field of ecological psychology: “the affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill” []. Thus, the affordances of an automated system are what it offers the user and what it provides or furnishes, either for good (e.g., easy-to-learn, safe to use) or bad (e.g., hard-to-learn, risky to use).

Figure 1.

Theorising learning and learnability in terms of affordances.

We could not find sufficient publications suitable for this review. The intention was to examine the papers using thematic analysis, a core method for qualitative research. The trustworthiness of this type of analysis is largely determined by precision, consistency and exhaustiveness in the data analysis. In this approach, each paper is screened individually, and the references most relevant to the research question are extracted into a uniformly applied form whose items serve as preliminary codes: category, type of study, type of ADS used/discussed, and the guiding concept (extended learnability). The papers are coded according to the data collected in each item of the form. The initial codes are based on a review of the literature and adjusted throughout the coding process as new information emerges. The codes pertaining to extended learnability are further coded according to purpose and context of use. The extracted data are then subjected to a content analysis, with the results presented in textual, tabular or diagrammatic form.

1.2. Contribution

This paper addresses the questions: Why and how should we measure the learnability of ADS? We understand that high-quality learnability induces satisfaction, as users may feel confident in their abilities []. Thus, it is important that the tasks a system design supports are simple and clear. Learnability studies are time- and budget-consuming []; however, they are useful for lowering costs (e.g., for training users) and promoting satisfaction. Moreover, there are different theories, models and definitions, most of which are contested. Clear and unambiguous definitions are important for both theoretical and practical considerations of any phenomenon, and learnability is no exception. In this paper, we seek to shed light on the concept within the context of automated driving, specifically addressing the following issues:

- Identify core operational concepts in order to consider the contributions, implications and boundaries for researching ‘learnability in the context of automated driving’;

- Propose a working definition of learnability that underpins long-term research, as a way to highlight the consequences of long-term effects on user behaviour.

To satisfy these objectives, the paper is divided into three sections:

- Section 1 (Introduction): An introductory discussion of the topic of interest.

- Section 2 (‘What Is Already Known’): An overview of the literature on learnability.

- Section 3 (‘What This Paper Adds’): Engineering knowledge that underpins the operationalisation of the concept. Furthermore, we elaborate on how automated driving engineers and researchers may need to employ extended assessment tools when designing for lifelong behaviour-based safety.

The concept of learnability is widely researched in the human–computer interaction (HCI) field; the opposite holds true in the automated driving field. We therefore hope this paper brings the concept to the forefront of academic and industry research interests and encourages more studies, especially on extended learnability and on fundamental accounts that may underpin changes in user behaviour. We thus position this paper as a foundation for quality interaction design strategies that consider learnability as a prerequisite for safety. It also highlights a set of challenges for metrics and suggests a class of possible solutions that treat learnability as a multi-level phenomenon.

2. What Is Already Known?

This section aims to theorise learning abilities and thoroughly understand the translation of learnability theory into practice, considering the correlation between learning effects and behaviour modification. It is therefore important that we theorise the power law of learning from an intellectual (behavioural and cognitive) point of view. The idea that learning has an impact on mental models is also crucial to consider and should be researched over time. Furthermore, it is important to consider the different user types, mental models, HMI and automation system design, environment, and learning effects that contribute to users’ learning abilities and skill acquisition over time. It is also imperative to recognise learning as the product of interactions between the cognitive activities of users as learners, the social behaviours they see modelled, the system stimuli and the wider environment.
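The power law of learning is not formalised in this section. One common formulation, often called the power law of practice, writes the time on the N-th trial as T_N = a * N^(-b), where a is the time on the first trial and b > 0 is the learning rate. The Python sketch below is an illustration, not a method taken from the reviewed studies: it estimates a and b from a user's per-trial task times (for example, hypothetical take-over completion times across repeated drives) by least squares on log T_N = log a - b * log N.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(trial_times: Sequence[float]) -> Tuple[float, float]:
    """Fit T_N = a * N**(-b) to per-trial times (trial N = 1, 2, ...).

    Uses ordinary least squares on log T_N = log a - b * log N and
    returns the estimated (a, b).
    """
    if len(trial_times) < 2:
        raise ValueError("at least two trials are needed to fit a curve")
    if any(t <= 0 for t in trial_times):
        raise ValueError("trial times must be positive")
    xs = [math.log(n) for n in range(1, len(trial_times) + 1)]
    ys = [math.log(t) for t in trial_times]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # estimate of -b
    intercept = mean_y - slope * mean_x   # estimate of log a
    return math.exp(intercept), -slope


# Hypothetical, noise-free data generated with a = 10 s and b = 0.5.
times = [10 * n ** -0.5 for n in range(1, 11)]
a, b = fit_power_law(times)
print(f"a = {a:.2f} s, b = {b:.2f}")  # a = 10.00 s, b = 0.50
```

A larger fitted b indicates faster improvement with practice. On real data, the estimate is only as good as the assumption that a single power law describes the whole learning curve, which may not hold over long-term exposure if users plateau or relearn.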

2.1. Learnability

While there is an abundance of published work on learnability, it is scattered across HCI and software engineering [], informatics [], technical communication [] and Programmable User Models (PUMS) [], among others. HCI researchers have sustained the concept of learnability and relentlessly popularised it; however, it has been neglected in the automated driving field. Learnability is primarily used as a transformative and regulatory concept that guides or regulates the ease of learning a system. Its uses range from understanding individual human reactions to automation systems or HMIs, to system design and understanding reaction and recovery, to understanding learnability in system functioning, to AI/PUMS and understanding task-action mappings, among others. Most authors consider learnability in terms of errors, task time, error recovery time, and time to mastery. Author [] described learnability as covering the time to mastery and error avoidance or recovery. Ref. [] evaluated learnability by counting errors and measuring the time needed to perform a task as well as the time needed for error recovery. Author [] wrote that learnability could be operationalised as the time that a new user needs to reach a predefined level of proficiency. Moreover, scholars argue that learnability concerns the system features that allow users to understand how to use it and then how to attain a maximal level of performance. Essentially, the learnability literature has long focused on comprehensibility. In short, if users cannot understand a system, they cannot learn it and, furthermore, cannot use it (or rather, use it correctly). Thus, comprehensibility influences learnability, and learnability in turn augments the comprehensibility of a system design.
Ref. [] explained learnability as “allowing users to reach a reasonable level of usage proficiency within a short time”, assessing novice users and measuring the time it takes them to learn the basic tasks of the system, that is, the time and effort a novice user requires to perform specified basic functionalities. Author [] saw learnability as allowing users to quickly begin working with the system. Most scholars state that an automated system should be easy to learn by the type of users for whom it is intended. However, a major concern is the lack of longitudinal evaluations of learnability that further evaluate the user types’ learning ability over time. Ref. [] focused on different user types as a way to comprehend how different users may experience an automated system, for example, inexperienced (novice) users as well as experienced users.
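The operationalisation cited above, the time a new user needs to reach a predefined level of proficiency, can be made concrete in a few lines. In this minimal sketch, the `Trial` record and the proficiency criterion (a maximum task time combined with a maximum number of errors in a single trial) are hypothetical choices for illustration; a study would define its own criterion.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Trial:
    elapsed_s: float    # cumulative exposure time at the end of this trial (s)
    task_time_s: float  # time taken to complete the task in this trial (s)
    errors: int         # errors observed in this trial


def time_to_proficiency(trials: Sequence[Trial],
                        max_task_time_s: float,
                        max_errors: int = 0) -> Optional[float]:
    """Return the cumulative exposure time at the first trial that meets the
    (hypothetical) proficiency criterion, or None if it is never met."""
    for trial in trials:
        if trial.task_time_s <= max_task_time_s and trial.errors <= max_errors:
            return trial.elapsed_s
    return None


# Hypothetical novice user: four trials of the same task.
trials = [Trial(60, 60, 3), Trial(110, 50, 1), Trial(150, 40, 0), Trial(185, 35, 0)]
print(time_to_proficiency(trials, max_task_time_s=45))  # 150
```

Requiring the criterion to hold over several consecutive trials would make the measure less sensitive to a single lucky trial; that variant is a design choice, not something the cited operationalisation specifies.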

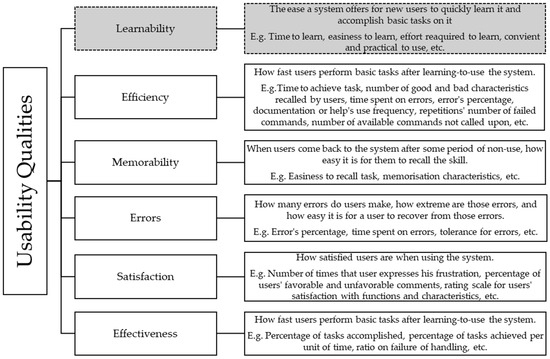

Generic learnability is understood as “easy-to-learn” [] and described as a quality attribute of usability, while generic usability is understood as “easy-to-use” []. Thus, there exists “a need to determine if learnability and usability are mutually exclusive” []. To comprehend learnability, we must therefore first comprehend usability (see Figure 2). The International Organization for Standardization (ISO) has developed a variety of models to identify and measure software usability []. The term usability refers to “a set of multiple concepts, such as execution time, performance, user satisfaction and ease of learning (“learnability”), taken together”. However, neither researchers nor standardisation bodies have yet defined it homogeneously []. Usability is defined (ISO 9241-11, cited in []) as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”. Usability is dependent on the context of use, users, tasks, equipment, and environment (ISO 9241-11, 1991, cited in []), and it is regarded as a quality attribute that assesses how easy systems are to use; the term also refers to methods for improving ease-of-use [].

Figure 2.

A baseline model of usability qualities.

Learnability as an aspect of usability can be traced back to 1976 []. However, the first substantial attempt to describe it was by [], stating that “a system should be easy-to-learn by the class of users for whom it is intended”. This description is rather vague, as the idea that a system should be “easy-to-learn” leaves room for many interpretations. It emphasises the need to evaluate learnability while considering the level of user experience with a system []. Undeniably, learnability is a significant component of usability and use satisfaction, with some classifying it as the most fundamental attribute []. Learnability is a quality attribute that evaluates ‘ease-of-learning’ and assesses user efficiency and effectiveness in carrying out specific tasks. ISO described learnability as the extent to which a system can be easily learned by specific users to achieve task-specific goals efficiently, effectively, and with a high level of satisfaction. Quality in use (QinU) relates directly to the capability of a system to influence users and comprises five attributes: effectiveness, productivity or efficiency, safety or freedom from risk, satisfaction, and context of use coverage (ISO/IEC 25010:2011, cited in []). Interaction quality is achieved when users accomplish operational tasks in a safe and comprehensive manner. Accordingly, learnability is characterised by the capability of a system to enable the user to learn how to use it within a reasonable amount of time []. Ref. [] “proposes an action-based technique to improve users’ learnability and understandability by studying users’ interactions while they are interacting with the user interface of a Web application”. This is because the quality of interactions directly relates to and reflects the user’s knowledge and understanding of the system []. As a result, “the system should be easy to learn” [].
System quality is described as the degree to which the system satisfies the intended needs of users in a valuable manner (ISO/IEC 25010:2011). Ref. [] argues that there is generally no accepted operational definition of learnability; however, it describes learnability as the quality of being easily learnable and as a quality attribute that assesses how easy a system is to learn, and it derives methods for improving ease-of-learning. Even so, some common features inherently related to learnability can be identified:

- Learnability is a function of the user’s experience, i.e., it depends on the type of user for which the learning occurs;

- Learnability can be evaluated on a single usage period (initial learnability) or after several usages (extended learnability);

- Learnability measures the performance of user interaction in terms of completion times, error and success rates or percentage of functionality understood.
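The three features listed above suggest a simple way to summarise learnability data. The sketch below treats the first usage session as initial learnability and the change from the first to the last session as extended learnability, using success rate, completion time and errors. The `(completed, task_time_s, errors)` record format and the first-versus-last comparison are assumptions made for illustration; another design might fit a curve across all sessions instead.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

# One attempt at a task: (task completed?, task time in seconds, error count).
Attempt = Tuple[bool, float, int]
Metrics = Dict[str, Optional[float]]


def session_metrics(attempts: List[Attempt]) -> Metrics:
    """Success rate, mean completion time (successful attempts only) and
    errors per attempt for one usage session."""
    if not attempts:
        raise ValueError("a session needs at least one attempt")
    done_times = [time_s for done, time_s, _ in attempts if done]
    return {
        "success_rate": sum(1 for done, _, _ in attempts if done) / len(attempts),
        "mean_completion_time_s": mean(done_times) if done_times else None,
        "errors_per_attempt": sum(err for _, _, err in attempts) / len(attempts),
    }


def learnability_summary(sessions: List[List[Attempt]]) -> Dict[str, Metrics]:
    """Initial learnability = first session; extended learnability = change
    from the first to the last session (None where a metric is undefined)."""
    if len(sessions) < 2:
        raise ValueError("extended learnability needs at least two sessions")
    first = session_metrics(sessions[0])
    last = session_metrics(sessions[-1])
    change: Metrics = {}
    for key, first_value in first.items():
        last_value = last[key]
        change[key] = (None if first_value is None or last_value is None
                       else last_value - first_value)
    return {"initial": first, "extended_change": change}


# Hypothetical user: one failed attempt in the first session, none in the last.
sessions = [
    [(True, 50.0, 2), (False, 90.0, 3)],
    [(True, 30.0, 0), (True, 34.0, 1)],
]
print(learnability_summary(sessions)["extended_change"])
```

Here a positive change in success rate and negative changes in completion time and errors would indicate improvement between sessions; whether that improvement reflects learnability of the system or other factors depends on the study design.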

The majority of learnability definitions are framed in terms of initial or extended experience (Table 2). For example, learnability has been categorised as first-time user performance, first-time performance after instruction, easy-to-use, easy-to-learn, the ability to master a system, a change in performance over time and the ability to remember skills over time [].

Table 2.

Learnability descriptions.

Essentially, there is no agreed model of learnability. Moreover, “despite the consensus that learnability is an important aspect of usability, there is little consensus among scholars as to how learnability should be defined, evaluated, and improved” []. There are several groups of scholars with different views concerning learning experiences, for example:

- (1) Group A considers ease-of-learning to be a sub-concept of ease-of-use.

- (2) Group B sees ease-of-learning and ease-of-use as competing attributes that can seldom be fulfilled at the same time.

- (3) Group C sees ease-of-learning as an attribute that covers the whole usage process (e.g., a system can be easy-to-learn for beginners, while for experienced users it may continually assist them in finding new ways to use it).

Human factors/ergonomics scholars have argued that the notorious frustrations and failures triggered by software interventions [,,,] have led to significant concerns with evaluation processes []. Thus, usability methods are considered useful methodological options [,,,,,,,]. Additional considerations have included metrics for ‘key performance indicators’ [,] and assessing performance in terms of satisfaction, efficiency and effectiveness []. Other performance methods include self-assessment questionnaires, real-time user profiling and experience sampling methods (ESM), as well as think-out-loud (TOL) methods for eliciting verbalisations related to thinking and problem solving []. TOL verbal protocol techniques have wide applicability in cognitive psychology and “have proved to be useful techniques for probing possible cognitive mechanisms underlying user performance and problems” []. Table 3 summarises some research approaches that have been used to assess learnability. Influential as these are, they were tailored towards HCI and not necessarily automated driving. This is not to assert that there are no studies in the automated driving domain (see [,,,,,]); however, most of these studies focus not on learnability as a concept but rather on learning, with the exception of []. There is little, if any, work that specifically focuses on how to define learnability in the context of automated driving, and especially extended learnability.

Table 3.

Learnability research approaches tailored in HCI.

Therefore, this paper aims to shed light on extended learnability and to reason about potential factors that impact the concept and its effects on user BAC. These topics are usually not approached in a clear and conscious manner. The aim is to identify research approaches and evaluation metrics that can be implemented within the context of automated driving. Extended learnability has yet to become a prominent point of reference in research, as there is no standardised set of strategies for measuring it.

2.2. Theories of Learning and Behaviour

The normative possibilities of learning lie in understanding the multidimensionality of issues and experimenting with new approaches to mould accurate behaviour (responsible usage) and mitigate misbehaviours (irresponsible usage). The science of successful learning (see []), based on human learning and memory (see []), is important to consider. Our efforts to expand knowledge of learnability (e.g., in the context of automated driving) can be seen as a form of learning within and about the context of HAI, with the aim of galvanising holistic knowledge discovery. When studying humans’ learning maps concerning automation or AI systems, it is important to explore and expand knowledge on how a human thinks they behave or misbehave in response to the system in a particular situational context. Thus, developing theories and questions that examine this outcome is beneficial for understanding learning effects and BAC. Ref. [] noted that technological evolution has influenced learning theories, in that learning theories and technologies are:

- Situated in a somewhat vague conceptual field, as well as connected and intertwined by information processing and knowledge acquisition;

- A fuzzy mixture of principles and applications (in the case of learning theories).

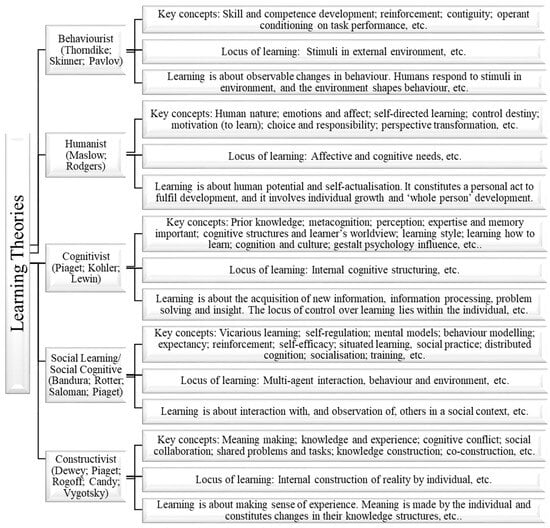

Thus, bottlenecks in knowledge discovery about how humans learn, and the broader information structures that govern knowledge composition and practices, are significant to consider. Ref. [] argues that “instead of regarding the learning theories as discordant, it is rather proposed that human cognition is complex and that there is a role for behaviourist, cognitivist, constructivist and social constructivist models of learning based on the objectives and context of learning”. These theories (Figure 3) provide a good foundation for evaluating users’ learning routes.

Figure 3.

The network of learning theories.

Behaviourism views learning as driven by external factors and holds that preferred learning can be controlled with reinforcements (rewards) and punishments. Humanistic learning comprises creative self-fulfilment and suggests that the learning process explores the self-reflection of the learner. Information processing views human learning as comparable to computer information processing and stresses the path of information through the memory stages: first sensory memory, then working memory for further elaboration, and finally long-term memory, where information is stored through repeated, active processing. Thus, design, selection, coordination, use, understanding, and information barriers are key to consider []. Cognitive psychology is believed to “have moved further away from its roots in information processing toward a stance that emphasises individual and group construction of knowledge” []. The view that “the mind as a computer has fallen into disfavour largely due to the mechanistic representation of a human endeavour and the emphasis on the mind–body separation” is also one to consider []. What Churchland (cited in []) has called “popular dualism”, the view that the “person” or mind is a “ghost in the machine”, is also important to consider. These theories help in comprehending how learnability in the context of automated driving, as well as skill and knowledge acquisition, evolves over time. They add to cognitive theory by asserting that knowledge is never independent of the human who holds it and the situation in which it was acquired. The user as a learner is seen as an active information processor whose previous knowledge is the basis for their learning and whose ongoing learning shapes new forms of knowledge and skills for automation use and interaction.
Thus, the user as a learner continuously adopts new automated driving knowledge and combines it with prior knowledge to reform their mental model of the automation and the driving experience. These learning theories offer an interesting outlook on different user types’ mental processes and mappings and consider the influence of different external factors.

The goal here is knowledge that can be transferred to different situational contexts and user types. In addition, the ‘theory of mental models’ is important, as it is useful for evaluating learning maps from a user’s perspective. For example, two users can be exposed to the same automation system yet go through different learning experiences. As a result, we reason that learnability and mental models are equivalent. Ref. [] explored mental models and user models and argued that “mental models are usually considered to be the ways in which people model processes”. We agree with [] that mental models may be incomplete and may even be internally inconsistent. Thus, researchers need to derive long-term evaluation criteria for assessing user models in order to predict behaviour modification and users’ performance resulting from learning effects. Ref. [] further argued that focusing on mental/user models highlights the intentionality of the interaction between the user and the system. However, studying these models can be a challenge, as “mental models are inside the user’s head, they are not accessible to direct inspection, and it is difficult to have confidence about how a mental model is constructed or how it can be modified” []. Contrary to popular belief, some scholars assert that “there are no mental models per se, but only generalisations from behaviourally conditioned expectations” []. Ref. [] argues that user models can be studied and modified; however, it might be difficult to capture and represent information about the user and the task. The evolution of mental models, trust and acceptance is essential to assess []. Ref. [] noted that “a stronger orientation in the development and introduction of automated systems, especially considering existing mental models, will increase acceptance and system trust”.
According to [], “the dependability and usability of systems are not isolated constructs”; thus, “a clearer picture of how these core attributes are dynamically related remains a vital area of future research”. However, even though there is an enormous amount of research on learning and learning theories, research on its relationship to extended learnability in the context of automated driving is lacking.

2.3. “X about X”: Linking Learning Effects and BAC

To ensure a strong connection between theory and practice, different scholars have called for a ‘linking science’ and for an engineering analogy to aid in translating theory into practice. The “value of such a bridging function would be its ability to translate relevant aspects of the learning theories into optimal instructional actions” []. This highlights potential learning theories and the persistent issues with practical learning, as well as the use of theoretical learning to facilitate solutions for practical learning. The term learning is used across a variety of topics and thus has several definitions, for example, learning as a child how to speak (vocal-based learning) or to speak a language (language-based learning), learning how to interact with others (social-based learning), learning how to regulate emotions (emotive-based learning), learning how to ride a bicycle (somatic-based learning), and learning chemistry (mental-based learning). It is thus not surprising that learning is a prevalent field in educational psychology and engineering research. Multiple theories of learning already exist, constructed by different schools of thought, and some of them overlap. Learning theories grounded in learnability in the context of automated driving may describe the dynamics of learning-to-use an automation system mainly from a pedagogical, cognitive and behavioural point of view. Moreover, it can be argued that the sociotechnical context for learning is “dynamic and makes great demands on those trying to seize the opportunities presented by emerging technologies” [].

In a sense, learning is described as a change in the user’s mental model or behaviour, resulting in behaviour modification towards an automation system based on continuous knowledge acquisition and information processing. Thus, there is a cause-and-effect relationship between learning and BAC, with learning as the cause and behaviour modification as the effect (i.e., a change in behaviour due to internal and external influences). This causal relation between learning and behaviour is built into the definition of learning itself. Learning is defined as “the observable change in behaviour of a specific organism as a consequence of regularities in the environment of that organism” []. It is thus seen as an effect, that is, as an observable change in behaviour that is attributed to an element in the environment; adaptation is one example. To establish that learning has occurred, two conditions must be met: (1) an observable change in behaviour must occur during the lifetime of the entity, and (2) the change in behaviour must be due to regularities in the environment []. Ref. [] further argued that, when assessing whether learning has occurred, it is important to note that the observed change in behaviour can occur at any point during the lifetime of an organism. The impact of a regularity on behaviour might be evident:

- Immediately, or

- Only after a short delay (e.g., one hour), or

- Even after a long delay (e.g., one year) [].

Moreover, there are differences in how behaviour is defined and in what constitutes a change in behaviour. We thus adopt a broad definition that includes any observable reaction, regardless of whether that reaction is produced by the somatic nervous system (which regulates voluntary physiological processes, e.g., pressing a pedal), the autonomic nervous system (which regulates involuntary physiological processes, e.g., blood pressure or heart rate), or neural processes (the way the brain works, e.g., thoughts, memories, and feelings). In principle, the concept of behaviour also covers reactions that are observable only by the individuals themselves (e.g., a conscious mental image or thought) []. In many cases, learning may go unnoticed []. It is therefore important to ask how humans learn, what they learn, and what happens as skills develop []. Looking more closely at learning and at knowledge or skill acquisition, it becomes clear that learning effects should be assessed on the basis of long-term exposure to automation systems, including how these effects evolve over time. Embracing future technologies thus requires a clearer understanding of the pedagogies that inform learning, as well as of the learning strategies used to facilitate it []. Additionally, people with different learning patterns (i.e., participatory, collaborative, and independent) approach learning differently []. Learning abilities (the ability to learn, characterised by the user’s learning success, i.e., taking into account the efficiency of this process) differ from knowledge acquisition (the quality of the knowledge the user learns, characterised by the speed and quality of skill development, i.e., taking into account the outcome of the learning process). In considering comprehensive knowledge acquisition, we therefore treat skills or skilfulness (as well as deskilling, upskilling, and reskilling) as factors to consider.
The lack of studies covering concepts such as deskilling, upskilling, and reskilling as part of learnability in the context of automated driving limits the opportunity for quality interactions (QAD and QinU), in which the strengths and weaknesses of both automation and human systems can be considered. Ref. [] discussed mechanisms of skill acquisition and the law of practice, and considered the theoretical and experimental approaches employed in assessing the mechanisms underlying these factors:

- On the experimental side, “it is argued that a single law, the power law of practice, adequately describes all of the practice data”.

- On the theoretical side, “a model of practise rooted in modern cognitive psychology, the chunking theory of learning, is formulated”.
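The power law of practice is usually written as T(N) = a · N^(-b), where T is the time to perform a task on the Nth practice trial, a is the time on the first trial, and b > 0 is the learning rate. As a minimal sketch, assuming per-trial completion times for a repeated automated driving task are available (e.g., a hypothetical takeover task), a and b can be estimated by ordinary least squares on log T = log a - b · log N. The function names are illustrative and not part of any cited method.

```python
import math


def fit_power_law(trial_times):
    """Fit T(N) = a * N**(-b) to per-trial task times (hypothetical helper).

    trial_times[i] is the (positive) completion time on practice trial N = i + 1.
    Returns (a, b): a estimates the first-trial time, b the learning rate.
    """
    if len(trial_times) < 2:
        raise ValueError("at least two trials are needed to fit a slope")
    # Linearise: log T = log a - b * log N
    xs = [math.log(n) for n in range(1, len(trial_times) + 1)]
    ys = [math.log(t) for t in trial_times]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # estimates -b
    intercept = y_mean - slope * x_mean  # estimates log a
    return math.exp(intercept), -slope


def predict_time(a, b, n):
    """Predicted task time on trial n under the fitted power law."""
    return a * n ** (-b)


# Usage with synthetic times generated exactly from a = 20 s, b = 0.4
times = [20.0 * n ** -0.4 for n in range(1, 11)]
a, b = fit_power_law(times)
print(round(a, 3), round(b, 3))
```

With real participants the data are noisy, so the log-log residuals should be inspected; for individual learners, an exponential curve sometimes fits better than a power function.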

Skill acquisition is commonly divided into three stages, which support the idea that conceptual knowledge and procedural skills are interrelated:

- (1) Cognitive stage: the learner commits to memory (memorisation) a set of details relevant to the skill.

- (2) Associative stage: the learner organises these details into a procedural model (containing step-by-step instructions for performing a certain action).

- (3) Autonomous stage: the procedure becomes more automated and rapid and, in the end, requires very few processing resources.

Essentially, conceptual knowledge develops into an efficient skill when it is practised. In terms of acquiring conceptual information, the ability to learn means acquiring new information and combining it with previous knowledge to form a revised model of the automated driving components to be learned. We also have to consider that learning requires active processing of information on automated driving and automation use so that it is adopted as part of the user’s mental model. Regarding the ability to learn, repeating an action is not enough; the contextual background also needs to be understood. Thus, combining conceptual knowledge and procedural skills is important for learning. For example, a user might be inclined to take over driving during automated driving mode but will need the technical ability to do so without incurring risk. Using a specific ADS generally requires memorising a large body of automated driving knowledge, including handover and takeover information, time delays, and automation HMI action sequences, among others []. It is much easier for a user to recall details that are related to a thematic entity and connected to existing knowledge than to remember disconnected details with no link to prior knowledge. Thus, users need to know how to interact with an automated system, how to interpret its actions and reactions, and, as a result, how to make use of this information to their benefit. Interdisciplinary design strategies for HAI, such as the KISS method (Keep It Simple and Simpler) [], can support this. This information should be understood at a conceptual level by novice and experienced users alike. Moreover, regarding the practice of procedural abilities and skill acquisition, we deduce that skills must be practised if they are to develop within the mental model.

Another issue to consider is that the user may learn to solve only problems that are structured around specific situations, and when exposed to new situations, they may not know the correct way to react. This can result in explorative learning strategies with a relatively free-form learning process, and it may contribute to some automated driving crashes in specific situations; the user may not have been fully aware of the high level of risk in that situation, for example. To avoid this, automation capabilities and limitations should be apparent to users in all possible situations and contexts of use. Learning strategies can be seen either as explorative learning with unsystematic directives or as structured learning with systematic directives. Additionally, passive and active learning processes are important to consider. Users often learn the automated system in pursuit of knowledge and understanding. Where a learning strategy is not efficient and does not produce thorough knowledge, some users may fill the gaps with their own beliefs. Thus, risk management techniques are crucial, as users may face obstacles and engage in risk-taking behaviours during automated driving mode. Therefore, substantial knowledge may help users recover from misjudgement.

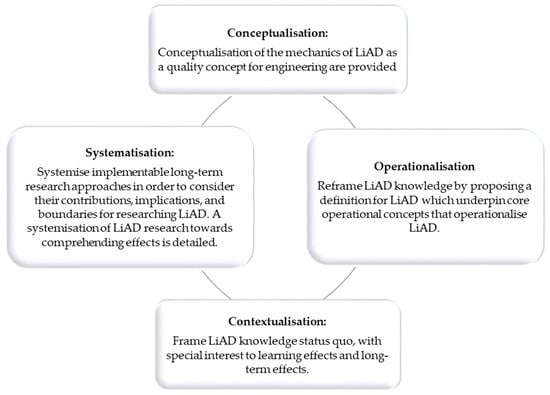

3. What This Paper Adds? The Learnability Engineering Life Cycle

This paper introduces the ‘learnability engineering life cycle’, with a special interest in learnability in automated driving (LiAD). The objective of this discussion is to explore LiAD and the foundational strategies to consider. Based on the above sections (‘What Is Already Known’), our aim is to reflect systematically on the learnability of automated driving tasks by quantifying the quality of performance, the learning curve, and (negative and positive) learning effects (destructive and constructive), and, implicitly, behaviour maturation/modification on the basis of experience sampling. The following sections contribute to the learnability engineering life cycle (see Figure 4).

Figure 4.

The Learnability Engineering Life Cycle.

These discussion points or mechanisms consider different layers of knowledge discovery in the context of LiAD research. Integrating a wide range of information supports a multidimensional protocol from which LiAD knowledge configurations can be inferred.

3.1. Conceptualisation

We consider different mechanisms that influence LiAD over time in order to classify users’ abilities in response to situational dynamics, system performance, contextual elements, the environment, and user states. This highlights behaviour modification, conditions for change, and new interaction norms, forms, and practices. The central emphasis is on user/mental models and on the ability to learn and change, coupled with the transformation of usage patterns and learning effects. We therefore believe that any integrative view of LiAD should accommodate different factors at all levels of automated driving understanding. Since ADS are such a big part of current driving culture, users carry many deeply held assumptions about how they should be used. Moreover, the operation of automation systems involves nuanced and complex interactions between humans and automation in unpredictable situations, which makes assessing the quality of learning over time particularly significant. We consider the concept of LiAD with emphasis on the progression from initial (short-term) learning to extended (long-term) learning curves, and we frame LiAD knowledge as a process of transforming quality engineering practices.

Our conceptualisation:

- LiAD denotes a characteristic of the user’s quality of learning, taking into account the ease of learning-to-use automation systems across the spectrum from short-term or initial learnability (initial LiAD: [i]LiAD) to long-term or extended learnability (extended LiAD: [e]LiAD). It can be measured on the basis of learning curves (considering their slope and plateau) and of behaviour modification, using a scale that runs from desirable behaviours with the system (efficient, effective, satisfying, error-free and safety-taking) to undesirable ones (inefficient/deficient, ineffective, unsatisfying, error-prone and risk-taking), as a result of learning effects/long-term effects.

This conceptualisation has been constructed by considering definitions given by different scholars [], as well as considering human factors, long-term effects, mental models, etc., from the experience perspectives of different users and environmental factors. Thus, combining these factors into a coherent description is useful in identifying possible learning pitfalls that hinder the competent use of automation. Moreover, our description frames knowledge from the experience perspectives of safety-inducing or risk-inducing learning over time, bearing in mind trust (over-trust, distrust, mistrust, trust calibration) and use (over-use, misuse, disuse) patterns.

Learnability is a key concept in safety research, and learnability engineering is an accepted domain within safety science. It attracts considerable interest, as it advocates new ways of understanding HAI processes in complex sociotechnical environments. If the aim is risk-free use, then there is a strong case for understanding the mechanics of user behaviour, which requires defining risk-taking behaviours with an emphasis on learned behaviours and learning effects. In this view, “risk is construed as a probabilistic and penalising contingency on a specific class of responses (like driving), and risk-taking as making responses to that class” []. We may consider most user behaviours as risky, with only the amount of risk varying []. Risk, defined as “the probability of accident involvement, differs between individuals and causes them to have quite different perceptions” []. Moreover, risk can be categorised as physical, cognitive, or emotional. In addition, classifying behaviour during automated driving as either ‘risky-misbehaved’ (risk-based misbehaviour) or ‘safely-behaved’ (safety-based behaviour) is important for formulating an in-depth description. Encouraging research on LiAD, and thus addressing the particular challenges of [e]LiAD, is therefore crucial. ISO 9241-11 described learnability using three attributes (effectiveness, efficiency, and satisfaction). However, attention should also be directed towards the negative pole of each attribute and its undesired effects: effectiveness vs. ineffectiveness, efficiency vs. inefficiency, and satisfaction vs. dissatisfaction. For each attribute, one or more measures should be presented. It is also important to recognise the relative significance (high or low probability) or weight associated with each attribute. Consequently, it is important to classify the characteristics that make for quality LiAD.
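ISO 9241-11 names these attributes but does not prescribe a single formula for scoring or combining them. As a minimal sketch, assuming each automated driving session records tasks attempted and completed, elapsed minutes, and a 1 to 5 satisfaction rating (all hypothetical fields), the three attributes can be scaled to [0, 1], labelled by their desirable or undesirable pole, and combined with explicit weights. The reference rate, threshold and weights are illustrative assumptions, not values from the literature.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One hypothetical automated driving session."""
    tasks_attempted: int
    tasks_completed: int
    minutes: float
    satisfaction: float  # rating on an assumed 1..5 scale


OPPOSITE = {
    "effectiveness": "ineffectiveness",
    "efficiency": "inefficiency",
    "satisfaction": "dissatisfaction",
}


def attribute_scores(s: Session, reference_rate: float) -> dict:
    """Scale effectiveness, efficiency and satisfaction to [0, 1]."""
    effectiveness = s.tasks_completed / s.tasks_attempted
    rate = s.tasks_completed / s.minutes          # completed tasks per minute
    efficiency = min(rate / reference_rate, 1.0)  # relative to an assumed expert rate
    satisfaction = (s.satisfaction - 1) / 4       # map 1..5 onto 0..1
    return {"effectiveness": effectiveness, "efficiency": efficiency, "satisfaction": satisfaction}


def polarity(scores: dict, threshold: float = 0.5) -> dict:
    """Label each attribute with its desirable or undesirable pole (threshold is an assumption)."""
    return {k: k if v >= threshold else OPPOSITE[k] for k, v in scores.items()}


def weighted_score(scores: dict, weights: dict) -> float:
    """Weighted mean of the attribute scores; weights express relative importance."""
    total = sum(weights.values())
    return sum(scores[k] * w for k, w in weights.items()) / total


scores = attribute_scores(Session(10, 8, 20.0, 4.0), reference_rate=0.5)
labels = polarity(scores)
overall = weighted_score(scores, {"effectiveness": 2, "efficiency": 1, "satisfaction": 1})
```

Keeping the per-attribute labels alongside the weighted score avoids hiding an undesirable pole (e.g., dissatisfaction) inside an acceptable overall number.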

3.2. Operationalisation

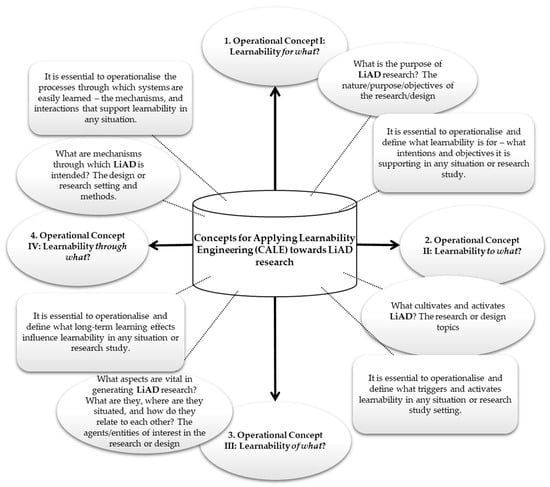

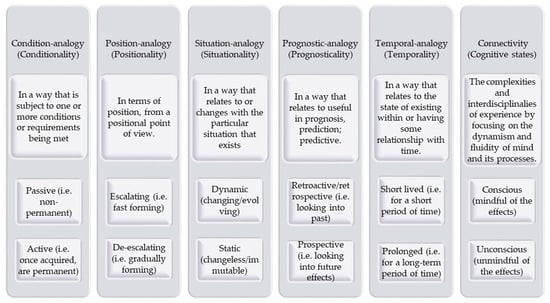

Fundamentally, learning theories have helped in framing learnability, as well as informed and shaped LiAD as a concept. We describe [i]LiAD and [e]LiAD based on ‘Concepts for Applying Learnability Engineering’ (CALE) (see Figure 5).

Our operationalisation:

- CALE, aligned to LiAD research, considers learnability on the basis of the following: the nature/purpose/objectives of the research or design (for what), the research or design topics (to what), the agents/entities of interest in the research or design (of what), and the design or research setting and methods (through what). These are important fundamentals for operationalising LiAD research, and exploring these questions scientifically provides rich knowledge about the quality aspects of LiAD.

Figure 5.

CALE model: Sub-concepts for guiding LiAD research.

Thus, LiAD, as a neglected research field, seeks to understand and improve HAI in order to deliver high QAD and desirable BAC. We acknowledge that it is relatively challenging to operationalise LiAD systematically and rigorously, as the concept is unregulated and rarely defined in the literature. We therefore advocate using the CALE model to guide LiAD research and to derive assessment scenarios that are equivalent to real-world situations. In the following subsections, we discuss each operational concept, how it is operationalised, and why it is important to consider. In this case, LiAD (‘for whom’) concerns an emergent ability of different user types. Achieving the primary objective likely depends on the system’s capacity to be easily learned by different users, and that information should in turn be channelled into designing systems that induce efficiency.

3.2.1. Operational Concept I: Learnability for What?

This emphasises the nature/purpose/objectives of the research/design. Investigating LiAD involves examining the purpose of learnability. An important question, therefore, is which research components matter in this process. These might include behavioural and cognitive resources (e.g., competence, epistemic and informational resources, knowledge) or emotional and social resources (e.g., trust, attitude). These and other factors might be relevant for understanding, reinforcing and maintaining LiAD. Based on the CALE model, we describe the considerations needed to align ‘automated driving-as-imagined’ with ‘automated driving-as-done’, as well as ‘learnability-as-imagined’ with ‘learnability-as-done’. These pairs highlight the differences between learnability as intended and learnability as it is actually performed in practice over time, differences that long-term research can capture. The key concepts to analyse are, therefore, the safety capacity and risk capacity for driving performance, and it is imperative to identify and characterise these capacities. Furthermore, this concept is grounded in a trilogy of human factors, environmental factors, and system factors, which represent processes that reinforce or (re)structure learning, evolution, and transformation. It therefore calls for research strategies that are open-ended enough to accommodate diverse concepts from different literature lenses and to support various methods and approaches over time.

3.2.2. Operational Concept II: Learnability to What?

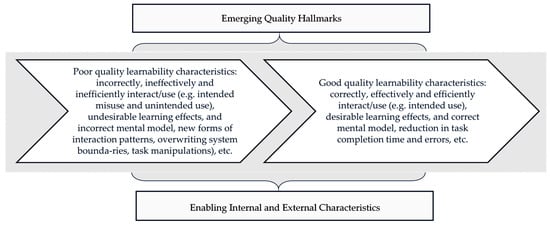

This emphasises the research or design topics. It is key to understand what reinforces abilities. This calls for research that integrates ‘what goes well’ and ‘what goes wrong’ as fundamental aspects to consider. It can be broadened to consider different nuances of understanding, consistent with behaviour-based safety and safety science, and depends on the nature, goals and objectives of the learning. Research should therefore consider whether LiAD has characteristically ‘good’ (safety-inducing learnability) or ‘bad’ (risk-inducing learnability) attributes. If safety is easily affected, then the automation system cannot reasonably claim to provide quality-in-learning (QinL), quality-in-use (QinU) or QAD. As a sub-characteristic of usability, learnability comprises internal and external qualities. Learnability is thus the QinL, which directly relates to the capability of automation to influence the overall user experience. Accordingly, QinL spans two qualities: (1) poor learnability and (2) good learnability. These hallmarks (see Figure 6) are important blueprints for understanding mental maps of learning.

Figure 6.

Emerging quality hallmarks and the enabling external and internal factors.

Essentially, good learnability results in efficient performance and plays a vital role in trust and acceptance. Thus, in order to evaluate and improve learnability, “understanding the factors affecting learnability is necessary” [].

3.2.3. Operational Concept III: Learnability of What?

This emphasises the agents/entities of interest in the research or design. Considerable research investment has gone into the development of new interaction concepts, system functionalities and features for new automation system designs. However, little research attention has been devoted to exploring the potential issues associated with user types and learning patterns over time. The aim is to identify and integrate problematic areas (from both human factors and systems engineering), to better understand which aspects of design modalities impede different users’ performance, and to inform designs with attributes that maximise accurate user behaviour, performance, and epistemology.

3.2.4. Operational Concept IV: Learnability through What?

This emphasises the design or research setting and methods. LiAD can unfold through a wide variety of methodological processes, so understanding the nature and evolution of these processes is important to understanding LiAD. The process should consider the speed of learning (fast vs. slow), the QinL, etc. Moreover, re-learning and un-learning are part of a process of continuous learning. Unravelling these issues through long-term research approaches can help provide a clear understanding of LiAD. It is important to evaluate the different learning patterns (of novice and experienced users), as we assume that learning takes different forms and that not all learning should be classified as a singular, linear phenomenon.

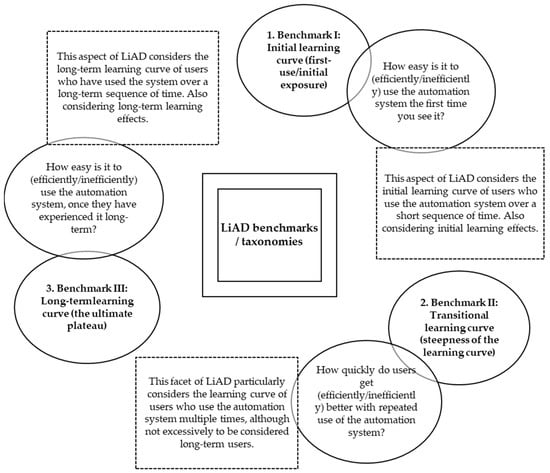

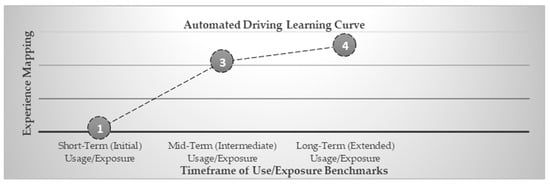

3.3. Contextualisation

It is apparent that learnability is a complex concept. Ref. [] argues that “what most of the definitions have in common is that they address the initial usage experience and include a criterion such as effectiveness or efficiency that can be used to measure the learning results”. However, most of these definitions neglect the long-term experience and how human factors, learning effects, and external factors may influence users’ learning curves and behaviour. For example, procedural scenarios and the context of use may trigger different reactions depending on various factors (e.g., environmental situations, weather conditions, road types, system functionality, human factors, user states). In addition, it is important to consider how the system may continuously afford users new ways of using it. Moreover, “some researchers have emphasised that the term learnability should also cover expert users’ ability to learn functions that are new to them” []. It is possible to deduce long-term knowledge frames from learning effects based on [i]LiAD (a novice user’s initial performance with a system after instruction) and on [e]LiAD (a user’s performance after continuous exposure to the system). We cannot measure LiAD solely on short-term usage, by defining a level of ability/efficiency or inability/inefficiency on first-time use, because reaching a high level of proficiency may take hours, days or years, depending on different internal and external factors. Accordingly, LiAD research encompasses three benchmarks (Figure 7). The initial learning curve (first use/exposure) assesses LiAD by defining a level of learning proficiency based on the first encounter and by gathering knowledge on the type of learning effects encountered during this short-term period.
The transitional learning curve (the inclining/declining slope of the learning curve) assesses LiAD based on repeated measures and gathers knowledge on the type of learning effects encountered during this mid-term period. The long-term learning curve (the ultimate plateau) assesses LiAD based on extended/lifelong learning and gathers knowledge on the type of learning effects encountered during this period. See Figure 8 for an example of an automated driving experience-timeframe-of-use graph showing changes from one uniform speed/slope to a different uniform speed/slope: line 1–3 shows the greater (steeper) speed/slope, and line 3–4 shows the lower speed/slope. In measuring learnability, it is essential to define the metric, gather the data, and plot the learning effect curve in view of its slope and plateau. However, the number of repeated uses needed to reach saturation may vary between LOAs, ADSs, HMIs, cases, and even user types.
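The three benchmarks can be made operational on a per-user series of session scores. As a minimal sketch, assuming one proficiency score per session (higher is better; hypothetical data), the code below estimates the slope of the initial segment, the slope of the transitional segment, and the session at which a plateau begins. The plateau rule is an assumption of this sketch: the first session from which every moving window of `window` sessions has an absolute least-squares slope no larger than `tol`. Both parameters are illustrative, not established thresholds.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def segment_slope(scores, start, end):
    """Slope of scores[start:end] against session index; None if fewer than two points."""
    if end - start < 2:
        return None
    return slope(list(range(start, end)), scores[start:end])


def plateau_start(scores, window=3, tol=0.02):
    """First session index from which every moving window is flat (|slope| <= tol), else None."""
    if window < 2:
        raise ValueError("window must cover at least two sessions")
    n_windows = len(scores) - window + 1
    flat = [abs(segment_slope(scores, i, i + window)) <= tol for i in range(max(n_windows, 0))]
    for i in range(len(flat)):
        if all(flat[i:]):
            return i
    return None


def learning_benchmarks(scores, window=3, tol=0.02):
    """Initial slope, transitional slope, plateau onset and plateau level for one user."""
    p = plateau_start(scores, window, tol)
    transitional_end = len(scores) if p is None else p + 1
    return {
        "initial_slope": segment_slope(scores, 0, window),
        "transitional_slope": segment_slope(scores, window - 1, transitional_end),
        "plateau_session": p,  # 0-based session index
        "plateau_level": None if p is None else sum(scores[p:]) / len(scores[p:]),
    }


# Hypothetical proficiency scores (0..1) over ten sessions
scores = [0.2, 0.4, 0.6, 0.7, 0.8, 0.85, 0.86, 0.86, 0.87, 0.87]
summary = learning_benchmarks(scores)
```

If the metric is task time (lower is better), slopes are negative during learning; the plateau test uses the absolute slope, so it applies unchanged. With noisy field data, smoothing or a longer window would be needed before declaring saturation.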

Figure 7.

Methodology benchmarks: Quantifying LiAD research.

Figure 8.

Learning curve: experience-timeframe graph.

Arguably, design decisions affecting the learning curve of a system depend on various factors, such as the target user type, goals of the automated system, existence of time-critical tasks, training, and other system design considerations []. It can be assumed that for systems having good learnability, the learning curve rises steeply from the beginning, which means that ability develops quickly over time. For systems having poor learnability, the learning curve may stay on a low level for a certain period of time, which means that ability develops very slowly. Efficiency is usually considered to refer to the right part of the learning curve [].

Assessing or measuring LiAD is important for designing automated systems, as knowing how easily users can acclimate or adapt to a system is valuable even for objectively simple systems. It also matters how easy it is for users to accomplish a task and how many repetitions it takes them to become efficient. The aim is to produce a learning curve that reveals changes in UX and thus identifies how long is long enough for users to reach saturation, i.e., a plateau. Consider, for example, level 2 automated driving (based on SAE J3016_202104 []) with ACC: the aim would be to determine how users with different UX learn to drive with ACC over time or repeated usage. For instance, in our current long-term study we recruited different user types (with different usage timeframes). The participants are repeatedly asked to perform automated driving-related tasks, and we measure the resulting learning curve and learning effects. Therefore, as important as it is to measure users’ task completion time, learning effects must also be considered: what matters is not only how fast users learn but also how efficiently they learn, as well as what and when they learn. Ref. [] noted that it is important “to quantify degree of learnability over time and to identify potential learning issues”.

Theorising Effects: A Theory of Effects

Notably, Ref. [] argued that “research on the usability of automated driving HMIs has shown that strong learning effects are at play when novice users interact with the driving automation systems for the first time” and that “appropriate understanding of driving automation requires repeated interactions”. The types of effects depend on UX. Novice users may want to learn the system quickly and reach optimal (plateau) performance speedily, whereas experienced users may have different expectations, for example, wanting to tweak the design in different directions. Thus, an ADS may not always be used efficiently through system shortcuts. As users become well versed in the system, the design may feel monotonous and result in negative effects. As a general rule of thumb, it is essential to assess learning effects (e.g., destructive/constructive). These first-order effects have a residual component, in that they induce other layers or forms of second-order effects.

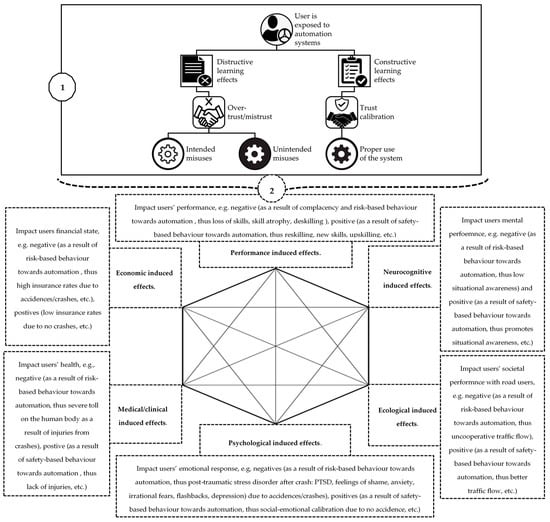

Where automation is learned by the user (correctly or incorrectly), different causal effects may be induced (Figure 9). Moreover, first-order effects have the potential to transform, conform or deform the user so that they behave or misbehave in specific ways that might not have been anticipated. This may in turn result in second-order effects (e.g., higher insurance premiums due to crashes, physical disabilities). Transformational effects (right information), deformational effects (wrong information), and conformational effects (common information) are therefore important to assess. We believe it is better for the user to be transformed than deformed, as a transformed user is able to see the system as it is and use it to its true potential. Long-term and learning effects are fundamental to consider when framing users’ behaviour modification (BAC) and abilities toward automation systems over time. It is important to note that these effects come in different forms and evaluative processes (Figure 10).

Figure 9.

Locus of effects: first-order effects (circled 1), second-order effects (dotted line, circled 2).

Figure 10.

A sequence of regressive and progressive effects.

In order to comprehend LiAD, we need to consider the procedural effects associated with BAC over time. The aim is clearly to design ADS that users find ‘easy-to-learn-to-use’ while maintaining safety. However, what is usually neglected is ‘easy-to-learn-to-misuse’ (intentionally or unintentionally), which results in risk-taking behaviours. This neglected link between ease of learning and misuse, and its association with risk-taking behaviour, is vital. Therefore, it is important to investigate LiAD on the basis of ‘efficient learning’ (i.e., easy-to-learn to use) and ‘inefficient learning’ (i.e., easy-to-learn to misuse) to fully understand learning effects encountered over time, particularly where ‘easy’ is the context or standard. The goal is to fill the knowledge gap on learning effects and LiAD, based on the initial and long-term validity of effects, by considering the spectrum from positive (desirable) to negative (undesirable) effects as a way to manage learning configurations.

Thus, in conjunction with learnability (to learn), it is important to consider understandability (to understand), operability (to operate/control), and usability compliance (to adhere/use). In essence, we consider ‘the ability to learn’, ‘the compatibility in learning’, ‘the meaning in learning’, ‘the consistency in learning’, ‘what they learn’, ‘how they learn’, and ‘when and where they learn’ as interesting questions in the pursuit of LiAD knowledge frontiers. Long-term research can therefore be a good basis for generating requirements for improving LiAD and quality-based interaction design strategies.

Concerning LiAD, assessing the learning phases of ACC would be ideal, as in the case of Ref. []. Two phases are suggested:

- First phase: The user learns how to operate the system, identifies system limits, and internalises the system functionality, and this learning phase can be influenced by how the automation system is introduced to the user [].

- Second phase: The “integration phase”, in which the driver integrates the system into the management of the overall operating task through increasing experience in different situations [].

Ref. [] argued that the first encounter phase “depends greatly on how intuitive and self-explaining the HMI is”. Likewise, the learning phase “still depends highly on the HMI, especially in terms of required system input” []. This also corresponds with the compatibility phase. Consider, for instance, Stimulus-Response Compatibility (S-RC), which refers to the phenomenon that “the subjects’ responses were faster and more accurate for the pairings of stimulus sets and response sets that corresponded naturally than for those that did not” []. Essentially, compatibility effects depend on physical and conceptual correspondence, and these effects “occur whenever implicit or explicit spatial relationships exist among stimuli and among responses” []. Ref. [] notes that “by attributing compatibility effects to the cognitive codes used to represent the stimulus and response sets, central cognitive processes must be responsible for the effects”. Furthermore, the mediating (cognitive) processes generally reflect the user’s mental model of the task. The implication of compatibility effects for LiAD is that user performance may be negatively influenced if the information displayed on the HMI and the response required are not compatible. This makes the HMI a central part of positively reinforced LiAD, and it is therefore important to consider quality design strategies for human-automation compatibility (HAC). Moreover, the trust phase is mainly “characterised by a shift in locus of control” from the user to the automation system, and in the adjustment and readjustment phases, users “adjust their adapted behaviour depending on their experience of (critical) situations and system limitations” [].
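In experimental practice, a compatibility effect is typically quantified as the difference in mean reaction time (and error rate) between incompatible and compatible stimulus-response mappings. As a minimal sketch, assuming hypothetical HMI trials recorded as (mapping, reaction time in ms, correct), the function below computes both differences. Computing mean reaction times from correct responses only is a common convention, but it is an assumption of this sketch.

```python
from statistics import mean


def compatibility_effect(trials):
    """Return (rt_effect_ms, error_effect) as incompatible minus compatible.

    trials: iterable of (mapping, reaction_time_ms, correct), where mapping is
    "compatible" or "incompatible". Positive values mean the incompatible
    HMI mapping was slower or more error-prone. Each mapping needs at least
    one correct trial, otherwise statistics.mean raises StatisticsError.
    """
    rts = {"compatible": [], "incompatible": []}
    errors = {"compatible": [], "incompatible": []}
    for mapping, rt, correct in trials:
        errors[mapping].append(0 if correct else 1)
        if correct:  # reaction times from correct responses only
            rts[mapping].append(rt)
    rt_effect = mean(rts["incompatible"]) - mean(rts["compatible"])
    error_effect = mean(errors["incompatible"]) - mean(errors["compatible"])
    return rt_effect, error_effect


# Hypothetical trials from one participant
trials = [
    ("compatible", 500, True), ("compatible", 520, True),
    ("compatible", 480, True), ("compatible", 600, False),
    ("incompatible", 620, True), ("incompatible", 640, True),
    ("incompatible", 700, False), ("incompatible", 660, False),
]
rt_effect, error_effect = compatibility_effect(trials)
```

Tracking both effects across repeated sessions would show whether practice shrinks an HMI compatibility penalty or whether it persists into the plateau.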

3.4. Systematisation of LiAD and Multi-Level Long-Term Research

Many scholars argue that conducting long-term studies can be highly expensive and tedious in terms of budget, effort, and timelines [,,]. Ref. [] argued that observing and measuring users over an extended period consumes substantial resources, money and time. It has also been noted that “even if observation could be fully automated (through, for example, the use of event logging), a detailed analysis to study learnability would probably be very exorbitant” []. Ref. [] noted that the major obstacle to evaluating extended learnability is that it traditionally requires expensive and time-consuming techniques for observing users over an extended period of time. Consequently, due to its prohibitive costs, researchers have gradually refrained from performing extended learnability evaluations []. Despite all the difficulties associated with long-term studies, we believe that the evaluation of [e]LiAD is valuable and will produce fruitful and insightful results, as the continuous trend of a learning curve is crucial. Thus, better and more useful long-term research strategies should be considered, as this type of research is important for sustaining safety and predicting behaviour over time.

In addition, “too many studies are still conducted in a laboratory situation using traditional methodological paradigms” []. Thus, “more learnability studies with new methodological approaches in the natural environments are needed [where] the human, learning and non-linear process of learnability are in focus” []. Ref. [] argued that the “learning process is crucial for drivers to gain an appropriate understanding of the system’s functionality as well as system limits and helps to build an appropriate level of trust”. This learning process is presumed to “take some time and requires experience of the system in different situations and different environments” []. Further, it has been noted that “field observations consist of usage data that are collected after the system is deployed and released”; user observation, monitoring of customer support calls, and user feedback are all methods that can be used to monitor usage over a long period of time and to estimate learning curves []. Another approach worth considering is the retrospective reconstruction of personally meaningful experiences from memory. Participant recruitment involves two main phases:

(1) Explorative phase with screening, synthesis, and validation of participants’ UX,

(2) Intervention phase with implementable quantitative and qualitative measures that support study design.

It is important to understand the process of learnability more deeply and to investigate in more detail the key elements that enhance learnability and, conversely, those that cause learnability problems for users []. To this end, based on theoretical models of learnability, “we can develop tools for the commercial user interface world in order to measure and test the learnability process more precisely and better understand how skills are actually learnt and how to click learnability” []. We posit that evaluating [i]LiAD may be an easier task than evaluating [e]LiAD. As Ref. [] states, “one simply picks some users who have not used the system before and measures the time it takes them to reach a specified level of proficiency in using it”. With [e]LiAD, however, evaluation is complex and challenging, as it can require repeated measures (e.g., of performance) over time, raising the daunting question of how long is long enough. To conduct long-term studies on [e]LiAD proficiently, researchers need to track users’ interaction with the system over time, a potentially taxing process. Ref. [] argues that “with a large, enough sample size, and an adequate range of user experience levels studied, this type of study could reveal important information on how user performance progresses over long periods of time”. Such a metric could be used to compare users at different learning phases, giving a sense not only of how performance progresses over time but also of when learned effects occur. This could be significant for understanding what triggers intended or unintended misuse (based on internal and external factors), as well as how, why, and when users learn to misuse an automation system. It is believed that, whether good or bad, nearly all human behaviours are learned.
Thus, long-term studies are important for recognising LiAD and learning/long-term effects across multiple levels: the micro-perspective (short-term), the meso-perspective (mid-term), and the macro-perspective (long-term) (Figure 11). Tracking users’ learning abilities and effects over a long period of time is crucial for determining users’ mental models. Our aim is therefore to interrogate and negotiate this learning process in order to fully discern automation effects through the lens of LiAD.

Figure 11. Multi-level perspective studies.
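
The time-to-proficiency measure quoted above for [i]LiAD can be sketched in a few lines. This is a minimal illustration, assuming that each trial records the user's cumulative exposure to the system and a task completion time, and that "proficiency" means meeting a target time on several consecutive trials; the function name `time_to_proficiency`, the target, and the streak length are hypothetical choices that a real study would need to justify.

```python
from typing import Optional, Sequence


def time_to_proficiency(
    exposure_min: Sequence[float],
    task_time_s: Sequence[float],
    target_s: float,
    streak: int = 3,
) -> Optional[float]:
    """Return cumulative exposure (minutes) at which the user first meets the
    target task time on `streak` consecutive trials, or None if never reached.

    exposure_min[i] is the total time the user had used the system when
    trial i was completed; task_time_s[i] is that trial's completion time.
    The consecutive-trial criterion is an assumption of this sketch.
    """
    if len(exposure_min) != len(task_time_s):
        raise ValueError("sequences must have equal length")
    run = 0  # length of the current run of on-target trials
    for exposure, t in zip(exposure_min, task_time_s):
        run = run + 1 if t <= target_s else 0
        if run >= streak:
            return exposure
    return None
```

For example, with a 30 s target and a streak of three, a user whose times are 40, 28, 33, 29, 27 and 26 s at 5-minute intervals reaches proficiency at 30 minutes of exposure. For [e]LiAD, the same check could be repeated at each measurement wave rather than computed once.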

Ref. [] highlights extrapolation analysis, in which short-term performance is used to infer long-term performance. It is also important to address how users approach ‘easy’ learning, ‘perceived ease-of-use’, ‘perceived usefulness’, ‘perceived ease-of-learning’, and ‘perceived ease of misuse’. Because learning is an ongoing process, users remain learners throughout their entire use of the system; as a result, new thinking, knowledge, attitudes, and behaviours are formed, and these must be considered. Researchers should further examine which user behaviour attributes and learning effects/curves are evoked (e.g., over-use, misuse, disuse, trust). UX should be fully centred in research developments; a critical view of this phenomenon beyond its theorisation, including the nature of its effects, should therefore be explored. To support this framework’s arguments, it is important to normalise long-term research and [e]LiAD, because without an appropriate view of long-term research, it is impossible to build a body of transformative and informative knowledge relating to LiAD. This paper is therefore dedicated to the naturalisation of [e]LiAD.
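
The text does not specify which model underlies the extrapolation from short-term to long-term performance. One common choice in the skill-acquisition literature is the power law of practice, T(N) = a·N^(-b), where T is task time on trial N. The sketch below assumes that model, fits a and b by ordinary least squares on log-transformed data, and predicts performance at a later trial; the function names are hypothetical and the whole example is illustrative only.

```python
import math
from typing import Sequence


def fit_power_law(trials: Sequence[int], times: Sequence[float]) -> tuple[float, float]:
    """Fit T(N) = a * N**(-b) by least squares on log T versus log N.

    Returns (a, b). Assumes trial numbers start at 1, times are positive,
    and at least two distinct trial numbers are given.
    """
    xs = [math.log(n) for n in trials]
    ys = [math.log(t) for t in times]
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx                   # slope of log T on log N equals -b
    intercept = y_bar - slope * x_bar   # intercept equals log a
    return math.exp(intercept), -slope


def extrapolate(a: float, b: float, trial: int) -> float:
    """Predicted task time at a later trial under the fitted power law."""
    return a * trial ** (-b)
```

Such extrapolation assumes a smooth, monotonic learning curve; it cannot anticipate the non-linear effects discussed above, such as the emergence of misuse or disuse, which is one reason long-term observation remains necessary.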

4. Conclusions

We framed the discussion around the different factors that influence LiAD in order to familiarise the reader with the concept. This paper may help with the prognostication or extrapolation of future LiAD studies. The framework discussion provides an opportunity to add to knowledge discovery on learning/long-term effects and LiAD. To do this effectively across the broad spectrum of automated driving research, it is important to define a set of core concepts that can inform and guide the research strategy. We have introduced the Learnability Engineering Life Cycle and CALE to support a better understanding of LiAD research. Our intent for this framework is to encourage researchers, developers, and all relevant stakeholders in automated driving to engage in more LiAD research. In addition, we have proposed several fundamental strategies that can guide study approaches. Conducting rigorous empirical work on LiAD in all its forms, and actively engaging with stakeholders across the diverse landscape of human factors and ergonomics engineering, will be fundamental to reforming knowledge. Developing an empirically grounded and theoretically informed approach to LiAD may make design trade-off decisions more expedient and thereby enhance safe HAI and QAD. In conclusion, we hope the reader has gained a conceptual understanding of LiAD and that scholars are encouraged to open discussions on this type of research.

Author Contributions

Conceptualisation, N.Y.M.; methodology, N.Y.M.; software, N.Y.M.; validation, N.Y.M.; formal analysis, N.Y.M.; investigation, N.Y.M.; resources, N.Y.M. and K.B.; data curation, N.Y.M.; writing—original draft preparation, N.Y.M.; writing—review and editing, N.Y.M.; visualisation, N.Y.M.; supervision, K.B.; project administration, K.B.; funding acquisition, K.B. All authors have read and agreed to the published version of the manuscript.

Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No. 860410.

Data Availability Statement

No new data were created or analysed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript in non-alphabetical order:

| Abbreviation | Definition |
|---|---|
| ACC | Adaptive Cruise Control |
| ADS | Automated Driving System |
| AI | Artificially Intelligent |
| BAC | Behaviour Adaptability and/or Changeability |
| CALE | Concepts for Applying Learnability Engineering |
| ESM | Experience Sampling Methods |
| HAI | Human-Automation Interaction |
| HAC | Human-Automation Compatibility |
| HCI | Human–Computer Interaction |
| HMI | Human–Machine Interface |
| LiAD | Learnability in Automated Driving |
| OEM | Original Equipment Manufacturer |
| PUMS | Programmable User Models |
| QAD | Quality Automated Driving |
| QinU | Quality in Usability |
| QinL | Quality in Learnability |
| UX | User Experience |
| ISO | International Organization for Standardization |
| [i]LiAD | Initial Learnability in Automated Driving (initial LiAD) |
| [e]LiAD | Extended Learnability in Automated Driving (extended LiAD) |
| S-RC | Stimulus-Response Compatibility |

References

- SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles (J3016_202104); SAE International: Warrendale, PA, USA, 2021.

- Kyriakidis, M.; de Winter, J.C.F.; Stanton, N.; Bellet, T.; van Arem, B.; Brookhuis, K.; Martens, M.H.; Bengler, K.; Andersson, J.; Merat, N.; et al. A human factors perspective on automated driving. Theor. Issues Ergon. Sci. 2019, 20, 223–249.

- Körber, M.; Baseler, E.; Bengler, K. Introduction matters: Manipulating trust in automation and reliance in automated driving. Appl. Ergon. 2018, 66, 18–31.

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

- Mbelekani, N.Y.; Bengler, K. Learning Design Strategies for Optimizing User Behaviour towards Automation: Architecting Quality Interactions from Concept to Prototype. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 23–28 July 2023; Springer Nature: Cham, Switzerland, 2023; pp. 90–111.

- Heimgärtner, R. Human Factors of ISO 9241-110 in the Intercultural Context. Adv. Ergon. Des. Usability Spec. Popul. Part 2014, 3, 18.

- Rudin-Brown, C.M.; Parker, H.A.; Malisia, A.R. Behavioral adaptation to adaptive cruise control. In Proceedings of the Human Factors and Ergonomics Society 47th Annual Meeting, Denver, CO, USA, 13–17 October 2003; SAGE Publications: Los Angeles, CA, USA, 2003; Volume 47, pp. 1850–1854.

- Hoffman, R.R.; Marx, M.; Amin, R.; McDermott, P.L. Measurement for evaluating the learnability and resilience of methods of cognitive work. Theor. Issues Ergon. Sci. 2010, 11, 561–575.

- Butler, K.A. Connecting theory and practice: A case study of achieving usability goals. ACM SIGCHI Bull. 1985, 16, 85–88.

- Albert, B.; Tullis, T. Measuring the User Experience: Collecting, Analyzing, and Presenting UX Metrics; Morgan Kaufmann: Burlington, MA, USA, 2022.

- Oliver, M. Learning technology: Theorising the tools we study. Br. J. Educ. Technol. 2013, 44, 31–43.

- Joyce, A. How to Measure Learnability of a User Interface; Nielsen Norman Group: Dover, DE, USA, 2019.

- Grossman, T.; Fitzmaurice, G.; Attar, R. A survey of software learnability: Metrics, methodologies and guidelines. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 649–658.

- Stickel, C.; Fink, J.; Holzinger, A. Enhancing universal access–EEG based learnability assessment. In Universal Access in Human-Computer Interaction. Applications and Services: 4th International Conference on Universal Access in Human-Computer Interaction, UAHCI 2007 Held as Part of HCI International 2007, Beijing, China, 22–27 July 2007; Proceedings, Part III 4; Springer: Berlin/Heidelberg, Germany, 2007; pp. 813–822.

- Haramundanis, K. Learnability in information design. In Proceedings of the 19th Annual International Conference on Computer Documentation, Santa Fe, NM, USA, 21–24 October 2001; pp. 7–11.

- Howes, A.; Young, R.M. Predicting the Learnability of Task-Action Mappings. The Soar Papers (Vol. II): Research on Integrated Intelligence; MIT Press: Cambridge, MA, USA, 1993; pp. 1204–1209.

- Burton, J.K.; Moore, D.M.M.; Magliaro, S.G. Behaviorism and instructional technology. In Handbook of Research for Educational Communications and Technology, 2nd ed.; Jonassen, D.H., Ed.; Lawrence Erlbaum Associates, Publishers: London, UK, 2004; pp. 46–73.

- Nielsen, J. Usability Engineering; Academic Press: Boston, MA, USA, 1994.

- Holzinger, A. Usability engineering methods for software developers. Commun. ACM 2005, 48, 71–74.

- Carroll, J.M.; Carrithers, C. Training wheels in a user interface. Commun. ACM 1984, 27, 800–806.

- Bevan, N.; Macleod, M. Usability measurement in context. Behav. Inf. Technol. 1994, 13, 132–145.

- Michelsen, C.D.; Dominick, W.D.; Urban, J.E. A methodology for the objective evaluation of the user/system interfaces of the MADAM system using software engineering principles. In Proceedings of the 18th Annual Southeast Regional Conference, Tallahassee, FL, USA, 24–26 March 1980; pp. 103–109.

- Abran, A.; Khelifi, A.; Suryn, W.; Seffah, A. Usability meanings and interpretations in ISO standards. Softw. Qual. J. 2003, 11, 325–338.

- Nielsen, M.B.; Winkler, B.; Colonel, L. Addressing Future Technology Challenges through Innovation and Investment; Air University: Islamabad, Pakistan, 2012.

- Marrella, A.; Catarci, T. Measuring the learnability of interactive systems using a Petri Net based approach. In Proceedings of the 2018 Designing Interactive Systems Conference, Hong Kong, China, 9–13 June 2018; pp. 1309–1319.