Abstract

This paper describes a real-time fatigue sensing and enhanced feedback system designed for video terminal operating groups. This paper analyzes the advantages and disadvantages of various current acquisition devices and various algorithms for fatigue perception. After comparison, this study uses an eye movement instrument to collect user PERCLOS, and then calculates and determines the user’s fatigue state. A detailed fatigue discrimination calculation method is provided in this paper. The fatigue level is divided into three levels: mild fatigue, moderate fatigue and severe fatigue. Finally, this study uses the fatigue method demonstrated above to achieve real-time discrimination of the fatigue level of the user in front of the video operation terminal. This paper elaborates a method for waking up users and enhancing feedback based on their fatigue level and the importance of information. This study provides a solution for avoiding the operational risks caused by fatigue and lays the foundation for the machine to sense the user and provide different service solutions based on the user’s status.

1. Introduction

With the continuous development of information technology, more and more work must be done at video display terminals. Long-term work in front of a video display terminal has a negative impact on the visual, physical and psychological condition of the operator [1]. It imposes visual load, brain load and even cognitive load on the operator. These loads reduce the operator’s work efficiency and work quality, and may even cause misoperation, resulting in irreparable faults, particularly in special industries such as flight control [2,3], instrument control, early warning monitoring and other core command and control positions. We analyzed the working environment characteristics of video terminal operators, such as staff at disaster detection centers and intelligence analysts. Because of the nature of the work, the working hours are long and the workload is high, so these positions are prone to fatigue. At the same time, such positions require operators to be highly alert at all times. Fatigue may cause operators to miss key information, leading to work errors that may carry significant risks.

The earliest research on fatigue detection can be traced back to the 1960s. In the 1980s, the U.S. Congress authorized the Department of Transportation to study the relationship between commercial motor vehicle driving and traffic safety [4], and substantial research work was carried out. In the 1990s, great progress was made in fatigue detection for driving, and many countries, including the United States, Japan, the United Kingdom and Australia, began to research and develop vehicle-mounted electronic measurement devices [5].

In China, Liao Jianqiao et al. [6] proposed in 1995 that human brain load should be considered when designing the system. He proposed to measure and calculate the brain load of the operators using a subjective measurement method, a main task measurement method, an auxiliary task measurement method, a physiological measurement method, etc. In 2003, Peng Xiaowu et al. [7] applied subjective and objective measurement methods to evaluate the brain load in VDT operations. At the same time, he explored the application of heart rate variability in the evaluation of mental workload. The main methods currently used to detect fatigue are PERCLOS, pupil diameter measurement, blink frequency measurement, maximum eye closure time measurement, head posture measurement, heart rate variability, pulse monitoring, etc. The PERCLOS algorithm was first proposed by Carnegie Mellon. In 2016, Xiang-Yu Gao et al. used the PERCLOS algorithm to label the fatigue of drivers [8]. In 2020, Yu Chen, Jintao Li and Xu Junli et al. used the PERCLOS algorithm to identify whether a driver is in a fatigue state and designed a corresponding early warning system [9]. In 2011, Qin Wei et al. analyzed the sensitivity of pupil diameter change corresponding to fatigue change during driver driving [10]. In 2017, Yan Ronghui proposed a method for applying pupil diameter measurement to the fatigue level of south-side railroad drivers [11]. In 2011, Fu Chuanyun experimentally concluded that there is a significant difference in the average blink time under normal and fatigue driving of the same driver, and that the average blink time of different drivers under the same fatigue state has a good cross-population consistency. It also highlights the corresponding method of using blink frequency to measure driver fatigue or not. Meanwhile, the applicability of the heart rate variability (HRV) method for driver fatigue detection was analyzed by Fu Chuanyun [12]. 
In 2019, Cao Yucong and Wu Jianyu proposed an algorithm that incorporates head features into a multimodal fusion method for driver fatigue detection [13]. In 2021, Feng Yuhang, Ye Hui et al. conducted further research and analysis on fatigue detection and early warning based on head multi-pose fusion recognition [14].

Most of the previous studies on fatigue detection have been conducted for drivers. There has been no detailed investigation of fatigue detection for video terminal operators. In recent years, more and more research has been conducted in the field of fatigue detection for aviation controllers, pilots and other personnel in important positions. Jin Huibin et al. used KSS as an experimental calibration to test the correlation between CFF and PERCLOS indexes and controller fatigue, and found that both were significantly correlated with fatigue and showed higher sensitivity during the confederation [15]. After that, Jin Huibin et al. proposed a joint eye movement index model to characterize fatigue using six eye movement indexes such as sweep speed, blink frequency and PERCLOS value [16]. A subset of scholars have used EEG to study cognitive load on the brain. This also has some reference value for the study in this paper. Qi Peng, Ru Hua et al. used EEG techniques to explore the relationship between mental fatigue and functional reorganization between brain regions [17]. Shuo Yang, Zengxin Wang et al. used event-related potential techniques to test the effects of mental fatigue on the allocation of information resources to working memory functions [18]. Compared with the fatigue monitoring of drivers, the monitoring environment of video terminal operators is different. The state of the monitored person is different. The time of monitoring is different. Therefore, the fatigue monitoring of video terminal operators cannot be directly adopted from driver fatigue detection. When operator fatigue occurs, information omission and false responses are significantly increased. The current computer system lacks fatigue sensing and processing for video terminal operators, and cannot guarantee the effective communication of important information. 
This problem is becoming more serious as the amount of information handled by video terminal operators increases, and it needs to be addressed. Divjak M., Bischof H. et al. in 2009 designed a device that uses blink frequency to determine the user’s fatigue status. This device can be used to prevent computer vision syndrome [19]. This has informed much of the research in this paper.

Sensing the user’s state by monitoring the user’s fatigue is a part of context awareness. Contextual perception includes environment perception, user perception and event perception. User perception includes state perception, emotion perception, user portrait and other parts. Sun Shouqian et al. [20] point out that we are now entering the design 3.0 era, which is dominated by innovative design. This places greater demands on the human–machine interaction level. The research in this paper also falls into this area. Ming Dong et al. [21] point out that dynamic human–machine task assignment based on brain load can significantly improve operational performance in a simulated complex human–machine interaction task environment. Adaptive automation technology based on brain load can improve human–machine collaboration performance in precision control such as flight control and UAV manipulation [22]. In addition, this technology can also improve the subjective load perception of humans. Wang Zenglei et al. [23] proposed a multichannel interaction method based on eye-tracking and gesture recognition. The target objects are pre-selected using gaze line detection and gaze point detection. The confirmation of the candidate target object and related operations is completed by gesture recognition. This is also important progress in the era of the new generation of “Internet+” intelligent interaction. Monitoring user status with cameras, eye movement, face and other sensing devices and providing interaction services adaptively lays the technical foundation for natural and humanized intelligent interaction. Wang Peng et al. [24] designed a smart home controller based on eye-tracking technology. The controller enables users to switch home appliances on and off by blinking, achieving eye-tracking control of home appliances. Gu Yulun et al. [25] used AI eye-tracking technology to design a smart home service solution for the elderly, including telephone contact with children, medication reminders and intelligent switch control.

In this paper, we build a fatigue detection environment for video terminal operators using an eye-tracking device for the characteristics of the working environment of video terminal operators. The fatigue of the operator is determined by the captured eye movement indicators. Experiments were performed to obtain the fatigue threshold of the operator. Finally, combined with the user cognitive attention model, we designed a fatigue monitoring and enhanced feedback system for video terminal operators. Therefore, the importance and originality of this paper are that it researches real-time fatigue detection methods for video terminal operations and enhances the design of information display elements for fatigued personnel to guarantee the accessibility of information.

2. Materials and Methods

2.1. Real-Time Fatigue Detection Equipment

According to different fatigue detection principles, devices for detecting fatigue include eye-tracking devices, EEG acquisition devices, cameras, heart rate acquisition devices, etc. Through comparative analysis, the acquisition methods, cost and accuracy of these devices were compared as shown in Table 1. This study hoped to find out whether the operator is fatigued in time through real-time fatigue detection of the operator in front of the video terminal. The EEG acquisition device and heart rate acquisition device need to allow the subject to wear the relevant acquisition equipment to collect data, which will cause certain restrictions to and interference with the subject’s range of activities and work status. The camera can collect facial expressions, images around the eyes, mouth images and other image data, and use the image recognition method to determine whether the operator is in a state of fatigue. However, its stability and recognition rate are low compared to those of eye-tracking devices. Therefore, the fatigue detection device used in this study was an eye-tracking device.

Table 1.

Collection device comparison analysis table.

In this study, the aSee A3 model eye-tracking device developed by 7invensun was used. The size of the product is 310 × 23 × 22 mm, the sampling rate is 60 Hz, the distance between the subject and the screen is 45–70 cm, the head movement range is 25 × 22 × 68 cm and the measurement accuracy is 0.5°. The latency is 17 ms (60 Hz). Eye tracking was conducted using the corneal reflex method, using a 3D model of the human eye based on a gaze point tracking algorithm. The device can support a screen size of 19 inches and uses a USB 3.0 interface connection. It is compatible with Windows 7/8/10 and other operating systems. The hardware environment built in this study is shown in Figure 1.

Figure 1.

Real-time fatigue detection hardware environment schematic.

2.2. Method and Index Analysis of Eye Movement Fatigue Monitoring

When fatigue occurs in the human body, the changes in the human eyes are more obvious. For example, the blink frequency is significantly reduced, and the area of the closed curve enclosed by the upper and lower eyelid contours is significantly smaller. There are four main types of eye movement indicators that can be used to monitor the user’s fatigue: eye closure time, pupil diameter, eyelid closure PERCLOS, and blink numbers and frequency [26].

(1) Eye closure time

Eye closure time is the duration of eye closure. The length of eye closure time is closely related to the degree of fatigue. Studies have shown that the duration of eye closure is prolonged after fatigue. When the eye closure time reached 3 s/min, the subjects showed mild fatigue. When the eye closure time exceeded 3.5 s/min, the subjects showed significant fatigue.

(2) Pupil diameter

The pupil, the small round hole in the center of the iris in the human eye, is the channel through which light enters the eye. The contraction of the pupillary sphincter on the iris causes the pupil to narrow, and the contraction of the pupil-opening muscle causes the pupil to dilate. The pupil is generally 2 to 5 mm in diameter, with an average of about 4 mm. Studies have shown that the pupil diameter gradually decreases as the workload increases and the person becomes more fatigued [27]. Pupil diameter can also be an effective indicator to detect driver psychological fatigue. As driver fatigue increases, the pupil diameter of the driver continues to decrease. At the same time, experiments have demonstrated that pupil diameter detection of fatigue has cross-population consistency [28].

(3) PERCLOS

PERCLOS (Percent Eye Closure) is the percentage of time during which the eye is closed for a given unit of time. It is considered one of the most effective indicators for fatigue monitoring. Unlike other pupil-based eye metrics, eyelid closure is concerned with eyelid activity and its acquisition is mainly performed through image recognition. The general method is that the camera captures the user’s facial image, then acquires the eye image based on image processing methods, and after specific image analysis or pattern recognition methods, determines whether the eye is in a closed state, and finally performs mathematical operations to obtain the percentage of eye closure time.

(4) Blink count and blink rate

Normally, human eyes blink 10 to 15 times per minute, and each blink lasts about 0.2 s, with some differences between men and women. When a person becomes fatigued, one of two things happens: blinking unconsciously increases to protect the eyes, or the eyes become sluggish and vision blurs, and the number of blinks changes. The increase or decrease in the number of blinks relative to the alert state can reflect the degree of fatigue. Studies have shown that a person normally blinks 10 to 20 times per minute and blinks 20 to 40 times per minute in a fatigued state, which means that the number of blinks in a fatigued state is increased by 40.0% and the blink frequency is increased by 50.0%.

All of the above 4 types of eye movement metrics can be captured by the aSee A3 model eye-tracking device developed by 7invensun. However, each metric has certain drawbacks and limitations. In the early stage of fatigue, there is no significant prolongation of eye closure duration. This means that the eye closure duration is not effective in detecting early mild fatigue. In addition to being correlated with fatigue, pupil size is highly susceptible to interference from factors such as light intensity, emotion, and cognitive load [29]. In practice, the accuracy of pupil diameter in detecting fatigue is low. Blink frequency and a prolonged duration of eye closure usually coincide; thus, the same problem of not easily detecting fatigue at early stages exists. Moreover, in experiments, it was found that the blink frequency changes with the driver’s fatigue level in an insignificant trend, showing a certain randomness. PERCLOS is a fatigue detection method that is widely used in current research, and its effectiveness and real-time performance have been proven by a large number of experiments and studies. The accuracy of PERCLOS decreases significantly at low illumination levels. Furthermore, PERCLOS has a certain time delay [8,9]. In a comprehensive comparison, the operating environment of the video terminal operator can be controlled, and the environmental illumination can be adjusted and controlled. The time delay in the calculation can be controlled in the range of 500–1000 ms, which is within an acceptable range. Therefore, the index selected for the real-time monitoring of user fatigue in this study is PERCLOS.

We used the aSee A3 model eye-tracking device developed by 7invensun to capture images of the user’s eye, as shown schematically in Figure 2. As the user gradually moves from an energetic state to a fatigued state, the degree of eyelid closure changes accordingly.

Figure 2.

Schematic diagram of eyelid closure changes.

The eyelid closure $f$ is obtained by dividing the length of time judged as eye closure within a unit of time, $t$, by the length of that unit of time, $T$, and multiplying by 100%, as shown in Equation (1).

$$f = \frac{t}{T} \times 100\% \tag{1}$$

For the discrimination of eyelid closure, the current common method is to identify eyelid closure (reduction in the distance between the upper and the lower eyelids) above 80% as closed eyes, i.e., the P80 criterion, which is considered to best reflect the degree of human fatigue. In a 1998 National Highway Safety Administration study, researchers conducted a comparative study of the correlation between eyelid closure and mental fatigue as determined based on several guidelines. They found that P80 had the highest intrinsic correlation with subject fatigue and that it was a robust criterion for assessing eyelid closure.

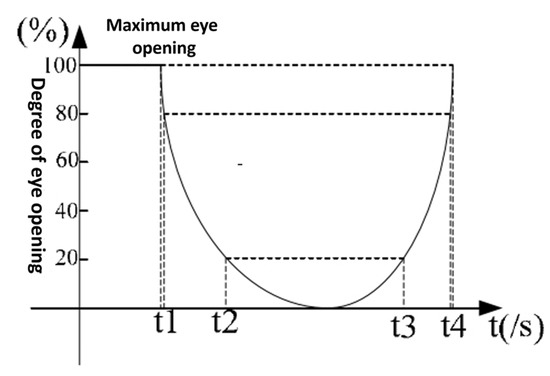

The principle of the PERCLOS calculation is shown in Figure 3 [29]. The curve in the figure describes a complete cycle of the eye from maximum opening to closure and back to maximum opening, with the horizontal coordinate indicating time and the vertical coordinate indicating the degree of eye opening. In the figure, $t_1$ is the time taken from maximum eye opening to 20% closure, $t_2$ is the time taken from maximum eye opening to 80% closure, $t_3$ is the time taken from the initial maximum opening, through complete closure, until the eye has reopened to 20%, and $t_4$ is the time taken from the initial starting position until the eye has reopened to 80%. The PERCLOS value can be calculated from these four times, and the formula is shown in Equation (2). With this method, the monitored person is generally considered to be in a fatigue state when the PERCLOS value is greater than 0.2.

Figure 3.

Principle diagram of PERCLOS calculation.

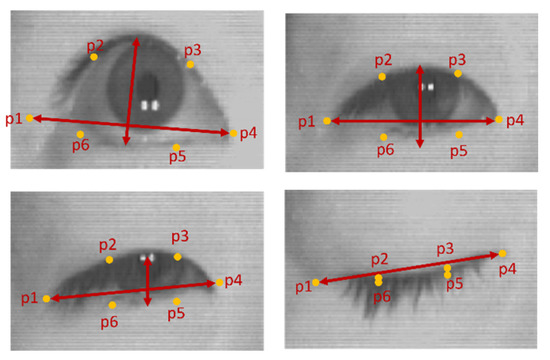
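As a hedged restatement, assuming the conventional P80 form of PERCLOS and labelling the four time points of Figure 3 in order as $t_1$ (eye 20% closed), $t_2$ (eye 80% closed), $t_3$ (eye reopened to 20%) and $t_4$ (eye reopened to 80%), the calculation referred to as Equation (2) is commonly written as:

$$\mathrm{PERCLOS} = \frac{t_3 - t_2}{t_4 - t_1} \times 100\%$$

The numerator is the time during which the eye is at least 80% closed, and the denominator is the time during which it is at least 20% closed. The subscripts are assumed labels chosen for this restatement rather than values read from the figure.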

When a camera is used for real-time monitoring, it is difficult to determine exactly when the eyes are fully open or fully closed, so the time points required by Equation (2) cannot be determined reliably, which makes it difficult to calculate the PERCLOS value accurately. Therefore, a further measure, the eye aspect ratio (EAR), is introduced here to determine the degree of eye closure, as shown in Figure 4, which illustrates a single blink process.

Figure 4.

Eye opening and closing state monitoring.

The eye aspect ratio (EAR) is calculated with the following formula:

$$\mathrm{EAR} = \frac{\lVert P_2 - P_6 \rVert + \lVert P_3 - P_5 \rVert}{2\,\lVert P_1 - P_4 \rVert} \tag{3}$$

The points $P_1, P_2, \ldots, P_6$ are positioned as shown in the figure. When the EAR is less than 0.2, the eye is recognized as closed. If the acquisition system uses a camera that captures images at 60 frames/second, calculating the PERCLOS per second only requires the number of frames in which the eye is closed during that second, as in Equation (4).

$$\mathrm{PERCLOS} = \frac{\mathrm{num(close)}}{60} \times 100\% \tag{4}$$

In Equation (4), 60 is the total number of image frames acquired per second, and num(close) is the number of frames in which the eye is closed in that second. If the value of PERCLOS over a period of time is required, Equation (5) is used.

$$\mathrm{PERCLOS} = \frac{\mathrm{num(close)}}{n \times 60} \times 100\% \tag{5}$$

In Equation (5), $n \times 60$ is the total number of image frames acquired in $n$ seconds, and num(close) is the number of image frames in which the eye is closed in those $n$ seconds. If the resulting PERCLOS value is greater than 0.2 (that is, 20%), the person being tested is considered to be in a fatigue state.
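The frame-based calculation can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ implementation: it assumes the widely used six-landmark form of the eye aspect ratio (corner points P1 and P4, upper-lid points P2 and P3, lower-lid points P6 and P5), the closed-eye threshold of 0.2 stated in the text, and hypothetical function names. PERCLOS is returned as a fraction so that it can be compared directly with the 0.2 fatigue threshold.

```python
import math

EAR_CLOSED_THRESHOLD = 0.2  # below this, a frame counts as "eye closed"


def eye_aspect_ratio(points):
    """Return the EAR for six (x, y) landmarks ordered P1..P6.

    Assumed layout: P1 and P4 are the eye corners, P2 and P3 lie on the
    upper lid, P6 and P5 lie on the lower lid beneath P2 and P3.
    """
    p1, p2, p3, p4, p5, p6 = points
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal


def perclos(ear_values, closed_threshold=EAR_CLOSED_THRESHOLD):
    """Return the fraction of frames in which the eye is closed.

    ear_values holds one EAR per captured frame, e.g. 60 values for one
    second at 60 frames/second, or n * 60 values for n seconds.
    """
    if not ear_values:
        raise ValueError("at least one frame is required")
    closed = sum(1 for ear in ear_values if ear < closed_threshold)
    return closed / len(ear_values)


# Hypothetical landmark sets for an open eye and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

# One second of frames at 60 frames/second, 15 of them with the eye closed.
one_second = [eye_aspect_ratio(closed_eye)] * 15 + [eye_aspect_ratio(open_eye)] * 45
is_fatigued = perclos(one_second) > 0.2
```

Counting frames in this way avoids having to locate the exact moments of full opening and full closure, which is the difficulty the eye aspect ratio is meant to sidestep.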

2.3. Real-Time Fatigue Sensing System

In this study, the aSee A3 model eye-tracking device, which captures eye images in real time and returns eye movement index data, was added to the normal hardware setup used by operators of video screen terminals. The data from the device were used to calculate the PERCLOS value with Equation (5) above. When PERCLOS > 0.2, the tested person was judged to be in a fatigue state.

When the operator appeared fatigued, we started timing. The moment at which fatigue emerged was recorded as $t_{f,1}$. When PERCLOS < 0.2, the operator had returned to the normal state, and this non-fatigue emergence moment was recorded as $t_{w,1}$. In this way, the time period during which fatigue was present was determined as $T_{f,1}$, as shown in Equation (6):

$$T_{f,1} = t_{w,1} - t_{f,1} \tag{6}$$

When fatigue reappeared, the moment was recorded as $t_{f,2}$. In this way, the waking time period after the onset of fatigue was $T_{w,1}$, as shown in Equation (7):

$$T_{w,1} = t_{f,2} - t_{w,1} \tag{7}$$

Thus, we acquired the fatigue time periods as $\{T_{f,1}, T_{f,2}, T_{f,3}, \ldots, T_{f,n},\ n > 0\}$.

We collected the non-fatigue time periods as $\{T_{w,1}, T_{w,2}, T_{w,3}, \ldots, T_{w,n},\ n > 0\}$.

When an awake time period $T_w$ was greater than 1 min, we considered that the operator had returned to the awake state and stopped recording the fatigue time periods. When fatigue occurred again, the recording was restarted.
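The timing rules above can be sketched as a small state machine over a stream of PERCLOS readings. This is an illustrative sketch only: the function name, the sample format and the dictionary keys are hypothetical, the fatigue threshold of 0.2 is the one given with Equation (2), and the fatigue percentage is assumed here to be total fatigue time divided by total fatigue plus intervening wake time, which the text does not spell out.

```python
FATIGUE_THRESHOLD = 0.2  # PERCLOS above this counts as fatigued
WAKE_RESET_S = 60.0      # an awake period longer than 1 min ends the recording


def fatigue_episodes(samples):
    """Group (time_s, perclos) samples, in time order, into fatigue episodes.

    Each episode lists its fatigue periods, the wake periods between them,
    and an assumed fatigue percentage "pf" (as a fraction between 0 and 1).
    """
    episodes = []
    current = None    # the episode being recorded, or None
    fatigued = False  # state at the previous sample
    mark = None       # time of the most recent state change
    last_t = None

    def close(episode):
        total_fatigue = sum(episode["fatigue"])
        total = total_fatigue + sum(episode["wake"])
        episode["pf"] = total_fatigue / total if total > 0 else 0.0
        episodes.append(episode)

    for t, value in samples:
        last_t = t
        now_fatigued = value > FATIGUE_THRESHOLD
        if now_fatigued and not fatigued:
            # Fatigue (re)appears: either continue the episode or start a new one.
            if current is not None and t - mark > WAKE_RESET_S:
                close(current)
                current = None
            if current is None:
                current = {"fatigue": [], "wake": []}
            else:
                current["wake"].append(t - mark)
            mark = t
        elif fatigued and not now_fatigued:
            # Operator returns to the normal state: one fatigue period ends.
            current["fatigue"].append(t - mark)
            mark = t
        elif not now_fatigued and current is not None and t - mark > WAKE_RESET_S:
            # Awake for more than 1 min: stop recording this episode.
            close(current)
            current = None
        fatigued = now_fatigued

    if current is not None:
        if fatigued:
            current["fatigue"].append(last_t - mark)
        close(current)
    return episodes


# Hypothetical one-sample-per-second stream: fatigued during 10-40 s and 50-70 s.
stream = [(float(t), 0.3 if (10 <= t < 40 or 50 <= t < 70) else 0.1)
          for t in range(0, 141)]
result = fatigue_episodes(stream)
```

In this example the two fatigue periods (30 s and 20 s) and the 10 s wake period between them fall into a single episode, because the wake period is shorter than 1 min.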

In 2010, YANG and LIN et al. defined the waking state, mild fatigue, moderate fatigue and severe fatigue. In the waking state, people sit in a standard physical posture, perform well mentally and show no signs of fatigue. In mild fatigue, people blink faster, start to rub their eyes, occasionally yawn, start to lean back against a table or chair and lose concentration. This causes people to start reacting more slowly and making mistakes. In moderate fatigue, the person’s eyes rotate less, they blink more frequently, they begin to yawn, their upper body movement decreases and they become confused and significantly less focused. At this point people’s reactions become significantly slower and their error rate increases significantly. In severe fatigue, the eyelids close for short periods of time and the eyes barely turn. The head drops, the hands are pressed against the plate and the handling is sluggish. At this point people are particularly slow to react or even unable to respond effectively, and they begin to fall asleep uncontrollably. The correct rate tends toward 0. Therefore, we classified fatigue into three levels: mild fatigue, moderate fatigue and severe fatigue. We defined mild fatigue as occasional distractions with response times that were more than 10% longer than normal response times. Moderate fatigue was defined as frequent distractions, with significantly longer reaction times and a sudden drop in correctness. We defined severe fatigue as the occurrence of napping, the inability to complete any task or the operator going offline.

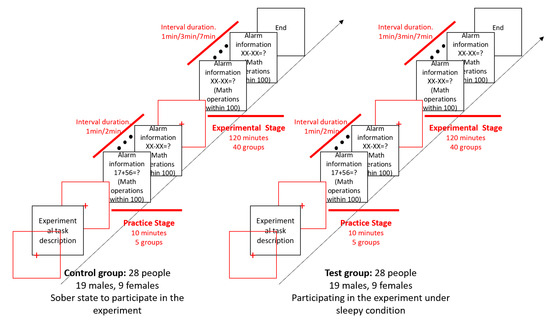

2.4. Fatigue Level Threshold Experiment

Twenty-eight workers, 19 males and 9 females, were selected to participate in the experiment. These 28 subjects were invited to complete a target monitoring and feedback task in front of a video screen terminal. In the experimental procedure, an alarm message was displayed at a random location on the screen and, at the same time, the subjects were asked to solve an arithmetic problem involving numbers up to 100. During the experiment, we extended the interval between test targets and reduced the intensity of the alert message when it appeared. This made the experiment boring and tedious, so that the subjects relaxed easily and quickly entered the fatigue state.

Specific practices included the following. To raise the attention demands on each subject, no tone was played with the alarm and the alarm box did not flash. In this way, the subjects could complete the monitoring task only in the awake state; they would not be awakened by an alarm tone or a flashing message. The interval between tests was not fixed, so that the subjects would enter the fatigue state quickly. The experiment was divided into a practice phase and a test phase. In the practice phase, the interval between alarms and tests was 1 min or 2 min to familiarize the subjects with the experimental procedure quickly. The practice phase lasted 10 min in total. In the test phase, the waiting intervals were 1 min, 3 min and 7 min. The duration of the experiment was 2 h. Spatial line graphs that tended to cause fatigue or sleep were played during the intervals to accelerate the subjects’ fatigue. We set up two conditions, a test group and a control group. The test group consisted of the 28 workers tested after a full night shift. The control group consisted of the 28 workers tested after sufficient sleep. The experimental procedure is shown in Figure 5.

Figure 5.

Experimental flow chart.

The experiments used the same hardware environment built in our study. We used a DELL Precision 3640 tower graphics workstation with a Core i7-10700 CPU and 32 GB of RAM. The aSee A3 model eye-tracking device developed by 7invensun was used. The screen size of the computer used by the subjects was 19 inches.

During the experiment, we used the eye movement equipment to record the fatigue of the users. We recorded each fatigue emergence moment as $t_f$ and each wakefulness emergence moment (non-fatigue emergence moment) as $t_w$ in order to calculate the fatigue percentage $P_f$ of the operator over time in each case.

We recorded the reaction time $T_c$ and the correct rate $C_c$ for the control group, and the reaction time $T_t$ and the correct rate $C_t$ for the test group. In accordance with the classification of fatigue levels above, we defined the corresponding data relationships as shown in Table 2.

Table 2.

Definition of fatigue level.

The subjects entered different fatigue levels gradually as they worked through the test set. That is, a user may have gone from the initial waking state into mild fatigue, then into moderate fatigue, and finally into severe fatigue. Because the subjects differed in constitution and state, some subjects may have reached only the mild or the moderate fatigue state, or even remained awake the entire time. Therefore, in the data analysis, we segmented the data from the 40 tests with different intervals in the experimental phase, with every 8 tests forming one time segment. Thus, each subject had 5 time segments of data available for analysis. The total number of test-group segments was 5 × 28, giving 140 sets of data.

The 140 sets of data were classified according to the definition of fatigue level. The mean reaction time of the control group was recorded as $T_c$, and the mean correct rate was recorded as $C_c$.

We refer to the definition of fatigue levels proposed by YANG and LIN et al. [30] in 2010. We classified the state of the person into 4 levels: normal state, mild fatigue, moderate fatigue and severe fatigue. In the normal state, the person’s cognitive ability to act is normal. In mild fatigue, the person shows a lack of concentration and a slight decrease in cognitive ability. In moderate fatigue, the person shows a significant lack of concentration and a decrease in cognitive ability. In severe fatigue, the person shows disorientation, slow reaction time and a significant decrease in or loss of cognitive behavioral ability.

In 2012, Wang Lei et al. [31] experimentally tested the correct rate in a light-spot counting test under different fatigue levels. The experiment showed that as fatigue increased, the correct rate of the subject’s light-spot test showed a decreasing trend. When the fatigue was mild, the correct rate was about 90% of the normal. In moderate fatigue, the correct rate was less than 85% of the normal. In severe fatigue, the correct rate was below 70% of the normal. In 2016, Fan et al. [32] found experimentally that as the task time increased, the subjects became increasingly fatigued and the reaction time changed significantly, following a linear trend. Correct rates decreased progressively as fatigue deepened, but the differences were not significant.

The real-time operator fatigue monitoring and enhanced feedback system designed for this study is intended for use by operators of intelligence surveillance systems. This type of work is characterized by long working hours and a high repetition of work. At the same time, there is a need for an immediate and correct response in case of emergency. Taking into account the needs of this type of work scenario and the results of the literature analysis, we defined the relationship between response time and correctness data for the different fatigue levels for the subsequent tests.

Test-group segments with a reaction time satisfying $110\%\,T_c < T_t < 200\%\,T_c$, or a correct rate satisfying $70\%\,C_c < C_t < 90\%\,C_c$, were judged as mildly fatigued. Segments with $200\%\,T_c < T_t < 500\%\,T_c$, or $50\%\,C_c < C_t < 70\%\,C_c$, were judged as moderately fatigued. Here $T_t$ and $C_t$ are the reaction time and correct rate of the test group, and $T_c$ and $C_c$ are the control-group means. The rest of the data were all severe fatigue data segments. The results of statistical analysis after classification are shown in Table 3.
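The thresholds can be expressed as a short classification routine. The sketch below is illustrative rather than the authors’ procedure: the function name is hypothetical, a segment with no response is treated as severe, a segment within 110% of the control reaction time and at least 90% of the control correct rate is treated as normal, and when the two indicators disagree the rules are checked in the order mild, then moderate, which is an assumption.

```python
def classify_segment(t_test, c_test, t_ctrl, c_ctrl):
    """Classify one test segment against the control-group means.

    t_test, c_test: reaction time (ms) and correct rate (%) of the segment,
                    or None when the subject did not respond at all.
    t_ctrl, c_ctrl: control-group mean reaction time (ms) and correct rate (%).
    """
    if t_test is None or c_test is None:
        return "severe"  # no response: napping or offline
    time_ratio = t_test / t_ctrl
    correct_ratio = c_test / c_ctrl
    if time_ratio <= 1.10 and correct_ratio >= 0.90:
        return "normal"
    if 1.10 < time_ratio < 2.00 or 0.70 < correct_ratio < 0.90:
        return "mild"
    if 2.00 < time_ratio < 5.00 or 0.50 < correct_ratio < 0.70:
        return "moderate"
    return "severe"


# Control-group means and group averages reported in the text.
CONTROL_TIME_MS, CONTROL_CORRECT = 2078.0, 97.1
mild_example = classify_segment(3315.0, 89.2, CONTROL_TIME_MS, CONTROL_CORRECT)
moderate_example = classify_segment(5724.0, 42.7, CONTROL_TIME_MS, CONTROL_CORRECT)
```

With the reported averages, 3315 ms is about 160% of the control mean and falls in the mild band, while 5724 ms is about 275% of the control mean and falls in the moderate band.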

Table 3.

Statistical results of fatigue class classification experiments.

During the experiment, we recorded the status of the subjects using the eye-tracking device. The control group yielded a total of 140 sets of experimental results. In 27 of these sets, the eye tracker detected that the subject was fatigued (PERCLOS above the fatigue threshold under the P80 criterion), so we excluded these 27 sets from the analysis and performed statistical analyses on the remaining 113 sets. The mean response time $T_c$ for the control group (normal conditions) was 2078 ms and the correct rate $C_c$ was 97.1%. The test group consisted of the 28 subjects taking part in the experiment after a night shift. During the experiment, all 28 subjects experienced fatigue (PERCLOS above the fatigue threshold under the P80 criterion). According to the fatigue rating bands defined above, 17 of the 140 test-group sets had normal status. There were 56 sets that qualified as mildly fatigued. The mean reaction time $T_t$ during mild fatigue was 3315 ms and the correct rate $C_t$ was 89.2%. The fatigue rate $P_f$ during mild fatigue was calculated as between 7.3% and 14.8%. Forty-three sets were found to be moderately fatigued. The mean response time $T_t$ was 5724 ms and the correct rate $C_t$ was 42.7% for moderate fatigue. The fatigue rate $P_f$ was calculated as 22.3–47.5% for subjects with moderate fatigue. There were 24 sets that qualified as severely fatigued. Severely fatigued subjects did not respond to the experimental test because they had dozed off or gone offline. Therefore, the participants’ correct rate and response time could not be calculated and assessed during severe fatigue. The fatigue rate $P_f$ was calculated to be greater than 52.1% during severe fatigue.

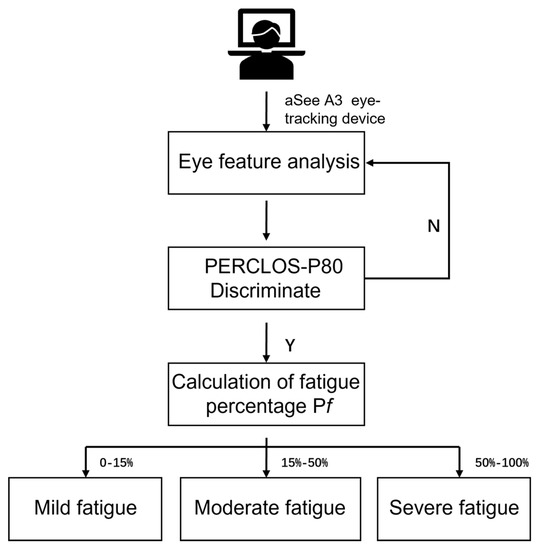

Based on the data obtained from the experiment, and for the convenience of later calculations, the fatigue percentage $P_f$ ranges were set to 0–15%, 15–50% and 50–100% for mild fatigue, moderate fatigue and severe fatigue, respectively.

3. Results

The real-time fatigue sensing and enhanced feedback system studied in this paper is a system that enhances the feedback output of the video screen terminal according to the fatigue level obtained from real-time fatigue monitoring of the operators. Enhanced feedback was divided into two aspects. On the one hand, for each fatigue level, the operators were given corresponding feedback stimulation to help them get out of the fatigue state. At the same time, the status of the operators was treated as part of the system monitoring, and real-time feedback was provided to the higher-level users. This allowed higher-level users to grasp the status of operators in real time and to identify the points of operational risk. On the other hand, for each fatigue level, the information was delivered in different feedback forms according to its level of importance, to ensure the accessibility of important information in any situation.

First, we discriminated the user’s state according to the analysis above. The fatigue state detection process is shown in Figure 6. We used the aSee A3 eye tracker to capture and analyze the eye characteristics of the operator. We calculated the PERCLOS value of the operator and judged whether the operator was fatigued according to the P80 criterion. If no fatigue was detected, we continued to monitor the user’s status in real time. Once the fatigue state appeared, the fatigue percentage over the accumulated time period was recorded until the waking state (T > 1 min). If the fatigue percentage was in the range of 0–15%, the state was judged as mild fatigue. A fatigue percentage in the interval of 15–50% was judged as moderate fatigue. If the fatigue percentage was more than 50%, it was considered severe fatigue.
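The banding rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the fatigue percentage is taken to be the share of samples flagged as fatigued by the P80 criterion, a percentage of exactly 0 is treated as normal, and the boundary values 15% and 50% are assigned to the lower band, none of which is specified in the text.

```python
from typing import Sequence

MILD_MAX = 15.0      # upper bound of the mild band, in percent
MODERATE_MAX = 50.0  # upper bound of the moderate band, in percent


def fatigue_percentage(fatigued_flags: Sequence[bool]) -> float:
    """Share of samples flagged as fatigued, in percent (assumed definition of P)."""
    if not fatigued_flags:
        raise ValueError("at least one sample is required")
    return 100.0 * sum(fatigued_flags) / len(fatigued_flags)


def fatigue_level(p: float) -> str:
    """Map a fatigue percentage P (0-100) to the bands used in this study."""
    if not 0.0 <= p <= 100.0:
        raise ValueError("P must lie in [0, 100]")
    if p == 0.0:
        return "normal"    # assumption: no fatigued samples at all
    if p <= MILD_MAX:      # assumption: 15% itself counts as mild
        return "mild"
    if p <= MODERATE_MAX:  # assumption: 50% itself counts as moderate
        return "moderate"
    return "severe"
```

For instance, an episode in which one of four samples is flagged gives `fatigue_percentage([True, False, False, False])`, which `fatigue_level` places in the moderate band.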

Figure 6.

Flow chart of fatigue level discrimination.

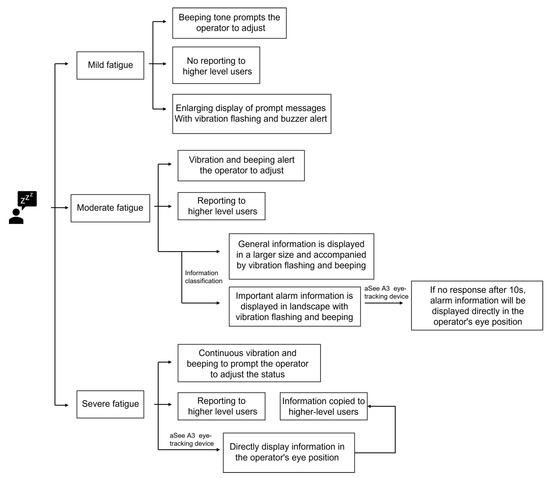

For mildly fatigued workers, we used wake-up cues as the main focus. By means of a beeping sound, the operator was prompted to adjust his or her own state. The situation of mildly fatigued operators did not need to be fed back to the higher-level users. When the prompt message appeared, the information display was enhanced by means of information amplification with vibration, flashing and beeping.

For moderately fatigued workers, we woke them up by both vibration and beeping. At the same time, we reported the moderate fatigue of the operators to the higher-level users so that they could grasp the situation of the operators in time and avoid operational risks. For the information conveyed, we graded the information by importance. General prompt information was displayed by means of enlargement together with vibration and buzzer prompts. If there was no real-time response from the operator after 10 s, the alarm message was displayed in the visual center of the operator. The beeping and vibration did not stop until the warning message was responded to. The operator’s visual center was obtained from the gaze point location captured by the aSee A3 eye tracker.

For severely fatigued operators, we woke up the operators by means of continuous vibration and a beeping tone. At the same time, the situation of the severely fatigued personnel was promptly reported to their superiors, so that the superiors could foresee the risk points in the operation and adjust the personnel deployment plan in time. When alarm information appeared, it was directly amplified and pushed to the severely fatigued operators. Finally, because severely fatigued personnel might not be able to process information properly, important information such as alarm information was also copied to higher-level users synchronously. Through these multidimensional means, the effective pushing of information was ensured.
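The three feedback strategies described above can be summarized as a small lookup table. The following Python sketch is illustrative only: the class, field and function names are hypothetical, the strings are shorthand for the stimuli named in the text, and treating "after 10 s" as "10 s or more without a response" is an assumption.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FeedbackPlan:
    wake_up: Tuple[str, ...]        # stimuli used to rouse the operator
    report_to_supervisor: bool      # whether the fatigue state is reported upward
    message_display: str            # how prompt or alarm information is presented
    copy_alarm_to_supervisor: bool  # whether alarms are mirrored to higher-level users


PLANS = {
    "mild": FeedbackPlan(("beep",), False,
                         "enlarge with vibration, flashing and beeping", False),
    "moderate": FeedbackPlan(("vibration", "beep"), True,
                             "enlarge with vibration and buzzer; escalate if unanswered", False),
    "severe": FeedbackPlan(("continuous vibration", "beep"), True,
                           "amplify and push directly", True),
}

ESCALATION_DELAY_S = 10  # delay before moving the alarm to the gaze point


def plan_for(level: str) -> FeedbackPlan:
    """Return the feedback plan for a fatigue level ('mild', 'moderate', 'severe')."""
    try:
        return PLANS[level]
    except KeyError:
        raise ValueError(f"unknown fatigue level: {level!r}") from None


def should_escalate(level: str, seconds_without_response: float) -> bool:
    """True if the alarm should be redrawn at the operator's visual center."""
    return level == "moderate" and seconds_without_response >= ESCALATION_DELAY_S
```

Keeping the policy in a table rather than in branching code makes it straightforward to review each level against the description in the text.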

The system flow of enhanced feedback is shown in Figure 7.

Figure 7.

Enhanced feedback schematic.

We selected 40 intelligence monitors to participate in the experiment, 32 males and 8 females. These 40 subjects were invited to complete a target surveillance task in front of a video screen terminal, in which they monitored anomalous targets using our experimental system. The duration of the surveillance was 5 h and the number of anomalous targets was 100. The anomalous targets appeared at random times. After finding an anomalous target, the subject clicked the “Report Target” button, selected the color of the anomalous target and clicked the “Send” button. The experiment thus simulated the process of intelligence monitoring. The goal of the experiment was to test whether, in the presence of fatigue, there was a significant difference in the three metrics of correctness, response time and omission rate between users of the system designed in this paper and users of the original system. Since it normally takes a long time to reach the state of fatigue, we asked the subjects to participate in the experiment after 8 h of normal work. The subjects were therefore already tired when they began, which increased the probability that they would become fatigued during the experiment and shortened the overall length of the experiment.
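To make the three metrics concrete, the sketch below computes them from a per-target log. The record layout and the exact definitions are assumptions made for illustration, not taken from the paper: the omission rate is taken as the share of targets never reported, correctness as the share of reports with the correct color, and response time as the mean over reported targets.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Trial:
    """One anomalous target (hypothetical log record)."""
    response_time_ms: Optional[float]  # None if the target was never reported
    color_correct: bool = False        # meaningful only when a report was sent


def summarize(trials: List[Trial]) -> Dict[str, Optional[float]]:
    """Compute correctness, mean response time and omission rate for one subject."""
    if not trials:
        raise ValueError("at least one trial is required")
    reported = [t for t in trials if t.response_time_ms is not None]
    omission_rate = (len(trials) - len(reported)) / len(trials)
    if not reported:
        # Nothing was reported, so the other two metrics are undefined.
        return {"correctness": None, "mean_response_ms": None,
                "omission_rate": omission_rate}
    correctness = sum(t.color_correct for t in reported) / len(reported)
    mean_response_ms = sum(t.response_time_ms for t in reported) / len(reported)
    return {"correctness": correctness, "mean_response_ms": mean_response_ms,
            "omission_rate": omission_rate}
```

Returning `None` when no target was reported mirrors the situation in which correctness and response time cannot be assessed because the subject did not respond at all.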

We divided the subjects into two groups of 20 each. One group used the original intelligence monitoring system; i.e., the real-time fatigue sensing and enhanced feedback system studied in this paper was not used. We refer to it as the control group. The other group used the system studied in this paper. We refer to it as the test group.

As the experiment was conducted after the subjects had worked for 8 h, all 40 subjects reached fatigue to varying degrees during the test. The fatigue levels were categorized according to the method in this paper. In the control group, all 20 subjects experienced fatigue: 9 subjects experienced only mild fatigue, 7 subjects experienced periods of mild and moderate fatigue, and 4 subjects experienced severe fatigue. Across these 20 subjects, wakefulness accounted for 55.8% of the test time, mild fatigue for 10.4%, moderate fatigue for 21.4% and severe fatigue for 12.4%. All 20 subjects in the test group were also fatigued to varying degrees: 11 were only mildly fatigued, 3 were mildly and moderately fatigued and 6 were severely fatigued. Across these 20 subjects, wakefulness accounted for 42.6% of the test time, mild fatigue for 19.4%, moderate fatigue for 20.2% and severe fatigue for 17.8%. The fatigue statuses of the experimental groups are shown in Table 4.

Table 4.

Fatigue status of the experimental group.

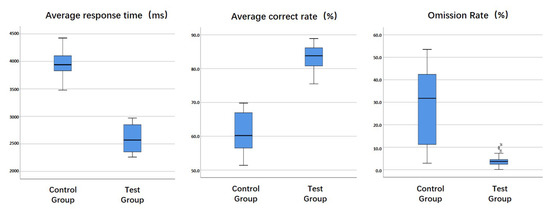

SPSS 26.0 statistical software was used to analyze the experimental data. The box plot of the experimental data is shown in Figure 8.

Figure 8.

Box plot of the experimental data.

To test whether the results of the control and test groups were statistically different, a one-sample Shapiro–Wilk test was first performed on the test results. The three data sets of correctness, response time and omission rate all contained non-normally distributed data. Therefore, we used the median (interquartile range) to represent the data, and we used the nonparametric Mann–Whitney U test for independent samples to test whether there was a significant difference between the control group and the test group. This comparison was used to determine whether the improved system, with fatigue detection and enhanced feedback, was effective. The results show a significant reduction in response time (p < 0.001), a significant increase in correctness (p < 0.001) and a significant decrease in error rate (p < 0.001) using the fatigue detection and enhanced feedback system studied in this paper. The detailed experimental results are shown in Table 5.
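For readers unfamiliar with the Mann–Whitney U test, the following pure-Python sketch shows how the statistic and an approximate two-sided p-value can be computed for two independent samples. It is not the SPSS procedure used in this study: it relies on the normal approximation with a tie correction and no continuity correction, so its p-values may differ slightly from those of a statistics package, and the function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U, two-sided p) using the normal approximation with tie correction."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    # Pool the samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    n = n1 + n2
    rank_sum_x = 0.0
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the ranks i+1 .. j shared by the ties
        t = j - i
        tie_term += t ** 3 - t
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    u1 = rank_sum_x - n1 * (n1 + 1) / 2.0
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0.0:
        return u, 1.0  # all observations are identical
    z = (u - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return u, p
```

Applied to the per-subject response times of the two groups, a small p-value would indicate that the two distributions differ. For small samples an exact test is preferable to this approximation.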

Table 5.

Comparison table of the results of the experiments.

The experimental data illustrate that, by monitoring the fatigue status in real time and enhancing the feedback design, the system designed in this study can effectively ensure the transmission of information and enhance the user’s ability to operate under fatigue.

4. Discussion

This paper investigated a system that monitors the fatigue status of operators in front of a video terminal in real time and provides enhanced feedback. We chose an eye tracker to collect the user’s eye movement data and applied the PERCLOS method to discriminate the status of video terminal operators. Although the PERCLOS method is the most common and effective fatigue monitoring method, it still has some problems and limitations. For example, some people show a dull gaze without closing their eyes when fatigued, in which case the PERCLOS method fails. In addition, the trend of eyelid closure over time varies widely, with large individual differences. The PERCLOS method can also be affected by complex lighting and by the posture of the monitored personnel. Changes in illumination and operator posture can degrade or prevent the detection and localization of the user’s face and eyes, which reduces the success rate of fatigue monitoring, because the extraction of fatigue features depends heavily on the detection and localization accuracy of the operator’s face and eyes. Many studies have proposed improvements for this problem, but no general consensus has yet been reached. This study also does not yet propose a suitable solution to this part of the problem.

Currently, some algorithms for multi-information fusion have been introduced in the field of driver fatigue detection. They fuse features such as the driver’s upper and lower eyelid closure, the state of snorting, and blink frequency. The fused metrics are applied to evaluate the driver’s fatigue status.

In further research, we will introduce a multi-information fusion method to fuse four sets of data on the upper and lower eyelid closure, blink frequency, pupil diameter and facial features of the video terminal operator. There are many methods for fusion. The methods applicable to multi-source data fusion mainly include: (1) the signal and estimation theory method, (2) the information metric method, and (3) the statistical inference method. In this paper, through the analysis of multi-information fusion methods, we will apply the improved rough-set-theory-based fusion algorithm to determine the fatigue of video terminal operators through the decision level information fusion method. It is mainly divided into three steps: (1) feature calculation, (2) the combination of multi-information data sources, and (3) fatigue information fusion status determination.

(1) Feature calculation

The first three of the four metrics that will be fused in this study are all eye movement feature metrics. We can obtain all of them through the eye tracker in this paper. The facial feature metrics require feature extraction of the facial images captured by the camera using a deep learning approach. In the first step of feature calculation, we first assign the feature quality function to each feature source, and then apply the maximum likelihood estimation method to obtain the optimal parameters.

(2) Combination of multiple information data sources

Suppose $A = \{A_1, A_2, \ldots, A_n\}$ ($n > 0$) is the set of fatigue states and $e = \{e_1, e_2, \ldots, e_n\}$ ($n > 0$) is the set of data sources. The fusion rule formula can then be shown as follows:

(3) Determination of fatigue information fusion status

This study will use fused facial features and eye movement features to jointly reflect the degree of fatigue characteristics of video terminal operators. Four data items, namely, upper and lower eyelid closure, blink frequency, pupil diameter and facial features of the video terminal operator, are to be fused. Each of the above metrics can represent the current state of the operator to a certain extent, and the final fatigue determination scale will be derived from the fusion analysis of each metric, which will finally quantify the qualitative fatigue analysis. The fusion of the features is to be performed using modified rough set theory.

In this paper, fatigue was classified into three grades: mild fatigue, moderate fatigue and severe fatigue. We classified the fatigue grades experimentally based on the fatigue percentage P. This method still needs further practice and validation. No unified standard has yet been formed for the fatigue metric or the fatigue classification thresholds. Therefore, we need to conduct more in-depth research on the PERCLOS fatigue monitoring technology and the subsequent classification of fatigue grades.

In future research, we will use experiments to classify the different fatigue classes more accurately. We intend to use a random forest model to classify the fatigue classes. Based on bagging, decision trees will be used as the base classifiers, and the training dataset for each decision tree will be sampled randomly, which increases the perturbation of the training data. We will also introduce random attribute selection: we intend to fix the number of candidate features K before training, and then, at each node, randomly select K of the features and choose the optimal splitting attribute for the current node from among them. We will further validate the feasibility of this approach in a subsequent study.
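The two sources of randomness described above, bootstrap sampling of the training set and random selection of K candidate features, can be illustrated with a deliberately small Python sketch. It is not the planned implementation: to stay short it uses depth-one decision trees (stumps) as base classifiers, all function names are hypothetical, and any feature columns passed in are placeholders for the eye movement and facial features discussed in this section.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

Stump = Tuple[int, float, str, str]  # (feature index, threshold, left label, right label)


def gini(labels: Sequence[str]) -> float:
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values()) if n else 0.0


def majority(labels: Sequence[str]) -> str:
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X: List[Sequence[float]], y: List[str], k: int,
              rng: random.Random) -> Stump:
    """Find the best single split among k randomly chosen features."""
    best = None  # (weighted impurity, feature, threshold, left label, right label)
    for f in rng.sample(range(len(X[0])), k):
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, thr, majority(left), majority(right))
    if best is None:  # no valid split: always predict the majority class
        label = majority(y)
        return (0, float("inf"), label, label)
    return best[1:]


def fit_forest(X: List[Sequence[float]], y: List[str], n_trees: int = 25,
               k: int = 1, seed: int = 0) -> List[Stump]:
    """Train n_trees stumps, each on its own bootstrap sample (bagging)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]  # sample rows with replacement
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], k, rng))
    return forest


def predict(forest: List[Stump], row: Sequence[float]) -> str:
    """Majority vote over all stumps."""
    return majority([left if row[f] <= thr else right
                     for f, thr, left, right in forest])
```

A full random forest would grow deeper trees and repeat the K-feature draw at every node; the stump version only shows where the randomness enters.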

This paper is only about detecting user fatigue states. The eye-tracking environment built in this paper is also able to provide parameters on cognitive load, visual fatigue and other aspects. These factors also influence the user’s reaction time and correctness. In subsequent research, we will consider how to design a better system for the user’s visual fatigue, cognitive load and mental load. This system could help users to implement more precise operational control.

5. Conclusions

First, this study systematically analyzes the method of fatigue monitoring. For the operation characteristics in front of the video screen terminal, fatigue state monitoring relying on the eye-tracking instrument was selected. The aSee A3 eye tracker developed by 7invensun was used to build the real-time monitoring environment in this study. Four types of indicators were compared and analyzed for monitoring user fatigue: eye closure time, pupil diameter, eyelid closure PERCLOS value and blink frequency. Finally, the PERCLOS index was chosen to achieve real-time monitoring of user fatigue status in this study.

Secondly, this paper described the PERCLOS calculation method in detail and combined the periocular images and eye movement metrics captured by the aSee A3 eye tracker with the PERCLOS calculation method. Using the method in this paper, it was determined that the tested person showed fatigue when PERCLOS was >2.0.

Moreover, we classified fatigue into three classes: mild fatigue, moderate fatigue and severe fatigue. Through two experiments on 28 workers, the fatigue time percentage P corresponding to different fatigue levels was obtained. Combined with the experimental results, the fatigue percentages P selected for mild fatigue, moderate fatigue and severe fatigue were defined as 0–15%, 15–50% and 50–100%, respectively, in this study.

Finally, for different fatigue states, combined with the importance of the information, this study provides a complete system design scheme including prompting to wake up users, reporting to superiors and enhancing information display feedback.

This study provides a system design solution for fatigue state monitoring and enhanced feedback for the video terminal operating population. Through the research in this paper, one is able to monitor the fatigue of video terminal operation users in real time, discover the fatigue status of users in time and provide corresponding solutions. In this way, on the one hand, the system can prompt and wake up the users with mild fatigue in time. On the other hand, it is able to promptly report the status of moderately fatigued and severely fatigued users to higher-level users, to control risks in time. Finally, it is able to enhance feedback through information and improve the reachability of the information. In this way, problems such as information omission and misjudgment caused by user fatigue can be effectively solved. The real-time fatigue sensing and enhanced feedback system studied in this paper can reduce the risk of fatigue operations in important positions.

In addition, this research is part of a broader effort on machine perception of user states and on providing different service solutions based on those states. The research team has been working on multidimensional perception and human–machine integration technology. This research lays a foundation for multidimensional perception of the user by the machine and for intelligent services provided to the user.

Author Contributions

Conceptualization, X.M. and C.X.; methodology, X.M.; software, X.M.; validation, X.M., X.L. and L.Y.; formal analysis, X.M.; investigation, X.M.; resources, X.M.; data curation, X.M. and X.L.; writing—original draft preparation, X.M.; writing—review and editing, X.L.; visualization, X.M.; supervision, X.M.; project administration, X.M.; funding acquisition, C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data are not publicly available due to the protection of the subjects’ privacy.

Conflicts of Interest

The authors declare no conflict of interest.

Sample Availability

Not applicable.

Abbreviations

The following abbreviations are used in this manuscript:

| PERCLOS | Percent eye closure |

References

1. Yu, Q.; Chen, D.; Ming, X.; Li, Y. Impact of video display terminal operation on visual system and its prevention. Chin. J. Ind. Med. 2012, 25, 361–363.

2. Qiao, L.; Zhen, H.; Cao, D. Hazards of flight fatigue and its prevention. In Proceedings of the 2014 Symposium on Aviation Safety and Equipment Maintenance Technology, Hong Kong, China, 24–27 November 2014.

3. Cheng, S.; Hu, W.; Ma, J.; Zhang, L.; Zhang, T.; Hui, D.; Dang, W. The role of postural control sensory remodeling effect in pilots’ brain fatigue warning. Occup. Health 2019, 35, 2868–2871.

4. Zhou, Y.; Yu, M. Research on fatigue driving detection method. Med. Health Equip. 2003, 10, 25–28.

5. Jiang, S. Vision-Based Driver Fatigue Detection Research. Master’s Thesis, Shanghai Jiaotong University, Shanghai, China, 2008.

6. Liao, J. Brain load and its measurement. J. Syst. Eng. 1995, 10, 5.

7. Peng, X. Experimental Study on the Evaluation of Brain Workload of VDT Operations; Huazhong University of Science and Technology: Shanghai, China, 2003.

8. Gao, X. Research on Fatigue Labeling Method Based on Eye Movement Data. Master’s Thesis, Shanghai Jiaotong University, Shanghai, China, 2016.

9. Chen, Y.; Li, J.; Xu, J.; Chen, W. Design of driver fatigue warning system based on eye-movement characteristics. Softw. Guide 2020, 19, 116–119.

10. Qin, W. Sensitivity Study of Driver Physiological Indicators on the Response to Driving Fatigue. Master’s Thesis, Inner Mongolia Agricultural University, Hohhot, China, 2011.

11. Yan, R. Research on Driving Fatigue Detection of Rail Transit Drivers Based on Eye-Movement Features. Master’s Thesis, Soochow University, Suzhou, China, 2017.

12. Fu, C. Study on the Physiological and Oculomotor Characteristics of Drivers under Fatigue; Harbin Institute of Technology: Harbin, China, 2011.

13. Cao, Y.; Wu, J.; Fan, L.; Wang, S.; Mei, X. Research on fatigue driving detection system based on multi-feature fusion. In Proceedings of the 2019 China Society of Automotive Engineers (CSAE), Sanya, China, 22–24 October 2019.

14. Feng, Y.; Ye, H.; Huang, W.; Xu, Z.; Lin, J. Research on fatigue detection and early warning based on head multi-pose fusion recognition. Comput. Knowl. Technol. 2021, 17, 12–14.

15. Jin, H.; Liu, Y. Research on the performance of eye movement indicators to detect regulatory fatigue. J. Saf. Environ. 2018, 1004–1007.

16. Yang, S.; Wang, Z.; Wang, L.; Shi, B.; Peng, S. Study on the effect of mental fatigue on the allocation of information resources in working memory. J. Biomed. Eng. 2021, 38, 7.

17. Divjak, M.; Bischof, H. Eye Blink Based Fatigue Detection for Prevention of Computer Vision Syndrome. ACM 2009.

18. Jin, H.; Zhu, G. Effectiveness of eye movement indicators to detect control fatigue. Sci. Technol. Eng. 2018, 136–140.

19. Qi, P.; Ru, H.; Gao, L.; Zhang, X.; Zhou, T.; Tian, Y.; Nitish, T.; Anastasios, B. Neural mechanisms of mental fatigue revisited: New insights from the brain connectome. Engineering 2019, 5, 276–286.

20. Sun, S.; Zhao, D.; Qi, W. Innovative Design for Human–Machine Integration. Packag. Eng. 2021.

21. Ming, D.; Ke, Y.F.; He, F.; Zhao, X.; Wang, C.; Qi, H.; Jiao, X.; Zhang, I.; Chen, S. Research on physiological signal based brain load detection and adaptive automation system: A 40-year review and recent progress. J. Electron. Meas. Instrum. 2015, 29, 13.

22. Solovey, E.T.; Schermerhorn, P.; Scheutz, M.; Sassaroli, A.; Fantini, S.; Jacob, R. Brainput: Enhancing interactive systems with streaming fNIRS brain input. In Proceedings of the 2012 ACM Annual Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

23. Wang, Z.; Zhang, S.; Bai, X. Research on mixed reality hand-eye interaction technology for collaborative assembly. Comput. Appl. Softw. 2019, 36, 8.

24. Wang, P.; Chen, Y.Y.; Shao, M.; Liu, B.; Zhang, W. Eye-tracking-based smart home controller. J. Electr. Mach. Control. 2020, 24, 10.

25. Gu, Y.; Wang, B.; Tang, Y. Combining AI eye-control technology with smart home technology. China Sci. Technol. Inf. 2021, 2, 3.

26. Baisheng, N.; Xin, H.; Yang, C.; Anjin, L.; Ruming, Z.; Jinxin, H. Experimental study on visual detection for fatigue of fixed-position staff. Appl. Ergon. 2017, 65.

27. Lowenstein, O.; Loewenfeld, I.E. The Sleep-Waking Cycle and Pupillary Activity. Ann. N. Y. Acad. Sci. 2010, 117, 142–156.

28. Ma, J.; Chang, R.; Gao, Y. An empirical validity analysis of pupil diameter size to detect driver fatigue. J. Liaoning Norm. Univ. (Soc. Sci. Ed.) 2014, 1, 67–70.

29. Li, Q. Design of Fatigue Driving Detection System Based on Facial Features; Zhengzhou University: Zhengzhou, China, 2019.

30. Yang, G.; Lin, Y.; Bhattacharya, P. A driver fatigue recognition model based on information fusion and dynamic Bayesian network. Inf. Sci. 2010, 180, 1942–1954.

31. Wang, L.; Sun, R. Research on controller fatigue monitoring method based on facial feature recognition. Chin. J. Saf. Sci. 2012, 22, 6.

32. Fan, X.; Niu, H.; Zhou, Q.; Liu, Z. Research on brain fatigue characteristics based on EEG. J. Beijing Univ. Aeronaut. Astronaut. 2016, 7, 8.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).